Abstract

The statistical estimation of the unknown fractions Πᵢ (i = 1, …, k), and, when unknown, further parameters θⱼ (j = 1, …, m), in a mixed population which is denoted by its cumulative distribution (taken to be univariate)

$$F(x) = \sum_{i=1}^{k} \Pi_i F_i(x)$$

is, in general, cumbersome. It therefore seems worth noting the convenience and comparative efficiency, at least in relation to the estimation of Πᵢ, of estimators of “least-squares” type, defined by the minimization of the integral

$$\int \frac{[dF_s(x) - dF(x)]^2}{dG(x)}$$

where Fₛ(x) denotes the cumulative distribution of the empirical sample (from, for example, n independent observations), and G(x) is a suitable increasing function of x. Taking for simplicity the case m = 0, the estimators of the Πᵢ are automatically unbiased with exact variances readily calculable, and with asymptotic normality. For example, if k = 2, we have an estimate p₁ for Π₁,

where Fs(x) denotes the cumulative distribution of the empirical sample (from, for example, n independent observations), and G(x) is a suitable increasing function of x. Taking for simplicity the case m = 0, the estimators of the Πi are automatically unbiased with exact variances readily calculable, and with asymptotic normality. For example, if k= 2, we have an estimate p1 for Π1,  for example, where H=(dF1−dF2)/dG, with the expected value E(p1)=Π1, and variance

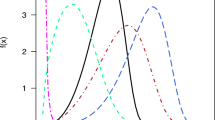

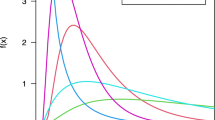
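A minimal numerical sketch of the k = 2 estimate in the density case may help fix ideas. It rests on assumptions that are not part of the note: two unit-variance normal components with known means 0 and 2, a simulated sample, and midpoint-rule integration. It also assumes the closed form p₁ = ∫H(dFₛ − dF₂) / ∫H(dF₁ − dF₂), which is what minimizing a dG-weighted squared discrepancy between dFₛ and dF gives when H = (dF₁ − dF₂)/dG. All function names are illustrative.

```python
import math
import random


def normal_pdf(x, mu, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def integrate(func, lo, hi, steps=2000):
    """Midpoint-rule approximation to the integral of func over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(func(lo + (i + 0.5) * h) for i in range(steps))


def least_squares_p1(sample, f1, f2, g, lo=-10.0, hi=12.0):
    """Estimate of Pi_1 for k = 2 with weight dG = g(x) dx (assumed closed form)."""
    def H(x):
        return (f1(x) - f2(x)) / g(x)

    h_sample = sum(H(x) for x in sample) / len(sample)            # int H dFs
    h_f2 = integrate(lambda x: H(x) * f2(x), lo, hi)              # int H dF2
    denom = integrate(lambda x: H(x) * (f1(x) - f2(x)), lo, hi)   # int H (dF1 - dF2)
    return (h_sample - h_f2) / denom


def f1(x):
    return normal_pdf(x, 0.0)   # hypothetical component 1


def f2(x):
    return normal_pdf(x, 2.0)   # hypothetical component 2


# Simulated data (an assumption for illustration): n draws with true Pi_1 = 0.3.
rng = random.Random(1)
true_pi1, n = 0.3, 4000
sample = [rng.gauss(0.0, 1.0) if rng.random() < true_pi1 else rng.gauss(2.0, 1.0)
          for _ in range(n)]

# Unweighted choice dG = dx, and weighted choice dG = (dF1 + dF2)/2.
p1_unweighted = least_squares_p1(sample, f1, f2, lambda x: 1.0)
p1_weighted = least_squares_p1(sample, f1, f2, lambda x: 0.5 * (f1(x) + f2(x)))
print(p1_unweighted, p1_weighted)
```

Both estimates should fall near the simulated fraction, with sampling scatter of the order given by the variance formula for the chosen weight.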

A considerable choice of H is possible. Thus, considering for definiteness the density case, we define the unweighted least-squares estimator of Π₁ by the choice dG = dx, H = f₁ − f₂. We should, however, expect weighted estimators to be better. In fact, because the variance of dFₛ is f dx/n, we should try to choose dG = f dx; the term ∫H dF in the expression for σ²(p₁), arising from the covariance of dFₛ(x) and dFₛ(y), then vanishes, and σ²(p₁) becomes identical with the reciprocal of the information function I(Π₁). If we take dG = Π₀ dF₁ + (1 − Π₀) dF₂, then this weighting will be most efficient when Π₀ is close to Π₁. Thus if we suspect Π₁ to be near 1, ½ or 0, suitable choices for Π₀ would be 1, ½ or 0, respectively. An alternative to ½(dF₁ + dF₂) is the geometric mean of dF₁ and dF₂, and another is max(dF₁, dF₂). The geometric mean has some convenience over the arithmetic mean in theoretical investigations of efficiency (for example, for f₁ and f₂ normal), but the latter (or alternatively max(dF₁, dF₂)) has the advantage of approximating to the maximum likelihood estimator, for any value of Π₁, in both the extreme cases of f₁ and f₂ well separated and of f₁ → f₂.

It has been shown by Hill¹ that the information function I(Π₁) for Π₁ may be written n[1 − S(Π₁)]/(Π₁Π₂), where

$$S(\Pi_1) = \int \frac{f_1(x)\,f_2(x)}{f(x)}\,dx$$
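The effect of the weighting on efficiency can be checked numerically. The following sketch is illustrative only. It assumes unit-variance normal components with means 0 and 2, Π₁ = 0.3 and n = 1000, none of which come from the note. It uses the standard result that the mean of H over n independent observations has variance [∫H² dF − (∫H dF)²]/n, and it takes S(Π₁) = ∫f₁f₂/f dx, the form for which n[1 − S(Π₁)]/(Π₁Π₂) equals n∫(f₁ − f₂)²/f dx.

```python
import math


def normal_pdf(x, mu, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def integrate(func, lo=-10.0, hi=12.0, steps=2200):
    """Midpoint-rule approximation to the integral of func over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(func(lo + (i + 0.5) * h) for i in range(steps))


PI1, N = 0.3, 1000  # hypothetical true fraction and sample size


def f1(x):
    return normal_pdf(x, 0.0)


def f2(x):
    return normal_pdf(x, 2.0)


def f(x):
    return PI1 * f1(x) + (1.0 - PI1) * f2(x)


def variance_p1(g):
    """[int H^2 dF - (int H dF)^2] / (n [int H (dF1 - dF2)]^2), H = (f1 - f2)/g."""
    def H(x):
        return (f1(x) - f2(x)) / g(x)

    m1 = integrate(lambda x: H(x) * f(x))
    m2 = integrate(lambda x: H(x) ** 2 * f(x))
    d = integrate(lambda x: H(x) * (f1(x) - f2(x)))
    return (m2 - m1 ** 2) / (N * d ** 2)


weights = {
    "unweighted (dG = dx)": lambda x: 1.0,
    "arithmetic mean (Pi_0 = 1/2)": lambda x: 0.5 * (f1(x) + f2(x)),
    "geometric mean": lambda x: math.sqrt(f1(x) * f2(x)),
    "max(f1, f2)": lambda x: max(f1(x), f2(x)),
    "dG = f dx (Pi_0 = Pi_1)": f,
}
variances = {name: variance_p1(g) for name, g in weights.items()}

# Information in Hill's form, with S taken as int f1 f2 / f dx (assumed form).
S = integrate(lambda x: f1(x) * f2(x) / f(x))
info = N * (1.0 - S) / (PI1 * (1.0 - PI1))

for name, var in variances.items():
    # Efficiency relative to the information bound 1 / I(Pi_1).
    print(f"{name:30s} efficiency = {1.0 / (info * var):.4f}")
```

The last weighting uses the true Π₁, which is unknown in practice; it is included only as the benchmark against which the practical choices of Π₀ can be compared.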

If f₁ and f₂ differ by some parameter μ (for example, the mean), and Δμ = μ₂ − μ₁, then also as f₁ → f₂

$$S(\Pi_1) \sim 1 - \Pi_1 \Pi_2 (\Delta\mu)^2 I(\mu)$$

where I(μ) is the information function for μ. Thus for the maximum likelihood estimator Π̂₁ the variance, which is Π₁Π₂/n for f₁ and f₂ well separated, is 1/[n(Δμ)²I(μ)] as f₁ → f₂.
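The two limiting variances can be illustrated with a short calculation. This is a sketch under assumptions not taken from the note: unit-variance normal components whose means differ by Δμ, so that I(μ) = 1 per observation, with Π₁ = 0.3 and S(Π₁) taken as ∫f₁f₂/f dx. The quantity computed is n times the reciprocal of the information, Π₁Π₂/[1 − S(Π₁)].

```python
import math


def normal_pdf(x, mu):
    """Density of N(mu, 1) at x."""
    return math.exp(-0.5 * (x - mu) ** 2) / math.sqrt(2.0 * math.pi)


def n_times_variance(pi1, delta_mu, steps=4000):
    """n / I(Pi_1) = Pi_1 Pi_2 / [1 - S(Pi_1)] for components N(0,1), N(delta_mu,1)."""
    lo, hi = -12.0, 12.0 + delta_mu
    h = (hi - lo) / steps
    pi2 = 1.0 - pi1
    s = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h          # midpoint rule
        a = normal_pdf(x, 0.0)
        b = normal_pdf(x, delta_mu)
        s += a * b / (pi1 * a + pi2 * b)
    s *= h                              # s approximates S(Pi_1)
    return pi1 * pi2 / (1.0 - s)


pi1 = 0.3
well_separated = n_times_variance(pi1, 8.0)   # compare with Pi_1 * Pi_2
nearly_equal = n_times_variance(pi1, 0.05)    # compare with 1 / (delta_mu^2 * I(mu))

print(well_separated, pi1 * (1.0 - pi1))
print(nearly_equal, 1.0 / 0.05 ** 2)
```

The second comparison shows how quickly the attainable precision deteriorates as the components approach one another: the variance grows as the inverse square of Δμ.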


References

Hill, B. M., J. Amer. Stat. Assoc., 58, 918 (1963).


Cite this article

BARTLETT, M., MACDONALD, P. “Least-squares” Estimation of Distribution Mixtures. Nature 217, 195–196 (1968). https://doi.org/10.1038/217195b0


DOI: https://doi.org/10.1038/217195b0

This article is cited by

- Partitioning mixed probability distributions into their constituents. Journal of Soviet Mathematics (1977)