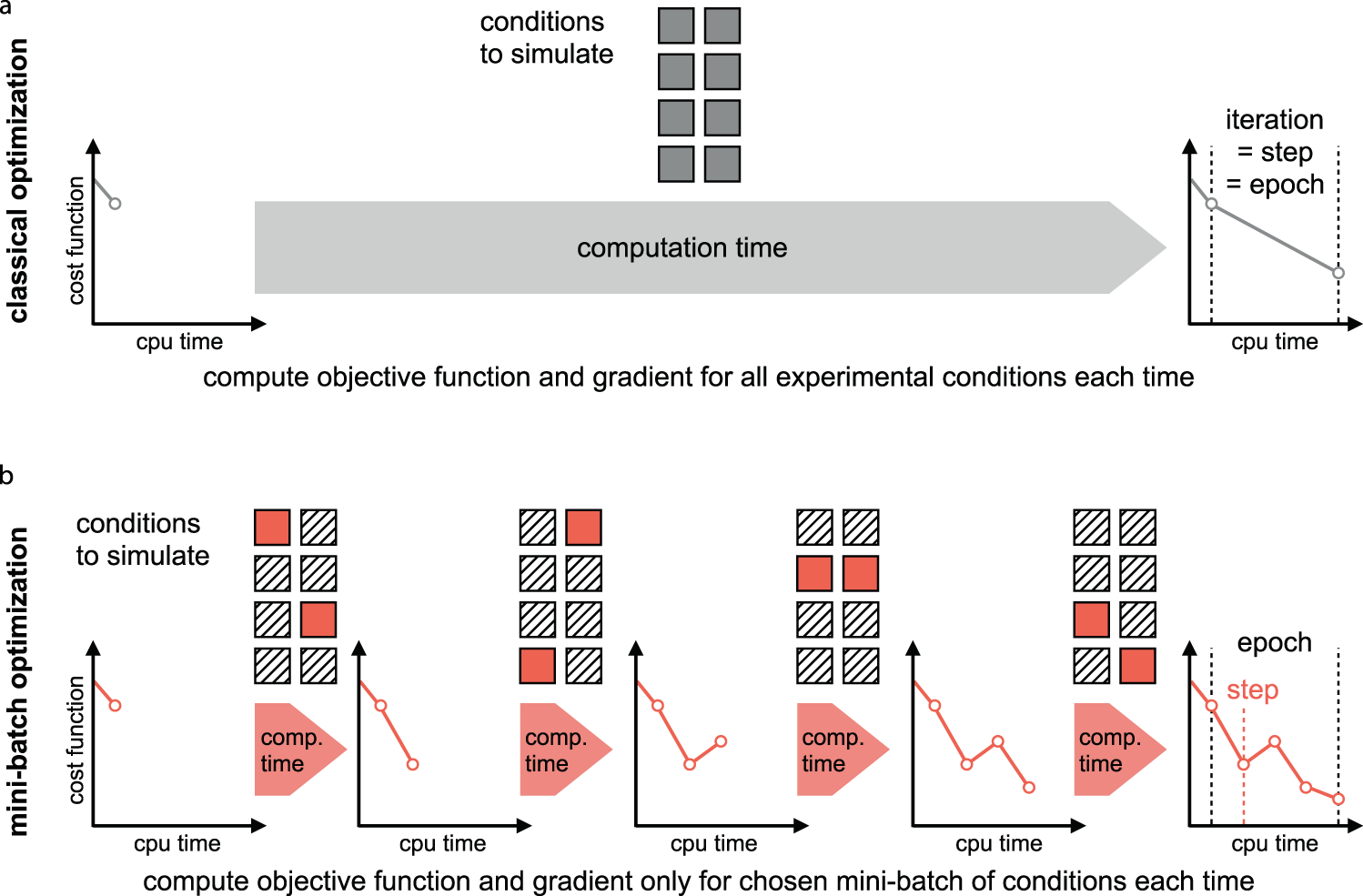

Fig. 1: Visualization of full-batch and mini-batch optimization.

From: Mini-batch optimization enables training of ODE models on large-scale datasets

**a** Classic full-batch optimization methods evaluate the contribution of all data points, and thus of all experimental conditions, to the objective function in each step. The computation time scales linearly with the number of independently evaluable experimental conditions (depicted as gray squares). **b** In mini-batch optimization, the independent experimental conditions are randomly divided into disjoint subsets, the mini-batches (depicted as squares). In each optimization step, only the contribution of the chosen mini-batch is evaluated (red squares). Several optimization steps can therefore be performed during one epoch, that is, the time until the whole dataset has been evaluated once.
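The partitioning described in panel b can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names `make_minibatches` and `run_epoch`, the toy per-condition objective, and the batch size are assumptions chosen for illustration, and the parameter update itself is left out.

```python
import random


def make_minibatches(conditions, batch_size, rng):
    """Randomly divide the experimental conditions into disjoint mini-batches."""
    shuffled = list(conditions)
    rng.shuffle(shuffled)
    return [shuffled[i:i + batch_size]
            for i in range(0, len(shuffled), batch_size)]


def run_epoch(conditions, batch_size, evaluate_condition, rng):
    """Run one epoch and return the objective contribution seen in each step.

    `evaluate_condition` is a hypothetical stand-in for simulating the ODE
    model under one experimental condition and scoring it against the data.
    """
    step_values = []
    for batch in make_minibatches(conditions, batch_size, rng):
        # Only the chosen mini-batch contributes to this optimization step.
        step_values.append(sum(evaluate_condition(c) for c in batch))
        # A real optimizer would update the parameters here.
    return step_values


rng = random.Random(0)
conditions = list(range(12))          # 12 toy experimental conditions


def toy_objective(c):
    """Placeholder per-condition cost, not a real ODE simulation."""
    return float(c)


batches = make_minibatches(conditions, 4, rng)
mini_batch_steps = run_epoch(conditions, 4, toy_objective, rng)
full_batch_steps = run_epoch(conditions, len(conditions), toy_objective, rng)
print(len(mini_batch_steps), "steps per epoch with mini-batches of size 4")
print(len(full_batch_steps), "step per epoch with a full batch")
```

With a batch size equal to the number of conditions, the sketch reduces to the full-batch case in panel a: one step per epoch, with every condition evaluated in that step.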