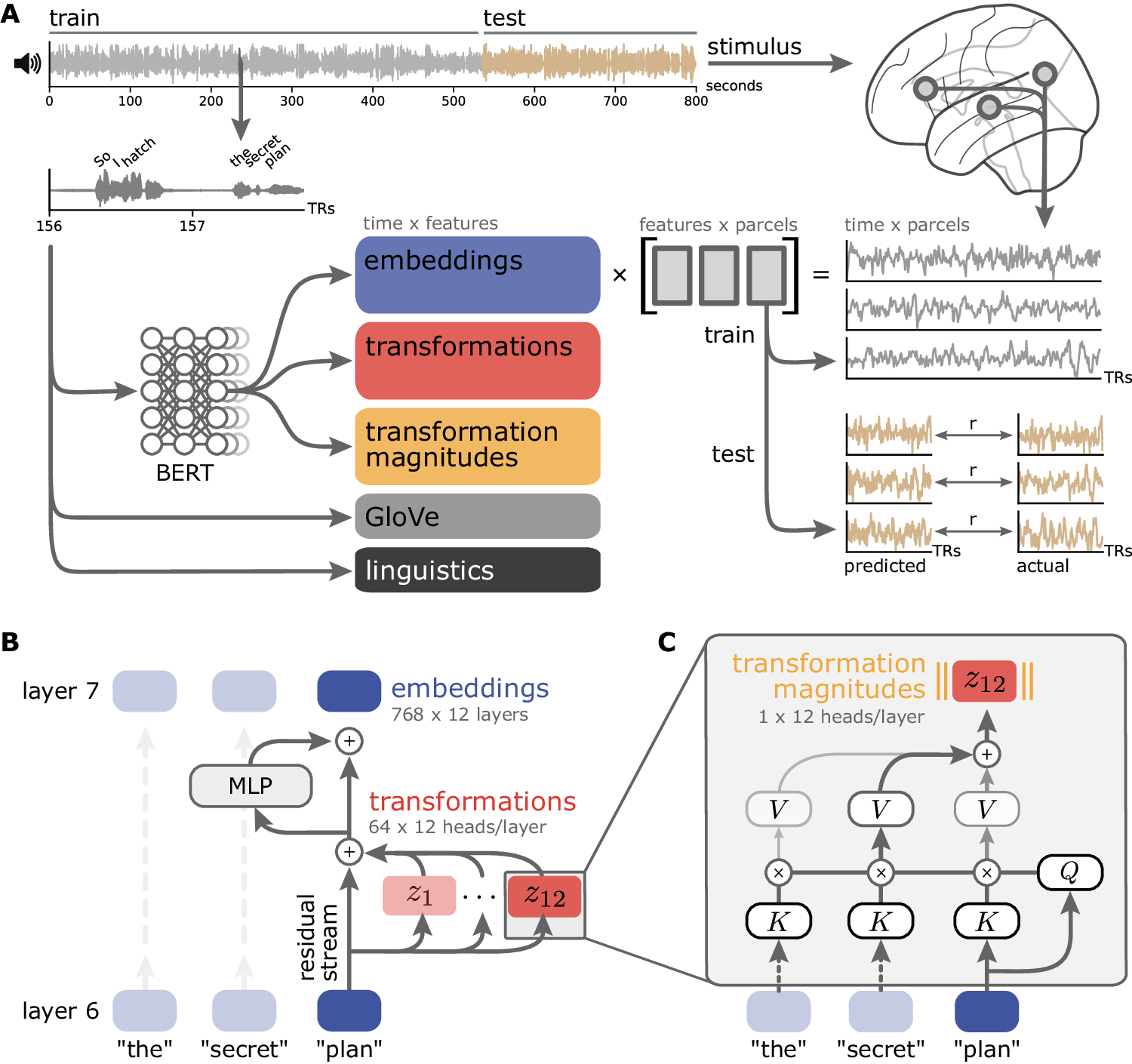

Fig. 1: Encoding models for predicting brain activity from the internal components of language models.

From: Shared functional specialization in transformer-based language models and the human brain

A Various features are used to predict fMRI time series acquired while subjects listened to naturalistic stories. From the stimulus transcript, we extracted classical linguistic features (e.g., parts of speech; black), non-contextual semantic features (e.g., GloVe vectors; gray), and internal features from a widely studied Transformer model (BERT-base). Encoding models are estimated with banded ridge regression on a training subset of each story and evaluated on a held-out test segment of that story using three-fold cross-validation. Prediction performance is measured as the correlation between the predicted and actual time series in the test set.

B We consider two core components of the Transformer architecture at each layer (BERT-base and GPT-2 each have 12 layers): embeddings (blue) and transformations (red). Embeddings represent the contextualized semantic content of the text. Transformations are the per-head outputs of the self-attention mechanism (BERT-base and GPT-2 have 12 heads per layer, each producing a 64-dimensional vector); they capture the contextual information incrementally added to the embedding in that layer. Finally, we consider the transformation magnitudes (yellow; the L2 norm of each attention head’s 64-dimensional transformation vector), which represent the overall activity of a given attention head. MLP: multilayer perceptron.

C Attention heads use learned matrices to produce content-sensitive updates to each token. For a single input token (“plan”) passing through a single head (layer 7, head 12), the token vector is multiplied by the head’s learned weight matrices (which are fixed across inputs) to produce query (Q), key (K), and value (V) vectors. The inner products between the query vector for this token (“plan”, Q) and the key vectors (K) of the tokens in the context, after scaling and softmax normalization, yield a set of “attention weights” describing how relevant each of those tokens is to “plan.” These attention weights are used to linearly combine the tokens’ value vectors (V); the weighted sum is the head’s transformation (here, Z12). The transformations from all attention heads in the layer are concatenated, linearly projected, and added back to the token’s original representation. Figure made using Nilearn, Matplotlib, seaborn, and Inkscape.
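The encoding pipeline in panel A (banded ridge regression, three-fold cross-validation, and correlation-based scoring) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the per-band penalties are fixed by hand rather than selected (e.g., by nested cross-validation), the folds are contiguous blocks of a single time series rather than segments of each story, hemodynamic delays are omitted, and the data are synthetic.

```python
import numpy as np

def banded_ridge_fit(X_bands, Y, alphas):
    """Closed-form banded ridge: one ridge penalty per feature band.

    X_bands: list of (n_samples, n_features_b) arrays, one per feature band.
    Y: (n_samples, n_voxels) target fMRI time series.
    alphas: one penalty per band (hypothetical fixed values in this sketch).
    """
    X = np.hstack(X_bands)
    # Diagonal penalty: coefficients of band b are shrunk by alphas[b].
    penalty = np.concatenate(
        [np.full(Xb.shape[1], a) for Xb, a in zip(X_bands, alphas)]
    )
    return np.linalg.solve(X.T @ X + np.diag(penalty), X.T @ Y)

def columnwise_corr(A, B):
    """Pearson correlation between matching columns (voxels) of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / np.sqrt((A ** 2).sum(axis=0) * (B ** 2).sum(axis=0))

def cross_validated_encoding(X_bands, Y, alphas, n_folds=3):
    """Contiguous k-fold CV: fit on k-1 blocks, score on the held-out block."""
    n = Y.shape[0]
    folds = np.array_split(np.arange(n), n_folds)
    scores = []
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        W = banded_ridge_fit([Xb[train_idx] for Xb in X_bands], Y[train_idx], alphas)
        Y_pred = np.hstack([Xb[test_idx] for Xb in X_bands]) @ W
        scores.append(columnwise_corr(Y_pred, Y[test_idx]))
    # Average the held-out correlation across folds, separately for each voxel.
    return np.mean(scores, axis=0)

# Synthetic example: two feature bands (stand-ins for, e.g., syntactic and BERT features).
rng = np.random.default_rng(0)
n_trs, n_voxels = 300, 5
X_syntax = rng.standard_normal((n_trs, 10))
X_bert = rng.standard_normal((n_trs, 40))
true_W = rng.standard_normal((50, n_voxels))
Y = np.hstack([X_syntax, X_bert]) @ true_W + 0.5 * rng.standard_normal((n_trs, n_voxels))
r = cross_validated_encoding([X_syntax, X_bert], Y, alphas=[1.0, 10.0])
print(r.shape)  # one cross-validated correlation per voxel
```

Giving each band its own penalty lets feature spaces of very different size and scale (a handful of part-of-speech indicators versus hundreds of BERT dimensions) be regularized independently, which is the motivation for the banded rather than ordinary ridge penalty.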
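The computations in panels B and C (per-head query, key, and value projections; scaled, softmax-normalized attention weights; the weighted sum of value vectors that forms each head's transformation; concatenation and addition back to the token representation; and per-head transformation magnitudes) can be sketched in NumPy. This is a simplified illustration, not BERT's or GPT-2's actual implementation: the weights are random placeholders rather than trained parameters, biases and layer normalization are omitted, and the function names are hypothetical. The dimensions match BERT-base and GPT-2 (768-dimensional tokens, 12 heads of 64 dimensions).

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(X, W_q, W_k, W_v, causal=False):
    """One self-attention head.

    X: (n_tokens, d_model) token representations entering the layer.
    W_q, W_k, W_v: (d_model, d_head) learned projections, fixed across inputs.
    Returns this head's transformation Z for every token: (n_tokens, d_head).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[1])  # scaled dot products, (n_tokens, n_tokens)
    if causal:  # GPT-2-style masking: each token attends only to itself and earlier tokens
        mask = np.tril(np.ones(scores.shape, dtype=bool))
        scores = np.where(mask, scores, -np.inf)
    A = softmax(scores, axis=-1)  # attention weights; each row sums to 1
    return A @ V                  # weighted sum of value vectors

def multi_head_layer(X, heads, W_o, causal=False):
    """Concatenate all heads' transformations, project with W_o, add back to X."""
    Z = np.stack([attention_head(X, *w, causal=causal) for w in heads])  # (n_heads, n_tokens, d_head)
    concat = np.concatenate(list(Z), axis=-1)  # (n_tokens, n_heads * d_head)
    return X + concat @ W_o, Z                 # residual connection

# Example with random (untrained, hypothetical) weights.
rng = np.random.default_rng(0)
n_tokens, d_model, n_heads = 6, 768, 12
d_head = d_model // n_heads  # 64, as in BERT-base and GPT-2
X = rng.standard_normal((n_tokens, d_model))
scale = 1 / np.sqrt(d_model)
heads = [tuple(scale * rng.standard_normal((d_model, d_head)) for _ in range(3))
         for _ in range(n_heads)]
W_o = scale * rng.standard_normal((d_model, d_model))

out, Z = multi_head_layer(X, heads, W_o)
magnitudes = np.linalg.norm(Z, axis=-1)  # L2 norm of each head's transformation, per token
print(out.shape, Z.shape, magnitudes.shape)
```

Here `Z` plays the role of the transformations (red in panel B) and `magnitudes` the role of the transformation magnitudes (yellow); the layer output `out` corresponds to the updated embedding before the MLP.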