Abstract

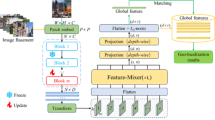

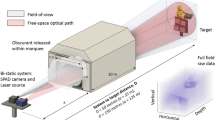

Visual Place Recognition (VPR) systems typically lose robustness when scene appearance changes, whether because of illumination dynamics or because images are captured by different types of visual sensors. This paper proposes a novel framework that exploits depth estimation to overcome these challenges. Our approach transforms omnidirectional images into depth maps using Distill Any Depth, a state-of-the-art depth estimator based on Depth Anything V2. These depth maps are then converted into pseudo-LiDAR point clouds, which serve as input to the MinkUNeXt architecture to generate global-appearance descriptors. A key contribution is a data augmentation technique that exploits different distilled variants of depth estimation models to enhance robustness across varying conditions. Despite being trained on a limited set of images captured only under cloudy conditions, our system performs robustly across diverse lighting scenarios, and further tests with different datasets and camera types confirm its generalization to geometrically dissimilar inputs. Extensive comparisons with state-of-the-art methods show that our approach performs competitively across diverse lighting conditions and particularly excels in scenarios with significant illumination changes. Furthermore, generating pseudo-LiDAR information from standard cameras provides a cost-effective alternative to 3D sensors. In summary, this work presents a fundamentally different approach to scene representation for VPR, with promising implications for robot localization in challenging environments. The implementation is publicly available at https://juanjo-cabrera.github.io/projects-pL-MinkUNeXt/.
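The depth-to-pseudo-LiDAR step and the variant-based augmentation described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and does not call Distill Any Depth or MinkUNeXt. It assumes the omnidirectional image has already been unwrapped to an equirectangular panorama and that the estimator's output has already been converted to a positive range value per pixel (the estimator itself produces relative depth, so some scaling step is assumed). The helper names, the variant names, and the voxel size are hypothetical; the voxel quantization only mimics the kind of integer coordinates a sparse-convolution network consumes.

```python
import numpy as np


def equirect_depth_to_points(depth: np.ndarray) -> np.ndarray:
    """Back-project an equirectangular range map of shape (H, W) to an (N, 3) cloud.

    Assumed convention: columns span azimuth [-pi, pi), rows span elevation
    from +pi/2 (top row) to -pi/2 (bottom row), and depth[v, u] is the range
    along the ray through the pixel centre.
    """
    h, w = depth.shape
    azimuth = ((np.arange(w) + 0.5) / w * 2.0 - 1.0) * np.pi
    elevation = (0.5 - (np.arange(h) + 0.5) / h) * np.pi
    az, el = np.meshgrid(azimuth, elevation)  # both arrays have shape (H, W)
    # Spherical-to-Cartesian conversion along each pixel's viewing ray.
    x = depth * np.cos(el) * np.cos(az)
    y = depth * np.cos(el) * np.sin(az)
    z = depth * np.sin(el)
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Drop pixels with non-finite or non-positive range (e.g. masked regions).
    valid = np.isfinite(points).all(axis=1) & (depth.reshape(-1) > 0)
    return points[valid]


def voxel_quantize(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Map points to unique integer voxel coordinates (sparse-convolution style input)."""
    coords = np.floor(points / voxel_size).astype(np.int32)
    return np.unique(coords, axis=0)


def pick_depth_variant(depth_by_variant: dict, rng: np.random.Generator):
    """Hypothetical augmentation: sample one precomputed depth map per training example."""
    names = sorted(depth_by_variant)
    name = names[int(rng.integers(len(names)))]
    return name, depth_by_variant[name]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical depth maps of the same panorama from two distilled variants.
    variants = {
        "variant_a": np.full((64, 128), 2.0),
        "variant_b": np.full((64, 128), 2.5),
    }
    name, depth = pick_depth_variant(variants, rng)
    cloud = equirect_depth_to_points(depth)
    voxels = voxel_quantize(cloud, voxel_size=0.1)
    print(name, cloud.shape, voxels.shape)
```

Sampling a different depth variant each time an image is drawn means the descriptor network sees several plausible geometric reconstructions of the same place, which is one simple way to realize the robustness idea; the equirectangular convention is also an assumption, and a catadioptric image would require that camera's own projection model instead.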

Data availability

Data is publicly available on the project website: https://juanjo-cabrera.github.io/projects-pL-MinkUNeXt/.

References

Chen, X. et al. OverlapNet: A siamese network for computing LiDAR scan similarity with applications to loop closing and localization. Auton. Robots 46, 1–21. https://doi.org/10.1007/s10514-021-09999-0 (2022).

Jin, X., Junping, Y., Juan, Z. & Tianyan, G. Enhancing cross view geo localization through global local quadrant interaction network. Sci. Rep. 15, 33431. https://doi.org/10.1038/s41598-025-18935-6 (2025).

Flores, M., Valiente, D., Gil, A., Reinoso, O. & Payá, L. Efficient probability-oriented feature matching using wide field-of-view imaging. Eng. Appl. Artif. Intell. 107, 104539. https://doi.org/10.1016/j.engappai.2021.104539 (2022).

Zhou, Z., Xu, J., Xiong, G. & Ma, J. LCPR: A multi-scale attention-based LiDAR-Camera fusion network for place recognition. IEEE Robot. Autom. Lett. 8, 2025–2032. https://doi.org/10.1109/LRA.2023.3346753 (2023).

Gallagher, J. E. & Oughton, E. J. Assessing thermal imagery integration into object detection methods on air-based collection platforms. Sci. Rep. 13, 8491. https://doi.org/10.1038/s41598-023-34791-8 (2023).

Wang, Y. et al. Pseudo-LiDAR from visual depth estimation: Bridging the gap in 3D object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8445–8453 (2019).

He, X. et al. Distill any depth: Distillation creates a stronger monocular depth estimator. arXiv preprint arXiv:2502.19204 (2025).

Zaffar, M. et al. VPR-bench: An open-source visual place recognition evaluation framework with quantifiable viewpoint and appearance change. Int. J. Comput. Vis. 129, 2136–2174. https://doi.org/10.1007/s11263-021-01469-5 (2021).

Humenberger, M. et al. Investigating the role of image retrieval for visual localization: An exhaustive benchmark. Int. J. Comput. Vis. 130, 1811–1836. https://doi.org/10.1007/s11263-022-01615-7 (2022).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778 (2016).

Arandjelovic, R., Gronat, P., Torii, A., Pajdla, T. & Sivic, J. NetVLAD: CNN architecture for weakly supervised place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5297–5307 (2016).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 10012–10022 (2021).

Liu, Z. et al. Swin transformer v2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12009–12019 (2022).

Caron, M. et al. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 9650–9660 (2021).

Oquab, M. et al. DINOv2: Learning robust visual features without supervision. arXiv preprint arXiv:2304.07193 (2023).

Berton, G., Masone, C. & Caputo, B. Rethinking visual geo-localization for large-scale applications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4878–4888 (2022).

Berton, G., Trivigno, G., Caputo, B. & Masone, C. EigenPlaces: Training viewpoint robust models for visual place recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 11080–11090 (2023).

Keetha, N. et al. AnyLoc: towards universal visual place recognition. IEEE Robot. Autom. Lett. 8, 1265–1272. https://doi.org/10.1109/LRA.2023.3343602 (2023).

Ali-Bey, A., Chaib-Draa, B. & Giguere, P. MixVPR: Feature mixing for visual place recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2998–3007 (2023).

Izquierdo, S. & Civera, J. Optimal transport aggregation for visual place recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 17658–17668 (2024).

Rostkowska, M. & Skrzypczyński, P. Optimizing appearance-based localization with catadioptric cameras: Small-footprint models for real-time inference on edge devices. Sensors 23, 6485. https://doi.org/10.3390/s23146485 (2023).

Alfaro, M., Cabrera, J. J., Jiménez, L. M., Reinoso, Ó. & Payá, L. Triplet neural networks for the visual localization of mobile robots. In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO), 125–132. https://doi.org/10.5220/0012927400003822 (2024).

Yin, H. et al. A survey on global LiDAR localization: Challenges, advances and open problems. Int. J. Comput. Vis. 132, 3139–3171. https://doi.org/10.1007/s11263-024-02019-5 (2024).

Uy, M. A. & Lee, G. H. PointNetVLAD: Deep point cloud based retrieval for large-scale place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4470–4479 (2018).

Komorowski, J. Improving point cloud based place recognition with ranking-based loss and large batch training. In 2022 26th International Conference on Pattern Recognition (ICPR), 3699–3705. https://doi.org/10.1109/ICPR56361.2022.9956458 (IEEE, 2022).

Cabrera, J. J., Santo, A., Gil, A., Viegas, C. & Payá, L. MinkUNeXt: Point cloud-based large-scale place recognition using 3D sparse convolutions. Array 28, 100569. https://doi.org/10.1016/j.array.2025.100569 (2025).

Zhao, H., Jiang, L., Jia, J., Torr, P. H. & Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 16259–16268 (2021).

Ma, J. et al. OverlapTransformer: An efficient and yaw-angle-invariant transformer network for LiDAR-based place recognition. IEEE Robot. Autom. Lett. 7, 6958–6965. https://doi.org/10.1109/LRA.2022.3178797 (2022).

Komorowski, J., Wysoczańska, M. & Trzcinski, T. MinkLoc++: LiDAR and monocular image fusion for place recognition. In 2021 International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2021).

Zhao, Z., Yu, H., Lyu, C., Yang, W. & Scherer, S. Attention-enhanced cross-modal localization between spherical images and point clouds. IEEE Sens. J. 23, 23836–23845. https://doi.org/10.1109/JSEN.2023.3306377 (2023).

Karypidis, E., Kakogeorgiou, I., Gidaris, S. & Komodakis, N. DINO-Foresight: Looking into the future with DINO. arXiv preprint arXiv:2412.11673 (2024).

Xian, K., Cao, Z., Shen, C. & Lin, G. Towards robust monocular depth estimation: A new baseline and benchmark. Int. J. Comput. Vis. 132, 2401–2419. https://doi.org/10.1007/s11263-023-01979-4 (2024).

Gui, M. et al. DepthFM: Fast monocular depth estimation with flow matching. arXiv preprint arXiv:2403.13788 (2024).

Ke, B. et al. Repurposing diffusion-based image generators for monocular depth estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9492–9502 (2024).

Ranftl, R., Lasinger, K., Hafner, D., Schindler, K. & Koltun, V. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Trans. Pattern Anal. Mach. Intell. 44, 1623–1637. https://doi.org/10.1109/TPAMI.2020.3019967 (2020).

Bhat, S. F., Birkl, R., Wofk, D., Wonka, P. & Müller, M. ZoeDepth: Zero-shot transfer by combining relative and metric depth. arXiv preprint arXiv:2302.12288 (2023).

Yang, L. et al. Depth Anything: Unleashing the power of large-scale unlabeled data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 10371–10381 (2024).

Yang, L. et al. Depth Anything v2. Advances in Neural Information Processing Systems 37, 21875–21911 (2025).

Hu, W. et al. DepthCrafter: Generating consistent long depth sequences for open-world videos. arXiv preprint arXiv:2409.02095 (2024).

Chen, S. et al. Video Depth Anything: Consistent depth estimation for super-long videos. arXiv preprint arXiv:2501.12375 (2025).

Guo, Y., Garg, S., Miangoleh, S. M. H., Huang, X. & Ren, L. Depth Any Camera: Zero-shot metric depth estimation from any camera. arXiv preprint arXiv:2501.02464 (2025).

Xu, G. et al. What matters when repurposing diffusion models for general dense perception tasks? arXiv preprint arXiv:2403.06090 (2024).

Yan, L., Yan, P., Xiong, S., Xiang, X. & Tan, Y. MonoCD: Monocular 3D object detection with complementary depths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 10248–10257 (2024).

Ganj, A., Zhao, Y., Su, H. & Guo, T. Mobile AR depth estimation: Challenges & prospects. In Proceedings of the 25th International Workshop on Mobile Computing Systems and Applications, 21–26. https://doi.org/10.1145/3638550.3641122 (2024).

Han, J. J., Acar, A., Henry, C. & Wu, J. Y. Depth anything in medical images: A comparative study. arXiv preprint arXiv:2401.16600 (2024).

Hettiarachchi, D., Tian, Y., Yu, H. & Kamijo, S. Depth as attention to learn image representations for visual localization, using monocular images. J. Vis. Commun. Image Represent. 98, 104012. https://doi.org/10.1016/j.jvcir.2023.104012 (2024).

Suvorov, R. et al. Resolution-robust large mask inpainting with Fourier convolutions. arXiv preprint arXiv:2109.07161 (2021).

Radenović, F., Tolias, G. & Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1655–1668. https://doi.org/10.1109/TPAMI.2018.2846566 (2018).

Choy, C., Gwak, J. & Savarese, S. 4D spatio-temporal convnets: Minkowski convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3075–3084 (2019).

Pronobis, A. & Caputo, B. COLD: The COsy localization database. Int. J. Robot. Res. 28, 588–594. https://doi.org/10.1177/0278364909103912 (2009).

Bochkovskii, A. et al. Depth Pro: Sharp monocular metric depth in less than a second. arXiv preprint arXiv:2410.02073 (2024).

Xia, Y. et al. CASSPR: Cross attention single scan place recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 8461–8472 (2023).

Cabrera, J. J., Román, V., Gil, A., Reinoso, O. & Payá, L. An experimental evaluation of siamese neural networks for robot localization using omnidirectional imaging in indoor environments. Artif. Intell. Rev. 57, 198. https://doi.org/10.1007/s10462-024-10840-0 (2024).

Lu, F. et al. CricaVPR: Cross-image correlation-aware representation learning for visual place recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16772–16782 (2024).

Bonarini, A. et al. Rawseeds: Robotics advancement through web-publishing of sensorial and elaborated extensive data sets. In Proceedings of IROS 6, 93 (2006).

Funding

The Ministry of Science, Innovation and Universities (Spain) has supported this work through FPU21/04969 (J.J. Cabrera) and FPU23/00587 (M. Alfaro). This research is part of the project CIPROM/2024/8, funded by Generalitat Valenciana, Conselleria de Educación, Cultura, Universidades y Empleo (program PROMETEO 2025). It is also part of the project PID2023-149575OB-I00 funded by MICIU/AEI/10.13039/501100011033 and by FEDER, UE.

Author information

Contributions

Conceptualization: A.G., O.R.; Methodology: A.G., L.P.; Software: J.J.C., M.A.; Validation: J.J.C., M.A.; Formal Analysis: O.R.; Investigation: J.J.C., M.A.; Resources: O.R.; Data Curation: J.J.C., M.A.; Writing - original draft: J.J.C., M.A.; Writing - review & editing: A.G., L.P.; Visualization: O.R.; Supervision: A.G., L.P.; Project administration: A.G., L.P.; Funding acquisition: A.G., L.P.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cabrera, J.J., Alfaro, M., Gil, A. et al. Robust place recognition under illumination changes using pseudo-LiDAR from omnidirectional images. Sci Rep (2026). https://doi.org/10.1038/s41598-026-39848-y

DOI: https://doi.org/10.1038/s41598-026-39848-y