Abstract

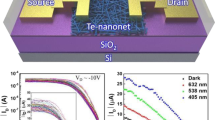

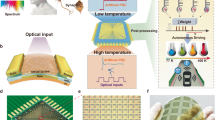

The growth of data-intensive computing tasks requires processing units with higher performance and energy efficiency, but these requirements are increasingly difficult to achieve with conventional semiconductor technology. One potential solution is to combine developments in devices with innovations in system architecture. Here we report a tensor processing unit (TPU) that is based on 3,000 carbon nanotube field-effect transistors and can perform energy-efficient convolution operations and matrix multiplication. The TPU is constructed with a systolic array architecture that allows parallel 2 bit integer multiply–accumulate operations. A five-layer convolutional neural network based on the TPU can perform MNIST image recognition with an accuracy of up to 88% for a power consumption of 295 µW. We use an optimized nanotube fabrication process that offers a semiconductor purity of 99.9999% and ultraclean surfaces, leading to transistors with high on-current densities and uniformity. Using system-level simulations, we estimate that an 8 bit TPU made with nanotube transistors at a 180 nm technology node could reach a main frequency of 850 MHz and an energy efficiency of 1 tera-operations per second per watt.
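As a rough illustration of how a systolic array carries out parallel multiply–accumulate operations, the following Python sketch simulates one cycle by cycle. It is not the authors' design: the abstract does not state the array dimensions, the dataflow or the signedness of the operands, so the sketch assumes unsigned 2 bit operands (values 0 to 3), an output-stationary dataflow and one processing element per output entry. The function name `systolic_matmul` is hypothetical.

```python
import numpy as np


def systolic_matmul(a, b, bits=2):
    """Simulate an output-stationary systolic array computing a @ b.

    Assumptions (not taken from the paper): operands are unsigned
    `bits`-wide integers, and there is one processing element (PE)
    per output entry.
    """
    a = np.asarray(a, dtype=np.int64)
    b = np.asarray(b, dtype=np.int64)
    n, k = a.shape
    k2, m = b.shape
    if k != k2:
        raise ValueError("inner dimensions must match")
    limit = (1 << bits) - 1
    for operand in (a, b):
        if operand.min() < 0 or operand.max() > limit:
            raise ValueError(f"operands must lie in [0, {limit}]")

    acc = np.zeros((n, m), dtype=np.int64)    # accumulator held in each PE
    a_reg = np.zeros((n, m), dtype=np.int64)  # operands travelling left to right
    b_reg = np.zeros((n, m), dtype=np.int64)  # operands travelling top to bottom

    # The last product reaches PE (n-1, m-1) in cycle (n-1) + (m-1) + (k-1).
    for t in range(n + m + k - 2):
        # Every PE hands its operands to its right and lower neighbours.
        a_reg[:, 1:] = a_reg[:, :-1].copy()
        b_reg[1:, :] = b_reg[:-1, :].copy()
        # Skewed injection at the array edges: row i and column j are
        # delayed by i and j cycles so matching operands meet in PE (i, j).
        for i in range(n):
            s = t - i
            a_reg[i, 0] = a[i, s] if 0 <= s < k else 0
        for j in range(m):
            s = t - j
            b_reg[0, j] = b[s, j] if 0 <= s < k else 0
        # All PEs perform one multiply-accumulate in parallel.
        acc += a_reg * b_reg
    return acc


if __name__ == "__main__":
    a = [[1, 2, 3], [0, 1, 2], [3, 3, 3]]
    b = [[3, 0, 1], [2, 2, 2], [1, 3, 0]]
    print(systolic_matmul(a, b))
```

In this sketch each operand is fetched once at the array edge and then reused as it passes from one processing element to the next, which is the property that makes systolic arrays attractive for convolution and matrix multiplication.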

Data availability

The data that support the plots within this paper and the other findings of this study are available from the corresponding author upon reasonable request.

Code availability

The custom codes for this study are available from the corresponding author upon request.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (Project Nos. 2022YFB4401600 and 2021YFA1202904 to Z.Z.), the Natural Science Foundation of China (Project No. 62274006 to J.S. and Project Nos. 62225101 and U21A6004 to Z.Z.) and Peking Nanofab.

Author information

Contributions

Z.Z. and L.-M.P. proposed and supervised the project. J.S. designed the system and circuit and performed the fabrication and measurements. P.Z., D.L. and J.J. performed the simulations. J.S., C.Z., H.X., L.X. and L.L. analysed the data. J.S., Z.Z. and L.-M.P. co-wrote the manuscript. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Electronics thanks Franz Kreupl, Jinbo Pang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Supplementary information

Supplementary Figs. 1–9 and Tables 1–3.

About this article

Cite this article

Si, J., Zhang, P., Zhao, C. et al. A carbon-nanotube-based tensor processing unit. Nat Electron 7, 684–693 (2024). https://doi.org/10.1038/s41928-024-01211-2

DOI: https://doi.org/10.1038/s41928-024-01211-2
