Abstract

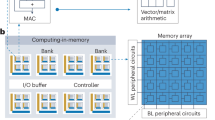

Compute-in-memory (CIM) accelerators based on emerging memory devices are of potential use in edge artificial intelligence (AI) and machine learning applications due to their power and performance capabilities. However, the privacy and security of CIM accelerators need to be ensured before their widespread deployment. Here we explore the development of safe, secure and trustworthy CIM accelerators. We examine vulnerabilities specific to CIM accelerators, including adversarial and side-channel attacks, along with strategies to mitigate these threats. We then discuss the security opportunities of CIM systems, which leverage the intrinsic randomness of the memory devices. Finally, we consider how security can be incorporated into the design of future CIM accelerators for secure and privacy-preserving edge AI applications.

Acknowledgements

This work was supported in part by the Semiconductor Research Corporation (SRC) and Defense Advanced Research Projects Agency (DARPA) through the Applications Driving Architectures (ADA) Research Center and in part by the National Science Foundation under grants CCF-1900675 and CCF-2413293.

Author information

Contributions

Z.W. and W.D.L. conceived the project. All authors had discussions on the conceptualization of the paper and contributed to the writing of the paper at all stages.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Electronics thanks Yanan Guo, Piergiulio Mannocci and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, Z., Wu, Y., Park, Y. et al. Safe, secure and trustworthy compute-in-memory accelerators. Nat Electron 7, 1086–1097 (2024). https://doi.org/10.1038/s41928-024-01312-y

DOI: https://doi.org/10.1038/s41928-024-01312-y
