Abstract

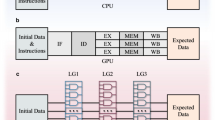

The era of exascale computing presents both exciting opportunities and unique challenges for quantum mechanical simulations. Although the transition from petascale to exascale computing has been marked by a steady increase in computational power, it has been accompanied by a shift towards heterogeneous architectures, in which graphics processing units (GPUs) play a dominant role. The exascale era therefore demands a fundamental shift in software development strategies. This Perspective examines the changing landscape of hardware and software for exascale computing, highlighting the limitations of traditional algorithms and software implementations as heterogeneous architectures become more common in high-end systems. We discuss the challenges of adapting quantum chemistry software to these new architectures, including the fragmentation of the software stack, the need for more efficient algorithms tailored for GPUs (including reduced-precision variants), and the importance of developing standardized libraries and programming models.

Acknowledgements

The authors acknowledge partial support from the European Centre of Excellence in Exascale Computing TREX — Targeting Real Chemical Accuracy at the Exascale. This project has received funding in part from the European Union’s Horizon 2020 — Research and Innovation Program — under grant agreement no. 952165.

Author information

Contributions

All authors contributed to all aspects of this work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Physics thanks the anonymous referees for their contribution to the peer review of this work.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shinde, R., Filippi, C., Scemama, A. et al. Shifting sands of hardware and software in exascale quantum mechanical simulations. Nat Rev Phys 7, 378–387 (2025). https://doi.org/10.1038/s42254-025-00823-7

DOI: https://doi.org/10.1038/s42254-025-00823-7