Abstract

Large language models (LLMs) hold great potential for augmenting psychotherapy by enhancing accessibility, personalization and engagement. However, the roles that LLMs can play in psychotherapy have not yet been systematically examined. In this Perspective, we propose a taxonomy that delineates six specific roles of LLMs in psychotherapy along two key dimensions: artificial intelligence autonomy and emotional engagement. We discuss key computational and ethical challenges, such as emotion recognition, memory retention, privacy and emotional dependency, and offer recommendations to address them.

Acknowledgements

Our research has been supported by the Ministry of Education, Singapore (A-8002610-00-00 (Y.-C.L.) and A-8000877-00-00 (R.Z.)) and an NUS Start-up Grant (A-8000529-00-00). This research work is partially supported by NUS CSSH Seed Fund Grant funding (CSSH-SF24-07 YCL).

Author information

Contributions

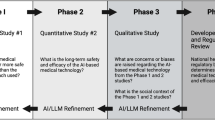

R.Z. conceptualized the study, developed the primary theoretical framework and drafted the paper. H.M. authored sections on computational challenges, and developed and designed the tables and figures. M.N. provided expertise in ensuring the clinical validity and practical applicability of the proposed approaches within mental health settings. Y.-C.L. contributed to the paper writing and made critical revisions for intellectual content. All authors reviewed, edited and approved the final paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Dan Adler, Nils Köbis and Ryan Louie for their contribution to the peer review of this work. Primary Handling Editor: Ananya Rastogi, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, R., Meng, H., Neubronner, M. et al. Computational and ethical considerations for using large language models in psychotherapy. Nat Comput Sci 5, 854–862 (2025). https://doi.org/10.1038/s43588-025-00874-x

DOI: https://doi.org/10.1038/s43588-025-00874-x