Abstract

Adolescents and young adults (AYA) often face mental health challenges and are heavily influenced by technology. Digital health interventions (DHIs), which leverage smartphone data and artificial intelligence, offer substantial potential for personalized and accessible mental health support. However, ethical guidelines for DHI research do not address AYA’s unique developmental and technological needs, leaving crucial ethical questions unanswered. This gap creates a risk of either over- or under-protecting AYA in DHI research, which can slow progress or cause harm. This Perspective examines ethical gaps in DHI research for AYA, focusing on three critical domains: the challenges of passive data collection and artificial intelligence, consent practices, and the risk of exacerbating inequities. We propose an agenda for ethical guidance grounded in the bioethical principles of autonomy, respect for persons, beneficence and justice, and developed through participatory research with AYA, particularly those from marginalized groups. We discuss methodologies for achieving this agenda and for ensuring ethical, youth-focused and equitable DHI research for AYA mental health.


References

Chavira, D. A., Ponting, C. & Ramos, G. The impact of COVID-19 on child and adolescent mental health and treatment considerations. Behav. Res. Ther. 157, 104169 (2022).

Lee, C. M., Cadigan, J. M. & Rhew, I. C. Increases in loneliness among young adults during the COVID-19 pandemic and association with increases in mental health problems. J. Adolesc. Health 67, 714–717 (2020).

Cotton, N. K. & Shim, R. S. Social determinants of health, structural racism, and the impact on child and adolescent mental health. J. Am. Acad. Child Adolesc. Psychiatry 61, 1385–1389 (2022).

Copeland, W. E. et al. Increase in untreated cases of psychiatric disorders during the transition to adulthood. Psychiatr. Serv. 66, 397–403 (2015).

Stroud, C., Walker, L. R., Davis, M. & Irwin, C. E. Jr. Investing in the health and well-being of young adults. J. Adolesc. Health 56, 127–129 (2015).

Lehtimaki, S., Martic, J., Wahl, B., Foster, K. T. & Schwalbe, N. Evidence on digital mental health interventions for adolescents and young people: systematic overview. JMIR Ment. Health 8, e25847 (2021).

Ferrante, M., Esposito, L. E. & Stoeckel, L. E. From palm to practice: prescription digital therapeutics for mental and brain health at the National Institutes of Health. Front. Psychiatry 15, 1433438 (2024).

Wright, M., Reitegger, F., Cela, H., Papst, A. & Gasteiger-Klicpera, B. Interventions with digital tools for mental health promotion among 11–18 year olds: a systematic review and meta-analysis. J. Youth Adolesc. 52, 754–779 (2023).

Chen, T., Ou, J., Li, G. & Luo, H. Promoting mental health in children and adolescents through digital technology: a systematic review and meta-analysis. Front. Psychol. 15, 1356554 (2024).

Piers, R., Williams, J. M. & Sharpe, H. Review: can digital mental health interventions bridge the ‘digital divide’ for socioeconomically and digitally marginalised youth? A systematic review. Child Adolesc. Ment. Health 28, 90–104 (2023).

Chen, B. et al. Comparative effectiveness and acceptability of internet-based psychological interventions on depression in young people: a systematic review and network meta-analysis. BMC Psychiatry 25, 321 (2025).

Jahedi, F., Fay Henman, P. W. & Ryan, J. C. Personalization in digital psychological interventions for young adults. Int. J. Hum. Comput. Interact. 40, 2254–2264 (2024).

Hightow-Weidman, L. B., Horvath, K. J., Scott, H., Hill-Rorie, J. & Bauermeister, J. A. Engaging youth in mHealth: what works and how can we be sure? MHealth 7, 23 (2021).

Zhu, S., Wang, Y. & Hu, Y. Facilitators and barriers to digital mental health interventions for depression, anxiety, and stress in adolescents and young adults: scoping review. J. Med. Internet Res. 27, e62870 (2025).

Götzl, C. et al. Artificial intelligence-informed mobile mental health apps for young people: a mixed-methods approach on users’ and stakeholders’ perspectives. Child Adolesc. Psychiatry Ment. Health 16, 86 (2022).

Hornstein, S., Zantvoort, K., Lueken, U., Funk, B. & Hilbert, K. Personalization strategies in digital mental health interventions: a systematic review and conceptual framework for depressive symptoms. Front. Digit. Health 5, 1170002 (2023).

Li, H., Zhang, R., Lee, Y.-C., Kraut, R. E. & Mohr, D. C. Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. npj Digit. Med. 6, 236 (2023).

Heinz, M. V. et al. Randomized trial of a generative AI chatbot for mental health treatment. NEJM AI https://doi.org/10.1056/AIoa2400802 (2025).

Gutierrez, G., Stephenson, C., Eadie, J., Asadpour, K. & Alavi, N. Examining the role of AI technology in online mental healthcare: opportunities, challenges, and implications, a mixed-methods review. Front. Psychiatry 15, 1356773 (2024).

Ehtemam, H. et al. Role of machine learning algorithms in suicide risk prediction: a systematic review-meta analysis of clinical studies. BMC Med. Inform. Decis. Mak. 24, 138 (2024).

Atmakuru, A. et al. Artificial intelligence-based suicide prevention and prediction: a systematic review (2019–2023). Inf. Fusion 114, 102673 (2025).

Goodin, P., Van Kolfschooten, H. B. & Centola, F. Artficial Intelligence in Mental Healthcare (Mental Health Europe, 2025).

Young, J. et al. The role of AI in peer support for young people: a study of preferences for human- and AI-generated responses. In Proc. CHI Conference on Human Factors in Computing Systems (CHI ’24) 1006 (Association for Computing Machinery, 2024).

Schaaff, C. et al. Youth perspectives on generative AI and its use in health care. J. Med. Internet Res. 27, e72197 (2025).

Smith, K. A. et al. Digital mental health: challenges and next steps. BMJ Ment. Health 26, e300670 (2023).

Laacke, S., Mueller, R., Schomerus, G. & Salloch, S. Artificial intelligence, social media and depression. A new concept of health-related digital autonomy. Am. J. Bioeth. 21, 4–20 (2021).

Seroussi, B. & Zablit, I. Implementation of digital health ethics: a first step with the adoption of 16 European ethical principles for digital health. Stud. Health Technol. Inform. 310, 1588–1592 (2024).

Nebeker, C., Gholami, M., Kareem, D. & Kim, E. Applying a digital health checklist and readability tools to improve informed consent for digital health research. Front. Digit. Health 3, 690901 (2021).

Nebeker, C., Torous, J. & Bartlett Ellis, R. J. Building the case for actionable ethics in digital health research supported by artificial intelligence. BMC Med. 17, 137 (2019).

Psihogios, A. M., King-Dowling, S., Mitchell, J. A., McGrady, M. E. & Williamson, A. A. Ethical considerations in using sensors to remotely assess pediatric health behaviors. Am. Psychol. 79, 39–51 (2024).

Chng, S. Y. et al. Ethical considerations in AI for child health and recommendations for child-centered medical AI. npj Digit. Med. 8, 152 (2025).

Mello, M. M. & Cohen, I. G. Regulation of health and health care artificial intelligence. JAMA 333, 1769–1770 (2025).

Tavory, T. Regulating AI in mental health: ethics of care perspective. JMIR Ment. Health 11, e58493 (2024).

van Kolfschooten, H. & van Oirschot, J. The EU Artificial Intelligence Act (2024): implications for healthcare. Health Policy 149, 105152 (2024).

Dignum, V., Penagos, M., Pigmans, K. & Vosloo, S. Policy Guidance on AI for Children (UNICEF, 2021); https://www.unicef.org/innocenti/reports/policy-guidance-ai-children

Atabey, A. et al. Children & AI Design Code (5Rights Foundation); https://5rightsfoundation.com/children-and-ai-code-of-conduct/

Artificial Intelligence for Children (World Economic Forum, 2022); https://www3.weforum.org/docs/WEF_Artificial_Intelligence_for_Children_2022.pdf

APA’s AI Tool Guide for Practitioners (APA, 2024); https://www.apaservices.org/practice/business/technology/tech-101/evaluating-artificial-intelligence-tool

Urging the Federal Trade Commission to Take Action on Unregulated AI (APA, 2025); https://www.apaservices.org/advocacy/news/federal-trade-commission-unregulated-ai

National Academy of Medicine. An Artificial Intelligence Code of Conduct for Health and Medicine: Essential Guide for Aligned Action (National Academies Press, 2025); https://nap.nationalacademies.org/catalog/29087/an-artificial-intelligence-code-of-conduct-for-health-and-medicine

The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research: Appendix (Department of Health, Education, and Welfare, National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1978).

Cassell, E. J. The principles of the Belmont report revisited. How have respect for persons, beneficence, and justice been applied to clinical medicine? Hastings Cent. Rep. 30, 12–21 (2000).

McNeilly, E. A. et al. Adolescent social communication through smartphones: linguistic features of internalizing symptoms and daily mood. Clin. Psychol. Sci. 11, 1090–1107 (2023).

Stiles-Shields, C. et al. mHealth uses and opportunities for teens from communities with high health disparities: a mixed-methods study. J. Technol. Behav. Sci. 8, 282–294 (2022).

Movahed, S. V. & Martin, F. Ask me anything: exploring children’s attitudes toward an age-tailored AI-powered chatbot. Preprint at https://arxiv.org/abs/2502.14217 (2025).

Duffy, C. 'There are no guardrails.' This mom believes an AI chatbot is responsible for her son’s suicide. CNN Buisness https://edition.cnn.com/2024/10/30/tech/teen-suicide-character-ai-lawsuit/ (2024).

Kurian, N. ‘No, Alexa, no!’: designing child-safe AI and protecting children from the risks of the ‘empathy gap’ in large language models. Learn. Media Technol. https://doi.org/10.1080/17439884.2024.2367052 (2024).

Hadar-Shoval, D., Asraf, K., Mizrachi, Y., Haber, Y. & Elyoseph, Z. Assessing the alignment of large language models with human values for mental health integration: cross-sectional study using Schwartz’s theory of basic values. JMIR Ment. Health 11, e55988 (2024).

Mansfield, K. L. et al. From social media to artificial intelligence: improving research on digital harms in youth. Lancet Child Adolesc. Health 9, 194–204 (2025).

Xu, Y., Prado, Y., Severson, R. L., Lovato, S. & Cassell, J. in Handbook of Children and Screens 611–617 (Springer Nature, 2025).

Wong, Q. California lawmakers consider possible dangers of AI chatbots. Los Angeles Times (9 April 2025); https://www.govtech.com/artificial-intelligence/california-lawmakers-consider-possible-dangers-of-ai-chatbots

Sanderson, C., Douglas, D. & Lu, Q. Implementing responsible AI: tensions and trade-offs between ethics aspects. In Proc. 2023 International Joint Conference on Neural Networks (IJCNN) 1–7 (IEEE, 2023).

"Without It, I Wouldn't Be Here Today" (Born This Way Foundation, 2025); https://bornthisway.foundation/research/lgbtq-young-people-online-communities-2025/

Mustanski, B. Ethical and regulatory issues with conducting sexuality research with LGBT adolescents: a call to action for a scientifically informed approach. Arch. Sex. Behav. 40, 673–686 (2011).

Chleider, J. L., Dobias, M., Sung, J., Mumper, E. & Mullarkey, M. C. Acceptability and utility of an open-access, online single-session intervention platform for adolescent mental health. JMIR Ment. Health 7, e20513 (2020).

Liverpool, S. et al. Engaging children and young people in digital mental health interventions: systematic review of modes of delivery, facilitators, and barriers. J. Med. Internet Res. 22, e16317 (2020).

Samdal, O. et al. Encouraging greater empowerment for adolescents in consent procedures in social science research and policy projects. Obes. Rev. 24, e13636 (2023).

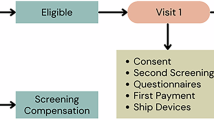

Stiles-Shields, C. et al. Digital mental health screening, feedback, and referral system for teens with socially complex needs: protocol for a randomized controlled trial integrating the Teen Assess, Check, and Heal System into pediatric primary care. JMIR Res. Protoc. 14, e65245 (2025).

Hein, I. M. et al. Informed consent instead of assent is appropriate in children from the age of twelve: policy implications of new findings on children’s competence to consent to clinical research. BMC Med. Ethics 16, 76 (2015).

Crane, S. & Broome, M. E. Understanding ethical issues of research participation from the perspective of participating children and adolescents: a systematic review. Worldviews Evid. Based. Nurs. 14, 200–209 (2017).

Stiles-Shields, C. et al. A call to action: using and extending human-centered design methodologies to improve mental and behavioral health equity. Front. Digit. Health 4, 848052 (2022).

Ramos, G. & Chavira, D. A. Use of technology to provide mental health care for racial and ethnic minorities: evidence, promise, and challenges. Cogn. Behav. Pract. 29, 15–40 (2022).

Whitehead, L., Talevski, J., Fatehi, F. & Beauchamp, A. Barriers to and facilitators of digital health among culturally and linguistically diverse populations: qualitative systematic review. J. Med. Internet Res. 25, e42719 (2023).

Ramos, G., Ponting, C., Labao, J. P. & Sobowale, K. Considerations of diversity, equity, and inclusion in mental health apps: a scoping review of evaluation frameworks. Behav. Res. Ther. 147, 103990 (2021).

Hatef, E., Hudson Scholle, S., Buckley, B., Weiner, J. P. & Austin, J. M. Development of an evidence- and consensus-based Digital Healthcare Equity Framework. JAMIA Open 7, ooae136 (2024).

McCabe, E. et al. Youth engagement in mental health research: a systematic review. Health Expect. 26, 30–50 (2023).

Zhou, B. et al. Youth Perspectives and Recommendations for the United Nations's High-Level Advisory Board on Artificial Intelligence. Foundational Papers of the United Nations High-Level Advisory Body on AI (United Nations, 2023).

Gurevich, E., El Hassan, B. & El Morr, C. Equity within AI systems: what can health leaders expect? Healthc. Manage. Forum 36, 119–124 (2023).

Kotek, H., Dockum, R. & Sun, D. Gender bias and stereotypes in large language models. In Proc. ACM Collective Intelligence Conference 12–24 (Association for Computing Machinery, 2023).

Kharchenko, J., Roosta, T., Chadha, A. & Shah, C. How well do LLMs represent values across cultures? Empirical analysis of LLM responses based on Hofstede cultural dimensions. Preprint at https://arXiv:2406.14805 (2024).

Karnik, N. S., Afshar, M., Churpek, M. M. & Nunez-Smith, M. Structural disparities in data science: a prolegomenon for the future of machine learning. Am. J. Bioeth. 20, 35–37 (2020).

Wells, K. An eating disorders chatbot offered dieting advice, raising fears about AI in health. Shots https://www.npr.org/sections/health-shots/2023/06/08/1180838096/an-eating-disorders-chatbot-offered-dieting-advice-raising-fears-about-ai-in-health (2023).

Gabriel, S., Puri, I., Xu, X., Malgaroli, M. & Ghassemi, M. in Findings of the Association for Computational Linguistics: EMNLP 2024 (eds Al-Onaizan, Y. et al.) 2206–2221 (Association for Computational Linguistics, 2024).

Ramos, G. et al. App-based mindfulness meditation for people of color who experience race-related stress: protocol for a randomized controlled trial. JMIR Res. Protoc. 11, e35196 (2022).

Thomas et al. Leveraging data and digital health technologies to assess and impact social determinants of health (SDoH): a state-of-the-art literature review. Online J. Public Health Inform. 13, E14 (2021).

McGorry, P. D. et al. The Lancet Psychiatry Commission on youth mental health. Lancet Psychiatry 11, 731–774 (2024).

Bailey, K. et al. Benefits, barriers and recommendations for youth engagement in health research: combining evidence-based and youth perspectives. Res. Involv. Engagem. 10, 92 (2024).

Zidaru, T., Morrow, E. M. & Stockley, R. Ensuring patient and public involvement in the transition to AI-assisted mental health care: a systematic scoping review and agenda for design justice. Health Expect. 24, 1072–1124 (2021).

Schueller, S. M., Hunter, J. F., Figueroa, C. & Aguilera, A. Use of digital mental health for marginalized and underserved populations. Curr. Treat. Options Psychiatry 6, 243–255 (2019).

Juntunen, C. L. et al. Centering equity, diversity, and inclusion in ethical decision-making. Prof. Psychol. Res. Pr. 54, 17–27 (2023).

Stiles-Shields, C., Ramos, G., Ortega, A. & Psihogios, A. M. Increasing digital mental health reach and uptake via youth partnerships. npj Ment. Health Res. 2, 9 (2023).

Figueroa, C. et al. Skepticism and excitement when co-designing just-in-time mental health apps with minoritized youth. Preprint at SSRN https://doi.org/10.2139/ssrn.5039034 (2024).

Afkinich, J. L. & Blachman-Demner, D. R. Providing incentives to youth participants in research: a literature review. J. Empir. Res. Hum. Res. Ethics 15, 202–215 (2020).

Lyon, A. R., Brewer, S. K. & Areán, P. A. Leveraging human-centered design to implement modern psychological science: return on an early investment. Am. Psychol. 75, 1067–1079 (2020).

Malloy, J., Partridge, S. R., Kemper, J. A., Braakhuis, A. & Roy, R. Co-design of digital health interventions with young people: a scoping review. Digit. Health 9, 20552076231219117 (2023).

van Velsen, L., Ludden, G. & Grünloh, C. The limitations of user- and human-centered design in an eHealth context and how to move beyond them. J. Med. Internet Res. 24, e37341 (2022).

Khawaja, J., Bagley, C. & Taylor, B. Breaking the silence: critical discussion of a youth participatory action research project. JCPP Adv. 4, e12283 (2024).

Leman, A. M. et al. An interdisciplinary framework of youth participatory action research informed by curricula, youth, adults, and researchers. J. Res. Adolesc. 35, e13007 (2024).

Figueroa, C., Marin, L., de Reuver, M. and Jaff, M. Translating well-being values into design features of social media platforms: a value sensitive design approach. Preprint at OSF https://osf.io/preprints/osf/wxtj7_v1 (2024).

Farmer, N. et al. Use of a community advisory board to build equitable algorithms for participation in clinical trials: a protocol paper for HoPeNET. BMJ Health Care Inform. 29, e100453 (2022).

Chang, M. A. et al. Co-design partners as transformative learners: imagining ideal technology for schools by centering speculative relationships. In Proc. CHI Conference on Human Factors in Computing Systems 1–15 (ACM, 2024).

Thorn, P. et al. Developing a suicide prevention social media campaign with young people (the #chatsafe project): co-design approach. JMIR Ment. Health 7, e17520 (2020).

Teixeira, S., Augsberger, A., Richards-Schuster, K. & Sprague Martinez, L. Participatory research approaches with youth: ethics, engagement, and meaningful action. Am. J. Community Psychol. 68, 142–153 (2021).

Bevan et al. Practitioner review: co-design of digital mental health technologies with children and young people. J. Child Psychol. Psychiatry 61, 928–940 (2020).

Cockerham, D. Participatory action research: building understanding, dialogue, and positive actions in a changing digital environment. Educ. Technol. Res. Dev. 72, 2763–2791 (2024).

Gerdes, A. & Frandsen, T. F. A systematic review of almost three decades of value sensitive design (VSD): what happened to the technical investigations? Ethics Inf. Technol. 25, (2023).

Acknowledgements

C.A.F. is supported through the ‘High Tech for a Sustainable Future’ capacity-building program of the 4TU Federation in the Netherlands. The funders had no role in the content of the manuscript. G.R. is supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number T32HL166114. N.S.K.’s work on this project is supported, in part, by the J. Usha Raj Endowed Professorship at the University of Illinois Chicago. C.S.-S.’s work is supported in part by a grant from the National Institute of Mental Health (K08 MH125069). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or any of the institutions named here.

Author information


Contributions

C.S.-S. drafted the initial version of the paper. C.A.F. led the development of subsequent drafts and facilitated discussion sessions among the authors. C.A.F., C.S.-S., E.E.A., G.R., N.S.K., A.M.P. and O.A. participated in at least one group discussion. P.B. and E.E. engaged in a dedicated session with C.A.F. and met with her individually to discuss their contributions, which they additionally provided in writing. C.A.F. also held a separate meeting with M.d.H. to incorporate their input. C.A.F., C.S.-S., E.E.A., G.R., N.S.K., A.M.P., O.A., P.B., E.E. and M.d.H. contributed to editing and approved the final version of the paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review


Nature Mental Health thanks Marcello Ienca, Tamar Tavory and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Figueroa, C.A., Ramos, G., Psihogios, A.M. et al. Advancing youth co-design of ethical guidelines for AI-powered digital mental health tools. Nat. Mental Health 3, 870–878 (2025). https://doi.org/10.1038/s44220-025-00467-7

