Abstract

The Leshan Giant Buddha’s ushnisha (Head Bun) has suffered from loss of lime plaster, cracks, and biological damage, compromising its structural integrity and reducing the effectiveness of the drainage system in the Buddha's head. The infiltration of moisture has led to water damage within the statue, significantly accelerating its weathering. This situation urgently requires protection and reinforcement measures. Detecting deterioration in the ushnisha is a crucial step in the preservation process. In this study, we utilized two deep learning models for pixel-level semantic segmentation of the damage. Due to the small size of the cracks, a weighted loss function was applied to improve both the training speed of the model and the efficiency of crack identification. This weighting strategy proved effective for both models. The weighted K-Net model achieved a mean accuracy (mAcc) of 90.23% and a mean intersection-over-union (mIoU) of 69.55%, with a damage segmentation speed of 7 images per second, which is 1309 times faster than manual segmentation. By applying the trained deep learning models to re-examine the ushnisha, we successfully identified damage that had been overlooked during manual annotation. Using the model’s enhanced results, we conducted a comprehensive quantification of the damage across all ushnisha and identified the most severely affected areas. Additionally, we performed a model interpretability analysis to explain the decision-making process and principles of the deep learning models. This research provides significant practical value for detecting and quantifying damage in the Leshan Giant Buddha.

Similar content being viewed by others

Introduction

The Leshan Giant Buddha, located in Leshan City, Sichuan Province, was carved in 713 AD, during the early years of Emperor Xuanzong’s reign in the Tang Dynasty. It is one of the world's largest stone-carved seated Maitreya Buddha statues [1]. In February 1982, the State Council of China designated the Buddha as a National Key Cultural Relic, and in December 1996, UNESCO included it in the World Heritage List for both natural and cultural heritage. The ushnisha atop the Buddha's head is composed of 1021 individual sandstone blocks, bonded with an inner layer of lime and covered by two layers of plaster. This design not only holds symbolic significance but also serves a practical purpose. The 18-layer structure of the ushnisha forms a drainage system, with transverse drainage channels located at the 4th, 9th, and 18th layers, decorated with lime plaster. This drainage system works in conjunction with the Buddha’s collar, robe folds, chest drainage channels, and the ventilation tunnels behind the ears (which connect to the cliffs), creating a complete network of drainage and ventilation. This network helps to slow down erosion and weathering.

However, prolonged exposure to a harsh geological conditions has led to widespread issues within the ushnisha, including cracking (Fig. 1c), loss of lime plaster (Fig. 1d), and biological damage from plant growth (Fig. 1e). These issues have diminished the drainage system's effectiveness, allowing rainwater to infiltrate the Buddha's body, altering the physical and mechanical properties of the bedrock, and thereby compromising the overall stability of the statue. This poses a potential threat to its long-term preservation. Given the critical role that the ushnisha plays in the Buddha’s structure, its deterioration has significantly impacted the head’s drainage efficiency. Consequently, the timely protection and reinforcement of the ushnisha have become a priority in current cultural heritage conservation efforts.

Since 1914, the Leshan Giant Buddha has undergone seven major restoration efforts by relevant departments and organizations. However, inspecting the 1021 ushnisha elements has always been a daunting task, as manual detection is time-consuming, labor-intensive, and inefficient. Moreover, due to the Buddha’s massive size—standing 71 m tall, with a head height of 14.7 m and an ear length of 6.72 m—manual inspection poses significant safety risks. Thus, exploring more efficient, safe, and cost-effective inspection methods is crucial for improving the Buddha’s preservation and ensuring sustainable conservation [2, 3].

As deep learning advances, the development of automated digital systems, tools, and techniques has become a focal point of the Industry 4.0 revolution [4]. Utilizing algorithms such as convolutional neural networks (CNNs) based on deep learning to detect defects in cultural heritage (CH) images can help overcome the time-consuming and labor-intensive nature of manual inspections [5]. In practical applications, deep learning technology has been widely used in the inspection of bridges [6,7,8], roads [9, 10], the construction industry [11, 12], and modern concrete buildings and surfaces [13,14,15]. In the CH domain, one of the latest digital technologies is AI-based automation [16]. Research on applying deep learning-based computer vision in CH is expanding and can be categorized into three main areas [17].

The first category involves direct classification and assessment of cultural heritage defects and damage in images. For example, Karimi et al. [18] utilized the Inception-ResNet-v2 model to detect cracks, spalling, erosion, and salt weathering defects in the bridges of Isfahan, achieving accuracy, precision, and recall rates of 96.58%, 96.96%, and 96.24%, respectively. Meklati et al. [19] used the MobileNetV2 model to detect wall damage, achieving a weighted average precision of 0.868 in identifying common surface damage types in wall photographs. Wang et al. [20] used a deep learning framework to detect multiple types of damage, such as spalling, cracks, and weathering, in the walls of Beijing's Forbidden City, achieving an average accuracy of 94.3%. They then applied a Faster R-CNN model based on the ResNet101 framework for real-time damage detection in masonry buildings [2]. Ugail et al. [21] employed deep transfer learning for visual analysis and attribution of paintings by Raphael, demonstrating an accuracy of 98% in image-based classification tasks during validation on a test set of well-known and authentic Raphael paintings.

The second category involves the use of object detection algorithms to locate defects and damage in cultural heritage images, mainly including the YOLO [22] series and R-CNN [23, 24] series of algorithms. For instance, Yang et al. [25] used the YOLOv4 machine learning model to automatically detect five types of damage in historical gray brick buildings within the buffer zone of the World Cultural Heritage site in Macau. Mishra et al. [26] applied the YOLOv5 framework to detect four types of defects—discoloration, exposed bricks, cracks, and spalling—in monuments in New Delhi, India. Mansuri et al. [27] utilized the Faster R-CNN framework to detect three types of defects on a monument in Surat, India, and summarized various AI techniques and algorithms used in CH conservation in related literature [28], highlighting significant advances in AI for heritage conservation. Pathak et al. [29] used the Faster R-CNN model to detect damage in 3D point cloud data collected from cultural heritage sites and to locate detected cracks and spalling on the 3D model.

The third category involves the detection and segmentation of cultural heritage defects and damage using semantic segmentation models. This task is typically more complex than image classification and object detection but has seen some successful applications in the CH field. For example, Yuan et al. [30] used the Res-UNet semantic segmentation method to identify cracks and painting losses in the Forbidden City in Beijing, achieving accuracy and F1-score values of 98.19% and 93.42%, respectively. Wu et al. [31] proposed a new U-Net convolutional neural network for mural crack segmentation tasks, demonstrating precise segmentation performance with an mIoU value of 0.7731. Garrido et al. [32] combined infrared thermography technology and the Mask R-CNN algorithm, using thermal image sequences as input for the Mask R-CNN learning process to automatically detect and segment surface and subsurface defects. Liu et al. [33] used the DeepLabV3 series of semantic segmentation models to monitor the semantic segmentation of crowdsourced images of historical facades, achieving an IoU value of 66.9%.

The aforementioned studies demonstrate the widespread and successful application of deep learning algorithms in the CH domain, showing the feasibility of replacing manual defect detection with these techniques. However, in the numerous restorations and conservation efforts of the Leshan Giant Buddha over the years, the ushnisha damage has been manually detected, with no deep learning methods employed thus far. To fill this research gap, this study conducted field surveys to obtain images of the ushnisha and employed two deep learning models, PSPNet and K-Net, to perform pixel-level segmentation experiments on the damage. To improve the accuracy of crack damage segmentation, a weighted strategy was proposed, which adjusts the model’s loss function according to the proportion of the damaged area. Additionally, the study conducted secondary damage detection based on the model’s generalization ability, identifying cracks missed during manual annotation, and completed statistical and quantitative evaluations of all ushnisha in the dataset. Finally, to address the challenge of model interpretability in deep learning, this study utilized the Grad-CAM algorithm to perform interpretability analysis on the damage identification results, visually displaying the areas of interest during model detection through heatmaps, thereby gaining a deeper understanding of the model’s decision-making process.

Data collection and workflow

Due to the loss of lime plaster on the ushnisha, rainwater can directly infiltrate these areas during rainfall, causing damage to the Buddha's structure. The cyclical growth of plants on the ushnisha leads to dehydration, shrinkage, flaking, and spalling of the rock surface, exposing new rock faces and exacerbating deterioration. Cracking is an early stage of the damage process, where plant roots grow along the microcracks in the ushnisha, further damaging it through root wedging. Additionally, the organic acids secreted by the roots contribute to the corrosion of the ushnisha [34]. Consequently, this study focuses on three types of damage: loss of lime plaster, biological damage from plant growth, and cracks. To analyze these forms of deterioration, we collected images of ushnishas exhibiting these specific damage patterns. The image data was acquired in May 2021 using a Canon EOS 200D II camera with a resolution of 2976 × 1984. To ensure image quality and consistency, all photographs were taken under clear weather conditions. This approach minimized the impact of light variations and shadows on image quality, thereby enhancing the accuracy and reliability of subsequent analysis.

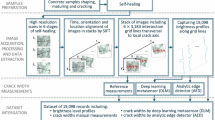

As shown in Fig. 2, the complete workflow of this study is outlined as follows. First, 149 images of the ushnisha on the head of the Leshan Giant Buddha were collected in the field. These images underwent preprocessing, including noise reduction, contrast enhancement, and brightness adjustment. Next, the Labelme tool was used to annotate the damage in the images, constructing a dataset for training the semantic segmentation neural network. A total of 120 images were designated as the training set, while 29 images were used as the test set. The training set was then augmented through operations such as flipping, translation, sharpening, HSV transformation, and noise addition, resulting in a final training set of 360 images. It should be noted that in this study, the data augmentation strategies were combined in a probabilistic manner, meaning that each image may have undergone one or more augmentation techniques (for example, an image may have both noise addition and sharpening applied at the same time), with the goal of obtaining more diverse training samples. These images were used to train the deep learning models, which were subsequently evaluated on the test set to assess their performance. Since the images in the test set were entirely new to the models, they provided an effective test of the models’ generalization capabilities. Finally, based on the damage segmentation results from the models, a quantitative assessment of all ushnisha damage was completed.

Methodology

Semantic segmentation models

The objective of semantic segmentation is to classify each pixel in an image into a specific category. This task requires not only identifying objects within the image but also precisely localizing them. Compared to image classification and object detection, semantic segmentation is significantly more challenging due to its pixel-level precision requirements. In this study, we employed two models, the Pyramid Scene Parsing Network (PSPNet) and K-Net, to segment the damage on the ushnisha of the Leshan Giant Buddha’s head. Additionally, we proposed a weighted strategy based on the proportion of damage area to optimize the training process.

PSPNet

PSPNet [35] enhances the model’s understanding of complex scenes by capturing global contextual information through the multi-scale pooling of feature maps. The architecture of PSPNet is typically based on a pre-trained convolutional neural network (e.g., ResNet18, ResNet50, or ResNet101) as the backbone, responsible for extracting fundamental image features. These features are then processed through a pyramid pooling module that integrates multi-scale information, and finally, a convolutional layer generates the refined segmentation predictions.

Figure 3 illustrates the structure of the PSPNet model. Initially, the input image is resized to 512 × 512 pixels, and feature extraction is performed using ResNet50 [36], resulting in a feature map that is 1/8th the size of the original input. The pyramid pooling module then processes these features, generating a feature map that incorporates global information. This is concatenated with the pre-pooled features and passed through a convolutional layer to produce the segmentation output. The pyramid pooling module divides the feature map into grids of 6 × 6, 3 × 3, 2 × 2, and 1 × 1, performing multi-scale pooling to capture contextual information at various scales. PSPNet excels in handling complex scenes and object boundaries, making it particularly suitable for multi-scale damage detection tasks in this study, including the identification of loss of lime plaster, biological damage, and cracks of varying sizes.

K-Net

K-Net [37] is a neural network architecture designed for image segmentation that enhances segmentation performance through the learning and updating of dynamic convolutional kernels. The core concept of K-Net is to dynamically adjust the convolutional kernel parameters during the feature extraction and segmentation process, thereby improving the model's generalization ability and accuracy. Figure 4 illustrates the structure of the K-Net model. The entire network process can be divided into the following five steps:

-

1)

Input and Feature Extraction: In this study, the Swin Transformer was selected as the backbone network to extract features from the input image, which are then processed through a neck network to output the feature map F.

-

2)

Kernel Initialization and Mask Prediction: Semantic kernels and instance kernels are generated through a learning mechanism, which are then convolved with the feature map F to produce initial mask predictions.

-

3)

Kernel Updating and Dynamic Adjustment: The convolutional update head utilizes the dynamic kernels from the previous stage to convolve with the feature map, updating and outputting new dynamic kernels. This process is repeated multiple times to progressively optimize the segmentation results.

-

4)

Category and Mask Prediction: After each kernel update, category prediction is performed, and the updated dynamic kernels are used to predict the mask, generating the final segmentation results.

-

5)

Output: The final segmentation output is produced by combining the category prediction and the mask prediction.

K-Net’s dynamic convolutional kernel updating mechanism and multi-scale feature fusion enable the network to adaptively adjust the convolutional kernel parameters, improving image feature capture and significantly enhancing the accuracy and efficiency of semantic segmentation.

Weighted loss function

During the optimization process, the commonly used standard cross-entropy loss function is formulated as shown in Eq. (1):

Here, \(C\) represents the number of classes, \({y}_{i}\) is the indicator variable for the actual class, which is 1 for the correct class and 0 for the others.\(\hat{y}_{i}\) is the predicted probability of the \({i}^{th}\) class by the model. The closer \(\hat{y}_{i}\) is to 1, the closer \(\log \left( {\hat{y}_{i} } \right)\) approaches 0, thereby reducing the loss value, which aligns with the optimization objective.

However, the standard cross-entropy loss function assigns equal weights to all samples. In detection tasks, if there is an imbalance between positive and negative samples, a large number of easily classified negative samples may dominate, while a small number of difficult samples and positive samples may not contribute effectively, leading to a decrease in model accuracy [38]. Similar issues can arise in semantic segmentation tasks.

In this study, the three types of damage—biological damage, crack damage, and loss of lime plaster—are unevenly distributed in the images. Crack damage appears in 87.2% of the images, biological damage in 92.6%, while loss is relatively rare, occurring in only 45.6% of the images. Although crack damage is more frequent, its area is relatively small, whereas biological damage typically cover a larger area. Moreover, multiple types of damage often coexist on the same ushnisha. This imbalance impacts the recognition of crack damage in semantic segmentation tasks. Therefore, this study proposes a weighted strategy to improve the accuracy of crack detection.

Specifically, the weighted strategy adjusts the loss function by assigning weights to each class, where the weights are inversely proportional to the area ratio of each class. Let \({area}_{i}\) represent the area ratio of the \({i}^{th}\) type of damage. The weight \({w}_{i }{\prime}\) is calculated using Eq. (2) and Eq. (3), which increases the weight of the loss value for minority classes. The final weighted cross-entropy loss function is shown in Eq. (4):

Transfer learning

Transfer learning is a method that applies the knowledge acquired by a model in one task to a related task [39, 40]. The core idea is to transfer pre-trained models or their weight parameters to a new task, reducing the need for large amounts of data, shortening the training time, and improving model performance [41]. In this study, a transfer learning strategy was employed to provide a strong starting point for the model, which reduces the demand for extensive labeled data and speeds up the convergence of the training process. Specifically, the weights of the pre-trained model were loaded into the base model, and all layers were frozen by setting “trainable = False”, which reduces both training time and memory usage.

Grad-CAM

Gradient-weighted Class Activation Mapping (Grad-CAM) [42] is a visualization technique for deep learning models, particularly convolutional neural networks. It generates a Class Activation Map (CAM) to help understand the model's decision-making process by highlighting the regions in the input image that had the greatest influence on its decision. The core idea of Grad-CAM is to use the gradient information to produce a heatmap that highlights the areas with the most significant impact on the model’s output. The process consists of six main steps:

-

1)

Forward Propagation: The input image is passed through the neural network to obtain the final classification score.

-

2)

Gradient Calculation: For the target class, the gradient of the score with respect to the feature map of the last convolutional layer is calculated. These gradients indicate which features most influence the target class score.

-

3)

Global Average Pooling: The gradients for each channel of the feature map are globally averaged to obtain the weight for each channel. These weights reflect the contribution of each feature map to the target class score.

-

4)

Weighted Feature Map Generation: The computed weights are used to perform a weighted sum of the corresponding feature maps.

-

5)

ReLU Activation: ReLU is applied to eliminate negative values, ensuring that only regions with a positive influence on the target class are retained.

-

6)

Heatmap Upsampling: The generated heatmap is upsampled to match the size of the input image, allowing it to be overlaid on the original image for visualization.

Given the output feature map \({A}^{k}\) from a convolutional layer and the score \({y}^{c}\) for the target class, the weight \({\alpha }_{k}^{c}\) for each channel is calculated as shown in Eq. (5):

where \(Z\) is the total number of pixels in the feature map, and \({A}_{ij}^{k}\) is the value at position \((i,j)\) in the \({k}^{th}\) feature map. The final Grad-CAM heatmap \({L}^{c}\) is calculated using Eq. (6), which visually illustrates the regions the model focused on during the decision-making process, thereby providing an explanation for the model's reasoning.

Performance metrics

In semantic segmentation tasks, Mean Accuracy (mAcc) and Mean Intersection over Union (mIoU) are commonly used evaluation metrics. mAcc focuses on the accuracy of predictions within each category, helping to assess the precision of classification, while mIoU is a standard metric for evaluating the overall performance of semantic segmentation, particularly useful in handling datasets with class imbalance. In this study, both metrics were calculated on the test dataset to assess model accuracy, while Frames Per Second (FPS) evaluated the model's speed, providing a comprehensive evaluation of model performance.

Mean accuracy (mAcc)

mAcc measures the proportion of correctly predicted pixels for each class relative to the total number of pixels in that class, focusing on the accuracy of pixel predictions for each category. Given \(C\) classes, where \({n}_{i}\) represents the number of correctly predicted pixels for class \(i\), and \({t}_{i}\) represents the total number of actual pixels for class \(i\) the accuracy for class \(i\), \({\text{Acc}}_{i}\), can be calculated using Eq. (7). The mAcc is the average of the accuracy values across all classes, as shown in Eq. (8).

mAcc assesses classification performance for each class, preventing the overestimation of overall performance due to majority class dominance. However, mAcc is less sensitive to imbalanced datasets, especially when class distributions are highly uneven. Therefore, it is important to use the mIoU metric in conjunction with mAcc to more objectively evaluate the model's performance.

Mean intersection over union (mloU)

In classification tasks, metrics like recall, precision, and F-score are commonly used, whereas mIoU is more commonly used in segmentation tasks. mIoU measures the ratio of the overlap area to the union area between the predicted and the actual results. The IoU for each class \(i\) can be expressed using Eq. (9):

where \({\text{TP}}_{i}\) (True Positive) denotes the number of pixels correctly predicted as class \(i\); \({\text{FP}}_{i}\) (False Positive) indicates the number of pixels incorrectly predicted as class \(i\); and \({\text{FN}}_{i}\) (False Negative) represents the number of pixels that belong to class \(i\) but were incorrectly predicted. The mIoU is the average IoU across all classes, as shown in Eq. (10):

The advantage of mIoU is that it accounts for the impact of misclassification when assessing model performance, offering better robustness against class imbalance. However, mIoU can be more sensitive to performance on smaller classes, where the impact of misclassification is relatively more significant. By combining both mIoU and mAcc metrics to evaluate model accuracy, a more comprehensive assessment of model performance can be achieved.

Results and discussion

In the process of training the model, the batch size refers to the number of images input during each training iteration. After all the images in the training set have passed through the model once, an epoch is completed. These hyperparameters typically need to be adjusted based on the hardware and the characteristics of the dataset. The hardware configuration for this study includes an NVIDIA RTX3090Ti GPU with 24 GB of memory and a 6-core Xeon Gold 6142 CPU. Given the high demands of segmentation tasks on image data size, the input image size was set to 512 × 512 to maintain a high level of recognition accuracy. The final configuration set the batch size of 4, with 10,000 epochs of training. The model’s performance was evaluated on the test set every 10 epochs, and the best-performing model on the test set was saved to prevent overfitting.

Training and validation of PSPNet

In this study, the PSPNet model was trained for 10,000 epochs, during which the error between the predicted results and the actual results (Loss) was evaluated. The loss curve during the training process is shown in Fig. 5. As the number of iterations increased, the Loss value steadily decreased, indicating that the model was gradually converging. Statistical analysis showed that the area proportions of the deterioration types were 28.2% for missing parts, 64.7% for plant-related deterioration, and 7.2% for cracks. Using Eq. (2) and Eq. (3), the weight ratios for the three types of deterioration were calculated as 0.187:0.081:0.732. Figure 5 shows that the use of the weighted strategy significantly accelerated the reduction of the Loss value.

As training progressed, the Loss value stabilized during the later stages. To prevent overfitting—where the model performs well on the training set but underperforms on the test set—validation was conducted every 10 epochs. During validation, the mAcc and mIoU values were calculated on the test set, and only the best-performing model was saved. As shown in Fig. 6, after the 2000th epoch, the improvement in accuracy metrics became gradual. Although there were minor fluctuations, the overall performance remained stable. Without weighting, the highest mAcc on the test set was 69.40%, and the highest mIoU was 61.26%. Using the weighted strategy, mAcc increased to 80.04%, and mIoU to 61.31%. Although the improvement in mIoU was small, the mAcc increased by 10.64%, which is a significant enhancement. Figure 6 also shows that with the weighted strategy, both mAcc and mIoU were notably higher in the early stages of training compared to the unweighted case, with mAcc showing particularly substantial improvement. It is worth noting that these maximum values did not appear simultaneously. For the unweighted case, the maximum mAcc occurred at 8,880 training steps; with the weighted strategy, the maximum mAcc occurred earlier, at 2210 steps, indicating that the weighted strategy improved training efficiency. Similarly, the maximum mIoU occurred at 8610 steps without weighting and at 3460 steps with weighting, further validating the effectiveness of the weighted strategy in enhancing training efficiency.

Changes in various metrics on the PSPNet test set. The blue dashed line represents the mAcc metric without the weighted strategy, with a maximum at Step = 8880; the blue solid line represents mAcc with the weighted strategy, with a maximum at Step = 2210. The green dashed line represents the mIoU metric without the weighted strategy, with a maximum at Step = 8610; the green solid line represents mIoU with the weighted strategy, with a maximum at Step = 3460

Table 1 provides a detailed evaluation of the accuracy metrics for each type of deterioration during the training process. Comparative analysis of the Acc metrics reveals that although the accuracy for the background class slightly decreased, the accuracy for all three types of deterioration improved, particularly for cracks, where the Acc increased by 37.02%, showing the most significant improvement. The Acc for missing parts increased by 1.97%, and for plant-related deterioration, it increased by 6.19%. The mean Acc, calculated as the average of the background and the three types of deterioration, increased by 10.64%, consistent with the results in Fig. 6. Although the weighted strategy significantly improved the Acc metric, the IoU improvement was modest. Therefore, this study also explored the K-Net model to further verify the effectiveness of the weighted strategy.

Training and validation of K-Net

The loss curve during the training process is shown in Fig. 7. As the number of iterations increased, the Loss value steadily decreased, showing that the model was converging, similar to the PSPNet model’s training process. After applying the weighted strategy to the loss function, the rate of decrease in Loss was faster.

To prevent overfitting, validation was conducted every 10 epochs, calculating the mAcc and mIoU values on the test set. As shown in Fig. 8, the introduction of learning kernels, dynamic kernels, and the convolutional update mechanism in the K-Net model, along with the Swin Transformer as the backbone for feature extraction, led to significant improvements in mAcc and mIoU values compared to the PSPNet model. Without weighting, the highest mAcc in the K-Net model on the test set was 81.61%, and the highest mIoU was 67.15%. Compared to PSPNet, mAcc increased by 12.1% and mIoU by 5.89%. With the weighted strategy, the K-Net model's performance further improved, reaching an mAcc of 90.23% and an mIoU of 69.55%. Compared to the unweighted case, mAcc increased by 8.62% and mIoU by 2.3%. As Fig. 8 shows, the mAcc and mIoU curves with the weighted strategy were higher than those without, indicating superior performance.

Changes in various metrics during the K-Net model training on the test set. The blue dashed line represents the mAcc variation without the weighted strategy, with a maximum at Step = 780; the blue solid line represents the mAcc variation with the weighted strategy, with a maximum at Step = 660. The green dashed line represents the mIoU variation without the weighted strategy, with a maximum at Step = 8380; the green solid line represents the mIoU variation with the weighted strategy, with a maximum at Step = 8290

In terms of training efficiency, the K-Net model's performance was consistent with that of the PSPNet model. With the weighted strategy, the maximum mAcc occurred at the 660th iteration, while without weighting, it occurred at the 780th iteration, advancing by 100 steps, indicating higher training efficiency and faster mAcc improvement with the weighted strategy. Similarly, for the mIoU metric, the maximum value without weighting occurred at the 8380th iteration, while with weighting, it advanced to the 8290th iteration, 90 steps earlier, further demonstrating the weighted strategy’s effectiveness in enhancing training efficiency.

Table 2 provides a detailed evaluation of the accuracy metrics for each type of deterioration during the K-Net model training process. A comparative analysis of the Acc metrics shows that while the background class accuracy slightly decreased, recognition accuracy for all three deterioration types improved significantly, particularly for cracks, where the Acc increased by 25.15%, a notable improvement. The Acc for missing parts increased by 3.74%, and for plant-related deterioration, it increased by 7.43%. In terms of IoU metrics, the most significant improvement was in the loss of lime plaster, with a 7.73% increase; IoU for plant-related deterioration and cracks also increased by 0.59% and 1.47%, respectively. The mean values of Acc and IoU for the background and the three types of deterioration showed improvements of 8.62% and 2.4%, respectively, under the weighted strategy, corresponding to the results in Fig. 8, with the maximum mAcc reaching 90.23% and the maximum mIoU reaching 69.55%.

Comparison of recognition results

As shown in Fig. 9, different grayscale values are used to represent various types of deterioration and the background. The background is depicted in black (grayscale value of 0), missing deterioration in dark gray (value of 80), plant-related deterioration in light gray (value of 160), and cracks in white (value of 240), effectively distinguishing between the different types of deterioration. The figure comprises six columns of images. The first column displays the original images of the ushnisha, presenting the actual scene to be segmented. The second column shows the deterioration information manually annotated by experts, representing the true distribution of the deterioration. The third column presents the deterioration segmentation results using the PSPNet model. The fourth column shows the optimized segmentation results of the PSPNet model after applying the weighted strategy. The fifth column presents the deterioration segmentation results using the K-Net model, and the sixth column shows the optimized segmentation results of the K-Net model after applying the weighted strategy. The figure shows that the segmentation results of the models closely align with the actual deterioration conditions. Whether dealing with a single type of deterioration or multiple types, the models accurately segment the major deterioration areas, meeting the requirements of detection. However, there are differences in the recognition results of certain details between the models. For example, in the ushnisha image numbered 657, the weighted PSPNet model exhibited a more ‘aggressive’ segmentation, displaying thicker cracks. Similarly, in the ushnisha image numbered 720, the weighted K-Net model showed much better recognition of a long crack compared to the unweighted results.

Deterioration segmentation results. The left side shows the ushnisha’s number; the first column is the original image; the second column is the annotation information; the third column shows the segmentation results of the PSPNet model; the fourth column shows the segmentation results of the PSPNet model with the weighted strategy; the fifth column shows the segmentation results of the K-Net model; and the sixth column shows the segmentation results of the K-Net model with the weighted strategy

Additionally, it is worth noting that manual annotation of deterioration is time-consuming, and annotators are prone to fatigue, leading to omissions— a common issue in manual deterioration detection. For some fine cracks, manual annotation may result in missed detections. As shown in Fig. 9, the red boxes highlight cracks that were present but were overlooked during manual annotation and were successfully identified by the model. Without zooming in on the image, some cracks are difficult to detect with the naked eye and are easily overlooked. However, semantic segmentation models are pixel-level deep learning models, and after training on the data, they can predict and segment at the pixel level, outperforming manual annotation in terms of efficiency.

Quantitative evaluation based on K-Net

As previously mentioned, the trained model demonstrates excellent generalization ability and can detect fine cracks that were overlooked during manual annotation. Therefore, this study utilized the trained K-Net model to re-assess the entire dataset, supplementing the parts missed during manual annotation and conducting a quantitative analysis of the area proportions of each type of deterioration. This analysis allowed the determination of the specific proportions of missing deterioration, plant-related deterioration, and cracks for each ushnisha.

As shown in Fig. 10a, the proportions of deterioration for each ushnisha were statistically analyzed, where the red line represents the proportion of missing deterioration, the green line indicates the proportion of plant-related deterioration, and the blue line denotes the proportion of cracks. These statistical results allow the identification of the most severely deteriorated ushnisha for each type of deterioration. Figure 10b–d illustrate the results using color overlay methods, where red represents missing deterioration, green indicates plant-related deterioration, and black marks cracks. This method of directly overlaying deterioration information on the original image provides a clear visualization of the distribution and interrelationships of deterioration on the surface of the ushnisha. Figure 10b corresponds to the ushnisha numbered 611, which has the most severe missing deterioration, with a missing deterioration area proportion of 0.278, a plant-related deterioration area proportion of 0.194, and a crack area proportion of 0.00113. Figure 10c corresponds to the ushnisha numbered 615, where plant-related deterioration is the most severe, with a plant-related deterioration area proportion of 0.211, no missing deterioration, and a crack area proportion of 0.002. Figure 10d corresponds to the ushnisha numbered 683, where cracks are the most severe, with a crack area proportion of 0.033, a missing deterioration area proportion of 0.002, and a plant-related deterioration area proportion of 0.131.

Quantitative evaluation results of deterioration; a Statistical results of deterioration area proportions; b Ushnisha with the most severe missing deterioration, with a missing deterioration area proportion of 0.278; c Ushnisha with the most severe plant-related deterioration, with a plant-related deterioration area proportion of 0.211; d Ushnisha with the most severe cracks, with a crack area proportion of 0.033

Speed evaluation

In terms of speed evaluation, since the weighted strategy does not inherently affect the model's running speed, this study primarily compared the speed performance of manual annotation, the PSPNet model, and the K-Net model.

The experimental results indicate that deep learning models significantly outperform manual annotation in processing efficiency. The K-Net model’s detection speed is 7.01 images per second, requiring only 22 s to process 149 images, making it 1309 times faster than manual annotation (approximately 8 h). Although the PSPNet model is slightly less accurate than K-Net, it is faster, processing 10.38 images per second, taking only 14.35 s to process the same dataset, which is 2006 times faster than manual annotation. Deep learning models not only significantly improve work efficiency but also effectively avoid the issue of missed detections due to fatigue during manual annotation. Combined with the accuracy evaluation results discussed earlier, the performance comparison of various methods is summarized in Table 3.

Analysis of model interpretability

Grad-CAM is a visualization technique used in deep learning models to help understand the model’s decision-making process. It generates class activation maps highlighting the regions of the input image that are crucial for a specific decision. The main principle uses the model’s gradient information relative to the input image to generate a heatmap, highlighting the regions with the most significant impact on the model's output. As shown in Fig. 11, when cracks are specified as the target category, Grad-CAM computes a heatmap for the crack category based on Eq. (6). The regions with the most crack-like features are highlighted in the image, with areas closer to red indicating a higher probability of being identified as cracks.

Additionally, as shown in Fig. 12, there are three images of ushnishas with various types of deterioration. By specifying the type of deterioration to detect, Grad-CAM generates corresponding heatmaps, highlighting areas with specific deterioration characteristics. For instance, when cracks are specified for detection, areas with crack features are highlighted; similarly, when plant-related or missing deterioration is specified, corresponding regions are highlighted. By selecting a specific type of deterioration for detection, the algorithm uses heatmaps to reveal the model’s focus areas, directly showing where the model’s attention lies. This aids in a deeper understanding of the decision-making process and underlying principles of deep learning models.

Interpretability analysis Heatmap (Multiple Deterioration Types Coexisting); The numbers on the left correspond to the IDs of the ushnishas on the head of the Leshan Giant Buddha. The first column shows the original images, the second column displays the heatmap for cracks, the third column shows the heatmap for plant-related deterioration, and the fourth column displays the heatmap for loss of lime plaster

However, it must be emphasized that interpreting deep learning “black box” models still faces significant challenges. This study's use of Grad-CAM for model interpretability analysis is an attempt that, while not completely breaking open the “black box” model, has successfully transformed it partially into a “gray box” model. Through this visualization technique, the aim is to enhance our understanding of the model’s decision-making process, “focus points,” and “intentions.” Consider a hypothetical scenario: if, when detecting cracks, the model's focus points were not concentrated on crack areas but randomly distributed across the image, this would contradict human intuitive cognition, making it difficult to understand the basis of the model's decision-making behavior. However, the visualization results from Grad-CAM in this study reassuringly demonstrate that when crack detection is specified, the model's attention areas indeed focus on locations with crack characteristics, rather than being randomly scattered throughout the image. This confirms that the model’s decision-making is based on reasonable grounds and follows certain logic, thereby enhancing our confidence in the model’s decision-making process. It deepens our understanding of the model's internal mechanisms and provides important basis for further optimization and improvement of the model, driving the application of artificial intelligence in cultural heritage protection towards higher accuracy and reliability.

Limitations and future prospects

Balance between computational efficiency and accuracy

While the segmentation models employed in this study demonstrate excellent accuracy, their high computational resource requirements pose challenges for standard computing devices. Currently, the processing speed of these algorithms has not reached the threshold required for real-time video analysis, precluding their direct integration into drone systems for real-time detection. Consequently, they are limited to offline processing of image data. The You Only Look Once (YOLO) series, as one-stage detection models, exhibit outstanding computational efficiency but slightly lower accuracy. Future research could explore improvements based on YOLO models to achieve a more optimal balance between speed and accuracy.

Limitations in model generalization capability

Detecting deterioration in various cultural heritage artifacts typically requires specific datasets to train specialized models, as a single model struggles to generalize across multiple domains. For instance, the model developed in this study is challenging to apply directly to deterioration detection in other cultural heritage contexts. However, it is noteworthy that while this model has limitations in cross-domain applications, it performs excellently in detecting deterioration in the ushnisha of the Leshan Giant Buddha. Considering the enormous scale of the Leshan Giant Buddha (with 1021 ushnisha on the head), each deterioration inspection is a formidable task. The detection capability of this model can be continuously applied to future inspection work, significantly reducing the workload of cultural heritage conservation professionals. This specialized application holds significant importance for the long-term preservation of the Leshan Giant Buddha. Future research directions may include:

-

1)

Exploring methods to enhance model computational efficiency to achieve real-time detection.

-

2)

Developing models with stronger generalization capabilities to adapt to deterioration detection in various types of cultural heritage.

-

3)

Integrating multimodal data, such as hyperspectral imagery or 3D scan data, to improve the comprehensiveness and accuracy of detection.

Limitations of quantitative assessment

Given the unique nature of the Leshan Giant Buddha, there have been no reported studies or literature applying deep learning models for the detection of damage to its ushnisha. Thus, our research represents a relatively recent development. In previous survey work, damage assessment of the ushnisha primarily relied on verbal descriptions, a method that made quantification of damage severity challenging and failed to directly reflect the damage condition of each ushnisha or the urgency of conservation work. In contrast, quantitative assessments based on deep learning models can accurately rank the severity of damage to the ushnisha, allowing for prioritized treatment of the most severely damaged areas within limited time and resource constraints. This approach not only enhances the efficiency of conservation efforts but also maximizes the prevention of rainwater infiltration from the Buddha's head into its body, effectively mitigating further erosion.

It is important to emphasize that the actual damage to the ushnisha is three-dimensional; therefore, the quantitative damage assessment provided by visual models based on two-dimensional images serves only as a semi-quantitative reference and cannot fully represent the actual three-dimensional damage. Furthermore, mosses and herbaceous plants differ significantly in their degree of harm to the Leshan Giant Buddha. In future in-depth studies, mosses and herbaceous plants could be distinguished as two separate categories. This differentiation is based not only on their varying degrees of damage to the Leshan Giant Buddha but also on the specific treatment measures required for each. Therefore, a more refined classification of these two types of plants will contribute to the development of more targeted conservation strategies.

Conclusion

This study employed two semantic segmentation models to perform semantic segmentation and quantitative evaluation of deterioration in the ushnishas of the Leshan Giant Buddha, providing significant support for the detection of deterioration in the statue. The conclusions are as follows:

-

1)

The PSPNet and K-Net models demonstrated excellent performance in detecting deterioration, with the identified deterioration areas closely matching the results of manual detection, while their processing speed was over 1000 times faster than manual methods. By implementing the weighted strategy proposed in this study, the effectiveness of crack detection was further enhanced, achieving a final mean accuracy (mAcc) of 90.23% and a mean intersection over union (mIoU) of 69.55%, meeting the accuracy requirements for deterioration segmentation.

-

2)

The trained models exhibited strong generalization capabilities, enabling precise pixel-level segmentation of deterioration and the detection of fine cracks that were overlooked during manual inspection.

-

3)

Based on the segmentation results from the models, each ushnisha was quantitatively evaluated for deterioration. Ushnisha No. 611 had the most severe missing deterioration, Ushnisha No. 615 exhibited the most severe plant-related deterioration, and Ushnisha No. 683 had the most severe crack deterioration.

-

4)

Grad-CAM was utilized to conduct interpretability analysis of the models, with the resulting heatmaps aiding in the understanding of the model's decision-making process.

Availability of data and materials

No datasets were generated or analysed during the current study.

References

Sun B, Zhang H, Zhang P, Shen X, Yang T. Study on characteristics of water seepage in chest of leshan giant buddha. J Southwest Jiaotong Univ. 2023;58:1037–44.

Wang N, Zhao X, Zhao P, Zhang Y, Zou Z, Ou J. Automatic damage detection of historic masonry buildings based on mobile deep learning. Autom Constr. 2019;103:53–66.

Agdas D, Rice JA, Martinez JR, Lasa IR. Comparison of visual inspection and structural-health monitoring as bridge condition assessment methods. J Perform Constr Facil. 2016;30:04015049.

Newman C, Edwards D, Martek I, Lai J, Thwala WD, Rillie I. Industry 4.0 deployment in the construction industry: a bibliometric literature review and UK-based case study. Smart Sustain Built Environ. 2021;10:557–80.

Sony S, Dunphy K, Sadhu A, Capretz M. A systematic review of convolutional neural network-based structural condition assessment techniques. Eng Struct. 2021;226:111347.

Dung CV, Sekiya H, Hirano S, Okatani T, Miki C. A vision-based method for crack detection in gusset plate welded joints of steel bridges using deep convolutional neural networks. Autom Constr. 2019;102:217–29.

Peng X, Zhong X, Zhao C, Chen A, Zhang T. A UAV-based machine vision method for bridge crack recognition and width quantification through hybrid feature learning. Constr Build Mater. 2021;299:123896.

Cardellicchio A, Ruggieri S, Nettis A, Renò V, Uva G. Physical interpretation of machine learning-based recognition of defects for the risk management of existing bridge heritage. Eng Fail Anal. 2023;149:107237.

Du Y, Pan N, Xu Z, Deng F, Shen Y, Kang H. Pavement distress detection and classification based on YOLO network. Int J Pavement Eng. 2021;22:1659–72.

Mei Q, Gül M. A cost effective solution for pavement crack inspection using cameras and deep neural networks. Constr Build Mater. 2020;256:119397.

Akinosho TD, Oyedele LO, Bilal M, Ajayi AO, Delgado MD, Akinade OO, et al. Deep learning in the construction industry: a review of present status and future innovations. J Build Eng. 2020;32:101827.

Pan Y, Zhang L. Roles of artificial intelligence in construction engineering and management: a critical review and future trends. Autom Constr. 2021;122:103517.

Dorafshan S, Thomas RJ, Maguire M. Comparison of deep convolutional neural networks and edge detectors for image-based crack detection in concrete. Constr Build Mater. 2018;186:1031–45.

Kim B, Yuvaraj N, Sri Preethaa K, Arun PR. Surface crack detection using deep learning with shallow CNN architecture for enhanced computation. Neural Comput Appl. 2021;33:9289–305.

Zou Z, Zhao X, Zhao P, Qi F, Wang N. CNN-based statistics and location estimation of missing components in routine inspection of historic buildings. J Cult Herit. 2019;38:221–30.

Peng N, Zhang C, Zhu Y, Zhang Y, Sun B, Wang F, et al. A vulnerability evaluation method of earthen sites based on entropy weight-TOPSIS and K-means clustering. Herit Sci. 2024;12:161.

Mishra M, Lourenço PB. Artificial intelligence-assisted visual inspection for cultural heritage: State-of-the-art review. J Cult Herit. 2024;66:536–50.

Karimi N, Valibeig N, Rabiee HR. Deterioration detection in historical buildings with different materials based on novel deep learning methods with focusing on isfahan historical bridges. Int J Archit Herit. 2024;18:981–93.

Meklati S, Boussora K, Abdi MEH, Berrani S-A. Surface damage identification for heritage site protection: a mobile crowd-sensing solution based on deep learning. ACM J Comput Cult Herit. 2023;16:1–24.

Wang N, Zhao Q, Li S, Zhao X, Zhao P. Damage classification for masonry historic structures using convolutional neural networks based on still images. Comput-Aided Civ Infrastruct Eng. 2018;33:1073–89.

Ugail H, Stork DG, Edwards H, Seward SC, Brooke C. Deep transfer learning for visual analysis and attribution of paintings by raphael. Herit Sci. 2023;11:268.

Redmon J. You only look once: Unified, real-time object detection. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition. 2014. p. 580–7.

Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell. 2016;39:1137–49.

Yang X, Zheng L, Chen Y, Feng J, Zheng J. Recognition of damage types of Chinese gray-brick ancient buildings based on machine learning—taking the macau world heritage buffer zone as an example. Atmosphere. 2023;14:346.

Mishra M, Barman T, Ramana G. Artificial intelligence-based visual inspection system for structural health monitoring of cultural heritage. J Civ Struct Health Monit. 2024;14:103–20.

Mansuri LE, Patel D. Artificial intelligence-based automatic visual inspection system for built heritage. Smart Sustain Built Environ. 2022;11:622–46.

Mansuri LE, Patel D. Artificial intelligence for heritage conservation: a case study of automatic visual inspection system. Current state of art in artificial intelligence and ubiquitous cities. Springer; 2022. p. 1–15.

Pathak R, Saini A, Wadhwa A, Sharma H, Sangwan D. An object detection approach for detecting damages in heritage sites using 3-D point clouds and 2-D visual data. J Cult Herit. 2021;48:74–82.

Yuan Q, He X, Han X, Guo H. Automatic recognition of craquelure and paint loss on polychrome paintings of the palace museum using improved U-net. Heritage Sci. 2023;11:65.

Wu M, Jia M, Wang J. TMCrack-net: A U-shaped network with a feature pyramid and transformer for mural crack segmentation. Appl Sci. 2022;12:10940.

Garrido I, Erazo-Aux J, Lagüela S, Sfarra S, Ibarra-Castanedo C, Pivarčiová E, et al. Introduction of deep learning in thermographic monitoring of cultural heritage and improvement by automatic thermogram pre-processing algorithms. Sens. 2021;21:750.

Liu Z, Brigham R, Long ER, Wilson L, Frost A, Orr SA, et al. Semantic segmentation and photogrammetry of crowdsourced images to monitor historic facades. Heritage Sci. 2022;10:1–17.

Zhou J. The prevention countermeasures of leshan giant buddha weathering erosion and seepage harm. J Mountain Sci. 2009;27:367–72.

Zhao H, Shi J, Qi X, Wang X, Jia J. Pyramid scene parsing network. Proceedings of the IEEE conference on computer vision and pattern recognition. 2017. p. 2881–90.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016. p. 770–8.

Zhang W, Pang J, Chen K, Loy CC. K-net: towards unified image segmentation. Adv Neur In. 2021;34:10326–38.

Lin T. Focal loss for dense object detection. 2017. arXiv prepr arXiv:1708,02002.

Zhuang F, Qi Z, Duan K, Xi D, Zhu Y, Zhu H, et al. A comprehensive survey on transfer learning. Proc IEEE. 2020;109:43–76.

Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2009;22:1345–59.

Kornblith S, Shlens J, Le QV. Do better imagenet models transfer better? Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2019. p. 2661–71.

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-cam: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE international conference on computer vision. 2017. p. 618–26.

Acknowledgements

Thanks to Chunlei Zhang and Weidong Zhang for their guidance on this article’s software technology.

Funding

This research was funded by the National Natural Science Foundation of China (No. 51808246), and the National Key Research and Development Program of China (No. 2019YFC1520500).

Author information

Authors and Affiliations

Contributions

Conceptualization, N.P. and J.H.; methodology, Y.Z. (Ye Zhu) and C.Z.; software, C.Z., L.W. and Y.C.; validation, Y.Z. (Ye Zhu) and Y.Z. (Yue Zhang); formal analysis, C.Z., B.S. and F.W.; investigation, L.W. and Y.C.; resources, B.S. and F.W.; data curation, C.Z., Y.Z. (Ye Zhu) and Y.Z. (Yue Zhang); writing—original draft preparation, N.P. and C.Z.; writing—review and editing, Y.Z. (Ye Zhu) and J.H.; visualization, C.Z., L.W. and Y.C.; supervision, N.P. and J.H.; funding acquisition, N.P. and J.H. All authors have read and agreed to the published version of the manuscript.

Corresponding authors

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Zhang, C., Peng, N., Wang, L. et al. Application of deep learning algorithms for identifying deterioration in the ushnisha (Head Bun) of the Leshan Giant Buddha. Herit Sci 12, 399 (2024). https://doi.org/10.1186/s40494-024-01514-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40494-024-01514-9

Keywords

This article is cited by

-

Semantic segmentation of building façade materials and colors for urban conservation

npj Heritage Science (2025)