Abstract

The absence of word boundaries between words in scriptio continua script hinders the development of NLP models for such scripts. The objective of this research is to facilitate the building of NLP models for scriptio continua scripts by designing a word segmentation model for predicting word boundaries between characters in sentences, focusing particularly on ancient Tamil scripts. We have utilized an NGRAM Naive Bayes model to predict the existence of word boundaries between two characters in a scriptio continua text. We trained and assessed the model on a dataset of ancient Tamil writing, achieving an accuracy of 91.28%. Efficiently segmenting ancient Tamil texts not only helps preserve and comprehend historical manuscripts, but it also enables advancements in automated text segmentation. This model will assist archeologists in constructing NLP models utilizing ancient Tamil, allowing for the extraction of significant information from ancient Tamil manuscripts without the need for a language expert. Additional research may be undertaken to examine more effective techniques for word segmentation with better performance, managing scripts from several centuries, and developing models for additional scripts.

Similar content being viewed by others

Introduction

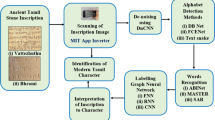

Ancient Tamil inscriptions exhibit significant differences compared to modern Tamil, which poses difficulties to common man in understanding. Therefore, tasks like transliteration and translation require the intervention of human experts. Different AI-based systems have been proposed to solve the transliteration sub-tasks like character recognition, character reconstruction, and word and sentence segmentation. Shadow photometric stereo method1 and bi-directional Long Short-Term Memory (Bi-LSTM)2 are the two prominent methods used for character recognition and character reconstruction.

The Tamil script is based on the Brahmi script, known for its distinctive ‘scriptio continua’ characteristic. We use the whitespace character ‘ ‘ in modern Tamil and English to demarcate the word boundaries. Scripts like ancient Tamil and many South Asian languages such as Tibetan, Thai, Chinese, and Japanese lack explicit word boundaries. The absence of clearly defined word boundaries in these scripts makes the task of word segmentation challenging and non-trivial.

Addressing the issue of word boundary identification in scriptio continua scripts is an active and ongoing area of research. This word segmentation process is considered a crucial preprocessing step in various Natural Language Processing (NLP) tasks. The development of accurate word segmentation techniques in scriptio continua scripts will ultimately result in robust NLP systems to perform downstream tasks like machine translation, information retrieval, and text analysis, contributing to a deeper understanding of these diverse and culturally rich linguistic traditions. Several statistical and machine learning-based approaches are being explored to achieve efficient and accurate word segmentation. Tamil script poses additional complexities such as, in English, an alphabet typically represents the minimal unit of a word. But Tamil script operates under a different system. In Tamil, a single character can symbolize a vowel, consonant, or even a combination of both, making it a complex and versatile script. This characteristic is particularly pronounced when considering the structural and usage differences between ancient and modern Tamil.

Ancient Tamil literary works are available in the form of inscriptions or written texts. The medium of writing varied from stones, copper plates, palm leaves, etc. These inscriptions and writings often lack deterministic meaning and require expert intervention to map the sequence of geometrical signs to valid characters and combine them into meaningful sentences of a language. The Tamil script has 12 vowels, 18 consonants, and a special character called aayutha ezhuthu  . The vowels and consonants combine to form compound characters. For example, the character

. The vowels and consonants combine to form compound characters. For example, the character  is a combination of vowel

is a combination of vowel  and consonant

and consonant  . The 12 vowels and 18 consonants combine to form 216 compound characters, giving a total of 247 characters (12 + 18 + 1 + (12 × 18)). All consonants have an inherent vowel a, as with other Indic scripts. This inherent vowel is removed by adding a title called a puḷḷi

. The 12 vowels and 18 consonants combine to form 216 compound characters, giving a total of 247 characters (12 + 18 + 1 + (12 × 18)). All consonants have an inherent vowel a, as with other Indic scripts. This inherent vowel is removed by adding a title called a puḷḷi  . The character

. The character  is a combination of

is a combination of  and pulli

and pulli

Related work

The methods proposed for word segmentation can be broadly classified into Dictionary based and Machine Learning based approaches.

Dyer et al.3 compare token- and character-level approaches to restoration of spaces, punctuation, and capitalization to unformatted sequences of input characters. The proposed model is a novel character-level end-to-end BiLSTM model (overall F-score 0.90) which has the advantages of being able to restore mid-token capitalization and punctuation and of not requiring space characters to be present in input strings. This method also can be viable for building word segmentation models for low resource languages due to its small number of trainable parameters but in large extensive corpora, the model may falter due to the inability to generalize with a huge number of cases and edge cases and also have low latency issues during inference.

Haruechaiyasak and Kongthon4 proposed LexToPlus, a tokenization and normalization tool for Thai language which has scriptio-continual property. They modified the Dictionary based segmentation method which handled insertion, transformation, transliteration, and onomatopoeia in Thai social media texts. The proposed methods performed better than Machine Learning based approaches like Conditional Random Fields and Support Vector Machines. This approach also requires the system to have a well developed Dictionary which will be hard to obtain of low-resource languages such as Ancient Tamil.

Paripremkul and Sornil5 proposed a novel technique for Thai word segmentation. They divided the word segmentation task into three phases: Minimum Text Unit (MTU) extraction (MTU is the smallest unit of a word in Thai language), syllable identification, and word construction. MTU extraction and Syllable identification used Conditional Random Fields with features engineered from language characteristics. Word construction incorporated a combination of dictionary-dependent longest word matching and rule-based approach. This proposed method showed better performance in handling ambiguous word boundaries and Out of Vocabulary (OOV) words compared to baseline CNN model.

Peng, Feng, and McCallum6 present a way to extract words from Chinese scripts, which do not delimit words with spaces. They use linear conditional random fields to segment a script into individual words and identify new words which are not in the existing vocabulary. As Chinese is a morphologically rich language.

Raman and Sahu7 cover a method of reading Devanagari script with OCR by preprocessing and segmenting the script into three constituent parts for each character. By reading the upper, middle, and lower regions for each character individually, they manage to achieve a high accuracy at recognizing the characters. This technique allows one to have a degree of contextual knowledge of the input data even in low resource conditions but the model will lack flexibility in handling out-of-distribution cases.

Widiarti and Pulungan8 proposed brute force and greedy algorithms for word segmentation which was essential in the transliteration of ancient Javanese manuscripts to modern Indonesian language. The algorithms worked by composing syllables extracted from manuscripts (Hamong Tani Book) into meaningful words found in the Bausastra dictionary given a string of continuous syllables as an input. Experimental results show that the greedy algorithms were more efficient than the brute-force algorithm. They reported inefficiencies in processing deformed characters and Out of Vocabulary (OOV) words. Greedy algorithms are good for solving simple incremental optimization problems allowing for building models even in low resource constraint situations but they falter when advanced distributions need to be learned for the final solution.

Zia et al.9 present a word segmentation system for Urdu, which does not always add spaces between two words, and sometimes adds spaces within a single word, using linear chain conditional random fields, which use undirected graphs connecting each element to its immediate neighbors to allow for predictions using context clues. Conditional Random Field approaches are hard to define and inflexible to changes but are very good at building simple probabilistic state models.

Perruchet and Vinter10 introduced PARSER a computational model designed to simulate implicit learning processes in language acquisition, particularly focusing on how infants segment continuous speech into discrete words. Unlike other models that rely on explicit statistical calculations, PARSER operates by forming and reinforcing chunks based on co-occurrence patterns in the speech input, using memory-based learning and competition among chunks to gradually refine segmentation. In the context of word segmentation, the authors applied PARSER to demonstrate that infants can segment speech without relying on prosodic or phonological cues, as shown in studies by Saffran et al.11 This suggests that implicit learning mechanisms, as modeled by PARSER, play a crucial role in early language acquisition.

Muchtar et al.12 address the problem of processing texts written in scriptio continua, particularly in the Batak language, a low-resource scriptio continua spoken by an Indonesian Tribe. They employed a brute force algorithm to iterate over the length of the word and match them using a pre-built Trie with corpus of 4000 Batak sentences. The Trie automation algorithm achieved an accuracy of 98% in correctly identifying and inserting spaces within the Scriptio Continua Batak sentences. They also emphasized that size of the dataset plays an important role in improving the performance of the model.

Zheng and Zheng13 present a deep neural network approach to address the challenges faced by traditional word segmentation models in Vietnamese language. Traditional model struggle with the language’s inherent ambiguities, including combination ambiguity and cross ambiguity. They introduce an improved Long Short-Term Memory (LSTM) network combined with Convolutional Neural Networks (CNN) for feature extraction. A Vietnamese word corpus of 100,000 sentences was constructed using a web crawler, and the performance of the model was tested against this dataset. The hybrid LSTM-CNN model achieved an accuracy of 96.6%, a recall of 95.2%, and an F1 score of 96.3%, outperforming traditional methods as well as single LSTM and CNN models.

Dyer et al.14 explored generative byte-level models for restoring spaces, punctuation, and capitalization in unformatted text in English, Japanese, and Gujarati languages, tackling the challenge of processing texts without predefined word boundaries or punctuation marks. ByT5, a multilingual, token-free transformer model was fine-tuned for specific tasks in English, Japanese, and Gujarati. It was trained on large datasets and evaluated on its ability to restore the missing text features accurately. The experimentation results show that ByT5 model outperformed other existing methods, including a Naive Bayes + BERT BiLSTM pipeline and a BiLSTMCharE2E model.

Shao et al.15 proposed a universal word segmentation model that can effectively segment text across multiple languages with varying typological characteristics. They employed a sequence tagging framework using Bidirectional Recurrent Neural Networks with Conditional Random Fields (BiRNN-CRF) to handle word segmentation tasks. The model can also be customized using language-specific settings based on factors like character set size and segmentation frequency, particularly for languages like Chinese, Japanese, Arabic, and Hebrew. The model was evaluated on Universal Dependencies datasets and experimental results show that the model outperformed previous methods, particularly for challenging languages without explicit word boundary markers (Chinese and Japanese).

Ahmadi16 discusses the development of a tokenization system specifically designed for the Kurdish language, focusing on the Sorani and Kurmanji dialects. The system incorporated a lexicon and a morphological analyzer to tokenize Kurdish text, designed to handle orthographic inconsistencies, excessive concatenation, and the complex morphology of Kurdish. Performance of the system was compared with unsupervised neural methods like BPE, Unigram, and WordPiece, as well as with a baseline model, WordPunct tokenizer.

Overview of proposed method and objectives

The purpose of our method is to segment low-resource languages whose script has scriptio continua property, Ancient Tamil in our case, efficiently using Machine Learning Techniques with data collected from stone inscriptions. The major contributions of this study include,

-

1.

A Segmentation model trained on text extracted from South Indian Inscriptions Books published by the Archaeological Survey of India.

-

2.

Ancient Tamil corpus extracted from Sangam literature and South Indian Inscriptions Books which can be used in NLP tasks for Tamil script.

Methods

In this section, we describe the proposed system starting with an overview of model, data used for training and evaluation, and the training methodology incorporated.

Model overview

The proposed system aims to produce a word segmentation model trained for segmenting ancient Tamil extracted from stone inscriptions. This model uses an NGRAM model, a type of language model, storing the frequency of every N-gram where N is less than or equal to a specified value, k in memory. By using the previous (N – 1) words seen or generated by the model along with a database of known NGRAMs (that is, sequences of N words that occur in the language), the Bayesian probabilities of all possible future words can be calculated. By simply seeing which sequence of previous words followed by a future word occurs in the database, a probability distribution can be obtained for all potential future words. To account for cases in which the previous N words never occur in the database, a type of algorithm called a backoff algorithm is employed, which augments the probability distribution using (N – 1)GRAMS, (N – 2)GRAMS, and so on until 1GRAM.

The backoff algorithm used in this paper is the Stupid Backoff algorithm17. This algorithm, when given an NGRAM consisting of (N – 1) past words and one future word, checks if the NGRAM occurs in the database. If it does, it returns the number of times this NGRAM occurs in the database divided by the number of times the (N – 1)GRAM given by only the past words occurs. If it does not occur in the database, it instead repeats the procedure for the (N – 1)GRAM consisting of (N – 2) past words and one future word. This process repeats recursively either until finds some KGRAM that does occur in the database, or until it is left with a unigram (a word) that it has never encountered before. In this case, it approximates a probability for the word as a function of its length. Although, strictly speaking, this process results in the final distribution of future words not being a probability distribution as the sum of all probabilities exceeds one, in practice this algorithm has shown to produce very good results while being computationally inexpensive to perform.

The database for the model can be compiled by supplying it with a large number of segmented ancient Tamil sentences and storing the frequency of every NGRAM that occurs in it for values of N less than or equal to a hyperparameter k. The segmentation process, trained for Ancient Tamil, ensures that “uyir” character is never produced in the middle of a word and “mei” character is never present at the beginning of the word. The model is primarily trained on text from Sangam literature and South Indian inscriptions, along with some text in modern Tamil corpus from various sources due to the scarcity of ancient Tamil data.

Data

For the ancient Tamil corpus, we have used the South Indian Inscriptions books published by Archaeological Survey of India and text from Sangam Literature. South Indian Inscriptions is an epigraphical series that has been published by the Archaeological Survey of India in 27 volumes from 1890 through the present. The texts are supplemented with summaries and an overview of the texts, both in English and Tamil. Hundreds of inscriptions were copied from different parts of South India which were in Dravidian languages like Tamil, Kannada, and Telugu. We use the Tesseract OCR model to extract text from PDF files, South Indian Inscriptions book in our case. The model converts each PDF page to image and uses the Tesseract model to extract text from the image. The quality of extracted inscriptions was verified with the guidance of Archaeological experts. The raw text is processed using regex to remove unwanted characters (English characters, special characters, numbers). For evaluation, we use a subset of the extracted corpus along FLORES-200 and IN22 benchmarking datasets. FLORES-20018 is a multi-domain general-purpose benchmark designed for evaluating translations across 200 languages, including 19 Indic languages. The English sentences are source-original and have been translated into other languages. It comprises sentences sourced from Wikimedia entities with equal portions of news, travel, and non-fiction content from children’s books. IN2219 is a comprehensive benchmark for evaluating machine translation performance in multi-domain, n-way parallel contexts across 22 Indic languages. It comprises three distinct subsets, namely IN22-Wiki, IN22-Web, and IN22-Conv. The Wikipedia and Web sources subsets offer diverse content spanning news, entertainment, culture, legal, and India-centric topics.

Data mapping

Unlike languages like English, Tamil characters are comprised of multiple Unicode characters ( , for example, is comprised of

, for example, is comprised of  and

and  ), the tamil texts are mapped such that each set of Unicode characters representing a single Tamil character is mapped to a unique single Unicode character. The mapping is one-to-one so that it can be unmapped into legible text after processing. Algorithm 1 depicts how the mapping works. The list of Tamil characters is obtained and sorted in descending order of bytes per character. This is to ensure that characters like

), the tamil texts are mapped such that each set of Unicode characters representing a single Tamil character is mapped to a unique single Unicode character. The mapping is one-to-one so that it can be unmapped into legible text after processing. Algorithm 1 depicts how the mapping works. The list of Tamil characters is obtained and sorted in descending order of bytes per character. This is to ensure that characters like  come before

come before  , as otherwise the single-byte characters would be mapped first, leaving the multi-byte characters broken. Then, each character in the list is mapped to a unique and arbitrary single-byte Unicode character. Then, the list is iterated over in order, and instances of a character in the sequence to be mapped are replaced with the corresponding mapped character.

, as otherwise the single-byte characters would be mapped first, leaving the multi-byte characters broken. Then, each character in the list is mapped to a unique and arbitrary single-byte Unicode character. Then, the list is iterated over in order, and instances of a character in the sequence to be mapped are replaced with the corresponding mapped character.

Algorithm 1

TEXT_MAPPER

Input: Sequence of Tamil Characters

Output: Mapped Sequence

tamil_characters = load(Tamil Character List)

tamil_characters = sort(tamil_characters, descending=true, Key=bytes per character)

M = Map()

index = 0

for character in tamil_characters:

M.add({character: char(40000 + index)})

index += 1

for key in M:

sequence = sequence.replace(key, M[key])

return sequence

Model training

The NGRAM model trains by storing the frequency of every NGRAM for values of N less than or equal to a given k. When executing, it recalls this data to calculate a probability in the case of any NGRAM it has seen before. In case it has not seen a particular NGRAM, it uses the stupid backoff algorithm and attempts to calculate a probability based on the (N - 1)GRAM obtained by dropping the least recent word from the sequence. It performs this until it reaches a unigram (single word). If the unigram has not been seen either, it uses a function to approximate the probability of the unseen word given the new word’s length. Segmentation is done using a dynamic programming approach that iterates through every possible segmentation and returns the one with the highest probability. The segmentation process has been specialized to Tamil, where it shall never allow an “uyir” character to appear in the middle of a word, and will never allow a ‘mei’ character to appear at the beginning of a word.

Algorithm 2

SEGEMENT_TEXT

Input: unsegmented_text, preceding_n_words, maximum_word_length

Output: Probability of segmentation, Best segmentation of the text

set SPACE = ‘\s‘

if unsegmented_text is empty:

return (1, [])

best_segmentation = NULL

for each element S in SPLIT_TEXT(unsegmented_text, maximum_word_length):

W = Word obtained by splitting unsegmented_text at S

P’, S’ = SEGMENT_TEXT(text after S in unsegmented_text, (preceding n-1 words, W), maximum_word_length)

P = COMPUTE_PROBABILITY(W, preceding n words) * P’

if P > best_segmentation’s probability:

best_segmentation = (P, (W, S’))

return best_segmentation

Algorithm 2 depicts the logic used to segment the input text. It works by calling on Algorithm 3 to generate every possible location a space can occur and calculating each word that would be generated by inserting a space at those locations. For each word, it calls upon itself recursively, giving the unsegmented text after the space and the new word generated as inputs, along with the past words from its own input. It then receives the best possible split and its probability from that function call. It multiplies this probability with the probability of the word it generated, calculated using Algorithm 4, and if this value is better than the best split it has generated so far, it stores its current split as the best, which is then returned at the end.

Algorithm 3

SPLIT_TEXT

Input: unsegmented_text, maximum_word_length

Output: All possible split locations

if an uyir character is present at location i < maximum_word_length:

return {1..i}

else:

return {1..maximum_word_length}

Algorithm 3 is used to generate all possible locations a space can be added to unsegmented text. It uses the maximum word length hyperparameter and returns a list with every position from zero to the maximum word length. An exception is made if an “uyir” character is found at a location before the maximum word length. As no Tamil word can have an “uyir” character at any position other than the beginning, it only returns positions up until then.

Algorithm 4

COMPUTE_PROBABILITY

Input: word, preceding k words

Output: Probability of word

if word begins with a mei character:

return 0

if the sequence (preceding k words, word) is new:

return Probability(word, preceding k-1 words)

if preceding k words is empty and word is new:

return UnkownProbability(word)

return frequency[(preceding k words, word)] / frequency[preceding k words]

Algorithm 4 computes the probability of a word, given any preceding words. It has four steps: first, if the word begins with a ‘mei’ character (such as  or

or  ), it returns a probability of zero, as no Tamil word can begin with one of these characters. Then, if the sequence of words given by the preceding words and the new word is not present in the training data, it uses the Stupid Backoff algorithm and recursively calls itself with near-identical parameters, except the oldest word from the preceding words is truncated. If there are no preceding words and the new word is not present in the training data, a function is called to estimate the probability of the word. The probability is computed as \(\frac{\lambda }{10\left|L-{\underline{L}}\right|}\), where \(L\) is the length of the word and \({\underline{L}}\) is the average word length obtained from the training data. Lastly, if it does find the sequence of words given by the preceding words and the new word from the training data, it returns the frequency of that sequence divided by the frequency of just the preceding words.

), it returns a probability of zero, as no Tamil word can begin with one of these characters. Then, if the sequence of words given by the preceding words and the new word is not present in the training data, it uses the Stupid Backoff algorithm and recursively calls itself with near-identical parameters, except the oldest word from the preceding words is truncated. If there are no preceding words and the new word is not present in the training data, a function is called to estimate the probability of the word. The probability is computed as \(\frac{\lambda }{10\left|L-{\underline{L}}\right|}\), where \(L\) is the length of the word and \({\underline{L}}\) is the average word length obtained from the training data. Lastly, if it does find the sequence of words given by the preceding words and the new word from the training data, it returns the frequency of that sequence divided by the frequency of just the preceding words.

Algorithm 5

TEXT_UNMAPPER

Input: Mapped Sequence

Output: Sequence of unmapped Tamil character

tamil_characters = load(Tamil Character List)

tamil_characters = sort(tamil_characters, descending=true, Key=bytes per character)

M = Map()

index = 0

for character in tamil_characters:

M.add({char(40000 + index): character})

index += 1

for key in M:

mapped_sequence = mapped_sequence.replace(key, M[key])

return mapped_sequence

Algorithm 5 is used to unmap the output of SPLIT_TEXT algorithm, the segmented text into a sequence of Tamil characters. Figure 1 illustrates the complete flow of the process.

Hyperparameter details

The NGRAM model uses two hyperparameters: the maximum word length (L), and ‘lambda’ used in predicting the probability of an unseen word. These variables are tweaked using a validation set, on which the model is run repeatedly with different values of L and lambda. Performance metrics are calculated for each combination of L and lambda and the combination that yields the best results is selected.

Results and discussion

The performance evaluation was conducted on the Stone inscriptions, FLORES-200, and IN22 datasets, specifically focusing on the segmentation task. Word segmentation can be approached as a classification task where we classify each predicted word separator ‘whitespace’ by comparing them with the ground truth sentence. Therefore, we employed Precision, Recall, and F1-Score metrics to assess the model’s performance. In addition to these traditional metrics, we also leveraged BLEU score to quantify the similarity between the predicted and reference sentences. Figure 2 shows the predictions from some examples in the test.

To further evaluate the similarity between predicted and reference sentences, we employed Cosine Similarity. This involved utilizing the FastText9 Tamil model to compute sentence embeddings for both the predicted and reference sentences. By comparing these embeddings, we obtained a measure of their cosine similarity, providing insight into the semantic similarity between the predicted and ground truth segments. Table 1 shows performance metrics on various datasets discussed.

Hyperparameter tuning

The experiments include an exhaustive grid search over “L” and “lambda” hyperparameter values. 10 different values of L and lambda were given, and the model was run on a small validation set consisting of 20 data points with every possible combination of hyperparameters possible. The final values of L and lambda arrived at were 13 and 1E-6, respectively. Tables 2–4 show the experimentation results for South Indian Inscriptions, Flores-200, IN22 for different values of max n grams. Fig 2.

Conclusion

Digitalization of ancient texts in the form of inscriptions is a key for understanding the history and cultural aspects of society. For ancient Tamil, an important hurdle in the digitization process is to segment the words in scriptio continua text. This paper proposes an NGRAM language model based on stupid backoff algorithm which effectively calculates probabilities for known NGRAMs and approximates probabilities for unseen NGRAM based on length, thus ensuring robust segmentation performance even in the face of limited training data. We also added support to add custom language-specific segmentation rules, in our case, we added a rule to ensure “uyir” characters do not appear in the middle of a word and ‘mei’ characters do not appear at the beginning. We evaluated the segmentation performance on texts extracted from South Indian Inscriptions Books published by Archaeological Survey of India which represents the ancient Tamil text using Precision, Recall, F1-Score, BLEU score, and Cosine Similarity. We achieved ~92% precision and ~93% similarity (based on cosine score) indicating that the system not only achieves high segmentation accuracy but also maintains semantic fidelity to the reference sentences.

In summary, the model presents a significant advancement in the field of ancient Tamil word segmentation, providing a valuable tool for linguistic researchers and historians.

Future directions

Future work could explore further enhancements through ensemble techniques and expansion of the training corpus to include more diverse sources of ancient Tamil text. The proposed model has been trained from a corpus consisting of ancient 11th-century ancient texts. To enhance its effectiveness across in a variety of contexts, Improvements can be obtained by expanding the corpus size of the text corpus. By the addition of mixture of expert neural blocks before the classifier We can train networks which can generalize to a variety of data distribution, producing expert networks for all text distributions in Ancient Tamil. It can also be extended to be a single model to be capable of handling multiple versions of the characters across centuries. This advancement not only improves accuracy but also augments the generalizability across multiple century text.

Data availability

The datasets used to for model training and the trained model pickle (.pkl) files are available in the Zenodo repository. To access the dataset, use https://doi.org/10.5281/zenodo.14059322. For trained model files use https://doi.org/10.5281/zenodo.14059256. We have also open-sourced the source code used to train and evaluate the Ancient Tamil Segmentation model in a public repository in GitHub. Access them using this link https://github.com/waj2san/segment-tamil.

References

Bhuvaneswari, G. & Bharathi, V. S. An efficient method for digital imaging of ancient stone inscriptions. Curr. Sci. 245-250. http://www.jstor.org/stable/24906752 (2016)

Bojanowski, P., Grave, E., Joulin, A. & Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 5, 135–146, https://doi.org/10.1162/tacl_a_00051 (2017).

Dyer, L., Hughes, A., Shah, D. & Can, B. Comparison of token-and character-level approaches to restoration of spaces, punctuation, and capitalization in various languages. In Proc. of the 5th International Conference on Natural Language and Speech Processing, (pp.168-178). https://aclanthology.org/2022.icnlsp-1.19 (2022).

Haruechaiyasak, C., & Kongthon, A. LexToPlus: A Thai lexeme tokenization and normalization tool. In Proc. of the 4th Workshop on South and Southeast Asian Natural Language Processing, (pp.9-16). https://aclanthology.org/W13-4702 (2013).

Paripremkul, K. & Sornil, O. Segmenting words in Thai language using minimum text units and conditional random field. J. Adv. Inf. Technol. Vol. 12, 135–141, https://doi.org/10.12720/jait.12.2.135-141 (2021).

Peng, F., Feng, F. & McCallum, A. Chinese segmentation and new word detection using conditional random fields, In COLING 2004: Proceedings of the 20th International Conference on Computational Linguistics, (pp.562-568).https://doi.org/10.3115/1220355.1220436 (2004).

Raman, N. K. & Sahu, N. Efficient system for Devnagari script segmentation, 2015 2nd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India (pp.722-725) (2015).

Widiarti, A. R. & Pulungan, R. A method for solving scriptio continua in Javanese manuscript transliteration, Heliyon. 6. https://doi.org/10.1016/j.heliyon.2020.e03827 (2020).

Zia, H. B., Raza, A. A. & Athar, A. Urdu word segmentation using conditional random fields (CRFs), In Proc. of the 27th International Conference on Computational Linguistics, Santa Fe, New Mexico, USA, (pp.2562-2659). https://aclanthology.org/C18-1217 (2018).

Perruchet, P. & Vinter, A. PARSER: a model for word segmentation. J. Mem. Lang. 39, 246–263, https://doi.org/10.1006/jmla.1998.2576 (1998).

Saffran, J. R., Newport, E. L., Aslin, R. N., Tunick, R. A. & Barrueco, S. Incidental language learning: listening (and learning) out of the corner of your ear. Psychol. Sci. 8, 101–105, https://doi.org/10.1111/j.1467-9280.1997.tb00690.x (1997).

Muchtar, M. A., Sitompul, O. S., Lydia, M. S. & Efendi, S. Implementation of Trie Automation Algorithm for Problem Solving Scriptio Continua. In 2021 International Seminar on Machine Learning, Optimization, and Data Science (ISMODE) (pp.28-32). https://doi.org/10.1109/ISMODE53584.2022.9743133 (IEEE, 2022).

Zheng, K. & Zheng, W. Deep neural networks algorithm for Vietnamese word segmentation. Sci. Program. 2022, 8187680. https://doi.org/10.1155/2022/8187680 (2022).

Dyer, L., Hughes, A. & Can, B. Generative Byte-Level Models for Restoring Spaces, Punctuation, and Capitalization in Multiple Languages. In Practical Solutions for Diverse Real-World NLP Applications (pp.37-57). https://doi.org/10.1007/978-3-031-44260-5_3 (Cham: Springer International Publishing, 2023).

Shao, Y., Hardmeier, C. & Nivre, J. Universal word segmentation: implementation and interpretation. Trans. Assoc. Comput. Linguist. 6, 421–435, https://doi.org/10.1162/tacl_a_00033 (2018).

Ahmadi, S. A tokenization system for the Kurdish language. In Proc. of the 7th Workshop on NLP for Similar Languages, Varieties and Dialects (pp.114-127). https://aclanthology.org/2020.vardial-1.11 (2020).

Brants, T., Popat, A., Xu, P., Och, F. J. & Dean, J.. Large language models in machine translation. In Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL) (p858-867) (2007).

Costa-jussà, M. R. et al. No language left behind: Scaling human-centered machine translation, arXiv preprint arXiv:2207.04672. https://doi.org/10.48550/arXiv.2207.04672 (2022)

Gala, J. et al. IndicTrans2: Towards High-Quality and Accessible Machine Translation Models for all 22 Scheduled Indian Languages, Transactions on Machine Learning Research. https://doi.org/10.48550/arXiv.2305.16307 (2023).

Author information

Authors and Affiliations

Contributions

B.S. worked on designing the experiments and undertook experimental work. S.S. (Sanjith S.) worked on designing the experiments and preparing the datasets. S.S. (Sandeep S.) worked on developing the concept and tabulating the experimentation results. All authors equally contributed to this work.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sandeep, S., Sanjith, S. & Sudarsan, B. Word segmentation of ancient Tamil text extracted from inscriptions. npj Herit. Sci. 13, 97 (2025). https://doi.org/10.1038/s40494-025-01612-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s40494-025-01612-2

This article is cited by

-

AI helps segment ancient Tamil text

Nature India (2025)