Abstract

Three-dimensional (3D) surface geometry provides fundamental information in various sciences and in precision engineering. Fringe projection profilometry (FPP) is one of the most powerful non-contact (thus non-destructive) and non-interferometric (thus less restrictive) 3D measurement techniques, and it is notable for its high precision. However, the measurement precision of FPP is currently evaluated experimentally, and no complete theoretical model is available for guidance. We propose the first complete FPP precision model chain. It comprises four stage models (camera intensity, fringe intensity, phase and 3D geometry) and two transfer models (from fringe intensity to phase, and from phase to 3D geometry). Our most significant contributions are twofold. First, we adopt a non-Gaussian camera noise model, which for the first time connects the camera's electronic parameters (known in advance from the camera manufacturer) to the phase precision. Second, we formulate the phase-to-geometry transfer, which expresses the precision of the measured geometry in an explicit and concise form. As a result, we establish the full precision model of the 3D geometry to characterize the performance of an FPP system that has already been set up. We also derive an expression for the highest achievable precision, which can guide the error allocation of an FPP system that is yet to be built. Our theoretical models make FPP a more designable technique for diverse measurement demands, covering object sizes from macro to micro and required precisions from a few millimeters to a few micrometers.


Introduction

Three-dimensional (3D) surface geometry provides fundamental information for understanding the world in tasks such as self-driving vehicles1 and even for augmenting the world in the metaverse.2 Many optical 3D shape measurement techniques have been developed, including LiDAR,3 stereo vision,4 time of flight,5 speckle projection,6 and fringe projection profilometry (FPP).7 Among the non-interferometric techniques, FPP stands out for being non-contact, low-cost, high-speed and, especially, high-precision. It has been successfully applied in industrial manufacturing,8 robotics,9 spectral measurement,10 biomedicine,11 forensic science,12 etc. Surprisingly, to the best of our knowledge, FPP lacks a theoretical precision model, and its precision is usually obtained experimentally.13 This poses two challenges in practice. First, the measurement precision is difficult to predict, so FPP system design has no theoretical guidance. Second, although the precision can be measured, theory and experiment cannot be cross-verified. A complete theoretical precision transfer model is therefore in high demand, and developing one is the motivation of this paper.

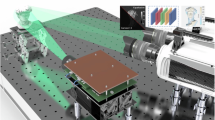

FPP systems include one-camera-one-projector systems,14 fringe-projection-assisted multi-view systems15 and coaxial systems.16 Without loss of generality, this paper analyzes the most widely adopted and extensively studied one-camera-one-projector system as a typical example, which can serve as a good reference for the other two types. A typical FPP system consists of a projector, a camera, and a computer. The projector projects fringes onto the object surface, the camera captures the deformed fringes, and the computer processes the captured images, extracts the phase information, and reconstructs the 3D shape by triangulation. Accordingly, the measurement process includes a capturing step followed by three computing steps: (1) the phase is calculated from the phase-shifted fringe patterns captured by the camera; (2) the camera-projector pixel correspondence is established from the calculated phase; and (3) the 3D coordinates are reconstructed by triangulation from the corresponding camera and projector pixels.17 The last computing step requires the FPP system to be pre-calibrated. Calibration can be achieved either by directly establishing the relationship between the absolute phase distribution and the 3D information,18,19,20 or by using the stereo-vision-based model proposed by Zhang et al.14 The latter is adopted in this work owing to its high accuracy, precision, and convenience. Although calibration is extremely important, we argue that once the FPP system is calibrated, the calibration error affects only the measurement accuracy, not the precision. It is therefore not considered in our precision analysis. Real measurements also contain error sources such as lens distortion,21 gamma effects,22 and intensity saturation,23 which can be corrected or mitigated.17,21,22,23,24 Since these sources mainly affect the measurement accuracy, they can likewise be calibrated.

In the measurement procedure above, random noise is generated in the capturing step and then propagates through the three computing steps until it reaches the final reconstruction result. This step-by-step procedure allows us to propose a complete and generic noise model chain with four stage models and two transfer models: (S1) a camera noise model (MC); (S2) a fringe intensity noise model (MI), obtained by applying MC to the fringe patterns; (S3) a phase noise model (MP), which is important for evaluating the phase quality; (S4) a geometry noise model (MG), which is important for evaluating the final geometry quality; (T1) a model transferring noise from fringe intensity to phase (MItoP); and (T2) a model transferring noise from phase to geometry (MPtoG). All these models are illustrated in Fig. 1, where computing steps (2) and (3) are combined into MPtoG for simplicity.

Although this model chain may seem obvious, it has not been clearly described in the FPP community. Among the individual models, our research focuses on MC, MP, MG and MPtoG, because MI follows directly from MC and MItoP has been extensively studied.25,26,27,28 The problem statement and our contributions are as follows:

(i) For MC, the Gaussian model25,26,27,29,30,31 has been used almost exclusively in the FPP community. This is because many noise distributions can be approximated as Gaussian, and, by the central limit theorem, the properly normalized sum of independent random variables tends toward a normal distribution.32 However, little experimental validation of the Gaussian model has been provided. In fact, another standard camera noise model33 is commonly used in the machine vision field, but to the best of our knowledge it has not yet been adopted in FPP. Some observations of non-Gaussian noise have been reported,34 but the noise treatment there is rather empirical. It is therefore necessary to re-examine camera noise modeling, and we adopt this standard camera noise model into FPP for the first time. Its most significant feature is that its parameters can be obtained from the manufacturer in advance without any measurement, which makes the precision of FPP predictable;

(ii) For MP, the phase distribution is an intermediate variable, but it is important for evaluating various techniques, including fringe pattern design, phase shifting, and phase unwrapping. We develop three phase noise models, from the most complicated to the simplest, for different purposes;

(iii) For MPtoG, our previous work reported some initial results to prove that different measurement methods (OptE3, OptR4 and HorVer4) are equivalent with respect to measurement precision.17 Those results were neither in the form of a precision model nor aimed at the precision limit. In this paper, we derive an explicit expression and approximate it under practical conditions. These results reveal the roles of the physical parameters in determining the measurement precision, and they also effectively guide the design of FPP systems;

(iv) For MG, which is the final stage of measurement, an integrated form is derived to characterize the entire FPP system.

In summary, all six individual models are established in this paper, and the three key stage models, MC, MP, and MG, are validated experimentally. The significance of our work is twofold. First, it guides system configuration and hardware selection when a system is designed for a given error tolerance. Second, it provides a theoretical basis for estimating precision after the system has been set up. Both are important theoretically and practically. In addition, our phase noise model may serve as a reference for other optical methods and instruments, such as fringe-projection-assisted multi-view systems15,35 and coaxial systems,16 interferometry36,37,38 and deflectometry.39,40

The rest of this paper is arranged as follows: the basic principles of an FPP system are given in Principle of FPP; MItoP and MPtoG models are described in Two transfer models of noise; all four proposed stage models are explained in Four stage models of noise; comprehensive experimental validation is given in Experimental verification; further discussions are provided in Discussions and the paper is concluded in Conclusion.

Principle of FPP

In this section, the principle of FPP is briefly introduced, as a preparation for all the stage and transfer models.

Pinhole models in an FPP system

Since FPP can be seen as a triangulation method for 3D reconstruction, the pinhole model is often used to describe the imaging process of the camera and the projector. The pinhole model of the camera can be expressed as,17

where the superscript w denotes the world coordinate system; \(\left({x}^{w},{y}^{w},{z}^{w}\right)\) is the coordinate of the object point in the world coordinate system; the superscript c indicates that the camera imaging system is being modeled; \(\left({u}^{c},{v}^{c}\right)\) is the coordinate of the image of the object point in the pixel coordinate system; \({f}_{u}^{c}\) and \({f}_{v}^{c}\) (unit: pixel) are the focal lengths of the camera lens along the \({u}^{c}\) and \({v}^{c}\) directions, respectively; \(\left({u}_{0}^{c},{v}_{0}^{c}\right)\) is the camera’s principal point; \({s}^{c}\) is a scale factor; and \({r}_{{ij}}^{c}\) and \({t}_{j}^{c}\) \(\left(i=1,2,3;\ j=1,2,3\right)\) are the entries of the rotation matrix \({{R}}^{c}\) and translation vector \({{\bf{t}}}^{c}\), respectively. These describe the transformation from the world coordinate system \({O}^{w}{x}^{w}{y}^{w}{z}^{w}\) to the camera coordinate system \({O}^{c}{x}^{c}{y}^{c}{z}^{c}\).

For convenience, the camera coordinate system is chosen as the world coordinate system, so that \({{R}}^{c}\) is the identity matrix and \({{\bf{t}}}^{c}\) is the zero vector. Substituting this special \({{R}}^{c}\) and \({{\bf{t}}}^{c}\) into Eq. (1), the relationship between \(\left({x}^{w},{y}^{w},{z}^{w}\right)\) and \(\left({u}^{c},{v}^{c}\right)\) simplifies to,

Similarly, the pinhole model of the projector can be expressed as,

where the superscript p indicates that the projection system is being modeled; \(\left({u}^{p},{v}^{p}\right)\) is the coordinate of the “inverse” image of the object point in the projector pixel coordinate system; \({s}^{p}\) is a scale factor; \({f}_{u}^{p}\) and \({f}_{v}^{p}\) (unit: pixel) are the focal lengths of the projector lens along the \({u}^{p}\) and \({v}^{p}\) directions, respectively; \(\left({u}_{0}^{p},{v}_{0}^{p}\right)\) is the projector’s principal point; and \({r}_{{ij}}^{p}\) and \({t}_{j}^{p}\) \(\left(i=1,2,3;\ j=1,2,3\right)\) are the entries of the rotation matrix \({{R}}^{p}\) and translation vector \({{\bf{t}}}^{p}\), respectively. Eliminating the unknown scale factor \({s}^{p}\), Eq. (4) is rewritten as

FPP calibration obtains the values of all the system parameters of the pinhole models in Eqs. (2), (3), (5) and (6), i.e., \(\left({f}_{u}^{c},{f}_{v}^{c},{u}_{0}^{c},{v}_{0}^{c},{f}_{u}^{p},{f}_{v}^{p},{u}_{0}^{p},{v}_{0}^{p},{r}_{i,j}^{p},{t}_{j}^{p}\right)\). These parameters are used to reconstruct a 3D point \(\left({x}^{w},{y}^{w},{z}^{w}\right)\). However, for a given camera pixel \(\left({u}^{c},{v}^{c}\right)\), there are five unknowns \(\left({x}^{w},{y}^{w},{z}^{w},{u}^{p},{v}^{p}\right)\) but only four equations, Eqs. (2), (3), (5) and (6). Projecting sinusoidal fringe patterns and measuring the phase is a widely used way to determine \({u}^{p}\) and/or \({v}^{p}\) precisely. Once these are known, \(\left({x}^{w},{y}^{w},{z}^{w}\right)\) can be readily reconstructed from the above equations. In a simple but popular example, once \({u}^{p}\) is determined, \(\left({x}^{w},{y}^{w},{z}^{w}\right)\) can be solved from Eqs. (2), (3) and (5), which yields Eq. (7).17
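The reconstruction from \({u}^{p}\) reduces to solving one linear equation in \({z}^{w}\). The sketch below assumes that Eqs. (2), (3) and (5) take the standard pinhole forms \({u}^{c}={f}_{u}^{c}{x}^{w}/{z}^{w}+{u}_{0}^{c}\), \({v}^{c}={f}_{v}^{c}{y}^{w}/{z}^{w}+{v}_{0}^{c}\) and \({u}^{p}={f}_{u}^{p}({r}_{11}^{p}{x}^{w}+{r}_{12}^{p}{y}^{w}+{r}_{13}^{p}{z}^{w}+{t}_{1}^{p})/({r}_{31}^{p}{x}^{w}+{r}_{32}^{p}{y}^{w}+{r}_{33}^{p}{z}^{w}+{t}_{3}^{p})+{u}_{0}^{p}\). The function names, tuple layouts and calibration values are hypothetical. The closed form may also be arranged differently from the authors' Eq. (7).

```python
import numpy as np

def reconstruct_from_up(uc, vc, up, cam, proj):
    """Triangulate (x^w, y^w, z^w) from a camera pixel (uc, vc) and the projector coordinate up.

    cam  = (fu_c, fv_c, u0_c, v0_c): camera intrinsics in pixels (hypothetical layout)
    proj = (fu_p, u0_p, R_p, t_p): projector focal length and principal point along u^p,
           3x3 rotation matrix and 3-vector translation (hypothetical layout)
    The camera frame is taken as the world frame.
    """
    fu_c, fv_c, u0_c, v0_c = cam
    fu_p, u0_p, R, t = proj
    a = (uc - u0_c) / fu_c                      # x^w / z^w from the camera model
    b = (vc - v0_c) / fv_c                      # y^w / z^w from the camera model
    U = (up - u0_p) / fu_p                      # normalized projector coordinate
    M = R[0, 0] * a + R[0, 1] * b + R[0, 2]     # first row of R^p applied to (a, b, 1)
    D = R[2, 0] * a + R[2, 1] * b + R[2, 2]     # third row of R^p applied to (a, b, 1)
    # The projector model becomes U * (D*z + t3) = M*z + t1, which is linear in z.
    z = (t[0] - U * t[2]) / (U * D - M)
    return np.array([a * z, b * z, z])

def project_to_up(point, proj):
    """Projector pinhole model along u^p, used here only to generate test data."""
    fu_p, u0_p, R, t = proj
    pc = R @ np.asarray(point, dtype=float) + t
    return fu_p * pc[0] / pc[2] + u0_p

# Hypothetical calibration: projector rotated by 0.2 rad about the y axis.
cam = (2400.0, 2400.0, 1224.0, 1024.0)
th = 0.2
R_p = np.array([[np.cos(th), 0.0, np.sin(th)],
                [0.0, 1.0, 0.0],
                [-np.sin(th), 0.0, np.cos(th)]])
proj = (1800.0, 456.0, R_p, np.array([-200.0, 0.0, 30.0]))

point = np.array([50.0, -30.0, 900.0])          # a test point in mm
uc = cam[0] * point[0] / point[2] + cam[2]
vc = cam[1] * point[1] / point[2] + cam[3]
up = project_to_up(point, proj)
rec = reconstruct_from_up(uc, vc, up, cam, proj)
```

The round trip (project a known point, then reconstruct it) is a quick way to check a calibration-parameter layout before it is used in a precision analysis.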

Phase measurement

For the above-mentioned camera-projector correspondence, i.e., to obtain \({u}^{p}\) and/or \({v}^{p}\) corresponding to \(\left({u}^{c},{v}^{c}\right)\), phase measurement through phase-shifting has been widely used in FPP, due to its advantages of high accuracy, resolution, and noise immunity. First, N-step phase-shifting patterns with equal phase shifts are generated by a computer according to Eq. (8) and then sent to the projector,

where \(A\left({u}^{p},{v}^{p}\right)\) is the background intensity; \(B\left({u}^{p},{v}^{p}\right)\) is the fringe amplitude; \(\varphi\) is the phase to be solved. The vertical, horizontal, and optimal angle fringes are three typical projected fringes.17 Without loss of generality, we take the vertical fringes as an example for analysis and the results can be directly extended to the other two cases. The phase distribution of the vertical fringes is designed as,

where T is the fringe period.

When the generated fringes are projected onto the test object, they are modulated by the optical properties and the profile of the object surface. Both A and B change, and \(\varphi\) is redistributed; the new phase distribution carries the 3D shape information. The modulated fringe patterns captured by the camera have the same form as Eq. (8) and are given below for clarity,

The phase can then be calculated as,

where the subscript w indicates that the obtained phase is wrapped in the range from \(-\pi\) to \(\pi\). In addition, the background intensity and fringe amplitude can be obtained as,

From now on, we will focus on analyzing the captured fringe patterns, thus the coordinates \(\left({u}^{c},{v}^{c}\right)\) will often be omitted when no confusion is caused.
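The phase, background and amplitude computation can be sketched as follows. The sketch assumes the common convention \({I}_{n}=A+B\cos(\varphi +2\pi n/N)\), \(n=0,\dots ,N-1\), for Eq. (10); if the phase shift has the opposite sign, the sign of the arctangent numerator flips. The function name is hypothetical, and N ≥ 3 is required.

```python
import numpy as np

def phase_shifting(images):
    """N-step phase shifting (N >= 3), assuming I_n = A + B*cos(phi + 2*pi*n/N).

    images: array of shape (N, ...) with the phase-shifted frames stacked on axis 0.
    Returns the wrapped phase in [-pi, pi], the background A and the amplitude B.
    """
    images = np.asarray(images, dtype=float)
    N = images.shape[0]
    delta = 2 * np.pi * np.arange(N) / N
    shape = (N,) + (1,) * (images.ndim - 1)           # broadcast the shifts over pixel axes
    S = np.sum(images * np.sin(delta).reshape(shape), axis=0)
    C = np.sum(images * np.cos(delta).reshape(shape), axis=0)
    phi_w = np.arctan2(-S, C)                         # S = -(N*B/2) sin(phi), C = (N*B/2) cos(phi)
    A = images.mean(axis=0)
    B = 2.0 / N * np.sqrt(S ** 2 + C ** 2)
    return phi_w, A, B

# Synthetic four-step fringes for seven pixels with known phase.
N = 4
phi_true = np.linspace(-3.0, 3.0, 7)
delta = 2 * np.pi * np.arange(N) / N
frames = 120.0 + 100.0 * np.cos(phi_true[None, :] + delta[:, None])
phi_w, A_est, B_est = phase_shifting(frames)
```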

To obtain the continuous phase distribution, the wrapped phase should be unwrapped as17

where \(k\left({u}^{c},{v}^{c}\right)\) is the fringe order. When \(\left({u}^{c},{v}^{c}\right)\) and \(\left({u}^{p},{v}^{p}\right)\) correspond to the same object point, we have,

By substituting Eq. (15) into Eq. (9), \({u}^{p}\) is calculated from the unwrapped phase, as given in Eq. (16).
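The step from wrapped phase to projector coordinate can be sketched under two assumptions: Eq. (9) has the linear form \(\varphi =2\pi {u}^{p}/T\), and Eq. (14) reads \(\varphi ={\varphi }_{w}+2\pi k\). Here the fringe order is taken from the synthetic ground truth for illustration only; a real system obtains it from an unwrapping method. The function names and the period value are hypothetical.

```python
import numpy as np

def unwrap_with_order(phi_w, k):
    """Absolute phase phi = phi_w + 2*pi*k for an integer fringe order k."""
    return np.asarray(phi_w, dtype=float) + 2 * np.pi * np.asarray(k)

def projector_coordinate(phi, T):
    """u^p for vertical fringes, assuming phi = 2*pi*u^p / T."""
    return np.asarray(phi, dtype=float) * T / (2 * np.pi)

T = 18.0                                     # hypothetical fringe period (projector pixels)
up_true = np.array([10.3, 250.0, 911.7])
phi = 2 * np.pi * up_true / T
phi_w = np.angle(np.exp(1j * phi))           # wrap into (-pi, pi]
k = np.round((phi - phi_w) / (2 * np.pi))    # ground-truth fringe order, for illustration
up = projector_coordinate(unwrap_with_order(phi_w, k), T)
```

Because the map from \(\varphi\) to \({u}^{p}\) is linear, the same assumption gives \({\sigma }_{{u}^{p}}=T{\sigma }_{\varphi }/(2\pi )\) for the noise transfer.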

Two transfer models of noise

According to Principle of FPP, after calibration the measurement process of an FPP system can be divided into the capturing step and the three computing steps. The model chain thus contains six individual models, as shown in Fig. 1 and introduced in Introduction. We first study the two noise transfer models, MItoP and MPtoG, because they serve as intermediate bridges for noise transfer. As mentioned earlier, this work focuses on precision models, so we concentrate on the variances of \({I}_{n}\), \(\varphi\) and \({z}^{w}\).

MItoP: from fringe intensity to phase

Following the phase measurement procedure described in Phase measurement, \({\sigma }_{\varphi }^{2}\) can be evaluated quantitatively. First, we assume that the wrapped phase \({\varphi }_{w}\) can be correctly unwrapped into \(\varphi\), which is generally achievable.41 Thus, we have

Then, since the wrapped phase is calculated by Eq. (11), we have

where the partial derivative can be derived as

Finally, by substituting Eqs. (18) and (19) into Eq. (17), the ItoP precision conversion can be expressed as,

The model’s name is put in the equation for easy reference, which will be done for all the models to be introduced.
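The propagation in Eq. (17) can be made concrete for the N-step estimator. This is a sketch under the assumed sign convention \({I}_{n}=A+B\cos(\varphi +2\pi n/N)\), for which the partial derivatives in Eq. (19) work out to \(\partial \varphi /\partial {I}_{n}=-2\sin(\varphi +2\pi n/N)/(NB)\). The code checks this derivative against central finite differences. With a constant variance \({\sigma }_{I}^{2}\), the sum reduces to the familiar \(2{\sigma }_{I}^{2}/(N{B}^{2})\). The function names are hypothetical.

```python
import numpy as np

def wrapped_phase(I):
    """N-step estimator, assuming I_n = A + B*cos(phi + 2*pi*n/N)."""
    I = np.asarray(I, dtype=float)
    delta = 2 * np.pi * np.arange(I.size) / I.size
    return np.arctan2(-np.sum(I * np.sin(delta)), np.sum(I * np.cos(delta)))

def phase_variance(phi, B, var_I):
    """First-order propagation: sum_n (dphi/dI_n)^2 * var(I_n).

    var_I holds one intensity variance per phase-shifted frame; the values may differ.
    """
    var_I = np.asarray(var_I, dtype=float)
    N = var_I.size
    delta = 2 * np.pi * np.arange(N) / N
    dphi_dI = -2.0 * np.sin(phi + delta) / (N * B)
    return float(np.sum(dphi_dI ** 2 * var_I))

# Compare the analytic derivative with central finite differences at one pixel.
N, A, B, phi = 4, 120.0, 80.0, 0.7
I0 = A + B * np.cos(phi + 2 * np.pi * np.arange(N) / N)
eps = 1e-4
numeric = np.array([(wrapped_phase(I0 + eps * e) - wrapped_phase(I0 - eps * e)) / (2 * eps)
                    for e in np.eye(N)])
analytic = -2.0 * np.sin(phi + 2 * np.pi * np.arange(N) / N) / (N * B)
```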

MPtoG: from phase to geometry

Equation (7) reconstructs \({z}^{w}\) from three groups of variables and parameters. (i) A camera pixel \(\left({u}^{c},{v}^{c}\right)\) is specified for 3D reconstruction. (ii) For the specified \(\left({u}^{c},{v}^{c}\right)\), \({u}^{p}\) is calculated using Eq. (16), and its precision is affected by the phase measurement result. (iii) All other parameters on the right-hand side of Eq. (7) are system-related, pre-calibrated, and assumed to be perfectly known. Thus, \({u}^{p}\) is the only variable that needs to be considered for the measurement precision, and we have,

Firstly, we obtain \(\partial {z}^{w}/\partial {u}^{p}\) from Eq. (7) as,

where F indicates that it is a full model. We note that deriving Eq. (22) directly from Eq. (7) is lengthy. An easier shortcut is to refer to the derivation of \(\partial {u}^{p}/\partial {z}^{w}\) in Ref. 17. To estimate the variance of \({z}^{w}\) according to Eq. (21), we still need the variance of \({u}^{p}\), which can be calculated from Eq. (16) as,

Substituting Eqs. (22) and (23) into Eq. (21), MPtoG precision transfer model under ideal calibration can be obtained as,

This model is applicable to any practical system.

Next, Eq. (22) shows that \(\partial {z}^{w}/\partial {u}^{p}\) is not constant but varies with \(\left({u}^{c},{v}^{c}\right)\). This implies that an FPP system has an inherently spatially non-uniform error distribution. Fortunately, in a typical FPP system where the camera and projector are placed side by side, \({r}_{11}^{p}\), \({r}_{22}^{p}\) and \({r}_{33}^{p}\) are close to 1, and the other entries of \({{\textit{R}}}^{p}\) are much smaller. In addition, the camera’s focal lengths (unit: pixel) are larger than the number of detector pixels along the corresponding directions, so \(\left({u}^{c}-{u}_{0}^{c}\right)/{f}_{u}^{c}\) and \(\left({v}^{c}-{v}_{0}^{c}\right)/{f}_{v}^{c}\) are less than 1. Given these two facts, the first two terms in the brackets of both the numerator and the denominator of Eq. (22) can be omitted relative to the third term. Equation (22) can then be approximated by a simpler form,

Accordingly, we have the following approximated model,

where A indicates the approximation. This model is more concise and practical, both for guiding FPP system design and for analyzing the precision of an existing FPP system. However, care must be taken to check whether the approximation is valid. For this purpose, we suggest numerically evaluating the following relative error between the two partial derivatives when the system parameters are available,

\({E}_{r}\) is determined by the intrinsic and extrinsic parameters of the FPP system, \(\left({u}^{c},{v}^{c}\right)\), and the measured depth \({z}^{w}\). We define the maximum relative error \({E}_{{r}_{\max }}\) as the maximum value of \({E}_{r}\) at a depth \({z}^{w}\). As an example, for the verification in Validation of the theoretical reconstruction precision of FPP, we set up an FPP system and calibrate it within a depth range from 850 mm to 1000 mm. From the calibration parameters and depth values, \({E}_{{r}_{\max }}\) calculated using Eq. (27) ranges from 1.436% to 1.441% across this depth range. We then modify the system configuration in a less favorable manner and find that \({E}_{{r}_{\max }}\) indeed increases to \(\left[8.748 \% ,8.881 \% \right]\) across the calibration depth range from 380 mm to 480 mm. Details of these two systems are given in Validation of the theoretical reconstruction precision of FPP. In addition, we evaluate the system recommended by Zhang in his book as “capable of achieving good sensitivity and compact design” based on his experience.13 Its \({E}_{{r}_{\max }}\) is 1.117% at the designed measuring distance. Thus, MPtoG-A can be used when \({E}_{{r}_{\max }}\) is small (say, less than 5%), while MPtoG-F should be used otherwise.
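The check on \({E}_{{r}_{\max }}\) can be scripted. The sketch starts from standard pinhole forms for Eqs. (2), (3) and (5) (an assumption). Differentiating \({u}^{p}\) with respect to \({z}^{w}\) and inverting the result gives \(\partial {z}^{w}/\partial {u}^{p}=-{(D{z}^{w}+{t}_{3}^{p})}^{2}/[{f}_{u}^{p}({t}_{1}^{p}D-{t}_{3}^{p}M)]\). Here \(M={r}_{11}^{p}a+{r}_{12}^{p}b+{r}_{13}^{p}\), \(D={r}_{31}^{p}a+{r}_{32}^{p}b+{r}_{33}^{p}\), \(a=({u}^{c}-{u}_{0}^{c})/{f}_{u}^{c}\) and \(b=({v}^{c}-{v}_{0}^{c})/{f}_{v}^{c}\). Dropping the first two terms of M and D gives the approximate form. The relative error is assumed to be \(|{\rm{full}}-{\rm{approx}}|/|{\rm{full}}|\). This definition, the function names and all parameter values are hypothetical, and the expressions may be arranged differently from Eqs. (22), (25) and (27).

```python
import numpy as np

def dz_dup_full(uc, vc, z, cam, proj):
    """dz^w/du^p at camera pixel (uc, vc) and depth z (derived from assumed pinhole forms)."""
    fu_c, fv_c, u0_c, v0_c = cam
    fu_p, R, t = proj
    a = (uc - u0_c) / fu_c
    b = (vc - v0_c) / fv_c
    M = R[0, 0] * a + R[0, 1] * b + R[0, 2]
    D = R[2, 0] * a + R[2, 1] * b + R[2, 2]
    return -(D * z + t[2]) ** 2 / (fu_p * (t[0] * D - t[2] * M))

def dz_dup_approx(z, proj):
    """Same expression with the first two bracket terms dropped (M -> r13, D -> r33)."""
    fu_p, R, t = proj
    return -(R[2, 2] * z + t[2]) ** 2 / (fu_p * (t[0] * R[2, 2] - t[2] * R[0, 2]))

def max_relative_error(z, cam, proj, width, height, step=16):
    """Largest |full - approx| / |full| over a grid of camera pixels at depth z."""
    uc, vc = np.meshgrid(np.arange(0, width, step), np.arange(0, height, step))
    full = dz_dup_full(uc, vc, z, cam, proj)
    return float(np.max(np.abs(full - dz_dup_approx(z, proj)) / np.abs(full)))

# Hypothetical system: projector rotated by 0.2 rad about y, 200 mm baseline.
cam = (2400.0, 2400.0, 1224.0, 1024.0)
th = 0.2
R_p = np.array([[np.cos(th), 0.0, np.sin(th)],
                [0.0, 1.0, 0.0],
                [-np.sin(th), 0.0, np.cos(th)]])
proj = (1800.0, R_p, np.array([-200.0, 0.0, 30.0]))
E_r_max = max_relative_error(900.0, cam, proj, width=2448, height=2048)
```

Evaluating E_r_max at several depths across the calibrated range gives the same kind of interval that is reported above for the two experimental systems.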

Finally, according to our analysis,17 for the vertical fringe projection, the optimal precision can be achieved when the epipolar line of the camera and projector is horizontal. In this case, the rotation matrix is an identity matrix and \({t}_{3}^{p}\) = 0, which gives

where E stands for epipolar. This model is useful for precision prediction, and it also clearly shows possible ways to improve precision: reducing the fringe period T, the object distance \({z}^{w}\) and the noise level \({\sigma }_{\varphi }\), and increasing the focal length \({f}_{u}^{p}\) and the baseline \({t}_{1}^{p}\) between the camera and projector. Among these, \({\sigma }_{\varphi }\) is elaborated in MP: model the phase noise using the new MI-C, and T is discussed in The optimal period of the projected fringe patterns. The others are often constrained by the practical measurement task. We note that when the epipolar line is not horizontal, projecting fringes at the optimal angle17 can also achieve the same precision.
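The trends listed above can be checked with a short calculation. In the epipolar configuration (\({R}^{p}=I\), \({t}_{3}^{p}=0\)), standard pinhole forms give \(|\partial {z}^{w}/\partial {u}^{p}|={({z}^{w})}^{2}/({f}_{u}^{p}|{t}_{1}^{p}|)\). Combined with \({u}^{p}=\varphi T/(2\pi )\), this yields \({\sigma }_{z}={({z}^{w})}^{2}T{\sigma }_{\varphi }/(2\pi {f}_{u}^{p}|{t}_{1}^{p}|)\). This is our own reading of MPtoG-E under those assumptions; the function name and numbers are hypothetical.

```python
import math

def sigma_z_epipolar(z, T, sigma_phi, fu_p, t1):
    """Depth standard deviation for R^p = I, t3^p = 0, assuming u^p = phi*T/(2*pi)."""
    sigma_up = T * sigma_phi / (2 * math.pi)   # phase noise -> projector-pixel noise
    dz_dup = z ** 2 / (fu_p * abs(t1))          # |dz^w/du^p| in the epipolar geometry
    return dz_dup * sigma_up

# Hypothetical: z = 900 mm, T = 18 px, sigma_phi = 0.01 rad, f_u^p = 2000 px, t1 = 200 mm.
sigma_z = sigma_z_epipolar(900.0, 18.0, 0.01, 2000.0, 200.0)
```

With these values, sigma_z comes out at about 0.058 mm. Halving T halves it, and doubling the baseline also halves it.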

Four stage models of noise

In this section, we introduce and explain the four stage models, MC, MI, MP and MG, in Sections MC: an existing camera noise model to be adopted to MG: integrated noise model for the reconstructed geometry, respectively.

MC: an existing camera noise model to be adopted

We first explain an existing noise model that we will adopt in FPP. A comparison with the traditional Gaussian model is given in Comparison with the traditional Gaussian model. Figure 2 shows the mathematical model of a linear camera sensor. The sensor converts the photons hitting a pixel area during the exposure time into a digital number (DN), which is the gray value of the corresponding pixel in the captured image. We follow the convention of the European Machine Vision Association (EMVA) Standard 1288,33 and use DN as the unit of gray value. During the exposure time, the conversion of the average number of photons \(\left({\mu }_{p}\right)\) into the average number of electrons \(\left({\mu }_{e},\ {\rm{unit}}\!:{e}^{-}\right)\) is determined by the quantum efficiency \(\eta\) of the sensor,

Mathematical model of a camera, adapted from33 with minor modification

Since the number of electrons fluctuates statistically with Poisson probability distribution,33 we have,

In addition, a sensor generates electrons \({\mu }_{d}\) thermally, even without light. Both types of electrons are converted into a voltage, amplified, and finally converted into a digital signal I (unit: DN) by an analog-to-digital converter (ADC). The whole process can be described as,

where \(K({\rm{unit}}\!\!:{\rm{DN}}{\left({{\rm{e}}}^{-}\right)}^{-1})\) is the overall gain of a camera; \({I}_{{dark}}=K{\mu }_{d}\) is called dark signal. Because all noise sources are independent, the total variance of the digital signal \(I\) can be expressed as,

which includes three noise sources: (i) shot noise with a variance of \({\sigma }_{e}^{2}\) \(\left({{\rm{unit}}\!\!:\left({e}^{-}\right)}^{2}\right)\); (ii) all the noise sources related to the sensor read out and amplifier circuits represented by \({\sigma }_{d}^{2}\) \(\left({{\rm{unit}}\!\!:\left({e}^{-}\right)}^{2}\right)\); (iii) the quantization noise with a variance of \({\sigma }_{q}^{2}=1/12\left({{\rm{unit}}\!\!:\left({\rm{DN}}\right)}^{2}\right)\).42

Substituting Eqs. (30) and (31) into Eq. (32) gives,

Equation (33) is central to sensor characterization, which camera manufacturers use to test camera specifications, and it is known as the photon transfer curve (PTC) in EMVA 1288.33 For users, it is more convenient to rewrite this equation as,

where MC denotes the camera model. Clearly, the intensity variance increases linearly with the image intensity, a fact that is seldom noticed in the FPP literature.
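A small Monte Carlo experiment can illustrate the linear model. The sketch assumes that Eq. (35) has the form \({\sigma }_{I}^{2}=K(I-{I}_{dark})+{C}_{n}\) with \({C}_{n}={K}^{2}{\sigma }_{d}^{2}+{\sigma }_{q}^{2}\), following the EMVA 1288 linear model. The simulated pixel draws Poisson photo-electrons and adds Gaussian read-out noise. It treats dark electrons as a constant offset, so any dark shot noise is folded into \({\sigma }_{d}\), and it rounds to integer DN. K = 0.0232 DN/e⁻ is the gain measured later in this paper. \({\sigma }_{d}\), \({\mu }_{d}\), \({\mu }_{e}\) and the function names are hypothetical.

```python
import numpy as np

def camera_variance(I, K, C_n, I_dark=0.0):
    """Linear camera noise model: var(I) = K*(I - I_dark) + C_n, in DN^2."""
    return K * (np.asarray(I, dtype=float) - I_dark) + C_n

def simulate_pixel(mu_e, K, sigma_d, mu_d=0.0, n=200_000, seed=0):
    """Simulate n exposures of one pixel.

    Photo-electrons are Poisson(mu_e); dark electrons are a constant offset mu_d;
    read-out/amplifier noise is Gaussian with std sigma_d (electrons); the ADC rounds to DN.
    """
    rng = np.random.default_rng(seed)
    electrons = rng.poisson(mu_e, n) + mu_d + rng.normal(0.0, sigma_d, n)
    return np.round(K * electrons)

K = 0.0232                                  # gain measured in this paper (8-bit)
sigma_d, mu_d, mu_e = 10.0, 500.0, 4000.0   # hypothetical sensor and exposure values
C_n = K ** 2 * sigma_d ** 2 + 1.0 / 12.0    # read-out noise plus quantization noise
samples = simulate_pixel(mu_e, K, sigma_d, mu_d)
predicted = camera_variance(samples.mean(), K, C_n, I_dark=K * mu_d)
measured = samples.var()
```

The 1/12 DN² quantization term is accurate here because the simulated noise standard deviation (about 1.5 DN) is larger than one quantization step.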

MI: model the fringe intensity noise

Applying MC to the fringe intensities is straightforward but significant, because it influences the entire model chain. Substituting the acquired phase-shifting fringe patterns introduced in Phase measurement into Eq. (35), we have

where C indicates that this model is based on the new camera model.

MP: model the phase noise using the new MI-C

Now, based on MI-C, we develop three new phase models for different purposes. By substituting MI-C in Eq. (37) into MItoP-F in Eq. (20), the phase variance is obtained as,

where F stands for the full phase noise model. This equation clearly indicates that the phase variance is determined by the fringe parameters (\({A}^{c}\) and \({B}^{c}\)) and the camera parameters (K and \({C}_{n}\)). K and \({C}_{n}\) can be obtained from the sensor manufacturer; for example, FLIR recently released its 2022 Camera Sensor Review, tested according to EMVA 1288.43 Once the acquired fringe intensity is set, the camera parameters fully determine the variance of the phase noise.

According to the K values provided by FLIR,43 the \({C}_{n}\) term is negligible compared with \(K{A}^{c}\) in actual measurements, especially when the camera captures images at 10-bit greyscale or higher. For example, for the camera used in this study (8-bit), \(K{A}^{c}/{C}_{n} > 10\), as shown in Validation of the camera noise model. Thus, Eq. (38) can be approximated as,

where A in the subscript stands for approximation. This model can be used for quick estimation. However, MP-A still relies on the background intensity \({A}^{c}\) and fringe amplitude \({B}^{c}\), which will change for different object surface properties.

In a special case where the acquired fringe image is close to the saturation value, the overall gain of a camera can be expressed as,33

where \({\mu }_{e.{Sat}}\) \(\left({\rm{unit}}\!\!:{e}^{-}\right)\) is the saturation capacity. For a fringe pattern expressed in Eq. (10), the maximum intensity is \({I}_{{Sat}}={A}^{c}+{B}^{c}\). If, further, \({A}^{c}\approx {B}^{c}\), we have \({I}_{{Sat}}\approx 2{B}^{c}\), and Eq. (40) can be rewritten as,

Substituting Eq. (41) into Eq. (39), the phase noise can be simplified as,

which is named MP-S, where S stands for saturation. This model has two interesting features. First, the noise variance no longer depends on \({A}^{c}\) or \({B}^{c}\), which reveals the measurement precision limit of an ideal FPP system. Second, \({\mu }_{e.{Sat}}\) is also a basic parameter provided by the camera manufacturer, which makes the model very convenient to use. This model is most useful for theoretically guiding sensor selection.
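The three phase models can be compared numerically. The sketch assumes that MI-C has the form \({\sigma }_{{I}_{n}}^{2}=K{I}_{n}+{C}_{n}\), with dark-corrected intensities and the convention \({I}_{n}=A+B\cos(\varphi +2\pi n/N)\). Summing the per-frame contributions gives the full form. For N ≥ 4, that sum has the closed form \(2(KA+{C}_{n})/(N{B}^{2})\). Dropping \({C}_{n}\) gives \(2KA/(N{B}^{2})\), and substituting \(K=2B/{\mu }_{e.{Sat}}\) with \(A=B\) gives \(4/(N{\mu }_{e.{Sat}})\). These closed forms are our own derivation under the stated assumptions and serve as a cross-check of Eqs. (38), (39) and (42); they are not copied from them. For N = 3, an extra phase-dependent term appears, and the summed form retains it. The function names and fringe values are hypothetical.

```python
import numpy as np

def mp_full(phi, A, B, K, C_n, N):
    """Phase variance from per-frame variances K*I_n + C_n, summed over the N frames."""
    theta = phi + 2 * np.pi * np.arange(N) / N
    var_I = K * (A + B * np.cos(theta)) + C_n      # camera model applied to each frame
    dphi_dI = -2.0 * np.sin(theta) / (N * B)       # sensitivity of the N-step estimator
    return float(np.sum(dphi_dI ** 2 * var_I))

def mp_approx(A, B, K, N):
    """Closed form for N >= 4 with C_n neglected."""
    return 2.0 * K * A / (N * B ** 2)

def mp_saturation(mu_e_sat, N):
    """Near-saturation form, assuming A ~ B and K ~ 2B / mu_e_sat."""
    return 4.0 / (N * mu_e_sat)

K, C_n = 0.0232, 0.1187        # camera values reported in Validation of the camera noise model
A, B, N = 120.0, 100.0, 4      # hypothetical fringe parameters
print(mp_full(0.3, A, B, K, C_n, N), mp_approx(A, B, K, N))
```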

Table 1 shows the overall gain and saturation capacity of several commonly used sensors, obtained from the 2022 Camera Sensor Review-Mono released by FLIR.43 Note that the gain provided by FLIR is specified for 16-bit greyscale values, i.e., in 16-bit DN per electron.43 Therefore, if 8-bit, 10-bit or 12-bit greyscale values are used, the gain values in Table 1 must be divided by \({2}^{8}\), \({2}^{6}\) and \({2}^{4}\), respectively. (Note that \({C}_{n}\) cannot be obtained by a similar conversion. If \({C}_{n}\) is required for a specific bit depth, it is advisable to contact the camera manufacturer.)
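The bit-depth conversion is simple arithmetic. This is a minimal sketch, assuming the tabulated gain is expressed in 16-bit DN per electron; the function name is hypothetical.

```python
def gain_for_bit_depth(K_16bit, bits):
    """Rescale a gain quoted in 16-bit DN per electron to `bits`-bit DN per electron."""
    if not 1 <= bits <= 16:
        raise ValueError("bits must be between 1 and 16")
    return K_16bit / 2 ** (16 - bits)

for bits in (8, 10, 12):
    print(bits, gain_for_bit_depth(5.7, bits))
```

For the first sensor in Table 1, this reproduces the 5.7/2⁸ conversion used in Validation of the camera’s key parameters.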

MG: integrated noise model for the reconstructed geometry

We combine an MP model (MP-F, MP-A or MP-S) developed in MP: model the phase noise using the new MI-C with an MPtoG model (MPtoG-F, MPtoG-A or MPtoG-E) developed in MPtoG: from phase to geometry. This gives nine combinations and thus nine versions of MG, of which the following four are the most useful and significant. The first version integrates MP-F (full model) and MPtoG-F (full model). It accurately evaluates the measurement precision of a constructed FPP system under any circumstances, but it is also the most complicated:

The second version integrates MP-F (full model) and MPtoG-A (approximation model), for use when \({C}_{n}\) is available from the camera manufacturer:

The third version integrates MP-A (approximation model) and MPtoG-A (approximation model) when \({C}_{n}\) is not available from the camera manufacturer:

The fourth version integrates MP-S and MPtoG-E. It is the simplest version and can be used to predict the theoretical precision limit before system development, because the fringe intensity approaches saturation in MP-S and the fringe angle is optimal in MPtoG-E.
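The fourth version can be evaluated in a few lines for a candidate design. The sketch rests on our own reading of the models, not on copies of their equations. It assumes that MP-S reads \({\sigma }_{\varphi }^{2}=4/(N{\mu }_{e.{Sat}})\) and that MPtoG-E reads \({\sigma }_{z}={({z}^{w})}^{2}T{\sigma }_{\varphi }/(2\pi {f}_{u}^{p}|{t}_{1}^{p}|)\). Together these give \({\sigma }_{z}={({z}^{w})}^{2}T/(\pi {f}_{u}^{p}|{t}_{1}^{p}|\sqrt{N{\mu }_{e.{Sat}}})\). The function name and all design values are hypothetical.

```python
import math

def mg_limit(z, T, fu_p, t1, N, mu_e_sat):
    """Depth std at the precision limit: MP-S phase noise propagated with MPtoG-E."""
    sigma_phi = math.sqrt(4.0 / (N * mu_e_sat))                      # MP-S
    return z ** 2 * T * sigma_phi / (2 * math.pi * fu_p * abs(t1))   # MPtoG-E

# Hypothetical design: 900 mm stand-off, 18-pixel period, f_u^p = 2000 px,
# 200 mm baseline, 4-step phase shifting, 10345 e- saturation capacity.
sigma_z = mg_limit(900.0, 18.0, 2000.0, 200.0, 4, 10345.0)
```

In this form, quadrupling N or \({\mu }_{e.{Sat}}\) only halves \({\sigma }_{z}\), whereas halving the stand-off distance reduces it fourfold.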

Comparison with the traditional Gaussian model

The Gaussian noise model has been used in FPP almost exhaustively. It assumes that the noise at a pixel is Gaussian with a mean of zero and a variance of \({\sigma }_{I}^{2}\) which is shared by all the pixels in all the phase-shifted fringe patterns.25,26,27,31 Consequently, the variance of the fringe intensity can be written as

Combining MI-G with MItoP model gives the following phase variance,

Further combining MP-G with MPtoG-F gives

We now compare our adopted camera model MC with the Gaussian model MI-G. Referring to Eq. (35) and noting that \({C}_{n}\) is negligible, we have \({\sigma }_{I}^{2}={KI}\), which spans a wide range of variances. For example, if I varies from 30 DN to 240 DN across different phase shifts, the variance varies from 30K to 240K, i.e., by a factor of 8. In the Gaussian model, however, the variance is assumed to be constant, which affects the subsequent precision evaluation at all stages of the model chain. Moreover, in the Gaussian noise model the noise variance \({\sigma }_{I}^{2}\) is unknown in advance and has to be measured. The measured value varies with the object and the surrounding environment, which is undesirable.
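The effect of intensity-dependent variance can be illustrated by simulation. The sketch uses the gain K = 0.0232 DN/e⁻ and \({C}_{n}=0.1187\) DN² reported in Validation of the camera noise model, together with a hypothetical four-step fringe whose frames span 30 DN to 240 DN. It draws Gaussian noise whose per-frame variance follows \(K{I}_{n}+{C}_{n}\), an approximation of the Poisson-dominated camera noise. It then compares the simulated phase variance with the first-order prediction, using the arctangent estimator under the assumed convention \({I}_{n}=A+B\cos(\varphi +2\pi n/N)\).

```python
import numpy as np

K, C_n = 0.0232, 0.1187                   # camera values reported in this paper (8-bit)
N, A, B, phi = 4, 135.0, 105.0, 0.0       # hypothetical fringe: frames at 240, 135, 30, 135 DN
delta = 2 * np.pi * np.arange(N) / N
I_mean = A + B * np.cos(phi + delta)
var_frame = K * I_mean + C_n              # camera model: variance follows intensity
ratio = var_frame.max() / var_frame.min()

# Simulate noisy frames (Gaussian approximation with per-frame variance) and estimate phase.
rng = np.random.default_rng(1)
trials = 100_000
frames = I_mean + rng.normal(size=(trials, N)) * np.sqrt(var_frame)
phi_hat = np.arctan2(-(frames * np.sin(delta)).sum(axis=1),
                     (frames * np.cos(delta)).sum(axis=1))
var_sim = phi_hat.var()

# First-order prediction with per-frame variances.
var_pred = np.sum((2 * np.sin(phi + delta) / (N * B)) ** 2 * var_frame)
```

With \({C}_{n}\) kept, the ratio of the largest to the smallest per-frame variance is about 7. The factor of 8 stated above applies when \({C}_{n}\) is neglected.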

For easy reference, Table 2 summarizes the most useful phase and geometry models.

Experimental verification

In this section, we carefully verify the following key aspects to support our theoretical analysis:

(i) This is the first time the camera noise model has been adopted for FPP, so we verify it in detail by measurement (Validation of the camera noise model);

(ii) We stated in MC: an existing camera noise model to be adopted that camera noise is dominant compared with other error sources. We measure the influence of the projector to show that it is indeed negligible (Validation of the projector noise);

(iii) We further verify the above statement with respect to all error sources. We show that the phase variance measured in a real experiment, which is influenced by all error sources, agrees closely with that predicted by our proposed phase models, which consider camera noise only (Validation of the phase noise models);

(iv) Finally, we verify the reconstruction precision, which is one of the most important indicators of FPP (Validation of the theoretical reconstruction precision of FPP).

In this study, all experiments are conducted using the same camera (Model: MER2-502-79U3M-L, resolution: \(2048\times 2448\), sensor: Sony IMX250).

Validation of the camera noise model

In this section, we validate the camera noise model expressed in Eq. (35). We measure the PTC of the camera using an integrating sphere, which illuminates the image sensor homogeneously.44 Figure 3a shows the setup in a dark room with an illuminance below \({10}^{-4}\) lx. During the test, the camera exposure time is set to 20 ms, and images are captured as 8-bit monochrome and saved in raw format. The brightness of the integrating sphere is increased gradually from the initial dark field, so that the mean image gray level rises from its initial small value to 250 DN in steps of approximately 10 DN. In the dark field, we measure the mean gray value of all the pixels as

From each captured image, the mean gray value of all the pixels is calculated as \(I\), and the variance of these pixels is taken as \({\sigma }_{I}^{2}\). We then plot \({\sigma }_{I}^{2}\) against \(I-{I}_{{dark}}\) as blue dots in Fig. 3b, which serves as our measured PTC.
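A minimal sketch of how the measured PTC points could be computed from a set of flat-field images, following the description above (spatial mean and spatial variance of all pixels in each image). The function name is hypothetical, the images are assumed to be NumPy arrays, and the unbiased variance (`ddof=1`) is our assumption rather than a detail stated in the text.

```python
import numpy as np


def ptc_points(flat_images, dark_image):
    """Return the PTC abscissa (I - I_dark) and ordinate (sigma_I^2).

    flat_images: sequence of 2D arrays, one per integrating-sphere brightness level.
    dark_image:  2D array captured in the dark field.
    """
    # I_dark: mean gray value of all pixels in the dark field.
    i_dark = float(np.mean(np.asarray(dark_image, dtype=np.float64)))
    x, y = [], []
    for img in flat_images:
        img = np.asarray(img, dtype=np.float64)
        x.append(img.mean() - i_dark)   # spatial mean minus the dark level
        y.append(img.var(ddof=1))       # spatial variance (assumed unbiased)
    return np.array(x), np.array(y)
```

As a design note, the EMVA 1288 standard instead estimates the temporal variance from the difference of two frames taken at the same illumination, which suppresses fixed-pattern non-uniformity; the sketch follows the single-image procedure described in the text.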

Validation of the camera’s linear noise model

We fit a straight line by least squares to the data between the two red points in Fig. 3b, including the red points themselves. The fitted line is shown in Fig. 3b as a red dotted line.

The coefficient of determination,45 \({R}^{2}\), is 0.9996, demonstrating excellent linearity between \({\sigma }_{I}^{2}\) and \(I-{I}_{{dark}}\). This result clearly shows that the noise variance does vary with the input intensity, a phenomenon that should not be ignored in precision evaluation.
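The straight-line fit and its coefficient of determination can be reproduced with ordinary least squares. This is a sketch on synthetic, noise-free data, assuming `x` and `y` hold the PTC points between the two red markers; `np.polyfit` with degree 1 returns the slope and intercept. Per the text, the slope is the quantity used as the overall gain \(K\) via Eq. (51); the intercept is returned but not interpreted here.

```python
import numpy as np


def fit_ptc_line(x, y):
    """Least-squares line y = slope * x + intercept and its coefficient of determination."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    ss_res = np.sum(residuals ** 2)            # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)       # total sum of squares
    return slope, intercept, 1.0 - ss_res / ss_tot


# Synthetic example; the numbers are chosen for illustration only.
x_demo = np.arange(10.0, 240.0, 10.0)
y_demo = 0.0232 * x_demo + 0.12
k_est, b_est, r2 = fit_ptc_line(x_demo, y_demo)
```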

Validation of the camera’s key parameters

According to Eq. (51), the estimated slope gives the overall gain K = 0.0232 \({\rm{DN}}{\left({{\rm{e}}}^{-}\right)}^{-1}\). The saturation gray value is \({I}_{{Sat}}=240\) DN, shown as the green point in Fig. 3b. Therefore, the saturation capacity can be obtained as,

We compare our results with two other independent test results:

(i) FLIR's result is given in the first row of Table 1, where the \(K\) value is measured for a 16-bit grayscale. We adapt it to 8-bit by converting the \(K\) value as \(5.7/{2}^{8}\approx 0.0222\) and adapt \({\mu }_{e.{Sat}}\) accordingly. The result is shown in the first row of Table 3.

(ii) The data shared by Daheng46 undergo a similar conversion from 10-bit to 8-bit.

(iii) The relative differences are computed by taking FLIR's results as the ground truth. All differences are below 7%, and the results are therefore considered consistent.

Table 3 Comparison of the camera's key parameters
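The bit-depth conversion and the consistency check above amount to simple arithmetic, sketched below. Following the text, the gain is divided by \({2}^{{b}_{from}-{b}_{to}}\) when moving between bit depths. The saturation-capacity helper is our assumption: it uses the dark-corrected form \(\left({I}_{{Sat}}-{I}_{{dark}}\right)/K\) familiar from EMVA 1288, and the default `i_dark=0.0` is only a placeholder; the paper's own equation for \({\mu }_{e.{Sat}}\) is not reproduced here. All function names are hypothetical.

```python
def convert_gain(k_gain, from_bits, to_bits=8):
    """Rescale the overall gain K (DN per electron) between ADC bit depths."""
    return k_gain / 2 ** (from_bits - to_bits)


def saturation_capacity(i_sat, k_gain, i_dark=0.0):
    """Saturation capacity in electrons, assuming mu_e.Sat = (I_Sat - I_dark) / K."""
    return (i_sat - i_dark) / k_gain


def relative_difference(value, reference):
    """Relative difference with respect to a reference value (e.g., FLIR's result)."""
    return abs(value - reference) / abs(reference)


k_flir_8bit = convert_gain(5.7, from_bits=16)   # 5.7 / 2**8, as in the text
k_ours = 0.0232                                  # PTC slope, DN per electron
print(relative_difference(k_ours, k_flir_8bit) < 0.07)  # True
```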

Validation of the significance of \({C}_{n}\)

Substituting Eq. (50) into Eq. (51) gives,

The value of \({C}_{n}\) is thus \(0.1187{\left({\rm{DN}}\right)}^{2}\). According to Eq. (38), we can calculate the ratio for the following two particular cases:

Thus, \({C}_{n}\) is more than an order of magnitude smaller and can be neglected in the theoretical analysis, supporting the statement made in the section MI: model the fringe intensity noise. To be safe, since all the camera parameters above are available from the camera manufacturer, users are encouraged to evaluate this ratio for their actual measurement conditions.
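Following the suggestion to evaluate the ratio for one's own camera, a quick check might look as follows. We assume here that the ratio of interest compares \({C}_{n}\) with the signal-dependent term \(K\cdot I\) of the intensity variance at a chosen gray level; the exact expression derived from Eq. (38) may differ, and the gray levels in the loop are illustrative only.

```python
def cn_ratio(c_n, k_gain, intensity_dn):
    """Ratio of the signal-independent noise C_n to the signal-dependent term K * I."""
    return c_n / (k_gain * intensity_dn)


C_N = 0.1187   # DN^2, value reported above
K = 0.0232     # DN per electron, value reported above
for level in (64.0, 127.5):  # illustrative gray levels (DN)
    print(f"I = {level:6.1f} DN -> C_n / (K I) = {cn_ratio(C_N, K, level):.3f}")
```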

Since the experiment validates the camera noise model, the parameters we measured (Table 3) are used in all subsequent verifications.

Validation of the projector noise

In this experiment, we study the influence of the projector's stability on the captured image intensity. The projector is a DLP projector (Model: DLP4500, resolution: 1140×912), and the camera is equipped with a VTG-1614-M4 lens with a focal length of 16 mm. Before the verification, the projector was switched on for 30 min to reach thermal equilibrium, with its LED set to green light. The projector then projects an image of uniform gray level onto a white plate 500 times, and the camera captures 500 images. To check the stability of the projector, the temporal evolutions of two randomly selected pixels, one on the left of the camera image at (592, 800) and the other on the right at (1536, 1836), are shown in Fig. 4a. Interestingly, the two pixels show different intensities (the left pixel is brighter than the right one). This is a common phenomenon, caused mainly by the non-uniformity and directional scattering of the plate, by the angle between the camera's viewing direction and the direction of the incident light,47 and by the non-uniform illumination of the projector, whose typical uniformity is around 90%.48

We now statistically examine the projector’s stability. We calculate the mean and variance for each pixel separately as,

where \({I}_{l}\left({u}^{c},{v}^{c}\right)\) is the intensity of the \({l}^{{th}}\) captured image at the pixel \(\left({u}^{c},{v}^{c}\right)\), and the total number of frames is L = 500 in our test.
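The per-pixel temporal mean and variance over the \(L\) frames can be sketched with NumPy as follows. The array layout `(L, H, W)` and the unbiased \(1/(L-1)\) normalization are our assumptions; with \(L=500\) the choice of normalization changes the variance by only about 0.2%. The function name is hypothetical.

```python
import numpy as np


def temporal_mean_var(stack):
    """Per-pixel temporal mean and variance of an (L, H, W) stack of frames."""
    stack = np.asarray(stack, dtype=np.float64)
    mean = stack.mean(axis=0)          # mean intensity at each pixel
    var = stack.var(axis=0, ddof=1)    # variance at each pixel, assumed 1/(L-1)
    return mean, var
```

The same function applies unchanged to stacks of wrapped phases or of \({A}_{l}^{c}\) and \({B}_{l}^{c}\), which is how the per-pixel statistics are reused in the phase-noise validation.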

We then use the mean intensity \(\bar{I}\left({u}^{c},{v}^{c}\right)\) to predict, from Fig. 3b, a reference intensity variance \({\sigma }_{{I}_{r}}^{2}\) that is due solely to the camera. Linear interpolation is used when the input intensity falls between two available data points in Fig. 3b. For a qualitative observation, Fig. 4b shows the distributions of \({\sigma }_{I}^{2}\) and \({\sigma }_{{I}_{r}}^{2}\) along the middle row of pixels on the camera. The difference between the measured variance and the reference variance at each pixel is very small, and the latter appears to be a smoothed version of the former. Quantitatively, the mean and variance of the differences over all pixels are \(-0.0864{\left({\rm{DN}}\right)}^{2}\) and \(0.0837{\left({\rm{DN}}\right)}^{4}\), respectively, which convincingly demonstrates that the measured variance, including the influence of the projector's stability (and, implicitly, all other possible disturbances), closely matches the variance predicted without considering such influence. Thus, when evaluating a system's precision limit, we can safely ignore the projector's influence. We note that the measured variance should, in principle, be larger than the theoretical prediction, whereas in our case it is slightly smaller; we attribute this to imperfections in the experimental data in both Figs. 3b and 4.
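The camera-only reference variance and the difference statistics can be sketched as follows. `np.interp` performs the piecewise-linear interpolation described above (and clamps to the end values outside the measured range). We assume that the PTC abscissa is expressed in the same intensity convention as the mean image, i.e., either both raw or both dark-corrected by \({I}_{{dark}}\); the helper names are hypothetical.

```python
import numpy as np


def reference_variance(mean_img, ptc_intensity, ptc_variance):
    """Camera-only variance predicted from the measured PTC by linear interpolation."""
    xp = np.asarray(ptc_intensity, dtype=np.float64)
    fp = np.asarray(ptc_variance, dtype=np.float64)
    order = np.argsort(xp)                 # np.interp needs increasing sample points
    return np.interp(mean_img, xp[order], fp[order])


def difference_stats(measured_var, reference_var):
    """Mean and (population) variance of the per-pixel variance differences."""
    diff = np.asarray(measured_var, dtype=np.float64) - np.asarray(reference_var, dtype=np.float64)
    return diff.mean(), diff.var()
```

With the difference defined as measured minus reference, a negative mean indicates that the measured variance is, on average, below the camera-only prediction.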

Validation of the phase noise models

In this section, we validate the proposed phase noise models with the following procedure:

(i) Sequentially project 500 sets of fringe patterns with \({A}^{p}={B}^{p}=127.5\). Each set consists of 9-step phase-shifted fringe patterns with a period of 21 pixels. Capture all 500 sets of fringe patterns and define the region of interest (ROI) for validation;

(ii) Calculate the wrapped phase \({\varphi }_{{wl}}\), background intensity \({A}_{l}^{c}\) and fringe amplitude \({B}_{l}^{c}\) (\(1\le l\le 500\)) from the captured fringe patterns using Eqs. (11), (12) and (13), respectively;

(iii) Calculate the experimental phase variance of each pixel, \({\sigma }_{{\varphi }_{e}\left({u}^{c},{v}^{c}\right)}^{2}\), using Eq. (56) with \({I}_{l}\left({u}^{c},{v}^{c}\right)\) replaced by \({\varphi }_{{wl}}\left({u}^{c},{v}^{c}\right)\);

(iv) Calculate the means of the background intensity \(\bar{{A}^{c}}\left({u}^{c},{v}^{c}\right)\) and fringe amplitude \(\bar{{B}^{c}}\left({u}^{c},{v}^{c}\right)\) using Eq. (55) with \({I}_{l}\left({u}^{c},{v}^{c}\right)\) replaced by \({A}_{l}^{c}\left({u}^{c},{v}^{c}\right)\) and \({B}_{l}^{c}\left({u}^{c},{v}^{c}\right)\), respectively;

(v) Obtain the model-based phase variance of each pixel, \({\sigma }_{{\varphi }_{r}^{M}\left({u}^{c},{v}^{c}\right)}^{2}\), from a particular model M in Eq. (38), (39) or (42), where M is F, A or S for MP-F, MP-A and MP-S, respectively;

(vi) Take the phase variance difference (PVD) as \({\delta }^{M}\left({\sigma }_{\varphi \left({u}^{c},{v}^{c}\right)}^{2}\right)={\sigma }_{{\varphi }_{e}\left({u}^{c},{v}^{c}\right)}^{2}-{\sigma }_{{\varphi }_{r}^{M}\left({u}^{c},{v}^{c}\right)}^{2}\) and calculate its mean for quantitative comparison.
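Steps (ii), (iii) and (vi) can be illustrated with the standard N-step phase-shifting estimator. This is a sketch under an assumed convention, \({I}_{n}=A+B\cos \left(\varphi +2\pi n/N\right)\) with \(n=0,\ldots ,N-1\); Eqs. (11), (12) and (13) may use a different sign or indexing convention, and the function names are hypothetical. The temporal phase variance is computed on the wrapped phase, which is only meaningful when the phase stays away from the \(\pm \pi\) wrap boundary.

```python
import numpy as np


def phase_shift_retrieve(frames):
    """Wrapped phase, background A and amplitude B from N phase-shifted frames.

    frames: array of shape (N, ...) with I_n = A + B*cos(phi + 2*pi*n/N) (assumed).
    """
    frames = np.asarray(frames, dtype=np.float64)
    n_steps = frames.shape[0]
    delta = 2.0 * np.pi * np.arange(n_steps) / n_steps
    bshape = (n_steps,) + (1,) * (frames.ndim - 1)   # broadcast shifts over pixels
    s = np.sum(frames * np.sin(delta).reshape(bshape), axis=0)
    c = np.sum(frames * np.cos(delta).reshape(bshape), axis=0)
    phi = np.arctan2(-s, c)                 # wrapped phase in [-pi, pi]
    a = frames.mean(axis=0)                 # background intensity
    b = 2.0 / n_steps * np.hypot(s, c)      # fringe amplitude
    return phi, a, b


def phase_variance_difference(phi_stack, model_var):
    """PVD per pixel: experimental temporal phase variance minus model variance."""
    exp_var = np.var(np.asarray(phi_stack, dtype=np.float64), axis=0, ddof=1)
    return exp_var - np.asarray(model_var, dtype=np.float64)


# Noise-free 9-step example on a 2 x 2 image: recovers phi = 1.0, A = 127.5, B = 100.0.
delta = 2.0 * np.pi * np.arange(9) / 9
frames = 127.5 + 100.0 * np.cos(1.0 + delta)[:, None, None] * np.ones((1, 2, 2))
phi, a, b = phase_shift_retrieve(frames)
```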

MP-F: In Step (i), the ROI is the entire frame. In Step (ii), the evolutions of the calculated phases with respect to \(l\) for the same two pixels used in Validation of the projector noise are plotted in Fig. 5a, b, respectively. In Step (iii), the distribution of the experimental phase variances (in blue) is shown in Fig. 5c. In Step (iv), \(\bar{{A}^{c}}\left({u}^{c},{v}^{c}\right)\) varies approximately from \(63.92{\rm{DN}}\) to \(109.74{\rm{DN}}\), while \(\bar{{B}^{c}}\left({u}^{c},{v}^{c}\right)\) ranges approximately from \(56.06{\rm{DN}}\) to \(96.96{\rm{DN}}\). In Step (v), \(\bar{{A}^{c}}\left({u}^{c},{v}^{c}\right)\), \(\bar{{B}^{c}}\left({u}^{c},{v}^{c}\right)\) and the overall gain \(K=0.0232{\rm{DN}}{\left({{\rm{e}}}^{-}\right)}^{-1}\) are substituted into Eq. (38) to obtain the theoretical phase variance \({\sigma }_{{\varphi }_{r}^{F}\left({u}^{c},{v}^{c}\right)}^{2}\). Its distribution along the middle row (in orange) is shown in Fig. 5c and matches the blue curve very well. In Step (vi), the mean of the PVD is as small as \(-1.2269\times {10}^{-6}\), which validates the proposed MP-F, in which the phase error is introduced only by camera noise.

MP-A: The validation procedure is the same as for MP-F. In Step (vi), the theoretical result of MP-A along the middle row is also plotted in Fig. 5c (in yellow); as expected, it is slightly shifted downwards relative to the orange curve. Despite this, the result of MP-A still matches the measurement very well. The mean of the PVD increases slightly to \(2.7303\times {10}^{-6}\), indicating that our approximation is valid.

MP-S: As assumed in this model, the fringe intensity is adjusted to be close to saturation, and \({A}^{c}\) and \({B}^{c}\) in the captured fringe patterns are approximately equal. To achieve this, in Step (i), we open the lens aperture slightly and use fringes with a period of 912 pixels. The ROI is changed from the entire frame to a central area of 40 × 50 pixels, which is reasonable because we are concerned only with the precision limit. In Step (iv), within the ROI, \(\bar{{A}^{c}}\) and \(\bar{{B}^{c}}\) vary from \(122.57{\rm{DN}}\) to \(132.63{\rm{DN}}\) and from \(117.61{\rm{DN}}\) to \(127.26{\rm{DN}}\), respectively; thus both \({A}^{c}\) and \({B}^{c}\) are higher and \(\bar{{A}^{c}}\approx \bar{{B}^{c}}\). In Step (v), we substitute the saturation capacity measured in Validation of the camera noise model into Eq. (42) to obtain the phase variance, and in Step (vi), we obtain a mean PVD of \(7.85\times {10}^{-7}\), showing that MP-S agrees well with the experimental result. It is worth highlighting that, for this model, Step (iv) can be skipped, since only the saturation capacity is required to obtain the phase variance. Because the saturation capacity is usually provided by the camera manufacturer, this model can predict the phase variance without any measurement.
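To make the link between camera noise and phase variance concrete, the sketch below compares a Monte Carlo simulation with a first-order error-propagation formula. Both are our own illustration under stated assumptions and are not claimed to reproduce Eqs. (38), (39) or (42): intensities follow \({I}_{n}=A+B\cos \left(\varphi +2\pi n/N\right)\); each frame carries Poisson shot noise in electrons scaled by \(K\) plus an independent Gaussian term of variance \({C}_{n}\), so that \({\sigma }_{I}^{2}=KI+{C}_{n}\) (dark offset ignored); and propagating this through the N-step estimator gives \({\sigma }_{\varphi }^{2}\approx 2\left(KA+{C}_{n}\right)/\left(N{B}^{2}\right)\). With \({C}_{n}\) neglected and \(A=B={I}_{{Sat}}/2\), this reduces to \(4/\left(N{\mu }_{e.{Sat}}\right)\) if \({\mu }_{e.{Sat}}={I}_{{Sat}}/K\), which illustrates why a saturation-limited model needs only the saturation capacity. The function names and parameter values are hypothetical.

```python
import numpy as np


def first_order_phase_var(a, b, k_gain, c_n, n_steps):
    """First-order phase variance for N-step phase shifting with sigma_I^2 = K*I + C_n."""
    return 2.0 * (k_gain * a + c_n) / (n_steps * b ** 2)


def simulated_phase_var(a, b, k_gain, c_n, n_steps, phi=1.0, trials=20000, seed=0):
    """Monte Carlo phase variance under Poisson (shot) plus Gaussian (C_n) noise."""
    rng = np.random.default_rng(seed)
    delta = 2.0 * np.pi * np.arange(n_steps) / n_steps
    mean_dn = a + b * np.cos(phi + delta)                      # noise-free frames (DN)
    electrons = rng.poisson(mean_dn / k_gain, size=(trials, n_steps))
    frames = k_gain * electrons + rng.normal(0.0, np.sqrt(c_n), size=(trials, n_steps))
    s = frames @ np.sin(delta)
    c = frames @ np.cos(delta)
    return np.var(np.arctan2(-s, c), ddof=1)                   # phi is far from +-pi


K, C_N, N = 0.0232, 0.1187, 9
print(first_order_phase_var(100.0, 100.0, K, C_N, N))
print(simulated_phase_var(100.0, 100.0, K, C_N, N))

# Saturation-limited case (C_n neglected, A = B = I_sat / 2) versus 4 / (N * I_sat / K).
I_SAT = 240.0
print(first_order_phase_var(I_SAT / 2, I_SAT / 2, K, 0.0, N), 4.0 / (N * I_SAT / K))
```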

Comparison of all the phase noise models

In the above evaluations, two experiments were conducted. One may have noticed that only the means of the PVDs, rather than the means of the phase variances, are reported. This is because the phase variances in the first experiment vary spatially, as clearly seen in Fig. 5c. Within the small ROI (only 40 × 50 pixels) used for MP-S, however, this variation is negligible. We therefore recalculate the means of the phase variances for all the models and list them in Table 4 for a more direct comparison. Again, all the models agree well with their respective experimental results. More importantly, MP-S accurately predicts the lower limit of the phase variance without measurement (the measurement here serves only for validation), providing a useful theoretical guideline for FPP system design.

Validation of the theoretical reconstruction precision of FPP

In this section, we verify the theoretical reconstruction precision using two FPP systems. The first FPP system consists of a projector (DLP6500) and a camera (Model: MER2-502-79U3M-L) fitted with a lens (Computar-M2512-MP2, 25 mm), in the following configuration: (i) the camera and the projector are positioned side by side, so that \({t}_{1}^{p}\) is significantly larger than both \({t}_{2}^{p}\) and \({t}_{3}^{p}\); and (ii) the angle between the optical axes of the projector and the camera is approximately 7°. The FPP system is calibrated by Zhang's method,14 and the intrinsic and extrinsic matrices are consistent with the configuration,

A ceramic plate of 300 mm × 300 mm with a peak-to-valley flatness deviation of less than 0.005 mm is used as the measured object. The plate is placed at 14 different positions and measured at each position in turn. To reveal possible spatial variation of the system performance, two ROIs are defined for analysis, one at the center of the image (center ROI) and the other near the lower-left corner of the image (corner ROI). Both ROIs are 100 × 100 pixels. The fringe projection, fringe capture and phase calculation follow Validation of the phase noise models. The optimal three-frequency method49 is adopted for phase retrieval and unwrapping, with fringe periods, from low to high, of \({T}_{0}=21\) pixels, \({T}_{1}=700/33\) pixels and \({T}_{2}=70/3\) pixels. Then \({u}^{p}\) is calculated by Eq. (16), and \(\left({x}^{w},{y}^{w},{z}^{w}\right)\) is calculated from Eqs. (2), (3) and (7). For clarity, we denote the obtained 3D point cloud as \(\left({x}_{0}^{w},{y}_{0}^{w},{z}_{0}^{w}\right)\). The point cloud data in each ROI are fitted to a plane as

The closest distance from each measured object point to the fitted plane is computed by

from which the standard deviation (STD) is calculated as the experimental precision. We then calculate the theoretical STDs in each ROI. Following the procedure described in Validation of the phase noise models, we obtain the theoretical phase variances, \({\sigma }_{{\varphi }_{r}^{F}\left({u}^{c},{v}^{c}\right)}^{2}\) of MP-F and \({\sigma }_{{\varphi }_{r}^{A}\left({u}^{c},{v}^{c}\right)}^{2}\) of MP-A. Together with the calibration parameters and \({z}_{0}^{w}\left({u}^{c},{v}^{c}\right)\), we further obtain \({\sigma }_{{z}^{F}\left({u}^{c},{v}^{c}\right)}\), \({\sigma }_{{z}^{A1}\left({u}^{c},{v}^{c}\right)}\), and \({\sigma }_{{z}^{A2}\left({u}^{c},{v}^{c}\right)}\) using our full model in Eq. (43), first approximation model in Eq. (44) and second approximation model in Eq. (45), respectively. This calculation is repeated for all 14 plate positions. The precision results in the center ROI and the corner ROI are shown in Fig. 6a, c, respectively, where the three theoretical precisions match the experimental precision very well. To show this more clearly, we also calculate the relative errors of the theoretical estimates with respect to the experimental results for the center and corner ROIs, shown in Fig. 6b, d, respectively. The relative errors of MG-F, MG-A1 and MG-A2 are below 5% in both ROIs. We remark that, in this experiment, the theoretical models agree well with the experimental result and show little spatial variation.
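Two computational steps of this subsection can be sketched briefly. First, the equivalent (beat) period of two fringe periods \({T}_{a}\) and \({T}_{b}\) is \({T}_{a}{T}_{b}/\left|{T}_{a}-{T}_{b}\right|\); with exact fractions, the periods above give 2100 pixels for \(\left({T}_{0},{T}_{1}\right)\) and 210 pixels for \(\left({T}_{0},{T}_{2}\right)\), and over a 2100-pixel range they correspond to 100, 99 and 90 fringes, which is consistent, as we read it, with the \({N}_{f},{N}_{f}-1,{N}_{f}-\sqrt{{N}_{f}}\) rule of optimum three-frequency selection.49 Second, the experimental precision is the STD of the point-to-plane distances; the plane fit below uses total least squares via SVD (orthogonal distances), which is our assumption and may differ from the fitting form used in the paper. Function names are hypothetical.

```python
from fractions import Fraction

import numpy as np


def beat_period(t_a, t_b):
    """Equivalent (beat) period of two fringe periods."""
    return t_a * t_b / abs(t_a - t_b)


def plane_fit_std(points):
    """STD of orthogonal distances from an (M, 3) point cloud to its best-fit plane."""
    pts = np.asarray(points, dtype=np.float64)
    centered = pts - pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    distances = centered @ vt[-1]          # signed point-to-plane distances
    return distances.std(ddof=1)


T0, T1, T2 = Fraction(21), Fraction(700, 33), Fraction(70, 3)
print(beat_period(T0, T1), beat_period(T0, T2))     # 2100 210
print([int(2100 / t) for t in (T0, T1, T2)])        # [100, 99, 90]
```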

To further reveal the influence of different system configurations, the above validation is repeated using a second FPP system with the following modifications from the first one: (i) the projector is changed from DLP6500 to DLP4500; (ii) the camera lens focal length is reduced from 25 mm (Computar-M2512-MP2) to 16 mm (Model: VTG-1614-M4); (iii) the imaging distance is almost halved; and (iv) the relative position of the camera and projector changes noticeably: \({t}_{1}^{p}\) is much reduced, \({t}_{2}^{p}\) is significantly increased, and \({t}_{3}^{p}\) is slightly increased. As a result, the angle between the optical axes of the camera and the projector increases to 9°. Accordingly, the intrinsic and extrinsic matrices are obtained as,

Note that the camera is now almost directly above the projector; we therefore project horizontal fringes17 and adjust both the MPtoG and \({E}_{r}\) expressions accordingly. All the results are shown in Fig. 7. We remark that (i) the full geometry model continues to agree well with the experimental data, with a relative error of less than 5%; the discrepancy between the experimental and full-model results appears larger in Fig. 7a, c than in Fig. 6a, c only because the vertical axes have different scales; and (ii) the two approximation models, however, show larger deviations that vary noticeably with position. In the center ROI, the deviation of the first approximation model remains below 5%, but in the corner ROI it reaches 10%.

We also quantitatively investigate the approximation errors from the full geometry model (MG-F) to the first approximation model (MG-A1), and from MG-A1 to the second approximation model (MG-A2), for both ROIs in both experiments. For the first approximation, \({E}_{r}\) is calculated from MG-F and MG-A1 using Eq. (27) for each of the 14 positions and then averaged. For the second approximation, \({E}_{r}\) is calculated similarly from MG-A1 and MG-A2. The results are given in Table 5, from which we observe that (i) the first approximation error depends on both the pixel location and the system configuration, with the latter being more significant; and (ii) the second approximation, interestingly, gives a stable relative error of around 2.5%, because this approximation merely neglects \({C}_{n}\). In summary, if a system is reasonably well configured, both approximation models, MG-A1 and MG-A2, can be used, with the latter preferred for its simplicity. Optimizing the FPP system configuration will be the subject of our future work.

Discussions

In this section, we discuss the following four aspects of an FPP system that can help improve the measurement precision.

The thermal equilibrium of LEDs of the projector

In our study, we find that the thermal equilibrium of the projector's LEDs is one of the main factors affecting the accuracy and precision of the measurement. We conduct seven pre-heating experiments for both green and white LED light, increasing the pre-heating time from 0 min (i.e., no pre-heating) to 30 min in steps of 5 min, as indicated by the numbers above the red arrows in Fig. 8. After each pre-heating, we immediately acquire 500 sets of 9-step phase-shifting fringe patterns, then turn off the LED and wait for it to cool down before starting the next pre-heating experiment. Figure 8 shows the phase value of a randomly selected pixel. The phase value increases until equilibrium is reached. The stabilized phase values also differ between white and green LED light, mainly because white light is composed of red, green and blue light. These phenomena suggest that pre-heating is necessary to achieve thermal equilibrium and hence a stable phase distribution; otherwise, both accuracy and precision will be compromised.

The optimal period of the projected fringe patterns

Equation (28) indicates that reducing the fringe period can enhance the measurement precision of an FPP system. However, because of the system's modulation transfer function (MTF),50 reducing the fringe period, i.e., increasing the fringe's spatial frequency, also increases the phase variance. We investigate the impact of the fringe period on the measurement precision by repeating the second experiment in Validation of the phase noise models with varying fringe periods. The result shows that the influence of the MTF is limited and that a small period of around T = 5 pixels performs best in our focused FPP system. When the system is defocused, the influence of the MTF becomes more significant, and the optimal period increases to around T = 20 pixels.34

The positioning of the measured object

The final precision of FPP, \({\sigma }_{z}\), is proportional to the square of the measurement distance, as shown by MPtoG. Thus, reducing the measurement distance is the most straightforward and effective way to improve the measurement precision. As a very rough estimate based on the experiment in Validation of the theoretical reconstruction precision of FPP, reducing the measurement distance from the current 900 mm to about 240 mm would reduce \({\sigma }_{z}\) by a factor of about 14, from the current \(70{\rm{\mu }}{\rm{m}}\) to \(5{\rm{\mu }}{\rm{m}}\). The field of view, however, is sacrificed accordingly.
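The factor of about 14 follows from the quadratic dependence alone, assuming that all other system parameters stay unchanged:

\[
\frac{{\sigma }_{z}\left(240\ {\rm{mm}}\right)}{{\sigma }_{z}\left(900\ {\rm{mm}}\right)}\approx {\left(\frac{240}{900}\right)}^{2}=\frac{16}{225}\approx \frac{1}{14.1},\qquad 70\ {\rm{\mu }}{\rm{m}}\times \frac{16}{225}\approx 5.0\ {\rm{\mu }}{\rm{m}}.
\]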

The correlation of the camera pixels

A simple yet effective method for noise reduction is to combine 2 × 2 neighboring pixels into a super-pixel and take their average value as the intensity of the super-pixel. If the noise in these four pixels were independent, probability theory would predict that the noise variance of the super-pixel is reduced by a factor of four compared with the original pixel. However, our careful experiment, which repeats the second experiment in Validation of the phase noise models, shows that the noise variance is reduced from \(6.8669\times {10}^{-5}\) to \(1.9495\times {10}^{-5}\), a reduction of only about 3.5 times. This surprising result is explained by the diagram in Fig. 2: the noise at neighboring pixels is not fully independent; for example, the quantization errors at neighboring pixels tend to be highly correlated. In our experiment, we obtain the following relationship for a super-pixel,

Compared with Eq. (53), the overall gain \(K\) is reduced by a factor of four, whereas \({C}_{n}\) remains almost unchanged. This finding helps in understanding such pixel manipulations in practice.
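Under the relationship stated above (after 2 × 2 averaging, the signal-dependent coefficient drops from \(K\) to \(K/4\) while \({C}_{n}\) stays essentially unchanged), the expected variance reduction is \(\left(KI+{C}_{n}\right)/\left(KI/4+{C}_{n}\right)\), which is below four whenever \({C}_{n}>0\). The sketch below evaluates this ratio. The gray level of 100 DN is a hypothetical choice for illustration, the variance model \({\sigma }_{I}^{2}=KI+{C}_{n}\) ignores the dark offset, and the function name is hypothetical.

```python
def superpixel_variance_reduction(intensity_dn, k_gain, c_n):
    """Variance ratio (single pixel / 2x2 super-pixel), assuming K -> K/4 and C_n unchanged."""
    single = k_gain * intensity_dn + c_n
    binned = (k_gain / 4.0) * intensity_dn + c_n
    return single / binned


print(superpixel_variance_reduction(100.0, 0.0232, 0.0))     # about 4 when C_n = 0
print(superpixel_variance_reduction(100.0, 0.0232, 0.1187))  # below 4 with C_n > 0
```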

Conclusion

To characterize the measurement precision of an FPP system, this paper first proposes a complete precision model chain comprising four stage models (MC, MI, MP and MG) and two transfer models (MItoP and MPtoG). Next, building on our previous study, we establish three phase-to-geometry transfer models (MPtoG) for different purposes. In addition, a non-Gaussian camera model MC is adopted so that the noise can be properly estimated simply from the known camera parameters. Together with the existing MItoP, we derive all the other stage models, MI, MP and MG, to fully understand FPP performance. Among them, we highlight the three phase noise models (MP) developed under different conditions, which allow readers to easily evaluate and compare performance based on the measured phase, a topic of strong interest in many published works. We further emphasize the four important 3D geometry noise models (MG): a full model to accurately evaluate the measurement precision, two approximation models, of which the simpler one is suggested for use, and a precision limit model to guide system design. Thus, the theoretical characterization of FPP is finally made possible. All our proposed models are experimentally validated.

References

Fernandes, D. et al. Point-cloud based 3D object detection and classification methods for self-driving applications: a survey and taxonomy. Inf. Fusion 68, 161–191 (2021).

Dionisio, J. D. N., Burns III, W. G. & Gilbert, R. 3D Virtual worlds and the metaverse: current status and future possibilities. ACM Comput. Surv. 45, 34 (2013).

Jiang, Y. S., Karpf, S. & Jalali, B. Time-stretch LiDAR as a spectrally scanned time-of-flight ranging camera. Nat. Photonics 14, 14–18 (2020).

Lazaros, N., Sirakoulis, G. C. & Gasteratos, A. Review of stereo vision algorithms: from software to hardware. Int. J. Optomechatronics 2, 435–462 (2008).

Li, L. Time-of-Flight Camera—an Introduction. (Texas Instruments, 2014).

Zhu, F. P. et al. Accurate 3D measurement system and calibration for speckle projection method. Opt. Lasers Eng. 48, 1132–1139 (2010).

Gorthi, S. S. & Rastogi, P. Fringe projection techniques: whither we are? Opt. Lasers Eng. 48, 133–140 (2010).

Liu, Y. et al. In-situ areal inspection of powder bed for electron beam fusion system based on fringe projection profilometry. Addit. Manuf. 31, 100940 (2020).

Du, H. et al. Development and verification of a novel robot-integrated fringe projection 3D scanning system for large-scale metrology. Sensors 17, 2886 (2017).

Heist, S. et al. 5D hyperspectral imaging: fast and accurate measurement of surface shape and spectral characteristics using structured light. Opt. Express 26, 23366–23379 (2018).

Zhao, Y. Y. et al. Shortwave-infrared meso-patterned imaging enables label-free mapping of tissue water and lipid content. Nat. Commun. 11, 5355 (2020).

Liao, Y. H. et al. Portable high-resolution automated 3D imaging for footwear and tire impression capture. J. Forensic Sci. 66, 112–128 (2021).

Zhang, S. High-speed 3D imaging with digital fringe projection techniques. Proc. SPIE 11813, Tribute to James C. Wyant: The Extraordinaire in Optical Metrology and Optics Education, 118130V (2021).

Zhang, S. & Huang, P. S. Novel method for structured light system calibration. Opt. Eng. 45, 083601 (2006).

Tao, T. Y. et al. High-precision real-time 3D shape measurement based on a quad-camera system. J. Opt. 20, 014009 (2018).

Wang, Z. Y. et al. Single-shot 3D shape measurement of discontinuous objects based on a coaxial fringe projection system. Appl. Opt. 58, A169–A178 (2019).

Lv, S. Z. et al. Fringe projection profilometry method with high efficiency, precision, and convenience: theoretical analysis and development. Opt. Express 30, 33515–33537 (2022).

Wang, Z. Y., Nguyen, D. A. & Barnes, J. C. Some practical considerations in fringe projection profilometry. Opt. Lasers Eng. 48, 218–225 (2010).

Zhang, Z. H. et al. A simple, flexible and automatic 3D calibration method for a phase calculation-based fringe projection imaging system. Opt. Express 21, 12218–12227 (2013).

Zhang, S. Flexible and high-accuracy method for uni-directional structured light system calibration. Opt. Lasers Eng. 143, 106637 (2021).

Zhang, G. Y. et al. Correcting projector lens distortion in real time with a scale-offset model for structured light illumination. Opt. Express 30, 24507–24522 (2022).

Zhang, S. Comparative study on passive and active projector nonlinear gamma calibration. Appl. Opt. 54, 3834–3841 (2015).

Zhang, S. Rapid and automatic optimal exposure control for digital fringe projection technique. Opt. Lasers Eng. 128, 106029 (2020).

Wang, Y. J. & Zhang, S. Optimal fringe angle selection for digital fringe projection technique. Appl. Opt. 52, 7094–7098 (2013).

Li, J. L., Hassebrook, L. G. & Guan, C. Optimized two-frequency phase-measuring-profilometry light-sensor temporal-noise sensitivity. J. Opt. Soc. Am. A 20, 106–115 (2003).

Zuo, C. et al. Temporal phase unwrapping algorithms for fringe projection profilometry: a comparative review. Opt. Lasers Eng. 85, 84–103 (2016).

He, X. Y. & Kemao, Q. A comparative study on temporal phase unwrapping methods in high-speed fringe projection profilometry. Opt. Lasers Eng. 142, 106613 (2021).

Lyu, N. et al. Structured light-based underwater 3-D reconstruction techniques: a comparative study. Opt. Lasers Eng. 161, 107344 (2023).

Zheng, D. L. et al. Phase error analysis and compensation for phase shifting profilometry with projector defocusing. Appl. Opt. 55, 5721–5728 (2016).

Wang, H. X., Kemao, Q. & Soon, S. H. Valid point detection in fringe projection profilometry. Opt. Express 23, 7535–7549 (2015).

Wu, Z. J., Guo, W. B. & Zhang, Q. C. Two-frequency phase-shifting method vs. Gray-coded-based method in dynamic fringe projection profilometry: a comparative review. Opt. Lasers Eng. 153, 106995 (2022).

Rathjen, C. Statistical properties of phase-shift algorithms. J. Opt. Soc. Am. A 12, 1997–2008 (1995).

EMVA. EMVA 1288 release 4.0 (2021). at https://www.emva.org/standards-technology/emva-1288/emva-standard-1288-downloads-2/.

Hu, Y. et al. Online fringe pitch selection for defocusing a binary square pattern projection phase-shifting method. Opt. Express 28, 30710–30725 (2020).

Wei, Y. H., Liu, C. H. & Zhao, H. J. 3-D error estimation based on binocular stereo vision using fringe projection. 2017 2nd International Conference on Electrical and Electronics: Techniques and Applications. Beijing, China: EETA, (2017), 351-356.

de Groot, P. Principles of interference microscopy for the measurement of surface topography. Adv. Opt. Photonics 7, 1–65 (2015).

Häusler, G. & Willomitzer, F. Reflections about the holographic and non-holographic acquisition of surface topography: where are the limits? Light Adv. Manuf. 3, 5 (2022).

Situ, G. Deep holography. Light Adv. Manuf. 3, 8 (2022).

Huang, L. et al. Review of phase measuring deflectometry. Opt. Lasers Eng. 107, 247–257 (2018).

Xu, Y. J., Gao, F. & Jiang, X. Q. A brief review of the technological advancements of phase measuring deflectometry. PhotoniX 1, 14 (2020).

Zhang, S. Absolute phase retrieval methods for digital fringe projection profilometry: a review. Opt. Lasers Eng. 107, 28–37 (2018).

Jähne, B. Digital Image Processing. 6th edn. (Berlin: Springer, 2005).

FLIR. Machine vision sensor review (2023). at https://www.flir.asia/landing/iis/machine-vision-camera-sensor-review/.

Integrating Sphere. at https://en.wikipedia.org/wiki/Integrating_sphere/.

Walpole, R. E. et al. Probability & Statistics for Engineers & Scientists. 8th edn. (Upper Saddle River: Pearson Prentice Hall, 2007), 224.

The testing results: obtained from Daheng through personal request. at https://www.daheng-imaging.com/product/area-scan-cameras/daheng/mer2-u3/.

Phong, B. T. Illumination for computer generated pictures. Commun. ACM 18, 311–317 (1975).

Texas Instruments. TI DLP® LightCrafter™ 4500 evaluation module user’s guide (2017). at https://www.ti.com/lit/ug/dlpu011f/dlpu011f.pdf.

Zhang, Z. H., Towers, C. E. & Towers, D. P. Time efficient color fringe projection system for 3D shape and color using optimum 3-frequency Selection. Opt. Express 14, 6444–6455 (2006).

Boreman, G. D. Modulation Transfer Function in Optical and Electro-Optical Systems. (Bellingham: SPIE, 2001).

Acknowledgements

This study was kindly supported by the Ministry of Education - Singapore (MOE-T2EP20220-0008). We thank Weiwei Xue from the University of Science and Technology of China for helpful discussions. The authors appreciate the experimental assistance provided by the Quality Inspection Center of Changchun Institute of Optics, Fine Mechanics, and Physics (CIOMP), CAS for camera testing.

Author information

Contributions

S.L.: conceptualization, writing-original draft, data curation, and editing. K.Q.: conceptualization, writing-review and editing, supervision.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lv, S., Kemao, Q. Modeling the measurement precision of Fringe Projection Profilometry. Light Sci Appl 12, 257 (2023). https://doi.org/10.1038/s41377-023-01294-0

DOI: https://doi.org/10.1038/s41377-023-01294-0
