Abstract

Psilocybin has shown promise as a novel pharmacological intervention for treatment of depression, where post-acute effects of psilocybin treatment have been associated with increased positive mood and decreased pessimism. Although psilocybin is proving to be effective in clinical trials for treatment of psychiatric disorders, the information processing mechanisms affected by psilocybin are not well understood. Here, we fit active inference and reinforcement learning computational models to a novel two-armed bandit reversal learning task capable of capturing engagement behaviour in rats. The model revealed that after receiving psilocybin, rats achieve more rewards through increased task engagement, mediated by modification of forgetting rates and reduced loss aversion. These findings suggest that psilocybin may afford an optimism bias that arises through altered belief updating, with translational potential for clinical populations characterised by lack of optimism.

Introduction

Psilocybin has shown promise as a novel pharmacological intervention for treatment of depression, where post-acute effects of psilocybin treatment have been associated with increased positive mood and decreased pessimism [1,2,3]. Although accumulating evidence indicates that psilocybin is effective for treatment of psychiatric disorders, the information processing mechanisms underlying the effects of psilocybin are not well understood [4, 5]. Establishing the information processing mechanisms of psilocybin could significantly benefit our understanding of the drug’s neurobiological substrate and its therapeutic actions, potentially helping to improve its efficacy and specificity, which could assist in informing clinical decisions. One way we can investigate the post-acute effects of psilocybin is with animal models [6, 7], which have a number of benefits, including the ability to collect many data points in controlled environments and over sustained periods after treatment, as well as circumventing issues with expectancy effects that may confound the results from clinical trials in humans [8].

To understand the information processing mechanisms of psilocybin, computational modelling approaches allow us to investigate the change in specific model parameters over time, providing insight into how psilocybin may help treat depression. Such computational modelling approaches fall into the burgeoning field of computational psychiatry that aims to develop precise treatments for psychiatric disorders, based on the specific information processing mechanisms of a particular individual, with an understanding that shared symptoms may arise from different computational processes [9,10,11,12,13]. Here, we employed a novel two-armed bandit reversal learning task capable of capturing engagement behaviour in rats. Measuring engagement behaviour has translational potential for individuals with depression, who often choose to withdraw from the world rather than engage in rewarding activities [14, 15]. In fact, modifying such behaviour is a primary target of existing behavioural activation interventions within cognitive-behavioural therapies [16, 17]. In our experiment, two groups of rats were administered psilocybin (n = 12) or saline (n = 10) 24 h before the initiation of the task. The rats could engage with the task for three hours each day for 14 days and completed the task in their home cage such that they could decide to either engage in the task or stay in the cage without engaging.

The present study used both behavioural measures and computational modelling methods to distinguish the mechanisms underlying task engagement. The space of models included both model-free reinforcement learning (RL) [18] and active inference (AI) [19,20,21] models, with a range of possible parameters motivated by prior research on depression. As optimism bias is associated with increased engagement with the world and depression is linked to diminished optimism, we include parameters related to optimism, allowing us to investigate if increased engagement in the task can be accounted for by increased optimism [22,23,24,25,26]. We hypothesised that psilocybin would increase subsequent task engagement through discrete changes in information processing in rats.

Methods

Animals

All animals were obtained from the Monash Animal Research Platform. Female Sprague-Dawley rats (n = 22) arrived at 6 weeks of age and were allowed to acclimate to the reverse light cycle (lights off at 11AM) for 7 days prior to any intervention. Young female rats were used in these studies to compare outcomes to previous studies in the laboratory investigating the role of psilocybin on cognitive flexibility [27]. Because reinforcement learning motivation is known to fluctuate over the estrous cycle [28], a male rat was housed in all experimental rooms at least 7 days prior to experimentation, synchronizing cycling (cf. the Whitten Effect [29]). Before training, rats were housed individually in specialised cages (26 cm W × 21 cm H × 47.5 cm D) and were habituated to sucrose pellets (20 mg, AS5TUT, Test Diet, CA, USA) sprinkled into the cage for two consecutive days. Pre-training involved first training animals to take pellets from the magazine (magazine training) on a “free-feeding” schedule, and subsequently training animals to make nose-poke responses to obtain a pellet (nose-poke training), whereby a nose-poke into either port delivered a pellet at a fixed ratio (FR) 1 schedule. At the completion of pre-training, when rats were between 8 and 9 weeks of age, a single dose of psilocybin (1.5 mg/kg; USONA Lot# AMS0167, dissolved in saline) or saline alone (control) was administered intraperitoneally and reversal learning training commenced the following day. Psilocybin efficacy was confirmed by the adoption of hind limb abduction, a stereotypical posture, within 15 min post-administration. All experimental procedures were conducted in accordance with the Australian Code for the care and use of animals for scientific purposes and approved by the Monash Animal Resource Platform Ethics Committee (ERM 29143).

Reversal learning task

For the reversal learning task, Feeding Experiment Devices (version 3; FED3) [30] were placed in the home cage of the rat for 3 h a day (Fig. 1). The rats were maintained on ad libitum access to food (standard rat and mouse chow; Barastoc, Australia) throughout the entire experimental paradigm in order to rule out hunger as a motivating factor for performance. The reversal learning task was a modified two-armed bandit designed so that 10 pokes on the rewarded side of the FED3, with each poke resulting in a reward, triggered the reversal of the rewarded side. This continued on a deterministic schedule of reinforcement (i.e., “active” pokes delivered a reward 100% of the time) over 14 experimental sessions on separate and consecutive days, where the left side was active first. There were three outcomes from the rats’ actions in the reversal learning task. If the rat poked the active side and received a sucrose pellet, the outcome was a ‘reward’. If the rat poked the non-active side of the FED3 the outcome was a ‘loss’, as they exerted energy without reward. If the rat did not engage in the task, the outcome was ‘null’, which was considered a better outcome than a loss as the rat did not exert energy without reward.

Rats completed a reversal learning task over 14 experimental sessions on separate consecutive days in their cage, with the first session beginning 24 h after treatment with psilocybin (n = 12) or saline (n = 10). The reversal learning task had a deterministic reinforcement schedule and was designed so that 10 pokes on the rewarded side, with each poke resulting in a reward, triggered the reversal of the rewarded side. The rat could select from three actions: poke left, poke right, or stay in the cage. Created with biorender.com.

Locomotor activity and anxiety-like behaviour

To examine potential influences of general locomotor and anxiety-like behaviour that may contribute to task performance, a separate cohort of female Sprague-Dawley rats (age-matched to 8-9 weeks old) was administered psilocybin (n = 8) or saline (n = 8) and tested 24 h later in the open field (OF) and elevated plus maze (EPM). Behavioural tests were recorded with an overhead camera connected to a computer and analysed with Ethovision XT (V3.0; Noldus, NL) tracking software using centre of mass for detection. The EPM consisted of an elevated 4-arm platform made of grey Perspex (70 cm long × 10 cm wide × 90 cm high) with two closed (40 cm high walls) and two open (“risky”) arms. Rats were placed in the centre platform (10 × 10 cm) facing an open arm, and the proportion of time spent in the closed arms relative to the open arms in each 10-min trial was used as the primary measure of anxiety-like behaviour [31]. The OF test consisted of a deep open-topped box (60 × 60 × 55 cm deep) in which distance travelled in each 10-min trial was used as the primary measure of locomotor activity. The proportion of time spent in the aversive (“risky”) centre zone (middle square of a 3 × 3 grid; 20 × 20 cm) was used as a secondary measure of anxiety-related behaviour, although this measure has been shown to be less sensitive to the effects of anxiolytic drugs [32].

Computational modelling methods

We considered model-free reinforcement learning (RL) [18] and active inference (AI) [19,20,21, 33] models as possible explanations of observed choice behaviour. For all computational models, the rat had three actions to choose from: ‘poke left’, ‘poke right’, or ‘stay in cage’, and three outcomes: ‘reward’, ‘loss’, or ‘null’. A reward was modelled as a positive outcome, loss as a negative outcome, and null as a neutral outcome (i.e., less aversive than a loss, as the rat conserved energy).

Reinforcement learning models

Four different model-free RL models were considered that reflect hypotheses about how an optimism bias might be computationally implemented in such models. We hypothesised that psilocybin increased optimism by asymmetric belief updating, which was the main focus of these models; however, other parameters that have been associated with depression or optimism were also considered. Note that we only include model-free RL modelling approaches here, which do not include explicit beliefs about state transition probabilities. In this study, we have opted to use active inference models to include state transition beliefs, acknowledging that more complex RL models with explicit transition beliefs (i.e., “model-based” RL) could also have been considered [33,34,35,36,37].

All models included \({V}_{0}\), the initial value of the expected reward for each action (‘stay in cage’, ‘poke left’, ‘poke right’). The initial value of the expected reward was restricted to positive values. The value of the expected reward was transformed into a discrete probability distribution using a softmax function, which included a standard inverse temperature parameter (\(\beta\)) controlling randomness (value insensitivity) in choice, as follows, where \(a\) is the set of possible actions.

Here, trial is denoted by \(t\). Expected values (\(Q\)) were updated based on reward prediction errors (\({RPE}\)). These prediction errors reflect the difference between the current reward (\(r\)) and the expected reward, as follows:

The reward value was coded as 1 for a reward, −1 for a loss, and 0 for the null outcome.
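As a reading aid, the softmax choice rule and the reward prediction error described above can be written in their standard textbook forms. This is a sketch built from the definitions in the text (\(Q\), \(\beta\), \(a\), \(t\), \(r\), \({RPE}\)); the exact indexing conventions, such as the prime on \(a'\) and the subscripts on \(a_t\) and \(r_t\), are assumptions.

\[
P(a_t = a) = \frac{\exp\big(\beta\, Q_t(a)\big)}{\sum_{a'} \exp\big(\beta\, Q_t(a')\big)},
\qquad
{RPE}_t = r_t - Q_t(a_t)
\]

Under this form, larger values of \(\beta\) make choices more deterministic with respect to differences in \(Q\), and a positive \({RPE}_t\) indicates an outcome better than expected.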

Simple Rescorla–Wagner

The simplest RL (Rescorla–Wagner) model included one learning rate parameter, \(\alpha\), in addition to \({V}_{0}\) and \(\beta\). Here, the expected reward was updated at the same rate regardless of whether the rat observed a reward or a loss.
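The simple Rescorla–Wagner scheme can be illustrated with a minimal Python sketch. It is not the MATLAB code used in the study: the function names, the example parameter values, and the use of a single shared starting value for all three actions are assumptions made for illustration.

```python
import math

ACTIONS = ["stay in cage", "poke left", "poke right"]

def softmax(values, beta):
    """Convert expected values into choice probabilities (beta = inverse temperature)."""
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(beta * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def rw_update(q, action, reward, alpha):
    """Update the chosen action's expected value with a single learning rate."""
    rpe = reward - q[action]            # reward prediction error
    new_q = list(q)
    new_q[action] = q[action] + alpha * rpe
    return new_q, rpe

# Hypothetical values: V0 = 0.5 for every action, beta = 2, alpha = 0.3
q = [0.5, 0.5, 0.5]
probs = softmax(q, beta=2.0)                               # equal values give equal probabilities
q, rpe = rw_update(q, action=1, reward=1.0, alpha=0.3)     # 'poke left' is rewarded
```

With equal starting values every action is equally likely; after one rewarded poke, only the value of the chosen action moves toward the reward value of 1.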

Pessimistic Rescorla–Wagner

The pessimistic Rescorla–Wagner model aimed to account for a pessimism bias the rat might possess. For this model, we fixed the initial value for the expected reward for the ‘stay in cage’ action to be 0. During fitting, the estimated initial values of \({V}_{0}\) for the ‘poke left’ and ‘poke right’ actions were then permitted to take negative or positive values. This allowed the rat to potentially begin the trial with a pessimism bias, in which it initially believed that selecting left or right sides would lead to a negative outcome (promoting avoidance), which would need to be unlearned through subsequent task engagement.

Asymmetric learning model with loss aversion

As depression and optimism have been associated with differences in belief updating to good versus bad outcomes [38], we tested a Rescorla–Wagner model with separate learning rates for rewards and losses.

Additionally, as depression is related to increased loss aversion, this model included a loss aversion parameter [22]. This parameter was implemented as the negative reward value assigned to the loss outcome. Instead of being encoded as a −1 (as in the other models above), this negative reward could take larger values if a rat was more loss averse. Thus, under a loss outcome, the negative reward was scaled by a loss aversion (LA) parameter as follows:
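A minimal Python sketch of an update with separate learning rates and a scaled loss is given below. It is an illustration under stated assumptions rather than the authors' implementation: the function and argument names are hypothetical, and the choice to apply the reward learning rate to the null outcome is arbitrary, since the text does not specify it.

```python
def asymmetric_update(q, action, outcome, alpha_reward, alpha_loss, loss_aversion):
    """Update one action value using outcome-specific learning rates.

    outcome is 'reward', 'loss', or 'null'. A loss is coded as -loss_aversion
    rather than the fixed -1 used in the simpler models.
    """
    if outcome == "reward":
        r, alpha = 1.0, alpha_reward
    elif outcome == "loss":
        r, alpha = -loss_aversion, alpha_loss
    else:
        # Assumption: the null outcome uses the reward learning rate.
        r, alpha = 0.0, alpha_reward
    rpe = r - q[action]
    new_q = list(q)
    new_q[action] = q[action] + alpha * rpe
    return new_q, rpe

# Hypothetical example: a loss on 'poke right' (index 2) with loss aversion of 2
q_before = [0.0, 0.2, 0.2]
q_after, rpe = asymmetric_update(q_before, action=2, outcome="loss",
                                 alpha_reward=0.4, alpha_loss=0.1,
                                 loss_aversion=2.0)
```

In this example the prediction error is −2.2 rather than −1.2, so a more loss-averse agent devalues the losing side more strongly for the same learning rate.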

Dynamic learning rate (associability)

Theoretically, the learning rate should be greater when the environment is expected to be volatile or unpredictable, and should decrease in a stable, predictable environment. Prior work suggests this learning rate adjustment may be altered in affective disorders [39]. To evaluate the possibility that differences in learning rate adjustment could account for differences in optimistic behaviour, we included an ‘associability’ RL model that allows the learning rate to be adjusted on a trial-by-trial basis, based on the magnitude of previous prediction errors (i.e., larger prediction errors effectively increase learning rates, as they suggest confidence in current expectations should be low). This model includes a parameter (\({\rm{\eta }}\)), which controls how strongly the learning rate can change after each trial. The update equations for associability (\({\rm{\kappa }}\)), which is used to modulate the baseline learning rate on each trial, are as follows:

Note that a lower bound (L) on the value of \({\rm{\kappa }}\) is required, which we fit as an additional parameter. The expected reward is then calculated by the following equation:

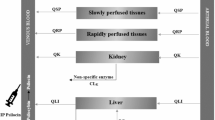
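One common way to implement an associability model is a Pearce–Hall-style update, sketched below in Python. The specific update rule (an exponentially weighted average of absolute prediction errors, floored at the lower bound) is an assumption chosen to match the verbal description; it is not confirmed to be the form used in the study, and all names and values are hypothetical.

```python
def associability_step(q, kappa, action, reward, alpha, eta, lower_bound):
    """One trial of an associability-modulated value update.

    kappa scales the baseline learning rate alpha; eta controls how quickly
    kappa tracks the size of recent prediction errors.
    """
    rpe = reward - q[action]
    new_q = list(q)
    new_q[action] = q[action] + alpha * kappa * rpe
    # Assumed Pearce-Hall-style rule, floored at the fitted lower bound L
    new_kappa = max(lower_bound, (1.0 - eta) * kappa + eta * abs(rpe))
    return new_q, new_kappa, rpe

# Two consecutive rewards on 'poke left' (index 1), hypothetical parameter values
q, kappa = [0.0, 0.0, 0.0], 1.0
q, kappa, rpe1 = associability_step(q, kappa, 1, 1.0, alpha=0.5, eta=0.3, lower_bound=0.1)
q, kappa, rpe2 = associability_step(q, kappa, 1, 1.0, alpha=0.5, eta=0.3, lower_bound=0.1)
```

As outcomes become predictable the prediction errors shrink, so associability falls and the effective learning rate decreases, which matches the behaviour described for a stable environment.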

Active inference models

In addition to RL models, we consider active inference (AI) models, which incorporate Bayesian learning and an intrinsic information-seeking drive that could play a role in promoting engagement behaviour (Fig. 2). The categories of actions and outcomes (\(o\)) were set in the same manner here as in the RL models. The main differences from RL, as described further below, are that: 1) the model explicitly learns probabilities of each outcome under each action and estimates its confidence in current beliefs about those probabilities; 2) the reward value of each outcome is encoded in the form of a probability distribution, \(p({o|C})\), where higher probabilities correspond to greater subjective reward; and 3) action selection is driven by an objective function called negative expected free energy (\(Q\)), which jointly maximises expected reward and expected reductions in uncertainty about outcome probabilities.

Expected free energy is calculated by the following equation:

Here, \({\bf{A}}\) is a matrix that encodes the reward probabilities, \(p({o|s})\), as the relationship between action states (\(s\)) and observations (\(o\)). \({\bf{C}}\) is a vector that encodes the reward values for the rat. The \(\sigma\) symbol indicates a softmax function. The first term in the expected free energy equation calculates how much information about the reward probabilities would be gained by taking an action. In other words, if the rat selects a certain action, the magnitude of this term indicates how much it expects to increase its confidence in the best action. In this model actions are considered observable. Note here that the symbol \(q\) indicates an approximate probability distribution. The second term in \({Q}_{{action}}\) calculates the expected probability of reward under each action, based on current beliefs.
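For orientation, a generic two-term form of negative expected free energy that matches the verbal description above is sketched here. It is a standard form from the active inference literature, written under the assumption that \(p(o \mid C) = \sigma({\bf{C}})\); the exact notation used in the study may differ.

\[
{Q}_{{action}} =
\underbrace{\mathbb{E}_{q(o \mid {action})}\Big[ D_{KL}\big[\, q({\bf{A}} \mid o, {action}) \,\big\|\, q({\bf{A}} \mid {action}) \,\big] \Big]}_{\text{expected information gain}}
\; + \;
\underbrace{\mathbb{E}_{q(o \mid {action})}\big[ \ln p(o \mid C) \big]}_{\text{expected reward}}
\]

The first term is large when an outcome is expected to change beliefs about \({\bf{A}}\) substantially, and the second is large when the outcomes expected under an action are the preferred ones.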

The first AI model included five parameters with theoretical links to depression and optimism, similar to those in the RL models. To investigate the differences in belief updating from positive and negative outcomes associated with depression and optimism [38], we included separate forgetting rate parameters (\({\alpha }_{{decay}}\)) for rewards and losses. In the AI framework, forgetting rates are conceptually similar to (but mathematically distinct from) learning rates in RL, as they indicate how strongly the rat forgets its prior beliefs before observing a new reward or loss. The higher the value for the forgetting rate, the more the rat updates its beliefs after a new observation (i.e., larger values down-weight prior beliefs before each update). Learning specifically involves updating the parameters of a Dirichlet distribution (\({\bf{a}}\)) over the likelihood matrix \({\bf{A}}\) after each observation, as shown in the equation below. We included a fixed value (0.5) for learning rate (\({\alpha }_{{update}}\)).
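The role of the forgetting rates can be illustrated with a small Python sketch of a Dirichlet count update for a single action. It assumes that prior counts are scaled by one minus the outcome-specific forgetting rate before the fixed learning rate of 0.5 is added to the observed outcome, and that a null outcome triggers no forgetting; both choices, along with all names, are assumptions for illustration only.

```python
REWARD, LOSS, NULL = 0, 1, 2

def update_counts(counts, outcome, decay_reward, decay_loss, alpha_update=0.5):
    """Update the Dirichlet counts of the chosen action after one outcome."""
    if outcome == REWARD:
        decay = decay_reward
    elif outcome == LOSS:
        decay = decay_loss
    else:
        decay = 0.0  # assumption: no forgetting after a null outcome
    # Down-weight prior beliefs, then add evidence for the observed outcome
    new_counts = [(1.0 - decay) * c for c in counts]
    new_counts[outcome] += alpha_update
    return new_counts

def expected_probs(counts):
    """Expected outcome probabilities implied by the Dirichlet counts."""
    total = sum(counts)
    return [c / total for c in counts]

# Hypothetical 'optimistic' agent: forgets more after rewards than after losses
prior = [1.0, 1.0, 1.0]
after_reward = update_counts(prior, REWARD, decay_reward=0.4, decay_loss=0.1)
after_loss = update_counts(prior, LOSS, decay_reward=0.4, decay_loss=0.1)
p_reward = expected_probs(after_reward)[REWARD]
p_loss = expected_probs(after_loss)[LOSS]
```

Starting from the same flat prior, a single reward shifts the expected reward probability further than a single loss shifts the expected loss probability, which is the asymmetry in belief updating that the separate forgetting rates are designed to capture.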

Here we included an additional parameter (\({pr}\)) reflecting the initial expected probability of a reward under either action at the start of the task (prior to learning). Greater values for this parameter reflect more optimistic initial expectations [26, 40].

Similar to the RL model space, we included a loss aversion parameter (\({LA}\)) encoding the value of a loss, and assigned a fixed positive value of 2 to observing a reward:

We included an inverse temperature (action precision) parameter (\(\beta\)) that controlled the level of randomness in action selection:
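The combined effect of loss aversion and action precision can be sketched in Python as follows. The sketch assumes the preference vector is (2, −LA, 0) for reward, loss and null, that \(p(o|C)\) is its softmax, and that only the reward-seeking term of the objective is used (the information-gain term is omitted for brevity). The belief values and function names are hypothetical.

```python
import math

def softmax(values, scale=1.0):
    """Numerically stable softmax with an optional precision (scale) term."""
    m = max(values)
    exps = [math.exp(scale * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def log_preferences(loss_aversion, reward_value=2.0):
    """Log of p(o|C) for the outcomes (reward, loss, null)."""
    c = [reward_value, -loss_aversion, 0.0]
    return [math.log(p) for p in softmax(c)]

def action_probabilities(beliefs, loss_aversion, beta):
    """Choice probabilities from the expected log-preference of each action."""
    log_pref = log_preferences(loss_aversion)
    values = [sum(p * lp for p, lp in zip(b, log_pref)) for b in beliefs]
    return softmax(values, beta)

# Hypothetical beliefs p(outcome | action) for stay, poke left, poke right
beliefs = [
    [0.0, 0.0, 1.0],  # staying in the cage always yields the null outcome
    [0.5, 0.5, 0.0],  # each poke is believed equally likely to win or lose
    [0.5, 0.5, 0.0],
]
p_stay_low_la = action_probabilities(beliefs, loss_aversion=1.0, beta=2.0)[0]
p_stay_high_la = action_probabilities(beliefs, loss_aversion=4.0, beta=2.0)[0]
```

With identical beliefs, the agent with higher loss aversion is more likely to stay in the cage, which is the direction of effect the loss aversion parameter is intended to capture.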

Finally, we compared this model to a simpler four-parameter AI model that omitted the prior reward probability (\({pr}\)) parameter, where \({pr}\) is fixed at 0.5.

Model comparison

Model simulations for the AI and model-free RL models were run in MATLAB (v9.12.0, R2022a). To estimate the parameters for all models, a variational Bayes algorithm (variational Laplace) was used, which maximises the likelihood of the rat’s actions while incorporating a complexity cost to deter overfitting [41]. To find the best model, we then used Bayesian model comparison across the 4 model-free RL models and 2 AI models [42]. To confirm the recoverability of parameters in the best model, we simulated behavioural data using the parameters estimated for each rat (based on the same number of trials each rat performed in the task). We then re-estimated the parameters from these simulated data and evaluated how strongly the estimates correlated with the true generative values. Recoverability was acceptable for all parameters in the winning model (see Supplementary Materials).
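The logic of a parameter recovery check can be sketched in Python with a deliberately simplified setup. This sketch swaps in a simple Rescorla–Wagner agent and a maximum-likelihood grid search in place of the winning AI model and variational Laplace, so it illustrates the simulate, refit and correlate procedure only; the task settings, starting values and parameter values are hypothetical.

```python
import math
import random

def softmax(values, beta):
    m = max(values)
    exps = [math.exp(beta * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def simulate(alpha, beta, n_trials, rng):
    """Simulate choices (0 = stay, 1 = left, 2 = right) on a deterministic reversal task."""
    q = [0.0, 0.5, 0.5]
    active, rewards_on_side = 1, 0
    data = []
    for _ in range(n_trials):
        action = rng.choices([0, 1, 2], weights=softmax(q, beta))[0]
        if action == 0:
            reward = 0.0                      # null outcome
        elif action == active:
            reward = 1.0
            rewards_on_side += 1
        else:
            reward = -1.0                     # loss
        q[action] += alpha * (reward - q[action])
        data.append((action, reward))
        if rewards_on_side == 10:             # reversal after 10 rewarded pokes
            active, rewards_on_side = 3 - active, 0
    return data

def neg_log_lik(alpha, beta, data):
    """Negative log-likelihood of the observed choices under a given alpha."""
    q = [0.0, 0.5, 0.5]
    nll = 0.0
    for action, reward in data:
        nll -= math.log(softmax(q, beta)[action])
        q[action] += alpha * (reward - q[action])
    return nll

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(0)                        # fixed seed for reproducibility
grid = [i / 50 for i in range(1, 50)]         # candidate learning rates
true_alphas = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recovered = [min(grid, key=lambda a: neg_log_lik(a, 3.0, data))
             for data in (simulate(a, 3.0, 400, rng) for a in true_alphas)]
recovery_r = pearson(true_alphas, recovered)
```

A high correlation between `true_alphas` and `recovered` would indicate that the parameter is identifiable from data of this length; a weak correlation would suggest that group differences in that parameter should be interpreted with caution.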

Statistical analysis

All analyses were performed in R (v4.1.1) using functions from the Tidyverse package (v1.3.2; [43]). To explore whether model parameters might jointly differentiate groups, we performed logistic regression analyses with the parameters as predictors and group classification as the outcome variable. Interactions between each parameter and time (day) were included. This was carried out using the glm() function in R with the ‘binomial’ family and the ‘logit’ link function.

Behavioural performance and computational model parameters were modelled using generalised additive mixed-effects models (GAMMs), a semiparametric extension of the generalised linear mixed-effects modelling framework that enables the fitting of nonparametric curves (splines) describing how the mean of an outcome variable \(y\) varies as a smooth function \(f\) of explanatory variable(s) \(x\) (labelled ‘smoothers’) [44,45,46]. A smoothing penalty is applied during the fitting of smooth terms, ensuring that the complexity (i.e., ‘wiggliness’) of \(f(x)\) is appropriately constrained (e.g., reducing \(f(x)\) to a linear function in the event there is insufficient evidence of nonlinearity in the mapping between \(y\) and \(x\)).

GAMMs were estimated using restricted maximum likelihood via the gam() function of the mgcv package (v1.8-40; [46]). All GAMMs took the following generic form:

where Group encoded a two-level factor variable reflecting group allocation (psilocybin, control) and Day encoded the consecutive sequence of test sessions (days 1-14). Thin-plate regression splines [47] were used to model changes in \(y\) over Day for each level of Group. Factor smooths on Day were implemented for each individual rat (ID) to capture random fluctuations in behaviour over successive sessions (this term essentially functions as a time-varying random intercept; for similar approaches, see Corcoran et al. [48]; Cross et al. [49]). The selection of link function \(g(.)\) was informed by the distribution of \(y\) and quality of model fit, which was evaluated using functions from the mgcViz (v0.1.9; [50]) and itsadug (v2.4.1; [51]) packages.
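A generic form consistent with the terms described above can be sketched as follows. It is assembled from the verbal description only (a parametric Group effect, a smooth over Day for each level of Group, and a factor smooth over Day for each rat), so the symbols \(\beta_0\), \(\beta_1\), \(f_{Group}\) and \(f_{ID}\) are assumptions rather than the notation of the study.

\[
g\big(\mathbb{E}[y]\big) = \beta_0 + \beta_1\,\mathrm{Group} + f_{\mathrm{Group}}(\mathrm{Day}) + f_{\mathrm{ID}}(\mathrm{Day})
\]

Here \(f_{\mathrm{Group}}\) denotes a separate thin-plate regression spline for each group, and \(f_{\mathrm{ID}}\) denotes the rat-specific factor smooth that acts as a time-varying random intercept.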

To understand the relationship between the behavioural outcomes and the winning model parameters, we ran Pearson correlations between each model parameter and behavioural outcome measure.

Results

Behavioural results

Rats that received psilocybin tended to achieve more rewards in the reversal learning task than those that received saline (b = 36.1, t(239) = 1.92, p = 0.056). There was a significant difference between smoothers; rats in the psilocybin group achieved significantly more rewards on days 7–14 compared to those in the control group (Fig. 3A). Rats in the psilocybin group showed significantly more losses on average than the control group (b = 15.60, t(218) = 2.04, p = 0.042). Comparison of smoothers indicated that this difference was driven by fewer losses in the control group on days 4–6 (Fig. 3B). As task engagement was optional, one group could have both more wins and more losses than another group due to more frequent engagement.

The first 5 panels in this figure illustrate pairwise comparisons of the behavioural measures across days including (A) rewards, (B) losses, (C) win-stay strategies (logit-scaled), (D) lose-shift strategies (logit-scaled), and (E) stay in cage behaviour (log-scaled). Estimated marginal means are represented with dotted lines (psilocybin group) or solid lines (saline group) with shading indicating SEM. Solid red lines indicate significant differences between groups (p < 0.05). Correlations between behavioural measures presented in F. *p < 0.05, **p < 0.01, ***p < 0.001.

We found no difference in win-stay or lose-shift behaviour between the two groups (Fig. 3C, D). In contrast, the control group selected the action of ‘staying in the cage’ significantly more than the psilocybin group (i.e., reflecting less frequent task engagement; b = −0.11, t(221) = −1.99, p = 0.048). Comparison of smoothing splines further revealed significant reductions in psilocybin group stay-in-cage times on days 5–7 and 12–14 (Fig. 3E).

Rewards showed a strong positive association with the amount of time rats chose to engage in the task (r = 0.93, p < 0.001). Rewards were positively associated with losses (r = 0.69, p < 0.001). This was because the chances of reward and loss both increased with greater task engagement (Fig. 3F).

Model comparisons

When comparing four reinforcement learning (RL) and two active inference (AI) models with Bayesian model comparison we found the winning model to be the five parameter AI model (protected exceedance probability = 1). Model validation and parameter recoverability analyses confirmed the validity and reliability of this model (see Supplementary Materials).

Active inference model results

The inter-correlations for the winning AI model parameters and behavioural results showed several associations in expected directions (Fig. 4F). The strongest association was with number of rewards received and the loss aversion parameter, where lower loss aversion was correlated with more rewards. Rewards were associated with forgetting rates in the expected directions.

The first 5 panels in this figure illustrate pairwise comparisons of the model parameters across days including (A) forgetting rate for losses (logit-scaled), (B) forgetting rate for rewards (logit-scaled), (C) loss aversion (log-scaled), (D) action precision (log-scaled), and (E) prior reward probability (logit-scaled). Estimated marginal means are represented with dotted lines (psilocybin group) or solid lines (saline group) with shading indicating SEM. Solid red lines indicate significant differences between groups (p < 0.05). Correlations between parameters in the best-fit computational (AI) model and behavioural measures are presented in F. *p < 0.05, **p < 0.01, ***p < 0.001.

The primary finding within these results was that the location within parameter space for each rat changed over time in response to psilocybin. Namely, logistic regressions capturing the variance among all parameters found that the forgetting rate for rewards was significantly higher in the psilocybin group (b = 0.62, z(295) = 2.034, p = 0.042) and the forgetting rate for losses was significantly lower in the psilocybin group (b = 0.57, z(295) = −2.053, p = 0.040). We found a significant interaction between forgetting rate for rewards and day (b = −0.080, z(295) = 2.294, p = 0.022), indicating that the forgetting rate for rewards for the psilocybin group increased more over time. There was a significant interaction between loss aversion and day (b = −0.11, z(295) = −2.580, p = 0.010), indicating that the psilocybin group had lower loss aversion on the later days of testing. Taken together, the logistic regression indicated that, relative to the saline group, the psilocybin group showed higher forgetting rates for rewards, lower forgetting rates for losses, a greater increase in the forgetting rate for rewards over time, and lower loss aversion on later days. This finding highlights the post-acute effects of psilocybin on the best-fit location within model parameter space.

A secondary analysis of the individual parameters was performed to investigate the shifts in individual parameter values over time. Results for these GAMMs, which did not take into account the other parameters, showed that forgetting rates for losses were significantly lower on average in the psilocybin group (b = 0.18, z(267) = −1.99, p = 0.046). This between-group difference was most pronounced on day 1, but remained present across the experiment (Fig. 4A). Although there was no main effect of group for the forgetting rate for rewards, comparison of smoothers revealed this parameter to be significantly higher in the psilocybin group on day 14 (Fig. 4B).

None of the remaining model parameters showed significant between-group main effects. However, smoothing splines differed significantly between groups for both the loss aversion and action precision parameters on specific days. Loss aversion was lower in the psilocybin group on days 13 and 14, suggesting that psilocybin reduced the anticipated aversiveness of losses over time (Fig. 4C). Action precision was significantly lower in the psilocybin group on days 4 and 5 (Fig. 4D). As higher action precision indicates less randomness in choice, this most likely reflected the fact that rats in this group less deterministically chose to stay in their cage and not perform the task on those days.

Other aspects of behaviour relevant to task performance

Because engagement during the reversal task could be influenced by prior experience with sucrose rewards, response vigour, general locomotor activity and anxiety-like behaviour, we assessed these aspects of behaviour separately. During pre-training (Fig. 5A), where poking into either side of the FED3 resulted in a pellet, there was no overall difference between rats that would go on to be administered psilocybin or saline (Fig. 5B; p = 0.102). While both groups preferred the right over the left nose-poke (Fig. 5C, D), initial group differences in sucrose collection did not contribute to subsequent task performance. In contrast, we found that during the reversal task (Fig. 5E), the rate of responding (response vigour) showed a trend toward increased vigour after psilocybin for the target (rewarded) nose-poke over the 14 days (Fig. 5F; p = 0.096), but this was not the case for non-target (incorrect) responses (Fig. 5E–G).

A–D Nose-poke training results prior to psilocybin or saline administration. E–G Response rates for psilocybin and saline groups in the reversal learning task. H–K Reversal rates for psilocybin and saline groups during initial and stable performance periods. L–O Open field data for rats that received psilocybin or saline, age-matched to the cohort in the current study. P–R Elevated plus maze data for rats that received psilocybin or saline, age-matched to the cohort in the current study. PSI psilocybin, SAL saline. Data presented are mean ± SEM.

We found that post-administration day-to-day performance variability, as measured by reversal rate, had stabilised by the late reversal learning period (Fig. 5J; sessions 12–14, p = 0.681), where significantly higher rates of reversal were observed for the psilocybin group (Fig. 5K; p < 0.001). This improvement in reversal ability in the later sessions also coincided with the greatest trend towards an effect of psilocybin on total number of rewards received (p = 0.083). Taken together, this suggests that psilocybin could enhance reversal learning to improve reward outcomes (i.e., goal-directed action) over time, rather than producing a generalised increase in engagement with the operant device. An effect of psilocybin in early learning was not observed, but this may be due to performance instability during this initial period (Fig. 5H–I; p = 0.0657 and p < 0.05, respectively).

We have previously shown that psilocybin administered at the same dose used here did not affect effortful responding in rats using a progressive ratio of reinforcement as a measure of incentive motivation [27]. However, to rule out possible contributions to task performance from general locomotor activity and anxiety-like behaviour in the present study, we examined the effects of psilocybin on behaviour in the OF (Fig. 5L) and EPM (Fig. 5P) in a separate cohort of animals. Psilocybin did not alter locomotor activity or change the duration spent in risky or safe zones of either the OF (Fig. 5M–O) or EPM (Fig. 5Q, R; all ps > 0.3217), indicating that these behavioural features did not explain the increase in task performance observed.

Discussion

This study explored the enduring effects of psilocybin on behaviour and information processing in rats, in an effort to further understand the mechanisms of how psilocybin may treat conditions such as depression. Our results support the notion that post-acute effects of psilocybin increase engagement in a reversal learning task, and suggest this may be explained by specific changes in the rats’ location within model parameter space, primarily due to altered forgetting rates and reduced loss aversion. These results could have translational/clinical relevance to conditions, such as major depression, which are linked to a withdrawal from the world, and indeed existing (e.g., behavioural activation) treatments often specifically aim to promote greater engagement. Our results show the increased engagement could be due to increased optimism via an asymmetry in belief updating, highlighting optimism as a possible treatment target.

We investigated the changes in parameter values in response to psilocybin administration in the model using a logistic regression. One’s overall location within a high-dimensional parameter space is an important concept in computational psychiatry, as it can help characterise the way multiple computational factors jointly impact a patient’s decision making. It is this insight into mechanisms of psychiatric disorders that provides promise for precision treatments in psychiatry [9, 12, 13]. Our computational results, where we investigated how psilocybin affected locations with the parameter space of the model, found psilocybin reduced belief updating rates after losses and increased belief updating rates after wins; that is, over time, the rats that received psilocybin forgot more about their previous beliefs when receiving a reward compared to when receiving a loss. These results are in line with an optimism bias, which relates to an asymmetry in belief updating in which individuals learn more from positive than negative outcomes [26, 38, 52, 53]. Interestingly, this biased belief updating manifests as more engagement in the environment. If an individual holds the (biased) belief that an action will lead to a positive outcome, they are more likely to choose that action – engaging more, and enabling themselves to minimise missed opportunities. Through this increased engagement with the world, optimism has been associated with improved quality of life and is suggested to be adaptive [26, 54,55,56,57,58,59,60,61]. As the psilocybin-receiving rats increase the asymmetry in their belief updating, they also increase their engagement with the task. These results, along with complementary behavioural findings ruling out other explanations of increased engagement, support this optimism-based interpretation. 
Additionally, our results complement recent work showing that the antidepressant properties of ketamine treatment may be due to increased optimism from asymmetry in belief updating [62].
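To make the link between asymmetric forgetting and optimism concrete, the following is a minimal Python sketch, not the model fitted in this study. It assumes a simple count-based learner in which stored outcome counts decay by a forgetting rate before each new outcome is added; the function names, the forgetting-rate values and the alternating outcome sequence are all hypothetical choices made for illustration.

```python
# Illustrative sketch only: a count-based learner with outcome-specific
# forgetting. This is NOT the fitted model from the paper; all names and
# parameter values are assumptions chosen for illustration.

def update_counts(counts, rewarded, forget_win, forget_loss):
    """Decay old counts, then add the new outcome.

    counts   : (reward_count, loss_count) summarising past outcomes
    rewarded : True if the trial ended in a reward, False for a loss
    """
    forget = forget_win if rewarded else forget_loss
    reward_count = (1.0 - forget) * counts[0]
    loss_count = (1.0 - forget) * counts[1]
    if rewarded:
        reward_count += 1.0
    else:
        loss_count += 1.0
    return (reward_count, loss_count)


def expected_reward(counts):
    """Believed probability of reward implied by the counts."""
    return counts[0] / (counts[0] + counts[1])


def mean_belief(outcomes, forget_win, forget_loss):
    """Average believed reward probability across a sequence of outcomes."""
    counts = (1.0, 1.0)  # flat starting belief (assumption)
    beliefs = []
    for rewarded in outcomes:
        counts = update_counts(counts, rewarded, forget_win, forget_loss)
        beliefs.append(expected_reward(counts))
    return sum(beliefs) / len(beliefs)


# Alternating wins and losses: the observed reward rate is exactly 0.5.
outcomes = [True, False] * 20

# Equal forgetting after wins and losses (hypothetical values).
symmetric = mean_belief(outcomes, forget_win=0.3, forget_loss=0.3)
# More forgetting after wins than after losses (hypothetical values).
optimistic = mean_belief(outcomes, forget_win=0.5, forget_loss=0.1)

print(f"symmetric learner:  {symmetric:.3f}")
print(f"optimistic learner: {optimistic:.3f}")
```

In this sketch, forgetting more after wins than after losses lets each reward overwrite more of the old belief than each loss does, so the learner's average expectation of reward sits above that of a learner with equal forgetting rates, even though both see identical outcomes.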

It is important to note that saline-treated control rats also showed asymmetry in their belief updating, with a lower forgetting rate for losses than for rewards. This is not surprising, since research shows that some degree of optimism is widely present in healthy rats and is absent mainly in rat models of depression [63, 64]. Our control group did not undergo any additional experimental interventions to develop pessimism, and would therefore be expected to show some optimism bias [65]. Our findings suggest that psilocybin amplifies the asymmetry in updating seen in wild-type rats. Future research on optimism should test the effects of psilocybin in a depression model cohort, such as a chronic mild stress model [66].

Our computational results also show that psilocybin reduced loss aversion in rats. The rats that received psilocybin had more losses than the control group, but still engaged more with the task. As the rats had reduced loss aversion, they expected to dislike losses less. This means they were less deterred from engaging in the task than the control group, despite the possibility of a loss. As expected, this was also associated with more rewards. Our results thus suggest that, in addition to asymmetric belief updating, psilocybin may further increase engagement by reducing loss aversion. As loss aversion and pessimism are elevated in depression, these results are potentially promising in understanding how psilocybin may counter these crucial features of this disorder [22, 38, 67,68,69]. Notably, these differences in loss aversion were seen in the later days of the task. This is consistent with human studies showing positive psilocybin effects weeks or months after treatment [70, 71].
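The effect of loss aversion on engagement can be illustrated with a small Python sketch. It is a simplified expected-utility reading rather than the active inference model used in this study: a loss is weighted by a loss aversion factor, staying out of the task is assigned a value of zero, and a logistic choice rule converts the value difference into a probability of engaging. The function name, the payoff magnitudes and the inverse temperature are hypothetical.

```python
import math

# Illustrative sketch only: engagement as a choice between engaging with the
# task (risking a loss) and staying out of it. NOT the paper's fitted model;
# all names and default values below are assumptions.

def engage_probability(p_reward, loss_aversion,
                       reward_value=1.0, loss_cost=1.0,
                       inverse_temperature=2.0):
    """Probability of engaging under a logistic (softmax) choice rule.

    p_reward      : believed probability that engaging yields a reward
    loss_aversion : multiplier on how strongly a loss is disliked
    """
    value_engage = (p_reward * reward_value
                    - (1.0 - p_reward) * loss_aversion * loss_cost)
    value_stay = 0.0  # staying out of the task risks nothing (assumption)
    difference = value_engage - value_stay
    return 1.0 / (1.0 + math.exp(-inverse_temperature * difference))


# Same belief about reward, different loss aversion (hypothetical values).
high_aversion = engage_probability(p_reward=0.5, loss_aversion=2.0)
low_aversion = engage_probability(p_reward=0.5, loss_aversion=0.5)

print(f"high loss aversion: P(engage) = {high_aversion:.3f}")
print(f"low loss aversion:  P(engage) = {low_aversion:.3f}")
```

Holding the believed reward probability fixed, lowering the loss aversion factor raises the value of engaging and therefore the probability of engaging, which mirrors the qualitative pattern described above.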

Previous studies on psilocybin and decision-making indicate that psilocybin may improve cognitive flexibility [72,73,74]; a further study found that psilocybin may reduce costly punishment by changing participants’ concern for the outcome of their game partner in a social decision-making task [73]. The current findings add to a growing literature in this area highlighting a range of effects that emerge in different task contexts, and which perhaps have similar underlying mechanisms. Neurobiologically, our finding that changes in task engagement occur post-acutely complements research showing that, after psilocybin administration in mice, indices of neuroplasticity, measured by dendritic spine density, peaked 7 days post-administration [75]. While the relationships between structural neuroplasticity, decision-making and task engagement remain poorly understood in the context of psilocybin actions, it is plausible that increased task engagement depends on elements of both neural and cognitive flexibility.

Although it is beyond the scope of this paper to directly extend research into the serotonergic effects of psilocybin, our findings might also be relevant to existing neurobiological theories on the role of serotonin. For example, one theory suggests that increased serotonin promotes exploration in order to identify new policies that can then be exploited [76]. As psilocybin is a potent agonist for multiple serotonin receptor subtypes [77], the rats in the present study that explored their environment more would have likely had elevated serotonin signalling (at least acutely), suggesting a neural mechanism underlying our computational and other behavioural results. In our task, increased engagement resulted in more rewards, so the rat can then exploit increased engagement after the initial elevation in serotonin availability. This increased engagement policy results in optimistic learning of the task as the rat continues to receive rewards. If serotonin does increase exploration after psilocybin treatment, it may have clinical implications when considering the environment within which a patient will explore and learn their new policies after treatment. In our task, performance also benefited from increased engagement; however, it should be noted that it is unknown at present whether this will generalise to other experimental or real-world contexts in which patients might benefit. Future studies should therefore extend our modelling approach to other environments and test potential links to current work on the neurobiology of serotonin and psilocybin [78,79,80].

Taken together, these results further support a way in which psilocybin treatment has potential to improve core symptoms of depression, associated with anhedonic, apathetic withdrawal, and diminished optimism, by altering specific computational mechanisms that lead to improved optimism and greater engagement with the world [14, 15, 38, 67,68,69, 81, 82]. Further research should consider if these effects on task engagement are specific to psilocybin, or if they can occur for other psychoactive agents (such as opiates), including how such psychoactive agents might manifest in the model parameters. This would be informative not only about the mechanism of psilocybin, but also about different intervention targets. For example, even if opiates increase engagement, the underlying model might not reveal the same kind of belief updating asymmetry found here, which could have additional clinical relevance. To garner a more comprehensive picture from the perspective of alternative computational modelling constructs, future research could also include other model-based RL approaches that utilise a Partially Observable Markov Decision Process (POMDP), in addition to the (POMDP-based) AI models we focused on here [34, 37]. In this context, major differences between POMDPs in AI vs. RL models include, among others, that RL-based POMDPs need not (but could) incorporate Bayesian learning or an explicit directed exploration drive. Another consideration when contrasting AI and model-based RL is that the latter often does not assume partial observability. That is, it is typically assumed that the state is known, and a transition model is used to predict future rewards under possible sequences of state transitions. In contrast, use of POMDP architectures and associated generative models has been a foundational feature in AI (although these models can reproduce full observability using appropriate likelihood functions).
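The role of partial observability can be sketched as follows in Python. The example assumes a two-armed bandit in which the hidden state is which arm is currently better, with hypothetical reward probabilities and a hypothetical reversal hazard rate; none of these values come from the task reported here, and the function is an illustration rather than the fitted model. The agent never observes the state directly and instead updates a belief from each outcome via Bayes' rule.

```python
# Illustrative sketch only: Bayesian belief updating over a hidden state in a
# two-armed bandit with reversals. NOT the paper's fitted model; p_good, p_bad
# and hazard are hypothetical values chosen for illustration.

def update_belief(belief_left_better, chose_left, rewarded,
                  p_good=0.8, p_bad=0.2, hazard=0.05):
    """Return the updated probability that the left arm is the better arm.

    belief_left_better : prior probability that left is currently better
    chose_left         : True if the left arm was chosen on this trial
    rewarded           : True if the choice was rewarded
    hazard             : per-trial probability that the better arm switches
    """
    # Likelihood of a reward under each hidden state, given the choice.
    p_reward_if_left = p_good if chose_left else p_bad
    p_reward_if_right = p_bad if chose_left else p_good

    # Likelihood of the outcome that was actually observed.
    like_left = p_reward_if_left if rewarded else 1.0 - p_reward_if_left
    like_right = p_reward_if_right if rewarded else 1.0 - p_reward_if_right

    # Bayes' rule over the two hidden states.
    evidence = (like_left * belief_left_better
                + like_right * (1.0 - belief_left_better))
    if evidence == 0.0:
        raise ValueError("observation is impossible under the current belief")
    posterior = like_left * belief_left_better / evidence

    # Transition step: the better arm may reverse before the next trial.
    return posterior * (1.0 - hazard) + (1.0 - posterior) * hazard


belief = 0.5  # start with no idea which arm is better
for chose_left, rewarded in [(True, True), (True, True), (True, False)]:
    belief = update_belief(belief, chose_left, rewarded)
    print(f"P(left is better) = {belief:.3f}")
```

With a deterministic likelihood and no reversals (p_good=1.0, p_bad=0.0, hazard=0.0), a single outcome identifies the hidden state, which is one way a POMDP can reproduce full observability through its likelihood function.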

Other future research directions could include testing relevant antagonists and dose-dependence trials to understand the optimal serotonin receptor binding and occupancy required for increased engagement, and potentially evaluating the associated dose-dependence of computational parameter estimates. Finally, it is important to highlight that, as the clinical potential of these results depends on translatability to human participants, it will be crucial to find ways to ensure this is feasible. The paradigm used here in rats required gathering behaviour over a large number of sequential days, which could be logistically and methodologically challenging, especially in patient samples. Thus, adapted study procedures will likely need to be explored.

Conclusion

In summary, we find that, in rats, psilocybin increases engagement with the environment consistent with an amplified optimism bias. Computational modelling suggests the psilocybin-receiving rats have heightened expectations of reward as a result of changes in relative rates of belief updating from rewards and losses, as well as reduced loss aversion. This result has translational potential, and should motivate confirmation of these effects in human studies.

Data availability

Code and data presented in this paper are openly available on the Open Science Framework repository (DOI 10.17605/OSF.IO/EU8KH). Note: The original FED3 recordings did not include ‘Stay in Cage’ trials. The original data, the code used to add ‘Stay in Cage’ trials, and the processed data are available online.

References

Barrett FS, Doss MK, Sepeda ND, Pekar JJ, Griffiths RR. Emotions and brain function are altered up to one month after a single high dose of psilocybin. Sci Rep. 2020;10:2214.

Lyons T, Carhart-Harris RL. More Realistic Forecasting of Future Life Events After Psilocybin for Treatment-Resistant Depression. Front Psychol. 2018;9:396454.

Mason NL, Mischler E, Uthaug MV, Kuypers KPC. Sub-Acute Effects of Psilocybin on Empathy, Creative Thinking, and Subjective Well-Being. J Psychoactive Drugs. 2019;51:123–34.

Goldberg SB, Pace BT, Nicholas CR, Raison CL, Hutson PR. The experimental effects of psilocybin on symptoms of anxiety and depression: A meta-analysis. Psychiatry Res. 2020;284:112749.

Prouzeau D, Conejero I, Voyvodic PL, Becamel C, Abbar M, Lopez-Castroman J. Psilocybin Efficacy and Mechanisms of Action in Major Depressive Disorder: a Review. Curr Psychiatry Rep. 2022;24:573–81.

De Gregorio D, Aguilar-Valles A, Preller KH, Heifets BD, Hibicke M, Mitchell J, et al. Hallucinogens in Mental Health: Preclinical and Clinical Studies on LSD, Psilocybin, MDMA, and Ketamine. J Neurosci. 2021;41:891–900.

Meccia J, Lopez J, Bagot RC. Probing the antidepressant potential of psilocybin: integrating insight from human research and animal models towards an understanding of neural circuit mechanisms. Psychopharmacology. 2023;240:27–40.

Aday JS, Heifets BD, Pratscher SD, Bradley E, Rosen R, Woolley JD. Great Expectations: recommendations for improving the methodological rigor of psychedelic clinical trials. Psychopharmacology. 2022;239:1989–2010.

Adams RA, Huys QJM, Roiser JP. Computational Psychiatry: towards a mathematically informed understanding of mental illness. J Neurol Neurosurg Psychiatry. 2016;87:53–63.

Browning M, Paulus M, Huys QJM. What is Computational Psychiatry Good For? Biol Psychiatry. 2023;93:658–60.

Chekroud AM, Lane CE, Ross DA. Computational Psychiatry: Embracing Uncertainty and Focusing on Individuals, Not Averages. Biol Psychiatry. 2017;82:e45–7.

Friston K. Computational psychiatry: from synapses to sentience. Mol Psychiatry. 2023;28:256–68.

Huys QJM, Maia TV, Frank MJ. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat Neurosci. 2016;19:404–13.

Chase HW, Frank MJ, Michael A, Bullmore ET, Sahakian BJ, Robbins TW. Approach and avoidance learning in patients with major depression and healthy controls: relation to anhedonia. Psychol Med. 2010;40:433–40.

Cooper JA, Arulpragasam AR, Treadway MT. Anhedonia in depression: biological mechanisms and computational models. Curr Opin Behav Sci. 2018;22:128–35.

Soucy Chartier I, Provencher MD. Behavioural activation for depression: Efficacy, effectiveness and dissemination. J Affect Disord. 2013;145:292–9.

Tindall L, Mikocka-Walus A, McMillan D, Wright B, Hewitt C, Gascoyne S. Is behavioural activation effective in the treatment of depression in young people? A systematic review and meta-analysis. Psychol Psychother Theory Res Pract. 2017;90:770–96.

Sutton RS, Barto AG. Reinforcement Learning, second edition: An Introduction. Cambridge, MA: MIT Press; 2018.

Friston K, FitzGerald T, Rigoli F, Schwartenbeck P, Pezzulo G. Active Inference: A Process Theory. Neural Comput. 2017;29:1–49.

Parr T, Pezzulo G, Friston KJ. Active inference: the free energy principle in mind, brain, and behavior. Cambridge, Massachusetts: The MIT Press; 2022.

Smith R, Friston KJ, Whyte CJ. A step-by-step tutorial on active inference and its application to empirical data. J Math Psychol. 2022;107:102632.

Charpentier CJ, De Martino B, Sim AL, Sharot T, Roiser JP. Emotion-induced loss aversion and striatal-amygdala coupling in low-anxious individuals. Soc Cogn Affect Neurosci. 2016;11:569–79.

Engelmann JB, Berns GS, Dunlop BW. Hyper-responsivity to losses in the anterior insula during economic choice scales with depression severity. Psychol Med. 2017;47:2879–91.

Hertel PT, Gerstle M. Depressive Deficits in Forgetting. Psychol Sci. 2003;14:573–8.

Huh HJ, Baek K, Kwon JH, Jeong J, Chae JH. Impact of childhood trauma and cognitive emotion regulation strategies on risk-aversive and loss-aversive patterns of decision-making in patients with depression. Cognit Neuropsychiatry. 2016;21:447–61.

Sharot T. The optimism bias. Curr Biol. 2011;21:R941–5.

Conn K, Milton LK, Huang K, Munguba H, Ruuska J, Lemus MB, et al. Psilocybin restrains activity-based anorexia in female rats by enhancing cognitive flexibility: contributions from 5-HT1A and 5-HT2A receptor mechanisms. Mol Psychiatry. 2024;27;1–14.

Verharen JPH, Kentrop J, Vanderschuren LJMJ, Adan RAH. Reinforcement learning across the rat estrous cycle. Psychoneuroendocrinology. 2019;100:27–31.

Cora MC, Kooistra L, Travlos G. Vaginal Cytology of the Laboratory Rat and Mouse: Review and Criteria for the Staging of the Estrous Cycle Using Stained Vaginal Smears. Toxicol Pathol. 2015;43:776–93.

Matikainen-Ankney BA, Earnest T, Ali M, Casey E, Wang JG, Sutton AK, et al. An open-source device for measuring food intake and operant behavior in rodent home-cages. eLife. 2021;10:e66173.

File SE, Lippa AS, Beer B, Lippa MT. Animal Tests of Anxiety. Curr Protoc Neurosci. 2004;26:8.3.1–8.3.22.

Prut L, Belzung C. The open field as a paradigm to measure the effects of drugs on anxiety-like behaviors: a review. Eur J Pharmacol. 2003;463:3–33.

Sajid N, Ball PJ, Parr T, Friston KJ. Active Inference: Demystified and Compared. Neural Comput. 2021;33:674–712.

Costa VD, Mitz AR, Averbeck BB. Subcortical Substrates of Explore-Exploit Decisions in Primates. Neuron. 2019;103:533–545.e5.

Kaelbling LP, Littman ML, Cassandra AR. Planning and acting in partially observable stochastic domains. Artif Intell. 1998;101:99–134.

Rao RPN. Decision making under uncertainty: a neural model based on partially observable markov decision processes. Front Comput Neurosci. 2010;4:146.

Safavi S, Dayan P. Multistability, perceptual value, and internal foraging. Neuron. 2022;110:3076–90.

Garrett N, Sharot T, Faulkner P, Korn CW, Roiser JP, Dolan RJ. Losing the rose tinted glasses: neural substrates of unbiased belief updating in depression. Front Hum Neurosci. 2014;8:639.

Brown VM, Zhu L, Wang JM, Frueh BC, King-Casas B, Chiu PH. Associability-modulated loss learning is increased in posttraumatic stress disorder. eLife. 2018;7:e30150.

Sharot T, Riccardi AM, Raio CM, Phelps EA. Neural mechanisms mediating optimism bias. Nature. 2007;450:102–5.

Friston K, Mattout J, Trujillo-Barreto N, Ashburner J, Penny W. Variational free energy and the Laplace approximation. NeuroImage. 2007;34:220–34.

Rigoux L, Stephan KE, Friston KJ, Daunizeau J. Bayesian model selection for group studies — Revisited. NeuroImage. 2014;84:971–85.

Wickham H, Averick M, Bryan J, Chang W, McGowan LD, François R, et al. Welcome to the Tidyverse. J Open Source Softw. 2019;4:1686.

Hastie T, Tibshirani R. Generalized Additive Models: Some Applications. J Am Stat Assoc. 1987;82:371–86.

Lin X, Zhang D. Inference in Generalized Additive Mixed Models by Using Smoothing Splines. J R Stat Soc Ser B Stat Methodol. 1999;61:381–400.

Wood SN. Generalized Additive Models: An Introduction with R, 2nd ed. Boca Raton: Chapman and Hall/CRC; 2017.

Wood SN. Thin Plate Regression Splines. J R Stat Soc Ser B Stat Methodol. 2003;65:95–114.

Corcoran AW, Macefield VG, Hohwy J. Be still my heart: Cardiac regulation as a mode of uncertainty reduction. Psychon Bull Rev. 2021;28:1211–23.

Cross ZR, Corcoran AW, Schlesewsky M, Kohler MJ, Bornkessel-Schlesewsky I. Oscillatory and Aperiodic Neural Activity Jointly Predict Language Learning. J Cogn Neurosci. 2022;34:1630–49.

Fasiolo M, Nedellec R, Goude Y, Wood SN. Scalable Visualization Methods for Modern Generalized Additive Models. J Comput Graph Stat. 2020;29:78–86.

van Rij J, Wieling M, Baayen RH, van Rijn H. itsadug: Interpreting time series and autocorrelated data using GAMMs. 2022.

Garrett N, Sharot T. Optimistic update bias holds firm: Three tests of robustness following Shah et al. Conscious Cogn. 2017;50:12–22.

Sharot T, Guitart-Masip M, Korn CW, Chowdhury R, Dolan RJ. How Dopamine Enhances an Optimism Bias in Humans. Curr Biol. 2012;22:1477–81.

Bateson M. Optimistic and pessimistic biases: a primer for behavioural ecologists. Curr Opin Behav Sci. 2016;12:115–21.

Cohen F, Kearney KA, Zegans LS, Kemeny ME, Neuhaus JM, Stites DP. Differential Immune System Changes with Acute and Persistent Stress for Optimists vs Pessimists. Brain Behav Immun. 1999;13:155–74.

Conversano C, Rotondo A, Lensi E, Della Vista O, Arpone F, Reda MA. Optimism and Its Impact on Mental and Physical Well-Being. Clin Pract Epidemiol Ment Health. 2010;6:25–9.

de Ridder D, Fournier M, Bensing J. Does optimism affect symptom report in chronic disease?: What are its consequences for self-care behaviour and physical functioning? J Psychosom Res. 2004;56:341–50.

Dolcos S, Hu Y, Iordan AD, Moore M, Dolcos F. Optimism and the brain: trait optimism mediates the protective role of the orbitofrontal cortex gray matter volume against anxiety. Soc Cogn Affect Neurosci. 2016;11:263–71.

Krittanawong C, Maitra NS, Hassan Virk HU, Fogg S, Wang Z, Kaplin S, et al. Association of Optimism with Cardiovascular Events and All-Cause Mortality: Systematic Review and Meta-Analysis. Am J Med. 2022;135:856–63.e2.

Lee E, Jayasinghe N, Swenson C, Dams-O’Connor K. Dispositional optimism and cognitive functioning following traumatic brain injury. Brain Inj. 2019;33:985–90.

Mulkana SS, Hailey BJ. The Role of Optimism in Health-enhancing Behavior. Am J Health Behav. 2001;25:388–95.

Bottemanne H, Morlaas O, Claret A, Sharot T, Fossati P, Schmidt L. Evaluation of Early Ketamine Effects on Belief-Updating Biases in Patients With Treatment-Resistant Depression. JAMA Psychiatry. 2022;79:1124–32.

Enkel T, Gholizadeh D, von Bohlen und Halbach O, Sanchis-Segura C, Hurlemann R, Spanagel R, et al. Ambiguous-Cue Interpretation is Biased Under Stress- and Depression-Like States in Rats. Neuropsychopharmacology. 2010;35:1008–15.

Rygula R, Papciak J, Popik P. Trait Pessimism Predicts Vulnerability to Stress-Induced Anhedonia in Rats. Neuropsychopharmacology. 2013;38:2188–96.

Richter SH, Schick A, Hoyer C, Lankisch K, Gass P, Vollmayr B. A glass full of optimism: Enrichment effects on cognitive bias in a rat model of depression. Cogn Affect Behav Neurosci. 2012;12:527–42.

Willner P. The chronic mild stress (CMS) model of depression: History, evaluation and usage. Neurobiol Stress. 2016;6:78–93.

Hobbs C, Vozarova P, Sabharwal A, Shah P, Button K. Is depression associated with reduced optimistic belief updating? R Soc Open Sci. 2022;9:190814.

Korn CW, Sharot T, Walter H, Heekeren HR, Dolan RJ. Depression is related to an absence of optimistically biased belief updating about future life events. Psychol Med. 2014;44:579–92.

Strunk DR, Lopez H, DeRubeis RJ. Depressive symptoms are associated with unrealistic negative predictions of future life events. Behav Res Ther. 2006;44:861–82.

Carhart-Harris RL, Bolstridge M, Day CMJ, Rucker J, Watts R, Erritzoe DE, et al. Psilocybin with psychological support for treatment-resistant depression: six-month follow-up. Psychopharmacology. 2018;235:399–408.

Gukasyan N, Davis AK, Barrett FS, Cosimano MP, Sepeda ND, Johnson MW, et al. Efficacy and safety of psilocybin-assisted treatment for major depressive disorder: Prospective 12-month follow-up. J Psychopharmacol. 2022;36:151–8.

Doss MK, Považan M, Rosenberg MD, Sepeda ND, Davis AK, Finan PH, et al. Psilocybin therapy increases cognitive and neural flexibility in patients with major depressive disorder. Transl Psychiatry. 2021;11:1–10.

Gabay AS, Carhart-Harris RL, Mazibuko N, Kempton MJ, Morrison PD, Nutt DJ, et al. Psilocybin and MDMA reduce costly punishment in the Ultimatum Game. Sci Rep. 2018;8:8236.

Torrado Pacheco A, Olson RJ, Garza G, Moghaddam B. Acute psilocybin enhances cognitive flexibility in rats. Neuropsychopharmacology. 2023;48:1011–20.

Shao LX, Liao C, Gregg I, Davoudian PA, Savalia NK, Delagarza K, et al. Psilocybin induces rapid and persistent growth of dendritic spines in frontal cortex in vivo. Neuron. 2021;109:2535–44.e4.

Shine JM, O’Callaghan C, Walpola IC, Wainstein G, Taylor N, Aru J, et al. Understanding the effects of serotonin in the brain through its role in the gastrointestinal tract. Brain. 2022;145:2967–81.

Rickli A, Moning OD, Hoener MC, Liechti ME. Receptor interaction profiles of novel psychoactive tryptamines compared with classic hallucinogens. Eur Neuropsychopharmacol. 2016;26:1327–37.

Erkizia-Santamaría I, Alles-Pascual R, Horrillo I, Meana JJ, Ortega JE. Serotonin 5-HT2A, 5-HT2c and 5-HT1A receptor involvement in the acute effects of psilocybin in mice. In vitro pharmacological profile and modulation of thermoregulation and head-twich response. Biomed Pharmacother. 2022;154:113612.

Hesselgrave N, Troppoli TA, Wulff AB, Cole AB, Thompson SM. Harnessing psilocybin: antidepressant-like behavioral and synaptic actions of psilocybin are independent of 5-HT2R activation in mice. Proc Natl Acad Sci. 2021;118:e2022489118.

Vargas MV, Dunlap LE, Dong C, Carter SJ, Tombari RJ, Jami SA, et al. Psychedelics promote neuroplasticity through the activation of intracellular 5-HT2A receptors. Science. 2023;379:700–6.

Rizvi SJ, Pizzagalli DA, Sproule BA, Kennedy SH. Assessing anhedonia in depression: Potentials and pitfalls. Neurosci Biobehav Rev. 2016;65:21–35.

Robinson OJ, Chase HW. Learning and Choice in Mood Disorders: Searching for the Computational Parameters of Anhedonia. Comput Psychiatry. 2017;1:208–33.

Author information

Contributions

ELF: Conceptualization, Computational Modelling, Formal Analysis, Writing—Original Draft, Writing—Review & Editing, Visualization. RS: Computational Modelling, Formal Analysis, Writing—Review & Editing. KC: Investigation, Formal Analysis, Writing—Review & Editing, Visualization. AWC: Formal Analysis, Writing—Review & Editing, Visualization. LKM: Investigation, Formal Analysis. JH: Writing—Review & Editing. CJF: Writing—Review & Editing, Visualization.

Ethics declarations

Competing interests

We would like to acknowledge A/Prof Alexxai Kravitz (Washington University in St Louis) for input into task design and operations and the USONA Institute Investigational Drug Supply Program (Madison, WI) for providing the psilocybin used in these studies. We acknowledge the use of Biorender for aspects of the figures. This work was supported by a National Health and Medical Research Council Grant (Grant No. GNT2011334) to CJF and a Three Springs Foundation Grant awarded to JH. CJF sits on the scientific advisory board for Octarine Bio, Denmark. No other authors report biomedical financial interests or potential conflicts of interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Fisher, E.L., Smith, R., Conn, K. et al. Psilocybin increases optimistic engagement over time: computational modelling of behaviour in rats. Transl Psychiatry 14, 394 (2024). https://doi.org/10.1038/s41398-024-03103-7

DOI: https://doi.org/10.1038/s41398-024-03103-7
