Abstract

Introduction

Artificial Intelligence (AI) is a rapidly evolving technology with various applications in dentistry, including diagnosis, treatment planning, and prognosis. Many AI-based applications are available to dental practitioners; however, real-world evaluation of these applications through deployment studies is scarce, as most studies are validation studies. This review explores the potential pitfalls of focusing solely on technical performance metrics when evaluating AI-based applications in dentistry while overlooking the importance of clinical applicability.

Methods

An electronic search was performed on PubMed and Scopus, and a manual search was conducted on Google Scholar, using the following keywords: “Dentistry”, “Dental”, “Artificial Intelligence”, “Deep Learning”, “Machine Learning”, “Applications”, “Diagnocat”, “CephX”, “Denti.AI”, “VideaAI”, “Smile Designer”, “Overjet”, “DentalXR.AI”, “Smilo.AI”, “Smile.AI”, “Pearl”, “AI deployment challenges in dental practice”, “AI for treatment planning in dentistry”, “AI in dental imaging”, and “AI-based diagnosis in dentistry”.

Results

The electronic search yielded 34 studies and a manual search yielded 10 additional studies, for a total of 44 studies included in this review. Of these 44 studies, 26 were retrospective and 7 used a comparative design. The remaining studies comprised 3 observational, 5 validation, 2 cross-sectional, and 1 prospective study. To further evaluate the identified applications, the relevant companies were contacted via email. Only one company’s representative responded, offering a limited trial version that was insufficient for evaluating the application’s effectiveness. AI technologies may offer many benefits for dental practice by improving patient health outcomes; however, real-world evaluation is necessary to ensure their safety.

Conclusion

This work highlights the need for conducting deployment studies for such AI-based dental applications to translate and implement them into dental practice. Collaboration with stakeholders and dental practitioners to assess the use of such applications is of paramount importance.

Introduction

Artificial Intelligence (AI) is emerging as a promising technology in dentistry, but its adoption in clinical practice is limited by challenges such as the need for large training datasets, validation, data privacy, and the deployment of AI-based applications. AI has been proposed for several aspects of patient care, including improved diagnosis, treatment planning, and prognosis [1]. AI technologies offer the potential to reduce human error, increase efficiency, and provide more precise, data-driven insights in dental care [2].

The dental literature is dominated by validation studies, most of which are small-scale investigations [3]. These studies predominantly assess a single AI-based application designed for a specific task [4]. Within such research, the technical performance of these applications is extensively documented using a diverse set of performance metrics, including sensitivity, specificity, positive and negative predictive values, and the area under the receiver operating characteristic curve (AUROC) or the precision-recall (PR) curve [5]. These metrics are crucial because they enable standardized reporting and facilitate comparison between applications, and they should remain the cornerstone of any assessment of AI-based applications. Relying solely on them, however, is insufficient, because they often fail to capture the broader clinical impact. For example, sensitivity and specificity describe how well an AI tool detects or rules out a condition, but they do not address how the tool will integrate into a clinical workflow or how it may affect patient outcomes.
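To make these definitions concrete, the sketch below computes sensitivity, specificity, and the positive and negative predictive values from a 2×2 confusion matrix, and estimates AUROC as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The counts and scores are hypothetical, chosen only for illustration, and do not come from any study in this review.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from comparing AI findings with a reference standard (e.g. expert consensus)."""
    tp: int  # condition present, AI flagged it
    fp: int  # condition absent, AI flagged it anyway
    fn: int  # condition present, AI missed it
    tn: int  # condition absent, AI correctly did not flag it

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


def auroc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """AUROC as the fraction of (positive, negative) pairs ranked correctly; ties count half."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical counts and scores, for illustration only.
cm = ConfusionMatrix(tp=45, fp=30, fn=5, tn=120)
print(f"sensitivity={cm.sensitivity():.2f} specificity={cm.specificity():.2f}")  # 0.90, 0.80
print(f"PPV={cm.ppv():.2f} NPV={cm.npv():.2f}")                                  # 0.60, 0.96
print(f"AUROC={auroc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2]):.2f}")                  # 0.89
```

Even at a sensitivity of 0.90, four in ten flags in this example are false positives (PPV 0.60), and none of these numbers indicates how a flag would change a dentist's decision or the patient's outcome.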

Many practitioners hesitate to adopt AI-based applications in their clinical practice because of concerns about reliability and potential errors [6]. A lack of transparency and information regarding the deployment and assessment of AI-based applications is one of the key reasons for this hesitancy [7]. To ensure the acceptability and translation of AI into dental practice, the real-world use of AI-based applications in dentistry needs to be explored.

Therefore, this review aims to explore the potential pitfalls of focusing solely on technical performance metrics when evaluating AI-based applications in dentistry. It also discusses concerns related to the real-world deployment of these applications, with an emphasis on their applicability and integration into clinical practice. The following hypothetical case illustrates the importance of a comprehensive approach that prioritizes the clinical impact of AI-driven dental care.

Case presentation

A 12-year-old patient presented for a routine dental examination. The periapical radiograph, analyzed by the in-house AI software, flagged a suspected cavity on the lower left first molar (tooth #36). On closer examination, the radiolucency appeared atypical, with a jagged outline and no surrounding radiolucency. The patient’s dental history revealed a low caries risk and a recent sealant application on tooth #36. These findings suggested a misidentification by the AI, likely due to an artifact from the fissure sealant.

In the presented case, relying solely on the AI’s output could have led to unnecessary intervention due to automation bias [8]. AI-assisted caries detection has shown the potential to increase sensitivity, especially for early caries, but at the cost of decreased specificity, so false-positive errors such as this one are inevitable [9]. Errors or misidentifications made by AI applications therefore need to be converted into clear, informative messages that dentists can easily understand when making clinical decisions.

In this case, by considering the atypical radiographic presentation and the patient’s dental history, the dentist correctly identified the finding as an artifact. Radiographic diagnosis is the foundation that guides clinicians, yet on its own it does not determine the outcome. The clinical impact of diagnosing caries also depends on how the clinician responds to the radiograph: factors such as caries risk, clinical examination findings, and disease prevalence must be considered before any treatment is undertaken.
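The role of prevalence can be made explicit with Bayes' theorem: for a fixed sensitivity and specificity, the probability that an AI flag reflects a real lesion (the positive predictive value, PPV) falls as the pretest probability falls. The sketch assumes a hypothetical operating point (sensitivity 0.90, specificity 0.80) and illustrative prevalence values; none of these figures comes from the cited studies, and treating a patient's caries risk as a pretest probability is a simplification.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a positive AI flag is a true lesion, via Bayes' theorem."""
    true_pos = sensitivity * prevalence                 # flagged and diseased
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # flagged but healthy
    return true_pos / (true_pos + false_pos)


# Hypothetical operating point for a caries-detection tool (not from any cited study).
SENS, SPEC = 0.90, 0.80

for prevalence in (0.05, 0.30, 0.50):
    ppv = positive_predictive_value(SENS, SPEC, prevalence)
    print(f"prevalence {prevalence:.2f} -> PPV {ppv:.2f}")
```

Under these assumptions the script prints a PPV of about 0.19 at 5% prevalence, 0.66 at 30%, and 0.82 at 50%, so in a low-risk patient such as the one described, most flags would be expected to be false positives.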

In essence, the efficacy of the diagnostic imaging process is multifaceted. To capture this, Fryback and Thornbury proposed a comprehensive hierarchical framework for evaluating diagnostic imaging efficacy, ranging from technical performance to societal impact [10]. This framework is illustrated in Fig. 1 and explained in detail in the Results section. Each level contributes to understanding how effectively imaging technologies improve patient care and inform healthcare policy decisions. The framework has also been proposed for assessing the efficacy of AI-based applications in healthcare [11].

Materials and Methods

Research question

What are the concerns associated with the real-world deployment of AI-based applications in dentistry, particularly regarding their integration into clinical practice and their impact on patient outcomes?

Search strategy

The authors developed a search strategy focused on dental applications that use AI models. To gauge the available evidence, the authors first performed a preliminary search based on specific keywords. The search strategy also included cross-referencing citations from key articles to identify additional relevant studies and expert opinions.

Literature search

A manual Google search was first performed to identify the most commonly used proprietary dental applications, which were then included in the search strategy to identify relevant studies. An electronic search of PubMed and Scopus was conducted with no restriction on year of publication, limited to studies published in English. In addition, the authors performed a manual search on Google Scholar using specific keywords to identify studies not retrieved by the electronic database search.

Search terms

The following search terms were used for the literature search: “Dentistry”, “Dental”, “Artificial Intelligence”, “Deep Learning”, “Machine Learning”, “Applications”, “Diagnocat”, “CephX”, “Denti.AI”, “VideaAI”, “Smile Designer”, “Overjet”, “DentalXR.AI”, “Smilo.AI”, “Smile.AI”, “Pearl”, “AI deployment challenges in dental practice”, “AI for treatment planning in dentistry”, “AI in dental imaging”, and “AI-based diagnosis in dentistry”.

Eligibility criteria

Inclusion criteria:

1. Original peer-reviewed studies
2. Studies related to dental AI-based applications
3. Studies that have been published to date

Exclusion criteria:

1. All types of reviews (including systematic reviews and meta-analyses), conference proceedings, case reports, case series, letters to the editor, editorials, and policy documents
2. Studies published in languages other than English

Screening process

The identified studies were imported into EndNote version 21.0 (Clarivate Analytics), and duplicates were removed. Two authors (AL and AN) then screened the title and abstract of each study against the predetermined eligibility criteria; any disagreement was resolved by discussion with a third author (FU). After this initial screening, the remaining studies underwent full-text screening to determine the final number of studies included in this review. Data extracted from the included studies were entered into a pre-formulated proforma charting the characteristics and key findings of the included studies.

Data extraction

A pre-formulated proforma was drafted in Google Sheets with the following variables: author, year of publication, study objective, study design, name of the AI-based application, key findings, limitations, level of deployment, services provided, availability of a demonstration, approval from the Food and Drug Administration (FDA), and whether any peer-reviewed studies had been conducted on the respective AI-based application.

Results

Study selection and search results

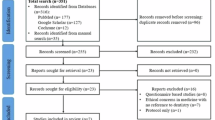

The electronic search of PubMed and Scopus identified a total of 776 studies, of which 51 duplicates were removed. Of the remaining 725 studies, 665 were excluded because their titles were irrelevant. The remaining 60 studies underwent abstract and full-text screening, resulting in the inclusion of 34 studies from the electronic search. An additional manual search of Google Scholar yielded 123 studies, of which 10 were included. In total, 44 studies were included in this review.
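The selection counts reported above can be reconciled with simple arithmetic. The number of records excluded at the abstract and full-text stage (26) is derived here from the reported figures rather than stated in the text.

```python
# Study-selection counts as reported in the text.
identified = 776
duplicates = 51
excluded_by_title = 665
included_electronic = 34
manual_identified = 123
included_manual = 10

after_dedup = identified - duplicates                            # records screened by title
full_text_screened = after_dedup - excluded_by_title             # records reaching abstract/full-text screening
excluded_full_text = full_text_screened - included_electronic    # derived: excluded at that stage
total_included = included_electronic + included_manual           # studies in the review

print(after_dedup, full_text_screened, excluded_full_text, total_included)
```

The script prints `725 60 26 44`, matching the figures given in the text.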

Summary characteristics of studies

The studies included in this review were published between 2011 and 2024, with most reporting a sample size of 10 to 68 patients. Among the 44 studies analyzed, 26 [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37] were retrospective and 7 [38,39,40,41,42,43,44] used a comparative design. The remaining studies comprised 3 observational [45,46,47], 5 validation [48,49,50,51,52], 2 cross-sectional [53, 54], and 1 prospective study [55] (Supplementary Table). In terms of AI-based applications, Diagnocat was the most frequently used tool, employed in 24 studies [13,14,15,16, 18,19,20,21,22,23,24, 27, 28, 30, 32, 33, 35,36,37, 41, 43, 51, 52, 55], followed by CephX in 16 studies [12, 17, 25, 26, 29, 31, 34, 38, 40, 44, 45, 47,48,49,50, 54]. Additionally, 2 studies [39, 53] used Denti.AI, while Smile.AI [42] and Smile Designer [46] were each reported by 1 study. The deployment levels of the studies according to Fryback and Thornbury's model varied. Notably, 27 studies [13,14,15,16, 19, 21,22,23, 25, 26, 28, 30, 31, 33, 36, 39,40,41, 43,44,45,46, 50,51,52,53, 55] reached level 2, assessing the AI software and testing its efficacy without any real-world deployment. Six studies [20, 24, 38, 42, 47, 49] remained at level 1, another 6 studies [17, 18, 34, 35, 37, 48] reached level 3, and 3 studies [12, 29, 54] reached level 4. Only one study each reached level 5 [32] and level 6 [27], assessing the effectiveness of the AI-based application in clinical workflows. Regarding discipline, 20 studies involved AI-based applications in Orthodontics [12, 17, 21, 25, 26, 29,30,31, 34, 36, 38, 40, 42, 44, 45, 47,48,49,50, 54], followed by 7 studies in Endodontics [13, 33, 35, 39, 41, 43, 52], 6 in Dental Radiology [14, 15, 22, 27, 32, 53], and 5 in Oral and Maxillofacial Surgery [16, 18, 23, 24, 28]. Only two studies each addressed Prosthodontics [19, 46] and Periodontics [20, 51], and only one study each addressed Restorative Dentistry [37] and Oral Medicine [55].
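As a consistency check, the tallies reported above can be encoded and summed; each breakdown should account for all 44 included studies. The level names follow Fryback and Thornbury's hierarchy as used in this review, the variable names are illustrative, and the discipline counts are taken from the number of citations listed for each field.

```python
from enum import IntEnum


class EfficacyLevel(IntEnum):
    """Fryback and Thornbury's six efficacy levels."""
    TECHNICAL = 1
    DIAGNOSTIC_ACCURACY = 2
    DIAGNOSTIC_THINKING = 3
    THERAPEUTIC = 4
    PATIENT_OUTCOME = 5
    SOCIETAL = 6


TOTAL_STUDIES = 44

# Counts as reported in the text.
studies_per_level = {
    EfficacyLevel.TECHNICAL: 6,
    EfficacyLevel.DIAGNOSTIC_ACCURACY: 27,
    EfficacyLevel.DIAGNOSTIC_THINKING: 6,
    EfficacyLevel.THERAPEUTIC: 3,
    EfficacyLevel.PATIENT_OUTCOME: 1,
    EfficacyLevel.SOCIETAL: 1,
}
studies_per_design = {
    "retrospective": 26, "comparative": 7, "observational": 3,
    "validation": 5, "cross-sectional": 2, "prospective": 1,
}
studies_per_discipline = {
    "Orthodontics": 20, "Endodontics": 7, "Dental Radiology": 6,
    "Oral and Maxillofacial Surgery": 5, "Prosthodontics": 2,
    "Periodontics": 2, "Restorative Dentistry": 1, "Oral Medicine": 1,
}

# Every breakdown must cover all included studies exactly once.
for name, tally in [("level", studies_per_level), ("design", studies_per_design),
                    ("discipline", studies_per_discipline)]:
    assert sum(tally.values()) == TOTAL_STUDIES, f"{name} breakdown does not sum to {TOTAL_STUDIES}"

validation_only = sum(n for lvl, n in studies_per_level.items()
                      if lvl <= EfficacyLevel.DIAGNOSTIC_ACCURACY) / TOTAL_STUDIES
real_world = sum(n for lvl, n in studies_per_level.items()
                 if lvl >= EfficacyLevel.PATIENT_OUTCOME) / TOTAL_STUDIES

print(f"levels 1-2: {validation_only:.0%}, levels 5-6: {real_world:.1%}")  # 75%, 4.5%
```

Three quarters of the included studies stop at levels 1 and 2, while fewer than one in twenty reach the real-world levels 5 and 6.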

Contacting companies

To assess validity and deployment, we attempted to gather more information about AI-based dental applications by emailing companies that claim to offer AI solutions to dental practitioners, as presented in Table 1. The developers contacted included Smilo.AI ©, Smile.AI ©, Videa.AI ©, Diagnocat ©, Denti.AI ©, Smile Designer ©, Overjet ©, CephX ©, Pearl ©, and DentalXR.ai ©. Despite multiple email inquiries, only the developer of CephX replied, offering a free trial instead of the complete access needed to evaluate the real-world deployment of the application.

Deployment levels refer to different stages of evaluation, ranging from technical reports (Level 1) to assessments of broader societal impact, with Levels 5–6 specifically denoting applications in real-world settings. As presented in Fig. 1, the multi-level framework for evaluating AI in dental care is predominantly populated by studies at Level 1: Technical Efficacy and Level 2: Diagnostic Accuracy Efficacy. These studies primarily involve proofs of concept and validation, assessing AI algorithm performance and diagnostic accuracy against expert human dentists. Level 1 studies emphasize parameters such as algorithm accuracy, sensitivity to imaging artifacts, and computational efficiency, whereas Level 2 studies focus on metrics such as sensitivity, specificity, and positive predictive value. In contrast, comparatively few studies explore the higher levels of efficacy: Level 3: Diagnostic Thinking Efficacy, Level 4: Therapeutic Efficacy, Level 5: Patient Outcome Efficacy, and Level 6: Societal Efficacy.

Discussion

Fryback & Thornbury’s model in dental AI

Fryback and Thornbury’s hierarchical model has been widely used to evaluate the effectiveness of diagnostic technologies in medicine [10]. However, its application in dentistry has remained unexplored. To the best of the authors’ knowledge, this review is the first to adapt this framework to the dental context, providing a comprehensive evaluation of AI applications in dentistry.

According to this model, most studies included in this review reached only levels 1 and 2, indicating that they were primarily validation studies conducted in controlled environments. Nine studies reached levels 3 and 4, which address clinical decision-making and treatment, and only two progressed to the highest levels of the hierarchy (levels 5 and 6), which evaluate AI’s impact on patient health outcomes and societal benefits in real-world settings. Because validation studies do not account for the complexity and variability of real-world settings, the actual benefits and challenges these applications present for providers and patients remain uncertain.

Notably, several AI-based dental applications identified in this review, such as Pearl© and Overjet©, are well known and widely available in the dental landscape. However, despite their popularity, there is a significant lack of studies evaluating their effectiveness. It is therefore essential that these applications undergo rigorous scientific evaluation to ensure their accuracy and reliability [56]. Additionally, the authors contacted the companies behind the identified AI-based applications, but only one company responded. This reveals a significant gap in the transparency of AI-based applications in dental practice and calls for closer examination to ensure that commercially available applications meet established standards of accuracy and dependability. These applications should be made available on the market only after their effectiveness has been thoroughly tested, so that dental professionals and patients can benefit from their use.

Almost all of the studies included in this review reported a limited sample size. This limitation makes it difficult to generalize the findings to broader populations and underscores the need for future research with larger, more diverse samples to establish the reliability of these AI-based applications [57]. There is also considerable variation in the focus of AI-based applications across dental disciplines. For instance, Orthodontics has emerged as the most extensively studied field for the available AI-based applications, likely because of its high demand for precision in diagnosis and treatment planning. In contrast, fields such as Prosthodontics and Periodontics, which also require precision, remain underrepresented. Future research should expand the use of AI-based applications to these underexplored areas to ensure equitable technological advancement and patient care across all dental specialties.
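To see why small samples limit generalizability, consider the uncertainty around a sensitivity estimate. The sketch below uses the Wilson score interval, one standard choice for a binomial proportion, swapped in here for illustration; the included studies may have used other methods. The counts are hypothetical and assume, for simplicity, one positive finding per patient at the two ends of the 10 to 68 patient range reported in this review.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion; z=1.96 gives roughly 95% coverage."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1.0 + z ** 2 / n
    centre = (p_hat + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical: the AI detects 9 of 10 lesions in a small study, 61 of 68 in a larger one.
for detected, total in [(9, 10), (61, 68)]:
    low, high = wilson_interval(detected, total)
    print(f"{detected}/{total}: sensitivity {detected / total:.2f}, 95% CI {low:.2f}-{high:.2f}")
```

For an observed sensitivity near 0.90, the interval spans roughly 0.60 to 0.98 with 10 cases but roughly 0.80 to 0.95 with 68 cases, which is why small validation samples say little about performance in a broader population.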

Regulatory clearance for AI-based applications in dentistry

The AI-based dental applications reported in this review have varying degrees of regulatory clearance. FDA clearance is an essential step in evaluating the safety, efficacy, and reliability of medical technologies, including AI-based applications [58]. For instance, the Diagnocat© application, which uses two-dimensional and Cone Beam Computed Tomography (CBCT) imaging to identify signs of cavities, periodontal disease, and other pathologies, is not yet FDA-cleared. This limits its use to research settings or to regions where FDA oversight is not required, and it also limits users’ confidence and trust in the application. In contrast, Overjet© has received FDA clearance for specific functionalities, such as evaluating the extent of periodontal bone loss and detecting decay on radiographs [59]. Similarly, Denti.AI©, which offers transcription of patient-clinician conversations, support for diagnostic accuracy, periodontal charting, and identification of restorations on radiographs, is FDA-cleared [60]. This clearance indicates that the application meets regulatory standards and has been evaluated for these purposes. However, FDA clearance alone does not guarantee optimal performance or successful clinical integration of these AI applications. Periodic updates and retraining are necessary to maintain their accuracy and relevance, especially as new data emerge or clinical needs evolve [61].
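One way to operationalize periodic re-evaluation is to audit recent AI findings against clinician-confirmed diagnoses and flag any period in which performance drops below the level established at validation. The sketch below is a minimal illustration under assumed conditions: the `AuditWindow` structure, the `needs_review` helper, the 0.05 tolerance, and the audit counts are all hypothetical, and a real monitoring plan would also track specificity and require adequately sized audit samples.

```python
from dataclasses import dataclass


@dataclass
class AuditWindow:
    """Hypothetical audit of one period's AI findings against clinician-confirmed diagnoses."""
    confirmed_lesions: int  # lesions confirmed by the reference standard in this period
    detected_lesions: int   # of those, how many the AI had flagged

    @property
    def sensitivity(self) -> float:
        return self.detected_lesions / self.confirmed_lesions


def needs_review(baseline_sensitivity: float, windows: list[AuditWindow],
                 tolerance: float = 0.05) -> list[int]:
    """Indices of audit periods whose sensitivity fell more than `tolerance` below baseline."""
    threshold = baseline_sensitivity - tolerance
    return [i for i, w in enumerate(windows) if w.sensitivity < threshold]


# Hypothetical audits: sensitivities of 0.90, 0.875, and 0.75.
windows = [AuditWindow(50, 45), AuditWindow(48, 42), AuditWindow(52, 39)]
print(needs_review(0.90, windows))  # [2]: the third period would trigger review or retraining
```

The choice of tolerance trades off alert fatigue against late detection of drift; it would need to be set with clinical input rather than taken from this example.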

Recommended future metrics for AI in dentistry

The growing significance of AI in healthcare underscores the need for measures that accurately evaluate its effectiveness. To represent real-world settings adequately, these measures must go beyond conventional metrics such as accuracy and precision. Metrics that assess AI’s direct effects on patients have been suggested, such as patient-centered outcomes like morbidity or mortality rates, quality of life (QoL), and readmission rates [62]. Equity-related metrics are also crucial; these include bias identification, social impact evaluation, and disparity measures to help ensure that AI performs equally well across demographic groups [62]. Criteria relevant to Clinical Decision Support (CDS), such as time efficiency, diagnostic accuracy, and adherence to recommendations, can be used to assess how well AI supports clinicians [62]. Explainability measures, including Explainable AI (XAI) approaches, can help make the rationale behind AI models transparent and easy to comprehend. Finally, long-term impact measures can evaluate AI’s potential to succeed in a changing healthcare environment; these indicators include sustainability, scalability, and adaptability. By using these comprehensive measures, AI can be developed and applied in ways that enhance patient care, healthcare outcomes, and equity.
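Of the equity measures listed above, a disparity measure can be illustrated simply: compute sensitivity separately for each demographic group and report the largest gap. This is only one possible disparity metric (a gap in true-positive rates), chosen here for illustration; the group labels, function names, and records are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_lesion, ai_flagged). Returns {group: sensitivity}."""
    positives = defaultdict(int)
    detected = defaultdict(int)
    for group, has_lesion, ai_flagged in records:
        if has_lesion:  # sensitivity only considers cases where the condition is present
            positives[group] += 1
            if ai_flagged:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


def max_sensitivity_gap(per_group: dict[str, float]) -> float:
    """Largest difference in sensitivity between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical audit records for two demographic groups, "A" and "B".
records = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 7 + [("B", True, False)] * 3
    + [("B", False, True)] * 2  # false positives do not affect sensitivity
)
per_group = sensitivity_by_group(records)
print(per_group, f"gap={max_sensitivity_gap(per_group):.2f}")
```

Here group A has a sensitivity of 0.9 and group B of 0.7, a gap of 0.20 that an aggregate sensitivity of 0.80 would conceal.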

Future of AI in dentistry

The burgeoning application of AI in dentistry necessitates the development of robust frameworks and guidelines that clearly describe how AI applications should be developed, tested, and deployed. This will ensure that AI applications are used safely and effectively in clinical settings, minimizing the risk of errors and maximizing patient benefits. Currently, AI systems in dentistry are often developed in isolation, using different methodologies, biased datasets, and algorithms, which can result in inconsistent outcomes across applications [63]. Standardizing these factors would help ensure that AI-based dental applications deliver consistent and reliable results regardless of the specific tool used. This will build trust among dental professionals, who will feel more confident adopting AI applications that have been rigorously tested and meet recognized benchmarks [64].

Regulatory bodies and professional dental associations should also work with developers and dental practitioners to ensure a smooth transition of AI-based dental applications into practice. These bodies should take an active role in establishing safety and efficacy standards by creating clear guidelines that specify how these applications should be evaluated before they are approved for use in dental practice. Professional dental associations can play a key role by educating and training dentists to use AI-based applications effectively in their practice. Moreover, by collaborating closely with dental practitioners, AI developers can account for the clinical realities of dental practice when designing and testing their applications. Developers should also be encouraged to offer trials of their applications to healthcare professionals, who can then test the applications in their own practices and provide valuable feedback. Stakeholders and policymakers should ensure that these applications undergo independent, rigorous assessment by expert healthcare professionals as well as data management and security experts. Because such applications are directly involved in patient care, this assessment is essential to ensure their accuracy, validity, and safety.

Conclusion

This study underscores the potential of AI in enhancing diagnostic accuracy and treatment planning in dentistry. However, it highlights a significant gap between the promise of AI technologies and their real-world integration. While FDA clearance and technical performance metrics are essential, they are insufficient to guarantee clinical applicability and successful adoption. To bridge this gap, rigorous testing, regular updates, and collaborative efforts among stakeholders, including developers, dental practitioners, and regulatory bodies, are crucial. Ensuring transparency, standardizing evaluation criteria, and prioritizing clinical relevance are also vital for effective AI integration into dental practice. Future research should focus on higher levels of deployment, evaluating AI’s societal and therapeutic efficacy to ensure its long-term success and trustworthiness in clinical settings.

Data availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

References

Umer F, Adnan S, Lal A. Research and application of artificial intelligence in dentistry from lower-middle income countries – a scoping review. BMC Oral Health. 2024;24:220. https://doi.org/10.1186/s12903-024-03970-y.

Mahesh Batra A, Reche A. A new era of dental care: harnessing artificial intelligence for better diagnosis and treatment. Cureus. 2023;15:e49319. https://doi.org/10.7759/cureus.49319.

Thurzo A, Urbanová W, Novák B, Czako L, Siebert T, Stano P, et al. Where is the artificial intelligence applied in dentistry? Systematic review and literature analysis. Healthcare (Basel). 2022;10. https://doi.org/10.3390/healthcare10071269.

Umer F, Habib S. Critical analysis of artificial intelligence in endodontics: a scoping review. J Endod. 2022;48:152–60. https://doi.org/10.1016/j.joen.2021.11.007.

Hicks SA, Strümke I, Thambawita V, Hammou M, Riegler MA, Halvorsen P, Parasa S. On evaluation metrics for medical applications of artificial intelligence. Sci Rep. 2022;12:5979. https://doi.org/10.1038/s41598-022-09954-8.

Vodanović M, Subašić M, Milošević DP, Galić I, Brkić H. Artificial intelligence in forensic medicine and forensic dentistry. J Forensic Odontostomatol. 2023;41:30–41.

Vodanović M, Subašić M, Milošević D, Savić Pavičin I. Artificial intelligence in medicine and dentistry. Acta Stomatol Croat. 2023;57:70–84. https://doi.org/10.15644/asc57/1/8.

Umer F, Khan M. A call to action: concerns related to artificial intelligence. Oral Surg Oral Med Oral Pathol Oral Radio. 2021;132:255. https://doi.org/10.1016/j.oooo.2021.04.056.

Devlin H, Williams T, Graham J, Ashley M. The ADEPT study: a comparative study of dentists’ ability to detect enamel-only proximal caries in bitewing radiographs with and without the use of AssistDent artificial intelligence software. Br Dent J. 2021;231:481–5. https://doi.org/10.1038/s41415-021-3526-6.

Fryback DG, Thornbury JR. The efficacy of diagnostic imaging. Med Decis Mak. 1991;11:88–94. https://doi.org/10.1177/0272989x9101100203.

van Leeuwen KG, Schalekamp S, Rutten M, van Ginneken B, de Rooij M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur Radio. 2021;31:3797–804. https://doi.org/10.1007/s00330-021-07892-z.

Mosleh MA, Baba MS, Malek S, Almaktari RA. Ceph-X: development and evaluation of 2D cephalometric system. BMC Bioinforma. 2016;17:499. https://doi.org/10.1186/s12859-016-1370-5.

Orhan K, Bayrakdar IS, Ezhov M, Kravtsov A, Özyürek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int Endod J. 2020;53:680–9. https://doi.org/10.1111/iej.13265.

Kurt Bayrakdar S, Orhan K, Bayrakdar IS, Bilgir E, Ezhov M, Gusarev M, et al. A deep learning approach for dental implant planning in cone-beam computed tomography images. BMC Med Imaging. 2021;21:86. https://doi.org/10.1186/s12880-021-00618-z.

Ezhov M, Gusarev M, Golitsyna M, Yates JM, Kushnerev E, Tamimi D, et al. Clinically applicable artificial intelligence system for dental diagnosis with CBCT. Sci Rep. 2021;11:15006. https://doi.org/10.1038/s41598-021-94093-9.

Orhan K, Bilgir E, Bayrakdar IS, Ezhov M, Gusarev M, Shumilov E. Evaluation of artificial intelligence for detecting impacted third molars on cone-beam computed tomography scans. J Stomatol, Oral Maxillofac Surg. 2021;122:333–7. https://doi.org/10.1016/j.jormas.2020.12.006.

Khalid RF, Azeez SM. Comparison of cephalometric measurements of on-screen images by CephX software and hard-copy printouts by conventional manual tracing. J Hunan Univ Nat Sci. 2022;49:294–302.

Orhan K, Shamshiev M, Ezhov M, Plaksin A, Kurbanova A, Ünsal G, et al. AI-based automatic segmentation of craniomaxillofacial anatomy from CBCT scans for automatic detection of pharyngeal airway evaluations in OSA patients. Sci Rep. 2022;12:11863. https://doi.org/10.1038/s41598-022-15920-1.

Zadrożny Ł, Regulski P, Brus-Sawczuk K, Czajkowska M, Parkanyi L, Ganz S, et al. Artificial intelligence application in assessment of panoramic radiographs. Diagnostics. 2022;12:224.

Amasya H, Jaju PP, Ezhov M, Gusarev M, Atakan C, Sanders A, et al. Development and validation of an artificial intelligence software for periodontal bone loss in panoramic imaging. Int J Imaging Syst Technol. 2024;34:e22973. https://doi.org/10.1002/ima.22973.

Balashova M, et al. Artificial intelligence application in assessment of upper airway on cone-beam computed tomography scans. J Int Dent Med Res. 2023;16:105–10.

Dadoush M. Pre-implant tracing of mandibular canals on CBCT images using artificial intelligence [thesis]. University of British Columbia; 2023. https://open.library.ubc.ca/collections/ubctheses/24/items/1.0434634.

Issa J, Jaber M, Rifai I, Mozdziak P, Kempisty B, Dyszkiewicz-Konwińska M. Diagnostic test accuracy of artificial intelligence in detecting periapical periodontitis on two-dimensional radiographs: a retrospective study and literature review. Medicina. 2023;59:768.

Issa J, Kulczyk T, Rychlik M, Czajka-Jakubowska A, Olszewski R, Dyszkiewicz-Konwińska M. Artificial intelligence versus semi-automatic segmentation of the inferior alveolar canal on cone-beam computed tomography scans: a pilot study. Dent Med Probl. 2024;61:893–9.

Kunz F, Stellzig-Eisenhauer A, Widmaier LM, Zeman F, Boldt J. Assessment of the quality of different commercial providers using artificial intelligence for automated cephalometric analysis compared to human orthodontic experts. J Orofac Orthop. 2023. https://doi.org/10.1007/s00056-023-00491-1.

Kazimierczak N, Kazimierczak W, Serafin Z, Nowicki P, Lemanowicz A, Nadolska K, et al. Correlation analysis of nasal septum deviation and results of AI-driven automated 3D cephalometric analysis. J Clin Med. 2023;12:6621.

Orhan K, Aktuna Belgin C, Manulis D, Golitsyna M, Bayrak S, Aksoy S, et al. Determining the reliability of diagnosis and treatment using artificial intelligence software with panoramic radiographs. Imaging Sci Dent. 2023;53:199–208. https://doi.org/10.5624/isd.20230109.

Orhan K, Sanders A, Ünsal G, Ezhov M, Mısırlı M, Gusarev M, et al. Assessing the reliability of CBCT-based AI-generated STL files in diagnosing osseous changes of the mandibular condyle: a comparative study with ground truth diagnosis. Dentomaxillofac Radiol. 2023;52:20230141. https://doi.org/10.1259/dmfr.20230141.

Paige A. Comparison of conventional and automated cephalometric analysis using cone-beam computed tomography. University of California, Los Angeles; 2023.

Arslan C, Yucel NO, Kahya K, Sunal Akturk E, Germec Cakan D. Artificial intelligence for tooth detection in cleft lip and palate patients. Diagnostics. 2024;14:2849.

Bor S, Ciğerim SÇ, Kotan S. Comparison of AI-assisted cephalometric analysis and orthodontist-performed digital tracing analysis. Prog Orthod. 2024;25:41. https://doi.org/10.1186/s40510-024-00539-x.

Futyma-Gąbka K, Piskórz M, Smala K, Miazek W, Moskwa M, Różyło-Kalinowska I. Evaluation of effectiveness of a virtual AI-based dental assistant in recognizing mixed dentition on panoramic radiographs. J Stomatol. 2024;77:181–5. https://doi.org/10.5114/jos.2024.143587.

Kazimierczak W, Kazimierczak N, Issa J, Wajer R, Wajer A, Kalka S, et al. Endodontic treatment outcomes in cone beam computed tomography images—assessment of the diagnostic accuracy of AI. J Clin Med. 2024;13:4116.

Kazimierczak W, Kazimierczak N, Kędziora K, Szcześniak M, Serafin Z. Reliability of the AI-assisted assessment of the proximity of the root apices to mandibular canal. J Clin Med. 2024;13:3605.

Kazimierczak W, Wajer R, Wajer A, Kiian V, Kloska A, Kazimierczak N, et al. Periapical lesions in panoramic radiography and CBCT imaging—assessment of AI’s diagnostic accuracy. J Clin Med. 2024;13:2709.

Khabadze Z, Mordanov O, Shilyaeva E. Comparative analysis of 3D cephalometry provided with artificial intelligence and manual tracing. Diagnostics. 2024;14:2524.

Szabó V, Szabó BT, Orhan K, Veres DS, Manulis D, Ezhov M, et al. Validation of artificial intelligence application for dental caries diagnosis on intraoral bitewing and periapical radiographs. J Dent. 2024;147:105105.

Alqahtani H. Evaluation of an online website-based platform for cephalometric analysis. J Stomatol Oral Maxillofac Surg. 2020;121:53–57.

Hamdan MH, Tuzova L, Mol A, Tawil PZ, Tuzoff D, Tyndall DA. The effect of a deep-learning tool on dentists’ performances in detecting apical radiolucencies on periapical radiographs. Dentomaxillofac Radiol. 2022;51:20220122. https://doi.org/10.1259/dmfr.20220122.

Al-Ubaydi AS, Al-Groosh D. The validity and reliability of automatic tooth segmentation generated using artificial intelligence. Sci World J. 2023;2023:5933003. https://doi.org/10.1155/2023/5933003.

Amasya H, Alkhader M, Serindere G, Futyma-Gąbka K, Aktuna Belgin C, Gusarev M, et al. Evaluation of a decision support system developed with deep learning approach for detecting dental caries with cone-beam computed tomography imaging. Diagnostics (Basel). 2023;13. https://doi.org/10.3390/diagnostics13223471.

Ali GAK, et al. In: 2023 Eleventh International Conference on Intelligent Computing and Information Systems (ICICIS); 2023. p. 568–73.

Boubaris M, Cameron A, Manakil J, George R. Artificial intelligence vs. semi-automated segmentation for assessment of dental periapical lesion volume index score: a cone-beam CT study. Comput Biol Med. 2024;175:108527.

Guinot-Barona C, Alonso Pérez-Barquero J, Galán López L, Barmak AB, Att W, Kois JC, et al. Cephalometric analysis performance discrepancy between orthodontists and an artificial intelligence model using lateral cephalometric radiographs. J Esthet Restor Dent. 2024;36:555–65.

Bratu DC, Bălan RA, Szuhanek CA, Pop SI, Bratu EA, Popa G. Craniofacial morphology in patients with Angle Class II division 2 malocclusion. Rom J Morphol Embryol. 2014;55:909–13.

Ceylan G, Özel GS, Memişoglu G, Emir F, Şen S. Evaluating the facial esthetic outcomes of digital smile designs generated by artificial intelligence and dental professionals. Appl Sci. 2023;13:9001.

Kazimierczak N, Kazimierczak W, Serafin Z, Nowicki P, Jankowski T, Jankowska A, et al. Skeletal facial asymmetry: reliability of manual and artificial intelligence-driven analysis. Dentomaxillofac Radiol. 2024;53:52–59.

Szuhanek C, Gâdea Paraschivescu E, Sişu AM, Motoc A. Cephalometric investigation of Class III dentoalveolar malocclusion. Rom J Morphol Embryol. 2011;52:1343–6.

Meriç P, Naoumova J. Web-based fully automated cephalometric analysis: comparisons between app-aided, computerized, and manual tracings. Turkish J Orthod. 2020;33:142–149.

Jeon S, Lee KC. Comparison of cephalometric measurements between conventional and automatic cephalometric analysis using convolutional neural network. Prog Orthod. 2021;22:1–8.

Schulze D, Häußermann L, Ripper J, Sottong T. Comparison between observer-based and AI-based reading of CBCT datasets: an interrater-reliability study. Saudi Dent J. 2024;36:291–5.

Kazimierczak W, Wajer R, Wajer A, Kalka K, Kazimierczak N, Serafin Z. Evaluating the diagnostic accuracy of an AI-driven platform for assessing endodontic treatment outcomes using panoramic radiographs: a preliminary study. J Clin Med. 2024;13:3401.

Bonfanti-Gris M, Garcia-Cañas A, Alonso-Calvo R, Salido Rodriguez-Manzaneque MP, Pradies Ramiro G. Evaluation of an Artificial Intelligence web-based software to detect and classify dental structures and treatments in panoramic radiographs. J Dent. 2022;126:104301.

Zaheer R, Shafique HZ, Khalid Z, Shahid R, Jan A, Zahoor T, et al. Comparison of semi and fully automated artificial intelligence driven softwares and manual system for cephalometric analysis. BMC Med Inform Decis Mak. 2024;24:271. https://doi.org/10.1186/s12911-024-02664-3.

Leśna M, Górna K, Kwiatek J. Managing fear and anxiety in patients undergoing dental hygiene visits with guided biofilm therapy: a conceptual model. Appl Sci. 2024;14:8159.

Deniz-Garcia A, Fabelo H, Rodriguez-Almeida AJ, Zamora-Zamorano G, Castro-Fernandez M, Alberiche Ruano M, et al. Quality, usability, and effectiveness of mHealth apps and the role of artificial intelligence: current scenario and challenges. J Med Internet Res. 2023;25:e44030. https://doi.org/10.2196/44030.

Peters U, Carman M. IEEE Computer Society; 2023. Vol. 38, p. 52–60.

US Food and Drug Administration. Artificial intelligence and machine learning in software as a medical device. 2025. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device.

Overjet. Overjet expands dental AI detection capabilities with 4th FDA clearance. 2023. https://www.overjet.com/blog/overjet-expands-dental-ai-detection-capabilities-with-4th-fda-clearance.

Denti.AI. Press release. 2023. https://www.denti.ai/denti-ai-pioneers-fda-cleared-pathology-detection-in-both-panoramic-and-intraoral-x-rays-with-launch-of-denti-ai-detect.

Muralidharan V, Adewale BA, Huang CJ, Nta MT, Ademiju PO, Pathmarajah P, et al. A scoping review of reporting gaps in FDA-approved AI medical devices. npj Digit Med. 2024;7:273. https://doi.org/10.1038/s41746-024-01270-x.

Gallifant J, Bitterman DS, Celi LA, Gichoya JW, Matos J, McCoy LG, et al. Ethical debates amidst flawed healthcare artificial intelligence metrics. npj Digit Med. 2024;7:243. https://doi.org/10.1038/s41746-024-01242-1.

Schwendicke F, Samek W, Krois J. Artificial intelligence in dentistry: chances and challenges. J Dent Res. 2020;99:769–74. https://doi.org/10.1177/0022034520915714.

Ma J, Schneider L, Lapuschkin S, Achtibat R, Duchrau M, Krois J, et al. Towards trustworthy AI in dentistry. J Dent Res. 2022;101:1263–8. https://doi.org/10.1177/00220345221106086.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

AL: Writing – Original Draft, Literature Search, Data Curation and Data Analysis. AN: Writing – Original Draft, Literature Search and Data Curation. FU: Conceptualization, Writing – Original Draft, Data Curation, Data Analysis, Critical Review and Final Approval of the Manuscript.

Ethics declarations

Competing interests

The authors declare no conflicts of interest. Fahad Umer serves as an Editorial Board Member of BDJ Open. Ethics approval and consent were not applicable, as this study did not involve human or animal subjects.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lal, A., Nooruddin, A. & Umer, F. Concerns regarding deployment of AI-based applications in dentistry – a review. BDJ Open 11, 27 (2025). https://doi.org/10.1038/s41405-025-00319-7

DOI: https://doi.org/10.1038/s41405-025-00319-7