Abstract

Background/Objectives

Accurate dietary assessment is essential for understanding diet–health relationships, yet day-to-day variability in intake complicates the identification of individuals’ usual consumption. This study aimed to determine the minimum number of days required to obtain reliable estimates of dietary intake across various nutrients and food groups using data from a large digital cohort.

Methods

We analyzed dietary data from 958 participants of the “Food & You” study in Switzerland, who tracked their meals for 2–4 weeks using the AI-assisted MyFoodRepo app. Over 315,000 meals were logged across 23,335 participant days. We assessed day-of-week intake patterns using linear mixed models and estimated minimum days for reliable measurement using two complementary methods: (1) the coefficient of variation (CV) method based on within- and between-subject variability, and (2) intraclass correlation coefficient (ICC) analysis across all possible day combinations.

Results

Our findings indicate that water, coffee, and total food quantity can be reliably estimated (r > 0.85) with just 1–2 days of data. Most macronutrients, including carbohydrates, protein, and fat, achieved good reliability (r = 0.8) within 2–3 days. Micronutrients and food groups like meat and vegetables generally required 3–4 days. Linear mixed models revealed significant day-of-week effects, with higher energy, carbohydrate, and alcohol intake on weekends—especially among younger participants and those with higher BMI. ICC analyses further showed that including both weekdays and weekends increased reliability, and specific day combinations outperformed others.

Conclusions

Three to four days of dietary data collection, ideally non-consecutive and including at least one weekend day, are sufficient for reliable estimation of most nutrients. These results support and refine FAO recommendations, offering more nutrient-specific guidance for efficient and accurate dietary assessment in epidemiological research.

Similar content being viewed by others

Introduction

Accurate dietary intake data is essential for studying the relationship between diet and health outcomes, informing public health policies, and developing effective nutritional interventions [1]. However, capturing precise dietary intake data is challenging due to the inherent individual variability in daily food consumption, recall bias, and the burden placed on participants [2]. The complexity of obtaining reliable dietary data has implications for our understanding of the role of diet on health: while many studies suggest that diet influences the risk of chronic diseases such as metabolic disorders, cardiovascular diseases, and cancer [3, 4], the strength of these associations may be limited by the quality of available dietary data [5, 6]. Improving our methods for collecting accurate dietary information is therefore crucial not only for enhancing the reliability of nutritional research but also for clarifying the true extent of the impact of diet on health outcomes.

Recent evidence from Bajunaid et al. has quantified these challenges using doubly labeled water measurements from >6400 individuals [7]. Their analysis revealed systematic under-reporting in more than 50% of dietary reports, with misreporting strongly correlated with BMI and varying by age groups. Previous studies have also shown demographic and anthropometric factors systematically influence dietary reporting behaviors, with BMI affecting measurement both quantitatively and qualitatively, while age and sex independently impact reporting patterns with documented differences in both magnitude and consistency across different population segments [8,9,10,11,12,13].

Beyond these reporting biases, another primary challenge in dietary assessment is the day-to-day variation in food intake. Individuals do not generally consume the same foods in the same amounts every day, leading to variability that can obscure true dietary patterns [14,15,16,17,18,19]. For instance, Basiotis et al. showed in a small cohort of 30 people from a year long study how much variability is present, using daily food intake records for 365 consecutive days. Traditional dietary assessment methods, such as 24-h recalls, food diaries, and food frequency questionnaires (FFQs), also have their limitations. For instance, 24-h recalls rely on participants’ memory and may not capture infrequently consumed foods, while food diaries require substantial effort from participants, potentially leading to underreporting or changes in eating behavior due to the recording process [20].

Minimum days estimation, therefore, addresses the challenge of variability by determining the minimum number of days required to obtain a representative sample of an individual’s usual dietary intake. This approach can substantially reduce participant burden and associated costs, making dietary studies more practical and feasible [21]. By optimizing the number of days needed for accurate data collection, researchers can better allocate resources and reduce overall project costs.

Advanced dietary assessment methods, such as mobile applications for food tracking that incorporate features such as image recognition, barcode scanning, and user-friendly diet recording, offer new opportunities to refine these estimations and improve the accuracy and efficiency of dietary data collection [22,23,24,25]. AI-enhanced tools show particular promise, with validation studies demonstrating comparable accuracy against established methods while substantially improving user experience and cost effectiveness [26,27,28,29]. The “Food & You” study exemplifies this, demonstrating high adherence rates to digital nutritional tracking and enabling the collection of at least two weeks of detailed dietary data from over 1000 participants [30].

To advance the field of dietary assessment, our study leverages the “Food & You” dataset to determine the minimum number of days required to obtain reliable dietary intake. We assessed temporal intake patterns of different food groups, macro- and micronutrients in the full cohort as well as different demographic sub-groups. Our findings indicate that while some nutrients achieve reliable estimates with as few as two days of data, others require up to a week for accurate assessments. These results have important implications for designing efficient and cost-effective dietary studies.

Methods

Data collection and preparation

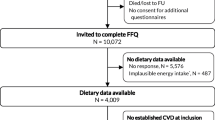

The “Food & You” study involved 1014 adults across Switzerland, with data collected from October 2018 to March 2023. The study was divided into two sub-cohorts, ‘B’ and ‘C’, where participants tracked their meals for 2 and 4 weeks respectively using the MyFoodRepo app. The MyFoodRepo app allowed study participants to track nutrition in three ways, through image taking, barcode scanning, and manual logging. Analysis of usage patterns showed that 76.1% of entries were logged through photographs, 13.3% through barcode scanning, and 10.6% through manual entry. Food items were mapped to a comprehensive nutritional database containing 2129 items, integrating data from multiple sources including the Swiss Food Composition Database [31], MenuCH data [32], and Ciqual [33]. For barcode-scanned products, nutritional values were automatically retrieved from the Open FoodRepo database (https://www.foodrepo.org/) or Open Food Facts database (https://world.openfoodfacts.org/) if not available in the former. Standard portion sizes were primarily sourced from the WHO MONICA study [34] and the Mean Single Unit Weights report from the German Federal Office of Consumer Protection and Food Safety. In cases where standard portions were unavailable, portions were assigned based on similar food items. This standardized approach to portion estimation helped ensure consistency across the dataset. For image-based logging, photographs were first processed by a machine learning algorithm for automatic segmentation and food classification. All logged entries, regardless of input method, underwent a rigorous verification process by trained annotators who reviewed portions, segmentations, and food classifications. The app facilitated direct communication between annotators and participants to clarify any uncertainties about logged items, ensuring data accuracy. The robustness of this method is supported by both a dedicated validation study [28] and comparisons with national dietary survey data [30]. These results indicate that the MyFoodRepo app yields data of high quality and reliability. For further details on data collection, study design and participant adherence rates, see Héritier et al. [30].

In the analysis of minimum days estimation below, we focused on the longest sequence of at least 7 consecutive days for each participant. Days with total energy intake below 1000 kcal were excluded from analysis. This approach was chosen for several reasons: First, it allowed us to include the vast majority of participants (958 out of 1014), with only 56 participants excluded due to insufficient data. Second, the use of consecutive days eliminated the need to account for data gaps. Third, a full week of data enabled us to investigate potential day-of-the-week effects on dietary patterns. For participants with data sequences exceeding 7 days, we calculated the mean intake per weekday to maintain consistency across the sample. Figure 1A, B shows respectively the distribution of days per subject before and after doing the aggregation by mean for the seven days of the week. The remaining 958 participants were 55% female and 45% male. Participants’ ages ranged from 18 to 65 years, with 46% participants aged below 35, and 18% participants aged above 50. BMI categories included 68% participants with a BMI between 18–25, and 29% participants with a BMI of 25 or higher. Figure 1C shows the counts for tracking days for all participants by month, colored by the average temperature for each month during the study period (from 2019 to 2023). Temperature data were obtained from MeteoSwiss [35], which provides monthly mean temperatures of Switzerland. The demographic proportions for age, BMI and sex are shown in Fig. 1D.

A Distribution of tracking days per participant before aggregation, showing minimum and maximum days recorded. B Distribution of days per participant after aggregation to days of the week, with each participant contributing exactly 7 days. C Monthly distribution of tracking days across the Food and You study period, showing seasonal coverage. Bars are colored by the average temperature recorded for each month during the study period. D Demographic characteristics of the study population by age group, BMI category, sex, and season type. E Coefficients and p-values from Linear Mixed Models for various nutrients across different days of the week (Tuesday to Sunday), with Monday as the reference day. Cell values indicate the change in nutrient intake compared to Monday, with color representing statistical significance: red for p < 0.001, orange for p < 0.01, yellow for p < 0.05, and gray for p ≥ 0.05.

Linear mixed model (LMM) analysis

To analyze the effects of age, BMI, sex, or day of the week on nutritional intake, we employed a Linear Mixed Model (LMM) approach using the statsmodels library. The selection of covariates was based on established literature which delineated systematic effect on dietary measurement and reporting. BMI was included as a fixed effect because previous studies have shown it is systematically associated with dietary reporting behaviors, with evidence indicating both quantitative and qualitative impacts on measurement [7, 8, 11, 13]. Age and sex were included as fixed effects based on evidence showing they independently affect dietary reporting patterns, with systematic differences in both the magnitude and patterns of reporting across age groups and between sex [9, 10, 12]. This method allows for the inclusion of both fixed effects (age, BMI, sex, day of the week) and random effects (participant), accommodating the repeated measures design of the dataset. The model formula was specified as follows:

In these models, Monday was used as the reference day, and male was selected as the reference category for sex. We then conducted separate analyses for different demographic subgroups by fitting the model to: (1) age groups (≤35 years, 35–50 years and ≥50 years), (2) BMI categories (18–25 and ≥25), and (3) sex groups (male and female). For the sex subset, we omitted the “sex” variable from the model. To examine seasonal variations, we categorized months into cold (November through April) and warm (May through October) seasons and conducted separate analyses for each season using the same model structure (the proportions of individuals are highlighted in Fig. 1D). From the fitted models, we extracted the model intercept, as well as the coefficients and p-values for the different days of the week. These values were then used to plot a heatmap (shown in Fig. 1E and Supplementary Figs. 1–4), illustrating the effects and significance of the day of the week on nutritional intake.

Minimum days estimation approaches

In this study, we focus on reliability as our primary metric for evaluating dietary assessment adequacy. Reliability refers to the consistency of measurements over repeated assessments - how well the observed intake represents usual intake patterns. We distinguish this from accuracy (how close measurements are to true values) and precision (reproducibility of measurements). Given the absence of a true ‘gold standard’ for free-living dietary intake, reliability provides a practical framework for determining adequate sampling duration.

We employed two complementary methods to determine the minimum number of days required for reliable dietary assessment. The first method, based on variance ratios, explicitly accounts for the scale of measurements through coefficients of variation, allowing us to calculate the exact number of days needed to achieve specific reliability thresholds. This approach is particularly valuable in nutritional studies where different nutrients are measured in varying units. The second method, using intraclass correlation coefficients (ICC), helps evaluate the consistency of measurements across different combinations of days. Together, these methods provide comprehensive insights into both the quantity and optimal timing of dietary measurements needed for reliable assessment.

Approach 1: minimum days estimation using variance ratio method

To determine the minimum number of days needed for reliable dietary assessment, we first employed the Variance Ratio Method, an approach specifically developed for nutritional studies by Black et al. [17] and used in multiple dietary studies [15, 16, 18]. This method accounts for both day-to-day variations within each person’s diet and differences between individuals, while adjusting for the scale of measurements - an important consideration when analyzing different nutrients measured in varying units (e.g., grams, milligrams, or calories).

In this approach, we first modeled each nutritional feature (e.g., carbs eaten) with day of the week as a fixed effect and participant as a random effect using a linear mixed model (statsmodel library). From this model, we extracted two key variance components: the inter-individual variance (σ²b, representing differences between participants) and intra-individual variance (σ²w, representing day-to-day variations within each person’s diet). These were derived from the covariance matrix of the random effects and the residual variance, respectively.

To account for the different scales of nutrients, we converted these variances into coefficients of variation (CV), i.e., between and within CV, by dividing the standard deviations by the mean value (μ) of each nutritional feature:

Following Black et al. [17], we then calculated the variance ratio (VR) as:

This ratio quantifies how much day-to-day variation exists relative to the differences between individuals. A higher ratio indicates that more days of measurement are needed to obtain a reliable estimate of an individual’s typical intake.

Finally, to determine the minimum number of days (D) needed for reliable assessment, we used the formula:

where r represents the desired reliability threshold (correlation between observed and true average intake). We calculated D for three reliability thresholds (r = 0.8, 0.85, and 0.9), with higher thresholds requiring more days of measurement but providing greater confidence in the dietary assessment. These thresholds were chosen based on established guidelines in nutritional epidemiology, where r ≥ 0.8 is considered good reliability for dietary assessment studies [18].

Approach 2: ICC calculation for estimating minimum days of dietary assessment

Intraclass correlation coefficient (ICC) is a statistical measure used to assess the reliability and consistency of measurements across multiple observations. In nutritional epidemiology, ICC can be employed to determine the minimum number of days required for reliable dietary assessment. A higher ICC indicates greater reliability of the dietary intake estimate for a given number of measurement days. When examining ICC values across different numbers of measurement days, reaching a sufficiently high ICC value (typically above 0.8 [36]) indicates the point at which a good estimate of an individual’s typical intake has been achieved.

Importantly, in contrast to our linear mixed model (LMM) analysis - which includes fixed effects such as age, BMI, and sex to account for between-subject variability and day-of-week effects - the ICC analyses were performed on the raw dietary intake data without adjustment for any covariates. This unadjusted approach ensures that our ICC estimates remain directly comparable to previous studies that evaluated dietary variability using raw intake data.

We employed the pingouin library for ICC(3,k) calculations. The ICC(3,k) variant represents a two-way mixed effects model [36], assessing the consistency of dietary intake across k days, thus providing an estimate of reliability for repeated measures. Our goal was to identify the point at which adding more days of dietary data collection yields diminishing returns in terms of improved reliability. We developed two distinct ICC methods to address different aspects of dietary measurement reliability:

-

1.

ICC Method 1 aims to determine how many days are needed to achieve reliable dietary assessment across different nutrients by analyzing the distribution of ICC values across all possible day combinations.

-

2.

ICC Method 2 aims to identify which specific combinations of days (e.g., weekdays vs. weekends) provide the most reliable representation of overall dietary intake.

ICC Method 1: ICC distribution analysis across all possible subsets of days

To assess the stability of ICC across different combinations of days for each nutritional feature, we computed ICC(3,k) for all possible combinations of days, ranging from k = 2 to k = 7. For instance, for k = 2, ICC was computed for each of the 21 unique pairs of days available from the 7-day sequence. This approach yielded a distribution of ICC scores for each value of k, allowing us to examine how ICC values changed with different numbers and combinations of days. These distributions provide insights into the variability of ICC stability for a given value of k < 7 across different temporal patterns within a week. A total of 38 nutritional features had at least one combination that reached the threshold of 0.75.

ICC method 2: minimum days and optimal combination estimation

This method complements the previous method by focusing on the combination within minimum days needed for reliable overall dietary intake estimation. We calculated the mean intake of each nutritional feature per participant across all days as a reference. We then examined subsets of days (1–7) in all combinations, comparing their means to the overall mean using ICC. Unlike the ICC method 1, which assesses consistency within subsets, this method compares subset means to the overall mean. We increased the subset size until reaching an ICC threshold of 0.9, identifying the minimum days, and the best weekday combination thereof, needed to reliably represent overall intake for a given nutritional feature.

Results

Consumption patterns across days of the week

It has been documented that nutrient intake can vary strongly across different days of the week [37,38,39]. To analyze the association of different days of the week and nutrient intake, we used the linear mixed models approach described in the methods section. The results are plotted as a heatmap (Fig. 1E) where the p-values indicate the statistical significance of the coefficients, with thresholds set at <0.001 (red), <0.01 (orange), <0.05 (yellow), and ≥0.05 (gray). The numerical values within the cells represent the coefficients of the day of the week for each nutrient, indicating the change in nutrient intake compared to the intercept (with Monday as reference). Furthermore, the results are segmented into groups as follows: all participants (Fig. 1E), age groups (Supplementary Fig. 1), BMI groups (Supplementary Fig. 2), sex groups (Supplementary Fig. 3) and cold/warm seasons (Supplementary Fig. 4) in order to observe the variations of dietary daily habits with respect to age and BMI.

We observed significant variations across different days for several nutrients and food groups, as shown in Fig. 1E. Alcohol intake showed notable increases from Wednesday through Sunday (all p < 0.001). Similarly, carbohydrate intake increases significantly throughout the week, peaking on Friday, Saturday, and Sunday (increase of 15 g, 29 g and 22 g respectively; p < 0.001). Energy intake followed a similar pattern, with substantial increases on all days, particularly on Friday, Saturday, and Sunday (+202 kcal, +371 kcal, and +250 kcal respectively, p < 0.001). The intake of sodium and sugar also increased significantly on the weekend, with sugar increasing by roughly 10 g on Saturday and Sunday, respectively (p < 0.001). These observations reflect more indulgent dietary habits towards the end of the week. In contrast, non-alcoholic beverages, especially water and coffee, exhibited a significant decrease from Friday to Sunday (p < 0.001). Notably, dairy was the only food group for which there was no significant difference across all days of the week, even when considering subgroups (Supplementary Figs. 1–4).

For individuals aged 35 years or younger (Supplementary Fig. 1A), carbohydrate consumption pattern was similar to the overall study population, with significant increases on Thursday, Friday, and Sunday (+15 g, +30 g and +18 g respectively, p < 0.001). Interestingly, these significant increments were also seen for other weekdays for the older age group, with an increase of about 11 g from Tuesday to Thursday (p < 0.05), shown in Supplementary Fig. 1B. Eaten quantity for the younger age group changed significantly over the weekend with the exception of Sunday where it decreased (−90 g, p < 0.01). In the age group 50+, this increase was not significant for any other days. Fruit consumption significantly increased during the weekend (between 35 and 40 g) as well among the older age participants. Note that fruit consumption in this study comprises consumption of both fruit juices and whole fruits, which might explain why the younger age group displayed a much higher baseline for this food category. Converse to the previous nutrient trends, intake of non-alcoholic beverages such as coffee and water decreased significantly, particularly during the weekend (all p < 0.001) in the younger age group, while this difference was either non-significant (coffee, tea and non-alcoholic beverages) or not as significant (water, p < 0.05) in the older age group. Vegetable consumption on Saturday and Sunday also decreased (−33 g and −28 g, p < 0.01) significantly in the younger age group but non-significantly in the older age group.

For individuals with a healthy BMI (between 18 and 25), carbohydrate and energy intake increased significantly from Friday onwards to Sunday (all p < 0.001), as highlighted in Supplementary Fig. 2A, akin to the observation for the full cohort. Interestingly, the intake of meat, and thus also protein consumption, steadily increased significantly over the week. Similar to the full cohort, intake of non-alcoholic beverages, including coffee and water, showed a notable decrease on the weekend (p < 0.001), while vegetable intake decreased significantly on Saturday (−26g, p < 0.01). For individuals with a BMI greater than 25 (Supplementary Fig. 2B), carbohydrate and energy intake patterns were similar to those with healthy BMI. Conversely, meat consumption was higher among those with high BMI, but its consumption over the week was not significantly higher than the baseline with the exception of Saturday. The consumption of vegetables and grains-cereals decrease was more pronounced over the week among high BMI participants. For potassium intake, the increment was observably the highest on Saturday in both the groups (p < 0.001 for healthy BMI; p < 0.05 for high BMI), while for sodium, the intake was substantially higher on the weekend (Friday to Sunday with p < 0.001) for the healthy BMI group. Sodium intake among high BMI participants was also higher during the weekend, substantially higher on Saturday (p < 0.001), but slightly lower on Sunday (p < 0.01) and Friday (p < 0.05). Finally, when comparing consumption patterns by sex, males had higher increases in consumption of meat, daily eaten quantity, and kcal energy across several days of the week compared to females (Supplementary Fig. 3), consistent with findings from earlier studies [40, 41].

Further examination of seasonal patterns revealed that the observed day-of-week effects persisted across both cold (November-April) and warm (May-October) months (Supplementary Fig. 4). The weekend effect on energy intake remained consistent across seasons (cold: +395 kcal; warm: +344 kcal on Saturdays, p < 0.001), similar to the overall pattern observed in the full cohort. The decrease in non-alcoholic beverage consumption, particularly water, was more pronounced during cold season weekends (−185 g on Saturday, p < 0.001) compared to warm season (−127 g, p < 0.001). Sweet and salty snack consumption showed strong weekend increases in both seasons but was more pronounced during warm months (warm: +256 g vs cold: +219 g on Saturdays, p < 0.001). Vegetable consumption decreased consistently across weekends in both seasons (cold: −25 g; warm: −35 g on Saturdays, p < 0.001), aligning with the overall pattern observed in the full dataset.

These seasonal variations, while noteworthy, are comparatively small, and ultimately reinforce the importance of weekend measurements in dietary assessment, as the fundamental pattern of weekend-weekday differences remains consistent throughout the year. When using mixed models to analyze dietary data, certain limitations may arise that suggest the need for complementary methods like ICC and CV. Mixed models estimate population-averaged effects, which can overlook subtle day-to-day variations within individuals. Additionally, skewed or non-normal data distributions can impact the model’s accuracy, and assumptions about random effects might obscure real patterns. Due to these potential limitations, using ICC and/or CV based approaches may help in capturing within-subject variability and providing a clearer picture of daily dietary behaviors.

Minimum days estimation covariance method

While mixed models revealed weekly consumption patterns, determining the optimal duration for dietary assessment requires analyzing both within-person and between-person variability in nutrient intake. To address this central question, we employed the Coefficient of Variation (CV) method, established by Black et al. [17], to quantify the relationship between within-person and between-person variation to directly calculate the minimum days needed for reliable dietary assessment. By examining the variance ratio between these components, we can determine how many days of data collection are required to achieve specific reliability thresholds, where the correlation (r) between observed and true mean nutrient intake serves as our reliability metric (see Methods).

Figure 2 shows the minimum number of days required to achieve high to very high reliability thresholds of 0.8 (blue), 0.85 (yellow), and 0.9 (green) for various nutrients and food groups. Water, coffee, non-alcoholic beverages, and eaten quantity by weight can be reliably estimated (at r = 0.85) with just 1 day of data collection. Furthermore, with the exception of the eaten quantity feature, we observed for the other three features that their between-subject coefficient of variations (CVb) were quite a bit larger compared to their within-subject coefficient of variations (CVw), thereby reducing their variance ratios. This indicates that individuals have very consistent patterns in their fluid intakes and overall food quantity consumption, but simultaneously, these intakes vary across individuals.

These thresholds represent different levels of desired correlation between observed and true intakes, with 0.9 being the most stringent criterion. Top 30 nutrients are shown; sorted by the variance ratio. Additionally, variance ratio, between-subject coefficient of variation (CVb), and within-subject coefficient of variation (CVw) are shown for the top 35 nutrients.

Similarly, major macronutrient intake like carbohydrates, sugar, fiber, fat, and protein as well as energy consumption (measured in kilocalories), also showed high reliability with only 2–3 days of data (at r = 0.8). Micronutrients, on the other hand, tended to require longer periods for reliable estimation. Saturated fatty acid, beta carotene, vitamins C as well as certain minerals like phosphorus, potassium, calcium and iron typically needed 3–4 days of data (at r = 0.8 threshold) to achieve decent reliability. Moreover, iron intake also displayed high CVw. However, some micronutrients like folate and magnesium required only 2 days. Food groups such as dairy and fruits needed only 2 days for high reliability (r = 0.8), and about 5 days for very high reliability (r = 0.9). Meat and vegetables required longer, about 3 days for high reliability, and correspondingly longer, 7 days, for very high reliability.

Observed ICC patterns in nutrient features

The ICC values for various nutrient features were computed over different numbers of days to determine the optimal duration of dietary assessment for reliable estimates of average nutrient consumption. Figure 3 shows the top 24 distinct food features that reached an ICC threshold of 0.75, the remaining features are shown in Supplementary Fig. 5. The x-axis in both figures represents the number of days, while each boxplot demarcates the ICC scores for combinations of days for each minimum number of days on the x-axis. The ICC thresholds of 0.9 and 0.75 are shown as a horizontal red line in each subplot. The color of the boxplots represents different nutrient groups: blue for micronutrients, red for food groups, and green for macronutrients. The ICC values for all nutrients generally improve as the number of days of dietary data increases. This indicates that more extended periods of dietary assessment tend to provide more reliable estimates, as expected. Largest daywise variability for all nutrients occurs when only 2 days of data are used.

The x-axis represents the number of days, while the y-axis represents the ICC scores. Each subplot corresponds to a different nutrient, with boxplots showing the distribution of ICC scores computed from all possible combinations of days for each number of days on the x-axis. The horizontal red lines in each subplot denote the ICC threshold of 0.75 (good reliability) and 0.9 (excellent reliability). Boxplot colors represent different nutrient groups: blue for micronutrients, red for food groups, and green for macronutrients. Note that ICC does not generally reach 1 due to inherent within-subject variability.

It took 3 days for the median ICC of carbohydrate intake to attain a value of 0.75, which is widely considered the minimum threshold for good reliability [36]. For protein and fat intake, it took longer, about 4–5 days, to attain this threshold. Similarly, the median ICC for alcohol intake required 5 days, while sugar and fiber intakes required just 2–3 days to reach 0.75, and in about 7 days nearly reached the ICC threshold of 0.9, which is considered excellent reliability. Four days was sufficient for the median ICC of energy kcal consumption to reach the 0.75 threshold, while this was just 2 days for eaten quantity. Micronutrients, like magnesium, pantothenic acid, folate, phosphorus and calcium, were able to cross the 0.75 threshold at 4–5 days, as observed by their median ICCs from their day-wise combinations for these days. Interestingly, water and non-alcoholic beverages intakes cross the 0.75 threshold in just 2 days. Many combinations of food groups, like vegetables-fruits and oil-nuts, as well as dairy, attained the 0.75 threshold at 4 days, while for meat and grains-potatoes-pulses, reaching the 0.75 threshold required at least 5 days.

In summary, using this method, most nutrients show a stabilization of ICC values above the 0.75 threshold after 4–5 days, suggesting that to achieve high reliability for most nutrient intake measurements a 4–5 days of dietary logging is desirable. Certain nutrients, typically non-alcoholic drinks such as water, achieve higher ICC values even with fewer days of measurement, indicating that these nutrients may require less extensive data collection to reach reliable estimates.

Minimum days and optimal combination estimation

In order to extract the best (and worst) collection day combinations for each nutrient or food group, we used another approach wherein we compare the ICC of different day combinations to the mean intake of the entire dataset (consisting of a 7 day week). Hence, under the assumption of an entire week being sufficient in capturing the nutritional variation, this method allows to elucidate which days of the week (or their combinations) lead to highest (or lowest) ICC values when comparing to the full week.

Figure 4 highlights the best and worst day combinations at different number of days across various nutritional features (for the full set of nutrition features, see Supplementary Figs. 6, 7). Since the assumption of one week being sufficient to capture variation already loses some precision, we set the ICC threshold in the assessment below at 0.9, indicating excellent reliability. In order to achieve this threshold, carbohydrate intake required 3 days that were spread across the week (Monday, Wednesday and Saturday) for the best combination. The worst combination of 3 days also reached the 0.9 threshold, but the days were continuous weekdays from Monday to Wednesday. Similarly, for protein and fat intake, it required 3 days minimum for the best combination to cross the 0.9 threshold and included two weekdays and one weekend day (Saturday for both protein and fat), while their worst 3 day combination was below the 0.9 threshold. For fiber, the best combination of 2 days (Monday and Wednesday) attained the 0.9 threshold precisely, although with 3 days, even the worst combination was above the excellent reliability threshold. For alcohol, the alternating day combination at 3 days resulted in being the best combination which surpassed the threshold, while the worst combination at even 4 days - which were all continuous weekdays - was below the threshold. Eaten quantity and water intake required only one day of collection (Wednesday) to reach the reliability threshold. The same can be observed for non-alcoholic beverages group (Supplementary Fig. 6) Coffee intake nearly achieved this threshold with just one day of data (Tuesday). Interestingly, when adopting a more conservative approach by increasing the collection period to 2 days, the optimal combination for all four nutritional features (eaten quantity, water, non-alcoholic beverages and coffee) was consistently Monday and Friday. Similarly, extending to a 3 day collection period, the best combination was Monday, Tuesday, and Friday for all these features (except for non-alcoholic beverages).

For each nutrient and at each number of days, the day combinations which yielded the highest (in color) and lowest ICC scores (in gray) are shown. The plot is ranged between ICC values of 0.75–1.0, with the ICC reliability threshold at 0.9 shown as a red line - points lying below this range are not shown.

A 3-day combination of Monday, Tuesday, and Thursday precisely reached the 0.9 reliability threshold for meat intake. Notably, when extending the collection period to 4 days, even the least optimal combination - consisting of four consecutive days - surpassed this threshold. This suggests that any 4-day period within a week is sufficient to accurately capture an individual’s meat consumption patterns. For dairy intake, the least optimal combination at 3 days, consisting of two weekend days, had exactly reached the 0.9 threshold, while the best combination consisted of Monday, Tuesday and Sunday. Bread consumption needed 4 days for its best combination to surpass the 0.9 threshold. The collective vegetables and fruit group required just 3 days for the worst day combination to reach the threshold, while the best combination included only weekdays (Monday, Tuesday and Thursday).

Interestingly, the optimal 2-day combination for iron intake (Monday and Saturday) exceeded the 0.9 threshold. However, unlike other features, even some 6-day combinations that included both Monday and Saturday fell below this threshold. This unexpected result suggests that the observed patterns in iron intake may be subject to high variability or influenced by factors not fully captured in our data. For vitamin C, the best combination at 3 days (Monday, Tuesday and Saturday) reached the 0.9 threshold exactly, although a more conservative choice of 4 days yields ICC values above 0.9 even for its least optimal combination. Other vitamins like B1, B2, B6 and D required at least 4–5 days for the most optimal combination to cross the threshold, while vitamin B12 required just 3 days (Supplementary Fig. 7). For saturated fatty acids, the results were similar to vitamin C, with 4 days yielding ICC values above 0.9 even for the least optimal combination. Polyunsaturated fatty acids and monounsaturated fatty acids, however, required more days (Fig. 3 and Supplementary Fig. 7 respectively): only the best 5-day combination crossed the 0.9 threshold, while some 5-day combinations produced ICC values below 0.75, highlighting greater variability in intake.

Discussion

Recent advances in digital dietary assessment tools offer promising opportunities to enhance data collection in nutritional epidemiology. For instance, the “Food & You” study, consisting of over 1000 participants who tracked their dietary intake using the AI-assisted MyFoodRepo app, used such a digital approach to capture detailed and accurate dietary intake in a real-world setting [30]. However, despite the advantages of such technologies, it is still crucial to determine the minimum number of days required for reliable dietary intake estimation, in order to design nutritional studies and extract meaningful individual dietary profiles. Optimizing the duration of dietary assessment offers multiple benefits: it can reduce participant fatigue and dropout rates while simultaneously improving the cost-effectiveness and feasibility of large-scale nutritional studies. To determine the ideal duration of data collection, a sufficiently powered study with long-term data is needed. This allows for systematic analysis of how reducing data collection periods affects the reliability of dietary intake estimates. While other studies may have also collected extensive dietary data, our analysis, based on the Food & You dataset, represents one of the most comprehensive examinations of this specific question to date. The Food & You study encompasses more than 46 million kcal collected from 315,126 dishes over 23,335 participant days [30], providing a robust foundation for our investigation. The current study thus offers unique quantitative measures that can guide study planning across various levels of nutrient information, from micronutrients to broad food groups, and at different reliability thresholds.

Our Linear Mixed Model (LMM) analysis revealed distinct weekly patterns in nutrient intake, highlighting the variability of consumption profiles both across and within individuals, as well as across seasons. Significant increases in energy, carbohydrate, and alcohol intake were observed on weekends, particularly on Saturdays, indicating more indulgent dietary habits during these periods. For instance, carbohydrate intake increased by 29 g on Saturdays (p < 0.001) compared to the Monday baseline, while energy intake rose by 371 kcal (p < 0.001). These weekend effects persisted across seasons, though with varying magnitudes - energy intake showed similar increases during both cold (395 kcal) and warm (344 kcal) season Saturdays, suggesting the robustness of these weekly patterns. Concurrently, we observed significant decreases in the consumption of non-alcoholic beverages, especially water and coffee, from Friday to Sunday (p < 0.001). These patterns were more pronounced in younger individuals (aged 35 or below) and those with higher BMI. Sex differences were also apparent, with males exhibiting higher increases in meat consumption and overall eaten quantity across several weekdays compared to females. The persistence of these weekly patterns across seasons, despite variations in their magnitude, underscores the importance of accounting for day-of-week effects, as well as demographic factors, in dietary assessments and interventions. The observed seasonal variations in dietary patterns, such as stronger decreases in vegetable consumption during cold season weekends and more pronounced increases in sweet and salty snack consumption during warm season weekends, suggest that while weekly patterns remain consistent, their manifestation may be modulated by seasonal factors.

Through the Coefficient of Variation (CV) method, we quantified the relationship between within-person and between-person variation to directly calculate the minimum days needed for reliable dietary assessment. We found that water, coffee, non-alcoholic beverages, and the quantity eaten by weight could be reliably estimated (r = 0.85) with just one day of data collection (Fig. 2). For major macronutrients (carbohydrates, sugar, fiber, fat, and protein) and energy consumption, only 2–3 days of data were required for high reliability (r = 0.8). Micronutrients generally required longer periods for reliable estimation - saturated fatty acids, beta carotene, vitamin C, and minerals like phosphorus, potassium, calcium, and iron typically needed 3–4 days of data to achieve good reliability (r = 0.8).

To complement and validate these findings, we also employed the ICC method, which largely corroborated our CV results while providing additional insights about optimal day combinations. The ICC analysis confirmed that the required number of days varies considerably among different nutrients and food groups. Consistent with our CV findings, water, non-alcoholic beverages, coffee, and total daily eaten amount could be reliably estimated (ICC > 0.9) with as little as 1–2 days of data. For macronutrients, carbohydrate intake achieved good reliability (ICC > 0.75) with 3 days of data, while protein and fat intake required 4–5 days to reach the same threshold (Fig. 3). Our analysis of optimal day combinations revealed that spreading these days across the week, including both weekdays and weekend days, generally yielded higher reliability (Fig. 4). For instance, the best 3-day combination for carbohydrates included Monday, Wednesday, and Saturday, which precisely reached the excellent reliability threshold (ICC = 0.9). Food groups like meat, vegetables, and alcohol required at least 3–4 days for good reliability, with meat intake reaching the 0.9 reliability threshold with a specific 3-day combination of Monday, Tuesday, and Thursday. Micronutrients typically exhibited more variability, with components like magnesium, pantothenic acid, folate, phosphorus, and calcium requiring 4–5 days to cross the 0.75 ICC threshold. This alignment between the CV and ICC methods reinforces the robustness of our findings while providing comprehensive guidelines for study design. While our LMM analysis incorporated covariates to account for known systematic measurement biases [7,8,9,10,11,12,13], our ICC analyses deliberately used unadjusted data to maintain comparability with existing literature. This allowed us to control for important sources of variation in our day-of-week effects analysis while ensuring that our reliability estimates could be directly compared to previous studies.

We benchmarked our minimum days observations from the covariance (CV) method against two comparable studies, Pereira et al. [15] and Palaniappan et al. [16], which also employed the same method for several macro- and micro-nutrients. Several of these nutrients showed notable similarities for minimum days. For instance energy intake, the minimum days in our study was 2 days (at r = 0.8), which closely matches with Pereira et al. for both males and females, who reported 3 days each (at r = 0.8). This also aligns reasonably with Palaniappan et al., who found 2 days for men and 4 days for women (at r = 0.8). Similarly, for carbohydrate intake, our study yielded 2 days at r = 0.8, which is strikingly consistent to both other studies at 2–3 days. Fiber intake also showed some alignment, with our study indicating 2 days and 3–4 days for Pereira et al. Iron intake also showed strong agreement, with our study indicating 3–5 days (r = 0.8 and r = 0.85 respectively), while Pereira et al. found 4 days for females, and Palaniappan et al. reported 4 days for both men and women. For calcium, both our study and Pereira et al. reported 4 days (for both males and females), while just 2 days by Palaniappan et al. Saturated fat shows a strong match as well, with our study indicating 5 days and Pereira et al. reporting the same for females. Cholesterol intake in our study was 6 days, aligning closely with Pereira et al.‘s finding of 7 days for females. Protein intake, however, was quite dissimilar across the three studies, where we report ~3 days, while Pereira et al. reported 8 days for males and 4 days for females, and Palaniappan et al. indicated 3 days for men and 6 for women. This was also the case for total fat consumption, where we found the minimum days to be 3–4 days (at r = 0.8 and r = 0.85 respectively), while ranging between 3 and 7 days in Pereira et al. and 2–5 days in Palaniappan et al.’s study.

Our findings largely align with and refine the recommendations set forth by the Food and Agriculture Organization (FAO) [42], which suggests that a minimum of three to four days of dietary intake data, including a weekend day, is generally required to reliably characterize an individual’s usual intake of energy and macronutrients. Indeed, our results from both the ICC and CV methods corroborate this guideline, indicating that for most macronutrients and energy intake, 3–4 days of data collection yield reliable estimates. Importantly, our analysis of day-of-week effects and optimal day combinations strongly supports the FAO’s emphasis on including weekend days, as we found substantial variations in intake patterns between weekdays and weekends for many nutrients. However, our study provides a more nuanced understanding by demonstrating that the required number of days can vary strongly depending on the specific nutrient or food group of interest. For instance, while some dietary components like water and coffee intake can be reliably estimated with just 1–2 days of data, others, particularly micronutrients and certain food groups, may require up to 5–7 days for very high reliability. These insights offer more precise guidelines for designing dietary assessment protocols.

Reliable dietary assessment is essential for accurately characterizing exposure in nutritional epidemiology. Studies have shown that imprecise dietary measurement may obscure associations with chronic disease outcomes [10, 43]. Our findings suggest that optimizing the number of assessment days would not only improve the reliability of dietary intake estimates, but would also strengthen exposure–outcome analyses by reducing misclassification. Recent validation studies of digital dietary assessment tools reveal persistent methodological challenges [28, 44], demonstrating omissions and estimation errors in self-reported intake, particularly for discretionary items, beverages, and hidden ingredients, with portion size errors. These findings align with our observations on intake variability and reinforce that while digital approaches may improve data collection efficiency [26, 27, 29], they remain subject to fundamental limitations of self-reporting - highlighting the importance of optimizing data collection duration to maximize reliability while minimizing participant burden. The predominant use of image-based food logging (76% of entries) in our study, combined with dual verification through AI and expert annotators, likely enhanced data quality compared to traditional written food diaries. This approach reduced recall bias through real-time capture and allowed direct verification of reported foods. While image-based logging may miss certain details like hidden ingredients or exact portions, our findings’ alignment with previous studies using different logging methods suggests the approach did not substantially bias our minimum days estimates.

While our study provides valuable insights into the minimum days required for reliable dietary assessment, several limitations should be acknowledged. Firstly, our analysis is based on a single cohort study conducted exclusively in Switzerland. As such, the findings may not be fully generalizable to other populations or cultural contexts with different dietary patterns and habits. Additionally, while the “Food & You” study included over 1000 participants, its sampling methods did not yield a fully representative population sample, which may limit the broader applicability of our results. Furthermore, although the MyFoodRepo app collects accurate dietary data, it’s important to note that the information is still self-reported, which introduces potential biases inherent to this method of data collection. While all the collected data was verified by expert human annotators, challenges like selective reporting or changes in eating behavior due to the act of recording remain possible. Our study also lacks validation against objective comparators such as biomarkers or controlled feeding studies that would serve as external reference standards. Despite this limitation, the value of our approach lies in its internal consistency across two complementary analytical methods (CV and ICC), its alignment with previous literature findings, and its application to a uniquely comprehensive real-world dataset verified through dual AI and human annotation. Lastly, while our study duration allowed for the analysis of weekly patterns, longer-term studies might reveal additional insights into dietary variability over extended periods. Given these limitations, further research is needed to validate our findings across diverse populations, cultural settings, and longer time frames. Future studies should also aim to compare digital dietary assessment tools like MyFoodRepo with other methods to further establish their reliability and potential advantages in nutritional research.

In conclusion, our study provides insights into the optimal duration of dietary data collection, offering a nuanced understanding that can enhance the design and implementation of nutritional research. Determining the minimum number of days required for reliable dietary intake assessment across various nutrients and food groups may enable more efficient, cost-effective, and participant-friendly dietary studies. These findings have implications for both personalized nutrition approaches and broader public health policies. The variability we observed in data collection requirements across different nutrients underscores the importance of tailoring study designs to specific research questions or interventions. Moreover, our results highlight the value of leveraging real-world data and real-world evidence from digital cohorts, demonstrating how tools like AI-assisted food tracking apps such as MyFoodRepo can contribute to more accurate and comprehensive dietary assessments. As we move towards an era of precision nutrition, these insights could be important for developing more targeted and effective dietary interventions, ultimately contributing to improved health outcomes at both individual and population levels. Future research should build upon these findings, exploring their applicability across diverse populations and cultural contexts, and further investigating the potential of digital tools in nutritional epidemiology.

Data availability

The data that support the findings of this study are not publicly available due to privacy considerations. However, data can be made available upon request from the corresponding author.

Code availability

The code used for all analyses in this study is available in the GitHub repository at https://github.com/digitalepidemiologylab/mindays-estimation-paper. This includes code for Linear Mixed Models, ICC calculations, and Coefficient of Variation analyses. The repository contains the scripts used to generate all figures and statistical analyses presented in this paper.

References

Thompson FE, Kirkpatrick SI, Subar AF, Reedy J, Schap TE, Wilson MM, et al. The National Cancer Institute’s Dietary Assessment Primer: a resource for diet research. J Acad Nutr Diet. 2015;115:1986–95.

Thompson FE, Subar AF. Chapter 1 - Dietary assessment methodology. In: Coulston AM, Boushey CJ, Ferruzzi MG, Delahanty LM, editors. Nutrition in the prevention and treatment of disease, 4th ed. Academic Press; 2017. p. 5–48. Available from: https://www.sciencedirect.com/science/article/pii/B9780128029282000011.

Riboli E, Norat T. Epidemiologic evidence of the protective effect of fruit and vegetables on cancer risk. Am J Clin Nutr. 2003;78:559S–569S.

Aune D, Giovannucci E, Boffetta P, Fadnes LT, Keum N, Norat T, et al. Fruit and vegetable intake and the risk of cardiovascular disease, total cancer and all-cause mortality-a systematic review and dose-response meta-analysis of prospective studies. Int J Epidemiol. 2017;46:1029–56.

Bailey RL. Overview of dietary assessment methods for measuring intakes of foods, beverages, and dietary supplements in research studies. Curr Opin Biotechnol. 2021;70:91–6.

Grandjean AC. Dietary intake data collection: challenges and limitations. Nutr Rev. 2012;70:S101–4.

Bajunaid R, Niu C, Hambly C, Liu Z, Yamada Y, Aleman-Mateo H, et al. Predictive equation derived from 6497 doubly labelled water measurements enables the detection of erroneous self-reported energy intake. Nat Food. 2025; Available from: https://doi.org/10.1038/s43016-024-01089-5.

Ravelli MN, Schoeller DA. Traditional self-reported dietary instruments are prone to inaccuracies and new approaches are needed. Front Nutr. 2020;7:90.

Burrows TL, Ho YY, Rollo ME, Collins CE. Validity of dietary assessment methods when compared to the method of doubly labeled water: a systematic review in adults. Front Endocrinol. 2019;10:850.

Subar AF, Freedman LS, Tooze JA, Kirkpatrick SI, Boushey C, Neuhouser ML, et al. Addressing current criticism regarding the value of self-report dietary data12. J Nutr. 2015;145:2639–45.

Livingstone MBE, Black AE. Markers of the validity of reported energy intake. J Nutr. 2003;133:895S–920S.

Johansson G, Wikman Å, Åhrén AM, Hallmans G, Johansson I. Underreporting of energy intake in repeated 24-hour recalls related to gender, age, weight status, day of interview, educational level, reported food intake, smoking habits and area of living. Public Health Nutr. 2001;4:919–27.

Heitmann BL, Lissner L. Dietary underreporting by obese individuals–is it specific or non-specific?. BMJ. 1995;311:986–9.

Basiotis PP, Welsh SO, Cronin FJ, Kelsay JL, Mertz W. Number of days of food intake records required to estimate individual and group nutrient intakes with defined confidence. J Nutr. 1987;117:1638–41.

Pereira RA, Araujo MC, Lopes Tde S, Yokoo EM. How many 24-hour recalls or food records are required to estimate usual energy and nutrient intake?. Cad Saude Publica. 2010;26:2101–11.

Palaniappan U, Cue RI, Payette H, Gray-Donald K. Implications of day-to-day variability on measurements of usual food and nutrient intakes. J Nutr. 2003;133:232–5.

Black AE, Cole TJ, Wiles SJ, White F. Daily variation in food intake of infants from 2 to 18 months. Hum Nutr Appl Nutr. 1983;37:448–58.

Presse N, Payette H, Shatenstein B, Greenwood CE, Kergoat MJ, Ferland G. A minimum of six days of diet recording is needed to assess usual vitamin K intake among older adults. J Nutr. 2011;141:341–6.

Lanigan JA, Wells JCK, Lawson MS, Cole TJ, Lucas A. Number of days needed to assess energy and nutrient intake in infants and young children between 6 months and 2 years of age. Eur J Clin Nutr. 2004;58:745–50.

Shim JS, Oh K, Kim HC. Dietary assessment methods in epidemiologic studies. Epidemiol Health. 2014;36:e2014009.

Carriquiry AL. Estimation of usual intake distributions of nutrients and foods. J Nutr. 2003;133:601S–8S.

Zhao X, Xu X, Li X, He X, Yang Y, Zhu S. Emerging trends of technology-based dietary assessment: a perspective study. Eur J Clin Nutr. 2021;75:582–7.

Beijbom O, Joshi N, Morris D, Saponas S, Khullar S. Menu-match: restaurant-specific food logging from images. IEEE Winter Conf Appl Comput Vis. 2015;2015:844–51.

Zahedani AD, McLaughlin T, Veluvali A, Aghaeepour N, Hosseinian A, Agarwal S, et al. Digital health application integrating wearable data and behavioral patterns improves metabolic health. Npj Digit Med. 2023;6:216.

Bouzo V, Plourde H, Beckenstein H, Cohen TR. Evaluation of the diet tracking smartphone application KeenoaTM: a qualitative analysis. Can J Diet Pr Res. 2022;83:25–9.

Rogers B, Somé JW, Bakun P, Adams KP, Bell W, Carroll DA, et al. Validation of the INDDEX24 mobile app v. a pen-and-paper 24-hour dietary recall using the weighed food record as a benchmark in Burkina Faso. Br J Nutr. 2022;128:1817–31.

Ji Y, Plourde H, Bouzo V, Kilgour RD, Cohen TR. Validity and usability of a smartphone image-based dietary assessment app compared to 3-day food diaries in assessing dietary intake among Canadian adults: randomized controlled trial. JMIR MHealth UHealth. 2020;8:e16953.

Zuppinger C, Taffé P, Burger G, Badran-Amstutz W, Niemi T, Cornuz C, et al. Performance of the digital dietary assessment tool MyFoodRepo. Nutrients. 2022;14. Available from: https://www.mdpi.com/2072-6643/14/3/635.

Moyen A, Rappaport AI, Fleurent-Grégoire C, Tessier AJ, Brazeau AS, Chevalier S. Relative validation of an artificial intelligence–enhanced, image-assisted mobile app for dietary assessment in adults: randomized crossover study. J Med Internet Res. 2022;24:e40449.

Héritier H, Allémann C, Balakiriev O, Boulanger V, Carroll SF, Froidevaux N, et al. Food & You: a digital cohort on personalized nutrition. PLOS Digit Health. 2023;2:1–20.

Federal Food Safety and Veterinary Office. Swiss food composition database. 2025. Available from: https://valeursnutritives.ch/en.

Chatelan A, Marques-Vidal P, Bucher S, Siegenthaler S, Metzger N, Zuberbühler CA, et al. Lessons learnt about conducting a multilingual nutrition survey in Switzerland: results from menuCH pilot survey. Int J Vitam Nutr Res Int Z Vitam. 2017;87:25–36.

French Agency for Food Environmental and Occupational Health and Safety. ANSES-CIQUAL food composition table. 2025. Available from: https://ciqual.anses.fr/.

Dinauer M, Winkler A, Winkler G. Moncia Mengenliste. 1991. Available from: https://www.ble-medienservice.de/0654/monica-mengenliste.

MeteoSwiss. Area-mean temperatures of Switzerland. Federal Office of Meteorology and Climatology MeteoSwiss; 2025. Available from: https://www.meteoswiss.admin.ch/climate/climate-change/changes-in-temperature-precipitation-and-sunshine/swiss-temperature-and-precipitation-mean/data-on-the-swiss-temperature-mean.html.

Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med. 2016;15:155–63.

Haines PS, Hama MY, Guilkey DK, Popkin BM. Weekend eating in the United States is linked with greater energy, fat, and alcohol intake. Obes Res. 2003;11:945–9.

Esposito F, Sanmarchi F, Marini S, Masini A, Scrimaglia S, Adorno E, et al. Weekday and weekend differences in eating habits, physical activity and screen time behavior among a sample of primary school children: the “Seven Days for My Health” Project. Int J Environ Res Public Health. 2022;19:4215.

Béjar LM. Weekend-weekday differences in adherence to the Mediterranean diet among Spanish University Students. Nutrients. 2022;14:2811.

Kiefer I, Rathmanner T, Kunze M. Eating and dieting differences in men and women. J Mens Health Gend. 2005;2:194–201.

Feraco A, Armani A, Amoah I, Guseva E, Camajani E, Gorini S, et al. Assessing gender differences in food preferences and physical activity: a population-based survey. Front Nutr. 2024;11:1348456.

Achterberg C, Agrotou A, Al-Othaimeen A, Aro A, Blumberg J, Buzzard M, et al. Preparation and use of food-based dietary guidelines. Technical Report Series, 1998.

Kipnis V, Subar AF, Midthune D, Freedman LS, Ballard-Barbash R, Troiano RP, et al. Structure of dietary measurement error: results of the OPEN biomarker study. Am J Epidemiol. 2003;158:14–21.

Chan V, Davies A, Wellard-Cole L, Lu S, Ng H, Tsoi L, et al. Using wearable cameras to assess foods and beverages omitted in 24 h dietary recalls and a text entry food record app. Nutrients. 2021;13:1806.

Funding

This work was supported by grants to MS of the Kristian Gerhard Jebsen Foundation, and through support to RS from the EPFLglobaLeaders programme, funded from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 945363. The funders had no role in the design or execution of this study, in the analyses and interpretation of the data, or in the decision to submit results. Open access funding provided by EPFL Lausanne.

Author information

Authors and Affiliations

Contributions

RS: Conceptualization, study design, data analysis, methodology, investigation, visualization, software, writing – original draft, writing – review & editing. MTV: Data analysis, methodology, software. MS: Study design, supervision, writing – review & editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

All methods were performed in accordance with relevant guidelines and regulations. The study protocol was approved by the Geneva Ethics Commission (Ethical Approval Number: 2017–02124). The study is registered with the Swiss Federal Office of Public Health (SNCTP000002833) and on ClinicalTrials.gov (NCT03848299). All participants provided informed consent prior to data sharing.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Singh, R., Verest, M.T.E. & Salathé, M. Minimum days estimation for reliable dietary intake information: findings from a digital cohort. Eur J Clin Nutr 79, 1007–1017 (2025). https://doi.org/10.1038/s41430-025-01644-8

Received:

Revised:

Accepted:

Published:

Version of record:

Issue date:

DOI: https://doi.org/10.1038/s41430-025-01644-8