Abstract

Neural networks have been applied to tackle many-body electron correlations for small molecules and physical models in recent years. Here we propose an architecture that extends molecular neural networks with the inclusion of periodic boundary conditions to enable ab initio calculation of real solids. The accuracy of our approach is demonstrated in four different types of systems, namely the one-dimensional periodic hydrogen chain, the two-dimensional graphene, the three-dimensional lithium hydride crystal, and the homogeneous electron gas, where the obtained results, e.g. total energies, dissociation curves, and cohesive energies, reach a competitive level with many traditional ab initio methods. Moreover, electron densities of typical systems are also calculated to provide physical insight into various solids. Our method of extending a molecular neural network to periodic systems can be easily integrated into other neural network architectures, pointing to a promising route toward ab initio solutions of more complex solids with neural network ansätze and, more generally, supporting the application of machine learning in materials simulation and condensed matter physics.


Introduction

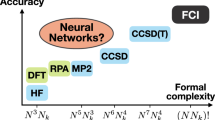

Solving the many-body electronic structure of real solids from first principles is one of the grand challenges in condensed matter physics and materials science1. More accurate ab initio solutions can push the limits of our understanding of many fundamental and mysterious emergent phenomena, such as superconductivity, light–matter interaction, and heterogeneous catalysis, to name just a few2. The current workhorse method is density functional theory (DFT), whose accuracy depends sensitively on the choice of the so-called exchange-correlation functional, and unfortunately there is no systematic route towards the exact functional3,4. Other commonly used ab initio quantum chemistry methods, such as coupled-cluster and configuration interaction theories5, can provide more accurate solutions for molecules but face severe difficulties when applied to solids because of their high computational cost. Recently, several breakthroughs have been made in applying these quantum chemistry methods to solids6,7, driving the study of solid systems towards a new frontier.

Meanwhile, in the last few years, many attempts to tackle the correlated wavefunction problem in molecules or model Hamiltonians with neural network-based approaches have been reported by different groups8,9,10,11,12,13,14,15,16. The key idea is to use a neural network as the wavefunction ansatz in quantum Monte Carlo (QMC) simulations. The stochastic nature of QMC allows a favorable computational scaling and efficient parallelization6,17,18,19, while the expressive power of neural networks, underpinned by the universal approximation theorem, significantly improves the accuracy over traditional QMC ansätze. This strategy has proven successful for small molecules10,11,12,13 in first and second quantization, and for solids in second quantization14. However, how to apply such neural network ansätze to real solids in continuous space, and whether they can describe long-range electron correlations in extended systems, remain open questions.

Here we propose a periodic neural network ansatz for solids that combines periodic distance features20 with existing molecular neural networks10. Based on it, we develop an efficient QMC method for highly accurate ab initio calculations of real solids and general periodic systems. We apply our method to periodic hydrogen chains, graphene, lithium hydride (LiH) crystals, and the homogeneous electron gas. These systems cover a wide range of interest, including dimensionality from one to three, electronic structures from metallic to insulating, and bonding types from covalent to ionic. Standard techniques are employed to reduce finite-size errors. The calculated dissociation curves, cohesive energies, and correlation energies compare favorably with available experimental values and with other state-of-the-art computational approaches. Electron densities of typical systems are further calculated to test our neural network and explore the underlying physics. All the results demonstrate that our method can achieve accurate electronic structure calculations of solid and periodic systems. In parallel to our work, refs. 21, 22 also developed periodic neural networks to study the homogeneous electron gas and obtained highly accurate results; a more detailed comparison is given in the following sections.

Results

Neural network for a solid system

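Periodicity and antisymmetry are two fundamental properties of the wavefunction of a solid. Antisymmetry can be ensured by Slater determinants, which are commonly used as the basic building blocks of molecular neural networks. We likewise approximate the wavefunction by a product of two Slater determinants, one for the spin-up channel and one for the spin-down channel,

\[\psi(\mathbf{r}^{\uparrow},\mathbf{r}^{\downarrow})=\mathrm{Det}\big[e^{i\mathbf{k}_j\cdot\mathbf{r}_i^{\uparrow}}\,u_j^{\mathrm{mol}}(\mathbf{r}_i^{\uparrow};\mathbf{r}_{\neq i})\big]\,\mathrm{Det}\big[e^{i\mathbf{k}_j\cdot\mathbf{r}_i^{\downarrow}}\,u_j^{\mathrm{mol}}(\mathbf{r}_i^{\downarrow};\mathbf{r}_{\neq i})\big]. \tag{1}\]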

In this regard, our ansatz resembles the structure of FermiNet10,11, whereas other neural network wavefunction ansätze may include extra terms in addition to the Slater determinants12. Each determinant is constructed from a set of periodic orbitals, which inherit the physics of the generalized collective Bloch function, i.e., the product of a phase factor eik⋅r and a collective molecular orbital umol. The generalized many-body Bloch function incorporates electron correlations and goes beyond the single-electron approximation18.

Figure 1 displays more details on the structure of our neural network. Building an efficient and accurate periodic ansatz is the key step in developing ab initio methods for solids. Here we have followed the recently proposed scheme of Whitehead et al. to construct a set of periodic distance features d(r)20 using lattice vectors in real and reciprocal space (ai, bi),

The periodic distance features are constructed with the periodic metric matrix M and periodic functions f and g, which retain ordinary distances near the origin and regularize them into periodic ones at large distances, ensuring the correct cusp behavior at short range, continuity, and periodicity at the same time.
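The construction of d(r) can be illustrated with a short NumPy sketch. It assumes the form d(r)² = Σ_ij [f(ω_i) M_ij f(ω_j) + g(ω_i) M_ij g(ω_j)] with ω_i = r·b_i, and uses the stand-in choices f(ω) = 1 − cos ω, g(ω) = sin ω and M_ij = a_i·a_j/(4π²). These are illustrative assumptions, picked so that d reduces to |r| near the origin and vanishes at every lattice vector; they are not necessarily the exact functions used in this work, and the helper names are hypothetical.

```python
import numpy as np

def reciprocal_vectors(a):
    """Rows of `a` are lattice vectors a_i; return rows b_j with a_i . b_j = 2 pi delta_ij."""
    return 2.0 * np.pi * np.linalg.inv(a).T

def periodic_distance(r, a):
    """Smooth, lattice-periodic distance d(r) for displacements r of shape (..., 3).

    Assumed form (a stand-in, not necessarily the paper's f and g):
      d^2 = f^T M f + g^T M g,  f = 1 - cos(omega),  g = sin(omega),
      omega_i = r . b_i,  M_ij = a_i . a_j / (4 pi^2).
    """
    b = reciprocal_vectors(a)
    omega = np.asarray(r, dtype=float) @ b.T      # phase coordinates; a lattice shift adds 2 pi * integer
    metric = a @ a.T / (4.0 * np.pi ** 2)         # metric matrix M built from the lattice vectors
    f = 1.0 - np.cos(omega)                       # even part, ~ omega^2 / 2 near the origin
    g = np.sin(omega)                             # odd part, ~ omega near the origin
    d2 = (np.einsum("...i,ij,...j->...", f, metric, f)
          + np.einsum("...i,ij,...j->...", g, metric, g))
    return np.sqrt(np.maximum(d2, 0.0))           # guard against tiny negative round-off
```

With this choice, shifting r by any lattice vector changes each ω_i by a multiple of 2π and leaves d unchanged, while for small r the g term reproduces |r| up to corrections of order |r|³.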

The electron coordinates ri are passed through two channels. In the first, they are used to build the periodic distance features d(r) with the periodic metric matrix M and the lattice vectors a, and the d(r) features are then fed into two molecular neural networks, which separately represent the real and imaginary parts of the wavefunction. In the second channel, ri is used to construct plane-wave phase factors on a selected set of crystal momentum vectors. The total wavefunction of the solid is assembled from the two channels following Eq. (1).

The constructed periodic distance features d(r) are then fed into molecular neural networks to form collective orbitals umol. Specifically, in this work we represent the molecular networks with FermiNet10, which incorporates electron–electron interactions. Including all-electron features promotes the traditional single-particle orbitals to collective ones, which improves the description of the wavefunction and of correlation effects while requiring fewer Slater determinants. In addition, the wavefunction of a solid is in general complex-valued, so we introduce two sets of molecular orbitals to represent the real and imaginary parts of the wavefunction, respectively. The plane-wave phase factors eik⋅r in Fig. 1 are used to construct Bloch function-like orbitals, and the corresponding k points are selected to minimize the Hartree–Fock (HF) energy.
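How the two real-valued network outputs, the plane-wave phase factors, and the spin-factorized determinants fit together can be sketched in a few lines of NumPy. Here `toy_orbital_parts` is a hypothetical single-electron stand-in for the two molecular networks (it ignores the collective dependence on the other electrons), and the integer k points are arbitrary choices for illustration.

```python
import numpy as np

def bloch_orbitals(r, kpts, u_re, u_im):
    """phi[i, j] = exp(i k_j . r_i) * (u_re[i, j] + 1j * u_im[i, j]).

    r: (n_elec, 3) positions of one spin channel; kpts: (n_orb, 3) occupied k points;
    u_re, u_im: (n_elec, n_orb) real-valued outputs for the real and imaginary parts.
    """
    phase = np.exp(1j * (r @ kpts.T))            # plane-wave factor e^{i k_j . r_i}
    return phase * (u_re + 1j * u_im)

def toy_orbital_parts(r, n_orb):
    """Hypothetical stand-in for the two molecular networks (one electron at a time)."""
    s = np.cos(r).sum(axis=1, keepdims=True)     # 2 pi-periodic in each Cartesian coordinate
    u_re = np.repeat(1.0 + 0.2 * s, n_orb, axis=1)
    u_im = np.repeat(0.1 * np.sin(s), n_orb, axis=1)
    return u_re, u_im

def spin_factorized_psi(r_up, r_dn, kpts_up, kpts_dn, orbital_parts=toy_orbital_parts):
    """psi = Det[phi_up] * Det[phi_dn], one determinant per spin channel."""
    phi_up = bloch_orbitals(r_up, kpts_up, *orbital_parts(r_up, len(kpts_up)))
    phi_dn = bloch_orbitals(r_dn, kpts_dn, *orbital_parts(r_dn, len(kpts_dn)))
    return np.linalg.det(phi_up) * np.linalg.det(phi_dn)
```

Exchanging two same-spin electrons swaps two rows of one matrix and flips the sign of ψ, and moving an electron by 2π along x leaves ψ unchanged because both the toy u and the phase factors with integer k are 2π-periodic.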

Based on the variational principle, our neural network is trained with the variational Monte Carlo (VMC) approach. To optimize the network efficiently, we adopt a modified version of the Kronecker-factored approximate curvature (KFAC) optimizer23 implemented by the DeepMind team24, which significantly outperforms traditional energy minimization algorithms. The calculations are further accelerated by massive parallelization across multiple nodes of high-performance GPUs. More details on the theory, methods, and computations are given in the Methods section and the Supplementary Information.

Hydrogen chain

The hydrogen chain is one of the simplest models in condensed matter research. Despite its simplicity, it is a challenging and interesting system that serves as a benchmark for electronic structure methods and features intriguing correlated phenomena25. The calculated energy of the periodic H10 chain as a function of bond length is shown in Fig. 2a, together with lattice-regularized diffusion Monte Carlo (LR-DMC) and traditional VMC results for comparison25. Our results nearly coincide with the LR-DMC results and significantly outperform traditional VMC (see Supplementary Table 3). In Fig. 2b, the energies of hydrogen chains with different numbers of atoms are calculated for extrapolation to the thermodynamic limit (TDL). The shaded bar in Fig. 2b indicates the TDL energy of the periodic hydrogen chain extrapolated from auxiliary-field quantum Monte Carlo (AFQMC), which, together with LR-DMC, is considered the current state of the art. Our TDL result is comparable with both AFQMC and LR-DMC (see Supplementary Table 4).

Our results are all labeled as Net. Statistical errors are negligible for the presented data. a H10 dissociation curve. b Energies of hydrogen chains of different sizes N, with the bond length fixed at 1.8 Bohr. LR-DMC and VMC both use the cc-pVTZ basis set, and the one-body Jastrow function uses orbitals from LDA calculations. AFQMC is extrapolated to the complete basis set limit. All the comparison results are taken from ref. 25. c Structure of graphene. d Cohesive energy per atom from the Γ-point calculation and the finite-size-corrected result. The experimental cohesive energy is from ref. 29. Graphene is calculated at its equilibrium bond length of 1.421 Å. e Structure of the rock-salt lithium hydride crystal. f Equation of state of the LiH crystal, with the fitted Birch–Murnaghan parameters and experimental data. HF corrections are calculated using the cc-pVDZ basis, and \(E_{\infty}^{\mathrm{HF}}\) is approximated by \(E_{N=8}^{\mathrm{HF}}\). The arrows denote the corresponding HF corrections. g Illustration of the homogeneous electron gas system. h Correlation error of the 54-electron HEG at different rs. The correlation error is defined as [1 − (E − EHF)/(Eref − EHF)] × 100%, and EHF is taken from ref. 33. DCD, BF-VMC, and TC-FCIQMC results are displayed for comparison, and BF-DMC data are used as the reference33,34.

Graphene

Graphene is arguably the most famous two-dimensional material (Fig. 2c) and has received broad attention over the past two decades for its mechanical, electronic, and chemical applications26. Here we estimate its cohesive energy, which measures the strength of C–C chemical bonding and long-range dispersion interactions. The calculations are performed on a 2 × 2 supercell of graphene using twist-averaged boundary conditions (TABC)27 together with the structure factor S(k) correction28 (see Supplementary Fig. 3) to reduce the finite-size error. The results are plotted in Fig. 2d along with the experimental value29: our neural network describes graphene well, yielding a cohesive energy within 0.1 eV/atom of the experimental reference (see Supplementary Table 6). We also plot the result with periodic boundary conditions (PBC) at the Γ point only, which deviates from experiment by 1.25 eV/atom.

Lithium hydride crystal

For a three-dimensional system, we consider the LiH crystal with the rock-salt structure (Fig. 2e), another benchmark system for accurate ab initio methods6,30,31. Although it consists only of light elements, LiH displays both ionic and covalent bonding character as the lattice constant changes. Using our neural network, we first simulate the equation of state of LiH on a 2 × 2 × 2 supercell, as shown in Fig. 2f. In addition, we apply a standard finite-size correction based on Hartree–Fock calculations of a large supercell (see Supplementary Fig. 5). Fig. 2f also shows the Birch–Murnaghan fit to the equation of state, from which we obtain thermodynamic quantities such as the cohesive energy, the bulk modulus, and the equilibrium lattice constant of LiH. As shown in the inset, these quantities agree very well with experimental data30 (see Supplementary Tables 8 and 9).
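A Birch–Murnaghan fit of this kind can be done with ordinary linear least squares, because the third-order Birch–Murnaghan energy is a cubic polynomial in x = V^(−2/3). The NumPy sketch below uses that observation; it is one possible way to perform the fit (not necessarily the procedure used for Fig. 2f), the function names are hypothetical, and units are left to the caller (e.g., Hartree and Bohr³, in which case B0 in Hartree/Bohr³ multiplied by about 2.94 × 10⁴ gives GPa).

```python
import numpy as np

def birch_murnaghan(V, E0, V0, B0, B0p):
    """Third-order Birch-Murnaghan energy E(V)."""
    eta = (V0 / np.asarray(V, dtype=float)) ** (2.0 / 3.0)
    return E0 + 9.0 * V0 * B0 / 16.0 * ((eta - 1.0) ** 3 * B0p
                                         + (eta - 1.0) ** 2 * (6.0 - 4.0 * eta))

def fit_birch_murnaghan(V, E):
    """Fit E(V) and return (E0, V0, B0, B0').

    The third-order BM form is a cubic polynomial in x = V^(-2/3), so a linear
    least-squares cubic fit in x is equivalent to a BM fit.
    """
    V = np.asarray(V, dtype=float)
    x = V ** (-2.0 / 3.0)
    p = np.polynomial.Polynomial.fit(x, E, deg=3)      # rescaled internally for conditioning
    dp, d2p, d3p = p.deriv(1), p.deriv(2), p.deriv(3)
    # Equilibrium: dE/dx = 0 with positive curvature in x (an energy minimum).
    roots = [z.real for z in np.atleast_1d(dp.roots()) if abs(z.imag) < 1e-12]
    x0 = min((z for z in roots if d2p(z) > 0), key=p)
    V0 = x0 ** -1.5
    # Chain rule for x(V) = V^(-2/3); terms with dE/dx vanish at x0.
    x1 = -2.0 / 3.0 * V0 ** (-5.0 / 3.0)               # dx/dV
    x2 = 10.0 / 9.0 * V0 ** (-8.0 / 3.0)               # d2x/dV2
    E2 = d2p(x0) * x1 ** 2                             # d2E/dV2 at V0
    E3 = d3p(x0) * x1 ** 3 + 3.0 * d2p(x0) * x1 * x2  # d3E/dV3 at V0
    B0 = V0 * E2                                       # B = V d2E/dV2
    B0p = -1.0 - V0 * E3 / E2                          # B' = dB/dP with P = -dE/dV
    return p(x0), V0, B0, B0p
```

Energies generated by `birch_murnaghan` with known parameters are recovered by `fit_birch_murnaghan` to numerical precision, which is a convenient check before fitting noisy QMC data.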

For further validation, we have also directly computed the 3 × 3 × 3 supercell of LiH at its equilibrium lattice constant of 4.061 Å, which contains 108 electrons. To the best of our knowledge, this is the largest electronic system computed with a high-quality neural network ansatz. The 3 × 3 × 3 supercell calculation predicts a total energy of −8.160 Hartree and a cohesive energy of −4.770 eV per unit cell of LiH after the finite-size correction (see Supplementary Table 10), very close to the experimental value of −4.759 eV30.

Homogeneous electron gas

In addition to solids containing nuclei, our computational framework can be applied straightforwardly to model systems such as the homogeneous electron gas (HEG). The HEG has long been studied to understand the fundamental behavior of metals and electronic phase transitions32, and several seminal ab initio works have reported its energy at different densities21,22,32,33,34,35. Recently, two other works have extended neural network ansätze to study the HEG21,22. Although our framework was designed independently for solids, the networks in this work and in refs. 21, 22 employ similar ideas. Different physics-inspired envelope functions and periodic features are used in these works, suited to solids and to the homogeneous electron gas, respectively. We compare these networks with ours on the HEG and observe consistent performance, which further supports the effectiveness of neural network-based QMC. In this section, we present results for a simple cubic cell containing 54 electrons in a closed-shell configuration, the largest HEG system studied in this work (Fig. 2g). More results and comparisons with other works on smaller systems are discussed in the section “Network comparison” and Supplementary Table 13.
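For a closed-shell HEG in a simple cubic box, a standard choice of occupied k points (the restricted plane-wave Slater determinant) fills the lowest-|k| shells, with k = 2πn/L for integer vectors n. The sketch below assumes a cubic cell and equal numbers of up- and down-spin electrons; the helper names are hypothetical. It reproduces the shell counts behind the closed-shell sizes discussed here: 7 = 1 + 6 plane waves per spin for 14 electrons and 27 = 1 + 6 + 12 + 8 per spin for 54 electrons.

```python
import itertools
import numpy as np

def cubic_box_length(n_elec, rs):
    """Side L of a cubic cell holding n_elec electrons at Wigner-Seitz radius rs (Bohr)."""
    return (4.0 * np.pi * n_elec / 3.0) ** (1.0 / 3.0) * rs

def closed_shell_kpoints(n_per_spin, box_length, n_max=4):
    """The n_per_spin lowest-|k| plane waves k = 2 pi n / L of a cubic box.

    Raises ValueError for an open-shell filling. n_max must be large enough that
    every shell up to the requested one is complete (n_max=4 covers |n|^2 <= 16).
    """
    ints = range(-n_max, n_max + 1)
    n_vecs = np.array(list(itertools.product(ints, repeat=3)), dtype=float)
    n2 = np.sum(n_vecs ** 2, axis=1)
    order = np.argsort(n2, kind="stable")
    n_vecs, n2 = n_vecs[order], n2[order]
    # Closed shell: the first unoccupied vector lies on a strictly larger |n|^2 shell.
    if n2[n_per_spin] == n2[n_per_spin - 1]:
        raise ValueError(f"{n_per_spin} electrons per spin gives an open shell")
    return 2.0 * np.pi / box_length * n_vecs[:n_per_spin]
```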

Figure 2h shows the calculated correlation error for the 54-electron HEG at six densities from rs = 0.5 Bohr to 20.0 Bohr. State-of-the-art results, namely VMC with backflow correlation (BF)33, distinguishable cluster with double excitations (DCD)34, and transcorrelated full configuration interaction quantum Monte Carlo (TC-FCIQMC)35, are also plotted for comparison, and the BF-DMC result is used as the reference energy for the correlation error. Overall, our neural network performs very well, with an error below 1% over a wide range of densities (see Supplementary Table 14). The correlation error generally increases as the density decreases and correlation effects become stronger, a trend that also appears in the DCD calculations.
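The correlation error plotted in Fig. 2h follows directly from its definition, [1 − (E − E_HF)/(E_ref − E_HF)] × 100%. A minimal Python helper (the name is hypothetical) makes the sign convention explicit:

```python
def correlation_error(e, e_hf, e_ref):
    """Correlation error in percent: [1 - (E - E_HF) / (E_ref - E_HF)] * 100.

    0 means the reference correlation energy is fully recovered; negative values
    mean that E lies below the reference energy.
    """
    return (1.0 - (e - e_hf) / (e_ref - e_hf)) * 100.0
```

With hypothetical energies E_HF = −1.0, E_ref = −1.1, and E = −1.05 (any consistent unit), the function returns 50%, i.e., half of the reference correlation energy is missed.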

Electron density

Besides the total energy, the electron density is another key quantity of solid systems. For example, it is crucial for characterizing different solids, such as distinguishing insulators from conductors and ionic from covalent crystals. In DFT, the one-to-one correspondence between the ground-state electron density and the many-body wavefunction, proved by Hohenberg and Kohn in 1964, establishes the electron density as a fundamental quantity of materials. However, a notable study found that while newer DFT functionals improve energies, the resulting densities can deviate from the exact ones36. With our accurate neural network wavefunction, we can also obtain accurate electron densities of solids and thereby provide valuable benchmarks and guidance for method development.

A conductor features mobile electrons that carry a macroscopic current under an external electric field. In contrast, electrons in an insulator are localized, which leads to a large electrical resistance. As an example, Fig. 3 shows the calculated electron density of the hydrogen chain at two different bond lengths. For the compressed hydrogen chain (L = 2 Bohr), the electron density is rather uniform with small fluctuations. As the chain is stretched, the electrons become more localized and the density profile shows larger variations. This observation is consistent with the well-known insulator–conductor transition of the hydrogen chain as the bond length varies. To characterize the transition more quantitatively, we further calculate the complex polarization Z as the order parameter of the insulator–conductor transition37. A conducting state is characterized by a vanishing modulus, ∣Z∣ ≈ 0, while an insulating state has a finite ∣Z∣ close to 1. According to our results, the insulator–conductor transition of the hydrogen chain occurs at a bond length of around 3 Bohr, consistent with previous studies37.

Ionic and covalent bonds are the most fundamental chemical bonds in solids. Although the physical pictures of these two bonding types are very different, both originate from the behavior of electrons as the “quantum glue”, and the electron density distribution offers a simple way to visualize them. Here we calculate the electron densities of diamond-structured Si, rock-salt NaCl, and LiH crystals at their equilibrium geometries. They are representative covalent and ionic crystals and have also been investigated by other high-level wavefunction methods, e.g., AFQMC38. Note that in the calculations of NaCl and Si, correlation-consistent effective core potentials (ccECP) are employed to reduce the cost; they replace the chemically inert core electrons while retaining the behavior of the active valence electrons15,39.

The electron density of diamond-structured Si in its \((01\bar{1})\) plane is plotted in Fig. 4b. The valence electrons are shared by neighboring Si atoms, forming clear Si–Si covalent bonds. In contrast, in the NaCl crystal the valence electrons are localized around the atoms, as Fig. 4c shows: essentially all the valence electrons are attracted to the Cl atoms, forming effective Na+ and Cl− ions in the crystal. The electron density of the LiH crystal is plotted in Fig. 4d. LiH is intermediate between a typical ionic and a typical covalent crystal, and according to our network the electrons are nearly equally distributed around the Li and H atoms. Bader charge analysis40 shows that the atoms in the crystal become Li0.67+ and H0.67− ions (resolution ~0.015 Å), which slightly deviates from the ideal closed-shell ionic configuration (see Supplementary Note 7 for more details).

a Structures of the solids, with the lattice planes used for plotting electron densities indicated. b Electron density of diamond-structured Si in its \((01\bar{1})\) plane; ccECP[Ne] is employed, and the lattice constant of Si is 5.42 Å. c Electron density of the NaCl crystal in its xy-plane; ccECP[Ne] is employed, and the lattice constant of NaCl is 5.7 Å. d Electron density of the LiH crystal in its xy-plane; the lattice constant of LiH is 4.0 Å.

Network comparison

In refs. 21, 22, neural networks are also used to simulate the homogeneous electron gas, with a different choice of periodic feature functions. In Fig. 5 we plot the correlation error computed for the 14-electron HEG, which can be compared with the results of those works. All three networks go beyond the BF-DMC level for high-density systems. For all systems tested, our correlation errors are about 2% with the TC-FCIQMC result as the reference35, whereas those of refs. 21, 22 are within 1%. This is understandable, because the networks of refs. 21, 22 are specifically designed for the HEG and can therefore achieve slightly better accuracy than ours. In their works, multiple phase factors eik⋅r are used in the constructed orbitals, which improves the expressiveness of the network. In comparison, our network contains an additional exponential decay term that models the attraction between nuclei and electrons in solids (see Methods section for more details). Furthermore, the choice of periodic distance and the domain of the constructed wavefunction (complex- or real-valued) also differ among the three works, which may contribute to the differences in performance. In the future, it would be interesting to combine the insights from these three works to design better network ansätze for periodic systems.

Metallic lithium

We have also carried out preliminary calculations on metallic lithium. Real metals remain notoriously difficult for accurate wavefunction approaches7,41,42,43,44. Because the gap vanishes, the occupations entering the Brillouin-zone integral are discontinuous at the Fermi surface, and consequently a significantly larger simulation cell is required for metals than for insulators to reach the thermodynamic limit. Shortcuts that employ a special twist angle have been proposed to simulate metals7,43, which help to reduce the simulation size and the finite-size error. Here we employ our network to simulate lithium in the body-centered cubic (bcc) structure, a typical metal with zero gap, using a 2 × 2 × 2 conventional cell of bcc-Li at the Γ point (see Supplementary Table 11). Supplementary Table 12 lists the computed total and cohesive energies. As expected, the cohesive energy error for lithium with such a limited supercell is larger than for non-metallic solids such as LiH, and further developments are needed to handle the large finite-size errors in metals.

Discussion

The construction of wavefunctions for solid systems is a crucial but unsolved problem in the neural network community. The core mechanism of our neural network is the periodic distance feature, which elegantly promotes molecular neural networks to their periodic counterparts while avoiding time-consuming lattice summations. Given the high accuracy obtained in this work, our neural network can be further applied to more delicate physics and materials problems, such as phase transitions of solids, surfaces, interfaces, and disordered systems, to name just a few. Our ansatz also extends flexibly to other neural networks and integrates easily with traditional computational techniques. The many-body wavefunction learned by the neural network may provide further physical and chemical insight into emergent phenomena in complex materials.

For further development of neural network-based QMC, the most crucial task is to enlarge the simulation size while retaining reasonable accuracy, which would allow more accurate simulations of metals and high-temperature superconductors. Employing pseudopotentials helps to enlarge the simulation size15, but a better solution is a more efficient neural network, and related work is in progress.

Methods

Supercell approximation

Simulating a solid requires solving the Schrödinger equation of many electrons in a large bulk. The supercell approximation is usually adopted to simplify the problem: a finite number of electrons and nuclei is considered with periodic boundary conditions, and the Hamiltonian reads

\[\hat{H}_S=-\frac{1}{2}\sum_i\nabla_i^2+\frac{1}{2}\sum_{\mathbf{L}_S}{\sum_{i,j}}'\frac{1}{|\mathbf{r}_i-\mathbf{r}_j+\mathbf{L}_S|}-\sum_{\mathbf{L}_S}\sum_{i,I}\frac{Z_I}{|\mathbf{r}_i-\mathbf{R}_I+\mathbf{L}_S|}+\frac{1}{2}\sum_{\mathbf{L}_S}{\sum_{I,J}}'\frac{Z_IZ_J}{|\mathbf{R}_I-\mathbf{R}_J+\mathbf{L}_S|}, \tag{3}\]

where ri denotes the position of the ith electron in the supercell, RI and ZI are the position and charge of the Ith nucleus, and {LS} is the set of supercell lattice vectors, which is usually a subset of the primitive-cell lattice vectors {Lp}. To simulate the real environment of electrons in solids, the interactions between the particles and their periodic images are also included in \(\hat{H}_S\), and the prime on a summation means that the i = j (or I = J) terms are omitted for LS = 0.

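The supercell Hamiltonian \(\hat{H}_S\) is invariant under the translation of any single electron by a vector in {LS}, as well as under a simultaneous translation of all electrons by a vector in {Lp}. As a consequence, the ground-state wavefunction ψ must satisfy two periodicity conditions45,

\[\begin{aligned}\psi(\mathbf{r}_1+\mathbf{L}_S,\mathbf{r}_2,\ldots,\mathbf{r}_N)&=e^{i\mathbf{k}_S\cdot\mathbf{L}_S}\,\psi(\mathbf{r}_1,\mathbf{r}_2,\ldots,\mathbf{r}_N),\\ \psi(\mathbf{r}_1+\mathbf{L}_p,\mathbf{r}_2+\mathbf{L}_p,\ldots,\mathbf{r}_N+\mathbf{L}_p)&=e^{i\mathbf{k}_p\cdot\mathbf{L}_p}\,\psi(\mathbf{r}_1,\mathbf{r}_2,\ldots,\mathbf{r}_N),\end{aligned} \tag{4}\]

where kS and kp denote the crystal momenta reduced to the first Brillouin zone of the supercell and the primitive cell, respectively. Eq. (4) and the antisymmetry condition together form the fundamental requirements on ψ. As the size of the supercell increases, the simulation results gradually converge to the thermodynamic limit of the real solid.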

Wavefunction ansatz

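In conventional QMC simulations of solids, a Hartree–Fock-type ansatz composed of Bloch functions is often used, which reads

\[\psi(\mathbf{r}^{\uparrow},\mathbf{r}^{\downarrow})=\mathrm{Det}\big[e^{i\mathbf{k}_j\cdot\mathbf{r}_i^{\uparrow}}u_{\mathbf{k}_j}(\mathbf{r}_i^{\uparrow})\big]\,\mathrm{Det}\big[e^{i\mathbf{k}_j\cdot\mathbf{r}_i^{\downarrow}}u_{\mathbf{k}_j}(\mathbf{r}_i^{\downarrow})\big]. \tag{5}\]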

To satisfy Eq. (4), the ki in the determinant should lie on the grid of supercell reciprocal lattice vectors {GS} offset by kS, within the first Brillouin zone of the primitive cell. Moreover, the uk functions in Eq. (5) should be invariant under translations by the primitive-cell lattice vectors,

\[u_{\mathbf{k}}(\mathbf{r}+\mathbf{L}_p)=u_{\mathbf{k}}(\mathbf{r}). \tag{6}\]
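The allowed ki can be enumerated in fractional coordinates of the primitive reciprocal lattice. The sketch below assumes a diagonal supercell (n1 × n2 × n3 copies of the primitive cell), so that the supercell reciprocal vectors are b_i^p/n_i, and a twist kS given in units of those vectors; the function name is hypothetical. Every returned k satisfies e^{ik·L_S} = e^{ik_S·L_S}, the single-electron condition in Eq. (4) for an orbital whose u is lattice-periodic.

```python
import itertools
import numpy as np

def supercell_kpoints(b_prim, ndiv, twist=(0.0, 0.0, 0.0)):
    """k = k_S + G_S folded into the primitive Brillouin zone (diagonal supercell).

    b_prim : (3, 3) array whose rows are the primitive reciprocal vectors b_i^p
    ndiv   : (n1, n2, n3) supercell multiplicities along a_1, a_2, a_3
    twist  : k_S in fractional units of the supercell reciprocal vectors b_i^p / n_i
    """
    ndiv = np.asarray(ndiv)
    frac = []
    for m in itertools.product(*(range(n) for n in ndiv)):
        f = (np.asarray(m) + np.asarray(twist)) / ndiv   # primitive fractional coordinates
        frac.append((f + 0.5) % 1.0 - 0.5)               # fold into [-1/2, 1/2)
    return np.asarray(frac) @ b_prim                     # Cartesian k vectors
```

The number of returned points equals the number of primitive cells in the supercell, which is why a 2 × 2 × 2 supercell samples eight k points of the primitive Brillouin zone.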

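Following the strategy of FermiNet10, the Bloch functions in Eq. (5) can be promoted to collective orbitals,

\[e^{i\mathbf{k}_j\cdot\mathbf{r}_i}\,u_{\mathbf{k}_j}(\mathbf{r}_i)\;\longrightarrow\;e^{i\mathbf{k}_j\cdot\mathbf{r}_i}\,u_{\mathbf{k}_j}(\mathbf{r}_i;\mathbf{r}_{\neq i}), \tag{7}\]

where r≠i denotes all the electron coordinates except ri. These collective orbitals are constructed to be equivariant under electron permutations P,

\[u_{\mathbf{k}_j}(\mathbf{r}_i;P\,\mathbf{r}_{\neq i})=u_{\mathbf{k}_j}(\mathbf{r}_i;\mathbf{r}_{\neq i}), \tag{8}\]

which, combined with the Slater determinant, ensures the antisymmetry of the electronic wavefunction. Moreover, we use the periodic distance features d(r) of Eq. (2) in place of the ordinary ∣r∣ in the molecular neural network. The periodic functions f, g used in Eq. (2) read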

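and their arguments ω are reduced into [−π, π]. Eq. (6) can then be satisfied without introducing discontinuities20. The constructed periodic features {∑ig(ωi)ai, d(r)} are fed into FermiNet10 to build a periodic wavefunction. Specifically, the electron–atom features he and electron–electron features \(\mathbf{h}_{e,e'}\) are constructed as follows,

\[\mathbf{h}_e=\Big\{\sum_{i=1}^{3}g(\omega_{e,I}^{i})\,\mathbf{a}_i^{p},\;d(\omega_{e,I})\Big\},\qquad\mathbf{h}_{e,e'}=\Big\{\sum_{i=1}^{3}g(\omega_{e,e'}^{i})\,\mathbf{a}_i^{S},\;d(\omega_{e,e'})\Big\}, \tag{10}\]

where \(\omega_{e,I},\,\omega_{e,e'}\) are defined as

\[\omega_{e,I}=(\mathbf{r}_e-\mathbf{R}_I)\cdot\{\mathbf{b}_1^{p},\mathbf{b}_2^{p},\mathbf{b}_3^{p}\},\qquad\omega_{e,e'}=(\mathbf{r}_e-\mathbf{r}_{e'})\cdot\{\mathbf{b}_1^{S},\mathbf{b}_2^{S},\mathbf{b}_3^{S}\}, \tag{11}\]

and the superscripts p and S denote the primitive cell and the supercell, respectively. A permutation-equivariant feature \(\mathbf{f}_e^{\alpha}\) is further constructed from \(\mathbf{h}_e,\,\mathbf{h}_{e,e'}\),

\[\mathbf{f}_e^{\alpha}=\mathrm{concat}\big(\mathbf{h}_e^{\alpha},\,\mathbf{g}^{\uparrow},\,\mathbf{g}^{\downarrow},\,\mathbf{g}_e^{\alpha,\uparrow},\,\mathbf{g}_e^{\alpha,\downarrow}\big), \tag{12}\]

where α denotes the spin index (↑, ↓). g↑, g↓ and \(\mathbf{g}_e^{\alpha,\uparrow},\,\mathbf{g}_e^{\alpha,\downarrow}\) read

\[\mathbf{g}^{\uparrow}=\frac{1}{n^{\uparrow}}\sum_{e}\mathbf{h}_e^{\uparrow},\quad\mathbf{g}^{\downarrow}=\frac{1}{n^{\downarrow}}\sum_{e}\mathbf{h}_e^{\downarrow},\quad\mathbf{g}_e^{\alpha,\uparrow}=\frac{1}{n^{\uparrow}}\sum_{e'}\mathbf{h}_{e,e'}^{\alpha,\uparrow},\quad\mathbf{g}_e^{\alpha,\downarrow}=\frac{1}{n^{\downarrow}}\sum_{e'}\mathbf{h}_{e,e'}^{\alpha,\downarrow}. \tag{13}\]

\(\mathbf{f}_e^{\alpha}\) and \(\mathbf{h}_{e,e'}\) are then passed recursively through a series of fully connected layers,

\[\mathbf{h}_e^{l+1,\alpha}=\tanh\big(\mathbf{V}^{l}\cdot\mathbf{f}_e^{l,\alpha}+\mathbf{b}^{l}\big)+\mathbf{h}_e^{l,\alpha},\qquad\mathbf{h}_{e,e'}^{l+1,\alpha,\beta}=\tanh\big(\mathbf{W}^{l}\cdot\mathbf{h}_{e,e'}^{l,\alpha,\beta}+\mathbf{c}^{l}\big)+\mathbf{h}_{e,e'}^{l,\alpha,\beta}, \tag{14}\]

where l denotes the layer index, and {Vl, bl} and {Wl, cl} denote the corresponding weights and biases of layer l.

The functions u in Eq. (7) are built from the output \(\mathbf{h}_e^{L}\) of the last (Lth) layer,

\[u_{i,e}^{\alpha}=\mathrm{Orb}_{i,\alpha}^{\mathrm{Re}}\cdot\mathbf{h}_e^{L}+i\,\mathrm{Orb}_{i,\alpha}^{\mathrm{Im}}\cdot\mathbf{h}_e^{L}, \tag{15}\]

where \(\mathrm{Orb}^{\mathrm{Re}}\) and \(\mathrm{Orb}^{\mathrm{Im}}\) denote the weight parameters of the real and imaginary parts, respectively.

Moreover, the u function is multiplied by a phase factor \(\exp(i\mathbf{k}\cdot\mathbf{r})\), which mimics Bloch functions and encodes the occupied k-point information from the HF calculation. Inspired by the tight-binding model in solid-state physics, a periodic generalized envelope term \(\exp[-d(\mathbf{r})]\) is also added to the molecular orbitals to account for the attraction between nuclei and electrons. The final molecular orbitals ϕ read

\[\phi_{i,e}^{\alpha}=\exp(i\mathbf{k}_i\cdot\mathbf{r}_e^{\alpha})\,u_{i,e}^{\alpha}\sum_{I}\pi_i^{I,\alpha}\exp\big[-\sigma_i^{I,\alpha}d(\omega_{e,I})\big]. \tag{16}\]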

For an overall sketch of the neural network, see section “Pseudocode of network”. Note that the distance between electrons and nuclei is omitted for the HEG system since it does not contain any nucleus. Specific hyperparameters of each system are listed in Supplementary Note 1.

Pseudocode of network

For clarity, the network is summarized in the following pseudocode:

Require: electron positions \(\{\mathbf{r}_1^{\uparrow},\cdots,\mathbf{r}_{n^{\uparrow}}^{\uparrow},\mathbf{r}_1^{\downarrow},\cdots,\mathbf{r}_{n^{\downarrow}}^{\downarrow}\}\)

Require: nuclear positions {RI} in the primitive cell

Require: lattice vectors \(\{\mathbf{a}_1^{p,S},\mathbf{a}_2^{p,S},\mathbf{a}_3^{p,S}\}\) of the primitive cell and supercell

Require: reciprocal lattice vectors \(\{\mathbf{b}_1^{p,S},\mathbf{b}_2^{p,S},\mathbf{b}_3^{p,S}\}\) of the primitive cell and supercell

Require: occupied {ki} points provided by the Hartree–Fock method

For each electron e, atom I, and electron e′:

\(\omega_{e,I}=(\mathbf{r}_e-\mathbf{R}_I)\cdot\{\mathbf{b}_1^{p},\mathbf{b}_2^{p},\mathbf{b}_3^{p}\}\)

\(\omega_{e,e'}=(\mathbf{r}_e-\mathbf{r}_{e'})\cdot\{\mathbf{b}_1^{S},\mathbf{b}_2^{S},\mathbf{b}_3^{S}\}\)

End For

For each electron e:

\(\mathbf{h}_e=\{\sum_{i=1}^{3}g(\omega_{e,I}^{i})\,\mathbf{a}_i^{p},\,d(\omega_{e,I})\}\), concatenated over all atoms I

\(\mathbf{h}_{e,e'}=\{\sum_{i=1}^{3}g(\omega_{e,e'}^{i})\,\mathbf{a}_i^{S},\,d(\omega_{e,e'})\}\) for each electron e′

End For

For each layer l:

\(\mathbf{g}^{l,\uparrow}=\frac{1}{n^{\uparrow}}\sum_{e}\mathbf{h}_e^{l,\uparrow}\)

\(\mathbf{g}^{l,\downarrow}=\frac{1}{n^{\downarrow}}\sum_{e}\mathbf{h}_e^{l,\downarrow}\)

For each electron e, spin α:

\(\mathbf{g}_e^{l,\alpha,\uparrow}=\frac{1}{n^{\uparrow}}\sum_{e'}\mathbf{h}_{e,e'}^{l,\alpha,\uparrow}\)

\(\mathbf{g}_e^{l,\alpha,\downarrow}=\frac{1}{n^{\downarrow}}\sum_{e'}\mathbf{h}_{e,e'}^{l,\alpha,\downarrow}\)

\(\mathbf{f}_e^{l,\alpha}=\mathrm{concat}(\mathbf{h}_e^{l,\alpha},\,\mathbf{g}^{l,\uparrow},\,\mathbf{g}^{l,\downarrow},\,\mathbf{g}_e^{l,\alpha,\uparrow},\,\mathbf{g}_e^{l,\alpha,\downarrow})\)

\(\mathbf{h}_e^{l+1,\alpha}=\tanh(\mathbf{V}^{l}\cdot\mathbf{f}_e^{l,\alpha}+\mathbf{b}^{l})+\mathbf{h}_e^{l,\alpha}\)

\(\mathbf{h}_{e,e'}^{l+1,\alpha,\beta}=\tanh(\mathbf{W}^{l}\cdot\mathbf{h}_{e,e'}^{l,\alpha,\beta}+\mathbf{c}^{l})+\mathbf{h}_{e,e'}^{l,\alpha,\beta}\)

End For

End For

For each orbital i:

For each electron e, spin α:

\(u_{i,e}^{\alpha}=\mathrm{Orb}_{i,\alpha}^{\mathrm{Re}}\cdot\mathbf{h}_e^{L}+i\,\mathrm{Orb}_{i,\alpha}^{\mathrm{Im}}\cdot\mathbf{h}_e^{L}\)

\(p_{i,e}^{\alpha}=\exp(i\mathbf{k}_i\cdot\mathbf{r}_e^{\alpha})\)

\(\mathrm{enve}_{i,e}^{\alpha}=\sum_{I}\pi_i^{I,\alpha}\exp[-\sigma_i^{I,\alpha}d(\omega_{e,I})]\)

\(\phi_{i,e}^{\alpha}=p_{i,e}^{\alpha}\,u_{i,e}^{\alpha}\,\mathrm{enve}_{i,e}^{\alpha}\)

End For

End For

\(\psi=\mathrm{Det}[\phi^{\uparrow}]\,\mathrm{Det}[\phi^{\downarrow}]\)
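The pseudocode can be turned into a small runnable NumPy sketch that follows the same steps: periodic electron–atom and electron–electron features, spin-averaged features, tanh layers, complex orbitals with phase factors and envelopes, and two determinants. It rests on several assumptions that are not taken from this work: stand-in periodic functions f = 1 − cos ω and g = sin ω with M_ij = a_i·a_j/(4π²), a 1/(2π) scaling of the vector feature, residual connections only where layer widths match, random untrained weights, and the hypothetical helper names `init_params` and `psi`. It illustrates data flow and symmetry properties, not the published implementation.

```python
import numpy as np

def periodic_features(disp, a, b):
    """{sum_i g(omega_i) a_i / (2 pi), d} for displacements disp of shape (..., 3)."""
    omega = disp @ b.T
    f, g = 1.0 - np.cos(omega), np.sin(omega)          # stand-in periodic functions
    metric = a @ a.T / (4.0 * np.pi ** 2)
    d2 = (np.einsum("...i,ij,...j->...", f, metric, f)
          + np.einsum("...i,ij,...j->...", g, metric, g))
    vec = g @ a / (2.0 * np.pi)                         # ~ disp near the origin
    return np.concatenate([vec, np.sqrt(np.maximum(d2, 0.0))[..., None]], axis=-1)

def init_params(rng, n_atom, n_up, n_dn, w1=16, w2=8, n_layers=2):
    """Random (untrained) weights for a small FermiNet-style periodic network."""
    layers, d1, d2 = [], 4 * n_atom, 4
    for _ in range(n_layers):
        f_dim = 3 * d1 + 2 * d2                         # concat(h_e, g_up, g_dn, g_e_up, g_e_dn)
        layers.append((rng.normal(size=(f_dim, w1)) / np.sqrt(f_dim), np.zeros(w1),
                       rng.normal(size=(d2, w2)) / np.sqrt(d2), np.zeros(w2)))
        d1, d2 = w1, w2
    orbs = [{"re": rng.normal(size=(d1, n)), "im": rng.normal(size=(d1, n)),
             "pi": np.ones((n_atom, n)), "sigma": np.full((n_atom, n), 0.5)}
            for n in (n_up, n_dn)]
    return {"layers": layers, "orbs": orbs}

def psi(params, r, n_up, atoms, a_p, a_s, kpts):
    """psi = Det[phi_up] Det[phi_dn]; electrons ordered spin-up first; kpts = (k_up, k_dn)."""
    b_p = 2.0 * np.pi * np.linalg.inv(a_p).T
    b_s = 2.0 * np.pi * np.linalg.inv(a_s).T
    n = len(r)
    ea = periodic_features(r[:, None, :] - atoms[None, :, :], a_p, b_p)  # (n, n_atom, 4)
    h1 = ea.reshape(n, -1)                                               # concat over atoms
    h2 = periodic_features(r[:, None, :] - r[None, :, :], a_s, b_s)      # (n, n, 4)
    up, dn = slice(0, n_up), slice(n_up, n)
    for V, bv, W, cw in params["layers"]:
        f = np.concatenate([h1,
                            np.broadcast_to(h1[up].mean(0), h1.shape),  # g_up
                            np.broadcast_to(h1[dn].mean(0), h1.shape),  # g_dn
                            h2[:, up].mean(1), h2[:, dn].mean(1)], axis=1)
        new1, new2 = np.tanh(f @ V + bv), np.tanh(h2 @ W + cw)
        # Residual connections only when widths match (not in the first layer).
        h1 = new1 + h1 if new1.shape == h1.shape else new1
        h2 = new2 + h2 if new2.shape == h2.shape else new2
    value = 1.0 + 0.0j
    for sl, orb, k in zip((up, dn), params["orbs"], kpts):
        u = h1[sl] @ orb["re"] + 1j * (h1[sl] @ orb["im"])
        phase = np.exp(1j * (r[sl] @ k.T))
        d = ea[sl, :, -1]                                                # periodic e-atom distances
        env = (orb["pi"] * np.exp(-orb["sigma"] * d[:, :, None])).sum(axis=1)
        value *= np.linalg.det(phase * u * env)
    return value
```

Swapping two same-spin electrons flips the sign of the output, and translating any electron by a supercell lattice vector leaves it unchanged when the k points lie on the supercell reciprocal lattice, mirroring the antisymmetry and periodicity requirements discussed in the Methods.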

Neural network optimization

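The parameters θ of the neural network are optimized to minimize the energy expectation value ⟨El⟩, whose gradient ∇θ⟨El⟩ reads

\[\nabla_{\theta}\langle E_l\rangle=2\,\mathrm{Re}\Big\langle\big(E_l-\langle E_l\rangle\big)\,\nabla_{\theta}\ln\psi^{*}\Big\rangle, \tag{17}\]

where \(E_l=\hat{H}\psi/\psi\) denotes the local energy of the neural network ansatz ψ and the average is taken over samples drawn from ∣ψ∣². Besides plain energy minimization, stochastic reconfiguration46 has also been widely adopted and shown to be much more efficient; it preconditions the gradient as

\[\delta\theta=F^{-1}\nabla_{\theta}\langle E_l\rangle,\qquad F_{ij}=\Big\langle\frac{\partial\ln\psi^{*}}{\partial\theta_i}\frac{\partial\ln\psi}{\partial\theta_j}\Big\rangle-\Big\langle\frac{\partial\ln\psi^{*}}{\partial\theta_i}\Big\rangle\Big\langle\frac{\partial\ln\psi}{\partial\theta_j}\Big\rangle. \tag{18}\]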
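The gradient estimator can be checked on a problem with a known answer. The NumPy sketch below implements the covariance form 2 Re⟨(E_l − ⟨E_l⟩)(∂θ ln ψ)*⟩ and applies it to a hypothetical one-dimensional harmonic oscillator, H = −½ d²/dx² + x²/2, with trial function ψ = exp(−αx²). For this toy problem E(α) = α/2 + 1/(8α), and samples of ∣ψ∣² can be drawn exactly from a Gaussian instead of by Metropolis sampling; the function names are illustrative.

```python
import numpy as np

def energy_gradient(e_loc, dlogpsi):
    """Monte Carlo estimate of grad <E_l> = 2 Re <(E_l - <E_l>) conj(d log psi / d theta)>.

    e_loc   : (n_samples,) local energies of samples drawn from |psi|^2
    dlogpsi : (n_samples, n_params) derivatives of log psi w.r.t. real parameters
    """
    centered = e_loc - e_loc.mean()
    return 2.0 * np.real(np.mean(centered[:, None] * np.conj(dlogpsi), axis=0))

def harmonic_oscillator_samples(alpha, n, rng):
    """Toy check: psi = exp(-alpha x^2), H = -1/2 d^2/dx^2 + x^2 / 2."""
    x = rng.normal(scale=np.sqrt(1.0 / (4.0 * alpha)), size=n)  # exact samples of |psi|^2
    e_loc = alpha + x ** 2 * (0.5 - 2.0 * alpha ** 2)           # H psi / psi
    dlogpsi = (-x ** 2)[:, None]                                # d log psi / d alpha
    return e_loc, dlogpsi
```

At α = 1/2 the local energy is constant, so the estimate is exactly zero (the zero-variance property); at other α it converges to the analytic derivative 1/2 − 1/(8α²).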

In this work, we adopt a modified Kronecker-factored approximate curvature (KFAC) optimizer, which approximates the Fisher information matrix F of the SR update as

where \(W_l\) denotes the weight parameters of layer l and vec denotes vectorization; \(a_l\) and \(e_l\) denote the activation and the sensitivity of layer l, respectively. Since the activation \(a_l\) is always real-valued, it carries no complex conjugation in the second line. The first term in the bracket of Eq. (19) is approximated as the Kronecker product of the expectation values, and the second term is omitted for simplicity.
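The estimators in this subsection can be illustrated with a small stdlib Python sketch. It assumes the standard VMC form of the energy gradient, \(2\,\mathrm{Re}\langle(E_l-\langle E_l\rangle)\,\nabla_\theta\ln\psi^*\rangle\), and shows Kronecker-factored preconditioning of a single dense layer using \((A\otimes B)^{-1}\mathrm{vec}(G)=\mathrm{vec}(B^{-1}GA^{-1})\) for symmetric A (column-major vec). All names are hypothetical; damping each factor separately (parameter `lam`) and placing the complex conjugate on the sensitivity factor are assumptions. This is neither the KFAC-JAX API nor the modified optimizer used in this work.

```python
from typing import List, Sequence

Matrix = List[List[complex]]


def energy_gradient(local_energies: Sequence[complex],
                    log_psi_grads: Sequence[Sequence[complex]]) -> List[float]:
    """2 Re < (E_l - <E_l>) * conj(d ln psi / d theta_k) > over Monte Carlo samples."""
    n = len(local_energies)
    e_mean = (sum(local_energies) / n).real  # the exact energy is real
    grad = []
    for k in range(len(log_psi_grads[0])):
        acc = sum((e - e_mean) * complex(o[k]).conjugate()
                  for e, o in zip(local_energies, log_psi_grads))
        grad.append(2.0 * (acc / n).real)
    return grad


def outer_mean(vectors: Sequence[Sequence[complex]], conj: bool) -> Matrix:
    """Sample mean of v v^H (conj=True) or v v^T (conj=False)."""
    dim = len(vectors[0])
    m = [[0j] * dim for _ in range(dim)]
    for v in vectors:
        for i in range(dim):
            for j in range(dim):
                m[i][j] += v[i] * (complex(v[j]).conjugate() if conj else v[j])
    return [[x / len(vectors) for x in row] for row in m]


def add_damping(m: Matrix, lam: float) -> Matrix:
    """Return m + lam * I (Tikhonov damping of one Kronecker factor)."""
    return [[x + (lam if i == j else 0.0) for j, x in enumerate(row)]
            for i, row in enumerate(m)]


def inverse(m: Matrix) -> Matrix:
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def kfac_precondition(grad_w: Matrix, activations, sensitivities,
                      lam: float = 1e-3) -> Matrix:
    """Approximate F^{-1} grad for one dense layer with weights of shape (out, in).

    Per sample d ln psi / dW = e a^T, so vec(e a^T) = a kron e and
    F ~ E[a a^T] kron E[e e^H]; a is real, so it is not conjugated.
    """
    a_fac = add_damping(outer_mean(activations, conj=False), lam)   # (in, in)
    e_fac = add_damping(outer_mean(sensitivities, conj=True), lam)  # (out, out)
    return matmul(matmul(inverse(e_fac), grad_w), inverse(a_fac))
```

The point of the factorization is cost: only an (in × in) and an (out × out) matrix are inverted per layer, instead of the full (in·out) × (in·out) block of F.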

Twist-averaged boundary condition

TABC is a conventional technique for reducing the finite-size error caused by the limited size of the supercell27. It averages results over different twists of the supercell boundary condition, i.e. different Bloch phases acquired by the wavefunction when an electron crosses the supercell boundary, which improves the convergence of the total energy. The formula reads

where 1.B.Z. denotes the first Brillouin zone of the supercell, and in practice the integral is approximated by a discrete sum over a Monkhorst-Pack mesh (see Supplementary Note 3.2 for more details).
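A minimal sketch of the discrete twist average, assuming equal weights and the standard Monkhorst-Pack coordinates \(u_r=(2r-q-1)/(2q)\), \(r=1,\dots,q\), along each axis, expressed as fractions of the supercell reciprocal lattice vectors. The function names are hypothetical, the per-twist energy function stands in for a full calculation at fixed twist, and symmetry reduction of the mesh is omitted.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Twist = Tuple[float, ...]


def monkhorst_pack(mesh: Sequence[int]) -> List[Twist]:
    """Monkhorst-Pack points u_r = (2r - q - 1) / (2q), r = 1..q, on each axis.

    Coordinates are fractions of the supercell reciprocal lattice vectors.
    """
    axes = [[(2 * r - q - 1) / (2 * q) for r in range(1, q + 1)] for q in mesh]
    return list(product(*axes))


def twist_average(energy_at_twist: Callable[[Twist], float],
                  mesh: Sequence[int]) -> float:
    """Equal-weight discrete sum replacing the integral over the supercell 1.B.Z."""
    twists = monkhorst_pack(mesh)
    return sum(energy_at_twist(k) for k in twists) / len(twists)
```

For example, `twist_average(run_at_twist, (2, 2, 2))` would average eight independent calculations, where `run_at_twist` is a hypothetical function returning the energy at one twist.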

Structure factor correction

Finite-size error can be further reduced via the structure factor \(S(\mathbf{k})\) correction28, which is usually used to correct the exchange-correlation potential \(V_{xc}\). The formula reads

where the limit \(\lim_{\mathbf{k}\to 0}\) is estimated in practice via interpolation (see Supplementary Note 3.4 for more details).
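The two ingredients named here, a Monte Carlo estimate of \(S(\mathbf{k})\) and an extrapolation to \(\mathbf{k}\to 0\), can be sketched as follows. Assumptions, all loudly hypothetical: S(k) is taken as \(\frac{1}{N}\langle|\rho_{\mathbf{k}}|^2\rangle\) with \(\rho_{\mathbf{k}}=\sum_j e^{-i\mathbf{k}\cdot\mathbf{r}_j}\) (some formulations subtract \(|\langle\rho_{\mathbf{k}}\rangle|^2\)); k should be a reciprocal lattice vector of the supercell; the quantity extrapolated is \(S(k)/k^2\), as in the usual Chiesa-type correction; and the linear fit in \(k^2\) is an illustrative choice, not the interpolation of Supplementary Note 3.4.

```python
import cmath
from typing import List, Sequence

Vec = Sequence[float]


def structure_factor(k: Vec, samples: List[List[Vec]]) -> float:
    """S(k) = < |rho_k|^2 > / N with rho_k = sum_j exp(-i k . r_j).

    `samples` holds Monte Carlo configurations, each a list of N electron positions.
    """
    total = 0.0
    for electrons in samples:
        rho_k = sum(cmath.exp(-1j * sum(ki * ri for ki, ri in zip(k, r)))
                    for r in electrons)
        total += abs(rho_k) ** 2 / len(electrons)
    return total / len(samples)


def extrapolate_s_over_k2(k_norms: Sequence[float], s_values: Sequence[float]) -> float:
    """Least-squares fit S(k)/k^2 = c0 + c1 k^2 and return c0, the k -> 0 value."""
    xs = [k * k for k in k_norms]
    ys = [s / (k * k) for k, s in zip(k_norms, s_values)]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    return y_mean - slope * x_mean
```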

Empirical correction formula

Empirical formulas are also commonly employed to reduce the finite-size error18, one of which reads

The simulation size accessible to high-accuracy methods is usually limited by their high computational cost. Hence, methods with a much more practical cost, such as HF, are usually used to give an a posteriori estimate of the finite-size error. All LiH results are corrected using this empirical formula with HF results in a cc-pVDZ basis (see Supplementary Note 4.3 for more details).
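One widely used empirical form, assumed here for illustration (it may differ in detail from the formula used in this work), adds the Hartree–Fock finite-size error to the correlated supercell energy: \(E_\infty \approx E_N + (E^{\mathrm{HF}}_\infty - E^{\mathrm{HF}}_N)\), where N labels the simulated supercell and ∞ a converged HF reference (large supercell or dense k mesh). The function name is hypothetical.

```python
def hf_finite_size_corrected(e_supercell: float, e_hf_supercell: float,
                             e_hf_converged: float) -> float:
    """E(inf) ~ E(N) + [E_HF(inf) - E_HF(N)].

    All energies must refer to the same unit (e.g. per formula unit); the HF
    difference serves as the estimate of the N-electron finite-size error.
    """
    return e_supercell + (e_hf_converged - e_hf_supercell)
```

The design assumption is that HF and the correlated method share most of their finite-size error, so the cheap HF difference can be transferred to the expensive calculation.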

Electron density analysis

The electron density ρ(r) is defined as

and in practice it is evaluated by accumulating Monte Carlo samples of electron positions on a uniform grid over the simulation cell. The complex polarization Z is defined as37

where \(r_{\parallel}\) denotes the projection of the electron coordinate onto the chain direction. In addition, Bader charge analysis40 is employed to estimate the charge assigned to each atom; its convergence test is shown in Supplementary Fig. 8.
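Both Monte Carlo estimators described above can be sketched in a few lines. Assumptions: electron positions for the density are stored as fractional coordinates of the simulation cell, and the complex polarization takes the standard Resta form \(Z=\langle\exp(\mathrm{i}\,\frac{2\pi}{L}\sum_j r_{\parallel,j})\rangle\) with L the cell length along the chain. Function names are hypothetical. A value of |Z| close to 1 signals localized (insulating) electrons, and |Z| close to 0 delocalized (metallic) ones.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def density_on_grid(samples: List[List[Sequence[float]]], grid: Tuple[int, int, int],
                    volume: float) -> List[List[List[float]]]:
    """Histogram electron positions (fractional coordinates) on a uniform grid.

    Returns rho[ix][iy][iz] in electrons per unit volume, normalized so that
    sum(rho) * voxel_volume equals the number of electrons per sample.
    """
    nx, ny, nz = grid
    counts = [[[0] * nz for _ in range(ny)] for _ in range(nx)]
    for electrons in samples:
        for frac in electrons:
            # Wrap into [0, 1) and guard against f % 1.0 rounding up to 1.0.
            idx = [min(int((f % 1.0) * n), n - 1) for f, n in zip(frac, grid)]
            counts[idx[0]][idx[1]][idx[2]] += 1
    voxel = volume / (nx * ny * nz)
    norm = 1.0 / (len(samples) * voxel)
    return [[[c * norm for c in row] for row in plane] for plane in counts]


def complex_polarization(samples: List[Sequence[float]], length: float) -> complex:
    """Z = < exp(i 2 pi / L * sum_j r_par_j) > over Monte Carlo samples."""
    total = 0j
    for r_par in samples:
        total += cmath.exp(2j * math.pi / length * sum(r_par))
    return total / len(samples)
```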

Workflow and computational details

This work builds on the open-source FermiNet47 and PyQMC48 codes on GitHub and uses the deep learning framework JAX49, which supports flexible and powerful complex-number computation. Ground-state energy calculations are performed with all electrons, except for diamond-structured Si and the NaCl crystal, which are simulated with the ccECP[Ne] effective core potential39. The neural network is pretrained against a Hartree–Fock ansatz obtained with the PySCF software50. The k points used are the occupied k points of a Hartree–Fock calculation in the cc-pVDZ basis on a Monkhorst-Pack mesh offset by \(\mathbf{k}_S\), with a mesh of the same size as the supercell. All expectation values over the distribution \(|\psi|^2\) are evaluated by Monte Carlo sampling, and the wavefunction is optimized to minimize the energy using the modified KFAC optimizer24 (see Supplementary Figs. 1, 2, 4, 6, 7). Ewald summation is used for the lattice sums in the energy calculation. After training has converged, the energy is evaluated in a separate inference phase.
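As an illustration of the Ewald technique mentioned above, here is a minimal sketch for classical point charges in a cubic cell, in Hartree atomic units. It is an assumption-laden stand-in, not the DeepSolid or PyQMC code: the paper's systems involve general lattices and electron coordinates, and the function name and the parameters `alpha` (screening), `n_real` and `n_recip` (image and G-vector cutoffs) are hypothetical. The energy is split into an erfc-screened real-space sum, a reciprocal-space sum over \(\mathbf{G}\neq 0\), and a self-interaction correction; the neutralizing-background term needed for charged cells is omitted.

```python
import cmath
import math
from itertools import product
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ewald_energy(charges: Sequence[float], frac_pos: Sequence[Vec3], box: float,
                 alpha: float, n_real: int = 2, n_recip: int = 5) -> float:
    """Ewald energy of a neutral set of point charges in a cubic cell of side `box`."""
    pos = [tuple(box * f for f in p) for p in frac_pos]
    volume = box ** 3
    n_ions = len(charges)

    # Real-space sum of erfc-screened Coulomb terms over nearby periodic images.
    e_real = 0.0
    for shift in product(range(-n_real, n_real + 1), repeat=3):
        for i in range(n_ions):
            for j in range(n_ions):
                if i == j and shift == (0, 0, 0):
                    continue  # no self term inside the home cell
                d = [pos[i][a] - pos[j][a] + box * shift[a] for a in range(3)]
                r = math.sqrt(sum(x * x for x in d))
                e_real += 0.5 * charges[i] * charges[j] * math.erfc(alpha * r) / r

    # Reciprocal-space sum over G = 2 pi m / box with m != 0.
    e_recip = 0.0
    for m in product(range(-n_recip, n_recip + 1), repeat=3):
        if m == (0, 0, 0):
            continue
        g = [2.0 * math.pi * mi / box for mi in m]
        g2 = sum(x * x for x in g)
        s_g = sum(q * cmath.exp(1j * sum(g[a] * p[a] for a in range(3)))
                  for q, p in zip(charges, pos))
        e_recip += (2.0 * math.pi / volume) * math.exp(-g2 / (4.0 * alpha ** 2)) / g2 * abs(s_g) ** 2

    # Remove the interaction of each Gaussian screening charge with itself.
    e_self = -alpha / math.sqrt(math.pi) * sum(q * q for q in charges)
    return e_real + e_recip + e_self


if __name__ == "__main__":
    na = [(0, 0, 0), (0, 0.5, 0.5), (0.5, 0, 0.5), (0.5, 0.5, 0)]
    cl = [(0.5, 0, 0), (0, 0.5, 0), (0, 0, 0.5), (0.5, 0.5, 0.5)]
    energy = ewald_energy([1.0] * 4 + [-1.0] * 4, na + cl, box=2.0, alpha=2.0)
    # Four NaCl pairs with nearest-neighbour distance box / 2 = 1 bohr.
    print(-energy / 4)
```

The printed value should be close to the NaCl Madelung constant, about 1.7476, which is a convenient check that the three terms are assembled consistently. The choice of `alpha` only shifts work between the real- and reciprocal-space sums; the total is independent of it once both cutoffs are converged.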

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The data generated in this study are provided in the Supplementary Information.

Code availability

The code for this work is available on GitHub (https://github.com/bytedance/DeepSolid).

References

Kohn, W. Nobel lecture: electronic structure of matter—wave functions and density functionals. Rev. Mod. Phys. 71, 1253–1266 (1999).

Martin, R. M. Electronic Structure: Basic Theory and Practical Methods (Cambridge Univ. Press, 2004).

Jones, R. O. Density functional theory: Its origins, rise to prominence, and future. Rev. Mod. Phys. 87, 897–923 (2015).

Kirkpatrick, J. et al. Pushing the frontiers of density functionals by solving the fractional electron problem. Science 374, 1385–1389 (2021).

Williams, K. T. et al. Direct comparison of many-body methods for realistic electronic Hamiltonians. Phys. Rev. X 10, 011041 (2020).

Booth, G. H., Grüneis, A., Kresse, G. & Alavi, A. Towards an exact description of electronic wavefunctions in real solids. Nature 493, 365–370 (2013).

Mihm, T. N. et al. A shortcut to the thermodynamic limit for quantum many-body calculations of metals. Nat. Comput. Sci. 1, 801–808 (2021).

Han, J., Zhang, L. & E, W. Solving many-electron Schrödinger equation using deep neural networks. J. Comput. Phys. 399, 108929 (2019).

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 355, 602–606 (2017).

Pfau, D., Spencer, J. S., Matthews, A. G. D. G. & Foulkes, W. M. C. Ab initio solution of the many-electron Schrödinger equation with deep neural networks. Phys. Rev. Res. 2, 033429 (2020).

Spencer, J. S., Pfau, D., Botev, A. & Foulkes, W. M. C. Better, faster fermionic neural networks. Preprint at arXiv:2011.07125 (2020).

Hermann, J., Schätzle, Z. & Noé, F. Deep-neural-network solution of the electronic Schrödinger equation. Nat. Chem. 12, 891–897 (2020).

Choo, K., Mezzacapo, A. & Carleo, G. Fermionic neural-network states for ab-initio electronic structure. Nat. Commun. 11, 2368 (2020).

Yoshioka, N., Mizukami, W. & Nori, F. Solving quasiparticle band spectra of real solids using neural-network quantum states. Commun. Phys. 4, 1–8 (2021).

Li, X., Fan, C., Ren, W. & Chen, J. Fermionic neural network with effective core potential. Phys. Rev. Res. 4, 013021 (2022).

Ren, W., Fu, W. & Chen, J. Towards the ground state of molecules via diffusion Monte Carlo on neural networks. Preprint at arXiv:2204.13903 (2022).

Guther, K. et al. NECI: N-electron configuration interaction with an emphasis on state-of-the-art stochastic methods. J. Chem. Phys. 153, 034107 (2020).

Foulkes, W. M. C., Mitas, L., Needs, R. J. & Rajagopal, G. Quantum Monte Carlo simulations of solids. Rev. Mod. Phys. 73, 33 (2001).

Shi, H. & Zhang, S. Some recent developments in auxiliary-field quantum Monte Carlo for real materials. J. Chem. Phys. 154, 024107 (2021).

Whitehead, T. M., Michael, M. H. & Conduit, G. J. Jastrow correlation factor for periodic systems. Phys. Rev. B 94, 035157 (2016).

Wilson, M. et al. Wave function ansatz (but periodic) networks and the homogeneous electron gas. Preprint at arXiv:2202.04622 (2022).

Cassella, G. et al. Discovering quantum phase transitions with fermionic neural networks. Preprint at arXiv:2202.05183 (2022).

Martens, J. & Grosse, R. Optimizing neural networks with Kronecker-factored approximate curvature. In Proc. 32nd International Conference on Machine Learning 2408–2417 (JMLR.org, 2015).

Botev, A. & Martens, J. KFAC-JAX. (2022).

Motta, M. et al. Towards the solution of the many-electron problem in real materials: equation of state of the hydrogen chain with state-of-the-art many-body methods. Phys. Rev. X 7, 031059 (2017).

Geim, A. K. Nobel lecture: random walk to graphene. Rev. Mod. Phys. 83, 851–862 (2011).

Lin, C., Zong, F. H. & Ceperley, D. M. Twist-averaged boundary conditions in continuum quantum Monte Carlo algorithms. Phys. Rev. E 64, 016702 (2001).

Chiesa, S., Ceperley, D. M., Martin, R. M. & Holzmann, M. Finite-size error in many-body simulations with long-range interactions. Phys. Rev. Lett. 97, 076404 (2006).

Dappe, Y. et al. Local-orbital occupancy formulation of density functional theory: application to Si, C, and graphene. Phys. Rev. B 73, 235124 (2006).

Nolan, S. J., Gillan, M. J., Alfè, D., Allan, N. L. & Manby, F. R. Calculation of properties of crystalline lithium hydride using correlated wave function theory. Phys. Rev. B 80, 165109 (2009).

Binnie, S. J. et al. Bulk and surface energetics of crystalline lithium hydride: benchmarks from quantum Monte Carlo and quantum chemistry. Phys. Rev. B 82, 165431 (2010).

Ceperley, D. M. & Alder, B. J. Ground state of the electron gas by a stochastic method. Phys. Rev. Lett. 45, 566–569 (1980).

López Ríos, P., Ma, A., Drummond, N. D., Towler, M. D. & Needs, R. J. Inhomogeneous backflow transformations in quantum Monte Carlo calculations. Phys. Rev. E 74, 066701 (2006).

Liao, K., Schraivogel, T., Luo, H., Kats, D. & Alavi, A. Towards efficient and accurate ab initio solutions to periodic systems via transcorrelation and coupled cluster theory. Phys. Rev. Res. 3, 033072 (2021).

Luo, H. & Alavi, A. Combining the transcorrelated method with full configuration interaction quantum Monte Carlo: Application to the homogeneous electron gas. J. Chem. Theory Comput. 14, 1403–1411 (2018).

Medvedev, M. G., Bushmarinov, I. S., Sun, J., Perdew, J. P. & Lyssenko, K. A. Density functional theory is straying from the path toward the exact functional. Science 355, 49–52 (2017).

Stella, L., Attaccalite, C., Sorella, S. & Rubio, A. Strong electronic correlation in the hydrogen chain: a variational Monte Carlo study. Phys. Rev. B 84, 245117 (2011).

Chen, S., Motta, M., Ma, F. & Zhang, S. Ab initio electronic density in solids by many-body plane-wave auxiliary-field quantum Monte Carlo calculations. Phys. Rev. B 103, 075138 (2021).

Annaberdiyev, A., Melton, C. A., Bennett, M. C., Wang, G. & Mitas, L. Accurate atomic correlation and total energies for correlation consistent effective core potentials. J. Chem. Theory Comput. 16, 1482–1502 (2020).

Tang, W., Sanville, E. & Henkelman, G. A grid-based Bader analysis algorithm without lattice bias. J. Phys. Condens. Matter 21, 084204 (2009).

Yao, G., Xu, J. G. & Wang, X. W. Pseudopotential variational quantum Monte Carlo approach to bcc lithium. Phys. Rev. B 54, 8393–8397 (1996).

Sugiyama, G., Zerah, G. & Alder, B. J. Ground-state properties of metallic lithium. Phys. A Stat. Mech. Appl. 156, 144–168 (1989).

Dagrada, M., Karakuzu, S., Vildosola, V. L., Casula, M. & Sorella, S. Exact special twist method for quantum Monte Carlo simulations. Phys. Rev. B 94, 245108 (2016).

Azadi, S. & Foulkes, W. M. C. Efficient method for grand-canonical twist averaging in quantum monte carlo calculations. Phys. Rev. B 100, 245142 (2019).

Rajagopal, G., Needs, R. J., James, A., Kenny, S. D. & Foulkes, W. M. C. Variational and diffusion quantum Monte Carlo calculations at nonzero wave vectors: theory and application to diamond-structure germanium. Phys. Rev. B 51, 10591–10600 (1995).

Sorella, S. Green function Monte Carlo with stochastic reconfiguration. Phys. Rev. Lett. 80, 4558–4561 (1998).

Pfau, D., Spencer, J. S. & FermiNet Contributors. FermiNet. (2020).

Wheeler, W. A. et al. PyQMC: an all-Python real-space quantum Monte Carlo module in PySCF. Preprint at arXiv:2212.01482 (2022).

Bradbury, J. et al. JAX: composable transformations of Python+NumPy programs. (2018).

Sun, Q. et al. PySCF: the Python-based simulations of chemistry framework. WIREs Comput. Mol. Sci. 8, e1340 (2018).

Acknowledgements

The authors thank Matthew Foulkes, David Ceperley, Lucas Wagner, Gareth Conduit, Mario Motta, and Ke Liao for helpful discussions. We thank Gino Cassella for providing Hartree–Fock energies of HEG. We especially thank the ByteDance AML team for their technical and computing support. We also thank ByteDance AI-Lab LIT Group and the rest of the ByteDance AI-Lab research team for inspiration and encouragement. This work is directed and supported by Hang Li and ByteDance AI-Lab. J.C. is supported by the National Natural Science Foundation of China under Grant No. 92165101.

Author information

Contributions

X.L. and J.C. conceived the study; X.L. developed the method, performed implementations, simulations, and data analyses; Z.L. contributed to the code development and simulation of HEG; J.C. supervised the project. X.L., Z.L., and J.C. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, X., Li, Z. & Chen, J. Ab initio calculation of real solids via neural network ansatz. Nat Commun 13, 7895 (2022). https://doi.org/10.1038/s41467-022-35627-1

DOI: https://doi.org/10.1038/s41467-022-35627-1
