Abstract

The transformative role of artificial intelligence (AI) in various fields highlights the need for it to be both accurate and fair. Biased medical AI systems pose significant potential risks to achieving fair and equitable healthcare. Here, we show an implicit fairness learning approach to build a fairer ophthalmology AI (called FairerOPTH) that mitigates sex (biological attribute) and age biases in AI diagnosis of eye diseases. Specifically, FairerOPTH incorporates the causal relationship between fundus features and eye diseases, which is relatively independent of sensitive attributes such as race, sex, and age. We demonstrate on a large and diverse collected dataset that FairerOPTH significantly outperforms several state-of-the-art approaches in terms of diagnostic accuracy and fairness for 38 eye diseases in ultra-widefield imaging and 16 eye diseases in narrow-angle imaging. This work demonstrates the significant potential of implicit fairness learning in promoting equitable treatment for patients regardless of their sex or age.

Introduction

Fairness in artificial intelligence (AI) has attracted great attention recently because AI has penetrated into every aspect of our lives. AI models have the risk of over-associating sensitive attributes such as age, race, and sex with the decision-making process1,2. These models may exhibit discriminatory behavior against specific subgroups. In the context of fundus disease diagnosis, unfairness refers to bias or favoritism against individuals or subgroups of a specific age or sex, i.e., sexism or ageism in AI diagnostic models. Fairness issues may have serious adverse effects on individuals and society and further exacerbate social inequality3.

Sexism and ageism are pervasive biases in various healthcare disciplines, including the field of ophthalmology. These biases can lead to disparities in diagnosis, treatment, and care, compromising patient outcomes and equity. By developing a fairer AI for applications in ophthalmology, we can mitigate these biases and provide fair and unbiased eye care4,5. Despite the remarkable diagnostic accuracy of data-driven deep neural networks (DNNs), DNNs are susceptible to bias because they prioritize minimizing overall prediction errors, ultimately leading to unfair decisions6. For instance, studies have highlighted the biases of automated diagnostic systems, wherein individuals from certain racial, sex, or age groups may receive more accurate diagnostic results than others. These biases have caused great concern to society and government7,8. Alarmingly, unfair AI diagnostic systems may further amplify social inequity and unfairness, because unfair AI diagnoses may affect subsequent diagnosis and treatment decisions, causing certain groups to face a higher rate of misdiagnosis and receive delayed or unnecessary treatment. This vicious cycle further leads to greater data bias, perpetuating and exacerbating inequality and injustice9,10,11.

The field of ophthalmology faces significant challenges in achieving AI fairness6. These challenges arise from several key factors. First, sample imbalance presents an obstacle, as different ophthalmic diseases have different prevalence, resulting in uneven distributions of the samples used during algorithm training and evaluation. This imbalance can lead to biased performance, with common diseases receiving more attention than rare ones. Second, the inherent diversity within retinal fundus images adds complexity to the issue of achieving fairness. Fundus images can show a wide range of ophthalmic diseases, each with distinct characteristics and manifestations. Developing algorithms that can effectively address the diversity across and within disease groups is crucial for fair and accurate diagnosis.

Furthermore, disparities associated with individual attributes, such as age, sex, region, and race, pose additional challenges12,13. Variations in the incidence and prevalence of ophthalmic diseases among different demographic groups introduce the potential for biased predictions and unequal outcomes. Ensuring fair performance across diverse populations requires careful consideration and appropriate mitigation strategies. Finally, data biases can undermine fairness in ophthalmology. Biases may arise from the data collection methods and data sources, leading to under-representation or over-representation of certain populations or geographic regions. Such biases can limit the generalizability and fairness of algorithms in real-world applications. Addressing these challenges requires the development of fairness-aware algorithms in ophthalmology that account for sample imbalances, accommodate disease diversity, mitigate attribute biases, and use data obtained with fair data collection practices. By promoting fairness, the field of ophthalmology can advance towards more equitable and unbiased healthcare delivery.

In this study, we propose an implicit fairness learning approach to build a fairer ophthalmology AI (called FairerOPTH) that mitigates sex (biological attribute) and age biases in AI diagnosis of eye diseases. Specifically, FairerOPTH incorporates the causal relationship between fundus features and eye diseases, which is relatively independent of sensitive attributes such as race, sex, and age (Supplementary Fig. 1). Our results show that the proposed FairerOPTH can more fairly and precisely screen disease presence from fundus images. The causal relationship between fundus features and disease diagnosis approximates the clinical diagnosis process and helps AI models to deal with complex diseases whose fundus features change. Therefore, using this causality as prior knowledge for AI models can encourage models to learn representations that are relatively independent of sensitive attributes, thereby enabling unbiased classification.

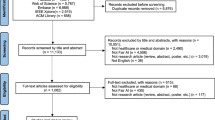

To evaluate FairerOPTH, we collected a large and diverse fundus dataset from over 8,405 patients across a wide age range (0–90 years). The fundus dataset contains advanced ultra-widefield (UWF) and regular narrow-angle (NAF) fundus imaging datasets (Fig. 1 and Supplementary Table 1). These two new datasets have unique advantages with respect to the labeling of fundus features compared with public fundus datasets. The collected UWF dataset called OculoScope, a comprehensive fundus imaging dataset, contains 16,530 UWF images, 38 ophthalmic diseases, and 67 fundus features. The NAF dataset called MixNAF, which is compiled from public NAF datasets and our newly collected NAF images, contains 4,540 NAF images, representing 16 ophthalmic diseases and 20 fundus features. Both the OculoScope and MixNAF datasets are used to evaluate the fairness and accuracy of FairerOPTH. Our results show that the implicit fairness learning method can significantly mitigate unfairness in terms of sexism and ageism while improving the accuracy of the multi-disease classification, and that this method merits further study.

a Fundus images, including both ultra-widefield (UWF) and narrow-angle (NAF) images, play a foundational role in the diagnosis of various ocular diseases by modern clinical systems. UWF imaging, an advanced method, covers up to 200° eccentricities in a single capture, while NAF imaging, which is more common, has an angle of view of 30–60°. b Statistics of the OculoScope and MixNAF datasets. The label density \(\rho=\frac{1}{N}\sum\nolimits_{i=1}^{K}\left\vert {y}_{i}\right\vert\) quantitatively shows that a single fundus image contains multiple diseases and pathological signs on average, where \(y_i\) is the number of images showing the i-th disease, N represents the total number of images, and K represents the number of disease types. c The sample distribution of 38 diseases in OculoScope is extremely unbalanced. d The sample distribution of 16 diseases in MixNAF is also extremely unbalanced. e Visual representation of fundus features in fundus images. Source data are provided as a Source Data file.

Results

We develop and validate a fairer AI approach for ophthalmology (FairerOPTH) using OculoScope and MixNAF datasets containing data from over 8,405 patients. We adopt six fairness-based metrics including ΔD (screening quality disparity), ΔM (max screening disparity), ΔA (average screening disparity), PQD (predictive quality disparity), DPM (demographic disparity metric), and EOM (equality of opportunity metric)2 (see ‘Fairness evaluation metrics’ Section in Methods) and four commonly used screening accuracy metrics including mAP (mean average precision), specificity, sensitivity, and AUC (area under the curve) to evaluate FairerOPTH. Specifically, we first conduct extensive experiments with the OculoScope dataset to evaluate the fairness of FairerOPTH in terms of two sensitive attributes, age and sex. Then, we evaluate the diagnostic accuracy of FairerOPTH with the OculoScope and MixNAF datasets and show the advanced performance of FairerOPTH by comparing it with state-of-the-art methods. Finally, we conduct ablation studies to verify the effectiveness of the proposed pathology-aware attention module and different loss terms.
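As a rough illustration of how ratio-style fairness metrics such as PQD, DPM, and EOM can be computed, the sketch below assumes the min/max-ratio formulations that are common in the fairness literature: the worst subgroup value divided by the best, averaged over diseases where applicable. The exact definitions used in this study are given in the Methods and may differ, and ΔD, ΔM, and ΔA are not covered here. The function names and toy data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def min_max_ratio(values):
    """Worst subgroup value divided by the best one (1.0 means parity)."""
    best = max(values)
    return min(values) / best if best > 0 else 1.0


def pqd(accuracy_by_group):
    """Assumed PQD: ratio of the lowest to the highest subgroup accuracy."""
    return min_max_ratio(list(accuracy_by_group.values()))


def dpm_eom(y_true, y_pred, groups):
    """Assumed DPM and EOM for multi-label predictions.

    y_true, y_pred: lists of multi-hot rows (one row per image).
    groups: subgroup label (e.g. sex or age bin) for each image.
    """
    num_classes = len(y_true[0])
    by_group = defaultdict(list)
    for truth, pred, group in zip(y_true, y_pred, groups):
        by_group[group].append((truth, pred))

    dpm_terms, eom_terms = [], []
    for c in range(num_classes):
        positive_rates, true_positive_rates = [], []
        for rows in by_group.values():
            # Rate of positive predictions for class c in this subgroup.
            positive_rates.append(mean(pred[c] for _, pred in rows))
            # Rate of positive predictions among true positives only.
            hits = [pred[c] for truth, pred in rows if truth[c] == 1]
            if hits:
                true_positive_rates.append(mean(hits))
        dpm_terms.append(min_max_ratio(positive_rates))
        # Only score a class that has true positives in every subgroup.
        if len(true_positive_rates) == len(by_group):
            eom_terms.append(min_max_ratio(true_positive_rates))
    eom = mean(eom_terms) if eom_terms else float("nan")
    return mean(dpm_terms), eom


# Toy data (made up, not from OculoScope): 4 images, 2 diseases, 2 subgroups.
toy_true = [[1, 0], [1, 1], [1, 0], [1, 1]]
toy_pred = [[1, 0], [1, 1], [1, 0], [0, 1]]
toy_groups = ["F", "F", "M", "M"]

dpm, eom = dpm_eom(toy_true, toy_pred, toy_groups)
print(dpm, eom)                      # 0.75 0.75
print(pqd({"F": 0.8, "M": 0.4}))     # 0.5
```

Under these assumed definitions, higher values are better and 1.0 means identical behavior across subgroups. The values reported in this article appear to be on a 0 to 100 scale.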

Mitigating ageism

We first evaluate FairerOPTH for its capability to fairly screen different age groups of patients (Fig. 2). Across the four age divisions, comprising patient groups up to 40 years, FairerOPTH achieves an average screening disparity ΔA of 31.9%, 29.3%, 15.4%, and 12.4%, respectively (Fig. 2b), and a max screening disparity ΔM of 122.7%, 122.7%, 62.7%, and 154.6%, respectively. The multi-label classification model using ResNet-101 as the backbone (baseline model) achieves a ΔA of 44.3%, 38.4%, 24.4%, and 14.8% and a ΔM of 131.8%, 134.4%, 105.3%, and 185.0%, respectively. Compared with the baseline model, FairerOPTH significantly mitigates the age bias when screening 38 diseases from UWF images. The DPM and EOM also demonstrate the superior performance of FairerOPTH. The ΔD and PQD of different age groups for each disease are shown in detail in Fig. 2c, d. In most cases, the ΔD and PQD of FairerOPTH are better than those of the baseline model for the 38 diseases. We also compare the screening accuracy for each disease across the patient 10-year age groups (Table 1).
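To put the reported ΔA values in perspective, the following short calculation derives the relative reduction in average screening disparity for each age division. The input numbers are those quoted above; the helper name `relative_reduction` is ours and is used only for illustration.

```python
# ΔA values (%) reported in the text for the four age divisions.
baseline_delta_a = [44.3, 38.4, 24.4, 14.8]
fairer_delta_a = [31.9, 29.3, 15.4, 12.4]


def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


reductions = [
    round(relative_reduction(b, f), 1)
    for b, f in zip(baseline_delta_a, fairer_delta_a)
]
print(reductions)  # [28.0, 23.7, 36.9, 16.2]
```

By this measure, the disparity reduction ranges from roughly 16% to 37% depending on the age division.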

a Sample distribution of patient age (in 10-year intervals) for the OculoScope test set. The unbalanced sample distribution of patient age may cause AI to favor the patients in the age group that contains more samples in the screening dataset, which will lead to the problem of AI ageism. In this study, we show that implicit fairness learning based on the relationship between ophthalmic diseases and fundus features can not only mitigate the unfairness that arises due to different sensitive attributes but also improve the screening accuracy for multiple diseases. b FairerOPTH is developed and validated with the OculoScope dataset, which contains data from 8405 patients with ages ranging from 0 to 90. Within each age division, FairerOPTH has obvious advantages in four fairness metrics (ΔA (average screening disparity), ΔM (max screening disparity), DPM (demographic disparity metric), and EOM (equality of opportunity metric)2) compared with the baseline model. The smaller ΔA and ΔM are, the better. c Details of the fairness metric ΔD (screening quality disparity) for 38 diseases. d Details of PQD (predictive quality disparity)2 for 38 diseases. Source data are provided as a Source Data file.

Mitigating sexism

Next, we evaluate the efficacy of FairerOPTH for the equitable screening of ocular diseases in patients of different sexes. On the OculoScope test set, FairerOPTH achieves an mAP of 91.8% and 90.8% for females and males, respectively, while the baseline model achieves values of 88.1% and 86.8% for females and males, respectively (Fig. 3). For females and males, the average screening accuracy of FairerOPTH is increased by 3.7% and 4.0% mAP over the baseline performance, respectively (Fig. 3b). In addition, FairerOPTH achieves a ΔA of 7.6%, a ΔM of 66.5%, a DPM of 82.4, and an EOM of 97.6, while the baseline model achieves a ΔA of 10.3%, a ΔM of 60.5%, a DPM of 71.2, and an EOM of 96.1. FairerOPTH has significant advantages in terms of a lower average screening disparity and higher DPM and EOM. The ΔD and PQD of FairerOPTH for each disease are shown in Fig. 3c, d. Compared with the baseline model, FairerOPTH has smaller ΔD values for 38 diseases, which means that FairerOPTH is fairer when considering the sex of patients, showing less sex bias, especially for diagnosing lens dislocation. For myelinated nerve fiber, optic abnormalities, and other diseases, FairerOPTH also shows large fairness advantages. Finally, we report the screening accuracy (mAP) for each disease in patients of different sexes (Table 2). FairerOPTH can make diagnoses that are not only less biased but also more accurate. Even when screening 38 disease types with a significantly imbalanced distribution (Fig. 1), we show that FairerOPTH still demonstrates great improvements in accuracy and fairness over the baseline model.

a Proportion of male and female patients included in the OculoScope test set. b Comparison of fairness metrics (ΔA (average screening disparity), ΔM (max screening disparity), DPM (demographic disparity metric), and EOM (equality of opportunity metric)2) and screening accuracy (mAP) between the baseline and our FairerOPTH. c Details of the fairness metric ΔD (screening quality disparity) for 38 diseases. d Details of PQD (predictive quality disparity)2 for 38 diseases. Source data are provided as a Source Data file.

Screening accuracy on the OculoScope dataset

To verify that FairerOPTH does more than mitigate unfairness, we examine its multitask multilabel classification accuracy on UWF and NAF fundus images to understand the effectiveness of incorporating fundus features into the disease diagnosis process (Fig. 4a). We compare FairerOPTH with several state-of-the-art multi-label classification approaches, including the MCAR14, CSRA15, C-Tran16, ML-Decoder17, and ASL18 approaches.

a The FairerOPTH consists of two branches, pathology and disease classification that predict 67 fundus features and 38 ophthalmic diseases, respectively. Such design of the two branches aims to enhance the disease representation in the disease classification branch, resulting in higher screening accuracy. b Comparison of FairerOPTH with the baseline model and state-of-the-art multi-label classification methods using mAP, specificity, sensitivity, and AUC (area under the curve) evaluation metrics. c, d ROC (Receiver Operating Characteristics) curves for 38 diseases of baseline model and FairerOPTH. Source data are provided as a Source Data file.

Each comparison approach is slightly modified to identify the 38 diseases included in the OculoScope dataset. The comparison results evaluated on the OculoScope dataset are shown in Fig. 4b. The performance of the MCAR14 method is relatively poor because it uses a CNN framework and does not consider the problem of class imbalance. Therefore, this method is not suitable for images with scattered pathological features such as fundus images. Likewise, although the CSRA15 method shows a slight improvement, this improvement may be more obvious on images with more concentrated features such as natural images. For fundus images with widely distributed and scattered fundus features of the same category, C-Tran16 and ML-Decoder17 use a transformer-based classification head, and their performance is improved to a certain extent. ASL18 partly addresses the class imbalance problem, so its performance is improved significantly. Our FairerOPTH method achieves the best results compared to the above methods. The mAP of FairerOPTH is 92.0%, which is 2.2% higher than that of ASL18. FairerOPTH achieves a sensitivity and specificity of 0.956 and 0.970, respectively. In summary, the experimental results demonstrate the effectiveness of using fundus features to enhance disease representation, thereby achieving higher diagnostic accuracy.
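For readers less familiar with the accuracy metrics compared here, the sketch below shows one common way to compute per-class average precision, mAP, sensitivity, and specificity for multi-label predictions. It uses the non-interpolated form of average precision; the variant and decision thresholds used in this study are not stated in this section, so treat the details as assumptions. All names and toy values are illustrative.

```python
def average_precision(y_true, scores):
    """Non-interpolated AP: mean precision at the rank of each positive."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precisions = 0, []
    for rank, idx in enumerate(order, start=1):
        if y_true[idx] == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / hits if hits else float("nan")


def mean_average_precision(true_rows, score_rows):
    """Mean of per-class AP, skipping classes without positive samples."""
    aps = []
    for c in range(len(true_rows[0])):
        ap = average_precision(
            [row[c] for row in true_rows], [row[c] for row in score_rows]
        )
        if ap == ap:  # NaN is the only value not equal to itself
            aps.append(ap)
    return sum(aps) / len(aps)


def sensitivity_specificity(y_true, y_pred):
    """Sensitivity (true positive rate) and specificity (true negative rate)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


# Toy data (made up): 4 images, 2 diseases.
toy_true = [[1, 0], [0, 1], [1, 0], [0, 1]]
toy_scores = [[0.9, 0.2], [0.8, 0.6], [0.7, 0.4], [0.1, 0.9]]

print(round(mean_average_precision(toy_true, toy_scores), 4))  # 0.9167

# Threshold the first disease at 0.5 (an arbitrary choice) for sens/spec.
first_true = [row[0] for row in toy_true]
first_pred = [1 if row[0] >= 0.5 else 0 for row in toy_scores]
print(sensitivity_specificity(first_true, first_pred))  # (1.0, 0.5)
```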

To further demonstrate the superiority of FairerOPTH, we also show the ROC curves and AUC values for each disease with the OculoScope dataset (Fig. 4c). Except for the AUCs of fibrosis, isolated vessel tortuosity, and optic abnormalities, which were lower than 0.95, the remaining 35 diseases all had AUCs exceeding 0.96. Among them, there are more than 20 disease types with AUC values above 0.99, showing that our method achieves good results in multilabel disease classification and can be adapted to most ophthalmic diseases. The false positive rate and false negative rate for typical diseases with imbalanced data are also demonstrated in Supplementary Table 2.

Screening accuracy on the MixNAF dataset

In addition to validating the screening performance of FairerOPTH with UWF images, we also validate our method’s performance with NAF images. To fully demonstrate the superiority of our method on the MixNAF dataset, we also compare it with other state-of-the-art multi-label classification approaches. Each comparison method is slightly modified to identify the 16 types of disease included in the MixNAF dataset. The screening results are shown in Fig. 5. The comparison on the narrow-angle dataset shows that our method exhibits the best mAP, sensitivity, and AUC. Specifically, the mAP is 2.2% higher than that of the second-best method, ASL18. The results show that FairerOPTH also achieves good screening performance on narrow-angle fundus images, part of which come from several public datasets. This screening improvement is mainly attributed to the introduction of fundus features.

a The FairerOPTH predicts 20 fundus features and 16 ophthalmic diseases from input NAF images. b Comparison of FairerOPTH with the baseline model and state-of-the-art multi-label classification methods using mAP, specificity, sensitivity, and AUC (area under the curve) evaluation metrics. c ROC (Receiver Operating Characteristics) curves for 16 diseases of baseline model and FairerOPTH. Source data are provided as a Source Data file.

Generalizability of the FairerOPTH architecture

Other types of data such as commonly used text can be easily adapted to our architecture. To validate it, additional experiments using text as input to the pathology classification branch are conducted on the OculoScope dataset and denoted as “FairerOPTH (text)” (Supplementary Table 3). The input text is a description of fundus features in the fundus image. We use the trained BERT19 to extract features from the inputted text. The “FairerOPTH (text)” and “FairerOPTH (image)” achieve better performance than the Baseline. In addition, “FairerOPTH (text)” performs better in screening accuracy and fairness than “FairerOPTH (image)” most of the time. The results demonstrate that our architecture can adapt to the text modality and even achieve better performance.

The trained FairerOPTH model, like other common fundus image-based diagnostic models, can be directly deployed on fundus datasets without fundus feature annotations. As shown in Supplementary Fig. 1, the FairerOPTH model contains an upper and a lower branch, where the upper branch extracts pathological features and assists the lower branch, which performs disease classification. During the testing phase, similar to other fundus-based disease classification models, the trained FairerOPTH model only needs fundus images as input and can automatically extract pathological features and identify diseases. We test the trained FairerOPTH model on the public IDRiD20 dataset without fundus feature annotations. The diagnostic performance of the Baseline and ViT-Large21 models is also shown in Supplementary Table 4.

Ablation study

To evaluate whether the newly annotated fundus features reflect an effective enhancement in disease diagnosis, we perform ablation studies by removing pathological information (i.e., the pathology-aware attention module). Specifically, we use an advanced ASL18 method (\(L_{disea}\) term) to serve as the baseline model, which helps us to remove the potential confound of differing models. We also conduct an ablation study on the proposed pathological feature loss \(L_{patho}\) to evaluate its effectiveness. The ablation study results on the OculoScope and MixNAF datasets are shown in Supplementary Table 5. The experiment demonstrates that the mAP for disease classification on the OculoScope dataset drops by 0.3% after removing the fundus features. Likewise, the mAP decreases by 2.0% after removing \(L_{con}\) and \(L_{joint}\) from the model, suggesting a strong relationship between fundus features and disease. Similar experimental results are shown for the MixNAF dataset. This performance gain once again demonstrates the effectiveness of our proposed method for enhancing disease diagnosis with fundus features.

Discussion

Our extensive results demonstrate that the rich fundus features contained in UWF or NAF images can make AI models not only less unfair but also more accurate. We achieve this through the implicit fairness learning approach that incorporates the causal relationship between fundus features and eye diseases. This causal relationship is relatively independent of sensitive attributes and imaging modes. The fairness of our model is statistically significantly better than the baseline model (ResNet-101) using the disease classification branch alone. Even when screening many disease types with a significantly imbalanced distribution, experimental results demonstrate that FairerOPTH still greatly improves the accuracy and fairness of diagnosis for most diseases.

The discovery that fundus features can mitigate unfairness in disease diagnosis is interesting because such an implicit learning method does not directly impose a fairness regularization on AI diagnostic models and has not previously been reported. There are several common factors that cause biases7,22. For example, biases may already be present in the collected dataset because disease incidence varies with race, age, region, or sex. In addition, biases may also be caused by missing data, such as missing samples for the target population, which prevents representation of the population. The widely used optimization objectives that are designed to minimize overall classification errors will benefit majority groups over minorities. To overcome these biases, pre-processing23,24,25, in-processing26,27, and post-processing28,29 mechanisms have been demonstrated in recent literature. Different mechanism types have their own advantages and disadvantages. For instance, pre-processing mechanisms offer the advantage of compatibility with any classification algorithm, but using these methods may compromise the interpretability of the outcomes. Post-processing mechanisms have the flexibility to be applied in conjunction with any classification algorithm. However, owing to their application at a relatively late stage in the learning process, they generally yield subpar outcomes. Our implicit learning method based on the relationship between the fundus features and disease diagnosis can be regarded as a kind of fair causal learning.

We also explore the factors that contribute most to mitigating unfairness by conducting experiments on three diseases: congenital retinal degenerative diseases (including retinitis pigmentosa, Stargardt disease, and other congenital macular diseases), optic abnormalities, and fibrosis. These correspond to three possible factors: congenital diseases with different manifestations in different periods, mixed causes, and great individual differences, respectively (Supplementary Table 6). The results demonstrate that, compared with the baseline model, FairerOPTH shows an obvious improvement in fairness for fibrosis, which indicates that individual difference is potentially the factor that contributes most to mitigating unfairness. In addition, mixed causes and congenital diseases with different manifestations in different periods are also two relatively important factors.

FairerOPTH can help to explain the basis of a diagnosis by predicting the fundus features that exist in the input fundus images. This promotes transparency and explainability in fair models, which are vital for building trust in these models. Compared with regular screening methods, FairerOPTH can not only diagnose diseases in fundus images but also output the relevant fundus features at the same time. Because of their causal relationship, the fundus features can be used as the pathological explanation of the disease diagnosis on the fundus image. This is different from traditional methods for disease explanation that use the CAM30 method to generate class activation maps as visual explanations (Supplementary Fig. 2). Such explanations are incomprehensible to non-professionals. In contrast, FairerOPTH can give the specific fundus feature name, which helps patients to better understand their clinical signs. However, there may be a contradiction between the predictions of the two branches, even when the disease and pathological features are classified with high accuracy (Supplementary Table 7). Such contradictory predictions affect the reliability of the model’s explanations and should be addressed in future work.

Our approach has the potential to be extended to diagnosing other similar diseases. Apart from ophthalmic diseases, various other diseases have a diagnostic process that typically involves doctors identifying the pathological areas first and then considering all the pathological information to diagnose the specific disease in the patient. Therefore, our method can effectively be extended to similar diseases, thereby improving diagnosis and treatment outcomes.

Although FairerOPTH achieves good results, there are some limitations. First, there is a significant amount of fundus manifestation information in fundus images, with single images containing as many as six different pathologies. This pathological information is often scattered throughout the image. Therefore, accurately capturing these fundus features poses a significant challenge for the model and directly impacts the model’s ability to effectively provide useful information for diagnosing diseases without introducing misinformation. In this study, we do not design specific network modules to improve the identification accuracy of fundus features. However, the ablation study results demonstrate the significant role of fundus features. Therefore, designing a better deep network structure to improve the model’s ability to capture diverse fundus features is a worthy pursuit for future research. Another challenge is the lack of pathological labels in most existing datasets, which primarily consist only of disease labels. This poses a substantial hurdle in studying the relationship between diseases and fundus manifestations. Developing semi-supervised learning methods that combine our labeled datasets with larger public datasets is one way to improve FairerOPTH.

Finally, we recognize the importance of considering and addressing potential disparities related to race and ethnicity in healthcare research. However, a limitation is that the demographics of our patient population do not allow a fairness evaluation with respect to the sensitive attributes of race and ethnicity. Our hospital is situated in Shanghai, a region located in East China. The majority of our patients come from the surrounding provinces and the local area. In this geographical context, the Han nationality constitutes the overwhelming majority of our patient population. As a result, our dataset primarily comprises patients of Han ethnicity.

In summary, we have demonstrated that using the relationship between fundus features and disease diagnosis to model an implicit fairness learning approach can mitigate age and sex biases and improve the overall screening accuracy of the model. There are two main further directions of study. One is to develop other effective, generalizable implicit fairness learning approaches. The other is to evaluate whether our approach can be generalized to other diseases that are of worldwide concern to alleviate the unfairness brought about by AI.

Methods

Ethical approval for the study was obtained from the Ethics Committee of Eye & ENT Hospital, Fudan University, Shanghai, China (Approval No. 2023427), and all procedures were in accordance with the Declaration of Helsinki. The study used anonymized images from patients who had completed their routine eye examinations or treatments at the hospital. Personal identifiers were removed post-annotation, ensuring that neither the retinal annotation experts nor the AI development team had access to patient-identifiable information. Given that the study utilized pre-existing, anonymized data and involved no additional examinations or interventions, the Ethics Committee waived the need for informed consent, citing minimal risk and adherence to privacy regulations.

Datasets

We developed and validated a fairer AI system in ophthalmology (FairerOPTH) using a newly collected ultra-widefield fundus dataset (OculoScope) and a re-annotated narrow-angle dataset (MixNAF) combining multiple public datasets with data from over 8405 patients in total.

OculoScope

The OculoScope dataset contains 16,530 UWF images captured by Optos P200dTx (Optos PLC, Dunfermline, United Kingdom) with an image resolution of 3070 × 3900 pixels from the Eye Institute and Department of Ophthalmology, Eye & ENT Hospital, Fudan University, Shanghai, China (Fig. 1). The Optos Daytona P200T utilizes advanced technology to acquire a single 200° image that covers 82% of the retina in less than 0.4 seconds. The corresponding proprietary software, OptosAdvance™, facilitates documentation, monitoring, and analysis, including various measurement tools. Furthermore, multiple images can be merged to create a comprehensive 220° montage that covers 97% of the retina.

The images in the OculoScope dataset show the left and right eyes of 8405 patients of different sexes and ages, as well as fundus images of different disease stages and after treatment (Supplementary Table 8). The annotations were completed by two ophthalmologists with extensive clinical experience who strictly followed relevant standards and procedures during the data labeling process. Initially, the two ophthalmologists each conducted two rounds of annotations on the same batch of fundus images, accounting for the symptom descriptions and final diagnosis results. This resulted in a total of four annotation rounds. Simultaneously, the fundus features closely associated with each disease type were annotated for each image. If there was a disagreement in the results, the two doctors would jointly arbitrate. In cases where a consensus could not be reached, a senior retinal disease expert would be consulted. The final annotation result was based on the consensus of these three retinal disease experts. The OculoScope dataset encompasses images depicting 38 disease types and 67 fundus features (Supplementary Data 1), such as retinitis pigmentosa (RP), pathological myopia (PM), familial exudative vitreoretinopathy (FEVR), central retinal vein occlusion (CRVO), von Hippel-Lindau disease (VHL), acute retinal necrosis (ARN), maculopathy, retinal detachment (RD), Vogt-Koyanagi-Harada (VKH) disease, branch retinal vein occlusion (BRVO), Coats disease, lens dislocation, etc. Importantly, no other publicly available datasets include labels for fundus features (Supplementary Table 1). There are 2,423 and 512 normal fundus images in the training and test sets, respectively.

To demonstrate the complexity of OculoScope and the natural causal relationship that exists between disease and fundus features, we calculate the label densities for disease types and fundus features separately (Fig. 1e). The label density is denoted as ρ, with \(\rho=\frac{1}{N}{\sum }_{i=1}^{C}\left\vert {y}_{i}\right\vert\), where \(y_i\) is the number of images showing the i-th disease, N represents the total number of images, and C represents the number of disease types. The label densities for disease type and fundus features in OculoScope are 1.94 and 2.05, respectively. Such densities indicate that, on average, a fundus image in the OculoScope dataset contains more than one disease and about two fundus features. This also demonstrates that, on average, one disease corresponds to more than two fundus features.
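As a concrete illustration of the label density formula, the sketch below computes ρ from a multi-hot label matrix. The function name `label_density` and the toy labels are hypothetical and are not drawn from OculoScope.

```python
from typing import Sequence


def label_density(labels: Sequence[Sequence[int]]) -> float:
    """Average number of positive labels per image.

    `labels` is a multi-hot matrix: labels[n][c] is 1 when image n
    shows class c (a disease or a fundus feature) and 0 otherwise.
    """
    if not labels:
        raise ValueError("labels must contain at least one image")
    num_images = len(labels)
    num_classes = len(labels[0])
    # |y_i|: number of images that show the i-th class.
    per_class_counts = [
        sum(row[c] for row in labels) for c in range(num_classes)
    ]
    return sum(per_class_counts) / num_images


# Toy example (made-up values): 4 images, 3 classes.
toy_labels = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 1, 1],
    [0, 0, 1],
]
print(label_density(toy_labels))  # 7 positive labels / 4 images = 1.75
```

A density above 1 means that a typical image carries more than one label, which is what makes the task a multi-label problem.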

MixNAF

After thoroughly examining various publicly available fundus datasets, two expert doctors discovered a common limitation: while these datasets contained annotations for one or a few specific diseases, they did not cover a wide range of other eye diseases present in fundus images. To comprehensively evaluate the effectiveness of FairerOPTH, we took a multi-faceted approach. We combined images from partial datasets such as the JSIEC4, Kaggle-EyePACS31, iChallenge-AMD32, OIA-ODIR33, and RFMiD34 datasets, along with newly collected regular narrow-angle fundus images captured using a traditional fundus camera. This amalgamation resulted in a new regular fundus dataset called MixNAF.

To ensure accurate annotations, we invited three ophthalmologists with extensive clinical experience to meticulously examine and determine the diagnosis for each of the collected images. Each image was carefully re-diagnosed and annotated with the corresponding fundus features. Images with unclear disease types and fundus features were excluded from the dataset. Ultimately, the MixNAF dataset consists of 4540 annotated fundus images, representing 16 diseases and 20 fundus features. There are 1622 and 365 normal fundus images in the training and test sets, respectively.

FairerOPTH development

Based on the two fundus datasets captured by different imaging devices, we develop the FairerOPTH model (Supplementary Fig. 1) to fairly and accurately identify the diseases in fundus images simultaneously. Specifically, FairerOPTH takes a UWF image as an input and outputs the classification results showing any pathologies and the disease diagnosis. Our objective is to utilize the relationship between diseases and fundus features to learn informative and attribute-invariant representations to improve the screening accuracy of ophthalmic diseases and mitigate the sexism and ageism associated with AI screening.

FairerOPTH consists of two branches, the pathology classification and disease classification branches, involving two encoders and a pathology-aware attention module. Both encoders have an identical architecture: a pre-trained CNN (ResNet-101)35. The encoders are used to extract pathological features \({F}_{p}\in {R}^{C\times H\times W}\) and disease features \({F}_{d}\in {R}^{C\times H\times W}\), respectively. Then, the extracted pathological features are simultaneously input into a classifier and a pathology-aware attention module. Here, the pathology-aware attention module consists of a convolutional block attention module (CBAM)36, which enables the computation of both channel and spatial attention. Finally, two classifiers are used for pathology and disease classification. We use three classification losses to constrain the classification results on pathology, disease, and consistency.

Implicit fairness learning

Ophthalmologists rely on the observation of crucial pathological information to accurately diagnose ophthalmic diseases. By applying the pathology-aware attention module to analyze the extracted pathological features, we obtain a refined pathological feature map. Then, the refined feature map is added to the extracted disease features to obtain \({F}_{e}\in {R}^{{C}^{{\prime} }\times {H}^{{\prime} }\times {W}^{{\prime} }}\), aiming to enhance disease features with pathological semantics. This module captures the inherent relationships between the pathological features and the disease categories, enhancing the representation of crucial information in the feature map. Specifically, the pathological attention module takes the pathological and disease features, namely Fp and Fd, obtained by ResNet-101 as inputs and then learns the information for enhancing the disease features based on the pathological features.

Given the pathological feature map \({F}_{p}\), we first apply global average pooling to compress the spatial information, yielding the global spatial features \({F}_{avg}\). Then, we input the global spatial features into a fully connected neural (FCN) layer to generate a channel attention map \({W}_{chn}\), which is described as follows:

\[{W}_{chn}=\sigma \left({W}_{FCN}\,{F}_{avg}+{b}_{FCN}\right)\]

where σ(⋅) is the sigmoid function, which normalizes the attention weights to \(\left[0,1\right]\), and \({W}_{FCN}\) and \({b}_{FCN}\) are the weights and bias of the FCN layer, respectively. Afterwards, we apply the Hadamard product to the learned attention weights \({W}_{chn}\) and the original feature map \({F}_{p}\) in order to adaptively select the pathological information that is useful for disease classification.

Finally, we add the selected feature map and the disease feature map \({F}_{d}\) in an element-wise manner to generate the pathologically aware feature map \({F}_{p}^{aware}\):

\[{F}_{p}^{aware}=\left({W}_{chn}\odot {F}_{p}\right)+{F}_{d}\]

Therefore, the pathological attention module is used to capture the causal relationship between pathology and disease, and then further enhance the representation of diseases in the input fundus image. Finally, this module can make the model not only more accurate but also less biased.
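The channel-attention steps described in this section (global average pooling, a fully connected layer with a sigmoid, a Hadamard product, and an element-wise addition) can be sketched as follows. This is a minimal NumPy illustration on a single feature map, not the released PyTorch implementation: the function name `pathology_aware_fusion`, the square shape of the fully connected weights, and the toy tensor sizes are assumptions, and the spatial attention part of CBAM is omitted.

```python
import numpy as np


def sigmoid(x):
    """Logistic function, mapping real values to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def pathology_aware_fusion(f_p, f_d, w_fcn, b_fcn):
    """Fuse pathology features into disease features via channel attention.

    f_p, f_d: arrays of shape (C, H, W), pathology and disease feature maps
    w_fcn:    array of shape (C, C), fully connected weights (assumed square)
    b_fcn:    array of shape (C,), fully connected bias
    Returns the fused feature map and the channel attention weights.
    """
    # Global average pooling over the spatial axes gives F_avg, shape (C,).
    f_avg = f_p.mean(axis=(1, 2))
    # One attention weight per channel, squashed into [0, 1] by the sigmoid.
    w_chn = sigmoid(w_fcn @ f_avg + b_fcn)
    # Hadamard product, broadcast over H and W, re-weights pathology channels.
    selected = w_chn[:, None, None] * f_p
    # Element-wise addition with the disease feature map.
    return selected + f_d, w_chn


rng = np.random.default_rng(0)
C, H, W = 4, 3, 5  # toy sizes, chosen only for illustration
f_p = rng.normal(size=(C, H, W))
f_d = rng.normal(size=(C, H, W))
w_fcn = rng.normal(size=(C, C))
b_fcn = np.zeros(C)

f_aware, w_chn = pathology_aware_fusion(f_p, f_d, w_fcn, b_fcn)
print(f_aware.shape)
```

Because the attention weights are bounded in [0, 1], the pathology branch can only attenuate or pass through its own channels before they are added to the disease features; it never amplifies them.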

Loss functions

The distributions of diseases and pathologies are highly imbalanced due to the limited number of samples for rare diseases, certain age groups, etc. in the dataset. To mitigate this problem, we use the asymmetric loss (ASL)18 as the classification loss. The overall loss function is defined as

\[L={\lambda }_{1}{L}_{patho}+{\lambda }_{2}{L}_{disea}+{\lambda }_{3}{L}_{joint}+{\lambda }_{4}{L}_{consis}\]

where \({\lambda }_{1}\), \({\lambda }_{2}\), \({\lambda }_{3}\), and \({\lambda }_{4}\) are the weights that balance the effects of the different loss terms. \({L}_{patho}\) and \({L}_{disea}\) denote the ASL losses for pathology and disease classification, respectively. \({L}_{joint}\) constrains the consistency of the two classification results, and \({L}_{consis}\) constrains the consistency of the feature maps extracted from the two encoders. Letting \({F}_{p}\) and \({F}_{d}\) be the two feature maps extracted from \({Encoder}_{p}\) and \({Encoder}_{d}\), respectively, \({L}_{consis}\) is defined as

where m, n, and l indicate the width, height, and number of channels of the feature map, respectively.
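For readers unfamiliar with the asymmetric loss used as the classification loss in this section, the following minimal sketch shows its per-sample form as formulated in the ASL paper (ref. 18). The function name and the values of `gamma_pos`, `gamma_neg`, and `margin` are illustrative assumptions and are not settings reported in this study.

```python
import math


def asymmetric_loss(probs, targets, gamma_pos=0.0, gamma_neg=4.0,
                    margin=0.05, eps=1e-8):
    """Sum of per-label asymmetric losses for one sample.

    probs:   predicted probabilities in [0, 1], one per label
    targets: binary ground-truth labels (0 or 1), one per label
    """
    total = 0.0
    for p, y in zip(probs, targets):
        if y == 1:
            # Positive term: focal-style down-weighting of easy positives.
            total -= (1.0 - p) ** gamma_pos * math.log(max(p, eps))
        else:
            # Negative term: shift the probability by the margin so that
            # very easy negatives (p <= margin) contribute nothing.
            p_m = max(p - margin, 0.0)
            total -= p_m ** gamma_neg * math.log(max(1.0 - p_m, eps))
    return total


# Toy example: one positive label and two negatives (hypothetical values).
loss = asymmetric_loss([0.7, 0.03, 0.6], [1, 0, 0])
print(loss)
```

The stronger focusing on negatives (`gamma_neg` larger than `gamma_pos`) is what makes the loss suitable for imbalanced multi-label data, where most labels are negative for any given image.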

Implementation details

We use similar network configurations for the experiments with the OculoScope and MixNAF datasets. The parameters of the ResNet-101 network are initialized from the model pre-trained on ImageNet37. Common data augmentation methods are used to enrich the data. For instance, we resize the input fundus images to 512 × 648 and augment the training data with random horizontal and vertical flips. The details of the training and test dataset division are shown in Supplementary Table 9. For the OculoScope and MixNAF datasets, we carry out multi-class multi-disease classification and perform fairness analysis. The network is optimized using the Adam optimizer38 with weight_decay=1e-4 and betas=(0.9, 0.999). The initial learning rate is 1e-4, and the learning rate is adjusted after each batch using the OneCycleLR schedule39,40. We train the network for 100 epochs with a batch size of 16. The entire model is built on an Ubuntu 18.04 system with PyTorch and two NVIDIA GeForce RTX 2080Ti GPUs. The loss weight parameters λ1, λ2, λ3, and λ4 are experimentally set to 0.01, 1, 0.01, and 1e-5, respectively.

Fairness evaluation metrics

There exist several quantitative measures and approaches to ascertain fairness, encompassing widely employed metrics such as disparate impact and equalized odds. These fairness metrics aim to achieve complete decoupling between predictions and sensitive attributes, either unconditionally (disparate impact) or conditionally (equalized odds)3. We adopt three prediction-based fairness evaluation metrics: (i) ΔD (screening quality disparity) measures the difference in average precision (AP) between sensitive groups. We first calculate the difference between the highest and the lowest AP across different sensitive groups for a certain disease, and then compute the ratio between the obtained difference and the mean AP of all sensitive groups, i.e.,

\[\Delta {D}_{k}=\frac{\max_{i\in S}A{P}_{k,i}-\min_{i\in S}A{P}_{k,i}}{\frac{1}{M}{\sum }_{i\in S}A{P}_{k,i}}\]

where S = {1, 2, ⋯, M} is the set of sensitive groups; for instance, under the sensitive attribute of sex, S = {female, male}. k is the index of a disease type, and \(A{P}_{k,i}\) denotes the average precision of the i-th group of a sensitive attribute for the k-th disease. Together, (ii) ΔM (max screening disparity) and (iii) ΔA (average screening disparity) are used to comprehensively evaluate the overall performance of FairerOPTH. We calculate ΔM and ΔA across sensitive groups for each category as follows:

\[\Delta M=\max_{k\in Q}\Delta {D}_{k},\qquad \Delta A=\frac{1}{K}{\sum }_{k\in Q}\Delta {D}_{k}\]

where Q = {1, 2, ⋯, K} is the set of disease types. A model is considered to be fairer if it has smaller values for these three fairness metrics. In addition to the above metrics, we also use three further fairness evaluation metrics, namely PQD (predictive quality disparity), DPM (demographic disparity metric), and EOM (equality of opportunity metric), which are defined in ref. 2. The PQD measures the fairness of an individual class, similar to our ΔD metric. The DPM and EOM are defined for multi-class multi-disease settings, which makes them suitable for our experimental setting.
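The three disparity metrics described in this section can be sketched in a few lines. The per-disease ΔD follows the verbal definition given above (range of AP across groups divided by their mean); treating ΔM as the maximum and ΔA as the mean of the per-disease ΔD values is an assumption inferred from the metric names. The function names and the AP values are hypothetical.

```python
def screening_quality_disparity(ap_by_group):
    """Delta-D for one disease: (max AP - min AP) / mean AP across groups."""
    values = list(ap_by_group.values())
    mean_ap = sum(values) / len(values)
    if mean_ap == 0:
        raise ValueError("mean AP is zero, so the disparity is undefined")
    return (max(values) - min(values)) / mean_ap


def disparity_summary(ap_table):
    """Return (per-disease Delta-D, Delta-M, Delta-A).

    ap_table maps each disease name to a dict of {group name: AP}.
    Delta-M is taken as the maximum and Delta-A as the mean of the
    per-disease Delta-D values (an assumption based on the metric names).
    """
    delta_d = {
        disease: screening_quality_disparity(groups)
        for disease, groups in ap_table.items()
    }
    delta_m = max(delta_d.values())
    delta_a = sum(delta_d.values()) / len(delta_d)
    return delta_d, delta_m, delta_a


# Hypothetical AP values for two diseases under the attribute "sex".
toy_ap = {
    "disease_a": {"female": 0.80, "male": 0.70},
    "disease_b": {"female": 0.60, "male": 0.60},
}
delta_d, delta_m, delta_a = disparity_summary(toy_ap)
print(delta_d, delta_m, delta_a)
```

Normalizing by the mean AP makes the disparity relative, so a gap of 0.1 counts for more on a disease that is hard to detect than on one that every group detects well.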

Screening evaluation metrics

Each sample of the experimental datasets is assigned multiple labels for the fundus features and disease types shown in the image. We treat the multi-label classification problem as multiple binary classification tasks, one for each disease class and each fundus feature (at least 16 disease classes and 20 fundus features, depending on the dataset). In this setting, the classification performance of each class is evaluated, which allows us to assess the classification performance across sensitive attributes. Specifically, \(T{P}_{j}\), \(F{P}_{j}\), \(T{N}_{j}\), and \(F{N}_{j}\) represent the numbers of true positive, false positive, true negative, and false negative test samples for the j-th class. We report four evaluation metrics for each disease, including \(Precisio{n}_{j}=\frac{T{P}_{j}}{F{P}_{j}+T{P}_{j}}\), \(Specificit{y}_{j}=\frac{T{N}_{j}}{F{P}_{j}+T{N}_{j}}\), \(Sensitivit{y}_{j}=\frac{T{P}_{j}}{T{P}_{j}+F{N}_{j}}\), and area under the ROC curve (AUC). Finally, we calculate the macro-average of the above metrics over all diseases to measure the overall screening performance of a model.
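The per-class metrics and their macro-average can be illustrated with the following minimal sketch. It assumes that predictions have already been thresholded into binary labels, omits AUC (which requires continuous scores), and returns 0.0 when a denominator is zero; these choices and all names are assumptions made for illustration.

```python
def binary_counts(y_true, y_pred):
    """Count true/false positives and negatives for one class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def safe_div(numerator, denominator):
    """Return 0.0 instead of failing when a class has no relevant samples."""
    return numerator / denominator if denominator else 0.0


def per_class_metrics(y_true, y_pred):
    """Precision, specificity, and sensitivity for one class."""
    tp, fp, tn, fn = binary_counts(y_true, y_pred)
    return {
        "precision": safe_div(tp, fp + tp),
        "specificity": safe_div(tn, fp + tn),
        "sensitivity": safe_div(tp, tp + fn),
    }


def macro_average(labels_true, labels_pred):
    """Average each metric over all classes (columns) of the label matrix."""
    n_classes = len(labels_true[0])
    per_class = []
    for j in range(n_classes):
        col_true = [row[j] for row in labels_true]
        col_pred = [row[j] for row in labels_pred]
        per_class.append(per_class_metrics(col_true, col_pred))
    return {
        name: sum(m[name] for m in per_class) / n_classes
        for name in per_class[0]
    }


# Toy example: 4 images, 2 classes (hypothetical labels and predictions).
toy_true = [[1, 0], [1, 1], [0, 1], [0, 0]]
toy_pred = [[1, 0], [0, 1], [0, 1], [1, 0]]
macro = macro_average(toy_true, toy_pred)
print(macro)
```

Macro-averaging gives every class the same weight regardless of how many images show it, so rare diseases influence the overall score as much as common ones.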

Models for comparison

The implementations of the compared models, namely MCAR14 (https://github.com/gaobb/MCAR), CSRA15 (https://github.com/Kevinz-code/CSRA), C-Tran16 (https://github.com/QData/C-Tran), ML-Decoder17 (https://github.com/Alibaba-MIIL/ML_Decoder), and ASL18 (https://github.com/Alibaba-MIIL/ASL), adopt the official code and hyperparameter settings provided by the authors of the respective papers.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

All data that support the findings of this study are included in the paper. This study utilizes some public datasets, including JSIEC (https://zenodo.org/record/3477553), Kaggle-EyePACS (https://www.kaggle.com/c/diabetic-retinopathy-detection), iChallenge-AMD (https://ai.baidu.com/broad/download), OIA-ODIR (https://odir2019.grand-challenge.org/), and RFMiD (https://riadd.grand-challenge.org/download-all-classes/). The public IDRiD dataset can be accessed through the following link: https://ieee-dataport.org/open-access/indian-diabetic-retinopathy-image-dataset-idrid. Our newly collected OculoScope and reorganized MixNAF datasets are publicly available at figshare https://figshare.com/s/926c2c2ef9e77ab5eb9d. Source data are provided with this paper.

Code availability

The code and trained models associated with FairerOPTH, which are based on PyTorch, are freely available at https://github.com/mintanwei/Fairer-AI (https://doi.org/10.5281/zenodo.10892893).

References

Daneshjou, R. et al. Disparities in dermatology AI performance on a diverse, curated clinical image set. Sci. Adv. 8, eabq6147 (2022).

Du, S. et al. FairDisCo: Fairer AI in Dermatology via Disentanglement Contrastive Learning. In ECCV Workshops (Springer, Cham, 2022).

Du, M. et al. Fairness in deep learning: a computational perspective. IEEE Intell. Syst. 36, 25–34 (2020).

Cen, L.-P. et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. 12, 4828 (2021).

Varadarajan, A. V. et al. Predicting optical coherence tomography-derived diabetic macular edema grades from fundus photographs using deep learning. Nat. Commun. 11, 130 (2020).

Ricci Lara, M. A., Echeveste, R. & Ferrante, E. Addressing fairness in artificial intelligence for medical imaging. Nat. Commun. 13, 4581 (2022).

Pessach, D. & Shmueli, E. A review on fairness in machine learning. ACM Comput. Surv. (CSUR) 55, 1–44 (2022).

Jiang, J. and Lu, Z. Learning fairness in multi-agent systems. Advances in Neural Information Processing Systems. Vol. 32. pp. 13854–13865 (Curran Associates Inc., 2019).

Makhlouf, K., Zhioua, S. & Palamidessi, C. Machine learning fairness notions: bridging the gap with real-world applications. Inf. Process. Manag. 58, 102642 (2021).

Tromp, J. et al. A formal validation of a deep learning-based automated workflow for the interpretation of the echocardiogram. Nat. Commun. 13, 6776 (2022).

Dai, L. et al. A deep learning system for detecting diabetic retinopathy across the disease spectrum. Nat. Commun. 12, 3242 (2021).

Eulenberg, P. et al. Reconstructing cell cycle and disease progression using deep learning. Nat. Commun. 8, 463 (2017).

Handa, J. T. et al. A systems biology approach towards understanding and treating non-neovascular age-related macular degeneration. Nat. Commun. 10, 3347 (2019).

Gao, B.-B. & Zhou, H.-Y. Learning to discover multi-class attentional regions for multi-label image recognition. IEEE Trans. Image Process. 30, 5920–5932 (2021).

Zhu, K. and Wu, J. Residual attention: A simple but effective method for multi-label recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (2021), pp. 184–193.

Lanchantin, J. et al. General multi-label image classification with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2021), pp. 16478–16488.

Ridnik, T. et al. Ml-decoder: Scalable and versatile classification head. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (2023), pp. 32–41.

Ridnik, T. et al. Asymmetric loss for multi-label classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (2021), pp. 82–91.

Devlin, J. et al. Bert: pre-training of deep bidirectional transformers for language understanding. In North American Chapter of the Association for Computational Linguistics (2019).

Porwal, P. et al. Idrid: diabetic retinopathy - segmentation and grading challenge. Med. Image Anal. 59, 101561 (2020).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations (2021).

Mehrabi, N. et al. A survey on bias and fairness in machine learning. ACM Comput. Surv. 54, 1–35 (2021).

Calmon, F. et al. Optimized pre-processing for discrimination prevention. Advances in neural information processing systems. Vol. 30.

Xu, D., Yuan, S. and Wu, X. Achieving differential privacy and fairness in logistic regression. In Companion proceedings of The 2019 world wide web conference (2019), pp. 594–599.

Samadi, S. et al. The price of fair pca: One extra dimension. Advances in neural information processing systems. Vol. 31.

Friedler, S. A. et al. A comparative study of fairness-enhancing interventions in machine learning. In Proceedings of the conference on fairness, accountability, and transparency (2019), pp. 329–338.

Woodworth, B. et al. Learning non-discriminatory predictors. In Conference on Learning Theory (2017), pp. 1920–1953.

Agarwal, A. et al. A reductions approach to fair classification. In International conference on machine learning (2018), pp. 60–69.

Agarwal, A., Dudík, M. and Wu, Z. S. Fair regression: quantitative definitions and reduction-based algorithms. In International Conference on Machine Learning (2019), pp. 120–129.

Zhou, B. et al. Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition (2016), pp. 2921–2929.

Gulshan, V. et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316, 2402–2410 (2016).

Fang, H. et al. Adam challenge: detecting age-related macular degeneration from fundus images. IEEE Trans. Med. Imaging 41, 2828–2847 (2022).

Li, N. et al. A benchmark of ocular disease intelligent recognition: one shot for multi-disease detection. In F. Wolf and W. Gao (eds.), Benchmarking, Measuring, and Optimizing (2021), pp. 177–193.

Pachade, S. et al. Retinal fundus multi-disease image dataset (rfmid): A dataset for multi-disease detection research. Data. Vol. 6

He, K. et al. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (2016), pp. 770–778.

Woo, S. et al. Cbam: Convolutional block attention module. In Proceedings of the European conference on computer vision (ECCV) (2018), pp. 3–19.

Deng, J. et al. Imagenet: a large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (2009), pp. 248–255.

Kingma, D. P. and Ba, J. Adam: a method for stochastic optimization. In Y. Bengio and Y. LeCun (eds.), 3rd International Conference on Learning Representations, ICLR 2015 (2015).

Loshchilov, I. and Hutter, F. SGDR: stochastic gradient descent with warm restarts. In 5th International Conference on Learning Representations, ICLR 2017 (2017).

Smith, L. N. and Topin, N. Super-convergence: Very fast training of neural networks using large learning rates. In Artificial intelligence and machine learning for multi-domain operations applications, vol. 11006 (2019), pp. 369–386.

Acknowledgements

We gratefully acknowledge support for this work provided by National Natural Science Foundation of China (NSFC) (Grant No.: 82020108006 to C.Z., U2001209 to B.Y., and 62372117 to W.T.), and Natural Science Foundation of Shanghai (NSFS) (Grant No.: 21ZR1406600 to W.T.).

Author information

Contributions

W.T., Q.W., B.Y., and C.Z. conceived the framework and technique for eye disease research. B.Y., Z.X., W.T., and H.F. designed and implemented the fairer AI algorithm. B.Y., W.T., Q.W., and H.F. designed the validation experiments. C.Z., Q.W., H.K., H.F., and Y.L. collected, organized, and annotated the UWF and NAF fundus images. H.F. trained the network and performed the validation experiments. W.T., Z.X., and Q.W. analyzed the validation results. C.Z., B.Y., and Y.L. supervised the research. All authors had access to the study data and contributed to writing the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Mengnan Du, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tan, W., Wei, Q., Xing, Z. et al. Fairer AI in ophthalmology via implicit fairness learning for mitigating sexism and ageism. Nat Commun 15, 4750 (2024). https://doi.org/10.1038/s41467-024-48972-0

DOI: https://doi.org/10.1038/s41467-024-48972-0
