Abstract

The practical implementation of many quantum algorithms known today is limited by the coherence time of the executing quantum hardware and quantum sampling noise. Here we present a machine learning algorithm, NISQRC, for qubit-based quantum systems that enables inference on temporal data over durations unconstrained by decoherence. NISQRC leverages mid-circuit measurements and deterministic reset operations to reduce circuit executions, while still maintaining an appropriate length persistent temporal memory in the quantum system, confirmed through the proposed Volterra Series analysis. This enables NISQRC to overcome not only limitations imposed by finite coherence, but also information scrambling in monitored circuits and sampling noise, problems that persist even in hypothetical fault-tolerant quantum computers that have yet to be realized. To validate our approach, we consider the channel equalization task to recover test signal symbols that are subject to a distorting channel. Through simulations and experiments on a 7-qubit quantum processor we demonstrate that NISQRC can recover arbitrarily long test signals, not limited by coherence time.

Similar content being viewed by others

Introduction

The development of machine learning algorithms that can handle data with temporal or sequential dependencies, such as recurrent neural networks1 and transformers2, has revolutionized fields like natural language processing3. Real-time processing of streaming data, also known as online inference, is essential for applications such as edge computing, control4, and forecasting5. The use of physical systems whose evolution naturally entails temporal correlations appears, at first sight, to be ideally suited for such applications. An emerging approach to learning, referred to as physical neural networks (PNNs)6,7,8,9, employs a wide variety of physical systems to compute a trainable transformation on an input signal. A branch of PNNs that has proven well suited to online data processing is physical reservoir computing10, distinguished by its trainable component being only a linear projector acting on the observable state of the physical system11. This approach has the enormous benefit of fast convex optimization through singular value decomposition routines and has already enabled temporal learning on various hardware platforms4,12,13,14,15.

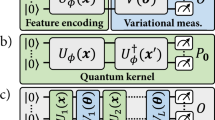

Among many physical systems considered for PNNs, quantum systems are believed to offer an enormous potential for more scalable, resource-efficient, and faster machine learning16,17,18,19,20,21,22,23, due to their evolution taking place in the Hilbert space that scales exponentially with the number of nodes24,25,26,27,28,29,30. However, quantum machine learning (QML) on present-day noisy intermediate-scale quantum (NISQ) hardware has so far been restricted to training and inference on low-dimensional static data due to several difficulties. A fundamental restriction is Quantum Sampling Noise (QSN) – the unavoidable uncertainty arising from the finite sampling of a quantum system – which limits the accuracy of both QML training and inference9,31,32 even on fault-tolerant hardware. In addition, the optimization landscape for training quantum systems often features “barren plateaus”33,34, which are regions where optimization becomes exponentially difficult. These plateaus, especially in the presence of QSN, present a significant challenge to implementing QML at scales relevant to practical applications.

Two further concerns arise when considering inference on long data streams, which call into question whether quantum systems can even in principle be employed for online learning on streaming data. Firstly, without quantum error correction, the operation fidelities and finite coherence times of constituent quantum nodes place a limit on the size of data on which inference can be performed35,36, which would appear to rule out inference on long data streams. Secondly, the nature of measurement on quantum systems imposes a fundamental constraint on continuous information extraction over long times. Backaction due to repeated measurements on quantum systems necessitated by inference on streaming data is expected to lead to the rapid distribution of information between different parts of the system, a phenomenon known as information scrambling and thermalization37,38, making it extremely difficult to track or retrieve the information correlations in the input data. This constraint persists even in an ideal system with perfect coherence, such as one that may be realized by a fault-tolerant quantum computer. It is not known precisely what conditions must be satisfied to avoid information scrambling. For classical dynamical systems, a strict condition known as the fading memory property39,40 is required for a physical system to retain a persistent temporal memory that does not degrade on indefinitely long data streams. This imposes restrictions on the design of a classical reservoir and in particular, how input data is encoded. Here, a mathematical framework known as Volterra Series theory41 provides the basis for analyzing the memory properties of a classical dynamical system. Such a general theory for quantum systems has remained elusive so far.

Here we present a Volterra theory for quantum systems that accounts for measurement backaction, necessary for analyzing the conditions required to endow a quantum system with a persistent temporal memory on streaming data. Based on this Quantum Volterra Theory we propose an algorithm, NISQ Reservoir Computing (NISQRC), that leverages recent technical advances in mid-circuit measurements to process signals of arbitrary duration, not limited by the coherence time of constituent physical qubits (see Fig. 1). The property that enables inference on an indefinitely-long input signal – the ability to avoid measurement-induced thermalization at long times under repeated measurements due to a deterministic reset protocol – is intrinsic to the algorithm: it survives even in the presence of QSN, and does not require operating in a precisely-defined parameter subspace – and is thus unencumbered by barren plateaus.

For concreteness, the architecture is shown for a quantum circuit with a projective computational basis readout; both the underlying quantum system and the measurement scheme can be much more general. Temporal input data is encoded into the evolution of the reservoir at every time-step n via a quantum channel \({{{\mathcal{U}}}}({u}_{n})\); a non-trivial I/O map is enabled via partial readout and subsequent reset of a readout subsystem while a memory subsystem retains the memory of past inputs. Temporal quantum reservoir computing (QRC) output x(n) are obtained via measurements (more precisely, stochastic unbiased estimators \(\bar{{{{\bf{X}}}}}(n)\) of expected features are constructed from S repetitions of the experiment, see Method III A), and a learned linear combination is used to approximate the target functional y(n) of un. The overall execution time of the circuit is O(NS), where N is the length of the input temporal sequence.

Here, we demonstrate the practical viability of NISQRC through application to a task of technological relevance for communication systems, namely, the equalization of a wireless communication channel. Channel equalization aims to reconstruct a message streamed through a noisy, non-linear and distorting communication channel and has been employed in benchmarking reservoir computing architectures11,14 as well as other machine learning algorithms42,43. This task poses a challenge for parametric circuit learning-based algorithms19 because the number of symbols in the message, Nts, to recover in the inference stage directly determines the length of the encoding circuit, which, in turn, is limited by the coherence time of the system. A more critical issue is that the recovery has to be done online, as the message is streamed, which structurally is not suitable for static encoding schemes. We demonstrate through numerical simulation (Results’ subsection “Practical machine learning using temporal data”) and experiments on a 7-qubit quantum processor (Results’ subsection “Experimental results on the quantum system”) that NISQRC enables quantum systems to process signals of arbitrary duration. Most significantly, this ability to continuously extract useful information from a single quantum circuit is not limited by coherence time. Instead, the quantum system’s coherence influences the resulting memory timescale; we show that by balancing the length of individual input encoding steps with the rate of information extraction through mid-circuit measurements, it is possible to endow the circuit with a memory that is appropriate for the ML task at hand. Even in the limit of infinite coherence, temporal memory is still limited by this fundamental trade-off. Reliable inference on a time-dependent signal of duration Trun = 117 μs is demonstrated on a 7-qubit quantum processor with qubit lifetimes in the range 63 μs – 164 μs and T2 = 9 μs – 231 μs. In our experiments, longer durations are restricted by limitations on mid-circuit buffer clearance. To leave no doubt that a persistent memory can be generated, we first compare the experimental results to numerical simulations with the same parameters, showing excellent agreement. Building on the accuracy of numerical simulations in the presence of finite coherence and our noise model, we explicitly demonstrate successful inference on a 5000 symbol signal: the resulting circuit duration is 500 times that of the individual qubit lifetimes.

Here, we also develop a method to efficiently sample from deep circuits under partial measurements. Simulating individual quantum trajectories for circuits with repeated measurements requires the traversal of ever-branching paths conditioned on the measurement results, which becomes rapidly unfeasible for deep circuits. Our numerical method (see “Methods” subsection “The quantum Volterra theory and analysis of NISQRC”) allows us to sample from repeated partial measurements on circuits of arbitrary depth. We use our scheme to numerically explore other seemingly reasonable encoding methods adopted in previous studies, showing that these can lead to a sharp decline in performance when the effect of measurement is properly accounted for. Drawing upon the Quantum Volterra Theory, we unveil the underlying cause: the absence of a persistent memory mechanism.

Results

Time-series processing in quantum systems

The general aim of computation on temporal data is expressed most naturally in terms of functionals of a time-dependent input u = {u−∞, ⋯ , u−1, u0, u1, ⋯ , u∞}. A functional \({{{\mathcal{F}}}}:{{{\bf{u}}}}\mapsto {{{\bf{y}}}}\) maps a bounded function u to another arbitrary bounded function y, where y = {y−∞, ⋯ , y−1, y0, y1, ⋯ , y∞}. Without loss of generality, these functions can be normalized; we choose un ∈ [ − 1, 1] and yn ∈ [ − 1, 1]. Within the reservoir computing paradigm44, this processing is achieved by extracting outputs x(n), where n is a temporal index, from a physical system evolving under said time-dependent stimulus un ≡ u(n). Learning then entails finding a set of optimal time-independent weights w to best approximate a desired \({{{\mathcal{F}}}}\) with a linear projector yn ≡ y(n) = w ⋅ x(n). If the physical system is sufficiently complex, its temporal response x(n) to a time-dependent stimulus u is universal in that it can be used to approximate a large set of functionals \({{{\mathcal{F}}}}[{{{\bf{u}}}}]\) with an error scaling inversely in system size and using only this simple linear output layer27,28,45.

To analyze the utility of this learning framework, it proves useful to quantify the space of functionals \({{{\mathcal{F}}}}[{{{\bf{u}}}}]\) that are accessible. For classical non-linear systems, a firmly established means of doing so is a Volterra series representation of the input-output (I/O) map39:

where the Volterra kernels \({h}_{k}^{(j)}({n}_{1},\cdots \,,{n}_{k})\) characterize the dependence of the systems’ measured output features at time n on its past inputs \({u}_{n-{n}_{\kappa }}\). Hence the support of \({h}_{k}^{(j)}\) over the the temporal domain (n1, ⋯ , nk) quantifies the notion of memory of a particular physical system, with the kernel order k being the corresponding degree of nonlinearity of the map. Most importantly, the Volterra series representation describes a time-invariant I/O map, as well as the property of fading memory, which roughly translates to the property that the reservoir forgets initial conditions and thus depends more strongly on more recent inputs (For instance, for multi-stable dynamical systems, a global representation such as Eq. (1) may not exist. However, a local representation around each steady state can be shown to exist with a finite convergence radius). The realization of such a time-invariant map is essential for a physical system to be reliably employed for inference on an input signal of arbitrary length, and thus for online time series processing.

In classical physical systems, the existence of a unique information steady state and the resulting fading memory property is determined only by the input encoding dynamics – the map from input series to system state. More explicitly, the information extraction step (sometimes referred to as the “output layer”) on a classical system is considered to be a passive action, so that the state can always be observed at the precision required. However, for physical systems operating in the quantum regime, the role of quantum measurement is fundamental: in addition to the inherent uncertainty in quantum measurements as dictated by the Heisenberg uncertainty principle, the conditional dependence of the statistical system state on prior measurement outcomes – referred to as backaction – strongly determines the information that can be extracted. Recent work in circuit-based quantum computation has shown that the qualitative features of the statistical steady state of monitored circuits strongly depend on the rate of measurement46,47. In particular, generic quantum systems that alternate dynamics and measurement (input encoding and output in the present context) are known to give rise to deep thermalization of the memory subsystem48,49, resulting in an approximate Haar-random state with vanishing temporal memory. The absence of a comprehensive framework in QML for analyzing and implementing an encoding-decoding system with finite temporal memory, along with characterization tools for the accessible set of input-output functionals, has hindered both a systematic study and the practical application of online learning methods.

Here, we develop both a general temporal learning framework suitable for qubit-based quantum processors and the associated methods of analysis based on an appropriate generalization of the Volterra Series analysis to monitored quantum systems, the Quantum Volterra Theory (QVT). Our approach incorporates the effects of backaction that results from quantum measurements in the process of information extraction.

We begin by providing a fundamental description of both the information input and output processes that enable general time series processing with quantum systems before specializing in the NISQRC algorithm. The ‘input’ component of the map is given by a pipeline (encoding) that injects temporal data {un} into a quantum system through a general parameterized quantum channel \({{{\mathcal{U}}}}({u}_{n})\hat{\rho }\). This channel could describe for instance continuous Lindblad evolution for a duration τ, namely \({e}^{\tau {{{\mathcal{L}}}}({u}_{n})}\hat{\rho }\), as in Results’ subsection “Practical machine learning using temporal data”, or a discrete set of gates as in Results’ subsection “Experimental results on the quantum system”; \({{{\mathcal{U}}}}({u}_{n})\) is generally applied to all qubits, and we assume only that they are not explicitly monitored for its duration.

To enable persistent memory in the presence of quantum measurement, we separate the L-qubit system into M memory qubits and R readout qubits (L = M + R) and denote their respective Hilbert spaces with superscript M and R. After evolution under any input un, only the R readout qubits are (simultaneously) measured; this separation therefore allows for the concept of partial measurements of the full quantum system, which proves critical to the success of NISQRC. The measurement scheme itself can be very general, characterized by a positive operator-valued measure (POVM)

satisfying \({\hat{E}}_{j}\succcurlyeq 0\) and \({\sum }_{j}{\hat{E}}_{j}={\hat{I}}^{\otimes R}\). Here we will consider a practically implementable measurement in the readout qubit computational basis, described by \({\hat{E}}_{j}=\vert {{{{\bf{b}}}}}_{j}\rangle \,\langle {{{{\bf{b}}}}}_{j}\vert\): each bit-string bj is the R-bit binary representation of integer j ∈ {0, 1, ⋯ , 2R − 1} denoting the bit-wise state of the measured qubits.

As elucidated by the QVT analysis of Results’ subsection “Quantum Volterra Theory”, a purification mechanism must necessarily accompany readout to prevent thermalization and furnish our quantum architecture with persistent fading memory. This is accomplished by following each projective measurement operation with a deterministic reset to the ground state \(\left\vert 0\right\rangle\). The resulting measure-reset operation we employ throughout this paper is formally described by the POVM operators \({\hat{E}}_{j}={\hat{K}}_{j}^{{{\dagger}} }{\hat{K}}_{j}\) in Eq. (2), with non-hermitian Kraus operators \({{\hat{K}}_{j}=\vert {{{{\bf{b}}}}}_{0}\rangle \,\langle {{{{\bf{b}}}}}_{j}\vert}\). In each measure-reset step, only the readout qubits are measured in the computational basis and then reset to the ground state, irrespective of the measurement outcome.

NISQRC is distinguished by the iterative encode-measure-reset scheme depicted in Fig. 1. Explicitly, for a given input sequence u with length N, we initialize the system in the state \({\hat{\rho }}_{0}^{{\mathsf{M}}}\,\otimes \,\left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\). For each element of the input sequence un, an encoding step is comprised of unmonitored evolution of all qubits via \({{{\mathcal{U}}}}({u}_{n})\) followed by a measure-reset operation \({{{{\mathcal{O}}}}}_{R}\). The measurement outcome in this single shot is a random bitstring b(s)(n), and the resulting state is \({\hat{\rho }}_{n}^{{\mathsf{M}},{\mathtt{cond}}}\,\otimes \,\left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\): the memory qubits are in a state conditioned on the measurement outcome, and the readout qubits are reset. The subsequent input is then encoded in this state, i.e., \({{{\mathcal{U}}}}({u}_{n+1})\,\left({\hat{\rho }}_{n}^{{\mathsf{M}},{\mathtt{cond}}}\,\otimes \,\left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\right)\), and the process is iterated as long as there is data in the pipeline. This structure elucidates the naming of the unmeasured memory qubits: these are the only qubits that retain the memory of past inputs.

The above description yields a set of N measurement outcomes {b(s)(n)} observed in a single shot s of the quantum circuit. In order to obtain statistics and therefore to output features as expected values of observables \({\hat{M}}_{j}\), we perform S repetitions of this circuit for a given u sequence: the total execution time is NS, linear with respect to shots S and input length N. The resulting readout features are formally defined as the probability

which are estimated by the empirical mean \({\bar{X}}_{j}(n)=(1/S){\sum }_{s}\delta ({{{{\bf{b}}}}}^{(s)}(n),{{{{\bf{b}}}}}_{j})\) of \({\{{{{{\bf{b}}}}}^{(s)}(n)\}}_{s\in [S]}\) (see “Methods” subsection “Generating features via conditional evolution and measurement” for more details of the NISQRC algorithm). We show in Supplementary Note 2 that at time step n, xj(n) can be computed efficiently through

where \({\hat{\rho }}_{n}^{{\mathsf{MR}}}\) is the effective full L-qubit system state at time step n prior to measurement.

The output \({y}_{n}\equiv {{{\bf{y}}}}(n)={{{\bf{w}}}}\cdot {{{\bf{x}}}}(n)\in {\mathbb{R}}\) is obtained from the measurement results in each step, defining the functional I/O map which we characterize next (see details in “Methods” subsection “Generating features via conditional evolution and measurement” and “The quantum Volterra theory and analysis of NISQRC”). This complete architecture, from the quantum circuit generating measurement outcomes for a given input, to the construction of weighted output features, is depicted schematically in Fig. 1. We note that reset operations have been used implicitly in prior work on quantum reservoir algorithms, where the successive inputs are encoded in the state of an ‘input’ qubit26,50. However the critical role of the reset operation in endowing a quantum reservoir with a persistent memory, discussed in the next subsection, has so far not been highlighted.

While for null inputs (i.e., un = 0 for all n) such quantum systems are guaranteed to have a unique statistical steady state, the existence of a nontrivial memory and kernel structure is much more involved. Through QVT (see “Methods” subsection “The quantum Volterra theory and analysis of NISQRC”), we show that these requirements place strong constraints on the encoding and measurement steps viz. the choice of (\({{{\mathcal{U}}}}\), \({\hat{M}}_{j}\)). This then enables us to propose an algorithm for online learning that provably provides a controllable and time-invariant temporal memory (which will be referred to as persistent memory) – enabling inference on arbitrarily long input sequences even on NISQ hardware without any error mitigation or correction.

Quantum Volterra theory

In NISQRC the purpose of the partial reset operation is to endow the system with asymptotic time-invariance, a finite persistent memory, and a nontrivial Volterra Series expansion for the system state (see “Methods” subsection “The quantum Volterra theory and analysis of NISQRC” and Supplementary Note 3):

where all Volterra kernels \({\hat{h}}_{k}\) are quantum operators. The classical kernels in Eq. (1) describing the measured features can be extracted through \({h}_{k}^{(\; j)}={{{\rm{Tr}}}}({\hat{M}}_{j}{\hat{h}}_{k})\). We refer to this analysis as the Quantum Volterra Theory (QVT). Through analytical arguments based on the QVT, we show that omitting the partial reset operation renders all Volterra kernels trivial – a finding corroborated by our experimental results in Results’ subsection “Experimental results on quantum system”.

QVT also provides a way to characterize the important memory time-scales of the I/O map generated by the NISQRC algorithm through a given encoding, which we use in Results’ subsection “Practical machine learning using temporal data” to aid encoding design for a specific ML task on an experimental system. In what follows, we show that inference on an indefinitely long input sequence can be done even in the presence of dissipation and decoherence.

Consider an input-encoding \({{{\mathcal{U}}}}({u}_{n})\hat{\rho }={e}^{\tau {{{\mathcal{L}}}}({u}_{n})}\hat{\rho }\) where

representing evolution under a parameterized Hamiltonian \(\hat{H}({u}_{n})\) for a duration τ in the presence of dissipation \({{{{\mathcal{D}}}}}_{{{{\rm{T}}}}}\). For concreteness, we take \({{{{\mathcal{D}}}}}_{{{{\rm{T}}}}}={\sum }_{i=1}^{L}{\gamma }_{i}{{{\mathcal{D}}}}[{\hat{\sigma }}_{i}^{-,z}]\) describing decoherence processes and study here a specific Ising Hamiltonian encoding \(\hat{H}(u)={\hat{H}}_{0}+u\cdot {\hat{H}}_{1}\) inspired by quantum annealing and simulation architectures (other ansätze can likewise be considered),

The coupling strength \({J}_{i,i^{\prime} }\), transverse x-field strength \({\eta }_{i}^{x}\) and longitudinal z-drive strength \({\eta }_{i}^{z}\) are randomly chosen but then fixed for all inputs {un} (see Supplementary Note 1 for more details). The encoding channel is applied for duration τ, and each qubit has a finite lifetime T1 = γ−1. We will specify the number of memory and readout qubits of a given QRC with the notation (M + R).

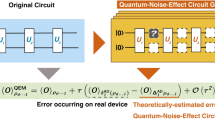

In Fig. 2(a), we plot the first two Volterra kernels h1 and h2 (cf. Eq. (1)), for a random (2 + 1)-qubit QRC using the above encoding and the reset scheme. The expression for these kernels has been derived from the QVT; their numerical construction is discussed in Methods, also see Supplementary Equations 43–45. Importantly, we find all kernels have an essential dependence on the statistical steady state or fixed-point in the absence of any input: \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}={\lim }_{n\to \infty }{\hat{\rho }}_{n}^{{\mathsf{M}}}{| }_{{u}_{n}=0}\). Here \({\hat{\rho }}_{n}^{{\mathsf{M}}}{| }_{{u}_{n}=0}={{{{\mathcal{P}}}}}_{0}^{n}{\hat{\rho }}_{0}^{{\mathsf{M}}}\) is obtained by n applications of the null-input single-step quantum channel \({{{{\mathcal{P}}}}}_{0}\), defined in Methods’ subsection “The quantum Volterra theory and analysis of NISQRC”. The properties of quantum Volterra kernels, including their characteristic decay time, can be related to the spectrum of \({{{{\mathcal{P}}}}}_{0}\), defined by \({{{{\mathcal{P}}}}}_{0}{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}={\lambda }_{\alpha }{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\). Here \({\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\) are eigenvectors that exist in the 4M-dimensional space of memory subsystem states. The eigenvalues satisfy \(1={\lambda }_{1}\ge | {\lambda }_{2}| \ge \cdots \ge | {\lambda }_{{4}^{M}}| \ge 0\); examples are plotted in Fig. 2(b) for various values of τ. The unique eigenvector corresponding to the largest eigenvalue λ1 = 1 is special, being the fixed point of the memory subsystem, \({\hat{\varrho }}_{1}^{{\mathsf{M}}}={\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\), reached once transients have died out.

a First and second order Volterra kernels in a (2 + 1)-qubit QRC, which vanish at large n1 and n2 due to finite memory nM. b Fixed-point of memory subsystem \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\) with reset (top) and without reset (bottom), starting from an arbitrary initial state (center). Without reset, the fixed point is always the trivial fully-mixed state and Volterra kernels vanish. The top panel shows the distribution of the 4M = 256 eigenvalues of \({{{{\mathcal{P}}}}}_{0}\) in a (4 + 2)-qubit QRC, where red dots correspond to the static unit eigenvalue λ1 = 1. The remaining eigenvalues λα≥2 (blue) evolve with evolution time τ, leading to a variable memory time. The bottom panel shows the resulting memory time nM as a function of the evolution duration τ. c Memory time nM as a function of qubit lifetimes T1 = γ−1, in terms of the evolution duration τ in a (4 + 2)-qubit QRC. Provided T1 ≫ τ, \({n}_{{{{\rm{M}}}}}\to {n}_{{{{\rm{M}}}}}^{0}\), so that the QRC memory is mostly dominated by its lossless dynamical map and not by T1 in this regime.

The second largest eigenvalue λ2 determines the time over which memory of an initial state persists as this fixed point is approached, and is used to identify a memory time \({n}_{{{{\rm{M}}}}}=-1/\ln | {\lambda }_{2}|\). Note that this quantity is dimensionless and can be converted to the actual passage of time through multiplication by τ, while nM itself non-trivially depends on τ (see Fig. 2(b)). Memory time describes an effective ‘envelope’ for a system’s Volterra kernels; an additional nontrivial structure is also required for QRC to produce meaningful functionals of past inputs. With the spectral problem at hand, we next analyze the information-theoretical benefit of the reset operation. Firstly, the absence of the unconditional reset operation produces a unital \({{{{\mathcal{P}}}}}_{0}\) (“unital” refers to an operator that maps the identity matrix to itself) with resulting \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}={I}^{\otimes M}/{2}^{M}\). This fully mixed state is inexorably approached after nM steps under any input sequence and retains no information on past inputs: all Volterra kernels, therefore, vanish, despite a generally-finite nM. Such algorithms (e.g., refs. 27,30) are only capable of processing input sequences of length nM and would not retain a persistent memory necessary for inference on longer sequences of inputs. Hence such encodings would be unsuitable for online learning on streaming data. The possibility of inference through the transients has been observed and utilized before (see e.g., refs. 12,51,52) in the context of classical reservoir computing. However, the simple yet essential inclusion of the purifying reset operation avoids unitality – more generally, a common fixed point for all u-encoding channels – which we find is the key to enabling nontrivial Volterra kernels and consequent online QRC processing (see “Methods” subsection “The quantum Volterra theory and analysis of NISQRC” and also ref. 53). Once such an I/O map is realized, λα and the consequent memory properties can be meaningfully controlled by the QRC encoding parameters. As shown in Fig. 2(b) the characteristic decay time set by nM, for instance, decreases across several orders-of-magnitude with increasing τ.

The partial measurement and reset protocol also resolves the unfavorable quadratic runtime scaling of prior approaches. A wide range of proposals and implementations of QRC27,29,54 consider the read-out of all constituent qubits at every output step, terminating the computation. Not only does this preclude inference on streaming data, it requires the entire input sequence to be re-encoded to proceed one step further in the computation, leading to an O(N2S) running time. As shown in schematic Fig. 1, incorporating partial measurement with reset in NISQRC does not require such a re-encoding; the entire input sequence can be processed in any given measurement shot S, enabling online processing with an O(NS) runtime, while maintaining a controllable memory timescale. We note that an alternative scheme to remedy this issue has been suggested in ref. 50, which relies on information extraction through continuous weak measurement.

Next, we show that the nontrivial nature of Volterra kernels realized by the NISQRC algorithm is preserved under the inclusion of dissipation. For example, we explore the effect of finite qubit T1 on nM in Fig. 2(c). If \({T}_{1}/\tau \, > \, {n}_{{{{\rm{M}}}}}^{0}\), where \({n}_{{{{\rm{M}}}}}^{0}\) is the memory time of the lossless map, then \({n}_{{{{\rm{M}}}}}\to {n}_{{{{\rm{M}}}}}^{0}\) and is essentially independent of T1, determined instead by the unitary and measurement-induced dynamics. Therefore the design of the encoding algorithm has to be guided by matching the memory time of the reservoir to the longest correlation time in the input data. Additional design criteria are discussed in Section Discussion. As a result, lossy QRCs can still be deployed for online processing, with a total run time Trun that is unconstrained by (and can therefore far exceed) T1. We will demonstrate this via simulations in Results’ subsection “Practical machine learning using temporal data” with Trun ≫ T1, and via experiments in Results’ subsection “Experimental results on the quantum system” for Trun ≃ T1; in the latter Trun is limited only by memory buffer constraints on the classical backend.

Practical machine learning using temporal data

Thus far, we have assumed outputs to be expected features xj(n), which, in principle assumes an infinite number of measurements. In any practical implementation, one must instead estimate these features with S shots or repetitions of the algorithm for a given input u. The resulting QSN constrains the learning performance achievable in experiments on quantum processors in a way that can be fully characterized9 and is therefore also included in numerical simulations which we present next.

To demonstrate the utility of the NISQRC framework, we consider a practical application of machine learning on time-dependent classical data: the channel equalization (CE) task11,14. Suppose one wishes to transmit a message m(n) of length N, which here takes discrete values from { − 3, − 1, 1, 3}, through an unknown noisy channel to a receiver. This medium generally distorts the signal, so the received version u(n) is different from the intended m(n). Channel equalization seeks to reconstruct the original message m(n) from the corrupted signal u(n) as accurately as possible, and is of fundamental importance in communication systems. Specifically, we assume the message is corrupted by nonlinear receiver saturation, inter-symbol interference (a linear kernel), and additive white noise11,14 (additional details in Supplementary Note 6). As shown in Fig. 3(a), even if one has access to the exact inverse of the resulting nonlinear filter, the signal-to-noise (SNR) of the additive noise bounds the minimum achievable error rate. We also show the error rates of simply rounding u(n) to the nearest m, and a direct logistic regression on u(n) (i.e., a single-layer perceptron with a softmax activation – see Supplementary Note 6). for comparison. Both these approaches are linear and memory-less and, therefore, perform poorly on the non-trivial nonlinear filter we consider, although logistic regression outperforms rounding (≈ 30%) by inverting the linear portion of the distortion.

a Error rates on test messages for the CE task with a Hamiltonian ansatz (2 + 4)-qubit QRC for two distinct connectivities shown in (b) The fully-connected QRC in red has Jacobian rank RJ = 2R − 1 = 15 and is shown for both S → ∞ (circles) and finite S = 105 (⋆), whereas the split QRC has RJ = 2(22 − 1) = 6 and only S → ∞ is plotted in magenta. These are compared with the error rates of naive rounding (black dash-dots) and logistic regression on the current signal (yellow +, see Supplementary Note 6), and the exact channel inverse (blue dashed). c Performance of connected QRC on SNR = 20dB test signals (solid) of increasing length Nts ≤ 5000, with shots S = 105. Training error on N = 100-length messages is indicated for comparison in dashed lines. Without reset (red) or using 4 ancilla qubit ansatz with quantum non-demolition (QND) readout (proposed in ref. 30, green), the algorithms both fail, approaching the random guessing error rate and showing that both architectures suffer from the thermalization problem. Performance is only slightly reduced from the dissipation-free case (blue) when strong decay T1 = 10τ is included (purple). All error rates in (c) are averaged over 8 different test messages.

We now perform the CE task using the NISQRC algorithm on a simulated (2 + 4)-qubit reservoir under the ansatz of Eq. (7), as could be realized in quantum annealing hardware (see Fig. 3). We will later demonstrate the same task in experiments with a completely different quantum system and encoding ansatz, implemented on a superconducting quantum processor (see Fig. 4). The ability to efficiently compute the Volterra kernels for this quantum system immediately provides guidance regarding parameter choices. In particular, we choose random parameter distributions such that the average (across the circuit) \({J}_{i,i^{\prime} }\tau\), \({\eta }_{i}^{x}\tau\) and \({\eta }_{i}^{z}\tau\) provides a memory time nM ≈ O(101), on the order of the length of the distorting linear kernel h(n), which is 8. These QRCs have K = 24 = 16 readout features \({\{{x}_{j}(n)\}}_{j\in [K]}\) whose corresponding time-independent output weights w are learned by minimizing cross-entropy loss on 100 training messages of length N = 100 (see Supplementary Note 6 for additional details). The resulting NISQRC performance on test messages is studied in Fig. 3(a), where we compare two distinct coupling maps shown in Fig. 3(b). In the highly-connected (lower) system the performance approaches the theoretical bound for S → ∞; finite sampling (here, S = 105 is in the range typically used in experiments) increases the error rate as expected, but the increase in error rate in numerical simulations is observed to depend on the encoding (not reported here). In all cases, NISQRC significantly outperforms direct logistic regression due to its ability to reliably implement non-linear memory kernels and therefore approximate the distorting channel inverse.

a (3 + 4)-qubit linear chain of the ibm_algiers device used to perform the CE task. Filled colors represent qubit T1 time according to the displayed colorbar, for the specific experimental run with the split chain. Qubits indexed {8, 14, 19} are used for memory and qubits {5, 11, 16, 22} for readout, and gate-decomposition of the encoding unitary \(\hat{U}({u}_{n})\) is depicted. Removing gates shaded in brown yields two smaller chains to explore the role of connectivity, while removing reset operations (shaded peach) allows switching from a non-unital to a unital I/O map. b Testing error rates for the SNR = 20dB CE task of Results' subsection “Practical machine learning using temporal data” with N = 20 on the ibm_algiers device in filled circles and in simulation in open circles, as a function of number of shots S. The connected circuit in blue outperforms the split circuit in brown and the circuit without reset in peach. For comparison, we plot the testing error rate of logistic regression (yellow line), as well as random guessing (black dashed line).

We note that the split system (upper) performs significantly worse even without sampling noise: this is because the quantum system lives in a smaller effective Hilbert space – the product of two disconnected three-qubit systems – and is far less expressive as a result. Although in both cases the number of measured features is the same, those from the connected system span a richer and independent space of functionals. This functional independence can be quantified by the Jacobian rank RJ, which is the number of independent u-gradients that can be represented by a given encoding (Supplementary Note 5); an increased connectivity and complexity of state-description generally manifests as an increase in the Jacobian rank and consequent improved CE task performance. This observation can be viewed as a generalization of the findings in time-independent computation9 to tasks over temporally-varying data, and also agrees with related recent theoretical work29.

Most importantly, we demonstrate in Fig. 3(c) that the NISQRC algorithm enables the use of a quantum reservoir for online learning. In all cases studied here, N = 100 is used for training and the length of the SNR = 20dB test messages Nts is varied. As suggested by the QVT, the performance is unaffected by Nts even if it greatly exceeds the lifetime of individual qubits: Nts = Trun/τ ≫ T1/τ = 10, and NISQRC can, therefore, be used to perform inference on an indefinite-length signal with noisy quantum hardware. As seen in the same figure, while dissipation imposes only a small constant performance penalty, the reset operation is critical: if removed, the error rate returns to that of random guessing, as the Volterra kernels vanish and the I/O map becomes trivial.

We finally note that an arbitrarily-inserted reset operation may not be sufficient to create a non-zero persistent memory. For instance, an analysis based on the QVT shows that despite its use in a recently studied reservoir algorithm30 (based on a quantum non-demolition measurement proposal in ref. 27), the reset operation can not avoid a zero persistent memory, effectively resulting in an amnesiac reservoir. In this scheme, the quantum circuit is coupled to ancilla qubits by using transversal CNOT gates. Upon closer examination it is found that while the projective measurement of ancilla qubits leads to read out of system qubits and their collapse to the ancilla state via backaction, subsequent reset of the ancillas does not reset the system qubits. This scheme therefore suffers from the same thermalization problem as any no-reset NISQRC does, and hence has zero persistent memory. We verify this analysis in Fig. 3(c) by implementing the CE task with a four-ancilla-qubit circuit. The error rates are found to be very close to the no-reset-NISQRC one, whose I/O map we have shown before to be trivial (see also Fig. 3(c)).

Experimental results on quantum systems

We now demonstrate NISQRC in action by performing the SNR = 20dB CE task on an IBM Quantum superconducting processor. To highlight the generality of our NISQRC approach, we now consider a circuit-based parametric encoding scheme inspired by a Trotterization of Eq. (7), suitable for gate-based quantum computers. In particular, we use a L = 7 qubit linear subgraph of the ibm_algiers device, with M = 3 memory qubits and R = 4 readout qubits in alternating positions, as depicted in Fig. 4(a). The encoding unitary for each time step n is also shown: \(\hat{U}({u}_{n})={\left({{{\mathcal{W}}}}(J){{{{\mathcal{R}}}}}_{z}({{{{\boldsymbol{\theta }}}}}^{z}+{{{{\boldsymbol{\theta }}}}}^{I}{u}_{n}){{{{\mathcal{R}}}}}_{x}({{{{\boldsymbol{\theta }}}}}^{x})\right)}^{{n}_{T}}\), where \({{{{\mathcal{R}}}}}_{x,z}\) are composite Pauli-rotations applied qubit-wise, and \({{{\mathcal{W}}}}(J)\) defines composite \({{{{\mathcal{R}}}}}_{zz}\) gates between neighboring qubits, all repeated nT = 3 times (for parameters θx,z,I, J and further details see “Methods” subsection “IBMQ implementation”).

Realizing the NISQRC framework with the circuit ansatz depicted in Fig. 4(a) requires the state-of-the-art implementation of mid-circuit measurements and qubit reset, which has recently become possible on IBM Quantum hardware55. We plot the testing error using the indicated linear chain of the ibm_algiers device as a function of the number of shots S in solid blue Fig. 4(b), alongside simulations of both the ideal unitary circuit and with qubit losses in open circles. We clearly observe that performance is influenced by the number of shots available, and hence by QSN. In particular, for a sufficiently large S, the device outperforms the same logistic regression method considered previously. For the circuit runs, the average qubit coherence times over 7 qubits are \({T}_{1}^{{{{\rm{av}}}}}=124\,\mu{\rm{s}}\), \({T}_{2}^{{{{\rm{av}}}}}=91\mu{\rm{s}}\) (see Supplementary Note 9 for the ranges of all parameters, which vary over the time of runs as well), while the total circuit run time for a single message is Trun ≈ 117 μs. Even though \({T}_{{{{\rm{run}}}}}\simeq {T}_{1}^{{{{\rm{av}}}}}\), the CE task performance using NISQRC on ibm_algiers is essentially independent of qubit lifetimes. This is emphatically demonstrated by the excellent agreement between the experimental results and simulations assuming infinite coherence-time qubits. In fact, finite qubit decay consistent with ibm_algiers leaves simulation results practically unchanged (as plotted in dashed blue); we find that T1 times would have to be over an order of magnitude shorter to begin to detrimentally impact NISQRC performance on this device (see Supplementary Note 7). We further find that artificially increasing Trun beyond T1 by introducing controlled delays in each layer also leaves performance unchanged (see Supplementary Note 8).

Using the same device, we are able to analyze several important aspects of the NISQRC algorithm. First, we consider the same CE task with a split chain, where the connection between the qubits labeled ‘14’ and ‘16’ on ibm_algiers is severed by removing the Rzz gate highlighted in brown in Fig. 4(a). The resulting device performance using these two smaller chains is worse, consistent both with simulations of the same circuit and the analogous split Hamiltonian ansatz studied in Results’ subsection “Practical machine learning using temporal data”. Next, we return to the 7 qubit chain but now remove reset operations in the NISQRC architecture, shaded in red in Fig. 4(a): all other gates and readout operations are unchanged. The device performance now approaches that of random guessing: the absence of the crucial reset operation leads to an amnesiac QRC with no dependence on past or present inputs. This remarkable finding reinforces that reset operations demanded by the NISQRC algorithm are, therefore, essential to imbue the QRC with memory and enable any non-trivial temporal data processing.

We note that for these experiments, while performance qualitatively agrees well with simulations, some quantitative discrepancies are observed. Our deployment of mid-circuit measurements in their earliest implementation on IBM Quantum was accompanied by some technical constraints; for example, not all shots for a given instance of the CE task could be collected in contiguous repeated device runs, instead sometimes being separated by several hours (due to queuing times as well as classical memory buffer constraints on the number of shots that could be collected in a single experiment). Simply put, this means that the device could suffer non-trivial parameter drifts from one type of device configuration to the next, and even during the course of collecting all shots for a specific configuration. In particular, we find that qubit lifetimes for experiments with the split chain, and the connected chain without reset, were significantly shorter than for the connected chain with reset (see Supplementary Tables 1–3), which could lead to the discrepancy in comparison to simulations, where we assumed a fixed coherence time distribution. Resource constraints similarly restrict us to limited training and testing set sizes, which can also lead to variance in performance. We anticipate such technical constraints to be alleviated as mid-circuit measurement implementations mature on IBM Quantum, enabling even more accurate correspondence with simulations.

We also note that there is room for improvement in CE performance when compared against Hamiltonian ansatz NISQRC of similar scale in Fig. 3. A key difference is the reduced number of connections in the nearest-neighbor linear chain employed on ibm_algiers; including effective \({{{{\mathcal{R}}}}}_{zz}\) gates between non-adjacent qubits significantly increases the gate-depth of the encoding step, enhancing sensitivity to circuit-fidelity due to increasing runtimes. The circuit ansatz can also be optimized - using knowledge of the Volterra kernels - for better nonlinear processing capabilities demanded by the CE task, in addition to memory capacity determined by nM. Nonetheless, the demonstrated performance and robustness of the NISQRC framework to dissipation already suggests its viability for increasingly complex time-dependent learning tasks using actual quantum hardware.

Discussion

A key technical advancement in our work is the formulation of the Quantum Volterra Theory (QVT) to describe the time-invariant input-output map of a quantum system under temporal inputs and repeated measurements. Insights provided by the QVT enabled us to propose the essential component of the NISQRC algorithm - deterministic post-measurement reset to avoid thermalization due to repeated measurements - which allows the quantum system to retain persistent memory of temporal inputs even under projective measurements and their associated strong backaction. The resulting algorithm enables inference on a signal that can be arbitrarily long, provided the encoding is designed to endow the reservoir with a memory that matches the longest correlation time in the data.

While we have applied the QVT to qubit-based circuits, our analysis does not make an explicit assumption on the Hilbert space dimension of the quantum system, and allows for completely general measurements through its formulation in terms of POVMs; as a result, it can be applied to other finite-level quantum systems such as qudits24, and can be extended to continuous-variable quantum systems28,31. We, therefore, believe the QVT provides the ideal framework to analyze the memory and computational capacity of temporal information processing schemes using general quantum systems and their associated measurement protocols. We note here that the use of continuous weak measurements, analyzed in ref. 50, provides an alternative approach to endowing the reservoir with finite persistent memory and can be analyzed with QVT for its task-specific optimization.

Going beyond the crucial reset component, we have demonstrated that QVT can be invaluable in identifying general design principles for qubit-based systems as reservoirs. For example, while measuring some fraction of qubits is essential for extracting information, measuring all qubits imposes a trivial memory time. We employ M ≃ R in this work, but an optimal separation of memory and readout qubits may depend on specific tasks. A simple rule of thumb is to choose M/R, together with τ and other drive strengths, to match the memory time of the physical system to the longest correlation time in the data. In the channel equalization task studied in Results’ subsection “Practical machine learning using temporal data”, the data correlation time is fixed by choice of the distorting channel and we have then chosen M/R to endow the quantum system with a memory time – calculated through the QVT formalism – that matches that time, about 8 steps (recall memory time is measured in the number of encoding steps). Especially, the duration of the unmonitored dynamics, τ, has been chosen to be long enough to generate non-linear kernels that match the known order of the non-linearity of the distorting channel, but short enough to avoid limiting the memory time by the shortest T1. For the latter requirement, the kind of analysis shown in Fig. 2c, calculated through QVT, can act as a very helpful guide. We have observed that even when there is a large spread in T1, the physical memory time may be longer than the shortest T1, presumably through the delocalization of the information on longer-lived memory qubits in the circuit. In addition, qubit connectivity, analyzed in Fig. 3a, can help with the generation of functions that are sufficiently complex to match the functional complexity of the task.

Finally, the most crucial design criterion for any quantum system intended to process streaming data is that the map \({{{{\mathcal{P}}}}}_{0}\) be non-unital. In an architecture with memory and readout qubits, the presence of a reset operation is essential but not on its own sufficient: as noted earlier the quantum channel must additionally contain input-dependent operations on both memory and readout qubits to prevent scrambling of memory qubits and endow the QRC state with the fading memory property. To address an important example, it is straightforward to confirm that any channel with input-dependent operations on only memory qubits \({{{\mathcal{U}}}}({u}_{n})\) and an arbitrary set of controlled gates from memory to readout qubits (e.g., Fig. 5d in ref. 30) is unital on memory qubits and therefore lacks a persistent temporal memory. We note that in such cases the reservoir can still be trained to implement complicated functions in the transient state12, but genuine online learning will not be possible. The QVT presented here prescribes how to avoid such pitfalls when designing a quantum channel for temporal data processing: one can simply check whether the resulting \({{{{\mathcal{P}}}}}_{0}\) is a unital map. We have not carried out here an exhaustive study of the optimal design principles for more complex or general classes of tasks, but we hope that the simple and fundamental guidelines we have followed for designing an experimental reservoir to accurately carry out equalization on RF-encoded messages illustrate the utility of QVT in the design of a hardware reservoir.

By enabling online learning in the presence of losses, NISQRC paves the way to harness quantum machines for temporal data processing in far more complex applications than the CE task demonstrated here. Examples include spatiotemporal integrators, and ML tasks where spatial information is temporally encoded, such as video processing. Recent results provide evidence that the most compelling applications, however, lie in the domain of machine learning on weak signals originating from other, potentially complex quantum systems15,56, for the purposes of quantum state classification. In tackling such increasingly complex tasks, the scale of quantum devices required is likely to be larger than those employed here. The NISQRC framework can be applied irrespective of device size; however, its readout features at a given time live in a K = 2R dimensional space. For applications requiring a large R, the exponential growth of the feature-space dimension may give rise to concerns with under-sampling, as in practice the available number of shots S may not be sufficiently large. In such large-R regimes, certain linear combinations of measured features can be found, known as eigentasks, that provably maximize the SNR9 of the functions approximated by a given physical quantum system trained with S shots. Eigentask analysis provides very effective strategies for noise mitigation. In ref. 9 the Eigentask Learning methodology was proposed to enhance generalization in supervised learning. For the present work, such noise mitigation strategies were not needed as the size of the devices used was sufficiently small to efficiently sample. An interesting direction is the application of Eigentask analysis to NISQRC, which we leave to future work.

The present work and the availability of an algorithm for information processing beyond the coherence time presents new opportunities for mid-circuit measurement and control. While mid-circuit measurement is essential for quantum error correction57, its recent availability on cloud-based quantum computers has allowed the exploration of other quantum applications on near-term noisy qubits. Local operations such as measurement followed by classical control for gate teleportation have been used to generate nonlocal entanglement58,59,60. In addition, mid-circuit measurements have been employed to study critical phenomena such as phase transitions61,62,63 and are predicted to allow nonlinear subroutines in quantum algorithms64. The present work opens up a new direction in this application space, namely the design of self-adapting circuits for inference on temporal data with slowly changing statistics. This would require dynamic programming capabilities for mid-circuit measurements, not employed in the present work. We show here that implementing even the relatively simple CE task challenges current capabilities for repeated measurements and control; having the means to deploy more complex quantum processors for temporal learning via NISQRC can push hardware advancements to more tightly integrate quantum and classical processing for efficient machine-based inference.

Methods

Generating features via conditional evolution and measurement

Here we detail how an input-output functional map is obtained in the NISQRC framework. The quantum system is initialized to \({\hat{\rho }}_{0}^{{\mathsf{MR}}}={\hat{\rho }}_{0}^{{\mathsf{M}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\), where \({\hat{\rho }}_{0}^{{\mathsf{M}}}\) is the initial state, which is usually set to be \(\left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes M}\). Then, for each run or ‘shot’ indexed by s, the process described in the following paragraph is repeated.

Before executing the n-th step, the overall state can be described as \({\hat{\rho }}_{n-1}^{{\mathsf{M}},{\mathtt{cond}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\) (usually pure), where the superscript cond emphasizes that the memory subsystem state is generally conditioned on the history of all previous inputs \({\{{u}_{m}\}}_{m\le n-1}\) and all previous stochastic measurement outcomes. The readout subsystem state is in a specific pure state, which can be ensured by the deterministic reset operation we describe shortly. Then, the current input un is encoded in the quantum system via the parameterized quantum channel \({{{\mathcal{U}}}}({u}_{n})\), generating the state \({\hat{\rho }}_{n}^{{\mathsf{MR}},{\mathtt{cond}}}={{{\mathcal{U}}}}({u}_{n})({\hat{\rho }}_{n-1}^{{\mathsf{M}},{\mathtt{cond}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R})\). In this work, \({{{\mathcal{U}}}}({u}_{n})\) takes the form of continuous evolution under Eq. (6) for a duration τ, or the discrete gate-sequence \(\hat{U}({u}_{n})\) depicted in Fig. 4a. The R readout qubits are then measured per Eq. (2), and the observed outcome is represented as an R-bit string: \({{{{\bf{b}}}}}^{(s)}(n)=({b}_{M+1}^{(s)}(n),\cdots \,,{b}_{M+R}^{(s)}(n))\). Here we consider simple ‘computational basis’ (i.e., \({\hat{\sigma }}^{z}\)) measurements, where each bit simply denotes the observed qubit state. A given outcome j occurs with conditional probability \({{{\rm{Tr}}}}({\hat{M}}_{j}{\hat{\rho }}_{n}^{{\mathsf{MR}},{\mathtt{cond}}})\) as given by the Born rule, and the quantum state collapses to the new state \({\hat{\rho }}_{n}^{{\mathsf{M}},{\mathtt{cond}}}\otimes \left\vert {{{{\bf{b}}}}}_{j}\rangle \,\langle {{{{\bf{b}}}}}_{j}\right\vert\) associated with this outcome. Finally, all R readout qubits are deterministically reset to the ground state (regardless of the measurement outcome); the quantum system is, therefore, in the state \({\hat{\rho }}_{n}^{{\mathsf{M}},{\mathtt{cond}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}\). This serves as the initial state into which the next input un+1 is encoded, and the above process is iterated until the entire input sequence u is processed. It is important to notice that \({\hat{\rho }}_{n}^{{\mathsf{M}}}\) depends on the observed outcome in step n − 1, and thus, the quantum state and its dynamics for a specific shot are conditioned on the history of measurement outcomes \({\{{b}_{i}^{(s)}(m)\}}_{m < n}\).

By repeating the above process for S shots, one obtains what is effectively a histogram of measurement outcomes at each time step n as represented in Fig. 1. The output features are taken as the frequency of occurrence of each measurement outcome, as in ref. 9: \({\bar{X}}_{j}(n)=\frac{1}{S}{\sum }_{s=1}^{S}{X}_{j}^{(s)}(n;{{{\bf{u}}}})\), where \({X}_{j}^{(s)}(n;{{{\bf{u}}}})=\delta ({{{{\bf{b}}}}}^{(s)}(n),{{{{\bf{b}}}}}_{j})\) counts the occurrence of outcome j at time step n. These features are stochastic unbiased estimators of the underlying quantum state probability amplitudes \({x}_{j}(n)={{\mathbb{E}}}_{{{{\mathcal{X}}}}}\,\,[{X}_{j}^{(s)}(n;{{{\bf{u}}}})]={\lim }_{S\to \infty }{\bar{X}}_{j}(n)\)9. As noted in the main text, the final NISQRC output is obtained by applying a set of time-independent linear weights to approximate the target functional \({\bar{y}}_{n}={{{\bf{w}}}}\cdot \bar{{{{\bf{X}}}}}(n)\). Importantly, during each shot s ∈ [S], we execute a circuit with depth N; the total processing time is therefore O(NS). If instead one re-encoded Nm previous inputs prior to each successive measurement the processing time is O(NmNS): Nm = O(N) if the entire past sequence is re-encoded as is conventionally done in QRC26,27,50.

The quantum Volterra theory and analysis of NISQRC

At any given time step n, the conditional dependence on previous measurement outcomes, presented in the “Methods” subsection “Generating features via conditional evolution and measurement”, is usually referred to as backaction. Defining \({\hat{\rho }}_{n}^{{\mathsf{MR}}}\) as the effective pre-measurement state of the quantum system at time step n of the NISQRC framework, quantum state evolution from time step n − 1 to n can be written via the maps:

which describes the reset of the post-measurement readout subsystem after time step n − 1, followed by input encoding via \({{{\mathcal{U}}}}({u}_{n})\) into the full quantum system state. With an eye towards the construction of an I/O map, it proves useful to introduce the expansion of the relevant single-step maps \({{{\mathcal{U}}}}(u)\) and \({{{\mathcal{C}}}}(u)\) in the basis of input monomials uk: \({{{\mathcal{U}}}}(u){\hat{\rho }}^{{\mathsf{MR}}}={\sum }_{k=0}^{\infty }{u}^{k}{{{{\mathcal{R}}}}}_{k}{\hat{\rho }}^{{\mathsf{MR}}}\) and \({{{\mathcal{C}}}}(u){\hat{\rho }}^{{\mathsf{M}}}=\mathop{\sum }_{k=0}^{\infty }{u}^{k}{{{{\mathcal{P}}}}}_{k}{\hat{\rho }}^{{\mathsf{M}}}\). Then, via iterative application of Eq. (9), \({\hat{\rho }}_{n}^{{\mathsf{MR}}}\) can be written as:

The measured features xj(n) can then be obtained via \({x}_{j}(n)={{{\rm{Tr}}}}({\hat{M}}_{j}{\hat{\rho }}_{n}^{{\mathsf{MR}}})\).

In Supplementary Note 3, we show that these xj(n) obtained using the NISQRC framework can indeed be expressed as a Volterra series

in the infinite-shot limit. The existence of this manifestly time-invariant form is only possible due to the existence of an information steady-state, guaranteed for a quantum mechanical system under measurement.

Due to fading memory, the Volterra kernel \({h}_{k}^{(j)}({n}_{1},\cdots \,,{n}_{k})\) characterizes the dependence of the systems’ output at time n on inputs at most nk steps in the past (recall n1≤ ⋯ ≤nk, see Eq. (11)). The evolution of \({\hat{\rho }}_{n}^{{\mathsf{MR}}}\) upto step n − nk, namely for all i < n − nk, is thus determined entirely by the null-input superoperator \({{{{\mathcal{P}}}}}_{0}\). Then the existence of a Volterra series simply requires the existence of an asymptotic steady state for the memory subsystem, \({\lim }_{n\to \infty }{{{{\mathcal{P}}}}}_{0}^{n}{\hat{\rho }}_{0}^{{\mathsf{M}}}={\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\). As shown in the Supplementary Note 3, such a fixed point is usually ensured by the map \({{{{\mathcal{P}}}}}_{0}{\hat{\rho }}^{{\mathsf{M}}}={{{\mathcal{C}}}}(0){\hat{\rho }}^{{\mathsf{M}}}={{{{\rm{Tr}}}}}_{{\mathsf{R}}}({{{\mathcal{U}}}}(0)({\hat{\rho }}^{{\mathsf{M}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}))\) being a CPTP map in generic quantum systems. This immediately indicates the fundamental importance of \({{{{\mathcal{P}}}}}_{0}\), the operator that corresponds to the single-step map of the memory subsystem under null input: it determines the ability of the NISQRC framework to evolve the quantum system to a unique statistical steady state, guaranteeing the asymptotic time-invariance property, and hence the existence of the Volterra series.

One byproduct of computing infinite-S features {xj(n)} is that it enables us to approximately simulate \(\{{\bar{X}}_{j}(n)\}\) in a very deep N-layer circuit for finite S, without sampling individual quantum trajectories under N repeated projective measurements described in “Methods” subsection “Generating features via conditional evolution and measurement”. In fact, given any n, once we evaluate a probability distribution {xj(n) ≥0} satisfying ∑jxj(n) = 1, we can i.i.d. sample under this distribution vector for S shots and construct the frequency \(\{{\tilde{X}}_{j}(n)\}\) as an approximation of \(\{{\bar{X}}_{j}(n)\}\). The validity of this approximation is ensured by the additive nature of loss functions in the time dimension. More specifically, given Q input sequences \({\{{{{{\bf{u}}}}}^{(q)}\in {[-1,1]}^{N}\}}_{q\in [Q]}\), a general form of loss function is \({{{\mathscr{L}}}}=\frac{1}{QN}{\sum }_{q}{\sum }_{n}{{{\mathcal{L}}}}(\bar{{{{\bf{X}}}}}(n;{{{{\bf{u}}}}}^{(q)}))\). As shown in Appendix C5 of ref. 9, \(\frac{1}{Q}{\sum }_{q}{{{\mathcal{L}}}}(\bar{{{{\bf{X}}}}}(n;{{{{\bf{u}}}}}^{(q)}))\approx \frac{1}{Q}{\sum }_{q}{{{\mathcal{L}}}}(\tilde{{{{\bf{X}}}}}(n;{{{{\bf{u}}}}}^{(q)}))\) in all orders of \(\frac{1}{S}\)-expansion for any n ∈ [N], as long as Q is large enough. This is because the probability distribution of \(\{{\tilde{X}}_{j}(n)\}\) is exactly the same as the distribution (marginal in time slice) of \(\{{\bar{X}}_{j}(n)\}\). Therefore, \(\frac{1}{QN}{\sum }_{q}{\sum }_{n}{{{\mathcal{L}}}}(\tilde{{{{\bf{X}}}}}(n;{{{{\bf{u}}}}}^{(q)}))\) is a good approximation of \({{{\mathscr{L}}}}\).

In Supplementary Note 2 and Supplementary Note 3, we show that without the reset operation, the fixed-point memory subsystem density matrix is the identity, \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{MR}}}={\hat{I}}^{\otimes L}/{2}^{L}\). While this steady state is independent of the initial state and therefore possesses a fading memory, it can be shown that the I/O map it enables is entirely independent of all past inputs as well, so that Volterra kernels \({h}_{k}^{(j)}=0\) for any k≤ 1. This yields a trivial reservoir, unable to provide any response to its inputs u. Such single-step maps \({{{\mathcal{C}}}}(u)\) are referred to as unital maps (maps that map identity to identity) and must be avoided for the NISQRC architecture to approximate any nontrivial functional. The inclusion of reset serves this purpose handily, although we have found certain improper encodings with reset to still result in unital maps \({{{\mathcal{C}}}}(u)\) (e.g., setting nT = 1 in the circuit ansatz depicted in Fig. 4(a)).

A more rigorous sufficient condition for obtaining a nontrivial functional map, referred to as a fixed-point non-preserving map in the main text, is that \({{{\mathcal{C}}}}(u)\) does not share the same fixed points for all u. It is equivalently \({{{{\mathcal{P}}}}}_{k}{\hat{\varrho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\, \ne \, 0\) for some k ≥ 1, due to the identity \({{{\mathcal{C}}}}(u){\hat{\varrho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}={\hat{\varrho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}+{\sum }_{k=1}^{\infty }{u}^{k}{{{{\mathcal{P}}}}}_{k}{\hat{\varrho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\). We will prove the importance of these criteria in Supplementary Note 3. The breaking of this criteria will lead to a memoryless reservoir for all earlier input steps: if \({{{{\mathcal{P}}}}}_{k}{\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}=0\) for all k≥1, then \({h}_{k}^{(j)}({n}_{1},{n}_{2},\cdots \,,{n}_{k})\, \ne \, 0\) only if n1 = n2 = ⋯ = nk = 0. A similar result for quantum reservoirs characterized by quantum channels can also be found from Theorem 2 in ref. 53.

Spectral theory of NISQRC: memory, measurement, and kernel structures

Recall that we can always define the spectral problem \({{{{\mathcal{P}}}}}_{0}{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}={\lambda }_{\alpha }{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\) where \({\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\) are eigenvectors that exist in the \({({2}^{M})}^{2}={4}^{M}\)-dimensional space of memory subsystem states and whose eigenvalues satisfy \(1={\lambda }_{1}\ge | {\lambda }_{2}| \ge \cdots \ge | {\lambda }_{{4}^{M}}| \ge 0\). The importance of the spectrum of \({{{{\mathcal{P}}}}}_{0}\) is obvious from the definition of \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\) already. As \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\) is the fixed point of the map defined by \({{{{\mathcal{P}}}}}_{0}\), it must equal the eigenvector \({\hat{\varrho }}_{1}^{{\mathsf{M}}}\) since λ1 = 1. Then writing the initial density matrix in terms of these eigenvectors, \({\hat{\rho }}_{0}^{{\mathsf{M}}}={\sum }_{\alpha }{d}_{0\alpha }{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\), the fixed point becomes \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}={\lim }_{n\to \infty }\left({\hat{\varrho }}_{1}^{{\mathsf{M}}}+{\sum }_{\alpha \ge 2}{d}_{\alpha }^{0}{\lambda }_{\alpha }^{n}{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\right)\). This not only reproduces the result \({\lim }_{n\to \infty }{{{{\mathcal{P}}}}}_{0}^{n}{\hat{\rho }}_{0}^{{\mathsf{M}}}={\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\) but also shows that the approach to the fixed point \({\hat{\rho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}={\hat{\varrho }}_{1}^{{\mathsf{M}}}\) must be determined by the magnitude of λ2; the smaller the magnitude, the faster terms for α ≥2 decay and hence, the shorter the memory time.

To see more directly how the spectrum of \({{{{\mathcal{P}}}}}_{0}\) influences the memory of inputs, it is sufficient to analyze the Volterra kernels in Eq. (1). Focusing on single-time contributions from un−p to xj(n) at all orders of nonlinearity (multi-time contributions are exponentially suppressed, see Supplementary Note 4), these may be expressed as

which can be viewed as a spectral representation of Volterra kernel contributions to the jth measured feature obtained via POVM \({\hat{M}}_{j}\). Here, \({F}_{\alpha }(u)=\mathop{\sum }_{k=1}^{\infty }{c}_{\alpha 1}^{(k)}{u}^{k}\) define 4M − 1 internal features, so-called as they depend only on input encoding operators via \({{{{\mathcal{P}}}}}_{k}{\hat{\varrho }}_{\alpha {\prime} }^{{\mathsf{M}}}=\mathop{\sum }_{\alpha=2}^{{4}^{M}}{c}_{\alpha \alpha {\prime} }^{(k)}{\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\), and are in particular independent of the measurement scheme. Nontrivial Fα(u) and \({c}_{\alpha 1}^{(k)}\) can be guaranteed if \({{{{\mathcal{P}}}}}_{k}{\hat{\varrho }}_{{{{\rm{FP}}}}}^{{\mathsf{M}}}\, \ne \, 0\) for some k ≥1. The dependence of observables on the measurement basis is via coefficients \({\nu }_{\alpha }^{(j)}={{{\rm{Tr}}}}({\hat{M}}_{j}{{{{\mathcal{R}}}}}_{0}({\hat{\varrho }}_{\alpha }^{{\mathsf{M}}}\otimes \left\vert 0\right\rangle \,{\left\langle 0\right\vert }^{\otimes R}))\). Crucially, the weighting of Fα(un−p) for p steps in the past is determined by eigenvalues \({\lambda }_{\alpha }^{p-1}\) of \({{{{\mathcal{P}}}}}_{0}\). For each α ≥2, it vanishes when we take a long time limit p → ∞. This property is usually referred to as fading memory. It also clearly defines a set of distinct but calculable, memory fading rates \({\{| {\lambda }_{\alpha }| \}}_{\alpha \ge 2}\).

Importantly, the ability to construct Volterra kernels and internal features enables us to approximately treat the infinite-dimensional function \({x}_{j}(n)={{{{\mathcal{F}}}}}_{j}({u}_{\le n})\) as a function with support only over a space with effective task dimension deff = O(nM), representing deff time steps in the past:

and we can interpret the fading memory function as a function: \(y(n)\approx {{{\mathcal{F}}}}({u}_{n-{d}_{{{{\rm{eff}}}}}},\cdots \,,{u}_{n-1},{u}_{n})\). In other words, at any given time NISQRC can approximate nonlinear functions that live in a domain of dimension deff.

IBMQ implementation

We recall that the encoding circuit \(\hat{U}({u}_{n})={\left({{{\mathcal{W}}}}(\; J){{{{\mathcal{R}}}}}_{z}({{{{\boldsymbol{\theta }}}}}^{z}+{{{{\boldsymbol{\theta }}}}}^{I}{u}_{n}){{{{\mathcal{R}}}}}_{x}({{{{\boldsymbol{\theta }}}}}^{x})\right)}^{{n}_{T}}\) for the experimental IBMQ implementation in the Results subsection “Experimental results on the quantum system” describes a composite set of single and two-qubit gates repeated nT times. Here \({{{{\mathcal{R}}}}}_{x,z}\) are composite Pauli-rotations applied qubit-wise, e.g., \({{{{\mathcal{R}}}}}_{z}{=\bigotimes }_{i}{\hat{R}}_{z}({\theta }_{i}^{z}+{\theta }_{i}^{I}u)\). \({{{\mathcal{W}}}}(J)\) defines composite two-qubit coupling gates, \({{{\mathcal{W}}}}(\; J)={\prod }_{\langle i,i^{\prime} \rangle }{{{{\mathcal{W}}}}}_{i,i^{\prime} }(\; J)={\prod }_{\langle i,i^{\prime} \rangle }\exp \{-i(J\tau /{n}_{T}){\hat{\sigma }}_{i}^{z}{\hat{\sigma }}_{i^{\prime} }^{z}\}\) for neighboring qubits i and \(i^{\prime}\) along a linear chain in the device and some fixed J. The rotation angles θx,z,I are randomly drawn from a positive uniform distribution with limits [a, a + δ], where \(a=\frac{\tau }{{n}_{T}}{\theta }_{\min }^{x,z,I}\) and \(\delta=\frac{\tau }{{n}_{T}}\Delta {\theta }^{x,z,I}\). We find that letting the number of Trotterization steps nT = 3 is sufficient to generate a well-behaved null-input CPTP map \({{{{\mathcal{P}}}}}_{0}\). Our hyperparameter choices are further tuned to ensure a memory time nM commensurate with the CE task dimension. The particular hyperparameter choices for the plot in Fig. 4 are \({\theta }_{\min }^{x,z,I}=\{1.0,0.5,0.1\}\), \(\Delta {\theta }^{x,z,I}={\theta }_{\min }^{x,z,I}\), J = 1, nT = 3, and τ = 1.

In the experiment, mid-circuit measurements and qubit resets are performed as separate operations, due to the differences in control flow paths between returning a result and the following qubit manipulation55. Related hardware complexities restrict us to a slightly shorter instance of the CE task than considered in Results’ subsection “Practical machine learning using temporal data”, with messages m(n) of length N = 20, submitted in batches of 200 jobs with 100 circuits each and 125 observations (shots) per circuit in order to prevent memory buffer overflows. Regardless, using cross-validation techniques, we ensure that our observed training and testing performance is not influenced by limitations of dataset size. We also forego the initial washout period needed to reach \({\rho }_{{{{\rm{FP}}}}}^{{\mathsf{MR}}}\) for similar reasons. Finally, the \({{{{\mathcal{W}}}}}_{i,i^{\prime} }(J)\) rotations in the two-qubit Hilbert space that implement \({{{\mathcal{W}}}}(J)\) are generated by the native echoed cross-resonance interaction of IBM backends65, which provides higher fidelity than a digital decomposition in terms of CNOTs for Trotterized circuits66.

Data availability

The data generated for numerical results in this study have been deposited in the GitHub repository under the accession link https://github.com/skhanCC/NISQRC-Codes67. The raw experimental data obtained from ibmq_algiers are not available in the GitHub repository due to its huge size, and its access can be be made available to interested parties upon request. The processed experimental data are available at the Github repository. The data of experimental parameters in this study are provided in the Supplementary Note 9. No external data was used in this study.

Code availability

The code used in this article is available in the GitHub repository https://github.com/skhanCC/NISQRC-Codes.

Change history

23 October 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41467-024-53536-3

References

Graves, A., Mohamed, A.-R. & Hinton, G. Speech recognition with deep recurrent neural networks. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, 6645–6649 (2013).

Vaswani, A. et al. Attention is all you need. Advances in Neural Information Processing Systems 30 (2017).

OpenAI. Gpt-4 technical report. Preprint at arXiv https://arxiv.org/abs/2303.08774 (2023).

Canaday, D., Pomerance, A. & Gauthier, D. J. Model-free control of dynamical systems with deep reservoir computing. J. Phys. Complex. 2, 035025 (2021).

Chattopadhyay, A., Hassanzadeh, P. & Subramanian, D. Data-driven predictions of a multiscale Lorenz 96 chaotic system using machine-learning methods: reservoir computing, artificial neural network, and long short-term memory network. Nonlinear Process. Geophys. 27, 373–389 (2020).

Wright, L. G. et al. Deep physical neural networks trained with backpropagation. Nature 601, 549–555 (2022).

Nakajima, M. et al. Physical deep learning with biologically inspired training method: gradient-free approach for physical hardware. Nat. Commun. 13, 7847 (2022).

Marković, D., Mizrahi, A., Querlioz, D. & Grollier, J. Physics for neuromorphic computing. Nat. Rev. Phys. 2, 499–510 (2020).

Hu, F. et al. Tackling sampling noise in physical systems for machine learning applications: Fundamental limits and eigentasks. Phys. Rev. X 13, 041020 (2023).

Tanaka, G. et al. Recent advances in physical reservoir computing: A review. Neural Netw. 115, 100–123 (2019).

Jaeger, H. & Haas, H. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science 304, 78–80 (2004).

Brunner, D., Soriano, M. C., Mirasso, C. R. & Fischer, I. Parallel photonic information processing at gigabyte per second data rates using transient states. Nat. Commun. 4, 1364 (2013).

Dong, J., Rafayelyan, M., Krzakala, F. & Gigan, S. Optical reservoir computing using multiple light scattering for chaotic systems prediction. IEEE J. Sel. Top. Quantum Electron. 26, 1–12 (2020).

Rowlands, G. E. et al. Reservoir computing with superconducting electronics. Preprint at arXiv http://arxiv.org/abs/2103.02522 (2021).

Angelatos, G., Khan, S. A. & Türeci, H. E. Reservoir computing approach to quantum state measurement. Phys. Rev. X 11, 041062 (2021).

McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. N. J. Phys. 18, 023023 (2016).

Havlíček, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567, 209–212 (2019).

Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15, 1273 (2019).

Schuld, M. & Petruccione, F. Machine learning with quantum computers. Quantum Science and Technology (Springer International Publishing, Cham, 2021).

Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625–644 (2021).

Huang, H.-Y. et al. Quantum advantage in learning from experiments. Science 376, 1182–1186 (2022).

Rudolph, M. S. et al. Generation of High-Resolution Handwritten Digits with an Ion-Trap Quantum Computer. Phys. Rev. X 12, 031010 (2022).

Wright, L. G. & McMahon, P. L. The capacity of quantum neural networks. Preprint at arXiv http://arxiv.org/abs/1908.01364 (2019).

Kalfus, W. D. et al. Hilbert space as a computational resource in reservoir computing. Phys. Rev. Res. 4, 033007 (2022).

Mujal, P. et al. Opportunities in quantum reservoir computing and extreme learning machines. Adv. Quantum Technol. 4, 2100027 (2021).

Fujii, K. & Nakajima, K. Harnessing disordered-ensemble quantum dynamics for machine learning. Phys. Rev. Appl. 8, 024030 (2017).

Chen, J., Nurdin, H. I. & Yamamoto, N. Temporal information processing on noisy quantum computers. Phys. Rev. Appl. 14, 024065 (2020).

Nokkala, J. et al. Gaussian states of continuous-variable quantum systems provide universal and versatile reservoir computing. Commun. Phys. 4, 53 (2021).

Pfeffer, P., Heyder, F. & Schumacher, J. Hybrid quantum-classical reservoir computing of thermal convection flow. Phys. Rev. Res. 4, 033176 (2022).

Yasuda, T. et al. Quantum reservoir computing with repeated measurements on superconducting devices. Preprint at arXiv https://arxiv.org/abs/2310.06706 (2023).

García-Beni, J., Giorgi, G. L., Soriano, M. C. & Zambrini, R. Scalable photonic platform for real-time quantum reservoir computing. Phys. Rev. Appl. 20, 014051 (2023).

Gonthier, J. F. et al. Measurements as a roadblock to near-term practical quantum advantage in chemistry: Resource analysis. Phys. Rev. Res. 4, 033154 (2022).

McClean, J. R., Boixo, S., Smelyanskiy, V. N., Babbush, R. & Neven, H. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 4812 (2018).

Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nat. Commun. 12, 6961 (2021).