Abstract

Artificial visual systems empowered by 2D materials-based hardware simulate the functionalities of the human visual system and lead the forefront of artificial intelligence vision. However, retina-mimicking hardware that has not yet fully emulated the neural circuits of the visual pathways is restricted from realizing more complex and specialized functions. In this work, we propose human visual pathway-replicated hardware that consists of crossbar arrays of split floating gate 2D tungsten diselenide (WSe2) unit devices, which simulate the retina and visual cortex, and related connective peripheral circuits, which replicate the connectomics between the retina and visual cortex. This hardware experimentally demonstrates the advanced functions of red–green color-blindness processing, low-power shape recognition, and self-driven motion tracking, promoting the development of machine vision, driverless technology, brain–computer interfaces, and intelligent robotics.

Introduction

An artificial visual system empowered by hardware aims to replicate the functionalities of the human visual system; its advanced performance in perceiving and processing external visual information is the cornerstone of various fields, such as driverless technology, brain–computer interfaces, and intelligent robots1,2,3,4,5,6,7,8,9,10. To achieve powerful human-like visual abilities, artificial visual hardware has developed rapidly on the basis of new optoelectronic materials11,12,13,14 and non-von Neumann architectures15,16,17,18. 2D materials are good candidates19,20 for fabricating such hardware owing to their inherently dangling-bond-free surfaces, atomically sharp interfaces, strong light–matter interaction, and electrically tunable photoresponse. For example, by introducing nonvolatile storage mechanisms, such as ferroelectrics21, floating gates22,23, and material defects24, 2D materials have been shown to exhibit reconfigurable optical responsivity that combines in situ sensing and preprocessing, edge computing, and signal coding in one device25, which serves as the cornerstone for mimicking the retina. This retina-mimicking design realizes the basic functions of human visual adaptation24,26, color perception27, feature extraction22,28,29,30, and motion sensing23,31,32,33. However, most hardware designs disregard the replication of visual pathways, which makes it challenging to combine all basic functionalities in one piece of hardware and enable more complex and efficient functions.

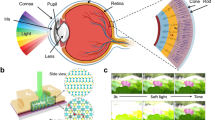

The human visual system is dominated by visual pathways, which include the retina, the visual cortex, and the connectomics between them (Fig. 1a)34,35,36. In the retina, photoreceptor (rod and cone), bipolar, and ganglion cells are connected successively, while horizontal and amacrine cells act on neighboring cells, constituting a center-surround receptive field (CSRF) with a potentiated center and a depressed surround. The lateral geniculate nucleus (LGN) in the thalamus is regarded as a connector between the retina and visual cortex: it receives the same CSRF information from the parasol and midget ganglion cells in the retina and then sends it to the visual cortex for distributed hierarchical processing. The visual cortex consists of the primary visual cortex (V1), secondary visual cortex (V2), area V4, and inferotemporal (IT) cortex in the ventral stream, and the middle temporal (MT) cortex and parietal cortex in the dorsal stream. Following the anatomical structure and connectivity of the three aforementioned organizational modules, the P and M pathways37,38 are constructed to process static [color39,40 and shape41,42] and dynamic [direction43,44 and motion45,46] information, respectively, beyond the ability of the retina.

a Human visual pathways, characteristics of the center-surround receptive field (CSRF), and functions of the human visual system. The schematic diagram is adapted with permission from refs. 35,36. SC superior colliculus, LGN lateral geniculate nucleus, V1 primary visual cortex, V2 secondary visual cortex, V4 extrastriate cortex, MT middle temporal cortex, IT inferotemporal cortex. b Microscope image of a 10 × 10 tungsten diselenide (WSe2) split floating gate (SFG) diode/transistor array mimicking the retina and visual cortex. Inset: Schematic of a unit device, exhibiting reconfigurable responsivity and conductivity under the photovoltaic diode and bipolar transistor modes. The array under the photovoltaic diode and bipolar transistor modes performs photoresponse convolution and matrix multiplication to mimic the retina and visual cortex, respectively. c Flowchart of the human visual pathway-replicated hardware that consists of the SFG array and connective peripheral circuits for color processing, shape recognition, and motion tracking. The shadings in the background represent the parts of the peripheral circuits. SO single color opponency receptive field, DO double color opponency receptive field. d Optical responsivity distribution of the 5 × 5 device array. e ISC mapping versus VG1 and VG2 under 455 nm illumination at 10 mW/cm2, showing reconfigurable photoresponse. f ISC–Pin curves under different VG1 (−VG2). g ID modulated by the number of voltage pulses, demonstrating synapse-like potentiation and depression behavior. The pulse is ±1 V/10 ms (VD-read = 0.1 V).

To develop an artificial visual system that supports multiple complex functions with lower power consumption, hardware that resembles the visual pathways of the human visual system is still being explored. In this work, we designed a general hardware architecture with a crossbar array and related connective peripheral circuits to replicate the neural circuits of the visual pathways. The basic device in the crossbar array is a tungsten diselenide (WSe2) split floating gate (SFG) device with reconfigurable positive/negative optical responsivity and conductivity, enabling the crossbar array to simulate the CSRF of the retina and the neural networks of the visual cortex in the human visual system. The connection between the retina and cortex is based on the related peripheral circuits. The SFG arrays are combined with specific peripheral circuits to construct visual pathway-replicated hardware that achieves color vision, shape vision, and dynamic vision. The retina-like operation of the array under photovoltaic diode mode is self-powered with nearly zero standby power consumption, and the cortex-like operation under bipolar transistor mode requires a programming energy for a floating gate of below 1 pJ/spike, promising ultralow power consumption. On the basis of the visual pathway-replicated design, the hardware experimentally performs color processing in accordance with the human visual system, which makes it capable of explaining the cause of red–green color blindness (Daltonism). The shape vision hardware is demonstrated through effective shape classification within a double-layer sparse neural network, showing neural circuit-compatible sparsity and a recognition rate of >95% in the experiment. The promise of low-power applications is confirmed by a 61.1% reduction in device usage and a programming energy of only 0.9 nJ per operation. The hardware also realizes the human dynamic vision function of tracking motion information in real time by processing the transmission time difference of synapses within the visual pathway. Remarkably, the diverse functions presented in the human visual pathway-replicated hardware prove that it is a powerful platform for artificial intelligence visual tasks.

Results

Working mechanisms of hardware

As the core building block, the 10 × 10 crossbar array is fabricated with unit SFG devices of Al2O3/Pt/Al2O3/WSe2, each of which functions as a photovoltaic diode or a bipolar transistor (Fig. 1b). Both working modes are required to build the visual pathway in one piece of hardware. In photovoltaic diode mode, with the two SFGs modulated oppositely, the nonvolatile reconfigurable n–p junction produces photovoltaic effect-based positive and negative photocurrents with adjustable optical responsivity, and the CSRF is constructed by convolving the input light with a responsivity kernel. In bipolar transistor mode, under one gate with fixed VG, the reconfigurable conductivity serves as the unit weight of the neural network that realizes the visual cortex, and matrix multiplication is computed through Ohm's and Kirchhoff's laws. To replicate the visual pathways of the human visual system in the hardware, WSe2 SFG arrays are successively integrated to mimic the retina or visual cortex by building related peripheral circuits for current-to-voltage conversion and for gate voltage programming and control (Fig. 1c). The functions of color processing, shape recognition, and motion tracking are then realized. In particular, the retina-related parts of the hardware, including color sensing, the orientation selector, and motion tracking, are built with arrays that work in photovoltaic diode mode. Meanwhile, the visual cortex-related parts, including the CSRF of single color opponency (SO) and double color opponency (DO), the neural network for color processing, and the double-layer sparse neural network, are built with arrays that work in bipolar transistor mode. These functions require a close fit to the visual pathways, which the designed hardware supports, as discussed in the following sections.
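The matrix multiplication performed by an array in bipolar transistor mode can be illustrated with a minimal sketch. It assumes an idealized crossbar in which each device is a pure conductance, input voltages are applied to rows, and currents are collected on columns; the function name `crossbar_mvm` and all numerical values are illustrative and not taken from the measurements.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized crossbar: I_j = sum_i G[i][j] * V[i].

    conductances: list of rows, each a list of device conductances in siemens.
    voltages: one input voltage per row, in volts.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per row is required")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the current through each device;
            # Kirchhoff's current law sums the currents on a shared column.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Illustrative values only (hypothetical, not measured data).
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]   # siemens
V = [0.1, 0.2]       # volts
I = crossbar_mvm(G, V)
print(I)
```

In this picture the stored conductances play the role of the weight matrix, so one read operation evaluates a full matrix-vector product in parallel.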

Under photovoltaic diode mode, the reconfigurable optical responsivity modulated by gates VG1 and VG2 is the basis for realizing the CSRF. When VG1 and VG2 have the same magnitude and opposite signs, the ID–VD curves (Fig. S4b) under red–green–blue illumination (637 nm/520 nm/455 nm, Pin = 10 mW/cm2) exhibit a typical p–n junction photovoltaic effect. The positive/negative optical responsivity can be adjusted quasi-linearly by the gate voltages (Fig. S4c), which makes the CSRF straightforward to reconstruct. Here, the impact of the non-linear dependency on subsequent image processing is suppressed by fixing the weights to several discrete values, as also demonstrated in recent work25. The near-Gaussian distributed optical responsivity matrix of a 5 × 5 array is constructed under 455 nm and 10 mW/cm2 illumination, where each pixel is independently modulated by its gates (Fig. 1d). The SFG voltages jointly modulate the unit device (Fig. 1e, S5c, S5d) to construct different junction states (Fig. S3b–S3d). The short-circuit current (ISC) of the SFG device is linearly correlated with light intensity (Pin) (Fig. 1f, S5a, S5b), contributing to the stable coding of incident light information. Furthermore, we test the electronic and optoelectronic performance of each unit in the 10 × 10 array and extract performance features (Supplementary Note 1, Figs. S6–S11), which show considerable uniformity, remarkable storage capacity, and excellent gate control across all units. Under bipolar transistor mode, gate pulse modulated output updates (Fig. 1g and S8) are measured by applying ±1 V/10 ms voltage pulses to one floating gate, resulting in nonvolatile modulation with a programming energy below 1 pJ/spike (Supplementary Note 1). The adjustable conductance acts as the unit weight to mimic visual cortex computations in the crossbar array. Thus, each unit of the hardware works properly to meet the functional requirements of the current application.
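The two ideas in this paragraph, fixing the responsivity weights to a few discrete values and relying on the linear ISC–Pin relation, can be sketched as follows. This is an illustration under stated assumptions: σ is expressed in pixel units, the discrete levels are relative (dimensionless) responsivities chosen for the example, and the helper names are hypothetical.

```python
import math


def gaussian_kernel(size, sigma):
    """Unnormalized 2D Gaussian sampled on a size x size grid centered at 0."""
    half = size // 2
    return [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
             for x in range(-half, half + 1)]
            for y in range(-half, half + 1)]


def snap_to_levels(kernel, levels):
    """Replace each kernel entry by the nearest allowed discrete level."""
    return [[min(levels, key=lambda lv: abs(lv - v)) for v in row]
            for row in kernel]


def photocurrent(responsivity, power):
    """I_SC = sum_ij R_ij * P_ij, valid while I_SC is linear in P_in."""
    return sum(r * p
               for r_row, p_row in zip(responsivity, power)
               for r, p in zip(r_row, p_row))


# Assumed example settings: sigma in pixels, relative responsivity levels.
LEVELS = [0.0, 0.25, 0.5, 1.0]
kernel = snap_to_levels(gaussian_kernel(5, 1.0), LEVELS)

uniform = [[1.0] * 5 for _ in range(5)]    # arbitrary power units per pixel
doubled = [[2.0] * 5 for _ in range(5)]
i_one = photocurrent(kernel, uniform)
i_two = photocurrent(kernel, doubled)      # linearity: twice the light, twice the current
print(kernel[2], i_one, i_two)
```

Because every pixel current scales linearly with the incident power, the summed current is a dot product between the light pattern and the stored responsivity kernel, which is what the convolution step relies on.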

Color processing

The biomechanism of color processing in the P pathway is shown in Fig. 2a. Three types of cones are individually sensitive to red/green/blue light, sequentially connected to ON/OFF bipolar cells and ON/OFF ganglion cells. Accepting center-potentiated information from one color cone and surround-depressed information from another one, red–green (R–G/G–R), yellow–blue [(G + R)–B/B–(G + R)], and black–white [(B + G + R)/–(B + G + R)] SO CSRFs in LGN are constructed. In V1, red–green (R&G), blue–yellow (B&Y), and black–white (gray) DO CSRFs are formed by integrating small and large SO CSRF information from LGN. Finally, color information is obtained in V4/IT through neural connection analysis from DO CSRF data (details in Supplementary Note 2).

a Light input of a 34 × 31-pixel trichromatic circular image with RGB components onto the tungsten diselenide (WSe2) split floating gate (SFG) array. Color vision in the human visual system. The single color opponency (SO) receptive field in the lateral geniculate nucleus (LGN) receives center-potentiated and surround-depressed signals of different colors from the retinal ganglion cells (RGC) in the retina. The double color opponency (DO) receptive field in the primary visual cortex (V1) integrates large and small SO signals in the same color type. They are both center-surround receptive fields (CSRFs). In area V4 and inferotemporal (IT) cortex, color information is analyzed by the neural network. b Hardware photoresponsivity distributions that correspond to red–green SO CSRF (small CSRF & large CSRF). Simulation (2D gray image) and experiment (3D voltage mapping) results of SO CSRF (c), DO CSRF (d), processed color information (e). Color bar: pixel intensity by simulation, voltage amplitude by experimental test with different individual color bars in each result. The gray shadings mark the results and color bar in simulation. f Test result of red–green color blindness (Daltonism) with 10% R&G pathway contribution (the weight coefficient of R&G integration from c–d is 0.1).

The color processing hardware (Fig. S12) is constructed by SFG arrays and replicates the neural circuit as mentioned above. Mimicking the retina, one SFG array is reused 12 times in the front working under photovoltaic diode mode. The four other arrays that work in bipolar transistor mode replicate the visual cortex LGN–V1–V4–IT successively to obtain voltage signals that represent SO/DO CSRF and color information sequentially through matrix multiplication (Supplementary Note 2).

To demonstrate the working process of the hardware, a 34 × 31-pixel trichromatic circular image is used as the light input, decomposed into RGB components, and mapped to light intensity (Pin) at wavelengths of 637/520/455 nm (Fig. 2a). The light input illuminates the SFG array pixel by pixel (Fig. S1) to perform convolution with color-related kernels. The optical responsivity distribution of the color-related kernels for the SO CSRF is set strictly in accordance with the Gaussian function, similar to the biological mechanisms of the retina–LGN. The Gaussian standard deviation σ determines the degree of blur in image processing: a larger σ gives a fuzzier kernel that extracts global information, and a smaller σ gives a more concentrated kernel that captures local information. Here, σ = 0.08 and σ = 0.12 set the responsivity distributions of the small (3 × 3) and large (5 × 5) CSRFs, with two values (0.04 and −0.008 A/W) and three values (0.007, 0.003, and −0.0001 A/W), respectively (Fig. 2b; other cases in Fig. S13). The photocurrents of the SFG array are summed and converted into photovoltages by a transimpedance amplifier (TIA) in the peripheral circuit. The photovoltage signals of the large and small CSRFs are fed into another SFG array to obtain the R+G−/brightness/Y+B− and G+R−/darkness/B+Y− SO CSRF signals through matrix multiplication (Fig. 2c). Here, “R/G/B/Y” denotes the color information of red, green, blue, and yellow. The first position, second position, “+”, “−”, “brightness”, and “darkness” represent center, surround, potentiation, depression, “(B + G + R)”, and “−(B + G + R)”, respectively. The R&G/gray/B&Y DO CSRF signals and the processed RGB components are subsequently obtained through the same process of matrix multiplication. The simulation and experiment results of the DO CSRF and processed RGB components are presented in Fig. 2d, e, respectively. The processed RGB components share the characteristics of the color information processing pathway of the human visual system, which is hardly realized in traditional color cameras that merely filter RGB components. Moreover, this hardware can demonstrate the otherwise unexplained causes of red–green color blindness. Daltonism originates from the failure of the R–G SO–DO pathway39,40, and thus only a 10% weight ratio is applied to this pathway in the experimental setup (×0.1, in the upper plane of Fig. 2c, d). Specifically, the conductance of the WSe2 transistor connecting the R–G SO and DO is set to 100 nS, while the other channels are set to 1 μS. After the above perception and post-processing procedure, the output color information mimics the perception of color images by Daltonism patients (Fig. 2f), which can only be elucidated by considering the visual pathway.
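The single-opponency response and the weakened R–G pathway can be sketched in a few lines. The sketch assumes that, in the small 3 × 3 CSRF, the center pixel carries the 0.04 A/W responsivity and each of the eight surround pixels carries −0.008 A/W; the text quotes only the two values, so this layout is an assumption, as are the function name `center_surround` and the test patches.

```python
def center_surround(center_img, surround_img, r, c, w_center, w_surround):
    """3x3 opponent response at pixel (r, c).

    The center term is taken from one color channel and the eight
    neighbors from the other channel (e.g. R center, G surround).
    """
    total = w_center * center_img[r][c]
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr or dc:
                total += w_surround * surround_img[r + dr][c + dc]
    return total


# Responsivity values quoted for the small CSRF (layout is assumed).
W_CENTER, W_SURROUND = 0.04, -0.008          # A/W
# Conductances quoted for the SO-to-DO connections.
G_NORMAL, G_DALTONISM = 1e-6, 100e-9         # siemens

ones = [[1.0] * 3 for _ in range(3)]
zeros = [[0.0] * 3 for _ in range(3)]

# R+G- response to a pure red patch (red everywhere, no green).
so_red = center_surround(ones, zeros, 1, 1, W_CENTER, W_SURROUND)
# R+G- response to a yellow patch (red and green both present).
so_yellow = center_surround(ones, ones, 1, 1, W_CENTER, W_SURROUND)

# Relative contribution of the R-G pathway in the Daltonism configuration.
pathway_gain = G_DALTONISM / G_NORMAL
print(so_red, so_yellow, pathway_gain)
```

With these numbers the conductance ratio reproduces the ×0.1 weight applied to the R–G pathway, while the other pathways keep unit relative weight.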

Shape recognition

The function of shape recognition is also executed in the P pathway (Fig. 3a). CSRFs in the retina–LGN are integrated into the orientation-selective CSRF in V1 in accordance with different spatial distributions. The contour information of each point is summarized through V2/V4 with a sparse connection, realizing shape classification in IT47,48.

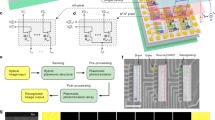

a Workflow of shape recognition in the human visual system. The schematic diagram is adapted with permission from refs. 47,48. LGN lateral geniculate nucleus, V1 primary visual cortex, V2 secondary visual cortex, V4 extrastriate cortex, IT inferotemporal cortex, NN neural network. The hardware structure of sparse neural networks with an orientation selector. b Test photocurrent results (marked with the gray shading in the background) for the convolution of the 50 × 50 pixel regular hexadecagon light input with eight 5 × 5 orientation convolution kernels (OCKs). c Schematic of the hardware principle for shape recognition. The light-mask input is generated by illuminating the light mask consisting of a right-angled triangle and the frosted glass. The right-angled triangle input is divided into five regions for OCK convolution, which are processed through the 5 × 8 × 4 sparse neural network. Simulation (d) and experimental conductivity (e) weights of the double-layer sparse neural network with 30 epochs. f Simulation (red) and experiment (blue) results of the recognition rate with 30 epochs. g Comparison of this sparse neural network and the fully connected neural network in the recognition rate, loss, device usage, and programming energy for one operation. The error bars represent the standard deviation.

By replicating the above neural circuit, the shape recognition hardware (Fig. S14) is also constructed by the SFG arrays. One array working in photovoltaic diode mode adopts the photoresponsivity configuration of the orientation convolution kernel (OCK) and is reused to collect the photovoltage of five points, mimicking the retina–LGN. Similar to the visual cortex V1–V2–V4–IT, three other arrays that are working in bipolar transistor mode build a double-layer sparse neural network for shape recognition. This neural network performs matrix multiplication of the 1st layer, activation, and 2nd layer successively (Supplementary Note 3).
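The behavior of an orientation convolution kernel can be illustrated with a small sketch: a kernel that is positive along one line through its center and negative elsewhere responds most strongly to a bar of matching orientation. The sketch uses four orientations and illustrative weights (`pos`, `neg`); the hardware uses eight 5 × 5 OCKs whose exact responsivity values are not reproduced here, and the names `make_ock` and `response` are hypothetical.

```python
def make_ock(size, orientation, pos=1.0, neg=-0.25):
    """size x size kernel: `pos` on the selected line, `neg` elsewhere."""
    half = size // 2
    on_line = {
        "horizontal": lambda r, c: r == half,
        "vertical": lambda r, c: c == half,
        "diag": lambda r, c: r == c,
        "antidiag": lambda r, c: r + c == size - 1,
    }[orientation]
    return [[pos if on_line(r, c) else neg for c in range(size)]
            for r in range(size)]


def response(kernel, patch):
    """Dot product of a kernel with an equally sized light patch."""
    return sum(k * p
               for k_row, p_row in zip(kernel, patch)
               for k, p in zip(k_row, p_row))


# A vertical bar of light on a dark 5 x 5 patch.
bar = [[1.0 if c == 2 else 0.0 for c in range(5)] for _ in range(5)]

responses = {name: response(make_ock(5, name), bar)
             for name in ("horizontal", "vertical", "diag", "antidiag")}
best = max(responses, key=responses.get)
print(responses, best)
```

Only the kernel aligned with the bar accumulates positive contributions along its whole length; the misaligned kernels overlap the bar at one positive pixel and several negative ones, so their responses cancel.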

The CSRF OCK of the orientation selector is configured by the photoresponsivity distribution of the SFG array, which exhibits positive photoresponsivity along the selected orientation and negative photoresponsivity at the other points (Fig. 3b). To test the orientation selection effect of the OCK, a regular hexadecagon of 50 × 50 pixels is used as the incident light pattern and convolved with different OCKs pixel by pixel to obtain the short-circuit photocurrent distributions. As verification, the peak value appears in the direction encoded by the corresponding OCK. In accordance with the abstract processing flow of the visual pathway, shape recognition is verified in simulation and experiment (Fig. 3c). The light illuminates the light mask of a right-angled triangle (Fig. S1), and the transmitted light pattern is quantized to a size of 15 × 15 pixels as the light-mask input. Using 1600 samples augmented by adding Gaussian noise (with a standard deviation of 0.8) as the database (Fig. S15a), each sample is divided into five regions (5 × 5 pixels each) for convolution with the OCKs. In the experiment, the light input state of these five regions is adjusted by moving the position of the mask (Fig. S1). The results undergo feedforward calculation, activation (Fig. S15f), and backpropagation to update the weights of a 5 × 8 × 4 double-layer sparse neural network. Simulation (Fig. 3d) and experiment (Fig. 3e) weights are recorded for 30 epochs. The hardware conductance weights are quantized into 64 levels (Fig. S15b). After training, the recognition rates of the triangles obtained by simulation and experiment are higher than 95% (Fig. 3f). Although the sparse neural network inspired by the sparsity of neural connections exhibits a slight decay in recognition accuracy compared with the fully connected network, it reduces device usage by approximately 61.1% and correspondingly lowers the programming energy from about 2.3 nJ to 0.9 nJ per operation (Fig. 3g, Supplementary Note 1).
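The device-usage figure can be checked with a short sketch of a masked 5 × 8 × 4 network. A fully connected network of this size needs 5 × 8 + 8 × 4 = 72 weights, and a mask that keeps 28 of them gives a reduction of 1 − 28/72 ≈ 61.1%. The particular connection pattern below is hypothetical, chosen only to reach 28 connections; the actual sparse connectivity of the hardware is not reproduced here, and the ReLU activation is likewise an assumption.

```python
N_IN, N_HID, N_OUT = 5, 8, 4


def build_masks():
    """Hypothetical sparse pattern: 2 inputs per hidden unit, 3 hidden per output."""
    mask1 = [[0] * N_HID for _ in range(N_IN)]
    for j in range(N_HID):
        mask1[j % N_IN][j] = 1
        mask1[(j + 1) % N_IN][j] = 1
    mask2 = [[0] * N_OUT for _ in range(N_HID)]
    for k in range(N_OUT):
        for h in (2 * k, 2 * k + 1, (2 * k + 2) % N_HID):
            mask2[h][k] = 1
    return mask1, mask2


def masked_matvec(weights, mask, x):
    """y_j = sum_i mask[i][j] * weights[i][j] * x[i]; masked devices are unused."""
    n_out = len(weights[0])
    return [sum(weights[i][j] * mask[i][j] * x[i] for i in range(len(x)))
            for j in range(n_out)]


def relu(v):
    return [max(0.0, a) for a in v]


def forward(x, w1, w2, mask1, mask2):
    hidden = relu(masked_matvec(w1, mask1, x))   # 1st layer + activation
    return masked_matvec(w2, mask2, hidden)      # 2nd layer


mask1, mask2 = build_masks()
used = sum(map(sum, mask1)) + sum(map(sum, mask2))
full = N_IN * N_HID + N_HID * N_OUT
device_reduction = 1.0 - used / full
energy_reduction = 1.0 - 0.9 / 2.3               # nJ values quoted in the text

w1 = [[0.5] * N_HID for _ in range(N_IN)]        # illustrative weights
w2 = [[0.5] * N_OUT for _ in range(N_HID)]
out = forward([1.0] * N_IN, w1, w2, mask1, mask2)
print(used, full, round(device_reduction, 3), round(energy_reduction, 3), out)
```

The energy ratio computed from the quoted 2.3 nJ and 0.9 nJ values comes out close to the device reduction, which is consistent with programming energy scaling with the number of devices in use.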

Motion tracking

The M pathway of the human visual system implements motion tracking based on the Barlow–Levick model46, as illustrated in Fig. 4a. Light successively stimulates photoreceptors at different sites and transmits signals to the back end of axons at different time scales following the length-dependent signal transmission delay of axons. The CSRF-based direction selector in the retina and visual cortex only produces signal superposition and activation to the motion stimulus that is parallel to the given movement direction. The higher visual cortex receives the pre-level information and controls the eye movement to track moving objects.

a Principle diagram of the direction selector and motion tracking in the human visual system: center-surround receptive field (CSRF)-based direction selector with axonal connections of different lengths, and eye movement control by the cortex that processes previous visual information. The schematic diagram is adapted with permission from refs. 44,46. Δt: transmission delay of axons; BC bipolar cells, RGC retinal ganglion cells, S/C surround/center, DS direction selector. The gray shading on the left demonstrates the principle of center-surround (marked with the brown shading) direction selector based on the delay mechanism. The gray shadings on the right highlight DS in the retina, thalamus and cortex. b Workflow diagram of direction selector: photovoltaic voltage Vph of the front-end device array as input, converted into Vdl by delay modules, and output voltage Vout is summarized by a multiple-input AND gate. c Test results of Vph, Vdl, and Vout with light stimulus moving down and up at a speed of 20 pixels/s. d Workflow diagram of 1D bidirectional motion tracking: Vph as input, converted into up and down movement signals Vsf through delay modules and AND gates, the Vrg of the shift register is adjusted to control the switch transistors moving along the illumination site. e Test results of Vph, Vsf, and Vrg during the upward and downward movements of the light stimulus. f Schematic of motion tracking in a 2D plane with two 1D bidirectional motion tracker orthogonally arranged. The “HUST” mask moves clockwise along the “I”- and “C”-shaped light trajectory. Test results of Vsf (g), Vrg (h), and diagram of motion trajectory (i). Color bar, time for movement.

The visual pathway-replicated hardware for motion tracking (Fig. S16) is constructed using one WSe2 SFG array and related peripheral circuits for direction selection and controlling eye position in accordance with the above neural circuit. The SFG array mimics the retina and works in photovoltaic diode mode. Meanwhile, the delay module, bidirectional shift register, and switch transistor replicate the mechanisms of the axon’s delay, cortex’s control, and eye movement, respectively (Supplementary Note 4).

First, the direction selector is constructed from the SFG array, delay modules, and an AND gate. To simulate the biological delay mechanism (Fig. 4b), four adjacent rows (marked 1–4) of the SFG array are cascaded to the related delay modules Δt1–Δt4 (Δt1 > Δt2 > Δt3 > Δt4, for a given direction “up”), which mimic axons of different lengths. When light stimulation is present on a row of the array, a photocurrent pulse is generated and converted into a photovoltage by the TIA. The temporal photovoltages (Vph) are delayed by the respective delay modules to obtain the corresponding temporal voltage signals (Vdl). They are then fed into the four-input AND gate to obtain an output voltage (Vout), which determines the direction. Light stimulation moving in the direction “up” generates a 40 ms Vph pulse in each channel in turn from 1 to 4. After the delay process, movement in the given direction “up” generates high-level Vdl signals in all four channels simultaneously, producing a high-level Vout that represents the right direction (Fig. 4c). In contrast, movement in the direction “down” cannot generate four high-level Vdl signals at the same time; thus, the output of the AND gate is always low, which is judged to be the wrong direction. Second, 1D bidirectional motion tracking is performed by adding a switch transistor array and a bidirectional shift register as the direction controller (Fig. 4d). The direction selector here is a simplified bidirectional selector, which receives Vph of the array in Channels 1–3 and outputs the direction-selective signal Vsf from two dual-input AND gates to determine the movement direction “up” or “down” for the shift register. The output signal Vrg of the shift register then automatically controls the gating of the switch transistors and accepts the Vph of the corresponding array channel according to the direction of the light stimulus. When the light stimulus Vph shifts from Channel 2 to Channel 3 (Channel 1), the high-level signal Vsf of the bidirectional selector is generated to determine the direction “up” (“down”) and serves as the shift signal of the bidirectional shift register. The output of the shift register (Vrg) is controlled by Vsf, and the related switch transistor is selected along the light stimulation (Fig. 4e). Therefore, the function of motion tracking in the M pathway is replicated in the hardware. Finally, by arranging two 1D bidirectional motion trackers in orthogonal directions, motion tracking can be performed on a 2D plane (Fig. 4f). One tracker is placed horizontally to track “left” and “right” movement, and the other vertically to track “up” and “down” movement. The mask with the pattern of “HUST” is placed between the light source and the array sample (Fig. S1). When the mask moves clockwise along the trajectory of “I” and “C” at a speed of 20 pixels/s, the light stimulus induces the direction selectors of “down,” “left,” “up,” and “right” to produce the output Vsf sequentially (Fig. 4g). The shift register output sites (Fig. 4h) of these two motion trackers represent the position of the light stimulus, which coincides with the moving trajectory of the mask (Fig. 4i). Therefore, the experimental setup demonstrates a motion tracking function similar to that of the human visual pathway. As a prototype, the hardware can also support high-speed tracking tasks by precisely programming the delay time.
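The delay-and-AND principle of the direction selector can be checked with a minimal discrete-time sketch. It assumes a 10 ms simulation step, a stimulus speed of 20 pixels/s (one row every 50 ms), the 40 ms pulse width mentioned above, and delays of 150, 100, 50, and 0 ms for rows 1–4; the specific delay values and all function names are assumptions chosen so that Δt1 > Δt2 > Δt3 > Δt4.

```python
STEP_MS = 10  # simulation time step (assumed)


def pulse_train(onsets_ms, width_ms, total_ms):
    """One boolean waveform per channel, high for width_ms after its onset."""
    n = total_ms // STEP_MS
    return [[onset <= t * STEP_MS < onset + width_ms for t in range(n)]
            for onset in onsets_ms]


def delay(wave, delay_ms):
    """Shift a waveform later in time by delay_ms, padding with low level."""
    shift = delay_ms // STEP_MS
    return [False] * shift + wave[:len(wave) - shift]


def direction_selected(v_ph, delays_ms):
    """True if the four-input AND of the delayed channels ever goes high."""
    v_dl = [delay(w, d) for w, d in zip(v_ph, delays_ms)]
    v_out = [all(samples) for samples in zip(*v_dl)]
    return any(v_out)


DELAYS_UP = [150, 100, 50, 0]   # ms for rows 1..4 (assumed values)
ROW_PERIOD = 50                 # ms per row at 20 pixels/s
PULSE = 40                      # ms, pulse width quoted in the text

# Stimulus moving "up": rows 1, 2, 3, 4 are lit in turn.
up = pulse_train([0, ROW_PERIOD, 2 * ROW_PERIOD, 3 * ROW_PERIOD], PULSE, 400)
# Stimulus moving "down": rows 4, 3, 2, 1 are lit in turn.
down = pulse_train([3 * ROW_PERIOD, 2 * ROW_PERIOD, ROW_PERIOD, 0], PULSE, 400)

up_detected = direction_selected(up, DELAYS_UP)
down_detected = direction_selected(down, DELAYS_UP)
print(up_detected, down_detected)
```

For motion in the preferred direction the delays cancel the arrival-time differences, so all four delayed pulses overlap and the AND output goes high; for the opposite direction the delays spread the pulses further apart and no overlap occurs.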

Discussion

In summary, we propose visual pathway-replicated hardware that experimentally realizes red–green color-blindness processing, low-power shape recognition, and self-driven motion tracking, which are hardly achieved by hardware that neglects the neural circuits of the visual pathways. The unique WSe2 SFG device in the crossbar array can function in photovoltaic diode and bipolar transistor modes with nonvolatile reconfigurable positive/negative optical responsivity and conductivity, making the array the core building block for the retinal CSRF and the neural networks of the visual cortex. This dual-mode functional device enables the seamless integration of intricate human vision capabilities into a single chip. With the feasibility of the heterogeneous integration of 2D materials49,50, this WSe2 SFG array can be made compatible with complementary metal oxide semiconductor-based peripheral circuits to build human visual pathway-replicated chips. In turn, it can inspire neuroscience and promote progress in the fields of driverless technology, brain–computer interfaces, and intelligent robots51,52. For example, the replication of the color visual pathway of human eyes can inherit color constancy53, which has the potential to reduce the complexity of white balance in subsequent processing circuits and algorithms54. Furthermore, the complete reproduction of visual pathways will help develop brain–computer interface devices that are better adapted to neural structures and help blind or color-blind patients regain normal vision55,56,57.

Methods

Material preparation

A ~100 × 100 μm2 WSe2 flake was mechanically exfoliated from a bulk crystal (from HQ Graphene). The WSe2 region in the 10 × 10 array was patterned by electron-beam lithography (EBL) and reactive ion etching (RIE) with Ar/SF6 plasma.

Device fabrication

First, we prepared a bottom gate (with an 800 nm gap) array on a silicon wafer (coated with 300-nm-thick SiO2) substrate by EBL and electron-beam evaporation (EBE) with Cr/Au (5 nm/25 nm). Secondly, the blocking layer of a 30-nm-thick Al2O3 gate oxide was deposited by atomic layer deposition (ALD), followed by EBE of 5 nm Pt as the floating layer. Thirdly, the floating gate region was defined by EBL, and the Pt layer was etched using RIE with Ar. Next, the tunneling layer of an 8-nm-thick Al2O3 was deposited by ALD. Then, the WSe2 array was transferred to the desired site of the substrate using a PDMS (polydimethylsiloxane)/PVA (Polyvinyl Alcohol) assisted fixed-point transfer method. Part of the source-drain Cr/Au (5 nm/70 nm) electrodes was prepared by EBL and EBE. A 50-nm-thick Al2O3 isolation layer was deposited by ALD, and via holes through the Al2O3 isolator and gate pads were defined by EBL and etched by inductively coupled plasma RIE (ICP-RIE) with BCl3/Ar plasma. The other part of the second layer of the source-drain Cr/Au (5 nm/70 nm) electrodes was prepared by EBL and EBE. Finally, the sample was placed on a 40-pin chip holder and wire-bonded.

Experimental setup

The schematic diagram of the experimental setup is shown in Fig. S1. A continuous wavelength (CW) laser (Yangtze Soton Laser, SC–PRO) was modulated to a specific wavelength and intensity through a monochromator and an attenuator driven by a controller. The laser then irradiated the device array through a 10× microscope objective and a mask controlled by a stepper motor to construct dynamic light information of different colors, intensities, and shapes. For shape recognition, the mask assembly consists of a patterned mask and frosted glass to generate disordered right-angled triangle light input. The gate voltage pulses were supplied row by row, switched by a column ground line, using waveform generators (National Instruments, PXI–5404). The output current signals were measured by source meters (National Instruments, PXIe–4141), or amplified and converted to voltage signals by transimpedance amplifiers (TIAs) on the printed circuit board assembly (PCBA). The output voltage signals were recorded with source meters (National Instruments, PXIe–4142) or oscilloscopes (Tektronix, TDS2024). The basic optoelectronic measurements of unit devices were conducted in a Lakeshore probe station using a semiconductor analyzer (Keysight B1500A), 455/520/637 nm laser diodes, and a temperature controller (Thorlabs, ITC4020). All tests were carried out in an atmospheric environment.

Data availability

All data needed to evaluate the findings of this study are available within the Article. The Source Data file has been deposited in Figshare under accession code https://doi.org/10.6084/m9.figshare.2623456158.

Code availability

All code used in this study is available from the corresponding author (L. Y.) upon request.

References

Luo, L. Architectures of neuronal circuits. Science 373, eabg7285 (2021).

Schuman, C. D. et al. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2, 10–19 (2022).

Lai, C., Tanaka, S., Harris, T. D. & Lee, A. K. Volitional activation of remote place representations with a hippocampal brain–machine interface. Science 382, 566–573 (2023).

Zhou, F. & Chai, Y. Near-sensor and in-sensor computing. Nat. Electron. 3, 664–671 (2020).

Suárez, L. E., Richards, B. A., Lajoie, G. & Misic, B. Learning function from structure in neuromorphic networks. Nat. Mach. Intell. 3, 771–786 (2021).

Wang, T. et al. Image sensing with multilayer nonlinear optical neural networks. Nat. Photonics 17, 408–415 (2023).

Langenegger, J. et al. In-memory factorization of holographic perceptual representations. Nat. Nanotechnol. 18, 479–485 (2023).

Leiserson, C. E. et al. There’s plenty of room at the top: What will drive computer performance after Moore’s law? Science 368, aam9744 (2020).

Indiveri, G. et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 9202 (2011).

Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: A quest to mimic the brain. Front. Neurosci. 12, 353526 (2018).

Zhou, F. et al. Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat. Nanotechnol. 14, 776–782 (2019).

Choi, C. et al. Curved neuromorphic image sensor array using a MoS2-organic heterostructure inspired by the human visual recognition system. Nat. Commun. 11, 1–9 (2020).

Zhang, H. T. et al. Reconfigurable perovskite nickelate electronics for artificial intelligence. Science 375, 533–539 (2022).

Lanza, M. et al. Memristive technologies for data storage, computation, encryption, and radio-frequency communication. Science 376, abj9979 (2022).

Li, X. et al. Power-efficient neural network with artificial dendrites. Nat. Nanotechnol. 15, 776–782 (2020).

Migliato Marega, G. et al. Logic-in-memory based on an atomically thin semiconductor. Nature 587, 72–77 (2020).

Xu, Z. et al. Large-scale photonic chiplet Taichi empowers 160-TOPS/W artificial general intelligence. Science 384, 202–209 (2024).

Zhang, W. et al. Edge learning using a fully integrated neuro-inspired memristor chip. Science 381, 1205–1211 (2023).

Novoselov, K. S., Mishchenko, A., Carvalho, A. & Castro Neto, A. H. 2D materials and van der Waals heterostructures. Science 353, aac9439 (2016).

Luo, P. et al. Molybdenum disulfide transistors with enlarged van der Waals gaps at their dielectric interface via oxygen accumulation. Nat. Electron. 5, 849–858 (2022).

Tong, L. et al. 2D materials-based homogeneous transistor-memory architecture for neuromorphic hardware. Science 373, 1353–1358 (2021).

Lee, S., Peng, R., Wu, C. & Li, M. Programmable black phosphorus image sensor for broadband optoelectronic edge computing. Nat. Commun. 13, 1–8 (2022).

Zhou, Y. et al. Computational event-driven vision sensors for in-sensor spiking neural networks. Nat. Electron. 6, 870–878 (2023).

Liao, F. et al. Bioinspired in-sensor visual adaptation for accurate perception. Nat. Electron. 5, 84–91 (2022).

Mennel, L. et al. Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020).

Pan, X. et al. Parallel perception of visual motion using light-tunable memory matrix. Sci. Adv. 9, adi4083 (2023).

Seo, S. et al. Artificial optic-neural synapse for colored and color-mixed pattern recognition. Nat. Commun. 9, 1–8 (2018).

Zhang, G. X. et al. Broadband sensory networks with locally stored responsivities for neuromorphic machine vision. Sci. Adv. 9, adi5104 (2023).

Pi, L. et al. Broadband convolutional processing using band-alignment-tunable heterostructures. Nat. Electron. 5, 248–254 (2022).

Wang, F. et al. A two-dimensional mid-infrared optoelectronic retina enabling simultaneous perception and encoding. Nat. Commun. 14, 1–8 (2023).

Wang, C. Y. et al. Gate-tunable van der Waals heterostructure for reconfigurable neural network vision sensor. Sci. Adv. 6, aba6173 (2020).

Zhang, Z. et al. All-in-one two-dimensional retinomorphic hardware device for motion detection and recognition. Nat. Nanotechnol. 17, 27–32 (2022).

Huang, P. Y. et al. Neuro-inspired optical sensor array for high-accuracy static image recognition and dynamic trace extraction. Nat. Commun. 14, 1–9 (2023).

Lee, D. et al. In-sensor image memorization and encoding via optical neurons for bio-stimulus domain reduction toward visual cognitive processing. Nat. Commun. 13, 1–9 (2022).

Kravitz, D. J., Saleem, K. S., Baker, C. I., Ungerleider, L. G. & Mishkin, M. The ventral visual pathway: An expanded neural framework for the processing of object quality. Trends Cogn. Sci. 17, 26–49 (2013).

Tamietto, M. & Morrone, M. C. Visual plasticity: Blindsight bridges anatomy and function in the visual system. Curr. Biol. 26, R70–R73 (2016).

Baden, T., Euler, T. & Berens, P. Understanding the retinal basis of vision across species. Nat. Rev. Neurosci. 21, 5–20 (2020).

Van Essen, D. C., Anderson, C. H. & Felleman, D. J. Information processing in the primate visual system: An integrated systems perspective. Science 255, 419–423 (1992).

Joesch, M. & Meister, M. A neuronal circuit for colour vision based on rod-cone opponency. Nature 532, 236–239 (2016).

Solomon, S. G. & Lennie, P. The machinery of colour vision. Nat. Rev. Neurosci. 8, 276–286 (2007).

Sun, W., Tan, Z., Mensh, B. D. & Ji, N. Thalamus provides layer 4 of primary visual cortex with orientation- and direction-tuned inputs. Nat. Neurosci. 19, 308–315 (2016).

Chen, X., Wang, F., Fernandez, E. & Roelfsema, P. R. Shape perception via a high-channel-count neuroprosthesis in monkey visual cortex. Science 370, 1191–1196 (2020).

Ding, H., Smith, R. G., Poleg-Polsky, A., Diamond, J. S. & Briggman, K. L. Species-specific wiring for direction selectivity in the mammalian retina. Nature 535, 105–110 (2016).

Lien, A. D. & Scanziani, M. Cortical direction selectivity emerges at convergence of thalamic synapses. Nature 558, 80–86 (2018).

Liu, B., Hong, A., Rieke, F. & Manookin, M. B. Predictive encoding of motion begins in the primate retina. Nat. Neurosci. 24, 1280–1291 (2021).

Strauss, S. et al. Center-surround interactions underlie bipolar cell motion sensitivity in the mouse retina. Nat. Commun. 13, 1–18 (2022).

Brincat, S. L. & Connor, C. E. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nat. Neurosci. 7, 880–886 (2004).

Priebe, N. J. & Ferster, D. Inhibition, spike threshold, and stimulus selectivity in primary visual cortex. Neuron 57, 482–497 (2008).

Jayachandran, D. et al. Three-dimensional integration of two-dimensional field-effect transistors. Nature 625, 276–281 (2024).

Wang, S. et al. Two-dimensional devices and integration towards the silicon lines. Nat. Mater. 21, 1225–1239 (2022).

Bellmund, J. L. S., Gärdenfors, P., Moser, E. I. & Doeller, C. F. Navigating cognition: Spatial codes for human thinking. Science 362, aat6766 (2018).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

Gao, S. B., Yang, K. F., Li, C. Y. & Li, Y. J. Color constancy using double-opponency. IEEE Trans. Pattern Anal. Mach. Intell. 37, 1973–1985 (2015).

Gijsenij, A., Gevers, T. & Van De Weijer, J. Computational color constancy: Survey and experiments. IEEE Trans. Image Process. 20, 2475–2489 (2011).

Luo, X. et al. A bionic self-driven retinomorphic eye with ionogel photosynaptic retina. Nat. Commun. 15, 1–9 (2024).

Yang, R. et al. Assessment of visual function in blind mice and monkeys with subretinally implanted nanowire arrays as artificial photoreceptors. Nat. Biomed. Eng. 1–22 https://doi.org/10.1038/s41551-023-01137-8 (2023).

Beauchamp, M. S. et al. Dynamic stimulation of visual cortex produces form vision in sighted and blind humans. Cell 181, 774–783 (2020).

Peng, Z. Source data of figures in the article “Multifunctional human visual pathway-replicated hardware based on 2D materials”. figshare, https://doi.org/10.6084/m9.figshare.26234561.v2 (2024).

Acknowledgements

This work was co-funded by the National Natural Science Foundation of China (Grant nos. 62222404, 62304084 and 92248304) (L. Y.), the National Key Research and Development Plan of China (Grant no. 2021YFB3601200) (L. Y.), the Major Program of Hubei Province (Grant no. 2023BAA009) (L. Y.), and the Research Grants Council of Hong Kong Postdoctoral Fellowship Scheme (Grant no. PDFS2223-4S06) (L. T.).

Author information

Contributions

Z. P. and L. Y. conceived the project. Z. P. designed the hardware. Z. P., L. X., X. H. and Z. L. fabricated the hardware. Z. P., X. Y., X. M. and X. H. performed the measurements. L. X. and H. H. completed the characterization of the material. W. S. polished the article. L. Y. and L. T. provided key suggestions to optimize data expression. Z. P. and L. Y. wrote the manuscript. G. Y., S. L., T. J. and X. M. supervised the project. All the authors discussed the results and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Young Min Song, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Peng, Z., Tong, L., Shi, W. et al. Multifunctional human visual pathway-replicated hardware based on 2D materials. Nat Commun 15, 8650 (2024). https://doi.org/10.1038/s41467-024-52982-3

DOI: https://doi.org/10.1038/s41467-024-52982-3
