Abstract

From sequences of discrete events, humans build mental models of their world. Referred to as graph learning, the process produces a model encoding the graph of event-to-event transition probabilities. Recent evidence suggests that some networks are easier to learn than others, but the neural underpinnings of this effect remain unknown. Here we use fMRI to show that even over short timescales the network structure of a temporal sequence of stimuli determines the fidelity of event representations as well as the dimensionality of the space in which those representations are encoded: when the graph was modular as opposed to lattice-like, BOLD representations in visual areas better predicted trial identity and displayed higher intrinsic dimensionality. Broadly, our study shows that network context influences the strength of learned neural representations, motivating future work in the design, optimization, and adaptation of network contexts for distinct types of learning.

Similar content being viewed by others

Introduction

Human experience is composed of events, words, and locations that occur in sequences, following predictable patterns. For example, heading to work each day, your route is formed by a series of intersections, as could be conveyed in turn-by-turn directions. Over time, you construct a mental map of not just the intersections but also the route. Likewise, when speaking to a colleague, you predict syllables and words based on your knowledge of the language and scholarly field, as evident in your ability to fill in gaps in the sounds and words that reach you due to, for example, background noise. Even when watching a film, our minds synthesize a temporal sequence of scenes into a multifaceted set of interactions between characters and events. The formation of such mental representations depends upon our ability to detect hidden interactions and to transform them into a predictive model.

Building such statistical models is central to human cognition1,2,3, because it allows us to accurately predict and respond to future events. One of the most prominent cues to underlying structure is the set of transition statistics between elements, such as how frequently one word precedes another in a lexicon. This cue has been extensively studied in the field of statistical learning4, and used to demonstrate that syllable transitions can help demarcate word boundaries as infants learn language5,6. Such statistical learning occurs in both infants (see ref. 7 for an overview) and adults8 and is thought to be a fundamental, domain-general learning mechanism because it arises from various sorts of sequences—from tones9 and shapes10 to motor actions11. Human learners likewise exhibit sensitivity to statistics between non-adjacent elements in a sequence12,13. Despite these strands of evidence, a comprehensive understanding of such non-adjacent statistics is challenging due to the number of possible statistical relations on which human learners might rely.

To study the role of non-adjacent probabilities in statistical learning, one particularly promising approach explicitly models a collection of events and the transitions between them as a graph14,15. Here nodes in the graph represent events and edges represent possible transitions between any two events. By formally mapping transition probabilities to a graph, one can study how the graph’s topological properties influence human learning. Practically, an experimenter can construct a sequence of events by a walk on the graph, and then assess the participant’s ability to predict upcoming items by measuring their response time to a cover task as the events proceed one by one. Studies of this ilk have demonstrated that topological features impacting learnability include the type of walk16 and whether the graph contains densely interconnected subsets or modules of nodes17, a property characteristic of many real-world networks shown to efficiently convey information2. Specifically, when graphs contain modular organization, people respond more quickly to transitions between stimuli within the same module than to transitions between stimuli across modules16. Further, when modularity is eliminated in a controlled fashion (for example, when compared to a locally-equivalent lattice graph), responses are globally slowed. Interestingly, it remains unknown what neural processes might explain the relation between graph topology and behavior.

In contrast to prior work exploring neural representations of items within the same graph18,19, here we designed a study to ask whether neural representations of stimuli systematically differed between different graph structures. In particular, given the ability of modular structure to efficiently convey information2, we hypothesized that the presence of modular structure would lead to robust neural representations, whereas the lack of modular structure would lead to weak neural representations. As a comparison structure, we chose a ring lattice graph, which allowed us to control for local degree while eliminating modularity. For a viewer standing at any given node and viewing immediate neighbors, both of these graphs appear identical, only differing when one can observe the high-level structure or when constructing multi-step predictions. To test our hypothesis, we measured BOLD fMRI activity while participants learned to predict and respond to sequences of abstract shapes. We found that modular structure led to improved discriminability between the neural activity patterns of stimuli, as well as higher intrinsic dimensionality, when compared to the ring lattice. These results demonstrate that neural representations differ systematically in response to different graph structures. Moreover, the work suggests that robust behavior may depend upon our ability to extract organizational patterns such as graph modularity. More broadly, our findings underscore the need to characterize how networks of information shape human learning.

Results

Thirty-four participants (11 male and 23 female) were recruited from the general population of Philadelphia, Pennsylvania USA. All participants gave written informed consent and the Institutional Review Board at the University of Pennsylvania approved all procedures. All participants were between the ages of 18 and 34 years (M = 26.5 years; SD = 4.73), were right-handed, and met standard MRI safety criteria. Two of the 34 participants were excluded because they failed to learn the shape-motor associations (one failed to complete the pre-training; one answered at-chance during the second session), and one additional participant was excluded because we did not accurately record their behavior due to technical difficulties. Hence, all reported analyses used data from thirty-one participants.

Task

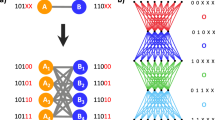

Each participant completed 2 sessions of a visuo-motor response task in an MRI scanner while BOLD activity was recorded. The visuo-motor task consisted of a set of shapes, a set of motor responses, and a graph which both served to map the shapes to responses and to generate trial orderings. Each node in the graph was associated with a shape and a motor response. For the former, we designed a set of 15 abstract shapes (Fig. 1a, left) to be visually discriminable from one another and to evoke activity in the lateral occipital cortex. For the latter, we chose the set of all 15 one- or two-button motor responses on a five-button response pad (Fig. 1a, right). We assigned participants to one of two conditions: a modular graph condition (n = 16) or a ring lattice condition (n = 15) (Fig. 1b, left). Each trial corresponded to a node on the graph, and trial order was determined by a walk between connected nodes. However, subjects were not informed that there would be any such regularity to the order of stimuli. A three-way association between node, shape, and motor response was generated, at random, for each participant: each of the 15 graph nodes was assigned a unique shape and a unique motor response, both chosen at random from the set of shapes and the set of motor responses, respectively (Fig. 1b, right). The mappings of shapes and motor responses were independent of one another, and randomized between participants, allowing us to separate the effects of shape, motor response, and graph.

a Participants were trained and tested on a set of 15 shapes (left) and 15 possible one- or two-button combinations on a response pad (right). b The order of those trials, and how the shapes and motor responses related to one another, varied between participants and across the two graph conditions. Each participant was assigned to one of two graph structures: modular or ring lattice (left). Then, each of the 15 shapes and motor responses was mapped to one of the 15 nodes in the assigned graph (right). To control for differences among shapes and responses, each mapping was random and unique to each participant. c Session one (top left) comprised five runs of a training block followed by a recall block. Session two (top right) comprised eight runs of four recall blocks each. Each block was composed of a series of trials on the assigned graph. In training blocks (bottom left), participants were instructed to press the buttons indicated by the red squares. To encourage participants to learn shape mappings, the shape appeared 500 ms prior to the motor command. In recall blocks (bottom right), the shape was shown and participants had 2 s to respond. If they responded correctly, then the shape was outlined in green; if they response incorrectly, then the correct response was shown.

The experiment extended over 2 days. Session one occurred on the first day and session two occurred on the second day. Session one consisted of five task runs, and each run consisted of a training block and a recall block. Session two consisted of eight runs of four recall blocks each (Fig. 1c, top). During each training block, five squares were shown on the screen, horizontally arranged to mimic the layout of the response pad. On each trial, two redundant cues were presented: (1) a shape was shown in all five squares, and (2) the associated motor response was indicated by highlighting one or two squares in red. Either cue alone was sufficient to pick the correct response. The shape was displayed 500 ms earlier than the motor movement in order to encourage participants to learn the visuo-motor pairings. Participants completed 300 trials at their own pace: each trial would not advance until the correct motor response was produced. Once produced, the shape for the next trial would immediately appear, and 500 ms later the square(s) corresponding to the next required motor response would be highlighted. During each recall block, the shape alone was displayed in the center of the screen, and participants were given 2 s to produce the correct motor response by memory. No motor cue was given, unless the participant answered incorrectly: in the latter case, the correct response was shown below the shape for the remainder of the 2 s (Fig. 1c, bottom).

Subjects accurately learn visuo-motor associations

We measured response accuracy to verify that participants learned the task structure and the visuo-motor associations. For participants to attain high response accuracy during the training blocks, they must simply respond to the motor commands presented to them; in contrast, for participants to attain high response accuracy during the recall blocks, they must correctly learn the shape-motor associations. In the training blocks of session one, we observed a mean accuracy of 89.1% by run five, suggesting that participants could produce the motor response with high accuracy when explicitly cued (Fig. 2a, left). In the recall blocks of session one (Fig. 2a, center), we observed a mean accuracy of 74.4% by run five (chance was 6.67%), indicating that participants could also produce the motor response with some accuracy despite the absence of any motor cue. At the beginning of session two, the recall accuracy was slightly lower (at 70.8%), but by the end of session two, the recall accuracy was 96.0%, demonstrating robust recall of motor responses (Fig. 2a, right). Collectively, these data demonstrate that participants were able to learn the motor response assigned to each visual stimuli over a 2-day period. See Supplementary Fig. 3 for individual participants.

a Participant response accuracy. Markers indicate the mean values and error bars indicate the 95% confidence intervals across participant averages for each block and run. b Participant response times for correct trials. Markers indicate the mean values and error bars indicate the 95% confidence intervals across participant averages for each block and run. In both a and b, the blue (orange) line indicates quantities calculated from data acquired from participants assigned to the modular (ring lattice) graph condition. The black line indicates the mean across both graph conditions. In both panels, we show quantities calculated for session one training (left) and recall (center) blocks, as well as for session two recall blocks (right).

Whereas response accuracy allows us to assess the learning of visuo-motor associations, response time allows us to assess each participant’s expectations regarding event structure and the underlying graph that encodes that structure. If a participant is able to learn the underlying graph structure, then they can predict which shapes might be coming next (those directly connected to the current shape in the graph), and will respond more quickly. Consistent with this intuition, we found that response times steadily decreased during training, from approximately 1.18 s during run one to approximately 1.01 s during run five (Fig. 2b, left). During the recall blocks, response times depended on the participant’s ability to (1) predict upcoming stimuli and (2) recall the shape-motor association. We found that response times decreased during the recall blocks of session one (1.07 s on run one to 1.00 s on run five; Fig. 2b, center) and of session two (from 0.97 s on run one to 0.89 s on run eight; Fig. 2b, right), demonstrating improvement in prediction and/or recall speed. See Supplementary Fig. 4 for individual participants.

Graph representational structure is not decodable Independently from stimulus and motor structure

Given the behavioral evidence for participant learning, we asked how motor response, shape, and graph were represented in the brain. In what follows, we will describe these three domains in turn. We first sought to find direct evidence of representation of graph structure. We hypothesized that, in-line with prior work, we should be able to decode consistent, subject-specific patterns of activity associated with graph structure in the MTL20. We hypothesized that, if the hippocampus was directly encoding graph structure, we should be able to reliably decode node identity from neural patterns on any given trial. Node identity is fully conflated for each individual subject with visual appearance and motor response, but these three aspects should be separable across participants: if the relationship between patterns of evoked BOLD activity in a given brain region is due to graph structure—rather than response or visual associations—then we should be able to find a consistent pattern of relationships preserved across participants based on graph structure (Fig. 3a).

a Subjects were assigned to either the modular or ring lattice groups, which determined the possible transitions between trial types. b Above-chance classification accuracy for lattice graph participants in left hippocampus ROI. Colors indicate graph condition: modular or ring lattice. Classification accuracy does not differ significantly between conditions (independent t-test, t29 = 1.51; two-sided p = 0.141). Boxes show 25%, 50%, and 75% quartiles. Whiskers show the range of the data excluding outliers, and points indicate individual participants. Dotted line indicates chance performance of 6.67%. c We did not observe similar representations of graph structure across participants. Here we ran a searchlight to identify regions that showed high similarity in how graph nodes were represented relative to one another. Shown are the mean values when the RDM in the neighborhood of a voxel was correlated with the average RDM of all other subjects in that neighborhood. We computed this quantity separately for both graph conditions and then averaged the two maps. d Two regions exhibited heightened within-subject consistency of representational dissimilarity matrices: visual cortex (top) and motor cortex (bottom). Both panels present the mean Pearson’s correlation coefficient, r, between RDMs among runs for each subject.

We began by asking whether we could decode trial identity in the hippocampus. To measure the representation of trial information in the brain, we decoded trial identity from patterns of evoked BOLD activity using multi-voxel pattern analysis (MVPA). Each trial evokes a pattern of brain activation, which can be viewed as a point in high-dimensional space, where each dimension corresponds to a different voxel’s BOLD activation. For each of these activation patterns, we used a trained classifier to predict what trial was being experienced by the subject, and we assessed the veracity of that prediction using cross-validation to predict trials in a held-out run (see “Methods”). We examined classification accuracy in left, right, and a bilateral hippocampal gyrus region of interest (ROI) by training a classifier on data from the respective laterality corresponding to seven of the session two recall runs, and by testing our prediction on the remaining run. Data consisted of LS-S β weights resulting from a contrast of the regressor for each trial to a nuisance regressor of all other trials. We find that we were able to decode node identity above-chance in the left hippocampus for lattice graph subjects (t14 = 2.22; p < 0.05) (Fig. 3b), though not for modular graph subjects, and the difference between the two groups is not significant (t29 = 1.51; p < 0.14). Notably, the MTL is prone to low signal-to-noise-ratio fMRI signals due to anatomy21, which can be improved by imaging with a reduced field of view at a cost of our ability to infer graph effects elsewhere in the brain; thus this finding may simply suggest a limitation of our whole-brain signal.

Even if we cannot reliably decode trial identity within the hippocampus, it may be that graph structure is encoded more robustly elsewhere in the brain. For each participant, we conducted a whole-brain searchlight where for each voxel we computed the mean RDM in the neighborhood of that voxel, thereby producing a 105-dimensional vector for each voxel composed of the upper right entries in the 15-by-15 RDM. We then calculated the correlation coefficient between that vector and the mean vector of the same voxel in the remaining participants who had been trained on the same graph type. Finally, we took the mean of these correlations across all subjects. This process is similar in principle to that of estimating a lower bound on the noise ceiling22. The resulting map should highlight voxels that displayed similar representational dissimilarity patterns across participants solely due to graph structure, without making any assumptions about the representations themselves or about the dissimilarity pattern. We observed no clear region in which representational structure was shared across participants (Fig. 3c). This variation is perhaps intuitive, as graph structure is experienced indirectly through a sequence of steps between nodes, and also variably in the sense that each participant experienced a different walk through the network. Hence, it is natural to expect high variability in how each participant encodes the graph structure.

High intersubject variability can exist alongside high intrasubject reliability. To understand the potential relation between the two, we measured intrasubject reliability by the consistency of RDMs across runs within each subject. We performed a similar procedure to our earlier searchlight, with the one key difference being that we estimated the correlation between the RDM for each run and the mean of the RDMs for the other runs, in the neighborhood of each voxel. This approach provided us with a consistency map for each subject, and we averaged these consistency maps across subjects (Fig. 3d). We observed two regions of high consistency that overlap with the motor and visual regions, primarily centered on the postcentral gyrus, as well as on the lateral occipital cortex (LOC), regions which we a priori expected to reflect representations of motor and visual aspects of each trial, respectively. This finding suggests that any consistent representation of graph structure may overlap with stimulus shape or motor response encodings; thus, we next asked whether graph structure might nonetheless influence representational structure of stimuli elsewhere, as suggested by previously observed behavioral sensitivity to graph structure.

Representational structure in motor cortex follows response patterns

We next turned our attention to representation of motor response in the brain. Prior work suggests that we should be able to decode consistent, subject-specific patterns of activity associated with each of the 15 one- and two-finger motor responses in both primary and somatosensory motor cortices23,24,25. We hypothesized that we could reliably decode the associated motor movement from the neural pattern on any given trial, and that the relationships between those neural patterns would be preserved across participants.

As expected, we could predict the identity of the current trial with an accuracy above chance for all 31 participants. That is, the BOLD patterns evoked within each subject in response to finger movements were highly consistent across trials and highly distinct for each movement. Prediction accuracy was above chance for the left hemisphere postcentral gyrus ROI, and for a right hemisphere postcentral gyrus ROI and a bilateral postcentral gyrus ROI in all but one subject (Fig. 4a). Specifically, we found that the left-hemisphere classification accuracy was significantly higher than the right hemisphere classification accuracy, as expected given that task responses were performed with the right hand (two-sided paired t-test; t30 = 6.55; p < 4 × 10−7). Interestingly, classifying held-out runs using data from both hemispheres did not provide a statistically greater accuracy than classifying using data from the left hemisphere alone (two-sided paired t-test; t30 = −0.64, p = 0.53). These results demonstrate that trial information was robustly represented in the BOLD signal in postcentral gyrus, and that we could decode this information with MVPA, particularly in the hemisphere contralateral to the motor response.

a Above-chance classification accuracy for all participants in the left postcentral gyrus. Colors indicate graph condition: modular or ring lattice. Boxes show 25%, 50%, and 75% quartiles. Whiskers show the range of the data excluding outliers, and points indicate individual participants. The dotted line indicates chance performance of 6.67%. b SVM classification accuracy in postcentral gyrus was correlated with mean response time in the recall blocks of session two. Lines indicate linear regression fits and shaded envelopes indicate 95% confidence intervals. c Representational dissimilarity matrices (RDMs) were calculated for each subject using a cross-validated Euclidean metric, and averaged across subjects. Here we show the average RDM in left postcentral gyrus, with rows ordered by the motor command (see key at left of panel). The upper triangle presents data for the modular condition and the lower triangle presents data for the lattice condition; diagonal elements are equal to 0 for both conditions. High values indicate dissimilar patterns. d In the postcentral gyrus, the representational dissimilarity matrices were highly similar across subjects when ordered by motor response. Here we ran a searchlight to identify regions that displayed consistent representations across subjects. Shown is the average Pearson correlation coefficient, r, when the RDM in the neighborhood of a voxel was correlated with the average RDM of all other subjects in that neighborhood. We observed two regions of high consistency: one centered on motor cortex (shown here), and one centered on the cerebellum. The searchlight was thresholded at r = 0.18 to control the FWER at two-sided p < 0.05.

While robust, we observed that classification accuracy varied from 5.9% to 17% across subjects in the bilateral postcentral ROI. What drives these differences in classification accuracy? We hypothesized that individual differences in task performance effected differences in classification accuracy. We therefore asked whether our ability to classify trials for a participant was dependent on that participant’s mean response time or mean response accuracy. To answer this question, we used the participant behavior on the recall blocks in session two, which represented post-training task performance (Fig. 4b). We found that response time was a significant predictor of classification accuracy (Table 1), such that classification accuracy was higher for participants with higher average response times than for participants with lower average response times (OLS; β = 0.0191, SE = 0.009, t27 = 2.13, p < 0.043). We did not observe a relationship between classification accuracy and response accuracy (OLS; β = −0.06, SE = 0.081, t27 = −0.73, p = 0.47). The interaction between graph condition and response time was additionally not significant (OLS, β = −0.015, t27 = −1.362, p < 0.19, 95% CI: −0.038 to 0.008). Given that the postcentral gyrus encodes information about motor movements, it is not surprising that the timing of those movements impacted our ability to decode their evoked activity, as increased response times provide a longer readout of activity patterns.

Our classification results suggested that evoked patterns of activity were highly reliable across runs within single subjects. Prior results have suggested that activation patterns vary appreciably between subjects24, but that the relationships between activation patterns are conserved. That is, the distance between representations should be consistent between subjects, even if the specific voxel-wise patterns differ. To assess the preservation of inter-representation differences across subjects, we estimated a representational dissimilarity matrix (RDM) for each subject using a cross-validated Euclidean metric (see “Methods”). In this matrix, we let i, j index pairs of movements, and we let the ij-th matrix element indicate the dissimilarity of movement i’s representation from movement j’s representation. We then averaged the RDMs across participants (Fig. 4c), separately for the two graph conditions (modular and ring lattice). We found that differences in motor representations were preserved across participants and graph conditions, and reflected the movements involved: representations of two-finger movements were consistently more dissimilar to representations of one-finger movements than they were to themselves (two-sided independent t-test; t93 = 9.45, p < 3 × 10−15), and likewise one-finger movement representations were more dissimilar to two-finger movements than they were to themselves (independent t-test; t58 = 4.70, p < 2 × 10−5). These data demonstrate not only that subjects exhibited reliable patterns of activity evoked by each motor response across the experiment, but also that the relationship between those patterns was reliable between subjects within postcentral gyrus.

Although we chose to consider the postcentral gyrus motivated by prior work, we also performed an exploratory analysis to identify other regions whose motor RDMs were highly similar across participants. For each participant, we repeated the approach from Fig. 3c, conducting a whole-brain searchlight for similar motor representational dissimilarity patterns across participants, without making any assumptions about the representations themselves or about the dissimilarity pattern. We found two regions that exhibit high intersubject similarity after thresholding to control the FWER at p < 0.05 (see “Methods”): a region encompassing both pre- and postcentral gyrus bilaterally (Fig. 4d) and a region in the cerebellum (Supplementary Fig. 2). Thus by constructing an RDM organized by movement, but not by shape or node identity, we identify three regions (precentral gyrus, postcentral gyrus, and cerebellum) which exhibited reliable cross-subject relationships between evoked patterns of activity.

Representational structure in visual cortex reflects both stimulus and graph type

Following our decoding of motor responses, we asked whether visual regions housed reliable encodings of shape identity both within and between participants. Our stimuli were chosen to produce differentiable representations in the lateral occipital cortex (LOC)26. In prior work, the LOC was shown to exhibit task-dependent decorrelations in the neural representations of shape stimuli27. We therefore hypothesized that if the architecture of the graph altered the perception or encoding of stimuli, then that dependence would manifest in LOC. Using data from a separate localizer run, we were able to localize the LOC for each subject by applying a general linear model (GLM) with the following contrast: BOLD responses to a novel set of shape stimuli versus BOLD responses to phase-scrambled versions of those shapes. Note that these novel shapes were generated using the same process as that for the shapes in the training and recall blocks. We then intersected the two largest clusters from the localizer with a surface-defined LOC ROI and ordered the voxels in this intersection by their z-statistics from the localizer contrast. We chose the 200 voxels with the highest z-scores from each hemisphere to define left and right ROIs, and we chose the top 600 voxels to define a bilateral ROI. We then studied whether stimulus coding in this ROI exhibited similar properties to the motor representations we could reliably decode in postcentral gyrus.

As before, we first wished to verify that trial-by-trial representations were consistent within subjects. We found that we could predict trial identity on a held-out run remarkably well across all participants and ROI choices (Fig. 5a). We averaged the classification accuracy across all 8 folds and observed that it was greater than chance (6.67%) for all participants. We also observed higher classification accuracy in participants trained on the modular graph than in participants trained on the lattice graph (Mixed ANOVA, F(1, 29) = 14.14, p < 8 × 10−3). Although the above classification accuracy was averaged only over correct trials, the classifier was trained on all trials, and we wished to verify that this choice did not bias our results. Re-running the analysis but only training on correct trials, we found that classification accuracy was again higher for participants trained on the modular graph than for participants trained on the lattice graph (Mixed ANOVA, F(1, 29) = 18.488, p < 2 × 10−4). These data demonstrate that trial identity could be reliably decoded within-subject from activity within LOC.

a Above-chance classification accuracy for all participants in left, right, and bilateral LOC (lateral occipital cortex) ROIs. Colors indicate graph condition: modular or ring lattice. Classification accuracy differs significantly between conditions (Mixed ANOVA, F(1, 29) = 14.14, p = 7.64 × 10−4). Boxes show 25%, 50%, and 75% quartiles. Whiskers show the range of the data excluding outliers, and points indicate individual participants. Dotted line indicates chance performance of 6.67%. b In LOC, graph type predicted SVM classification accuracy (OLS, t27 = 2.15, uncorrected two-sided p = 0.041, 95% CI: 0.002 to 0.104), whereas response time was not a significant predictor (OLS, t27 = 1.13, uncorrected two-sided p = 0.267, 95% CI: −0.019 to 0.067). Lines indicate linear regression fits and shaded envelopes indicate 95% confidence intervals. c Representational dissimilarity matrices (RDMs) were calculated using a cross-validated Euclidean metric and then averaged across subjects. Here we show the average RDM in left LOC, with rows ordered by the shape stimulus (see key at left of panel). The upper triangle presents data for the modular condition and the lower triangle presents data for the lattice condition; diagonal elements are equal to 0 for both conditions. High values indicate dissimilar patterns. d In the visual cortex, the RDMs were highly similar across subjects when ordered by stimulus shape. Here we ran a searchlight to identify regions that displayed consistent representations across subjects. Shown is the average Pearson correlation coefficient, r, when the RDM in the neighborhood of a voxel was correlated with the average RDM of all other subjects in that neighborhood. We observed one region of high consistency, which was centered bilaterally on LOC and extended posteriorly to early visual cortex. The searchlight was thresholded at r = 0.18 to control the FWER at two-sided p < 0.05.

To evaluate whether classification accuracy differed by graph, we fit a linear regression model that predicted classification accuracy from both response time and graph condition (Fig. 5b and Table 1). We found that graph condition (modular versus ring lattice) was a statistically significant predictor of classification accuracy (OLS, estimated increase of 5.3% for the modular graph, t27 = 2.15, p < 0.042, 95% CI: 0.002–0.104). This result suggests that there are fundamental differences in learned representations between the two training regimens. In contrast to our findings in the postcentral gyrus, the classification accuracy in LOC was not significantly predicted by response time (OLS, β = 0.024, t27 = 1.13, p < 0.27, 95% CI: −0.019 to 0.067), ruling out the possibility of our results being driven solely by behavior. The interaction between graph condition and response time was not significant (OLS, β = −0.006, t27 = −0.227, p < 0.83, 95% CI: −0.059 to 0.048). Thus we find that graph structure induced a significant difference in our classification accuracy of trials, and that difference, as is evident in Fig. 5b, was not driven by response time differences alone.

Given the reliable patterns of activity in LOC, we next computed a mean RDM in the LOC across subjects as in Fig. 4c, but arranged the RDM by shape rather than by motor command. Crucially, this RDM was not a reordering of the mean motor response RDM, as the correspondence between shapes and motor responses was distinct for each subject. In computing the mean RDM, we averaged data separately for modular and ring lattice conditions. Across both graphs, we found a consistent distance relationship between shape representations, which we find to be significant when comparing the modular and ring lattice RDMs (Kendall’s τ = 0.390, p < 3.81e−9, note the symmetry between the upper and lower triangles of Fig. 5c). This finding suggests that the sensitivity of the LOC to individual shape features was shared across subjects.

The analyses we have described thus far focused on the LOC. Next, similar to Fig. 4d), we performed an exploratory analysis to determine whether other areas of the brain displayed RDMs that were consistent across subjects when the RDMs were arranged by shape. We computed the correlation between a single subject’s shape RDM and the average shape RDM across all other subjects, in the neighborhood of each voxel (Fig. 5d). As before, we took the mean of this correlation estimated for all subjects, and applied a threshold to control the FWER at p < 0.05 (see “Methods”). We observed a single region of high between-subject similarity. As expected, this region was centered on the LOC and extended posteriorly through early visual cortex. Interestingly, we observed no other regions where RDMs were consistent between subjects when arranged by shape. These results largely mirror our findings with motor response activity patterns. However, here we demonstrate that by simply rearranging our RDM we can instead isolate relationships between activity driven by visual properties of the stimuli. Intriguingly, we observe a difference in decoding accuracy between graph conditions, suggesting that graph structure modulates the encoding of individual stimuli in LOC.

Representation and dimensionality differ between graph structures

By searching for regions which exhibited significant intrasubject reliability across runs, we identified two regions in which RDMs were consistent across blocks for individual subjects. We then organized the RDMs according to stimulus shape and motor command, and confirmed that these regions exhibited significant intersubject reliability in which patterns of representational dissimilarity were shared across participants. This inter-subject similarity was likely driven by aspects of trial identity: one- and two-finger movements for the motor response, and aspect ratio or curvature for stimulus shapes. This suggests that any consistent representation of graph structure may spatially overlap with stimulus shape or motor response encodings.

Consistent with this suggestion, we observed that graph type was a significant predictor of classification accuracy in LOC (Fig. 5a, b), whereby classification accuracy was greater in the modular condition than in the lattice condition. One possible explanation for this observation is that trial types were more distinguishable from one another due to more distinct representations: when a subject experienced the modular graph, the representation of shape one may simply have been further away from the representation of shape two in representational space. To evaluate the validity of this possible explanation, we calculated the average distance between representations in the bilateral LOC ROI by computing a Euclidean distance RDM for each run, and then taking the mean of the upper-right off-diagonal elements for each subject (Fig. 6a, left). We observed that discriminability was significantly greater in the modular graph than in the lattice graph (two-sided independent t-test; t29 = 3.04, p = 0.005). That is, the modular condition induced more separable mean activity patterns between pairs of trial types than did the lattice condition.

a Subjects in the modular graph condition showed higher average distance between representations of different trial types (left) and lower average distance between representations of the same trial type (right) than subjects in the lattice condition. The inter-representation distance was the mean of the off-diagonal elements of the Euclidean distance RDM for each subject. Higher values indicate that trial type representations were more distinct from one another. Meanwhile, the intra-representation distance measures how invariant a representation is across repetitions of the same trial type. Low values indicate consistent evoked activity. Boxes show 25%, 50%, and 75% quartiles. Whiskers show the range of the data excluding outliers, and points indicate individual participants. b Here we show the separability dimension of the representations in LOC. We observed a consistently higher separability dimension in the modular condition than in the ring lattice condition. Boxes show 25%, 50%, and 75% quartiles. Whiskers show the range of the data excluding outliers, and points indicate individual participants.

Another possible explanation for the greater classification accuracy observed in the modular graph is that trial-to-trial replicability may be higher when a subject experiences the modular graph: a given shape may evoke a more consistent neural representation on each appearance of that shape. To evaluate this possibility, we calculated the average consistency of representations in the same bilateral LOC ROI by taking the mean representation for each run and trial type, and then calculating the mean distance of that representation from the representations of the other runs (Fig. 6a, right). We observed that patterns were more consistent in the modular graph than in the lattice graph, as evident in lower distances between trial repetitions (two-sided independent t-test; t29 = 3.60, p < 0.002). Taken together, these two findings suggest that the modular graph leads to more distinguishable and consistent trial representations, which in turn could drive the heightened classification performance.

The observed increase in neural discriminability in LOC suggests that behavioral discriminability might similarly differ between graph conditions. To test for this possibility, we recruited an additional group of subjects and trained them on the same set of stimuli associated to either a modular or lattice graph. We then tested whether subjects could more quickly discriminate between stimuli presented on the modular graphs than between the same stimuli presented on the lattice graph (Supplementary Fig. 5). We found that response times when discriminating between shape pairs were highly correlated with their representational distance in LOC (Mixed-Effects Model, t93 = 4.33, p < 0.0001). Notably, though, we did not observe a significant difference based on graph type (Mixed-Effects Model, mean = 0.07 ms, t48 = 0.866, p < 0.392). These behavioral results suggest that while subjects in the modular condition exhibited more distinguishable LOC activity patterns than did subjects in the lattice condition, these differences in stimulus representation do not directly relate to behavioral distinguishability of stimuli.

A third possible explanation for the greater classification accuracy observed in LOC in the modular graph is the dimensionality of the representation. If one graph topology allows a participant to encode representations in a higher dimensional space, then those representations could also be made more distinct from one another. Note that our ability to classify trials using an SVM relies on finding a hyperplane separating the trial types. Intuitively, if the trial types lie in a higher-dimensional space, then finding a separating hyperplane becomes an easier task than if the trial types lie in a low-dimensional space. To determine whether dimensionality plays a role in the LOC’s representations, we computed a quantity called the separability dimension, which measures the fraction of binary classifications that can be implemented using our trial types (see refs. 28,29 and “Methods” for details). While our previous neural discriminability finding examined the ability to distinguish between pairs of trial types, dimensionality addresses the ability to distinguish between larger groupings of trial types, where higher dimensionality enables encoding increasingly complex relationships between trial types. Consistent with our intuition, we found that dimensionality was higher in the modular graph condition than in the ring lattice condition (Fig. 6b). Interestingly, this difference was apparent in LOC where we observed a difference in classification accuracy between graph conditions, but not present in the postcentral gyrus where we observed no difference in classification accuracy between graph conditions (Supplementary Fig. 1).

Functional connectivity during learning predicts subsequent decoding

Thus far, we have examined differences in stimulus representations within individual regions of the brain after subjects have successfully learned to perform the task. We have observed significant differences in stimulus-evoked activity patterns in the LOC between subject groups: that is, more robust decoding accuracy alongside heightened intrinsic dimensionality of the associated neural activity in subjects exposed to the modular graph. Moreover, there exists significant within-group variability in decoding accuracy: that is, differences unrelated to the statistical structure of the graph traversals encountered. Thus, we asked whether individual differences in decoding accuracy could be explained by distinct patterns of connectivity between the aforementioned regions during learning. For example, motor activity in postcentral gyrus might be directly driven by visually-evoked activity in LOC, or it might be simultaneously driven by predictive mechanisms in the MTL. Moreover, communication between the MTL and LOC might reflect the degree to which subjects are learning the underlying statistical structure, versus simply learning to robustly respond to each stimulus at it is encountered.

To address how regional interactions during task acquisition contributed to day 2 learned stimulus representations, we computed the degree of static functional connectivity between brain regions during each learning block on day 1 of the experiment. We focused on the three pairwise connections between the regions in which we primarily expected involvement for the current task: left LOC, left postcentral gyrus, and left MTL. We then averaged these FC values for each subject for each of the three region pairs, providing a per-subject summary of regional interactions during task acquisition. We fit mixed-effects linear regression models which predicted day 2 classification accuracy in each of the three regions (left hippocampus, left LOC, and left postcentral gyrus) as a function of all three day 1 FC values.

We observed that individual differences in classification accuracy on day 2 could be predicted by differences in FC during learning after correcting for multiple comparisons (Table 2). In postcentral gyrus, increased decoding accuracy was predicted by higher LOC/postcentral FC as well as by lower LOC/MTL FC during learning. Intriguingly, this relationship was reversed for hippocampal decoding, where increased decoding accuracy was predicted by lower LOC/postcentral FC and by higher LOC/MTL FC, alongside MTL/postcentral FC being a negative predictor of hippocampal decoding. In LOC, only MTL/postcentral FC was predictive of decoding accuracy, with higher FC predicting higher decoding accuracy. These findings suggest that individual differences in FC during task acquisition are predictive of later differences in strength of stimulus representation among brain regions crucial to the performance of this task, perhaps reflecting distinct roles of involvement of the MTL among participants.

Discussion

Humans deftly learn the networks of interactions underlying complex phenomena such as language or music30. Behavioral and neural evidence demonstrates that the way we learn and represent stimuli is modulated by the temporal structure in which we experience those stimuli31,32. Moreover, recent studies indicate that humans may learn to represent the interaction network itself. For example, when stimuli are grouped into clusters, a phenomena commonly observed in natural settings2, learner response times to those stimuli are modulated by whether adjacent stimuli are situated in the same cluster, even when all transition probabilities (and thus predictive information) are held constant16. This effect is shown to be robust to types of stimuli33 and significant topological variation34. Moreover, learners respond more swiftly to a sequence drawn from a topology with such clusters compared to a topology without such clusters17. Collectively, these findings suggest that humans can learn properties of graph structure such as modular organization, and can leverage that structure to create more effective predictions. Converging neuroimaging evidence suggests that neural representations of stimuli encode properties of the interaction networks, including cluster identity18 and graph distance between items19,35. To date, however, it remains unclear how such graph-induced representation structure differs when the same elements are arranged in a distinct organizational pattern.

In the current study, we addressed this question by training human subjects to respond to sequences of paired visual and motor stimuli. We then tested whether representations of those stimuli differed when we performed a controlled manipulation of the sequence. Specifically, each subject learned a sequence based on either a modular graph or a ring lattice graph, in both cases with no explicit knowledge of the underlying graph. Subjects underwent 1 day of training followed by a second day in which they were asked to produce the motor response associated with each visual stimulus in the sequence. We found that we could reliably decode stimulus identity from the neural representation in both postcentral gyrus and lateral occipital cortex. Further, we observed consistent representation structure of our stimulus set across subjects in these regions, when comparing motor response in postcentral gyrus and stimulus shape in lateral occipital cortex. Within lateral occipital cortex, but not postcentral gyrus, our classification ability was modulated by graph type, with the modular graph leading to significantly higher classification scores than the ring lattice graph. This classification difference due to graph was accompanied by changes in the representation structure: modular representations were both more consistent across trials and more separable between different trial types than were representations from the ring lattice graph. Finally, we found that representations in lateral occipital cortex from the modular graph exhibited higher separability dimension than did those from the ring lattice graph. Taken together, these results show how graph structure can modulate learned neural representations of stimuli, and that modular structure induces more effective (discriminable) representations of stimuli than does ring lattice structure.

Modular graphs enable effective neural representations

There is strong theoretical justification to expect more robust representations to arise from the modular graph than from other non-modular graphs. Using information theory, prior work has shown that graph organizations characterized by hierarchical modularity facilitate efficient transmission of information2. Perhaps due to that functional affordance, this type of organization is commonly observed in many real-world networks such as those of word transitions, semantic dependencies, or social relationships. The informational utility of hierarchically modular networks arises in part from the fact that such networks exhibit high entropy, thereby communicating large amounts of information. It also arises in part from the expectations that humans form about clustered structures: preferentially predicting transitions that remain within a cluster, thereby accurately foreshadowing upcoming stimuli36. Here in our study, the modular graph composed of three clusters of five nodes is more highly clustered than the ring lattice graph. This fact can be clearly seen visually, but it can also be quantified by the clustering coefficient, which is defined as the fraction of a node’s neighbors that are connected to one another. The average clustering coefficient of the modular graph is 0.7 and of the ring lattice graph is 0.5. Indeed, we found that participants formed more robust representations of stimuli from the modular graph than from the lattice graph. In other words, the modular graph is more similar to graphs encountered in the real world, to which our expectations are tuned, and is also better capable of conveying its structure.

Response time differences do not explain LOC graph effects

Decoding accuracy in postcentral gyrus was significantly predicted by mean subject response time, explaining some of the observed between-subject variance. This is not particularly surprising, if only because a longer response time would lead to a longer and thus perhaps more robust readout of neural activity. Nonetheless, fully ruling out a non-spurious connection between response time and decoding accuracy would require careful controls. Crucially though, we find that this relationship was not significant in LOC, eliminating a potential confound in explaining our difference in classification accuracy between graph types. In fact, Fig. 5b suggests a clear modulation of graph type on top of any (non-significant) response time effects.

Neural representations from modular graphs are high dimensional

We observed that the neural representations developed during exposure to a sequence drawn from a modular graph tend to be high dimensional. Prior work has shown that high dimensionality of neural representations is associated with faster learning and more efficient stimulus embedding29. Here, we extend the current knowledge in the field by demonstrating that more efficient graph structures lead to higher dimensional neural representations. It is possible that this association between topology and dimensionality arises from the fact that the modular graph can be neatly separated into clusters; coding by cluster could allow the brain to use a high-dimensional embedding. The nature of this higher-dimensional encoding remains a topic for future study, and could be pursued by investigating the learning of a variety of graphs with and without hierarchical structure.

Graph-dependent adaptation decorrelates shapes

Our observation—that graph topology can be used to decorrelate neural responses to shape—is of particular relevance to the study of neural adaptation. An oft-hailed mechanism of neural adaptation is the decorrelation of neural responses among stimuli37,38. Such decorrelation is thought to allow the brain to become attuned to task-relevant distinctions between stimuli39. Prior work has observed decorrelation in BOLD responses to visual stimuli in the lateral occipital cortex (Mattar et al.27). Specifically, this study found that training on two classes of stimuli caused representations evoked by the two classes in the lateral occipital cortex to decorrelate from one another. Such increased neural separability of stimuli may support behavioral discrimination between important stimulus classes.

Here in our study, all subjects learned the same set of 15 previously unseen shapes. Basic visual properties of the shapes are expected to drive the BOLD response in the lateral occipital cortex26. However, if these properties alone drive the BOLD response, then we would expect similar representational structure between activity patterns in both modular and ring lattice subjects. Instead, we found that we could more accurately classify stimuli from neural representations when participants experienced sequences determined by the modular graph than when they experienced sequences determined by the ring lattice, suggesting that BOLD responses are driven by non-visual factors. This classification difference was accompanied by two observed differences in pattern distance: First, activation for a given trial type was more stable across trials in the modular graph than in the ring lattice graph, as evidenced by the lower mean pattern distance between trial repetitions. Second, activation was more distinct between trials of different types: the average distance between different trial types was higher in the modular graph than in the ring lattice graph. In summary, we observe that graph structure drives a difference in representation fidelity, inducing both more reliable and more separable patterns.

Motor cortex displays strong organizational patterns

The approach that we use here—representational similarity analysis or RSA—has been commonly used to understand how neural representations of stimuli are encoded. Rather than asking which neurons or voxels encode a stimulus, we can study how the patterns of neurons or voxels relate across stimuli. Further, we can compare neural distances to those predicted by real-world stimulus properties, elucidating which real-world properties drive the brain’s organization. For instance, the same single-digit finger movement in different people elicits vastly different patterns of neural activation in primary motor cortex, while the relationship between activity patterns of different fingers is highly conserved across people24. Prior work has demonstrated that everyday usage patterns predict motor representations better than musculature, a result that fundamentally underscores the importance of relationships rather than the movements themselves. Similar findings have also been reported for motor sequences25.

Here in our study, we recorded neural activity of both single- and multi-finger movements, and found that these relationships were highly preserved between participants and between graphs (Fig. 4c). This observation raises an intriguing question for further study: Why is the difference in representational dimensionality between conditions observed in LOC but not in motor cortex? Strikingly, in the postcentral gyrus we found a clear separation of one-finger and two-finger response patterns, where one-finger response patterns were similar to other one-finger response patterns, and two-finger response patterns were similar to other two-finger response patterns. We note that our ROI encompasses a larger area than that used in prior work24, and studies of multi-finger sequences, while distinct from multi-finger movements, have observed that these increasingly complex movements elicit distinct representations in areas further from the central sulcus25. Further investigation is necessary to determine whether (and to what degree) the dissimilarity patterns we observed are influenced by differences in cortical location of one-finger and two-finger motions.

Functional connectivity reveals distinct patterns of regional interactions

While the current study has primarily focused on between-group differences driven by the statistics of modular vs. ring lattice graph traversals, we observed significant variability in classification accuracy both between and within groups. This variability existed even in the hippocampus, despite previous work suggesting hippocampal involvement in similar tasks4,40,41. It is not particularly surprising that subjects might exhibit variability in hippocampal recruitment. Although the task in this study was designed to encourage prediction of upcoming stimuli, participants can also perform the task solely through learning shape-motor associations. Moreover, the complexity of learning such associations in the current study may have competed for attention with learning to predict upcoming stimuli.

Indeed, we found that classification accuracy in hippocampus and postcentral gyrus exhibit inverse patterns of association with FC during task acquisition. Whereas high LOC/postcentral FC and low LOC/MTL FC predicts high postcentral classification accuracy, we observe the opposite for hippocampus classification accuracy. This finding suggests that increased FC between visual and motor regions is reflective of heightened reliance on robust stimulus representations directly within motor cortex, and in turn less reliance on hippocampal representation. By contrast, increased FC between visual cortex and MTL reflects a later role for MTL in predicting upcoming stimuli, perhaps reflecting competing mechanisms for task performance that vary across subjects. Future research might elucidate the degree to which these functional connections track separable processes, and/or relate to distinct patterns of behavior reflecting differing roles of the hippocampus in tasks that benefit from—but do not require—robust prediction.

Moreover, the relationship between FC during task acquisition and subsequent stimulus representation suggests that the two measures may be reflective of a single learning process. To better understand this process, future work might examine the dynamics between FC and representation during learning. If, as we hypothesize, the increase in FC between visual and motor regions is directly related to the strength of regional representations, then we would expect that FC would shift alongside local representations during the learning process. In the current study, we measured FC and representation in isolation: FC was measured during the learning process, and representational structure was measured only after it was assumed that participants had robustly learned the task structure. In future work, additional measurements of representational structure while learning over an extended time scale could serve to clarify this relationship, as well as elucidate a trade-off between hippocampal and visuo-motor predictive representations across the learning process.

Limitations

Prior work on learning modular structure has found that graph information such as cluster identity is represented in the medial temporal lobe (MTL)18,42, an area also shown to encode distances between items19. In the current study, we did not observe any graph-dependent effects in the MTL, but instead in the lateral occipital cortex. A number of factors could give rise to this difference. First, prior studies, to our knowledge, did not show evidence that graph structure was directly encoded. Metrics such as distance and cluster identity pool over many trials, and may allow for greater power to detect graph-related effects in the presence of noise. Indeed, the MTL is prone to low signal-to-noise-ratio fMRI signals due to anatomy21. This can be improved by imaging with a reduced field of view, but at a limitation of our ability to infer graph effects elsewhere in the brain. Another possibility is that our analysis is constrained by the number of subjects in our analysis. While we observed robust decoding results in LOC as well as in postcentral gyrus, suggesting that we should be capable of decoding robust within-subject representational structure, the limited sample size in the current study allows the possibility of mis-estimation of effect sizes43. Future work may be well-served by increased sample size, as demonstrated by the more robust behavioral effects of graph structure typically observed in larger behavior-only studies16,17. Additionally, our task is relatively complex. Behavioral work on graph learning has frequently required participants to learn image orientations16,20 or to anticipate motor responses17 but here participants must learn a mapping between both images and motor responses. Indeed, participants are still learning the shape-motor response pairings up through the end of the second session, as response accuracy continues to increase (Fig. 2b, right). The complexity of the task, requiring participants to learn not only visual stimuli but the stimulus-motor response pairings as well, could require additional training for the MTL to detect temporal regularities. Given that plasticity in the lateral occipital cortex can be driven by demands of stimulus discriminability27, additional training may be needed for the hippocampus to extract temporal statistics.

Conclusion

In this study, we expand upon prior behavioral evidence in graph learning, where graphs with modular structures produce faster response times in learners, suggesting that those learners can form more accurate predictions. Learners were successfully able to learn and recall a mapping of 15 novel shapes to motor movements, combining behavioral paradigms from prior graph learning work. We observed that modular graphs enable more consistent and distinct representations when compared to a ring lattice, and in turn allow better prediction of what a participant is responding to using machine learning. This representational difference was present in the lateral occipital cortex, a region sensitive to properties of the visual stimuli used in this task, and was accompanied by increased dimensionality of representations, a feature known to accompany effective learning. Our results motivate future work to better understand the nature of these representational changes, as well as generalizability to broader sets of graph structures.

Citation diversity statement

Recent work in several fields of science has identified a bias in citation practices such that papers from women and other minority scholars are under-cited relative to the number of such papers in the field44,45,46,47,48,49,50,51,52. Here we sought to proactively consider choosing references that reflect the diversity of the field in thought, form of contribution, gender, race, ethnicity, and other factors. First, we obtained the predicted gender of the first and last author of each reference by using databases that store the probability of a first name being carried by a woman48,53. By this measure (and excluding self-citations to the first and last authors of our current paper), our references contain 13.18% woman(first)/woman(last), 7.12% man/woman, 26.79% woman/man, and 52.92% man/man. This method is limited in that (1) names, pronouns, and social media profiles used to construct the databases may not, in every case, be indicative of gender identity and (2) it cannot account for intersex, non-binary, or transgender people. Second, we obtained predicted racial/ethnic category of the first and last author of each reference by databases that store the probability of a first and last name being carried by an author of color54,55. By this measure (and excluding self-citations), our references contain 6.03% author of color (first)/author of color(last), 14.19% white author/author of color, 22.81% author of color/white author, and 56.98% white author/white author. This method is limited in that (1) names and Florida Voter Data to make the predictions may not be indicative of racial/ethnic identity, and (2) it cannot account for Indigenous and mixed-race authors, or those who may face differential biases due to the ambiguous racialization or ethnicization of their names. We look forward to future work that could help us to better understand how to support equitable practices in science.

Methods

Participants

Thirty-four participants (11 male and 23 female) were recruited from the general population of Philadelphia, Pennsylvania USA. All participants gave written informed consent and the Institutional Review Board at the University of Pennsylvania approved all procedures. All participants were between the ages of 18 and 34 years (M = 26.5 years; SD = 4.73), were right-handed, and met standard MRI safety criteria. Two of the thirty-four participants were excluded because they failed to learn the shape-motor associations (one failed to complete the pre-training; one answered at-chance during the second session), and one additional participant was excluded because we did not accurately record their behavior due to technical difficulties. Hence, all reported analyses used data from thirty-one participants.

Task

Stimuli

Visual stimuli were displayed on either a laptop screen or on a screen inside the MRI machine using PsychoPy v3.0.3 and consisted of fifteen unique abstract shapes generated by perturbing a sphere with sinusoids using the MATLAB package ShapeToolbox (Fig. 1a, left). Each shape consisted of two sinusoidal oscillations. Each oscillation could vary in amplitude (either 0.2 or 1), angle (0, 30, 60, or 90 degrees), frequency (2, 4, 8, 10, or 12 cycles/2π), and the second oscillation could also vary in phase relative to the first oscillation (0 or 45 degrees). Out of the set of 3200 permutations, we selected a set of 15 visually distinct shapes for the main experiment. A second set of 15 visually distinct shapes was selected from the same set for the localizer. The same set of stimuli was used for all participants, albeit in different order. Five variations of each shape were also created through scaling and rotating (scale/rotation pairs were 100%/0 degrees, 95%/5 degrees, 100%/10 degrees, 95%/15 degrees, and 100%/20 degrees) and the appearance of variations was balanced within task runs so as to capture neural activity invariant to these properties.

Each shape was paired with a unique motor response. Possible motor responses spanned the set of all fifteen one- or two-button chords on a five-button response pad (Fig. 1a, right). The five buttons on the response pad corresponded to the five fingers on the right hand: the leftmost button corresponded to the thumb, the second button from the left corresponded to the index finger, and so on. In addition to the shape cue, motor responses were explicitly cued by a row of five square outlines on the screen, each of which corresponded to a button on the response pad. Squares corresponding to the cued buttons turned red; for instance, if the first and fourth squares turned red, a correct response consisted in the thumb and ring finger buttons being pressed.

Participants were assigned one of two graph types: modular or ring lattice. Both graph types were composed of fifteen nodes and thirty undirected edges such that each node had exactly four neighbors (Fig. 1b, left). Nodes in the modular graph were organized into three densely connected five-node clusters, and a single edge connected each pair of clusters. Each node in a participant’s assigned graph was associated with a stimulus-response pair (Fig. 1b, right). While the stimulus and response sets were the same for all participants, the stimulus-response-node mappings were random and unique to each participant. This mapping was consistent across runs for an individual participant. The order in which stimuli were presented during a single task run was dictated by a random walk on a participant’s assigned graph; that is, an edge in the graph represented a valid transition between stimuli. No other meanings were ascribed to edges. Walks were randomized across participants as well as across runs for a single participant. Two constraints were instituted on the random walks: (1) all walks were required to visit each node at least ten times, and (2) walks on the modular graph were required to include at least twenty cross-cluster transitions. Subjects were not told about the underlying graph type and statistical organization.

Pre-training

Prior to entering the scanner, participants performed a “pre-training” session during which stimuli were displayed on a laptop screen and motor responses were logged on a keyboard using the keys “space”, “j”, “k”, “l”, and “;”. Participants were provided with instructions for the task, after which they completed a brief quiz to verify comprehension. To facilitate the pre-training process, shape stimuli were divided into five groups of three. First, participants were simultaneously shown a stimulus and its associated motor response cue, and then were asked to press the associated button(s) on the keyboard. Next, participants were shown the stimulus alone and asked to perform the motor response from memory. If they did not respond within 2 s, the trial was repeated. If they responded incorrectly, the motor response cue was displayed. Once the participant responded correctly, the same procedure was repeated for the other two stimuli in the subset. Then, participants were instructed to respond to a sequence of the same three stimuli, presented without response cues. The sequence was repeated until the participant responded correctly for 12 consecutive trials. This entire process was repeated for each three-stimulus subset. The three stimuli in a given subset were always chosen so that none were adjacent to one another in the graph dictating the order of the sequence used during the “Training” stage (see below).

Training

During the first scanning session, participants completed five runs each consisting of a “training” and “recall” block inside the MRI scanner. A training block consisted of 300 trials and was designed to elicit learning of graph structure as in ref. 17. During each trial, participants were presented with a visual stimulus consisting of a row of five square outlines, each of which contained the same abstract shape (Fig. 1c). Five hundred milliseconds later, one or two of the squares turned red. Using a five-button controller, participants were required to press the button(s) corresponding to the red squares on the screen. If a participant pressed the wrong button(s), the word “Incorrect” appeared on the screen. The following stimulus did not appear until the correct buttons were pressed. Participants were instructed to respond as quickly as possible. Sequences were generated via random walks on the participants’ assigned graphs.

Recall (session one)

At the end of each training run, participants completed 15 additional “recall” trials during which each shape was presented without a motor response cue, requiring participants to recall the correct response. This block was intended to measure stimulus-response association learning, as well as to prepare participants for the extended recall phase in session two. A brief instructional reminder was displayed before the start of the block. On each trial, the target stimulus was displayed in a single large square in the center of the screen. Participants were given 2 s to respond to each stimulus; if they did not respond within this time, the trial was marked as incorrect. If they responded incorrectly, the trial was marked as incorrect and the motor response cue was displayed below the shape for the remainder of the 2 s. If they responded correctly, the outline of the square containing the shape turned green. The orderings of all recall trials were also determined by walks on the participants’ assigned graphs. Trials were shown with a variable ITI between presentations. The ITIs in each block varied between 2 and 4 s (mean = 2.8 s, total 42 s) with an additional 6 s delay separating the end of the last recall trial in a block from the start of the first training trial in the subsequent run.

Recall (session two)

During the second session, participants completed eight recall runs, each comprised of four recall blocks of 15 trials, for 60 trials per run. These extended recall runs were designed to allow measurement of the neural patterns of activation associated with each of the stimuli. Each constituent recall block was identical to the recall blocks presented in session one. To generate a continuous walk for each run (across the four blocks), the first block in each run began on a randomly selected node, and each subsequent block in the run was rotated to be contiguous with where the last set ended. This process ensured that the full 60 trials within a block constituted a valid random walk, despite not being generated as a single walk. Blocks 1, 2, and 4 followed a random walk, whereas the third block followed a Hamiltonian walk, in which every node was visited exactly once. Across the full run of 60 trials, each node was required to be visited at least twice. If not, the walk for the run was discarded and a new walk was generated. For the modular graph, the Hamiltonian walk proceeded clockwise on runs 1, 3, 5 and 7 and counter-clockwise on runs 2, 4, 6, and 8. On the ring lattice, the Hamiltonian walk was not generated with any directionality. Hamiltonian and random walks were analyzed together.

Imaging

Acquisition

Each participant underwent two sessions of scanning, acquired with a 3T Siemens Magnetom Prisma scanner using a 32-channel head coil. In session one, two pre-task scans were acquired as participants rested inside the scanner, followed by five task scans and two post-task resting-state scans. In session two, two pre-task scans were acquired, followed by eight task scans, two post-task resting-state scans, and lastly an LOC localizer. Imaging parameters were based on the ABCD protocol56. Each scan employed a 60-slice gradient-echo echo-planar imaging sequence with 2.4 mm isotropic voxels, an iPAT slice acceleration factor of 6, and an anterior-to-posterior phase encoding direction; the repetition time (TR) was 800 ms and the echo time (TE) was 30 ms. A blip-up/blip-down fieldmap was acquired at the start of each session. A T1w reference image was acquired during session one using a MEMPRAGE sequence57 with 1.0 mm isotropic voxels, anterior-to-posterior encoding, and a GRAPPA acceleration factor of 358; the repetition time (TR) was 2530 ms and the echo times (TEs) were 1.69 ms, 3.55 ms, 5.41 ms, and 7.27 ms. A T2w reference image was acquired during session two using the variable flip angle turbo spin-echo sequence (Siemens SPACE59) with 1.0 mm isotropic voxels, anterior-to-posterior phase encoding, and a GRAPPA acceleration factor of 2; the repetition time (TR) was 3200 ms and the echo time (TE) was 565 ms. A diffusion-weighted scan was also acquired at the end of session two. The acquisition followed the ABCD protocol with 1.7 mm isotropic voxels, an iPAT slice acceleration factor of 3, and an anterior-to-posterior phase encoding direction; the repetition time (TR) was 4200 ms and the echo time (TE) was 89 ms, and b-values were 500 (6 directions), 1000 (15 directions), 2000 (15 directions), and 3000 (60 directions). The total acquisition time per scan was 7:29 min and consisted of 81 slices.

Preprocessing

Results included in this manuscript come from preprocessing performed using fMRIPrep 20.1.0 (Esteban et al.60,61; RRID:SCR_016216), which is based on Nipype 1.4.2 (Gorgolewski et al.62,63; RRID:SCR_002502).

Anatomical data preprocessing

A total of 1 T1-weighted (T1w) images were found within the input BIDS dataset. The T1-weighted (T1w) image was corrected for intensity non-uniformity (INU) with N4BiasFieldCorrection64, distributed with ANTs 2.2.0 [ref. 65, RRID:SCR_004757], and used as T1w-reference throughout the workflow. The T1w-reference was then skull-stripped with a Nipype implementation of the antsBrainExtraction.sh workflow (from ANTs), using OASIS30ANTs as target template. Brain tissue segmentation of cerebrospinal fluid (CSF), white-matter (WM), and gray-matter (GM) was performed on the brain-extracted T1w using fast [FSL 5.0.9, RRID:SCR_002823, ref. 66]. Brain surfaces were reconstructed using recon-all [FreeSurfer 6.0.1, RRID:SCR_001847, ref. 67], and the brain mask estimated previously was refined with a custom variation of the method to reconcile ANTs-derived and FreeSurfer-derived segmentations of the cortical gray-matter of Mindboggle [RRID:SCR_002438, ref. 68]. Volume-based spatial normalization to two standard spaces (MNI152NLin2009cAsym, MNI152NLin6Asym) was performed through nonlinear registration with antsRegistration (ANTs 2.2.0), using brain-extracted versions of both the T1w reference and the T1w template. The following templates were selected for spatial normalization: ICBM 152 Nonlinear Asymmetrical template version 2009c [Fonov et al.69, RRID:SCR_008796; TemplateFlow ID: MNI152NLin2009cAsym], FSL’s MNI ICBM 152 nonlinear 6th Generation Asymmetric Average Brain Stereotaxic Registration Model [Evans et al.70, RRID:SCR_002823; TemplateFlow ID: MNI152NLin6Asym].

Functional data preprocessing