Abstract

Influenced by non-stationary factors such as brain states and behavior, neurons exhibit substantial response variability even to identical stimuli. However, it remains unclear how the relative impact of these factors on neuronal variability evolves over time. To address this question, we designed an encoding model conditioned on latent states to partition variability in the mouse visual cortex across internal brain dynamics, behavior, and external visual stimuli. Applying a hidden Markov model to local field potentials, we consistently identified three distinct oscillation states, each with a unique variability profile. Regression models within each state revealed a dynamic composition of factors influencing spiking variability, with the dominant factor switching within seconds. The state-conditioned regression model uncovered extensive diversity in source contributions across units, varying in accordance with anatomical hierarchy and internal state. This heterogeneity in encoding underscores the importance of partitioning variability over time, particularly when considering the influence of non-stationary factors on sensory processing.


Introduction

The amount of information a sensory neuron carries about external stimuli is reflected in the reproducibility of its activity across repeated presentations of the same stimulus1. However, trial-to-trial variability, ubiquitous in the nervous system2, constrains the amount of sensory information available in single-trial neural responses to the stimulus. Moreover, the time course of this variability mirrors the highly non-stationary dynamics of the underlying neuronal processes3,4. For example, when animals actively explore their environment, the sensory cortex shows desynchronized activity that increases its responsiveness to stimuli5. Conversely, during periods of sleep or quiet wakefulness, cortical neurons tend to synchronize their activity, resulting in decreased sensitivity to external stimuli6. Dissecting these non-stationary dynamics is critical to understanding their role in information encoding and, ultimately, perception.

Even with well-controlled experiments and behavior-monitoring techniques7,8, understanding how neuronal variability changes over time is challenging9. This is further complicated by the high-dimensional interactions between the various sources of neuronal variability: external stimuli, behavior, and internal brain dynamics10. To address this complexity, a common strategy involves identifying meaningful temporal patterns and latent variables that capture the evolving dynamics of neural activity. These patterns, which capture internal brain dynamics, are typically referred to as “brain states”5,11,12,13.

Brain states, characterized by distinct patterns of neural activity and functional connectivity, play a pivotal role in shaping the dynamics of neuronal variability6,12, influencing how sensory information is processed4,14 and behaviors are executed5,11. For instance, during heightened attention, decreases in the correlations between the trial-to-trial fluctuations in the responses of pairs of neurons serve to enhance the signal-to-noise ratio of the entire population, improving behavior15. Likewise, several studies have shown that random fluctuations in the processing of sensory stimuli originate from rapid shifts in the animal’s arousal state11,16. Tightly linking internal brain dynamics to behavior, brain states serve as an ideal temporal framework to study the dynamics of neuronal variability.

Recently, researchers have leveraged advanced machine-learning tools to explain single-trial neural activity by incorporating extensive stimulus and behavioral features17,18,19. While these studies reveal the multi-dimensional nature of neuronal variability, they often assume that neuronal variability remains constant over time. To address this gap, several parallel lines of research have used latent dynamical models to study the temporal patterns of neuronal variability5,12,20,21. However, these studies have not explicitly explored the different sources contributing to variability, as it changes over time. Consequently, our understanding of how various sources dynamically contribute to the non-stationarity of neuronal variability remains limited (Fig. 1A).

A Neuronal variability is a combined effect of influences from independent stochastic processes including external sensory factors, behavior, and fluctuations in internal brain states. The resulting neuronal responses exhibit a variable temporal structure across trials and individual neurons. Capturing these temporal dynamics is a challenging problem and lies at the core of understanding the functional role of neuronal variability. B Top: Schematic of the experimental setup. Bottom: Neuropixels probes in six visual cortical areas simultaneously record local field potentials and spiking activity. A retinotopic sign map overlaid on the vasculature image guides area-specific targeting. C Anatomical hierarchy scores of the six visual areas recomputed from ref. 25. Studying variability along the visual hierarchy can reveal important insights about information propagation and encoding at each stage of signal processing.

Here, we present a comprehensive investigation of how internal and external factors collectively shape the time course of neuronal variability to influence sensory coding. We used the Allen Brain Observatory Visual Coding dataset, which comprises simultaneous recordings of local field potentials (LFPs) and spiking activity from hundreds of Neuropixels channels in multiple visual areas along the anatomical hierarchy22. As mice passively viewed natural movies, we applied Hidden Markov Models (HMMs)23 to LFP data extracted from six visual cortical regions to establish a global temporal framework of internal latent states. Quantifying various aspects of variability across individual trials and neuronal populations, we uncovered significant changes in neuronal variability across states. These findings indicated dynamic shifts in the efficiency of sensory processing over time. To disentangle the sources of non-stationary sensory processing, we designed a neural encoding framework conditioned on internal states to partition variability across three crucial factors: internal brain dynamics, spontaneous behavior, and external visual stimuli. Through this model, we quantified the time-varying contributions of these sources to single-trial neuronal and population dynamics. Our findings revealed that, even during persistent sensory drive, neurons dramatically changed the degree to which they were impacted by sensory and non-sensory factors within seconds. Additionally, we observed considerable diversity in neural encoding across visual cortical units, with the relative influence of these sources varying based on their anatomical location and cell type. Taken together, our results provide compelling evidence for the dynamic nature of sensory processing, while emphasizing the role of latent internal states as a dynamic backbone of neural coding.

Results

We analyzed the publicly available Allen Brain Observatory Neuropixels dataset, previously released by the Allen Institute22. This dataset comprises simultaneous recordings of spiking activity and local field potentials (LFPs) from six interconnected areas in the visual cortex of mice (n = 25) passively viewing a variety of natural and artificial visual stimuli (Fig. 1B). To characterize the dynamics of internal state fluctuations during sensory processing, we focused our analysis on data recorded during repeated presentations of a 30 s natural movie. We used a continuous stimulus to mitigate sudden transients in activity induced by abrupt changes in the visual stimuli. After applying quality control metrics, we retained, on average, 304 ± 83 (mean ± std) simultaneously recorded neurons per mouse, distributed across layers and areas (see Methods).

Previous studies22,24 demonstrated that the functional hierarchy of visual areas aligns with their anatomical organization25. This hierarchy places the primary visual cortex (V1) at the bottom, followed by rostrolateral (RL), lateromedial (LM), anterolateral (AL), posteromedial (PM), and anteromedial (AM) areas (Fig. 1C). Here, we consider this visual hierarchy as a first-order approximation of signal processing stages to study signal propagation and information encoding while crucially accounting for the non-stationarity in spiking variability that arises due to influences from fluctuating internal and external factors.

Identification of oscillation states from local field potentials

Internal brain states can vary without clear external markers, making their quantification challenging. To capture state changes associated with internal processes, we employ a definition of brain states derived from LFPs recorded invasively from six visual areas22. LFPs reflect aggregated sub-threshold neural activity and capture the highly dynamic flow of information across brain networks26. The spectral decomposition of LFPs reveals frequency bands that correlate with specific cognitive states27,28,29, sensory processing30,31,32,33,34, and behavior35,36,37. We found that LFPs in the mouse visual areas also showed a distinct frequency spectrum that changed over time, with dynamics strongly coupled to arousal-related behavioral variables (Fig. 2A). Accordingly, we reasoned that a latent state model could reflect the underlying brain dynamics by capturing the dynamic patterns of the LFP spectrum, such that each latent state reflects an oscillation state. To extract these oscillation states from LFPs in the visual areas, we applied hidden Markov modeling23,38,39 to the amplitude envelopes of LFPs filtered within distinct frequency bands (Fig. 2B, left panel): 3–8 Hz (theta), 10–30 Hz (beta), 30–50 Hz (low gamma), and 50–80 Hz (high gamma). This approach captured LFP power across the 3–80 Hz frequency range (Supplementary Fig. S1A) while aligning with the frequency boundaries observed in the spectral decomposition of LFPs. Finally, to capture laminar dependencies, the observations supplied to the HMM comprised LFPs from superficial, middle, and deep layers in all visual areas (one channel each from layer 2/3, layer 4, and layer 5/6; Fig. 2B (middle panel), Supplementary Fig. S1E, F).
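To make the HMM observation construction concrete, here is a minimal sketch of how band-limited amplitude envelopes could be stacked into a (time × channel·band) feature matrix. It assumes an ideal FFT band-pass combined with the FFT form of the Hilbert transform (chosen only to keep the example dependency-free; the authors' actual filter design is not specified here), and the helper names `band_envelope` and `hmm_observations` are hypothetical. In practice the envelopes would likely also be downsampled before fitting the HMM.

```python
import numpy as np

# Frequency bands named in the text (Hz).
BANDS = {
    "theta": (3.0, 8.0),
    "beta": (10.0, 30.0),
    "low_gamma": (30.0, 50.0),
    "high_gamma": (50.0, 80.0),
}


def band_envelope(x, fs, lo, hi):
    """Amplitude envelope of x restricted to [lo, hi] Hz (hypothetical helper).

    Ideal FFT band-pass plus FFT-based Hilbert transform: keep only the
    positive frequencies inside the band, double them, and invert. The
    magnitude of the resulting analytic signal is the band envelope.
    """
    n = x.shape[-1]
    spectrum = np.fft.fft(x, axis=-1)
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    mask = (freqs >= lo) & (freqs <= hi)  # positive-frequency band only (lo > 0)
    analytic = np.fft.ifft(spectrum * (2.0 * mask), axis=-1)
    return np.abs(analytic)


def hmm_observations(lfp, fs, bands=BANDS):
    """Stack band envelopes of every channel into a (time, channel * band) matrix.

    lfp: (n_channels, n_samples), e.g. one L2/3, one L4 and one L5/6 channel
    per visual area. Each feature column is z-scored.
    """
    feats = [band_envelope(lfp, fs, lo, hi) for lo, hi in bands.values()]
    obs = np.concatenate(feats, axis=0).T  # (n_samples, n_channels * n_bands)
    return (obs - obs.mean(axis=0)) / (obs.std(axis=0) + 1e-12)
```

For a pure 40 Hz sinusoid of amplitude 2, the low-gamma envelope is flat at 2 and the theta envelope is essentially zero, which is a quick sanity check of the band split.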

A Top: Local field potential (LFP) power modulations in V1 recorded from mice passively viewing a naturalistic movie. Bottom: Time course of running speed and pupil area during the same time period. B Schematic to identify oscillation states using LFPs. Discrete states are defined based on frequency-specific transients of LFPs from six visual areas. The hidden Markov model (HMM) uses Hilbert-transform amplitude envelopes in the theta (3–8 Hz), beta (10–30 Hz), low gamma (30–50 Hz), and high gamma (50–80 Hz) frequency ranges. C Top: Model comparison among HMMs over a range of latent states using three-fold cross-validation. The cross-validated log-likelihood (LL) estimate, normalized by the top eigenvalue of the state definition matrix, is reported for each mouse (hollow circles) along with across-subject averages (solid circles, n = 25 mice, error bars represent s.e.m.). For each mouse, the optimal number of states was identified as the point where the normalized LL was maximized. Final model selection was based on the majority rule across all mice. Bottom: Evaluation of state similarity (λ1) as the top eigenvalue of the state definition matrix. D Top: State posterior probabilities identified by the HMM. Bottom: LFPs from randomly selected channels from V1, displayed alongside their respective latent states over the same duration. E LFP power distribution in the three-state model. Shaded lines represent the state-specific z-scored power distributions in individual mice, and the solid black line represents the average across all mice (n = 25 mice). In state 1, or the high-frequency state, LFPs are dominated by high-frequency gamma oscillations. State 3, or the low-frequency state, has characteristic slow oscillations in the theta band. F Histogram of state dwell times in each trial across all states and all mice. G Average probability of observing 3-step or 2-step (inset) transition sequences to different states. Transition probabilities were calculated from observed sequences averaged across all mice (n = 25, error bars represent s.e.m.). Source data are provided as a Source Data file.

We found that LFP dynamics in the visual cortex consistently unfolded through three reliable oscillation states across all mice (see Methods; Fig. 2C, 3.08 ± 0.39 states, n = 25 mice, mean ± std). These states did not depend on stimulus type (Supplementary Fig. S4A, B), specific visual areas (Supplementary Fig. S1B, C), or layers (Supplementary Fig. S1E, F). The identity of the inferred states was also remarkably consistent across mice, each characterized by a distinct distribution of the power spectrum: a high-frequency state (SH), a low-frequency state (SL), and an intermediate state (SI). The high-frequency state is characterized by increased power in the low and high gamma bands, whereas slow oscillations in the theta band dominate the low-frequency state (Fig. 2D, E, Supplementary Fig. S2A). LFP power in the intermediate state is more uniformly distributed across frequencies.

These oscillation states demonstrated stable dynamics, as reflected by the large values along the diagonal of the transition matrix, ranging between 0.94 and 0.99 (Supplementary Fig. S3B). Dwell time in a state averaged 1.5 ± 0.14 s (mean ± sem, n = 3 states) (Fig. 2F), and the transition intervals between consecutive states (the interval around a transition during which the HMM posterior probability is < 80%) were significantly shorter than the dwell times, lasting only about 0.13 ± 0.006 s (mean ± sem). Additionally, direct transitions between the low- and high-frequency states were rare and typically passed through the intermediate state, as evident in both two- and three-step transition sequence probabilities (Fig. 2G). Consequently, mice spent only short durations in the intermediate state (0.97 ± 0.001 s, mean ± sem), while they spent the longest durations in the high-frequency state (1.92 ± 0.003 s, mean ± sem, \({p}_{{S}_{H},{S}_{I}}\) = 1.17e-167, \({p}_{{S}_{H},{S}_{L}}\) = 6.6e-79, \({p}_{{S}_{I},{S}_{L}}\) = 1e-11, one-way ANOVA, n = 25 mice). Notably, this state property depended on stimulus type (Supplementary Fig. S4C). During repeated presentations of the drifting grating stimulus, transitions between the extreme low- and high-frequency states were much faster and more likely (Supplementary Fig. S4E, F). This significantly reduced the time mice spent in the intermediate state (0.25 ± 0.0001 s, p = 1.5e-120, one-way ANOVA, Supplementary Fig. S4C). In contrast, in the absence of any stimulus, mice tended to spend longer durations in the intermediate state (1.16 ± 0.001 s, p = 3.5e-29, one-way ANOVA). We attribute these differences to the strong neural responses evoked by abrupt changes in the visual stimulus, such as the onset and offset of drifting gratings.
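The dwell-time, transition-matrix, and transition-interval statistics above can all be read off a decoded state sequence. The sketch below is one way to compute them, assuming a fixed bin width `dt` (in seconds) and a posterior array of shape (time, n_states); the function names are hypothetical, and the 0.8 threshold mirrors the < 80% posterior criterion in the text. Calling the functions once per trial truncates runs at trial boundaries, matching the per-trial dwell times in Fig. 2F.

```python
import numpy as np


def run_lengths(states):
    """Split a 1-D label sequence into (label, length) runs."""
    states = np.asarray(states)
    change = np.flatnonzero(np.diff(states)) + 1
    starts = np.concatenate(([0], change))
    ends = np.concatenate((change, [len(states)]))
    return [(int(states[s]), int(e - s)) for s, e in zip(starts, ends)]


def dwell_times(states, dt, n_states):
    """Dwell time (s) of every visit to each state."""
    out = {k: [] for k in range(n_states)}
    for label, length in run_lengths(states):
        out[label].append(length * dt)
    return out


def empirical_transition_matrix(states, n_states):
    """Row-normalised bin-to-bin transition counts (diagonal = self-persistence)."""
    counts = np.zeros((n_states, n_states))
    for a, b in zip(states[:-1], states[1:]):
        counts[a, b] += 1
    rows = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, rows, out=np.zeros_like(counts), where=rows > 0)


def transition_intervals(posterior, dt, threshold=0.8):
    """Durations (s) of periods in which no state's posterior reaches `threshold`."""
    uncertain = (posterior.max(axis=1) < threshold).astype(int)
    return [length * dt for label, length in run_lengths(uncertain) if label == 1]
```

For example, the sequence `[0, 0, 0, 1, 1, 2, 2, 2, 2]` sampled at `dt = 0.5` gives one visit per state lasting 1.5, 1.0 and 2.0 s, and a self-transition probability of 2/3 for state 0.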

Correlation between oscillation states and body movements

Brain state variations often exhibit strong correlations with the animal’s behavioral context40,41. Indeed, several studies have reported neural activity changes in the visual cortex associated with various behavioral features17,42,43. We therefore examined the behavioral correlates of the oscillation states, comparing pupil size, running speed, and facial, limb, and tail movements across different states (Fig. 3A–C). We found a strong association between behavioral movements and internal oscillation states across subjects (Fig. 3E). Notably, a shift to the high-frequency state corresponded closely with increased movement and pupil size (Fig. 3D), suggesting increased arousal in this state. Conversely, mice tended to be at rest in the low-frequency state and made only small movements in the intermediate state11,44,45.

A Face motion energy evaluated as the absolute value of the difference between consecutive frames. B Eye and pupil tracking. Tracking points were identified using a universal tracking model trained in DeepLabCut. C Animal pose estimation. Specific, visible body parts were tracked using a universal tracking model trained in SLEAP. D Example snippet of behavioral changes alongside the animal’s current oscillation state. SH: High-frequency state (green), SI: Intermediate state (blue), and SL: Low-frequency state (pink). E Comparison of the average movement of specific body parts across states (\({p}_{{S}_{H},{S}_{I,L}}\), pupil size: p = 2.8e-15, running: p = 2.0e-17, face motion: p = 6.3e-13, body center: p = 2.6e-18, left forelimb: p = 1.2e-13, left hindlimb: p = 4.9e-14, right hindlimb: p = 3.0e-11, tail start: p = 3.0e-16, tail end: p = 2.0e-11, n = 25 mice, one-way ANOVA). F Mutual information (MI) between behavioral variables and the inferred HMM states (mean ± sem, n = 25 mice). All statistical tests were performed using one-way ANOVA. Statistical tests in (E, F) were adjusted for multiple comparisons using the Bonferroni correction (***: p < 0.0001, **: p < 0.001, *: p < 0.05). Source data are provided as a Source Data file.

Several studies have used locomotion as an indicator of brain state to examine variations in visual encoding18,46. To quantify the relationship between internal oscillation states and different behavioral features, we calculated the mutual information (MI) between the states and each behavioral feature47. We found that changes in the oscillation states were tracked more faithfully by pupil size or facial movements (Fig. 3D), which showed significantly higher MI than all other behavioral measures (\({\mathrm{MI}}_{\mathrm{pupil}}\) = 0.12 ± 0.006, \({\mathrm{MI}}_{\mathrm{face}}\) = 0.1 ± 0.006, mean ± sem, n = 25 mice), including running (\({\mathrm{MI}}_{\mathrm{running}}\) = 0.08 ± 0.007, mean ± sem, n = 25 mice, Fig. 3F). This held true despite strong positive correlations between all behavioral variables (r = 0.4 ± 0.03, mean ± sem, n = 25 mice), and especially between running, facial movement, and pupil size (r = 0.6 ± 0.04, mean ± sem, n = 25 mice). Importantly, all behaviors associated with running (movements of the proximal end of the tail, left limbs, and body center) showed similar MI with the oscillation states. To further validate these results, we used HMMs to quantify behavioral states in individual mice, fitting separate models to pupil size, face motion, and running measures. Comparing these behavioral states with oscillation states revealed stronger correlations for pupil size and face motion than for running speed (Supplementary Fig. S5B; p = 0.0007, one-way ANOVA, n = 25 mice). We attribute these differences to the dissociation between pupil size and running speed, particularly when the pupil dilates while the mouse remains stationary (Supplementary Fig. S5A). These results suggest that facial movements, a voluntary behavior, reflect the underlying internal states almost as reliably as involuntary changes in pupil size48.
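The MI between a discrete state sequence and a continuous behavioral trace can be estimated in several ways, and the estimator used here (ref. 47) is not reproduced. As an illustration only, the sketch below assumes a simple plug-in estimator in bits after discretizing the behavioral variable into quantile bins; the bin count and the helper names `discretize` and `mutual_information` are hypothetical choices.

```python
import numpy as np


def discretize(x, n_bins=10):
    """Assign each sample of a continuous signal to one of n_bins quantile bins."""
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    return np.searchsorted(edges, x, side="right")


def mutual_information(a, b):
    """Plug-in mutual information (bits) between two discrete label sequences."""
    _, ai = np.unique(np.asarray(a), return_inverse=True)
    _, bi = np.unique(np.asarray(b), return_inverse=True)
    joint = np.zeros((ai.max() + 1, bi.max() + 1))
    np.add.at(joint, (ai, bi), 1)  # joint histogram of (a, b) pairs
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)  # marginal of a, shape (A, 1)
    pb = joint.sum(axis=0, keepdims=True)  # marginal of b, shape (1, B)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (pa @ pb)[nz])))
```

Usage would look like `mutual_information(hmm_states, discretize(pupil_area))`, where both arrays are hypothetical per-bin traces aligned in time. Plug-in MI is biased upward for small samples or many bins, so a shuffle control is a sensible companion to any such estimate.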

Neuronal variability changes across oscillation states and visual hierarchy

After defining the internal oscillation states and establishing their relation to behavior and arousal, we asked how spiking variability changes across these states. We observed distinct variations in population activity and synchronization levels across states (Fig. 4A–C). Consistent with previous observations of attentional effects15, increased spiking activity (av. % increase = 7.7 ± 1.6, mean ± sem, p = 6.3e-5, pairwise t-test, n = 25 mice) and decreased correlation (av. % decrease = 36.6 ± 3.4, mean ± sem, p = 1.3e-10, pairwise t-test, n = 25 mice) were typical of the high-frequency state. Moreover, the transition-like properties of the intermediate state were broadly consistent across neuronal properties (Fig. 4B, C) and behavior (Fig. 3E). Motivated by these findings, we evaluated three complementary measures of single-neuron variability: the percentage of variance shared within a population, spike timing variability, and spike count variability across trials.

A Raster plots (~10 s) showing the response of 25 units, each from V1 and AM, during two trials in which the mouse was in different states. Each row represents the activity of the same single neuron across the two trials. SH: High-frequency state (green), SI: Intermediate state (blue), and SL: Low-frequency state (pink). B State- and area-specific population activity, z-scored and averaged across all mice (\({p}_{{S}_{H},{S}_{I}}\) = 1.4e-05, \({p}_{{S}_{H},{S}_{L}}\) = 3.0e-07, \({p}_{{S}_{I},{S}_{L}}\) = 0.90, one-way ANOVA, n = 25 mice). Error bars represent s.e.m. C Average pairwise correlation between averaged neuronal population activity in different visual areas as a function of oscillation states (\({p}_{{S}_{H},{S}_{I}}\) = 1.5, \({p}_{{S}_{H},{S}_{L}}\) = 0.002, \({p}_{{S}_{I},{S}_{L}}\) = 0.002, one-way ANOVA, n = 25). Error bars represent s.e.m. D Population shared variance. Top: Separation of shared and independent variance using factor analysis (FA). FA partitions the spike count covariance matrix into shared and independent components. Bottom: Percentage of shared variance plotted against the anatomical hierarchy scores of the visual areas in each oscillation state, averaged across all units (one-way ANOVA: \({p}_{{S}_{H},{S}_{I}}\) = 9.6e-7, \({p}_{{S}_{H},{S}_{L}}\) = 1.3e-146, \({p}_{{S}_{I},{S}_{L}}\) = 1.2e-95; two-way ANOVA, states: F = 431.2, p = 1.5e-189, areas: F = 78.8, p = 3.3e-82, states × area: F = 3.3, p = 2.6e-4, n = 7609 units). E Neuronal variability across time, quantified using the coefficient of variation (CV). Top-left: Simulated distributions of inter-spike intervals (ISIs) for regular and Poisson-like firing. For a very regular spike train, a narrow peak in the ISI histogram corresponds to CV ≈ 0, whereas Poisson-like variability in the spike trains leads to an exponentially distributed ISI histogram with CV = 1. Top-right: Distribution of ISIs in each oscillation state over a 2.5 s range. Bottom: CV along the visual hierarchy (quantified as anatomical hierarchy scores) and across oscillation states, averaged across all units (one-way ANOVA: \({p}_{{S}_{H},{S}_{I}}\) = 4.9e-23, \({p}_{{S}_{H},{S}_{L}}\) = 3.9e-03, \({p}_{{S}_{I},{S}_{L}}\) = 2.8e-11; two-way ANOVA, states: F = 42.5, p = 3.6e-19, areas: F = 88.1, p = 4.5e-92, states × area: F = 4.8, p = 4.9e-7, n = 7609 units). F Neuronal variability across trials, quantified using the Fano factor (FF). Top-left: Evaluation of FF as an average of the FF ratio over non-overlapping windows of 150 ms with at least ten trials in each state. Top-right: Mean spike count versus variance over all times in each state for an example cell in V1. Bottom: FF along the visual hierarchy and across brain states, averaged across all units (one-way ANOVA: \({p}_{{S}_{H},{S}_{I}}\) = 2.8e-4, \({p}_{{S}_{H},{S}_{L}}\) = 2.4e-33, \({p}_{{S}_{I},{S}_{L}}\) = 3.5e-39; two-way ANOVA, states: F = 107.7, p = 7.5e-47, areas: F = 7.1, p = 9.9e-6, states × area: F = 0.6, p = 0.8, n = 5017 units). Pearson correlation with hierarchy scores, excluding RL: SH, r = -0.94, p = 0.02; SI, r = -0.43, p = 0.5; SL, r = -0.46, p = 0.43. G Information encoding along the visual hierarchy across all oscillation states, quantified using mutual information (MI). Top: For each trial, MI was evaluated between the population spike count matrix and a matrix of flattened movie frames at time points corresponding to each state using a matrix-based entropy estimator. Bottom: MI across the visual hierarchy and oscillation states averaged across all mice (pairwise t-test: \({p}_{{S}_{H},{S}_{I}}\) = 0.01, \({p}_{{S}_{H},{S}_{L}}\) = 7.3e-10, \({p}_{{S}_{I},{S}_{L}}\) = 9.3e-04; two-way ANOVA, states: F = 3.1, p = 0.04, areas: F = 2.7, p = 0.03, states × area: F = 0.02, p = 0.99, n = 25). Pearson correlation with hierarchy scores, excluding RL: SH, r = -0.9, p = 0.03; SI, r = -0.86, p = 0.06; SL, r = -0.81, p = 0.09. Error bars in D–G represent 95% confidence intervals. All statistical tests were adjusted for multiple comparisons using the Bonferroni correction (***: p < 0.0001, **: p < 0.001, *: p < 0.05). Source data are provided as a Source Data file.

Previous studies have shown that variability shared within a neuronal population can constrain information propagation between processing stages49,50,51,52. This is because shared variance within a population may not average out53,54, degrading the population’s coding capacity. To study how shared variability evolves across internal states, we used factor analysis (FA)55 to partition the spike count variability into its shared and independent components (Fig. 4D, top). Within a neuronal population, the shared component quantifies co-fluctuations in firing rates among individual neurons, while the independent component captures each neuron’s private, Poisson-like variability. The percentage of shared variance was then evaluated as the ratio between each neuron’s shared and total variance. Consistent with previous findings of stronger synchronization within a population during low-arousal states40,41, the percentage of shared variance was highest during the low-frequency state (Fig. 4D, bottom). In this state, fewer latent factors contributed to the observed patterns of variation than in the other states (number of FA components, SH = 21 ± 1, SI = 19 ± 1, SL = 16 ± 1, p = 1.8e-06, one-way ANOVA). Neurons within V1 showed a larger shared component than neurons within other areas. The percentage of shared variance decreased along the visual hierarchy in the high-frequency state (Pearson correlation with anatomical hierarchy score, r = -0.84, p = 0.03), while the trends were not significant in the intermediate and low-frequency states (SI: r = -0.58, p = 0.22; SL: r = -0.76, p = 0.08). Compared to higher visual areas, neurons in early visual areas are more strongly modulated by the temporal features of visual stimuli22,56. Thus, we attribute the decreasing trend along the visual hierarchy to the stronger modulation of neurons in lower visual areas by the temporal features of the natural movie, such as rapid variations in luminance or moving edges. This likely induces more temporally coherent activity within populations in lower visual areas than in higher visual areas, resulting in greater shared variance.
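The shared-variance percentage follows directly from a fitted FA model: if the spike count covariance is approximated as \(LL^\top + \Psi\), neuron i's shared variance is the i-th diagonal entry of \(LL^\top\) and its independent variance is \(\Psi_{ii}\). The sketch below fits FA by expectation-maximization in NumPy (the classic Ghahramani and Hinton updates) rather than calling a specific library, so that the computation is explicit; the function names, iteration count, and variance floor are assumptions, and choosing the number of factors (e.g., by cross-validation) is left out.

```python
import numpy as np


def factor_analysis(X, n_factors, n_iter=500, seed=0):
    """Maximum-likelihood factor analysis fitted by EM.

    X: (n_samples, n_neurons) spike counts. Returns loadings L
    (n_neurons, n_factors) and private variances psi (n_neurons,), such that
    cov(X) is approximated by L @ L.T + diag(psi).
    """
    Xc = X - X.mean(axis=0)
    S = Xc.T @ Xc / Xc.shape[0]  # sample covariance
    p = S.shape[0]
    rng = np.random.default_rng(seed)
    L = rng.standard_normal((p, n_factors)) * np.sqrt(np.diag(S).mean() / n_factors)
    psi = np.diag(S).copy()
    for _ in range(n_iter):
        # E-step: map from data to posterior latent mean, and latent second moment.
        beta = L.T @ np.linalg.inv(L @ L.T + np.diag(psi))
        Ezz = np.eye(n_factors) - beta @ L + beta @ S @ beta.T
        # M-step: update loadings, then private variances (floored for stability).
        L = S @ beta.T @ np.linalg.inv(Ezz)
        psi = np.maximum(np.diag(S - L @ beta @ S), 1e-6)
    return L, psi


def percent_shared_variance(L, psi):
    """Per-neuron shared variance as a percentage of total (shared + private)."""
    shared = np.sum(L ** 2, axis=1)
    return 100.0 * shared / (shared + psi)
```

Because \(LL^\top\) is invariant to rotations of the latent space, the per-neuron percentage is well defined even though the loadings themselves are not unique.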

To study variability in spike timing, we measured the histograms of inter-spike intervals (ISIs) and their associated coefficients of variation57. The coefficient of variation (CV) of each neuron was evaluated as the ratio between the standard deviation and the mean of its ISI distribution; the farther a neuron’s CV rises above 0, the more irregular its firing (Fig. 4E, top left). Evaluating CV in a state-specific manner, we found that neurons during the high-frequency state had broader ISI distributions than during other states (Fig. 4E, top right) and, accordingly, fired more irregularly in this state (Fig. 4E, bottom). Along the visual hierarchy, spike timing variability decreased irrespective of the internal state (Fig. 4E, bottom, SH: Pearson’s r with anatomical hierarchy score = -0.94, p = 0.006; SI: Pearson’s r = -0.97, p = 0.001; SL: Pearson’s r = -0.94, p = 0.006). Consistent with our expectation that V1 neurons more faithfully represent the features of the time-varying visual stimuli22,56,58,59, we found that the activity of V1 neurons was the most irregular.
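The CV computation is short enough to state in code. The sketch below assumes spike times in seconds and represents a state as a list of hypothetical `(start, end)` epochs; intervals are taken only between spikes inside the same epoch, so no ISI straddles a state change. How the original analysis handled epoch boundaries is not stated, so that choice is an assumption.

```python
import numpy as np


def _cv(isi):
    """std / mean of an ISI array; NaN if there are too few intervals."""
    if isi.size < 2:
        return np.nan
    return float(isi.std() / isi.mean())


def isi_cv(spike_times):
    """Coefficient of variation of inter-spike intervals over a whole spike train."""
    return _cv(np.diff(np.sort(np.asarray(spike_times, dtype=float))))


def state_isi_cv(spike_times, epochs):
    """CV from ISIs pooled over the epochs [(start, end), ...] of one state."""
    t = np.sort(np.asarray(spike_times, dtype=float))
    isis = [np.diff(t[(t >= start) & (t < end)]) for start, end in epochs]
    return _cv(np.concatenate(isis) if isis else np.empty(0))
```

A perfectly regular train gives a CV near 0, and a Poisson train (exponential ISIs) gives a CV near 1, matching the two reference cases in Fig. 4E.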

In visual system studies, trial-to-trial variability is commonly assessed using the Fano factor (FF)60, the ratio of the variance to the mean of the spike count across trials. An FF of 1 is consistent with a Poisson process, in which action potentials are generated randomly at a constant firing rate. To ensure the relevance of our analysis to the visual stimulus, we evaluated the FF of neurons with receptive field locations near the center of the screen57,61 (see Methods, Fig. 4F, top). Overall, single neurons in the visual cortex showed greater-than-Poisson variability, with FF averaging 1.21 ± 0.47 (mean ± std). Specifically, spike counts in the low-frequency state showed the largest trial-to-trial variability, suggesting that activity in this state is less modulated by visual stimuli. In contrast, trial-to-trial variability was lowest in the high-frequency state (Fig. 4F, bottom). Interestingly, neurons in RL showed the highest variability across visual areas (Fig. 4F, bottom), regardless of the animal’s internal state and the stimulus presented (Supplementary Fig. S6C, D). Excluding area RL from the analysis revealed a decreasing trend in trial-to-trial variability along the hierarchy in the high-frequency state (SH: Pearson’s r with anatomical hierarchy score = -0.94, p = 0.02; SI: Pearson’s r = -0.43, p = 0.5; SL: Pearson’s r = -0.46, p = 0.4).
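Following the Fig. 4F description (FF averaged over non-overlapping 150 ms windows, requiring at least ten trials in the state), a state-specific FF could be computed as in the sketch below. It assumes spike counts have already been binned into a (trials × windows) array aligned to the movie and that each trial/window pair carries a state label; the function name and the use of the unbiased (ddof=1) variance are assumptions.

```python
import numpy as np


def state_fano_factor(counts, states, target_state, min_trials=10):
    """Fano factor of one neuron in one state.

    counts: (n_trials, n_windows) spike counts in non-overlapping windows.
    states: (n_trials, n_windows) state label of each trial/window.
    For every window, variance/mean is computed across the trials that were in
    target_state; windows with fewer than min_trials such trials (or a zero mean)
    are skipped, and the remaining ratios are averaged.
    """
    ratios = []
    for w in range(counts.shape[1]):
        c = counts[states[:, w] == target_state, w]
        if c.size >= min_trials and c.mean() > 0:
            ratios.append(c.var(ddof=1) / c.mean())
    return float(np.mean(ratios)) if ratios else np.nan
```

Because the ratio is computed per window across trials, slow changes in firing rate driven by the movie do not inflate the estimate; only trial-to-trial scatter at a fixed movie time contributes.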

Based on these results, we hypothesized that lower shared variance and trial-to-trial variability in spiking activity during the high-frequency state would improve stimulus encoding (Fig. 4D, F). Meanwhile, the increased spike timing variability during this state could reflect better encoding of the temporal changes in the natural movie (Fig. 4E). We directly tested this hypothesis by evaluating the mutual information (MI) between the population spiking activity and the pixel-level information within each frame of the movie in a trial-by-trial manner in each state (Fig. 4G, top). As expected, spiking activity in the high-frequency state was more informative about the stimulus than in the low-frequency state, with V1 neurons encoding most of that information (Fig. 4G, bottom, Supplementary Fig. S6A). In line with the high FF measures (Fig. 4F), neurons in RL showed the lowest MI with the stimulus (see Discussion). Again, after omitting the low MI values in RL, pixel-level information decreased along the hierarchy during the high-frequency state (SH: Pearson’s r with anatomical hierarchy score = -0.90, p = 0.038; SI: Pearson’s r = -0.86, p = 0.06; SL: Pearson’s r = -0.81, p = 0.09). While these findings confirm the association between spiking variability and stimulus representation across states, they further suggest a loss of pixel-level information along the visual pathway.
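The text describes the MI estimate only as “matrix-based”. One widely used estimator of that kind is Rényi’s α-order matrix entropy computed on normalized kernel Gram matrices, with joint entropy taken from their Hadamard product. The sketch below implements that estimator as an illustration, assuming a Gaussian kernel with a median-distance bandwidth and α = 1.01; the kernel, bandwidth, α, and function names are all assumptions rather than the authors' settings. Rows of `X` (spike counts) and `Y` (flattened frames) are paired time bins.

```python
import numpy as np


def _normalized_gram(X, sigma=None):
    """Gaussian-kernel Gram matrix scaled to unit trace.

    sigma defaults to the median pairwise distance (an assumed heuristic).
    """
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    if sigma is None:
        med = np.median(d2[np.triu_indices(len(X), k=1)])
        sigma = np.sqrt(med) if med > 0 else 1.0
    K = np.exp(-d2 / (2.0 * sigma ** 2))
    return K / np.trace(K)


def renyi_entropy(A, alpha=1.01):
    """Matrix-based Renyi alpha-entropy (bits) of a unit-trace Gram matrix."""
    eig = np.clip(np.linalg.eigvalsh(A), 0.0, None)  # drop tiny negative round-off
    return float(np.log2(np.sum(eig ** alpha)) / (1.0 - alpha))


def matrix_mutual_information(X, Y, alpha=1.01):
    """I(X;Y) = S(A) + S(B) - S(A o B / tr(A o B)), with o the Hadamard product."""
    A = _normalized_gram(X)
    B = _normalized_gram(Y)
    AB = A * B
    AB /= np.trace(AB)
    return renyi_entropy(A, alpha) + renyi_entropy(B, alpha) - renyi_entropy(AB, alpha)
```

This estimator works directly on high-dimensional samples (hundreds of neurons, thousands of pixels) without density estimation; its cost is an eigendecomposition of a (time bins × time bins) matrix, so it scales with the number of bins rather than with dimensionality.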

In summary, the high-frequency state is characterized by lower population shared variance, lower trial-to-trial variability, and higher spike timing variability. During this state, variability trends showed strong anti-correlations with the anatomical hierarchy scores, such that V1 showed the highest variability across the visual areas in all three measures. This could reflect a strong influence of the temporal pattern of sensory drive in early areas, consistent with the observation that pixel-level information was highest in V1 and decreased along the hierarchy, particularly in the high-frequency state.

Internal state conditioned neural encoding model

Given the substantial influence of the internal oscillation states on spiking variability and sensory processing, we next sought to quantify the impact of different variability sources on neural dynamics during the different states. We built an encoding model conditioned on internal states to predict changes in single-trial neural activity in each visual area (Fig. 5A). The resulting framework allows for the quantification of state-specific contributions of stimulus and other source variables to the target single-trial neural activity. Inspired by the HMM-GLM framework62, the encoding model has two essential pieces: an HMM governing the distribution over latent LFP states (identified in the preceding section) and a set of state-specific predictors governing the weight distributions over the input features. However, unlike the original HMM-GLM, the state sequence is fixed in advance by the LFP-based HMM, and we do not re-train the HMM to optimize prediction. Finally, the model also produces a time-varying kernel (τ seconds long) for each feature, relating that variable to neural activity in the subsequent time bin (Fig. 5A, panel 3).
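The structure of the state-conditioned model can be illustrated with a linear sketch: a lagged design matrix supplies a τ-long kernel per feature (lags 1 to `n_lags` predict the next bin), and a separate regression is fitted on the time bins belonging to each pre-assigned HMM state. This corresponds to the linear population variant in Fig. 5B; the single-neuron models additionally pass the linear term through an exponential nonlinearity, which is not shown. The ridge penalty, the unpenalized intercept, and all function names are assumptions, and the decorrelation of features described for Fig. 5A is omitted.

```python
import numpy as np


def lagged_design(features, n_lags):
    """Row t holds features[t-1], ..., features[t-n_lags]; earlier lags are zero.

    features: (T, F). Column f * n_lags + l multiplies feature f at lag l + 1.
    """
    T, F = features.shape
    X = np.zeros((T, F * n_lags))
    for f in range(F):
        for l in range(n_lags):
            X[l + 1:, f * n_lags + l] = features[: T - l - 1, f]
    return X


def fit_state_conditioned(features, y, states, n_states, n_lags, ridge=1.0):
    """One ridge regression per state; states are fixed (not re-estimated).

    Returns (n_states, 1 + F * n_lags) weights; weights[s, 1:].reshape(F, n_lags)
    is the per-feature kernel in state s.
    """
    X = np.column_stack([np.ones(len(y)), lagged_design(features, n_lags)])
    penalty = ridge * np.eye(X.shape[1])
    penalty[0, 0] = 0.0  # leave the intercept unpenalised
    weights = np.zeros((n_states, X.shape[1]))
    for s in range(n_states):
        m = states == s
        weights[s] = np.linalg.solve(X[m].T @ X[m] + penalty, X[m].T @ y[m])
    return weights


def predict_state_conditioned(features, states, weights, n_lags):
    """Predict y[t] with the weights of the state active at bin t."""
    X = np.column_stack([np.ones(len(states)), lagged_design(features, n_lags)])
    return np.einsum("tk,tk->t", X, weights[states])


def explained_variance(y, y_hat):
    """Fraction of variance in y captured by y_hat."""
    return 1.0 - np.var(y - y_hat) / np.var(y)
```

Fitting the same design separately on subsets of features (stimulus only, behavior only, LFP only) and comparing held-out explained variance per state is one way to obtain the category-wise contributions summarized in Fig. 5C, E.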

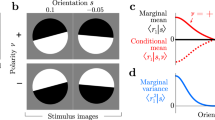

A State-conditioned encoding model to account for state-specific contributions of different sources of variability. Design matrices were constructed using decorrelated features to train state-specific regressors. SH: High-frequency state (green), SI: Intermediate state (blue), and SL: Low-frequency state (pink). B Regression models to study encoding in population and single-neuron models. Population models used a linear weighting of the input features, while in single-neuron models, the linear weighting was followed by a non-linear exponential projection. C–G Results from the single-neuron model. C Mean explained variance for different categories of input features, averaged across n = 3923 neurons and obtained using five-fold cross-validation. D (First panel) Neuronal activity, with neurons sorted vertically by a manifold embedding algorithm, Rastermap. (Panels 2–6) Prediction of neuronal activity (n = 350 units, best explained units across mice and areas) from the respective input feature categories. E Contributions of single-category models to explaining single-neuron variability during different oscillation states (n = 3923 neurons). SH: High-frequency state (green), SI: Intermediate state (blue), and SL: Low-frequency state (pink). F Explained variance of all units in each input feature category. G Neuronal clusters identified through unsupervised clustering of the final explained variance from single-category models for all units. H Distribution of neuronal clusters across areas. I Neuronal clusters derived from unsupervised clustering of state-specific explained variance from single-category models for all units, showing how feature encoding dynamics shift across oscillatory states. Box-plots in (C, E) show the first and third quartiles, the inner line is the median over all neurons (n = 3923), and the whiskers represent 1.5 × IQR (Tukey method).
Statistical tests in (C, E) were adjusted for multiple comparisons using the Bonferroni correction (***p < 0.0001, **p < 0.001, *p < 0.05). Source data are provided as a Source Data file.

Our model considers an extensive array of variables that we classify into three categories: stimulus, behavior, and internal brain activity (Fig. 5A, panel 1). Stimulus features include a set of higher-order (edges, kurtosis, energy, entropy) and lower-order (intensity, contrast) image features, and behavioral features include the complete set of movement variables determined in the previous section (see Fig. 3). As internal brain activity, the model includes both the averaged neuronal population activity from simultaneously recorded neighboring visual areas (that is, areas other than the target visual area) and the raw LFPs from different layers within the target area. The averaged neuronal population activity of an area is the mean activity across all units recorded in that area. Because model fits to linearly dependent input features are unreliable, we used QR decomposition to systematically orthogonalize the input features63 (see Methods).
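The exact orthogonalization procedure is given in the Methods, which are not part of this section. One common QR-based version, sketched here as an assumption, is sequential (Gram-Schmidt) orthogonalization: each centered column keeps only the variance not already explained by the columns before it, so the column order decides which feature "owns" shared variance.

```python
import numpy as np


def orthogonalize_features(X):
    """Sequentially orthogonalize the columns of X with a reduced QR decomposition.

    Column j of the result is the part of centered X[:, j] not explained by
    columns 0..j-1, so the column order decides which feature keeps shared variance.
    """
    Xc = X - X.mean(axis=0)          # centering makes orthogonal columns uncorrelated
    Q, R = np.linalg.qr(Xc)          # Xc = Q @ R, Q has orthonormal columns
    return Q * np.diag(R)            # column j becomes the Gram-Schmidt residual of Xc[:, j]


# Hypothetical example: two nearly collinear features
rng = np.random.default_rng(5)
x1 = rng.normal(size=1000)
x2 = x1 + 0.1 * rng.normal(size=1000)
X = np.column_stack([x1, x2])
Z = orthogonalize_features(X)
```

The first column is unchanged (apart from centering), while the second retains only the small component of `x2` that `x1` cannot explain.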

We derived two versions of the encoding model to study neural variability at multiple scales: a population model and a single-neuron model (Fig. 5B). The single-neuron model predicted the single-trial firing rate of the target neuron, while the population model predicted the single-trial averaged neuronal population activity in an area. In the population model, the predictors were linear regressors of the input features, and the model was fit using ridge regression to prevent overfitting (equation (16)). The single-neuron model accounted for the non-linearity associated with spike generation: the predictors were Poisson regressors of the input features, and the model was optimized by maximizing a regularized log-likelihood to prevent overfitting (equation (17)). To evaluate how well the model captured the target neural activity, we computed the five-fold cross-validated R2 (cvR2, equation (18)).
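Equations (16)–(18) are not reproduced in this section, so the sketch below is only an approximation of the two model types: scikit-learn's `Ridge` for the linear population model and `PoissonRegressor` (exponential link with an L2 penalty) for the single-neuron model, both scored with R² pooled over five held-out folds. The penalty values, unshuffled folds, and simulated data are assumptions.

```python
import numpy as np
from sklearn.linear_model import PoissonRegressor, Ridge
from sklearn.model_selection import KFold


def cv_r2(model, X, y, n_splits=5):
    """Cross-validated R^2 computed from held-out predictions pooled over folds.

    KFold without shuffling keeps contiguous blocks of time bins together.
    """
    y_hat = np.empty(len(y))
    for train, test in KFold(n_splits=n_splits).split(X):
        y_hat[test] = model.fit(X[train], y[train]).predict(X[test])
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - np.mean(y)) ** 2)


# Hypothetical data: orthogonalized features X, a population trace, and one unit's counts
rng = np.random.default_rng(2)
X = rng.normal(size=(2000, 6))
w = np.array([0.5, -0.3, 0.2, 0.0, 0.0, 0.1])
population = X @ w + 0.3 * rng.normal(size=2000)
unit_counts = rng.poisson(np.exp(0.5 + X @ w)).astype(float)

r2_population = cv_r2(Ridge(alpha=1.0), X, population)                       # linear + L2
r2_unit = cv_r2(PoissonRegressor(alpha=1e-3, max_iter=300), X, unit_counts)  # exp link + L2
```

The single-unit R² is lower even with the correct model, because Poisson spiking noise cannot be predicted from the inputs; this is one reason single-neuron cvR² values are typically below population values.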

Before quantifying state-specific variability from different sources, we assessed the effect of internal states on neuronal variability while controlling for other factors. Two separate ANCOVA tests examined how internal states influenced variability in single-unit and population-level activity (Supplementary Fig. S7A–D). Internal states significantly affected variability at both levels but contributed only 3% for single-unit activity and 6% for averaged population activity, indicating that additional factors are needed to explain most of the observed variability.
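The state term of an ANCOVA can be read as the variance the state factor explains beyond the covariates. The sketch below computes that quantity as the ΔR² between nested OLS models (covariates only vs. covariates plus state dummies). Which covariates entered the ANCOVA and which effect size underlies the 3% and 6% figures are not specified here, so both the covariates and the effect-size definition are assumptions.

```python
import numpy as np


def r2_ols(D, y):
    """In-sample R^2 of an ordinary least-squares fit of y on design matrix D."""
    beta, *_ = np.linalg.lstsq(D, y, rcond=None)
    resid = y - D @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


def state_effect(y, states, covariates):
    """Share of variance in y explained by state beyond the covariates (nested OLS models)."""
    base = np.column_stack([np.ones(len(y)), covariates])
    levels = np.unique(states)
    dummies = (states[:, None] == levels[None, 1:]).astype(float)  # first level is the reference
    return r2_ols(np.column_stack([base, dummies]), y) - r2_ols(base, y)


# Hypothetical example: activity dominated by covariates, with a small state offset
rng = np.random.default_rng(6)
n = 6000
states = rng.integers(0, 3, size=n)
covariates = rng.normal(size=(n, 2))
y = covariates @ np.array([1.0, 0.5]) + 0.4 * (states == 2) + rng.normal(size=n)
delta_r2 = state_effect(y, states, covariates)
```

In this simulation the state offset is real but small, so `delta_r2` lands at a few percent, the same order as the contributions reported above.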

State specific contributions to single-neuron variability

To systematically quantify the relative contributions of the different sources to single-neuron variability in each trial, we constructed a state-conditioned GLM framework. Since a GLM predicts the conditional intensity of the spiking response, we evaluated model performance against the rate functions of individual neurons, obtained by smoothing the spike counts with a Gaussian filter (s.d. 50 ms). To reliably identify their variability sources, neurons were further selected based on a minimum firing rate (>1 spike/s in all states) and receptive field location, in addition to the standard quality control metrics of the dataset22 (see Methods). After filtering, n = 3923 units remained across all mice and were analyzed using the GLM.
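The smoothing and firing-rate criteria above can be sketched as follows. The 10 ms bin width is a hypothetical value (the bin size is not stated in this section), and the receptive-field and quality-control criteria are omitted.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d

BIN_S = 0.01   # hypothetical 10 ms bin width; not stated in this section


def smoothed_rate(spike_counts, sd_s=0.05, bin_s=BIN_S):
    """Spike counts (units x bins) -> firing rate in spikes/s smoothed by a Gaussian (s.d. sd_s)."""
    return gaussian_filter1d(spike_counts / bin_s, sigma=sd_s / bin_s, axis=-1)


def passes_rate_criterion(spike_counts, states, min_rate=1.0, bin_s=BIN_S):
    """True for units whose mean rate exceeds min_rate spikes/s in every state."""
    keep = np.ones(spike_counts.shape[0], dtype=bool)
    for s in np.unique(states):
        keep &= spike_counts[:, states == s].mean(axis=1) / bin_s > min_rate
    return keep


# Hypothetical example: unit 0 fires ~5 spikes/s throughout; unit 1 is silent in state 2
rng = np.random.default_rng(3)
states = np.repeat([0, 1, 2], 1000)
counts = np.vstack([rng.poisson(0.05, 3000),
                    np.concatenate([rng.poisson(0.05, 2000), np.zeros(1000, dtype=int)])])
keep = passes_rate_criterion(counts, states)
rates = smoothed_rate(counts)
```

Requiring the criterion in every state (rather than on average) is what excludes the second unit, which would otherwise contribute an undefined rate target in one state.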

The model explained on average cv\({R}_{F}^{2}=26.7\pm 13.5\%\) (mean ± std, n = 3923 units) of the total variance of single-trial activity (Fig. 5C). To quantify the relative contributions of different source variables, we fit the model separately to the sub-group of features in each category. Across categories, stimulus features were the most predictive of single-neuron activity (cv\({R}_{S}^{2}=19.8\pm 13.6\%\), mean ± std), while internal brain activity was the least predictive (cv\({R}_{I}^{2}=11.6\pm 9.5\%\), mean ± std). Within the internal brain activity category, averaged population activity from neighboring visual areas explained nearly twice as much variance as LFPs from the same area (cv\({R}_{P}^{2}=11.1\pm 9.4\%\), cv\({R}_{LFP}^{2}=5.6\pm 6.4\%\), mean ± std, p = 3.2e−179, one-way ANOVA); we therefore analyzed these features separately. Across visual areas, single-neuron variability was best explained along the anterolateral pathway (LM, AM, and AL, cv\({R}_{F}^{2}=26.2\pm 0.9\%\), mean ± std, Supplementary Fig. S8, p = 2.5e-05, one-way ANOVA). Visualizing the model predictions with Rastermap18 revealed transient changes in the neural ensemble that were captured only by the stimulus features (Fig. 5D); the other features were less discriminating and captured only broad changes in the firing patterns. We also examined cell-type-specific contributions of the various factors. The explained variance of fast spiking (FS) units significantly exceeded that of regular spiking (RS) units (Supplementary Fig. S8H, p = 5.32e-11, one-way ANOVA), with behavior and internal activity contributing more to FS units. In contrast, stimulus features explained variance equally well in FS and RS units (Supplementary Fig. S8F–K, behavior: p = 9e-48, internal activity: p = 0, stimulus: p = 0.05, one-way ANOVA).

We further investigated how internal brain states influence neuronal encoding by analyzing the state-wise contributions of input features to single-neuron activity. Neuronal activity was most predictable during the high-frequency state (Fig. 5E, p = 5e-109, one-way ANOVA), where stimulus- and behavior-driven variability was highest (stimulus: p = 2.1e-70; behavior: p = 0.008, one-way ANOVA); both were lowest in the low-frequency state. Within internal activity features, population activity from neighboring visual areas contributed more during the high-frequency state (p = 0.02, one-way ANOVA), while LFPs from the same area played a more prominent role in the low-frequency state (p = 3.9e-28, one-way ANOVA). These findings are consistent with prior studies11,64,65 that highlight the role of slow oscillatory waves in synchronizing spiking activity during the low-frequency state (Fig. 4C, D), thereby disrupting stimulus encoding in this state.

The influence of different sources was not uniform across neurons; rather, individual neurons appeared to be driven by a diverse array of factors (Fig. 5F), suggesting heterogeneous coding mechanisms within the population. To investigate single-cell diversity in the visual cortex, we applied unsupervised clustering to each neuron’s encoding pattern, represented as a 5-element vector containing the cross-validated explained variance for each of the four feature categories (stimulus, behavior, same-area LFPs, and population activity from other visual areas) and the number of categories with >10% explanatory power (Supplementary Fig. S9A). Clustering these encoding profiles revealed six distinct groups (Fig. 5G, Supplementary Fig. S9B): one dominated by stimulus, one by behavior, one with high encoding of both stimulus and behavior, one influenced by both stimulus and averaged population activity from neighboring visual areas, one with high explained variance across all input feature categories (multi-source), and a final group of neurons for which no single feature category explained >10% of the variance. The two largest clusters, comprising units driven predominantly by stimulus features alone (29.5%) and multi-source units (30.2%), together made up 60% of all units (Fig. 5G). In contrast, units driven solely by behavior formed the smallest cluster, representing just 4.7% of all units, while 12.2% of units were jointly influenced by stimulus and behavior features.
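The 5-element encoding profile described above is straightforward to assemble; the clustering algorithm itself is not named in this section. The sketch below uses standardized profiles and k-means with six clusters purely as a stand-in, so the algorithm, scaling, and simulated cvR² values are all assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler


def encoding_profiles(cv_r2, threshold=0.10):
    """Build the 5-element profile: 4 category cvR^2 values + count of categories > threshold.

    cv_r2: array (n_units, 4) with columns stimulus, behavior, same-area LFP, other-area population.
    """
    n_strong = (cv_r2 > threshold).sum(axis=1, keepdims=True)
    return np.hstack([cv_r2, n_strong])


def cluster_units(profiles, n_clusters=6, seed=0):
    """Stand-in clustering: k-means on z-scored profiles (the paper's method may differ)."""
    Z = StandardScaler().fit_transform(profiles)
    return KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(Z)


# Hypothetical cvR^2 values for 500 units
rng = np.random.default_rng(4)
cv = np.clip(rng.normal(0.1, 0.08, size=(500, 4)), 0, None)
profiles = encoding_profiles(cv)
labels = cluster_units(profiles)
```

Including the count of strong categories as a fifth feature lets single-source and multi-source units separate even when their summed explained variance is similar.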

When examining the distribution of neuron clusters across visual areas, we found that the fraction of units best predicted by stimulus features peaked in V1 (39.6% of units in V1) and decreased along the hierarchy (Fig. 5H; LM: 33.8%, RL: 21%, AL: 24.8%, PM: 26%, AM: 21.3%; Pearson correlation with hierarchy score, \({r}_{p-RL}=-0.96\), \({p}_{p-RL}=0.01\)). Conversely, the influence of behavior increased along the hierarchy. The proportions of units driven by behavior alone (V1: 2.1%, LM: 5.3%, RL: 5.6%, AL: 5.8%, PM: 5.4%, AM: 5.9%; \({r}_{p}=0.84\), \({p}_{p}=0.03\)) and of units affected by both stimulus and behavior (V1: 7.7%, LM: 6.5%, RL: 17.9%, AL: 12.7%, PM: 16.7%, AM: 18.6%) more than doubled in most higher-order areas relative to V1. Lastly, the proportion of multi-source units also increased along the hierarchy (V1: 28%, LM: 28.8%, RL: 30%, AL: 32.6%, PM: 31.4%, AM: 32.4%; \({r}_{p}=0.85\), \({p}_{p}=0.03\)). These findings point to increasing functional diversity among neurons along the visual hierarchy. Consistent with this, neurons influenced by multiple factors, especially behavior, had larger receptive fields (Supplementary Fig. S9F, p = 0.0001, one-way ANOVA), in line with the known increase in receptive field size along the hierarchy66,67. Multi-source units also tended to have higher firing rates than neurons predominantly explained by a single factor (Supplementary Fig. S9D, p = \(4.3\times {10}^{-71}\), one-way ANOVA). Finally, the ratio of RS to FS units was highest in the stimulus-driven cluster (8:1), exceeding the overall ratio of 4:1 in the neuronal population (Supplementary Fig. S9E). In contrast, clusters driven by behavior or influenced by multiple factors had a lower ratio (2:1), suggesting that non-visual factors preferentially modulate FS units, whereas visual factors primarily modulate RS units.
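The correlations above relate a per-area measure to an anatomical hierarchy score, with RL omitted in the stimulus-driven case. The hierarchy scores themselves are not listed in this section, so the sketch below substitutes hypothetical placeholder ranks with an assumed area ordering; the resulting r illustrates the computation and is not the value reported above.

```python
import numpy as np
from scipy.stats import pearsonr

AREAS = ["V1", "LM", "RL", "AL", "PM", "AM"]
# Percent of units in the stimulus-driven cluster per area, as reported above
stim_fraction = {"V1": 39.6, "LM": 33.8, "RL": 21.0, "AL": 24.8, "PM": 26.0, "AM": 21.3}
# Placeholder ranks standing in for anatomical hierarchy scores (hypothetical, ordering assumed)
hierarchy_rank = {area: i for i, area in enumerate(AREAS)}


def hierarchy_correlation(values, scores, exclude=("RL",)):
    """Pearson r and p between a per-area measure and hierarchy scores, omitting `exclude`."""
    areas = [a for a in AREAS if a not in exclude]
    r, p = pearsonr([scores[a] for a in areas], [values[a] for a in areas])
    return float(r), float(p)


r, p = hierarchy_correlation(stim_fraction, hierarchy_rank)
```

With only five areas, a single outlying area shifts r substantially, which is why the treatment of RL is stated explicitly alongside each correlation.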

Given the shift in neural dynamics across brain states, we next examined how the factors contributing to single-unit activity varied between states. To do so, we performed a similar clustering analysis based on the state-specific explained variance of individual factors in the high- and low-frequency states (Fig. 5I, Supplementary Fig. S9H–K). This analysis revealed marked changes in the dominant factors contributing to single-unit variance across states. In the low-frequency state, a large proportion of units (53%) fell into the cluster in which no single feature category explained >10% of the variance. In the high-frequency state, however, around 40% of these same units shifted to being predominantly driven by stimulus features alone. Additionally, units influenced by multiple sources in the low-frequency state became more specialized in the high-frequency state. These results indicate a substantial state-dependent reorganization of neural representations in the visual cortex.
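Statements such as "around 40% of these same units shifted" come from comparing each unit's cluster in one state with its cluster in the other. A minimal sketch of that comparison is a row-normalized cross-tabulation; it assumes cluster labels have already been matched across the two state-specific clusterings, which separate runs of a clustering algorithm do not guarantee. The labels below are hypothetical.

```python
import numpy as np


def transition_table(labels_low, labels_high, n_clusters):
    """Row-normalized cross-tabulation of cluster membership between two states.

    Entry (i, j) is the fraction of units in low-state cluster i that fall into
    high-state cluster j. Assumes cluster labels were matched across states beforehand.
    """
    counts = np.zeros((n_clusters, n_clusters))
    np.add.at(counts, (np.asarray(labels_low), np.asarray(labels_high)), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)


# Hypothetical labels for six units (0 = weakly explained, 1 = stimulus, 2 = multi-source)
table = transition_table([0, 0, 0, 0, 2, 2], [1, 1, 0, 0, 1, 2], n_clusters=3)
```

Here half of the weakly explained units in the low-frequency state move to the stimulus cluster in the high-frequency state, which is read off as `table[0, 1]`.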

State specific contributions to population-level variability

Recent studies17,18 have reported a significant contribution of spontaneous movements to the emergent properties of brain-wide activity. To examine these effects on population dynamics within the visual cortex in a state-specific manner, we constructed a state-dependent linear regression model to predict the averaged neuronal population activity in each of the six visual areas. Using the same input features as the single-neuron model, this population model explained 53.4 ± 6.6% (mean ± std, n = 25 mice, Fig. 6A) of the variance in averaged neuronal population activity across the visual areas.

A Explained variance for different categories of input features, averaged across all mice and obtained using five-fold cross-validation. B Averaged population responses overlaid with model predictions from the respective input feature groups. The prediction traces were generated by concatenating the outputs of the three state-conditioned GLMs into a single continuous prediction. C Comparison of predictions in different (left) states and (right) visual areas before and after adding internal brain activity. Top: Cross-validated explained variance for each model. Bottom: Unique contribution of internal brain activity. D Contributions of single-category models to explaining the variance in averaged neuronal population activity in different states. E Contributions of same-area LFPs to explaining the variance in averaged neuronal population activity (left) in different visual areas and (right) from different layers. F–I Same as (E, left), but for other input features; one-way ANOVA ((F) p = 5.8e-5; (G) p = 6e-3; (H, I) p > 0.01). In all panels, boxplots show the first and third quartiles, the inner line is the median over 25 mice, and the whiskers represent 1.5 × IQR (Tukey method). All statistical tests were adjusted for multiple comparisons using the Bonferroni correction (***p < 0.0001, **p < 0.001, *p < 0.05). Source data are provided as a Source Data file.

To further explore the contributions of different source variables, we fit the model separately to each input feature category. Interestingly, internal brain activity had the most predictive power (cv\({R}_{I}^{2}\) = 41.0 ± 7.6%, mean ± std, p = 2.5e-11, one-way ANOVA, n = 25 mice), exceeding even the combined power of behavioral and stimulus features (cv\({R}_{B+S}^{2}\) = 30.1 ± 9.3%, mean ± std, p = 0.0005, one-way ANOVA, n = 25 mice). This contrasts with single-neuron activity, which was primarily driven by stimulus features. Stimulus features predicted the variance in averaged neuronal population activity better than behavioral features (cv\({R}_{S}^{2}\) = 22.8 ± 8.8%, cv\({R}_{B}^{2}\) = 18.9 ± 7.0%, mean ± std, p = 0.009, one-way ANOVA, n = 25 mice). Although bodily movements contributed significantly to both single-neuron and population-level activity, they were rarely the dominant factor driving visual cortical responses to natural movie stimuli. The successive gains in explanatory power as more sources are included are evident in the prediction traces shown in Fig. 6B. Notably, if single-neuron responses to external stimuli were completely independent, stimulus features would contribute negligibly to averaged population activity. The significant influence of stimulus features on population-level variability therefore suggests stimulus-related neuronal correlations within an area.

Adding internal brain activity to the combined model of behavioral and stimulus features increased the explained variance by almost 24 percentage points (\(\Delta {r}_{F-(B+S)}^{2}\) = 23.5 ± 10.2%, mean ± std, Fig. 6A). Because LFPs and population activity inherently carry information about stimulus and behavioral features, part of their contribution could be redundant; we therefore orthogonalized these internal variables against the stimulus and behavior variables68. This orthogonalization ensures that the internal variables capture only variance beyond what the stimulus and behavior variables can account for.
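A minimal sketch of this block-wise orthogonalization, assuming it amounts to regressing each internal variable on the stimulus and behavior features (plus an intercept) and keeping the residual. R² is computed in-sample here for brevity, whereas the reported values are cross-validated, and the simulated data are hypothetical.

```python
import numpy as np


def add_intercept(M):
    """Prepend a column of ones to a design matrix."""
    return np.column_stack([np.ones(len(M)), M])


def residualize(block, against):
    """Remove from each column of `block` its least-squares projection onto `against`."""
    A = add_intercept(against)
    beta, *_ = np.linalg.lstsq(A, block, rcond=None)
    return block - A @ beta


def r2(D, y):
    """In-sample R^2 of an OLS fit with intercept."""
    A = add_intercept(D)
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


# Hypothetical example: internal signals partly redundant with stimulus/behavior features
rng = np.random.default_rng(7)
n = 2000
stim_beh = rng.normal(size=(n, 3))
internal = stim_beh @ rng.normal(size=(3, 2)) + rng.normal(size=(n, 2))
y = stim_beh @ np.array([0.5, 0.2, 0.1]) + internal[:, 0] + 0.5 * rng.normal(size=n)

internal_orth = residualize(internal, stim_beh)
delta_r2 = r2(np.column_stack([stim_beh, internal_orth]), y) - r2(stim_beh, y)
```

Residualizing does not change the full model's fit (the column space is the same), but it makes the gain from the internal block, `delta_r2`, interpretable as variance that stimulus and behavior cannot account for.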

To understand this substantial increase in explained variance, we analyzed the contribution of internal brain activity in each state. These variables mainly increased predictability during the low-frequency state (\(\Delta {r}_{{S}_{L},F-(B+S)}^{2}\) = 39.0 ± 15.8%, mean ± std, Fig. 6C, left panel), where activity was poorly explained by the combined model of stimulus and behavioral features (cv\({R}_{{S}_{L},(B+S)}^{2}\) = 16.2 ± 11.5%, mean ± std, p = 8.3e-6, one-way ANOVA, n = 25 mice). The combined stimulus and behavior model best explained variability in the high-frequency state, and accordingly, activity in this state showed a smaller improvement in predictability when internal activity features were included (\(\Delta {r}_{{S}_{H},F-(B+S)}^{2}\) = 14.3 ± 4.6%, mean ± std, p = 2.3e-6, one-way ANOVA, n = 25 mice). Consistent with this, within-area LFPs and averaged population activity from neighboring visual areas contributed more to explaining activity in the low-frequency state (p = 4.5e-6, p = 8.2e-13, respectively; one-way ANOVA, n = 25 mice, Fig. 6D). Conversely, both stimulus and behavioral features had greater predictive power during the high-frequency state (p = 0.003, p = 0.01, respectively; one-way ANOVA, n = 25 mice).

Using the complete set of input features, we could predict about 61.1 ± 6.9% (mean ± std, n = 25 mice) of the variance in V1’s averaged neuronal population activity, the highest among all visual areas (Fig. 6F). Although including internal brain activity did not differentially affect predictability across visual areas (p = 0.12, one-way ANOVA, n = 25 mice, Fig. 6C, right panel), its sub-components revealed interesting differences. First, averaged population activity from neighboring areas explained more variance than within-area LFPs (p = 1.5e-9, one-way ANOVA, n = 25 mice, Fig. 6D). Second, the two sub-components showed opposite trends across areas: LFPs explained significantly more variance in V1 than in other visual areas (p = 1.9e-8, one-way ANOVA, Fig. 6E, left panel), whereas averaged population activity explained significantly more variance in AM (p = 6e-3, one-way ANOVA, Fig. 6G). Lastly, the predictive power of LFPs varied with cortical depth: layer 4 (L4) LFPs contributed more to the variance in averaged neuronal population activity than LFPs in other layers (\({p}_{{L}_{2/3},{L}_{4}}\) = 5e-4, \({p}_{{L}_{5/6},{L}_{4}}\) = 2.5e-4, one-way ANOVA, Fig. 6E, right panel).

When the influence of internal states was disregarded, stimulus features did not differ significantly in predictive power across areas (Fig. 6H, p = 0.13, one-way ANOVA), even at the level of single features (Supplementary Fig. S10A, B, p ∈ [0.33, 1]). However, state-specific analysis revealed pronounced differences in the high-frequency state (Supplementary Fig. S10D, F). In this state, different stimulus features also showed distinct predictive power, indicating heightened sensitivity to stimulus changes (Supplementary Fig. S10C, E). Specifically, higher-order stimulus features (edges, kurtosis, and energy) had greater predictive power than stimulus contrast and intensity. Finally, among behavioral features, facial movements made the largest contribution to averaged neuronal population activity (Supplementary Fig. S11A, C, p = 0.02, one-way ANOVA, n = 25 mice).

Discussion

Our observations provide a comprehensive description of the dynamics of spiking variability in the visual cortex as the brain traverses distinct oscillation states. We characterized this variability along three dimensions: variability across trials61, variability in spike times57, and shared variance within a population55. By using cortical LFPs to define internal oscillation states, we found that each state captured a distinct profile of spiking variability. Using the state fluctuations as a temporal backbone, we constructed a state-based encoding model to partition and evaluate the relative contributions of three sources of variability to visual cortical activity: visual stimulus, behavior, and internal brain dynamics. The model accounted for 27% of single-neuron variability and 53% of the variance in averaged population activity. Neurons in the visual cortex are influenced by a diverse array of factors, whose relative contributions differ across sub-populations. First, the combination of factors affecting variability changes spontaneously and rapidly over time in a state-dependent manner. Second, the contributions of each source further depend on cell type and anatomical location, becoming increasingly heterogeneous along the hierarchy. Lastly, while single neurons in the visual cortex are primarily driven by stimulus features, population activity is largely dominated by internal brain activity. Overall, our study underscores the importance of accounting for the constantly changing contributions of internal and external factors to stimulus representation at the level of individual units, enabling a deeper understanding of how neural responses are shaped dynamically in real time.

Identifying and locating the different sources influencing neural variability poses a significant challenge in systems neuroscience10,69. Previous research has emphasized the significance of internal brain activity in accounting for neuronal variability2,70,71. While these studies did not consider variability induced by externally observable task- and behavior-related variables, recent investigations have predominantly focused on this latter category of input features12,17,18,72. In this study, we adopt a comprehensive approach by integrating contributions from both internal brain activity and externally observable variables to understand neuronal variability.

We considered the contribution of internal brain activity in two ways. First, using brain states defined by internal oscillatory rhythms as a temporal framework, we associated the dynamics of spiking variability with these internal states. Second, we incorporated averaged neuronal population activity from each neighboring area and LFPs as input features into the state-based encoding model. These variables played a significant role in explaining neural variability, primarily contributing to activity in the low-frequency state. Consistent with previous findings70,71, internal variables explained ~40% of the total variance of averaged neuronal population activity within an area, exceeding the variance explained by the combined model of stimulus and behavioral features by about 11 percentage points (cv\({R}_{I}^{2}\) - cv\({R}_{B+S}^{2}\)). At the level of single neurons, contributions from internal brain activity, although relatively small, remained statistically significant, explaining around 11% of the total variance. However, this was about 8 percentage points (cv\({R}_{S}^{2}\) - cv\({R}_{I}^{2}\)) less than the variance explained by stimulus features alone.

Recent progress in behavioral video analysis, computational modeling, and large-scale recording techniques has highlighted the impact of movement-related variables on neural activity across the cortex17,18,72. Our observations are consistent with these findings. Behavior-related variables explained up to ~20% of the variance in averaged neuronal population activity and ~12% of single-neuron variability in the visual cortex. Moreover, the influence of behavior became more pronounced in the high-frequency state (Figs. 5E, 6D) and along the visual hierarchy, where it entrained a larger proportion of the neural population (Fig. 5H). However, our findings diverge from those of Musall et al.17, who found that uninstructed movements exerted a greater influence on V1 neural activity than a visual stimulus. We attribute this difference to three factors: first, our mice passively viewed the screen without engaging in a behavioral task; second, our naturalistic movie stimulus may engage a broader array of neurons than the static, flashed stimuli used in previous research; third, our recordings capture single-unit spiking activity rather than wide-field calcium signals. Beyond behavior itself, these differences underscore the importance of recording methodology, experimental conditions, and stimuli, and call for a closer examination of the specific factors influencing single-trial neural activity in diverse contexts.

Despite large variability in spiking activity, neuronal populations exhibit a remarkable ability to robustly encode information across different brain regions24,25,73. Our results suggest that this ability is state-dependent. A clear pattern emerges throughout our analyses: population dynamics during the high-frequency state are the most effective at representing stimulus information, while stimulus features only weakly modulate activity in other states (Figs. 4G, 5E, 6D). Several lines of study have indirectly supported this state dependence of information encoding, either through measures of task performance or through experiments under artificially induced anesthesia5,40,70,74; our findings directly quantify and describe this dependency. Specifically, spiking activity in the high-frequency state has the lowest shared variance, the lowest trial-to-trial variability, and the highest spike timing variability (Fig. 4). These characteristics of single-neuron activity may result from enhanced encoding of the temporal and spatial features of the time-varying natural movie stimulus during the high-arousal state (Figs. 5E, 6D). In contrast, the dominance of slow oscillatory activity in the low-frequency state, together with high shared variance, high trial-to-trial variability, and more regular firing, appears to reflect internal dynamics that disrupt accurate representation of stimulus information. We speculate that the observed association between heightened sensory encoding capacity and increased arousal during the high-frequency state may reflect an innate survival mechanism, leading mice to enhance visual information intake when highly alert or running.

Neurons in the visual cortex can be classified by several criteria, including their morphology, connectivity, developmental history, gene expression, intrinsic physiology, and in vivo encoding strategies. Single-cell RNA sequencing studies have revealed extensive cell-type diversity and the relationships among cell types within cortical circuits75,76. Different cell types have distinct functional roles, which are further shaped by their position within the cortical hierarchy and the specific inputs they receive across different layers77,78,79,80,81,82. Furthermore, different neuronal types are modulated by various factors, such as behavior17,18, top-down feedback78, and internal brain states83. Through unsupervised clustering of each neuron’s encoding pattern, we quantified encoding diversity and uncovered units with specialized properties within the visual cortex (Fig. 5G). Our findings indicate that neurons in the visual cortex are modulated by a diverse array of factors, with the relative contributions of these factors varying across states, hierarchical positions, layers, and cell types (Fig. 5H, I, Supplementary Fig. S9C, E). Additionally, we observed an increasing representation of pan-modulated (multi-source) units along the visual hierarchy (Fig. 5H), suggesting that while integrative processes may start as early as V1, a larger network of neurons becomes involved in them higher up the hierarchy. In line with recent studies suggesting that sensory-motor integration begins in early sensory areas18,46,84, we identified two distinct neuronal clusters likely involved in this process: one driven solely by behavior, and another influenced by both visual stimuli and behavioral factors (Fig. 5G). These findings emphasize the complex and dynamic nature of visual processing, shaped by a multitude of internal and external factors.

Given the hierarchical organization of the visual cortex22,25, the response variance of a sensory neuron can limit the amount of stimulus information available to downstream circuits51 (Fig. 4G). While past studies have shown the effects of pairwise correlations on the information encoded by a neuronal population49,50,53, a more comprehensive population-level perspective is essential for understanding the brain’s correlational structure12,85,86. Here, we used shared variance55 as a generalization of pairwise correlations between single neurons to an entire population. Notably, the percentage of shared variance decreased along the visual hierarchy (Fig. 4D). While this decline might imply the introduction of independent noise at successive stages of signal processing, it could alternatively result from the increased diversity of neurons influenced by factors other than the stimulus itself (Fig. 5H). The high shared variance in V1 can likely be attributed to V1 containing the largest proportion of neurons with strong, time-locked responses to the temporal dynamics of stimulus features (Fig. 5H)4,56. Our findings further support this notion: neurons in V1 showed high spike timing variability, likely corresponding to the variance induced by a constantly changing stimulus (Fig. 4E). Consistently, LFPs have a more pronounced influence on averaged population activity in V1 than in other visual areas (Fig. 6E). This suggests that the collective synaptic inputs to V1, reflected in its LFPs, may entrain a larger population in V1 than in other areas.
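Shared variance is commonly computed with factor analysis: for neuron i, the shared part is the diagonal entry of LLᵀ (loadings L) and the private part is the noise variance Ψᵢ, so the percent shared variance is 100·(LLᵀ)ᵢᵢ / ((LLᵀ)ᵢᵢ + Ψᵢ). The sketch below applies this with scikit-learn's `FactorAnalysis`; the number of factors (normally selected by cross-validation), the input binning, and the simulated populations are assumptions.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis


def percent_shared_variance(counts, n_factors):
    """Mean percent shared variance across neurons from a factor-analysis fit.

    counts: (n_samples, n_neurons). For neuron i, shared = sum_k L[k, i]^2 and
    private = Psi[i]; %sv_i = 100 * shared / (shared + private).
    """
    fa = FactorAnalysis(n_components=n_factors, random_state=0).fit(counts)
    shared = np.sum(fa.components_ ** 2, axis=0)   # diag(L L^T)
    private = fa.noise_variance_                   # diag(Psi)
    return float(100.0 * np.mean(shared / (shared + private)))


# Hypothetical populations driven by two latent factors with strong vs weak gain
rng = np.random.default_rng(8)
latents = rng.normal(size=(2000, 2))
loadings = rng.normal(size=(30, 2))
strong = latents @ loadings.T + rng.normal(size=(2000, 30))
weak = 0.2 * latents @ loadings.T + rng.normal(size=(2000, 30))
sv_strong = percent_shared_variance(strong, n_factors=2)
sv_weak = percent_shared_variance(weak, n_factors=2)
```

Unlike averaging pairwise correlations, this measure summarizes how much of each neuron's variance is captured by population-wide latent factors.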

Previous studies have indicated that trial-to-trial variability (Fano factor) increases as information propagates along the visual pathway from the retinal receptors to the primary visual cortex61,70,87. Our observations mirror this trend in the visual cortex when mice were exposed to full-field light flashes, with trial-to-trial variability increasing along the cortical hierarchy (Supplementary Fig. S6D). In response to natural movies, however, trial-to-trial variability decreased along the visual cortical hierarchy (Fig. 4F). We attribute this decrease to the heterogeneous content of natural movie frames: in awake mice, eye movements across the frame (even small saccades) could elicit more variable responses across trials in early visual areas, whose receptive fields are smaller88. Consistent with this, controlling for fluctuations in eye gaze when evaluating the Fano factor abolished the area-wise differences in trial-to-trial variability across all states (see Methods, Supplementary Fig. S6E–G). Lastly, it is important to note that the variability properties of neurons in the rostrolateral visual area (RL) do not always follow the hierarchy trends. This is especially true for measures related to stimulus encoding, such as trial-to-trial variability and mutual information (Figs. 4F, G, 5H, Supplementary Fig. S6C, D). We attribute this to two factors. First, because RL is located at the border of the visual and primary somatosensory (S1) cortices, the functional specialization of its neurons is likely more diverse than in other visual areas. This is reflected in our findings: RL had the smallest proportion of neurons influenced by stimulus features and the largest proportion of neurons with low explained variance (Fig. 5H). Second, because the retinotopic center of RL lies on the boundary between RL and S189, it is often difficult to target precisely90.
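The Fano factor is the across-trial variance of spike counts divided by their mean, per time bin. The gaze control used in the Methods is not described in this section; as a hypothetical stand-in, the sketch below computes Fano factors within groups of trials with similar (scalar) gaze position and averages them, which removes variance caused by gaze-dependent changes in the mean response.

```python
import numpy as np


def fano_factor(counts, min_mean=1e-9):
    """Fano factor across trials for each time bin; counts has shape (n_trials, n_bins)."""
    mean = counts.mean(axis=0)
    var = counts.var(axis=0, ddof=1)
    ff = np.full(mean.shape, np.nan)
    ok = mean > min_mean
    ff[ok] = var[ok] / mean[ok]
    return ff


def gaze_matched_fano(counts, gaze, n_groups=3):
    """Hypothetical gaze control: average the Fano factor over quantile groups of per-trial gaze."""
    edges = np.quantile(gaze, np.linspace(0, 1, n_groups + 1)[1:-1])
    group = np.searchsorted(edges, gaze, side="right")
    per_group = [fano_factor(counts[group == g]) for g in range(n_groups)]
    return np.nanmean(per_group, axis=0)


# Hypothetical example: Poisson spiking whose rate depends on each trial's gaze offset
rng = np.random.default_rng(9)
n_trials, n_bins = 300, 50
gaze = rng.uniform(-1, 1, size=n_trials)
rate = 5.0 * np.exp(gaze)[:, None] * np.ones((1, n_bins))
counts = rng.poisson(rate)
ff_raw = np.nanmean(fano_factor(counts))
ff_matched = np.nanmean(gaze_matched_fano(counts, gaze))
```

For pure Poisson spiking the Fano factor is 1; the raw estimate is inflated by gaze-driven rate changes, and matching trials on gaze brings it back toward 1.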

The dynamic nature of neuronal variance over time has been consistently demonstrated in theoretical and empirical analyses3,10,91. Here, we specifically quantify the magnitude of stimulus-driven neuronal variability associated with internal states. Our findings show that, during passive viewing, mice persist in a given state for 1.5 ± 0.1 s on average, indicating that state-dependent neuronal variability changes within seconds (Fig. 2F). The state sequences reveal a smooth transition between distinct variability profiles, passing through an intermediate state (Figs. 2G, 4). Moreover, each state is characterized by a unique composition of sources influencing neuronal variability (Figs. 5E, I, 6D). These rapid shifts in source composition across states arise from complex interactions among non-stationary source variables, which collectively shape the dynamics of neuronal variability.
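The mean dwell time quoted above is the average length of uninterrupted runs of the same decoded state. A minimal sketch, assuming a decoded HMM state sequence sampled at a fixed bin width `dt` (both hypothetical inputs here); runs truncated by the start or end of a recording are counted as they are.

```python
import numpy as np


def mean_dwell_times(states, dt):
    """Mean duration (in seconds) of uninterrupted runs of each state.

    states: 1D sequence of integer state labels, one per time bin.
    dt: bin width in seconds.
    """
    states = np.asarray(states)
    change = np.flatnonzero(np.diff(states)) + 1      # first bin of each new run
    starts = np.concatenate(([0], change))
    ends = np.concatenate((change, [states.size]))
    labels = states[starts]                           # state of each run
    durations = (ends - starts) * dt
    return {int(s): float(durations[labels == s].mean()) for s in np.unique(labels)}


print(mean_dwell_times([0, 0, 0, 1, 1, 2, 2, 2, 2, 0], dt=0.5))
# {0: 1.0, 1: 1.0, 2: 2.0}
```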

These findings offer additional insight into the dynamic properties of neuronal variability and provide important constraints for theoretical models of stimulus-driven variability. First, the dynamically changing source composition indicates that the responsiveness of a neuronal population to sensory input varies over time, challenging the assumption that the stimulus makes a constant contribution to the responsiveness of a sensory system. Second, accounting for the distinct variability profiles associated with different internal states can capture the non-stationary stimulus-encoding capability of neuronal populations. Lastly, using state fluctuations as a temporal framework can deepen our understanding of the network dynamics that contribute to neuronal variability.

Several studies have demonstrated that the structural connectivity of neural networks directly influences neural dynamics92. Theoretical studies of biologically plausible models show that neural computations are shaped by the interplay between recurrence and changes in dimensionality, enabling flexible computation across different tasks93,94. This flexibility in local circuits has been closely linked to the emergence of metastable states, in which neural activity remains in quasi-stable patterns before abruptly transitioning to new ones95. Metastable states have provided a valuable framework for studying how structural connectivity relates to variability and noise correlations, which in turn influence the state-dependent processing of sensory information96,97,98,99,100. While these studies have suggested important neural mechanisms, they have yet to be tested against state-dependent changes in local circuit dynamics, which could represent a more global mechanism underlying the dynamic variability observed across brain areas. To address this gap, our future work will investigate the state-specific functional organization of cortical circuits and how it adapts to different internal states and external stimuli, with a particular focus on the mechanisms that drive state transitions and their impact on sensory processing.

In this study, we used the controlled yet dynamic structure of the passive viewing design to trace neuronal variability across discrete oscillation states in awake mice. While our discrete characterization of brain states provides a straightforward interpretation of neural activity, recognizing the possibility of continuous state changes (such as a continuum of pupil size or network activity) is vital for exploring the full spectrum of neural responses in awake, behaving animals. Additionally, fully characterizing neuronal variability and its influence on information processing in the cortex will require investigating neural activity during active tasks. Recent studies have shown that a subject’s engagement during an active task varies drastically from trial to trial, playing out through multiple interleaved strategies62,101,102, and other work has shown that changes in these strategies can be predicted by the animal’s arousal level, suggesting a direct link between brain states and task performance11,103. While the tools in this study can help identify variables that promote task engagement, they do not elucidate the mechanisms underlying state transitions. Understanding these dynamics will require a thorough investigation of unit activity in subcortical regions of the brain.

Our observations, combined with existing studies on spiking variability, suggest that cortical state acts as a key determinant of the variability seen in the cortex. By offering a comprehensive view of this variability, we have been able to directly study both the sensory and non-sensory aspects of neuronal responses in the visual cortex. It is evident that spiking variability in the cortex transcends mere ‘neural noise’, and explaining neuronal variability by partitioning it into different origins can help us understand its influence on information representation and propagation in the brain, and ultimately resolve its computational contribution to behavior.

Methods

Data collection

The data analyzed in this paper are part of the publicly released Allen Institute Brain Observatory Neuropixels dataset (n = 25 mice)22. Recordings were made with Neuropixels probes104 comprising 960 recording sites, of which either 374 (“Neuropixels 3a”) or 383 (“Neuropixels 1.0”) were configured for recording. The electrode sites closest to the tip formed a checkerboard pattern on a 70 μm wide × 10 mm long shank. For each recording, six Neuropixels probes were inserted into the brain to depths between 2 mm (shallowest) and 3.5 mm (deepest). These requirements ensured adequate recordings of the cortex while minimizing brain damage. To ensure that the probes recorded from functionally related cells in each visual area, retinotopic centers were determined and targeted accordingly. Targeting of the cortical visual areas (AM, PM, V1, LM, AL, and RL) was guided by the probe’s angle of approach and by the functional area boundaries obtained from imaging. All procedures were performed according to protocols approved by the Allen Institute Institutional Animal Care and Use Committee under an assurance with the NIH Office of Laboratory Animal Welfare.

The Open Ephys GUI was used to collect all electrophysiological data. Signals from each recording site were split into a spike band (30 kHz sampling rate, 500 Hz high-pass filter) and an LFP band (2.5 kHz sampling rate, 1000 Hz low-pass filter). Spike sorting followed the methods outlined in Siegle, Jia, et al.22. Briefly, the spike-band data were subjected to DC offset removal, median subtraction, filtering, and whitening before the Kilosort2 MATLAB package (https://github.com/MouseLand/Kilosort) was applied for spike-time identification and unit assignment18. Detailed information about the complete experimental design can be found in Durand et al.105.
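The preprocessing steps listed above can be sketched as follows. This is not the Kilosort2 or Allen pipeline code: the high-pass cutoff, the filter order, and the use of a single global ZCA whitening matrix (Kilosort2's own whitening differs in detail) are illustrative assumptions.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def preprocess_spike_band(data, fs=30000.0, highpass_hz=300.0, eps=1e-6):
    """Sketch of spike-band preprocessing before spike sorting.

    data: (n_samples, n_channels) raw spike-band voltage.
    highpass_hz, the filter order, and eps are illustrative assumptions.
    """
    x = np.asarray(data, dtype=float)
    x = x - x.mean(axis=0)                          # DC offset removal per channel
    x = x - np.median(x, axis=1, keepdims=True)     # subtract the across-channel median at each sample
    sos = butter(3, highpass_hz, btype="highpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, x, axis=0)                 # zero-phase high-pass filtering along time
    cov = np.cov(x, rowvar=False)                   # channel-by-channel covariance
    evals, evecs = np.linalg.eigh(cov)
    whitener = evecs @ np.diag(1.0 / np.sqrt(evals + eps)) @ evecs.T  # ZCA whitening matrix
    return x @ whitener


rng = np.random.default_rng(2)
raw = rng.normal(size=(20000, 8)) + np.arange(8) * 100.0   # synthetic channels with DC offsets
white = preprocess_spike_band(raw)
```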

Statistics and data analyses

Python was used as the primary programming language for all analyses, with SciPy106 and Scikit-learn107 as the main analytical tools. Unless otherwise specified, error bars denote the standard error of the mean. For comparisons across units (n = 7609 units after QC filtering and n = 3923 units after RF filtering), mice (n = 25), or states (n = 3), we used a one-way ANOVA for Gaussian-distributed metrics and the rank-sum test for non-Gaussian-distributed metrics. In cases of high subject-to-subject variability, we used a paired t-test. Bonferroni correction was applied for multi-group comparisons. To evaluate similarity to the previously established anatomical visual hierarchy in mice25, we computed the Pearson correlation between each measured variable and the anatomical hierarchy score (V1: −0.50, RL: −0.14, LM: −0.13, AL: 0.00, PM: 0.12, AM: 0.29) and used it to assess the significance of the correlation.
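The hierarchy correlation can be computed directly from the scores listed above. A minimal sketch with SciPy's `pearsonr`; the per-area values passed in are hypothetical, and the `bonferroni` helper simply multiplies a p-value by the number of comparisons, capped at 1.

```python
from scipy.stats import pearsonr

# Anatomical hierarchy scores listed above (ref. 25).
HIERARCHY_SCORE = {"V1": -0.50, "RL": -0.14, "LM": -0.13, "AL": 0.00, "PM": 0.12, "AM": 0.29}


def hierarchy_correlation(area_values):
    """Pearson r and p between a per-area metric and the hierarchy score."""
    areas = sorted(HIERARCHY_SCORE)
    scores = [HIERARCHY_SCORE[a] for a in areas]
    values = [area_values[a] for a in areas]
    r, p = pearsonr(scores, values)
    return float(r), float(p)


def bonferroni(p, n_comparisons):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p * n_comparisons)


# Hypothetical metric that increases linearly with hierarchy score.
example = {area: 3.0 * score + 1.0 for area, score in HIERARCHY_SCORE.items()}
r, p = hierarchy_correlation(example)
```

With only six areas, the test has few degrees of freedom, so a strong correlation is needed for significance.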

Visual stimulus

Custom scripts based on PsychoPy (Peirce, 2007) were used to create visual stimuli, which were then presented on an ASUS PA248Q LCD monitor. The monitor had a resolution of 1920 x 1200 pixels and a refresh rate of 60 Hz, measuring 21.93 inches wide. The stimuli were shown monocularly, with the monitor positioned 15 cm from the right eye of the mouse. The visual space covered by the stimuli was 120° × 95° before any distortion occurred. Each monitor used in the experiment was gamma corrected and maintained a mean luminance of 50 cd/m2. To accommodate the mouse’s close viewing angle, spherical warping was applied to all stimuli to ensure consistent apparent size, speed, and spatial frequency across the monitor from the mouse’s perspective.

Receptive field mapping

Receptive field locations were mapped with small Gabor patches flashed in random order at one of 81 locations across the screen. Each Gabor patch was a drifting grating (3 directions: 0°, 45°, 90°) with a temporal frequency of 2 Hz and a spatial frequency of 0.04 cycles/degree, within a 20° circular mask. The receptive field (RF) map of an individual unit is defined as the average 2D histogram of spike counts at each of the 81 locations, where each pixel corresponds to a 10° × 10° square.
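A minimal sketch of the RF map definition above, assuming each Gabor presentation is tagged with its integer grid position (x, y) on the 9 × 9 grid of 81 locations and with the unit's spike count on that presentation; these input names are hypothetical.

```python
import numpy as np


def receptive_field_map(spike_counts, x_index, y_index, n_grid=9):
    """Average spike count at each grid location (NaN where nothing was shown).

    spike_counts: spike count of one unit on each presentation.
    x_index, y_index: integer grid position (0..n_grid-1) of each presentation.
    """
    spike_counts = np.asarray(spike_counts, dtype=float)
    x_index = np.asarray(x_index, dtype=int)
    y_index = np.asarray(y_index, dtype=int)
    total = np.zeros((n_grid, n_grid))
    n_shown = np.zeros((n_grid, n_grid))
    np.add.at(total, (y_index, x_index), spike_counts)  # summed counts per location
    np.add.at(n_shown, (y_index, x_index), 1)           # presentations per location
    rf = np.full_like(total, np.nan)
    np.divide(total, n_shown, out=rf, where=n_shown > 0)
    return rf


rf = receptive_field_map([2, 4, 6], x_index=[0, 0, 8], y_index=[0, 0, 8])
```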

Stimuli for passive viewing

The mice were exposed to several types of stimuli during the experiment, including drifting gratings, natural movies, and a flashes stimulus. The gratings stimulus comprised drifting gratings in 4 directions, repeated 75 times, at a temporal frequency of 2 Hz. The natural movies were divided into 30 s clips, and each clip was repeated 30 times as a block. To introduce variability, 20 additional repeats were presented with temporal shuffling. Lastly, the flashes stimulus consisted of a series of dark or light full-field images with luminance = 100 cd/m2.

Quality control metrics

All single-neuron analyses (Figs. 4, 5) were performed on neurons that met three quality control thresholds: presence ratio (>0.9), inter-spike interval violations (<0.5), and amplitude cutoff (<0.1). Details of these metrics can be found in Siegle, Jia, et al.22. These metrics prevent the inclusion of noisy units in the reported analyses, accounting for both the physical characteristics of the units’ waveforms and potential spike-sorting errors. For single neurons analyzed in Fig. 5, a stricter presence-ratio threshold (>0.95) was applied to avoid inflated prediction accuracy. Additionally, analyses in Figs. 4F and 5 were restricted to neurons with receptive fields positioned at least 20 degrees from the monitor’s edge, to allow a meaningful comparison of the relative contributions of different sources of variability.
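The inclusion criteria above reduce to Boolean masks over per-unit metrics. In this sketch the thresholds come from the text, whereas the monitor extent passed to `rf_away_from_edge` is a hypothetical input that must be expressed in the same degree coordinates as the RF centres.

```python
import numpy as np


def passes_qc(presence_ratio, isi_violations, amplitude_cutoff, min_presence_ratio=0.9):
    """Mask of units meeting the three quality-control thresholds."""
    presence_ratio = np.asarray(presence_ratio)
    isi_violations = np.asarray(isi_violations)
    amplitude_cutoff = np.asarray(amplitude_cutoff)
    return (presence_ratio > min_presence_ratio) & (isi_violations < 0.5) & (amplitude_cutoff < 0.1)


def rf_away_from_edge(rf_azimuth, rf_elevation, azimuth_limits, elevation_limits, margin_deg=20.0):
    """True for units whose RF centre is at least margin_deg from every monitor edge.

    azimuth_limits, elevation_limits: (min, max) monitor extent in degrees (hypothetical inputs).
    """
    az = np.asarray(rf_azimuth, dtype=float)
    el = np.asarray(rf_elevation, dtype=float)
    return ((az - azimuth_limits[0] >= margin_deg) & (azimuth_limits[1] - az >= margin_deg)
            & (el - elevation_limits[0] >= margin_deg) & (elevation_limits[1] - el >= margin_deg))


# Fig. 5 analyses use the stricter presence-ratio threshold.
mask_fig5 = passes_qc([0.96, 0.92], [0.1, 0.1], [0.05, 0.05], min_presence_ratio=0.95)
```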

Local field potentials and time-frequency analysis

Prior to constructing the hidden Markov model (HMM), we identified appropriate frequency ranges in the LFPs. To evaluate their power spectra, we applied a short-time Fourier transform (STFT) to single channels using a Hann window of ~800 ms, with consecutive windows overlapping by ~400 ms. Z-scoring the power spectrum at each frequency revealed LFP modulations in distinct frequency bands (Fig. 2B). Further informed by the literature on LFPs in the mouse cortex33,34,108,109,110, we selected the following frequency ranges from the LFP spectrum: 3–8 Hz (theta), 10–30 Hz (beta), 30–50 Hz (low gamma), and 50–80 Hz (high gamma). To filter the LFPs, we constructed four IIR Butterworth filters of order 11, one for each frequency range. Finally, the envelopes of the filtered LFP signals, obtained via the Hilbert transform, were supplied as observations to the HMM.
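A minimal sketch of the spectral steps above using SciPy. The ~800 ms Hann window with ~400 ms overlap, the four bands, the order-11 Butterworth design, and the Hilbert envelope follow the text; zero-phase filtering with `sosfiltfilt` and the second-order-section form are assumptions. Note that `butter` with `btype="bandpass"` and N = 11 yields a filter of overall order 22.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt, stft

BANDS = {"theta": (3, 8), "beta": (10, 30), "low_gamma": (30, 50), "high_gamma": (50, 80)}


def zscored_spectrogram(lfp, fs):
    """STFT power of one channel, z-scored across time at each frequency."""
    nperseg = int(round(0.8 * fs))                 # ~800 ms Hann window
    noverlap = nperseg // 2                        # ~400 ms overlap
    f, t, z = stft(lfp, fs=fs, window="hann", nperseg=nperseg, noverlap=noverlap)
    power = np.abs(z) ** 2
    sd = power.std(axis=1, keepdims=True)
    sd[sd == 0] = 1.0                              # guard against constant frequency rows
    return f, t, (power - power.mean(axis=1, keepdims=True)) / sd


def band_envelopes(lfp, fs, order=11):
    """Hilbert envelope of the LFP in each band (the HMM observations)."""
    envelopes = {}
    for name, (lo, hi) in BANDS.items():
        sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
        filtered = sosfiltfilt(sos, lfp)           # zero-phase band-pass filtering
        envelopes[name] = np.abs(hilbert(filtered))
    return envelopes


fs = 1000.0
t = np.arange(0, 10, 1 / fs)
env = band_envelopes(np.sin(2 * np.pi * 6 * t), fs)   # a 6 Hz sine should load on theta
```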

As part of the observations provided to the HMM, we included LFPs recorded at different cortical depths. To determine the layer of each LFP channel, we first estimated the depth of the middle layer of the cortical column. Similar to previously described methods24,111, we applied current source density (CSD) analysis to the LFPs within the 250 ms interval following presentation of the flash stimulus. To compute the CSD, we calculated each recording site’s average evoked (stimulus-locked) LFP response (s) and duplicated the uppermost and lowermost LFP traces. Next, we smoothed the signals across sites as shown in equation (1), where r is the coordinate perpendicular to the layers and h is the spatial sampling distance along the electrode. Finally, the CSD map was obtained as the second spatial derivative of the LFP response (equation (2), Supplementary Fig. S1D, right). The CSD map approximately dissociates current sinks from current sources, which appear as downward and upward deflections, respectively, in the density map.
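As an illustration of the smoothing and differentiation steps, the sketch below assumes a common three-point spatial smoothing (weights 1/4, 1/2, 1/4) applied after duplicating the boundary traces, followed by a discrete second spatial difference with spacing h. The smoothing weights are an assumption, and the tissue conductivity and the sign convention (conventional CSD is −σ times the second derivative) are omitted, so sinks may appear with either sign depending on convention.

```python
import numpy as np


def second_derivative_csd(evoked, h):
    """Second spatial derivative of a smoothed, trial-averaged evoked LFP.

    evoked: (n_channels, n_times) stimulus-locked average LFP, ordered by depth.
    h: spacing between channels along the probe.
    Smoothing weights (1/4, 1/2, 1/4) are an illustrative assumption.
    """
    evoked = np.asarray(evoked, dtype=float)
    padded = np.vstack([evoked[:1], evoked, evoked[-1:]])   # duplicate top and bottom traces
    smoothed = padded.copy()
    smoothed[1:-1] = 0.25 * padded[:-2] + 0.5 * padded[1:-1] + 0.25 * padded[2:]
    # Central second difference; output keeps the original channel count.
    return (smoothed[:-2] - 2.0 * smoothed[1:-1] + smoothed[2:]) / h ** 2


# A potential that is linear in depth has zero second derivative away from the edges.
linear = np.arange(6, dtype=float)[:, None] * np.ones((1, 3))
csd_linear = second_derivative_csd(linear, h=1.0)
```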

To facilitate visualization, we smoothed the CSD maps with 2D Gaussian kernels (σx = 1, σy = 2). We identified the location of the input layer by the first appearance of a sink within 100 ms of stimulus onset. We took the channel at this location as the center of the middle layer (L4) and labeled the eight channels above and below it as L4. All channels above the middle layer were classified as superficial layers (L2/3), and all channels below the middle layer but above the white matter were classified as deep layers (L5/6). Lastly, for each mouse, we validated the layer classification against the spectral decomposition of the LFPs across depth (Supplementary Fig. S1D).
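The layer assignment can be sketched as follows. Assumptions not stated in the text: channels are ordered from superficial to deep, sinks are negative in the CSD, σ = 2 applies along channels and σ = 1 along time, a sink "appears" when the smoothed CSD first falls below half of the strongest sink in the 0 to 100 ms window, and the white-matter boundary is supplied by hand. All function and parameter names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def find_input_channel(csd, times, sigma=(2.0, 1.0), window_s=0.1, frac=0.5):
    """Channel index of the first current sink within window_s after stimulus onset.

    csd: (n_channels, n_times) CSD map, channels ordered superficial to deep.
    times: (n_times,) time relative to stimulus onset in seconds.
    """
    smoothed = gaussian_filter(np.asarray(csd, dtype=float), sigma=sigma)
    in_window = (times > 0) & (times <= window_s)
    seg = smoothed[:, in_window]
    if seg.min() >= 0:
        raise ValueError("no sink (negative CSD) found in the window")
    crossed = (seg <= frac * seg.min()).any(axis=0)   # bins where some channel crosses threshold
    first_bin = np.flatnonzero(crossed)[0]
    return int(np.argmin(seg[:, first_bin]))          # deepest sink at that moment


def label_layers(n_channels, input_channel, white_matter_start=None, half_width=8):
    """Label channels as L2/3, L4 (input channel +/- half_width), L5/6, or WM."""
    idx = np.arange(n_channels)
    labels = np.full(n_channels, "L5/6", dtype=object)
    labels[idx < input_channel - half_width] = "L2/3"
    labels[np.abs(idx - input_channel) <= half_width] = "L4"
    if white_matter_start is not None:
        labels[idx >= white_matter_start] = "WM"
    return labels


times = np.linspace(-0.05, 0.25, 301)
csd = np.zeros((40, times.size))
csd[18:23, (times >= 0.03) & (times <= 0.06)] = -1.0   # synthetic early sink centred on channel 20
center = find_input_channel(csd, times)
labels = label_layers(40, center, white_matter_start=36)
```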

Identification of internal oscillation states—hidden Markov model