Abstract

In this work we propose an approach for implementing time-evolution of a quantum system using product formulas. The quantum algorithms we develop have provably better scaling (in terms of gate complexity and circuit depth) than a naive application of well-known Trotter formulas, for systems where the evolution is determined by a Hamiltonian with different energy scales (i.e., one part is “large” and another part is “small”). Our algorithms generate a decomposition of the evolution operator into a product of simple unitaries that are directly implementable on a quantum computer. Although the theoretical scaling is suboptimal compared with state-of-the-art algorithms (e.g., quantum signal processing), the performance of the algorithms we propose is highly competitive in practice. We illustrate this via extensive numerical simulations for several models. For instance, in the strong-field regime of the 1D transverse-field Ising model, our algorithms achieve an improvement of one order of magnitude in both the system size and evolution time that can be simulated with a fixed budget of 1000 arbitrary 2-qubit gates, compared with standard Trotter formulas.


Introduction

Time-dynamics simulation (TDS) of quantum systems has long been considered a natural application where quantum computers can outperform classical ones. A quantum algorithm for TDS approximates the time-evolution operator \({e}^{-itH}\) by a sequence of elementary gates. The gate complexity of this decomposition is at least linear in t in general1,2, and several methods have been proposed that achieve (or nearly achieve) that complexity3,4,5,6. These methods differ in the way they implement time evolution, have different overheads, and scale differently with the desired accuracy.

Arguably the most straightforward TDS algorithm is the use of (Trotter) product formulas7. This approach does not use ancilla qubits3,4,5, nor does it involve potentially costly operations (such as block encodings or reflections about ancillary quantum states)6, or any classical pre-processing (such as searching for classically optimized circuits8,9). Moreover, product formulas can be more efficient in practice when simulating systems with hundreds of qubits for times that scale with the size of the system10. This may be due to overheads that some asymptotically better algorithms incur, and to the fact that product formula methods scale better in practice than naive bounds suggest, with dependence on commutators of terms that can naturally take advantage of spatial locality11,12.

Product formulas split the evolution under a Hamiltonian \(H={\sum }_{k}{h}_{k}\) into a product of the form \({\prod }_{jk}{e}^{-i{t}_{jk}{h}_{k}}\) for some times \({t}_{jk}\). This provides an efficient simulation if each elementary exponential can be implemented efficiently. Observe that the choice of the summands that compose H is not unique. A common practice when simulating lattice systems is to represent the Hamiltonian as a sum of Pauli terms \(H={\sum }_{k}{\alpha }_{k}{P}_{k}\) and choose \({h}_{k}={\alpha }_{k}{P}_{k}\).

Starting from a Hamiltonian generating time evolution that can be implemented with a quantum circuit with error independent of the evolution time (e.g., a Hamiltonian diagonal in the computational basis, or diagonalisable with a circuit that does not scale with the evolution time), we ask, “What is the effect of adding a perturbation to the Hamiltonian on the complexity of implementing the TDS algorithm with product formulas?” This motivates going into the interaction picture and approximating the time-ordered operator by a product of exponentials.

This technique allows us to introduce several algorithms that take advantage of the structure of the Hamiltonian to achieve better error scaling than standard product formulas. This approach can leverage knowledge of the gates that can be efficiently implemented in practice on a particular quantum computer, so we call this family of algorithms Trotter Heuristic Resource Improved Formulas for Time-dynamics (THRIFT). The interaction-picture approach has been studied in ref. 13, where the time-ordered operator is approximated through a Taylor expansion of the Dyson series, implemented with the linear combination of unitaries (LCU) method rather than with product formulas. That method achieves a gate complexity of \(O(\alpha T\,{{\rm{polylog}}}(T\alpha /\epsilon ))\) for a simulation for time T with error ϵ. Although, theoretically, the LCU method has better scaling with evolution time and simulation error than product formulas, it has also been shown empirically that product-formula approaches can perform better in practice10. Furthermore, the LCU method uses ancilla qubits and involves implementing both an operation that coherently performs the constituent unitaries conditioned on the ancilla and a reflection about a certain ancilla state. Our approach uses no ancillas and only involves evolution according to terms of the Hamiltonian, as it directly implements the time evolution using product formulas, achieving a gate complexity of \(O(\alpha T{(\alpha T/\epsilon )}^{1/(k-1)})\) for arbitrary fixed k.

Using Lieb–Robinson bounds, ref. 14 introduces a protocol for quantum simulation of lattice models that resembles the THRIFT algorithm described in Eq. (7), but where the splitting of the Hamiltonian is decided based on the support of its summands, not on the energy scales involved in the Hamiltonian. The cost of this method is nearly optimal as a function of system size as well as evolution time and approximation error. However, in practice, this strategy may perform worse than straightforward application of product formulas11.

After completing an initial version of this work, we became aware of ref. 15. There, Omelyan et al. derive a set of optimised fourth-order product formulas for a Hamiltonian H = HA + HB by adding additional sub-steps to standard Trotter formulas and numerically minimizing the error arising from commutators (see Supplementary Note 3 for details). The generalisation to Hamiltonians with an arbitrary number of terms is described in ref. 7. Of particular interest for the present work is an optimised formula valid in the regime α ≪ 1 of a Hamiltonian H = H0 + αH1 (denominated “Omelyan’s small A” in ref. 7). In Results we show that for the 1D and 2D transverse-field Ising and the 1D Heisenberg models, THRIFT outperforms this optimised formula for all the values of α we consider. On the other hand, Omelyan et al.’s optimised small A formula proves to be the most efficient algorithm in the thop/U ≪ 1 regime of the Fermi–Hubbard model. This is mainly due to the high cost of implementing the terms arising in the THRIFT decomposition of this model.

In this work, we generate an efficient product-formula decomposition for time evolution of a quantum system. This decomposition has provably better scaling of both gate complexity and circuit depth than a naive application of well-known product formulas, for systems where the evolution is determined by a Hamiltonian with different energy scales (i.e., in which one part is “large” and another part is “small”, with the size of the small part quantified by a parameter α). This situation is ubiquitous in effective models describing physical systems and can occur, for example, in systems with strong short-range interactions and weaker long-range interactions. Furthermore, weak (or strong) external perturbations can be used to push a system out of equilibrium and to extract its dynamical properties. Crucially, the efficiency of the algorithm depends on the characteristics of the quantum computer itself, namely, the set of gates that are easily implementable with an error independent of the circuit depth. This is particularly useful in a noisy intermediate-scale quantum (NISQ) computer, where some types of gates can be implemented more easily than other nominally similar gates. As these formulas provide better gate complexity than naive product formulas in many instances, we expect them to be useful beyond NISQ applications as well.

In “Results”, we introduce THRIFT and show that its error scales as \(O({\alpha }^{2}{t}^{2})\), an improvement by a factor of α compared with standard first-order product formulas. We show that kth-order THRIFT achieves error scaling of \(O({\alpha }^{2}{t}^{k+1})\), compared to \(O(\alpha {t}^{k+1})\) for standard kth-order formulas. We also show (in Supplementary Note 1) that general product formulas based directly on products of the summands of the Hamiltonian cannot achieve better scaling than \({\alpha }^{2}\). To improve the α-scaling for higher-order formulas, we introduce the Magnus-THRIFT and Fer-THRIFT algorithms in “Results” and Supplementary Note 2, respectively, which achieve an effective \(O({\alpha }^{k+1}{t}^{k+1})\) error scaling, for any \(k\in {\mathbb{N}}\). These results are valid for partitions of the Hamiltonian where implementing a piece of the perturbation together with the dominant Hamiltonian does not incur an error larger than \(O({\alpha }^{2})\), as we discuss below.

To complement our theoretical results that show favourable asymptotic scaling of the algorithms, in Results we carry out numerical experiments comparing several product formulas with THRIFT. We analyse the error as a function of the total evolution time and the scale of the small part of the Hamiltonian α for three different models: the transverse-field Ising model in one (1D) and two dimensions (2D), the 1D Heisenberg model with random fields, and the 1D Fermi–Hubbard model. For the spin models studied, the THRIFT approach generates better product formulas in terms of gate complexity (measured as the number of CNOT or arbitrary 2-qubit gates to achieve a target error) for a wide range of evolution times and α. We stress that, despite THRIFT formulas having been derived assuming a small α, our numerical results show that in the case of the transverse-field Ising and Heisenberg models, THRIFT formulas outperform standard product formulas also for intermediate and large values of α. Despite the simplicity of such models, they correctly describe the relevant physics of a wide range of low-dimensional magnetic materials and, in the presence of frustration, can host exotic quantum phases of matter16,17,18. In this context, the introduction of perturbative corrections is often required in order to obtain a precise agreement with experimental data. A paradigmatic example is the quasi-1D ferromagnet CoNb2O6, which is regarded as one of the closest experimental realizations of the 1D transverse-field Ising model. However, to describe the complex physics emerging near its critical point, one has to consider a more detailed microscopic model containing several perturbations, which break the integrability of the Hamiltonian and make numerical simulations more challenging19,20,21. 

In the simulation of the transverse-field Ising and Heisenberg models, the favourable scaling is due to the possibility of implementing the elementary evolution gates with a 2-qubit gate cost that is the same as standard product formulas. For simulations of the Fermi-Hubbard model, THRIFT methods have advantageous scaling for large enough simulation time \(T\gtrsim {U}^{-1}\) and small scale of the hopping term \({t}_{{{\rm{hop}}}}/U\). This is due to the extra cost incurred in the implementation of THRIFT in this case.

Results

THRIFT algorithms

Consider a Hamiltonian of the form \(H={H}_{0}+\alpha {H}_{1}\) where α ≪ 1, the norms of \({H}_{0}\) and \({H}_{1}\) are comparable, and the unitary \({U}_{0}={e}^{-it{H}_{0}}\) can be implemented exactly for arbitrary times t with an efficient quantum circuit, with complexity independent of t. We are interested in approximating the full evolution operator \(U={e}^{-itH}\). The first-order Trotter formula with N steps has error7,11

We can use the fact that U0 is implementable exactly to give a simulation with lower error. Going to the interaction (also known as intermediate) picture22, we have

where in the second line we have just inserted identities between each exponential of H1. Here, \({{\mathcal{T}}}\) is the time-ordering operator (which moves terms with smaller times to the right) and \({\tilde{H}}_{1}(t)={e}^{it{H}_{0}}{H}_{1}{e}^{-it{H}_{0}}\). This is a better starting expression for bounding the error. Let \({[{{\mathcal{T}}}{e}^{-i\int_{0}^{t}\alpha {\tilde{H}}_{1}(\tau )d\tau }]}_{{{\rm{apx}}}}\) denote a product formula (to be defined) for approximating \({{\mathcal{T}}}{e}^{-i\int_{0}^{t}\alpha {\tilde{H}}_{1}(\tau )d\tau }\), and let Uapx denote the overall approximation to U obtained by using this formula. Then we have

by invariance of the operator norm under unitary transformations. Using for example the first-order generalised Trotter formula \({[{{\mathcal{T}}}{e}^{-i\int_{0}^{t}\alpha {\tilde{H}}_{1}(\tau )d\tau }]}_{{{\rm{apx}}}}={{\mathcal{T}}}{e}^{-i\int_{0}^{t}\alpha {\tilde{{{H}}_{1}^{A}}}(\tau )d\tau }{{\mathcal{T}}}{e}^{-i\int_{0}^{t}\alpha {\tilde{{{H}}_{1}^{B}}}(\tau )d\tau }\)23,24, where \({\tilde{H}}_{1}(\tau )={\tilde{{{H}}_{1}^{A}}} (\tau )+{\tilde{{{H}}_{1}^{B}}}(\tau )\) is some splitting of \({\tilde{H}}_{1}(\tau )\), we have

assuming that \(\parallel [{\tilde{{{H}}_{1}^{A}}}(s),{\tilde{{{H}}_{1}^{B}}}(v)]\parallel=O(1)\). Note that the error now scales as \({\alpha }^{2}\) instead of α. The inequality in Eq. (4) can be shown by writing the integral representation of the error for the time-dependent case. This forms the basis for the proof presented in ref. 23. For general evolution time, we can divide the evolution into N steps, giving an error

To turn this approach into a useful product-formula decomposition, we describe how to implement the time-ordered exponentials. This can be done using the definition of the time-ordered exponential in the other direction,

which is valid for any Hermitian operator \(\tilde{A}(t)={e}^{i{H}_{0}t}A{e}^{-i{H}_{0}t}\). This leads to the decomposition

This is nothing more than the usual first-order Trotter decomposition of the Hamiltonian \(H={H}_{0}+\alpha ({H}_{1}^{A}+{H}_{1}^{B})\) using the summands \({H}_{0}+\alpha {H}_{1}^{A}\), −H0, and \({H}_{0}+\alpha {H}_{1}^{B}\).

The decomposition (7) has an error α times smaller than the usual first-order Trotter formula. In particular, we have the following theorem.

Theorem 1

(THRIFT decomposition) Given a Hamiltonian H = H0 + αH1 where \({H}_{1}={\sum }_{\gamma=1}^{\Gamma }{H}_{1}^{\gamma }\), the decomposition

approximates \(U(t)={e}^{-itH}\) with error

For sufficiently small time, this error is \(O({\alpha }^{2}{t}^{2})\).

The proof of this theorem appears in “Methods”. For small α, the error of this approximation scales better than that of a standard Trotter approximation.
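The α-scaling stated in Theorem 1 can be checked numerically on a toy example. The following is a minimal sketch, not the implementation used for the results in this work. It assumes a 3-qubit chain with \({H}_{0}={\sum }_{j}{Z}_{j}\), \({H}_{1}^{A}={X}_{0}{X}_{1}\) and \({H}_{1}^{B}={X}_{1}{X}_{2}\), and it uses the summands \({H}_{0}+\alpha {H}_{1}^{A}\), \(-{H}_{0}\), \({H}_{0}+\alpha {H}_{1}^{B}\) described above. The helper names `evolve`, `embed` and `step_errors` are hypothetical.

```python
import numpy as np

def evolve(h, t):
    """Return exp(-i t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def embed(single, site, n):
    """Embed a one-qubit operator at position `site` of an n-qubit register."""
    out = np.eye(1, dtype=complex)
    for k in range(n):
        out = np.kron(out, single if k == site else np.eye(2, dtype=complex))
    return out

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

N_QUBITS = 3  # toy size, chosen only for illustration
H0 = sum(embed(Z, j, N_QUBITS) for j in range(N_QUBITS))
H1A = embed(X, 0, N_QUBITS) @ embed(X, 1, N_QUBITS)
H1B = embed(X, 1, N_QUBITS) @ embed(X, 2, N_QUBITS)

def step_errors(alpha, t):
    """Spectral-norm error of one first-order Trotter step and one THRIFT step."""
    exact = evolve(H0 + alpha * (H1A + H1B), t)
    trotter = evolve(H0, t) @ evolve(alpha * H1A, t) @ evolve(alpha * H1B, t)
    # First-order formula on the summands H0 + alpha*A, -H0, H0 + alpha*B.
    thrift = (evolve(H0 + alpha * H1A, t) @ evolve(-H0, t)
              @ evolve(H0 + alpha * H1B, t))
    return (np.linalg.norm(exact - trotter, 2),
            np.linalg.norm(exact - thrift, 2))

if __name__ == "__main__":
    for alpha in (0.04, 0.02, 0.01):
        print(alpha, *step_errors(alpha, 0.5))
```

Halving α should roughly halve the Trotter error and roughly quarter the THRIFT error, which is the linear versus quadratic dependence on α discussed above.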

The THRIFT decomposition in Theorem 1 corresponds to a first-order Trotter formula, and can be used as a seed for higher-order approximations using standard techniques1,12,25,26. Note that, in practice, for this result to be useful, the unitary \({e}^{-it({H}_{0}+\alpha {H}_{1}^{\gamma })}\) has to be implemented with an error of order \(O({\alpha }^{2})\). More formally, we have the following procedure to turn a product formula into a THRIFT formula with \(O({\alpha }^{2})\) error scaling.

Proposition 1

(Higher-order THRIFT) Given a second-order product formula \({{{\mathcal{S}}}}_{2}(t)\) and a set of parameters \({\{{u}_{j}\}}_{j=1}^{m}\) such that

is a kth-order product formula, the product

with \({U}_{{{\rm{apx}}}}(t)\) specified by Eq. (8), approximates \({e}^{-itH}\) with error \(O({t}^{k+1}{\alpha }^{2})\).

Proof

Uapx(t) is simply a first-order product formula with the unusual splitting

It follows trivially that Eq. (11) is a kth-order product formula. To prove the \(O({\alpha }^{2})\) error scaling, we write

with \({a}_{m-k}={\sum }_{r=0}^{k-1}{u}_{m-r}\) and am = 0. This expression follows by applying Eq. (6) to Eq. (11). A valid product formula satisfies \(\mathop{\sum }_{j=1}^{m}{u}_{j}=1\), so the claim follows by Supplementary Theorem 3 of Supplementary Note 1. This finishes the proof.
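The k = 2 case of Proposition 1 can be probed numerically. The sketch below is an illustration under stated assumptions rather than the construction used in the proof. It assumes the standard symmetric (Strang) composition applied to the summands \({H}_{0}+\alpha {H}_{1}^{A}\), \(-{H}_{0}\), \({H}_{0}+\alpha {H}_{1}^{B}\), on the same hypothetical 3-qubit toy model with \({H}_{1}^{A}={X}_{0}{X}_{1}\) and \({H}_{1}^{B}={X}_{1}{X}_{2}\). The function names are hypothetical.

```python
import numpy as np

def evolve(h, t):
    """Return exp(-i t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def embed(single, site, n):
    """Embed a one-qubit operator at position `site` of an n-qubit register."""
    out = np.eye(1, dtype=complex)
    for k in range(n):
        out = np.kron(out, single if k == site else np.eye(2, dtype=complex))
    return out

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

N_QUBITS = 3  # toy size, chosen only for illustration
H0 = sum(embed(Z, j, N_QUBITS) for j in range(N_QUBITS))
H1A = embed(X, 0, N_QUBITS) @ embed(X, 1, N_QUBITS)
H1B = embed(X, 1, N_QUBITS) @ embed(X, 2, N_QUBITS)

def thrift2_step(alpha, t):
    """Symmetric second-order formula on H0 + alpha*A, -H0, H0 + alpha*B."""
    half_a = evolve(H0 + alpha * H1A, t / 2)
    half_back = evolve(-H0, t / 2)
    full_b = evolve(H0 + alpha * H1B, t)
    return half_a @ half_back @ full_b @ half_back @ half_a

def thrift2_error(alpha, t):
    """Spectral-norm distance between one THRIFT 2 step and exact evolution."""
    exact = evolve(H0 + alpha * (H1A + H1B), t)
    return np.linalg.norm(exact - thrift2_step(alpha, t), 2)

if __name__ == "__main__":
    print("halving alpha:", thrift2_error(0.02, 0.1) / thrift2_error(0.01, 0.1))
    print("halving t:    ", thrift2_error(0.02, 0.1) / thrift2_error(0.02, 0.05))
```

For a single short step, halving α should reduce the error by about a factor of 4 and halving t by about a factor of 8, consistent with \(O({t}^{3}{\alpha }^{2})\).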

In practice, the set of parameters \(s: = {\{{u}_{j}\}}_{j=1}^{m}\) in Proposition 1 can be taken from known results like the recursive definition \({{{\mathcal{S}}}}_{2k}(t)={{{\mathcal{S}}}}_{2k-2}^{2}({v}_{k}t){{{\mathcal{S}}}}_{2k-2}((1-4{v}_{k})t){{{\mathcal{S}}}}_{2k-2}^{2}({v}_{k}t)\), where \({v}_{k}=1/(4-{4}^{1/(2k-1)})\) from ref. 25. For a sixth-order formula of this type, this means \(s=\{\left(r,r,s,r,r\right),\left(r,r,s,r,r\right),\left(m,m,sm/r,m,m\right),\left(r,r,s,r,r\right),\left(r,r,s,r,r\right)\}\) with \(r={v}_{2}{v}_{4}\), \(s=(1-4{v}_{2}){v}_{4}\), and \(m={v}_{2}(1-4{v}_{4})\). In the numerical analysis that we perform later, we use the parameters found in ref. 26, see “Results”.
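The recursive definition above translates directly into code. The following minimal sketch implements it for an ordinary (non-THRIFT) two-term splitting and estimates the order of the resulting formula from the error at two step sizes. The 2-qubit test Hamiltonian and the function names `strang`, `suzuki` and `observed_order` are illustrative assumptions.

```python
import numpy as np

def evolve(h, t):
    """Return exp(-i t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def strang(terms, t):
    """Second-order formula S_2(t): half steps forward, then in reverse order."""
    u = np.eye(terms[0].shape[0], dtype=complex)
    for h in terms:
        u = u @ evolve(h, t / 2)
    for h in reversed(terms):
        u = u @ evolve(h, t / 2)
    return u

def suzuki(terms, t, order):
    """S_order(t) from the recursion S_2k = S_2k-2(v t)^2 S_2k-2((1-4v) t) S_2k-2(v t)^2."""
    if order == 2:
        return strang(terms, t)
    k = order // 2
    v = 1.0 / (4.0 - 4.0 ** (1.0 / (2 * k - 1)))
    outer = suzuki(terms, v * t, order - 2)
    inner = suzuki(terms, (1.0 - 4.0 * v) * t, order - 2)
    return outer @ outer @ inner @ outer @ outer

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)
# Illustrative 2-qubit Hamiltonian H = X0 X1 + (Z0 + Z1).
TERMS = [np.kron(X, X), np.kron(Z, I2) + np.kron(I2, Z)]

def suzuki_error(t, order):
    """Spectral-norm error of a single step of the order-`order` formula."""
    exact = evolve(sum(TERMS), t)
    return np.linalg.norm(exact - suzuki(TERMS, t, order), 2)

def observed_order(order, t=0.05):
    """Estimate the exponent p in error ~ t^p from step sizes t and t/2."""
    return np.log2(suzuki_error(t, order) / suzuki_error(t / 2, order))

if __name__ == "__main__":
    for order in (2, 4):
        print(order, observed_order(order))
```

A kth-order formula has single-step error \(O({t}^{k+1})\), so the estimated exponent should be close to 3 for \({{{\mathcal{S}}}}_{2}\) and close to 5 for \({{{\mathcal{S}}}}_{4}\).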

Beyond quadratic scaling

The procedure developed in Proposition 1 improves the O(t) error scaling, but leaves the \(O({\alpha }^{2})\) error scaling unchanged. In fact, in Supplementary Note 1 we prove that no formula that approximates the evolution by a product of time-ordered evolutions according to terms of the Hamiltonian can achieve better scaling in α than THRIFT, regardless of how the Hamiltonian is decomposed. However, in this section we show how to achieve better scaling using two alternative approaches.

Motivated by Eq. (2), we look for approximations of the time-ordered operator that have better error scaling in the small parameter α. First, we consider the Magnus expansion27, which approximates the time-ordered exponential as the standard exponential of a time-dependent operator Ω. Second, we consider directly approximating the time-ordered exponential as a product of exponentials28. We show that these approaches achieve error scaling \(O({t}^{k}{\alpha }^{k})\) for any positive integer k. We also present two algorithms to implement these approximations in practice. The first of these is a consequence of the following result:

Theorem 2

(Magnus-THRIFT decomposition) Consider a Hamiltonian H = H0 + αH1. Let \({\tilde{H}}_{1}(t): = {e}^{it{H}_{0}}{H}_{1}{e}^{-it{H}_{0}}\). Defining \({\Omega }^{[k]}: = \mathop{\sum }_{j=1}^{k}{\Omega }_{j}(\alpha,t)\), the operation

approximates \(U(t)={e}^{-itH}\) with error \(O({(t\alpha )}^{k+1})\) for small times t.

The proof of this theorem is presented in “Methods”. We now describe a method for approximating the dynamics of the Hamiltonian \(H={H}_{0}+\alpha {H}_{1}\) for time T using the Magnus expansion, which we call the Magnus-THRIFT algorithm. The approach is as follows:

1. Write the evolution operator \(U(T)={e}^{-iT({H}_{0}+\alpha {H}_{1})}\) in the interaction picture, with \({H}_{0}\) as the dominant part:

2. Slice the time T into N intervals:

3. Approximate the time-ordered exponential of a slice using its Magnus expansion up to order \(O({(\frac{T}{N}\alpha )}^{p})\). Note that here we use the Magnus expansion with an initial time \({t}_{0}\ne 0\). We write the Magnus approximation of order p with an arbitrary initial time t as Ω(α, δt; t), such that

4. Approximate the exponential \(\exp \left({\Omega }^{[p]}(\alpha,\delta t;t)\right)\) obtained from the Magnus expansion using a pth-order product formula \({S}_{p}\):

This procedure leads to the decomposition

Note that this last step does not alter the scaling with α, as the norm of the time-dependent Hamiltonian that determines the time-evolution operator is \(\alpha \parallel {\tilde{H}}_{1}(t)\parallel \), so the error has to have the same scaling in time and α.
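As a rough numerical illustration of steps 1 to 3 for a single slice, the sketch below evaluates the first Magnus term \({\Omega }_{1}=-i\alpha \int_{0}^{t}{\tilde{H}}_{1}(\tau )d\tau \) by Gauss–Legendre quadrature and exponentiates it exactly, so step 4 (the product-formula splitting of the exponential) is deliberately omitted. It assumes the approximation takes the form \({e}^{-it{H}_{0}}{e}^{{\Omega }_{1}}\), uses a hypothetical 3-qubit model with \({H}_{0}={\sum }_{j}{Z}_{j}\) and \({H}_{1}={X}_{0}{X}_{1}+{X}_{1}{X}_{2}\), and all function names are illustrative.

```python
import numpy as np

def evolve(h, t):
    """Return exp(-i t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def embed(single, site, n):
    """Embed a one-qubit operator at position `site` of an n-qubit register."""
    out = np.eye(1, dtype=complex)
    for k in range(n):
        out = np.kron(out, single if k == site else np.eye(2, dtype=complex))
    return out

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

N_QUBITS = 3  # toy size, chosen only for illustration
H0 = sum(embed(Z, j, N_QUBITS) for j in range(N_QUBITS))
H1 = (embed(X, 0, N_QUBITS) @ embed(X, 1, N_QUBITS)
      + embed(X, 1, N_QUBITS) @ embed(X, 2, N_QUBITS))

def magnus1_generator(alpha, t, nodes=24):
    """Hermitian K with Omega_1 = -i K, i.e. K = alpha * int_0^t H1~(tau) dtau."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    taus = 0.5 * t * (x + 1.0)   # map nodes from [-1, 1] to [0, t]
    weights = 0.5 * t * w
    k = np.zeros_like(H1)
    for tau, weight in zip(taus, weights):
        u = evolve(H0, tau)                    # e^{-i tau H0}
        k += weight * (u.conj().T @ H1 @ u)    # e^{i tau H0} H1 e^{-i tau H0}
    k = alpha * k
    return 0.5 * (k + k.conj().T)              # remove round-off asymmetry

def magnus1_error(alpha, t):
    """Error of e^{-i t H0} exp(Omega_1) relative to the exact evolution."""
    exact = evolve(H0 + alpha * H1, t)
    approx = evolve(H0, t) @ evolve(magnus1_generator(alpha, t), 1.0)
    return np.linalg.norm(exact - approx, 2)

if __name__ == "__main__":
    for alpha in (0.04, 0.02, 0.01):
        print(alpha, magnus1_error(alpha, 0.5))
```

With only the first Magnus term retained, the neglected terms are second order in α, so halving α should reduce the error by about a factor of 4.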

As an example, consider the expansion of

Expanding the time-dependent Hamiltonian as a sum of time-independent operators \({O}_{q}\) and functions of time \({\alpha }_{q}(t)\) as \(H(t)={\sum }_{q=1}^{Q}{\alpha }_{q}(t){O}_{q}\), we find

where

which can be computed classically. Thus we can approximate \({e}^{{\Omega }^{[2]}(\alpha,\delta t;t)}\) using a second-order product formula as

If necessary, each of the products can be decomposed further using a second-order product formula to keep the error at most \(O({\alpha }^{3}\delta {t}^{3})\).

Note that in any application of these formulas, some care has to be taken when expanding functions of time, to avoid losing the favourable scaling with α. As the error scales with both α and t, in any expansion the scaling with both of them should be considered.

In principle it should be possible to analyse the commutator scaling of the product formula appearing in Eq. (19), generalizing12. We leave this as a topic for future work.

In Supplementary Note 2 we introduce another algorithm achieving \(O({\alpha }^{k+1}{t}^{k+1})\) error scaling. It is based on the Fer approximation28 of the time-ordered operator in the interaction picture in Eq. (31), so we refer to it as Fer-THRIFT.

Numerical simulations

The asymptotics derived in Theorems 1 and 2 show that for α small enough, THRIFT methods will outperform Trotter methods, and for even smaller α, Magnus-THRIFT will eventually outperform THRIFT. Similarly, higher-order methods will outperform lower-order methods for small enough time steps. To ascertain that THRIFT and Magnus-THRIFT methods give an advantage at relevant values of α and T, we performed extensive simulations of different models, namely the transverse-field Ising model in one and two dimensions, and the Heisenberg model with random local fields in one dimension (see below). Additionally, we present a similar analysis of a fermionic model, the 1D Fermi-Hubbard model, in Supplementary Note 4.

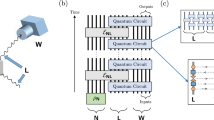

We compare the ordinary first- and second-order product formulas1,12 (here dubbed “Trotter 1” and “Trotter 2”), the fourth-order formula due to Suzuki25 (here dubbed “Trotter 4” for conciseness), a numerically optimised eighth-order product formula due to Morales et al.26 (“optimised Trotter 8”) based on an ansatz of Yoshida29, and a fourth-order formula optimised for Hamiltonians containing a small perturbation derived in ref. 15 (here dubbed “opt. small A 4” to indicate its error scaling with T; see Supplementary Note 3). For each of these product formulas, we also construct the corresponding THRIFT circuit (dubbed “THRIFT 1” through “THRIFT 4” and “optimised THRIFT 8”) as described in Theorem 1 and Proposition 1. For the transverse-field Ising model, we also implement the Magnus-THRIFT decompositions described in Theorem 2 with the first- and second-order Magnus expansion.

In the numerical implementation of THRIFT 1 through 8, we use the approximant

obtained by first breaking up the total time T into small steps T/N and then approximating each unitary evolution over a small step by Eq. (8). For a total time-independent Hamiltonian H, this is equivalent to splitting the time-ordered exponential over the full evolution time into a product of unitary evolutions with a small time step T/N, as described in Eqs. (15) and (16).

Note that Fer-THRIFT 1 and Magnus-THRIFT 1 coincide. As we found that Magnus-THRIFT 2 was not generally competitive with the other approaches for the systems we analysed, we did not implement Fer-THRIFT 2 as it has essentially the same cost as Magnus-THRIFT 2.

1D and 2D transverse-field Ising model with weak coupling

The first model we use for numerical tests and algorithm comparison is the transverse-field Ising model with weak interaction in one and two dimensions. In the 1D case, the model is integrable and can be mapped to a free-fermion model that can be simulated in polynomial time and space using the method described in refs. 30,31. This enables us to simulate chains of length up to L = 100 using the fermionic linear optics simulation tools from ref. 32. While the equivalence to free fermions makes this model a less interesting target for quantum simulation, we expect that the simulation costs may be indicative of costs for some other 1D models that are not necessarily classically easy. Indeed, we see evidence of this in the case of the Heisenberg model, as shown below. In 2D, we are restricted to relatively small system sizes using full state vector simulations.

The Hamiltonian of the transverse-field Ising model is

where \({X}_{i}\) and \({Z}_{i}\) are the spin-1/2 operators in the x and z directions, respectively. For the purpose of studying THRIFT-based algorithms, we fix the field strength to h = 1, let the interaction strength α ≔ J be the small parameter, and measure time T in units of \({h}^{-1}\). Since the transverse-field part, \({H}_{0}={\sum }_{j}{Z}_{j}\), only consists of one-qubit terms, this has the advantage that the interaction-picture Hamiltonian \({\tilde{H}}_{1}(t)\) has the same locality as the original \({H}_{1}=J{\sum }_{\langle i,j\rangle }{X}_{i}{X}_{j}\), and THRIFT circuits have the same 2-qubit gate depth as the corresponding Trotter circuits. We also note that, because \({e}^{-itJ{X}_{i}{X}_{j}}\) and \({e}^{-it(J{X}_{i}{X}_{j}+h({Z}_{i}+{Z}_{j}))}\) can each be implemented with two CNOT gates, the same holds for CNOT gate depth. The 2-qubit gate depths of one TDS step for all algorithms considered are shown in Tables 1 (1D) and 2 (2D). The explicit formulas for the approximants used for the THRIFT simulations of the transverse-field Ising model are discussed in Supplementary Note 3.
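The locality statement can be verified directly on a small register. The sketch below is an illustrative check on 3 qubits, not part of the simulations reported here. It conjugates \({X}_{0}{X}_{1}\) by \({e}^{it{H}_{0}}\) with \({H}_{0}={\sum }_{j}{Z}_{j}\) and compares the result with the product of single-qubit rotated operators \(\cos (2t)X-\sin (2t)Y\) on qubits 0 and 1, tensored with the identity on qubit 2. The function names are hypothetical.

```python
import numpy as np

def evolve(h, t):
    """Return exp(-i t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)

def kron_all(ops):
    """Tensor product of a list of one-qubit operators, leftmost qubit first."""
    out = np.eye(1, dtype=complex)
    for op in ops:
        out = np.kron(out, op)
    return out

# Transverse field on three qubits and one two-qubit coupling term.
H0 = kron_all([Z, I2, I2]) + kron_all([I2, Z, I2]) + kron_all([I2, I2, Z])
XX01 = kron_all([X, X, I2])

def interaction_picture(op, t):
    """Return e^{i t H0} op e^{-i t H0}."""
    u = evolve(H0, t)
    return u.conj().T @ op @ u

def rotated_x(t):
    """Single-qubit operator e^{i t Z} X e^{-i t Z} = cos(2t) X - sin(2t) Y."""
    return np.cos(2 * t) * X - np.sin(2 * t) * Y

def is_still_two_local(t):
    """Check that the rotated coupling acts only on qubits 0 and 1."""
    expected = kron_all([rotated_x(t), rotated_x(t), I2])
    return np.allclose(interaction_picture(XX01, t), expected)

if __name__ == "__main__":
    print(is_still_two_local(0.37))
```

Because \({H}_{0}\) is a sum of one-qubit terms, the conjugation factorises over qubits, which is why the interaction-picture term remains a 2-qubit operator for every t.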

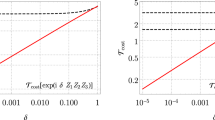

In Fig. 1, we show which of the different Trotter, THRIFT, or Magnus-THRIFT algorithms performs best at a given T and α for a wide range of these two quantities for the 1D transverse-field Ising model (panel a) and 2D transverse-field Ising model (panel b). The results broadly agree with what we expect from Theorems 1 and 2 and Proposition 1: as T decreases, higher-order formulas become advantageous over lower orders, and for smaller α, THRIFT methods are advantageous over Trotter methods. Interestingly, this crossover happens for a relatively large α ≈ 3 for the transverse-field Ising model. First-order methods are never advantageous for the 1D transverse-field Ising model, because for Hamiltonians that can be split into only two exactly implementable parts for Trotterisation, second-order methods have the same amortised depth per step as first-order methods (see Table 1). Magnus-THRIFT 2 outperforms all other methods only for very small \(\alpha < 1{0}^{-2}\) and T > 1. Nevertheless, in order to make a fair comparison between all algorithms we allowed for a gate budget corresponding to 15 and 26 steps of the first-order Trotter formula for the 1D and 2D case, respectively (see Table 1). This leads to errors below numerical accuracy for very small α and T.

a Landscape of the best TDS algorithm, as measured by the worst-case error \(\left\Vert U-{U}_{{{\rm{exact}}}}\right\Vert \), as a function of the relative field strength α = J/h and evolution time T at identical circuit depth for a 1 × 16 Ising chain with transverse field. The circuit depth was fixed to 1 step of Magnus-THRIFT 2 evolution. For the other algorithms, the number of steps is chosen to match the 2-qubit depth as closely as possible according to the 2-qubit depths shown in Tables 1 and 2. The colour of each point represents the algorithm that achieves the lowest error at those values of J and T, while the brightness indicates the magnitude of the error. b Same for a 3 × 3 transverse-field Ising model. Note that in the top right corner of both panels, \(\left\Vert U-{U}_{{{\rm{exact}}}}\right\Vert \) is of order 1, so this region is not of particular interest.

An analysis to compare the algorithms at system sizes, evolution times, and worst-case errors relevant in near-term realistic implementations is done in Fig. 2. There we investigate the scaling of the different algorithms with the system size and evolution time by searching for the lowest number of steps such that each algorithm achieves worst-case error \(\left\Vert U-{U}_{{{\rm{exact}}}}\right\Vert \le 0.01\). For the 1D transverse-field Ising model, we scale the system size L and evolution time T together as T = L. Figure 2a shows the 2-qubit depth to get the error below threshold. For the 2D transverse-field Ising model, we fix the system size at 3 × 3 and only change the simulation time T when searching for the minimal circuit depth to get the error below threshold. The results are shown in Fig. 2b. In both cases, we find that the circuit depth as a function of evolution time (and system size) is well described by a power law. The power-law exponents match those theoretically expected from Supplementary Note 1, with the notable exception of the optimised eighth-order THRIFT formula and fourth-order Trotter formula, for which the exponents are substantially smaller. In Supplementary Note 4 we show these exponents as a function of the interaction strength J = α and discuss the results in more detail. We observe surprisingly slow growth of the circuit depth for the optimised eighth-order THRIFT formula, which appears to scale sub-linearly in the evolution time. While the small slope of opt. THRIFT 8 for the 1D case could be attributed to the model being fast-forwardable, in the 2D case we believe this is an artifact of the small system size, as the model is not believed to be fast-forwardable in general. See Supplementary Note 4 for more details. The specific partitions we used to implement Trotter, Omelyan et al.’s, and THRIFT algorithms for the various models we consider are discussed in Supplementary Note 3.

a 2-qubit gate depth to achieve \(\left\Vert U-{U}_{{{\rm{exact}}}}\right\Vert \le 0.01\) for the different TDS algorithms for an interaction strength of J = 1/8 and evolution time T = L, for a 1 × L Ising chain with transverse field. The depths follow a power law of the form \(d=a{L}^{k}\) whose parameters a and k we determine via a least-squares fit and report, also for different values of α, in Supplementary Fig. 4. b Similar simulation for a 3 × 3 transverse-field Ising model. Because the 2D transverse-field Ising model is not integrable and hence large system sizes are not classically simulable, we fix the system size to 3 × 3 and only scale the evolution time T. Error bars (mostly barely visible) are ±1, i.e., the minimal possible depth resolution.

We now investigate the benefits of THRIFT methods in scenarios of more practical relevance. Specifically, in Fig. 3 we compare the simulation of the dynamics of an excitation placed in the ground state of a 29-site transverse-field Ising model chain with J = 1 and h = 2 (corresponding to α = J/h = 0.5) obtained by using 15 steps of second-order Trotter and THRIFT formulas. Despite the two formulas requiring the same computational resources (see Table 1), one can clearly see that the simulation with second-order THRIFT remains close to the true dynamics for much longer than the simulation with the Trotter formula of the same order. The system sizes and circuit depths required to replicate Fig. 3 on real hardware are within the reach of current quantum devices33. Comparing the previous results for the 1D and 2D transverse-field Ising model, we expect the advantage of THRIFT methods over Trotter formulas observed here for the 1D transverse-field Ising model to extend to higher-dimensional cases as well, making THRIFT methods a powerful tool for the simulation of quantum spin models on near-term quantum devices in regimes of practical interest.

Simulation of the propagation of an excitation in a 29-site transverse-field Ising model chain at h = 2 and J = 1 (α = 0.5) with 15 Trotter 2/THRIFT 2 steps. The initial state chosen here is \(\left\vert \psi (0)\right\rangle=\left\vert \downarrow \cdots \downarrow -\downarrow \cdots \downarrow \right\rangle \), with \(\left\vert -\right\rangle=(\left\vert \uparrow \right\rangle -\left\vert \downarrow \right\rangle )/\sqrt{2}\). The simulation with Trotter 2 deviates significantly from the exact dynamics for T > 5, while the simulation with THRIFT 2 remains accurate for much longer times.

1D Heisenberg model with strong random fields

The second model we use for numerical tests of the THRIFT algorithms is the 1D spin-\(\frac{1}{2}\) Heisenberg model with strong random fields. Unlike the 1D transverse-field Ising model, it is not exactly solvable, and we are not aware of a fast classical simulation for arbitrary times. The Hamiltonian is

where the \({h}_{i}\) are chosen uniformly at random in [−h, h] and \({X}_{i}\), \({Y}_{i}\), and \({Z}_{i}\) are again the spin-\(\frac{1}{2}\) operators in the respective directions. We fix h = 1, use the interaction strength α ≔ J as the small parameter, and measure time T in units of \({h}^{-1}\). To evaluate errors, we always average over 10 different random instantiations of the field strengths \({h}_{i}\). As in the case of the transverse-field Ising model, the field part \({H}_{0}={\sum }_{i}{h}_{i}{Z}_{i}\) consists only of one-qubit terms, so \({\tilde{H}}_{1}(t)\) consists entirely of 2-qubit terms. Because simulating the Heisenberg interaction \({e}^{-it({X}_{i}{X}_{j}+{Y}_{i}{Y}_{j}+{Z}_{i}{Z}_{j})}\) already takes three CNOT gates, simulating the THRIFT gate \({e}^{-it({X}_{i}{X}_{j}+{Y}_{i}{Y}_{j}+{Z}_{i}{Z}_{j}+{h}_{i}{Z}_{i}+{h}_{j}{Z}_{j})}\) takes the same 2-qubit gate depth. Therefore, one step of any THRIFT circuit takes the same depth as one step of the corresponding Trotter circuit. See Supplementary Note 3 for more details about how we partitioned \({H}_{{{\rm{Heisenberg}}}}\). The exact 2-qubit gate depths are shown in Table 3.

In Figs. 4 and 5, we repeat the analysis done for the transverse-field Ising model in Figs. 1 and 2 for the Heisenberg model. However, because the Heisenberg model is not integrable and average-case errors are much easier to compute than worst-case errors, we use the average infidelity as a figure of merit in Fig. 5. (Note that this may not be indicative of worst-case performance, since product formula simulations can have significantly better performance on average34.) Similarly to the case of the transverse-field Ising model, the THRIFT methods perform better than the corresponding Trotter methods, with higher-order methods outperforming lower-order methods for smaller T and α in Fig. 4. We observe that the crossover point from one method to the next in Fig. 4 roughly happens along lines of constant αT. This is because the interaction-picture Hamiltonian \({\tilde{H}}_{1}(t)\) scales with α, so the relevant scale for the Trotter errors is αT/N. The seeming advantage of the optimised eighth-order formula at very small αT is for worst-case errors below the numerical precision floor, so it is probably not borne out in reality. In Fig. 5, we see that the THRIFT methods always outperform the corresponding Trotter methods, and the 2-qubit gate depth to achieve average infidelity below a fixed threshold scales very similarly with T and the system size L for both methods, in broad agreement with the theory in Supplementary Note 1. Again, we note that the system size of L = 100, an average-case error ≤0.01 and evolution time T = 100 are realistic targets for near-term simulations. Figure 5 can also be directly compared to Fig. 1 in ref. 34, which considers the same question (albeit only for Trotter and not for THRIFT methods) for the Heisenberg model at J = 1. That analysis finds very similar results, including matching exponents k. We present a more detailed analysis of the scaling of the circuit depth with system size and evolution time in Supplementary Note 2.
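One reason average-case errors are cheap to compute is that, for Haar-random input states, the average infidelity has a closed form depending only on a trace. The sketch below is illustrative and rests on an assumption: the text does not specify the ensemble of states \(\left\vert x\right\rangle \) here, so Haar-random states are assumed, for which \({\mathbb{E}}| \langle x| W| x\rangle {| }^{2}=(d+| {{\rm{Tr}}}\,W{| }^{2})/(d(d+1))\) with \(W={U}_{{{\rm{exact}}}}^{{\dagger} }U\) and d the Hilbert-space dimension. The function names are hypothetical.

```python
import numpy as np

def haar_average_infidelity(U, U_exact):
    """Closed-form average of 1 - |<x|U_exact^dag U|x>|^2 over Haar-random |x> (assumed ensemble)."""
    d = U.shape[0]
    overlap = np.trace(U_exact.conj().T @ U)
    return 1.0 - (d + abs(overlap) ** 2) / (d * (d + 1))

def sampled_average_infidelity(U, U_exact, n_samples=2000, seed=0):
    """Monte Carlo estimate of the same quantity, using normalised complex Gaussian vectors."""
    rng = np.random.default_rng(seed)
    d = U.shape[0]
    W = U_exact.conj().T @ U
    x = rng.normal(size=(n_samples, d)) + 1j * rng.normal(size=(n_samples, d))
    x /= np.linalg.norm(x, axis=1, keepdims=True)
    amps = np.einsum("si,ij,sj->s", x.conj(), W, x)  # <x|W|x> for each sample
    return float(np.mean(1.0 - np.abs(amps) ** 2))

# Toy check: compare a Pauli-Z "approximation" against the identity on one qubit.
Zgate = np.diag([1.0 + 0j, -1.0 + 0j])
print(haar_average_infidelity(Zgate, np.eye(2, dtype=complex)))
```

The closed form needs only a single trace of a d × d matrix, whereas the worst-case error requires an operator norm; the sampled estimator is included only as a cross-check.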

Landscape of the best TDS algorithm, as measured by the worst-case error ∥U − Uexact∥, as a function of the relative field strength α = J/h and evolution time T at identical circuit depth for a 1 × 8 Heisenberg chain. The circuit depth is fixed to one step of optimised THRIFT 8 evolution. For the other algorithms, the number of steps is chosen to match the 2-qubit depth as closely as possible according to the 2-qubit depths shown in Table 3. The colour of each point represents the algorithm that achieves the lowest error at those values of J and T, while the brightness indicates the magnitude of the error. The isolated purple and red pixels in the THRIFT 4 and THRIFT 2 regions are artifacts of the randomness in the field strengths and running the optimised small A and eighth-order simulations with different random fields, but do not seem indicative of the general relative performance of the algorithms at these (α, T)-points.

2-qubit depth to achieve average infidelity \({{\mathbb{E}}}_{\{\left\vert x\right\rangle \}}[1-| \langle x| {U}_{{{\rm{exact}}}}^{{\dagger} }U| x\rangle {| }^{2}]\le 0.01\) for the different TDS algorithms for a 1 × L Heisenberg chain with interaction strength J = 1/8 and evolution time T = L. Unlike Fig. 2, we use the average infidelity to be able to simulate larger systems. Again, the required depths follow a power law of the form \(d=a{L}^{k}\), whose parameters a and k we determine via a least-squares fit and use to extrapolate up to L = 100. We report the fit parameters a and k, also for different values of α, in Supplementary Fig. 5. Error bars are ±1 step and the shaded regions are the one-sigma confidence intervals of the extrapolations.
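A power-law fit of this kind can be sketched as a linear least-squares fit in log-log space. This is an assumption about the procedure (the caption does not say whether the fit is performed in linear or logarithmic space), and the data below are synthetic, generated from a known power law purely to exercise the code; they are not the paper's results. The function names are hypothetical.

```python
import numpy as np

def fit_power_law(sizes, depths):
    """Fit d = a * L**k by least squares on log d = log a + k log L; returns (a, k)."""
    k, log_a = np.polyfit(np.log(sizes), np.log(depths), 1)
    return float(np.exp(log_a)), float(k)

def extrapolate(a, k, L):
    """Evaluate the fitted power law at system size L."""
    return a * L ** k

# Synthetic example data (not measured): depths generated from d = 3 * L**1.5.
sizes = np.array([4.0, 6.0, 8.0, 10.0, 12.0])
depths = 3.0 * sizes ** 1.5

a, k = fit_power_law(sizes, depths)
print(a, k, extrapolate(a, k, 100.0))
```

Fitting in log space weights relative rather than absolute deviations, which is a design choice; a fit in linear space would weight the largest system sizes more heavily.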

For this model, we did not implement the Magnus-THRIFT algorithm since we expect that it performs similarly to the 1D transverse-field Ising model case, i.e., it performs best only in a region with small α and large T. Furthermore, Magnus-THRIFT formulas of order p > 1 would involve unitaries acting on more than 2 qubits, resulting in a higher 2-qubit gate cost.

Discussion

Better algorithms to simulate the time dynamics of Hamiltonians with different scales have natural applications in systems where the interactions have distinct origins. We have shown both theoretically and through numerical experiments in various systems that the THRIFT algorithms can achieve better scaling than standard product formulas for Hamiltonians with different energy scales. Concretely, we consider Hamiltonians of the form H = H0 + αH1, where α ≪ 1 and the norms of H0 and H1 are comparable. Using product formulas with a carefully chosen partition, we can achieve an \(O({\alpha }^{2}{t}^{k})\) error scaling for any \(k\in {\mathbb{N}}\), which is better by a factor of α compared to the standard product formulas that do not use any structure of the Hamiltonian. We also present two algorithms to achieve scaling \(O({\alpha }^{k}{t}^{k})\) of the approximation error. These two algorithms perform better than other formulas only in small, extreme regions of the parameter space of the systems we consider. However, such a scaling with α cannot be achieved using products of time-ordered evolutions according to the terms of the Hamiltonian, and they may achieve better performance in other applications.
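The extra factor of α can be seen on a small example. The sketch below is illustrative only and makes several assumptions: a 4-qubit Heisenberg chain with hypothetical fixed field values, a two-part partition of H1 into even and odd bonds, a first-order Trotter step \({e}^{-it{H}_{0}}{e}^{-it\alpha {H}_{1}^{A}}{e}^{-it\alpha {H}_{1}^{B}}\), and a first-order THRIFT-style step written as \({e}^{-it({H}_{0}+\alpha {H}_{1}^{A})}{e}^{it{H}_{0}}{e}^{-it({H}_{0}+\alpha {H}_{1}^{B})}\). All helper names are hypothetical.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def site_op(op, site, n):
    """Embed a single-qubit operator at `site` of an n-qubit register."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, op if k == site else I2)
    return out

def expm_herm(H, t):
    """Return exp(-i t H) for a Hermitian matrix H via its eigendecomposition."""
    w, v = np.linalg.eigh(H)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def build_chain(fields):
    """Return (H0, H1A, H1B): field part, even-bond part, odd-bond part."""
    n = len(fields)
    H0 = sum(h * site_op(Z, k, n) for k, h in enumerate(fields))
    bonds = [sum(site_op(P, i, n) @ site_op(P, i + 1, n) for P in (X, Y, Z))
             for i in range(n - 1)]
    return H0, sum(bonds[0::2]), sum(bonds[1::2])

def one_step_errors(fields, alpha, t):
    """Operator-norm errors of one Trotter step and one THRIFT-style step."""
    H0, HA, HB = build_chain(fields)
    exact = expm_herm(H0 + alpha * (HA + HB), t)
    trotter = expm_herm(H0, t) @ expm_herm(alpha * HA, t) @ expm_herm(alpha * HB, t)
    thrift = (expm_herm(H0 + alpha * HA, t) @ expm_herm(H0, -t)
              @ expm_herm(H0 + alpha * HB, t))
    return (float(np.linalg.norm(exact - trotter, 2)),
            float(np.linalg.norm(exact - thrift, 2)))

fields = [0.9, -0.4, 0.3, -0.8]  # hypothetical field values
for alpha in (0.02, 0.01):
    print(alpha, one_step_errors(fields, alpha, t=0.5))
```

Halving α should roughly halve the Trotter error but reduce the THRIFT-style error by about a factor of four, consistent with errors linear and quadratic in α respectively.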

While we have concentrated on the evolution generated by time-independent Hamiltonians, the methods developed in this work also generalise to time-dependent Hamiltonians satisfying the same assumptions. Consider a Hamiltonian H(t) = H0(t) + αH1(t), where H0(t) and H1(t) are time dependent and have similar norms for all times t. As before we consider α small. Using the same ideas developed in Results, it is possible to show that for a partition of \({H}_{1}(t)={H}_{1}^{A}(t)+{H}_{1}^{B}(t)\), evolving the system with the approximant

induces an error bounded by

where \({\tilde{{H}}_{1}^{A,B}}(t):={{\mathcal{T}}}{e}^{i\int_{0}^{t}{H}_{0}(s)ds}{H}_{1}^{A,B}(t){{\mathcal{T}}}{e}^{-i\int_{0}^{t}{H}_{0}(s)ds}\). The main difference with respect to the time-independent case is that the evolution over a total time T cannot generically be obtained from repeating the evolution over small times, but instead must be obtained from an approximation of each time-ordered slice of the total evolution.

Although these algorithms lack the competitive scaling of other approaches not based on product formulas, it has been shown10 that in the regime of medium sizes and time evolution scaling with the system size, standard product formulas can outperform asymptotically better algorithms. This makes our approach competitive in practical applications.

Developing algorithms that utilise the structure of the Hamiltonian to lower the cost of simulating time dynamics is crucial to make quantum computers useful sooner. In particular, our approach may help to study dynamical phase transitions35, where the behaviour of the dynamics of a system can change as a function of the parameters of the Hamiltonian. Quantum algorithms for time dynamics that fare well in particular regions of the parameter space allow exploring these questions with fewer resources, or for longer times given fixed resources and error.

Methods

In this section we present the proofs of Theorems 1 and 3 of the main text.

Proof of Theorem 1. Define the approximant

Here \({V}^{(0)}(t)={{\mathcal{T}}}{e}^{-i\int_{0}^{t}{\tilde{H}}_{1}(s)ds}\) corresponds to the evolution under the full Hamiltonian \({\tilde{H}}_{1}(t)\), while \({V}^{(\Gamma -1)}(t)={e}^{it{H}_{0}}{U}_{{{\rm{apx}}}}(t)\), where Uapx(t) is defined in Eq. (8). This follows from repeated use of Eq. (6). Using the invariance of the operator norm and Eq. (4), it follows that

We use Eq. (30) to bound the error by applying the triangle inequality on the identity \({V}^{(0)}-{V}^{(\Gamma -1)}={\sum }_{j=0}^{\Gamma -2}({V}^{(j)}-{V}^{(j+1)})\) and noting that ∥V(0)(t) − V(Γ−1)(t)∥ = ∥U(t) − Uapx(t)∥, which leads finally to Eq. (9) as claimed. This finishes the proof.

In order to prove Theorem 3, it is convenient to introduce

for some time-dependent operator Ω(α, t), it is easy to show that \(\frac{d{e}^{\Omega (t)}}{dt}{e}^{-\Omega (t)}=-i\alpha {\tilde{H}}_{1}(t)\). Magnus27 used this to find an equation for Ω by employing the inverse of the derivative of the exponential map, i.e.,

where adΩ( ⋅ ) ≔ [Ω, ⋅ ] and \({{{\rm{ad}}}}_{\Omega }^{\,j}(\cdot ):={{{\rm{ad}}}}_{\Omega }^{\,j-1}([\Omega,\cdot ])\). The coefficients bj are Bernoulli numbers, defined through \(\frac{x}{{e}^{x}-1}={\sum }_{j=0}^{\infty }\frac{{b}_{j}}{j!}{x}^{j}\). The equation for Ω can now be solved through Picard iteration27,36. Defining α-independent coefficients \({\tilde{\Omega }}_{j}(t)\) so that \({e}^{\Omega (\alpha,t)}=\exp \left(\mathop{\sum }_{j=1}^{\infty }{\alpha }^{\,j}{\tilde{\Omega }}_{j}(t)\right)\), and using this expression in Eq. (32), produces the recurrence37
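For concreteness, the Bernoulli numbers in this convention can be generated exactly from the standard recurrence \({\sum }_{j=0}^{m}\binom{m+1}{j}{b}_{j}=0\) for m ≥ 1 with b0 = 1, which follows from the generating function above. The sketch below is illustrative; the function name is hypothetical.

```python
from fractions import Fraction
from math import comb, exp, factorial

def bernoulli_numbers(n_max):
    """Return [b_0, ..., b_n_max] for the generating function x / (e^x - 1)."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        acc = sum(comb(m + 1, j) * b[j] for j in range(m))
        b.append(-acc / (m + 1))
    return b

b = bernoulli_numbers(12)
print(b[:5])  # b_0 ... b_4 as exact fractions

# Cross-check the truncated series against the generating function at x = 0.5.
x = 0.5
series = sum(float(b[j]) * x ** j / factorial(j) for j in range(len(b)))
print(series, x / (exp(x) - 1.0))
```

In this convention b1 = −1/2 and all odd-index coefficients beyond b1 vanish, so only even powers of adΩ beyond the first contribute to the expansion.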

The series for Ω converges for sufficiently small time t38,39 (see also Supplementary Theorem 11). Using these results, we can state the following lemma bounding the terms of the Magnus expansion.

Lemma 1

For l ≥ 1, \( \Vert {\tilde{\Omega }}_{l}(t) \Vert \le \frac{1}{2}{x}_{l} {{(2\int_{0}^{t} \Vert {\tilde{H}}_{1}(s) \Vert ds)}}^{l}\), where \({x}_{l}\) is the coefficient of \({s}^{l}\) in the expansion of \({G}^{-1}(s)={\sum }_{m=1}^{\infty }{x}_{m}{s}^{m}\), the inverse function of \(G(s)=\int_{0}^{s}{\left(2+\frac{x}{2}(1-\cot (x/2))\right)}^{-1}dx\).
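The function G can be evaluated by simple quadrature. The sketch below is illustrative and reads the integrand as \({\left(2+\frac{x}{2}(1-\cot (x/2))\right)}^{-1}\); the midpoint rule and the function names are choices made here, not taken from the source. Since the integrand tends to 1 as x → 0, G'(0) = 1 and hence x1 = 1, so for l = 1 the lemma reduces to \(\Vert {\tilde{\Omega }}_{1}(t)\Vert \le \int_{0}^{t}\Vert {\tilde{H}}_{1}(s)\Vert ds\).

```python
import numpy as np

def integrand(x):
    """(2 + (x/2) * (1 - cot(x/2)))**(-1), evaluated elementwise for 0 < x < 2*pi."""
    half = 0.5 * x
    return 1.0 / (2.0 + half * (1.0 - 1.0 / np.tan(half)))

def G(s, n=200_000):
    """Midpoint-rule approximation of the integral of `integrand` from 0 to s."""
    h = s / n
    x = (np.arange(n) + 0.5) * h  # midpoints avoid the endpoints x = 0 and x = 2*pi
    return float(np.sum(integrand(x)) * h)

print(G(1e-3) / 1e-3)   # close to 1, consistent with x_1 = 1
print(G(2.0 * np.pi))   # value of G at the upper end of the integrand's domain
```

The midpoint rule is used because the cotangent is singular at both ends of the interval (0, 2π), although the integrand itself stays bounded there.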

This lemma is mentioned in ref. 36. We include a proof for completeness in Supplementary Note 2. Armed with Lemma 1, we have:

Proof of Theorem 3. As \({e}^{-it({H}_{0}+\alpha {H}_{1})}={e}^{-it{H}_{0}}{{\mathcal{T}}}{e}^{-i\alpha \int_{0}^{t}{\tilde{H}}_{1}(s)ds}\), it suffices to approximate the time-ordered evolution \({{\mathcal{U}}}(\alpha,t):={{\mathcal{T}}}{e}^{-i\alpha \int_{0}^{t}{\tilde{H}}_{1}(s)ds}\). Introducing the Taylor remainder of a function h(α) as \({R}_{k}(h(\alpha )):=\mathop{\sum }_{n=k+1}^{\infty }\frac{{\alpha }^{n}}{n!}{h}^{(n)}(0)\), it follows that for \(\Omega (\alpha,t)=\mathop{\sum }_{j=1}^{\infty }{\alpha }^{j}{\tilde{\Omega }}_{j}(t)\), one has Rk(Ω(α, t)) = Ω(α, t) − Ω[k](α, t), and

The remainder provides a bound on the difference between \({{\mathcal{U}}}(\alpha,t)={e}^{\Omega (\alpha,t)}={e}^{{\Omega }^{[k]}(\alpha,t)+{R}_{k}(\Omega (\alpha,t))}\) and \({e}^{{\Omega }^{[k]}(\alpha,t)}\) by means of the integral representation of the error

Using Eq. (34), we have \(\parallel {{\mathcal{U}}}(\alpha,t)-{e}^{{\Omega }^{[k]}}\parallel \le {R}_{k}(\frac{1}{2}{G}^{-1}(\alpha t\parallel {H}_{1}\parallel ))\). This implies that the error scales as O((αt)k+1). This finishes the proof. Note that this result extends trivially to an arbitrary time-dependent \({\tilde{H}}_{1}(t)\).

Data availability

Data supporting the figures and tables in this manuscript are available at ref. 40.

Code availability

Numerical simulations have been carried out using the Yao.jl framework. The code for data analysis is provided in ref. 40.

References

Berry, D. W., Ahokas, G., Cleve, R. & Sanders, B. C. Efficient quantum algorithms for simulating sparse Hamiltonians. Commun. Math. Phys. 270, 359 (2006).

Childs, A. M. & Kothari, R. Limitations on the simulation of non-sparse Hamiltonians. Quantum Inf. Comput. 10, 669 (2010).

Childs, A. M. On the relationship between continuous- and discrete-time quantum walk. Commun. Math. Phys. 294, 581–603 (2009).

Childs, A. M. & Wiebe, N. Hamiltonian simulation using linear combinations of unitary operations. Quantum Inf. Comput. 12, 901 (2012).

Berry, D. W., Childs, A. M., Cleve, R., Kothari, R. & Somma, R. D. in Proceedings of the Forty-Sixth Annual ACM Symposium on Theory of Computing (ACM, 2014).

Low, G. H. & Chuang, I. L. Optimal Hamiltonian simulation by quantum signal processing. Phys. Rev. Lett. 118, 010501 (2017).

Ostmeyer, J. Optimised Trotter decompositions for classical and quantum computing. J. Phys. A 56, 285303 (2023).

Mc Keever, C. & Lubasch, M. Classically optimized Hamiltonian simulation. Phys. Rev. Res. 5, 023146 (2023).

Tepaske, M. S. J., Hahn, D. & Luitz, D. J. Optimal compression of quantum many-body time evolution operators into brickwall circuits. SciPost Phys. 14, 073 (2023).

Childs, A. M., Maslov, D., Nam, Y., Ross, N. J. & Su, Y. Toward the first quantum simulation with quantum speedup. Proc. Natl Acad. Sci. USA 115, 9456 (2018).

Childs, A. M. & Su, Y. Nearly optimal lattice simulation by product formulas. Phys. Rev. Lett. 123, 050503 (2019).

Childs, A. M., Su, Y., Tran, M. C., Wiebe, N. & Zhu, S. Theory of Trotter error with commutator scaling. Phys. Rev. X 11, 011020 (2021).

Low, G. H. & Wiebe, N. Hamiltonian simulation in the interaction picture. Preprint at arXiv https://doi.org/10.48550/arXiv.1805.00675 (2019).

Haah, J., Hastings, M. B., Kothari, R. & Low, G. H. Quantum algorithm for simulating real time evolution of lattice Hamiltonians. SIAM J. Comput. 250, FOCS18-250–FOCS18-284 (2021).

Omelyan, I., Mryglod, I. & Folk, R. Optimized Forest–Ruth- and Suzuki-like algorithms for integration of motion in many-body systems. Comput. Phys. Commun. 146, 188 (2002).

Manousakis, E. The spin-\(\frac{1}{2}\) Heisenberg antiferromagnet on a square lattice and its application to the cuprous oxides. Rev. Mod. Phys. 63, 1 (1991).

Fazekas, P. Lecture Notes on Electron Correlation and Magnetism (World Scientific, 1999).

Zhou, Y., Kanoda, K. & Ng, T.-K. Quantum spin liquid states. Rev. Mod. Phys. 89, 025003 (2017).

Coldea, R. et al. Quantum criticality in an Ising chain: experimental evidence for emergent E8 symmetry. Science 327, 177 (2010).

Kjäll, J. A., Pollmann, F. & Moore, J. E. Bound states and E8 symmetry effects in perturbed quantum Ising chains. Phys. Rev. B 83, 020407 (2011).

Fava, M., Coldea, R. & Parameswaran, S. A. Glide symmetry breaking and Ising criticality in the quasi-1D magnet CoNb2O6. Proc. Natl Acad. Sci. USA 117, 25219 (2020).

Fetter, A. & Walecka, J. Quantum Theory of Many-particle Systems, Dover Books on Physics (Dover Publications, 2003).

Huyghebaert, J. & Raedt, H. D. Product formula methods for time-dependent Schrödinger problems. J. Phys. A 23, 5777 (1990).

Poulin, D., Qarry, A., Somma, R. & Verstraete, F. Quantum simulation of time-dependent Hamiltonians and the convenient illusion of Hilbert space. Phys. Rev. Lett. 106, 170501 (2011).

Suzuki, M. General theory of fractal path integrals with applications to many-body theories and statistical physics. J. Math. Phys. 32, 400 (1991).

Morales, M. E. S., Costa, P. C. S., Burgarth, D. K., Sanders, Y. R. & Berry, D. W. Greatly improved higher-order product formulae for quantum simulation. Preprint at arXiv https://doi.org/10.48550/arXiv.2210.15817 (2022).

Magnus, W. On the exponential solution of differential equations for a linear operator. Commun. Pure Appl. Math. 7, 649 (1954).

Fer, F. Résolution de l’équation matricielle du/dt = pu par produit infini d’exponentielles matricielles. Bull. Cl. Sci. 44, 818 (1958).

Yoshida, H. Construction of higher order symplectic integrators. Phys. Lett. A 150, 262 (1990).

Terhal, B. M. & DiVincenzo, D. P. Classical simulation of noninteracting-fermion quantum circuits. Phys. Rev. A 65, 032325 (2002).

Bravyi, S. & König, R. Disorder-assisted error correction in Majorana chains. Commun. Math. Phys. 316, 641–692 (2012).

Bosse, J. L. FLOYao (v1.2.0). Zenodo. https://doi.org/10.5281/zenodo.7303997 (2022).

Kim, Y. et al. Evidence for the utility of quantum computing before fault tolerance. Nature 618, 500 (2023).

Zhao, Q., Zhou, Y., Shaw, A. F., Li, T. & Childs, A. M. Hamiltonian simulation with random inputs. Phys. Rev. Lett. 129, 270502 (2022).

Heyl, M. Dynamical quantum phase transitions: a review. Rep. Prog. Phys. 81, 054001 (2018).

Blanes, S., Casas, F., Oteo, J. & Ros, J. The Magnus expansion and some of its applications. Phys. Rep. 470, 151 (2009).

Klarsfeld, S. & Oteo, J. A. Recursive generation of higher-order terms in the Magnus expansion. Phys. Rev. A 39, 3270 (1989).

Blanes, S., Casas, F., Oteo, J. A. & Ros, J. Magnus and Fer expansions for matrix differential equations: the convergence problem. J. Phys. A 31, 259 (1998).

Moan, P. C. Efficient Approximation of Sturm-Liouville Problems Using Lie-group Methods, Numerical Analysis Reports (University of Cambridge, Department of Applied Mathematics and Theoretical Physics, 1998).

Gambetta, F. M. & Bosse, J. L. Data for “Efficient and practical Hamiltonian simulation from time-dependent product formulas”. Zenodo. https://doi.org/10.5281/zenodo.14513664 (2024).

Acknowledgements

We thank J. Ostmeyer for pointing out ref. 15. This work received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. 817581 (A.M.)), and from EPSRC grant EP/S516090/1 (A.M.), InnovateUK grant 44167 (A.M.), and InnovateUK grant 10032332 (A.M.). Andrew Childs’s contribution to this publication was not part of his University of Maryland duties or responsibilities.

Author information

Contributions

R.S. initiated the project and designed the THRIFT algorithms. J.L.B. and F.M.G. implemented the algorithms for the specific models and performed the numerical simulations and analysis. C.D. performed the error scaling analysis of the Magnus-THRIFT algorithm. A.M.C. and A.M. provided technical guidance. All authors contributed to drafting and reviewing the paper.

Ethics declarations

Competing interests

A.M. is a cofounder of Phasecraft Ltd. A patent application has been filed based on this work by R.S. with reference number 24158476.2 (EU). The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bosse, J.L., Childs, A.M., Derby, C. et al. Efficient and practical Hamiltonian simulation from time-dependent product formulas. Nat Commun 16, 2673 (2025). https://doi.org/10.1038/s41467-025-57580-5

DOI: https://doi.org/10.1038/s41467-025-57580-5
