Abstract

It is challenging for wearable obstacle avoidance devices to simultaneously meet practical demands of high reliability, rapid response, long-lasting duration, and usable design. Here we report a wearable obstacle avoidance device, comprising a set of self-developed glasses (weighing ~400 grams, including an ~80 grams battery) and a common smartphone. Specifically, the glasses collect the multi-modal data for comprehensive environmental perception, including video and depth modalities, and implement a depth-aided video compression module. This module not only adaptively compresses video data to reduce transmission delay to the smartphone, but also operates on a customized FPGA board featuring a multi float-point vector unit streaming processing architecture, thereby facilitating responsive and energy-efficient obstacle detection. Additionally, we design a cross-modal obstacle detection module on the smartphone, which ensures reliable detection and provides user-friendly auditory and tactile alerts by utilizing cross-modal learning based on modal correlations. Multiple indoor and outdoor experimental results demonstrate 100% collision avoidance rates, delay of less than 320 ms, and duration of approximately 11 hours.

Introduction

Statistics from the World Health Organization (WHO) show that over 1 billion people worldwide are visually impaired or blind, significantly hindering their safety and mobility in daily life1,2,3. Despite the availability of various indoor and outdoor navigation technologies4,5,6, independent travel remains extremely challenging for them due to ubiquitous obstacles, particularly fast-moving or suddenly appearing ones, as highlighted in National Federation of the Blind (NFB) surveys7,8. For example, a study in Changsha, China identified a density of 205 obstructions in a 1.8 km district9. This finding echoes a separate survey10, where nearly 40% of visually impaired individuals reported encountering serious collision injuries at least once a year. Therefore, developing obstacle avoidance devices will play a key role in enabling safer independent mobility for visually impaired individuals11,12.

Currently, white canes and guide dogs are the most common means of obstacle avoidance. However, white canes can hardly respond to suddenly appearing obstacles in real time, while guide dogs are scarce and difficult to obtain due to strict selection criteria and a lengthy application queue12,13. In China, where there are 17 million visually impaired individuals, only around 200 guide dogs are available14. Recent years have witnessed rapid advances in sensor hardware and artificial intelligence (AI), paving the way for researchers to adopt diverse sensors for environmental perception to detect the presence of obstacles15,16,17. Based on extensive statistical data from the NFB and our practical investigation with the blind group (Supplementary Table 1)18, ideal wearable obstacle avoidance devices are expected to adhere to the following fundamental principles in terms of software design, hardware design, and user experience.

- Responsive. This means that obstacle avoidance devices offer rapid detection of potential obstacles and provide timely alert feedback. Responsiveness is typically quantified by the end-to-end (E2E) delay, encompassing the total time from data collection to alert feedback. Based on human response time from psychology and cognitive science, the E2E delay for obstacle avoidance must be no more than 320 ms19,20,21.

- Reliable. Reliability covers accuracy and robustness, that is, obstacle avoidance devices must maintain collision avoidance rates of 100% when confronted with various obstacle types (e.g., moving or stationary) and scenario changes (e.g., crowded malls or low-visibility intersections). Here the collision avoidance rate is defined as the proportion of successfully detected obstacles to the total number of obstacles.

- Durable. Duration refers to the length of time that obstacle avoidance devices can continuously operate in running mode before requiring battery recharging or replacement. Combining the cognitive accessibility standard ISO 21801 with a subjective investigation from the Disabled Persons’ Federation22, the average duration of electronic aids for visually impaired individuals typically ranges from 5 to 8 h. This translates to a power consumption requirement of less than 8 W when using a standard battery with a capacity of 12,000 mAh and a voltage of 3.7 V.

- Usable. Usability aims to ensure that obstacle avoidance devices seamlessly integrate into the lives of visually impaired individuals. Based on a series of usability standards (e.g., ISO 9241-11)12,23, this involves effectiveness, efficiency, and satisfaction. In particular, the appropriate weight of wearable obstacle avoidance devices is no more than 500 grams, which is approximately consistent with standard white canes24.
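The power budget in the Durable principle follows from simple battery arithmetic. The sketch below is illustrative only: the function names are hypothetical, and it assumes an ideal battery with no conversion losses or ageing, which the text does not state.

```python
def battery_energy_wh(capacity_mah: float, voltage_v: float) -> float:
    """Nominal stored energy in watt-hours (ideal battery, an assumption)."""
    return capacity_mah / 1000.0 * voltage_v


def runtime_hours(capacity_mah: float, voltage_v: float, power_w: float) -> float:
    """Ideal runtime at a constant power draw."""
    return battery_energy_wh(capacity_mah, voltage_v) / power_w


def max_power_w(capacity_mah: float, voltage_v: float, target_hours: float) -> float:
    """Largest constant power draw that still reaches the target runtime."""
    return battery_energy_wh(capacity_mah, voltage_v) / target_hours


if __name__ == "__main__":
    # Battery figures quoted in the text: 12,000 mAh at 3.7 V.
    print(round(battery_energy_wh(12_000, 3.7), 2), "Wh stored")
    print(round(runtime_hours(12_000, 3.7, 8.0), 2), "h at 8 W")
    print(round(max_power_w(12_000, 3.7, 8.0), 2), "W allowed for an 8 h target")
```

Under these assumptions, an 8 W draw lasts roughly 5.5 h, which sits at the lower end of the 5 to 8 h range; reaching the upper end of that range would require a lower draw.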

Table 1 presents a comprehensive survey of existing popular products and demonstrations of obstacle avoidance devices. Our findings reveal that none of them can simultaneously meet all these demands. The underlying technical reasons can be attributed to the following three aspects.

- Sensor Type: Given the stringent reliability requirements, an increasing trend is to integrate multiple sensor types for multi-modal fusion. However, due to the heterogeneity in data formats and the challenge of feature alignment across different modalities25, these devices mostly employ outcome fusion, where the detection result is constrained by the weakest component. This inevitably results in a lack of robustness in diverse scenarios.

- Data Processing: On-device obstacle detection for processing massive data fails to meet the responsiveness requirements, while connecting to a laptop compromises usability. A promising solution involves wirelessly offloading detection tasks to a carry-on smartphone, sharing the computational burden. However, directly transmitting raw data incurs significant transmission delays, whereas aggressive data compression potentially impacts the reliability of obstacle avoidance.

- Hardware Processor: Low-power and compact MCUs are commonly chosen as the embedded processors, yet their limited hardware resources struggle with real-time AI inference. A customized field-programmable gate array (FPGA) board emerges as a potential solution, balancing responsiveness and duration through parallel computation and resource adaptation. However, achieving these benefits requires scheduling hardware resources to efficiently handle matrix operations specific to the employed AI model, which remains a significant challenge.

In this work, we report an ultra-reliable, ultra-responsive, and ultra-low-power Wearable Obstacle Avoidance Device (WOAD) for visually impaired individuals, including a set of self-developed glasses and a common smartphone. On the software side, the glasses simultaneously capture video and depth data, and implement a depth-aided video compression module. This module reduces transmission delay of multi-modal data to the smartphone, enabling responsive obstacle detection without degrading collision avoidance rates. In contrast to most video compression methods that prioritize visual perception quality, this module significantly improves compression efficiency by shifting the focus from human-oriented perception to task-oriented performance. Specifically, its core is to utilize obstacle status information derived from differences between consecutive depth frames to achieve adaptive video compression and compensate for spatio-temporal loss of video features caused by dynamic compression. Additionally, a cross-modal obstacle detection module is implemented on the smartphone. Unlike most existing devices that directly integrate detection results from different sensors at the outcome level, this module ensures reliable and lightweight detection across diverse obstacle types and scenarios by fully exploiting the inter-modal correlations at the feature level. The key technical aspect lies in extracting inherent modal correlations, and leveraging these correlations to propose a feature fusion mechanism based on cross-modal learning. This mechanism performs feature-level alignment, fusion, and complementarity between video and depth modalities at varying granularities.

On the hardware side, we customize an FPGA-based computation unit to execute depth-aided video compression module, featuring a multi float-point vector unit (MFVU) streaming processing architecture. This MFVU architecture offers two key benefits: accelerated execution speed and reduced power consumption. First, it optimizes data flow for matrix operation acceleration by processing sequentially arriving elements immediately rather than waiting for all elements to arrive. Second, this architecture dynamically adapts the AI model size to match on-chip memory resources, reducing power consumption by minimizing off-chip memory access and thereby supporting longer operational durations. To enhance device usability from a user experience perspective, we also design a multi-sensory alert mechanism that integrates both auditory and tactile feedback to convey key obstacle information, including direction, distance, and hazard level. Compared to relying solely on auditory or tactile inputs for vision substitution, this multi-sensory mechanism effectively balances the tradeoff between cognitive overload and comprehensive information display by distributing the information across both sensory modalities.

During seven months of continuous use by 12 visually impaired and blind volunteers, practical tests were conducted across various typical indoor and outdoor scenarios. As visualized in Fig. 1 and Supplementary Movies 1–5, these scenarios included traversing snowy streets crowded with pedestrians, crossing low-visibility intersections with fast-moving cars, avoiding suddenly appearing pedestrians in noisy malls, and navigating staircases. Across all test scenarios, the WOAD demonstrated reliability in adapting to scenario changes and diverse obstacle types. For instance, it consistently maintained 100% collision avoidance rates, particularly for motorized vehicles traveling at velocities exceeding 10 m per second with only 50 m visibility at night, and for 18 continuously appearing pedestrians with varying walking velocities and behavioral patterns on snowy streets. The E2E delay remained below 320 ms, enabling responsive collision avoidance even when unexpectedly encountering pedestrians moving at velocities exceeding 3 m per second within a 1 m range in visual blind corners. With a power consumption not exceeding 4 W in running mode, the WOAD ensured a long-lasting duration of more than 11 h. Moreover, the self-developed glasses weigh just ~400 grams in total (including an ~80 gram battery), which is lighter than a standard white cane. The WOAD also provides multi-sensory alerts through both auditory and tactile feedback, which proved beneficial in streets exceeding 53 dB noise and malls exceeding 38 dB noise.

These scenarios cover an outdoor snowy environment, an outdoor crossroad at nighttime, an indoor shopping mall, and an indoor staircase. The movie contains five windows. Specifically, the first window is captured by a third-person camera, showcasing the behavioral details of obstacle avoidance. The second window displays the navigation. The third window visualizes the real-time power consumption of the glasses. The fourth window presents the depth signals captured by the TOF radar, along with the obstacle detection results. The fifth window shows the video signals captured by the IR camera. During testing, all participants were free to choose whether to use a white cane along with the WOAD. The movie can be watched online at https://watch.wave.video/49YvNrOXukHljCyw.

Results

General design of the WOAD

The WOAD consists of the self-developed glasses and a smartphone. Figure 2a illustrates the hardware components of the glasses, while Fig. 2b shows the whole implementation procedure of the WOAD. Specifically, the glasses collect multi-modal data, implement depth-aided video compression, and transmit the compressed data to the smartphone via wireless transmission protocol. The smartphone then executes cross-modal obstacle detection, and the corresponding detection results trigger both auditory and tactile alerts. The details are explained as follows.

a Hardware components of a set of glasses within WOAD. b Detailed implementation procedure of the WOAD. The glasses transmit multi-modal data collected from infrared (IR) and time-of-flight (TOF) sensors to the smartphone via wireless communications. The smartphone is responsible for computational obstacle detection, and detection results are fed back to the headset and glasses for multi-sensory alerts. c Network structure of cross-modal obstacle detection module. d Field-programmable gate array (FPGA) board and multi float-point vector unit (MFVU) streaming processing architecture. We take the following examples to illustrate the optimization of data flow: each float-point vector unit (FVU) processing two inputs, and each MFVU processing four inputs. Note that the employed FPGA chip is XC7A35T-1FTG256C.

Multi-modal data collection

The set of glasses integrates a compact Synexens multi-modal sensor (CS30) along with the necessary software and packages, such as GNU Compiler Collection 9.4.0, GNU C++ Compiler 9.4.0, and Open Source Computer Vision Library 4.4.0. The sensor measures 90 mm × 25 mm × 25 mm, and consists of an infrared (IR) camera and a time-of-flight (TOF) radar. The rationale behind this sensor integration is numerically validated in Supplementary Table 2 and Supplementary Fig. 1. Our integrated multi-modal sensor can simultaneously collect video and depth data, enhancing environmental perception. Specifically, high-resolution visual information from video data provides fine-grained shape and texture details for reliable obstacle detection. Depth data, in turn, delivers precise distance measurements of obstacles, and is further utilized to derive their velocity and hazard levels (Supplementary Fig. 2). In order to mimic the human field of view (FOV) and match signals from different sensors, both the camera and radar share a FOV of 100° × 75° with a high resolution of 640 × 480, and the accurate distance measurement range extends from 0.1 m to 5 m.

Depth-aided video compression

In Fig. 2b, the collected data are fed into the depth-aided video compression module via the Type C interface with USB 2.0 protocol. This module executes three procedures to achieve a video compression ratio exceeding 95% (Supplementary Fig. 3a, b) while ensuring 100% collision avoidance rates: i) Real-Time Obstacle Status Acquisition: It employs the differences between consecutive depth frames to rapidly acquire obstacle status, e.g., relative distance, velocity, and direction. ii) Adaptive Compression Configuration Selection: We leverage the Discrete Soft Actor-Critic (D-SAC) algorithm of reinforcement learning (RL, see Fig. 2b for more details) for adaptive compression configuration selection. Based on the time-varying threats posed by obstacles, it dynamically determines the frame filtering decision and the resolution setting within each video frame to balance the trade-off between compression efficiency and detection reliability. iii) Temporal-Spatial Video Compression Implementation: According to the compression configurations, frame filtering and intra-frame uneven region encoding are utilized to compress video signals from the temporal and spatial perspectives, respectively. Also, aided by the obtained obstacle status, the spatio-temporal loss of video features caused by dynamic compression can be compensated based on motion compensation.
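The first two procedures can be sketched in a few lines. The example below is a simplified illustration, not the authors' implementation: it takes the nearest in-range pixel as the obstacle, maps pixel column to bearing linearly, and replaces the D-SAC policy with a hand-written time-to-collision rule. All names and thresholds are hypothetical; only the 0.1 m to 5 m range and the 100° horizontal FOV come from the text.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Frame = List[List[float]]  # depth in metres, row-major

MIN_RANGE_M, MAX_RANGE_M = 0.1, 5.0  # sensor range stated in the text
H_FOV_DEG = 100.0                    # horizontal FOV stated in the text


@dataclass
class ObstacleStatus:
    distance_m: float         # nearest valid depth in the current frame
    closing_speed_mps: float  # positive when the obstacle approaches
    bearing_deg: float        # negative = left of centre (assumed convention)


def nearest(frame: Frame) -> Optional[Tuple[float, int]]:
    """Return (depth, column) of the nearest in-range pixel, or None."""
    best = None
    for row in frame:
        for col, d in enumerate(row):
            if MIN_RANGE_M <= d <= MAX_RANGE_M and (best is None or d < best[0]):
                best = (d, col)
    return best


def obstacle_status(prev: Frame, curr: Frame, dt_s: float) -> Optional[ObstacleStatus]:
    """Derive distance, closing speed, and bearing from two consecutive frames."""
    p, c = nearest(prev), nearest(curr)
    if p is None or c is None:
        return None
    width = len(curr[0])
    # Linear pixel-to-angle mapping: a simplification of a real camera model.
    bearing = ((c[1] + 0.5) / width - 0.5) * H_FOV_DEG
    return ObstacleStatus(c[0], (p[0] - c[0]) / dt_s, bearing)


def select_config(status: Optional[ObstacleStatus]) -> Tuple[bool, float]:
    """Return (keep_frame, resolution_scale); a rule-based stand-in for D-SAC."""
    if status is None:
        return (False, 0.25)  # nothing in range: filter the frame out
    if status.closing_speed_mps <= 0:
        return (True, 0.25)   # static or receding: keep at low resolution
    time_to_collision_s = status.distance_m / status.closing_speed_mps
    return (True, 1.0) if time_to_collision_s < 1.0 else (True, 0.5)
```

A learned policy would replace `select_config`; the rule above only shows how a higher threat could map to less aggressive compression.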

In terms of hardware deployment, this module operates on the FPGA-based computation unit. As shown in Fig. 2a, it comprises two boards: i) ARM Board: This board manages multi-modal data collection, two procedures within depth-aided video compression, and multi-sensory feedback alert. It features the Amlogic 12 nm Cortex-A55 processor with 4-core CPU, supporting versatile encoding and decoding methods (e.g., H.26X) of 4K@60fps video. ii) FPGA Board: This board is dedicated to deploying the proposed RL for adaptive compression configuration selection. The custom-designed 28 nm FPGA offers on-chip computation with 90 DSPs, 33280 LEs, 1800 K BRAMs, 1.8 M MCs, and a maximum clock frequency of 450 MHz. As depicted in Fig. 2d, an MFVU streaming processing architecture is specifically designed on the FPGA board. This architecture achieves efficient matrix operations by introducing processing elements (i.e., float-point vector units, FVUs) to optimize data flow, and achieves low power consumption by adapting the RL model size to on-chip memory resources.
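The streaming idea, processing elements as they arrive instead of waiting for the full input, can be illustrated in software with a matrix-vector product. This is a minimal sketch and not the FPGA design: a Python iterable stands in for the input stream, and the hypothetical `lanes` parameter stands in for the number of inputs a vector unit consumes per step.

```python
from typing import Iterable, Iterator, List, Sequence


def chunks(stream: Iterable[float], lanes: int) -> Iterator[List[float]]:
    """Group an input stream into batches of `lanes` elements as they arrive."""
    batch: List[float] = []
    for x in stream:
        batch.append(x)
        if len(batch) == lanes:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch


def streaming_matvec(
    weights: Sequence[Sequence[float]], stream: Iterable[float], lanes: int = 4
) -> List[float]:
    """Compute y = W x while x arrives element by element.

    Each arriving batch immediately updates every output accumulator, so the
    full input vector never has to be buffered before computation starts.
    """
    acc = [0.0] * len(weights)
    col = 0
    for batch in chunks(stream, lanes):
        for i, row in enumerate(weights):
            # One multiply-accumulate per lane; hardware would run these in parallel.
            acc[i] += sum(row[col + k] * x for k, x in enumerate(batch))
        col += len(batch)
    return acc
```

The result is independent of `lanes`; only the amount of work done per arriving batch changes, which is the property the streaming design exploits.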

Cross-modal obstacle detection

A cross-modal obstacle detection module is designed and deployed on the smartphone in a lightweight manner. It ensures accuracy and robustness for detecting various obstacles across changing scenarios when receiving compressed signals from the glasses via 4G/5G, Wi-Fi 6.0, or Bluetooth 5.0.

As illustrated in Fig. 2c, this module encompasses three procedures: i) Separate Feature Extraction: It utilizes the initial layers of pre-trained object detectors to separately extract intra-modal features from video signals (e.g., texture, shape) and depth signals (e.g., distance, velocity). ii) Cross-Modal Feature Fusion: Video and depth features are firstly concatenated within a common feature space. Then, Transformer blocks are utilized to comprehensively characterize the inherent modal correlations through a self-attention mechanism. Based on these correlations, an inter-modal attention mechanism is employed to align, fuse, and complement the features at different granularities, dynamically assigning weights to video and depth modalities. This procedure achieves reliable and lightweight obstacle detection across various scenarios. iii) Decision-Making Network: Based on the features obtained after cross-modal fusion, the remaining layers of pre-trained object detectors are used to infer the obstacle detection results.
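The concatenate-then-attend step of the fusion procedure can be sketched with plain scaled dot-product self-attention. This is a toy illustration under strong assumptions: feature tokens are short Python lists, the query, key, and value projections are identity maps rather than learned weights, and there is a single head. It is not the network in Fig. 2c.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        weights = softmax(scores)
        # Each output token is a weighted mix of all tokens from both modalities.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(dim)])
    return out


def fuse(video_tokens: List[Vector], depth_tokens: List[Vector]) -> List[Vector]:
    """Concatenate both modalities into one sequence, then attend across it."""
    return self_attention(video_tokens + depth_tokens)
```

Because attention runs over the concatenated sequence, every video token can draw on depth tokens and vice versa, which is the sense in which the weights assigned to the two modalities are dynamic.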

Multi-sensory feedback alert

The detection results are wirelessly fed back to the headset and vibrotactile motors on the glasses, triggering multi-sensory alerts in real-time11,26,27. Specifically, besides the traditional auditory alert, we deploy coin-type vibrotactile motors (thickness of 2.7 mm and outer diameter of 10 mm) to offer tactile alerts. For the vibration pattern, Pulse Width Modulation (PWM) signals are employed to control the vibration intensity and frequency by adjusting the duty cycle and PWM frequency. Settings of the vibration temporal patterns, including duration, intervals, and refresh rates, are provided in Supplementary Fig. 4. Key obstacle information is conveyed through this multi-sensory alert feedback mechanism, including direction, distance, and hazard level.

As primary information, direction and distance of obstacles are conveyed through tactile stimuli, which are essential for visually impaired individuals to ensure basic safety by enhancing their spatial awareness. Compared to auditory alerts, tactile stimuli offer clearer and more immediate reminders, especially when encountering fast-moving obstacles in noisy environments. In particular, the spatial location of vibrotactile motors indicates distinct directions, while varying vibration frequencies represent different distance levels. As depicted in Fig. 2a and Supplementary Fig. 4, three motors are strategically positioned on sensitive areas of the skin, including the forehead and the regions near the temples on both sides, to align with the human horizontal FOV, which ranges roughly from −60° to +60°. Following the frequency settings from ISO 9241-920:2024 and the distance settings proposed by Van Erp et al.27,28,29, we use frequencies of 200 Hz and 150 Hz, which are readily distinguishable to humans. A 200 Hz frequency is triggered when the distance is less than 3 meters, while a 150 Hz frequency is used when the distance exceeds 3 meters.
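The tactile encoding described above amounts to a small lookup from obstacle bearing and distance to a motor and a vibration frequency. The sketch below is hypothetical in several respects that the text does not specify: the three motors are assumed to cover equal 40° sectors, negative bearings are assumed to mean left, and a distance of exactly 3 m is treated as far.

```python
from dataclasses import dataclass

H_FOV_MIN_DEG, H_FOV_MAX_DEG = -60.0, 60.0  # horizontal FOV range from the text
NEAR_THRESHOLD_M = 3.0                      # distance boundary from the text


@dataclass(frozen=True)
class TactileCue:
    motor: str         # "left", "forehead", or "right"
    frequency_hz: int  # 200 for near obstacles, 150 for far ones


def select_motor(bearing_deg: float) -> str:
    """Pick a motor by direction; equal 40-degree sectors are an assumption."""
    if not H_FOV_MIN_DEG <= bearing_deg <= H_FOV_MAX_DEG:
        raise ValueError("bearing outside the horizontal field of view")
    if bearing_deg < -20.0:
        return "left"
    if bearing_deg > 20.0:
        return "right"
    return "forehead"


def select_frequency(distance_m: float) -> int:
    """Pick the vibration frequency by distance level."""
    # The text leaves exactly 3 m unspecified; this sketch treats it as far.
    return 200 if distance_m < NEAR_THRESHOLD_M else 150


def tactile_cue(bearing_deg: float, distance_m: float) -> TactileCue:
    return TactileCue(select_motor(bearing_deg), select_frequency(distance_m))
```

For example, `tactile_cue(-45.0, 1.2)` selects the left motor at 200 Hz under these assumptions.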

As secondary information, conveying hazard levels through auditory feedback offers a more comprehensive description of obstacles. Unlike reporting the obstacle type, the choice to prioritize hazard levels can be justified by two key factors. First, hazard levels directly represent the potential threats posed by obstacles, which obstacle type alone cannot accurately capture. For instance, vehicles in distinct motion states may present significantly different levels of threat. Second, three hazard levels (i.e., Attention, Warning, and Danger) reduce the cognitive load compared to processing numerous obstacle types, thereby facilitating quicker decision-making for visually impaired individuals.

Scenario I: Indoor environments

After seven months of continuous use of the WOAD by 12 visually impaired and blind volunteers, we selected three representative indoor scenarios based on obstacle type and spatial distribution. These scenarios, illustrated in Fig. 3a, mainly encompass dense obstacles, suddenly appearing obstacles, and up-down stairs. As depicted in Fig. 3b, the first scenario type features a high density of stationary and moving obstacles, such as three cartons and two walking pedestrians with an average velocity of 2 m per second. The second scenario type involves visual blind spots where moving obstacles suddenly appear, such as pedestrians moving faster than 3 m per second within a 1 m range. The third scenario type focuses on the accurate detection of stair start and end points, along with avoiding randomly walking pedestrians. Across 720 trials, we not only measured and visualized various metrics including walking trajectory, velocity, time, and distance to examine the WOAD’s smooth mobility13,30, but also recorded metrics such as collision avoidance rate, E2E delay, and power consumption to validate the functional strengths of ultra-reliable, ultra-responsive, and ultra-low-power obstacle avoidance.

a Visualization of indoor test scenarios and walking trajectories using the wearable obstacle avoidance device (WOAD) and a white cane. The red dashed circles mark nine abrupt directional changes for the white cane. b Video frame sequences in different scenarios and typical detection results. Video frames in the first three columns are captured by the third-person camera, while the typical detection results in the fourth column are derived from the cross-modal obstacle detection module. c–e Mobility evaluation in terms of walking velocity, time, and distance. A two-sided ANOVA was used to assess significant differences between the two devices, with specific p-values provided in the figures. Box plots depict the mean (square), median (central line), the first and third quartiles (box), and whiskers extending to 1.5 times the interquartile range from the first and third quartiles, respectively (n = 120, with 10 replicates for each of 12 participants). Source data are provided with this paper. f Collision avoidance rate comparison between the two devices. The WOAD maintains 100% collision avoidance rates across three scenarios, showing significant performance improvements of 8% and 74% in scenario 1 and scenario 2, respectively. g E2E delay of the WOAD. Compared to the E2E delay requirement (i.e., 320 ms), the WOAD achieves delay reductions of 8.4%, 10.6%, and 1.6% across the three scenarios. h Power consumption in both standby and running modes. In running mode, the power consumption of the WOAD is around 3.5 W. The shadings of solid lines (mean) represent error bars (standard deviation). n = 3 replicates for each scenario.

For mobility evaluation, the WOAD (yellow line) exhibited smoother and more direct motion compared to the white cane (blue line), which showed multiple abrupt directional changes throughout the tests (Fig. 3a). This disparity arises because the white cane explores the surrounding environment through continuous ground contact in various directions. As depicted in Fig. 3c–e, a two-sided analysis of variance (ANOVA) was used to test the significance of differences between the two devices across all three indoor scenarios. Specifically, the WOAD led to increases in walking velocity of 8.2%, 11.6%, and 24.5%, while walking time was reduced by 3.5%, 9.1%, and 35.5%. Furthermore, walking distance in Scenario 3 was decreased by 15.6% with the WOAD compared to the white cane.

In contrast to the white cane’s collision avoidance rate of only 26% for suddenly appearing obstacles, which pose a potential life-safety challenge, the volunteers wearing the WOAD achieved 100% collision avoidance rates across all scenarios, as demonstrated in Fig. 3f. The E2E delay of the WOAD remained below 320 ms in all three scenarios (Fig. 3g), encompassing data collection and compression, data transmission, obstacle detection, and vibration perception. Notably, the transmission delay is primarily influenced by the amount of compressed data, while the vibration perception is determined by human temporal sensitivity, measured at 20 ms under the guidance of ISO 9241-920:2024 (Supplementary Fig. 5). The total value meets the strict responsiveness requirement, which allows the WOAD to react effectively even to fast-moving pedestrians exceeding 3 m per second, suddenly appearing within a 1 m range in corners. In terms of device duration, Fig. 3h shows the power consumption under the three scenarios, both in standby and running modes. Compared to the requirement of less than 8 W, the WOAD achieved an average power saving of 56%.
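The percentages reported for the indoor tests can be related back to absolute values with a few lines of arithmetic. The sketch below uses the 320 ms and 8 W requirements, the delay reductions of 8.4%, 10.6%, and 1.6%, and the running power of about 3.5 W quoted for Fig. 3; the function names are hypothetical and the derived delays are approximations, not measured values.

```python
E2E_REQUIREMENT_MS = 320.0
POWER_REQUIREMENT_W = 8.0


def value_after_reduction(requirement: float, reduction_pct: float) -> float:
    """Absolute value implied by a percentage reduction from a requirement."""
    return requirement * (1.0 - reduction_pct / 100.0)


def saving_pct(requirement: float, measured: float) -> float:
    """Percentage saved relative to a requirement."""
    return (requirement - measured) / requirement * 100.0


if __name__ == "__main__":
    # Delay reductions quoted for the three indoor scenarios.
    for scenario, reduction in enumerate((8.4, 10.6, 1.6), start=1):
        delay_ms = value_after_reduction(E2E_REQUIREMENT_MS, reduction)
        print(f"scenario {scenario}: about {delay_ms:.1f} ms")
    # Running power of about 3.5 W against the 8 W requirement.
    print(f"power saving: about {saving_pct(POWER_REQUIREMENT_W, 3.5):.0f}%")
```

The implied delays are roughly 293 ms, 286 ms, and 315 ms, and a 3.5 W draw against an 8 W budget corresponds to the reported saving of about 56%.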

Scenario II: Outdoor environments

In contrast to indoor environments, navigating outdoor environments presents greater challenges due to tactile pavings being occupied by diverse obstructions, the presence of fast-moving motorized vehicles on noisy streets without traffic lights, and adverse weather conditions (e.g., rain, snow, and low-visibility nights). After seven months of continuous use of the WOAD by 12 visually impaired and blind volunteers, we selected four typical and most challenging outdoor scenarios: occupied tactile pavings and busy crossroads without traffic lights, both in daytime and nighttime (Fig. 4a, b). In particular, the velocity of fast-moving vehicles ranges from 10 m per second to 16 m per second, with visibility for drivers less than 50 m at night.

Figure 4a shows two walking trajectories generated by the same volunteer using the WOAD and the white cane, respectively. The WOAD facilitated much smoother movement along the yellow trajectory compared to the blue trajectory corresponding to the white cane. Figure 4c–e quantitatively illustrates the smooth mobility provided by the WOAD. A two-sided ANOVA revealed significant improvements in both walking velocity and time. Specifically, Fig. 4c shows that the WOAD significantly increased walking velocity in occupied tactile paving scenarios, with notable increases of 21.1% during the day and 31.3% at night. In busy crossroad scenarios, daytime velocity increased by 6.8%, whereas nighttime velocity saw a substantial increase of 36.3%. Regarding walking time, Fig. 4d shows significant improvements (22.2%, 27.8%, 10.9%, and 25.4%) with the WOAD across all four scenarios. Finally, Fig. 4e demonstrates that volunteers using the WOAD walked a shorter distance than when using the white cane.

Figure 4f demonstrated the accuracy and robustness of the WOAD across various obstacle types and scenario changes. Notably, when confronted with fast-moving cars exceeding 10 m per second at nighttime (refer to Fig. 4b), the WOAD consistently achieved a remarkable collision avoidance rate of 100%, compared to merely ~40% when using a white cane. The achievement of this metric can primarily be attributed to two key factors. First, a cross-modal obstacle detection module is implemented to enhance reliability through leveraging correlations between two different modalities. Second, to fundamentally ensure human safety, the WOAD is designed to identify any possible threats. However, this safety-first approach occasionally results in a small number of false alerts during practical tests, where non-obstacles are mistakenly recognized as potential obstacles due to similar features influenced by environmental factors (e.g., shadow, snowflakes). From the volunteers’ perspective, they agreed that these false alerts are acceptable, provided that human safety is ensured. Figure 4g illustrated that the E2E delay was less than 320 ms, enabling volunteers to proactively detect and avoid obstacles in real-time. Figure 4h exhibited that the power consumption measured in all outdoor scenarios was no more than 4 W, regardless of running or standby mode. This efficiency allowed for a long-lasting duration of about 11 h, satisfying approximately 8 h of consumer-level daily usage.

a Visualization of outdoor test scenarios and walking trajectories using the wearable obstacle avoidance device (WOAD) and a white cane. When approaching moving pedestrians and bikes, the WOAD facilitated much smoother movement along the yellow trajectory, while the blue trajectory corresponding to the white cane exhibited five abrupt directional changes (see the red dashed circles). b Video frame sequences and typical detection results. Video frames in the first three columns are captured by the third-person camera, while the typical detection results in the fourth column are derived from the cross-modal obstacle detection module. c–e Mobility evaluation in terms of walking velocity, time, and distance. A two-sided ANOVA was used to assess significant differences between the two devices, with specific p-values provided in the figures. Box plots depict the mean (square), median (central line), the first and third quartiles (box), and whiskers extending to 1.5 times the interquartile range from the first and third quartiles, respectively (n = 120, with 10 replicates for each of 12 participants). Source data are provided with this paper. f Collision avoidance rate comparison. The WOAD showcases robustness across the four scenarios, with remarkable improvements of 28%, 27%, 59%, and 60%. g E2E delay of the WOAD. Across four scenarios, the WOAD achieves delay reductions of 11.6%, 13.8%, 6.3%, and 15.6%, respectively. h Power consumption in both standby and running modes. Compared to the power requirement, the WOAD in running mode saves approximately 56% of the power. The shadings of solid lines (mean) represent error bars (standard deviation). n = 3 replicates for each scenario.

Survey

To gather user feedback on the practical usage of the WOAD, we conducted a survey with 8 visually impaired and blind volunteers who had extensive experience using assistive tools like white canes or guide dogs. After completing experiments, these volunteers responded to a survey consisting of 26 questions. These questions covered three broad topics: personal information (Supplementary Table 3), device usability (Table 2), and open discussion (Supplementary Table 1). Note that we have obtained consent for the publication of identifiable information, such as age, gender, image, etc. For device usability, we quantified their responses on a scale ranging from 1 to 5. In this scale, 1 denotes Strongly Disagree and 5 denotes Strongly Agree.

In terms of reliability, these volunteers rated the WOAD significantly higher, with an average score of 4.125, compared to an average of 2.75 for the white cane. This was particularly evident when encountering diverse obstacle types (e.g., stationary and moving vehicles) across various scenarios (e.g., crowded malls or low-visibility intersections). Moreover, the enhanced reliability of the WOAD also contributed to improved walking velocity (average 4.125) compared to using a white cane (average 3.25). Interviews with visually impaired volunteers revealed two main reasons why the WOAD’s user ratings for reliability did not achieve a perfect score of 5. First, from a psychological perspective, the Expectation Discrepancy inevitably contributes to the degradation in reliability. Before participating in the practical tests, all volunteers had high expectations for the unknown obstacle avoidance device. Given their primary concern for safety, they simply wished for the device to be as secure as possible. In their minds, the standard for reliability was not defined by a specific value. As a result, although the volunteers acknowledged that the device performed excellently in terms of reliability, they felt that there was still room for improvement, making it difficult for the device to achieve the highest possible rating. Second, two other factors influence reliability ratings based on user feedback. To ensure 100% collision avoidance rate, the WOAD is designed to identify any potential threat to safety. However, due to environmental influences (e.g., snowflakes), this safety-first design leads to an extremely low probability of non-obstacles being mistakenly identified as obstacles, which can slightly degrade reliability for volunteers with perfectionistic personality traits. Also, the level of familiarity with the device also plays a role in shaping its perceived reliability. 
For responsiveness in terms of rapid obstacle avoidance and timely feedback alert, the WOAD received average scores of 4.25 and 4.125, respectively, compared to 2.875 and 3.125 for the white cane. These benefits were particularly noticeable when encountering fast-moving vehicles on low-visibility nights and suddenly appearing pedestrians at corners. For duration, the WOAD’s 11 h duration was satisfactory for daily use, receiving an average score of 4.625. In terms of usability, we asked 10 additional questions regarding effectiveness, efficiency, and satisfaction, following the international standard ISO 9241-11 (ref. 31). In particular, based on the feedback from volunteers, the deficits in user satisfaction stemmed from the following three aspects: i) Device Appearance. While the WOAD was lightweight and comfortable for long-term wear during practical tests, some interviewees expressed concern that wearing it might attract unwanted attention from passersby, which they preferred to avoid in their daily lives. ii) High Expectations for Reliability. As mentioned earlier, a small number of non-obstacles were occasionally identified as potential obstacles, which may negatively impact user satisfaction. iii) Device Familiarity. The visually impaired volunteers had used the white cane for 3 to 20 years, compared to just seven months with the WOAD, making the latter unfamiliar to them. Although the tactile pattern within the WOAD is simple and intuitive, it still requires volunteers to undergo a training period to learn the specific patterns (e.g., various vibration directions) before participating in practical tests.

Discussion

This article reports the WOAD for visually impaired individuals, designed to achieve ultra-reliable, ultra-responsive, and ultra-low-power obstacle avoidance. To ensure rapid response, a depth-aided video compression module is integrated into the self-developed glasses, utilizing obstacle status information derived from depth data for adaptive video compression. For high reliability, a cross-modal obstacle detection module is implemented in a lightweight form on the smartphone carried by the user, characterizing and leveraging inherent modal correlations to improve the accuracy and robustness of obstacle detection across various obstacle types and scenarios. For both long-lasting duration and rapid response, a customized FPGA board with an MFVU streaming processing architecture is used to implement adaptive video compression. This architecture optimizes data flow and adapts on-chip memory resources to accelerate execution speed and reduce power consumption. To comprehensively evaluate obstacle avoidance performance, visually impaired and blind volunteers wearing the WOAD navigated diverse indoor and outdoor scenarios. Typically, these scenarios included office buildings and malls with suddenly appearing pedestrians, up-down stairs, snowy streets with crowded pedestrians, and crossroads with fast-moving vehicles on low-visibility nights. Under these scenarios, numerical results demonstrated 100% collision avoidance rates, E2E delay below 320 ms, and less than 4 W power consumption. Moreover, the glasses weigh only ~400 grams in total (including an ~80 gram battery), and provide non-disruptive auditory and tactile alerts. Overall, compared with existing popular products and demonstrations listed in Table 1, the WOAD effectively balances the tradeoff among reliability, responsiveness, duration, and usability.

Furthermore, the WOAD’s technical contributions can extend to address challenges faced by micro unmanned devices (e.g., drones and robots) in terms of environmental adaptation and handling computation-intensive or delay-sensitive tasks with limited computation and power capacity. This allows micro unmanned devices to play a significant role in a broader range of application scenarios. For example, in emergency search and rescue, they facilitate access to narrow or dangerous spaces to find survivors or hazardous objects. In environmental monitoring, they can assist in environmental protection and disaster early warning in harsh environments such as high-altitude uninhabited areas. Specifically, the WOAD can provide potential solutions to the following challenges.

1. Low-Power Rapid Autonomous Decision-Making. In the WOAD, the proposed depth-aided video compression mechanism rapidly offloads task-related data to the edge wirelessly, without compromising task performance. Simultaneously, the streaming processing architecture of the FPGA board is optimized to fit the task characteristics. This methodology for integrating edge-device collaborative learning with on-device hardware design enables energy-efficient and rapid autonomous decision-making. It serves as a potential solution to the challenge faced by micro unmanned devices in handling computation-intensive or delay-sensitive tasks with limited computation and power capacity.

2. Comprehensive Environmental Understanding. The WOAD extracts correlations among multi-modal data, and leverages them to develop an obstacle detection module through cross-modal learning. This methodology for multi-granularity, feature-level cross-modal fusion enables micro unmanned devices to reliably and efficiently understand their surrounding environment. It provides a solution to improve their environmental adaptation in distinct scenarios, including autonomous navigation, risk identification and mitigation.

In future work, we plan to enhance the WOAD from the perspective of device appearance. We envision leveraging advancements in flexible electronics and multidisciplinary research to transform the glasses into a pair of fashionable, compact sunglasses32,33. The redesigned device will seamlessly integrate more miniaturized, soft, and comfortable circuit modules and computation units, further enhancing user acceptance and social integration34.

Methods

Experiment design

This section primarily outlines the scenario designs used in various practical tests, emphasizing the WOAD’s superior obstacle avoidance performance in both indoor and outdoor environments with a variety of obstacles. Before detailing the scenarios, we first identified the common and typical obstacles encountered in daily life.

Based on discussions with visually impaired individuals and a thorough review of existing works and public datasets, we broadly categorized obstacles into two types: obstruction-type and guidance-type obstacles (Supplementary Table 4). Specifically, the obstruction-type obstacles refer to physical objects or barriers that restrict or impede the ability to move freely within an environment. They are further divided into stationary and moving obstacles based on their motion state. Typical moving obstacles include pedestrians and fast-moving vehicles, while stationary obstacles are categorized by height: waist-below (e.g., chairs, roadblocks) and waist-above (e.g., poles, trees). In contrast, guidance-type obstacles influence walking behavior (e.g., navigation, orientation) by providing cues or signals for movement, such as zebra crossings and entrances. For both categories, test results on representative examples have demonstrated the WOAD’s robust detection performance across a wide range of obstacles (Figs. 3b, 4b, and Supplementary Fig. 6).

In indoor environments, we have comprehensively considered three typical application scenarios, encompassing dense obstacles, suddenly appearing obstacles, and up-down stairs. Specifically, i) Dense Obstacles Scenario: This scenario is commonly found in crowded areas like malls (refer to Scenario 3 in Fig. 1) and office buildings (refer to Scenario 1 in Fig. 3). It features a high density of both stationary and moving obstacles (e.g., trash cans, cartons, moving pedestrians). Practical tests in office buildings were conducted as an example scenario. Three cartons, each with a random dimension exceeding 85 cm in length, 50 cm in width, and 55 cm in height, were positioned less than 2 m apart. Meanwhile, two pedestrians walked at an average velocity of 2 m per second. This scenario serves as a comprehensive test for reliability and responsiveness when encountering a variety of obstacles in a short span of time. ii) Suddenly Appearing Obstacles Scenario: This scenario typically occurs in visual blind spots such as indoor corners. During our practical tests across malls (refer to Scenario 3 in Fig. 1) and office buildings (refer to Supplementary Movie 1 and Scenario 2 in Fig. 3), pedestrians unexpectedly appeared at speeds exceeding 3 m per second within a 1 m range. This scenario serves as a crucial test for responsiveness when encountering the sudden appearance of obstacles. iii) Up-Down Stairs: Stairs are a critical component of indoor navigation within buildings (refer to Scenario 4 in Fig. 1, Supplementary Movie 2, and Scenario 3 in Fig. 3). The primary challenge for navigating stairs lies in how to accurately perceive start and end points in real-time. Volunteers randomly selected stairs in different places and encountered walking pedestrians on the stairs. Notably, Fig. 3b illustrates upward stair navigation as an example, while downward movements during both nighttime and daytime are shown in Supplementary Fig. 7.

In outdoor environments, we considered four prevalent yet challenging scenarios: occupied tactile pavings in both daytime and nighttime, and busy crossroads without traffic lights in both daytime and nighttime. Specifically, i) Occupied Tactile Pavings in Daytime: This scenario focuses on the challenge of navigating around multiple obstacles on tactile pavings in a noisy street (refer to Scenario 1 in Fig. 1 and Scenario 1 during daytime in Fig. 4). These obstacles include stationary vehicles and crowded pedestrians randomly walking at around 2 m per second. ii) Occupied Tactile Pavings in Nighttime: Unlike daytime scenarios where pedestrians may actively avoid approaching volunteers, the key challenge at night lies in navigating around both stationary and moving obstacles in low visibility, typically less than 50 m (refer to Scenario 1 during nighttime in Fig. 4). iii) Busy Crossroad without Traffic Lights in Daytime: This scenario emphasizes the need for rapid detection and response to fast-moving obstacles at a crossroad without traffic lights (refer to Supplementary Movie 3 and Scenario 2 during daytime in Fig. 4). Obstacles include running pedestrians and fast-moving motorized vehicles exceeding 10 m per second in velocity. iv) Busy Crossroad without Traffic Lights in Nighttime: This involves navigating a crossroad with fast-moving pedestrians and motorized vehicles, but with the added challenge of low visibility, limited to less than 50 m (refer to Scenario 2 in Fig. 1 and Scenario 2 during nighttime in Fig. 4).

User study

A total of 20 volunteers with impaired vision, who were invited with assistance of the Jiangsu Disabled Persons’ Federation, participated in our experiments. All participation was fully voluntary without receiving any compensation, and each volunteer gave informed written consent for the publication of identifiable information. In particular, 12 volunteers provided quantitative feedback on various metrics, including walking trajectory, velocity, time, distance, collision avoidance rate, E2E delay, and power consumption, to comprehensively evaluate mobility, reliability, responsiveness, and duration. Additionally, we conducted a series of surveys with another 8 volunteers, who had extensive experience with various assistive devices (e.g., white canes or guide dogs), to gather insights into their practical experiences with device usability and engage in open discussions.

Depth-aided video compression

Challenge

Under the premise of ensuring reliability and usability, obstacle avoidance devices need to detect and respond to obstacles within a millisecond-scale delay to effectively handle suddenly appearing and fast-moving obstacles. In general, E2E delay is regarded as the crucial metric to characterize responsiveness. For AI-based obstacle avoidance devices, the E2E delay primarily comprises data collection, data processing, AI inference for obstacle detection, and alert feedback. In particular, for the compact WOAD, which has limited hardware resources, deploying multi-modal sensors to gather extensive heterogeneous data can improve reliability but significantly increases the E2E delay due to complex data processing and computation-intensive AI inference. To overcome this difficulty, a feasible method is to offload part of the computational burden from the WOAD by wirelessly transmitting data to the smartphone carried by the user. However, transmitting raw multi-modal signals directly to the smartphone incurs additional transmission delay, increasing E2E delay. Consequently, our focus shifts to on-device compression of the massive video data to reduce transmission delay without impacting the reliability of obstacle avoidance.

Recent research on video analytics tasks (e.g., object detection, semantic segmentation) has argued that discarding frames or setting lower resolution for pixels irrelevant to target tasks may improve compression efficiency without impacting task performance35,36,37. Indeed, our tests have also confirmed that objects can be accurately detected at 320P resolution using computation-intensive deep neural networks (DNNs), while higher resolutions like 1080P or even 4K offer negligible improvements in detection accuracy. Inspired by these findings, the WOAD aims to compress the massive video data from two perspectives: i) From a temporal perspective, video frames irrelevant to obstacle detection are filtered out. ii) From a spatial perspective, intra-frame uneven encoding is performed to emphasize regions that contain critical information for obstacle detection. These perspectives involve different parameters, such as the filtering decision and the resolution setting. Without loss of generality, a combination of the parameters used by these compression methods (i.e., frame filtering, intra-frame uneven encoding) can be regarded as a compression configuration. As such, the bottlenecks are summarized as follows.

On one hand, given the obstacle characteristics such as diverse types, sudden appearances, time-varying and unpredictable status, one critical issue for the WOAD is how to adaptively select the best compression configuration over time, subject to the following requirements: i) The rationale for differentiated intra-frame region encoding quality should be based on the detection difficulty and potential threats posed by diverse obstacle types. For instance, it is common to assign a lower encoding quality to pedestrians compared to bikes within a video frame. This is because pedestrians tend to exhibit more standardized and recognizable features than bikes, making them more resilient to lower encoding quality. Moreover, higher encoding quality is necessary for moving obstacles compared to stationary ones within the same frame. ii) The adjustment of compression configurations should be real-time to promptly respond to changes in obstacle status and surrounding environment. For example, when approaching vehicles with fluctuating velocities, frame rates and encoding quality need to be dynamically adjusted to match the current velocity and distance of vehicles.

On the other hand, due to the stringent reliability requirement, another issue is how to consistently ensure reliable obstacle avoidance under frequent changes in compression configuration selection. Aggressive video compression may lead to spatio-temporal information loss of video frames critical for reliable obstacle detection. For instance, frequent changes in video frame rates can introduce errors in motion estimation, thereby diminishing the localization and distance precision of fast-moving obstacles.

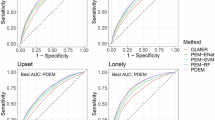

To address these two issues, we propose a depth-aided video compression module, which employs timely obstacle status acquired from consecutive depth map differences to guide adaptive video compression based on RL. Compared with State-of-the-Art (SOTA) methods, this module significantly enhances compression efficiency, reducing transmission delay while maintaining reliable obstacle detection (Supplementary Table 5 and Supplementary Fig. 8).

Real-time obstacle status acquisition

The depth data collected from the TOF radar is typically depicted as a depth map, which essentially consists of a two-dimensional array providing a direct representation of object distances. This straightforward data format enables the timely acquisition of obstacle status, including relative distance, velocity, and direction, by calculating the difference between consecutive depth maps. As such, leveraging this status information can offer a two-fold benefit to video compression. Firstly, the relative velocity, distance, direction, and trajectory can help compensate for the spatio-temporal information loss of video frames caused by dynamic compression through motion compensation. Secondly, the regions of potential obstacles can be identified with assistance of status information. This location information can further guide intra-frame uneven encoding, such as selective encoding, region of interest (ROI) encoding. The detailed analysis and steps are as follows.

Step 1: Depth Signal Pre-Processing. Due to lighting variations, the depth maps from depth signals contain some noise and outliers. Bilateral filtering is employed for raw depth signals, effectively filtering noise while preserving key visual details, such as obstacle edges.

Step 2: Depth-Aided Obstacle Status Acquisition. This step analyzes inter-frame depth signals to acquire obstacle status information, exemplified by relative distance and velocity. Specifically, by utilizing the distance values provided by pre-processed depth maps, relative motion distances of potential obstacles can be calculated by comparing the distances between consecutive depth maps, denoted as dis. Combining this with depth signal frame rates enables determination of relative motion velocities, denoted as vel.

Step 3: Motion Compensation. Aided by obstacle status information, this step aims to mitigate the spatio-temporal information loss of video frames caused by frequent changes in compression configurations. Specifically, we first calculate a motion vector for each video pixel by decomposing motion distance and velocity into horizontal and vertical components. Then, motion compensation is applied to each video pixel by shifting its position based on the motion vectors. Finally, bilinear interpolation further ensures smoothness in the compensated video frames.

Step 4: Obstacle Region Identification. This step utilizes the depth difference between consecutive depth maps to identify obstacle locations. By taking into account relative motion velocity, the regions with significant differences are identified as the potential obstacle locations, with their four coordinates denoted as c1, c2, c3, and c4. In subsequent steps, these regions containing obstacles are prioritized for high-quality encoding to facilitate accurate obstacle detection. Conversely, other regions are assigned low-quality encoding to optimize compression efficiency.
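Steps 2 and 4 above can be illustrated with a minimal sketch. It assumes that depth maps are plain 2D lists of distances in metres, that dis is the largest per-pixel decrease in depth between two frames, and that the obstacle region is an axis-aligned box whose four numbers stand in for c1 to c4. The function name `obstacle_status` and the parameter `diff_threshold` are hypothetical and do not come from the WOAD implementation.

```python
from typing import List, Optional, Tuple

DepthMap = List[List[float]]  # distances in metres, row-major (assumed layout)


def obstacle_status(
    prev: DepthMap,
    curr: DepthMap,
    fps: float,
    diff_threshold: float = 0.2,  # hypothetical threshold for a "significant" change
) -> Tuple[float, float, Optional[Tuple[int, int, int, int]]]:
    """Estimate (dis, vel, box) from two consecutive pre-processed depth maps.

    dis: largest per-pixel decrease in depth, i.e. how far the fastest
         approaching surface moved toward the sensor between the two frames.
    vel: dis multiplied by the depth frame rate (metres per second).
    box: (row_min, col_min, row_max, col_max) around every pixel whose absolute
         depth change exceeds diff_threshold, or None when no pixel does.
    """
    dis = 0.0
    rows: List[int] = []
    cols: List[int] = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            delta = p - q  # positive when the surface moved closer
            if abs(delta) > diff_threshold:
                rows.append(r)
                cols.append(c)
            dis = max(dis, delta)
    vel = dis * fps
    box = (min(rows), min(cols), max(rows), max(cols)) if rows else None
    return dis, vel, box
```

In this sketch the box would be the region assigned high-quality encoding, while everything outside it would receive low-quality encoding.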

Adaptive compression configuration selection

In time-varying and unpredictable environments, we employ a learning-based RL framework to implement adaptive compression configuration selection. The RL trains a neural network model to adaptively determine the selection policy of video compression configurations, i.e., frame filtering decision and resolution setting within the frame, based on the current obstacle status and previously selected compression configurations.

Without loss of generality, we construct the RL framework by considering the following three core components.

Action space

In order to compress video signals as much as possible, the action space in RL encompasses redundant frame filtering and intra-frame uneven region encoding from temporal and spatial perspectives, respectively. Specifically, the action at time step t is the selected compression configuration, denoted as \({{\bf{a}}}_{t}=\{{fps}_{t},{QV}_{t}\}\). Here, \({fps}_{t}\in \{0,1\}\) is a binary frame filtering decision: \({fps}_{t}=1\) denotes the transmission of the current frame, while \({fps}_{t}=0\) indicates filtering. \({QV}_{t}\in \{0,\ldots ,40,\ldots ,75,\ldots ,100\}\) is the quality value, which directly correlates with the resolution within the transmitted frame, with a higher QV indicating higher resolution.

State space

The state at time step t, denoted as \({{\bf{s}}}_{t}=\{{{\bf{a}}}_{t-1},{{\bf{o}}}_{t},{{\bf{c}}}_{t}\}\), is observed from the environment and contains eight key elements. i) Historical Configuration Selection \({{\bf{a}}}_{t-1}=\{{fps}_{t-1},{QV}_{t-1}\}\): Due to the trial-and-error principle of RL, the current state \({{\bf{s}}}_{t}\) is strongly related to the historical configuration selection \({{\bf{a}}}_{t-1}\). ii) Obstacle Status \({{\bf{o}}}_{t}=\{{dis}_{t},{vel}_{t}\}\): Given the time-varying threats posed by obstacles, the selection of compression configurations is highly sensitive to the instantaneous obstacle status \({{\bf{o}}}_{t}\), including relative distance \({dis}_{t}\) and velocity \({vel}_{t}\). iii) Obstacle Location Coordinates \({{\bf{c}}}_{t}=\{{c}_{t}^{1},{c}_{t}^{2},{c}_{t}^{3},{c}_{t}^{4}\}\): In order to achieve intra-frame uneven encoding, the current state \({{\bf{s}}}_{t}\) includes the region marked by the four coordinates \(\{{c}_{t}^{1},{c}_{t}^{2},{c}_{t}^{3},{c}_{t}^{4}\}\) of potential obstacles, which is then assigned high-quality encoding. Detailed parameter selection for QV in high-quality and low-quality encoding is provided and analyzed in Supplementary Fig. 9.
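As an illustration of how the two-element action and the eight-element state fit together, the following sketch encodes both as small data classes. It assumes that the quality value is an integer between 0 and 100 and that the four coordinates are scalars. The class names `Action` and `State` and the method `to_vector` are hypothetical, not part of the WOAD code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Action:
    """Compression configuration: frame filtering decision plus quality value."""

    fps: int  # 1 = transmit the current frame, 0 = filter it
    qv: int   # quality value; an integer range of 0..100 is assumed here

    def __post_init__(self) -> None:
        if self.fps not in (0, 1):
            raise ValueError("fps must be 0 or 1")
        if not 0 <= self.qv <= 100:
            raise ValueError("qv must lie in [0, 100]")


@dataclass(frozen=True)
class State:
    """State: previous action, obstacle status, and obstacle coordinates."""

    prev_action: Action
    dis: float  # relative distance of the obstacle
    vel: float  # relative velocity of the obstacle
    coords: Tuple[float, float, float, float]  # c1..c4 of the obstacle region

    def to_vector(self) -> List[float]:
        """Flatten into the eight numbers a policy network would consume."""
        return [
            float(self.prev_action.fps),
            float(self.prev_action.qv),
            self.dis,
            self.vel,
            *self.coords,
        ]
```

The flattened vector has 2 + 2 + 4 = 8 entries, matching the eight key elements listed for the state.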

Reward space

The goal of the RL is to learn an optimal policy that maximizes the cumulative reward via continuous trial and error. Here, the reward reflects the success of visually impaired individuals in avoiding the current obstacle. We employ binary values, either −1 or 1, to objectively represent the reward, denoted as \({r}_{t}\in \{-1,1\}\). A reward of 1 is assigned upon successful avoidance of the current obstacle, while −1 is assigned otherwise.

In the above RL settings, the agent (i.e., the developed glasses) initially observes the state \({{\bf{s}}}_{t}\) at time step t, and then selects an action \({{\bf{a}}}_{t}\) based on the selection policy \(\pi ({{\bf{a}}}_{t}| {{\bf{s}}}_{t})\). After the action is taken, the state \({{\bf{s}}}_{t}\) transitions to \({{\bf{s}}}_{t+1}\), and the agent eventually receives a reward \({r}_{t}\) (ref. 38).

Note that a reward signal is obtained only upon encountering an obstacle. Thus, the obstacle avoidance task poses a challenge with sparse reward signals, hindering RL learning efficiency39,40. For instance, in a scenario where a visually impaired individual encounters a single obstacle across 20 video frames, the frame resolution and filtering decision remain unchanged until this obstacle is encountered, potentially compromising either compression efficiency or detection reliability. To address the challenge of sparse rewards, we introduce domain knowledge to design progressive reward signals (a.k.a. reward shaping), aiding in generating sufficient data samples for selection policy learning of compression configurations. Accordingly, the modified reward is denoted as:

\[{r}^{{\prime} }({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})=r({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})+\hat{r}({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1}),\tag{1}\]

where \(r({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})\) denotes the initial RL reward, and \(\hat{r}({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})\) denotes the knowledge-driven reward through reward shaping. The design principle of the knowledge-driven reward is based on the following considerations: i) The relative velocity and distance of obstacles are used to prioritize the transmission and high-quality encoding of video frames that contain moving obstacles or obstacles situated at closer relative distances. ii) The same optimal selection policy \({\pi }^{* }({{\bf{a}}}_{t}| {{\bf{s}}}_{t})\) should be guaranteed under both \({r}^{{\prime} }\) and r. As such, we adopt a potential-based reward shaping approach to construct \(\hat{r}\), which can be represented as:

\[\hat{r}({{\bf{s}}}_{t},{{\bf{a}}}_{t},{{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})=\gamma \Phi ({{\bf{s}}}_{t+1},{{\bf{a}}}_{t+1})-\Phi ({{\bf{s}}}_{t},{{\bf{a}}}_{t}),\tag{2}\]

where Φ( ⋅ , ⋅ ) is a potential function, and 0 < γ < 1 denotes the discount factor. Unlike traditional pre-defined potential functions that require full prior knowledge, we jointly consider domain knowledge with DNNs to construct the potential function.
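The potential-based shaping described above can be sketched in a few lines. The sketch assumes the usual potential-based form, in which the shaping term is the discounted potential of the next state-action pair minus the potential of the current one. The function names and the default discount factor of 0.99 are hypothetical. The helper `discounted_return` is included only to show why this form leaves the optimal policy unchanged: along any trajectory the shaping terms telescope, so they add a quantity that depends only on the first and last potentials.

```python
from typing import Sequence


def shaped_reward(
    r: float,
    phi_curr: float,
    phi_next: float,
    gamma: float = 0.99,  # hypothetical default; the text only requires 0 < gamma < 1
) -> float:
    """Return the sparse reward r plus the potential-based shaping term.

    phi_curr is the potential of the current (state, action) pair and
    phi_next is the potential of the next (state, action) pair.
    """
    if not 0.0 < gamma < 1.0:
        raise ValueError("gamma must satisfy 0 < gamma < 1")
    return r + gamma * phi_next - phi_curr


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum of gamma**t * reward_t over a finite trajectory."""
    return sum((gamma ** t) * reward for t, reward in enumerate(rewards))
```

With all sparse rewards set to zero, the discounted sum of shaped rewards over T transitions equals gamma**T times the final potential minus the initial potential, regardless of the intermediate potentials.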

Temporal-spatial video compression implementation

Given the selection policy \(\pi ({{\bf{a}}}_{t}| {{\bf{s}}}_{t})\) inferred from RL, the compression configuration at time step t (i.e., \({{\bf{a}}}_{t}\)) is selected based on the current obstacle status and the previous selection (i.e., \({{\bf{s}}}_{t}\)). According to the two components of the action space, this procedure implements temporal-spatial video compression through frame filtering and intra-frame uneven region encoding, respectively.

Notably, while aggressively filtering multiple consecutive video frames by RL can significantly boost compression efficiency, it may also compromise reliability due to two possible factors: misjudgments by RL and unforeseen obstacles emerging between filtered frames. To ensure 100% collision avoidance rates, a complementary frame transmission strategy is implemented following the RL selection results. The core of this strategy is to ensure the transmission of the current frame whenever RL has filtered the previous N consecutive frames. Note that the integrated multi-modal sensor operates at 6 frames per second due to limited hardware resources. To ensure a basic transmission of visual information and mitigate safety hazards even during aggressive compression, the integer value of N ranges from 0 to 5. Inspired by the congestion control strategy in the TCP/IP protocol, we dynamically set N by modeling the following three conditions as a Markov chain.

- No Obstacle Present (Increase Mode): We exponentially increase N for improved compression efficiency due to the absence of danger, that is, \(N=\min (2N,5)\).

- Previously Detected Obstacle (Maintain Mode): When encountering an obstacle that has already been transmitted to the smartphone, we moderately increase \(N=\min (N+1,5)\) to balance compression efficiency and detection reliability. This is because the obstacle has already been identified and an avoidance alert has been issued.

- New Obstacle Appearing (Decrease Mode): We prioritize immediate threat detection by setting N = 0, guaranteeing transmission of the current frame.
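The three modes and the complementary transmission rule can be sketched as a small update function. Two points are assumptions rather than statements from the text: first, taken literally, doubling leaves N = 0 unchanged, so the sketch applies a floor of 1 in Increase Mode so that N can recover after a reset; second, the rule "transmit whenever the previous N frames were filtered" is modeled by counting the current run of filtered frames. The names `Mode`, `next_n`, and `must_transmit` are hypothetical.

```python
from enum import Enum

N_MAX = 5  # upper bound on N stated in the text (sensor runs at 6 frames per second)


class Mode(Enum):
    INCREASE = "no_obstacle"      # No Obstacle Present
    MAINTAIN = "known_obstacle"   # Previously Detected Obstacle
    DECREASE = "new_obstacle"     # New Obstacle Appearing


def next_n(n: int, mode: Mode) -> int:
    """Update N, the number of consecutive frames RL is allowed to filter."""
    if mode is Mode.DECREASE:
        return 0
    if mode is Mode.MAINTAIN:
        return min(n + 1, N_MAX)
    # Increase Mode: exponential growth, with an assumed floor of 1 so that
    # N can leave 0 (the source formula min(2N, 5) keeps N = 0 fixed).
    return min(max(1, 2 * n), N_MAX)


def must_transmit(rl_transmit: bool, filtered_run: int, n: int) -> bool:
    """Transmit if RL says so, or if the last n frames were all filtered."""
    return rl_transmit or filtered_run >= n
```

With N = 0 the second condition always holds, which reproduces the guarantee that a newly appearing obstacle forces transmission of the current frame.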

Implementation details

First, we choose the D-SAC algorithm to construct the above RL for adaptive compression configuration selection. The specific network structure of D-SAC and the corresponding loss functions are described as follows. The D-SAC consists of the actor and critic networks. The actor network generates the selection policy, while the critic network improves this selection policy by providing expected reward estimations to the actor network. Specifically, the actor network mainly contains three linear layers: the first two layers are each followed by a ReLU activation function, and the third linear layer is followed by a Softmax activation function. The loss function for actor network J( ⋅ ) with parameter ϕ is depicted as follows:

where \({\mathcal{D}}\) is a replay buffer of past experiences, ρ denotes the temperature parameter, and \({Q}_{\theta }(\cdot )\) denotes the Q-value function estimated by the critic network. In terms of the critic network, we utilize the pre-trained network within PyTorch 1.13.1. Its corresponding loss function \({J}_{Q}(\theta )\) with parameter θ can be represented as follows.

where ϱ denotes discount rate, and \({U}_{\bar{\theta }}({{\bf{s}}}_{t})={E}_{{{\bf{a}}}_{t} \sim \pi }[{Q}_{\bar{\theta }}({{\bf{s}}}_{t},{{\bf{a}}}_{t})-\rho \log ({\pi }_{\phi }({{\bf{a}}}_{t}| {{\bf{s}}}_{t}))]\) is estimated from a target network with parameter \(\bar{\theta }\) for Q.
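The display equations for the actor and critic losses are not reproduced in this text. For orientation only, the standard soft actor-critic objectives, written with the symbols defined above, are given below. Whether the D-SAC losses used in the WOAD match these term by term is an assumption, as is the use of the unshaped reward r in the critic target.

```latex
% Actor loss (standard SAC form; assumed, not taken from the source)
J_{\pi}(\phi) = \mathbb{E}_{\mathbf{s}_t \sim \mathcal{D}}
  \Big[ \mathbb{E}_{\mathbf{a}_t \sim \pi_{\phi}}
  \big[ \rho \log \pi_{\phi}(\mathbf{a}_t \mid \mathbf{s}_t)
        - Q_{\theta}(\mathbf{s}_t, \mathbf{a}_t) \big] \Big]

% Critic loss (standard SAC form; assumed, not taken from the source)
J_{Q}(\theta) = \mathbb{E}_{(\mathbf{s}_t, \mathbf{a}_t) \sim \mathcal{D}}
  \Big[ \tfrac{1}{2} \Big( Q_{\theta}(\mathbf{s}_t, \mathbf{a}_t)
        - \big( r(\mathbf{s}_t, \mathbf{a}_t)
        + \varrho \, \mathbb{E}_{\mathbf{s}_{t+1}}
          \big[ U_{\bar{\theta}}(\mathbf{s}_{t+1}) \big] \big) \Big)^{2} \Big]
```

In this form the actor is pushed toward actions with high Q-values while the temperature ρ rewards policy entropy, and the critic regresses toward a one-step target built from the target network with parameter \(\bar{\theta }\).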

Next, we give the network structure and loss function of the DNNs, which are used to estimate the potential values for reward shaping. Specifically, the two-layer DNNs are modeled as a multi-task classifier covering two distinct binary tasks: one task is to determine whether the current frame contains moving obstacles, while the other is to determine whether the actual obstacle location is within the coordinates estimated from the depth variation. In terms of network structure, the first layer, with 256 neurons, is designed for pre-processing, and the second layer, with 64 neurons, is responsible for yielding the multiple classification results. In summary, the DNNs structure is represented as follows.

where \({{\bf{s}}}_{t}\) is the state serving as the input for both RL and DNNs, \({X}^{(g)}\) and \({X}^{(j)}\) denote the network weights, \({b}^{(g)}\) and \({b}^{(j)}\) denote the biases, and σ is the activation function. Accordingly, the loss function of DNNs, denoted as Loss, quantifies the discrepancy between the DNNs’ classification results \({z}_{j}\) and the actual ground truth \({z}_{d}\). It employs the cross-entropy loss formula:

where \({z}_{j}=[{z}_{1},{z}_{2},{z}_{3},{z}_{4}]\) denotes the probabilities of obstacles being in a moving state, in a stationary state, within a specific region of coordinates, and outside that region, respectively. Based on the classification results of the DNNs, we can derive numerical potential values, which are further utilized to calculate the knowledge-driven reward signals in Eq. (2). Specifically, i) Case 1: Distance less than 3 m. If D-SAC decides to transmit this frame, we assign the potential value \(\Phi ={z}_{1}-{z}_{2}+{z}_{3}-{z}_{4}\). If D-SAC decides to filter this frame, the potential value is \(\Phi ={z}_{2}-{z}_{1}+{z}_{4}-{z}_{3}\). ii) Case 2: Distance greater than 3 m. If D-SAC decides to transmit this frame, we assign a negative potential value for higher compression efficiency, i.e., \(\Phi =-1\). Otherwise, the potential value is set to \(\Phi ={z}_{2}-{z}_{1}+{z}_{4}-{z}_{3}\).
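The two cases above map directly onto a small function. The sketch assumes that the four classifier outputs are passed as a tuple in the order moving, stationary, inside region, outside region. The text does not say how a distance of exactly 3 m is handled, so the sketch assigns it to the far case as an assumption. The function name `potential` and the parameter `near_threshold_m` are hypothetical.

```python
from typing import Tuple


def potential(
    z: Tuple[float, float, float, float],
    distance_m: float,
    transmit: bool,
    near_threshold_m: float = 3.0,  # the 3 m boundary from the text
) -> float:
    """Potential value for one (state, action) pair.

    z = (z1, z2, z3, z4): probabilities that the obstacle is moving,
    stationary, inside the estimated region, and outside that region.
    """
    z1, z2, z3, z4 = z
    filter_value = z2 - z1 + z4 - z3  # used whenever the frame is filtered
    if distance_m < near_threshold_m:
        # Case 1: a nearby obstacle favours transmitting frames that likely
        # contain a moving obstacle inside the estimated region.
        return (z1 - z2 + z3 - z4) if transmit else filter_value
    # Case 2: a distant obstacle penalizes transmission to favour compression.
    # A distance of exactly 3 m falls here by assumption.
    return -1.0 if transmit else filter_value
```

For a nearby, confidently detected moving obstacle, transmitting yields a positive potential and filtering yields its negative, so the shaped reward steers the policy toward transmission.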

Finally, we describe the training and testing methods for the aforementioned D-SAC and DNNs. During the offline training stage, we collect 21,600 pairs of samples within an hour as the dataset, which is split into a training set of 17,280 pairs and a validation set of 4,320 pairs at a ratio of 4:1. We train the D-SAC network, including the actor and critic networks, via the Adam optimizer with Beta values set to 0.9 and 0.999, respectively. To guarantee fast convergence during the training of D-SAC, the learning rate is empirically set to \(3\times {10}^{-4}\) after repeated experiments. In addition, we use a fixed batch size of 64 for 100 iterations. The data analysis was performed using Python 3.8 and Excel 2021. The above training procedure uses PyTorch 1.13.1 as the framework, and is executed on a server with an NVIDIA RTX 3080 GPU. Additionally, the experimental figures were plotted with Origin 2019b.
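The dataset figures above can be checked with simple arithmetic. The sketch assumes that the 21,600 sample pairs correspond to one hour of capture at the sensor rate of 6 frames per second mentioned earlier; the text does not state this link explicitly. The helper `split_sizes` is hypothetical.

```python
from typing import Tuple

FRAME_RATE_FPS = 6          # sensor frame rate given in the text
COLLECTION_SECONDS = 3600   # one hour of data collection
TRAIN_PARTS, VAL_PARTS = 4, 1  # the stated 4:1 split


def split_sizes(total: int, train_parts: int, val_parts: int) -> Tuple[int, int]:
    """Split total samples by an integer ratio, requiring an exact division."""
    unit, remainder = divmod(total, train_parts + val_parts)
    if remainder:
        raise ValueError("total is not divisible by the ratio")
    return unit * train_parts, unit * val_parts


total_pairs = FRAME_RATE_FPS * COLLECTION_SECONDS
train_pairs, val_pairs = split_sizes(total_pairs, TRAIN_PARTS, VAL_PARTS)
```

Under this assumption the numbers are mutually consistent: 6 × 3,600 gives 21,600 pairs, and a 4:1 split gives 17,280 training pairs and 4,320 validation pairs.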

Cross-modal obstacle detection

Challenge

Exploiting both compressed video signals and depth signals to ensure reliable obstacle detection is paramount for the WOAD. As summarized in Table 1, multi-modal fusion methods applied to some obstacle avoidance devices leverage complementary or redundant information among different modalities to enhance reliability. Due to the heterogeneity in data formats and the difficulty of feature alignment, these devices often employ outcome or decision-level fusion, which may cause false positives and false negatives under the following conditions: i) If incorrect or imprecise decisions from the independent processing of each modality are directly fed into the fusion stage, it can result in the wooden barrel theory, where the final outcome depends on the weakest detection41. ii) When detection results from different modalities are uncertain or contradictory, the outcome fusion can hardly resolve these inter-modal conflicts, thereby affecting the reliability of obstacle detection. Taking ultrasonic canes with IR sensors proposed by Narayani et al. in Table 1 as an example42, IR sensors may struggle to accurately detect nearby stairs in low-visibility conditions, whereas ultrasonic sensors fail to detect them due to their limited range at close distances. Consequently, the outcome fusion tends to improve detection accuracy only in specific scenarios but lacks robustness and generalization in diverse contexts. Therefore, how to ensure accurate and robust obstacle avoidance across various scenarios remains a thorny issue.

Note that both video and depth signals are capable of roughly outlining the shape and location of the same obstacle from different perspectives (Supplementary Fig. 1a–c). This indicates that inherent correlations exist between these heterogeneous modalities. Using these correlations as intermediaries, we can break down the barriers between modalities and achieve feature-level complementarity across them. This approach can potentially address the challenges of robustness and generalization in obstacle detection. Take pole detection as an example. TOF sensor data alone struggles to differentiate poles from trees due to their similar shape features. However, when fused with video data from the IR sensor, the added texture and color features (e.g., the roughness difference that distinguishes trees from poles) significantly enhance detection accuracy and robustness under both sunlight and nighttime conditions (Supplementary Fig. 10). To this end, we need to address the following two technical difficulties: i) How to characterize modal correlations, and then leverage these correlations to achieve feature fusion and complementarity between video and depth modalities? This would eliminate the need for manual scenario identification and subsequent scenario-specific fusion mechanisms, and thus facilitate robustness across scenario changes (e.g., daytime, nighttime). ii) How to implement lightweight on-device obstacle detection? This would alleviate the computational burden on the smartphone without affecting its other functions. Consequently, we propose a cross-modal obstacle detection module on the smartphone, comprising separate feature extraction, cross-modal feature fusion, and a decision-making network. This module comprehensively explores inherent modal correlations based on Transformer blocks. By leveraging these correlations, an inter-modal attention mechanism is employed to fuse and complement features at different granularities. Compared with SOTA methods, this module can facilitate reliable and lightweight obstacle detection across diverse scenarios (Supplementary Fig. 11 and Supplementary Table 6).

Separate feature extraction

The You Only Look Once (YOLO) series, known for its fast and accurate object detection capabilities, serves as the base model for the cross-modal obstacle detector. Among the various versions, we select the mature YOLOv5 for this work due to its robust detection performance and low model complexity (Supplementary Fig. 11 and Supplementary Table 6). Based on the pre-trained YOLOv5, the initial two layers extract features from the compressed video and depth signals independently. Given the compressed video signals V and depth signals D, video features Iv and depth features Id can be acquired as:

where YOLOtwo denotes the initial two layers of pre-trained YOLOv5 (i.e., Focus layer and Conv layer). Additionally, we extract fine-grained features within each modality. This is achieved through two separate convolution layers with SiLU activation. That is,

where both fv( ⋅ ) and fd( ⋅ ) denote the convolution layers, and Θv and Θd denote the corresponding network parameters. Here, \({{\bf{I}}}_{{\rm{intra}}}^{v}\) and \({{\bf{I}}}_{{\rm{intra}}}^{d}\) are fine-grained features for video and depth modalities, respectively.
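Read together, the definitions above suggest the following form for the two extraction steps. This is a reconstruction from the surrounding symbol definitions only, and the exact notation (including how the parameters Θv and Θd enter the convolution layers) is an assumption:

\[
\mathbf{I}^{v} = \mathrm{YOLO}_{\mathrm{two}}(\mathbf{V}), \qquad \mathbf{I}^{d} = \mathrm{YOLO}_{\mathrm{two}}(\mathbf{D}),
\]

\[
\mathbf{I}_{\mathrm{intra}}^{v} = f_{v}(\mathbf{I}^{v};\, \Theta_{v}), \qquad \mathbf{I}_{\mathrm{intra}}^{d} = f_{d}(\mathbf{I}^{d};\, \Theta_{d}).
\]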

Cross-modal feature fusion

This procedure is designed to characterize inherent correlations between video and depth modalities, and then leverage these correlations to align, fuse, and complement the multi-modal features by employing Transformer blocks with an inter-modal attention mechanism. Specifically, we first concatenate the two types of features, i.e., Iv and Id, within a common feature space, that is,

where Icon denotes the concatenated features.

Then, we utilize multiple Transformer blocks to comprehensively characterize modal correlations from different perspectives. Each block processes the concatenated features from one specific perspective, such as texture, shape, or distance. Specifically, the self-attention mechanism within each Transformer block characterizes modal correlations through attention weights, which are given as follows:

where ω denotes the attention weights, j denotes the j-th Transformer block, \({\rm{softmax}}(\cdot )\) denotes the softmax function, and M represents the dimension of Icon. H and K are obtained from the weight matrices of Query and Key in the self-attention mechanism, respectively. Leveraging these attention weights, the features Ispe,j fused from the specific perspective can be generated by the j-th Transformer block. That is,

where \({{\bf{I}}}_{{\rm{con}},j}^{{\prime} }={{\bf{I}}}_{{\rm{con}}}{{\bf{W}}}_{{\rm{val}},j}\) is obtained from the weight matrix of Value, denoted as Wval,j, in the self-attention mechanism.
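The self-attention step within one Transformer block can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: H and K are formed by projecting Icon with Query and Key weight matrices, the scores are scaled by the square root of the feature dimension M before the softmax, and the concatenation stacks one video token and one depth token. All matrix values and helper names (`matmul`, `softmax_rows`, `attention_block`) are hypothetical toy choices, not the trained weights or the authors' implementation.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax with max-subtraction for numerical stability."""
    out = []
    for row in a:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_block(i_con: Matrix, w_query: Matrix, w_key: Matrix,
                    w_val: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (attention weights, fused features) for one block."""
    h = matmul(i_con, w_query)             # Query projection (H)
    k = matmul(i_con, w_key)               # Key projection (K)
    m_dim = len(i_con[0])                  # M: feature dimension of I_con (assumed)
    scores = [[v / math.sqrt(m_dim) for v in row] for row in matmul(h, transpose(k))]
    omega = softmax_rows(scores)           # attention weights
    i_con_prime = matmul(i_con, w_val)     # Value projection (I'_con)
    return omega, matmul(omega, i_con_prime)


# Toy example: one video token and one depth token in a common feature space.
i_v = [[1.0, 0.0]]
i_d = [[0.0, 1.0]]
i_con = i_v + i_d                          # concatenation along the token axis (assumed)
identity = [[1.0, 0.0], [0.0, 1.0]]
omega, i_spe = attention_block(i_con, identity, identity, identity)
```

With identity projections, each token attends more strongly to itself than to the other modality, and every row of `omega` sums to one.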

Subsequently, the inter-modal attention mechanism is introduced to dynamically compare the importance of the specific features Ispe,j from different Transformer blocks across varying scenarios, and to generate weighted features. The weighted features Iinter from multiple Transformer blocks can therefore be denoted as

where \({\hat{\omega }}_{j}\) denotes the weight assigned to the j-th Transformer block. The calculation of \({\hat{\omega }}_{j}\) is similar to Eq. (13) and Eq. (14) within the attention mechanism and its extensions, and hence we omit the steps here.
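The weighted combination across Transformer blocks can be sketched as below. Because the scoring step for the block weights is omitted in the text, this sketch assumes one scalar relevance score per block (a hypothetical input) normalized by a softmax; only the final weighted sum follows directly from the description. The names `block_weights` and `weighted_features` are illustrative.

```python
import math
from typing import List


def block_weights(scores: List[float]) -> List[float]:
    """Softmax over per-block scores (assumed scoring; not specified in the text)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_features(i_spe: List[List[float]], weights: List[float]) -> List[float]:
    """Weighted sum over blocks: I_inter[n] = sum_j w_j * I_spe[j][n]."""
    dim = len(i_spe[0])
    return [sum(w * feat[n] for w, feat in zip(weights, i_spe)) for n in range(dim)]


# Toy per-block features (flattened), e.g. texture, shape, and distance perspectives.
i_spe = [[1.0, 0.0, 2.0], [0.0, 1.0, 2.0], [1.0, 1.0, 0.0]]
w_hat = block_weights([0.0, 0.0, 0.0])     # equal scores give uniform weights
i_inter = weighted_features(i_spe, w_hat)
```

Raising the score of one block shifts `i_inter` toward that block's features, which is how the mechanism can favor different perspectives in different scenarios.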

Finally, we employ the residual network structure to obtain the overall fused features. Given that the intra-modal features focus on information that significantly impacts detection results, we combine them with weighted features Iinter as follows:

where \({{\bf{I}}}_{{\rm{fuse}}}^{v}\) and \({{\bf{I}}}_{{\rm{fuse}}}^{d}\) denote the fused features for video and depth modalities, respectively, and ⊗ denotes matrix multiplication. The overall fused features Ifuse are given by:

Decision-making network

Leveraging the overall fused features Ifuse, the decision-making network, built upon the remaining layers of the pre-trained YOLOv5, determines the detection results. In particular, the whole cross-modal obstacle detection module is optimized with the loss function Lloss, that is,

where Lclass, Lcon, and Lan denote the classification loss, confidence loss, and bounding box loss, respectively. In addition, α and β are hyper-parameters that balance the different loss terms. The numerical results in Supplementary Fig. 12 indicate that the proposed cross-modal obstacle detection module outperforms video-only and depth-only strategies in terms of F1-score, recall, and precision.
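For reference, the three metrics compared in Supplementary Fig. 12 follow the standard definitions sketched below. The counts in the usage example are made up for illustration and are not results from the paper; the function name `precision_recall_f1` is likewise hypothetical.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Standard detection metrics from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0  # harmonic mean
    return precision, recall, f1


# Illustrative counts only: 90 correct detections, 10 false alarms, 30 misses.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

Because F1 is the harmonic mean of precision and recall, it penalizes a detector that trades many missed obstacles for few false alarms, or the reverse.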

To achieve a lightweight network design, insights from the loss functions in Eq. (19) provide an opportunity to implement efficient on-device AI. Unlike SOTA multi-modal fusion methods that focus solely on fine-grained object detection (e.g., distinguishing between a bike and a truck), our design goal for Lclass is to adjust the detection granularity to match the time-varying threats posed by obstacles and the real-time computational capability of the smartphone. For instance, when CPU-usage monitoring shows that the smartphone is under heavy load (e.g., browsing online), we can simply determine the broader categories of some stationary obstacles (e.g., identifying a human instead of distinguishing between a boy and a girl). Following this idea, the obstacle detection task is simplified to a multi-class classification problem, which inherently requires fewer model parameters without affecting reliable obstacle avoidance43. Overall, the parameter size of the whole cross-modal obstacle detection module (7.5 M) remains comparable to that of the conventional YOLOv5 (7.03 M), which amounts to only a marginal ~6.6% increase in the total parameter size while remarkably improving detection performance.
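The parameter overhead quoted above can be checked with a short calculation. This sketch uses the exact parameter count reported in the implementation details (7,497,914) and treats the YOLOv5 baseline as exactly 7.03 M, which is a rounding assumption; the function name `relative_increase_percent` is hypothetical.

```python
def relative_increase_percent(new_count: int, base_count: int) -> float:
    """Relative growth of new_count over base_count, in percent."""
    return (new_count - base_count) / base_count * 100.0


module_params = 7_497_914   # whole cross-modal module, as reported
yolov5_params = 7_030_000   # conventional YOLOv5, rounded to 7.03 M (assumption)
overhead = relative_increase_percent(module_params, yolov5_params)
print(f"{overhead:.2f}%")
```

Under these assumptions the overhead comes out between 6.6% and 6.7%, in line with the marginal increase stated in the text.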

Implementation details

The cross-modal obstacle detection module is deployed on a common smartphone within the WOAD. Given the network structure shown in Fig. 2c, we report the network hyperparameters and practical deployment of this module.

Regarding the network hyperparameters of the whole module, we first split the dataset of 10,278 samples into training and testing sets at a ratio of 4:1. This module comprises 387 layers with a total of 7,497,914 parameters, which are trained using the AdamW optimizer with a learning rate of 3 × 10⁻³. During the training stage, this module demonstrated stability, robustness, and effectiveness as the number of obstacle classes increased (Supplementary Fig. 13). We set the batch size to 32 and the number of epochs to 500. Note that we execute the above training procedure on the server, which is equipped with an NVIDIA RTX 3080 GPU.

Concerning the practical deployment of this module, we selected a Samsung Galaxy S23 Ultra (with 12 GB RAM/512 GB ROM) as the smartphone in our experiments, which features an 8-core Snapdragon 8 Gen 2 CPU and an Adreno 740 GPU. However, because Android/iOS systems differ significantly from traditional GPU/CPU servers in their operating principles, it is impractical to directly deploy the cross-modal obstacle detection module trained on the server to the smartphone. To resolve this issue, we employed Google’s TensorFlow Lite to convert this module into a format compatible with the Android/iOS system44. This also facilitates efficient on-device AI inference without degrading the detection performance.

Hardware design

Challenge

From a software perspective, the D-SAC algorithm in RL involves training and computing a vast number of network parameters in the form of complex matrix operations, which are major bottlenecks for low-power and fast selection of compression configurations. Beyond the lightweight network design for D-SAC, this part investigates how to improve power efficiency and accelerate execution from a hardware perspective. Compared to prevalent CPU-only and GPU-based platforms, FPGA-based network accelerators are emerging as a promising paradigm due to their potential advantages in power consumption and execution speed45. However, achieving these two benefits requires scheduling FPGA resources for efficient matrix operations, which poses challenges for D-SAC. Specifically, the actor network in D-SAC, which generates the selection policy for compression configurations, is composed of three linear layers. Essentially, three high-dimensional matrices representing the three layers are sequentially multiplied during the inference stage. This gives rise to two main issues. First, matrix components arrive column by column rather than all at once, causing processing elements in existing FPGA accelerators to spend significant time waiting for all the components needed for the subsequent matrix-matrix multiplication. Second, due to the growing number of network parameters in high-dimensional D-SAC, there is often a reliance on external off-chip memory for parameter storage and transfer, which increases power consumption. To overcome these issues, we construct a flexible MFVU streaming processing architecture designed to efficiently handle the high-dimensional matrix operations specific to the D-SAC algorithm. This architecture introduces processing elements to optimize data flow, and adapts the model size of D-SAC to fit on-chip memory resources, thereby improving both power efficiency and execution speed.

Design of MFVU

Unlike the conventional, time-consuming processing element that awaits the arrival of all components in the row vector before starting multiplication, we first design the FVU to significantly accelerate row vector-matrix multiplication. The core of the FVU is to promptly multiply the current component (e.g., the first element) of the row vector with all components in the corresponding row (e.g., the first row) of the matrix. The resulting intermediate output is temporarily stored in the buffer block. Upon the arrival of the last component of the row vector, all the intermediate outputs in the buffer block have been accumulated, and the final output is generated simultaneously. To clarify the details, we take the examples in Fig. 2d and Supplementary Fig. 14, respectively.
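The streaming idea behind the FVU can also be illustrated in software. In this minimal sketch, each component of the row vector is consumed as soon as it arrives, multiplied by the matching matrix row, and accumulated into a buffer, so the result is complete when the last component arrives. The generator-based input and the names `fvu_stream_multiply`, `arriving_vector`, and `buffer` are hypothetical stand-ins for the hardware data path, not the FPGA design itself.

```python
from typing import Iterable, Iterator, List


def fvu_stream_multiply(components: Iterable[float], matrix: List[List[float]]) -> List[float]:
    """Row vector-matrix product computed one arriving component at a time."""
    buffer = [0.0] * len(matrix[0])        # intermediate outputs (buffer block)
    for i, x in enumerate(components):     # component i arrives
        for j, w in enumerate(matrix[i]):  # multiply with the whole i-th matrix row
            buffer[j] += x * w             # accumulate partial results
    return buffer                          # complete once the last component is consumed


def arriving_vector() -> Iterator[float]:
    """Stand-in for a row vector whose components arrive sequentially."""
    yield from [1.0, 2.0, 3.0]


matrix = [[1.0, 2.0],
          [3.0, 4.0],
          [5.0, 6.0]]
result = fvu_stream_multiply(arriving_vector(), matrix)
print(result)  # [22.0, 28.0]
```

No step waits for the full vector: the work for component i overlaps with the arrival of component i+1, which is the source of the speed-up described above.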