Abstract

Manual interpretation of CT images for bone metastasis (BM) detection in primary cancer remains challenging. We present an automated Bone Lesion Detection System (BLDS) developed using CT scans from 2518 patients (9177 BMs; 12,824 non-BM lesions) across five hospitals. The system, developed on 1271 patients and tested on 1247 multicenter cases, demonstrates 89.1% lesion-wise sensitivity (1.40 false-positives/case [FPPC]) in detecting bone lesions on non-contrast CT scans, with 92.3% and 91.1% accuracy in classifying BM/non-BM lesions for internal and external test sets, respectively. Outperforming radiologists in lesion detection (40.5% sensitivity; 0.65 FPPC), BLDS shows lower BM detection sensitivity than junior radiologists, though comparable to trainees. BLDS improves radiologists’ lesion-wise sensitivity by 22.2% in BM detection and reduces reading time by 26.4%, while maintaining 90.2% patient-wise sensitivity and 98.2% negative predictive value in real-world validation (n = 54,610). The system demonstrates significant potential to enhance CT-based BM interpretation, particularly benefiting trainees.

Introduction

Bone is a common site for tumour metastasis1. The median survival after the diagnosis of bone metastases (BMs) ranges from 1 to 3–5 years in patients with lung cancer, breast cancer, or prostate cancer2. Patients with suspected metastases who undergo an accurate staging evaluation require comprehensive imaging to detect or rule out BMs3. Conventional computed tomography (CT), a useful imaging technique, is used to evaluate lymph node or visceral spread in addition to BMs4. CT remains the most widespread imaging technique for detecting BMs due to its relatively low cost, high contrast resolution, and short scan time5,6, compared to X-ray, positron emission tomography (PET), and magnetic resonance imaging (MRI). Nevertheless, it is demanding and laborious for radiologists to interpret numerous medical multi-site CT images manually. Additionally, various benign mimickers, such as bone islands, haemangiomas, and degenerative changes, are present in bones throughout the body7. Due to the substantial workload of multi-site CT scans for radiologists, fatigue from reviewing the images can increase the rate of missed diagnoses among them8,9.

Burgeoning artificial intelligence (AI) techniques offer potential for computer-aided diagnosis, aiding in the segmentation, detection, and classification of BMs on CT images8,10,11,12,13,14,15,16,17,18,19,20,21. Previous studies have developed deep learning algorithms for BM detection on CT10,11,12,13,14,15,19,21; however, these focused only on the spine. Although the spine is the most frequent site for skeletal metastases22, BMs can occur anywhere in the skeleton. Moreover, most of these studies relied on single-center data and were limited by small sample sizes and a restricted range of bone lesion types, making it difficult to integrate these algorithms into clinical practice. To address these limitations, multi-institution and multivendor datasets should be used for training and validating deep learning models21. Technically, previous studies have explored the application of deep convolutional neural networks (CNNs) combined with traditional machine learning algorithms for processing handcrafted image features. However, these approaches have failed to achieve an end-to-end fully automated diagnostic solution. Noguchi et al. implemented a deep learning method for candidate region detection and false positive (FP) reduction in BM detection and evaluated it in an observer study8, which is a step towards developing automatic detection methods for BMs that are more applicable to clinical practice. Furthermore, only a few observer studies have been conducted to demonstrate how to translate these results into clinical practice8,13,15,23. Observer studies are important because they allow clinicians to evaluate the performance of the algorithm in a real-world setting and provide feedback on its usability and effectiveness. Additionally, it is worth noting that readers have historically interpreted images using a sequential reading design without crossover24,25, which can introduce reading order bias, favoring the image read last26.
This bias should be considered and controlled in observer studies to ensure an accurate evaluation of the algorithm’s performance. Lastly, but importantly, the generalization ability of the algorithm in the real world should also be validated. This can be done through prospective studies in multiple clinical sites to assess the algorithm’s performance in different environments and conditions. Overall, while there has been progress in developing AI techniques for BM detection on CT images, there is still a need for more robust and generalized algorithms that can be integrated into clinical practice.

To address these shortcomings of existing work, we retrospectively collected a large-scale cohort from a specialized cancer hospital to train and validate a fully automated diagnostic system, the bone lesion detection system (BLDS). We then compared the performance of the BLDS and radiologists at different levels, using internal and external cohorts from five large tertiary hospitals in a randomised crossover design. Lastly, to examine critical issues related to realistic clinical translation, we explored the integration of the BLDS into large-scale, real-world routine clinical processes, involving 54,610 consecutive patients from three settings (emergency, outpatient, and inpatient). This study included CT imaging devices from most mainstream manufacturers, comprising 13 equipment models. More importantly, the proposed BLDS can detect all bone lesions (BLs) in the scan field and differentiate common BL types (i.e., osteoblastic BM, osteolytic BM, mixed BM, haemangioma, Schmorl’s node, bone island, end-plate osteochondritis, and other lesions) in a fully automated manner. The results demonstrate that the BLDS improves bone metastasis detection on CT scans, showing high sensitivity and accuracy in identifying lesions. While slightly less sensitive than junior radiologists for BM detection, it outperforms them in general lesion detection. The BLDS enhances radiologists’ diagnostic performance by boosting sensitivity and reducing reading time, particularly aiding trainees. Real-world validation highlights its reliability, with strong patient-level sensitivity and negative predictive value.

Results

Patient characteristics

For the development dataset, we included 1271 eligible patients with 12,707 BLs from Sun Yat-sen University Cancer Center (SYSUCC) randomly divided into a training (492 [43.0%] patients with 6,013 BMs, and 651 [57.0%] patients with 5010 non-BMs), validation (31 [48.4%] patients with 578 BMs, and 33 [51.6%] patients with 246 non-BMs), and test (35 [54.7%] patients with 592 BMs, and 29 [45.3%] patients with 268 non-BMs) dataset. The detailed demographic and image characteristics of the development dataset are shown in Table S1. For the multicentre test cohort, the data from 394 patients with 1994 BMs, 769 patients with 7300 benign BLs, and 84 patients without BLs were consecutively collected from five large tertiary hospitals (refer to Table S2 for the different lesion types across internal and external datasets), and were randomly divided into groups A and B. The median age of the patients in groups A and B was 57 (interquartile range [IQR], 47–66) years and 58 (IQR, 48–67) years, respectively. The characteristics of both groups are summarised in Table S3. The ratio of men to women between groups A and B among participants with BMs was not significantly different (110:100 vs. 98:86, P = 0.919), although a significant difference was observed when considering all patients in groups A and B (294:327 vs. 333:293, P = 0.041). Both groups had no differences regarding other demographic information, scan conditions, or lesion characteristics.

Evaluation of BLDS performance

The BLDS was validated using a set of 1247 patients with tumours from one internal and four independent external cohorts. The algorithm outputs different results depending on preset thresholds. Using the free-response receiver operating characteristic (FROC) curve for BL detection and considering the decision points for various bone structures in the validation dataset, we established specific thresholds as the benchmark values for different modules and bone locations (Table S4 and Fig. S4). At this operating point, for lesion detection, the BLDS achieved an average sensitivity of 89.9% and an average FP per case (FPPC) of 1.40 in the multicentre test datasets. Specifically, the sensitivity for lesion detection was 89.0% at an FPPC of 1.33 for SYSUCC, 89.0% at an FPPC of 2.80 for Hunan Cancer Hospital (HNCH), 93.6% at an FPPC of 1.27 for the Eighth Affiliated Hospital of Sun Yat-sen University (EAHSYSU), 90.9% at an FPPC of 1.26 for Shantou Central Hospital (STCH), and 88.2% at an FPPC of 1.20 for Sun Yat-sen Memorial Hospital (SYSMH) (Fig. 1a). For BM identification, the BLDS achieved an average sensitivity of 88.2% and an average FPPC of 1.40 in the multicentre test datasets (Fig. 1b). The sensitivity for diagnosis of BM was 88.1% at an FPPC of 1.33 for SYSUCC, 84.2% at an FPPC of 2.80 for HNCH, 93.7% at an FPPC of 1.27 for EAHSYSU, 89.6% at an FPPC of 1.26 for STCH, and 84.9% at an FPPC of 1.20 for SYSMH. For differential diagnosis at an FPPC of 1.40, the sensitivity of the BLDS was 88.7% for osteoblastic BMs, 86.5% for osteolytic BMs, 93.7% for mixed BMs, 94.2% for haemangiomas, 92.4% for bone islands, 93.8% for Schmorl’s nodes and 95.0% for end-plate osteochondritides (Fig. 1c). The detailed sensitivities and FPPC of the BLDS for the seven BLs in all five test datasets are displayed in Fig. 1d–h.

a Free-response receiver operating characteristic (FROC) curves of bone lesion detection in all test datasets; b FROC curves of BM identification in all test datasets; c FROC curves of the detection of seven bone lesion types in all test datasets; d–h FROC curves of the detection of seven bone lesion types in SYSUCC, HNCH, EAHSYSU, STCH, and SYSMH, respectively. i, j Confusion matrix for the binary classification of BM and non-BM in the internal and external test datasets, respectively. k, l Confusion matrix of the eight-class classification in the internal and external test datasets, respectively. m The distribution of the number of patients with BMs and lesion number of BMs according to different diagnosis methods. Diagnosis method 1 denotes comprehensive assessment by three musculoskeletal specialists with more than 10 years of experience; Diagnosis method 2 denotes specialists combined with medical history information provided by external hospitals; Diagnosis method 3 denotes specialists combined with follow-up information or PET-CT/MRI results; Diagnosis method 4 denotes comprehensive judgement made by specialists using a combination of follow-up and PET-CT/MRI. n FROC of BM identification according to four diagnostic methods. Source data are provided as a Source Data file (Source_data_Fig. 1.xlsx).
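To make the notion of an FROC operating point concrete, the following minimal Python sketch computes lesion-wise sensitivity and FPPC at a single confidence threshold. The data layout (candidates already matched to ground-truth lesions) and all names are assumptions for illustration; the matching criterion and thresholds actually used by the BLDS are not reproduced here, and the toy values are not study data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Candidate:
    score: float              # model confidence for this candidate
    lesion_id: Optional[int]  # matched ground-truth lesion, None if false positive


@dataclass
class Case:
    n_lesions: int            # number of ground-truth lesions in the scan
    candidates: List[Candidate]


def froc_point(cases: List[Case], threshold: float) -> Tuple[float, float]:
    """Return (lesion-wise sensitivity, false positives per case) at a threshold."""
    detected = 0
    total_lesions = 0
    false_positives = 0
    for case in cases:
        kept = [c for c in case.candidates if c.score >= threshold]
        # Count each ground-truth lesion once, even if several candidates hit it.
        detected += len({c.lesion_id for c in kept if c.lesion_id is not None})
        false_positives += sum(1 for c in kept if c.lesion_id is None)
        total_lesions += case.n_lesions
    sensitivity = detected / total_lesions if total_lesions else float("nan")
    fppc = false_positives / len(cases) if cases else float("nan")
    return sensitivity, fppc


# Hypothetical toy data: three scans, one of them without lesions.
toy_cases = [
    Case(2, [Candidate(0.9, 0), Candidate(0.8, 0),
             Candidate(0.4, 1), Candidate(0.7, None)]),
    Case(1, [Candidate(0.3, 0), Candidate(0.6, None), Candidate(0.2, None)]),
    Case(0, []),
]

for t in (0.5, 0.1):
    sens, fppc = froc_point(toy_cases, t)
    print(f"threshold={t}: sensitivity={sens:.2f}, FPPC={fppc:.2f}")
```

Sweeping the threshold and plotting sensitivity against FPPC traces out an FROC curve; lowering the threshold raises both quantities, which is the trade-off the reported operating point fixes.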

The confusion matrices in Fig. 1i–l provide a comprehensive understanding of the model’s performance across different lesion types. For the two-category classification (BM vs. non-BM), the BLDS achieved an accuracy of 92.3% (95% confidence interval [CI]: 91.2–93.4%) and a balanced accuracy (averaged class-level accuracy) of 86.8% (95% CI: 84.9–88.6%) in internal test cohorts and an accuracy of 91.1% (95% CI: 90.4–91.8%) and a balanced accuracy of 83.4% (95% CI: 82.1–84.7%) in external test cohorts. For the eight-category classification, the BLDS achieved an accuracy of 82.1% (95% CI: 80.5–83.6%) and a balanced accuracy of 77.0% (95% CI: 74.6–79.3%) in the internal cohort, and an accuracy of 80.8% (95% CI: 79.7–81.8%) and a balanced accuracy of 75.1% (95% CI: 73.3–76.8%) in the external cohorts (for more detailed data regarding the diagnostic performance of BLDS, please refer to Tables 1 and S5).
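The gap between accuracy and balanced accuracy reported above is typical when classes are imbalanced. The short Python sketch below illustrates both metrics on a confusion matrix, interpreting "averaged class-level accuracy" as the mean of per-class recall; that interpretation, the function names, and the toy matrix are assumptions and not values from the study.

```python
from typing import List


def accuracy(cm: List[List[int]]) -> float:
    """Overall accuracy: correctly classified lesions over all lesions."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def balanced_accuracy(cm: List[List[int]]) -> float:
    """Mean per-class recall; rows are true classes, columns are predictions."""
    recalls = [row[i] / sum(row) for i, row in enumerate(cm) if sum(row) > 0]
    return sum(recalls) / len(recalls)


# Hypothetical two-class matrix (rows: true BM, true non-BM).
toy_cm = [
    [80, 20],   # 80 BMs classified correctly, 20 missed
    [10, 390],  # 10 non-BMs misclassified, 390 classified correctly
]

print(f"accuracy          = {accuracy(toy_cm):.4f}")
print(f"balanced accuracy = {balanced_accuracy(toy_cm):.4f}")
```

Because the majority class dominates the overall count, accuracy exceeds balanced accuracy whenever the minority class has the lower recall, as in this toy example.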

Since the definite diagnosis of BMs does not primarily rely on pathological examination, clinicians mostly depend on CT images, follow-up observations, and auxiliary diagnostic tools such as PET-CT and MRI. Consequently, we have carefully stratified the diagnostic methods for BMs in the multicentre datasets into four categories: (1) comprehensive assessment by three musculoskeletal specialists with more than 10 years of experience; (2) specialists combined with medical history information provided by external hospitals; (3) specialists combined with follow-up information or PET-CT/MRI results; and (4) comprehensive judgement made by specialists using a combination of follow-up and PET-CT/MRI (Fig. 1m). Based on these four different diagnostic methods for BMs, we conducted a stratified analysis of the BLDS’ performance in detecting BMs. With an FPPC of 1.40, the lesion-wise sensitivity of the BLDS in detecting BMs corresponding to the four diagnostic methods was 88.2%, 88.5%, 92.6%, and 85.9%, respectively (Fig. 1n). Although there was a certain degree of imbalance in the data distribution of these four diagnostic methods, the BLDS’ sensitivity in detecting metastatic tumours remained above 85%, demonstrating stable detection performance.

Interpretability of the BLDS classification

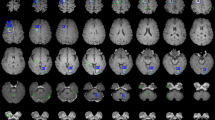

To gain insights into the decision-making processes of the classification model, we visualised its decision criteria by generating class activation map (CAM) images27. These images provided a visual representation of where a convolutional neural network (CNN) model focused its attention when identifying a specific category (Fig. 2). This visualisation allowed for an intuitive observation of the intensity of the CNN model’s response to each voxel during the classification of a particular category, revealing the critical regions within the input image as perceived by the model.

We presented class activation map (CAM) images for seven common bone lesion types. These labels represent: label 1: Osteoblastic bone metastasis (BM); label 2: Osteolytic BM; label 3: Mixed BM; label 4: Haemangioma; label 5: Schmorl’s node; label 6: Bone islands; label 7: End-plate osteochondritis. The first row exhibited cropped images of the lesion in three sections: the transverse plane, sagittal plane, and coronal plane. The second, third, and fourth rows, respectively, presented the CAM images for the top three predicted probability classes. It was evident that the CAM images for the class with the highest probability (usually the ones predicted correctly) were consistently the most intense and focused. Conversely, the CAM response for classes with lower probabilities was typically more scattered and less intense. Taking osteoblastic BM as an example, the CAM images for the classification model predicting osteoblastic, osteolytic, and mixed BM were respectively displayed in the second, third, and fourth rows. The probability of being predicted as osteoblastic BM was the highest and correct, with its CAM image demonstrating the strongest response intensity at the location of the lesion.
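As a rough illustration of how such maps can be formed, the sketch below follows the original CAM formulation, in which the map for a class is the weighted sum of the final convolutional feature maps using that class's classifier weights. It is shown in 2D with plain Python lists for brevity; whether the BLDS uses this exact variant, and all names and toy values here, are assumptions.

```python
from typing import List

Map2D = List[List[float]]


def class_activation_map(feature_maps: List[Map2D],
                         class_weights: List[float]) -> Map2D:
    """Weighted sum of feature maps for one class, rescaled to [0, 1]."""
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * cols for _ in range(rows)]
    for fmap, weight in zip(feature_maps, class_weights):
        for r in range(rows):
            for c in range(cols):
                cam[r][c] += weight * fmap[r][c]
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = hi - lo
    if span == 0:
        # A flat map carries no localisation information.
        return [[0.0] * cols for _ in range(rows)]
    return [[(value - lo) / span for value in row] for row in cam]


# Hypothetical 2x2 feature maps from two channels and the weights of one class.
toy_maps = [
    [[0.0, 1.0], [0.0, 0.0]],
    [[0.0, 2.0], [1.0, 0.0]],
]
toy_cam = class_activation_map(toy_maps, [0.5, 1.0])
print(toy_cam)
```

In practice the low-resolution map is upsampled to the input size and overlaid on the image; a concentrated peak at the lesion corresponds to the "intense and focused" response described above.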

Ablation studies

We conducted a series of ablation studies to validate the contributions of our detection algorithm pipeline in the BLDS. The methods compared included: (1) using solely the common detection model, (2) utilizing both the common detection model and a metastasis-focused model, and (3) our comprehensive framework, which encompasses the common detection model, metastasis-focused model, and FP-removed module. Incorporating the metastasis-focused model significantly improved detection performance (Fig. S1). Given the complexity of BM appearance, this specialized model is better equipped to learn challenging examples, thereby boosting overall performance. By integrating the FP-removed Module, specifically a bone lesion classification model, the detection sensitivity achieved optimal performance at the same FPPC levels. These experimental results effectively demonstrate the efficacy of our proposed algorithm (more details on the BLDS algorithm can be found in the “Methods” section).

We also analysed the impact of training data size on the performance of the BLDS. When training deep learning models, ensuring an ample amount of diverse and representative training data is crucial for enhancing model performance. Insufficient data can result in underfitting or overfitting of the model. To evaluate the adequacy of the training data for the detection model, this study randomly selected different proportions of data from the training dataset for training and assessed the model’s performance on the test dataset. Specifically, we used 20%, 40%, 60%, 80%, and 100% of the overall training dataset (1143 samples) for model training. The validation results were presented in Fig. S2. The findings indicated that when sufficient training data were provided, model performance tended to stabilise, suggesting that our model possessed an adequate amount of training data.
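The subsampling protocol can be sketched as follows. This minimal Python example draws 20–100% of 1143 training patients with a fixed seed; making the subsets nested (each larger subset contains the smaller ones) is a design choice assumed here, not something the text specifies, and the patient identifiers are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def nested_subsets(ids: Sequence[str], fractions: Sequence[float],
                   seed: int = 0) -> Dict[float, List[str]]:
    """Shuffle once, then take growing prefixes so the subsets are nested."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    return {f: shuffled[: round(f * len(shuffled))] for f in fractions}


# Hypothetical identifiers standing in for the 1143 training patients.
patient_ids = [f"patient_{i:04d}" for i in range(1143)]
subsets = nested_subsets(patient_ids, [0.2, 0.4, 0.6, 0.8, 1.0])

for fraction, subset in subsets.items():
    print(f"{int(fraction * 100):3d}% -> {len(subset)} patients")
```

Sampling at the patient level rather than the lesion level keeps all lesions from one patient in the same subset, which avoids mixing a patient's data across training runs of different sizes.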

Reader performance

We conducted a randomised crossover multireader multicase (MRMC) study on the five test datasets. To understand the performance of the BLDS relative to radiologists in a clinical setting, we compared the performance of the BLDS and six readers with different levels of expertise, as well as collaborations between readers and the BLDS. The six readers were divided into two groups: one consisting of three trainees with two years of experience, forming the trainee group, and the other composed of three radiologists with 5–10 years of experience in musculoskeletal imaging diagnosis, forming the junior radiologist group. For lesion detection, the average performance values of the six readers fell below the BLDS’ FROC curve. With the aid of the BLDS, the average performance values of trainees and junior radiologists rose above the BLDS’ curve (Fig. 3a). The detailed comparison of BL detection between the BLDS and readers with different levels of experience is shown in Table S6. At an FPPC of 1.40, the lesion-wise sensitivity of the BLDS was 32.8–57.8% higher than that of trainees and junior radiologists in detecting lesions, whereas its FPPC was 0.44–1.15 higher than that of most standalone readers. With the assistance of the BLDS, the lesion-wise sensitivity of all readers improved; notably, trainees and junior radiologists maintained an FPPC lower than that of the BLDS (Fig. 3b). Using the BLDS, the weighted alternative FROC figure of merit (wAFROC FOM) of the pooled readers for BL detection improved from 0.658 to 0.897 (increment, 0.239; P < 0.001). The wAFROC FOM improved for each reader using the BLDS, with increments ranging from 0.183 to 0.295 for the six readers (all P < 0.001) (Table 2). Compared with interpretations without the BLDS, BLDS-assisted interpretations were associated with a higher mean wAFROC FOM for trainees (increment, 0.268; P = 0.003) and junior radiologists (increment, 0.210; P < 0.001) (Fig. 3c).
The lesion-wise sensitivity of the pooled readers improved from 40.5% to 83.0% (increment, 42.5%; P < 0.001). Compared with each reader alone, the sensitivity of each reader when assisted by the BLDS improved by 31.1–58.8%. Reading time decreased for all readers with the BLDS (from 93–203 s to 59–168 s; P < 0.001). The average reading time per case decreased by 26.4% with the assistance of the BLDS (decrease, 38 s; P < 0.001); for trainees, the average reading time per case decreased from 122 to 81 s (decrease, 41 s; P < 0.001), and for junior radiologists it decreased from 166 to 132 s (decrease, 34 s; P < 0.001) (Fig. 3d). On average, for pooled readers, the FPPC for detecting BLs decreased by 0.22 with the BLDS (0.65 vs. 0.43); per reader, the decrease in FPPC ranged from 0.02 to 0.74 for four of the six readers. The reduction in the average FPPC for trainees and junior radiologists using the BLDS was 0.02 and 0.42, respectively.

a Comparison between BLDS and six readers with different levels of expertise for lesion detection. b Difference analysis curve of BLDS, trainees and junior radiologists with and without the aid of BLDS by the evaluation of sensitivity and FPPC for lesion detection. c The weighted Jackknife alternative free-response receiver operating characteristic curves of trainees and junior radiologists without and with BLDS according to the level of readers experience in bone lesions detection. d Reading time of readers with and without assistance of BLDS in multicentre test datasets. e Comparison between BLDS and six readers with different levels of expertise for BM identification. f Difference analysis curve of BLDS, trainees and junior radiologists with and without the aid of BLDS by the evaluation of sensitivity and FPPC for BM identification. g Difference analysis curve of BLDS, trainees and junior radiologists with and without the aid of BLDS by the evaluation of patient-wise evaluation metrics for BM identification. h FROC curves of the pooled readers without and with BLDS in specific lesion detection. i Count of lesions in different groups based on bone lesion size and the sensitivity of readers with and without the BLDS in detecting bone lesions and BMs. j Count of patients in different groups based on bone lesion number per patient and the sensitivity of readers with and without the BLDS in detecting bone lesions and BMs. k Distribution of bone lesion size across different groups. l Distribution of the number of bone lesions across different groups. Source data are provided as a Source Data file (Source_data_Fig. 3.xlsx).

For BM detection, the average detection performance values of trainees overlapped with the BLDS’ FROC curve, and the performance of the junior radiologists was better than that of the BLDS (Fig. 3e). The lesion-wise sensitivity of the BLDS was 6.9–29.5% higher than that of trainees in detecting BMs (P < 0.001), whereas its FPPC was 0.04–0.29 higher than that of trainees. The lesion-wise sensitivity of only one of the junior radiologists, with an FPPC of 0.12, was found to be 4.2% higher than that of the BLDS (Table S7). With BLDS assistance, the lesion-wise sensitivity of five readers improved, surpassing that of the BLDS (increment, 11.1–22.3%, all P < 0.001). Among the five readers, an increase in FPPC was observed for one of the trainees and one of the junior radiologists, compared with the BLDS (increment, 0.04–0.38) (Fig. 3f). At the patient level, the sensitivity of the BLDS surpassed that of all standalone readers (all P < 0.001), whereas its specificity was inferior to that of these radiologists (all P < 0.05). Except for one trainee, the positive predictive value (PPV) of five readers was better than that of the BLDS (all P < 0.001), whereas the negative predictive value (NPV) of all readers was inferior to that of the BLDS (all P < 0.05). The accuracy of the BLDS was inferior to that of two junior radiologists (all P < 0.05) and was on par with the average accuracy of junior radiologists. The balanced accuracy of the BLDS was on par with that of junior radiologists and better than that of two trainees (all P < 0.05).
With the BLDS assistance, the patient-wise sensitivity of only two trainees was comparable to that of the BLDS, while the sensitivity of the remaining readers was poorer than the BLDS; the NPV of five readers was on par with that of the BLDS, except for one trainee’s, which was poorer than that of the BLDS; the accuracy, balanced accuracy, patient-wise specificity and PPV of all readers exceeded or equalled that of the BLDS (Fig. 3g and Table S8).

Table 3 details lesion-wise sensitivity, FPPC, patient-wise sensitivity, patient-wise specificity, PPV, NPV, accuracy and balanced accuracy of each reader for BM detection with and without the use of the BLDS. The lesion-wise sensitivity of the pooled readers was 22.2% higher with BLDS support than without (without BLDS, 54.6%; with BLDS, 76.8%; P < 0.001). The average lesion-wise sensitivity of trainees improved from 46.5% to 72.9% (increment, 26.4%; P < 0.001), and that of junior radiologists increased from 62.6% to 80.7% (increment, 18.1%; P < 0.001). The increments in lesion-wise sensitivity ranged from 12.2% to 29.0% for the six readers (all P < 0.001). The average FPPC of pooled readers was 0.24 without the BLDS and 0.39 with the BLDS. The increase in FPPC with BLDS assistance was 0.18 for trainees and 0.12 for junior radiologists. With the BLDS, the patient-wise sensitivity of pooled readers increased from 82.1% to 91.0%, and the improvement ranged from 6.3% to 16.7% for the six readers. The average patient-wise sensitivity of trainees increased by 10.4% (P < 0.001), and that of junior radiologists increased by 7.4% (P < 0.001). The patient-wise specificity and PPV of all readers were not significantly different between the two sessions with and without BLDS assistance. The increments in patient-wise NPV ranged from 2.9% to 6.6% for the six readers (P = 0.006 for one junior radiologist, and P < 0.001 for all other readers). The average NPV of trainees increased by 4.4% (P < 0.001), and the NPV of junior radiologists increased by 3.2% (P < 0.001). The patient-wise accuracy of the pooled readers aided by the BLDS improved from 90.6% to 92.9% (increment, 2.3%; P < 0.001). The average accuracy of trainees increased by 2.8% (P < 0.001), and the accuracy of junior radiologists increased by 2.0% (P < 0.001).
With the aid of the BLDS, the patient-wise balanced accuracy of pooled readers improved from 88.3% to 92.4% (increment, 4.1%; P < 0.001), and the increment ranged from 2.0% to 7.5% for the six readers (all P < 0.05). The average balanced accuracy of trainees increased by 4.8% (P < 0.001), and the balanced accuracy of junior radiologists increased by 3.4% (P < 0.001).

Subgroup analysis of reader performance

Lesion-wise sensitivity for detecting osteoblastic BMs, osteolytic BMs, mixed BMs, haemangiomas, bone islands, Schmorl’s nodes, and end-plate osteochondritides improved with BLDS assistance (increment, 12.8–52.4%; P < 0.001). With the aid of BLDS, the FPPC of the pooled readers for BM and bone island detection slightly increased (Table S9 and Fig. 3h).

Regardless of their level of experience, the lesion-wise sensitivity of readers in detecting clinically significant BMs (those involving pathological fractures, spinal canal stenosis, or soft tissue masses) improved notably with the assistance of the BLDS, with increments ranging from 5.9% to 29.7% (P < 0.05). The lesion-wise sensitivity of pooled readers improved by 14.5% with the aid of the BLDS (P < 0.001) (Table S10).

Improvement in patient-wise sensitivity with the BLDS aid was observed regardless of BM location. For trainees, patient-wise specificity for BMs on the ribs decreased with the use of the BLDS (decrease, 19%; P = 0.005), although this decrease was not statistically significant for BMs located on other body parts, irrespective of experience level (decrease, 0.4–1.9%; P = 0.058–0.412) (Table S11).

Lesion size and number distributions are shown in Fig. 3i–l. With the aid of the BLDS, the sensitivity improved in detecting BMs and BLs when handling lesions of different sizes. Regardless of the number of metastases and BLs per patient, the sensitivity in detecting BMs and BLs improved with the assistance of the BLDS (increment, 1.5–14.6% for BMs; 4.0–13.0% for BLs).

Real-world clinical evaluation in patient triage

The BLDS has demonstrated diagnostic capabilities in multiple retrospective settings. Furthermore, during the inference phase, over an average period of half a year, the BLDS required only 48–90 s per examination to provide a diagnosis upon receiving CT images. This encompassed bone segmentation, BL detection, and classification, all performed on a single workstation. This promising outcome motivated us to investigate the potential utility of the BLDS in patient triage. As illustrated in Fig. 4, we collected data from 54,610 consecutive patients enrolled in emergency, outpatient, and inpatient departments between 1 November 2023 and 30 June 2024 at SYSUCC. In this investigation, the BLDS automatically categorised 64.7% of the total patients as low risk (those without BMs), with the remaining 35.3% classified as high risk (those with BMs). Upon retrospective analysis of the medical reports for all cases, the BLDS achieved a sensitivity of 90.2%, a specificity of 72.2%, an NPV of 98.2%, and a PPV of 30.7%. In other words, the BLDS identified 64.7% of the patients as negative, and 98.2% of those predicted-negative cases were confirmed as true negatives (the NPV). To validate the reliability of the reports, two radiologists with 18 and 10 years of experience, respectively, checked the accuracy of 120 randomly selected reports at different times, referring to medical history, CT images, and other examinations. The accuracy of the reports reached 100%. Our primary objective was to reduce radiologists’ workload by minimizing the number of cases they need to review, not to replace them with the BLDS for diagnostic purposes. As such, a lower PPV is not a major concern in this context. The triage process using the BLDS can help radiologists identify cases at low risk of BM and expedite their review of the corresponding images, thereby reducing their workload.

Example of patient triage performed in Sun Yat-sen University Cancer Center. We collected data from 54,610 consecutive patients enrolled from emergency, outpatient, and inpatient departments at Sun Yat-sen University Cancer Center. In this investigation, the BLDS automatically categorised 64.7% of the total patients as low risk (those without BMs), with the remaining 35.3% classified as high risk (those with BMs). Upon retrospective analysis of the medical reports for all cases, the BLDS achieved an NPV of 98.2% and a PPV of 30.7%. In other words, the BLDS identified 64.7% of the patients as negative, with 1.8% of those predicted-negative cases being false negatives (consistent with the NPV of 98.2%).
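The combination of a high NPV and a modest PPV follows directly from the reported sensitivity and specificity once BM prevalence is low. The Python sketch below works through that arithmetic with Bayes' rule; the 12% prevalence is an assumed value chosen for illustration (it is not stated in the text), and the function name is hypothetical.

```python
from typing import Tuple


def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> Tuple[float, float, float]:
    """Return (PPV, NPV, fraction flagged as high risk) for a triage rule."""
    tp = sensitivity * prevalence              # true positives per patient
    fn = (1 - sensitivity) * prevalence        # missed BM patients
    tn = specificity * (1 - prevalence)        # correctly cleared patients
    fp = (1 - specificity) * (1 - prevalence)  # false alarms
    ppv = tp / (tp + fp)
    npv = tn / (tn + fn)
    flagged = tp + fp
    return ppv, npv, flagged


# Reported sensitivity and specificity; prevalence of 0.12 is an assumption.
ppv, npv, flagged = predictive_values(0.902, 0.722, prevalence=0.12)
print(f"PPV={ppv:.3f}, NPV={npv:.3f}, flagged as high risk={flagged:.3f}")
```

Under this assumed prevalence the sketch yields values close to the reported PPV, NPV, and high-risk fraction, which illustrates why a triage tool can safely clear most scans while still producing many false alarms among the flagged ones.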

Discussion

In this study, we developed an automated BL detection and diagnosis system, which comprised three parts: bone segmentation, BL detection and classification. The system underwent training and validation using non-contrast CT scans, spanning from the neck to the pelvis, obtained from 2518 patients with 22,001 BLs who were recruited across five hospitals. Since the development and multicentre test datasets originated from five major tertiary hospitals, encompassing CT scanning equipment from most mainstream manufacturers, the BLDS is expected to be applicable to medical centres that were not included in this study. In addition, we conducted a randomised crossover MRMC study on the multicentre dataset to evaluate the added impact of the BLDS on radiologist detection performance and to minimize the potential bias of a sequential reading design. The BLDS exhibited strong robustness and broad applicability in both the retrospective study and real-world clinical practice.

Metastatic bone disease predominantly occurs in certain types of cancer, with a high prevalence in breast (30.9%), prostate (8.5%), lung (36.5%), and gastrointestinal (5.7%) cancers in China28. The onset age of BMs is typically between 50 and 70 years. BLs in patients of this age group fall into two major categories: benign or metastatic. There are numerous sub-categories of benign lesions, which may exhibit overlapping appearances; these include degenerative joint disease, Schmorl’s nodes, bone islands, exostosis, deformities, old fractures, fibrous dysplasia, and haemangiomas. To enhance the detection rate of BMs in whole-body CT scans from patients with non-bone-derived malignant tumours, the BLDS needed to address two major issues: first, how to identify all BLs within the bone structure in CT images; second, how to accurately classify these BLs as metastatic or non-metastatic. In this study, the BLDS incorporated three cascade network architectures and an FP-removed module for bone segmentation and BL detection, along with a two-class and eight-class classification model. The BLDS achieved higher BL detection performance than the average performance metrics of all trainees and junior radiologists, and its BM detection performance was comparable to that of trainees at the lesion level. At the patient level, the sensitivity and NPV of the BLDS in BM detection surpassed those of all radiologists, whereas its specificity and PPV were lower. The accuracy of the BLDS was comparable to the average level of trainees, and the balanced accuracy of the BLDS was higher than that of trainees and lower than that of junior radiologists. Previous reader studies that concentrated on BM detection found that deep learning-based algorithms achieved a lesion-wise sensitivity of 71–92%, with 0.617–3 FPPC8,10,13.
The primary limitation of these studies is that all included bone lesions (BLs) were bone metastases (BMs), with no consideration for the differentiation between BMs and non-BM lesions. Consequently, the detection results of BMs in these studies were merely equivalent to the detection performance of BLs, mirroring the BL detection results observed in our research.

In the reader study, pooled readers assisted by the BLDS demonstrated a higher wAFROC FOM and lesion-wise sensitivity and a lower FPPC than standalone readers in BL detection; moreover, the average reading time was reduced by 26.3%. Thus, the overall performance of readers in detecting BMs improved using the BLDS. No significant inter-session difference in detecting BMs was observed in patient-wise specificity and PPV. In general, after using the BLDS, the magnitude of improvement in trainees' diagnostic performance significantly exceeded that observed among junior radiologists, similar to that in a previous study26. In detecting BMs, the FPPC of the pooled readers increased with the use of the BLDS, which may be due to the unbalanced number of metastatic and non-metastatic lesions. To detect the most clinically relevant BLs, we planned to enrol patients with benign lesions, BMs, and negative cases in equal proportions. When recruiting consecutive cases from the five institutions, we found few negative cases among patients with primary malignant tumours, and the proportion of benign lesions was high. To reflect conditions in clinical practice, we included all lesions without deleting any eligible benign lesions. In the future, an optimised classification of BMs and benign lesions using the BLDS may help decrease the FPPC in BM detection.

In subgroup analyses based on lesion types, the lesion-wise sensitivity of readers improved with the BLDS when detecting BMs and benign lesions. No significant inter-session differences were observed in the FPPC of the pooled readers. Regarding the location of BLs, the patient-wise sensitivity for BMs improved with the use of the BLDS, regardless of the experience level; however, the sensitivity for vertebral lesions was higher than that of lesions at other locations. This may be due to the large number of vertebral lesions in the development dataset. This result is consistent with those of a previous study8. The patient-wise specificity in detecting BMs in the ribs decreased substantially for trainees using the BLDS. Interestingly, a previous study reported relatively low sensitivity and specificity of AI-based human readings and standalone AI algorithms for detecting rib fractures29. The difficulty in using an AI-based model to detect rib lesions is caused by the morphology and structure of the ribs. Hence, the BLDS’ ability to detect lesions should be further improved. Regarding the size of lesions, BL size showed an intuitive correlation with sensitivity. The larger the lesion, the more imaging features it contains, which makes extraction easier. This result is consistent with those of a previous study8. By stratifying the number of BLs and BMs per patient, the sensitivity of pooled readers using the BLDS in detecting both BMs and BLs improved relative to standalone readers.

In general, the core value of the BLDS resides in its precision in identifying and detecting systemic bone lesions, thereby substantially mitigating the risk of missed detections. In contrast to manual diagnosis, the AI models within the BLDS apply a meticulous and uniform algorithmic approach to every scan, supporting a high level of stability and reliability in lesion detection. Radiologists, when diagnosing bone structures, often face the challenge of bone complexity and variability, leading to prolonged, high-intensity visual and cognitive tasks that can result in fatigue and, consequently, an elevated risk of missed detections over time. In addition, radiologists scrutinize lesions across two-dimensional axial, coronal, and sagittal planes, whereas the BLDS is trained and tested on three-dimensional images, introducing another layer of difference in diagnostic approaches. However, in terms of BL classification, radiologists offer comprehensive assessments grounded on lesion morphology, size, density, margin, number, and other characteristics. In contrast, the BLDS primarily utilizes image processing and deep learning techniques for differential diagnosis of BLs. Given the constraints posed by algorithms and training data, the BLDS may lack the precision or comprehensiveness required for certain feature extractions. Furthermore, differing judgement criteria exacerbate these discrepancies. Radiologists integrate a patient’s medical history, symptoms, other test results, and various factors into their comprehensive assessments, whereas the BLDS primarily relies on image data. This divergence can lead to scenarios where radiologists identify a lesion overlooked by the BLDS or the BLDS detects a lesion deemed clinically insignificant by radiologists.

This study has several limitations. First, the retrospective design introduces some selection bias, although data were collected consecutively in chronological order. Second, our study used a consensus ground truth from expert musculoskeletal radiologists from the same institution. To ensure the reliability of the ground truth, we allowed all experts to obtain the detailed history of all enrolled patients, and a consensus for each case was reached after three rounds of consultation. Third, there was a risk of spectrum bias from the six readers, who represented trainees and junior radiologists from three institutions. Their findings may therefore not be representative of all radiologists worldwide, and more radiologists from other institutions should be recruited to test the generalisability of our findings.

In conclusion, the BLDS, an innovative AI system, successfully completed thorough retrospective validation and real-world clinical assessment, showcasing its remarkable ability to automatically detect and classify BLs using non-contrast CT scans. We envision the BLDS evolving into a revolutionary diagnostic tool, empowering radiologists with streamlined workflows, expedited diagnostic processes, and unparalleled diagnostic precision. By reducing patient wait times and enhancing diagnostic accuracy, the BLDS promises to elevate the efficiency and quality of radiological care. Moreover, its potential as an educational platform, offering real-time feedback and guidance to trainees, underscores its multifaceted role in advancing the field of radiology.

Methods

Ethical approval

The study was approved by the Ethics Committee of Sun Yat-sen University Cancer Center (B2022-142-01), Hunan Cancer Hospital (2021KYKS51), the Eighth Affiliated Hospital of Sun Yat-sen University (2023-028-01), Shantou Central Hospital (2024-002) and Sun Yat-sen Memorial Hospital of Sun Yat-sen University (SYSEC-KY-KS-2022-003). The research protocol was consistent with the guidelines outlined in the Declaration of Helsinki. Given the non-invasive nature of the methodology and the anonymisation of data, the requirement for informed consent was waived for retrospectively collected CT images.

Study design and participants

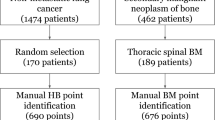

Between 1 January 2016 and 31 July 2019, we retrospectively included 1271 consecutive patients with 12,707 BLs from SYSUCC, who were randomly split into training (90%), validation (5%), and test (5%) datasets to develop the BLDS. Eligible participants were aged ≥15 years with primary malignant extraosseous tumours, pretreatment plain CT images with <2 mm slice thickness, and soft tissue kernel reconstruction. We excluded patients with bone cementoplasty or instrumented implants and low-quality plain CT images, such as those having excessive artefacts (e.g., motion and respiratory artefacts). To further evaluate the robustness of the BLDS, we used the same inclusion and exclusion criteria to retrospectively recruit 1247 patients with 9294 BLs between 12 August 2019 and 18 August 2022, including one internal test dataset from SYSUCC and four external test datasets. No images overlapped between datasets. The detailed inclusion and exclusion data for all datasets are provided in Fig. 5. Clinical data, including age, sex/gender, and primary tumour history, were collected from the medical systems of all participating hospitals. The sex and/or gender of the patients were self-reported at the time of registration during the admission examination. Furthermore, data from 54,610 consecutive patients admitted to SYSUCC between 30 June 2019 and 1 November 2023 were collected for a study of patient triage.

a Description of the development, internal and external test datasets. b The real-world clinical test datasets for patient triage. The development dataset was divided into training, validation, and testing sets in a ratio of 90:5:5. The multicentre test dataset encompassed an internal testing subset (SYSUCC) and four external testing subsets (EAHSYSU, HNCH, STCH, SYSMH). We gathered data from 54,610 consecutive patients who were admitted through the emergency, outpatient, and inpatient departments at SYSUCC, excluding cranial, coronary, limb, and breast CT and CT angiography examinations.

In this study, the ground truth for BM diagnosis typically involved a comprehensive evaluation by experienced radiologists, incorporating clinical and pathological information, CT, nuclear medicine examinations (e.g., emission computed tomography [ECT] and PET-CT), and patient follow-up results. Ground truths in the development dataset were determined by four radiologists from SYSUCC, with 2, 2, 2, and 7 years of experience, and checked by three musculoskeletal experts from SYSUCC, with 18, 15, and 10 years of experience. The reader study involved two groups of radiologists: three trainees and three junior radiologists (two with 10 years of experience from STCH and one with 10 years of experience from the First Affiliated Hospital of Guangzhou Medical University), who were blinded to patient information and not involved in patient selection; these readers labelled the multicentre test datasets. Three experts performed ground truth labelling in three rounds of confirmation to ensure accuracy. In the first round, each expert independently annotated the images used in the reader study. In the second round, the three experts convened to discuss and resolve any discrepancies in annotation among the cases. For the verification of non-metastatic lesions, a decision was made by majority vote, adhering to the principle of “minority defers to majority”. Where annotations for bone metastases were inconsistent, a third round was invoked, in which a more senior and experienced expert was consulted for arbitration. Any discrepancies were resolved by consensus. The experts had access to anonymised clinical information, follow-up CT images and reports, and, where available, relevant PET-CT or ECT.

Radiologist annotation

Before labelling, radiologists and experts underwent rigorous training, which involved familiarisation with AI-based BL detection annotation software (V2.2.1, United Imaging Intelligence, Shanghai, China), reviewing the definitions of each imaging finding, and training on a separate dataset comprising 10 CT scans, which was not included in either the development dataset or the multicentre test dataset. In the development dataset, ground truths were manually established by drawing a 3D rectangular box around each BL on CT images. These were used to indicate the BL types (osteoblastic BMs, osteolytic BMs, mixed BMs, haemangiomas, Schmorl’s nodes, bone islands, end-plate osteochondritis, and other lesions) using the BLDS. Subsequently, a reader study involving five medical institutions was conducted to test the robustness of the BLDS. In total, 1247 CT scans from multicentre datasets were randomised into two groups (Group A [623 cases] and Group B [624 cases]). In the first session, each reader evaluated Group A without the BLDS and then evaluated Group B using the BLDS. In the second session, each reader evaluated Group A with the BLDS and then Group B without the BLDS. The interval between the first and second sessions was 1 month, and the order of the CT images was randomised in each session to reduce recall bias (Fig. S3). The readers also marked the location of the BLs. The interpretation time was automatically recorded for each case using the BL detection annotation software.
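The crossover reading schedule described above can be illustrated with a short sketch. It is not the study's randomisation code: the function name `crossover_schedule`, the seed, and the use of Python's `random` module are assumptions made purely for illustration. Only the group sizes and the aided/unaided ordering come from the text.

```python
import random

def crossover_schedule(case_ids, seed=0):
    """Illustrative two-session crossover plan (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed is an assumption, for reproducibility
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2  # 1247 cases -> 623 (Group A) and 624 (Group B)
    group_a, group_b = shuffled[:half], shuffled[half:]

    def reading_order(cases):
        # Case order is re-randomised for every reading block.
        order = list(cases)
        rng.shuffle(order)
        return order

    # Each block is (group, read_with_blds, ordered cases).
    return {
        "session_1": [("A", False, reading_order(group_a)),
                      ("B", True, reading_order(group_b))],
        # Session 2 takes place after the 1-month interval.
        "session_2": [("A", True, reading_order(group_a)),
                      ("B", False, reading_order(group_b))],
    }

plan = crossover_schedule(range(1247))
```

With this layout every case is read once with and once without the BLDS by each reader, and the two readings of a given case always fall in different sessions.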

CT protocol and image quality control

CT images were obtained using multidetector CT scanners with 80–140 kVp and 32–1300 mAs with automatic tube current modulation in all participating institutions. The CT protocols included section intervals of 1–2 mm and section thickness of 1–2 mm. All images were reconstructed using a soft tissue kernel. The detailed CT protocols are provided in Table S12 and Supplementary Methods. The cleaned Digital Imaging and Communications in Medicine (DICOM) series was transformed into a 3D volumetric image. Prior to further analysis, all CT volumes underwent preprocessing through the following steps: (1) resampling of all images to a uniform spacing of 1 mm × 1 mm × 1 mm; (2) saturating (clipping) the image intensity to the range of [−450, 1050] HU to enhance the bone structures within the CT images; and (3) normalizing the intensity values to fall within the range of [0, 1].
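Steps (2) and (3) of the preprocessing can be sketched as follows. This is a minimal illustration assuming a linear rescaling after clipping, which the text does not specify; the function name `window_and_normalise` is hypothetical. Step (1), resampling to 1 mm isotropic spacing, is assumed to have been done beforehand.

```python
import numpy as np

HU_MIN, HU_MAX = -450.0, 1050.0  # intensity window stated in the text

def window_and_normalise(volume_hu):
    """Clip a CT volume to [HU_MIN, HU_MAX] and rescale linearly to [0, 1].

    Linear min-max rescaling is an assumption; the source only states the
    target range.
    """
    clipped = np.clip(np.asarray(volume_hu, dtype=np.float32), HU_MIN, HU_MAX)
    return (clipped - HU_MIN) / (HU_MAX - HU_MIN)

# Air, window floor, mid-window, window ceiling, and metal-like values.
example = window_and_normalise(np.array([-1000, -450, 300, 1050, 3000]))
```

Values below the window map to 0 and values above it map to 1, so soft tissue and air are compressed while the bone range retains most of the dynamic range.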

BLDS algorithm development

The BLDS algorithm comprised three main components: (1) bone segmentation; (2) BL detection; and (3) BL classification (Fig. 6). Bone segmentation served as the initial and pivotal step, aimed at automatically identifying and segmenting bone structures within CT images. This process enabled the system to precisely extract positional and morphological information about bones, laying the groundwork for further processing and analysis. Subsequently, the BLDS was designed to accurately detect BLs in CT images. The primary lesion type targeted was BM, encompassing osteoblastic, osteolytic, and mixed metastases. Additionally, the BLDS considered clinically prevalent BLs, such as bone tumours (e.g., haemangioma) and other bone abnormalities (e.g., Schmorl’s node, bone island, end-plate osteochondritis). Given the intricate and variable morphology and appearance of different lesions in CT images, along with the diverse nature of bone anatomies, a hybrid deep learning-based framework was employed to construct an effective BLDS. The lesion classification module was tasked with categorising BLs into metastasis and non-metastasis groups. This step was vital for disease diagnosis and treatment planning, as accurate lesion classification assisted clinicians in assessing the severity of the condition and formulating suitable treatment strategies.

a Bone segmentation involves a fast segmentation module trained using a cascaded UNet framework. b Bone lesion detection comprises a lesion detection module and a false-positives (FPs) removal module. c Both the two-class and eight-class bone lesion classification models share a similar network framework based on Res-Net, differing only in the neuron count of the final fully connected layer. The fusion module confirms a lesion as metastatic only when both models agree; otherwise, it is classified as non-metastatic. Subsequently, the case analysis module assesses all lesions, adjusting probabilities if the metastatic confidence of at least one lesion exceeds the predetermined threshold.

Bone segmentation

To achieve efficient and accurate segmentation of medical images, we have developed a robust framework that integrates multi-scale feature fusion with progressive segmentation. Our algorithmic strategy employs a coarse-to-fine processing pipeline. Initially, the original image is down-sampled to generate low-resolution views. Convolutional networks are then used to quickly extract global contextual features, which facilitate the rough localization of organ regions. Following this, regions of interest (ROIs) are constructed based on the localization results. Subsequently, boundary refinement is performed at the original image resolution to obtain finely detailed segmentation results. This two-step approach not only accelerates processing speed but also ensures high segmentation quality. For the network architecture, our design is based on the classic U-Net model, which we have further optimized. U-Net is renowned for its encoder–decoder structure featuring skip connections that effectively combine high-level semantic information with low-level spatial details, thereby enhancing segmentation performance. Additionally, we have incorporated residual connections, which help mitigate the vanishing gradient problem in deep networks, leading to more stable and efficient training processes.

BL detection implementation

The BL detection implementation comprised two modules: a lesion detection module and a false-positives (FPs) removal module. The lesion detection module was further divided into two parts: a common detection model designed to detect all types of BLs and a metastasis-focused detection model specifically tailored for metastasis detection. The additional metastasis-focused model was incorporated due to the clinical significance of BM in cancer-related diagnosis and assessment, necessitating high sensitivity in its detection. The detection algorithm leveraged the feature pyramid network (FPN)30, an anchor-based detection framework that learned to predict a 3D bounding box at multiple scales for lesions of varying sizes. The detection model adopted a cascaded FPN architecture, where two FPNs were trained sequentially. The first FPN aimed to detect all lesions with high sensitivity, whereas the second FPN focused on locations where FPs were likely to occur. When training the first FPN, positive samples were drawn from ground-truth annotations, while negative samples were randomly selected from the remaining image regions. The second FPN was designed to reduce FPs while maintaining sensitivity. To train this network, positive samples were again derived from ground-truth annotations to ensure high-sensitivity detection, as for the first FPN. However, the negative samples came from two sources: (1) FPs detected by the first FPN and (2) additional samples randomly selected from the remaining image regions. This cascaded framework followed the bagging strategy, significantly enhancing overall performance by effectively removing FPs while maintaining high sensitivity. The detected bounding boxes were then merged, and non-maximum suppression was applied to eliminate overlapping boxes31. Using the pyramid architecture, the detection model achieved high sensitivity in detecting lesions of different sizes.
During training, input images were randomly cropped into 3D image patches at 128 × 128 × 128, which were then fed into the network to learn the 3D bounding box of the lesions. The detailed architecture of the FPN featured an encoder path and a decoder path. Each level in the encoder path consisted of a downsample block and a residual block, using a convolution operation with a kernel size of 2 × 2 × 2 and a stride of 2 for downsampling to maintain feature information continuity, instead of a pooling layer. In the decoder path, each level comprised an upsample block followed by a residual block, with skip connections between the encoder and decoder paths to merge features at each hierarchy. The output block provided lesion detection results, including 3D bounding box information and the corresponding confidence score. The FP-removed module was designed to reduce FPs primarily caused by image artefacts (e.g., radiation and metal artefacts), further enhancing the robustness of the BLDS. This module used a modified Res-Net classification model to distinguish between real and fake lesions, ultimately removing the fake lesions from the BLDS32. The network architecture consisted of five residual blocks, followed by two fully connected layers and a softmax layer. During training, positive samples (real lesions) were annotated lesions, whereas negative samples (fake lesions) were the FPs from the first lesion detection module. The final output was a 2-class classification result.
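The box-merging step mentioned above (non-maximum suppression over 3D bounding boxes) can be sketched as below. This is plain greedy NMS, offered as an assumption; the cited reference describes a penalty-based variant, and the exact procedure and threshold used in the BLDS are not given in the text. The box layout `(z1, y1, x1, z2, y2, x2)` and the IoU threshold of 0.3 are hypothetical.

```python
def box_volume(box):
    """Volume of an axis-aligned box (z1, y1, x1, z2, y2, x2); 0 if empty."""
    z1, y1, x1, z2, y2, x2 = box
    return max(0, z2 - z1) * max(0, y2 - y1) * max(0, x2 - x1)

def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes."""
    inter = box_volume((max(a[0], b[0]), max(a[1], b[1]), max(a[2], b[2]),
                        min(a[3], b[3]), min(a[4], b[4]), min(a[5], b[5])))
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0

def nms_3d(detections, iou_threshold=0.3):
    """Greedy NMS. detections: list of (box, score); returns kept items,
    highest score first. The threshold value is illustrative only."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou_3d(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept

detections = [((0, 0, 0, 10, 10, 10), 0.9),
              ((1, 1, 1, 11, 11, 11), 0.8),     # heavy overlap with the first
              ((20, 20, 20, 30, 30, 30), 0.5)]  # separate lesion candidate
kept = nms_3d(detections)
```

In this toy input the second box overlaps the first with an IoU of about 0.57 and is suppressed, while the distant third box survives.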

BL classification

The lesion classification module encompassed two distinct models: a two-class classification model (distinguishing between metastasis and non-metastasis) and an eight-class classification model (categorising lesions into osteoblastic metastases, osteolytic metastases, mixed metastases, haemangiomas, Schmorl’s nodes, bone islands, end-plate osteochondritis, and others). The two-class model differentiated between metastatic and non-metastatic lesions, whereas the eight-class model provided a more detailed classification into clinically prevalent categories. The fusion module then integrated the outputs of these two classification models. A lesion was confirmed as metastatic if both models concurred; otherwise, it was classified as non-metastatic. Subsequently, the case analysis module assessed all lesions within specific cases. If the confidence level for at least one lesion being metastatic surpassed the predetermined threshold, adjustments were made to the probabilities of other lesions accordingly. By leveraging comprehensive context information, these modules classified lesions as metastatic or non-metastatic, significantly enhancing the precision of lesion-level classification. The architecture of the classification model was based on Res-Net, with both models sharing a similar network structure but differing in the number of neurons in the final fully connected layer. The two-class model comprised two neurons, representing the probability values of metastasis and non-metastasis types, whereas the eight-class model featured eight neurons, corresponding to the probability values of the eight categories.
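The fusion rule described above, in which a lesion is labelled metastatic only when both classifiers agree, can be written as a short sketch. It assumes that each model's decision is its highest-probability class, which the text does not state explicitly; the function name `fuse` and the class ordering are hypothetical. The case analysis module is omitted because its probability adjustment is not specified.

```python
CLASSES = ["osteoblastic BM", "osteolytic BM", "mixed BM", "haemangioma",
           "Schmorl's node", "bone island", "end-plate osteochondritis", "other"]
METASTATIC = set(CLASSES[:3])  # the three metastatic subtypes

def fuse(two_class_probs, eight_class_probs):
    """Combine both classifiers' outputs for one lesion.

    two_class_probs: (p_metastasis, p_non_metastasis)
    eight_class_probs: eight probabilities in CLASSES order (assumed order)
    Returns (fused label, top eight-class category).
    """
    two_says_bm = two_class_probs[0] > two_class_probs[1]
    top_index = max(range(len(CLASSES)), key=lambda i: eight_class_probs[i])
    top_class = CLASSES[top_index]
    # Metastatic only if both models agree; otherwise non-metastatic.
    is_bm = two_says_bm and top_class in METASTATIC
    return ("metastatic" if is_bm else "non-metastatic"), top_class

agree = fuse((0.8, 0.2), [0.6, 0.1, 0.1, 0.05, 0.05, 0.05, 0.03, 0.02])
disagree = fuse((0.8, 0.2), [0.1, 0.1, 0.1, 0.05, 0.05, 0.5, 0.05, 0.05])
```

Requiring agreement trades some lesion-level sensitivity for fewer false metastasis calls, since a single dissenting model is enough to label the lesion non-metastatic.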

Measurement of algorithm performance

Sensitivity, specificity, precision, and accuracy are the key metrics for evaluating the performance of the BLDS. Sensitivity is defined as the ratio of true positives (TPs) to the sum of TPs and false negatives (FNs), specificity as the ratio of true negatives (TNs) to the sum of TNs and FPs, accuracy as (TPs + TNs)/(TPs + TNs + FPs + FNs), and precision as TPs/(TPs + FPs). The Dice similarity coefficient (DSC) is calculated in a three-dimensional volumetric manner between the ground-truth labels and the candidate regions33. Ground-truth labels with a DSC of ≥0.1 are considered TPs, while those with a DSC of <0.1 are counted as FNs. Candidate regions that have no spatial overlap with any ground-truth label are counted as FPs. Lesion-based sensitivity and FPPC are calculated based on these values and serve as the primary outcomes for evaluating the performance of the BLDS.
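The lesion-matching rule and the two primary outcomes can be illustrated as follows. This sketch assumes that both ground-truth labels and candidates are axis-aligned 3D boxes `(z1, y1, x1, z2, y2, x2)`, so that the DSC reduces to a box-overlap calculation; the source computes the DSC volumetrically, and all function names here are hypothetical.

```python
def volume(box):
    z1, y1, x1, z2, y2, x2 = box
    return max(0, z2 - z1) * max(0, y2 - y1) * max(0, x2 - x1)

def overlap(a, b):
    """Intersection volume of two axis-aligned boxes."""
    return volume((max(a[0], b[0]), max(a[1], b[1]), max(a[2], b[2]),
                   min(a[3], b[3]), min(a[4], b[4]), min(a[5], b[5])))

def dice(a, b):
    total = volume(a) + volume(b)
    return 2 * overlap(a, b) / total if total else 0.0

def score_case(truth_boxes, candidate_boxes, dsc_threshold=0.1):
    """Return (TP, FN, FP) for one scan under the DSC >= 0.1 rule."""
    tp = sum(any(dice(t, c) >= dsc_threshold for c in candidate_boxes)
             for t in truth_boxes)
    fn = len(truth_boxes) - tp
    # A candidate is an FP only if it overlaps no ground-truth label at all.
    fp = sum(all(overlap(t, c) == 0 for t in truth_boxes)
             for c in candidate_boxes)
    return tp, fn, fp

def lesion_sensitivity_and_fppc(cases):
    """cases: list of (truth_boxes, candidate_boxes), one pair per scan."""
    tp = fn = fp = 0
    for truth, candidates in cases:
        a, b, c = score_case(truth, candidates)
        tp, fn, fp = tp + a, fn + b, fp + c
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    fppc = fp / len(cases) if cases else float("nan")
    return sensitivity, fppc

cases = [
    ([(0, 0, 0, 10, 10, 10), (50, 50, 50, 60, 60, 60)],
     [(2, 2, 2, 12, 12, 12), (100, 100, 100, 110, 110, 110)]),
    ([], []),  # a negative case with no candidates
]
sensitivity, fppc = lesion_sensitivity_and_fppc(cases)
```

In this toy input one of two lesions is found and one candidate overlaps nothing, giving a lesion-wise sensitivity of 0.5 and 0.5 false positives per case.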

Statistical analysis

We employed FROC curves to evaluate the diagnostic performance of the BLDS. These curves were generated by varying the threshold for predicted probability and plotting the true positive rate (recall or sensitivity) against FPPC. Under fixed FPPC values on the horizontal axis, the corresponding sensitivities could be compared. Additionally, the confusion matrix provided comprehensive insights into model efficacy across different lesion types and addressed class imbalance. Cohen’s kappa coefficient κ was also computed between the AI prediction and the reference standard for differential diagnosis. CIs were calculated based on 1000 bootstrap replicates of the data. To evaluate the performances of the radiologists in detecting multi-type lesions, the jackknife FROC (JAFROC) analysis (RJafroc: Analysis of Data Acquired Using the Receiver Operating Characteristic Paradigm and Its Extensions; R package version 2.1.2) was performed on a per-lesion basis. Lesion weights were introduced to give more credit to finding lesions with clinical importance, such as BMs. A wAFROC FOM was also calculated26,34. The difference in wAFROC FOM was assessed using the two-sided Wilcoxon signed-rank test. We compared lesion-wise sensitivity, patient-wise sensitivity, and patient-wise specificity between sessions with and without the BLDS using a paired t-test. Additionally, we used the Z test to compare PPV, NPV, accuracy, and balanced accuracy between these sessions. Lastly, we performed the Mann–Whitney U-test to compare interpretation time between sessions with and without the BLDS. For statistical significance, we applied 95% CIs calculated with the Z-score method. Continuous variables are reported as mean (SD) or median (IQR).
We used the two-tailed t-test or Mann–Whitney U-test for comparisons of numerical demographic data according to the distribution pattern; the χ² or Fisher exact tests were used for comparisons of categorical demographic data and to analyse data variances between groups A and B. All statistical analyses were performed with R version 4.4.1, Python version 3.6.9, and SPSS version 27.0.1. For all statistical tests, P < 0.05 was considered to indicate a statistically significant difference.
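The construction of FROC operating points described in this section can be sketched in a few lines. This is an illustrative simplification, not the RJafroc procedure: it assumes each detection has already been matched either to a ground-truth lesion identifier or to `None` (a false positive), and the function name `froc_points` is hypothetical.

```python
def froc_points(detections, n_lesions, n_cases):
    """Compute FROC operating points.

    detections: list of (score, lesion_id or None) pooled over all cases;
                None marks a false-positive detection.
    Returns a list of (FPPC, lesion-wise sensitivity), one per distinct
    score threshold, from the strictest threshold to the most lenient.
    """
    points = []
    for threshold in sorted({score for score, _ in detections}, reverse=True):
        kept = [(s, lesion) for s, lesion in detections if s >= threshold]
        # A lesion counts once, however many detections hit it.
        found = {lesion for _, lesion in kept if lesion is not None}
        false_positives = sum(1 for _, lesion in kept if lesion is None)
        points.append((false_positives / n_cases, len(found) / n_lesions))
    return points

detections = [(0.9, "a"), (0.8, None), (0.7, "b"), (0.6, "a"), (0.4, None)]
curve = froc_points(detections, n_lesions=4, n_cases=2)
```

Lowering the threshold can only add detections, so both coordinates are non-decreasing along the curve; sensitivities can then be read off at fixed FPPC values for comparison.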

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The analytical data generated in this study have been deposited in the Figshare database under accession code 27154209. The raw image data are protected and are not publicly available due to data privacy laws, patient confidentiality, and institutional agreements with the data custodian; access can be obtained by submitting a methodologically viable proposal to the corresponding authors via email (xchuanm@sysucc.org.cn, zhangr@sysucc.org.cn, or dgshen@shanghaitech.edu.cn), signing a data access agreement, and completing a review process (within 6 weeks) to ensure compliance with intellectual property and confidentiality obligations. Source data are provided with this paper.

Code availability

The code used in this study is publicly available and has been released under the MIT License on GitHub. The repository, named bone lesion detection system (BLDS), can be accessed at https://github.com/hugboom/BLDS (ref. 35). This repository includes the network architecture, loss function, and bone lesion test data for demonstration purposes. We encourage researchers interested in our work to download and use the code for further research and applications under the MIT License. We have released anonymized test data (i.e., 8 cases) with bone lesion annotations in the code repository. Additionally, the bone segmentation model code used in this work is based on the open-source implementation from the RTP-Net repository by Professor Dinggang Shen (one of the corresponding authors) et al., available under the MIT License at https://github.com/simonsf/RTP-Net (ref. 36).

References

Coleman, R. E. Metastatic bone disease: clinical features, pathophysiology and treatment strategies. Cancer Treat. Rev. 27, 165–176 (2001).

Coleman, R. E. Clinical features of metastatic bone disease and risk of skeletal morbidity. Clin. Cancer Res. 12, 6243s–6249s (2006).

Rowe, S. P. et al. PSMA-based [(18)F]DCFPyL PET/CT is superior to conventional imaging for lesion detection in patients with metastatic prostate cancer. Mol. Imaging Biol. 18, 411–419 (2016).

Logothetis, C. J. & Lin, S. H. Osteoblasts in prostate cancer metastasis to bone. Nat. Rev. Cancer 5, 21–28 (2005).

Liu, F. et al. Comparison of PET/CT and MRI in the diagnosis of bone metastasis in prostate cancer patients: a network analysis of diagnostic studies. Front. Oncol. 11, 736654 (2021).

Heindel, W. et al. The diagnostic imaging of bone metastases. Dtsch. Arztebl. Int. 111, 741–747 (2014).

Théry, C. et al. Minimal information for studies of extracellular vesicles 2018 (MISEV2018): a position statement of the International Society for Extracellular Vesicles and update of the MISEV2014 guidelines. J. Extracell. Vesicles 7, 1535750 (2018).

Noguchi, S. et al. Deep learning-based algorithm improved radiologists’ performance in bone metastases detection on CT. Eur. Radiol. 32, 7976–7987 (2022).

Belue, M. J. et al. Deep learning-based detection and classification of bone lesions on staging computed tomography in prostate cancer: a development study. Acad. Radiol. 31, 2424–2433 (2024).

Lhotska, L., Sukupova, L., Lacković, I. & Ibbott, G. S. Fully automatic CAD System for Segmentation and Classification of Spinal Metastatic Lesions in CT Data 155–158 (Springer Singapore Pte. Limited, Singapore, 2018).

Roth, H. R. et al. Detection of sclerotic spine metastases via random aggregation of deep convolutional neural network classifications. Lect. Notes Comput. Vis. Biomech. 20, 3–12 (2015).

Jan, J., Novosadova, M., Demel, J., Ourednicek, P., Chmelik, J. & Jakubicek, R. Combined bone lesion analysis in 3D CT data of vertebrae. In 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 6374–6377 https://doi.org/10.1109/EMBC.2015.7319851 (IEEE, 2015).

Chmelik, J. et al. Deep convolutional neural network-based segmentation and classification of difficult to define metastatic spinal lesions in 3D CT data. Med. Image Anal. 49, 76–88 (2018).

Burns, J. E. et al. Automated detection of sclerotic metastases in the thoracolumbar spine at CT. Radiology 268, 69–78 (2013).

Hoshiai, S. et al. Effectiveness of temporal subtraction computed tomography images using deep learning in detecting vertebral bone metastases. Eur. J. Radiol. 154, 110445 (2022).

Xiong, Y. et al. Deep learning-based diagnosis of osteoblastic bone metastases and bone islands in computed tomograph images: a multicenter diagnostic study. Eur. Radiol. 33, 6359–6368 (2023).

Huo, T. et al. Deep learning-based algorithm improves radiologists’ performance in lung cancer bone metastases detection on computed tomography. Front. Oncol. 13, 1125637 (2023).

Roth, H. R. et al. Improving computer-aided detection using convolutional neural networks and random view aggregation. IEEE Trans. Med. Imaging 35, 1170–1181 (2016).

Hammon, M. et al. Automatic detection of lytic and blastic thoracolumbar spine metastases on computed tomography. Eur. Radiol. 23, 1862–1870 (2013).

Hong, J. H. et al. Development and validation of a radiomics model for differentiating bone islands and osteoblastic bone metastases at abdominal CT. Radiology 299, 626–632 (2021).

Lacroix, M. et al. Artificial intelligence in musculoskeletal oncology imaging: a critical review of current applications. Diagn. Interv. Imaging 104, 18–23 (2023).

Kakhki, V. R. D., Anvari, K., Sadeghi, R., Mahmoudian, A. & Torabian-Kakhki, M. Pattern and distribution of bone metastases in common malignant tumors. Nucl. Med. Rev. Cent. East. Eur. 16, 66–69 (2013).

Liu, X. et al. Fully automated pelvic bone segmentation in multiparameteric MRI using a 3D convolutional neural network. Insights Imaging 1, 93 (2021).

Oakden-Rayner, L. et al. Validation and algorithmic audit of a deep learning system for the detection of proximal femoral fractures in patients in the emergency department: a diagnostic accuracy study. Lancet Digit. Health 4, e351–e358 (2022).

Yin, S. et al. Development and validation of a deep-learning model for detecting brain metastases on 3D post-contrast MRI: a multi-center multi-reader evaluation study. Neuro-Oncology 24, 1559–1570 (2022).

Sung, J. et al. Added value of deep learning-based detection system for multiple major findings on chest radiographs: a randomized crossover study. Radiology 299, 450–459 (2021).

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Eds. Leibe, B., Matas, J., Sebe, N. & Welling, M.) 2921–2929 https://doi.org/10.1109/CVPR.2016.319 (IEEE, 2016).

Yang, Y. et al. A multicenter, retrospective epidemiologic survey of the clinical features and management of bone metastatic disease in China. Chin. J. Cancer 35, 40 (2016).

Guermazi, A. et al. Improving radiographic fracture recognition performance and efficiency using artificial intelligence. Radiology 302, 627–636 (2022).

Lin, T. Y. et al. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Eds. Leibe, B., Matas, J., Sebe, N. & Welling, M.) 2117–2125 https://doi.org/10.1109/CVPR.2017.106 (IEEE, 2017).

Zhao, W. & Yan, H. Penalty non-maximum suppression in object detection. Pattern Recognit. Comput. Vis. 11259, 90–102 (2018).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Eds. Leibe, B., Matas, J., Sebe, N. & Welling, M.) 770–778 https://doi.org/10.1109/CVPR.2016.90 (IEEE, 2016).

Zou, K. H. et al. Statistical validation of image segmentation quality based on a spatial overlap index. Acad. Radiol. 11, 178–189 (2004).

Chakraborty, D. P. & Zhai, X. On the meaning of the weighted alternative free-response operating characteristic figure of merit. Med. Phys. 43, 2548 (2016).

Yun, Z. et al. BLDS:v1.0 on publish. Github https://doi.org/10.5281/zenodo.15054686 (2025).

Shi, F. et al. RTP-Net: v1.0 on publish. Github https://doi.org/10.5281/zenodo.7193687 (2022).

Acknowledgements

This work was supported by the Medical Scientific Research Foundation of Guangdong Province of China (grant number A2020295 for Y.Z.), the Sun Yat-Sen University Cancer Center Research Fund (308 Programme) (grant number 2019-FXY-050 for R.Z.), the National Natural Science Foundation of China (grant number 82441023), and the HPC Platform of ShanghaiTech University. The doctor icons and machine-learning icons used in Fig. 4 and Supplementary Fig. S3 of this study were sourced from the free icon library Flaticon.com (https://www.flaticon.com/free-icons/doctor and https://www.flaticon.com/free-icons/machine-learning). We acknowledge Flaticon.com for providing high-quality design assets that enhanced the visual presentation of this research.

Author information

Contributions

C.X. and R.Z. supervised the study. R.Z., C.X., Y.Z., J.L. and S.Y. conceived and designed the study. X.C., S.M., B.W., Y.Z., X.Z. and D.S. trained and developed the artificial intelligence model. J.L., S.M. and B.W. performed the statistical analysis. J.L., S.M. and B.W. drafted the report. Y.Z., Q.Y., X.L. and X.C. critically revised the manuscript. Y.Z., J.H., M.L., F.Z., J.X., S.W., Y.W., J.Z., Z.Y., W.M., D.L. and X.Y. organized and screened patients. All authors had access to all the raw datasets. R.Z., C.X. and D.S. verified all the data. All authors commented on the manuscript and approved the final version before submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, Y., Li, J., Yang, Q. et al. A clinically applicable AI system for detection and diagnosis of bone metastases using CT scans. Nat Commun 16, 4444 (2025). https://doi.org/10.1038/s41467-025-59433-7

DOI: https://doi.org/10.1038/s41467-025-59433-7