Abstract

Flow-based generative models have been employed for Boltzmann sampling tasks, but their application to high-dimensional systems is hindered by the significant computational cost of obtaining the Jacobian of the flow. We introduce a flow perturbation method that bypasses this bottleneck by injecting stochastic perturbations into the flow, delivering orders-of-magnitude speed-ups. Unlike the Hutchinson estimator, our approach is inherently unbiased in Boltzmann sampling. Notably, this method significantly accelerates Boltzmann sampling of a Chignolin mutant with all atomic Cartesian coordinates explicitly represented, while delivering more accurate results than the Hutchinson estimator.


Introduction

The Boltzmann distribution defines the probability of states of a many-body system at thermal equilibrium. Sampling this distribution is notably challenging due to the presence of numerous metastable states separated by high energy barriers1. Traditional sampling methods, such as Molecular Dynamics (MD)2 and Markov Chain Monte Carlo (MCMC)3, explore configuration space through small incremental moves, which makes them inefficient at crossing energy barriers. Enhanced sampling techniques, including replica exchange4,5, umbrella sampling6, metadynamics7, and transition path sampling8, offer ways to overcome these barriers, yet they introduce their own challenges, such as the computational overhead of simulating numerous replicas9 and the difficulty of identifying suitable collective variables7,8.

Recent advancements in deep generative models have opened new avenues for generating configurations of many-body systems10,11,12,13,14,15,16,17,18,19. These models map samples from a simple prior distribution to a complex target distribution through a series of transformations parameterized by deep neural networks. One key advantage of deep generative models is their ability to generate independent samples in parallel, making them less likely to get stuck in metastable states than traditional methods such as MD or MCMC. Recently, deep generative models have demonstrated the ability to generate configurations of complex molecules such as proteins with considerable accuracy, drawing significant attention from the scientific community20,21,22,23,24,25. However, a significant challenge with these models is that the samples they generate are typically not unbiased samples of the Boltzmann distribution, limiting their ability to accurately capture equilibrium properties that are crucial for applications in computational chemistry and physics.

Normalizing Flows (NFs)26,27,28,29 have emerged as a promising solution to this Boltzmann sampling problem. NFs are a special class of deep generative models that transform the prior distribution through a series of bijective and differentiable neural network layers. By reweighting trajectories generated by NFs according to the generalized work they produce, unbiased Boltzmann sampling can be achieved16,18,19,30,31,32,33,34,35,36,37,38,39,40,41. However, calculating the generalized work requires computing the determinant of the Jacobian of the flow, which necessitates performing D backpropagation passes through all the layers of the flow, where D is the dimensionality of the system. This process is computationally expensive, posing a significant challenge for applying NFs to high-dimensional systems. Moreover, the Jacobian calculation is also a significant hurdle in the training of NFs. To mitigate this issue, specialized neural network layers with easily computed Jacobians, such as NICE29, RealNVP28, and Glow27, are typically used. However, these layers limit the expressivity of the flow, making it challenging for NFs to model highly complex distributions.

Continuous Normalizing Flows (CNFs), a special limiting case of NFs, have recently been applied to Boltzmann sampling tasks15,42,43. CNFs use neural Ordinary Differential Equations (neural ODEs)44 to establish the mapping between the prior and target distributions. A notable benefit of CNFs over traditional NFs is that they are easier to train, thanks to advancements in simulation-free training methods45,46,47,48,49,50. This ease of training allows CNFs to more effectively model complex distributions. For example, the ODE formulation51,52 of the diffusion model has achieved state-of-the-art performance in generating images51,52,53 and has been used to compute the likelihood of generated molecular configurations11. Additionally, a flow trained using Flow Matching50 has been shown to be able to generate high-quality protein backbone configurations24.

Although CNFs are easier to train, obtaining their Jacobian determinant, which is essential for unbiased Boltzmann sampling, requires integrating the trace of the Jacobian of the velocity field along the flow. Computing this trace still requires D backpropagation passes, making it challenging to apply CNFs to Boltzmann sampling of high-dimensional systems42. The Hutchinson estimator54 has been used to reduce this computational cost15. However, it complicates the reweighting process, leading to convergence issues42,55 and systematic biases in the reweighted results42. As a result, CNFs have only achieved unbiased Boltzmann sampling for small molecules43.

In this work, we introduce the flow perturbation method, a novel approach designed to accelerate Boltzmann sampling for high-dimensional systems. By leveraging the fact that stochastic trajectories, like their deterministic counterparts, can also be reweighted according to their generalized work30,56, we introduce stochastic perturbations into the flow model, which allows us to avoid Jacobian calculations. Importantly, with certain choices of stochastic perturbations, a Simplified Flow Perturbation Entropy Estimator can be obtained. When combined with Sequential Monte Carlo (SMC) samplers, this estimator achieves substantial speedups compared to brute-force Jacobian evaluation while ensuring unbiased Boltzmann sampling.

Our flow perturbation method is not the first attempt to incorporate stochasticity into flow models. Wu et al.30 introduced the Stochastic Normalizing Flow (SNF), which inserts stochastic layers between deterministic NF layers. However, their approach still requires calculating the Jacobian of the NF layers, potentially limiting its applicability to high-dimensional systems. Other sampling methods based on stochastic differential equations (SDEs) also exist57,58,59. Compared with these SDE-based approaches, our method requires MCMC to explore a lower-dimensional space. Specifically, our method requires sampling only over the latent variable and a single noise vector. In contrast, SDE-based approaches introduce a new random variable at each integration step, requiring Monte Carlo (MC) sampling over the latent variable and the full set of random noise variables across all steps. These advantages make the flow perturbation method particularly well suited for Boltzmann sampling of high-dimensional systems.

Our Simplified Flow Perturbation Entropy Estimator has been benchmarked on low- and high-dimensional Gaussian Mixture Models (GMMs), demonstrating exceptional Boltzmann sampling performance with orders-of-magnitude speedups over brute-force Jacobian calculations. It has also been successfully applied to Boltzmann sampling of a Chignolin mutant CL02560,61,62 with explicit representation of all atomic Cartesian coordinates—a task computationally infeasible with brute-force methods—and delivered more accurate results than the Hutchinson estimator. These results underscore the potential of our approach for tackling larger and more complex molecular systems.

Results

Flow perturbation

Flow-based generative models, such as NF and CNF, generate configurations of the target system by first sampling from a simple prior distribution and then transforming these samples through an invertible and differentiable map, denoted as f: z → x. With an appropriately trained f, these models can generate configurations of complex many-body systems such as proteins. However, the configurations they generate are not necessarily unbiased samples of the target Boltzmann distribution, owing to factors such as the limited capacity of the flow model, insufficient training, and biases in the training data16.

To ensure unbiased Boltzmann sampling, one can reweight trajectories generated by the flow model by the exponential of the negative generalized work, \(e^{-W}\), as illustrated in Fig. 1a. The generalized work W of a trajectory z → x is defined as:

\[W({\bf{z}}\to {\bf{x}})={u}_{X}({\bf{x}})-{u}_{Z}({\bf{z}})-\Delta S({\bf{z}}\to {\bf{x}}).\tag{1}\]

Here, uX(x) and uZ(z) represent the dimensionless potential energies of the Boltzmann distribution \(\frac{1}{{Z}_{X}}\exp (-{u}_{X}({{\bf{x}}}))\) and the prior distribution \(q({{\bf{z}}})=\frac{1}{{Z}_{Z}}\exp (-{u}_{Z}({{\bf{z}}}))\), respectively. The entropy term ΔS is determined by the Jacobian determinant of the flow,

\[\Delta S({\bf{z}}\to {\bf{x}})=\ln \left\vert \det \frac{\partial {\bf{f}}({\bf{z}})}{\partial {\bf{z}}}\right\vert .\tag{2}\]

Calculating this entropy term is challenging and computationally intensive, especially for high-dimensional systems. This is because it requires D backpropagation passes through the flow, where D is the dimension of the system.
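For concreteness, the following NumPy sketch (ours, not the authors' implementation) evaluates the generalized work \(W=u_X({\bf{x}})-u_Z({\bf{z}})-\Delta S\) with \(\Delta S=\ln|\det \partial {\bf{f}}/\partial {\bf{z}}|\) by assembling the Jacobian one column at a time, so the number of flow evaluations grows linearly with D. The elementwise map `toy_flow`, the standard-Gaussian prior, and the Gaussian target energy are hypothetical stand-ins, and central finite differences play the role of the backpropagation passes of a real implementation.

```python
import numpy as np

def toy_flow(z):
    """Hypothetical invertible flow x = 2 z + 0.5 tanh(z) (elementwise)."""
    return 2.0 * z + 0.5 * np.tanh(z)

def brute_force_log_det(f, z, h=1e-5):
    """ln|det df/dz| from a Jacobian built column by column.

    Each of the D columns costs two extra flow evaluations (central
    differences), so the total cost grows linearly with D.
    """
    D = z.shape[0]
    jac = np.empty((D, D))
    for i in range(D):
        step = np.zeros(D)
        step[i] = h
        jac[:, i] = (f(z + step) - f(z - step)) / (2.0 * h)
    _, log_abs_det = np.linalg.slogdet(jac)
    return log_abs_det

def generalized_work(z, f, u_x, u_z):
    """W = u_X(x) - u_Z(z) - Delta S, with x = f(z) and Delta S = ln|det df/dz|."""
    return u_x(f(z)) - u_z(z) - brute_force_log_det(f, z)

u_z = lambda z: 0.5 * np.sum(z**2)         # standard Gaussian prior (assumed)
u_x = lambda x: 0.5 * np.sum(x**2) / 4.0   # hypothetical target N(0, 4 I)

rng = np.random.default_rng(0)
zs = rng.standard_normal((1000, 3))
work = np.array([generalized_work(z, toy_flow, u_x, u_z) for z in zs])
weights = np.exp(-(work - work.min()))     # e^{-W}, shifted to avoid overflow
weights /= weights.sum()                   # self-normalized importance weights
```

For a real flow, the loop over columns is exactly what becomes prohibitive when D reaches the thousands.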

Fig. 1: a Reweighting trajectories generated by a flow model. The prior distribution, shown in the middle, is Gaussian, while the target distribution features two peaks of different heights. The original trajectories, shown on the left, fail to sample the target distribution correctly: roughly equal numbers of trajectories reach each peak despite their differing heights. On the right, the trajectories are reweighted by \(e^{-W}\), so that trajectories arriving in lower-probability regions receive less weight. This reweighting ensures unbiased sampling of the target distribution. b An illustration of the flow perturbation method. The left panel shows the forward trajectories (z → x) and the corresponding backward trajectories (x → z) generated by the original flow model, while the right panel shows the trajectories generated by the perturbed flow model.

To address this computational challenge, we introduce the flow perturbation method. This method is based on the fact that the reweighting scheme applies not only to deterministic flows but also to stochastic processes. For stochastic trajectories, reweighting is performed using the exponential of the negative work, just as in the deterministic case. The key difference, however, is that the entropy term of a stochastic trajectory is determined by the ratio of the conditional probabilities of this trajectory and its corresponding reverse trajectory30,56, which offers a way to avoid the computationally intensive Jacobian calculation.

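To implement the flow perturbation method, we add the following stochastic perturbations to the flow and the inverse flow, which create forward and backward stochastic processes:

\[{\bf{x}}={\bf{f}}({\bf{z}})+{{\boldsymbol{\sigma }}}_{{\bf{f}}}({\bf{z}})\,{\boldsymbol{\epsilon }},\tag{3}\]

\[{\bf{z}}={{\bf{f}}}^{-1}({\bf{x}})+{{\boldsymbol{\sigma }}}_{{\bf{b}}}({\bf{x}})\,\tilde{{\boldsymbol{\epsilon }}}.\tag{4}\]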

Here \({{\boldsymbol{\epsilon }}},\,\tilde{{{\boldsymbol{\epsilon }}}}\in {{\mathbb{R}}}^{D}\) are random noise vectors drawn from a standard Gaussian distribution, and \({{{\boldsymbol{\sigma }}}}_{{{\bf{f}}}}({{\bf{z}}}),{{{\boldsymbol{\sigma }}}}_{{{\bf{b}}}}({{\bf{x}}})\in {{\mathbb{R}}}^{D\times D}\) are matrices that scale the forward and backward noise, respectively. An illustration of the perturbed flow is shown in Fig. 1b.

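With the forward and backward processes defined in Eqs. (3) and (4), the entropy of a forward trajectory z → x is given by

\[\Delta S({\bf{z}}\to {\bf{x}})=\frac{1}{2}\left(\parallel {\boldsymbol{\epsilon }}{\parallel }^{2}-\parallel \tilde{{\boldsymbol{\epsilon }}}{\parallel }^{2}\right)+\ln \left\vert \det {{\boldsymbol{\sigma }}}_{{\bf{f}}}({\bf{z}})\right\vert -\ln \left\vert \det {{\boldsymbol{\sigma }}}_{{\bf{b}}}({\bf{x}})\right\vert ,\tag{5}\]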

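where

\[\tilde{{\boldsymbol{\epsilon }}}={{\boldsymbol{\sigma }}}_{{\bf{b}}}{({\bf{x}})}^{-1}\left({\bf{z}}-{{\bf{f}}}^{-1}({\bf{x}})\right)\tag{6}\]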

is the exact noise needed to bring x back to z. A detailed derivation for this expression of entropy can be found in Supplementary Sec. A.
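A minimal NumPy sketch of one perturbed trajectory follows. It assumes isotropic scaling matrices \({{\boldsymbol{\sigma }}}_{{\bf{f}}}=\sigma_f{\bf{I}}\) and \({{\boldsymbol{\sigma }}}_{{\bf{b}}}=\sigma_b{\bf{I}}\) with scalar \(\sigma_f,\sigma_b\), and takes the entropy to be the Gaussian log-ratio \(\ln p({\bf{z}}\mid {\bf{x}})-\ln p({\bf{x}}\mid {\bf{z}})=\frac{1}{2}(\parallel {\boldsymbol{\epsilon }}{\parallel }^{2}-\parallel \tilde{{\boldsymbol{\epsilon }}}{\parallel }^{2})+D\ln \sigma_f-D\ln \sigma_b\), which is our reading of the entropy of a forward trajectory; the function name and the linear test flow are hypothetical. Only evaluations of f and f^{-1} are needed, never their Jacobians.

```python
import numpy as np

def perturbed_trajectory(z, f, f_inv, sigma_f, sigma_b, rng):
    """Samples x from the perturbed flow and returns (x, Delta S) per row of z.

    z has shape (N, D); sigma_f(z) and sigma_b(x) return scalars or shape (N,)
    arrays, i.e. isotropic scaling matrices (an assumption of this sketch).
    """
    n, d = z.shape
    eps = rng.standard_normal((n, d))
    s_f = np.broadcast_to(sigma_f(z), (n,))
    x = f(z) + s_f[:, None] * eps                # forward perturbation of f(z)
    s_b = np.broadcast_to(sigma_b(x), (n,))
    eps_tilde = (z - f_inv(x)) / s_b[:, None]    # noise that brings x back to z
    delta_s = (0.5 * (np.sum(eps**2, axis=1) - np.sum(eps_tilde**2, axis=1))
               + d * np.log(s_f) - d * np.log(s_b))
    return x, delta_s

# Usage: a hypothetical linear flow x = a * z with Jacobian diag(a), |det| = prod(a).
rng = np.random.default_rng(1)
a = np.array([1.2, 0.9])
z = rng.standard_normal((200_000, 2))
_, ds = perturbed_trajectory(z, lambda v: a * v, lambda v: v / a,
                             lambda v: 0.05, lambda v: 0.05, rng)
print(np.mean(np.exp(ds)), np.prod(a))   # the two numbers should be close
```

Averaging \(e^{\Delta S}\) over many perturbed trajectories recovers |det ∂f/∂z| for this linear flow, which is the sense in which the stochastic entropy can stand in for the exact one during reweighting; individual estimates, however, fluctuate.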

While this entropy formulation eliminates the need for Jacobian calculations, it does not guarantee an increase in the efficiency of Boltzmann sampling. In fact, its efficiency largely depends on the choice of the forward and backward scaling matrices σf(z) and σb(x). Poorly chosen σf(z) and σb(x) can significantly increase the forward path Kullback–Leibler (KL) divergence of the perturbed flow (as defined in Eq. (26) in the “Methods” section), in which case a far greater number of trajectories would be required for accurate reweighting63. This additional burden may outweigh the computational savings achieved by avoiding Jacobian evaluations.

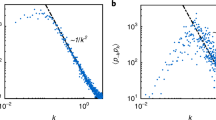

To illustrate the impact of the choice of scaling matrices, consider an example where σf(z) and σb(x) are set as constants. The base flow model in this example targets a GMM as the Boltzmann distribution and is implemented as a diffusion ODE (see “Methods”). Figure 2 shows that with this naive choice of scaling matrices, the forward path KL divergence of the perturbed flow increases significantly compared with that of the base flow model: for a 1000-dimensional system, the KL divergence increases by 3000, so that \(e^{3000}\) times more trajectories would be required to match the sampling accuracy of the base flow model. This entirely offsets the computational savings of avoiding Jacobian evaluations.

Fig. 2: Forward path KL divergence for different choices of scaling matrices. “Constant” uses fixed scaling matrices (0.001). “KL loss” fixes σf(z) at 0.001 and trains \({{\boldsymbol{\sigma }}}_{{\bf{b}}}({\bf{x}})\) to minimize the KL divergence directly, while “\(\left\vert \parallel {{\boldsymbol{\epsilon }}}{\parallel }^{2}-\parallel \tilde{{{\boldsymbol{\epsilon }}}}{\parallel }^{2}\right\vert\)” employs the loss from Eq. (7) to train \({{\boldsymbol{\sigma }}}_{{\bf{b}}}({\bf{x}})\). “Simplified FP” uses the Simplified FP Entropy estimator (Eq. 9) to compute the KL divergence. The “baseline” represents the KL divergence of the base flow model, with entropy calculated via brute-force Jacobian evaluation.

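To improve reweighting efficiency, it is therefore crucial to choose σf(z) and σb(x) well. One approach is to model these scaling matrices as neural networks trained to minimize the forward path KL divergence. In practice, however, directly using the KL divergence as the loss function does not always lead to the best model performance. Instead, we found that the following loss function:

\[{\mathcal{L}}={{\mathbb{E}}}_{{\bf{z}},{\boldsymbol{\epsilon }}}\left\vert \frac{1}{2}\left(\parallel {\boldsymbol{\epsilon }}{\parallel }^{2}-\parallel \tilde{{\boldsymbol{\epsilon }}}{\parallel }^{2}\right)\right\vert ,\tag{7}\]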

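where

\[\tilde{{\boldsymbol{\epsilon }}}={{\boldsymbol{\sigma }}}_{{\bf{b}}}{({\bf{x}})}^{-1}\left[{\bf{z}}-{{\bf{f}}}^{-1}({\bf{x}})\right],\quad {\bf{x}}={\bf{f}}({\bf{z}})+{{\boldsymbol{\sigma }}}_{{\bf{f}}}({\bf{z}})\,{\boldsymbol{\epsilon }},\tag{8}\]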

yields a substantially better-trained model, as illustrated in Fig. 2. This loss function corresponds to the absolute value of the first term in the entropy defined in Eq. (5), averaged over all possible forward trajectories. For further details on this loss function, please refer to Supplementary Sec. D.

When modeling and training σf(z) and σb(x) as neural networks, one should in practice adopt a low-dimensional representation. A full matrix parametrization would require neural networks with \(D^{2}\) output nodes, which demands an enormous amount of training data. For instance, to generate the results in Fig. 2, we set σf(z) as a constant and modeled σb(x) as a scalar function, i.e., \({{{\boldsymbol{\sigma }}}}_{{{\bf{b}}}}:{{\mathbb{R}}}^{D}\to {\mathbb{R}}\).
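The sketch below illustrates the loss of Eq. (7) under the simplest possible parametrization: σf is a constant and σb is a single scalar (a stand-in for the scalar-output network described above), tuned here by a grid search rather than gradient descent. The loss is taken as the mean of \(\left\vert \parallel {\boldsymbol{\epsilon }}{\parallel }^{2}-\parallel \tilde{{\boldsymbol{\epsilon }}}{\parallel }^{2}\right\vert\), matching the Fig. 2 legend; an overall factor of 1/2 does not change the minimizer. For the hypothetical isotropic flow f(z) = c z, the backward noise equals \(-{\boldsymbol{\epsilon }}\) exactly when σb = σf / c, so the loss vanishes there.

```python
import numpy as np

def fp_loss(z, eps, f, f_inv, sigma_f, sigma_b):
    """Monte Carlo estimate of E| ||eps||^2 - ||eps_tilde||^2 | with scalar scales."""
    x = f(z) + sigma_f * eps                  # forward perturbed step
    eps_tilde = (z - f_inv(x)) / sigma_b      # backward noise, which depends on f
    return np.mean(np.abs(np.sum(eps**2, axis=1) - np.sum(eps_tilde**2, axis=1)))

rng = np.random.default_rng(0)
c, sigma_f = 2.0, 1e-3                        # hypothetical flow f(z) = c z
z = rng.standard_normal((5000, 10))
eps = rng.standard_normal((5000, 10))
grid = np.linspace(1e-4, 2e-3, 39)            # candidate values of sigma_b
losses = [fp_loss(z, eps, lambda v: c * v, lambda v: v / c, sigma_f, s) for s in grid]
best = grid[int(np.argmin(losses))]           # expected near sigma_f / c = 5e-4
```

Unlike the KL objective, this loss does not require the log-determinant terms of the scaling matrices, which is one practical reason it can be easier to optimize.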

Even with low-dimensional representations, training scaling matrices incurs additional computational costs. However, under certain limiting conditions, the entropy term defined in Eq. (5) simplifies significantly, removing its dependence on these scaling matrices entirely. These conditions are: (1) both scaling matrices, σf(z) and σb(x), become isotropic and reduce to scalar functions; (2) both scalar functions are scaled by a global parameter α; and (3) the limit α → 0 is taken. Under these constraints, the entropy term simplifies to the compact form:

\[\Delta S({\bf{z}},\hat{{\boldsymbol{\epsilon }}})=-D\ln {\left\Vert \frac{\partial {{\bf{f}}}^{-1}}{\partial {\bf{x}}}\hat{{\boldsymbol{\epsilon }}}\right\Vert },\tag{9}\]

where \(\hat{{{\boldsymbol{\epsilon }}}}\) is a random unit vector drawn from the uniform distribution on the unit sphere in \({{\mathbb{R}}}^{D}\), and \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\) is the Jacobian of the inverse flow evaluated at x = f(z). This simplified expression, termed the Simplified Flow Perturbation Entropy Estimator, is derived in Supplementary Sec. B, where we also show that reweighting with this estimator preserves unbiased sampling of the target Boltzmann distribution.
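As a numerical check, the sketch below draws unit vectors uniformly on the sphere (by normalizing Gaussian vectors) and evaluates \(\Delta S({\bf{z}},\hat{{\boldsymbol{\epsilon }}})=-D\ln \Vert (\partial {{\bf{f}}}^{-1}/\partial {\bf{x}})\hat{{\boldsymbol{\epsilon }}}\Vert\), our reading of the compact form above. For a hypothetical linear flow with Jacobian diag(2, 1, 1.5), averaging \(e^{\Delta S}\) over the noise recovers |det ∂f/∂z| = 3, consistent with the unbiasedness property stated above, while individual estimates spread more as the Jacobian becomes more anisotropic.

```python
import numpy as np

def random_unit_vectors(n, d, rng):
    """n vectors drawn uniformly from the unit sphere in R^d."""
    v = rng.standard_normal((n, d))
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def simplified_fp_entropy(inv_jvp, eps_hat):
    """Single-noise estimator Delta S = -D ln ||(df^{-1}/dx) eps_hat|| (per row).

    inv_jvp(v) must return the inverse-flow Jacobian, evaluated at x = f(z),
    applied to each row of v.
    """
    d = eps_hat.shape[-1]
    return -d * np.log(np.linalg.norm(inv_jvp(eps_hat), axis=-1))

# Hypothetical linear flow x = a * z: the inverse Jacobian is diag(1 / a).
rng = np.random.default_rng(2)
a = np.array([2.0, 1.0, 1.5])
eps_hat = random_unit_vectors(200_000, 3, rng)
ds = simplified_fp_entropy(lambda v: v / a, eps_hat)
estimate = np.mean(np.exp(ds))   # should approach prod(a) = 3
```

For an isotropic Jacobian c I the estimator returns D ln c for every noise draw, so the variance comes entirely from anisotropy.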

This Simplified Flow Perturbation Entropy Estimator eliminates the need to train scaling matrices entirely. Instead, it only requires computing a Jacobian-vector product for the inverse flow, which can be efficiently handled using Automatic Differentiation frameworks or numerical perturbation methods, provided the inverse flow is explicitly available. For example, for a CNF, inverting the flow simply involves evolving the underlying ODE backward in time. In such cases, the computational cost of obtaining \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\) is equivalent to two passes through the flow, which is significantly less than computing the full Jacobian. However, when the inverse mapping f−1 is not given in closed form, calculating \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\) becomes considerably more challenging, potentially limiting the applicability of this approach. For a more detailed discussion, refer to the “Methods” section.
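When f^{-1} is available as an explicit function, the product \(\frac{\partial {{\bf{f}}}^{-1}}{\partial {\bf{x}}}\hat{{\boldsymbol{\epsilon }}}\) can be approximated by the numerical-perturbation route mentioned above, using two evaluations of the inverse flow regardless of D. The sketch below applies central differences to a hypothetical closed-form inverse map; for a CNF, `f_inv` would integrate the ODE backward in time, and an AD framework (for example `jax.jvp`) would give the product exactly.

```python
import numpy as np

def inverse_jvp_fd(f_inv, x, v, h=1e-5):
    """Central-difference approximation of (df^{-1}/dx) v.

    Two evaluations of the inverse flow per product, independent of the
    dimension; works row-wise for batched x and v of shape (N, D).
    """
    return (f_inv(x + h * v) - f_inv(x - h * v)) / (2.0 * h)

f_inv = lambda x: x + 0.1 * np.tanh(x)    # hypothetical explicit inverse flow
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 5))
v = rng.standard_normal((4, 5))
approx = inverse_jvp_fd(f_inv, x, v)
exact = (1.0 + 0.1 / np.cosh(x) ** 2) * v  # analytic Jacobian is diagonal here
```

The step size h trades truncation error against round-off; an exact JVP from automatic differentiation avoids that choice entirely.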

The forward path KL divergence achieved with the Simplified FP Entropy estimator is comparable to that obtained by training scaling matrices with the loss in Eq. (7), as shown in Fig. 2. However, it remains slightly higher than that of the base flow model, by ~200 at 1000 dimensions. This discrepancy arises because the simplified estimator requires the scaling matrices to be scalar functions, which leads to larger stochastic fluctuations when the flow’s Jacobian is highly anisotropic, as indicated by Eq. (9). Consequently, using the Simplified FP Entropy estimator directly for Importance Sampling (IS) would require an astronomically larger number of trajectories, approximately \(e^{200}=O(10^{86})\) times more than using the base flow model with full Jacobian calculations. However, when combined with sampling methods that concentrate on high-probability regions, such as Metropolis MC and SMC, our simplified FP entropy estimator can achieve greater Boltzmann sampling efficiency than the full Jacobian calculation, as demonstrated later.
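The sample-size figures quoted in this section follow the rule used in the text that the number of trajectories needed for importance sampling grows roughly as the exponential of the forward path KL divergence. A small helper, written only for illustration, converts a KL gap in nats into a power of ten:

```python
import math

def sample_factor_log10(delta_kl_nats):
    """log10 of e**delta_kl, the approximate growth factor in required trajectories."""
    return delta_kl_nats / math.log(10.0)

print(f"e^200  ~ 10^{sample_factor_log10(200):.1f}")   # 10^86.9
print(f"e^3000 ~ 10^{sample_factor_log10(3000):.1f}")  # 10^1302.9
```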

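The simplified FP entropy estimator can be naturally generalized to incorporate multiple noise vectors, yielding a more robust estimate of entropy. Specifically, we define the multi-noise estimator as the log-exponential average of N independent single-noise estimators:

\[\Delta {S}_{N}\left({\bf{z}},{\{{\hat{{\boldsymbol{\epsilon }}}}_{i}\}}_{i=1}^{N}\right)=\ln \left[\frac{1}{N}\mathop{\sum }_{i=1}^{N}\exp \left(\Delta S({\bf{z}},{\hat{{\boldsymbol{\epsilon }}}}_{i})\right)\right],\tag{10}\]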

where each \(\Delta S({{\bf{z}}},{\hat{{{\boldsymbol{\epsilon }}}}}_{i})\) is the single-noise simplified FP estimator given by Eq. (9). Unlike a naive arithmetic mean of single-noise estimators—which introduces systematic bias (as demonstrated in Supplementary Sec. C)—this log-exponential formulation preserves unbiased sampling of the Boltzmann distribution, as proven in the same supplementary section. While incorporating multiple noise vectors reduces the variance of entropy estimates, each additional vector requires two extra passes through the flow, thus increasing computational cost. A comprehensive comparison of single-noise and multi-noise estimators on benchmark systems is presented in the “Performance on benchmark systems” section.
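A numerically stable way to evaluate the log-exponential average subtracts the largest single-noise value before exponentiating, as in the NumPy sketch below (the function name is ours). By Jensen's inequality the result is never smaller than the arithmetic mean of the same values, which is why the two averages behave differently under reweighting.

```python
import numpy as np

def multi_noise_entropy(delta_s):
    """ln[(1/N) sum_i exp(delta_s_i)] along the last axis, computed stably."""
    s = np.asarray(delta_s, dtype=float)
    m = np.max(s, axis=-1, keepdims=True)
    return np.squeeze(m, axis=-1) + np.log(np.mean(np.exp(s - m), axis=-1))

# Two single-noise estimates (N = 2) for each of three hypothetical samples.
single = np.array([[0.0, np.log(3.0)],
                   [5.0, 5.0],
                   [1000.0, 998.0]])
combined = multi_noise_entropy(single)     # first entry equals ln 2
naive = single.mean(axis=-1)               # arithmetic mean, biased under reweighting
```

The max-shift keeps the third row finite even though exp(1000) overflows in double precision.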

Comparison with other methods

This section compares the simplified FP entropy estimator with SNF and the Hutchinson trace estimator. Table 1 highlights their key features, focusing on computational cost and accuracy in Boltzmann sampling. Detailed discussions of the differences between these techniques are provided in the following subsections.

SNF

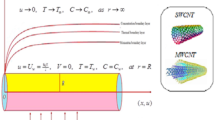

Flow perturbation is not the only approach that adds stochasticity to flow-based generative models. Wu et al.’s SNF30 introduces stochastic layers into NFs to overcome the topological limitations inherent in deterministic mappings. Figure 3 compares the structures of our Perturbed Flow and SNF, highlighting a key difference between them: the order of deterministic and stochastic operations during the forward and backward processes. In Perturbed Flow, the deterministic mapping is always followed by the stochastic perturbation in both the forward and backward processes. By contrast, SNF reverses this order in the backward pass.

Because of this design choice, SNF still requires computing the Jacobian of the deterministic mapping, a computationally intensive task in high-dimensional settings. Our approach circumvents this requirement.

The loss function defined in Eq. (7) might look similar to the entropy of the Langevin-type layer in SNF (see Eq. (20) of the SNF paper). However, the definition of the backward noise in these two terms is different. The backward noise in this loss function, which is defined in Eq. (8), encapsulates information about the deterministic transformation. As a comparison, the backward noise in the entropy term of the Langevin-type layer of SNF, defined in Eq. (19) of the SNF paper, is independent of the deterministic mapping.

Hutchinson estimator

Another approach to address the computational challenges of Jacobian determinants in flow-based generative models is the Hutchinson trace estimator, which provides an efficient way to approximate the divergence of the vector field governing the CNF without computing the full Jacobian.

A fundamental problem with the Hutchinson estimator is that it introduces biases into the reweighted estimates of observables. During reweighting, the stochastic noise introduced into the entropy by the Hutchinson estimator is transformed by the nonlinear weighting function \(e^{-W}\), which leads to a persistent bias in the reweighted estimates of observables that does not vanish even with an infinite number of samples. In contrast, FP is inherently unbiased in reweighting.
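The bias can be seen in a two-dimensional toy case where the expectation over Rademacher probe vectors can be enumerated exactly. The Hutchinson estimate \({\bf{v}}^{\top }A{\bf{v}}\) is unbiased for tr A, yet the average of its exponential (the quantity that enters the weights, with the hypothetical matrix A standing in for the Jacobian of the velocity field) differs from exp(tr A) whenever A has off-diagonal entries.

```python
import itertools
import numpy as np

def exact_rademacher_moments(A):
    """Exact averages over all Rademacher probes v in {-1, +1}^D.

    Returns (E[v^T A v], E[exp(v^T A v)], tr A).
    """
    d = A.shape[0]
    probes = np.array(list(itertools.product([-1.0, 1.0], repeat=d)))
    quad = np.einsum("ki,ij,kj->k", probes, A, probes)   # v^T A v for each probe
    return quad.mean(), np.exp(quad).mean(), np.trace(A)

A = np.array([[0.1, 0.3],
              [0.3, -0.2]])
mean_trace, mean_exp, trace = exact_rademacher_moments(A)
# mean_trace == trace (unbiased), but mean_exp = exp(trace) * cosh(0.6) > exp(trace)
```

Adding more probes shrinks this gap but, for any finite N, does not remove it, in line with the persistent bias described above.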

In terms of computational cost, the Hutchinson estimator requires one forward pass of the flow to compute f(z) and N backpropagation passes along the flow to compute entropy, where N is the number of random vectors (noises) used in the estimator. Each backpropagation pass has a computational cost comparable to integrating the neural ODE. Therefore, the total computational cost for the Hutchinson estimator is ~1 + N passes.
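The pass counts can be tallied with a back-of-the-envelope helper. It encodes only the figures stated in this section (D backpropagation passes for brute-force Jacobian evaluation, N for Hutchinson, and two per noise vector for the simplified FP estimator), under our assumption that every method also pays one forward pass to obtain x = f(z). Actual wall-clock costs depend on the implementation.

```python
def passes_per_sample(method, D, N=1):
    """Approximate cost, in passes through the flow, to get x = f(z) and its entropy."""
    extra = {
        "bfjacob": D,        # one backpropagation pass per dimension
        "hutchinson": N,     # one backpropagation pass per probe vector
        "fp": 2 * N,         # two passes per noise vector for the Jacobian-vector product
    }[method]
    return 1 + extra

for method, n in [("bfjacob", 1), ("hutchinson", 10), ("fp", 2)]:
    print(method, passes_per_sample(method, D=1000, N=n))
```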

SMC

IS and Metropolis MC methods are often integrated with flow-based models to perform Boltzmann sampling tasks. IS assigns importance weights directly to trajectories, whereas Metropolis MC uses an acceptance-rejection mechanism based on importance weights to ensure unbiased Boltzmann sampling. However, both methods face significant practical challenges in high-dimensional systems. Specifically, IS becomes impractical due to the extremely large number of trajectories required to achieve reliable estimates. Metropolis MC, when all coordinates of the random variables are updated simultaneously in each trial move, typically suffers from a very low acceptance rate. While updating only a subset of coordinates39 may increase the acceptance rate, it can cause the Markov chain to be trapped in local metastable states, compromising sampling quality.

To address these limitations, we propose integrating the perturbed flow with an SMC sampler64,65, also known as a particle filter. Unlike IS and Metropolis MC, SMC bridges the gap between a tractable prior distribution, π0(Γ), and the complex target distribution, πT(Γ), through a sequence of intermediate distributions \({\{{\pi }_{n}(\Gamma )\}}_{n=0}^{T}\). This annealing strategy mitigates local trapping by incrementally adapting configurations toward the target.

Here, \(\Gamma=({{\bf{z}}},\hat{{{\boldsymbol{\epsilon }}}})\) denotes the particle configuration, consisting of the latent variable z of the base flow model f(z) and \(\hat{{{\boldsymbol{\epsilon }}}}\), a normalized noise vector uniformly distributed on the unit sphere in \({{\mathbb{R}}}^{D}\). The prior distribution π0(Γ) is given by \(q({{\bf{z}}}){{\mathcal{U}}}(\hat{{{\boldsymbol{\epsilon }}}})\), where \(q({{\bf{z}}})=\frac{1}{{Z}_{Z}}\exp (-{u}_{Z}({{\bf{z}}}))\) is the prior distribution for z, and \({{\mathcal{U}}}(\hat{{{\boldsymbol{\epsilon }}}})\) is the uniform distribution on the unit sphere. The target distribution πT(Γ), corresponding to the distribution \({p}^{*}({{\bf{z}}},\hat{{{\boldsymbol{\epsilon }}}})\) defined in Eq. (S60) in Supplementary Sec. B, is expressed as:

\[{\pi }_{T}(\Gamma )=\frac{{Z}_{Z}}{{Z}_{X}}\exp \left(-{W}^{*}(\Gamma )\right)q({\bf{z}}){\mathcal{U}}(\hat{{\boldsymbol{\epsilon }}}),\]

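where W*(Γ) represents the generalized work with the entropy term computed using the Simplified FP Entropy Estimator (Eq. 9):

\[{W}^{*}(\Gamma )={u}_{X}\left({\bf{f}}({\bf{z}})\right)-{u}_{Z}({\bf{z}})-\Delta S({\bf{z}},\hat{{\boldsymbol{\epsilon }}}).\]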

Since the normalization constant ZX of the target Boltzmann distribution \(\frac{1}{{Z}_{X}}\exp (-{u}_{X}({{\bf{x}}}))\) is typically unknown, it is convenient to introduce the explicitly computable unnormalized density:

\[{\gamma }_{T}(\Gamma )=\exp \left(-{W}^{*}(\Gamma )\right){\pi }_{0}(\Gamma ),\]

which simplifies the calculation of unnormalized particle weights in the SMC sequence, as discussed in detail below.

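The intermediate distributions connecting the prior and target are implemented through a geometric annealing path:

\[{\pi }_{n}(\Gamma )\propto {\pi }_{0}{(\Gamma )}^{1-{\beta }_{n}}\,{\pi }_{T}{(\Gamma )}^{{\beta }_{n}}\propto \exp \left(-{\beta }_{n}{W}^{*}(\Gamma )\right){\pi }_{0}(\Gamma ),\]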

where βn denotes a predefined annealing schedule with β0 = 0 (pure prior) and βT = 1 (pure target). The explicitly computable unnormalized density \({\gamma }_{n}(\Gamma )=\exp \left(-{\beta }_{n}{W}^{*}(\Gamma )\right){\pi }_{0}(\Gamma )\) enables direct calculation of unnormalized particle weights at every stage, as detailed later.
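In log space, the annealed unnormalized density is a one-line function of a particle's generalized work and prior log density, as in the sketch below (names are ours; the linear schedule is only an example, since no particular schedule is specified here). Because the path is geometric, ln γn interpolates linearly in βn between ln π0 and ln γT.

```python
import numpy as np

def log_gamma(beta, w_star, log_pi0):
    """ln gamma_n(Gamma) = -beta_n W*(Gamma) + ln pi_0(Gamma)."""
    return -beta * np.asarray(w_star) + np.asarray(log_pi0)

betas = np.linspace(0.0, 1.0, 11)   # example schedule with beta_0 = 0 and beta_T = 1
w_star = np.array([0.5, 2.0])        # hypothetical generalized work of two particles
log_pi0 = np.array([-1.0, -3.0])     # hypothetical prior log densities
path = np.array([log_gamma(b, w_star, log_pi0) for b in betas])
```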

The SMC implementation presented here, which bridges the flow’s base distribution to the base distribution reweighted by the generalized work, is similar in spirit to ref. 41. One difference, however, is that ref. 41 performs annealing in the configuration space, whereas the approach here performs annealing in the latent space. Another difference is that in our approach, the latent space is augmented with the noise vector \(\hat{{{\boldsymbol{\epsilon }}}}\), which enables us to circumvent the direct calculation of the flow’s Jacobian determinant via the Simplified FP Entropy Estimator (Eq. 9).

The SMC sampler approximates the evolving sequence of distributions {πn(Γ)} using an ensemble of weighted particles. Initialization begins by sampling particles from the prior distribution π0(Γ) and assigning each particle a uniform weight. These particles are then propagated sequentially from πn−1 to πn via a forward transition kernel \({K}_{n}(\Gamma,{\Gamma }^{{\prime} })\). In our framework, Kn is implemented as one or more applications of a neural MCMC move designed to leave πn invariant. Specifically, for each particle configuration \(\Gamma=({{\bf{z}}},\hat{{{\boldsymbol{\epsilon }}}})\), the neural MCMC move proposes a trial state \({\Gamma }^{{\prime} }\) by redrawing a subset of the coordinates of z and \(\hat{{{\boldsymbol{\epsilon }}}}\) from the prior. The partial-update strategy was first introduced in ref. 39, where the authors updated a subset of the coordinates of z per trial move. Here, we adopt the same strategy but extend it to partial updates of both z and \(\hat{{{\boldsymbol{\epsilon }}}}\). Such updates reduce the magnitude of each trial move, which helps maintain a practical acceptance rate in high-dimensional spaces. The trial state \({\Gamma }^{{\prime} }\) is then accepted with probability:

\[A(\Gamma \to {\Gamma }^{{\prime} })=\min \left\{1,\exp \left[-{\beta }_{n}\left({W}^{*}({\Gamma }^{{\prime} })-{W}^{*}(\Gamma )\right)\right]\right\},\tag{17}\]

where W* denotes the generalized work. This Metropolis-Hastings acceptance rule ensures that the distribution πn(Γ) remains invariant under each neural MCMC move, thus guaranteeing that repeated applications of this move also preserve πn.
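A vectorized sketch of one such move for J particles is given below, assuming a standard-Gaussian prior for z. Two details are our assumptions rather than statements from the text: the state stores an unnormalized Gaussian vector whose direction serves as \(\hat{{\boldsymbol{\epsilon }}}\) (so that redrawing some of its coordinates from N(0, 1) keeps the direction uniform on the sphere), and exactly `n_update` randomly chosen coordinates of the combined vector are redrawn per move. Because the proposal redraws coordinates from the prior, the prior terms cancel and only the change in W* enters the acceptance ratio.

```python
import numpy as np

def neural_mcmc_step(z, g, beta, w_star_fn, n_update, rng):
    """One partial-update Metropolis move at annealing parameter beta.

    z: (J, Dz) latent variables; g: (J, De) Gaussian vectors whose directions
    g / ||g|| play the role of the unit noise vectors. w_star_fn(z, eps_hat)
    returns the generalized work of each particle, shape (J,).
    """
    J, dz = z.shape
    state = np.concatenate([z, g], axis=1)
    # Rank random keys per row to pick exactly n_update coordinates per particle.
    ranks = rng.random(state.shape).argsort(axis=1).argsort(axis=1)
    proposal = np.where(ranks < n_update, rng.standard_normal(state.shape), state)

    def work(s):
        noise = s[:, dz:]
        return w_star_fn(s[:, :dz], noise / np.linalg.norm(noise, axis=1, keepdims=True))

    # Prior terms cancel for prior-redraw proposals; only W* enters the ratio.
    log_ratio = -beta * (work(proposal) - work(state))
    accept = np.log(rng.random(J)) < np.minimum(0.0, log_ratio)
    state = np.where(accept[:, None], proposal, state)
    return state[:, :dz], state[:, dz:], accept.mean()

# Usage with a hypothetical work W* = z_0^2 / 2: at beta = 1 the target for z_0
# is N(0, 1) * exp(-z_0^2 / 2), i.e. a Gaussian with variance 1/2.
rng = np.random.default_rng(0)
z, g = rng.standard_normal((4000, 2)), rng.standard_normal((4000, 2))
w_star = lambda z, eps_hat: 0.5 * z[:, 0] ** 2
for _ in range(60):
    z, g, acc_rate = neural_mcmc_step(z, g, 1.0, w_star, 2, rng)
```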

To compute particle importance weights during the sequential propagation, it is essential to specify the backward transition kernel \({L}_{n-1}({\Gamma }^{{\prime} },\Gamma )\). Following Neal66 and Del Moral et al.65, the backward kernel Ln−1 in our implementation is chosen as the reverse of the forward kernel Kn, which ensures that

\[{\gamma }_{n}(\Gamma )\,{K}_{n}(\Gamma,{\Gamma }^{{\prime} })={\gamma }_{n}({\Gamma }^{{\prime} })\,{L}_{n-1}({\Gamma }^{{\prime} },\Gamma ).\]

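This detailed balance condition, which corresponds to Eq. (30) of ref. 65, leads to a particularly simple rule for the incremental weight update. Specifically, the unnormalized weight of the jth particle is updated according to

\[{\tilde{\omega }}_{n}^{(\;j)}={\tilde{\omega }}_{n-1}^{(\;j)}\,\frac{{\gamma }_{n}\left({\Gamma }_{n-1}^{(\;j)}\right)}{{\gamma }_{n-1}\left({\Gamma }_{n-1}^{(\;j)}\right)},\]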

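which corresponds to Eq. (31) of ref. 65. Here, \({\Gamma }_{n-1}^{( \; j)}\) denotes the state of the jth particle at stage n−1. Substituting the definitions of γn and γn−1 into this equation yields

\[{\tilde{\omega }}_{n}^{(\;j)}={\tilde{\omega }}_{n-1}^{(\;j)}\exp \left[-({\beta }_{n}-{\beta }_{n-1})\,{W}^{*}\left({\Gamma }_{n-1}^{(\;j)}\right)\right],\]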

where \({W}^{*}({\Gamma }_{n-1}^{( \; j)})\) is the generalized work evaluated at the particle’s state at stage n−1.
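Working with log weights avoids overflow. The helper below (our naming) applies the incremental update; as a sanity check, if a particle were never moved, the increments over a full schedule from β0 = 0 to βT = 1 telescope to \(\ln e^{-W^{*}}\), the plain importance weight.

```python
import numpy as np

def update_log_weights(log_w_prev, beta_prev, beta_n, w_star_prev):
    """ln w~_n = ln w~_{n-1} - (beta_n - beta_{n-1}) W*(Gamma_{n-1}), per particle."""
    return np.asarray(log_w_prev) - (beta_n - beta_prev) * np.asarray(w_star_prev)

betas = np.linspace(0.0, 1.0, 6)      # example schedule
w_star = np.array([0.3, 1.7, -0.4])   # hypothetical work of three frozen particles
log_w = np.zeros(3)
for b_prev, b_n in zip(betas[:-1], betas[1:]):
    log_w = update_log_weights(log_w, b_prev, b_n, w_star)
```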

The sequential weight updates often concentrate probability mass on a small subset of particles. To mitigate this, SMC includes a resampling step that periodically refreshes the particle ensemble. In this step, particles with lower weights are removed, while those with higher weights are duplicated. This ensures computational resources remain concentrated in regions of the state space that significantly contribute to the target distribution.

In this work, resampling is performed adaptively rather than at fixed intervals. At each stage n, the effective sample size (ESS) is computed as

\[{{\rm{ESS}}}_{n}=\frac{1}{\mathop{\sum }_{j=1}^{J}{\left({\omega }_{n}^{(\;j)}\right)}^{2}},\]

where \({\omega }_{n}^{( \; j)}\) denotes the normalized weight of particle j. Resampling is triggered whenever the ESS drops below a predetermined fraction of the total particle count.
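The normalization, the ESS, and the adaptive trigger can be sketched as follows; τ is the user-chosen threshold fraction and the function names are ours. The ESS ranges from J for uniform weights down to 1 when a single particle carries all the weight.

```python
import numpy as np

def normalize_and_ess(log_w):
    """Normalized weights omega_n^(j) and ESS_n = 1 / sum_j (omega_n^(j))^2."""
    w = np.exp(log_w - np.max(log_w))    # shift by the maximum for stability
    w /= w.sum()
    return w, 1.0 / np.sum(w ** 2)

def needs_resampling(log_w, tau):
    """Adaptive rule: resample when the ESS drops below tau times the particle count."""
    _, ess = normalize_and_ess(log_w)
    return ess < tau * len(log_w)
```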

Algorithm 1 presents the proposed SMC sampler.

Algorithm 1

Sequential Monte Carlo Sampler

Input: Annealing schedule \({\{{\beta }_{n}\}}_{n=0}^{T}\) (β0 = 0, βT = 1), prior π0, target πT, resampling threshold τ ∈ (0, 1), particle count J, forward kernels {Kn}.

1. Initialization.

(a) Sample particles \({\Gamma }_{0}^{( \; j)} \sim {\pi }_{0}(\cdot )\) for j ∈ [J].

(b) Initialize both normalized and unnormalized weight \({\omega }_{0}^{( \; j)}={\tilde{\omega }}_{0}^{( \; j)}=\frac{1}{J}\) for j ∈ [J].

2. For n ∈ {1, …, T}, iterate:

(a) For each particle j ∈ [J], propagate it with the forward kernel Kn:

\[{\Gamma }_{n}^{(\;j)}\sim {K}_{n}\left({\Gamma }_{n-1}^{(\;j)},\,\cdot \right),\]

where Kn consists of multiple Neural MCMC steps (with an acceptance rule defined in Eq. 17).

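(b) Compute unnormalized weights for j ∈ [J]:

\[{\tilde{\omega }}_{n}^{(\;j)}={\tilde{\omega }}_{n-1}^{(\;j)}\exp \left[-({\beta }_{n}-{\beta }_{n-1})\,{W}^{*}\left({\Gamma }_{n-1}^{(\;j)}\right)\right].\]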

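(c) Normalize weights:

\[{\omega }_{n}^{(\;j)}=\frac{{\tilde{\omega }}_{n}^{(\;j)}}{\mathop{\sum }_{k=1}^{J}{\tilde{\omega }}_{n}^{(k)}}.\]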

(d) Compute ESS:

\[{{\rm{ESS}}}_{n}=\frac{1}{\mathop{\sum }_{j=1}^{J}{\left({\omega }_{n}^{(\;j)}\right)}^{2}}.\]

(e) If ESS < τJ or n = T:

i. Resample ancestor indices \({\{{a}^{ \; j}\}\,}_{j=1}^{J}\) according to \({\{{\omega }_{n}^{( \; j)}\}\,}_{j=1}^{J}\).

ii. Set \({\Gamma }_{n}^{( \; j )}={\Gamma }_{n}^{({a}^{ \; j})}\) and \({\omega }_{n}^{( \; j \, )}={\tilde{\omega }}_{n}^{( \; j \, )}=\frac{1}{J}\) for j ∈ [J].

Output: Particles \({\{{\Gamma }_{T}^{( \; j )}\}\,}_{j=1}^{J}\) approximating πT
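For readers who want to experiment, here is a compact NumPy sketch of Algorithm 1 on a generic standard-Gaussian state (trailing coordinates could play the role of the noise vector). It is a simplified illustration under stated assumptions, not the authors' implementation: the generalized work is supplied as an arbitrary function, Kn is a few partial-update Metropolis steps, and multinomial resampling via `Generator.choice` replaces the systematic resampling and KL reshuffling used in this work. With the hypothetical work W* = 1/2 − z0, the target for z0 is N(1, 1).

```python
import numpy as np

def smc_sampler(w_star_fn, dim, betas, n_particles, tau=0.5, n_mcmc=5, n_update=1, seed=0):
    """Algorithm 1 with a standard-Gaussian prior pi_0 on a dim-dimensional state.

    w_star_fn maps a (J, dim) array to the generalized work of each particle.
    """
    rng = np.random.default_rng(seed)
    J, T = n_particles, len(betas) - 1
    state = rng.standard_normal((J, dim))            # 1(a) sample from pi_0
    log_w = np.full(J, -np.log(J))                   # 1(b) uniform weights
    for n in range(1, T + 1):
        w_prev = w_star_fn(state)                    # W* at Gamma_{n-1}
        for _ in range(n_mcmc):                      # 2(a) K_n: moves leaving pi_n invariant
            ranks = rng.random(state.shape).argsort(axis=1).argsort(axis=1)
            prop = np.where(ranks < n_update, rng.standard_normal(state.shape), state)
            log_ratio = -betas[n] * (w_star_fn(prop) - w_star_fn(state))
            accept = np.log(rng.random(J)) < np.minimum(0.0, log_ratio)
            state = np.where(accept[:, None], prop, state)
        log_w = log_w - (betas[n] - betas[n - 1]) * w_prev   # 2(b) incremental weights
        w = np.exp(log_w - log_w.max())
        w /= w.sum()                                 # 2(c) normalize
        ess = 1.0 / np.sum(w ** 2)                   # 2(d) effective sample size
        if ess < tau * J or n == T:                  # 2(e) resample and reset weights
            state = state[rng.choice(J, size=J, p=w)]
            log_w = np.full(J, -np.log(J))
    return state

particles = smc_sampler(lambda s: 0.5 - s[:, 0], dim=2,
                        betas=np.linspace(0.0, 1.0, 11), n_particles=5000)
```

Note that the incremental weights use the particle states from before the MCMC moves, as in step 2(b).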

A variety of resampling algorithms are available—including multinomial67, stratified68, residual69,70, and systematic68,71 resampling methods—each differing in how particles are duplicated or discarded, and in the amount of variance they introduce. Among these, systematic resampling is widely favored for its relatively low variance and computational efficiency. However, a more recent method known as KL reshuffling, introduced by Kviman et al.72, has shown superior performance in various benchmark studies compared to systematic resampling. In this study, we adopt systematic resampling for the GMM benchmark system, given its widespread use as the default approach, while employing KL reshuffling for the more challenging task of Boltzmann sampling of protein configurations.
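Systematic resampling, the method adopted here for the GMM benchmark, needs only a single uniform draw: J evenly spaced pointers with a common random offset are matched against the cumulative weights. A NumPy sketch (function name ours) follows; each particle is copied either ⌊Jω⌋ or ⌈Jω⌉ times, which is the source of its low variance.

```python
import numpy as np

def systematic_resample(weights, rng):
    """Ancestor indices a^j for normalized weights via systematic resampling."""
    J = len(weights)
    pointers = (rng.random() + np.arange(J)) / J   # one shared uniform offset
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0                           # guard against round-off in the sum
    return np.searchsorted(cumulative, pointers, side="right")
```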

While rigorous mathematical analysis of SMC convergence remains challenging, practical insights into its performance can be gained by examining the genealogical structure of the particle ensemble, that is, the ancestral lineages tracing back to the initial particles. A key diagnostic is the survival rate of these lineages: if most original particles fail to propagate their “offspring” to the final iteration (a phenomenon termed sample impoverishment73), it typically indicates that the surviving cohort does not adequately explore the target distribution. Though not a formal convergence guarantee, this genealogical analysis provides an intuitive, scalable proxy for assessing SMC efficacy. It is particularly valuable in high-dimensional settings where more rigorous diagnostics can be difficult to apply.
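The lineage diagnostic only requires the ancestor indices recorded at each resampling event. The sketch below (our naming) maps every final particle back to the initial particle it descends from and reports the fraction of initial particles that still have descendants; a small fraction signals sample impoverishment.

```python
import numpy as np

def surviving_lineage_fraction(ancestor_history, n_particles):
    """Fraction of initial particles with at least one descendant at the end.

    ancestor_history[k][j] is the parent index of particle j at the k-th
    resampling event (the a^j of Algorithm 1), in chronological order.
    """
    roots = np.arange(n_particles)             # each particle starts as its own root
    for ancestors in ancestor_history:
        roots = roots[np.asarray(ancestors)]   # a child inherits its parent's root
    return np.unique(roots).size / n_particles
```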

Performance on benchmark systems

We evaluated the performance of our Simplified FP Entropy estimator for Boltzmann sampling using two benchmark systems: a GMM and the Chignolin mutant CL02560,61,62. The GMM consists of two well-separated, highly anisotropic Gaussian distributions, making it a stringent test for our estimator due to the variance introduced by the anisotropy. The Chignolin mutant, composed of 10 amino acid residues, is one of the smallest peptides with a well-defined folded structure. Representative structures of the Chignolin mutant are shown in Fig. 4. Additional details about these benchmark systems are provided in the “Methods” section and Supplementary Sec. F.

The base flow models for the two benchmark systems were implemented as the Probability-Flow ODEs of diffusion models. For the GMM, the diffusion model was trained on data sampled equally from the two Gaussian components, whereas the target GMM assigned unequal weights (0.25 and 0.75) to these components. This deliberate mismatch was designed to test whether the Simplified FP Entropy estimator, combined with SMC sampling, could recover the target weights accurately despite the uniform-weight training data. For the Chignolin mutant, the training data consist of protein configurations at 300 K taken from simulation data by Frank Noé’s research group. The diffusion model for the GMM uses a fully connected multi-layer perceptron (MLP) as its core neural network, while the model for the Chignolin mutant uses a Transformer-based74,75 architecture. Although computationally more demanding, the Transformer architecture generates higher-quality configurations, which is essential for accurately capturing the complex conformational landscape of the protein. Detailed architectures and training procedures for the diffusion models are described in Supplementary Sec. K.

We evaluated both the single-noise simplified FP entropy estimator (Eq. 9) and its multi-noise extension (Eq. 10), testing the latter with N = 2 noise vectors.

In addition to the Simplified FP Entropy estimator, we tested the SNF, the Hutchinson estimator, and brute-force Jacobian evaluation (BFJacob) for comparison. The SNF implementation consisted of a single deterministic layer (the base flow model) followed by a Langevin-type stochastic layer defined as \({{{\bf{x}}}}^{{\prime} }={{\bf{x}}}-a\nabla {u}_{X}({{\bf{x}}})+\sqrt{2a}{{\boldsymbol{\epsilon }}}\), where uX(x) is the target energy, ϵ is Gaussian noise, and the constant a controls the step size. Details of the SNF implementation can be found in Supplementary Sec. H. The Hutchinson estimator was run with either Gaussian or Rademacher random vectors. Each trace estimate used 1, 2, or 10 vectors, which let us evaluate how accuracy changes with the number of vectors. Finally, the BFJacob method served as a computationally intensive but accurate baseline for entropy evaluation; it computes the full Jacobian of the flow directly by automatic differentiation. Implementation details of Hutchinson and BFJacob are provided in Supplementary Sec. G.
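For reference, the Hutchinson estimator approximates tr(A) by averaging vᵀAv over random probe vectors v that have zero mean and identity covariance. It therefore needs only matrix-vector products. The sketch below compares Gaussian and Rademacher probes on an explicit matrix. In the flow setting, A would be a Jacobian accessed through Jacobian-vector products. The helper names (`hutchinson_trace`, `dense_matvec`) are hypothetical and do not reflect the implementation in Supplementary Sec. G.

```python
import random


def hutchinson_trace(matvec, dim, n_vectors, kind="gaussian", rng=random):
    """Hutchinson estimate of tr(A) that uses only products A @ v.

    matvec: function mapping a vector (list of floats) to A @ v.
    kind: "gaussian" (v_i ~ N(0, 1)) or "rademacher" (v_i = +1 or -1).
    """
    total = 0.0
    for _ in range(n_vectors):
        if kind == "gaussian":
            v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        elif kind == "rademacher":
            v = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        else:
            raise ValueError(f"unknown probe kind: {kind}")
        av = matvec(v)
        total += sum(vi * avi for vi, avi in zip(v, av))  # v^T A v
    return total / n_vectors


def dense_matvec(matrix):
    """Wrap an explicit matrix as a matvec (stand-in for a Jacobian-vector product)."""
    return lambda v: [sum(a * x for a, x in zip(row, v)) for row in matrix]


# Usage: a diagonal matrix with exact trace 6.
D = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
rng = random.Random(0)
for kind in ("gaussian", "rademacher"):
    estimates = [hutchinson_trace(dense_matvec(D), 3, n, kind, rng) for n in (1, 2, 10)]
    print(kind, [round(e, 3) for e in estimates])
```

For this diagonal matrix, a single Rademacher probe already returns the exact trace, because every v_i² equals 1. Gaussian probes, by contrast, fluctuate. Once A has off-diagonal entries, Rademacher estimates fluctuate as well.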

Low-dimensional GMM

To validate the Simplified FP Entropy estimator and compare it with other methods, a 10-dimensional GMM was used for a sanity check. SMC simulations were repeated 10 times, each with 1000 particles, to ensure consistent and reliable results.

Accuracy was measured as the percentage of particles that settled in the first Gaussian component at the end of the SMC simulations (target: 25%). As shown in Table 2, several methods produced samples that closely matched the target distribution, with ~25% of particles in the first component: the Simplified FP Entropy estimator in both its single-noise (FP1) and double-noise (FP2) forms, SNF, and BFJacob. This indicates that these methods sample the target distribution effectively. In contrast, the Hutchinson estimator deviated significantly when only one random vector was used for trace estimation, and these deviations decreased as more random vectors were used.

The table also includes the number of initial particles whose lineages were preserved through SMC. A large number of retained lineages, as observed across all accurate methods, indicates effective sampling of the target distribution.

Finally, we analyzed the distributions of the final samples along the reaction coordinate (RC), taken along the vector connecting the centers of the two Gaussian components (Fig. 5). All methods except the Hutchinson estimator with one random vector closely reproduced the target distribution.
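One way to compute such a coordinate is to project each sample onto the unit vector pointing from one component center to the other. The sketch below does this and, as an assumed assignment rule, assigns a sample to the first component when its projection lies closer to the first center. The centers in the usage lines are hypothetical, and the study may assign particles to components differently.

```python
import math


def reaction_coordinate(x, mu1, mu2):
    """Signed distance of x from mu1 along the unit vector pointing from mu1 to mu2."""
    d = [b - a for a, b in zip(mu1, mu2)]
    norm = math.sqrt(sum(di * di for di in d))
    return sum((xi - ai) * di for xi, ai, di in zip(x, mu1, d)) / norm


def fraction_in_first_component(samples, mu1, mu2):
    """Fraction of samples whose RC value lies closer to mu1 than to mu2."""
    half = math.dist(mu1, mu2) / 2.0
    near_first = sum(1 for x in samples if reaction_coordinate(x, mu1, mu2) < half)
    return near_first / len(samples)


# Usage with hypothetical centers and samples.
mu1, mu2 = [0.0, 0.0], [4.0, 0.0]
samples = [[0.0, 1.0], [4.0, -1.0], [5.0, 0.0], [1.0, 0.0]]
print([reaction_coordinate(x, mu1, mu2) for x in samples])  # [0.0, 4.0, 5.0, 1.0]
print(fraction_in_first_component(samples, mu1, mu2))       # 0.5
```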

The reaction coordinate is defined as the vector connecting the centers of the two Gaussian components. The results shown are for the 10-dimensional GMM. The target distribution, shown as the solid blue line labeled “target,” is obtained by direct sampling from the GMM. “BFJacob” denotes the Brute-Force Jacobian evaluation. “SNF” denotes the Stochastic Normalizing Flow. “FP1” and “FP2” denote the Simplified FP entropy estimator in its single-noise and double-noise variants, respectively. “Gn” represents the Hutchinson estimator with n Gaussian random vectors (e.g., G1, G2, G10). “Rn” represents the Hutchinson estimator with n Rademacher random vectors (e.g., R1, R2, R10).

1000-dimensional GMM

We further evaluated the methods on a 1000-dimensional GMM. For the Simplified FP Entropy estimator and the Hutchinson estimator, SMC simulations were initialized with 1000 particles and repeated 10 times to ensure consistency and reliability. Due to the high computational cost, SMC simulations for SNF and BFJacob were conducted with only 100 initial particles and a single run for each method. In the SMC simulations, 2000 intermediate distributions were employed to bridge the prior and target distributions, with 10 MC steps performed to propagate particles between successive distributions.

Table 2 summarizes the proportion of particles in the first Gaussian component (target proportion: 0.25), the number of initial particles with surviving lineages, and the computational cost.

The Simplified FP Entropy estimator, in both its single-noise (FP1) and double-noise (FP2) forms, produced comparable and reasonably accurate estimates. The average proportions were 0.209 for FP1 and 0.214 for FP2, with similar standard deviations across 10 independent runs. FP2, however, required ~1.6 times the computational time of FP1. By comparison, SNF and BFJacob produced proportions exceeding the target, 0.35 and 0.40, respectively, at a computational cost 25 times higher than that of FP1. Because of computational resource limitations, the SNF and BFJacob evaluations used only one-tenth as many particles as the Simplified FP estimator, so their estimates are subject to higher variance. With the same number of initial particles, SNF and BFJacob would likely yield estimates at least as accurate as those of the Simplified FP estimator. Such a comparison, however, would require computational resources beyond our current capacity.
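A back-of-the-envelope calculation shows why the smaller particle count matters. Suppose, as an idealization, that the final particles are independent draws. In reality, particles that share ancestors are correlated, so the true spread is typically larger. Under this idealization, the standard error of a proportion p estimated from n particles is √(p(1−p)/n):

```python
import math


def proportion_standard_error(p, n):
    """Standard error of a proportion estimated from n independent samples."""
    return math.sqrt(p * (1.0 - p) / n)


for n in (100, 1000):  # particle counts used for SNF/BFJacob and for FP1/FP2
    print(n, round(proportion_standard_error(0.25, n), 4))
# 100 0.0433
# 1000 0.0137
```

Even under this optimistic assumption, 100 particles give an uncertainty of about ±0.04 around the target 0.25. That is √10 ≈ 3.2 times the uncertainty obtained with 1000 particles.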

The Hutchinson estimator performed poorly with 1, 2, or 10 Rademacher random vectors, placing few or no particles in the first well across multiple runs. With 1 or 2 Gaussian random vectors, its estimates exhibited large variances. With 10 Gaussian vectors, the estimated proportion improved significantly, albeit at approximately three times the computational cost of FP1.

Figure 6 presents particle distributions along the RC, the final energy distribution, and the average energy as a function of β, a parameter controlling the progression of the SMC process (see the section “SMC”). The results demonstrate that the Simplified FP Entropy estimator performs comparably to or better than SNF, BFJacob, and the Hutchinson estimator with 10 Gaussian or Rademacher vectors, at a significantly lower computational cost. Note that the figure shows results from a single run. Results from multiple SMC runs using the Simplified FP Entropy estimator are provided in Supplementary Sec. I.

a Probability distribution of final SMC samples along the reaction coordinate. b Monte Carlo acceptance rate as a function of β. c Average sample energy as a function of β. d Final energy distribution. The target energy and distribution (target) are obtained by directly sampling the target GMM. “FP1” and “FP2” correspond to the Simplified FP entropy estimator in its single-noise and double-noise variants, respectively. “G10” and “R10” correspond to the Hutchinson estimator with 10 Gaussian random vectors and 10 Rademacher random vectors, respectively. “BFJacob” denotes the Brute-Force Jacobian evaluation, while “SNF” denotes the Stochastic Normalizing Flow. All SMC results shown here are derived from a single SMC run.

Additionally, Fig. 6b shows the acceptance rates of neural MCMC moves as a function of β during the SMC simulation. Noticeable discontinuities in acceptance rates appear specifically for the Simplified FP estimator, in both its single-noise and double-noise forms. These abrupt changes correspond to steps at which the number of coordinates updated in the partial-update scheme is adjusted. As the SMC simulation progresses, we lower the number of coordinates updated in the partial-update scheme at certain β values, which causes a sudden increase in acceptance rate at those steps.

While adjustments to the number of updated coordinates occur consistently across all methods considered (Simplified FP, BFJacob, SNF, and Hutchinson-based methods), pronounced jumps are uniquely observed for the Simplified FP estimator. This distinct behavior arises because the Simplified FP estimator simultaneously updates coordinates in both random variables, z and \(\hat{{{\boldsymbol{\epsilon }}}}\), whereas the other methods only update coordinates in z. Consequently, modifying the number of updated coordinates in two variables simultaneously magnifies the impact on acceptance rates, resulting in more prominent discontinuities.
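The link between the number of updated coordinates and the acceptance rate can be reproduced in a toy setting. The sketch below is a hypothetical partial-update Metropolis sampler, not the study's scheme. It makes three assumptions:

- a standard normal latent prior;
- a tempered target N(z; 0, I)·exp(−β w(z)) with w(z) = ½|z|²;
- proposals that redraw k randomly chosen coordinates from the prior.

Under these assumptions the acceptance probability reduces to min(1, exp(−β Δw)). The toy updates only z. In the Simplified FP estimator, ε̂ is updated as well, which enlarges each move.

```python
import math
import random


def partial_update_acceptance(dim, k, beta, n_steps, rng):
    """Acceptance rate of a toy partial-update Metropolis sampler (hypothetical setup).

    Latent prior: z ~ N(0, I). Tempered target on z: N(z; 0, I) * exp(-beta * w(z))
    with w(z) = 0.5 * |z|^2. Each trial move redraws k randomly chosen coordinates
    from N(0, 1); since this proposal matches the prior on those coordinates, the
    prior terms cancel and the acceptance probability is min(1, exp(-beta * dw)).
    """
    def w(z):
        return 0.5 * sum(zi * zi for zi in z)

    # Start from an exact draw of the tempered target, N(0, I / (1 + beta)).
    sd = 1.0 / math.sqrt(1.0 + beta)
    z = [rng.gauss(0.0, sd) for _ in range(dim)]
    w_z = w(z)
    accepted = 0
    for _ in range(n_steps):
        proposal = list(z)
        for i in rng.sample(range(dim), k):
            proposal[i] = rng.gauss(0.0, 1.0)
        w_new = w(proposal)
        log_ratio = -beta * (w_new - w_z)
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            z, w_z = proposal, w_new
            accepted += 1
    return accepted / n_steps


# Usage: fewer updated coordinates per move -> smaller energy changes -> higher acceptance.
rng = random.Random(0)
rates = {k: partial_update_acceptance(dim=50, k=k, beta=1.0, n_steps=2000, rng=rng)
         for k in (50, 10, 1)}
print(rates)
```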

Chignolin mutant

For the Chignolin mutant, SMC simulations were conducted using only the Simplified FP Entropy estimator and the Hutchinson estimator. Each simulation was initialized with 2000 particles, and only a single run was performed for each method. In the SMC simulations, 1000 intermediate distributions were employed to bridge the prior and target distributions, with 2 MC steps performed to propagate particles between successive distributions. The KL Reshuffling scheme72 was used as the resampling strategy throughout the SMC run. SMC simulations with BFJacob and SNF were not performed due to their high computational cost.

The Chignolin mutant adopts two metastable states at 300 K: a beta-hairpin and a partially folded alpha-helix. To distinguish these structures, we selected two RCs. The first is the distance between the Cα atoms of the 7th and 10th residues; a hydrogen bond exists between these two residues in the alpha-helix configuration but is absent in the beta-hairpin configuration. The second is the distance between the Cα atoms of the 1st (head) and 10th (tail) residues. This RC captures the overall compactness of the structure, with the beta-hairpin configuration exhibiting smaller values than the alpha-helix configuration.
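Given the Cα positions of the ten residues, both RCs are simple Euclidean distances. The sketch below assumes a hypothetical input of ten (x, y, z) tuples in sequence order and uses 1-based residue numbering as in the text. Extracting the Cα atoms from a full-atom configuration is not shown.

```python
import math


def chignolin_rcs(ca_coords):
    """Return the two RCs, d(Ca7, Ca10) and d(Ca1, Ca10), from C-alpha positions.

    ca_coords: ten (x, y, z) tuples in sequence order (residue 1 first);
    distances are returned in the same length unit as the input.
    """
    if len(ca_coords) != 10:
        raise ValueError("expected C-alpha coordinates for 10 residues")
    rc1 = math.dist(ca_coords[6], ca_coords[9])  # residues 7 and 10 (1-based)
    rc2 = math.dist(ca_coords[0], ca_coords[9])  # residues 1 (head) and 10 (tail)
    return rc1, rc2


# Usage: a hypothetical, fully extended chain with C-alpha atoms 3.8 units apart.
extended = [(3.8 * i, 0.0, 0.0) for i in range(10)]
print(chignolin_rcs(extended))
```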

Figure 7a shows the probability distribution of the training data on the RC plane, revealing two distinct maxima corresponding to the alpha-helix and beta-hairpin configurations. Panel (b) displays configurations generated directly by the base flow, while panels (c)–(f) show samples produced by SMC using the FP1, FP2, R10, and G10 estimators, respectively. Compared to the samples generated by the base flow and the SMC samples obtained with R10 or G10, the SMC samples obtained using FP1 and FP2 exhibit closer agreement with the target distribution. In particular, FP1 and FP2 place fewer samples in the low-probability corridor separating the two basins, whereas the other methods spill more samples into that region.

Sample distributions projected onto the reaction coordinate (RC) plane: a Target distribution obtained from the training data; b Samples generated directly by the base flow model (denoted “init”); c Samples produced by SMC using the Simplified FP entropy estimator in its single-noise form (FP1); d Samples produced by SMC using the Simplified FP entropy estimator in its double-noise form (FP2); e Samples produced by SMC using the Hutchinson estimator with 10 Rademacher noise vectors (R10); f Samples produced by SMC using the Hutchinson estimator with 10 Gaussian noise vectors (G10). g Monte Carlo acceptance rates as functions of β; h Average energy 〈E〉 of the intermediate samples generated throughout the SMC simulation; i Energy distributions of samples at the end of SMC simulation, compared against the target energy distribution (target) and the energy distribution of samples generated directly by the base flow (init). Target statistics are computed from the training data. “G2” and “R2” represent the Hutchinson estimator with 2 Gaussian random vectors and 2 Rademacher random vectors, respectively. All SMC results are from a single SMC run.

Table 3 gives a more quantitative comparison of how the different methods distribute samples across the RC plane, reporting the fraction of configurations that fall into the right basin. The target fraction, based on the training data, is 0.385. FP1 and FP2 yield the most accurate results, placing fractions of 0.43 and 0.42 of their samples in the right basin, respectively. These values are noticeably closer to the target than those of the base flow (0.3), R10 (0.27), and G10 (0.25).

Figure 7i presents the energy distribution of samples generated by different methods. Since we model Cartesian coordinates of protein atoms directly (rather than using representations like internal coordinates), the energy of the system is highly sensitive to small perturbations in the distances between bonded atoms. Even minor deviations from physically realistic bond lengths can lead to large energy penalties. This makes the energy distribution a stringent test of sample quality.
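A rough calculation illustrates this sensitivity. The sketch below compares the penalty for small bond stretches with the thermal energy k_BT at 300 K under three assumptions:

- a CHARMM-style harmonic bond term, E = K_b(r − r₀)²;
- an illustrative force constant of 300 kcal mol⁻¹ Å⁻², an assumed order-of-magnitude value for a covalent bond that is not taken from the study;
- an arbitrary reference length, r₀ = 1.5 Å.

```python
import math

K_B = 0.0019872  # Boltzmann constant, kcal/(mol K)


def harmonic_bond_energy(r, r0, kb):
    """CHARMM-style bond term E = kb * (r - r0)**2 (no factor of 1/2)."""
    return kb * (r - r0) ** 2


kT = K_B * 300.0  # thermal energy at 300 K, about 0.596 kcal/mol
kb = 300.0        # assumed force constant, kcal/(mol A^2)
r0 = 1.5          # assumed reference bond length, A
for dr in (0.01, 0.05, 0.1):
    e = harmonic_bond_energy(r0 + dr, r0, kb)
    print(f"dr={dr:.2f} A  E={e:.3f} kcal/mol  E/kT={e / kT:.2f}  "
          f"Boltzmann factor={math.exp(-e / kT):.3g}")
```

Under these assumptions, stretching a single bond by 0.1 Å already costs about 3 kcal/mol, or roughly 5 k_BT. That suppresses its Boltzmann weight by more than two orders of magnitude.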

As shown in the figure, the base flow and SMC simulations using R10/G10 yield energy distributions that deviate significantly from the target distribution. In contrast, SMC simulations using FP1/FP2 yield energy distributions that are much closer to the target, although minor discrepancies still remain.

Figure 7g shows the acceptance rate of the MC move as a function of β. For the 1000-dimensional GMM benchmark (Fig. 6b), abrupt adjustments to the number of updated coordinates caused pronounced discontinuities, as discussed earlier. For the Chignolin mutant, by contrast, the acceptance-rate curves for FP1 and FP2 remain relatively smooth. This is because, for most of the Chignolin SMC simulation, the number of coordinates updated per MC move is fixed at one for both z and \(\hat{{{\boldsymbol{\epsilon }}}}\), except at very small values of β.

Notably, FP2 consistently achieves higher acceptance rates than FP1. This is consistent with the expectation that incorporating two independent noise vectors reduces the variance of the entropy estimate and thereby raises the acceptance probability of MC proposals.

Finally, we present the results of MD simulations for comparison. Six independent MD runs, each lasting 10 million steps, were performed. However, all runs became trapped in one of the metastable states, highlighting the challenges of achieving accurate Boltzmann sampling for this protein using traditional MD methods. Details about the MD results can be found in Supplementary Sec. J.

Discussion

In this study, we introduced the flow perturbation method to accelerate Boltzmann sampling of high-dimensional systems. This approach injects stochastic perturbations into both the forward and backward processes of the deterministic flow, eliminating the need to calculate the flow’s Jacobian by using a stochastic expression for the entropy. This entropy expression can be further simplified into the Simplified FP Entropy estimator, which is independent of the scaling matrices of the stochastic perturbations, making it more convenient for Boltzmann sampling tasks.

We first evaluated the Simplified FP Entropy estimator for Boltzmann sampling on a low-dimensional GMM. There it demonstrated excellent accuracy, whereas the Hutchinson estimator produced biased estimates even in this simple case. We then tested its performance on a 1000-dimensional GMM and the Chignolin mutant.

For the 1000-dimensional GMM, the Simplified FP Entropy estimator achieved orders-of-magnitude acceleration compared to brute-force Jacobian evaluation while maintaining similar sampling accuracy. For the Chignolin mutant, the simplified FP entropy estimator produces configurations that are closer in distribution to the target Boltzmann distribution than those produced by the Hutchinson estimator, even when the latter uses 10 random vectors for trace estimation and incurs a significantly higher computational cost. Nevertheless, configurations from the simplified FP estimator still show noticeable deviations from the target distribution, particularly in the proportion of configurations assigned to each metastable mode.

Evaluation of the double-noise form of the Simplified FP Entropy estimator revealed limited practical benefits. Across all benchmark systems, the double-noise form showed no notable improvement in sampling accuracy over the single-noise form, while requiring roughly 1.6 times the compute time. This suggests that the single-noise formulation offers a more favorable balance between accuracy and efficiency for the applications considered.

Overall, these benchmark results highlight the potential of the Simplified FP Entropy estimator for sampling the Boltzmann distribution of complex many-body systems.

A limitation of the Simplified FP Entropy estimator is that efficient computation of the entropy relies on an explicitly available inverse flow. Because diffusion models, which are among the state-of-the-art deep generative models, can be recast as ODEs, this requirement is less restrictive in practice. However, in more general scenarios where f−1 is not given in closed form, one must resort to iterative methods to evaluate \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\,\hat{{{\boldsymbol{\epsilon }}}}\). Such approaches can significantly increase the computational cost, potentially undermining the practical benefits of the Simplified FP Entropy estimator.

Another limitation of the flow perturbation approach is that, while it significantly improves efficiency, it does not inherently increase accuracy compared with brute-force Jacobian evaluation. This can be problematic if the base flow model suffers from mode collapse. In such cases, neither MC with the base flow and brute-force Jacobian evaluation nor MC with the perturbed flow is likely to recover the missing modes.

Methods

KL divergence

The forward path KL divergence of a flow model is a measure of the overlap between the ensemble of forward trajectories and the ensemble of backward trajectories. It has been shown that the number of forward trajectories needed for accurate Boltzmann sampling is an exponential function of this KL divergence63.

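The forward path KL divergence for the perturbed flow is determined by the expectation value of the generalized work:

\[{D}_{{\rm{KL}}}\left[q({{\bf{z}}})q({{\bf{x}}}| {{\bf{z}}})\,\parallel \,p({{\bf{x}}})p({{\bf{z}}}| {{\bf{x}}})\right]={{\mathbb{E}}}_{q({{\bf{z}}})q({{\bf{x}}}| {{\bf{z}}})}[W]+{\rm{const}}.\]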

Here q(z) and p(x) are the prior and target distributions, respectively. q(x∣z) and p(z∣x) are the conditional probabilities of generating the forward trajectory z → x and the corresponding backward trajectory x → z, respectively. \({{\mathbb{E}}}_{q({{\bf{z}}})q({{\bf{x}}}| {{\bf{z}}})}[W]\) is the expectation value of the generalized work W over all forward trajectories, and const is determined by the normalization constants of q(z) and p(x). The forward path KL divergence for a deterministic flow is similarly determined by the expectation value of the generalized work.

GMM

The GMM is a probabilistic model that represents a distribution as a weighted combination of Gaussian components. In this study, the benchmark system is a GMM with two well-separated components. The resulting distribution therefore has well-separated modes, which provides a stringent test for Boltzmann sampling methods. Further details about the GMMs used in our tests can be found in Supplementary Sec. F.
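For concreteness, the energy of such a two-component mixture, u(x) = −ln Σₖ wₖ N(x; μₖ, Σₖ), can be evaluated stably with a log-sum-exp. The sketch below assumes diagonal covariances. The weights 0.25 and 0.75 follow the target described earlier, while the dimension, centers, and variances in the usage lines are hypothetical stand-ins for the settings in Supplementary Sec. F.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance diag(var)."""
    return -0.5 * sum((xi - mi) ** 2 / vi + math.log(2.0 * math.pi * vi)
                      for xi, mi, vi in zip(x, mean, var))


def gmm_energy(x, weights, means, variances):
    """u(x) = -ln sum_k w_k N(x; mu_k, diag(var_k)), via a stable log-sum-exp."""
    log_terms = [math.log(w) + log_gaussian_diag(x, m, v)
                 for w, m, v in zip(weights, means, variances)]
    top = max(log_terms)
    return -(top + math.log(sum(math.exp(lp - top) for lp in log_terms)))


# Usage with hypothetical settings: 10 dimensions, centers separated along
# the first axis, and a narrow first axis to mimic anisotropy.
dim = 10
weights = [0.25, 0.75]
means = [[-4.0] + [0.0] * (dim - 1), [4.0] + [0.0] * (dim - 1)]
variances = [[0.05] + [1.0] * (dim - 1) for _ in range(2)]
print(gmm_energy(means[0], weights, means, variances),
      gmm_energy(means[1], weights, means, variances))
```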

Chignolin mutant

The Chignolin mutant (CLN025)60,61,62 serves as a benchmark system for evaluating the Boltzmann sampling performance of the various methods. It is a small peptide of 10 amino acid residues (YYDPETGTWY) and one of the smallest peptides with a well-defined folded structure.

In this study, we modeled the Chignolin mutant using the CHARMM22 force field76 with the implicit OBC2 solvent model77. As the training set for the flow model, we used simulation data from Frank Noé’s research group (http://ftp.mi.fu-berlin.de/pub/cmb-data/bgmol/datasets/chignolin/ChignolinOBC2PT.tgz), who performed a replica-exchange MD study of the Chignolin mutant with this force field. Specifically, we used configurations at 300 K for training. Representative configurations of the Chignolin mutant at this temperature are shown in Fig. 4.

Base flow model

For both benchmark systems, the base flow models were implemented as Probability-Flow ODEs of diffusion models51,52, parameterized with the “predicting velocity” scheme78. For the GMM, the velocity functions were modeled as MLPs with residual connections and sinusoidal time embedding. For the Chignolin mutant, they were modeled with a Transformer-based architecture. Details about the neural network architectures and training settings are provided in Supplementary Sec. K.
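The sinusoidal time embedding mentioned above maps the scalar diffusion time to a vector of sines and cosines at geometrically spaced frequencies. The sketch below follows a common convention from the diffusion-model literature. The exact variant, the frequency base `max_period`, and the embedding size used in this study are assumptions here; the actual settings are given in Supplementary Sec. K.

```python
import math


def sinusoidal_time_embedding(t, dim, max_period=10000.0):
    """Map a scalar time t to a dim-dimensional vector of sines and cosines.

    Frequencies are exp(-ln(max_period) * k / half) for k = 0..half-1,
    so they range geometrically from 1 down to about 1/max_period.
    dim must be even: the first half holds sines, the second half cosines.
    """
    if dim % 2:
        raise ValueError("dim must be even")
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * k / half) for k in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


# Usage: at t = 0 every sine is 0 and every cosine is 1.
print(sinusoidal_time_embedding(0.0, 4))  # [0.0, 0.0, 1.0, 1.0]
```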

Jacobian-vector-product calculation

The simplified entropy term in Eq. (9) requires computing \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\), where \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\) is the Jacobian of the inverse flow f−1(x) and \(\hat{{{\boldsymbol{\epsilon }}}}\) is a unit vector. When the inverse flow is readily available, as for a continuous normalizing flow (CNF), this product can be evaluated efficiently with forward-mode automatic differentiation (AD). Forward-mode AD has traditionally been less well supported than reverse-mode AD in major autodiff frameworks, but support has been improving; PyTorch 2.0, for instance, exposes it through the “torch.func.jvp” API. This approach costs roughly two passes through the flow. Alternatively, a numerical perturbation method can be employed. One perturbs x slightly in the direction of \(\hat{{{\boldsymbol{\epsilon }}}}\), computes the resulting change in f−1, and approximates the product as \(\frac{{{{\bf{f}}}}^{-1}({{\bf{x}}}+\delta \hat{{{\boldsymbol{\epsilon }}}})-{{{\bf{f}}}}^{-1}({{\bf{x}}})}{\delta }\), where δ is a small scalar. This one-sided scheme costs roughly one pass through the flow but can be less accurate numerically. For improved accuracy, a symmetric difference that perturbs x in both the positive and negative directions can be used:

\[\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\approx \frac{{{{\bf{f}}}}^{-1}({{\bf{x}}}+\delta \hat{{{\boldsymbol{\epsilon }}}})-{{{\bf{f}}}}^{-1}({{\bf{x}}}-\delta \hat{{{\boldsymbol{\epsilon }}}})}{2\delta }.\]

This approach reduces numerical errors compared to one-sided perturbation and provides higher precision. Our tests show that the precision of this method is on par with that of forward-mode AD, and the computational costs are similar, involving two passes of the flow. We have utilized this symmetric difference method for all the results presented in this paper.
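The difference between the one-sided and symmetric schemes is easy to check on a flow whose inverse and Jacobian are known exactly. The sketch below uses a hypothetical two-dimensional affine-coupling map, not one of the flows in this study. It compares both finite-difference estimates of the Jacobian-vector product of f⁻¹ with the analytic value. The one-sided error scales as O(δ) and the symmetric error as O(δ²).

```python
import math


# Hypothetical 2-D affine-coupling flow with a closed-form inverse:
#   x0 = z0,   x1 = z1 * exp(log_scale(z0)) + shift(z0)
def log_scale(u):
    return 0.5 * math.sin(u)


def shift(u):
    return u * u


def f_inv(x):
    """Closed-form inverse flow z = f^{-1}(x)."""
    return [x[0], (x[1] - shift(x[0])) * math.exp(-log_scale(x[0]))]


def jvp_exact(x, v):
    """Analytic (d f^{-1} / d x) @ v for the toy flow, used as the reference."""
    e = math.exp(-log_scale(x[0]))
    # d z1 / d x0 = -e * (shift'(x0) + (x1 - shift(x0)) * log_scale'(x0))
    a = -e * (2.0 * x[0] + (x[1] - shift(x[0])) * 0.5 * math.cos(x[0]))
    return [v[0], a * v[0] + e * v[1]]


def jvp_one_sided(g, x, v, delta):
    """Forward difference (g(x + delta v) - g(x)) / delta; error O(delta)."""
    g_plus = g([xi + delta * vi for xi, vi in zip(x, v)])
    g_zero = g(x)
    return [(p - q) / delta for p, q in zip(g_plus, g_zero)]


def jvp_symmetric(g, x, v, delta):
    """Central difference (g(x + delta v) - g(x - delta v)) / (2 delta); error O(delta^2)."""
    g_plus = g([xi + delta * vi for xi, vi in zip(x, v)])
    g_minus = g([xi - delta * vi for xi, vi in zip(x, v)])
    return [(p - m) / (2.0 * delta) for p, m in zip(g_plus, g_minus)]


# Usage: compare both schemes with the analytic product at one point.
x = [0.7, -1.2]
eps_hat = [0.6, 0.8]  # unit vector
exact = jvp_exact(x, eps_hat)
for name, scheme in (("one-sided", jvp_one_sided), ("symmetric", jvp_symmetric)):
    approx = scheme(f_inv, x, eps_hat, 1e-3)
    print(name, max(abs(p - q) for p, q in zip(approx, exact)))
```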

If f is invertible but we do not have a closed-form expression for f−1, we cannot directly call or differentiate f−1. In this scenario, there are two general strategies to obtain \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\):

One approach is to solve a linear system. By the inverse function theorem, the Jacobian of the inverse flow equals the inverse of the forward Jacobian evaluated at z = f−1(x). Hence \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\) is the unique vector x* satisfying

\[{\left.\frac{\partial {{\bf{f}}}}{\partial {{\bf{z}}}}\right|}_{{{\bf{z}}}={{{\bf{f}}}}^{-1}({{\bf{x}}})}\,{{{\bf{x}}}}^{* }=\hat{{{\boldsymbol{\epsilon }}}}.\]

This linear system can be solved with iterative methods such as GMRES or BiCGSTAB, which require only matrix-vector products \(\frac{\partial {{\bf{f}}}}{\partial {{\bf{z}}}}\,{{\bf{v}}}\). These products can be computed efficiently with AD without explicitly forming the full Jacobian.
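A minimal sketch of this linear-system route follows. It assumes that the latent point z of the sample is available and solves (∂f/∂z) x* = ε̂ at that point. The toy flow and its hand-written Jacobian-vector product `f_jvp` are hypothetical stand-ins for a trained flow and a forward-mode AD call. The solver is a textbook unpreconditioned BiCGSTAB, not a library routine.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def bicgstab(matvec, b, tol=1e-12, max_iter=100):
    """Unpreconditioned BiCGSTAB for A x = b, using only matvec(v) = A @ v."""
    n = len(b)
    x = [0.0] * n
    r = list(b)              # initial residual b - A x0 with x0 = 0
    r_hat = list(r)          # fixed shadow residual
    rho_old = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    b_norm = math.sqrt(dot(b, b)) or 1.0
    for _ in range(max_iter):
        rho = dot(r_hat, r)
        beta = (rho / rho_old) * (alpha / omega)
        p = [ri + beta * (pi - omega * vi) for ri, pi, vi in zip(r, p, v)]
        v = matvec(p)
        alpha = rho / dot(r_hat, v)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        s = [ri - alpha * vi for ri, vi in zip(r, v)]
        if math.sqrt(dot(s, s)) <= tol * b_norm:
            return x
        t = matvec(s)
        omega = dot(t, s) / dot(t, t)
        x = [xi + omega * si for xi, si in zip(x, s)]
        r = [si - omega * ti for si, ti in zip(s, t)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x
        rho_old = rho
    return x


def f_jvp(z, u):
    """(df/dz) @ u for the hypothetical flow f(z) = (z0 + 0.3 tanh z1, z1 + 0.3 sin z0)."""
    return [u[0] + 0.3 * (1.0 - math.tanh(z[1]) ** 2) * u[1],
            0.3 * math.cos(z[0]) * u[0] + u[1]]


# Usage: solve (df/dz) x_star = eps_hat at the latent point z of the sample.
z = [0.4, -0.9]
eps_hat = [0.6, 0.8]
x_star = bicgstab(lambda u: f_jvp(z, u), eps_hat)
print(x_star)
```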

Another approach combines numerical perturbation with iterative inversion. Finite-difference methods can approximate \(\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\) as

\[\frac{\partial {{{\bf{f}}}}^{-1}}{\partial {{\bf{x}}}}\hat{{{\boldsymbol{\epsilon }}}}\approx \frac{{{{\bf{f}}}}^{-1}({{\bf{x}}}+\delta \hat{{{\boldsymbol{\epsilon }}}})-{{{\bf{f}}}}^{-1}({{\bf{x}}}-\delta \hat{{{\boldsymbol{\epsilon }}}})}{2\delta }.\]

Here, \({{{\bf{f}}}}^{-1}({{\bf{x}}}+\delta \hat{{{\boldsymbol{\epsilon }}}})\) and \({{{\bf{f}}}}^{-1}({{\bf{x}}}-\delta \hat{{{\boldsymbol{\epsilon }}}})\) must be obtained with iterative methods such as bisection or the secant method, iterating until \({{\bf{f}}}({{\bf{z}}})\approx {{\bf{x}}}\pm \delta \hat{{{\boldsymbol{\epsilon }}}}\). While this method avoids explicitly calculating the Jacobian, the iterative inversions add computational overhead.
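The sketch below illustrates this route for a scalar toy flow, f(z) = z + ½ sin z, which is monotone but has no closed-form inverse. It inverts f at x ± δε̂ with the secant method and then forms the central difference. The flow, starting points, and tolerances are hypothetical choices. A multidimensional flow would need a multivariate root finder instead of the scalar secant iteration.

```python
import math


def f(z):
    """Hypothetical scalar flow without a closed-form inverse; f'(z) >= 0.5, so f is invertible."""
    return z + 0.5 * math.sin(z)


def f_prime(z):
    return 1.0 + 0.5 * math.cos(z)


def invert_secant(y, z0=0.0, z1=1.0, tol=1e-13, max_iter=100):
    """Solve f(z) = y for z with the secant method."""
    g0, g1 = f(z0) - y, f(z1) - y
    for _ in range(max_iter):
        if abs(g1) < tol or g1 == g0:
            break
        z0, z1 = z1, z1 - g1 * (z1 - z0) / (g1 - g0)
        g0, g1 = g1, f(z1) - y
    return z1


def inverse_jvp_fd(x, eps_hat, delta=1e-4):
    """Central-difference estimate of (d f^{-1}/dx) * eps_hat from two iterative inversions."""
    z_plus = invert_secant(x + delta * eps_hat)
    z_minus = invert_secant(x - delta * eps_hat)
    return (z_plus - z_minus) / (2.0 * delta)


# Usage: compare with the exact value 1 / f'(z) from the inverse function theorem.
z = 0.8
x = f(z)
print(inverse_jvp_fd(x, 1.0), 1.0 / f_prime(z))
```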

SMC with Hutchinson, BFJacob, and SNF

In the section “SMC,” we have described how the SMC framework is implemented with the simplified FP entropy estimator. In addition to the FP entropy estimator, the SMC framework is also implemented with the Hutchinson estimator, Brute-Force Jacobian evaluation (BFJacob), and SNF for Boltzmann sampling. These implementations serve as comparative baselines for evaluating the performance of the FP-based approach.

For Hutchinson, BFJacob, and SNF, the SMC settings are largely consistent with the FP-based method, differing in two key aspects. The first difference, inherent to the definitions of these methods, lies in how the generalized work of a trajectory is computed. The second difference involves the Metropolis update: in the FP-based method, partial updates are applied to both the latent variable z and the noise variable \(\hat{{{\boldsymbol{\epsilon }}}}\), whereas in Hutchinson, BFJacob, and SNF, updates are restricted to z alone. To ensure a fair comparison, the number of z-coordinates updated during each trial move is the same across all methods at a given β.

Benchmark platform

All benchmarks presented in this study were conducted on a workstation equipped with dual Intel Xeon 6248R CPUs and an NVIDIA RTX 3090 GPU.

Data availability

The datasets generated in this study are publicly available on GitHub (https://github.com/XinPeng76/Flow_Perturbation) and have been archived on Zenodo79 (https://doi.org/10.5281/zenodo.15744467). These datasets include the source data underlying all figures and tables presented in the paper.

Code availability

The code used in this study for training models, generating samples, and analyzing data is openly available on GitHub (https://github.com/XinPeng76/Flow_Perturbation) and has been archived on Zenodo79 (https://doi.org/10.5281/zenodo.15744467).

References

Frauenfelder, H., Sligar, S. G. & Wolynes, P. G. The energy landscapes and motions of proteins. Science 254, 1598–1603 (1991).

Verlet, L. Computer “experiments” on classical fluids. I. Thermodynamical properties of Lennard-Jones molecules. Phys. Rev. 159, 98–103 (1967).

Metropolis, N., Rosenbluth, A. W., Rosenbluth, M. N., Teller, A. H. & Teller, E. Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092 (1953).

Hukushima, K. & Nemoto, K. Exchange Monte Carlo method and application to spin glass simulations. J. Phys. Soc. Jpn. 65, 1604–1608 (1996).

Swendsen, R. H. & Wang, J.-S. Replica Monte Carlo simulation of spin-glasses. Phys. Rev. Lett. 57, 2607–2609 (1986).

Torrie, G. & Valleau, J. Nonphysical sampling distributions in Monte Carlo free-energy estimation: umbrella sampling. J. Comput. Phys. 23, 187–199 (1977).

Laio, A. & Parrinello, M. Escaping free-energy minima. Proc. Natl. Acad. Sci. USA 99, 12562–12566 (2002).

Dellago, C., Bolhuis, P. G., Csajka, F. S. & Chandler, D. Transition path sampling and the calculation of rate constants. J. Chem. Phys. 108, 1964–1977 (1998).

Ballard, A. J. & Jarzynski, C. Replica exchange with nonequilibrium switches. Proc. Natl. Acad. Sci. USA 106, 12224–12229 (2009).

Zheng, S. et al. Predicting equilibrium distributions for molecular systems with deep learning. Nat. Mach. Intell. 6, 558–567 (2024).

Jing, B., Corso, G., Chang, J., Barzilay, R. & Jaakkola, T. Torsional diffusion for molecular conformer generation. In Proc. Advances in Neural Information Processing Systems Vol. 35, 24240–24253 (NeurIPS, 2022).

Xu, M. et al. GeoDiff: a geometric diffusion model for molecular conformation generation. In Proc. International Conference on Learning Representations (ICLR, 2022).

Hoogeboom, E., Satorras, V. G., Vignac, C. & Welling, M. Equivariant diffusion for molecule generation in 3D. In Proc. 39th International Conference on Machine Learning Vol. 162, 8867–8887 (ICML, 2022).

Wang, Y., Elhag, A. A., Jaitly, N., Susskind, J. M. & Bautista, M. A. Generating molecular conformer fields. In Proc. NeurIPS 2023 Generative AI and Biology (GenBio) Workshop (NeurIPS, 2023).

Wang, L., Aarts, G. & Zhou, K. Diffusion models as stochastic quantization in lattice field theory. J. High Energy Phys. 2024, 60 (2024).

Noé, F., Olsson, S., Köhler, J. & Wu, H. Boltzmann generators: sampling equilibrium states of many-body systems with deep learning. Science 365, 1–11 (2019).

Midgley, L. I. et al. SE(3) equivariant augmented coupling flows. In Proc. Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS, 2023).

Albergo, M. S., Kanwar, G. & Shanahan, P. E. Flow-based generative models for Markov Chain Monte Carlo in lattice field theory. Phys. Rev. D 100, 034515 (2019).

Köhler, J., Invernizzi, M., De Haan, P. & Noé, F. Rigid body flows for sampling molecular crystal structures. In Proc. 40th International Conference on Machine Learning Vol. 202, 17301–17326 (ICML, 2023).

Janson, G., Valdes-Garcia, G., Heo, L. & Feig, M. Direct generation of protein conformational ensembles via machine learning. Nat. Commun. 14, 774 (2023).

Wu, K. E. et al. Protein structure generation via folding diffusion. Nat. Commun. 15, 1059 (2024).

Ingraham, J. B. et al. Illuminating protein space with a programmable generative model. Nature 623, 1070–1078 (2023).

Watson, J. L. et al. De novo design of protein structure and function with RFdiffusion. Nature 620, 1089–1100 (2023).

Yim, J. et al. Fast protein backbone generation with SE(3) flow matching. Preprint at https://doi.org/10.48550/arXiv.2310.05297 (2023).

Abramson, J. et al. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature 630, 493–500 (2024).

Rezende, D. J. & Mohamed, S. Variational inference with normalizing flows. In Proc. 32nd International Conference on Machine Learning Vol. 37, 1530–1538 (ICML, 2015).

Kingma, D. P. & Dhariwal, P. Glow: generative flow with invertible 1 × 1 convolutions. In Proc. Advances in Neural Information Processing Systems Vol. 31 (NeurIPS, 2018).

Dinh, L., Sohl-Dickstein, J. & Bengio, S. Density estimation using real NVP. In Proc. International Conference on Learning Representations (ICLR, 2017).

Dinh, L., Krueger, D. & Bengio, Y. NICE: non-linear independent components estimation. Preprint at https://doi.org/10.48550/arXiv.1410.8516 (2015).

Wu, H., Köhler, J. & Noé, F. Stochastic normalizing flows. In Proc. Advances in Neural Information Processing Systems Vol. 33, 5933–5944 (NeurIPS, 2020).

Nicoli, K. A. et al. Estimation of thermodynamic observables in lattice field theories with deep generative models. Phys. Rev. Lett. 126, 032001 (2021).

Nicoli, K. A. et al. Asymptotically unbiased estimation of physical observables with neural samplers. Phys. Rev. E 101, 023304 (2020).

Ahmad, R. & Cai, W. Free energy calculation of crystalline solids using normalizing flows. Model. Simul. Mater. Sci. Eng. 30, 065007 (2022).

Wirnsberger, P. et al. Normalizing flows for atomic solids. Mach. Learn. Sci. Technol. 3, 025009 (2022).

Invernizzi, M., Krämer, A., Clementi, C. & Noé, F. Skipping the replica exchange ladder with normalizing flows. J. Phys. Chem. Lett. 13, 11643–11649 (2022).

Dibak, M., Klein, L., Krämer, A. & Noé, F. Temperature steerable flows and Boltzmann generators. Phys. Rev. Res. 4, L042005 (2022).

Sbailò, L., Dibak, M. & Noé, F. Neural mode jump Monte Carlo. J. Chem. Phys. 154, 074101 (2021).

Gabrié, M., Rotskoff, G. M. & Vanden-Eijnden, E. Adaptive Monte Carlo augmented with normalizing flows. Proc. Natl. Acad. Sci. USA 119, e2109420119 (2022).

Schönle, C. & Gabrié, M. Optimizing Markov chain Monte Carlo convergence with normalizing flows and Gibbs sampling. In Proc. NeurIPS 2023 AI for Science Workshop (NeurIPS, 2023).

Molina-Taborda, A., Cossio, P., Lopez-Acevedo, O. & Gabrié, M. Active learning of Boltzmann samplers and potential energies with quantum mechanical accuracy. J. Chem. Theory Comput. 20, 8833–8843 (2024).

Midgley, L. I., Stimper, V., Simm, G. N. C., Schölkopf, B. & Hernández-Lobato, J. M. Flow annealed importance sampling bootstrap. In Proc. NeurIPS 2022 AI for Science: Progress and Promises (NeurIPS, 2022).

Köhler, J., Klein, L. & Noé, F. Equivariant flows: exact likelihood generative learning for symmetric densities. In Proc. 37th International Conference on Machine Learning Vol. 119, 5361–5370 (ICML, 2020).

Klein, L., Krämer, A. & Noé, F. Equivariant flow matching. In Proc. Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS, 2023).

Chen, R. T. Q., Rubanova, Y., Bettencourt, J. & Duvenaud, D. K. Neural ordinary differential equations. In Proc. Advances in Neural Information Processing Systems Vol. 31 (NeurIPS, 2018).

Lipman, Y., Chen, R. T. Q., Ben-Hamu, H., Nickel, M. & Le, M. Flow matching for generative modeling. In Proc. Eleventh International Conference on Learning Representations (ICLR, 2023).

Liu, X., Gong, C. & Liu, Q. Flow straight and fast: learning to generate and transfer data with rectified flow. In Proc. Eleventh International Conference on Learning Representations (ICLR, 2023).

Albergo, M. S. & Vanden-Eijnden, E. Building normalizing flows with stochastic interpolants. In Proc. Eleventh International Conference on Learning Representations (ICLR, 2023).

Tong, A. et al. Improving and generalizing flow-based generative models with minibatch optimal transport. In Proc. ICML Workshop on New Frontiers in Learning, Control, and Dynamical Systems (ICML, 2023).

Pooladian, A.-A. et al. Multisample flow matching: straightening flows with minibatch couplings. In Proc. 40th International Conference on Machine Learning Vol. 202, 28100–28127 (ICML, 2023).

Chen, R. T. Q. & Lipman, Y. Flow matching on general geometries. In Proc. Twelfth International Conference on Learning Representations (ICLR, 2024).

Song, Y. et al. Score-based generative modeling through stochastic differential equations. In Proc. International Conference on Learning Representations (ICLR, 2021).

Karras, T., Aittala, M., Aila, T. & Laine, S. Elucidating the design space of diffusion-based generative models. In Proc. Advances in Neural Information Processing Systems Vol. 35, 26565–26577 (NeurIPS, 2022).

Song, J., Meng, C. & Ermon, S. Denoising diffusion implicit models. In Proc. International Conference on Learning Representations (ICLR, 2021).

Hutchinson, M. F. A stochastic estimator of the trace of the influence matrix for Laplacian smoothing splines. Commun. Stat. Simul. Comput. 18, 1059–1076 (1989).

Erives, E., Jing, B. & Jaakkola, T. Verlet flows: exact-likelihood integrators for flow-based generative models. In Proc. ICLR 2024 Workshop on AI4DifferentialEquations in Science (ICLR, 2024).

Nilmeier, J. P., Crooks, G. E., Minh, D. D. L. & Chodera, J. D. Nonequilibrium candidate Monte Carlo is an efficient tool for equilibrium simulation. Proc. Natl. Acad. Sci. USA 108, E1009–E1018 (2011).

Zhang, Q. & Chen, Y. Path integral sampler: a stochastic control approach for sampling. In Proc. International Conference on Learning Representations (ICLR, 2022).

Vargas, F., Grathwohl, W. S. & Doucet, A. Denoising diffusion samplers. In Proc. Eleventh International Conference on Learning Representations (ICLR, 2023).

Zhang, D., Chen, R. T. Q., Liu, C.-H., Courville, A. & Bengio, Y. Diffusion generative flow samplers: improving learning signals through partial trajectory optimization. In Proc. Twelfth International Conference on Learning Representations (ICLR, 2024).

Rodriguez, A., Mokoema, P., Corcho, F., Bisetty, K. & Perez, J. J. Computational study of the free energy landscape of the miniprotein CLN025 in explicit and implicit solvent. J. Phys. Chem. B 115, 1440–1449 (2011).

McKiernan, K. A., Husic, B. E. & Pande, V. S. Modeling the mechanism of CLN025 beta-hairpin formation. J. Chem. Phys. 147, 104107 (2017).

Maruyama, Y. & Mitsutake, A. Analysis of structural stability of chignolin. J. Phys. Chem. B 122, 3801–3814 (2018).

Pohorille, A., Jarzynski, C. & Chipot, C. Good practices in free-energy calculations. J. Phys. Chem. B 114, 10235–10253 (2010).

Chopin, N. A sequential particle filter method for static models. Biometrika 89, 539–552 (2002).

Del Moral, P., Doucet, A. & Jasra, A. Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B Stat. Methodol. 68, 411–436 (2006).

Neal, R. M. Annealed importance sampling. Stat. Comput. 11, 125–139 (2001).

Gordon, N. J., Salmond, D. J. & Smith, A. F. Novel approach to nonlinear/non-Gaussian Bayesian state estimation. In IEE Proceedings F (Radar and Signal Processing) Vol. 140, 107–113 (IET, 1993).

Kitagawa, G. Monte Carlo filter and smoother for non-Gaussian nonlinear state space models. J. Comput. Graph. Stat. 5, 1–25 (1996).

Beadle, E. R. & Djuric, P. M. A fast-weighted Bayesian bootstrap filter for nonlinear model state estimation. IEEE Trans. Aerosp. Electron. Syst. 33, 338–343 (1997).

Liu, J. S. & Chen, R. Sequential Monte Carlo methods for dynamic systems. J. Am. Stat. Assoc. 93, 1032–1044 (1998).

Carpenter, J., Clifford, P. & Fearnhead, P. Improved particle filter for nonlinear problems. IEE Proc. Radar Sonar Navig. 146, 2–7 (1999).

Kviman, O., Koptagel, H., Melin, H. & Lagergren, J. Statistical distance based deterministic offspring selection in SMC methods. Preprint at https://doi.org/10.48550/arXiv.2212.12290 (2022).

Li, T., Sun, S., Sattar, T. P. & Corchado, J. M. Fight sample degeneracy and impoverishment in particle filters: a review of intelligent approaches. Expert Syst. Appl. 41, 3944–3954 (2014).

Vaswani, A. et al. Attention is all you need. In Proc. Advances in Neural Information Processing Systems Vol. 30 (NeurIPS, 2017).

Li, J. et al. DiT: self-supervised pre-training for document image transformer. In Proc. 30th ACM International Conference on Multimedia 3530–3539 (ACM, 2022).

MacKerell Jr, A. D., Banavali, N. & Foloppe, N. Development and current status of the CHARMM force field for nucleic acids. Biopolymers 56, 257–265 (2000).

Onufriev, A., Bashford, D. & Case, D. A. Exploring protein native states and large-scale conformational changes with a modified generalized born model. Proteins Struct. Funct. Bioinform. 55, 383–394 (2004).

Salimans, T. & Ho, J. Progressive distillation for fast sampling of diffusion models. In Proc. International Conference on Learning Representations (ICLR, 2022).

Peng, X. & Gao, A. Flow perturbation to accelerate Boltzmann sampling. Repository: XinPeng76/Flow_Perturbation. https://doi.org/10.5281/zenodo.15744467 (2025).

Acknowledgements

This research was supported by the National Natural Science Foundation of China under grant numbers 12204059 and 22473016 (A.G.).

Author information

Contributions

A.G. designed the study. X.P. and A.G. wrote the code, performed calculations, analyzed data, and wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Communications thanks Marylou Gabrié and the other, anonymous, reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Peng, X., Gao, A. Flow perturbation to accelerate Boltzmann sampling. Nat Commun 16, 6604 (2025). https://doi.org/10.1038/s41467-025-62039-8

DOI: https://doi.org/10.1038/s41467-025-62039-8