Abstract

Intensive care unit (ICU) patients often experience rapid changes in clinical status, requiring timely identification of deterioration to guide life-sustaining interventions. Current artificial intelligence (AI) models for acuity assessment rely on mortality as a proxy and lack direct prediction of clinical instability or treatment needs. Here we present APRICOT-M, a state-space model to predict real-time ICU acuity outcomes and transitions, and the need for life-sustaining therapies within the next four hours. The model integrates vital signs, laboratory results, medications, assessment scores, and patient characteristics, to make predictions, handling sparse, irregular data efficiently. Our model is trained on over 140,000 ICU admissions across 55 hospitals and validated on external and real-time data, outperforming clinical scores in predicting mortality and instability. The model demonstrates clinical relevance, with physicians reporting alerts as actionable and timely in a substantial portion of cases. These results highlight APRICOT-M’s potential to support earlier, more informed ICU interventions.

Similar content being viewed by others

Introduction

On average, more than five million patients are admitted annually to intensive care units (ICUs) in the US1, with mortality rates ranging from 10 to 29% depending on the patient’s age, comorbidities, and illness severity2. Patient acuity implies the categorization of patients based on their care needs3. Such categories can range from stable (i.e., requiring low-level support) to unstable with escalating support needs (e.g., hemodynamic or respiratory instability)4,5. During an ICU stay, the acuity state of patients can quickly change, leading to an increased threat to life. Early detection of such deteriorating conditions may assist in enabling more timely interventions and has the potential to improve patient outcomes6,7.

While all ICU patients require a high level of care, they can still be categorized into different acuity levels based on the intensity and complexity of their clinical needs. These acuity categories typically range from stable but critically ill (e.g., requiring close monitoring or low-level support) to unstable with escalating support needs (e.g., requiring mechanical ventilation or vasopressors), and finally to life-threatening deterioration (e.g., multi-organ failure). Patient acuity is thus a dynamic continuum, and timely detection of changes in acuity can facilitate earlier interventions and improve clinical outcomes.

Given the frequent pace of monitoring in the ICU, many physiological and clinical measurements are recorded as time series, such as the vital signs, laboratory test results, medications, and assessment scores. The rich temporal data, in conjunction with static patient characteristics (e.g., age, sex, or comorbidities), provide opportunities for implementing real-time approaches for assessing patient acuity and augmenting clinicians’ decisions. Several scores are currently used to assess patient acuity among ICU patients, such as Sequential Organ Failure Assessment (SOFA)8, Acute Physiology And Chronic Health Evaluation (APACHE)9, Simplified Acute Physiology Score (SAPS)10,11, and Modified Early Warning Score (MEWS)12. However, these scores have several limitations including manual calculation and only providing a score at one point in time. More recent approaches have explored the potential use of neural network models to employ the vast amount of Electronic Health Record (EHR) data for automated acuity assessment. Many approaches have focused on mortality prediction as a proxy for real-time patient acuity scores7,13,14 and have been shown to outperform manual scores while providing more timely and frequent acuity assessments. Despite their high performance and granularity, many existing artificial intelligence (AI) methods have the limitation of solely predicting mortality risk. Developing scores that capture a broader spectrum of patient acuity states beyond mortality risk can provide a more complete picture and opportunities for timely interventions.

A recent computational phenotyping method developed by Ren et al.15 annotated patient acuity states based on the use of four life-sustaining therapies: mechanical ventilation (MV), massive blood transfusion (BT) (defined as at least 10 units in the past 24 h), vasopressor (VP) administration, and continuous renal replacement therapy (CRRT). A significant number of patients in the ICU require MV, with an incidence rate of 20–40%1, while 30–50% of ICU patients require BT16. Vasopressors (VP) are used to manage blood pressure in about 27% of ICU patients17, and CRRT is needed in patients with chronic kidney disease or acute kidney injury, up to 14% of ICU patients18. Ren et al. considered patients unstable if they required at least one of the four life-supportive therapies (MV, BT, VP, CRRT); if not, they were considered stable. The stability state predictions, together with the discharge and mortality predictions, provide a more complete picture of patient acuity.

Recent advances in AI have shown that the Transformer architecture19 can be very effective for time-series classification tasks20,21. Specifically, this architecture has been adapted for clinical time series with the development of embeddings that can handle the irregular sampling of this type of data13,22. The emergence of Mamba23, a state space-based model (SSM), has recently provided an alternative to the Transformer architecture with hardware efficient design, increased speed and performance on tasks with longer sequence lengths while using fewer parameters. Models based on this architecture have already shown promising performance on clinical prediction tasks24,25,26. We hypothesize that using a Mamba-based model could potentially lead to improved performance in patient acuity prediction compared to the existing deep learning methods.

In this study, we propose APRICOT-M (Acuity Prediction in Intensive Care Unit-Mamba), a Mamba-based model to predict real-time acuity, defined as stable, unstable, deceased, or discharged, in the ICU. Our model uses temporal data from the preceding four hours in the ICU along with static patient characteristic data obtained at admission time to predict patient acuity within the next four hours in the ICU. Specifically, the algorithm predicts the probability of a patient being stable or unstable within the next four hours, along with the probability of mortality or discharge. Furthermore, it predicts transitions between acuity states (i.e., the transition from stable to unstable and vice versa) and the need for three life-sustaining therapies (MV, VP, and CRRT) within the next four hours. For the purpose of this study, the acuity definition developed by Ren et al.15 was employed. Our model is engineered to not require data imputation for missing values in temporal data. This design choice helps in reducing input noise and optimizing the model for real-time deployment. The APRICOT-M model was developed using data obtained from patients who received care at the University of Florida Health (UFH) Shands Hospital between 2014-2017 in conjunction with data from 54 hospitals from the electronic ICU Collaborative Research Database (eICU)27. The model was then externally validated on data from hospitals not used in the development phase consisting of the remaining 143 hospitals from eICU and data from the Medical Information Mart for Intensive Care (MIMIC)-IV28, and temporally validated on data from a period not used in the development phase consisting of UFH data between 2018-2019. Finally, prospective validation was performed with data acquired prospectively from UFH29 between May 2021 and October 2023. Additionally, a Transformer variant of the model, APRICOT-T, was also developed and used along with three baseline models to demonstrate the advantages of the Mamba-based architecture. In summary, our proposed model is a real-time acuity prediction tool for patients in the ICU that provides an advantage over existing approaches by incorporating the prediction of stable and unstable states, mortality, and discharge, transition in patient acuity states, and the need for life-sustaining therapies, while being extensively validated using three large datasets across external, temporal, and real-time clinical settings, and further evaluated through clinical adjudication. Furthermore, it is among the earliest applications of a Mamba-based model to clinical data. To facilitate the reproducibility of our method, we have made the APRICOT-M code available on GitHub (see Code Availability).

Results

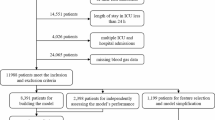

We developed the proposed APRICOT-M on n = 142,062 ICU admissions and evaluated the model’s performance for predicting patient acuity states, transitions in clinical stability, and the onset of life-sustaining therapies across external (n = 101,356), temporal (n = 15,940), and prospective (n = 369) cohorts. These cohorts represent diverse ICU populations across different institutions and time periods, allowing for robust validation of the model’s generalizability. The full model development and validation framework is illustrated in Fig. 1.

a Data from three different Intensive Care Unit (ICU) datasets were used for training and validating the APRICOT-M model (199 hospitals, 195,516 patients, and 259,706 ICU admissions total). Each dataset consisted of ICU stay information (i.e., vital signs, medications, laboratory test results, and assessment scores) and admission information (i.e., patient demographics and comorbidities). The presence of four life-sustaining therapies events were extracted: mechanical ventilation (MV), vasopressors (VP), continuous renal replacement therapy (CRRT), and massive blood transfusion (BT). Highly infrequent and outlier features were removed. b Temporal variables were processed into a list of clinical events for each patient, where each variable was assigned a code according to its order of appearance. Clinical events were assigned to 4-h observation windows and used with static patient information to predict the outcome in the next 4-h window. After data was split into time windows, each variable was scaled. c The acuity status was determined every 4 h in the ICU, with a patient being labeled as ‘unstable’ for a 4-h interval if at least one of the four life-sustaining therapies extracted was present. d The outcome for each 4-h window was determined by combining acuity status labels with decease/discharge indicators, providing a complete acuity trajectory for each patient, including the transition between states and onset of three life-sustaining therapies: MV, VP, and CRRT. e A state space-based model was employed for acuity, transition, and life-sustaining therapy onset prediction, with two separate embeddings used for temporal and static data. The first embedding was created by a Mamba block, which took the sequence of clinical events, from which the output was then combined with the second embedding, created from static patient data, to calculate the probabilities of each acuity outcome, transition and life-sustaining therapy. The loss of the model was optimized by comparing the predicted probabilities with the ground truth labels. Finally, the model was evaluated by calculating the classification error for each outcome individually. This figure was created using BioRender (https://biorender.com/).

Cohort characteristics

Cohort-level characteristics for this study are presented in Table 1 in terms of ICU admissions. The external cohort had the highest median age (65 years), while the prospective cohort had the lowest (60 years). There were no significant differences in the percentage of female patients between the development, external, and temporal cohorts, but there were fewer in the prospective cohort (40.1%) compared to the development and external cohorts. The prospective cohort had the highest ICU length of stay (6.1 days), while the external cohort had the lowest (1.9 days). The incidence of mechanical ventilation was significantly higher in the external cohort (34.2%) compared to the development and temporal cohorts. The incidence of vasopressors was highest in the temporal cohort (28.8%) compared to all other cohorts. The temporal and external cohorts had the highest mortality rate (5.9% and 5.8%, respectively), while the prospective cohort had the lowest (2.7%). Comparisons between patients who did not experience instability during their ICU stay and survived against patients that received MV, VP, and CRRT therapies and deceased patients are provided in the Supplement for all cohorts (Supplementary Tables S2–S5). Furthermore, the probability for each state transition across all study cohorts was calculated and shown in Supplementary Fig. S8. For stable patients, there was a 97.10–97.84% probability of remaining stable, 1.26–1.62% of transitioning to an unstable state, 0.50–1.50% of transitioning to discharge, and 0.03–0.22% of transitioning to deceased. For unstable patients, there was a 92.12–93.93% probability of remaining unstable, 5.57–7.12% of transitioning to stable, 0.03–0.21% of transitioning to discharge, and 0.12–0.56% of transitioning to deceased.

Model performance, calibration, and subgroup analysis

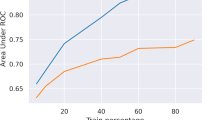

The AUROC performance of APRICOT-M to predict outcomes within the next 4 h was calculated for development, external, and prospective cohorts (Table 2). Results for the temporal cohort can be found in the Supplementary Material, along with all performance metrics compared to baselines calculated with the Youden index30 (Supplementary Table S7). All metrics and analyses reported for the development cohort refer to the validation subset. We report results on this subset rather than the entire development cohort to provide a more unbiased estimate of model performance and avoid overestimating results due to training data exposure. Performance for discharge prediction did not show significant differences among development, external, and prospective cohorts. Prediction of the deceased state had the highest performance in the prospective cohort (0.99 CI: 0.96–1.00) and lowest performance in the development cohort (0.94 CI: 0.94–0.95), while prediction of stable-unstable transition had higher performance in the development and external cohort (0.77 CI: 0.76–0.77, and 0.77 CI: 0.77–0.77 respectively) compared to the prospective cohort (0.71 CI: 0.67–0.75). Prediction for life-sustaining therapies showed highest performance on development and external cohorts for MV (0.80 CI: 0.79–0.81, and 0.81 CI: 0.80–0.81 respectively), on development and prospective cohorts for VP (0.81 CI: 0.80–0.82, and 0.83 CI: 0.78–0.87), and development cohort for CRRT (0.94 CI: 0.93–0.96). The AUROC performance of the model across different time windows is shown in Supplementary Table S16. The best performance for most outcomes was achieved with a 4-h time window with statistical significance, except for Unstable-Stable prediction, which had the best performance with a 24-h time window in the development and external cohorts, and CRRT, which also had the best performance with a 24-h time window in the external cohort.

Calibration curves and Brier scores for each cohort are shown in Supplementary Figs. S9–S12. Subgroup analysis based on age, sex, and race groups is shown in Supplementary Table S15. Given the small size of the prospective cohort and the low incidence rate of certain outcomes, some age, sex, and race groups did not have positive labels for some outcomes (e.g., no positive labels for mortality or transitions). Therefore, the performance metrics could not be computed for these groups and the subgroup bias analysis could not be performed. The hyperparameter search space for optimization of the APRICOT-M model, along with the optimal hyperparameters are provided in Supplementary Table S6. A comparison of APRICOT-M against APRICOT-T of prediction performance and inference speed at different sequence lengths is shown in Supplementary Fig. S18 and Supplementary Table S18, where APRICOT-M had higher overall AUROCs and AUPRCs with increasing sequence lengths compared to APRICOT-T, while keeping faster inference speeds.

Acuity episode prediction and false positive analysis

The performance of APRICOT-M at both step and episode levels for mortality prediction is summarized in Table 3. The model achieved higher AUROC and AUPRC for episode and step-level prediction across the development, external, and prospective cohorts compared to the raw SOFA score, with all differences being statistically significant except episode AUROC for the prospective cohort. For instability, APRICOT-M was compared to the raw SOFA score and the two or more-point increase criterion (Table 4). For development and external cohorts, the model had higher AUROC at both episode and step levels and higher AUPRC at the episode level than raw SOFA scores and SOFA (≥2 points). For the prospective cohort, APRICOT-M had no statistically significant differences at the episode level with both SOFA models but had higher AUROC at the step level.

The prediction metrics for APRICOT-M compared to SOFA at different episode-level precisions (i.e., the PPV of the model for episode predictions) for mortality and instability are shown in Supplementary Tables S9 and S10 respectively. A 33% precision level was chosen given it has been used on previous clinical continuous risk prediction studies31 and yielded the best balance between the number of false positives and sensitivity. When using this episode-precision level, the proposed model predicted 76%, 80%, and 88% of deceased patients in the development, external, and prospective cohorts, respectively, compared to 44%, 34%, and 73% predicted by SOFA. For instability, different precision levels for SOFA could not be calculated, given the low AUPRC. Therefore, the SOFA (≥2 points) criterion was used as the baseline. At 33%-episode precision, our model predicted 36%, 55%, and 51% of instability cases compared to 36% (at 12% precision), 24% (at 11% precision), and 37% (at 27% precision) predicted by SOFA in the development, external, and prospective cohorts respectively.

The metrics at both episode and step levels at 33% precision for mortality, instability, MV, and VP prediction are shown in Supplementary Table S8. The earliest prediction (i.e., lead time) was measured as the number of hours before the onset of the outcome at which the first positive prediction was made, and the number of alerts represents the number of times the model predicted a positive before the outcome. For mortality, 76–88% of cases were correctly predicted with 2–3 alerts and up to 20–200 h of lead time. For instability, 36–55% of cases were correctly predicted with 1–2 alerts and up to 4–14 h of lead time. For MV, 44–63% of cases were correctly predicted with 1–2 alerts and up to 4–18 h of lead time. For VP, 27–51% of cases were correctly predicted with one alert and up to 4 h of lead time. The metrics for all models at episode and step level for different thresholds for mortality, instability, MV and VP are shown in Supplementary Tables S11–S14.

Predictions distribution

To further evaluate the performance of our model in acuity prediction, a confusion matrix was generated for the four primary outcomes at the step (i.e., 4-h window) level: discharge, stable, unstable, and deceased. The proportions of predicted acuity status (Fig. 2) show that across the development, external, and prospective cohorts, the sensitivity for predicting discharge and mortality ranged between 58–73% and 87–100%, respectively. The sensitivity range for predicting stable and unstable states was 25–34% and 64–83%, respectively, with 26–33% of stability cases predicted as discharge and 11–22% of instability cases predicted as deceased. Furthermore, the proportions of each acuity state across each day in the ICU compared to the predictions by the proposed model are shown on Supplementary Fig. S13. This figure shows more discharge, unstable, and deceased state predictions than the ground truth number of these states.

A Development cohort. B External cohort. C Prospective cohort. Each plot shows the proportion of predicted acuity states for each state according to the column: discharge, stable, unstable, and deceased. Green represents a correct prediction (i.e., true positives) for those with the specific acuity state, while red represents an incorrect prediction (i.e., any state other than the correct one was predicted). Source data are provided as a Source Data file.

Feature importance

The relevance of each feature for the prediction of increased acuity was determined using integrated gradients. The integrated gradients of each increased acuity outcome (i.e., unstable, stable-unstable, MV, VP, CRRT, and deceased) were averaged to obtain the overall score for each feature that drives the model to predict higher acuity level. The top 15 features across all development, external, and prospective cohorts are presented in Fig. 3. The top features among all cohorts were age, race, Glasgow coma scale (GCS) score, lactate, sodium, and INR (blood clot). A complete feature ranking for all prediction tasks across all cohorts is provided in the Supplementary Material (Supplementary Figs. S14–17).

A Development cohort. B External cohort. C Prospective cohort. The top 15 features for primary outcome prediction. Features are ordered according to the highest average of integrated gradient attributions across the increased acuity outcomes: unstable, stable-unstable, mechanical ventilation (MV), vasopressors (VP), continuous renal replacement therapy (CRRT), and deceased. Source data are provided as a Source Data file.

Examples of real-time evaluation

An example patient from the prospective cohort was extracted to demonstrate APRICOT-M real-time capabilities (Fig. 4). The patient had an ICU admission spanning over 500 h. The patient transitioned from stable to unstable approximately 128 h into their ICU admission when they required VP support. At around 264 h, MV was initiated. The patient transitioned back to stable at around 276 h, although they required MV again for a brief period of time after (from the 324 to the 328-h mark). The patient was discharged home at the end of their admission. The model detected increased instability as early as 16 h into the ICU admission, with multiple risk peaks before the onset of instability (Fig. 4A). Instability risk steadily decreased as the patient transitioned back to stable until they were discharged. Mortality risk remained low at all times, showing that regardless of the high acuity state of the patient, the model did not consider a high threat to life.

Example of real-time monitoring of an unstable patient in the prospective cohort. A Acuity trajectory of the patient, with the onset of vasopressor (VP) and mechanical ventilation (MV) therapies, along with instability and mortality risk predicted by APRICOT-M throughout ICU admission. Prediction uncertainty was calculated by running the model 100 times at each time step and using the minimum and maximum predicted probabilities to define the range of uncertainty. The model prediction (center) at each time step was calculated as the median across iterations. B Vitals trajectory of the patient including Heart Rate (HR), Diastolic Blood Pressure (DBP), Systolic Blood Pressure (SBP), Respiratory Rate (RR), Oxygen Saturation (SPO2), and Body Temperature. Orange shading represents integrated gradient attributions at each time step, with higher color intensity representing a higher attribution. Source data are provided as a Source Data file.

Two additional example patients were extracted from the external cohort to further demonstrate APRICOT-M’s robustness: one deceased and one discharged patient. The first example patient (Supplementary Fig. S19) transitioned from stable to unstable approximately 136 h after ICU admission when they required mechanical ventilation (MV). About 8 h later, the patient received vasopressor (VP) support and ultimately passed away approximately 280 h after ICU admission. The model detected increased mortality risk at around 200 h (Supplementary Fig. S19A), increasing until death. The vitals chart in Supplementary Fig. S19B shows the integrated gradient attribution to each time step for each vital sign along the patient’s trajectory in the ICU. The second example patient (Supplementary Fig. S20) transitioned from stable to unstable approximately 20 h into their ICU admission when they simultaneously received MV and VP therapies. The patient remained unstable until about 156 h into their admission when they transitioned back to stable and was discharged 24 h later. The model detected decreased instability risk approximately 16 h before the patient transitioned to a stable condition (Supplementary Fig. S20A). Also, mortality risk remained low at all times during the ICU stay.

Clinical adjudication

Demographic data for adjudicators is presented on Supplementary Table S19. Clinical adjudicators’ evaluations of alerts generated by the APRICOT-M system highlight both the perceived clinical utility of the alerts and the extent of inter-rater agreement (Table 5). Predictions triggered an alert if they crossed the 33%-episode level precision threshold. Model alerts were considered likely to have changed clinical management on more than one quarter of cases on average (26.70% ± 29.30% standard deviation [SD]) and timely enough to potentially prevent adverse events in close to one third of cases (31.70% ± 20.80% SD). Moreover, alerts were interpretable, with contributing factors judged as relevant to increased acuity in 42.30% of alerts ( ± 6.90% SD). Inter-rater agreement for these assessments, as measured by Cohen’s Kappa, ranged from 0.25 to 0.46, with the highest agreement observed for the relevance of contributing factors (0.46 ± 0.16 SD). Therapy-specific recommendations were rated more favorably and with stronger consistency: appropriateness was affirmed in 53.3% of MV, 83.3% of VP, and 78.3% of CRRT recommendations. In the context of this study, a recommendation for any life-sustaining therapy is defined as the model predicting a high risk of the therapy being administered. These assessments were also associated with higher inter-rater reliability, with Cohen’s Kappa values of 0.73 ( ± 0.19 SD) for MV, 0.64 ( ± 0.03 SD) for VP, and 0.71 ( ± 0.20 SD) for CRRT. Therapy recommendations by the model and actual therapy administration rates are shown on Supplementary Fig. S24.

Ratings of APRICOT-M alerts were also stratified by three thresholds of consensus: unanimous (all adjudicators agreed), majority (at least two adjudicators agreed), and one-or-more (at least one adjudicator agreed) (Fig. 5A). For general acuity-related criteria, the level of unanimous agreement with the statements ranged from 5% to 25%. When lowering the threshold to majority agreement, consensus increased. Close to a sixth of alerts (15%) were considered to have potentially changed management of the patient, one quarter of alerts (25%) were deemed timely enough to potentially prevent adverse events, over one third (35%) were considered to provide relevant factors to increased acuity, and close to half (45%) were considered to accurately identify an increase in acuity. At the least stringent threshold (“one-or-more”), agreement with statements ranged from 60% to 65% of the cases. Therapy specific recommendations showed unanimous agreements on 45%, 75%, and 70% of cases for MV, VP, and CRRT respectively. Majority agreement reached 50% for MV, 85% for VP, and 80% for CRRT, while agreement from at least one adjudicator showed 65% (MV), 90% (VP), and 85% (CRRT) agreement. Furthermore, when asked to score each patient’s risk on a scale from 0 to 100 before and after the alert, adjudicators generally agreed with the model’s assessments of increased patient acuity. Adjudicators rated patient risk across all cases with an average score of 43.36 ( ± 27.83 SD) before the alert, increasing to 44.00 ( ± 30.43 SD) after the alert (Fig. 5B). Individual adjudicator-assigned risk scores before and after alerts are shown in Supplementary Fig. S22.

A Bar plot showing agreement of adjudicators with different statements regarding APRICOT-M alerts. B Box plot of average risk scores assigned by adjudicators (n = 3) before and after the alert by APRICOT-M across all adjudication cases (n = 20). For each boxplot, the center line, box limits, and whiskers separately indicate the median, upper and lower quartiles, and 1.5× interquartile range. Source data are provided as a Source Data file.

When asked for the potential reason for acuity increase for each APRICOT-M alert, adjudicators judged 16.78% ( ± 6.06% SD) of alerts on average to have occurred when the patient had no apparent issue. On the other hand, 29.18% ( ± 3.88% SD), 10.88% ( ± 7.19% SD), and 5.84% ( ± 3.82% SD) of alerts were judged to have occurred when the patient had hemodynamic instability, respiratory distress, and renal impairment, respectively. These results are shown per adjudicator on the Supplementary Material (Supplementary Fig. S21). When asked about the relevance of contributing factors for each therapy recommendation, factors for MV recommendation were deemed relevant in 63.33% ( ± 12.47% SD) of alerts on average, 60.00% ( ± 10.80% SD) for VP, and 36.67% ( ± 28.96% SD) for CRRT. Per-adjudicator results are provided on the Supplementary Material (Supplementary Fig. S23).

Discussion

The results presented in this study show the development and validation of APRICOT-M as a tool for ICU patient acuity monitoring. This study offers a more comprehensive definition of ICU acuity by incorporating the need for life-sustaining therapies (MV, VP, CRRT, BT) providing a meaningful advantage over existing approaches. Furthermore, discharge and mortality data were integrated to augment patient outcome and discharge disposition predictions. Previous studies to predict patient acuity in the ICU have focused on mortality prediction as a proxy of acuity7,13. Comparing mortality prediction in this study in terms of AUROC, performance on development (0.94 CI: 0.94–0.95), external (0.95 CI: 0.94–0.95), and prospective (0.99 CI: 0.96–1.00) cohorts exceeds DeepSOFA (AUROC 0.90–0.91)7 and is comparable to the Transformer acuity estimation model (AUROC 0.98)13. Furthermore, this study has the advantage of validating mortality prediction performance on patients from multiple hospitals, which demonstrates the generalizability of APRICOT-M.

The model presented here has the ability to predict the type of life-sustaining therapy that the patient will need in addition to overall acuity predictions. Although no studies have predicted the need for CRRT to the best of our knowledge, some studies have predicted the use of VP and MV. For VP prediction, the AUROC in development set (0.81 CI: 0.80–0.82) was comparable to what is seen in the literature (AUROC 0.80–0.81)32, while the prospective set showed slightly higher performance (0.83 CI: 0.78–0.87) and the external set showed lower, although comparable, performance (0.76 CI: 0.76–0.77). For MV prediction, performance reported in the literature is varied, with some studies reporting as low as AUROC 0.6833 and others reporting as high as AUROC 0.9734. It is important to note that these studies performed MV prediction on COVID-19 patients which could be a potential bias. Furthermore, the models were developed and validated on data from only one hospital. The diverse data collection methods of each hospital can make it difficult to extract with high precision the start and end times of these events. The AUROC shown in this study falls within the range of what is seen in the literature (development 0.80 CI: 0.79–0.81, external 0.81 CI: 0.80–0.81, and prospective 0.73 CI: 0.69–0.77) and is tested in data from multiple hospitals. The lower performance in ventilation prediction in the prospective cohort may be partly attributed to the lower acuity level of patients in this group. This cohort was the only one requiring patient consent, and it is a common challenge in ICU studies that patients with more severe illness are often unable to provide consent, which can result in an underrepresentation of high-acuity cases.

We compared APRICOT-M against four baseline models (i.e., CatBoost, GRU, Transformer, and APRICOT-T) across nine tasks in the four validation cohorts (Supplementary Table S7). While the proposed model did not outperform all models in every instance, it achieved the highest overall win rates: 61.1% vs. CatBoost and APRICOT-T, 66.7% vs. GRU, and 69.4% vs. Transformer (22–25 wins out of 36 comparisons). This strong performance could be attributed to the Mamba architecture, which leverages state-space modeling to capture both short- and long-term dependencies efficiently35,36 avoiding the memory limitations of GRUs and the computational burden of Transformers. Its continuous-time dynamics and selective gating enable more effective sequence modeling by dynamically prioritizing relevant information37. A comparison of prediction performance and inference speed between APRICOT-M and APRICOT-T (Supplementary Fig. S18 and Supplementary Table S18) further illustrated the advantage of the Mamba architecture against the Transformer, showing higher performance with longer sequence lengths and significantly faster inference speeds. Although APRICOT-M can be more computationally intensive compared to more traditional machine learning models (i.e., CatBoost) due to its complex state updates and gating layers, the performance gains justify the added cost in tasks requiring accuracy and robustness.

To evaluate the real-world performance of APRICOT-M, evaluating performance at the episode level was fundamental to account for correct prediction of occurrence of an outcome (i.e., episode level) rather than correct prediction of the time where the outcome would occur (i.e., step level). By setting a threshold at the episode level, the model can achieve higher precision at the episode and step level. The model demonstrated statistically significant better performance at both episode and step levels compared to SOFA scores for mortality and instability predictions and predicted more mortality and instability cases at the 33% precision episode level. This precision level means that when the proposed model predicts a positive outcome, 1 out of 3 times, the outcome will occur in the next four or more hours.

The distribution of predictions shown in Fig. 2 demonstrates that our model correctly identifies the primary outcomes with high sensitivity for deceased (87–100%), unstable (64–83%), and discharged (58–73%). Although sensitivity for stability (25–34%) was lower, many false negatives were predicted as discharged (26–33%), which could indicate the patients were in a condition where they could be discharged at the moment but were discharged later. These numbers also reflect the high count of false positive alarms for unstable states in stable 4-h windows (33–42%). This is also reflected in Supplementary Fig. S13, where a significant proportion of the model’s output is unstable predicted labels. As previously shown in the episode prediction, the model often alerts several hours before a patient transitions to an unstable state, which inflates the number of false positives.

The three examples presented in Fig. 4 and Supplementary Figs. S19 and 20 show a simulation of APRICOT-M’s real-time predictive capabilities in the ICU. The first case (Fig. 4), from the prospective cohort, involved a prolonged ICU stay with VP initiated at hour 128 and MV at hour 264. Although instability was resolved by hour 276, MV continued briefly thereafter. The model flagged early instability risk as soon as hour 16 and showed declining risk in parallel with clinical improvement. In the second case (Supplementary Fig. S19), a patient who ultimately passed away transitioned to an unstable state at hour 136, received MV and VP support, and passed away at hour 280. The model detected rising mortality risk around hour 200—well before death—while attribution maps highlighted vital signs contributing to this prediction. In the third case (Supplementary Fig. S20), a patient transitioned to instability early in the stay but returned to a stable state by hour 156 and was discharged home. The model identified decreasing instability risk about 16 h prior to clinical recovery, with consistently low mortality risk. Across all examples, mortality risk remained low unless deterioration occurred, underscoring our model’s potential to anticipate risk trajectories and support timely intervention.

The use of integrated gradients identified several features which could be important for acuity prediction. The importance of age for this type of prediction is consistent with the knowledge that older patients are at a higher risk in the ICU38. The GCS score, used to determine comatose state, is also correlated to patient acuity39. Lactate, sodium, and INR (blood clot) have all been shown to be correlated to higher ICU mortality and therefore higher levels of acuity40,41,42. The high attribution given to race by integrated gradients could be due to different factors including social determinants of health. In fact, some studies have shown the impact of these factors in readmissions across a variety of conditions43,44. Future studies will explore how and why race is associated with patient acuity in the ICU.

The prospective evaluation of APRICOT-M on ICU patient data acquired with our real-time platform provides a validation method of the potential application of this model in the real world. The performance seen in this cohort was comparable to the other three cohorts, which shows the proposed model can be readily deployed and employed in real-time to assist clinicians in decision-making. The model has been deployed for real-time prediction for use in the ICU and will be further validated in future studies. A figure showing the interface of the APRICOT-M web application is provided in the Supplementary Material (Supplementary Fig. S25).

The results of the clinical adjudication provide evidence supporting the clinical relevance and interpretability of our model’s alerts, alongside meaningful variability in inter-rater agreement. Alerts were judged to meaningfully impact clinical decision-making in more than a quarter of cases and were considered sufficiently timely to potentially prevent adverse events in nearly one-third. Moreover, alerts were considered interpretable, providing relevant factors to increasing acuity in close to half of cases. Despite variability in ratings, the relatively moderate level of inter-rater agreement (κ = 0.25–0.46) suggests that adjudicators perceive the model’s alerts as clinically informative, although interpretability and management implications may vary according to individual clinical judgment and specialty experience. Therapy-specific recommendations, particularly for vasopressors and continuous renal replacement therapy, demonstrated notably higher consensus and were regarded positively in most instances, reflecting greater clinical alignment for clearly actionable interventions (κ = 0.64–0.73). This may indicate that APRICOT-M performs especially well in contexts with well-defined clinical guidelines or established therapeutic protocols. However, the observed lower agreement on general acuity and alert timing underscores the complexity of clinical interpretation of increasing acuity.

Ratings of proposed model’s alerts were further stratified by adjudicator consensus thresholds: unanimous, majority, and one-or-more (Fig. 5A). For general acuity-related questions, unanimous agreement was low (5–25%), but increased under the majority threshold, with 15% of alerts considered to have changed management, 25% deemed timely, 35% judged to provide relevant contributing factors, and 45% considered to accurately identify increased acuity. At the least stringent threshold, 60–65% of alerts received agreement from at least one adjudicator. These patterns suggest that although broad acuity signals are often subject to varied interpretation, the alerts still captured clinically meaningful events recognized by at least some reviewers. In contrast, therapy-specific alerts demonstrated significantly higher consensus: unanimous agreement was reached in 45% of MV, 75% of VP, and 70% of CRRT recommendations, increasing to 50–85% at the majority level and up to 90% when considering one-or-more adjudicators. These findings reinforce the system’s strength in providing actionable, domain-specific recommendations that align well with established clinical pathways, particularly in the context of hemodynamic or renal deterioration. Furthermore, when adjudicators scored patients’ acuity from 0 to 100 before and after the alert, average scores increased from 43.36 ( ± 27.83 SD) to 44.00 ( ± 30.43 SD), indicating general alignment with the model’s identification of rising risk (Fig. 5B). Though the average change is modest, it reflects a shift in clinical perception consistent with the alert’s intent. Still, the broad variability in scores suggests differences in how adjudicators weigh clinical information and reinforces the need for larger-scale evaluations to more accurately estimate real-world utility.

Only a small proportion of alerts (16.78% ± 6.06% SD) were judged to have occurred in the absence of a clear clinical issue, suggesting a relatively low false positive rate and indicating that most alerts corresponded to recognizable patient increased acuity. Among alerts with identifiable causes, hemodynamic instability was most frequently cited (29.18%), followed by respiratory distress (10.88%) and renal impairment (5.84%). These findings suggest that APRICOT-M is capable of detecting clinical complications aligned with the acuity labeling phenotypes (i.e., MV, VP, and CRRT). Regarding therapy-specific recommendations, adjudicators found contributing factors to be clinically relevant in the majority of MV and VP alerts (63.33% and 60.00%, respectively), while CRRT alerts showed lower agreement (36.67%) and higher variability across adjudicators. These findings support the clinical utility of the proposed model, particularly in detecting hemodynamic and respiratory deterioration, while highlighting areas for refinement in renal risk prediction and therapy recommendation precision.

Some of the limitations of this study include the challenge of labeling outcomes in different hospital settings. Given the high number of hospitals in this study, labeling procedures such as MV and CRRT can be inconsistent, due to potential restrictions from some hospitals to access this data. Therefore, the datasets could be incomplete, and therefore, the performance of the model is limited. The small number of features can limit model performance as well. Large models can usually benefit from a large number of features. The present study used a limited subset of features in all three databases (UFH, MIMIC, and eICU). Since these features were manually mapped between datasets, some features could have been missed. Future studies will employ the Observational Medical Outcomes Partnership (OMOP) Common Data Model to map features more effectively and accurately between datasets. It is also important to note that the confidence intervals reported in this study were computed using bootstrapping methods, which primarily capture uncertainty arising from the data sample rather than uncertainty associated with model parameters themselves. Future work could explore Bayesian approaches or alternative methods to better characterize parameter uncertainty. Furthermore, while our analysis relied on binary classification within defined prediction windows, adopting a time-to-event modeling framework could help address challenges related to the arbitrary selection of time windows and provide a more continuous and clinically meaningful representation of event risk. Such an approach may also help to bridge the gap between step-level and episode-level analyses by modeling outcomes in a temporally dynamic manner.

The clinical adjudication of our model’s alerts presented some additional limitations. Demographic diversity among adjudicators, representing multiple medical specialties and varied ICU experiences, likely influenced the degree of agreement. On the other hand, adjudicators had relatively limited levels of clinical experience (2-3 years of practice), which may have affected their ability to evaluate complex scenarios or subtle signs of clinical deterioration with the same nuance as more experienced attending physicians. Given these limitations, future adjudications would benefit from involving physicians with greater clinical experience and a broader range of specialties to ensure more representative and robust assessments of the model’s clinical utility. Increasing the number of adjudicated cases beyond the current limited set of 20 could enhance statistical reliability and allow for more granular evaluation of performance across different clinical scenarios. Moreover, incorporating analyses of false-negative cases (i.e., instances where patients experienced increased acuity without an alert being triggered) would be valuable in fully characterizing the model’s clinical performance, improving sensitivity, and ensuring comprehensive patient safety.

In summary, we have developed and validated both retrospectively and prospectively as well as clinically adjudicated APRICOT-M, a state-space model to predict patient acuity in the ICU. This tool allows for real-time acuity monitoring of a patient and can provide helpful information to clinicians to make timely interventions. Furthermore, the model can suggest life-sustaining therapies the patient might need.

Methods

Data and study design

The study was approved by the University of Florida Institutional Review Board and Privacy Office. Three databases were used in this study: UFH, MIMIC, and eICU (cohort diagrams in Supplementary Fig. S1). The UFH dataset was collected in both a retrospective (UFH-R) and prospective (UFH-P) manner, while the MIMIC and eICU datasets were retrospective. The UFH-R dataset includes adult patients admitted to the ICUs at the University of Florida (UF) Health Shands Hospital, Gainesville, between 2014 and 2019, while UFH-P dataset was obtained from adult patients admitted to the ICU at UFH between May 2021 and October 2023 (UFH-P) using our real-time platform. Specifically, UFH-P data was collected and processed on a daily basis. Then, all data from the specified time period was compiled to construct the dataset. The MIMIC dataset is a publicly available dataset collected at the Beth Israel Deaconess Medical Center from 2008 to 201928. The eICU dataset contains data from ICU patients from 208 hospitals in the Midwest, Northeast, Southern, and Western regions of the US from 2014 to 201527. In all four datasets, patients were excluded if they had missing records of basic information (i.e., age, discharge location, sex, race, or BMI), outcomes (i.e., acuity states), or missing at least one of six routine vital signs: heart rate (HR), respiratory rate (RR), systolic blood pressure (SBP), diastolic blood pressure (DBP), body temperature (Temp), and oxygen saturation (SPO2). Also, patients who stayed in the ICU for less than 8 h or more than 30 days were excluded. The lower bound was chosen to allow for enough data for one prediction and outcome validation (4-h observation and subsequent 4-h prediction window), while the upper bound was chosen to exclude long admissions that could bias model training due to increased number of 4-h intervals pertaining to single patients.

Data from UFH-R between 2014 and 2017 and data from 54 hospitals from the eICU database were used to create the development set. This set was used to train and tune the APRICOT-M model. Data from MIMIC and the remaining 143 hospitals in eICU were used for external validation to evaluate the generalizability of the proposed model to diverse hospital settings. Hospitals from eICU used for development were selected if their cohort contained at least 800 ICU admissions to have the largest number of ICU admissions possible in the fewest number of hospitals. This setup allowed for a larger number of hospitals to be used in the external validation set. A histogram showing the number of ICU admissions for each hospital along with the cutoff for inclusion is provided in the Supplementary Material (Supplementary Fig. S2). Data from UFH-R between 2018 and 2019 was used for temporal validation to evaluate generalizability to different years of admission. Data from UFH-P was used for prospective validation to evaluate the application of our model to a real-time setting.

The study design consisted of six steps: development, calibration, validation (i.e., external and temporal), subgroup analysis, interpretation, and prospective evaluation. This study follows the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) Statement45 (checklist available in Supplemental). First, we developed and tuned the APRICOT-M model on the development dataset (80% training, 20% validation), followed by calibration using a random sampling of 10% of each validation dataset (i.e., development validation split, external, temporal, and prospective) to obtain a more accurate risk probability of each outcome. Next, the model was externally validated on hospitals not included in the development stage and temporally validated on data from years that were not included in the development set. We also performed a subgroup analysis of model performance based on age group (18-60 vs over 60), sex, and race, accompanied by detailed interpretability analyses, to find the most relevant predictors correlated with increased patient acuity. Finally, prospective model validation was performed retrospectively on data acquired prospectively using our clinical real-time platform29 interfaced with the Epic® EHR system.

Ethics approval and patient consent

This study was conducted in Florida, USA, with all data collected at the University of Florida Health (UFH) Shands Hospital or sourced from publicly available datasets (MIMIC-IV and eICU-CRD). Retrospective data from UFH (UFH-R) was obtained with the approval of the University of Florida Institutional Review Board (IRB) as an exempt study (IRB201901123). The University of Florida IRB acted as the local regulatory authority in accordance with U.S. federal regulations. A HIPAA waiver of consent was granted by the IRB because the study posed minimal risk to participants’ privacy, appropriate safeguards were in place to protect data confidentiality, and the data were provided in a limited data set format. The waiver was also justified due to the large volume of subjects, lack of reliable contact information, absence of direct subject contact, and inclusion of deceased individuals. Prospective data from UFH (UFH-P) was obtained by consent under the approval of the University of Florida IRB under the numbers 201900354 and 202101013. Before enrolling patients in the study, written informed consent was obtained from all participants. In cases where patients could not provide informed consent, consent was obtained from a legally authorized representative (LAR) acting on their behalf. Eligible participants were individuals aged 18 and older who were admitted to the ICU and expected to remain there for at least 24 h. Patients who could not provide an LAR or self-consent were expected to be transferred or discharged from the ICU within 24 h, and those necessitating contact or isolation precautions were excluded. Also excluded from this study were patients who expired within 24 h of recruitment. The eICU dataset is deidentified and publicly available. The data in the MIMIC dataset is de-identified, and the institutional review boards of the Massachusetts Institute of Technology and Beth Israel Deaconess Medical Center both approved using the database for research. To conduct clinical adjudication of alerts generated by APRICOT-M in the UFH-P cohort, clinician adjudicators were consented and enrolled under IRB202101013, granting them approval to perform the adjudication activities using patient medical record information within the Epic® EHR system. All procedures adhered to U.S. federal and institutional regulations regarding human subject research. The study did not involve the transfer of biological materials or cultural knowledge beyond U.S. borders.

Features and outcomes

The primary outcome predicted by APRICOT-M is the acuity state (obtained using computable phenotyping) of a patient in the ICU in the next four hours defined by four levels of severity from the least to the most severe: discharge, stable, unstable, and deceased15. Four-hour windows were chosen to capture the continuous transitions between acuity states that can happen in short time periods15. Discharge and deceased states were determined through indicators of whether a patient was discharged or transferred out of the ICU alive or passed away in the ICU (Fig. 1d). Unstable and stable were determined by the presence of one of four life-sustaining therapies. Specifically, if a patient was receiving continuous renal replacement therapy (CRRT), on intravenous vasopressors (VP) (either continuous infusion or one-time push), on invasive mechanical ventilation (MV), or had a massive blood transfusion ( > 10 units in the last 24 h) (BT), the patient was categorized as unstable for the time intervals during which any of these therapies were present (Fig. 1c). Otherwise, the patient was considered stable.

Transitions to instability (i.e., Stable-Unstable) and to stability (i.e., Unstable-Stable) were labeled as outcomes to address the potential bias of predicting the current state (e.g., patient which is currently stable, and the model predicts the next state to be stable as well). The onset of CRRT, MV, and VP were also labeled to predict the potential need for one or more of these therapies (Fig. 1d). In the case of BT, prediction could not be performed, given the label was defined by 24-h periods and the patient would have undergone the therapy by the time of prediction.

In order to predict the outcome for the next four hours, static patient information and clinical events from the previous four hours were used as predictive features. Clinical events consisted of the timestamp converted to hours since admission, a variable code, and the value of the measured variable. Simultaneously occurring features were arbitrarily ordered. Events were assigned to their corresponding observation window, where an observation window was comprised of all clinical events in the 4 h prior to the start of the current prediction window. The prediction window represents a four-hour interval in which the outcome is assessed (Fig. 1b). In particular, vital signs, medications, laboratory tests, and assessment scores were extracted from the previous four hours of ICU monitoring, while demographic and comorbidity information were extracted from patient history information. Variables occurring in less than 5% of all ICU stays were removed to prevent the absence of variables in the training set that could have been present in the validation sets. Outliers were also removed based on impossible values based on clinical expertise and based on an upper (99th percentile) and lower (1st percentile) bound (Fig. 1a). A complete list of the variables used for prediction can be found in the Supplementary Material (Supplementary Table S1). Both temporal and static variables were then scaled according to their specific range using minimum-maximum scaling based on the minimum and maximum values for each feature in the development cohort (Fig. 1b). Finally, the APRICOT-M model, which uses the embedding schema of a clinical time-series model22 with incorporation of a state-space model (Mamba block, details in Model development and performance section)23, was trained to predict probabilities for each acuity outcome, transition, and life-sustaining therapy onset and evaluated against ground truth labels (Fig. 1e).

Model development and performance

After splitting the three datasets into development, external, temporal, and prospective, the development dataset was further split by randomly selecting 80% of the patients for training and 20% for validation and tuning hyperparameters. The APRICOT-M model was developed based on the embedding schema of a clinical Transformer architecture22, incorporating a Mamba block23. A detailed description of the Mamba block and state space models is provided in the Supplementary Material. The original architecture was modified to create an embedding for temporal data using a sequence of triplets of time, value, and variable code recordings. The time and value recordings were passed through one-dimensional (1D)-Convolutional layers, and the variable code recordings through an Embedding layer. The results were fused as one temporal embedding through addition, and positional encoding was incorporated to preserve the sequence order information. This approach removed the need for imputation that other time-series models require to maintain fixed dimensions in the input. The embedding schema presented here used padding/truncation to match the maximum sequence length set for the model, so no feature values were imputed. For static data, an embedding was created using two linear layers. A Mamba block was then employed to add context to the temporal embedding, from which top-k pooling was applied to select the k most important time steps in the sequence to reduce dimensionality for input into a multi-layer perceptron (MLP). The resulting vector was fused (i.e., added) with the static embedding. Finally, the fused vector was passed through individual linear layers to calculate the probability of each acuity primary outcome, transition, and life-sustaining therapy (Supplementary Fig. S3).

For training the model, class weights were applied to the loss function to account for the class imbalance. Four algorithms were used as baseline models for comparison: categorical boosting (CatBoost)46, gated recurrent unit (GRU)47, a vanilla Transformer19, and APRICOT-T. For CatBoost, statistical features (i.e., missingness indicators, minimum, maximum, mean, and standard deviation) were extracted to convert temporal data into a static representation. For the GRU and vanilla Transformer, temporal features were resampled to 20-min resolution and tabularized to have the same sequence length and dimensions. The 20-min resolution was chosen to balance granularity of high-frequency features (e.g., vital signs) while avoiding excessive imputation of low-frequency features (e.g., laboratory values, medications, assessment scores). For APRICOT-T, the same data processing as APRICOT-M was applied as well as the same architecture with the only difference of replacing the Mamba blocks with Transformer encoder layers. A logic schema (shown in Supplementary Fig. S4) was implemented in the model outputs to predict the overall acuity status. For evaluation, six metrics were used: the Area Under the Receiver Operating Characteristic (AUROC), the Area Under the Precision-Recall Curve (AUPRC), sensitivity, specificity, Positive Predictive Value (PPV), and Negative Predictive Value (NPV). The Youden index30 was used to find the optimal threshold to calculate sensitivity, specificity, PPV and NPV at the step level for all models. Both AUROC and AUPRC were used as metrics to tune the model architecture and hyperparameters on the development test. For hyperparameter tuning, the Optuna Python library48 was used to run 10 training trials. Each trial was set to 100 epochs with an early stopping strategy to avoid overfitting and the best model (i.e., epoch with best evaluation metric) was loaded at the end of the trial. The hyperparameters of the best performing trial were selected as the optimal hyperparameters.

The Sequential Organ Failure Assessment (SOFA)8 score was used as a clinical mortality and instability prediction baseline. For mortality, the raw SOFA score was scaled to a 0-1 range49 and directly evaluated using AUROC and AUPRC. For instability, two approaches were used: scaling raw SOFA score to 0-1 range and a criterion of 2 or more points increase from baseline SOFA (i.e., the SOFA score at admission) to label 4-h windows as unstable based on previous literature using this criterion to indicate increased mortality risk50. Both approaches were evaluated as well using AUROC and AUPRC.

Calibration and subgroup analysis

Due to differences in the outcomes incidences (i.e., positive rates) in the four study cohorts, our model was calibrated using an isotonic regression algorithm51 to improve the accuracy of the risk probability predictions. A random sampling of 10% for each validation cohort (i.e., development validation split, external, temporal, and prospective) was used for model calibration. Calibration performance was evaluated by the Brier score and calibration curve. We evaluated model performance by comparing important demographic subgroups in all cohorts, including sex (male and female), age (young [18–60] and old [over 60]), and race (Black, White, and Others).

Acuity episode prediction and false positive analysis

Given that the prediction problem is a continuous prediction problem, evaluating the model at the step level (i.e., 4-h window level) does not account for the possibility of the model making true positive predictions earlier than expected (i.e., predicting an outcome more than 4 h before). In other words, the model could predict the occurrence of an outcome rather than the exact time when it will happen. Therefore, both step-level and episode-level (i.e., outcome occurrence) performance were compared for mortality and instability outcomes. For the episode-level performance, both AUROC and AUPRC were computed using the maximum predicted probability of each outcome across all 4-h windows for each ICU admission when the outcome was present or absent (Supplementary Fig. S5). Sensitivity, specificity, and PPV were computed both at step and episode levels for different thresholds according to desired episode level precision.

Time window analysis

To determine the performance between different time window lengths, the same methodology used at the 4-h window level was applied to 24-h and 48-h observation and prediction time windows. Given the necessity of longer ICU admissions to allow at least one prediction for each admission at 24-h and 48-h windows, the cohort sizes for these analyses were smaller. Cohort diagrams for both 24-h and 48-h time window cohorts are provided in the Supplementary Material (Supplementary Figs. S6 and S7).

Model interpretability

For the interpretation of the proposed model outputs, the integrated gradients method was used52. Integrated gradient attributions were calculated for each temporal variable in the embedding layer as well as for each static variable in the static embedding. This method allowed computation of the importance of a feature and the time step, obtaining an overall score for each input feature to better understand risk predictors for each outcome.

Statistical analysis

To determine if the difference in performance between baseline models and our proposed model was statistically significant, a 100-iteration bootstrap with replacement was performed to calculate the 95% confidence interval (CI) for each performance metric, using the median across the bootstrap to represent the overall value of each metric. All metric values between algorithms were then compared using a Wilcoxon rank sum test for statistical significance.

Clinical adjudication

We conducted an adjudication study to evaluate the clinical utility of acuity alerts generated by APRICOT-M. A panel of three practicing clinicians was recruited and oriented through a standardized protocol outlining adjudication procedures, review tools, and case materials. A set of 20 randomly selected ICU admissions from UFH-P where an alert was triggered were assigned to all adjudicators for review. To avoid potential bias, only one ICU admission per patient was randomly selected for inclusion. Each adjudicator was given access to each of the patient’s medical records through Epic®. Adjudicators assessed medical documentation from the four hours preceding and following the alert, focusing on the accuracy, utility, and timeliness of the alert as well as model-identified contributing factors and the appropriateness of recommended life-sustaining therapies, including MV, VP, and CRRT. Adjudicators recorded their responses in a customized QualtricsXM® survey, which included both structured and free-text items designed to assess the aforementioned aspects of each model alert. All adjudications were based solely on medical records information, simulating real-time clinical decision-making with no opportunity for direct patient examination or team communication. A detailed standard operating procedure (SOP) outlining the adjudication protocol and survey is provided in the Supplemental. For analysis of results, adjudicator scores for each question were aggregated through: average, one-vote rule (at least one adjudicator rated the question positively), majority vote (at least two adjudicators rated the question positively), and unanimous vote (all three adjudicators rated the question positively). Inter-rater reliability between adjudicators was assessed using Cohen’s Kappa53.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The MIMIC-IV dataset (https://physionet.org/content/mimiciv/2.2/) and the eICU Collaborative Research Database (https://physionet.org/content/eicu-crd/2.0/) are both publicly available via PhysioNet. Access to both datasets requires completion of the Collaborative Institutional Training Initiative (CITI Program) “Data or Specimens Only Research” training module, agreement to a data use agreement, and submission of a formal credentialing application through PhysioNet. Approval is granted upon verification of training completion and agreement to data protection policies. The UF Health (UFH) dataset used in this study contains protected health information and is not approved for public use due to institutional and ethical restrictions. Access to UFH data is limited to researchers within the University of Florida under IRB-approved protocols and cannot be shared externally. Researchers interested in collaboration involving UFH data may contact the corresponding author. Requests will be evaluated within 4–6 weeks and are subject to institutional approval and data use agreements. Source data are provided with this paper.

Code availability

The code for running all data processing, model training/validation, and analyses, is freely available at https://github.com/iheallab/apricotM. All analyses carried out in this study were performed using Python version 3.10.8. The Python package torch version 2.1.2, mamba-ssm 1.1.1, and scikit-learn 1.4.0 was used for developing the machine learning and deep learning models. Integrated gradients were implemented using the Python package Captum version 0.7.0. Visualization plots/graphs were obtained using Python packages matplotlib version 3.8.3 and seaborn version 0.13.2. Statistical analyses were performed using scipy version 1.12.0.

References

Barrett, M.L. Smith, M.W., Elixhauser, A, Honigman, L.S. & Pines, J.M. Utilization of Intensive Care Services, 2011. In Healthcare Cost and Utilization Project (HCUP) Statistical Briefs, Rockville (MD): Agency for Healthcare Research and Quality (US), 2006.

Zimmerman, J. E., Kramer, A. A. & Knaus, W. A. Changes in hospital mortality for United States intensive care unit admissions from 1988 to 2012. Crit. Care 17, R81 (2013).

Alghamdi, M. G. Nursing workload: a concept analysis. J. Nurs. Manag. 24, 449–457 (2016).

Chioncel, O. et al. Epidemiology, pathophysiology and contemporary management of cardiogenic shock – a position statement from the Heart Failure Association of the European Society of Cardiology. Eur. J. Heart Fail. 22, 1315–1341 (2020).

Ennett, C.M. et al. Predicting respiratory instability in the ICU. In 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 2848–2851 (2008).

Komorowski, M., Celi, L. A., Badawi, O., Gordon, A. C. & Faisal, A. A. The Artificial Intelligence Clinician learns optimal treatment strategies for sepsis in intensive care. Nat. Med. 24, 1716–1720 (2018).

Shickel, B. et al. DeepSOFA: a continuous acuity score for critically ill patients using clinically interpretable deep learning. Sci. Rep. 9, 1879 (2019).

Vincent, J. L. et al. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. On behalf of the Working Group on Sepsis-Related Problems of the European Society of Intensive Care Medicine. Intensive Care Med. 22, 707–710 (1996).

Zimmerman, J. E., Kramer, A. A., McNair, D. S. & Malila, F. M. Acute Physiology and Chronic Health Evaluation (APACHE) IV: hospital mortality assessment for today’s critically ill patients. Crit. Care Med. 34, 1297–1310 (2006).

Moreno, R. P. et al. SAPS 3-From evaluation of the patient to evaluation of the intensive care unit. Part 2: Development of a prognostic model for hospital mortality at ICU admission. Intensive Care Med. 31, 1345–1355 (2005).

Metnitz, P. G. H. et al. SAPS 3-From evaluation of the patient to evaluation of the intensive care unit. Part 1: Objectives, methods and cohort description. Intensive Care Med. 31, 1336–1344 (2005).

Gardner-Thorpe, J., Love, N., Wrightson, J., Walsh, S. & Keeling, N. The value of Modified Early Warning Score (MEWS) in surgical in-patients: a prospective observational study. Ann. R. Coll. Surg. Engl. 88, 571–575 (2006).

Shickel, B. et al. Multi-dimensional patient acuity estimation with longitudinal EHR tokenization and flexible transformer networks. Front. Digit. Health 4, 1029191 (2022).

Shickel, B. et al. Deep multi-modal transfer learning for augmented patient acuity assessment in the intelligent ICU. Front. Digit. Health 3, 640685 (2021).

Ren, Y. et al. Development of computable phenotype to identify and characterize transitions in acuity status in intensive care unit. arXiv https://doi.org/10.48550/arXiv.2005.05163 (2020).

Thomas, J., Jensen, L., Nahirniak, S. & Gibney, R. T. N. Anemia and blood transfusion practices in the critically ill: a prospective cohort review. Heart Lung J. Crit. Care 39, 217–225 (2010).

Thongprayoon, C. et al. Temporal trends in the utilization of vasopressors in intensive care units: an epidemiologic study. BMC Pharmacol. Toxicol. 17, 19 (2016).

Tiglis, M. et al. Overview of renal replacement therapy use in a general intensive care unit. Int. J. Environ. Res. Public. Health 19, 2453 (2022).

Vaswani, A. et al. Attention is all you need. arXiv https://doi.org/10.48550/arXiv.1706.03762 (2017).

Chen, R., Yan, X., Wang, S. & Xiao, G. DA-Net: dual-attention network for multivariate time series classification. Inf. Sci. 610, 472–487 (2022).

Liu, M. et al. Gated transformer networks for multivariate time series classification. arXiv https://doi.org/10.48550/arXiv.2103.14438 (2021).

Tipirneni, S. & Reddy, C. K. Self-supervised transformer for sparse and irregularly sampled multivariate clinical time-series. arXiv http://arxiv.org/abs/2107.14293 (2021).

Gu, A. & Dao, T. Mamba: linear-time sequence modeling with selective state spaces. arXiv https://doi.org/10.48550/arXiv.2312.00752 (2023).

Wornow, M. et al. Context Clues: evaluating long context models for clinical prediction tasks on EHRs. arXiv https://doi.org/10.48550/arXiv.2412.16178 (2025).

Burger, M. et al. Towards foundation models for critical care time series. arXiv https://doi.org/10.48550/arXiv.2411.16346 (2024).

Kataria, S. et al. Continuous Cardiac Arrest Prediction in ICU using PPG Foundation Model. arXiv https://doi.org/10.48550/arXiv.2502.08612 (2025).

Pollard, T. J. et al. The eICU Collaborative Research Database, a freely available multi-center database for critical care research. Sci. Data 5, 180178 (2018).

Johnson. A. E. W. et al. MIMIC-IV, a freely accessible electronic health record dataset. Sci. Data 10, 1 (2023).

Balch, J. A. et al. Building an automated, machine learning-enabled platform for predicting post-operative complications. Physiol. Meas. 44, 024001 (2023).

Perkins, N. J. & Schisterman, E. F. The Youden Index and the optimal cut-point corrected for measurement error. Biom. J. Biom. Z. 47, 428–441 (2005).

Tomašev, N. et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572, 116–119 (2019).

Holder, A. L., Shashikumar, S. P., Wardi, G., Buchman, T. G. & Nemati, S. A locally optimized data-driven tool to predict sepsis-associated vasopressor use in the ICU. Crit. Care Med. 49, e1196–e1205 (2021).

Yu, L. et al. Machine learning methods to predict mechanical ventilation and mortality in patients with COVID-19. PLoS ONE 16, e0249285 (2021).

Bendavid, I. et al. A novel machine learning model to predict respiratory failure and invasive mechanical ventilation in critically ill patients suffering from COVID-19. Sci. Rep. 12, 1 (2022).

Bulatov, A., Kuratov, Y., Kapushev, Y. & Burtsev, M. S. Scaling Transformer 1M tokens beyond RMT https://doi.org/10.48550/arXiv.2304.11062 (2024).

Su, Y. & Kuo, C.-C. J. On extended long short-term memory and dependent bidirectional recurrent neural network. Neurocomputing 356, 151–161 (2019).

Qu, H. et al. A survey of mamba. arXiv https://doi.org/10.48550/arXiv.2408.01129 (2024).

Chen, Y.-C. et al. Risk factors for icu mortality in critically ill patients. J. Formos. Med. Assoc. 100, 656–661 (2001).

Saika, A., Bansal, S., Philip, M., Devi, B. I. & Shukla, D. P. Prognostic value of FOUR and GCS scores in determining mortality in patients with traumatic brain injury. Acta Neurochir. 157, 1323–1328 (2015).

Zhang, Z. & Xu, X. Lactate clearance is a useful biomarker for the prediction of all-cause mortality in critically ill patients: a systematic review and meta-analysis*. Crit. Care Med. 42, 2118 (2014).

Grim, C. C. A. et al. Association between an increase in serum sodium and in-hospital mortality in critically ill patients*. Crit. Care Med. 49, 2070 (2021).

Benediktsson, S., Hansen, C., Frigyesi, A. & Kander, T. Coagulation tests on admission correlate with mortality and morbidity in general ICU patients: an observational study. Acta Anaesthesiol. Scand. 64, 628–634 (2020).

Meddings, J. et al. The impact of disability and social determinants of health on condition-specific readmissions beyond medicare risk adjustments: a cohort study. J. Gen. Intern. Med. 32, 71–80 (2017).

Amrollahi, F. et al. Inclusion of social determinants of health improves sepsis readmission prediction models. J. Am. Med. Inform. Assoc. 29, 1263–1270 (2022).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD Statement. BMC Med. 13, 1 (2015).

Dorogush, A. V., Ershov, V. & Gulin, A. CatBoost: gradient boosting with categorical features support. arXiv https://doi.org/10.48550/arXiv.1810.11363 (2018).

Chung, J., Gulcehre, C. Cho, K. & Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv https://doi.org/10.48550/arXiv.1412.3555 (2014).

Akiba, T., Sano, S., Yanase, T., Ohta, T. & Koyama, M. Optuna: a next-generation hyperparameter optimization framework. arXiv https://doi.org/10.48550/arXiv.1907.10902.

Sena, J. et al. Wearable sensors in patient acuity assessment in critical care. Front. Neurol. 15, 1386728 (2024)

Raith, E. P. et al. Prognostic accuracy of the SOFA Score, SIRS Criteria, and qSOFA score for in-hospital mortality among adults with suspected infection admitted to the intensive care unit. JAMA 317, 290–300 (2017).

Han, Q., T. Wang, T., Chatterjee, S. & Samworth, R. J. Isotonic regression in general dimensions. arXiv https://doi.org/10.48550/arXiv.1708.09468. (2017).

Sundararajan, M. Taly, A. & Q. Yan. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning, PMLR, pp. 3319–3328 (2017).

Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 20, 37–46 (1960).

Acknowledgements

A.B, P.R., and T.O.B. were supported by NIH/NINDS R01 NS120924, NIH/NIBIB R01 EB029699. PR was also supported by NSF CAREER 1750192.

Author information

Authors and Affiliations

Contributions

M.C., A.B., and P.R. designed the study, conceptualized the method, and planned experiments. M.C., B.Silva, and B.Shickel developed the software. M.C. conducted computational experiments. T.O.B and Y.R. provided statistical support. M.C. and P.R. analyzed and interpreted results. Z.G. developed real-time data acquisition pipeline. M.C., A.D., T.O.B., J.B. and A.B. designed the clinical adjudication standard operating procedure. A.D. and J.B. recruited adjudicators. M.C. and A.D. conducted adjudicator training and collection of responses. J.Z., S.B., T.L., K.K., J.S., and S.N. provided feedback and scientific guidance throughout the development and evaluation of the study. M.C., J.S., and S.N. developed and maintained the GitHub repository to support code availability and reproducibility of the study. G.L. facilitated the acquisition and provided access to the UFH dataset for analysis and model development. A.B. and P.R. acquired funding and supervised the study. All authors reviewed and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous, reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Source data

Rights and permissions