Abstract

Spiking neural networks (SNNs) are biologically more plausible and computationally more powerful than artificial neural networks due to their intrinsic temporal dynamics. However, vanilla spiking neurons struggle to simultaneously encode spatiotemporal dynamics of inputs. Inspired by biological multisynaptic connections, we propose the Multi-Synaptic Firing (MSF) neuron, where an axon can establish multiple synapses with different thresholds on a postsynaptic neuron. MSF neurons jointly encode spatial intensity via firing rates and temporal dynamics via spike timing, and generalize Leaky Integrate-and-Fire (LIF) and ReLU neurons as special cases. We derive optimal threshold selection and parameter optimization criteria for surrogate gradients, enabling scalable deep MSF-based SNNs without performance degradation. Extensive experiments across various benchmarks show that MSF neurons significantly outperform LIF neurons in accuracy while preserving low power, low latency, and high execution efficiency, and surpass ReLU neurons in event-driven tasks. Overall, this work advances neuromorphic computing toward real-world spatiotemporal applications.

Similar content being viewed by others

Introduction

Given the current demand for developing more natural artificial intelligence, spiking neural networks (SNNs) are promising computational paradigms that draw deep inspiration from biological neural architectures1. In the early research of SNNs, their performance and scale lag significantly behind that of conventional artificial neural networks (ANNs) with continuous-valued activations. With recent advancements in surrogate gradient methods2,3, learning algorithms4, and coding schemes5,6, SNNs have gradually closed the performance gap with ANNs. Although SNNs are biologically more plausible and computationally more powerful than ANNs due to rich temporal dynamics of spiking neurons7, researchers have not yet fully unleashed their advantages and potential in practical applications, particularly in spatiotemporal tasks8.

Here, we uncover that the performance gap between theory and practice in SNNs may stem from significant information loss in spatiotemporal encoding by vanilla spiking neurons. Specifically, neurons such as Leaky Integrate-and-Fire (LIF) and Hodgkin-Huxley9, or their variants10,11, primarily focus on modeling neuronal dynamics, assuming that neurons are connected via single synapses (i.e., single channels). Due to the binary information representation in spiking neurons, the single-channel connection between neurons makes it difficult for SNNs to simultaneously encode spatiotemporal dynamics of input signals. On the one hand, vanilla spiking neurons can use rate coding to encode spatial intensity distributions through the averaged firing rate across multiple timesteps, as commonly applied in ANN-to-SNN conversion algorithms12,13,14 and directly-trained SNNs15,16,17 with repeated input. However, this coding scheme sacrifices the capability of modeling temporal dynamics. On the other hand, vanilla spiking neurons can utilize temporal coding to represent the temporal information of input signals through precise spike timing, making them well-suited for temporal computing tasks2,11. Consequently, vanilla spiking neurons face a challenging dilemma: they are unable to simultaneously encode the spatial intensity distribution and temporal dynamics of input signals.

Biological brains can process rich naturalistic sensory stimuli within short periods18. For example, the human brain efficiently handles and recognizes flickering lights and music with varying intensities and frequencies (Fig. 1a). Evidence from the visual and auditory cortices of monkeys has also shown that both spike counts and spike timing in neural firing encode visual and acoustic stimuli, demonstrating that the combination of the two coding schemes is not only more informative but also helps to reduce the effects of noise19,20. Moreover, hippocampal pyramidal cells can simultaneously use rate and temporal coding to represent two independent variables21. However, there is still a lack of explicit research on integrating the advantages of both coding schemes into a spiking neuron model for simultaneously encoding spatiotemporal dynamics. Recent consecutive studies22,23,24,25,26,27,28 have uncovered an important biological structure: multisynaptic connections, where a single axon establishes multiple synapses on the same post-synaptic neuron. This structure, overlooked in current spiking neuron models, has been observed in biological brains, including those of Caenorhabditis elegans22, flies23,24, mice25,26, rats27, and humans28 (Fig. 1b). While the functional role of these multisynaptic connections remains unclear, it has been hypothesized that they may facilitate rapid or strong responses to specific stimuli28. Inspired by the biological observations, we propose a multisynaptic spiking neuron named Multi-Synaptic Firing (MSF) neuron, where an axon can establish multiple synapses with different firing thresholds on the same postsynaptic neuron. The design of multiple thresholds is motivated by the biological phenomenon that voltage-gated calcium channels in presynaptic terminals exhibit different activation thresholds29, which govern their ability to open and mediate Ca2+ influx, ultimately triggering vesicular neurotransmitter release. It is important to note that the MSF neuron is a biologically inspired computational model designed for large-scale neuromorphic computing30, aiming to leverage the energy-efficient algorithms we learnt from the study of neuronal computation in organisms. In MSF neurons, the instantaneous firing rate across multiple synapses and the precise spike timing can simultaneously encode the spatial intensity distribution and temporal dynamics of input signals, thereby enabling full spatiotemporal coding.

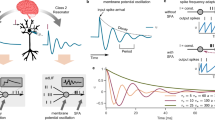

a The brain efficiently handles and recognizes flickering lights and music with varying intensities and frequencies, effectively encoding spatiotemporal dynamics of input signals in biological neurons. b Recent research on the human cerebral cortex has discovered multisynaptic connections, where a single axon establishes multiple synapses on the same post-synaptic neuron28. c The vanilla LIF neurons cannot effectively handle stimuli of varying intensities. d The classical ReLU neurons can encode stimuli as continuous values but cannot process temporal information. e We propose a multisynaptic spiking neuron named Multi-Synaptic Firing (MSF) neuron. The pre-synaptic neuron can establish up to D synapses on the post-synaptic neuron, each with its corresponding threshold. The strong stimulus input will trigger multiple spikes, which are then simultaneously transmitted to the post-synaptic neuron via the multisynaptic structure. f The internal operation schematic of the layer-wise MSF model.

We first show that MSF neurons represent a general and detailed neural abstraction model, encompassing vanilla LIF neurons and classical Rectified Linear Unit (ReLU) neurons as their special cases, thereby revealing the underlying relationships between ANNs and SNNs. Then, we theoretically derive the optimal solution for threshold selection and provide a parameter optimization criterion for different surrogate gradient functions under backpropagation through time (BPTT) learning, enabling SNNs with MSF neurons to scale up to large, deep models without performance degradation. To elucidate the encoding mechanism of MSF neurons, we design signal reconstruction tasks involving oscillating temporal signals and demonstrate that MSF neurons can simultaneously encode the spatial intensity distribution and temporal dynamics of input signals using independent rate and temporal coding. On extensive benchmarks for static and dynamic recognition, image-based and event-based object detection, biological signal processing, and reinforcement learning, MSF neurons achieve significant performance improvements over LIF neurons while maintaining the low-power and low-latency advantages, and exhibit high generalization across tasks. Notably, MSF-based SNNs can surpass the performance of ReLU-based ANNs with the same network structure in spatiotemporal continuous event stream tasks. The efficient execution of MSF-based SNNs on neuromorphic hardware for event-based object detection tasks in autonomous driving scenarios demonstrates the high execution efficiency and hardware compatibility of MSF neurons. Interestingly, multisynaptic connections are sparse in the trained models, similar to that observed in a cubic millimeter of the human temporal cortex28; this supports the idea that distributed computations on spatio-temporal encodings profit of network structures with few strong connections and several weaker ones, as observed in biological networks31. Overall, this work suggests that multisynaptic connections observed in biological brains are critical for learning spatiotemporal dynamics, significantly advancing neuromorphic computing toward spatiotemporal applications.

Results

Spiking neuron model with multisynaptic connections

Vanilla spiking neurons struggle to effectively process stimuli with varying intensities, which may be a key factor preventing SNNs from matching or surpassing the performance of ANNs. For instance, as illustrated in Fig. 1c, regardless of how high the membrane potential rises due to strong stimuli, the output of the LIF neuron can only be a single spike. In contrast, the output of the ReLU neuron increases proportionally with the input (Fig. 1d), enabling ReLU neurons to encode stimulus intensity as continuous values.

Recent work12,14,15,16,17 has adopted rate coding in SNNs to encode stimulus intensity. As shown in Supplementary Fig. S1, four timesteps (T = 4) can divide stimulus intensity into four levels. This rate-coded method encodes stimulus intensity using the averaged firing rate over multiple timesteps, making it well-suited for static data. However, it has two main drawbacks. First, it sacrifices the temporal information processing capability of spiking neurons, rendering it unsuitable for temporal computing tasks. Second, the intensity encoding introduces delays and errors due to temporal dynamics. For example, different input spike trains such as (1110, 0111, 1101, and 1011) have the same averaged firing rate and can be considered to be converted from the same stimulus intensity. When these spike trains are fed into LIF neurons, they lead to different outputs (see Supplementary Fig. S1c), potentially introducing sequential errors12. The precise spike timing of spiking neurons is typically used to encode timing of stimulus, i.e., temporal coding.

The main idea of this work is to explore how to integrate the advantages of both coding schemes into a spiking neuron model for encoding spatiotemporal dynamics of input signals simultaneously. Inspired by the multisynaptic connections of biological neurons, we propose the MSF neuron model. As depicted in Fig. 1e, the pre-synaptic neuron can establish up to D synapses to the post-synaptic neuron. Inspired by the different activation thresholds of voltage-gated calcium ion channels in synapses29, we design a corresponding multi-threshold firing mechanism. When the input stimulus at time t is strong, the membrane potential increases significantly, triggering multiple spikes through multiple threshold decisions, which are then simultaneously transmitted to the post-synaptic neuron via the multisynaptic structure. These multiple spikes generated under strong stimulation resemble burst spikes described by Friedenberger et al.32. Conversely, when the input stimulus at time t is weak, the membrane potential rises slightly, possibly resulting in one spike or no spike. To proportionally encode the stimulus intensity and directly train MSF-based SNNs using the BPTT algorithm, multiple thresholds are determined by an initial threshold Vth and a threshold interval h = 1 (see “Direct training with surrogate gradients” in Methods). Additionally, a parameter optimization criterion is provided for various surrogate gradient functions (see “Direct training with surrogate gradients” in Methods). The instantaneous firing rate across multiple synapses can encode spatial intensity distributions, which can be termed as instantaneous rate coding. The precise spike timing encodes the temporal information of the stimulus, i.e., temporal coding. Overall, the MSF neurons can simultaneously encode the spatial intensity distribution and temporal dynamics of input signals using independent rate and temporal coding, making them highly effective for handling spatiotemporal dynamic tasks. Comparative and theoretical analyses show that vanilla LIF neurons and classical ReLU neurons are two special cases of our MSF neurons under specific parameters (see “Relationship between classical artificial neuron and multisynaptic spiking neuron” in Methods). When the maximum number of synapses D is reduced to 1, the MSF neurons degenerate into LIF neurons. Conversely, when T = 1 and D → ∞, the MSF neurons approximate classical ReLU neurons.

The internal operation schematic of the layer-wise MSF model is shown in Fig. 1f. The transmission and integration of spikes utilize the multi-channel connection structure, ensuring that the integration process remains additive operations. Although the number of synaptic connections between the pre-synaptic and post-synaptic neurons has increased, the multisynaptic connections adopt the shared weights. This means that we have not increased the number of model parameters, indicating that the performance improvement is attributable to the spatiotemporal encoding capability, rather than to an increase in model size. Kasthuri et al.25 found that pairs of synapses sharing both the same axon and the same dendrite tend to be more similar than pairs of synapses sharing either just the same axon or just the same dendrite by comparing the structural features of synapses. This biological phenomenon is similar to our approach of using shared weights for multiple synaptic connections between pre-synaptic and post-synaptic neurons.

Signal reconstruction using spiking autoencoder

To elucidate the underlying encoding mechanisms of MSF neurons compared to LIF neurons, we first design a signal reconstruction task using oscillating signals with varying amplitudes and frequencies. As shown in Fig. 2a, we construct a simplified spiking autoencoder comprising a hidden layer of 32 spiking neurons followed by a readout layer. The input signals are classified into three types: (1) sinusoidal temporal signals of 300 time points (TP = 300) with varying frequencies and amplitudes, where intensity changes over time; (2) square wave temporal signals (TP = 300) with different frequencies, where intensity is either 0 or 1; (3) temporally compressed versions of the sinusoidal signals in (1), representing static signals (TP = 1) of different intensities. By reconstructing these three types of input signals, we can easily evaluate the ability of LIF and MSF neurons in encoding spatiotemporal dynamics.

a The overview of the spiking autoencoder for simple signal reconstruction. b Examples of original and reconstructed temporal signals (TP = 300) based on LIF (T = 300) and MSF (T = 300) neurons. The upper part shows sinusoidal temporal signals with varying frequencies and amplitudes, while the lower part shows square wave temporal signals with varying frequencies, where intensity is either 0 or 1. c Intensity distributions of original and reconstructed spatial signals (T = 1) based on LIF (T = 1), rate-coded LIF (T = 4), and MSF (T = 1) neurons. d Relative reduction percentage in image and video reconstruction errors achieved by MSF neurons compared to baseline LIF neurons. e Visualization comparison of reconstructed images and videos.

The first and second types of temporal signals reconstructed using LIF and MSF neurons are illustrated in Fig. 2b. The results demonstrate that both LIF and MSF neurons can precisely encode the temporal information of input signals using temporal coding. When the input signal is a square wave with intensities of either 0 or 1, the reconstructed signals from both LIF and MSF neurons show no significant differences. However, when the input signal intensity varies over time, LIF neurons fail to accurately encode the intensity distribution of the input signal, particularly at the peaks and troughs. Benefiting from the multisynaptic connections, our MSF neurons can simultaneously encode the intensity distribution and temporal dynamics of input signals. To further compare the intensity encoding differences between LIF and MSF neurons, we reconstruct the third type of static signals using LIF (T = 1), MSF (T = 1, D = 4), and the commonly used rate-coded LIF (T = 4). The rate-coded LIF (T = 4) method involves repeatedly inputting the static signals over four timesteps and using the mean firing rate over multiple timesteps to encode signal intensity. As shown in Fig. 2c, the reconstructed signal intensity based on LIF (T = 1) are limited to a few discrete values, showing a significant deviation from the original signal intensity. The intensity distribution of the reconstructed signal also shows a considerable difference from the original signal. When using the rate-coded LIF (T = 4) for reconstruction, there remains a significant error between the reconstructed and original signals in the high intensity range. Notably, the signal intensity reconstructed by our MSF (T = 1, D = 4) neurons shows minimal error compared to the original signal, and the intensity distribution of the reconstructed signals closely matches that of the original signal. This indicates that MSF (T = 1, D = 4) neurons can more accurately encode the intensity distribution of input signals with lower latency compared to rate-coded LIF (T = 4) neurons. We find that increasing the maximum number of synapses D can enhance the accuracy of encoding static signals with greater intensity variations (see Supplementary Fig. S2).

For image reconstruction on the ImageNet dataset33 and video reconstruction on the HMDB51 action recognition dataset34, we construct a spiking autoencoder model consisting of an encoder and a decoder component. The encoder comprises three downsampling spiking convolutional layers for generating encoded features, while the decoder mirrors this structure with three upsampling spiking deconvolutional layers that reconstruct the input from the encoded representation. The detailed experimental settings of the model are provided in Supplementary Table S7. By employing both LIF and our MSF neurons in the same network and measuring the error between all pixels of the reconstructed and input images, we can evaluate their spatiotemporal information encoding capabilities. As shown in Fig. 2d, MSF-based SNNs achieve 29.6% and 42.9% lower reconstruction errors than LIF-based counterparts in image and video reconstruction tasks, respectively. This significant improvement highlights the superior performance of MSF neurons in encoding spatiotemporal dynamics. As shown in Fig. 2e, the images and videos reconstructed using our MSF neurons exhibit significantly better detail, texture, and color compared to those reconstructed using LIF neurons.

Comprehensive evaluation on static and dynamic recognition

In this subsection, we aim to demonstrate the advantages of our MSF neurons in static and spatiotemporal information processing through static and dynamic recognition tasks. We apply the MSF neuron model to the multi-scale hierarchical spiking residual attention network (MHSANet), which comprises pre-activation bottleneck residual blocks with integrated multi-scale feature extraction and attention mechanisms. For static image recognition, we conduct experiments on three benchmark datasets: CIFAR-10, CIFAR-100, and ImageNet. We employ networks of different depths according to dataset complexity: MHSANet-29 for CIFAR-10 and CIFAR-100, and the deeper MHSANet-50 and MHSANet-101 architectures for the large-scale ImageNet dataset. The detailed experimental settings are provided in Supplementary Table S8. We test our MHSANet with ReLU, LIF, and MSF neurons, respectively. Consistent with previous works17,35, we set the number of timesteps T to 1 and 4 for all experiments. Here, the input at T = 4 refers to the same image being input repeatedly at four timesteps, which is a common practice in direct training methods17. To fairly compare with vanilla spiking neurons using four timesteps, we also set the maximum number of synaptic connections D to 4. For the more challenging ImageNet dataset, we also test the maximum number of synaptic connections D of 8. An analysis of the impact of the maximum number of synaptic connections D and init membrane threshold Vth on performance can be found in Supplementary Fig. S3.

We comprehensively compare the theoretical power consumption and classification performance of different models and neurons. As depicted in Fig. 3a and b, three key conclusions can be derived. First, compared to ReLU neurons, MSF neurons can achieve comparable or even better classification performance with 5-18 times lower power consumption. For instance, when comparing ReLU with our MSF (T = 1, D = 4) neurons on CIFAR-10, the Top-1 accuracy improves from 97.15% to 97.28%, while the energy consumption is reduced by 17.8 times. On ImageNet, MSF (T = 1, D = 8) neurons also surpass ReLU neurons (79.89% vs. 79.72%) with less than 5.4 times the power consumption (6.58 mJ vs. 35.49 mJ). Second, compared to baseline LIF neurons, our MSF neurons achieve a significant performance improvement while maintaining the low-power and low-latency advantage. For example, on CIFAR-10, the highest classification accuracies achieved by MSF (T = 1, D = 4), LIF (T = 1), and LIF (T = 4) neurons are 97.28%, 96.46%, and 96.91%, respectively, with corresponding power consumptions of 0.65 mJ, 0.36 mJ, and 1.23 mJ. Compared to LIF (T = 1) neurons, our MSF neurons significantly improve model performance at the expense of slightly higher power consumption. In contrast to LIF (T = 4) neurons, our MSF (T = 1, D = 4) neurons achieve better performance and lower power consumption, demonstrating that MSF neurons can encode instantaneous stimulus intensity more accurately with lower latency and power consumption than rate-coded LIF (T = 4) neurons. Supplementary Fig. S4 shows the classification accuracy curves and the spiking rates of MSF and LIF neurons. We also visualize the spatial distribution of spike maps for four layers of MSF (T = 1, D = 4) and LIF (T = 1) neurons on ImageNet (see Supplementary Fig. S5). It can be observed that the spikes of our MSF neurons are concentrated on the edges and important features of objects, while fewer spikes are emitted in irrelevant background areas. In contrast, LIF neurons emit a large number of spikes in the background, which not only negatively impacts classification accuracy but also increases unnecessary power consumption. Third, using multiple timestep rate coding for static data processing is inefficient. Although vanilla LIF neurons can leverage rate coding across multiple timestep to improve classification performance on static data, they come at the cost of a significant increase in power consumption, approximately four times higher. Extending MSF (T = 1) to MSF (T = 4) only slightly improves classification performance but significantly increases power consumption. Details of the comparison with state-of-the-art methods on CIFAR-10, CIFAR-100, and ImageNet can be found in Supplementary Table S1 and Table S2. Overall, our MSF-based SNNs can achieve high performance with low power consumption, addressing the performance-power trade-off faced by ANNs and vanilla SNNs.

Performance-power comparison of different models and neurons on CIFAR-10 (a), and ImageNet (b) datasets. c Performance comparison of LIF, ReLU, and MSF on CIFAR10-DVS. d Event stream data of one sample from HARDVS and the corresponding accumulated frame images. e Performance-power comparison of LIF, ReLU, and MSF on HARDVS. f Spatial distribution of spike maps at four time points for one hidden layer of MSF and LIF neurons on HARDVS. g Synaptic number distributions of multisynaptic neurons in the human cerebral cortex28 and our trained models on the CIFAR-10, HARDVS, and ImageNet datasets.

Subsequently, we evaluate the spatiotemporal information processing capabilities of the models on dynamic event-based classification and recognition. We test MSF, LIF, and ReLU neurons on the event-based classification dataset (CIFAR10-DVS) and human action recognition dataset (HARDVS). To ensure a fair comparison between ReLU and MSF neurons, we also feed images across multiple timesteps T into the network based on ReLU neurons, ensuring that the inputs for ReLU neurons are consistent with those for MSF neurons. The feature maps from these T timesteps are averaged before being fed into the classification layer. The detailed experimental setup can be found in Methods and Supplementary Table S8. As shown in Fig. 3c, our MSF neurons improve classification accuracy by 1.4% and 2.0% over LIF neurons on CIFAR10-DVS. Notably, our MSF neurons also surpass ReLU neurons in classification accuracy by 0.3% and 1.2% on CIFAR10-DVS. Figure 3d illustrates the raw event stream data of HARDVS and the accumulated frames, showing the temporal evolution of human actions. In the power-accuracy comparison (Fig. 3e), under the two model scales of MHSANet, MSF neurons achieve a significant performance enhancement of 3.8% and 4.2% compared to LIF neurons, respectively, with only a slight increase or even reduction in power consumption. Furthermore, when compared to ReLU neurons, MSF neurons exhibit an improvement in recognition accuracy by 0.2% and a substantial reduction in power consumption by 5.7 times and 10.5 times across the two models. The results demonstrate the significant advantage of MSF neurons in handling spatiotemporal computing tasks. We further visualize the spatial distribution of spike maps at four timesteps for one hidden layer of MSF and LIF neurons on HARDVS (Fig. 3f). It can be observed that MSF neurons focus on human actions, particularly hand movements, while LIF neurons generate numerous irrelevant spikes in the background. We also find similar results on the shallow and deep layers of MSF and LIF neurons on HARDVS (see Supplementary Fig. S6). Additional comparisons and details are provided in Supplementary Table S3 and Table S4.

To analyze the distribution of multisynaptic connections in the trained models, we set the maximum number of synapses D to 30. The number of synapses for all MSF (T = 1, D = 30) neurons in the trained model is determined by counting the maximum number of spikes each neuron fires in a batch of data. As shown in Fig. 3g, the synaptic number distributions are similar across the three datasets (CIFAR-10, HARDVS, and ImageNet), with the majority of neurons requiring only one synaptic connection. As the number of synapses increases, the number of neurons decreases exponentially. Additionally, we find that with increasing task complexity, the proportion of neurons with two or more synaptic connections also increases. Notably, the red curve represents the synaptic number distribution observed in the human cerebral cortex28. Interestingly, multisynaptic connections are sparse in the trained models, similar to that observed in a cubic millimeter of the human temporal cortex28.

Comprehensive evaluation on image-based and continuous event-based object detection

Current SNNs face significant challenges in handling challenging computer vision tasks such as object detection. In this subsection, we aim to evaluate the performance of our MSF neurons on static image object detection and dynamic event-based object detection tasks. We adopt the one-stage detection framework YOLOX, replacing the backbone with our MHSANet and all activation functions with spiking neurons (see “Experimental settings” in Methods). Firstly, we test the performance of the model on the static COCO dataset. As depicted in the Fig. 4a, our MHSANet-YOLO based on MSF neurons outperforms other SNNs by a large margin. For example, our MHSANet-YOLO based on MSF (T = 1, D = 4) neurons achieves a significant performance enhancement of 10.4% mAP compared to the baseline MHSANet-YOLO with LIF (T = 1) neurons, at the cost of a slight increase in power consumption. Although SpikeYOLO36 performs comparably to our method in terms of accuracy, it has significantly higher power consumption. Notably, our model can surpass or match classical ANN detection models with much lower energy consumption, demonstrating the great potential of our multisynaptic spiking neurons in challenging computer vision tasks. Further comparisons and details can be found in Supplementary Table S5. For instance, our MHSANet-YOLO based on MSF (T = 1, D = 8) neurons achieves the same detection performance with a 10.2x reduction in power consumption compared to MHSANet-YOLO with ReLU neurons. Figure 4b shows the detection visualization results of our model using LIF (T = 1) and MSF (T = 1, D = 4) neurons on the COCO dataset. It is evident that our MSF neurons can detect objects that are difficult for LIF neurons to identify.

a Performance-power comparison of different models and neurons on the COCO dataset. b Detection visualization comparison of LIF and MSF neurons on the COCO dataset. c Event stream data of one sample from Gen1 and the corresponding accumulated frame images. d Performance-power comparison of different models and neurons on Gen1. e Detection visualization comparison of LIF and MSF neurons on the Gen1 dataset. To elucidate the influence of timesteps on detection capability, we have varied the timesteps of MSF neurons to 1, 3, and 6, respectively.

Secondly, we evaluate the ability of MSF-based SNNs to handle complex spatiotemporal dynamics on the large-scale neuromorphic object detection dataset Gen137. Figure 4c illustrates the raw event stream data of Gen1 and the accumulated frames. The performance-power comparison results are provided in Fig. 4d. The results show that our MHSANet-YOLO can achieve 72.4% and 73.7% mAP with MSF (T = 1, D = 4) neurons, significantly higher than the best-reported accuracy of prior SNN works. As illustrated in Supplementary Table S6, our MHSANet-YOLO with MSF neurons achieves higher performance and significantly lower power consumption compared to MHSANet-YOLO with ReLU neurons. For example, the larger MHSANet-YOLO (76.2M) with MSF (T = 1, D = 4) neurons achieves higher detection performance than that with ReLU neurons (73.7% mAP vs. 72.4% mAP) with 16.6 times less power consumption (5.5 mJ vs. 91.5 mJ). When the timesteps are extended to 3 and 6, our MHSANet-YOLO with MSF neurons can further improve detection accuracy (mAP) from 73.7% to 75.4% and 76.7%, respectively. However, MHSANet-YOLO with ReLU neurons shows only marginal performance improvements with increased timesteps, highlighting the advantage of SNNs in temporal dynamic modeling. To ensure a fair comparison between ReLU and MSF neurons, we also feed images across multiple timesteps T into the network based on ReLU neurons, ensuring that the inputs for ReLU neurons are consistent with those for MSF neurons. The feature maps from these T timesteps are averaged before being fed into the detection head. Furthermore, as presented in Fig. 4e, we visualize the detection results of our model with LIF and MSF neurons on the Gen1 dataset. The results indicate that MSF (T = 1, D = 4) neurons demonstrate better detection performance than LIF (T = 1) neurons, and increasing the timesteps further significantly enhances detection performance on sparse event streams.

Application to biological signal processing and reinforcement learning

Biological signals are spatiotemporal dynamic continuous data, including electroencephalogram (EEG), electrocardiogram (ECG), and speech. To evaluate the potential of our MSF neurons in biological signal processing, we conduct experiments across various tasks: speech recognition on auditory data converted into spike trains in the SHD dataset38; EEG-based emotion recognition on EEG data from participants while they watched music videos in the DEAP dataset39; EEG-based motor imagery classification on EEG data in the MMIDB dataset40, where subjects imagined specific movements (e.g., left-hand movement); EEG-based sleep stage scoring on EEG data from participants during sleep in the Sleep-EDF-18 dataset40,41; and ECG wave-pattern classification on annotated ECG data in the QTDB dataset42. To test the generalization performance of MSF neurons across different models, we adopt state-of-the-art SNNs designed for their corresponding tasks, including DH-SNNs, SRNNs, and Spiking EEGNet. The detailed experimental settings and model parameters can be found in the Methods and Supplementary Table S9. The comparison between LIF and MSF neurons is conducted under the same experimental settings and model parameters.

Examples of the speech, EEG, and ECG data are visualized in Fig. 5a–c, where each sample contains several hundred timesteps, rich in spatiotemporal dynamics. As shown in Fig. 5d, our MSF neurons achieve consistent and significant performance improvements over LIF neurons in all tasks, demonstrating the superiority and high generalization of MSF neurons. For example, our MSF neurons achieve 86.7% and 87.0% accuracy for valence and arousal recognition on DEAP, which are 11.6% and 8.5% higher than vanilla LIF neurons. On MMIDB, MSF neurons boost the accuracy of four-class motor imagery classification from 54.8% to 61.7%. The EEG topography maps for left and right-hand motor imagery are shown in Fig. 5e. It can be found that our MSF neurons help locate more precise brain regions. For instance, left-hand motor imagery can be accurately located to the right Primary Motor Cortex, evidencing the superiority of MSF neurons in spatiotemporal information encoding. Moreover, the performance improvement in sleep stage scoring on Sleep-EDF-18 is also significant (from 70.9% to 78.4%) by replacing LIF neurons with our MSF neurons in the spiking EEGNet. Figure 5f presents the confusion matrices for LIF and MSF neurons, demonstrating that MSF neurons significantly outperform LIF neurons at all stages except for the N3 stage. In short, these results indicate that MSF neurons have great potential in handling temporal computing tasks.

a An example of the input spike trains from SHD dataset (b) Illustrations of three brain-computer interface tasks and EEG data. c An example of a single ECG signal channel labeled for each timestep. d Comparison of recognition accuracy between LIF and MSF neurons with identical network parameters and experimental settings across all biological signal recognition tasks. e Comparison of EEG topographies for left and right hand motor imagery tasks using LIF and MSF neurons. f Comparison of confusion matrices for LIF and MSF neurons on the Sleep-EDF-18 dataset.

To further validate the generalization performance of the multisynaptic neuron model, we test MSF neurons on deep spiking reinforcement learning tasks. Specifically, we utilize four commonly used reinforcement learning tasks supported by the OpenAI-gym43 and MuJoCo44 environments. The network architecture employs the advanced spiking reinforcement learning algorithm (PopSAN) proposed by Tang et al.45. The overall workflow is illustrated in Supplementary Fig. S7a and includes an encoder, decoder, and spiking actor network (SAN). The training process uses TD346, a popular deep reinforcement learning algorithm. To thoroughly evaluate the performance of MSF neurons in deep spiking reinforcement learning tasks, we employ two encoding methods. The first is population encoding used in PopSAN, which converts continuous values into stimulus intensities and then encodes them into spikes of population neurons (T = 5). Using this encoding method, we compare the performance of LIF (T = 5) and MSF (T = 5) neurons across 10 random seeds. Supplementary Fig. S7b illustrates the performance comparison between MSF and LIF neurons in four different reinforcement learning tasks. The experimental results reveal that SANs built with MSF neurons achieve significantly higher average maximum rewards in all four tasks compared to their LIF-based counterparts, while also exhibiting more stable performance across different random seeds. In the HalfCheetah-v3 and Hopper-v3 tasks, the SANs with MSF neurons demonstrate substantial performance enhancements over those with LIF neurons, with improvements of 13.96% and 36.54%, respectively. Furthermore, in terms of stability across different initial states, the SANs with MSF neurons significantly outperform those with LIF neurons on the Hopper-v3 and Ant-v3 tasks.

Additionally, we propose the second FC-based direct encoding method, where continuous state values are directly encoded into spikes within a single timestep through a fully connected layer and MSF neurons. The experimental results presented in Supplementary Fig. S7b indicate that the SANs with MSF (T = 1) neurons outperform their multiple timestep LIF-based methods, suggesting that employing MSF is an effective approach to encoding stimulus intensity.

Surrogate gradient optimization

In this paper, we adopt the BPTT learning algorithm2 for directly training SNNs with vanilla LIF neurons and our MSF neurons. The computational graph for forward and backward propagation is shown in Fig. 6a. A critical issue in training SNNs is addressing the differentiable spike activity and the resulting problems of gradient vanishing and explosion. Various surrogate functions σ( ⋅ ) have been proposed to approximate the discontinuous derivative of the activation function in LIF neurons, such as rectangular, sigmoid, and arctangent functions. Unlike LIF neurons, the multi-threshold firing mechanism of MSF neurons results in multiple non-differentiable points in the backward propagation process (Fig. 6b). Therefore, the surrogate gradient \({f}^{{\prime} }(\cdot )\) of our MSF neurons comprises a sum of multiple surrogate functions σ( ⋅ ) at different thresholds. The threshold interval h and the curve steepness α of the surrogate functions jointly determine the surrogate gradient of MSF neurons. Theoretical analysis shows that regardless of the surrogate function chosen, the threshold interval h = 1 is the optimal solution to mitigate gradient vanishing and explosion issues, ensuring the surrogate gradient remains stable around 1. We visualize the surrogate gradient \({f}^{{\prime} }(\cdot )\) of MSF (D = 4) neurons based on rectangular (Fig. 6c) and sigmoid (Fig. 6d) functions with different threshold intervals h. It can be observed that only when h = 1, the surrogate gradient \({f}^{{\prime} }(\cdot )\) of MSF neurons can remain stable around 1. Moreover, we present an extreme example with a maximum number of synapses D = 100 (see Supplementary Fig. S8). We also analyze the impact of the threshold interval h on the classification performance on CIFAR-10. As shown in Fig. 6e, the optimal performance is always achieved when h = 1, regardless of the surrogate gradient function chosen.

a The forward and backward computation graph of spiking neurons as used for backpropagation through time (BPTT). b Activation functions of ReLU, LIF, and MSF. Surrogate gradients \({f}^{{\prime} }(\cdot )\) of MSF (D = 4) neurons based on rectangular (c) and sigmoid (d) functions σ with different threshold intervals h. e The impact of the threshold interval h on the classification performance on CIFAR-10. Surrogate gradients of MSF (D = 4) neurons based on rectangular (f) and sigmoid (g) functions with different curve steepness α. h The impact of the curve steepness α on the classification performance on CIFAR-10. i Classification performance comparison between different depths of ResNet with LIF and MSF neurons.

Once the threshold interval h is set to 1, the oscillation amplitude of the surrogate gradient \({f}^{{\prime} }(\cdot )\) around 1 can be adjusted by modifying the curve steepness α. We further evaluate the impact of the curve steepness α on the surrogate gradient \({f}^{{\prime} }(\cdot )\) and model performance. The surrogate gradient \({f}^{{\prime} }(\cdot )\) of MSF neurons based on rectangular and sigmoid functions with different α are shown in Fig. 6f, g. The results indicate that when h = 1, different α values determine the extent to which the surrogate gradient of MSF fluctuates around 1. Therefore, when choosing different surrogate functions σ( ⋅ ), we need to adjust their curve steepness α to stabilize the surrogate gradient \({f}^{{\prime} }(\cdot )\) of MSF neurons around 1. As presented in Fig. 6h, the optimal α value varies for different surrogate functions. The highest classification performance on CIFAR-10 corresponds to the optimal α value that stabilizes the surrogate gradient \({f}^{{\prime} }(\cdot )\) of MSF neurons around 1.

Overall, theoretical and experimental results jointly demonstrate that the threshold interval h = 1 is the optimal solution for MSF neurons, ensuring the surrogate gradient remains stable around 1. Additionally, we provide a parameter optimization criterion for selecting the curve steepness α of different surrogate functions, enabling the extension of SNNs with MSF neurons to large, deep networks without degradation problems. To further demonstrate the scalability of our model to deeper architectures without degradation problems, we evaluate various depths of vanilla ResNet architectures using both LIF and MSF neurons on CIFAR-10. The tested models include ResNet-20, ResNet-32, and ResNet-56 based on the basic block, as well as ResNet-65, ResNet-83, and ResNet-101 based on the bottleneck blocks. As shown in Fig. 6i, models incorporating our MSF neurons consistently achieve higher test accuracy as network depth increases. In contrast, the performance of models using LIF neurons deteriorates with increasing depth. Notably, when the depth increases from 65 to 83 layers, the test accuracy of the LIF-based model drops sharply from 53.9% to 20.4%, indicating a clear degradation problem. The detailed analysis and comparison of training loss and accuracy are provided in Supplementary Fig. S9. These results demonstrate that our MSF neurons with optimized surrogate gradients can effectively mitigate the degradation problem in deep SNNs, thereby unlocking the potential of deep SNNs.

Model interpretability

Bursting activity is commonly observed in biological neurons47,48 and typically characterized by multiple spikes firing at short time intervals49,50. Friedenberger et al.32 reported that burst spikes are more frequent under strong, contrasting stimulation. Given the trade-off between accuracy and computational efficiency, we adopt a time discretization greater than 1 ms per simulation timestep, which is consistent with common practice in machine learning applications of SNNs2,10,11,51. In the coarse time discretization, due to the single synapse with a single threshold, the LIF neuron cannot generate burst spikes even under strong stimulation. The key innovation of our MSF neuron model is its ability to emit multiple spikes (i.e., burst spikes) within a single timestep (Fig. 1e). This design allows for more effective spatiotemporal encoding while maintaining the computational efficiency of SNNs, thus providing an elegant solution to the trade-off between representational power and computational cost. Consequently, the MSF neuron model facilitates the development of high-performance and high-efficiency SNNs in practical machine learning applications.

Shapson-Coe et al.28 provided the statistical results of the synaptic number distribution in the human brain. As shown by the red curve in Supplementary Fig. S10a, single-synapse neurons account for 96.49% of the total. Neurons with two-synapse, three-synapse, and four or more synapse connections constitute 2.99%, 0.35%, and 0.092% of the total, respectively. Then, we examine the percentage of neurons with different number of synapses in the trained models. As shown in Supplementary Fig. S10b–e, we find that the multisynaptic connections in trained models on the CIFAR-10, CIFAR-100, ImageNet, and HARDVS datasets exhibit sparsity, similar to that observed in the human cerebral cortex. For example, in the trained model on CIFAR-10, axons with one synapse account for 94.88%, those with two synapses for 3.61%, three synapses for 1.01%, and four or more synapses for 0.5%. As the number of synapses increases, the percentage of neurons decreases exponentially.

Furthermore, we aim to further understand the relationship between the MSF and ReLU neurons through activation distribution visualization. Specifically, we visualize the activation distribution of the trained MHSANet with ReLU on CIFAR-10 (see Supplementary Fig. S10f). It can be observed that the activations are mainly concentrated in the range of 0-4, with over 99% of the activation values being less than 4. When we count the frequency within each integer interval, it is found that the ReLU activations within each integer interval decrease exponentially from 0 to 15. Therefore, the activation patterns of ReLU neurons are very similar to those of MSF neurons, with the key difference being that ReLU neurons can partition activation values more finely, allowing for more precise encoding of input stimuli. Theoretical derivations indicate that the ReLU neuron is a special case of the MSF neuron. Moreover, experimental findings by Glorot et al.52 demonstrate that ReLU neurons can yield sparse representations similar to spiking neurons, highlighting the functional similarity between the two neuron models.

The signal reconstruction task demonstrates that MSF neurons can more accurately encode the spatiotemporal dynamics of input signals compared to LIF neurons. To analyze the exact differences in reconstruction capability between the two neuron types, we conduct a detailed comparative experiment using a single LIF neuron, a single MSF (D = 4) neuron, and a single 4LIF neuron, where 4LIF refers to a configuration of four LIF neurons that share the same weights to and from other neurons but have different firing thresholds. As shown in Supplementary Fig. S11, a single LIF neuron, due to its binary output, can only represent two intensity levels, resulting in substantial reconstruction error. In contrast, the MSF (D = 4) neuron offers more quantization levels, enabling a finer-grained signal representation. Although the 4LIF neuron similarly increases quantization levels, its four independent subthreshold dynamics affect the accurate encoding of temporal signals. To further compare MSF and 4LIF neurons at the network level, we evaluate MSF-based and 4LIF-based SNNs on the CIFAR10-DVS dataset. As shown in Supplementary Fig. S12a, MSF-based SNNs outperform 4LIF-based SNNs in both classification accuracy and computational efficiency. Furthermore, the activation frequency distribution presented in Supplementary Fig. S12b shows that 4LIF neurons generate significantly more spikes in the range of 2-4 compared to MSF neurons, leading to increased overall power consumption.

Efficient execution on neuromorphic hardware

High-performance computing with graphics processing units (GPUs) often leads to substantial power consumption53. Neuromorphic computing platforms, however, promise to deliver high-performance computing with significantly lower power consumption. Many general-purpose platforms with ANN-SNN hybrid computing architectures, such as Loihi 254, SpiNNaker 255, and the Tianjic series56 and its derivatives Lynxi chips, have been developed. In this subsection, we deploy the spiking detection network with LIF and MSF neurons on Lynxi chips and conduct event-based object detection tasks in real-world autonomous driving scenarios to demonstrate the computational efficiency and application potential of our MSF neurons. The pipeline is shown in Supplementary Fig. S13a. In our experiments, we use an autonomous vehicle to simultaneously capture event stream and RGB image data in an urban street environment. The RGB images are used for bounding box annotation, and the obtained labels are transferred to the event stream data as ground truth. The collected event stream data is processed by the spiking detection network deployed on the neuromorphic chip. We compare spiking detection models based on LIF and MSF neurons on the Lynxi chip. As shown in Supplementary Fig. S13b, our MSF neurons achieve significant improvements of 16.4% mAP in detection performance compared to LIF neurons. The visualization results in Supplementary Fig. S13c further illustrate that the MSF-based spiking detection model exhibits superior detection performance compared to the LIF-based model. As summarized in Supplementary Fig. S13d, on the Lynxi chip, both MSF-based and LIF-based models consume ~1.3 W, with inference frame rates of 16 FPS and 39 FPS, respectively. The same models based on LIF and MSF neurons achieve inference frame rates of ~106 FPS and 86 FPS, respectively, on an NVIDIA 4090 GPU, with both consuming 87 W of dynamic power consumption. These results indicate that our MSF neurons achieve high-performance object detection at the expense of some inference time. Overall, the efficient execution of MSF-based SNNs demonstrates the high execution efficiency and hardware compatibility of MSF neurons.

Discussion

Inspired by the multisynaptic connections of biological neurons, we propose the MSF neuron model that combines computational efficiency with powerful spatiotemporal encoding capabilities. Specifically, the MSF neurons can simultaneously encode the spatial intensity distribution and temporal dynamics of input signals using independent rate and temporal coding. By deriving the theoretically optimal solution for threshold selection and providing a parameter optimization criterion for different surrogate gradient functions, we can scale up MSF-based SNNs to large, deep models without degradation problems.

Extensive experimental results on various benchmarks indicate that our MSF-based SNNs can achieve superior performance while maintaining the energy efficiency advantage, enabling them to achieve or even surpass the performance of ANNs with identical network architectures on spatiotemporal continuous event stream tasks. Compared to LIF neurons, MSF neurons do not introduce additional parameters due to the shared weights used in the multisynaptic connections, thereby enabling efficient execution on neuromorphic hardware and facilitating deployment in resource-constrained edge computing scenarios. Overall, this work demonstrates that biologically inspired neural models can significantly advance neuromorphic computing toward real-world applications.

The MSF model offers a practical solution for efficiently encoding stimulus intensity through multiple spikes within a single timestep. By modeling multi-spike transmission via a parallel multisynaptic structure, the MSF model reduces temporal redundancy and enables faster, more energy-efficient information processing. This design avoids the need for prolonged spike trains or complex spike-timing mechanisms, making it well-suited for real-time neuromorphic applications such as low-latency recognition, decision-making, and control.

With the training and performance bottlenecks of large-scale SNNs resolved through our approach, applying these models to real-world agents and edge computing devices represents promising future work. The successful deployment of MSF-based SNNs on hybrid-paradigm neuromorphic hardware has already demonstrated superior performance, power efficiency, and hardware compatibility. Further application of these models to asynchronous event-driven neuromorphic chips will realize the low-power and low-latency advantages of SNNs. Algorithm-hardware co-design will be key to advancing neuromorphic computing toward practical applications. An interesting future research direction is to develop dedicated neuromorphic chips that physically implement multisynaptic connections with different thresholds between neurons to achieve even higher performance and efficiency for neuromorphic computing.

Increasing evidence from neuroscience research indicates that multisynaptic connections exist across various animals, with significant variation in the number of synapses between neuron pairs. In the primary visual cortex of mice24,57, the maximum number of synapses in the multisynaptic connections between pyramidal neurons is only five. However, in flies23,24, the number of synapses can reach up to 100 or even 1000. The strongest connection, from a visual centrifugal neuron to a wide-field lobula neuron, contains more than 2400 synapses. In our experiments, the maximum number of synapses D is empirically set, typically to 4. A more rational approach for determining synapse numbers could involve neural evolution strategies tailored to specific tasks and requirements.

Methods

Multisynaptic spiking neuron model

The fundamental unit of spiking neural networks is the spiking neuron, which is analogous to biological neurons and processes binary signals. The widely used spiking neuron model is the simplified LIF model7,58, which can be described by the following differential equation:

where u represents the membrane potential of the post-synaptic neuron, τ is the time constant, and I denotes the pre-synaptic input. When the membrane potential u exceeds a predefined threshold Vth, a spike is generated, and the membrane potential is reset to ureset.

For the convenience of simulating on a computer, the iterative LIF model3,59 is proposed by numerical discretization. By incorporating the firing-and-resetting mechanism, the iterative LIF model can be formulated as

where \({u}_{i}^{t,n}\) represents the membrane potential of the i-th neuron in the n-th layer at timestep t. \({s}_{i}^{t,n}\) denotes the spiking output of the i-th neuron in the n-th layer at timestep t, and wij is the synaptic weight from the presynaptic neuron j to the postsynaptic neuron i. \(\beta={e}^{-\frac{dt}{\tau }} < 1\) denotes the decay factor. Θ( ⋅ ) is the Heaviside step function that equals 0 when the input is less than 0 and equals 1 when the input is greater than or equal to 0.

However, vanilla spiking neurons such as LIF neurons and their variants are connected through single synapses (i.e., single channels). Due to the binary information representation in spiking neurons, the single-channel connection between neurons makes it difficult for SNNs to simultaneously encode both the spatial intensity distribution and temporal dynamics of input signals. To address this limitation, we design MSF neurons, where the pre-synaptic neuron can establish up to D synapses with the post-synaptic neuron. Each synapse corresponds to a firing threshold value \({V}_{\,{{\rm{th}}}\,}^{d}(d=1,2,\ldots,D)\). When the input stimulus at time t is strong, the membrane potential increases significantly, triggering multiple spikes through multiple threshold decisions, which are then simultaneously transmitted to the post-synaptic neuron via the multi-channel structure. The instantaneous firing rate across multiple synapses can be used to encode spatial intensity distributions. The MSF neuron model can be formulated as follows:

where sign( ⋅ ) represents the sign function and the upper index d denotes the d-th synapse. To construct a SNN model, we first extend the MSF neuron model to a layer-wise MSF model, which can be described as follows:

where t, n, and d represent the timestep, layer, and synapse, respectively. ⊙ denotes the element-wise multiplication. Vreset denotes the reset potential, which is typically set to 0. Wn denotes the fully connected or convolutional weight matrix. Ut,n, St,n,d, Ht,n, and Xt,n denote the membrane potential tensor, spiking output tensor, hidden state tensor, and spatial feature tensor, respectively. All tensors within the same layer share the same dimensions, i.e., Ut,n, St,n,d, Ht−1,n, Ht,n, and \({{{{\bf{X}}}}}^{t,n}\in {{\mathbb{R}}}^{{c}_{n}\times {h}_{n}\times {w}_{n}}\). Here, cn refers to the number of channels, while hn and wn refer to the height and width of the feature map, respectively.

As illustrated in Fig. 1f, the input spike tensor St,n−1 from the previous layer is processed through a convolutional layer to obtain the spatial feature map Xt,n. We adopt the shared weights for multiple synaptic connections, i.e., Wn,1 = Wn,2 = … = Wn,D. The membrane potential Ut,n at the current timestep is derived by integrating the state input from the previous timestep Ht−1,n with the spatial feature map at the current timestep Xt,n. The output spiking tensor St,n,d is obtained by comparing the membrane potential Ut,n with multiple threshold values \({V}_{\,{{\rm{th}}}\,}^{d}\). The membrane potential of neurons that have fired will be reset to Vreset, while the membrane potential of neurons that have not fired will decay by being multiplied by the decay factor β.

Direct training with surrogate gradients

Inappropriate gradients may lead to the issues of gradient explosion or vanishing in deep neural networks. Taking a multi-layer neural network as an example, the relationships between layers can be formulated as:

where F( ⋅ ) represents either a convolutional layer or a fully connected layer, Wl denotes the weight and bias of l-th layer, and f( ⋅ ) is the neuronal activation function. The gradient of the output Sl with respect to the output Sl−1 can be calculated as:

From the chain rule of backpropagation, we have

where \({{{\mathcal{E}}}}\) denotes the loss function. We can find that the gradient of the activation function, \({f}^{{\prime} }\), directly impacts the stability of network training. As the depth L of the network increases, the issues of vanishing or exploding gradients can be mitigated only when \({f}^{{\prime} }\) consistently equals 1. This is why using ReLU as the activation function in deep ANNs is more effective than using sigmoid or tanh52. Similarly, in deep SNNs, setting the surrogate gradient to 1 can partially prevent the vanishing and exploding gradient problems during backpropagation.

Determining the values of multiple thresholds, as well as directly training MSF using surrogate gradients, are critical issues in the implementation of this model. In the direct training methods for the LIF model, the non-differentiable property of the spiking activity necessitates the use of surrogate gradients to replace the discontinuous gradient near the threshold Vth, which can be described as:

where σ( ⋅ ) denotes the gradient surrogation function in the LIF model. Various effective surrogate gradient functions have been proposed, including linear, sigmoid, rectangular, and arctangent functions. According to Equation (4), to reduce memory overhead during training, the calculation of St,n in the forward propagation can be described as \({{{{\bf{S}}}}}^{t,n}={\sum }_{d=1}^{D}\Theta \left({{{{\bf{U}}}}}^{t,n}-{V}_{\,{{\rm{th}}}\,}^{d}\right)\).

In the backpropagation process, the gradient is discontinuous at multiple locations. The surrogate gradient of MSF consists of multiple surrogate functions, which can be described as:

Therefore, the setting of thresholds and the choice of surrogate functions directly determine the gradients of the activation functions during the backpropagation process. To proportionally measure the magnitude of membrane potential, it is generally necessary to have equal intervals between thresholds when selecting multiple threshold values. By setting an initial threshold Vth and a threshold interval h > 0, the surrogate gradient \({f}^{{\prime} }({{{{\bf{U}}}}}^{t,n})\) of MSF can be described as follows:

To simplify the expression, we can shift the summation index to make the summation symmetric about zero. Define a new index \({d}^{{\prime} }=d-\frac{D-1}{2}\), which shifts the range of summation to:

When D → ∞, the summation can be approximated by a continuous integral. By approximating the summation over discrete \({d}^{{\prime} }\) with an integral over the continuous variable \(x={d}^{{\prime} }h\), we obtain:

Let y = Ut,n − Vth − x, which gives dy = − dx. Substituting this into the integral, we get:

Given that the surrogation gradient function σ(x) satisfies \(\int_{-\infty }^{\infty }\sigma (x)\,dx=1\), we can obtain:

Ideally, the surrogate gradient \({f}^{{\prime} }({{{{\bf{U}}}}}^{t,n})\) should stabilize around 1 to prevent the backpropagation algorithm from encountering issues of gradient vanishing or gradient explosion. This means that regardless of the surrogate gradient function σ(x) used, h = 1 is the optimal solution to ensure the surrogate gradient fluctuates around 1. The oscillation amplitude of the surrogate gradient around 1 can be adjusted by modifying the curve steepness α of the surrogation gradient functions. To ensure more stable training, the curve steepness α should be adjusted to minimize the oscillation amplitude of the surrogate gradient around 1. Please see Fig. 6 and Supplementary Fig. S8 for detailed experimental analyses on the impact of the threshold interval h and the curve steepness α.

Relationship between classical artificial neuron and multisynaptic spiking neuron

Glorot et al.52 found that the ReLU neurons can yield sparse representations similar to those produced by spiking neurons, which are both biologically plausible and computationally efficient. In this paper, we aim to reveal the underlying relationship between ReLU neurons and our multisynaptic spiking neurons through experiments and theoretical derivations. Here, we analyze the relationship between ReLU neurons and our multisynaptic spiking neurons from the theoretical perspective. The ReLU neurons can be described as:

The integration can be approximated by summation when the step size h is sufficiently small:

The calculation of the spatial feature map Xn in the forward propagation of a network based on ReLU neurons can be described as:

When t = 1 and D → ∞, Equation (4) of MSF can be described as:

Similarly, the calculation of the spatial feature map Xn in the forward propagation of a network based on MSF neurons can be described as:

Comparing Equations (17) and (19), we find that when Vth = 0, the MSF and ReLU neurons are approximately equivalent. In other words, ReLU can be considered a special case of MSF. When the timestep t = 1, the initial threshold Vth = 0, and the maximum number of synapses D → ∞, MSF can approximate ReLU. Moreover, when the maximum number of synapses D is reduced to 1, the MSF neurons degenerate into LIF neurons.

Multi-scale hierarchical spiking residual attention network

In this paper, the bottleneck block is chosen as the fundamental residual block due to its reduced computational complexity and improved efficiency60. Inspired by MS-ResNet35 and Att-ResNet17, we construct a pre-activation bottleneck block (see Supplementary Fig. S14). Moreover, multi-scale features play a crucial role in various computer vision tasks61. By extracting features from different scales, this technique captures structural and textural information across multiple sizes, providing a more comprehensive and accurate representation of the image. The stacking of multiple convolutional operators can increase the receptive field, allowing for the natural extraction of fine-to-coarse multi-scale features. Therefore, by stacking multiple convolutional layers in a residual block, deeper layers in the residual block can access a larger receptive field, enabling them to capture more global contextual information. As shown in Supplementary Fig. S14, after the 1 × 1 convolution, we split the feature maps into s groups along the channel dimension, denoted by \(\{{{{{\bf{X}}}}}_{1}^{t,n},{{{{\bf{X}}}}}_{2}^{t,n},...,{{{{\bf{X}}}}}_{s}^{t,n}\}\). The s groups are then fed into a hierarchical residual-like convolutional layers. Specifically, for every Xi except X1, there exists a corresponding 3 × 3 convolution operation Convi( ⋅ ) with output Yi. The feature group Xi is combined with the output of Convi−1( ⋅ ) and subsequently processed by Convi( ⋅ ).

In the case where i > 2, Convi operates not just on Xi but on the sum of Xi and the output of the previous convolution Yi−1. This cumulative effect ensures that features from earlier layers are propagated and considered in subsequent layers, thereby increasing the receptive field. As i increases, each Convi processes features from different scales: the original input features (Xi) as well as features that have been processed by previous convolutions (Yi−1). This blending of information from different scales within the network allows it to capture both fine-grained details from the earlier layers and more abstract, high-level information from the later layers.

Subsequently, the split groups are concatenated and fed into 1 × 1 convolutional layer. The 1 × 1 convolution operation serves to integrate features across the split groups after they have been concatenated along the channel dimension. It allows for the fusion of multi-scale features by aggregating information from different split groups and enhancing the representation capability of the network. Furthermore, we can also add the split-transform-merge strategy62 to replace the 3 × 3 convolution with the 3 × 3 group convolution. In this paper, s and c are used to denote the number of scales and groups, respectively, while w represents the number of channels per sub-feature map. s controls the receptive field sizes, whereas c and w control the model’s complexity.

Visual selective attention is an essential brain function possessed by both humans and animals63, and it has been widely and effectively applied in computer vision tasks64. Here, we focus on the refinement effect of selective attention mechanisms on neural firing. Previous research65,66 has shown that selective attention affects visually driven responses by modulating the spiking activity of individual neurons and critical neuronal populations. Recent studies17,67 indicate that attention mechanisms can increase neural firing in regions of significant information and decrease firing in irrelevant background regions, thereby enhancing the computational performance and efficiency of SNNs. As shown in Supplementary Fig. S14, channel and spatial attention mechanisms are used to refine the feature map along spatial and channel dimensions. For the channel attention mechanism, average-pooling and max-pooling operations are firstly applied to capture global information. The global features are then fed into a shared two-layer FC network to obtain the attention weights. The process can be described as follows:

where AvgPoolc( ⋅ ), MaxPoolc( ⋅ ) are pooling operations along the temporal and spatial dimensions, W0 and W1 are the shared weights of the two-layer FC network, and \({{{{\bf{X}}}}}_{\,{{\rm{CA}}}\,}^{t,n}\) denotes the refined feature map after applying channel attention.

For the spatial attention mechanism, we adopt average-pooling and max-pooling operations followed by a convolution layer to obtain the spatial weights. The refined feature map in the spatial dimension can be computed as:

where AvgPools( ⋅ ), MaxPools( ⋅ ) are pooling operations along the temporal and channel dimensions, Conv7×7 represents a convolution layer with a filter size of 7 × 7, and \({{{{\bf{X}}}}}_{\,{{\rm{SA}}}\,}^{t,n}\) denotes the refined feature map after applying spatial attention. The Multi-scale Hierarchical Spiking Residual Attention Network, termed MHSANet, is designed for static and dynamic recognition as well as image-based and event-based object detection. Similar to ResNet, MHSANet can be adapted to various dataset scales by adjusting parameters such as the number of blocks in different stages, the kernels, scales, and groups of convolutional layers.

Datasets and tasks

In this paper, we first design a spiking autoencoder model to test the spatiotemporal information encoding capability of our MSF neurons. Subsequently, we validate the SNNs with MSF neurons on various classification tasks, including static images and dynamic event streams. Finally, we assess the SNNs with MSF neurons on static image-based object detection, dynamic event-based object detection in real-world autonomous driving scenarios, biological signal processing, and reinforcement learning, providing a comprehensive evaluation of their spatiotemporal information processing capabilities.

Image and video reconstruction: The image reconstruction task uses the ImageNet-1k dataset33, while the video reconstruction task uses action videos from the HMDB51 dataset34. The model’s encoding and decoding capabilities are assessed by comparing the reconstructed images and videos with the originals.

Static image classification: We test our models on standard image classification benchmarks, including the CIFAR-10, CIFAR-100, and ImageNet-1k datasets. Both CIFAR-10 and CIFAR-100 datasets consist of 50,000 training images and 10,000 test images, all with a resolution of 32 × 32 pixels. CIFAR-10 contains 10 categories, while CIFAR-100 contains 100 categories. ImageNet-1k is a large-scale image classification dataset, comprising 1.28 million training images and 50k validation images across 1000 categories.

Dynamic event stream classification: We test our models on event-based classification benchmarks, including CIFAR10-DVS68 and HARDVS69. CIFAR10-DVS is an event-based dataset derived from static images captured by an event camera, consisting of 10,000 event streams across 10 different classes with a spatial resolution of 128 × 128. The dataset is divided into 9,000 training samples and 1000 test samples. HARDVS is a large-scale human activity recognition (HAR) dataset collected using a DAVIS346 camera with a resolution of 346 × 260 pixels. It includes a total of 107,646 video sequences representing 300 common human activities, split into 60% for training, 10% for validation, and 30% for testing.

Object detection: Complex computer vision tasks such as object detection present significant challenges for current SNNs. We test our models on the standard static object detection benchmark (COCO2017) and the event-based object detection benchmark (Gen1). The COCO2017 dataset70 is a large-scale frame-based object detection dataset, consisting of 118K training images and 5K validation images for 80 object categories. The image size is 640 × 640 pixels. The Gen1 dataset37 is a large-scale event-based object detection dataset capturing diverse driving scenarios on open roads. The dataset encompasses 39 h of driving footage with a spatial resolution of 304 × 240 pixels. The dataset comprises 228 K annotated bounding boxes for vehicles and 27K annotated bounding boxes for pedestrians. We use COCO mean average precision at IOU = 0.5 (mAP@50) as the performance metric to evaluate the models.

Reinforcement learning: We apply our MSF neurons in reinforcement learning tasks. Specifically, we used four commonly used reinforcement learning tasks supported by the OpenAI-gym43 and the MuJoCo44 environments, including HalfCheetah-v3, Walk2d-v3, Hopper-v3, and Ant-v3.

Biological signal processing: To further demonstrate the spatiotemporal dynamic modeling capability of our MSF neurons, we apply our MSF neurons to speech, EEG, and ECG signal processing tasks. For speech recognition, we use the Spiking Heidelberg Digits (SHD) dataset38, derived from original auditory data converted into spike formats using a bionic inner ear model. The SHD dataset consists of ~10,000 high-fidelity audio recordings of spoken digits ranging from 0 to 9 in both English and German by 12 different speakers, forming a classification task with 20 categories. The SHD dataset contains 8,156 training samples and 2264 testing samples. In speech recognition, we follow the same settings and pre-processing steps used by Zheng et al.11. Each sample input to the model is a 700 × 1000 matrix, where 700 and 1000 represent channels and timesteps, respectively.

For EEG signal processing, tasks included emotion recognition, motor imagery classification, and sleep stage scoring. The EEG-based emotion recognition task used the DEAP dataset39, which comprises 32-channel EEG data and 8-channel peripheral physiological signals from 32 participants, recorded while they watched 40 one-minute music videos. The EEG signals, initially sampled at 512 Hz, were downsampled to 128 Hz and had electrooculography (EOG) artifacts removed. The dataset dimension is 32 × 40 × 32 × 8064 (participants × trials × channels × temporal length). Participants rated their emotions on arousal, valence, liking, and dominance scales from 1 to 9 after each video. In the experiments, only 32-channel EEG data was used, focusing on arousal and valence, which were mapped to low, medium, and high labels, or just low and high. Before feeding the data into the model, we follow the same pre-processing steps as Tao et al.71 and Zheng et al.11. The processed EEG data is then reshaped to 25600 × 32 × 384 (samples × channels × timesteps). The dataset is split into 90% for training and 10% for testing.

The EEG-based motor imagery classification task is conducted on the MMIDB dataset provided by Physionet40, a comprehensive public dataset containing EEG recordings from 109 subjects. Each participant complete 14 experimental runs, consisting of 2 baseline runs and 12 runs involving motor imagery (MI) and actual movement, with 6 runs dedicated to each category. Each run lasts ~1 minute for resting states and 2 minutes for MI and movement tasks. The data are collected through 64 EEG channels, sampled at a frequency of 160 Hz. Our analysis focuses on data from 6 runs involving four types of MI tasks: left hand, right hand, both hands, and both feet. Data from four subjects (S88, S92, S100, and S104) are excluded due to incomplete experimental trials. Each run contains valid MI trials, each lasting four seconds, equivalent to 640 timesteps. The trials were categorized into four types: rest with eyes open, and MI of the left hand, right hand, and both feet. Notably, MI tasks involving both hands are excluded due to their high similarity to single-hand MI data. 10-fold cross-validation across subjects is utilized for the experiments.

The EEG-based sleep stage scoring task utilizes the Sleep-EDF-18 dataset40,41, which is contributed in 2018 and contains 197 polysomnograms (PSGs). This dataset includes recordings from healthy subjects (Sleep Cassette, SC) and subjects with mild difficulty falling asleep after taking temazepam (Sleep Telemetry, ST). Each whole-night PSG was recorded at a sampling rate of 100 Hz and comprises EEG data from the Fpz-Cz and Pz-Oz electrode locations, EOG, chin electromyography (EMG), and event markers. The hypnograms, segmented into 30-second epochs, were manually labeled by trained technicians according to the Rechtschaffen and Kales standard72. For this study, we follow AASM guidelines by merging N3 and N4 stages into a single N3 stage and excluding movement time (M) and unscored stages, resulting in five sleep stages: wake (W), rapid eye movement (REM), N1, N2, and N3. Additionally, we only include 10 minutes of wakefulness before and after sleep as part of the wake stage. Our evaluations specifically utilize the Fpz-Cz EEG channel from the SC recordings with 10-fold cross-validation scheme. The dataset dimensions are originally 187596 × 1 × 3000 (samples × channels × temporal length). The data is then reshaped to 187596 × 30 × 100 (samples × timesteps × temporal length).

The ECG wave-pattern classification task utilizes the QTDB dataset42. We follow the preprocessing method of Yin et al.2 by encoding the original continuous values into spike trains using a level-crossing scheme. The ECG signals are divided into six distinct characteristic waveforms (P, PQ, QR, RS, ST, and TP), with amplitude fluctuations and durations providing clinicians with crucial information about cardiovascular function. The ECG wave-pattern classification task is an online streaming labeling task that utilizes only historical information. The dataset is divided into a training set with 618 samples and a test set with 141 samples. Each sample comprises four channels and has a time length of 1301, containing 5-7 heartbeat rhythms.

Experimental settings

For the one-dimensional signal reconstruction task, we design a simplified spiking autoencoder consisting of a hidden layer followed by a readout layer. The readout layer is implemented as a linear decoder that reconstructs the original signal from the spike outputs of the hidden layer. The model is trained for 30 epochs with a batch size of 32 using the Adam optimizer, initialized with a learning rate of 0.001. A cosine annealing learning rate scheduler is applied throughout training. The mean squared error (MSE) loss is used as the training criterion, and reconstruction performance is evaluated by the MSE between the original and reconstructed signals. All experiments are conducted using the PyTorch framework on an NVIDIA RTX 4090 GPU. For the image and video reconstruction tasks on the ImageNet and HMDB51 datasets, both the encoder and decoder are composed of three convolutional layers. We compare the proposed MSF neuron with the baseline LIF neuron across all reconstruction experiments. The number of timesteps T is set to 1 for image reconstruction and 4 for video reconstruction, respectively.

For static image classification and event-based classification tasks, we employ the proposed MHSANet (see Supplementary Fig. S14). The specific network structures, such as depth and width, are chosen based on the dataset scale. For instance, on the CIFAR-10, CIFAR-100, and CIFAR10-DVS datasets, MHSANet-29 (32w × 4s) and MHSANet-29 (4c × 24w × 4s) are chosen as the base architecture. For ImageNet, MHSANet-50 (26w × 4s) and MHSANet-101 (26w × 4s) are chosen as the base architectures, while for HARDVS, MHSANet-29 (32w × 4s) and MHSANet-50 (18w × 4s) are used. For large-scale object detection tasks, we employ our MHSANet-50 (26w × 4s) and MHSANet-50 (4c × 24w × 4s) as the backbone. The detection head uses the YOLOX-L head, an anchor-free detection head known for its strong performance. All neurons in the detection head are replaced with spiking neurons.