Abstract

Molten salts are crucial for clean energy applications, yet exploring their thermophysical properties across diverse chemical space remains challenging. We present the development of a machine learning interatomic potential (MLIP) called SuperSalt, which targets 11-cation chloride melts and captures the essential physics of molten salts with near-DFT accuracy. Using an efficient workflow that integrates systems of one, two, and 11 components, the SuperSalt potential can accurately predict thermophysical properties such as density, bulk modulus, thermal expansion, and heat capacity. Our model is validated across a broad chemical space, demonstrating excellent transferability. We further illustrate how Bayesian optimization combined with SuperSalt can accelerate the discovery of optimal salt compositions with desired properties. This work provides a foundation for future studies that allows easy extensions to more complex systems, such as those containing additional elements.


Introduction

Over the past several decades, atomic-scale simulation has emerged as an indispensable tool for predicting and providing microscopic insights into experimentally observed phenomena in molten salt materials. Numerous scientifically important thermophysical and transport properties of molten salts can be accurately evaluated using molecular dynamics (MD) simulations, in which atomic motion is determined by integrating Newton’s second law of motion. The predictive accuracy of MD simulations is highly dependent on the quality of the underlying potential energy surface (the potential) that determines the forces that act on each atom. Standard physics-based approaches to potentials, such as classical force fields (FFs)1,2,3,4 and quantum mechanics (QM) methods, particularly density functional theory (DFT)5,6, have been employed successfully to model various molten salt systems7,8,9. However, these approaches involve a well-known trade-off between computational cost, accuracy, and generality. Classical FFs, while computationally efficient, often require reparameterization for specific systems or reactions. Conversely, QM-based methods are computationally expensive, limiting their utility for large-scale and long-timescale MD simulations. This trade-off is especially critical for modeling complicated properties, such as viscosities. Thus, MD simulations urgently need a fast, accurate, and broadly applicable reactive potential to enable the reliable prediction of thermophysical salt properties and salt reactions with their environment (e.g., corrosion).

Recently, machine learning interatomic potentials (MLIPs)10,11 have emerged as a promising alternative to overcome these limitations. MLIPs can achieve near-DFT accuracy while maintaining computational costs close to those of classical FFs, enabling MD simulations with \(10^{3}\)–\(10^{4}\) atoms over nanosecond timescales. This capability unlocks unprecedented opportunities for studying complex molten salt systems12. Up to this point, almost all MLIPs for molten salts were tailored to specific chemical compositions, focusing on a narrow range of properties. For example, Attarian et al. developed MLIPs for FLiBe molten salts13,14, accurately predicting properties such as density, heat capacity, and ionic conductivity. Similarly, Lu et al. constructed MLIPs for SrCl2-KCl-MgCl2 melts15, predicting local structures and thermophysical properties. This has led to 38 separate papers since 2021 fitting MLIPs to chloride salts (mainly on unary and binary systems). While effective, these approaches necessitate repeated efforts to construct training datasets and fit MLIPs for each new system, a time-intensive process.

A promising alternative lies in the development of so-called universal potentials16,17,18,19,20,21,22,23, which are trained on datasets encompassing 50 or more chemical species. These potentials offer broad applicability and reduce the need for system-specific reparameterization. However, they often exhibit significant errors in some properties and are generally less accurate than MLIPs designed for specific systems. For instance, M3GNet demonstrates relatively high errors in predicting vibrational properties24.

To address these challenges, we propose an intermediate approach that focuses on a subset of 10–20 chemically similar elements, aiming to achieve near-DFT accuracy while maintaining computational efficiency. Such a strategy reduces the inefficiencies of developing numerous highly specific MLIPs while enabling high accuracy across a substantial composition space. Notably, a single MLIP trained on N elements effectively models all \(2^{N}\) suballoys, offering substantial computational savings. For example, a potential trained on 11 elements inherently describes all \(2^{11} = 2048\) suballoys, significantly simplifying the exploration of complex chemical spaces.
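The subsystem counts quoted for an 11-element potential can be checked with a few lines of Python. This is only an illustrative sketch: the names `N_SALTS` and `subsystem_counts` are ours, and the only inputs are the numbers stated in the text. The figure \(2^{N}\) counts every subset including the empty one, so the number of non-empty chemical combinations is \(2^{N} - 1\).

```python
from math import comb

N_SALTS = 11  # number of end-member chlorides covered by the potential


def subsystem_counts(n):
    """Number of distinct k-component subsystems for k = 1..n."""
    return {k: comb(n, k) for k in range(1, n + 1)}


counts = subsystem_counts(N_SALTS)
total_nonempty = sum(counts.values())  # excludes the empty subset
total_with_empty = 2 ** N_SALTS        # includes the empty subset

print(counts[1], counts[2], counts[3])   # unaries, binaries, ternaries
print(total_nonempty, total_with_empty)
```

Running the sketch gives 11 unaries, 55 binaries, and 165 ternaries, with 2047 non-empty combinations out of 2048 subsets.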

In this work, we developed an efficient workflow to construct an MLIP, referred to as the “SuperSalt” potential, tailored to all liquid-phase compositions of 11-cation chloride melts: LiCl, NaCl, KCl, RbCl, CsCl, MgCl2, CaCl2, SrCl2, BaCl2, ZnCl2, and ZrCl4. The 11-cation chloride salts were selected for their chemical diversity and relevance to molten salt applications. ZnCl2 and ZrCl4 were specifically included for their ability to lower melting points, which benefits thermal and electrochemical performance. This composition enables a broad exploration of composition-property relationships in multicomponent systems. Extensive validation demonstrates that the SuperSalt potential achieves near-DFT accuracy in predicting key properties such as densities, bulk moduli, radial distribution functions, specific heat capacities, and thermal expansion coefficients for multicomponent molten salt systems. Furthermore, the potential exhibits excellent transferability across different chemical compositions, enabling reliable predictions for unexplored systems. The SuperSalt potential also facilitates targeted optimization of material properties within the 11-cation composition space. By leveraging Bayesian optimization (BO)25,26, we identified optimal compositions that meet specific property requirements, showcasing the potential’s utility in accelerating materials discovery. Such discovery is not possible with traditional empirical or ab initio methods and is enabled by our ability to model 11 cations with machine learning-based strategies. The SuperSalt potential offers a robust and efficient platform for simulating and optimizing multicomponent molten salt systems. With further extension, this approach has the potential to provide a foundational MLIP framework for the majority of molten salts of interest, bridging the gap between computational efficiency, accuracy, and general applicability.

This paper is arranged as follows: The results section first outlines the comprehensive workflow developed for generating a robust SuperSalt potential, including details on the training dataset and the clustering framework employed. Secondly, we present the training and testing results, showcasing the accuracy and transferability of the SuperSalt model across various molten salt systems. Then, we evaluate the performance of the SuperSalt model by comparing its predictions of thermophysical properties with ab initio molecular dynamics (AIMD) and experimental data. The last subsection demonstrates how the SuperSalt potential, in combination with Bayesian optimization, can expedite the discovery of molten salt compositions with optimal properties. Finally, the discussion section highlights the implications of this work for the broader molten salts community and potential future directions for expanding the model.

Results

Comprehensive workflow for complex molten salts

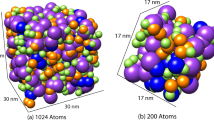

The design of a robust MLIP depends crucially on the choice of training dataset. For molten salt applications, the primary focus is on liquid properties, such as the heat capacity (Cp), thermal expansion, density (ρ), molecular structure, equation of state, etc. For transferability, the MLIP should be able to make good predictions on random compositions across the whole chemical space of the 11-cation chloride melts. To this end, we have developed a comprehensive dataset of atomistic structures and quantum-mechanical reference data for 11-cation chloride melts, as well as an interatomic potential fitted to that database in the higher-order equivariant message passing neural networks framework (as shown in Fig. 1). The chemical space for 11 chlorides consists of \(2^{11} - 1 = 2047\) chemical combinations, including 11 unaries, 55 binaries, 165 ternaries, etc. Constructing a training dataset by enumerating all possible chemical combinations is formidable. However, it is well-known that the physics of salts is largely dominated by relatively simple pairwise electrostatics, so we expected that the fitting of 1- and 2-component systems would provide much of the essential physics. We assumed some many-component interactions would be important and that the MLIP would require some experience with the many-element environments to ensure transferability, so we also included data from 11-cation chloride salts in our training set. We employed the Dirichlet distribution method27 to control and generate the compositions of the 11-component system systematically. This method provides flexibility in sampling different composition spaces while maintaining a fixed total concentration, which is crucial for accurately capturing the complex behavior of the multicomponent molten salt system.
By using this approach, we ensure that each configuration sampled represents a physically realistic and consistent composition, facilitating the exploration of various mixtures across the 11-component phase space. Therefore, our training dataset included only 1-component, 2-component, and 11-component configurations. We chose the nonlinear neural-network multilayer atomic cluster expansion (MACE) model28 for the SuperSalt potential because of its capability to capture higher-order atomic interactions with high accuracy and efficiency. MACE is built on an equivariant message-passing neural network framework, which allows it to handle complex atomic geometries by encoding many-body interactions at each layer of the network. MACE has demonstrated the ability to create a universal foundation model that is applicable across essentially the entire periodic table16,29.
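As an illustration of the Dirichlet sampling of 11-component compositions described above, the following minimal Python sketch draws mole fractions that are positive and sum to one. It assumes a symmetric Dirichlet distribution with a single concentration parameter `alpha`; the value of `alpha`, the function name `sample_composition`, and the use of salt mole fractions as the sampled variable are our assumptions and are not specified in the text.

```python
import random

SALTS = ["LiCl", "NaCl", "KCl", "RbCl", "CsCl", "MgCl2",
         "CaCl2", "SrCl2", "BaCl2", "ZnCl2", "ZrCl4"]


def sample_composition(alpha=1.0, rng=None):
    """Draw one set of mole fractions from a symmetric Dirichlet(alpha).

    A Dirichlet sample is a vector of independent Gamma(alpha, 1) draws
    normalized so that the components sum to one.
    """
    rng = rng or random.Random()
    draws = [rng.gammavariate(alpha, 1.0) for _ in SALTS]
    total = sum(draws)
    return {salt: g / total for salt, g in zip(SALTS, draws)}


rng = random.Random(0)  # fixed seed so the example is reproducible
x = sample_composition(alpha=1.0, rng=rng)
for salt, frac in x.items():
    print(f"{salt:6s} {frac:.3f}")
```

In this sketch, small values of `alpha` favor compositions dominated by a few salts, whereas large values concentrate samples near the equimolar mixture. This is one way to steer which region of composition space is sampled while keeping the total fixed.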

The procedure for obtaining the database and training the model is outlined as follows: 1-component, 2-component, and 11-component compositions are introduced into molecular dynamics (MD) simulations under specific conditions, resulting in the generation of an initial structure database. Subsequently, a clustering framework based on Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN)32,33,34 is utilized to automatically sample uncorrelated learning configurations from the initial datasets by leveraging the underlying cluster structure present in the database. The selected learning configurations are sequentially sampled from these uncorrelated clusters and iteratively trained using the multilayer atomic cluster expansion (MACE) model28 until the desired accuracy is attained. ERMSE and FRMSE represent the root mean square error (RMSE) of energy and force.

The procedure for generating the initial data pool, as illustrated in Fig. 1, follows an NPT “melt-quench” MD approach. This method involves heating a randomly packed structure to an elevated temperature and subsequently quenching it to a lower temperature, creating the initial configuration database. Further details of this procedure are provided in the “Methods” section. Then, we use a clustering framework workflow to automatically sample uncorrelated learning configurations from the initial datasets by exploiting the underlying cluster structure embedded in the dataset30 and partitioning them into “N” uncorrelated clusters31. The learning configurations are sequentially sampled from the uncorrelated clusters and trained using the MACE model until the desired accuracy has been achieved. For the clustering, we use Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN)32,33,34, a density-based hierarchical clustering method. This algorithm has been successfully applied previously to partition and analyze MD trajectories35. The details of the clustering framework workflow and the MD simulations are described in the “Methods” section. Note that the entire “melt-quench” region was mapped to the SuperSalt model with only 2% of configuration space (initial structures).
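The sequential sampling of learning configurations from uncorrelated clusters can be sketched as follows. This is a simplified illustration, not the workflow used in this work: it assumes that cluster labels for each MD frame have already been computed (for example with HDBSCAN, which marks noise points with the label −1), and the function name `sample_from_clusters` and the toy labels are hypothetical.

```python
import random
from collections import defaultdict


def sample_from_clusters(labels, n_per_cluster, already_selected=(), seed=0):
    """Pick up to n_per_cluster new frame indices from every cluster.

    labels[i] is the cluster id of frame i; -1 marks noise points
    (the HDBSCAN convention), which are skipped in this sketch.
    """
    rng = random.Random(seed)
    taken = set(already_selected)
    by_cluster = defaultdict(list)
    for idx, lab in enumerate(labels):
        if lab != -1 and idx not in taken:
            by_cluster[lab].append(idx)
    picked = []
    for lab in sorted(by_cluster):
        members = by_cluster[lab]
        rng.shuffle(members)
        picked.extend(members[:n_per_cluster])
    return picked


# Toy labels for ten frames: three clusters and one noise point.
labels = [0, 0, 1, -1, 1, 2, 2, 2, 0, 1]
batch_1 = sample_from_clusters(labels, n_per_cluster=1)
batch_2 = sample_from_clusters(labels, n_per_cluster=1,
                               already_selected=batch_1)
print(batch_1, batch_2)
```

Each call returns a new batch that covers every cluster once, so successive batches can be added to the training set and the model refitted until the target energy and force errors are reached.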

The final database was obtained by merging the clustering-extracted data of the unary, binary, and 11-cation systems, and each subsystem has a few hundred structures. This database contains around 70,000 structures with a total of ≈7 million atoms. For validation, we held out 10% of these structures from training, selected at random.

Training and testing results

Using the clustering-learned training dataset, we trained a MACE model (see Methods for details on the hyperparameters) using the higher-order equivariant message passing neural networks framework. We refer to this MACE model as the SuperSalt potential, representing the first attempt at constructing a unified MACE model for the multi-component molten salt system.

The parity plots for energy and force for the different datasets affirm the high accuracy of the SuperSalt potential (Fig. 2a–d). Figure 2a, b reports the deviation of the SuperSalt predictions from the DFT results for potential energy and atomic forces on the training and validation sets. We use the root mean square error (RMSE) as a measure of the overall error. Specifically, the RMSE of the total potential energy predictions is as low as 0.5 meV/atom for both datasets, and the RMSEs of the atomic force predictions are 13.7 meV/Å and 16.0 meV/Å, respectively. These very small errors suggest an accuracy close to the DFT level. To further validate the accuracy and check the transferability of the SuperSalt potential, we show its performance on two newly generated testing sets that are unrelated to our training set: the Test_1 set, containing 3300 ternary atomic configurations, and the Test_2 set, containing 800 random multicomponent atomic configurations. The details of the testing data generation are discussed in the Methods section. The testing RMSEs of the SuperSalt potential for the Test_1 dataset are 0.6 meV/atom and 19.6 meV/Å. For the Test_2 dataset, they are 1.3 meV/atom and 24.4 meV/Å. These errors are somewhat higher than those for the training and validation data but still very low, and even lower than the training RMSEs reported in many previous publications for specific melt systems36 (Fig. 2c, d). Furthermore, the comparison demonstrates that the SuperSalt potential, trained on 1-component, 2-component, and 11-component structures, performs exceptionally well for 3-component, 4-component, and higher-order systems, including those in the Test_1 and Test_2 datasets. This highlights the excellent transferability of the SuperSalt potential across the entire chemical space of 11-cation chloride melts.
Interestingly, the per-element force errors show that elements with higher valence states exhibit larger discrepancies. Notably, Zr, with a 4+ valence state, shows the largest force error in all four datasets shown in Fig. 2 (see third column of Fig. 2a–d). This may be due to the more complex chemical environment around higher-valent cations, but further study is needed to confirm this. The errors in atomic forces for the four datasets largely follow a Gaussian distribution and are concentrated in the range from −25 to 25 meV/Å (see the histograms and normal-distribution fits on the right side of Fig. 2a–d). Additionally, the performance of the SuperSalt potential in predicting pressure for the four datasets is shown in Fig. S5, where it demonstrates good accuracy with low RMSE values.
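For readers who wish to reproduce error metrics of this kind, a minimal Python sketch of the energy and force RMSE is given below. The conventions are assumptions on our part: the energy error is normalized per atom before averaging over structures, and the force error is averaged over all Cartesian components. The numerical values in the example are made up purely for illustration.

```python
import math


def energy_rmse_per_atom(e_pred, e_ref, n_atoms):
    """RMSE of per-atom energies; inputs are total energies per structure."""
    sq = [((ep - er) / n) ** 2 for ep, er, n in zip(e_pred, e_ref, n_atoms)]
    return math.sqrt(sum(sq) / len(sq))


def force_rmse(f_pred, f_ref):
    """RMSE over all Cartesian force components.

    f_pred and f_ref are lists of (fx, fy, fz) tuples, one per atom.
    """
    sq = [(p - r) ** 2
          for fp, fr in zip(f_pred, f_ref)
          for p, r in zip(fp, fr)]
    return math.sqrt(sum(sq) / len(sq))


# Illustrative (made-up) numbers: two 100-atom structures, energies in eV.
e_rmse = energy_rmse_per_atom([-100.05, -200.0], [-100.0, -200.1], [100, 100])
# Two atoms, forces in eV/Angstrom.
f_rmse = force_rmse([(0.1, 0.0, 0.0), (0.0, 0.0, 0.0)],
                    [(0.0, 0.0, 0.0), (0.0, 0.1, 0.0)])
print(f"{1000 * e_rmse:.2f} meV/atom, {1000 * f_rmse:.1f} meV/Angstrom")
```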

Parity plots for energy and force, comparing density functional theory (DFT) reference data with SuperSalt potential predictions, are shown for a the training dataset, b the validation dataset, c Test_1 containing the 3300-ternary dataset, and d Test_2 containing the 800-multicomponent dataset. The corresponding force root mean square error (RMSE) per element for each dataset is presented as histograms in the third column. The testing errors in atomic forces for the four databases in the right panels largely follow a Gaussian distribution and are concentrated in the range of −25 to 25 meV/Å.

Given the existence of multiple universal MLIPs, it is important to establish that a multi-element but specialized MLIP tailored for molten salt systems can provide meaningfully better results. To explore this, the foundation model MACE-MP016 was evaluated on the current testing databases, with the DFT-D3 correction included so that its predictions are consistent with the DFT reference data of the testing sets. As shown in Fig. S1, the resulting energy errors for the Test_1 and Test_2 datasets are 55.8 meV/atom and 49.3 meV/atom, respectively, with corresponding force errors of 191.2 meV/Å and 172.3 meV/Å. These values are approximately an order of magnitude larger than those obtained with our SuperSalt potential, demonstrating that the latter has significantly superior accuracy when applied to molten salt systems.

Performance of SuperSalt potential

EOS and RDFs

Next, we evaluate the performance of our SuperSalt potential by testing various liquid properties commonly used to identify potential candidate molten salts. We used random atomic configurations and compared their energy vs. volume curves obtained from the SuperSalt potential and DFT. To do this, we generated random atomic configurations and ran MD simulations at three temperatures (700 K, 1200 K, and 1500 K). We then randomly picked structures from these simulations, applied hydrostatic strains from −10% to 10% in 1% steps, and calculated the energy of each strained structure with both methods. Overall, 66 structures were selected for this test, and the energy vs. volume curves for some of these structures are shown in Fig. 3a. From these curves, we calculated the bulk modulus, the minimum energy, and the equilibrium density for each structure at 0 K. We find that the RMSEs in the predictions of bulk modulus, minimum energy, and equilibrium density between the SuperSalt potential and DFT are 0.23 GPa, 2.4 meV/atom, and \(3 \times 10^{-3}\) g/cm3, respectively. The list of structures and the detailed comparison between the values calculated using DFT and the SuperSalt potential are provided in the supplementary materials. In the supplementary materials, we also make the same comparison between MACE-MP0 and DFT without the D3 correction, so that it is consistent with the MACE-MP0 training data. The MACE-MP0 results show RMSEs of 2.7 GPa, 22 meV/atom, and \(2.7 \times 10^{-2}\) g/cm3, respectively, for bulk modulus, minimum energy, and equilibrium density predictions. Thus, we again find that the SuperSalt potential, which is directly fitted to the molten salt configurations, outperforms MACE-MP0 in calculating key properties.
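To make the extraction of equilibrium volume, minimum energy, and bulk modulus from an energy vs. volume curve concrete, the sketch below fits a simple quadratic in volume and uses \(B_{0} = V_{0}\,(d^{2}E/dV^{2})\) at the minimum. The text does not state which functional form was fitted, so the quadratic is a stand-in chosen for brevity (a Birch-Murnaghan form is a common alternative for strains as large as ±10%). The function name `fit_quadratic_eos` and the synthetic data are our own.

```python
EV_PER_A3_TO_GPA = 160.2176634  # 1 eV/Angstrom^3 expressed in GPa


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic_eos(volumes, energies):
    """Least-squares fit E = a*x^2 + b*x + c with x = V - mean(V).

    Returns (V0, E0, B0) in Angstrom^3, eV, and GPa.
    """
    n = len(volumes)
    vbar = sum(volumes) / n
    xs = [v - vbar for v in volumes]  # centering keeps the fit well conditioned
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(e * x ** k for x, e in zip(xs, energies)) for k in range(3)]
    # Normal equations solved with Cramer's rule.
    A = [[s[4], s[3], s[2]], [s[3], s[2], s[1]], [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    d = det3(A)
    coef = []
    for col in range(3):
        M = [row[:] for row in A]
        for r in range(3):
            M[r][col] = rhs[r]
        coef.append(det3(M) / d)
    a, b, c = coef
    v0 = vbar - b / (2 * a)          # location of the energy minimum
    e0 = c - b * b / (4 * a)         # energy at the minimum
    b0 = v0 * 2 * a * EV_PER_A3_TO_GPA
    return v0, e0, b0


# Synthetic, exactly quadratic data: volumes scaled from -10% to +10% in 1% steps.
vols = [1000.0 * (1 + 0.01 * i) for i in range(-10, 11)]
ens = [-350.0 + 0.5 * 1.0e-4 * (v - 1000.0) ** 2 for v in vols]
v0, e0, b0 = fit_quadratic_eos(vols, ens)
print(f"V0 = {v0:.2f} A^3, E0 = {e0:.3f} eV, B0 = {b0:.2f} GPa")
```

The equilibrium density then follows from the cell mass divided by the fitted equilibrium volume.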

a Equations of state (EOS) at 700 K for 8 different multicomponent salts, calculated using SuperSalt molecular dynamics (SuperSalt-MD), show excellent agreement with ab initio molecular dynamics (AIMD) results. b Radial distribution functions (RDF) of random molten salts, spanning unary, binary, ternary, and 11-component systems, predicted by SuperSalt-MD (shown as colorful solid lines), match excellently with those obtained from AIMD (shown as colorful circles).

We then examine the liquid structures from both our AIMD simulations and SuperSalt molecular dynamics (SuperSalt-MD) simulations. To effectively characterize the average structure of various melts, we computed partial radial distribution functions (RDFs) for all atomic pairs. Using NaCl as an example, we performed both AIMD and SuperSalt-MD simulations under identical conditions in the NVT ensemble (constant number of atoms N, volume V, and temperature T) with T = 1200 K and at equilibrium volume. After a 10 ps equilibration, the average RDFs were computed from MD trajectories over 50 ps for AIMD and 100 ps for SuperSalt-MD. As shown in Fig. 3b, the partial RDFs from the SuperSalt-MD simulations (solid lines) closely overlap with those from AIMD (circles), with both methods showing the same first and second peak positions (\({R}_{1}^{Na-Na}=3.9\) Å; \({R}_{1}^{Na-Cl}=2.7\) Å; \({R}_{1}^{Cl-Cl}=4.0\) Å; \({R}_{2}^{Na-Na}=8.1\) Å; \({R}_{2}^{Na-Cl}=6.2\) Å; \({R}_{2}^{Cl-Cl}=8.0\) Å) and overlapping shoulders after the first peak in both Na-Na and Cl-Cl RDFs. This indicates excellent agreement between SuperSalt-MD and AIMD structures. These results also show good agreement with other MLIP studies conducted exclusively on NaCl melts36. Although the atomic pair interactions are more complex in multi-component melts, both methods still exhibit the same prominent peak positions.

Density, heat capacity, and thermal expansion

We further compare the mass densities of different melts at various temperatures from AIMD and the SuperSalt potential, as these reflect changes in system volume and are closely linked to the local structures. To showcase the versatility and accuracy of the SuperSalt potential across a diverse range of compositions and temperatures, we selected several systems that encompass unary, binary, ternary, and multicomponent molten salts. These systems span a wide range of temperature conditions, including well-characterized salts such as NaCl, eutectic NaCl-MgCl2, 0.58NaCl-0.12CaCl2-0.3ZnCl2, as well as more complex mixtures containing all the relevant elements (Li2Na4Mg3K5Ca9ZnRb3Sr2Zr6Cs2Ba2Cl74). As shown in Fig. 4a, SuperSalt demonstrates excellent agreement with AIMD, with an average deviation of less than 2% across a wide temperature range (900 K–1400 K). It should be noted that since both the SuperSalt potential and AIMD predictions use around 100 atoms, the density deviations are unlikely to be caused by finite-size effects. Compared to experimental measurements37,38,39, as shown in Fig. 4b, the mass densities predicted by SuperSalt show a slight deviation, with an average difference of 5%. We believe that the density deviations observed in SuperSalt-MD simulations compared to experimental measurements may be attributed to the approximations inherent in DFT calculations used for fitting the potential, such as the choice of electronic exchange-correlation functionals and dispersion corrections. Several previous studies40,41,42 have highlighted that dispersion interactions significantly influence the calculated density.

In the left panels, various properties calculated by SuperSalt molecular dynamics (SuperSalt-MD) are compared with ab initio molecular dynamics (AIMD) or experimental results, while in the right panels, relative error deviations are shown as histograms. a SuperSalt potential predictions for 9 molten salt systems at two different temperatures show excellent agreement with AIMD results, with an average deviation of less than 2%. b SuperSalt potential predictions for 6 molten salt systems at different temperatures agree well with experimental results, with an average deviation of less than 5%. c Heat capacities of 9 molten salts were calculated from the enthalpy deviations between two temperatures. A 100-ps-long SuperSalt-MD simulation was performed at each temperature. The largest difference between SuperSalt predictions and AIMD calculations is around 5% for the 0.58NaCl-0.12CaCl2-0.3ZnCl2 system. d The volumetric thermal expansion coefficients for nine molten salt systems, calculated from the volume change deviations between two temperatures, are compared with AIMD results. Error bars in AIMD calculations, corresponding to standard deviations ±property values, are shown in the left panels and gray histograms in the right panels. The “Big” system means an 11-component system, namely, Li2Na4Mg3K5Ca9ZnRb3Sr2Zr6Cs2Ba2Cl74.

The excellent heat transfer performance of molten salts is critical for applications in molten salt reactors (MSR) and concentrated solar power (CSP). Among various properties, heat capacity (Cp) is the most significant factor influencing the heat transfer efficiency of liquid coolants and heat storage media. Figure 4c presents both the AIMD and SuperSalt-predicted average Cp. These values are calculated from \({C}_{p}={\left(\frac{\partial H}{\partial T}\right)}_{p}\), evaluated as the slope of the average enthalpy H between two temperatures. As demonstrated, our SuperSalt predictions for average Cp are generally in good agreement with the AIMD results, with the largest deviation being 4.8% for 0.58NaCl-0.12CaCl2-0.3ZnCl2, which remains within the error margin of the AIMD data. From the equilibrium volumes at two closely spaced temperature values, we can further estimate the volumetric thermal expansion coefficient \({\alpha }_{V}=\frac{1}{V}{\left(\frac{\partial V}{\partial T}\right)}_{p}\). Compared to AIMD results, as shown in Fig. 4d, the thermal expansion coefficients predicted by SuperSalt show a slight deviation, with an average difference of 6.7%.
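The two-temperature estimates of Cp and the volumetric thermal expansion coefficient amount to simple finite differences, sketched below in Python. The choice of the midpoint volume as the reference V, the function names, and all numerical inputs are illustrative assumptions rather than values from this work.

```python
EV_TO_J_PER_MOL = 96485.33212  # 1 eV per particle expressed in J/mol


def heat_capacity(h1, h2, t1, t2):
    """Average C_p between t1 and t2 from the mean enthalpies h1 and h2."""
    return (h2 - h1) / (t2 - t1)


def thermal_expansion(v1, v2, t1, t2):
    """Average volumetric expansion coefficient (1/K), using the midpoint volume."""
    v_mid = 0.5 * (v1 + v2)
    return (v2 - v1) / (t2 - t1) / v_mid


# Made-up inputs for a 100-atom cell: enthalpies in eV, volumes in Angstrom^3.
n_atoms = 100
cp_cell = heat_capacity(-350.0, -347.0, 1000.0, 1100.0)     # eV/K per cell
cp_molar = cp_cell / n_atoms * EV_TO_J_PER_MOL               # J/(mol-atom K)
alpha_v = thermal_expansion(3000.0, 3090.0, 1000.0, 1100.0)  # 1/K
print(f"Cp = {cp_molar:.1f} J/(mol-atom K), alpha_V = {alpha_v:.2e} 1/K")
```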

Additional diffusivity calculations were conducted, with results summarized in Table S1 and Fig. S3, showing a generally good overall correlation between SuperSalt-MD and AIMD.

Expediting the discovery of the desired salt system

With the highly accurate and robust SuperSalt potential, we can now perform molecular dynamics simulations with large supercells on nanosecond timescales, providing an unprecedented opportunity to explore the complex landscape of properties for molten salts. However, calculating complex properties remains highly time-consuming for specific applications, even using an MLIP in molecular dynamics simulations. For example, computing viscosity for a large supercell can take several months, greatly restricting our ability to explore uncharted regions of the melt space with desired properties. Moreover, the 11-component system modeled here spans a vast compositional space (approximately forty-six trillion combinations at a 1 at.% interval), which is impractical to explore exhaustively, even for theoretical calculations. Therefore, an efficient search algorithm is required with a proper balance between exploration and exploitation, especially when a property is difficult to calculate.
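The size of this composition space can be estimated with a stars-and-bars count: the number of ways to distribute 100 increments of 1% among 11 components. The short sketch below assumes this is how the quoted figure was obtained; the function name `n_compositions` is ours.

```python
from math import comb


def n_compositions(n_components, steps):
    """Ways to split `steps` equal increments among n_components (stars and bars)."""
    return comb(steps + n_components - 1, n_components - 1)


n = n_compositions(11, 100)
print(f"{n:.2e} compositions on a 1% grid")
```

The result is of order \(10^{13}\), in line with the tens of trillions of combinations quoted above.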

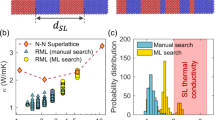

Here, the practical application of meta-adaptive hybrid Bayesian Optimization (BO) for efficiently searching the 11-cation chloride melts space to identify compositions with optimal properties is demonstrated. Three experiments were conducted to evaluate the capabilities of the SuperSalt-BO algorithm within this 11-component molten salt system. These experiments were designed to demonstrate the capacity of the model to efficiently explore and exploit under data-scarce conditions in inverse design tasks of varying complexity. Fundamentally, the effectiveness of exploration and exploitation is dependent on the extrapolation and interpolation capabilities of the surrogate model, respectively. However, when only limited information is available due to data scarcity, simultaneously achieving high accuracy in both becomes very challenging. Therefore, these experiments also aim to assess whether the surrogate model can effectively extract latent information and dynamically adjust its focus between global exploration and local optimization, guided by the optimization objective and the current state of the model.

To thoroughly test the algorithm’s versatility and effectiveness, we selected two target properties, density and mixing enthalpy, that exhibit varying degrees of complexity in their composition-property mappings. Density, as a property, generally allows for relatively straightforward interpretation based on compositional factors, while mixing enthalpy involves more complex underlying physical mechanisms, thus representing a more challenging optimization scenario.

To isolate and evaluate the model’s exploration and exploitation capabilities, the three experimental scenarios were constructed as follows: (1) The first experiment aimed to evaluate the algorithm’s exploration capabilities by searching for the composition with the highest density at 1200 K, starting from an initial dataset containing 10 randomly selected compositions, all exhibiting densities below 2.4 g/cm3; (2) The second experiment posed a more demanding challenge, requiring the identification of a precise quaternary composition with a density within the narrowly defined target range of 2.2000–2.2001 g/cm3 at 1200 K, beginning with 10 randomly chosen compositions with densities below 2.1 g/cm3; and (3) the third experiment further tested the algorithm’s capacity for dynamic balancing of exploration and exploitation under more complex conditions, specifically targeting the property of mixing enthalpy. The experiment was configured with an initial dataset comprising 10 randomly selected quinary compositions, each having mixing enthalpy values greater than −0.031 eV/atom. The goal was to efficiently identify a quinary composition within a highly specific mixing enthalpy window of −0.1001 to −0.1000 eV/atom, representing a significant departure from the initial data range and thus rigorously testing the algorithm’s ability to explore and exploit the compositional space effectively.

In the first test, where the maximum density is unknown, we used a stopping criterion based on both data and SuperSalt-BO convergence. On the data side, the experiment halts if the algorithm fails to find a better solution for two consecutive iterations and the standard deviation of the candidates decreases from one iteration to the next. On the BO side, we stop the experiment if the surrogate model repeatedly predicts that the current solution is the best after evaluating all training data points and all candidates generated during the current iteration.
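The data-side part of this stopping rule can be written as a small predicate. The sketch below is one possible reading of the criterion, not the implementation used here: it interprets "consistently decreases" as a strictly decreasing candidate spread over the most recent iterations, and the function name `should_stop` and the example histories are hypothetical. The BO-side check on the surrogate model is not covered.

```python
def should_stop(best_per_iter, std_per_iter, patience=2):
    """Data-side stopping rule for a maximization run.

    best_per_iter[i]: best value among candidates proposed in iteration i
    std_per_iter[i]:  standard deviation of the candidates in iteration i
    Stops when the last `patience` iterations brought no improvement over
    the best value seen before them AND the candidate spread kept shrinking.
    """
    if len(best_per_iter) <= patience:
        return False
    incumbent = max(best_per_iter[:-patience])
    no_gain = all(b <= incumbent for b in best_per_iter[-patience:])
    recent_std = std_per_iter[-(patience + 1):]
    shrinking = all(later < earlier
                    for earlier, later in zip(recent_std, recent_std[1:]))
    return no_gain and shrinking


# Made-up histories (densities in g/cm3) to exercise the rule.
print(should_stop([2.9, 2.8, 2.85], [0.3, 0.2, 0.1]))  # best found early
print(should_stop([2.5, 2.9, 2.8], [0.3, 0.2, 0.1]))   # still improving
```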

As shown in Supplementary Fig. S7, the BO algorithm quickly found the maximum density of 2.9 g/cm3 in the first iteration, corresponding to pure BaCl2. Although no higher values were found in subsequent rounds, triggering the stopping criteria, we observed that the mean density of selected candidates increased steadily while their standard deviation shrank, indicating the BO’s convergence. The observation that BaCl2 has the highest density among the 11 components can be explained by BaCl2’s relatively high atomic mass and compact ionic structure. This result confirms the effectiveness of our algorithm and strategy in efficiently navigating the molten salt property space.

Unlike the first test, which sought the global maximum, the second test required the algorithm to find a precise target in a quaternary system, thus assessing the algorithm’s precision in exploitation. Starting again with 10 randomly selected points below 2.1 g/cm3, our BO found a composition with a density of 2.20003 g/cm3 after six iterations, corresponding to the Cs8Rb28Sr10Zr5Cl76 composition, as shown in Fig. 5b. From the third iteration onward, our BO identified compositions with densities very close to the 2.2 g/cm3 target, and by the fifth iteration, all selected candidates converged tightly around this value. This demonstrates the algorithm’s capability to exploit the molten salt property space with high precision.
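Compositions reported as supercell formulas, such as the one above, can be sanity-checked for charge neutrality using the formal oxidation states of the 11 cations and chloride. The following sketch is a convenience check we add for illustration; the helper names `parse_formula` and `net_charge` are ours, and the parser handles only simple formulas without parentheses.

```python
import re

# Formal charges for the 11 cations considered here; Cl is taken as -1.
CHARGE = {"Li": 1, "Na": 1, "K": 1, "Rb": 1, "Cs": 1,
          "Mg": 2, "Ca": 2, "Sr": 2, "Ba": 2, "Zn": 2, "Zr": 4, "Cl": -1}


def parse_formula(formula):
    """Return {element: count}; a missing count means 1."""
    counts = {}
    for elem, num in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[elem] = counts.get(elem, 0) + (int(num) if num else 1)
    return counts


def net_charge(formula):
    """Sum of formal charges over all atoms in the formula."""
    return sum(CHARGE[e] * n for e, n in parse_formula(formula).items())


for f in ["Cs8Rb28Sr10Zr5Cl76",
          "Li2Na4Mg3K5Ca9ZnRb3Sr2Zr6Cs2Ba2Cl74",
          "Cs13K10Rb5Na3Mg20Cl71"]:
    print(f, net_charge(f))
```

All three formulas quoted in this work evaluate to a net charge of zero.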

a Illustration of the workflow of SuperSalt-based Bayesian Optimization (SuperSalt-BO) for targeting properties in 11-cation chloride melts. b Target 2 is to identify a quaternary system with a density close to 2.2 g/cm3 at 1200 K in this 11-cation chloride salt system. c Target 3 is to identify a 5-component system with a mixing enthalpy (EMixing) close to −100 meV/atom at 1200 K in this 11-cation chloride salt system. For both targets, the initial datasets, each consisting of 10 random compositions, are also shown for reference. “Inter. N” means the Nth iteration of SuperSalt-BO. In both the initial and iterative steps, each upper bar represents the target property value for the corresponding composition shown by the lower colorful bar. Each lower bar denotes a specific composition, which is iteratively adjusted to approach the desired property value.

Interestingly, in the first iteration, the algorithm selected compositions with much higher densities (around 2.5 g/cm³). This behavior can be attributed to the initial training set, in which no sample had a density near the target. As a result, the algorithm initially prioritized exploration, selecting compositions on the opposite side of the target density to balance the training data distribution. This allowed the surrogate model to map the relationship between the property and the feature space more robustly. In the second iteration, the algorithm adopted a more conservative strategy, lowering the mean of the overall predictions slightly to around 2.4 g/cm³. However, it preserved the exploratory tendencies of some surrogate models, which discovered a composition with a density very close to the target window. This discovery served as a turning point, allowing the algorithm to narrow the search rapidly in subsequent iterations and converge on the target window. This adaptive process shows the algorithm’s ability to balance exploration and exploitation, adjusting its search behavior dynamically in response to new data.

In the third experiment, focusing on the more intricate mapping of mixing enthalpy, the challenge was compounded by the fact that the target range was an extreme outlier relative to the initial dataset (offset by ~11.95 standard deviations). Consequently, the model’s first three iterations primarily emphasized exploration, deliberately widening the boundaries of the training data to systematically approach the distant target. Specifically, the most negative mixing enthalpy identified in each round was −5.57, −3.14, and −3.02 standard deviations away from the current dataset’s mean, respectively, indicating the model’s concerted effort to push beyond its initial knowledge boundary. By the end of these exploratory rounds, the updated training set had sufficiently closed in on the target vicinity, enabling the fourth round to pinpoint a composition (Cs13K10Rb5Na3Mg20Cl71) whose mixing enthalpy was −0.10006 eV/atom, well within the desired range. Although this achievement alone could have concluded the experiment, we proceeded with two additional rounds after observing exploitation tendencies in the CaCsKMgRb and CsKMgNaSr systems. These subsequent runs further validated the model’s adaptive approach, as it successfully identified additional compositions near the target value, thus demonstrating the robust balance of exploration and exploitation and underscoring our BO framework’s capacity to navigate even highly nonlinear and data-scarce property spaces.

Overall, these tests illustrate that our SuperSalt-BO framework, coupled with the SuperSalt-MD calculations, provides a highly effective approach for exploring and optimizing molten salt properties in an extensive compositional space. Whether targeting a global maximum or a specific range, our model demonstrates remarkable efficiency in identifying compositions with desired properties in this 11-component molten salt system.

Discussion

In this work, we have developed a comprehensive workflow for generating an efficient dataset of quantum mechanical reference data tailored to molten salt systems. Given that the behavior of salts is predominantly governed by simple pairwise electrostatics, we anticipated that fitting the 1- and 2-component systems would capture much of the essential physics. However, recognizing the potential importance of many-component interactions and the need for the MLIP to handle complex environments, we also incorporated data from multicomponent systems, including 11-cation chloride salts, to ensure transferability and accuracy across diverse compositions. Therefore, we created a database consisting of representative 1-component, 2-component, and 11-component structures. This database enables the development of a general-purpose interatomic potential, the SuperSalt potential, applicable to the LiCl-NaCl-KCl-RbCl-CsCl-MgCl2-CaCl2-SrCl2-BaCl2-ZnCl2-ZrCl4 salt system across a wide range of compositions.

The SuperSalt potential is specifically developed for molten salt systems, which has guided our primary focus on ionic liquids. Nevertheless, since the training set was constructed using MD simulations initiated from crystalline structures, we hypothesized that SuperSalt may also be applicable to solid phases. To test this, we evaluated energies and forces of relevant solid structures from the Materials Project database, and found that SuperSalt correctly identifies the most stable phases (see Supporting Information). Furthermore, we assessed melting points of four unary salts using the solid-liquid coexistence method, achieving errors within 100 K, with a mean error of 17 K, MAE of 60 K, and RMSE of 77 K. These results demonstrate promising accuracy and suggest SuperSalt’s potential for modeling solid phases and phase transitions, while emphasizing the need for further validation in such applications.

By minimizing a loss function that considers both potential energy and atomic forces, our SuperSalt potential achieves DFT-level accuracy in energy and force predictions while remaining stable in long-timescale and large-length-scale MD simulations under various ensembles. Extensive validation of material properties, including density, thermal expansion coefficient, specific heat, bulk modulus, and radial distribution function across various molten salt systems, shows excellent agreement between SuperSalt-MD and AIMD simulations. Furthermore, property evaluations based on SuperSalt-MD also align well with a wide range of experimental measurements, highlighting the potential of SuperSalt for robust molten salt modeling.

We demonstrate that SuperSalt-MD calculations and a SuperSalt-BO search method can effectively explore the complex landscape of molten salts and find compositions with optimal properties. The results on density show that with just tens of SuperSalt-MD calculations, a composition with the target property values can be identified. This result suggests that other properties accessible through SuperSalt-MD can be designed efficiently, accelerating the identification and optimization of suitable salt systems for various applications.

We view the present database and SuperSalt potential model as a starting point for broader studies within the molten salts community. Our efficient database generation strategy enables straightforward extensions to more complex systems. For example, to train a new model for a 12-component system, one could add one extra unary component, the 11 binary systems it forms with the existing components, and actively learned 12-component configurations to our current database to ensure adequate representation, although determining the right combination for higher-order systems may require careful selection. One could also develop faster, more targeted potentials by fitting to subsets of our training data.

In the future, we plan to expand the potential to include more components, such as impurities from fission or corrosion products and F anions. Additionally, we will upgrade our SuperSalt-BO algorithm to support multi-objective, multi-task, and multi-fidelity searches. Thus, SuperSalt-MD modeling and SuperSalt-BO searching offer a promising approach to mitigating the long-standing trade-off between accuracy and computational cost in molten salt assessments.

Methods

Machine-learning potential fitting

All models trained in this work use the MACE architecture43. MACE is an equivariant message-passing graph tensor network in which each layer encodes many-body information about the atomic geometry. At each layer, many-body messages are formed using a linear combination of a tensor product basis44,45,46. This basis is constructed by taking tensor products of a sum of two-body permutation-invariant polynomials, expanded on a spherical basis. The final output is the energy contribution of each atom to the total potential energy. For a more detailed description of the architecture, see refs. 43,47. All models referred to in this work use two MACE layers, a spherical expansion up to \({l}_{\max }=3\), and 4-body messages in each layer (correlation order 3). Each model uses a 64-channel dimension for tensor decomposition. We apply a radial cutoff of 8 Å and expand the interatomic distances into 10 Bessel functions, multiplied by a smooth polynomial cutoff function, to construct radial features, which are then fed into a fully connected feed-forward neural network with three hidden layers of 64 units and SiLU non-linearities. The irreducible representations of the messages have alternating parity, 128 × 0e + 128 × 1o for our model. The models are trained with the AMSGrad variant of Adam with default parameters \({\beta }_{1}=0.9\), \({\beta }_{2}=0.999\), and \(\epsilon ={10}^{-8}\). We use a learning rate of 0.001 and an exponential moving average (EMA) learning scheduler with a decay factor of 0.995. We employ gradient clipping with a threshold of 100. We trained the model using a single NVIDIA A100 GPU with 80 GB of memory, and the whole process took roughly 96 GPU hours.
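The radial features described above can be illustrated with a short sketch. It assumes the Bessel basis and polynomial envelope in the form commonly used by equivariant message-passing models; the envelope exponent `p = 5` and the function names are assumptions for illustration and are not taken from this work.

```python
import numpy as np

def bessel_basis(r, r_cut=8.0, n_basis=10):
    """Evaluate n_basis Bessel radial functions at distances r > 0 (in Angstrom)."""
    r = np.asarray(r, dtype=float)[..., None]       # shape (..., 1)
    n = np.arange(1, n_basis + 1)                   # shape (n_basis,)
    return np.sqrt(2.0 / r_cut) * np.sin(n * np.pi * r / r_cut) / r

def polynomial_cutoff(r, r_cut=8.0, p=5):
    """Smooth envelope that equals 1 at r = 0 and goes to 0 at r = r_cut.

    The exponent p is an assumed value, not one reported in the text.
    """
    x = np.asarray(r, dtype=float) / r_cut
    env = (1.0
           - 0.5 * (p + 1) * (p + 2) * x**p
           + p * (p + 2) * x**(p + 1)
           - 0.5 * p * (p + 1) * x**(p + 2))
    return np.where(x < 1.0, env, 0.0)

def radial_features(r, r_cut=8.0, n_basis=10, p=5):
    """Bessel functions multiplied by the cutoff envelope, shape (..., n_basis)."""
    return bessel_basis(r, r_cut, n_basis) * polynomial_cutoff(r, r_cut, p)[..., None]

distances = np.array([2.0, 5.0, 8.0])               # example distances in Angstrom
features = radial_features(distances)               # one row of 10 features per distance
```

In this sketch the features vanish at the 8 Å cutoff, so an atom entering or leaving the neighbor list does not cause a jump in the radial input to the network.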

DFT computations

DFT calculations were performed with the VASP 6.4.2 code48 to obtain the energies, forces, and stresses of these atomic configurations. The PAW-PBE potentials49,50,51 used in this study are Li_sv (1s²2s¹), Na_pv (2p⁶3s¹), Mg (3s²), Cl (3s²3p⁵), K_sv (3s²3p⁶4s¹), Ca_sv (3s²3p⁶4s²), Zn (4s²3d¹⁰), Rb_sv (4s²4p⁶5s¹), Sr_sv (4s²4p⁶5s²), Zr_sv (4s²4p⁶4d²5s²), Cs_sv (5s²5p⁶6s¹), and Ba_sv (5s²5p⁶6s²). To correct for dispersion effects, we used the PBE-D3 method52. An energy cutoff of 700 eV and a 1 × 1 × 1 k-point mesh were used to perform the DFT calculations.

AIMD workflows

To compare the performance of the potentials with DFT, we conducted AIMD simulations for several salts at different temperatures under ambient conditions. Each simulation was run for 50 ps with a time step of 1 fs using the PBE-D3 functional in VASP. The simulation cells contained around 100 atoms. From these simulations, we calculated key properties such as the radial distribution function (RDF), density, average specific heat, and average thermal expansion coefficient over the temperature range.
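One common way to obtain temperature-averaged quantities of this kind is to fit the density and the enthalpy linearly against temperature. The sketch below assumes that approach, with α = −(1/ρ)(∂ρ/∂T) and c_p = ∂H/∂T at constant pressure; it is not necessarily the procedure used in this work, and the numbers are synthetic placeholders rather than simulation results.

```python
import numpy as np

def average_thermal_expansion(temperatures, densities):
    """Average volumetric thermal expansion, -(1/rho) * d(rho)/dT, from a linear fit."""
    slope, _intercept = np.polyfit(temperatures, densities, 1)
    return -slope / np.mean(densities)

def average_specific_heat(temperatures, enthalpies):
    """Average constant-pressure specific heat, dH/dT, from a linear fit."""
    slope, _intercept = np.polyfit(temperatures, enthalpies, 1)
    return slope

# Synthetic placeholder data (NOT results from the paper), chosen to be exactly linear.
T = np.array([1000.0, 1100.0, 1200.0, 1300.0])      # K
rho = 1.9 - 5.0e-4 * (T - 1000.0)                   # g/cm^3
H = 10.0 + 1.0e-3 * (T - 1000.0)                    # enthalpy per unit mass, arbitrary units

alpha = average_thermal_expansion(T, rho)           # 1/K
cp = average_specific_heat(T, H)                    # enthalpy units per K
```

Normalizing by the mean density gives a single coefficient for the whole temperature window, which matches the "average over the temperature range" wording but hides any curvature in ρ(T).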

MD workflows

The atomic configurations used for training were generated using MD simulations with the MACE-MP0 universal potential and the LAMMPS package (23 Jun 2022)53. Such a universal potential, which is fitted on crystalline systems, may not be accurate enough for predicting the thermophysical properties of molten systems (we found significant errors when comparing its predictions with equivalent AIMD results; see the supplementary materials), but it is very useful for generating raw data (atomic configurations for which the energies and forces are yet to be calculated by DFT). We ran separate MD simulations for unary, binary, and 11-cation systems in the temperature range 0 K < T < 1600 K and the pressure range 0 GPa < P < 1 GPa, each for 0.5 ns. The MD simulation for each unary system started from its crystalline structure with a supercell of around 100 atoms. For each binary system, there are infinitely many possible compositions AxB(1−x), where A and B are different unary salts. In previous work54, we showed that including only data from the unaries (A and B salts) and from binaries at x = 0.33 and 0.67 can provide enough data diversity to train a compositionally transferable potential across the AxB(1−x) system. In this work, we included binary data at three compositions, x = 0.25, 0.50, and 0.75, to add extra diversity to the training data. As there are 55 possible binary combinations for an 11-cation set, 55 × 3 = 165 MD simulations were run for binary systems overall. The initial configuration for each binary system was generated randomly using the PACKMOL code (v21.0.2)55, and each configuration had around 100 atoms. We also ran MD simulations of 40 different compositions of the 11-cation salt to provide multispecies data for our potential. The initial configurations for these 11-cation systems were generated using PACKMOL, and each system contained around 200 atoms.
We employed the Dirichlet distribution method27 to generate the compositions of the 11-component system systematically. The Dirichlet distribution, a multivariate generalization of the Beta distribution, allows for the random generation of non-negative fractions that sum to one, ensuring a proper representation of the relative proportions of each component in the system. Overall, more than 20,000,000 raw atomic configurations were generated. Using a clustering algorithm based on the HDBSCAN method, we collected around 70,000 configurations for training the potentials (roughly 1/3 unaries, 1/3 binaries, and 1/3 11-cation systems). A validation set of around 6000 configurations was also collected for use during the training of the potential. To test the potential, we generated two separate testing sets. The Test_1 set included configurations from ternary salts. For an 11-cation set, there are 165 possible ternary systems. We used only the A0.33B0.33C0.34 composition for each ternary system (A, B, and C are different unary salts, e.g., (NaCl)0.33(ZnCl2)0.33(RbCl)0.34), and at each composition we used 20 configurations (3300 configurations in Test_1 overall). The Test_2 set included 800 randomly generated multicomponent salts (from binary systems to 11-cation systems) with random compositions. To collect the data for each of these testing sets, the initial configurations were generated by PACKMOL, and we ran an MD simulation similar to that used for generating the training data. Finally, we randomly picked atomic configurations from the MD trajectory and calculated their energies and forces using DFT.
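The counts quoted above and the Dirichlet sampling step can be reproduced with a minimal sketch. The concentration parameter `alpha = 1.0` (uniform over the composition simplex) and the random seed are assumptions for illustration; the values used in this work are not stated here.

```python
from itertools import combinations

import numpy as np

SALTS = ["LiCl", "NaCl", "KCl", "RbCl", "CsCl", "MgCl2",
         "CaCl2", "SrCl2", "BaCl2", "ZnCl2", "ZrCl4"]

# All unordered pairs and triples of the 11 unary salts.
binaries = list(combinations(SALTS, 2))
ternaries = list(combinations(SALTS, 3))

def sample_compositions(n_samples, alpha=1.0, seed=0):
    """Draw mole-fraction vectors over the 11 salts from a symmetric Dirichlet.

    alpha and seed are assumed values, chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    return rng.dirichlet(alpha * np.ones(len(SALTS)), size=n_samples)

fractions = sample_compositions(40)   # 40 compositions of the 11-cation salt
```

Each row of `fractions` is non-negative and sums to one. A smaller `alpha` would push samples toward the corners of the simplex (salt-rich in one component), while a larger `alpha` would concentrate them near the equimolar mixture.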

BO workflows

In our application of BO to the problem, we explore the complete composition search space, which includes LiCl, NaCl, KCl, RbCl, CsCl, MgCl2, CaCl2, SrCl2, BaCl2, ZnCl2, and ZrCl4. Each component’s concentration can vary from 0 to 100% with an interval of 1%, resulting in a vast search space of 46,897,636,623,981 unique compositions.
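The size of this search space follows from a stars-and-bars count: distributing 100 one-percent units among 11 components gives C(110, 10) compositions. The sketch below checks the figure above and the 30,045,015 candidates of the 5% grid quoted later in this section; the function name is hypothetical.

```python
from math import comb

def n_compositions(n_components, step_percent):
    """Number of compositions whose percentages are multiples of step_percent and sum to 100."""
    if 100 % step_percent != 0:
        raise ValueError("step_percent must divide 100")
    n_steps = 100 // step_percent
    # Stars and bars: place n_steps units into n_components bins.
    return comb(n_steps + n_components - 1, n_components - 1)

full_grid = n_compositions(11, 1)     # 1% interval
coarse_grid = n_compositions(11, 5)   # 5% interval
```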

However, three key challenges arise from the inherently high-dimensional search space and the complex, uneven composition-property landscape. First, how can we construct a better-conditioned and more informative composition-property mapping to mitigate the non-convexity and local discontinuities frequently observed in multi-component material systems? In such systems, even minor perturbations in the concentration of certain components, especially those involving elements with strong electronic, ionic, or structural influences, can induce abrupt changes in material properties. This leads to sharp local gradients or even non-smooth transitions in the property landscape, making the mapping highly nonlinear and difficult to learn. Second, how can we ensure reliable model extrapolation in regions of sparse data coverage, given the vastness of the compositional design space relative to the limited number of evaluations? Third, how can the sampling strategy be adapted dynamically to balance global exploration and local exploitation, thereby enabling efficient navigation through the high-dimensional, anisotropic, and often sparsely informative property landscape?

To address the first challenge and better capture the intricate relationships among components, we employ Magpie descriptors56, which provide a rich array of fundamental chemical attributes (e.g., atomic radius, electronegativity, formation energy) along with composition-dependent statistical properties. By embedding each composition in a 146-dimensional descriptor space, we transform the originally non-convex “composition-property” mapping into a more continuous and physically grounded “feature-property” representation. This expansion helps mitigate abrupt property transitions where small compositional changes can trigger steep gradients, and yields a smoother view of chemical trends even for rare elements or unconventional combinations.

However, simply projecting compositions into a higher-dimensional space does not inherently alleviate data sparsity. Instead, it requires the surrogate model to possess robust, adaptive mechanisms for distinguishing which features are most relevant to each target property. Different properties may emphasize different subsets of descriptors, so the model must weigh them dynamically to capture complex behaviors accurately. By combining these physically meaningful features with an adaptive feature-importance strategy, we retain the advantages of a richer representation while ensuring that computational resources focus on the most informative dimensions. This ultimately leads to more reliable predictions, more effective exploration, and a stronger capacity for local refinement across a wide range of materials design objectives.

To address the second challenge and to meet the model requirements imposed by the expanded and heterogeneous feature space described above, we designed a custom ensemble surrogate model that integrates bootstrapping and stacking strategies57. This architecture enhances robustness against data sparsity by aggregating diverse learners trained on different data subsets, while simultaneously enabling adaptive weighting across models to better capture complex, nonlinear trends in the high-dimensional, descriptor-based input space. The ensemble framework includes algorithms such as Lasso58, Ridge59, ElasticNet60, DecisionTreeRegressor61, RandomForest62, SVR63, MLPRegressor64, GradientBoostingRegressor65, ExtraTreesRegressor66, and XGBoost67 as the base learners. The motivation for using such a diverse set of base learners is twofold. First, the ensemble model blends the strengths of the different base learners, which gives strong predictive power and makes the prediction more robust. Second, each base learner also serves as a sampler that focuses on different data characteristics. Combined with our BO framework’s model inference68, this promotes diversity in the sampling process, helping to explore various data trends and avoiding bias toward any single model’s predictions.
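The bootstrap part of such an ensemble can be sketched in a few lines. This is a simplified stand-in, not the SuperSalt-BO implementation: the base learners are three closed-form ridge regressors instead of the ten algorithms listed above, the stacking step is omitted, and the weights come only from inverse out-of-bag errors. All function names and the synthetic data are hypothetical.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Closed-form ridge regression; the appended intercept column is also regularized."""
    A = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ y)

def predict_ridge(w, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ w

def fit_bootstrap_ensemble(X, y, lams=(1e-3, 1e-1, 10.0), seed=0):
    """Train one learner per regularization strength on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    n = len(X)
    models, inv_errors = [], []
    for lam in lams:
        idx = rng.integers(0, n, size=n)            # sample rows with replacement
        oob = np.setdiff1d(np.arange(n), idx)       # rows never drawn: out-of-bag set
        w = fit_ridge(X[idx], y[idx], lam)
        held_out = oob if len(oob) > 0 else idx     # fall back if no row is out-of-bag
        mse = np.mean((predict_ridge(w, X[held_out]) - y[held_out]) ** 2)
        models.append(w)
        inv_errors.append(1.0 / (mse + 1e-12))
    weights = np.array(inv_errors) / np.sum(inv_errors)
    return models, weights

def ensemble_predict(models, weights, X):
    """Weighted average of the base-learner predictions."""
    preds = np.stack([predict_ridge(w, X) for w in models])   # (n_models, n_points)
    return weights @ preds

# Synthetic placeholder data, only to exercise the functions.
rng = np.random.default_rng(1)
X_demo = rng.random((30, 4))
y_demo = X_demo @ np.array([1.0, -2.0, 0.5, 3.0])
models, weights = fit_bootstrap_ensemble(X_demo, y_demo)
y_hat = ensemble_predict(models, weights, X_demo)
```

Learners that generalize poorly to the rows they did not see receive small weights, which is the role the out-of-bag scores play in the weighting described in the text.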

As for the last challenge, even with a pre-trained surrogate model, it is computationally infeasible to evaluate all candidate points across the vast search space. Furthermore, traditional sampling techniques, such as Markov Chain Monte Carlo (MCMC), particle swarm optimization (PSO), or simulated annealing, are prone to getting trapped in local optima because of the sparse initial sampling. To overcome these limitations, we implemented a hierarchical sampling strategy. First, the model predicts over the search space discretized at a 5% interval, which gives a candidate set of 30,045,015 points. From this pool, the top 5000 candidates are selected via the acquisition function. For each of these, a local search is performed using PSO within a ±3% perturbation range. During this process, each base learner serves as the objective function for the PSO, so that the search reflects the evaluations of the different algorithms. Ultimately, for each center point, 10 finely tuned neighboring points are selected, resulting in 5000 × 10 new candidate points for each algorithm. From these locally optimized neighborhoods, we select the 10 final compositions with the highest acquisition scores.
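The coarse-to-fine idea can be illustrated on a toy problem. The sketch below makes several simplifying assumptions: it uses 3 components instead of 11, replaces the PSO local search with plain random perturbations, and scores candidates with a hypothetical toy function (negative distance to a made-up composition) rather than an acquisition function built on a surrogate model.

```python
import numpy as np

def coarse_grid(n_components, step):
    """All integer-percent compositions on a grid of `step` percent that sum to 100."""
    def rec(remaining, k):
        if k == 1:
            yield (remaining,)
            return
        for v in range(0, remaining + 1, step):
            for rest in rec(remaining - v, k - 1):
                yield (v,) + rest
    return np.array(list(rec(100, n_components)))

def local_candidates(center, radius, n_trials, rng):
    """The center plus random integer perturbations within +/- radius that keep the sum at 100."""
    out = [center]
    for _ in range(n_trials):
        delta = rng.integers(-radius, radius + 1, size=len(center))
        delta[-1] -= delta.sum()                    # re-balance so the perturbation sums to zero
        cand = center + delta
        if cand.min() >= 0 and abs(delta[-1]) <= radius:
            out.append(cand)
    return np.array(out)

def coarse_to_fine(score, n_components=3, step=5, top_k=5,
                   radius=3, n_trials=200, n_final=10, seed=0):
    rng = np.random.default_rng(seed)
    grid = coarse_grid(n_components, step)
    top = grid[np.argsort(score(grid))[::-1][:top_k]]          # best coarse points
    pool = np.vstack([local_candidates(c, radius, n_trials, rng) for c in top])
    pool = np.unique(pool, axis=0)                             # drop duplicate candidates
    return pool[np.argsort(score(pool))[::-1][:n_final]]       # best refined points

# Hypothetical toy objective: closeness to a made-up composition (in percent).
toy_target = np.array([37, 41, 22])
toy_score = lambda x: -np.abs(x - toy_target).sum(axis=1)

grid = coarse_grid(3, 5)
selected = coarse_to_fine(toy_score)
```

Because each neighborhood includes its center, the refined pool can never score worse than the coarse grid, and the expensive fine-grained search is confined to a few promising regions instead of the full 1% grid.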

To summarize, our SuperSalt-BO workflow begins by generating an initial training set through random sampling and a candidate set with a 5% interval, followed by feature transformation using Magpie descriptors. In each iteration, an ensemble of surrogate models is trained via bootstrap sampling and weighted based on a hybrid of out-of-bag scores and stacking. A coarse-to-fine acquisition strategy is employed: candidate compositions are first filtered using a weighted UCB acquisition function, then refined through localized optimization using PSO techniques. The top-ranked candidates are evaluated, incorporated into the training set, and the process repeats until convergence. The detailed algorithmic procedure and experimental settings of SuperSalt-BO, including ensemble construction, acquisition strategy, and optimization workflow, are provided in the pseudo code in Supplementary Fig. S6.
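A weighted upper-confidence-bound (UCB) score of the kind mentioned above can be written as the ensemble mean plus a multiple of the ensemble spread. The sketch below is an assumed form for illustration: the exploration factor `kappa = 2.0`, the example predictions, and the weights are hypothetical, and the exact acquisition function of SuperSalt-BO is the one given in the Supplementary pseudo code.

```python
import numpy as np

def weighted_ucb(preds, weights, kappa=2.0):
    """UCB score per candidate from base-learner predictions.

    preds   : array of shape (n_models, n_candidates)
    weights : one non-negative weight per model (normalized internally)
    kappa   : assumed exploration factor multiplying the ensemble spread
    """
    preds = np.asarray(preds, dtype=float)
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    mean = weights @ preds                               # weighted ensemble mean
    std = np.sqrt(weights @ (preds - mean) ** 2)         # weighted ensemble spread
    return mean + kappa * std

# Hypothetical predictions of three learners for two candidate compositions.
example_preds = [[2.0, 2.4],
                 [2.2, 2.4],
                 [2.4, 2.4]]
example_weights = [0.25, 0.5, 0.25]

scores = weighted_ucb(example_preds, example_weights)
best = int(np.argmax(scores))
```

In this example the first candidate has the lower mean (2.2 versus 2.4) but is still ranked first, because the learners disagree about it. That disagreement term is what drives exploration; for a target-seeking search, the same score could be applied to a closeness measure instead of the raw property.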

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Source data are provided with this paper, deposited in the Zenodo repository (https://doi.org/10.5281/zenodo.15734854)71. The Source Data repository contains all numerical data used to generate the main figures, as well as the Python plotting scripts. These scripts are necessary because we visualize model performance (e.g., parity plots for energy and forces) by directly reading large datasets (e.g., .xyz or .npy files) that contain training and test predictions. The training and test datasets in extended .xyz format, potential parameter files, and the trained SuperSalt model have been deposited in the Zenodo repository (https://doi.org/10.5281/zenodo.15734798)72.

Code availability

The source code for SuperSalt-BO is available at the Zenodo repository (https://doi.org/10.5281/zenodo.15734958)73 and the GitHub repository (https://github.com/darmstadtshenchen/SuperSalt.git).

References

Fumi, F. & Tosi, M. Ionic sizes and born repulsive parameters in the NaCl-type alkali halides-I: the Huggins-Mayer and Pauling forms. J. Phys. Chem. Solids 25, 31–43 (1964).

Sangster, M. & Dixon, M. Interionic potentials in alkali halides and their use in simulations of the molten salts. Adv. Phys. 25, 247–342 (1976).

Aguado, A., Bernasconi, L., Jahn, S. & Madden, P. A. Multipoles and interaction potentials in ionic materials from planewave-DFT calculations. Faraday Discuss. 124, 171–184 (2003).

Salanne, M. et al. Including many-body effects in models for ionic liquids. Theor. Chem. Acc. 131, 1–16 (2012).

Hohenberg, P. & Kohn, W. Inhomogeneous electron gas. Phys. Rev. 136, 864 (1964).

Kohn, W. & Sham, L. J. Self-consistent equations including exchange and correlation effects. Phys. Rev. 140, 1133 (1965).

Bengtson, A., Nam, H. O., Saha, S., Sakidja, R. & Morgan, D. First-principles molecular dynamics modeling of the LiCl–KCl molten salt system. Comput. Mater. Sci. 83, 362–370 (2014).

Nam, H. O. & Morgan, D. Redox condition in molten salts and solute behavior: a first-principles molecular dynamics study. J. Nucl. Mater. 465, 224–235 (2015).

Li, X. et al. Dynamic fluctuation of U3+ coordination structure in the molten LiCl-KCl eutectic via first principles molecular dynamics simulations. J. Phys. Chem. A 121, 571–578 (2017).

Behler, J. & Parrinello, M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Jacobs, R. et al. A practical guide to machine learning interatomic potentials–Status and future. Curr. Opin. Solid State Mater. Sci. 35, 101214 (2025).

Lam, S. T., Li, Q.-J., Ballinger, R., Forsberg, C. & Li, J. Modeling LiF and FLiBe molten salts with robust neural network interatomic potential. ACS Appl. Mater. Interfaces 13, 24582–24592 (2021).

Attarian, S., Morgan, D. & Szlufarska, I. Studies of Ni-Cr complexation in FLiBe molten salt using machine learning interatomic potentials. J. Mol. Liq. 400, 124521 (2024).

Attarian, S., Morgan, D. & Szlufarska, I. Thermophysical properties of FLiBe using moment tensor potentials. J. Mol. Liq. 368, 120803 (2022).

Zhao, J., Feng, T. & Lu, G. Deep learning potential assisted prediction of local structure and thermophysical properties of the SrCl2-KCl-MgCl2 melt. J. Chem. Theory Comput. 20, 7611–7623 (2024).

Batatia, I. et al. A foundation model for atomistic materials chemistry. arXiv preprint: https://doi.org/10.48550/arXiv.2401.00096 (2023).

Chen, C. & Ong, S. P. A universal graph deep learning interatomic potential for the periodic table. Nat. Comput. Sci. 2, 718–728 (2022).

Choudhary, K. et al. Unified graph neural network force-field for the periodic table: solid state applications. Digital Discov. 2, 346–355 (2023).

Takamoto, S. et al. Towards universal neural network potential for material discovery applicable to arbitrary combination of 45 elements. Nat. Commun. 13, 2991 (2022).

Xie, F., Lu, T., Meng, S. & Liu, M. Gptff: a high-accuracy out-of-the-box universal AI force field for arbitrary inorganic materials. Sci. Bull. 69, 3525–3532 (2024).

Merchant, A. et al. Scaling deep learning for materials discovery. Nature 624, 80–85 (2023).

Park, Y., Kim, J., Hwang, S. & Han, S. Scalable parallel algorithm for graph neural network interatomic potentials in molecular dynamics simulations. J. Chem. Theory Comput. 20, 4857–4868 (2024).

Deng, B. et al. CHGNet as a pretrained universal neural network potential for charge-informed atomistic modelling. Nat. Mach. Intell. 5, 1031–1041 (2023).

Yu, H., Giantomassi, M., Materzanini, G., Wang, J. & Rignanese, G.-M. Systematic assessment of various universal machine-learning interatomic potentials. MGE Adv. 58, e58 (2024).

Frazier, P.I. & Wang, J. Bayesian optimization for materials design. Inform. Sci. Mater. Discov. Desig. 225, 45–75 (2016).

Lookman, T., Balachandran, P. V., Xue, D. & Yuan, R. Active learning in materials science with emphasis on adaptive sampling using uncertainties for targeted design. Npj Comput. Mater. 5, 21 (2019).

Ng, K.W., Tian, G.-L. & Tang, M.-L. Dirichlet and related distributions: theory, methods and applications (Wiley, 2011).

Bochkarev, A., Lysogorskiy, Y., Ortner, C., Csányi, G. & Drautz, R. Multilayer atomic cluster expansion for semilocal interactions. Phys. Rev. Res. 4, 042019 (2022).

Kovács, D. P. et al. Mace-off: Short-range transferable machine learning force fields for organic molecules. J. Am. Chem. Soc. 147, 17598–17611 (2025).

Dasgupta, S. & Hsu, D. Hierarchical sampling for active learning. In Proc. 25th International Conference on Machine Learning 208–215 (Association for Computing Machinery, New York, NY, 2008).

Dasgupta, S. Two faces of active learning. Theor. Comput. Sci. 412, 1767–1781 (2011).

Campello, R.J., Moulavi, D. & Sander, J. Density-based clustering based on hierarchical density estimates. In: Pacific-Asia Conference on Knowledge Discovery and Data Mining. 160–172 (Springer, 2013).

McInnes, L. et al. hdbscan: hierarchical density based clustering. J. Open Source Softw. 2, 205 (2017).

McInnes, L. & Healy, J. Accelerated hierarchical density based clustering. In 2017 IEEE International Conference on Data Mining Workshops (ICDMW). 33–42 (IEEE, 2017).

Melvin, R. L. et al. Uncovering large-scale conformational change in molecular dynamics without prior knowledge. J. Chem. Theory Comput. 12, 6130–6146 (2016).

Li, Q.-J. et al. Development of robust neural-network interatomic potential for molten salt. Cell Rep. Phys. Sci. 2, 100359 (2021).

Haynes, W. Density of molten elements and representative salts. In CRC Handbook of Chemistry and Physics (CRC Press, 2012).

Wang, J., Wu, J., Lu, G. & Yu, J. Molecular dynamics study of the transport properties and local structures of molten alkali metal chlorides. Part III. Four binary systems LiCl-RbCl, LiCl-CsCl, NaCl-RbCl and NaCl-CsCl. J. Mol. Liq. 238, 236–247 (2017).

Li, Y. et al. Survey and evaluation of equations for thermophysical properties of binary/ternary eutectic salts from NaCl, KCl, MgCl2, CaCl2, ZnCl2 for heat transfer and thermal storage fluids in CSP. Sol. Energy 152, 57–79 (2017).

Nam, H. et al. First-principles molecular dynamics modeling of the molten fluoride salt with Cr solute. J. Nucl. Mater. 449, 148–157 (2014).

Liu, M., Masset, P. & Gray-Weale, A. Solubility of sodium in sodium chloride: a density functional theory molecular dynamics study. J. Electrochem. Soc. 161, 3042 (2014).

Andersson, D. & Beeler, B. W. Ab initio molecular dynamics (AIMD) simulations of NaCl, UCl3 and NaCl-UCl3 molten salts. J. Nucl. Mater. 568, 153836 (2022).

Batatia, I., Kovacs, D. P., Simm, G., Ortner, C. & Csányi, G. Mace: Higher order equivariant message passing neural networks for fast and accurate force fields. Adv. Neural Inf. Process. Syst. 35, 11423–11436 (2022).

Witt, W.C. et al. ACE potentials: a Julia implementation of the atomic cluster expansion. J. Chem. Phys. 159, 164101 (2023).

Batatia, I. et al. The design space of E(3)-equivariant atom-centred interatomic potentials. Nat. Mach. Intell. 7, 56 (2022).

Darby, J. P. et al. Tensor-reduced atomic density representations. Phys. Rev. Lett. 131, 028001 (2023).

Magdău, I.-B. et al. Machine learning force fields for molecular liquids: Ethylene Carbonate/Ethyl Methyl Carbonate binary solvent. Npj Comput. Mater. 9, 146 (2023).

Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558 (1993).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865 (1996).

Blöchl, P. E. Projector augmented-wave method. Phys. Rev. B 50, 17953 (1994).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758 (1999).

Grimme, S., Antony, J., Ehrlich, S. & Krieg, H. A consistent and accurate ab initio parametrization of density functional dispersion correction (DFT-D) for the 94 elements H-Pu. J. Chem. Phys. 132, 154104 (2010).

Thompson, A. P. et al. LAMMPS-a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022).

Attarian, S., Shen, C., Morgan, D. & Szlufarska, I. Best practices for fitting machine learning interatomic potentials for molten salts: a case study using NaCl-MgCl2. Comput. Mater. Sci. 246, 113409 (2025).

Martínez, L., Andrade, R., Birgin, E. G. & Martínez, J. M. PACKMOL: a package for building initial configurations for molecular dynamics simulations. J. Comput. Chem. 30, 2157–2164 (2009).

Ward, L., Agrawal, A., Choudhary, A. & Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. Npj Comput. Mater. 2, 1–7 (2016).

Ribeiro, M. H. D. M., Silva, R. G., Moreno, S. R., Mariani, V. C. & Santos Coelho, L. Efficient bootstrap stacking ensemble learning model applied to wind power generation forecasting. Int. J. Electr. Power Energy Syst. 136, 107712 (2022).

Ranstam, J. & Cook, J. A. LASSO regression. J. Br. Surg. 105, 1348–1348 (2018).

McDonald, G. C. Ridge regression. Wiley Interdiscip. Rev. Comput. Stat. 1, 93–100 (2009).

De Mol, C., De Vito, E. & Rosasco, L. Elastic-net regularization in learning theory. J. Complex. 25, 201–230 (2009).

Luo, H., Cheng, F., Yu, H. & Yi, Y. SDTR: soft decision tree regressor for tabular data. IEEE Access 9, 55999–56011 (2021).

Rigatti, S. J. Random forest. J. Insur. Med. 47, 31–39 (2017).

Awad, M. & Khanna, R. Support vector regression. In Efficient learning machines: Theories, concepts, and applications for engineers and system designers, 67–80 (Apress, 2015).

Dutt, M. I. & Saadeh, W. A multilayer perceptron (MLP) regressor network for monitoring the depth of anesthesia. In 2022 20th IEEE Interregional NEWCAS Conference (NEWCAS), 251–255 (IEEE, 2022).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

Sudhamathi, T. & Perumal, K. Ensemble regression based extra tree regressor for hybrid crop yield prediction system. Meas.: Sens. 35, 101277 (2024).

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. In Proc. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 785–794 (ACM, 2016).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Brown, S. T. et al. Bridges-2: a platform for rapidly evolving and data-intensive research. In Practice and Experience in Advanced Research Computing, PEARC’21 (Association for Computing Machinery, 2021).

Boerner, T. J., Deems, S., Furlani, T. R., Knuth, S. L. & Towns, J. ACCESS: Advancing Innovation: NSF’s Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support. In Practice and Experience in Advanced Research Computing, PEARC ’23, 173–176 (Association for Computing Machinery, New York, NY, USA, 2023).

Shen, C. Source Data for SuperSalt Potential. Zenodo https://doi.org/10.5281/zenodo.15734854 (2025).

Shen, C. SuperSalt model: Equivariant Neural Network Force Fields for Multicomponent Molten Salts System. Zenodo https://doi.org/10.5281/zenodo.15734798 (2025).

Shen, C. SuperSalt BO for SuperSalt Potential. Zenodo https://doi.org/10.5281/zenodo.15734958 (2025).

Acknowledgements

We express our sincere gratitude to Dr. Jicheng Guo and Dr. Ganesh Sivaraman from Argonne National Laboratory for their valuable discussions and technical assistance. We gratefully acknowledge support from the Department of Energy (DOE) Office of Nuclear Energy’s (NE) Nuclear Energy University Programs (NEUP) under award #21-24582. This work used the Bridges-2 cluster69 at the Pittsburgh Supercomputing Center (PSC) and the Stampede3 cluster at the Texas Advanced Computing Center (TACC) through allocations MAT240071 and MAT240075 from the Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support (ACCESS)70 program, which is supported by National Science Foundation grants #2138259, #2138286, #2138307, #2137603, and #2138296. We also used the computational resources provided by the Center for High Throughput Computing (CHTC) at the University of Wisconsin-Madison. The authors gratefully acknowledge the computing time provided to them on the high-performance computer Lichtenberg at the NHR Centers NHR4CES at TU Darmstadt.

Author information

Contributions

C.S. and S.A. performed all computations and analysis, with guidance from D.M., I.S., HB.Z., and M.A. SuperSalt-BO was designed by YX.Z., C.S., and HB.Z. All authors contributed substantially to the design of the research and the interpretation of the results. C.S. and S.A. wrote the paper with input from all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Takuji Oda and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shen, C., Attarian, S., Zhang, Y. et al. SuperSalt: equivariant neural network force fields for multicomponent molten salts system. Nat Commun 16, 7280 (2025). https://doi.org/10.1038/s41467-025-62450-1

DOI: https://doi.org/10.1038/s41467-025-62450-1