Abstract

Mental health is a major global concern, yet findings remain fragmented across studies and databases, hindering integrative understanding and clinical translation. To address this gap, we present the Mental Disorders Knowledge Graph (MDKG)—a large-scale, contextualized knowledge graph built using large language models to unify evidence from biomedical literature and curated databases. MDKG comprises over 10 million relations, including nearly 1 million novel associations absent from existing resources. By structurally encoding contextual features such as conditionality, demographic factors, and co-occurring clinical attributes, the graph enables more nuanced interpretation and rapid expert validation, reducing evaluation time by up to 70%. Applied to predictive modeling in the UK Biobank, MDKG-enhanced representations yielded significant gains in predictive performance across multiple mental disorders. As a scalable and semantically enriched resource, MDKG offers a powerful foundation for accelerating psychiatric research and enabling interpretable, data-driven clinical insights.

Similar content being viewed by others

Introduction

Mental health disorders are a leading cause of disability globally, incurring trillions of dollars in economic costs1. Despite advancements in research, understanding the pathophysiological pathways of mental disorders and identifying reliable diagnostic and therapeutic biomarkers remains challenging1,2,3,4,5,6. The research community has generated a vast and rapidly expanding corpus of information on mental disorders, including journal articles, databases, and trial reports. The sheer magnitude and growth rate of these resources make it difficult for individual researchers to fully gather, organize, and stay current with all available information. Efficient utilization of these accumulating resources can help accelerate mental disorders research, offering meaningful benefits to a broad segment of the population.

Knowledge graphs (KGs) can help address this challenge by organizing real-world knowledge in a format accessible to both humans and machines7,8,9. For instance, they can transform a statement like “The orbitofrontal-hippocampal pathway is crucial in mediating depression”10 into a structured format known as a triplet—a semantic unit in the form of (head entity, relation, tail entity)—such as (orbitofrontal-hippocampal pathway, associated with, depression), optionally enriched with additional contextual properties for precision. By integrating diverse data sources into a unified graph structure, KGs summarize existing knowledge and apply statistical and machine-learning techniques to uncover hidden patterns and connections, leading to new insights. This structured approach makes KGs particularly promising for investigating complex human illnesses like mental disorders, which are highly polygenic and exhibit biological alterations across various data types shared between diagnoses1,2,3,4,5,6,8.

Despite several pioneering efforts in developing KGs for mental disorders11,12,13,14,15,16, disease-specific KGs remain rare, with no published KGs for extensively researched disorders such as schizophrenia and bipolar disorder8. Furthermore, the effectiveness of mental disorder-specific KGs is fundamentally limited from their inception. Creating high-quality training data for information extraction is costly and time-consuming, limiting the development of precise models for mental disorders. Existing KG databases often omit crucial lifestyle information and focus on a limited number of relationship types, restricting our understanding of complex relationships in mental disorder studies. Many KGs fail to capture the complexity of biomedical facts due to the omission of contextual details, reducing their generalizability and accuracy. Additionally, varying confidence levels across data sources compromise the integrity of the extracted knowledge and skew analyses.

Here, we address these challenges by creating the Mental Disorders Knowledge Graph (MDKG), a framework starting with data annotation and culminating in a disease-specific KG tailored for the mental disorders domain. As shown in Fig. 1a, MDKG is a multi-relational, attributed biomedical KG encompassing over 10 million interconnections sourced from biomedical literature and pre-existing databases. Each relationship extracted from the literature is enriched with diverse, domain-specific contextual information, such as conditional statements, baseline characteristics, and contextual side information—ensuring both semantic and biomedical validity, and enabling researchers to conduct subgraph studies under specific conditions. In terms of data volume, MDKG contains 5–50 times more data on mental disorders than existing KGs, capturing a broad spectrum of therapeutic, risk, and lifestyle-related relationships embedded in the literature—many of which are absent from current databases. Beyond its construction, we conducted an expert evaluation, demonstrating a correctness rate of 0.79 and showing that the inclusion of contextual features improves usability, reducing evaluation time by ~70% compared to other KGs. Furthermore, we leveraged MDKG embeddings to encode patient medical histories from the UK Biobank, improving the prediction of major depressive disorder (MDD), anxiety, and bipolar disorder. Across all three conditions, integrating KG embeddings led to performance gains, with the Area Under the Receiver Operating Characteristic Curve (AUC) improvements ranging from 0.02 to 0.21, depending on the experimental setting. These results highlight MDKG’s potential to enhance predictive modeling in mental health research, even in scenarios with limited clinical data. Additionally, the MDKG contains extensive causal pathways, revealing numerous potential mechanisms underlying mental disorder etiology. As a structured resource, it supports ongoing research efforts and may facilitate the development of improved treatments and a deeper understanding of these conditions.

a Example of a structured triplet representation derived from literature, demonstrating how biomedical relationships are contextualized with supporting metadata. In this case, the association between Lewy bodies in the amygdala and increased risk of major depression in Alzheimer’s disease is enriched with source information, sample characteristics, and analytical context48. b Schematic overview of the MDKG construction pipeline. Unstructured data from literature abstracts and structured data from biomedical databases are processed through a multi-stage workflow involving GPT-4-assisted pre-labeling, active learning-based annotation, named entity recognition, relation extraction, tabular data parsing, and large language model (LLM)-powered triplet refinement. The resulting knowledge is aligned to a unified schema and enriched with contextual attributes to support downstream applications. The source data are provided as a Source Data file.

Results

Overview of MDKG

MDKG is a semantically enriched biomedical KG tailored for mental health research. It encompasses entities across nine categories—including health factors, anatomy, phenotypes, clinical signs, biomedical procedures, physiological processes, genes and proteins, diseases and symptoms, and drugs or chemicals—and encodes relationships spanning seven relation types, such as risk, diagnostic, characteristic, association, therapeutic, hierarchical, and abbreviation relationships (see Supplementary for detailed definitions of entity and relationship types). Each relationship is enriched with three types of contextual information: conditional statements specifying population or experimental contexts, baseline characteristics describing cohort-level metadata (e.g., age, gender, sample size), and side information such as source provenance and confidence scores to support downstream interpretation (see Fig. 1a).

After extracting information from the unstructured data of PubMed abstracts, we construct a literature-based MDKG with 236,542 entities and 1,913,461 triplets, including 880,692 normalized unique triplets. Among these, 210,096 triplets feature conditional statements, 115,391 include baseline characteristics of the study population, and all carry side information. Our analysis of the MDKG’s graph structure, with an average in/out-degree of 5.87, indicates a densely interconnected network. This connectivity enriches the potential for uncovering complex biomedical relationships. The identification of 99,472 strongly connected components highlights distinct clusters within the graph, likely representing specialized domains in medical research, such as specific diseases or biological pathways. This structural complexity enhances the graph’s utility for detailed insights and nuanced explorations of medical data.

To further enrich our literature-based MDKG, we employ a knowledge fusion methodology to integrate high-quality, diverse biomedical databases. This process begins with a meticulous evaluation of various databases, examining origins, data generation methodologies, evidence robustness, and update frequencies. Using systematic entity alignment and linking techniques (details in “Methods” subsection “Entity and relationship alignment”), we merge this curated data with the literature-based MDKG, transforming heterogeneous datasets into a coherent, standardized framework. As a result, our enriched MDKG now encompasses 1,642,543 entities across nine categories and graphs 10,702,976 relationships within nine relationship categories. It is publicly accessible via the BiomedKG portal (https://biomedkg.com), providing researchers with efficient access to structured mental health knowledge.

As depicted in Fig. 2b, integrating existing biomedical databases significantly enhances the depth of MDKG, particularly in associative and hierarchical relationships, which predominate in established biomedical knowledge databases. However, excluding these two types, a substantial portion of MDKG’s relationships remains literature-based. Specifically, in the diagnostic category, literature-based relationships make up 53.75% of the total; in location-based relationships, they constitute 72.79%; in risk relationships, they account for 32.01%; and in therapeutic relationships, an impressive 77.16%. This demonstrates that despite the wealth of structured database data, significant knowledge remains embedded within unstructured text, highlighting the importance of extracting knowledge from unstructured text for advancing medical research.

a Distribution of relation types and entity pairs in the literature-based Mental Disorders Knowledge Graph (MDKG). b Distribution of MDKG triplets across curated biomedical resources (e.g., DrugBank, DisGeNET, UMLS), model predictions, and other sources. c Example output of knowledge retrieval from MDKG for major depressive disorder (MDD), showing interconnected concepts such as physiological abnormalities, behavioral features, genetic variants, and therapeutic options. The source data are provided as a Source Data file.

Enhancing graph expressiveness through contextualized triplets

In our research, we enhance the traditional KG by integrating three types of contextual information from literature: conditional statements that provide nuanced dependencies between entities at the sentence level, baseline characteristics regarding experiments and research populations at the study level, and ancillary side information to supplement the primary data (shown in Fig. 1a). These additions transform the KG into a multi-dimensional and trustworthy resource, offering a deeper understanding of complex interrelationships and enabling more accurate and user-friendly applications in medical research and practice.

-

(1)

Conditional Statements: Biomedical statements are often conditional, with their validity depending on specific contextual conditions17. Omitting these details can misrepresent relationships between entities. Existing biomedical KGs typically use a flat representation, neglecting these crucial conditions. For example, a typical biomedical statement is as follows: “There are differences in CCN during the early stage of MDD, as identified by increased FCs among part of the frontal gyrus, parietal cortex, cingulate cortex...”10 or “Depression is associated with FMS among women but not among men.”18

Conventional KGs extract basic facts, e.g., (MDD, characteristic of, increased FCs) or (Depression, associated with, FMS). However, this approach often omits crucial details like the location of increased FCs or the specific populations (e.g., women), reducing the confidence of the triplet. To address this, we propose a refined representation. We assign attributes to the head and tail entities within a triplet, including relevant features or descriptive information. We then incorporate specific conditions into the triplets, outlining the precise contexts in which these relationships are valid. Applying this framework to the example would yield the following results:

-

Triplet 1: {“triplet”: [“MDD”, “characteristic of”, “increased FCs”], “head entity attributes”: None, “tail entity attributes”: {“located in”: [“frontal gyrus”, “parietal cortex”, “cingulate cortex”]}, “relation conditions”: None};

-

Triplet 2: {“triplet”: [“Depression”, “associated with”, “FMS”], “head entity attributes": None, “tail entity attributes”: None, “relation conditions”: “women”}.

By incorporating entity attributes and relation conditions, we enhance the precision and reliability of the relationships in MDKG.

-

-

(2)

Baseline Characteristics about the Study Population: In addition to conditional statements, baseline characteristics of the study population, such as cohort information, are critically important. Results from a narrowly defined sample may not be generalizable to a wider population and may introduce systematic biases. This is especially significant in mental disorders, where demographic factors like age, gender, and race heavily influence outcomes. To ensure our MDKG provides a comprehensive view, we incorporate seven essential characteristics as triplet properties: average age, gender distribution, racial composition, educational attainment, employment status, overall sample size, and cohort name.

-

(3)

Side Information: Our research extracts information from scholarly publications and integrates data from various sources, such as biomedical databases and existing KGs. Given the varying credibility of each data source, it is crucial to consider source reliability in practical applications. To address this, we append two types of side information to the triplets in our MDKG, enabling users to trace the origins and assess the quality of the embedded knowledge.

-

Source Information: It refers to the source of extracted entities and relationships, such as literature, databases, or other KGs. For triplets derived from literature, this includes details like the publication name, first author, and publication date. Documenting this source information allows for easy verification and updates of the graph’s content.

-

Confidence: To assess the credibility of triplets within MDKG, we incorporate multiple factors that influence their confidence levels. Reliability is first evaluated through cross-source consensus, where triplets appearing in multiple sources are considered more trustworthy. For literature-derived triplets, we further document key attributes such as publication type (e.g., reviews, clinical trials, meta-analyses) and analytical methods used (e.g., multivariate analysis, multiple regression, logistic regression), as these factors contribute to varying levels of evidentiary strength. To enhance reliability assessment, we assign a confidence score (detailed in Supplementary) to each triplet. This score integrates multiple factors, including model prediction scores, journal impact, frequency across sources, distinct publication years count, maximum publication year, and contradiction counts in the data. By appending this confidence score to each triplet, MDKG enables users to prioritize high-quality data and better assess the reliability of the extracted knowledge.

-

Literature-derived insights into mental health mechanisms and treatments

Our literature-based MDKG has been instrumental in identifying the connections between various lifestyles and diseases, capturing 10% of the total relationships through “Association” and “Risk” (shown in Fig. 2a). For instance, in the context of endogenous anxiety, MDKG reveals significant associations between physical activity and mental health. Factors such as “daily walking,” “poor activity participation,” and “physical activity intensity” are linked to endogenous anxiety levels, indicating a risk relationship between physical activity and mental well-being. Additionally, MDKG highlights the impact of dietary habits, associating “obesity” and “high-fat diets” with heightened risks of endogenous anxiety, aligning with evidence that conditions like “gestational and type 2 diabetes” and “hypertension” exacerbate psychological distress.

Beyond individual lifestyle factors, MDKG illuminates the influence of social determinants on endogenous anxiety. Notable associations include “long-term social comparison,” “social eating behavior,” “working in a public hospital,” and “social demands,” showcasing the complex interplay between social context and mental health outcomes. Modern lifestyle factors such as “social media usage” and “free-time internet use” are also identified as contributors to endogenous anxiety. These insights highlight MDKG’s role in deepening our understanding of the multifaceted nature of endogenous anxiety risks, providing a foundation for developing targeted, personalized treatment and prevention strategies.

In addition to risk-related insights, MDKG also contributes to the characterization and treatment of specific mental disorders. For instance, inquiries into MDD reveal associations such as “abnormal oxidative metabolism,” “increased cerebral blood flow,” and “brain anomalies” (shown in Fig. 2c). Additional observations include “lateralization in brain electrical activity,” “memory deficits,” “reduced perceptual judgment,” and “difficulties in processing facial emotional expressions,” enhancing our understanding of MDD’s complexity. Regarding treatment, MDKG outlines options such as “scopolamine,” “sertraline,” “s-adenosyl methionine,” “esketamine,” and “serotonin-norepinephrine reuptake inhibitors,” along with non-pharmacological interventions like “web-based guided self-help,” “electroconvulsive therapy,” “biopsychosocial approaches,” and “mindfulness-based cognitive therapy.” These insights offer a holistic approach to studying MDD.

Population-specific mental health concerns revealed by conditional statements

In our literature-based MDKG, we analyze 283,417 conditional statements. This analysis, visualized through a word cloud of these condition-specific populations (as shown in Fig. 3a), highlights three primary areas of focus. First, we note substantial references to demographic groups across various ages and genders, including “children,” “women,” “adults,” “adolescents,” “offspring,” “young adults,” and “outpatients.” Second, we pinpoint groups linked to specific diseases such as “schizophrenia,” “bipolar disorder,” “depression,” “cancer,” “type 2 diabetes,” and “Parkinson’s disease.” Third, our research subjects predominantly include “humans” and various experimental animals like “rodents,” “rats,” and “mice.” These conditional statements enrich our MDKG by clarifying the contexts in which specific relationships hold, facilitating focused subgroup analyses. The recurrent appearance of terms associated with particular age and gender groups, alongside a broad spectrum of disease-related terms, underscores the intricate link between mental disorders and distinct population segments. This association is further complicated by the overlap with other medical conditions.

a Word cloud showing the most frequent condition-related entities extracted from literature-derived triplets in the MDKG. Font size is proportional to entity frequency, highlighting prevalent clinical and behavioral features linked to mental disorders. b Top 10 most frequently mentioned condition-related entities in elderly and adolescent populations based on literature triplets containing conditional statements. c Example of a causal knowledge graph centered on depression, constructed by linking unidirectional risk relationships extracted from the literature. Nodes represent biological, psychological, or environmental factors, while directed edges indicate potential causal influence as inferred from the literature. The source data are provided as a Source Data file.

Building on this foundation, a deeper analysis of entity frequencies based on conditional statements in our literature-based MDKG highlights different mental health concerns prevalent among adolescents and the elderly (as shown in Fig. 3b). In elderly populations, “depression pathophysiology” is the most frequently mentioned entity, significantly more so than in adolescents, alongside issues such as “mortality,” “peripheral muscle,” and “disability,” reflecting health problems accumulated with aging. In contrast, adolescent studies frequently mention “depression pathophysiology” but also highlight “attention deficit hyperactivity disorder,” “autism spectrum disorders,” “conduct disorder,” and “eating disorders,” underscoring concerns typical of developmental periods. This difference emphasizes the need for age-tailored mental health interventions and strategies that account for unique biopsychosocial factors at different life stages.

Mapping causal pathways in mental disorders

Based on the risk relationships identified in the literature-based MDKG, we construct a causal KG for mental disorders that illustrates unidirectional causal relationships. This graph reveals the etiology and risk links between mental disorders and related factors, providing a valuable reference for exploring the mechanisms underlying these disorders. As shown in Fig. 3c, the graph displays multiple causal pathways for depression, including one with intermediary nodes: “hyperactive glutamate transport – abnormal glutamatergic neurotransmission – hypofunction of NMDA receptors – cognitive impairments – stress – ablation of neurogenesis – hippocampal size reduction – depression.” This pathway begins with hyperactive glutamate transport, leading to a reduction in synaptic glutamate concentrations and disrupting neural transmission. This reduction impairs NMDA receptor function, crucial for neuronal plasticity and long-term potentiation, essential for memory and learning. Dysfunctional NMDA receptors cause cognitive deficits, increasing vulnerability to stress. Over time, heightened stress sensitivity suppresses neurogenesis in the hippocampus, essential for emotional regulation and cognitive function. The decline in hippocampal neurogenesis reduces hippocampal volume, impairing emotional regulation and cognitive processing. Ultimately, these physiological changes amplify depressive symptoms, highlighting a potential mechanistic pathway from glutamate dysregulation to depression. Exploring these causal pathways enables advances in understanding mental disorder mechanisms, improving early detection, and enhancing treatment strategies. This exploration also generates hypotheses for subsequent validation.

Comparative evaluation of triplet quality across knowledge graphs

We conducted a comparative analysis of MDKG against two large-scale general biomedical KGs—PrimeKG19 and SPOKE20—as well as a mental health-specific KG, GENA16. Our focus was on the density of directly connected information relevant to MDD, anxiety, bipolar disorder, schizophrenia, and depression. The analysis revealed that MDKG contains 5 to 50 times more information on these mental disorders than the comparative KGs, as shown in Fig. 4c, highlighting its superior coverage and comprehensiveness.

a Distribution of relation types in triplets related to anxiety and schizophrenia across PrimeKG, GENA, and MDKG. b Triplet quality evaluation across knowledge graphs by four independent human evaluators. Left: Correctness scores (box plots); Middle: Insightfulness ratings (1–3 scale, box plots); Right: Evaluation time per triplet (in seconds). Each dot represents the mean value from one evaluator based on 200 evaluated triplets. Box plots show the median (center line), interquartile range (box: 25th to 75th percentile), and whiskers extending to 1.5 times the interquartile range (IQR). Points outside this range are shown as outliers. c Coverage of triplets relevant to five mental disorders (major depressive disorder, anxiety, bipolar disorder, schizophrenia, and depression) across five knowledge graphs. d Inter-rater agreement on triplet evaluations. Kappa values and agreement rates are shown for six evaluator pairs across correctness and insightfulness judgments. e Ontological distribution of entity types in the integrated MDKG. The source data are provided as a Source Data file.

To further assess MDKG’s quality, we conducted a human evaluation of randomly selected relationships from PrimeKG, MDKG, and GENA. Four evaluators—two psychiatrists (d1, d2) and two medical PhDs (phd1, phd2)—assessed the correctness and relevance of the extracted triplets. Given the varying information richness of the three KGs, we provided the maximum available data for each: triplets, PMIDs, and sentences for GENA; triplets and database sources for PrimeKG; and triplets, PMIDs, sentences, journal, impact factor, frequency, and date for MDKG. For evaluation, we selected two diseases, schizophrenia and anxiety disorders, and randomly sampled 100 non-redundant triplets per disease. Each evaluator reviewed the same set of examples, ensuring a consistent and unbiased comparison.

Evaluation metrics included correctness (whether the triplet was correct based on the provided sentence), insightfulness (rated 1–3 for novelty, reliability, and usefulness), and evaluation time (average time for 200 examples). Correctness was excluded for PrimeKG, as it lacks original sources. Data analysis used Cohen’s Kappa for inter-rater consistency, with values ranging from −1 (no agreement) to 1 (perfect agreement), and the agreement rate between evaluators. Following is a summary of our findings:

-

Comparative Analysis of Triplet Distributions: Overall, for triplets related to anxiety and schizophrenia, the relation and entity architecture across the KGs demonstrate distinct graph differences, as shown in Fig. 4a. PrimeKG predominantly contains generic associations, with ~90% of the relations labeled as “associated with,” mostly involving gene entities. In contrast, MDKG exhibits more clinically actionable relationships, including ~20% “therapeutic relationships,” 11% “risk relationships,” and 10% “diagnostic relationships.” GENA’s relationship distribution demonstrates linguistic specificity but clinical fragmentation, with ~85% of relations occurring ≤5 times. As a result, many of these relations lack standardization, such as “RECEIVE APPROVAL BY U.S. FOOD AND DRUG ADMINISTRATION FOR TREATMENT OF.”

-

Quality Metric Performance: For correctness (Fig. 4b), MDKG achieved an accuracy of 79.0% (SD = 0.01%), slightly outperforming GENA, which reached 78.3% (SD = 0.01%). The errors in MDKG were primarily attributed to semantic ambiguities (28%), rather than factual inaccuracies (12%). In terms of insightfulness, reported as mean ± SD, were highest for GENA (2.29 ± 0.03), followed by MDKG (2.18 ± 0.02) and PrimeKG (2.09 ± 0.01). This difference likely reflects evaluators’ emphasis on the novelty of knowledge as a key metric. While PrimeKG and MDKG offer more extensive knowledge integration—especially MDKG, which incorporates clinical consensus data dating back to 1985—24.34% of MDKG’s schizophrenia-related triplets were derived from studies published before 2010. Evaluators frequently described these entries as “consolidated textbook knowledge" in their post-evaluation feedback. This observation highlights the challenge of the need to balance evidence maturity (strength) with temporal relevance (novelty) in KG development.

-

Inter-Rater Reliability Assessment: We observed significant intra-group vs inter-group discrepancies in evaluation consistency (Fig. 4d). Clinical experts (d1–d2) achieved moderate agreement in correctness assessment (kappa: 0.43, Agreement: 76.7%), substantially higher than cross-disciplinary pairs like d2–phd1 (kappa: 0.04). Similarly, the PhD researchers (phd1–phd2) demonstrated strong consensus in the insightfulness evaluation (kappa: 0.63, agreement: 80.0%), while clinician–researcher pairs showed considerably lower agreement, with kappa values ranging from 0.21 to 0.29. This pattern suggests divergent evaluation priorities—clinicians may have prioritized therapeutic validity (e.g., contraindications, treatment effects), while researchers placed greater emphasis on mechanistic explanations.

-

Time Cost of Evaluation: MDKG’s enriched contextual features significantly reduced evaluation time by 62–77% compared to PrimeKG and GENA (Fig. 4b). Clinicians spent an average of 31 ± 2.24 s per MDKG triplet, compared to 129 ± 11.51 s for PrimeKG and 85.25 ± 4.66 s for GENA. This reduction in evaluation time was strongly correlated with metadata completeness, highlighting MDKG’s efficiency in facilitating rapid and informed assessments.

-

Validation of Confidence Metric: Our confidence score exhibited a strong positive correlation with human insightfulness ratings (mean correlation: 0.78, std: 0.00), indicating that the factors used in its design closely align with the criteria humans consider when assessing the value of a triplet. This strong alignment further validates the robustness and reliability of our approach.

Enhancing MDD prediction through knowledge graph embeddings

We propose utilizing MDKG as an external knowledge source to enhance the prediction accuracy for mental disorders. Our study leverages Electronic Health Records (EHRs) from the UK Biobank, covering the period from 2006 to 2022 (application number: 98327). We source patients’ medical history from self-reports (verbal interviews at assessment centers, UK Biobank Field ID: 20002) and healthcare records (primary care, mortality records, and hospital inpatient data) from the UK National Health Service. Additionally, we selected PheCodes to enhance clinical relevance and model robustness by consolidating granular ICD-10 classifications into clinically meaningful phenotypes, reducing dimensionality by ~75% while prioritizing core disease manifestations over administrative distinctions. This approach mitigates sparsity and diagnostic inconsistencies in mental health disorders, aligning with PheWAS objectives to capture broad phenotypic patterns in EHR data. By emphasizing symptom-driven categorizations, PheCodes improve model generalizability and interpretability, overcoming the transient severity variations inherent in ICD-10 classifications21,22.

We constructed a case-control cohort focusing on three target conditions: MDD, anxiety, and bipolar disorder. For each case, we compiled the patient’s pre-diagnosis health profiles based on medical records from 1 to 7 years prior to their first recorded diagnosis, aiming to capture early predictive signals. To reduce potential noise from misdiagnoses, individuals with only a single recorded diagnosis were excluded. The control group consisted of individuals with no psychological diagnoses, excluding all those with PheCodes in the 295–306.99 range (see Supplementary Table 7). A 1:2 case-to-control ratio was adopted to ensure a balanced and statistically robust comparison. The baseline characteristics of participants are provided in Table 1.

To effectively model patients’ longitudinal medical history, we developed a KG embedding approach based on MDKG (see Fig. 5a and “Methods” subsection “Representing patients’ medical history using knowledge graph embeddings”). Traditional models often encode diseases as dummy variables, which can lead to the curse of dimensionality, particularly in studies with limited sample sizes. For instance, representing 1578 phenotypes typically requires an equally high-dimensional variable space, yet the available training samples may be insufficient to support such complexity. To address this, our approach condenses a patient’s medical history into a 200-dimensional feature vector. This representation not only reduces the model’s dimensional burden but also integrates medical background knowledge from the KG, potentially improving diagnostic predictions and clinical insights.

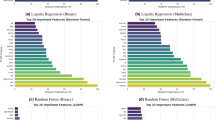

a Workflow illustrating the integration of UK Biobank Electronic health records (EHRs) and the MDKG for mental disorder prediction. EHRs are first cleaned and preprocessed to extract non-medical factors (e.g., lifestyle, environmental exposures, family history). ICD-10 diagnoses and Phecodes are mapped to knowledge graph entities, and RDF2Vec is used to generate embeddings for the aligned medical entities. Each patient’s medical history embedding is computed by averaging these vectors. Predictive models are then trained under three input settings: (1) EHR factors only, (2) medical knowledge grpah (KG) embeddings only, and (3) a combination of both. b SHAP-based feature importance for predicting major depressive disorder (MDD) using environmental factors only (top) and using environmental factors combined with KG embeddings (bottom). Bars represent the average absolute SHAP value of each feature across all predictions. c Prediction performance (AUC) for MDD, anxiety, and bipolar disorder across different models and input feature settings. Each box plot shows results from 10-fold cross-validation for four classifiers—logistic regression (LR), random forest (RF), support vector machine (SVM), and XGBoost—under three experimental conditions: KG Embeddings Only, EHR Factors Only, and EHR + KG Embeddings. Box plots show the median (center line), interquartile range (box: 25th–75th percentile), and whiskers extending to 1.5 times the interquartile range (IQR). Each dot represents the AUC score from one cross-validation fold. Points beyond the whiskers are plotted as outliers. The source data are provided as a Source Data file.

We evaluated the predictive utility of KG embeddings using ten-fold cross-validation across four classifiers: logistic regression (LR), random forest (RF), support vector machine (SVM), and XGBoost. Three experimental settings were compared: (1) KG embeddings only, (2) non-medical EHR factors only (e.g., environment, lifestyle, family), and (3) integrated models combining both. The results, summarized in Fig. 5c, are reported as mean ± standard deviation of AUC.

-

Major Depressive Disorder: When utilizing comprehensive non-medical EHR factors (see Supplementary for details), the integrated model (EHR + KG) achieved superior performance. XGBoost attained the highest AUC of 0.89 ± 0.02, improving by 0.07 over the EHR-only model (0.82 ± 0.03) and by 0.18 over the KG-only model (0.71 ± 0.02). Similar trends emerged for LR (The integrated model: 0.88 ± 0.03 vs. EHR-only: 0.84 ± 0.04; KG-only: 0.75 ± 0.02) and RF (The integrated model: 0.86 ± 0.02 vs. EHR-only: 0.82 ± 0.03). Notably, models relying solely on environmental EHR factors exhibited a significant performance boost when enhanced with KG embeddings. XGBoost improved from 0.63 ± 0.03 (EHR-only) to 0.75 ± 0.02, reflecting a gain of 0.12. Similarly, SVM’s EHR-only performance (0.57 ± 0.06) was relatively weak but improved significantly to 0.78 ± 0.03 after incorporating KG embeddings.

-

Bipolar Disorder: XGBoost achieved an AUC of 0.88 ± 0.02, outperforming EHR-only (0.85 ± 0.02) and KG-only (0.66 ± 0.03) approaches. LR demonstrated the highest relative improvement (+0.17), increasing from 0.70 ± 0.03 (KG-only) to 0.87 ± 0.02 (the integrated model), highlighting the benefits of multimodal integration.

-

Anxiety Disorders: The integrated model consistently improved AUC across all classifiers (range: 0.81–0.83), compared to KG-only (0.65–0.69) and EHR-only (0.78–0.79). LR performed best (0.83 ± 0.02 for integrated model vs. 0.79 ± 0.01 for EHR-only). The non-medical EHR-only model for anxiety performed the weakest among the three conditions, suggesting that lifestyle, environmental, and familial factors may contribute differently to anxiety disorders compared to MDD and bipolar disorder.

SHapley Additive exPlanations (SHAP) feature attribution analysis, shown in Fig. 5b, further illustrates the significant shifts in feature importance upon including medical history KG embeddings. In the environmental-only MDD scenario, PM2.5 was the most influential factor (SHAP value: 0.43), underscoring the role of air pollution in MDD risk—consistent with prior findings linking particulate exposure to altered brain function and depressive symptoms23,24. However, in the integrated model, PM2.5’s importance slightly declined to 0.40, while new medical history embeddings (e.g., Embedding 47, 162, 190) emerged as top contributors. Similarly, features like “distance to coast” also saw reduced SHAP values (0.20 → 0.16), while demographic factors such as “sex” and “greenspace percentage” remained consistently important.

Overall, these findings demonstrate the consistent advantage of integrating KG embeddings, particularly in feature-limited scenarios, effectively addressing limitations inherent to exclusive reliance on traditional EHR factors. By combining the depth of KG embeddings with the breadth of conventional EHR data, our integrated approach significantly enhances predictive accuracy, representing a meaningful advancement in clinical diagnostic methodologies.

Discussion

This study presents MDKG, a comprehensive KG specifically designed for mental disorders. This multi-relational, attributed biomedical KG contains over 10 million interconnections sourced from various biomedical literature and pre-existing databases. MDKG’s schema is meticulously designed to enrich relationships extracted from literature with domain-specific information, such as conditional statements and contextual features, ensuring both semantic and biomedical validity. We have developed an automated data annotation process by integrating LLMs with active learning techniques, enabling us to annotate 500 abstracts related to mental disorders. We also demonstrate the practical applications of MDKG and its potential to advance mental health research and clinical practices.

Despite these advancements, our MDKG has several potential limitations under the current construction framework.

-

Limited Scope and Techniques of Information Extraction: Our current triplet extraction process in MDKG is restricted to sentence-level information from abstracts, targeting specific predefined entities and relationships. This limitation may result in missing unforeseen knowledge. Future developments should expand the analysis to full text, supplementary materials, tables, and images, and incorporate advanced methodologies like LLMs, document-answering technologies, and other AI tools to enhance the scope and effectiveness of information extraction.

-

Challenges in Data Quality and Integrity: Although we’ve assembled large-scale interactions from various databases and literature, the quality and integrity of this metadata cannot be fully guaranteed. A significant issue is the alignment of medical entities, which often have many synonyms and abbreviations. This complexity makes it difficult to align entities from different sources, leading to lower connectivity in our KG and limiting the linking of entities.

-

Potential for LLMs and KG Integration in Question Answering (QA) Models: Advancements in LLM technology present an opportunity to integrate LLMs with KG in a QA model. Currently, our MDKG mainly serves medical researchers. To extend its utility to the general public, we can develop a user-friendly QA tool that combines LLMs’ comprehension capabilities with the extensive knowledge of MDKG. This would help make complex medical information accessible and understandable to those without a medical background, broadening the applicability and impact of our MDKG.

Methods

Ethics statement

This study includes analyses based on both publicly available biomedical resources and individual-level data from the UK Biobank. The use of UK Biobank data was approved under Application Number 98327. Ethical approval was granted by the North West Multi-centre Research Ethics Committee, acting as a Research Tissue Bank. All UK Biobank participants provided written informed consent for data collection, storage, and linkage. The UK Biobank study protocol is publicly available at https://www.ukbiobank.ac.uk. No ethical approval was required for the use of publicly available datasets retrieved from PubMed, biomedical literature repositories, or open-access biomedical databases.

Statistics and reproducibility

No statistical method was used to predetermine sample size. No data were excluded from the analyses. The experiments were not randomized. The investigators were not blinded to allocation during annotation, model training, or outcome assessment.

Entity and relation extraction models were trained and evaluated on fixed training, validation, and test splits, using precision, recall, micro F1, and macro F1 as metrics. For downstream classification tasks, repeated 10-fold cross-validation was used, and performance was assessed with AUC, reporting standard deviation across folds.

Human evaluation of KG triplet quality was conducted on randomly selected triplets, sampled independently per graph. Evaluators scored correctness, insightfulness, and evaluation time. Evaluation consistency between raters was quantified using Cohen’s Kappa coefficient and inter-rater agreement rates. Mean evaluation time per example and its standard error were also recorded. Pearson correlation was used to assess the alignment between model-derived confidence scores and human-assigned insightfulness ratings.

Data annotation

Significant effort was dedicated to annotating a dataset for knowledge extraction pertaining to mental disorders, resulting in the creation of the Mental Disorders-Related Information Extraction Corpus (MDIEC), which is publicly available at https://doi.org/10.5281/zenodo.10960357.

The annotation process began by selecting keywords representing mental disorders with significant global disease burdens, as defined in the Global Burden of Diseases 2019 report2,25. These keywords include depressive disorder, anxiety disorder, bipolar disorder, and schizophrenia, among others. Using these keywords, we retrieved 234,087 articles from PubMed (https://pubmed.ncbi.nlm.nih.gov/) as of January 1, 2024. We then applied an automated annotation process (shown in Fig. 6a) for preliminary data annotation. Our team meticulously reviewed and refined annotations for a subset of 500 abstracts to ensure data quality and accuracy. The annotated corpus’s statistics are presented in Table 2.

a Workflow for literature-based triplet annotation using large language models (LLMs). The process begins with downloading abstracts and applying biomedical named entity recognition (e.g., BERN2, QuickUMLS). Annotated entities are used to construct transformed sentences, which are then submitted to GPT-based prompts for relation extraction. Human annotators manually review and refine the generated triplets to ensure accuracy. b Pipeline for extracting baseline characteristics from PDFs using LLM-powered question answering.

Entity annotation

We segmented the abstract annotation process into ten batches using an active learning strategy for sequential annotation. Initially, we employed BERN226 (Advanced Biomedical Entity Recognition and Normalization), a neural network-based tool, to pre-annotate the texts. BERN2 efficiently identifies entities such as genes, chemicals, diseases, and cell types. However, BERN2’s proficiency in identifying methods and physiological activities is limited, necessitating additional processing for comprehensive extraction.

To address this, we first created an entity-matching dictionary of annotated entities, which we omitted only in the initial annotation round. This dictionary was then used to perform exact matching on BERN2-processed texts. Since entities often appear as noun phrases, we focused on extracting these phrases. Traditional extraction methods rely on established NLP tools27 or predefined grammar28, sometimes compromising accuracy. To improve this, we employed GPT-4.0, an LLM, to extract noun phrases from the abstracts in a question-and-answer format. We then compared each extracted phrase with the dictionary entries, defining a match as successful if it met a specific threshold of text similarity, measured by edit distance.

For noun phrases where the entity types were unclear, we used QuickUMLS29, a tool that matches noun phrases to terms in the UMLS30 (Unified Medical Language System) based on string similarities. Two neurosurgery experts selected 108 relevant semantic types from the UMLS and mapped these to our nine predetermined entity categories.

After annotating the relationships, we reviewed and corrected the annotated entities. Once manually verified, these entities were integrated into the entity-matching dictionary. This updated dictionary was then used for subsequent annotation processes, enabling automated annotation of terms not typically covered by BERN2 or UMLS but significant for mental disorders research.

Relationship annotation

Annotating relationships presents two primary challenges: it is labor-intensive and often subjective, leading to inconsistencies due to varied interpretations by different annotators. Although automatic relationship annotation methods like distant supervision31 exist, their accuracy is limited. These methods assume that “if two entities have a certain relationship in the knowledge base, then all sentences containing these two entities will express this relationship.” However, the complexity and variability of the text often invalidate this assumption.

To mitigate these challenges, we integrated GPT-4.0 into our relationship annotation workflow, leveraging its advanced text understanding abilities and extensive biomedical knowledge (shown in Fig. 6a). We start with texts pre-annotated for entities. The primary goal at this stage is to identify potential relationships between entity pairs based on the sentence’s context. We enhance GPT-4.0’s efficiency using a two-step inquiry strategy32. Initially, GPT-4.0 determines whether a sentence indicates a particular relationship type. If the answer is yes, GPT-4.0 then identifies and outputs the entity pairs involved in this relationship. Finally, our annotators thoroughly review these identified pairs, checking their relationship type to ensure annotation accuracy and consistency. To further improve consistency between annotators, we provide relationship annotation guidelines (details in Supplementary), summarizing common relational expression sentences.

Actively candidate annotation abstract selection

Annotating randomly selected abstracts indiscriminately presents two major challenges:

-

1.

Data imbalance. Specific relationship types, such as risk relationships, are rarely found in abstracts. This scarcity leads to underrepresentation of these relationship types in our dataset, negatively impacting the model’s ability to classify them accurately.

-

2.

Inefficient Utilization of Resources. Our analysis reveals that over half of the sentences in typical abstracts do not contain essential relationship information. As a result, many sentences are uninformative for our information extraction model. Despite this, annotators expend equal effort on analyzing these uninformative sentences as on informative ones, leading to unnecessary cost increases.

To mitigate the identified challenges, we implement an active learning framework for more effective, model-driven annotation of our training dataset. This method focuses on identifying samples with significant uncertainty regarding certain types of relationships, employing a unique region-aware sampling strategy33 designed to balance uncertainty and diversity. By prioritizing the most informative samples, we enhance the model’s performance.

-

1.

Identifying Sentences with Target Relationships: In each annotation-training loop t, we identify sentences containing both the head and tail entities of a target relationship type, such as “risk factor of,” as our initial candidate annotation sentences {S(t)}. This identification uses known triplets from the already labeled dataset or an existing database, ensuring that we concentrate on sentences relevant to the target relationship.

-

2.

Weighted K-means Clustering: Sentences \({s}_{i}^{(t)}\in \{{S}^{(t)}\}\) are transformed into embeddings \({{{{\bf{x}}}}}_{i}^{(t)}\in \{{{{{\bf{X}}}}}^{(t)}\}\) using the [CLS] token of our information extraction model’s encoder \(f{({{{{\boldsymbol{\theta }}}}}^{(t-1)})}_{{{{\rm{encoder}}}}}\). These embeddings are clustered using weighted K-means clustering, with the weight of each sentence determined by its uncertainty level, calculated based on the model’s estimated relationships’ cross-entropy

$${a}_{i}^{(t)}=a\left({s}_{i}^{(t)},{{{{\boldsymbol{\theta }}}}}^{(t-1)}\right).$$(1) -

3.

Uncertainty and Diversity of Clusters: After obtaining K clusters \({{{{\mathcal{C}}}}}_{k}^{(t)}\) for k = 1, …, K with the corresponding sentence embeddings, we derive the uncertainty of each cluster from two metrics:

$${u}_{k}^{(t)}=U({{{{\mathcal{C}}}}}_{k}^{(t)})+\beta I({{{{\mathcal{C}}}}}_{k}^{(t)})$$(2)where

$$U({{{{\mathcal{C}}}}}_{k}^{(t)})={\left\vert {{{{\mathcal{C}}}}}_{k}^{(t)}\right\vert }^{-1}{\sum}_{{s}_{i}^{(t)}\in {{{{\mathcal{C}}}}}_{k}^{(t)}}a\left({s}_{i}^{(t)},{{{{\boldsymbol{\theta }}}}}^{(t-1)}\right)$$(3)is the average uncertainty of all sentences in cluster k, \(\left\vert {{{{\mathcal{C}}}}}_{k}^{(t)}\right\vert\) is the size of the cluster,

$$I({{{{\mathcal{C}}}}}_{k}^{(t)})=-{\sum}_{j\in {{{\mathcal{L}}}}}{g}_{j,k}^{(t)}\log {g}_{j,k}^{(t)}$$(4)is the inter-relationship type diversity, where \({{{\mathcal{L}}}}\) is the set of relationship categories, and β is a scaling parameter. Here,

$${g}_{j,k}^{(t)}=\frac{{\sum }_{{s}_{i}^{(t)}\in {{{{\mathcal{C}}}}}_{k}^{(t)}}{{{\bf{1}}}}\left\{{\tilde{y}}_{i}^{(t)}=j\right\}}{\left\vert {{{{\mathcal{C}}}}}_{k}^{(t)}\right\vert }$$(5)is the frequency of relationship type j in cluster k, and \({\tilde{y}}_{i}^{(t)}\) refers to the pseudo-relationship label of sentence \({s}_{i}^{(t)}\).

-

4.

Hierarchical Sampling: Clusters are ranked by their uncertainty \({u}_{k}^{(t)}\). The M most uncertain clusters are chosen, and from each, the top b sentences with the highest uncertainty are selected to construct a batch. This stratified approach ensures diverse and highly uncertain sentence querying.

-

5.

Annotation: Finally, the selected sentences are actively annotated and added to the training set, facilitating the next round of model training.

Information extraction in medical literature

This study outlines a structured approach to extracting information from medical literature (see Fig. 1b), involving two main steps: 1. Extraction of Named Entities, Relationships, and Conditional Statements: Our initial step involves extracting named entities, relationships, and conditional statements from medical literature abstracts. To refine this process, we integrate active learning methods, aiming to enhance sample utilization efficiency, minimize annotation costs, and boost our model’s overall performance. 2. Extraction of Baseline Characteristics of the Study Population: A crucial part of our study is extracting the characteristics of the study population from medical literature, enriching the background of the extracted data. We focus on two main areas: extracting baseline characteristics from full-text tables and gleaning study-specific information from abstracts. ChatGPT plays a key role in analyzing these elements, especially in interpreting complex tables. This approach ensures a comprehensive understanding of the triples extracted from articles, significantly enhancing the depth and quality of our data generation.

Named entities, relationships, and conditional statements extraction

For the Named Entity Recognition (NER) and Relationship Extraction (RE) process, we employed the SpERT.PL34 framework, which leverages the transformer network BERT35 as its core and integrates shallow classifiers for joint entity and relation classification. Unlike previous methods based on BIO/BILOU labels36, SpERT.PL utilizes a span-based approach, where any token subsequence (or span) can constitute a potential entity, and a relationship can exist between any pair of spans. Our model training followed the SpERT.PL methodology with three key modifications to enhance performance: 1. Active Learning Workflow: The training process integrates an active learning approach, where the BERT-based NER/RE model identifies and selects the most informative abstracts according to its current preferences. Experts then annotate these abstracts and incorporate them into the training dataset. 2. CODER++ Encoder Integration: We incorporated the CODER++37 encoder, a transformer-based pre-trained model trained on the UMLS corpus. CODER++ not only embeds biomedical information from the UMLS but also captures relationship structure information to align equivalent biological concepts more effectively. 3. NoisyTune Implementation: We implemented NoisyTune38, which adds uniform noise to parameter matrices to enhance fine-tuning. The best-performing model on the validation set is then selected. With its optimal parameters, a final model is trained using all annotated data to recognize entities and extract relationships in new, unannotated texts. After extracting entities and relationships with the BERT-based model, we refined the extracted triplets using GPT-4o. The refinement process includes verifying entity spans, confirming entity types, validating relationships, and scoring each triplet (1-10) for overall accuracy. Triplets with a score below 5 are reprocessed. In cases of contradictory statements, we input the conflicting triplets along with the full abstract into GPT-4o to determine whether the contradictions stem from differing conclusions in the articles. If the contradictions are due to differing conclusions, they are retained; otherwise, the model selects the correct triplet, removing the incorrect one from the KG.

The best-performing NER model achieved a micro F1 score of 0.89 and a macro F1 score of 0.88, indicating reliable precision across different entity types. The RE task presented more challenges, with initial micro and macro F1 scores of 0.68 and 0.67, respectively. When both tasks are performed jointly, the model achieved micro and macro F1 scores of 0.63 and 0.63, highlighting the complexity of integrating these processes. After post-processing refinement steps, the valid joint NER & RE evaluation achieved a micro F1 score of 0.79 and a macro F1 score of 0.76, demonstrating a marked enhancement in overall performance.

Baseline characteristics of the study population extraction

In medical literature, abstracts often do not fully reflect the baseline characteristics of research populations. To address this, we designed a process to examine the baseline characteristics tables to gather the demographic information of interest. Extracting data from tables involves several stages, including locating the table, linking the caption, identifying the structure, and extracting the data. The most challenging task is understanding the table’s structure, which is crucial for accurately interpreting each cell’s meaning. However, a study39 shows that in over 70% of cases, in-depth consideration of table structure is unnecessary. In the remaining 30%, a simple understanding of the structure, such as the alignment of rows and columns, suffices without complex reasoning chains. Combined with ChatGPT’s excellent performance in question and answer tasks, we found that ChatGPT can provide fairly accurate answers based on the table text without the table structure. Therefore, we have developed a table question-answer pipeline that integrates Optical Character Recognition (OCR) and ChatGPT. The specific steps are as follows:

-

1.

PDF/XML Acquisition: Based on the publication type of articles obtained from PubMed, we selectively downloaded PDFs or XMLs of articles from PubMed Central or other journal websites, excluding those classified as “Review” or “Meta.”

-

2.

Table Location and Extraction: After acquiring the PDF and XML files, we searched these documents for pages containing the terms “Characteristics” or “Demographics.” For PDFs, we utilized PaddleOCR’s40 table detection capability to identify the presence of tables on the relevant pages. When a table is detected, we isolated and extracted its contents using pdfplumber41. In the case of XML files, we employed Python scripts to parse the layout and search for tables specifically tagged or labeled with pertinent keywords. We collected ~73,000 XML and 95,637 PDF files for our research analysis.

-

3.

Ask ChatGPT for an Answer: After extracting the content of the target tables from XML or PDF files, we approached the task as a question-answering task, querying ChatGPT with questions related to the extracted table content (details in Fig. 6b).

-

4.

Post-processing: After retrieving answers from ChatGPT, we first ensured that the answers conform to the JSON format. Any answers failing to meet this standard are discarded. For these tables, we initiated a second round of questioning. Additionally, if an answer is properly formatted in JSON but consistently responds with “Unknown” for all queries, we infer that the corresponding table likely lacks information on study population characteristics, and these responses are also discarded.

We have conducted a manual review of this procedure’s effectiveness on roughly 300 articles. This review involved assessing the accuracy of the process and identifying the causes of any errors encountered. The results of this evaluation, including a detailed breakdown of accuracy and error categorization, are presented in Supplementary Table 3.

Entity and relationship alignment

Integrating data from various sources necessitates meticulously aligning entities and relationships to maintain a unified framework for future research endeavors.

Our method starts by aligning and integrating the types of entities and relationships from biomedical databases, as outlined in Table 3, with those predefined in our MDKG. To achieve precise entity alignment, we computed the SapBert embedding for each entity and employed the FAISS42 library to identify the closest match. If the cosine similarity between two entities was above 0.90, they were considered identical. Before this step, we disambiguated abbreviations identified in the literature, using the “abbreviation for” relationship to expand abbreviations to their full forms based on the context within the same abstract, thereby enhancing entity alignment accuracy.

For unique identifier (ID) assignment, our strategy leveraged tools such as SciSpacy27 and QuickUMLS, as well as cosine similarity metrics computed from SapBERT embeddings. Entities in categories such as phenotype, method, signs, health factors, and drug were linked to the UMLS30. Disease entities were connected via the MONDO43 database or UMLS, anatomy entities through UBERON44, and genes and physiological functions via the Gene Ontology (GO45). More precisely, we computed the cosine similarity between an entity and every entity within the corresponding databases through SapBert embeddings. The entity that demonstrated the highest similarity, exceeding a threshold of 0.9, was selected as the link target. Due to the extensive number of entities in UMLS, making an exhaustive comparison unfeasible, our method for UMLS was distinct. We employed QuickUMLS or SciSpacy to generate a list of candidate entities for each term, then calculated the SapBert embeddings’ cosine similarity among these candidates and the original entity.

For entities not linked to a specific database, we clustered them based on their SapBert embeddings and selected the most frequently occurring entity from each cluster as the standard entity. Subsequently, we assigned a unique ID to each cluster of entities to ensure their distinct recognition within our MDKG.

Representing patients’ medical history using knowledge graph embeddings

After collecting a patient’s medical history, we generate their medical KG embeddings through the following steps:

-

1.

Our approach begins by mapping entities from MDKG to ICD-10 codes by calculating the SapBERT embeddings for each entity and ICD-10 codes, then determining their similarity. Using the established mapping between ICD-10 codes and Phecodes, we further map the MDKG entities to their corresponding Phecodes. SapBERT is particularly well-suited for this task due to its pre-training on the UMLS, a comprehensive database that includes a wide array of biomedical and clinical terms. This pre-training enables SapBERT to effectively capture the semantic relationships between medical concepts, allowing for accurate mappings of MDKG entities to Phecodes.

-

2.

After mapping MDKG entities to Phecodes, we compute graph embeddings for those entities that successfully map to Phecodes using the RDF2vec46 model. RDF2vec is a highly efficient technique that converts the structure of the KG into fixed-dimensional vector representations. Compared to Graph Neural Networks, RDF2vec requires far fewer computational resources, making it a more feasible option for large-scale applications like ours.

-

3.

Next, we average the graph embeddings of the entities that map to the same Phecode to generate the final Phecode embedding.

-

4.

To generate the final patient embedding, we average the embeddings of all Phecodes recorded for the patient in the UK Biobank. This process results in a compact and informative 200-dimensional medical embedding that summarizes the patient’s medical history for diagnostic predictions.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The annotated dataset used in this study, the Disorders-Related Information Extraction Corpus (MDIEC), has been deposited in the Zenodo repository under accession code [10960357] (https://zenodo.org/records/10960357). The Mental Disorders Knowledge Graph (MDKG) generated in this study is available through the BiomedKG portal at https://biomedkg.com. The individual-level data from the UK Biobank (UKB) used in this study are available under restricted access to protect participant privacy, in accordance with UK Biobank’s data governance policy. Access can be obtained by submitting a research application via the UK Biobank Access Management System (https://www.ukbiobank.ac.uk/enable-your-research/apply-for-access). Approval is limited to qualified researchers at recognized institutions, and access is granted following review and approval of the research protocol. The review timeline varies depending on project complexity and compliance requirements. Once granted, data access is valid for the duration of the approved project in accordance with UKB policy. Source data are provided with this paper.

Code availability

The code used in this study is publicly available on GitHub at https://github.com/MonsterTea/MDKG and is permanently archived with a DOI at Zenodo: https://doi.org/10.5281/zenodo.1569823747.

References

Scangos, K. W., State, M. W., Miller, A. H., Baker, J. T. & Williams, L. M. New and emerging approaches to treat psychiatric disorders. Nat. Med. 29, 317–333 (2023).

Vigo, D., Jones, L., Atun, R. & Thornicroft, G. The true global disease burden of mental illness: still elusive. Lancet Psychiatry 9, 98–100 (2022).

Craske, M. G., Herzallah, M. M., Nusslock, R. & Patel, V. From neural circuits to communities: an integrative multidisciplinary roadmap for global mental health. Nat. Ment. Health 1, 12–24 (2023).

Tozzi, L. et al. Personalized brain circuit scores identify clinically distinct biotypes in depression and anxiety. Nat. Med. 30, 2076–2087 (2024).

Derks, E. M., Thorp, J. G. & Gerring, Z. F. Ten challenges for clinical translation in psychiatric genetics. Nat. Genet. 54, 1457–1465 (2022).

Andreassen, O. A., Hindley, G. F., Frei, O. & Smeland, O. B. New insights from the last decade of research in psychiatric genetics: discoveries, challenges and clinical implications. World Psychiatry 22, 4–24 (2023).

Ji, S., Pan, S., Cambria, E., Marttinen, P. & Yu, P. S. A survey on knowledge graphs: representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 33, 494–514 (2022).

Freidel, S. & Schwarz, E. Knowledge graphs in psychiatric research: potential applications and future perspectives. Acta Psychiatr. Scand. 151, 180–191 (2025).

Hogan, A. et al. Knowledge graphs. ACM Comput. Surv. 54, 1–37 (2021).

Shen, T. et al. Increased cognition connectivity network in major depression disorder: a FMRI study. Psychiatry Investig. 12, 227–234 (2015).

Sun, H. et al. MMiKG: a knowledge graph-based platform for path mining of microbiota-mental diseases interactions. Brief. Bioinform. 24, 340 (2023).

Fu, C., Huang, Z., Harmelen, F., He, T. & Jiang, X. Food recommendation for mental health by using knowledge graph approach. In Proc. Health Information Science: 11th International Conference, HIS 2022, Virtual Event, October 28-30, 2022 231–242. https://doi.org/10.1007/978-3-031-20627-6_22 (Springer, 2022).

Li, Z. Construction of Depression Knowledge Graph Based on Biomedical Literature. In Proc. 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) 1849–1855. https://doi.org/10.1109/BIBM52615.2021.9669447; https://ieeexplore.ieee.org/document/9669447 (2021).

Huang, Z., Yang, J., Harmelen, F. & Hu, Q. Constructing knowledge graphs of depression. in (eds Siuly, S., Huang, Z., Aickelin, U., Zhou, R., Wang, H., Zhang, Y. & Klimenko, S.) Health Information Science. Lecture Notes in Computer Science. 149–161. https://doi.org/10.1007/978-3-319-69182-4_16 (Springer, 2017).

Wang, Y. & Yang, W. Research on the method of constructing knowledge graph of affective disorder based on multi-source data fusion. In Proc. 5th International Conference on Information Technologies and Electrical Engineering. ICITEE ’22 57–60. https://doi.org/10.1145/3582935.3582945 (Association for Computing Machinery, (2023).

Dang, L. D., Phan, U. T. P. & Nguyen, N. T. H. GENA: A knowledge graph for nutrition and mental health. J. Biomed. Inform. 145, 104460 (2023).

Miller, D. L. The nature of scientific statements. Philos. Sci. 14, 219–223 (1947).

Vishne, T. et al. Fibromyalgia among major depression disorder females compared to males. Rheumatol. Int. 28, 831–836 (2008).

Chandak, P., Huang, K. & Zitnik, M. Building a knowledge graph to enable precision medicine. Sci. Data 10, 67 (2023).

Morris, J. H. & Soman, K. et al. The scalable precision medicine open knowledge engine (SPOKE): a massive knowledge graph of biomedical information. Bioinformatics 39, 080 (2023).

Wu, P. et al. Mapping ICD-10 and ICD-10-CM codes to Phecodes: workflow development and initial evaluation. JMIR Med. Inform. 7, e14325 (2019).

Bastarache, L. Using phecodes for research with the electronic health record: from PheWAS to PheRS. Annu. Rev. Biomed. Data Sci. 4, 1–19 (2021).

Nobile, F., Forastiere, A., Michelozzi, P., Forastiere, F. & Stafoggia, M. Long-term exposure to air pollution and incidence of mental disorders. A large longitudinal cohort study of adults within an urban area. 181, 108302. https://doi.org/10.1016/j.envint.2023.108302.

Li, Z. et al. Air pollution interacts with genetic risk to influence cortical networks implicated in depression. 118, 2109310118. https://doi.org/10.1073/pnas.2109310118.

Global, regional, and national burden of 12 mental disorders in 204 countries and territories, 1990-2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet Psychiatry 9, 137–150. https://doi.org/10.1016/S2215-0366(21)00395-3 (2022).

Sung, M. et al. BERN2: an advanced neural biomedical named entity recognition and normalization tool. Bioinformatics 38, 4837–4839 (2022).

Neumann, M., King, D., Beltagy, I. & Ammar, W. ScispaCy: fast and robust models for biomedical natural language processing. In Proc. 18th BioNLP Workshop and Shared Task 319–327. https://doi.org/10.18653/v1/W19-5034; https://www.aclweb.org/anthology/W19-5034 (Association for Computational Linguistics, 2019).

Eftimov, T., Koroušić Seljak, B. & Korošec, P. A rule-based named-entity recognition method for knowledge extraction of evidence-based dietary recommendations. PLoS ONE 12, 0179488 (2017).

Soldaini, L. & Goharian, N. QuickUMLS: a fast, unsupervised approach for medical concept extraction. MedIR Workshop, SIGIR (2016).

Bodenreider, O. The Unified Medical Language System (UMLS): integrating biomedical terminology. Nucleic Acids Res. 32, 267–270 (2004).

Mintz, M., Bills, S., Snow, R. & Jurafsky, D. Distant supervision for relation extraction without labeled data. In: Proc. Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP (eds Su, K.-Y., Su, J., Wiebe, J. & Li, H.) 1003–1011. https://aclanthology.org/P09-1113 (Association for Computational Linguistics, 2009).

Wang, B. et al. Towards understanding chain-of-thought prompting: an empirical study of what matters. In Proc. 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (eds Rogers, A., Boyd-Graber, J. & Okazaki, N.) 2717–2739. https://doi.org/10.18653/v1/2023.acl-long.153; https://aclanthology.org/2023.acl-long.153 (Association for Computational Linguistics, 2023).

Yu, Y., Kong, L., Zhang, J., Zhang, R. & Zhang, C. AcTune: uncertainty-based active self-training for active fine-tuning of pretrained language models. In Proc. 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (eds Carpuat, M., Marneffe, M.-C., Meza Ruiz, I. V.) 1422–1436. https://doi.org/10.18653/v1/2022.naacl-main.102; https://aclanthology.org/2022.naacl-main.102 (Association for Computational Linguistics, 2022).

Eberts, M. & Ulges, A. Span-based joint entity and relation extraction with transformer pre-training. Front. Artif. Intell. Appl. 325, 2006–2013 (2020).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In: Proc. Conf. North Am. Chapter Assoc. Comput. Linguist. Vol. 1, 4171–4186 (NAACL-HLT, 2019).

Bekoulis, G., Deleu, J., Demeester, T. & Develder, C. Joint entity recognition and relation extraction as a multi-head selection problem. Expert Syst. Appl. 114, 34–45 (2018).

Zeng, S., Yuan, Z. & Yu, S. Automatic biomedical term clustering by learning fine-grained term representations. In Proc. 21st Workshop on Biomedical Language Processing 91–96. https://aclanthology.org/2022.bionlp-1.8 (Association for Computational Linguistics, 2022).

Wu, C., Wu, F., Qi, T. & Huang, Y. NoisyTune: a little noise can help you finetune pretrained language models better. In: Proc. 60th Annu. Meet. Assoc. Comput. Linguist. (Vol. 2: Short Papers), 680–685. (ACL, 2022).

Wang, Z., Jiang, Z., Nyberg, E. & Neubig, G. Table retrieval may not necessitate table-specific model design. arXiv https://doi.org/10.48550/arXiv.2205.09843; http://arxiv.org/abs/2205.09843 (2022).

PaddlePaddle/PaddleOCR. PaddlePaddle. original-date: 2020-05-08T10:38:16Z. Accessed 27 November 2023, https://github.com/PaddlePaddle/PaddleOCR (2023).

Singer-Vine, J. The pdfplumber contributors pdfplumber. original-date: 2015-08-24T03:14:48Z. https://github.com/jsvine/pdfplumber (2023).

Johnson, J., Douze, M. & Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 7, 535–547 (2019).

Vasilevsky, N. A. et al. Mondo: unifying diseases for the world, by the world. medRxiv https://doi.org/10.1101/2022.04.13.22273750 (2022).

Mungall, C. J., Torniai, C., Gkoutos, G. V., Lewis, S. E. & Haendel, M. A. Uberon, an integrative multi-species anatomy ontology. Genome Biol. 13, 5 (2012).

Ashburner, M. et al. Gene Ontology: tool for the unification of biology. Nat. Genet. 25, 25–29 (2000).

Paulheim, H., Ristoski, P. & Portisch, J. Embedding Knowledge Graphs with RDF2vec. Synthesis Lectures on Data, Semantics, and Knowledge. https://doi.org/10.1007/978-3-031-30387-6; https://link.springer.com/10.1007/978-3-031-30387-6 (Springer, 2023).

Gao, S. Collaborators MDKG: Mental Disorders Knowledge Graph Construction Code. https://github.com/MonsterTea/MDKG. Version used in this study. https://doi.org/10.5281/zenodo.15698237 (2025).

Lopez, O.L., Becker, J.T., Sweet, R.A., Martin-Sanchez, F.J. & Hamilton, R.L. Lewy bodies in the amygdala increase risk for major depression in subjects with Alzheimer disease. 67, 660–665. https://doi.org/10.1212/01.wnl.0000230161.28299.3c.

Acknowledgements

We thank the UK Biobank for providing access to the dataset used in this study. This work was supported by the National Key R&D Program of China (No. 2022YFA1003701; N.T.) and the Gilling Innovative Lab on Generative AI (H.Z.).

Author information

Authors and Affiliations

Contributions

S.G., K.Y., Y.Y., N.T., and H.Z. proposed the concept and designed the methodology. S.G., K.Y., Y.Y., and H.Z. supervised the project. S.G. and C.S. annotated the dataset. S.G. carried out the analysis and drafted the initial manuscript. S.G., K.Y., and Y.Y. developed the website. S.G., K.Y., Y.Y., S.Y., X.W., and H.Z. suggested revision ideas and revised the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Kexin Huang, Sebastian Olbrich, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Source data

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gao, S., Yu, K., Yang, Y. et al. Large language model powered knowledge graph construction for mental health exploration. Nat Commun 16, 7526 (2025). https://doi.org/10.1038/s41467-025-62781-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-025-62781-z