Abstract

A situation anticipated in applications is one in which the system of interest has never been encountered before and sparse observations can be made only once. Can the dynamics be faithfully reconstructed? We address this challenge by developing a hybrid transformer and reservoir-computing scheme. The transformer is trained without using data from the target system, but with essentially unlimited synthetic data from known chaotic systems. The trained transformer is then tested with the sparse data from the target system, and its output is further fed into a reservoir computer for predicting the long-term dynamics or the attractor of the target system. The proposed hybrid machine-learning framework is tested using various prototypical nonlinear systems, demonstrating that the dynamics can be faithfully reconstructed from reasonably sparse data. The framework provides a paradigm for reconstructing complex and nonlinear dynamics in situations where training data do not exist and the observations are random and sparse.


Introduction

In applications of complex systems, observations are fundamental to tasks such as mechanistic understanding, dynamics reconstruction, state prediction, and control. When the available data are complete in the sense that the data points are sampled according to the Nyquist criterion and no points are missing, it is possible to extract the dynamics or even find the equations of the system from data by sparse optimization1,2. In machine learning, reservoir computing has been widely applied to complex and nonlinear dynamical systems for tasks such as prediction3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23, control24, and signal detection25. Quite recently, Kolmogorov–Arnold networks (KANs)26, which are typically small neural networks, were proposed for discovering dynamics from data; in some cases they even permit symbolic regression that identifies the exact mathematical equations and parameters. It has also been demonstrated27 that KANs can uncover the dynamical system in situations where sparse-optimization methods fail. In all these applications, an essential requirement is that the time-series data are complete in the Nyquist sense.

A challenging but not uncommon situation is one in which a new system is to be learned and eventually controlled based on limited observations. Two difficulties arise in this case. First, being new means that the system has not been observed before, so no previous data or recordings exist. If one intends to exploit machine learning to learn and reconstruct the dynamics of the system from observations, no training data are available. Second, the observations may be irregular and sparse: the data are not collected at a uniform time interval (e.g., as determined by the Nyquist criterion28) but at random times, with far fewer points in total than Nyquist sampling would provide. It is also possible that the observations can be made only once. The question is: provided with one-time sparse observations or time-series data, is it still possible to faithfully reconstruct the dynamics of the underlying system?

Limited observations or data occur in various real-world situations29,30. For example, ecological data gathered from diverse and dynamic environments inevitably contain gaps caused by equipment failure, weather conditions, limited access to remote locations, and temporal or financial constraints. Similarly, in medical systems and human activity tracking, data collection frequently suffers from issues such as patient noncompliance, recording errors, loss to follow-up, and technical failures. Wearable devices present additional challenges, including battery depletion, user error, signal interference from clothing or environmental factors, and inconsistent wear patterns during sleep or specific activities where devices may need to be removed. A common feature of these scenarios is that the available data come from random times without any discernible pattern. The problem is especially severe when the data are sparse: reconstructing the dynamics from sparse and random data is particularly challenging for nonlinear dynamical systems, because chaos can lead to sensitive dependence on small variations. For example, large errors may arise when predicting the values of the dynamical variables in the intervals in which data are missing. However, if training data from the same target system are available, machine learning can be effective for reconstructing the dynamics from sparse data31 (additional background on machine-learning approaches is provided in Supplementary Note 1).

It is necessary to define what we mean by random and sparse data. We consider systems whose dynamics occur within a certain finite frequency band. For chaotic systems with a broad power spectrum, the cutoff frequency can in principle be arbitrarily large, but the power contained near and beyond a suitably chosen cutoff frequency is often much smaller than that in the low-frequency domain and can be neglected, leading in practice to a finite yet still large bandwidth (see Supplementary Note 3). A meaningful Nyquist sampling frequency can then be defined. An observational dataset is complete if the time series are recorded at the regular time interval determined by the Nyquist frequency with no missing points. In this case, the original signal can be faithfully reconstructed. Random and sparse data mean that some portion of the data points determined by the Nyquist frequency are missing at random times. We aim to reconstruct the system dynamics from random and sparse observations by developing a machine-learning framework that generates smooth, continuous time series. When the governing equations of the underlying system are unknown and/or historical observations of the full dynamical trajectory are not available, the resulting lack of any training data makes the reconstruction task quite challenging. Indeed, since the system cannot be fully measured and only irregularly observed data points are available, direct inference of the dynamical trajectory from these points is infeasible. Furthermore, both the number of available observed data points and the number of data points to be interpolated can be uncertain.

In practice, the sampling interval Δs is chosen to generate the complete dataset of a dynamical system. It should ensure that the attractor remains sufficiently smooth while limiting the number of sampled points; specifically, the norm of the first derivative of the chaotic signals is kept below a predefined small threshold. Let the total number of samples in the dataset be Ls and the number of randomly selected observational points be \({L}_{s}^{O}\). The sparsity measure of the dataset can be defined as \({S}_{m}=({L}_{s}-{L}_{s}^{O})/{L}_{s}\). However, this measure depends on the sampling interval chosen for the dynamical system.

To quantitatively describe the extent of sparsity in the observational data from an information-theoretic perspective, we introduce a metric that incorporates the constraints from the Nyquist sampling theorem:

\[{S}_{r}=\frac{{L}_{s}-{L}_{s}^{O}}{{L}_{s}-{L}_{s}^{N}},\]

where \({L}_{s}^{N}=2{f}_{\max }\cdot T\) represents the minimum number of samples required according to the Nyquist theorem with \({f}_{\max }\) being the effective cutoff frequency of the signal and T the total time duration corresponding to Ls. In this definition, Sr = 0 indicates fully observed data (at the sampling rate), Sr = 1 corresponds to the theoretical minimum sampling case (at the Nyquist rate), and Sr > 1 indicates sub-Nyquist sampling where perfect reconstruction becomes theoretically impossible without additional constraints. Our framework takes into account not only high sparsity but also the randomness in observations. More information about the determination of cutoff frequency of chaotic systems can be found in Supplementary Note 3.
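Both sparsity measures follow directly from sample counts. The minimal Python sketch below assumes \({S}_{r}=({L}_{s}-{L}_{s}^{O})/({L}_{s}-{L}_{s}^{N})\), the form consistent with the values quoted in this paper (Sr = 1 with Sm = 0.86, and Sr = 0.93 with Sm = 0.8, both at Ls = 2000), and it is only meaningful when Ls > \({L}_{s}^{N}\). The function names are illustrative and not taken from the authors' code.

```python
def nyquist_samples(f_max: float, duration: float) -> float:
    """Minimum number of samples L_s^N = 2 * f_max * T required by the Nyquist theorem."""
    return 2.0 * f_max * duration


def sparsity_measures(L_s: int, L_obs: int, L_nyq: float) -> tuple[float, float]:
    """Return (S_m, S_r) for L_obs observed points out of L_s complete samples.

    Assumes S_m = (L_s - L_obs) / L_s and S_r = (L_s - L_obs) / (L_s - L_nyq),
    so S_r = 0 for full observation, 1 at the Nyquist count, and > 1 below it.
    """
    if not 0 <= L_obs <= L_s:
        raise ValueError("need 0 <= L_obs <= L_s")
    if L_nyq >= L_s:
        raise ValueError("S_r requires L_s > L_s^N (an oversampled complete record)")
    S_m = (L_s - L_obs) / L_s
    S_r = (L_s - L_obs) / (L_s - L_nyq)
    return S_m, S_r


# Fig. 1 example: f_max = 3 * f_local = 6 over a unit time interval.
print(nyquist_samples(6.0, 1.0))            # 12.0
# Food-chain example: L_s = 2000 and L_s^N = 280.
print(sparsity_measures(2000, 280, 280))    # S_m = 0.86, S_r = 1.0
print(sparsity_measures(2000, 400, 280))    # S_m = 0.8, S_r ≈ 0.93
```

Because Sm normalizes by Ls alone, it changes whenever the sampling interval changes. Sr instead normalizes by the gap between the complete record and the Nyquist minimum, so its value of 1 always marks the Nyquist boundary.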

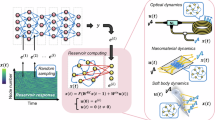

In this paper, we develop a transformer-based machine-learning framework to address the problem of dynamics reconstruction and prediction from random and sparse observations with no training data from the target system. Our key innovation is to train a hybrid machine-learning framework in a laboratory environment using data from a variety of synthetic dynamical systems, rather than from the target system itself, and then to deploy the trained architecture to reconstruct the dynamics of the target system from one-time sparse observations. More specifically, we exploit the machine-learning framework of transformers32, with training data not from the target system but from a number of known synthetic systems that exhibit dynamical behaviors qualitatively similar to those of the target system and for which complete data are available. The training process can thus be regarded as a laboratory-calibration process during which the transformer learns the dynamical rules generating the synthetic but complete data. The so-trained transformer is then deployed to a real application with random and sparse data, where it is expected to adapt to the unseen data and reconstruct the underlying dynamics. To enable long-term prediction of the target system, we feed the output of the transformer into a reservoir computer, an architecture that has been demonstrated to be particularly suitable for predicting nonlinear dynamics3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22. The combination of transformer and reservoir computing constitutes a hybrid machine-learning framework. We demonstrate that it can successfully reconstruct the dynamics of approximately three dozen prototypical nonlinear systems with high accuracy, providing a viable framework for reconstructing complex and nonlinear dynamics in situations where training data from the target system do not exist and the observations or measurements are insufficient.

Figure 1 highlights the challenge of reconstructing the dynamics from sparse data without training data. In particular, Fig. 1a shows the textbook case of a random time series uniformly sampled at a frequency higher than the Nyquist frequency, which can be completely reconstructed. To illustrate random and sparse data in an intuitive setting, we consider a set of six available data points from a unit time interval, as shown in Fig. 1b, c. The time interval contains approximately two periods of oscillation, which defines a local frequency \({f}_{{{{\rm{local}}}}}=2\). As the signal is chaotic or random, the cutoff frequency \({f}_{\max }\) in the power spectrum can be higher than the frequency represented by the two oscillation cycles. As a concrete example, we assume \({f}_{\max }=3{f}_{{{{\rm{local}}}}}=6\), so the Nyquist frequency is \({f}_{{{{\rm{Nyquist}}}}}=2{f}_{\max }=6{f}_{{{{\rm{local}}}}}=12\). If the signal is sampled at the corresponding Nyquist time interval \(\Delta T=1/{f}_{{{{\rm{Nyquist}}}}}=1/12\), 12 data points are needed; if these 12 points are sampled uniformly in time, the signal in the two oscillation cycles can be reconstructed. Two factors make the task challenging: only six data points are available, and they are randomly distributed across the unit time interval. Consider points #5 and #6, which occur during a downward oscillation cycle in the ground truth data. Accurately reconstructing this downward oscillation is a key challenge. When training data from the same target system are available, standard machine-learning techniques can faithfully reconstruct the dynamics31, as illustrated in Fig. 1b. However, without access to training data from the target system, previous methods were unable to reconstruct the dynamics from such sparse observations. A related question is whether, after the reconstruction, the long-term dynamics or attractor of the system can be predicted.
We shall demonstrate that both the reconstruction and long-term prediction problems can be solved with hybrid machine learning, as schematically illustrated in Fig. 1d–f.

a The textbook case of a random time series sampled at a frequency higher than the Nyquist frequency. b Training data from the target system (left) and a segment of time series of six data points in a time interval containing approximately two cycles of oscillation. According to the Nyquist criterion, the signal can be faithfully reconstructed with more than 12 uniformly sampled data points (see text). When the data points are far fewer than 12 and are randomly sampled, reconstruction becomes challenging. However, if training data from the same target system are available, existing machine-learning methods can be used to reconstruct the dynamics from the sparse data31. c If no training data from the target system are available, hybrid machine learning proposed here provides a viable solution to reconstructing the dynamics from sparse data. d Problem statement. Given random and sparse data, the goal is to reconstruct the dynamics of the target system governed by dx/dt = f(x, t). A hurdle that needs to be overcome is that, for any given three points, there exist infinitely many ways to fit the data, as illustrated on the right side. e Training of the machine-learning framework using complete data from a large number of synthetic dynamical systems [h1, h2, ⋯ , hk]. The framework is then adapted to reconstruct and predict the dynamics of the target systems [f1, ⋯ , fm]. f An example: in the testing (deployment) phase, sparse observations are provided to the trained neural network for dynamics reconstruction.

Results

We test our approach on three nonlinear dynamical systems in the deployment phase: a three-species chaotic food-chain system33, the classic chaotic Lorenz system34, and the Lotka–Volterra system35. The transformer had no prior exposure to these systems during its training (adaptation) phase. We use sparse observational data from each system to reconstruct its underlying dynamics. For clarity, in the main text, we present the results for the food-chain system, with those for the other two testing systems given in Supplementary Information.

Altogether, 28 synthetic chaotic systems with the same dimension as the target systems are used to train the transformer, enabling it to learn to extract dynamical behaviors from sparse observations (see Supplementary Note 2 for a detailed description). To enable the transformer to handle data from new, unseen systems of arbitrary time series length Ls and sparsity Sr, we employ the following strategy at each training step: (1) randomly selecting a system from the pool of synthetic chaotic systems and (2) preprocessing the data from the system using a uniformly distributed time series length \({L}_{s} \sim U(1,{L}_{s}^{\max })\) and a uniformly distributed sparsity measure Sm ~ U(0, 1). By so doing, we prevent the transformer from learning any specific system dynamics too well, encouraging it to treat each set of inputs as a new system. In addition, the strategy exposes the transformer to as wide a range of dynamical features as possible. Figure 2a illustrates the training phase, with examples shown on the left side. On the right side, the sampled examples are encoded and fed into the transformer. The MSE loss and the smoothness loss, computed from the output and the ground truth, are used to update the neural network weights. For predicting the long-term dynamics, reservoir computing (Supplementary Note 4) is used. Hyperparameter optimization for both the transformer and the reservoir computer is described in Supplementary Note 5.
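The triple-randomness sampling step (random system, random length, random sparsity) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline. The pool of precomputed trajectories, the zero-fill masking convention, and the second-difference form and weight `lam` of the smoothness penalty are all hypothetical choices made for the example.

```python
import numpy as np


def make_training_sample(pool, L_max, rng):
    """Draw one (masked input, mask, target) triple using triple randomness.

    pool: list of complete trajectories, each of shape (N_i, d) with N_i >= L_max
          (a hypothetical pre-simulated pool of synthetic chaotic systems).
    """
    traj = pool[rng.integers(len(pool))]                  # (1) random training system
    L_s = int(rng.integers(1, L_max + 1))                  # (2) L_s ~ U(1, L_max)
    start = int(rng.integers(0, traj.shape[0] - L_s + 1))
    target = traj[start:start + L_s]                       # complete segment = ground truth
    S_m = rng.uniform(0.0, 1.0)                            # (3) S_m ~ U(0, 1)
    n_obs = int(round((1.0 - S_m) * L_s))
    mask = np.zeros(L_s, dtype=bool)
    mask[rng.choice(L_s, size=n_obs, replace=False)] = True
    masked = np.where(mask[:, None], target, 0.0)          # assumed convention: zero-fill gaps
    return masked, mask, target


def reconstruction_loss(output, target, lam=0.1):
    """MSE plus an assumed smoothness penalty on squared second differences in time."""
    mse = np.mean((output - target) ** 2)
    smooth = np.mean(np.diff(output, n=2, axis=0) ** 2) if output.shape[0] > 2 else 0.0
    return float(mse + lam * smooth)


rng = np.random.default_rng(0)
pool = [rng.standard_normal((5000, 3)) for _ in range(4)]  # stand-ins for simulated systems
masked, mask, target = make_training_sample(pool, L_max=3000, rng=rng)
```

Because each call redraws the system, the segment length, and the mask, no two training examples share the same configuration. This is the mechanism by which the strategy discourages memorizing any single system.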

a Training (adaptation) phase, where the model is trained on various synthetic chaotic systems, each divided into segments with uniformly distributed sequence lengths Ls and sparsity measure Sm. The data are masked before being input into the transformer, and the ground truth is used to minimize the MSE (mean squared error) loss and smoothness loss with the output. By learning a randomly chosen segment from a random training system each time, the transformer is trained to handle data with varying lengths and different levels of sparsity. b Testing (deployment) phase. The testing systems are distinct from those in the training phase, i.e., the transformer is not trained on any of the testing systems. Given a sparsely observed set of points, the transformer is able to reconstruct the dynamical trajectory.

Dynamics reconstruction

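The three-species food-chain system33 is described by

\[\begin{aligned}\frac{dR}{dt}&=R\left(1-\frac{R}{K}\right)-\frac{{x}_{c}{y}_{c}CR}{R+{R}_{0}},\\ \frac{dC}{dt}&={x}_{c}C\left(\frac{{y}_{c}R}{R+{R}_{0}}-1\right)-\frac{{x}_{p}{y}_{p}PC}{C+{C}_{0}},\\ \frac{dP}{dt}&={x}_{p}P\left(\frac{{y}_{p}C}{C+{C}_{0}}-1\right),\end{aligned}\]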

where R, C, and P are the population densities of the resource, consumer, and predator species, respectively. The system has seven positive parameters: K, xc, yc, xp, yp, R0, and C0. Figure 2b presents an example of reconstructing the dynamics of the chaotic food-chain system for Ls = 2000 and Sr = 1 (Sm = 0.86). The target output time series for each dimension should contain 2000 points (about 40 cycles of oscillation), but only \({L}_{s}^{O}={L}_{s}^{N}=280\) randomly selected points are exposed to the trained transformer. The right side of Fig. 2b shows the reconstructed time series, where the three dynamical variables are represented by different colors, the black points indicate observations, and the gray dashed lines are the ground truth. Only a quarter of the points is displayed. This example demonstrates that, at such a high level of sparsity, directly connecting the observational points would lead to significant errors. Instead, the transformer infers the dynamics by filling the gaps with the correct dynamical behavior. It is worth emphasizing that the testing system was never exposed to the transformer during the training phase, so the transformer must infer the unknown underlying dynamics from sparse observations based on experience learned from other systems. Extensive results with varying values of Ls and Sr for the three testing systems can be found in Supplementary Note 6.
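To make the test setup concrete, the sketch below integrates the food-chain model with a classical fourth-order Runge–Kutta scheme and then exposes only 280 of 2000 points at random, mirroring the Sr = 1 example. The right-hand side uses the standard saturating (Holling type II) food-chain form. The parameter values, initial condition, integration step, and transient length are assumptions (values commonly quoted for a chaotic regime), not taken from this paper, and the step size is not the paper's sampling interval Δs.

```python
import numpy as np

# Assumed parameter values (commonly quoted for a chaotic regime); not given in this text.
PARAMS = dict(K=0.98, xc=0.4, yc=2.009, xp=0.08, yp=2.876, R0=0.16129, C0=0.5)


def food_chain_rhs(u, K, xc, yc, xp, yp, R0, C0):
    """dR/dt, dC/dt, dP/dt for resource R, consumer C, predator P (saturating uptake)."""
    R, C, P = u
    fR = R / (R + R0)          # consumer's functional response to the resource
    fC = C / (C + C0)          # predator's functional response to the consumer
    return np.array([
        R * (1.0 - R / K) - xc * yc * C * fR,
        xc * C * (yc * fR - 1.0) - xp * yp * P * fC,
        xp * P * (yp * fC - 1.0),
    ])


def simulate(u0, dt=0.1, n_steps=2000, n_transient=1000, params=PARAMS):
    """Classical RK4 integration; returns n_steps states after discarding a transient."""
    u = np.asarray(u0, dtype=float)
    out = np.empty((n_steps, 3))
    f = lambda v: food_chain_rhs(v, **params)
    for i in range(n_transient + n_steps):
        k1 = f(u)
        k2 = f(u + 0.5 * dt * k1)
        k3 = f(u + 0.5 * dt * k2)
        k4 = f(u + dt * k3)
        u = u + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        if i >= n_transient:
            out[i - n_transient] = u
    return out


traj = simulate([0.55, 0.35, 0.8])                  # complete record, L_s = 2000
rng = np.random.default_rng(0)
obs_idx = np.sort(rng.choice(traj.shape[0], size=280, replace=False))
x_obs = traj[obs_idx]                               # the only data a reconstructor would see
```

The pair `(obs_idx, x_obs)` is the kind of input the deployed transformer receives, while `traj` plays the role of the gray dashed ground truth.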

Performance of dynamics reconstruction

To characterize the performance of dynamics reconstruction, we use two measures: the MSE and the prediction stability Rs(MSEc), defined as the probability that the transformer generates stable predictions (see "Methods"). Figure 3a shows the working of the framework in the testing phase: a well-trained transformer receives inputs from previously unseen systems, with random sequence length Ls and sparsity Sr, and reconstructs the dynamics. Some representative time-series segments of the reconstruction and the ground truth are displayed. Figure 3b, c depict the ensemble-averaged reconstruction performance for the chaotic food-chain system. As Ls increases and Sr decreases, the transformer gains more information to facilitate reconstruction; as the available data become sparser, the performance degrades. Overall, with randomly placed observations, satisfactory reconstruction of new dynamics can be achieved for Sr ≤ 1.0 and a sequence length larger than 500 (about 10 cycles of oscillation).
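The two reconstruction measures can be sketched as follows. The MSE is standard. The exact definition of Rs(MSEc) is in the paper's Methods, which are not reproduced here, so this sketch assumes it is the fraction of independent realizations whose MSE falls below the threshold MSEc = 0.01. The per-run values in the usage lines are hypothetical, for illustration only.

```python
import numpy as np


def mse(pred, truth):
    """Mean squared error over all time steps and variables."""
    pred, truth = np.asarray(pred, float), np.asarray(truth, float)
    return float(np.mean((pred - truth) ** 2))


def stability_rate(mse_values, mse_c=0.01):
    """Assumed form of R_s(MSE_c): fraction of realizations with MSE < MSE_c."""
    return float(np.mean(np.asarray(mse_values, float) < mse_c))


# Hypothetical MSEs from four independent realizations (illustrative numbers only).
per_run = [0.002, 0.004, 0.03, 0.2]
ensemble_mse = float(np.mean(per_run))   # ensemble-averaged MSE, as in Fig. 3b
r_s = stability_rate(per_run)            # two of four runs fall below 0.01
```

Reporting both numbers is informative because a single divergent realization can inflate the ensemble-averaged MSE while barely changing Rs.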

a Illustration of reconstruction results for the chaotic food-chain and Lotka–Volterra systems as the testing targets that the transformer has never been exposed to. For each target system, two sets of sparse measurements of different length Ls and sparsity Sr are shown. The trained transformer reconstructs the complete time series in each case. b Color-coded ensemble-averaged MSE values in the parameter plane (Ls, Sr) (b1). Examples of testing MSE versus Sr and Ls only are shown in (b2) and (b3), respectively. c Ensemble-averaged reconstruction stability indicator Rs(MSEc) versus Sr and Ls, with threshold MSEc = 0.01. d Robustness of dynamics reconstruction against noise: ensemble-averaged MSE in the parameter plane (σ, Sr) (d1) and (σ, Ls) (d2), with σ being the noise amplitude. An example of reconstruction under noise of amplitude σ = 0.1 is shown in (d3). The values of the performance indicators are the result of averaging over 50 independent statistical realizations.

It is essential to assess how noise affects the dynamics reconstruction. Figure 3d shows the effects of multiplicative noise (see "Methods") on the reconstruction performance. The results indicate that, for reasonably small noise (e.g., \(\sigma < {10}^{-1}\)), robust reconstruction with relatively low MSE values can be achieved. We have also studied the effect of additive noise, with results presented in Supplementary Note 7.
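The noise model itself is defined in the paper's Methods, which are not reproduced here. The sketch below therefore assumes the common forms: multiplicative noise x(1 + σξ) and additive noise x + σξ, with ξ drawn independently from a standard normal distribution for each observed value. The function name `add_noise` is hypothetical.

```python
import numpy as np


def add_noise(x, sigma, kind="multiplicative", rng=None):
    """Corrupt observations with Gaussian noise of amplitude sigma (assumed noise models)."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, float)
    xi = rng.standard_normal(x.shape)
    if kind == "multiplicative":
        return x * (1.0 + sigma * xi)   # perturbation scales with the signal magnitude
    if kind == "additive":
        return x + sigma * xi           # perturbation independent of the signal magnitude
    raise ValueError(f"unknown noise kind: {kind!r}")


x_obs = np.linspace(0.5, 1.5, 5)
noisy = add_noise(x_obs, sigma=0.1, rng=np.random.default_rng(1))
```

Under the multiplicative model, variables near zero receive proportionally small perturbations. This is one reason the two noise types can affect reconstruction differently.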

Key features of dynamics reconstruction

The transformer is able to reconstruct the time series of previously unknown dynamical systems, particularly under high sparsity. This capability stems from the generalization ability that the transformer acquires when it is trained on sufficiently diverse chaotic systems with abundant data. Here we study how the reconstruction performance depends on the number of training systems. Specifically, we train the transformer on k chaotic systems, where k ranges from 1 to 28. For each value of k, we randomly sample a subset of k systems from the pool of 28 chaotic systems and calculate the average MSE over 50 iterations. To ensure robustness, the MSE is averaged across values of the sparsity measure Sm from 0 to 1 at intervals of 0.05. As shown in Fig. 4a, the MSE decreases with increasing k following a power-law trend with saturation, demonstrating that training our transformer-based framework on a diverse set of chaotic systems with sufficient data is crucial for successful dynamics reconstruction. Moreover, once the model has acquired this generalization ability, it can infer the governing dynamics of new systems from sparse observations. To demonstrate this, we show that our trained transformer performs well on 28 additional unseen target systems36 (Supplementary Note 12).
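A power-law trend with saturation can be summarized by fitting MSE(k) ≈ a k^(−b) + c, where c is the saturation floor. The functional form and the use of SciPy's `curve_fit` are assumptions for illustration. The check below fits noise-free synthetic values generated from known parameters, not the paper's data.

```python
import numpy as np
from scipy.optimize import curve_fit


def saturating_power_law(k, a, b, c):
    """MSE(k) = a * k**(-b) + c: power-law decay toward a floor c."""
    return a * np.power(k, -b) + c


def fit_power_law(k, mse):
    """Fit (a, b, c) with all parameters constrained to be non-negative."""
    k, mse = np.asarray(k, float), np.asarray(mse, float)
    p0 = (mse[0], 1.0, 0.5 * mse.min())            # rough starting guess
    params, _ = curve_fit(saturating_power_law, k, mse, p0=p0, bounds=(0.0, np.inf))
    return params


# Synthetic check with known parameters (a = 0.5, b = 1.2, c = 0.01), k = 1..28 as in the text.
k = np.arange(1, 29)
a, b, c = fit_power_law(k, saturating_power_law(k, 0.5, 1.2, 0.01))
```

The fitted exponent b quantifies how quickly additional training systems pay off, and c estimates the error that more systems alone would not remove.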

a Power-law decrease of MSE as the number of training systems k increases. b Scaled dominant frequency \({f}_{d}^{s}\) of training and target systems. c Example of a time series reconstructed by the transformer, compared with linear and spline interpolations, shown in blue, green, and orange, respectively. Traditional interpolation methods fail to recover the time series accurately due to their inability to capture the underlying dynamics. d MSE versus sparsity. While all methods perform similarly under low sparsity, the transformer outperforms the other two methods in reconstructing dynamics when the observational points are sparse. In all cases, 50 independent realizations are used. Error bars and shaded areas represent standard deviations across these realizations.

To assess the frequency properties of the training and target systems more systematically, we analyze the power spectral density (PSD) of each system; the calculated PSDs are shown in Supplementary Note 3. Since each chaotic system is assigned a different sampling time Δs, chosen to ensure a smooth attractor while limiting the number of sample points, the frequencies must be compared in a consistent manner. To achieve this, we normalize the dominant frequency fd by the sampling time, i.e., we compute the scaled dominant frequency \({f}_{d}^{s}={f}_{d}\cdot \Delta s\) for each system, ensuring a fair comparison across systems. As depicted in Fig. 4b, \({f}_{d}^{s}\) exhibits a broad distribution across the systems, and the target systems fall within the wide range spanned by the training systems, which contributes to the strong generalizability observed.
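One way to compute the scaled dominant frequency is to estimate the PSD of the sampled series with Welch's method and locate its peak. If the PSD is computed with a sampling frequency of 1 (one unit per sample), the frequency axis is already in cycles per sample, which equals fd·Δs, so no explicit rescaling is needed. The choice of Welch's method and the segment length are assumptions; Supplementary Note 3 may use a different estimator.

```python
import numpy as np
from scipy.signal import welch


def scaled_dominant_frequency(x, nperseg=1024):
    """Return f_d^s = f_d * Δs for a uniformly sampled scalar series x.

    With fs=1.0, Welch frequencies are in cycles per sample, which equals f_d * Δs.
    """
    x = np.asarray(x, float)
    freqs, psd = welch(x - x.mean(), fs=1.0, nperseg=min(nperseg, x.size))
    return float(freqs[1:][np.argmax(psd[1:])])   # skip the zero-frequency bin


# A 2 Hz oscillation sampled every Δs = 0.01 s has f_d^s = 2 * 0.01 = 0.02.
dt = 0.01
t = np.arange(8192) * dt
f_s = scaled_dominant_frequency(np.sin(2 * np.pi * 2.0 * t))
```

The estimate is resolved only to one frequency bin, 1/nperseg cycles per sample, which is ample for comparing systems whose scaled frequencies are spread over a broad range.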

While our method is applicable across a wide range of sparsity, traditional techniques such as linear and spline interpolation can also achieve high accuracy when the sparsity level is low. These classical methods are simple and computationally efficient, and they perform adequately in regimes with sufficient observational data. However, as the sparsity level increases, the limitations of traditional interpolation become evident. To demonstrate this explicitly, we take two traditional interpolation methods as examples: linear interpolation, which approximates missing values by connecting the nearest available data points with straight lines, and spline interpolation, which constructs piecewise polynomial functions to produce smooth transitions between observed points37,38. Despite their simplicity, both methods by design lack the capacity to capture the intrinsic dynamics of complex systems. Figure 4c shows a representative example for sparsity Sr = 1.0 (Sm = 0.86), where the transformer successfully reconstructs the underlying dynamics, whereas the linear and spline interpolation methods fail to recover the correct temporal structure.
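The two baselines can be reproduced with standard tools. This sketch uses NumPy's `np.interp` for piecewise-linear interpolation and SciPy's `CubicSpline` (default not-a-knot end conditions) for spline interpolation. The paper does not specify which spline variant it used, so this choice is an assumption. Both interpolants pass exactly through the observed points but have no notion of the underlying dynamics.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def interpolation_baselines(t_obs, x_obs, t_full):
    """Linear and cubic-spline reconstructions of one observed variable on t_full.

    t_obs must be strictly increasing. Outside [t_obs[0], t_obs[-1]], np.interp
    holds the end values constant while CubicSpline extrapolates the end polynomials.
    """
    t_obs, x_obs = np.asarray(t_obs, float), np.asarray(x_obs, float)
    linear = np.interp(t_full, t_obs, x_obs)
    spline = CubicSpline(t_obs, x_obs)(t_full)
    return linear, spline


# Dense grid with 20% of points observed at random (endpoints kept to avoid extrapolation).
t_full = np.linspace(0.0, 10.0, 1001)
x_true = np.sin(t_full)
rng = np.random.default_rng(0)
inner = rng.choice(np.arange(1, 1000), size=198, replace=False)
idx = np.sort(np.concatenate(([0, 1000], inner)))
linear, spline = interpolation_baselines(t_full[idx], x_true[idx], t_full)
```

On this smooth, well-sampled sine both baselines work well. The failure mode in Fig. 4c appears when gaps between observations become comparable to or longer than the oscillation period, so that the samples no longer constrain the missing excursions.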

To quantify the performance, we calculate the MSE between the reconstructed time series and the ground truth. Figure 4d shows the reconstruction performance across a range of sparsity levels for a fixed sequence length, Ls = 2000 (approximately 40 oscillation cycles). Results are averaged over 50 independent realizations, with shaded areas indicating the standard deviation. When the sparsity measure Sr is low, all three methods (transformer, linear interpolation, and spline interpolation) perform comparably. However, once Sr exceeds approximately 0.7, the transformer begins to outperform the other methods, with its advantage becoming more pronounced as the available data become increasingly sparse.

In addition to traditional interpolation methods, compressed sensing (CS) can also serve as a signal reconstruction framework. CS assumes that the signal is sparse in a known basis and typically employs optimization-based recovery39,40. Note that in CS, the term sparse refers to the signal having only a few nonzero components when expressed in an appropriate basis (e.g., Fourier or wavelet). This strict assumption can limit the applicability of CS. In contrast, our method is model-free, data-driven, and capable of generalizing across unseen complex dynamical systems, regardless of whether the dynamics are sparse. Simulation results show that, for a target system, CS performs better than the transformer when the observational sparsity is low to moderate. However, when the observational sparsity is high, our hybrid machine-learning framework significantly outperforms CS (Supplementary Note 10). Hereafter, the term high sparsity is used to mean not only a limited number of available observations but also a level of sparsity at which traditional methods, such as linear and spline interpolation, CS, and conventional machine-learning techniques, fail to reconstruct the system accurately.
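For contrast, here is a generic compressed-sensing recovery. A signal assumed sparse in the orthonormal DCT basis is recovered from random samples by orthogonal matching pursuit (OMP). This is a textbook CS instance chosen for illustration, not necessarily the CS variant compared in Supplementary Note 10. The basis, sparsity level, and sample count are assumptions. Recovery succeeds here precisely because the test signal has only three nonzero DCT coefficients, which is the assumption a broadband chaotic signal generally violates.

```python
import numpy as np
from scipy.fft import idct


def dct_basis(n):
    """Columns are orthonormal DCT-II basis vectors, so a signal is x = Psi @ c."""
    return idct(np.eye(n), axis=0, norm="ortho")


def omp(A, y, n_nonzero):
    """Orthogonal matching pursuit: greedily find a sparse c with A @ c ≈ y."""
    col_norms = np.linalg.norm(A, axis=0)
    support, residual = [], y.copy()
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        scores = np.abs(A.T @ residual) / col_norms   # normalized correlations
        if support:
            scores[support] = 0.0                      # never reselect an atom
        support.append(int(np.argmax(scores)))
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef            # re-fit on the whole support
    c = np.zeros(A.shape[1])
    c[support] = coef
    return c


n, m = 256, 100
Psi = dct_basis(n)
c_true = np.zeros(n)
c_true[[5, 20, 47]] = [1.0, -0.7, 0.4]                # 3-sparse in the DCT basis
x_true = Psi @ c_true
rng = np.random.default_rng(0)
idx = np.sort(rng.choice(n, size=m, replace=False))   # random sparse observations
x_hat = Psi @ omp(Psi[idx], x_true[idx], n_nonzero=3)
```

If the same routine is applied to a chaotic record whose energy is spread over many DCT coefficients, a small `n_nonzero` can no longer represent the signal. This is the limitation of the sparsity-in-a-basis assumption noted above.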

Prediction of long-term dynamical climate

The results presented so far are for the reconstruction of relatively short-term dynamics, where the sequence length Ls is limited to below 3000, corresponding to approximately 60 cycles of dynamical oscillation in the data. Can the long-term dynamical behavior or climate, as characterized by, e.g., a chaotic attractor, be faithfully predicted? To address this question, we note that reservoir computing has a demonstrated ability to generate the long-term behavior of chaotic systems5,7,13,16,21. Our solution is therefore to train a reservoir computer on the time series generated by the transformer, so as to further process the reconstructed time series. The trained reservoir computer can then generate time series of the target system of any length, as exemplified in Fig. 5a. It can be seen that the attractor generated by the reservoir computer agrees with the ground truth. More details about reservoir computing, including its training and testing, can be found in Supplementary Note 4.
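A minimal echo state network illustrates the reservoir-computing step. During training, the reservoir is driven by the (here, transformer-reconstructed) series, and a linear readout is fit by ridge regression to predict the next step. The trained network then runs in closed loop, feeding each prediction back as input to generate series of arbitrary length. The architecture details and all hyperparameter values below (reservoir size, spectral radius, input scaling, leak rate, ridge strength, washout) are assumptions for illustration; the paper's reservoir computer is described in Supplementary Note 4. The usage example trains on a clean sine/cosine pair rather than on transformer output.

```python
import numpy as np


class EchoStateNetwork:
    """Minimal leaky echo state network with a ridge-regression readout (illustrative)."""

    def __init__(self, dim, n_res=200, spectral_radius=0.9, input_scale=0.5,
                 leak=1.0, ridge=1e-6, seed=0):
        rng = np.random.default_rng(seed)
        W = rng.uniform(-1.0, 1.0, (n_res, n_res))
        W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale spectral radius
        self.W, self.W_in = W, rng.uniform(-input_scale, input_scale, (n_res, dim))
        self.leak, self.ridge = leak, ridge
        self.r = np.zeros(n_res)
        self.W_out = None

    def _step(self, u):
        pre = self.W @ self.r + self.W_in @ u
        self.r = (1.0 - self.leak) * self.r + self.leak * np.tanh(pre)
        return self.r

    def fit(self, series, washout=100):
        """Teacher forcing: fit W_out so that W_out @ r(t) predicts series[t + 1]."""
        self.r = np.zeros_like(self.r)
        states = np.array([self._step(u) for u in series[:-1]])
        R, Y = states[washout:], series[washout + 1:]
        A = R.T @ R + self.ridge * np.eye(R.shape[1])
        self.W_out = np.linalg.solve(A, R.T @ Y).T
        return float(np.sqrt(np.mean((R @ self.W_out.T - Y) ** 2)))  # training RMSE

    def generate(self, n_steps, u0):
        """Closed loop: each prediction is fed back as the next input."""
        u, out = np.asarray(u0, float), []
        for _ in range(n_steps):
            u = self.W_out @ self._step(u)
            out.append(u)
        return np.array(out)


t = 0.1 * np.arange(3000)
series = np.column_stack([np.sin(t), np.cos(t)])   # stand-in for transformer output
esn = EchoStateNetwork(dim=2)
train_rmse = esn.fit(series[:2500])
pred = esn.generate(50, u0=series[2499])           # continues from the end of training
```

Only the linear readout `W_out` is trained, which is why reservoir training reduces to a single regularized least-squares solve. Once trained, `generate` can be run for as many steps as needed to trace out an attractor.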

a An illustration of hybrid transformer/reservoir-computing framework. The time series reconstructed by the transformer is used to train the reservoir computer that generates time series of the target system of arbitrary length, leading to a reconstructed attractor that agrees with the ground truth. b RMSE and DV versus the sparsity parameter. Shaded areas represent the standard deviation. c Color-coded ensemble-averaged DV in the reservoir-computing hyperparameter plane (Tl, Ns) for Sr = 0.93 (Sm = 0.8). d DV versus training length Tl for Ns = 500 and versus reservoir network size Ns for \({T}_{l}={10}^{5}\). In all cases, 50 independent realizations are used.

To evaluate the performance of the long-term dynamics generated by the reservoir computer, we use two measures: the root MSE (RMSE) and the deviation value (DV) (see "Methods"). Figure 5b presents the short- and long-term prediction performance by comparing the attractors predicted by the reservoir computer with the ground truth. We calculate the RMSE using a short-term prediction length of 150 (approximately 3 cycles of oscillation) and the DV using a long-term prediction length of 10,000 (approximately 200 cycles of oscillation). The reconstructed time series and attractors are close to their respective ground truths when the sparsity parameter Sr is below 0.93, i.e., when the sparsity measure Sm is below 0.8, as indicated by the low RMSE and DV values. The number of available data segments from the target system tends to have a significant effect on the prediction accuracy. Figure 5c, d show the dependence of the DV on two reservoir-computing hyperparameters: the training length Tl and the reservoir network size Ns. As the training length and network size increase, the DV decreases, indicating improved performance.
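The RMSE is standard. The DV is defined in the paper's Methods, which are not reproduced here. The sketch assumes one form used in related reservoir-computing work: project the predicted and true trajectories onto two variables, count visit frequencies on a shared grid, and sum the absolute frequency differences over cells. This gives 0 for identical distributions and 2 for disjoint ones. The choice of projected variables and the grid resolution `n_bins` are assumptions.

```python
import numpy as np


def rmse(pred, truth):
    """Root mean squared error over all time steps and variables."""
    pred, truth = np.asarray(pred, float), np.asarray(truth, float)
    return float(np.sqrt(np.mean((pred - truth) ** 2)))


def deviation_value(pred, truth, dims=(0, 1), n_bins=50):
    """Assumed DV: L1 distance between normalized 2D visit histograms on a shared grid.

    The grid spans the union of both projections, so the projection must have
    nonzero extent along each of the two chosen variables.
    """
    p = np.asarray(pred, float)[:, list(dims)]
    q = np.asarray(truth, float)[:, list(dims)]
    lo = np.minimum(p.min(axis=0), q.min(axis=0))
    hi = np.maximum(p.max(axis=0), q.max(axis=0))
    edges = [np.linspace(lo[i], hi[i], n_bins + 1) for i in range(2)]
    Hp, _, _ = np.histogram2d(p[:, 0], p[:, 1], bins=edges)
    Hq, _, _ = np.histogram2d(q[:, 0], q[:, 1], bins=edges)
    # Per-cell sqrt((f - f_hat)^2) equals |f - f_hat|; sum over all cells.
    return float(np.sum(np.abs(Hp / Hp.sum() - Hq / Hq.sum())))


rng = np.random.default_rng(0)
truth = rng.uniform(0.0, 1.0, (10000, 3))
dv_same = deviation_value(truth, truth)             # identical distributions
dv_disjoint = deviation_value(truth + 10.0, truth)  # non-overlapping distributions
```

Unlike the RMSE, the DV ignores timing and compares only where trajectories spend their time. This makes it suitable for chaotic attractors, where pointwise agreement is lost after a few Lyapunov times.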

Discussion

Exploiting machine learning to understand, predict, and control the behaviors of nonlinear dynamical systems has led to remarkable success in solving problems previously deemed difficult24,41. However, an essential prerequisite for these machine-learning studies is the availability of training data. Often, extensive and uniformly sampled data of the target system are required for training. In addition, in most previous works, training and testing data are from the same system, with a focus on minimizing the average training error on the specific system and greedily improving the performance by incorporating all correlations within the data (the independent and identically distributed, or iid, assumption). While the iid setting can be effective, unforeseen distribution shifts during testing or deployment can cause optimization based purely on the average training error to perform poorly42. Several strategies have been proposed to handle nonlinear dynamical systems. One approach trains neural networks using data from the same system under different parameter regimes, enabling prediction of new dynamical behaviors including critical transitions16. Another method uses data from multiple systems to train neural networks for tasks such as memorizing and retrieving complex dynamical patterns43,44. However, this latter approach fails when encountering novel systems not present in the training data. Meta-learning has been shown to achieve satisfactory performance with only limited data, but training data from the target systems are still required to fine-tune the network weights45. In addition, a quite recent work showed that well-defined, pretrained large language models, not trained on any chaotic data, can predict the short-term and long-term dynamics of chaotic systems46.

We have developed a hybrid transformer-based machine-learning framework to reconstruct the dynamics of target systems under two limitations: (1) the available observational data are random and sparse and (2) no training data from the system are available. We have addressed this challenge by training the transformer using synthetic or simulated data from numerous chaotic systems, completely excluding data from the target system. This allows direct application to the target system without fine-tuning. To ensure the transformer's effectiveness on previously unseen systems, we have implemented a triple-randomness training regime that varies the training system, the input sequence length, and the sparsity level. As a result, the transformer treats each dataset as a new system rather than specializing in the dynamics of any single training system. This process continues with data from different chaotic systems with random input sequence lengths and sparsity until the transformer is experienced enough to perceive the underlying dynamics from sparse observations. The end result of this training process is that the transformer gains knowledge through its experience of adapting to the diverse synthetic datasets. It is worth noting that the dimension of the systems (i.e., the number of variables) used to train the transformer should match that of the target systems in the testing (inference) phase. During the testing or deployment phase, the transformer reconstructs dynamics from sparse data of arbitrary length and sparsity drawn from a completely new dynamical system. When multiple segments of sparse observations are available, we were able to reconstruct the system's long-term climate through a two-step process. First, the transformer repeatedly reconstructs system dynamics from these data segments. Second, reservoir computing uses these transformer-reconstructed dynamics as training data to generate system evolution over any time duration.
The combination of the transformer and reservoir computing constitutes our hybrid machine-learning framework, enables reconstruction of the target system’s long-term dynamics and attractor from sparse data alone.
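The triple-randomness regime can be pictured as a sampler that, for every training example, draws a system, a sequence length Ls, and a sparsity level Sr at random. The NumPy sketch below is illustrative only and is not the implementation used in this work: the function name `sample_training_example`, the pool of pre-normalized trajectories, the bounds `min_len` and `max_sparsity`, the reading of Sr as the fraction of hidden entries, and the zero-filling of hidden entries are all assumptions. Only the 3000-point maximum length is taken from the computational settings.

```python
import numpy as np


def sample_training_example(pool, rng, max_len=3000, min_len=100, max_sparsity=0.9):
    """Draw one (sparse input, full target) pair with three sources of randomness.

    pool: list of min-max-normalized trajectories, each of shape (T, D) with a
    common D and T >= max_len (hypothetical container of synthetic systems).
    min_len and max_sparsity are illustrative bounds, not values from the paper.
    """
    # Randomness 1: which chaotic system the example comes from.
    traj = pool[rng.integers(len(pool))]
    # Randomness 2: the input sequence length L_s (at most max_len points).
    L_s = int(rng.integers(min_len, max_len + 1))
    start = int(rng.integers(0, traj.shape[0] - L_s + 1))
    target = traj[start:start + L_s]
    # Randomness 3: the sparsity S_r, read here as the fraction of hidden entries.
    S_r = float(rng.uniform(0.0, max_sparsity))
    observed = rng.random(target.shape) >= S_r
    # Hidden entries are filled with zeros (an assumed convention).
    sparse_input = np.where(observed, target, 0.0)
    return sparse_input, target, observed, S_r


rng = np.random.default_rng(0)
pool = [rng.random((5000, 3)) for _ in range(4)]  # stand-ins for normalized trajectories
x, y, mask, s_r = sample_training_example(pool, rng)
```

Because every call reshuffles all three factors, no two consecutive examples need share a system, a length, or a sparsity level, which is the property the training regime relies on.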

We emphasize the key feature of our hybrid framework: it reconstructs the dynamics of an unseen dynamical system from sparse observations, even when the available data are highly sparse. We have tested the framework on two benchmark ecosystems and one classical chaotic system. In all cases, with extensive training on synthetic datasets under diverse settings, accurate and robust reconstruction has been achieved. Empirically, the minimum requirements for the transformer to be effective are that the data from the target system span at least 20 average cycles of its natural oscillation and that the sparsity degree be less than 1. For the subsequent learning by reservoir computing, at least three segments of transformer-reconstructed time series are needed to reconstruct the attractor of the target system. We have also addressed issues such as the effect of noise and hyperparameter optimization. The key to the success of the hybrid framework lies in the diversity of the training dynamics: trained on dynamical data from a diverse array of synthetic systems, the transformer gains the ability to reconstruct the dynamics of never-seen target systems. In particular, the reconstruction performance on unseen target systems follows a power-law trend with respect to the number of synthetic systems used during training. We have also provided a counterexample showing that, when the time series lack meaningful dynamics, the framework fails to perform the reconstruction task (Supplementary Note 8).

Both the training and target dynamical systems in our experiments are autonomous, whereas real-world systems are often nonautonomous. To adapt the framework to nonautonomous target systems, we have developed a mixed training strategy that involves both autonomous and nonautonomous systems (Supplementary Note 9). Regarding long-term prediction, climate dynamics are often not stationary but time-varying, i.e., nonautonomous; when the reservoir computer is supplied with high-fidelity outputs generated by the transformer from sparse observations, long-term climate prediction becomes feasible. Moreover, we have demonstrated that our hybrid machine-learning scheme outperforms traditional interpolation methods, traditional recurrent neural networks, and compressed sensing (CS) (Supplementary Note 10). Additional results from chaotic systems are presented in Supplementary Note 12. While the framework performs well on most of the target systems, there are a few cases in which the transformer struggles to produce an accurate reconstruction because of particularly complex dynamics and highly sparse observations (Supplementary Notes 11 and 12).

A natural question is whether our framework can function as a universal model for dynamics reconstruction. The observed power-law relationship between the reconstruction error and the diversity of the training set suggests its potential to function as a foundation model. In addition, extensive experiments on a broad set of target systems have demonstrated the emergence of extrapolation capabilities, in which the model uses global patterns in sparse observations to infer the underlying dynamics. However, establishing a theoretical basis for this generalization remains challenging. Classical statistical learning theory (e.g., VC dimension and the bias-variance trade-off) has proven inadequate for explaining the empirical success of overparameterized deep neural networks47,48. As stated in the Stanford CRFM report49, the community currently has quite a limited theoretical understanding of foundation models. Our current insights are empirical and based on physical intuition, and a rigorous formalization of the inner mechanisms of the hybrid machine-learning framework is still needed.

Moreover, there are constraints related to data segment length and sparsity. Specifically, performance deteriorates if the available data are insufficient or the sparsity exceeds a critical threshold. These thresholds are not universal across systems, and determining them for a new target system is challenging. A reasonable remedy is to supply longer observation windows or more segments when possible. In this work, we have identified such conditions through extensive experiments, and the statistical results consistently reveal trends that are likely to generalize to other systems with similar levels of sparsity and sequence length. This challenge is in fact common in time series analysis. For example, while weather forecasts can be extensively validated against historical data, it remains fundamentally uncertain whether the predictions for the coming days will be accurate; only statistical confidence can be offered.

Overall, our hybrid transformer/reservoir-computing framework has been demonstrated to be effective for dynamics reconstruction and long-term prediction in situations where only sparse observations from a newly encountered system are available. Such situations are expected to arise in many fields. Possible applications extend to medical and biological systems, particularly wearable health monitoring, where data collection is often interrupted. For instance, smartwatches and fitness trackers regularly experience gaps due to charging, removal during activities such as swimming, or signal interference. Another potential application is predicting critical transitions from sparse and noisy observations, such as detecting when an athlete’s performance metrics indicate approaching overtraining or when a patient’s vital signs suggest an impending health event. In these cases, our hybrid framework can reconstruct complete time series from incomplete wearable-device data, which can then serve as input to parameter-adaptable reservoir computing16,50 for anticipating such critical transitions. This approach is particularly valuable for continuous health monitoring, where data gaps are inevitable.

Methods

Hybrid machine learning

Consider a nonlinear dynamical system described by

\[\frac{{\rm{d}}{\bf{x}}(t)}{{\rm{d}}t}={\bf{F}}({\bf{x}}(t)),\]

where \({{{\bf{x}}}}(t)\in {{\mathbb{R}}}^{D}\) is a D-dimensional state vector and F(⋅) is the unknown velocity field. Let \({{{\bf{X}}}}={({{{{\bf{x}}}}}_{1},\cdots,{{{{\bf{x}}}}}_{{L}_{s}})}^{\top }\in {{\mathbb{R}}}^{{L}_{s}\times D}\) be the full, uniformly sampled data matrix of dimension Ls × D, in which each of the D variables is sampled at Ls time points. The sparse observation can be expressed as

\[\tilde{{\bf{X}}}={{\bf{g}}}_{\alpha }({\bf{X}}),\]

where \(\tilde{{{{\bf{X}}}}}\in {{\mathbb{R}}}^{{L}_{s}\times D}\) is the observational data matrix of dimension Ls × D and gα( ⋅ ) is the following element-wise observation function:

with α representing the probability that matrix element Xij is observed. In Eq. (4), Gaussian white noise of amplitude σ is present during the measurement process, where \(\Xi \sim {{{\mathcal{N}}}}(0,1)\). Our goal is to use machine learning to approximate the system dynamics function F(⋅) by another function \({{{{\bf{F}}}}}^{{\prime} }(\cdot )\), assuming that F is Lipschitz continuous with respect to x and that the observation function produces sparse data: \({{{\bf{g}}}}:{{{\bf{X}}}}\to \tilde{{{{\bf{X}}}}}\). To achieve this, it is necessary to design a function \({{{\mathcal{F}}}}(\tilde{{{{\bf{X}}}}})={{{\bf{X}}}}\) that implicitly contains \({{{{\bf{F}}}}}^{{\prime} }(\cdot )\approx {{{\bf{F}}}}(\cdot )\), so that it reconstructs the system dynamics by filling the gaps in the observations. The function \({{{\mathcal{F}}}}\) should be able to adapt to arbitrary unknown dynamics.
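The element-wise observation model can be sketched as follows: each entry of X is kept with probability α and corrupted by σΞ with Ξ ~ N(0, 1). This is a minimal NumPy sketch under stated assumptions, not the paper's code. The name `observe`, the choice to add noise only to kept entries, and the fill value of zero for unobserved entries are assumptions, since the exact form of the observation function is not reproduced in this section.

```python
import numpy as np


def observe(X, alpha, sigma=0.0, rng=None, fill_value=0.0):
    """Sparse, noisy element-wise observation of an (L_s, D) data matrix X.

    Each entry X_ij is kept with probability alpha and corrupted by sigma * Xi,
    Xi ~ N(0, 1); unobserved entries are set to fill_value (assumed convention).
    """
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.random(X.shape) < alpha                 # True where X_ij is observed
    noisy = X + sigma * rng.standard_normal(X.shape)   # measurement noise sigma * Xi
    X_tilde = np.where(mask, noisy, fill_value)
    return X_tilde, mask


rng = np.random.default_rng(1)
X = rng.random((2000, 3))
X_tilde, mask = observe(X, alpha=0.3, rng=rng)
print(f"observed fraction: {mask.mean():.3f}")  # close to alpha = 0.3
```

Returning the mask alongside the observed matrix is a design convenience: it lets a training loop know which entries were actually measured.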

Selecting an appropriate neural network architecture for reconstructing dynamics from sparse data requires meeting two fundamental requirements: (1) dynamical memory to capture long-range dependencies in the sparse data, and (2) flexibility to handle input sequences of varying lengths. Transformers32, originally developed for natural language processing, satisfy both requirements owing to their attention mechanism. In particular, transformers have been widely applied and proven effective for time series analysis, such as prediction51,52,53, anomaly detection54, and classification55. Figure 6 illustrates the main structure of the transformer. The data matrix \(\tilde{{{{\bf{X}}}}}\) is first processed by a fully-connected linear layer with bias, which transforms it into an Ls × N matrix. This output is then combined with a positional encoding matrix, which embeds temporal ordering information into the time series data. This projection process can be described as56:

\[{{\bf{X}}}_{p}=\tilde{{\bf{X}}}{{\bf{W}}}_{p}+{{\bf{W}}}_{b}+{\bf{PE}},\]

where \({{{{\bf{W}}}}}_{p}\in {{\mathbb{R}}}^{D\times N}\) is the weight matrix of the fully-connected layer, \({{{{\bf{W}}}}}_{b}\in {{\mathbb{R}}}^{{L}_{s}\times N}\) is its bias matrix, and \({{{\bf{PE}}}}\in {{\mathbb{R}}}^{{L}_{s}\times N}\) is the positional encoding matrix. Since the transformer does not inherently capture the order of the input sequence, positional encoding is needed to provide information about the position of each time step. For a given position \(1\le {{{\rm{pos}}}}\le {L}_{s}^{max}\) and dimension 1 ≤ d ≤ N, the encoding is given by
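The input projection and positional encoding can be sketched in NumPy. The encoding used in this work may differ; this sketch assumes the standard sinusoidal encoding of Vaswani et al.32 (sine on even columns, cosine on odd columns) with positions counted from 1. It also assumes that the bias matrix and the encoding are stored for the maximum length and truncated to the first Ls rows for a shorter input. The function names `sinusoidal_pe` and `project` are illustrative.

```python
import numpy as np


def sinusoidal_pe(L_max, N):
    """Sinusoidal positional encoding of shape (L_max, N), positions 1..L_max.

    Even columns use sin, odd columns use cos, with wavelengths set by
    10000**(2i/N) as in Vaswani et al.; the paper's exact encoding may differ.
    """
    pos = np.arange(1, L_max + 1)[:, None]
    col = np.arange(N)[None, :]
    angle = pos / np.power(10000.0, 2 * (col // 2) / N)
    return np.where(col % 2 == 0, np.sin(angle), np.cos(angle))


def project(X_tilde, W_p, W_b, PE):
    """X_p = X_tilde W_p + W_b + PE, using the first L_s rows of W_b and PE (assumed)."""
    L_s = X_tilde.shape[0]
    return X_tilde @ W_p + W_b[:L_s] + PE[:L_s]


rng = np.random.default_rng(0)
L_max, D, N = 3000, 3, 16
PE = sinusoidal_pe(L_max, N)
W_p = rng.standard_normal((D, N))
W_b = np.zeros((L_max, N))
X_tilde = rng.random((500, D))  # a sparse input of length L_s = 500
X_p = project(X_tilde, W_p, W_b, PE)
```

Precomputing the encoding once for the maximum length and slicing it is one simple way to accommodate the variable-length inputs that the training regime produces.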

The projected matrix \({{{{\bf{X}}}}}_{p}\in {{\mathbb{R}}}^{{L}_{s}\times N}\) then serves as the input sequence for Nb attention blocks. Each block contains a multi-head attention layer, a residual layer (add & layer norm), a feed-forward layer, and a second residual layer. The core of the transformer lies in the self-attention mechanism, which allows the model to weigh the significance of distinct time steps. The multi-head self-attention layer is composed of several independent attention heads. The first head has three learnable weight matrices that linearly map Xp into query Q1 and key K1 of dimension Ls × dk and value V1 of dimension Ls × dv:

\[{{\bf{Q}}}_{1}={{\bf{X}}}_{p}{{\bf{W}}}_{{{\bf{Q}}}_{1}},\quad {{\bf{K}}}_{1}={{\bf{X}}}_{p}{{\bf{W}}}_{{{\bf{K}}}_{1}},\quad {{\bf{V}}}_{1}={{\bf{X}}}_{p}{{\bf{W}}}_{{{\bf{V}}}_{1}},\]

where \({{{{\bf{W}}}}}_{{{{{\bf{Q}}}}}_{1}}\in {{\mathbb{R}}}^{N\times {d}_{k}}\), \({{{{\bf{W}}}}}_{{{{{\bf{K}}}}}_{1}}\in {{\mathbb{R}}}^{N\times {d}_{k}}\), and \({{{{\bf{W}}}}}_{{{{{\bf{V}}}}}_{1}}\in {{\mathbb{R}}}^{N\times {d}_{v}}\) are the trainable weight matrices, dk is the dimension of the queries and keys, and dv is the dimension of the values. A convenient choice is dk = dv = N. The attention scores between the query Q1 and the key K1 are calculated by a scaled matrix product followed by a softmax function:

\[{{\bf{A}}}_{{{\bf{Q}}}_{1},{{\bf{K}}}_{1}}={\rm{softmax}}\left(\frac{{{\bf{Q}}}_{1}{{\bf{K}}}_{1}^{\top }}{\sqrt{{d}_{k}}}\right),\]

where \({{{{\bf{A}}}}}_{{{{{\bf{Q}}}}}_{1},{{{{\bf{K}}}}}_{1}}\in {{\mathbb{R}}}^{{L}_{s}\times {L}_{s}}\). The softmax function, applied row by row, normalizes the scores with \({{{\rm{softmax}}}}({x}_{i})=\exp ({x}_{i})/{\sum }_{j}\exp ({x}_{j})\), and the \(\sqrt{{d}_{k}}\) factor keeps the standard deviation of the dot products from growing with dk. For the first head (in the first block), the head output is the matrix product of \({{{{\bf{A}}}}}_{{{{{\bf{Q}}}}}_{1},{{{{\bf{K}}}}}_{1}}\) and V1:

\[{{\bf{O}}}_{11}={{\bf{A}}}_{{{\bf{Q}}}_{1},{{\bf{K}}}_{1}}{{\bf{V}}}_{1},\]

where \({{{{\bf{O}}}}}_{11}\in {{\mathbb{R}}}^{{L}_{s}\times {d}_{v}}\). The transformer employs multiple (h) attention heads to capture information from different subspaces. The resulting head outputs O1i (i = 1, …, h) are concatenated and mapped into a sequence \({{{{\bf{O}}}}}_{1}\in {{\mathbb{R}}}^{{L}_{s}\times N}\):

\[{{\bf{O}}}_{1}={\mathcal{C}}({{\bf{O}}}_{11},{{\bf{O}}}_{12},\cdots ,{{\bf{O}}}_{1h}){{\bf{W}}}_{o1},\]

where \({{{\mathcal{C}}}}\) is the concatenation operation, h is the number of heads, and \({{{{\bf{W}}}}}_{o1}\in {{\mathbb{R}}}^{h{d}_{v}\times N}\) is an additional linear-transformation matrix for performance enhancement. The output of the attention layer undergoes a residual connection and layer normalization, producing XR1 as follows:

\[{{\bf{X}}}_{R1}={\rm{LayerNorm}}({{\bf{X}}}_{p}+{{\bf{O}}}_{1}).\]

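A feed-forward layer then processes this data matrix, generating the output \({{{{\bf{X}}}}}_{F1}\in {{\mathbb{R}}}^{{L}_{s}\times N}\) as

\[{{\bf{X}}}_{F1}=\max (0,{{\bf{X}}}_{R1}{{\bf{W}}}_{{F}_{a}}+{b}_{a}){{\bf{W}}}_{{F}_{b}}+{b}_{b},\]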

where \({{{{\bf{W}}}}}_{{F}_{a}}\in {{\mathbb{R}}}^{N\times {d}_{f}}\), \({{{{\bf{W}}}}}_{{F}_{b}}\in {{\mathbb{R}}}^{{d}_{f}\times N}\), ba and bb are biases, and \(\max (0,\cdot )\) denotes a ReLU activation function. This output is again subjected to a residual connection and layer normalization.
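One attention block (multi-head self-attention, add & norm, ReLU feed-forward layer, add & norm) can be sketched in NumPy as below. This is an illustration of the standard post-norm transformer block described in this subsection, not the trained model: layer normalization here has no learnable gain or bias, there is no dropout, and `init_params` draws hypothetical random weights with dk = dv = N.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-5):
    # Normalize each time step over its N features (no learnable gain/bias here).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def attention_block(X_p, p):
    """Multi-head self-attention -> add & norm -> ReLU feed-forward -> add & norm."""
    heads = []
    for W_Q, W_K, W_V in p["heads"]:
        Q, K, V = X_p @ W_Q, X_p @ W_K, X_p @ W_V
        A = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))  # (L_s, L_s) attention scores
        heads.append(A @ V)                          # head output, (L_s, d_v)
    O = np.concatenate(heads, axis=-1) @ p["W_o"]    # concatenate, map to (L_s, N)
    X_R = layer_norm(X_p + O)                        # first residual + layer norm
    X_F = np.maximum(0.0, X_R @ p["W_Fa"] + p["b_a"]) @ p["W_Fb"] + p["b_b"]
    return layer_norm(X_R + X_F)                     # second residual + layer norm


def init_params(N, h, d_f, rng):
    """Random weights with d_k = d_v = N (hypothetical initialization)."""
    s = 1.0 / np.sqrt(N)
    return {
        "heads": [tuple(s * rng.standard_normal((N, N)) for _ in range(3)) for _head in range(h)],
        "W_o": rng.standard_normal((h * N, N)) / np.sqrt(h * N),
        "W_Fa": s * rng.standard_normal((N, d_f)),
        "b_a": np.zeros(d_f),
        "W_Fb": rng.standard_normal((d_f, N)) / np.sqrt(d_f),
        "b_b": np.zeros(N),
    }


rng = np.random.default_rng(0)
params = init_params(N=8, h=2, d_f=16, rng=rng)
X_p = rng.standard_normal((50, 8))
Y = attention_block(X_p, params)
```

Stacking Nb such blocks, feeding each output into the next, and ending with a linear layer gives the overall map from the sparse input to the reconstruction.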

The output of the first block operation is used as the input to the second block. The same procedure is repeated for each of the remaining Nb–1 blocks. The final output passes through a feed-forward layer to generate the prediction. Overall, the whole process can be represented as \({{{\bf{Y}}}}={{{\mathcal{F}}}}(\tilde{{{{\bf{X}}}}})\).

The second component of our hybrid machine-learning framework is reservoir computing, which takes the output of the transformer as its input to reconstruct the long-term climate or attractor of the target system. A detailed description of the reservoir computer used in this context and of its hyperparameter optimization is presented in Supplementary Notes 4 and 5.
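Because the reservoir-computing details are deferred to the Supplementary Notes, the following is only a generic echo-state-network sketch of the second stage: teacher-force a random recurrent reservoir on a reconstructed series, fit a linear readout by ridge regression, and then run the reservoir in closed loop to generate long trajectories. The class name and every hyperparameter (reservoir size, spectral radius, input scaling, leak rate, ridge parameter, washout) are placeholder assumptions, not the configuration used in this work, and the sine/cosine input merely stands in for transformer output.

```python
import numpy as np


class EchoStateNetwork:
    """Minimal leaky echo-state network; every hyperparameter is a placeholder."""

    def __init__(self, dim, n_res=200, rho=0.9, in_scale=0.5, leak=1.0, beta=1e-6, seed=0):
        rng = np.random.default_rng(seed)
        W = rng.uniform(-1.0, 1.0, (n_res, n_res))
        W *= rho / np.max(np.abs(np.linalg.eigvals(W)))  # set spectral radius to rho
        self.W = W
        self.W_in = rng.uniform(-in_scale, in_scale, (n_res, dim))
        self.leak, self.beta = leak, beta
        self.r = np.zeros(n_res)

    def _step(self, u):
        pre = self.W @ self.r + self.W_in @ u
        self.r = (1.0 - self.leak) * self.r + self.leak * np.tanh(pre)
        return self.r

    def fit(self, U, washout=100):
        """Teacher-force on U (T, dim) and fit a ridge readout r(t) -> u(t+1)."""
        states = np.array([self._step(u) for u in U])   # state after each input
        R, Y = states[washout:-1], U[washout + 1:]
        n = R.shape[1]
        self.W_out = np.linalg.solve(R.T @ R + self.beta * np.eye(n), R.T @ Y).T
        return self

    def predict(self, n_steps):
        """Closed loop: each output is fed back as the next input."""
        out = []
        u = self.W_out @ self.r                          # forecast of the step after U[-1]
        for _ in range(n_steps):
            out.append(u)
            u = self.W_out @ self._step(u)
        return np.array(out)


t = 0.05 * np.arange(3000)
U = np.stack([np.sin(t), np.cos(t)], axis=1)  # stand-in for transformer output
esn = EchoStateNetwork(dim=2).fit(U)
forecast = esn.predict(200)
```

With several reconstructed segments, one simple option would be to concatenate their reservoir states and targets (each with its own washout) before solving for the readout.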

Machine learning loss

To ensure the reliability of the generated output, we minimize a combined loss function with two components: (1) a mean squared error (MSE) loss that measures the squared deviation between the output and the ground truth, and (2) a smoothness loss that ensures the output maintains appropriate continuity. The loss function is given by

\[{\mathcal{L}}={\alpha }_{1}{{\mathcal{L}}}_{{\rm{mse}}}+{\alpha }_{2}{{\mathcal{L}}}_{{\rm{smooth}}},\]

where α1 and α2 are scalar weights controlling the trade-off between the two loss terms. The first component \({{{{\mathcal{L}}}}}_{{{{\rm{mse}}}}}\) measures the squared error between the predictions and the ground truth:

\[{{\mathcal{L}}}_{{\rm{mse}}}=\frac{1}{n}\mathop{\sum }_{i=1}^{n}{({y}_{i}-{\hat{y}}_{i})}^{2},\]

with n being the total number of data points, yi and \({\hat{y}}_{i}\) denoting the ground truth and predicted value at time point i, respectively. The second component \({{{{\mathcal{L}}}}}_{{{{\rm{smooth}}}}}\) of the loss function consists of two terms: Laplacian regularization and total variation regularization, which penalize the second-order differences and absolute differences, respectively, between consecutive predictions. The two terms are given by:

and

We assign the same weights to the two penalties, so the final combined loss function to be minimized is

We set αs = 0.1. It is worth noting that the smoothness penalty is a crucial hyperparameter that should be carefully selected. Excessive smoothness leads the model to learn overly coarse-grained dynamics, while absence of a smoothness penalty causes the reconstructed curves to exhibit poor smoothness (Supplementary Note 5).
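A sketch of the combined loss with αs = 0.1 follows. The precise Laplacian and total-variation formulas are not reproduced in this section, so the forms below are assumptions: the Laplacian term is taken as the mean squared second-order difference and the total-variation term as the mean absolute first-order difference of the predictions along the time axis, with the MSE term given unit weight.

```python
import numpy as np


def combined_loss(y_true, y_pred, alpha_s=0.1):
    """L_mse + alpha_s * (L_lap + L_tv), with time along axis 0.

    Assumed forms: L_lap is the mean squared second-order difference and L_tv the
    mean absolute first-order difference of the predictions.
    """
    mse = np.mean((y_true - y_pred) ** 2)
    lap = np.mean((y_pred[2:] - 2.0 * y_pred[1:-1] + y_pred[:-2]) ** 2)
    tv = np.mean(np.abs(y_pred[1:] - y_pred[:-1]))
    return mse + alpha_s * (lap + tv)


ramp = np.linspace(0.0, 1.0, 11)[:, None]  # perfect fit: only the TV term survives
print(combined_loss(ramp, ramp))  # about 0.1 * 0.1 = 0.01
```

The ramp example shows the trade-off discussed above: even a perfect fit pays a small total-variation cost, so too large an αs pushes the model toward overly flat, coarse-grained outputs.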

Computational setting

Unless otherwise stated, the following computational settings are used. Given a target system, time series are generated by numerically integrating the system with time step dt = 0.01. The initial states of both the dynamical process and the neural network are randomly drawn from a uniform distribution. An initial transient of the time series is removed to ensure that the trajectory has reached the attractor. The training and testing data are obtained by sampling the time series at an interval Δs chosen to give adequate temporal resolution. Specifically, for the chaotic food-chain, Lorenz, and Lotka–Volterra systems, we set Δs = 1, Δs = 0.02, and Δs = 1, respectively, corresponding to approximately 30 to 50 sampled points per cycle of oscillation. A similar procedure is applied to the other synthetic chaotic systems (see Table S3 for the Δs value of each system). The time series are preprocessed by min-max normalization so that they lie in the range [0, 1]. The complete data length for each system is 1,500,000 points (about 30,000 cycles of oscillation), which is divided into segments with randomly chosen sequence length Ls and sparsity Sr. For the transformer, we use a maximum sequence length of 3000 (corresponding to about 60 cycles of oscillation), which limits the length of the input time series. We apply Bayesian optimization57 and a random search algorithm58 to systematically explore the hyperparameter space and identify the optimal hyperparameter set. Two chaotic Sprott systems, Sprott0 and Sprott1, are used as validation systems to find the optimal hyperparameters and to train the final model weights, ensuring no data leakage from the testing systems. The optimized hyperparameters for the transformer are listed in Table 1. All simulations are run in Python on computers with six NVIDIA RTX A6000 GPUs. A single training run of our framework typically takes about 30 min on one of the GPUs.
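The data-generation pipeline for the Lorenz system (integrate with dt = 0.01, discard a transient, sample every Δs = 0.02, min-max normalize to [0, 1]) can be sketched as follows. Several details are assumptions not stated in this section: the classical Lorenz parameters (σ = 10, ρ = 28, β = 8/3), fourth-order Runge-Kutta as the integrator, the uniform range of the initial state, and a transient of 5000 integration steps. The helper names are illustrative.

```python
import numpy as np


def lorenz(x, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Lorenz velocity field with the classical parameter values (assumed)."""
    return np.array([sigma * (x[1] - x[0]),
                     x[0] * (rho - x[2]) - x[1],
                     x[0] * x[1] - beta * x[2]])


def rk4_trajectory(f, x0, dt, n_steps):
    """Fourth-order Runge-Kutta integration (assumed integrator); n_steps + 1 states."""
    xs = np.empty((n_steps + 1, len(x0)))
    xs[0] = x0
    for k in range(n_steps):
        x = xs[k]
        k1 = f(x)
        k2 = f(x + 0.5 * dt * k1)
        k3 = f(x + 0.5 * dt * k2)
        k4 = f(x + dt * k3)
        xs[k + 1] = x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return xs


def make_dataset(n_samples, dt=0.01, delta_s=0.02, transient=5000, seed=0):
    rng = np.random.default_rng(seed)
    stride = int(round(delta_s / dt))           # integration steps per sample
    x0 = rng.uniform(-1.0, 1.0, size=3)         # random initial state (assumed range)
    traj = rk4_trajectory(lorenz, x0, dt, transient + stride * n_samples)
    traj = traj[transient::stride][:n_samples]  # drop transient, sample every delta_s
    lo, hi = traj.min(axis=0), traj.max(axis=0)
    return (traj - lo) / (hi - lo)              # min-max normalize each variable to [0, 1]


data = make_dataset(2000)
```

Segments of random length and sparsity would then be cut from such a normalized series, as in the training-example sampler sketched earlier in this article.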

Prediction stability

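The prediction stability describes the probability that the transformer generates stable predictions. It is defined as the fraction of iterations in which the MSE is below a predefined stability threshold MSEc:

\[\text{Prediction stability}=\frac{1}{n}\mathop{\sum }_{i=1}^{n}\left[{{\rm{MSE}}}_{i} < {{\rm{MSE}}}_{c}\right],\]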

where n is the number of iterations and [ ⋅ ] = 1 if the statement inside is true and zero otherwise.
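Computationally, the prediction stability is simply the mean of an indicator over the n runs. In this short sketch the function name and the MSE values in the usage line are hypothetical, chosen only to make the arithmetic visible.

```python
import numpy as np


def prediction_stability(mses, mse_c):
    """Fraction of the n runs whose MSE lies below the threshold MSE_c."""
    mses = np.asarray(mses, dtype=float)
    return float(np.mean(mses < mse_c))  # mean of the indicator [MSE_i < MSE_c]


print(prediction_stability([0.01, 0.20, 0.03, 0.50], mse_c=0.05))  # 0.5
```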

Deviation value

For a three-dimensional target system, we divide the three-dimensional phase space into a uniform cubic lattice with cell size Δ = 0.05 and count the number of trajectory points in each cell for both the predicted and true attractors over a fixed time interval. The deviation value (DV) is defined as21

where mx, my, and mz are the total numbers of cells in the x, y, and z directions, respectively, and fi,j,k and \({\hat{f}}_{i,j,k}\) are the visit frequencies of cell (i, j, k) by the predicted and true trajectories, respectively. If the predicted trajectory leaves the phase-space boundary, we count it as if it had landed in boundary cells that the true trajectory never visits.
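A sketch of the DV computation on normalized three-dimensional trajectories follows. The defining equation is given in ref. 21; here it is assumed that the frequencies are visit counts divided by the number of points and that DV sums |f − f̂| over all cells. Predicted points outside the box [0, 1]³ are pooled into one extra cell that the true trajectory never visits, which mimics the boundary rule described above. The function names are illustrative.

```python
import numpy as np


def visit_frequencies(traj, delta, lo, hi):
    """Normalized visit counts on an m x m x m lattice, plus the out-of-box fraction."""
    m = int(round((hi - lo) / delta))                         # cells per axis
    inside = np.all((traj >= lo) & (traj <= hi), axis=1)
    idx = np.clip(np.floor((traj - lo) / delta).astype(int), 0, m - 1)
    counts = np.zeros((m, m, m))
    np.add.at(counts, tuple(idx[inside].T), 1.0)              # accumulate repeated cells
    n = len(traj)
    return counts / n, np.count_nonzero(~inside) / n


def deviation_value(pred, true, delta=0.05, lo=0.0, hi=1.0):
    """Assumed DV form: sum over cells of |f - f_hat|, with one extra out-of-box cell."""
    f, f_out = visit_frequencies(pred, delta, lo, hi)
    f_hat, f_hat_out = visit_frequencies(true, delta, lo, hi)
    return float(np.sum(np.abs(f - f_hat)) + abs(f_out - f_hat_out))


rng = np.random.default_rng(0)
true_traj = rng.random((1000, 3))               # stand-in for a normalized attractor
print(deviation_value(true_traj, true_traj))    # 0.0 for identical trajectories
```

Under this assumed form, DV ranges from 0 (identical visit statistics) to 2 (completely disjoint visits), so a predicted trajectory that escapes the attractor region is penalized maximally.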

Noise implementation

We study how two types of noise, multiplicative and additive, affect the dynamics reconstruction. In both cases, ξ is a normally distributed random variable with zero mean and standard deviation σ. Applied to the normalized observational points, multiplicative noise perturbs x to x + x ⋅ ξ, and additive noise perturbs x to x + ξ. Note that multiplicative (demographic) noise is common in ecological systems.
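The two noise models can be written as a single helper. This is a minimal sketch; the function name `add_noise` and the `kind` switch are illustrative choices, not part of the released code.

```python
import numpy as np


def add_noise(x, sigma, kind="additive", rng=None):
    """Perturb normalized observations with zero-mean Gaussian noise xi of std sigma."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    xi = rng.normal(0.0, sigma, size=x.shape)
    if kind == "additive":
        return x + xi            # x -> x + xi
    if kind == "multiplicative":
        return x + x * xi        # x -> x + x * xi, noise scales with the state
    raise ValueError(f"unknown noise kind: {kind!r}")
```

The multiplicative form vanishes where the normalized state is zero, which is why it behaves like demographic noise in population models: small populations fluctuate less in absolute terms.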

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The data generated in this study are publicly available via Zenodo59 at https://doi.org/10.5281/zenodo.14014974.

Code availability

The code is publicly available via Zenodo60 at https://doi.org/10.5281/zenodo.14279347 and via GitHub at https://github.com/Zheng-Meng/Dynamics-Reconstruction-ML.

References

Wang, W.-X., Yang, R., Lai, Y.-C., Kovanis, V. & Grebogi, C. Predicting catastrophes in nonlinear dynamical systems by compressive sensing. Phys. Rev. Lett. 106, 154101 (2011).

Lai, Y.-C. Finding nonlinear system equations and complex network structures from data: a sparse optimization approach. Chaos 31, 082101 (2021).

Haynes, N. D., Soriano, M. C., Rosin, D. P., Fischer, I. & Gauthier, D. J. Reservoir computing with a single time-delay autonomous Boolean node. Phys. Rev. E 91, 020801 (2015).

Larger, L. et al. High-speed photonic reservoir computing using a time-delay-based architecture: million words per second classification. Phys. Rev. X 7, 011015 (2017).

Pathak, J., Lu, Z., Hunt, B., Girvan, M. & Ott, E. Using machine learning to replicate chaotic attractors and calculate Lyapunov exponents from data. Chaos 27, 121102 (2017).

Lu, Z. et al. Reservoir observers: model-free inference of unmeasured variables in chaotic systems. Chaos 27, 041102 (2017).

Pathak, J., Hunt, B., Girvan, M., Lu, Z. & Ott, E. Model-free prediction of large spatiotemporally chaotic systems from data: a reservoir computing approach. Phys. Rev. Lett. 120, 024102 (2018).

Carroll, T. L. Using reservoir computers to distinguish chaotic signals. Phys. Rev. E 98, 052209 (2018).

Nakai, K. & Saiki, Y. Machine-learning inference of fluid variables from data using reservoir computing. Phys. Rev. E 98, 023111 (2018).

Roland, Z. S. & Parlitz, U. Observing spatio-temporal dynamics of excitable media using reservoir computing. Chaos 28, 043118 (2018).

Griffith, A., Pomerance, A. & Gauthier, D. J. Forecasting chaotic systems with very low connectivity reservoir computers. Chaos 29, 123108 (2019).

Tanaka, G. et al. Recent advances in physical reservoir computing: a review. Neural Netw. 115, 100–123 (2019).

Fan, H., Jiang, J., Zhang, C., Wang, X. & Lai, Y.-C. Long-term prediction of chaotic systems with machine learning. Phys. Rev. Res. 2, 012080 (2020).

Klos, C., Kossio, Y. F. K., Goedeke, S., Gilra, A. & Memmesheimer, R.-M. Dynamical learning of dynamics. Phys. Rev. Lett. 125, 088103 (2020).

Chen, P., Liu, R., Aihara, K. & Chen, L. Autoreservoir computing for multistep ahead prediction based on the spatiotemporal information transformation. Nat. Commun. 11, 4568 (2020).

Kong, L.-W., Fan, H.-W., Grebogi, C. & Lai, Y.-C. Machine learning prediction of critical transition and system collapse. Phys. Rev. Res. 3, 013090 (2021).

Patel, D., Canaday, D., Girvan, M., Pomerance, A. & Ott, E. Using machine learning to predict statistical properties of non-stationary dynamical processes: system climate, regime transitions, and the effect of stochasticity. Chaos 31, 033149 (2021).

Kim, J. Z., Lu, Z., Nozari, E., Pappas, G. J. & Bassett, D. S. Teaching recurrent neural networks to infer global temporal structure from local examples. Nat. Mach. Intell. 3, 316–323 (2021).

Bollt, E. On explaining the surprising success of reservoir computing forecaster of chaos? The universal machine learning dynamical system with contrast to VAR and DMD. Chaos 31, 013108 (2021).

Gauthier, D. J., Bollt, E., Griffith, A. & Barbosa, W. A. Next generation reservoir computing. Nat. Commun. 12, 5564 (2021).

Zhai, Z.-M., Kong, L.-W. & Lai, Y.-C. Emergence of a resonance in machine learning. Phys. Rev. Res. 5, 033127 (2023).

Yan, M. et al. Emerging opportunities and challenges for the future of reservoir computing. Nat. Commun. 15, 2056 (2024).

Panahi, S. et al. Machine learning prediction of tipping in complex dynamical systems. Phys. Rev. Res. 6, 043194 (2024).

Zhai, Z.-M. et al. Model-free tracking control of complex dynamical trajectories with machine learning. Nat. Commun. 14, 5698 (2023).

Zhai, Z.-M., Moradi, M., Kong, L.-W. & Lai, Y.-C. Detecting weak physical signal from noise: a machine-learning approach with applications to magnetic-anomaly-guided navigation. Phys. Rev. Appl. 19, 034030 (2023).

Liu, Z. et al. KAN: Kolmogorov-Arnold networks. Preprint at https://arxiv.org/abs/2404.19756 (2024).

Panahi, S., Moradi, M., Bollt, E. M. & Lai, Y.-C. Data-driven model discovery with Kolmogorov-Arnold networks. Phys. Rev. Res. 7, 023037 (2025).

Tan, L. & Jiang, J. Digital Signal Processing: Fundamentals and Applications, 3rd edn. (Academic Press, Inc., USA, 2018).

Sonnewald, M. et al. Bridging observations, theory and numerical simulation of the ocean using machine learning. Environ. Res. Lett. 16, 073008 (2021).

Cismondi, F. et al. Missing data in medical databases: impute, delete or classify? Artif. Intell. Med. 58, 63–72 (2013).

Yeo, K. Data-driven reconstruction of nonlinear dynamics from sparse observation. J. Comput. Phys. 395, 671–689 (2019).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems 30 (2017).

McCann, K. & Yodzis, P. Nonlinear dynamics and population disappearances. Am. Nat. 144, 873–879 (1994).

Lorenz, E. N. Deterministic nonperiodic flow. J. Atmos. Sci. 20, 130–141 (1963).

Vano, J., Wildenberg, J., Anderson, M., Noel, J. & Sprott, J. Chaos in low-dimensional Lotka-Volterra models of competition. Nonlinearity 19, 2391 (2006).

Gilpin, W. Chaos as an interpretable benchmark for forecasting and data-driven modelling. Preprint at https://arxiv.org/abs/2110.05266 (2021).

Salgado, C. M., Azevedo, C., Proença, H. & Vieira, S. M. Missing data. Second. Anal. Electron. Health Rec. 143–162 (2016).

Junninen, H., Niska, H., Tuppurainen, K., Ruuskanen, J. & Kolehmainen, M. Methods for imputation of missing values in air quality data sets. Atmos. Environ. 38, 2895–2907 (2004).

Donoho, D. L. Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006).

Duarte, M. F. & Eldar, Y. C. Structured compressed sensing: from theory to applications. IEEE Trans. Signal Process. 59, 4053–4085 (2011).

Kim, J. Z. & Bassett, D. S. A neural machine code and programming framework for the reservoir computer. Nat. Mach. Intell. 5, 622–630 (2023).

Liu, J. et al. Towards out-of-distribution generalization: a survey. Preprint at https://arxiv.org/abs/2108.13624 (2021).

Kong, L.-W., Brewer, G. A. & Lai, Y.-C. Reservoir-computing based associative memory and itinerancy for complex dynamical attractors. Nat. Commun. 15, 4840 (2024).

Du, Y. et al. Multi-functional reservoir computing. Phys. Rev. E 111, 035303 (2025).

Zhai, Z.-M., Glaz, B., Haile, M. & Lai, Y.-C. Learning to learn ecosystems from limited data - a meta-learning approach. Preprint at https://arxiv.org/abs/2410.07368 (2024).

Zhang, Y. & Gilpin, W. Zero-shot forecasting of chaotic systems. Preprint at https://arxiv.org/abs/2409.15771 (2024).

Zhang, C., Bengio, S., Hardt, M., Recht, B. & Vinyals, O. Understanding deep learning requires rethinking generalization. Preprint at https://arxiv.org/abs/1611.03530 (2016).

Belkin, M., Hsu, D., Ma, S. & Mandal, S. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proc. Natl. Acad. Sci. USA 116, 15849–15854 (2019).

Bommasani, R. et al. On the opportunities and risks of foundation models. Preprint at https://arxiv.org/abs/2108.07258 (2021).

Panahi, S. & Lai, Y.-C. Adaptable reservoir computing: a paradigm for model-free data-driven prediction of critical transitions in nonlinear dynamical systems. Chaos 34, 051501 (2024).

Zhou, H. et al. Informer: beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35, 11106–11115 (AAAI Press, 2021).

Wen, Q. et al. Transformers in time series: a survey. In Proc. 32nd International Joint Conference on Artificial Intelligence (IJCAI) (2023).

Liu, Y. et al. iTransformer: inverted transformers are effective for time series forecasting. Preprint at https://arxiv.org/abs/2310.06625 (2023).

Xu, J., Wu, H., Wang, J. & Long, M. Anomaly transformer: time series anomaly detection with association discrepancy. In Proc. International Conference on Learning Representations (ICLR). https://openreview.net/forum?id=LzQQ89U1qm_ (2022).

Foumani, N. M., Tan, C. W., Webb, G. I. & Salehi, M. Improving position encoding of transformers for multivariate time series classification. Data Min. Knowl. Discov. 38, 22–48 (2024).

Yıldız, A. Y., Koç, E. & Koç, A. Multivariate time series imputation with transformers. IEEE Signal Process. Lett. 29, 2517–2521 (2022).

Nogueira, F. Bayesian optimization: open source constrained global optimization tool for Python. https://github.com/bayesian-optimization/BayesianOptimization (2014).

Bergstra, J. & Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012).

Zhai, Z.-M. Time series of chaotic systems. Zenodo. https://doi.org/10.5281/zenodo.14014974 (2023).

Zhai, Z.-M. Dynamics reconstruction ML. Zenodo. https://doi.org/10.5281/zenodo.14279347 (2023).

Acknowledgements

We thank J.-Y. Huang for some discussion. This work was supported by the Air Force Office of Scientific Research under Grant No. FA9550-21-1-0438, by the Office of Naval Research under Grant No. N00014-24-1-2548, and by Tufts CTSI NIH Clinical and Translational Science Award (UM1TR004398).

Author information

Contributions

Z.-M.Z., B.D.S., and Y.-C.L. designed the research project, the models, and methods. Z.-M.Z. performed the computations. Z.-M.Z., B.D.S., and Y.-C.L. analyzed the data. Z.-M.Z. and Y.-C.L. wrote the paper. Y.-C.L. edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhai, ZM., Stern, B.D. & Lai, YC. Bridging known and unknown dynamics by transformer-based machine-learning inference from sparse observations. Nat Commun 16, 8053 (2025). https://doi.org/10.1038/s41467-025-63019-8

DOI: https://doi.org/10.1038/s41467-025-63019-8