Abstract

Accurate pathological diagnosis is crucial in guiding personalized treatments for patients with central nervous system cancers. Distinguishing glioblastoma and primary central nervous system lymphoma is particularly challenging due to their overlapping pathology features, despite the distinct treatments required. To address this challenge, we establish the Pathology Image Characterization Tool with Uncertainty-aware Rapid Evaluations (PICTURE) system using 2141 pathology slides collected worldwide. PICTURE employs Bayesian inference, deep ensemble, and normalizing flow to account for the uncertainties in its predictions and training set labels. PICTURE accurately diagnoses glioblastoma and primary central nervous system lymphoma with an area under the receiver operating characteristic curve (AUROC) of 0.989, with the results validated in five independent cohorts (AUROC = 0.924-0.996). In addition, PICTURE identifies samples belonging to 67 types of rare central nervous system cancers that are neither gliomas nor lymphomas. Our approaches provide a generalizable framework for differentiating pathological mimics and enable rapid diagnoses for central nervous system cancer patients.

Similar content being viewed by others

Introduction

More than 86,000 patients in the U.S. are diagnosed with CNS neoplasms annually, leading to over 16,000 deaths each year1. The 2021 WHO Classification of CNS Tumors (WHO CNS5)2 identifies 109 distinct tumor subtypes based on pathology and molecular profiles3. Because treatments and prognoses of different CNS tumors vary considerably4,5,6,7, obtaining accurate pathological diagnoses is critical. Glioblastoma, the most common brain cancer in the U.S., has a dismal median survival of 8 months1,5, and surgical resection remains the cornerstone of initial treatment7. Notably, previous studies showed that primary central nervous system lymphoma (PCNSL) is the cancer type most frequently misdiagnosed as glioblastoma8,9,10,11,12. This misclassification has important clinical implications: patients with PCNSL have a median survival of more than three years following diagnosis and often respond well to radiotherapy4,5. Although patients’ age, immune status, and imaging features from magnetic resonance imaging influence clinicians’ initial diagnostic assessments, pathology evaluation using tumor samples provides the final diagnosis6,7. When PCNSL is diagnosed during surgery with the intent for tumor removal, neurosurgeons will usually discontinue further surgical intervention to preserve neurological function and refer patients for radiotherapy combined with chemotherapy7,8,9. In addition, final diagnosis using formalin-fixed, paraffin-embedded (FFPE) tissue confirms tumor types and guides long-term treatment planning2. Thus, accurate distinction between glioblastoma and PCNSL at both intraoperative and final diagnostic stages is therefore essential to avoid unnecessary surgery and ensure timely initiation of appropriate therapy.

Several challenges have hindered the accurate pathological diagnosis of CNS neoplasms1,6. The current issue in diagnosing glioblastoma and PCNSL lies in the inherent variability and uncertainty in both frozen section and FFPE evaluations13,14. Intraoperative frozen section diagnostics are invaluable for immediate assessment during brain cancer surgeries. However, prior studies have reported that 9.7% to 46.2% of frozen section diagnoses differ from the final FFPE-based diagnoses9,10,11,15,16. Recent studies have reported an inter-observer disagreement rate of up to 16% in FFPE diagnoses13,14. While the definitive diagnosis of brain cancers relies on FFPE tissue analysis, which enables thorough evaluations of the morphological patterns observed in CNS neoplasms, these microscopic findings across cancer types are sometimes distinct and, at other times, overlapping. For example, the glioblastoma pathology is highly variable and shares features with other tumors, including PCNSL. Glioblastomas typically manifest as infiltrating hypercellular neoplasms with nuclear pleomorphism, microvascular proliferation, and necrosis with or without surrounding pseudopalisading17. The neoplastic cells may be fibrillary, epithelioid, or round cells, the latter mimicking lymphoma cells. Further complicating diagnoses, PCNSL may also exhibit nuclear pleomorphism, necrosis, increased mitotic activity, and a perivascular propensity that can mimic pseudopalisading18. In addition, the atypia of reactive glia in PCNSL and infiltrating lymphoma cells within the brain parenchyma can lead to misinterpretation18,19.

Weakly supervised machine learning applied to pathology images has demonstrated the potential to assist cancer cell detection, subtype classification, and prognostic prediction20. Nevertheless, current deep learning-based approaches for neuro-oncological diagnostics remain largely confined to radiological applications. Existing pathological diagnostic models focus on differentiating glioma types or applying few-shot learning techniques to rarer subtypes due to the limitation of data availability. In addition, models trained on cohorts without sufficient diversity often experience substantial performance decay when applied to new patient populations due to differences in sample preparation and slide scanning protocols21. Due to the morphological heterogeneity17, previous studies showed substantial variations in AI models’ diagnostic performance for this deadly cancer22. In addition, standard machine learning models inevitably classify any new data points into one of the categories they were trained with, regardless of the nature of the new samples23. These caveats have limited the application of AI models in cancer diagnoses24.

In this study, we present the Pathology Image Characterization Tool with Uncertainty-aware Rapid Evaluations (PICTURE). PICTURE leverages epistemic uncertainty quantifications25,26 to identify atypical pathology manifestations and uses diverse pathology images presented in medical literature to guide the development of self-supervised deep neural networks. We successfully validate the PICTURE system and show that it significantly outperforms the state-of-the-art computational pathology methods using samples from five independent patient cohorts worldwide (the Mayo Clinic, the Hospital of the University of Pennsylvania, Brigham and Women’s Hospital, the Medical University of Vienna, Taipei Veterans General Hospital), and one consortium (The Cancer Genome Atlas). In addition, our uncertainty-aware machine learning approaches enhance the model’s generalizability to both formalin-fixed paraffin-embedded (FFPE) and frozen section slides. Furthermore, our uncertainty-based out-of-distribution detection (OoDD) module quantifies the model’s confidence level and correctly flags previously unseen CNS cancer types and normal tissues not represented in the training dataset, which enables the identification of overconfident and potentially misleading predictions from conventional models27. These findings underscore the value of integrating uncertainty quantification and foundation model ensembles for enhancing deep learning models’ generalizability and reliability in computational pathology analyses. Our approaches effectively distinguish histological mimics with clinical significance, thereby enabling accurate pathological diagnoses and personalized cancer treatments.

Results

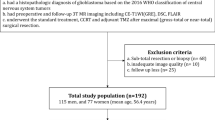

Study populations

We collected 1760 formalin-fixed paraffin-embedded permanent slides and 381 frozen section whole-slide images (WSIs) of CNS neoplasms from five medical centers internationally. For glioblastoma and PCNSL, the sample set includes 273 FFPE slides from the Mayo Clinic (Mayo; one slide per patient), 141 FFPE slides and 125 frozen section slides from the Hospital of the University of Pennsylvania (UPenn; from 150 patients in total), 125 FFPE slides and 77 frozen section slides from Brigham and Women’s Hospital (BWH; 135 patients), 528 FFPE slides and 96 frozen section slides from the Medical University of Vienna (568 patients)28, and 693 FFPE slides and 83 frozen section slides from Taipei Veterans General Hospital (TVGH; 568 patients). We further obtained 862 FFPE slides and 1169 frozen section slides from The Cancer Genome Atlas (TCGA; 608 patients)29. In the Mayo, UPenn, and BWH cohorts, FFPE and frozen sections were collected from the same tissues. To evaluate our machine learning models’ capability of detecting rare pathology manifestations not encountered in the training process, we obtained 874 additional FFPE WSIs (from 817 patients) of 67 types of non-PCNSL and non-glioblastoma CNS neoplasms and 33 WSIs of normal brain tissues (from 33 patients). All samples were collected prior to treatments, and all patients provided written informed consent at the time of enrollment. Detailed patient characteristics are summarized in Tables 1 and 2.

Overview of PICTURE

The overall workflow of PICTURE is summarized in Fig. 1. We tessellated all WSIs from our multi-center study cohorts (Table 1) into patches. We identified patches containing mostly blank backgrounds using their color and cell density profiles and removed them from our analyses. We normalized the colors using the mean and standard deviation of pixel intensities to mitigate study site-related batch effects. To ensure robust pathology feature identification, we utilized nine state-of-the-art pathology foundation models to extract image features: CTransPath30, Phikon31, Lunit32, UNI33, Virchow234, CONCH35, GPFM36, mSTAR37, and CHIEF20. These models were trained in a self-supervised or weakly supervised manner using different backbone architectures and training sets. PICTURE employed three uncertainty quantification methods to enhance model generalizability across diverse patient populations and study settings. Specifically, we quantified predictive uncertainty using a Bayesian-based method on prototypical pathology images of CNS cancers from PubMed and other medical literature, and only patches with low predictive uncertainty were used during the training process. Second, PICTURE enhanced predictive stability during inference by incorporating an uncertainty-based deep ensemble, which combined predictions from different trained models. Patches with higher certainty across foundation models were given higher weights. In addition, we designed an OoDD module that employed normalizing flow38 to identify atypical pathology manifestations not encountered during training, enabling the detection of CNS tumor types not represented in the training datasets. We validated our model using FFPE and frozen section slides collected from the University of Pennsylvania, Brigham and Women’s Hospital, and the Medical University of Vienna. Our detailed approaches are described in the “Methods” section.

A We collected 2141 pathology slides of formalin-fixed paraffin-embedded (FFPE) and frozen section CNS tissues from five medical centers, including Brigham and Women’s Hospital, Mayo Clinic, the Hospital of the University of Pennsylvania, Taipei Veterans General Hospital, and the Medical University of Vienna. B We employed pathology foundation models (CTransPath, UNI, Lunit, Phikon, Virchow2, CONCH, GPFM, mSTAR, and CHIEF) to extract concise representations of high-resolution pathology image features. These feature extractors were trained on diverse datasets without labels of pathology diagnosis. C To enhance the model’s generalizability across patient populations, we curated additional pathology images from neuropathology publications and incorporated them into model training and uncertainty quantification processes18,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90. D We focused on the classification of glioblastoma and primary CNS lymphoma (PCNSL) due to their clinical significance. We partitioned the development dataset (i.e., Mayo Clinic) into fivefolds. PICTURE integrates three distinct uncertainty quantification methods: (U.1) Bayesian inference, which leverages prototypical images to detect atypical pathology profiles; (U.2) deep ensembling, which aggregates predictions from multiple foundation models and refines whole-slide predictions by excluding uncertain tiles; and (U.3) normalizing flow, which identifies central nervous system (CNS) cancer types not present in the training dataset. The graphics in (A–C) and the layout of (D) were created in BioRender. Zhao, J. (2025) https://BioRender.com/v04o604.

PICTURE differentiates PCNSL and glioblastoma and attained higher generalizability across patient cohorts

We first employed standard computational pathology pipelines to train baseline models, including (1) state-of-the-art foundation models without uncertainty control, including CTransPath30, Phikon31, Lunit32, UNI33, Virchow234, CONCH35, GPFM36, mSTAR37, and CHIEF20; and (2) a standard end-to-end weakly supervised Swin Transformer39. FFPE slides collected at the Mayo Clinic served as the model development set due to the diverse staining patterns represented in their referred cases. Next, we evaluated the performance of PICTURE in the same study settings, using both area under the receiver operating characteristic curve (AUROC) and balanced accuracy (Supplementary Data 1). PICTURE significantly outperformed state-of-the-art deep neural networks trained without uncertainty quantification or ensembling (Fig. 2 and Supplementary Data 1). Our results showed that PICTURE achieved near-perfect performance when tested on patients not included in the training process (Fig. 2B; the average AUROC of PICTURE is 0.998, and the 95% confidence interval (CI) is 0.995–1.0). In contrast, baseline foundation models showed variable performance, with AUROCs ranging from 0.833 (Phikon) to the level comparable to PICTURE (e.g., Virchow2 and UNI).

The red line indicates the estimated AUROC of PICTURE; the shaded region shows the 95% confidence interval derived from 1000 bootstrap samples. P-values were calculated using one-sided bootstrap hypothesis tests. A, B We developed PICTURE using digital pathology slides from the Mayo Clinic and evaluated its performance on the held-out FFPE test set, PICTURE achieved AUROCs comparable to all foundation models. To assess generalizability, we validated PICTURE on four independent cohorts. Sample counts per site are shown. C–F PICTURE demonstrated consistently high performance on FFPE samples from independent cohorts: at UPenn, it achieved an AUROC of 0.996, outperforming UNI (0.976, P < 0.001) and performing comparably to Virchow2 (0.995, P = 0.243); at BWH, it reached 0.987, significantly higher than UNI (0.977, P = 0.025) and Virchow2 (0.975, P = 0.014); in Vienna, it attained 0.992, outperforming UNI (0.966, P < 0.001) and Virchow2 (0.964, P < 0.001); and at TVGH, it achieved 0.992, exceeding CONCH (0.982, P = 0.001) and Virchow2 (0.980, P < 0.001). G–J PICTURE enabled real-time intraoperative diagnostic support using frozen section slides. At UPenn, it reached 0.958, significantly outperforming the Swin Transformer (0.898, P < 0.001) and showing modest improvement over CONCH (0.946, P = 0.067). At BWH, both PICTURE and CONCH achieved 0.987 (P = 0.505), outperforming CHIEF (0.971, P < 0.001). In Vienna, PICTURE reached 0.988, surpassing UNI (0.966, P < 0.001) and Virchow (0.940, P < 0.001). At TVGH, PICTURE attained 0.924, performing comparably to CONCH (0.919, P = 0.427) and better than CTransPath (0.896, P = 0.672). Asterisks indicate significantly better performance by PICTURE (*P < 0.05; **P < 0.01; ***P < 0.001). Tissue slide illustrations in (A) were created in BioRender. Zhao, J. (2025) https://BioRender.com/j32r478.

We further evaluated the generalizability of PICTURE and other deep learning methods using independent validation cohorts from multiple medical centers. PICTURE consistently demonstrated high performance across these patient populations (Fig. 2C–F), achieving AUROC of 0.996 (95% CI = 0.990–1.0) in the Hospital of the University of Pennsylvania (UPenn) cohort, 0.987 (95% CI = 0.961–1.0) in the Brigham and Women’s Hospital (BWH) cohort, 0.992 (95% CI = 0.982–0.999) in the Medical University of Vienna (Vienna) cohort, and 0.992 (95% CI = 0.986–0.998) in the Taipei Veterans General Hospital (TVGH) cohort. Although standard Swin Transformer with weakly supervised training performed reasonably well on the held-out partition from the Mayo Clinic, it did not generalize effectively to the independent validation cohorts, yielding significantly lower performance compared with PICTURE across all FFPE cohorts (one-sided bootstrap significance test P-value < 0.05; Supplementary Table 3). Similarly, most self-supervised foundation models, despite being trained on large and diverse datasets, exhibited notable performance decay in FPPE cohorts and occasionally attained lower performance than Swin Transformer. Among the foundation models evaluated, Virchow2, CTransPath, CHIEF, UNI, and CONCH showed relatively high generalizability. Nonetheless, their performance was significantly worse than PICTURE in nearly all FFPE cohorts (one-sided bootstrap significance test P-value < 0.05), with the only exceptions of Virchow2 in the UPenn cohort (AUROC = 0.995, P-value = 0.387). Furthermore, ablation studies revealed that additional stain normalization methods, larger model ensembles, alternative tile filtering, and different aggregation strategies did not yield significant performance improvement when combined with PICTURE (Supplementary Tables 9–11).

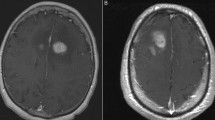

We next examined the tissue microenvironmental patterns leveraged by PICTURE in its diagnostic predictions (Fig. 3a, b). Dense tumor regions in both glioblastoma and PCNSL samples received high attention weights from the PICTURE model. Geographic necrosis, infiltration, and perivascular tumor regions were highlighted in both tumor types. Our models consistently attended to leptomeningeal involvement in glioblastoma samples. Thrombosis, microvascular proliferation, and pseudopalisading necrosis are unique to glioblastomas and were recognized by PICTURE. Edema was predominantly highlighted in glioblastomas and only occasionally in lymphomas. PICTURE further demonstrated high confidence in identifying compact tumors, extensive necrotic regions, and clear-cell or spindle-cell formations in glioblastomas. Interestingly, the model paid less attention to polymorphous nuclei observed in glioblastoma samples. In PCNSL samples, areas with high cellular density and typical lymphoid morphology were highlighted. It is worth noting that PICTURE marked regions demonstrating crush artifacts, bleeding, or adjacent benign tissues as areas of low confidence (Fig. 4C and Supplementary Fig. 7). These observations indicate that PICTURE independently identified distinct pathological features associated with CNS tumor types in a data-driven manner. Our neuropathologists (M.P.N., N.S., T.R.-P., A.W., J.A.G.) evaluated 40 randomly selected heatmaps of correct PICTURE’s predictions, identifying common features with the following frequencies: dense tumor (90%), microvascular proliferation (25%), infiltration (77.5%), pseudopalisading necrosis (22.5%), and surgical-related hemorrhage (72.5%).

Red and blue regions denote areas highly indicative of PCNSL and glioblastoma, respectively. In the uncertainty heatmaps, brighter regions correspond to areas where the model exhibits greater confidence in its prediction. The slides are reviewed by six pathologists independently (T.M.P., A.W., S.C.L., N.S., J.A.G., M.P.N.). a PICTURE predictions of four representative glioblastoma FFPE samples. The PICTURE model highlighted regions with compact tumor and spindle cells as strong indicators of glioblastoma. Regions with polymorphous nuclei, blood, and surgical material obtained low confidence scores. In addition, perivascular inflammation and thrombosis are highlighted by PICTURE. b PICTURE predictions of four representative PCNSL FFPE samples. Cell-dense regions showing typical lymphoid morphology and scattered “tangible body macrophages” in a “starry sky” pattern are highlighted with high confidence for differentiating PCNSL from primitive glioblastoma mimics. Regions with pronounced squeezing artifacts, such as hemorrhage, are marked as areas with low diagnostic confidence. c PICTURE predictions for two representative glioblastoma frozen section samples. PICTURE associated regions with dense glioma cells, edemas, and necrosis with high prediction confidence. Low-confidence regions have lower cellularity but exhibit microvascular proliferation. d PICTURE predictions for two representative PCNSL frozen section samples. PICTURE marked compact tumors with clear intercellular separation as low confidence, and regions with clustered compact tumors received high confidence in the diagnostic prediction. PICTURE’s uncertainty mechanism can correct inaccurate preliminary direct model predictions in difficult cases, highlighting malignant cells. Characteristic features include angiocentric malignant cells and perivascular cuffs. Scale bars: 1 mm.

A Typical pathological patterns of glioblastoma and PCNSL possess distinct image features extracted by foundation models. This figure panel shows the UMAP projection of PICTURE’s tile-level (CTransPath) image feature space using 149,914 samples from the Mayo Clinic. PICTURE quantified the epistemic uncertainty of its predictions by comparing the morphological similarities between the image under evaluation and prototypical images from the literature. Glioblastoma and PCNSL pathology images with high certainty in their diagnoses occupied distinct feature space. Images with low certainty (i.e., high uncertainty) reside in similar regions. By excluding training instances with uncertainty scores higher than the median score, we obtained a generalizable model with high classification performance in all four external validation cohorts. The gradient in color saturation shows the level of uncertainty associated with each sample. Darker hues represent high certainty (i.e., low uncertainty). The star signs in this figure panel mark the prototypical histopathology images obtained from the literature, which guides our uncertainty-aware model training process. The box with a black outline shows a specimen with areas of collagenous tissue consistent with dura mater. This tissue is eosinophilic with wavy architecture and includes nonviable muscle fibers. B PICTURE identified distinct tissue characteristics of glioblastoma and PCNSL. This analysis was performed on the FFPE cohorts from BWH and UPenn, comprising 1,213,656 glioblastoma and 365,779 PCNSL image tiles. Glioblastoma samples are more likely to contain regions with necrosis, and the cell nuclei of glioblastoma have more heterogeneous sizes compared with PCNSL samples. PCNSL samples contain denser cells on average. The reported values are from two-sided Mann–Whitney U tests with no corrections as the statistical tests were used to describe group differences rather than to establish definitive significance. C Six selected examples of regions receiving highly confident predictions from PICTURE. PCNSL samples (blue outlines) contain distinct pathology patterns, including vessels surrounded by tumor cells (red arrows), macrophages amidst tumor cells (blue arrows), and malignant hematopoietic cells (green arrows). Glioblastoma samples (orange outlines) show microvascular proliferation (pink arrows), infiltrating glioma cells (purple arrows), perivascular tumors (orange arrows), abnormal vessels, as well as hemorrhage (yellow arrows). Scale bars: 100 μm.

PICTURE enables accurate frozen section diagnoses of glioblastoma and PCNSL

Given the clinical importance of frozen section evaluation in differentiating glioblastoma from PCNSL during surgery, we further examined PICTURE’s capability for diagnosing these two aggressive cancer types based on frozen section slides obtained intraoperatively. Using frozen section samples from independent patient cohorts, we successfully validated the PICTURE framework and observed strong performance (Fig. 2G–J): AUROC values were 0.958 (95% CI = 0.919–0.988) for UPenn, 0.987 (95% CI = 0.956–1.0) for BWH, 0.982 (95% CI = 0.956–0.997) for Vienna, and 0.924 (95% CI = 0.846–0.985) for TVGH. Bootstrap significance tests revealed no significant drop in performance for frozen sections compared to FFPE samples from the same medical center in general, with the exception of the UPenn cohort. Nevertheless, PICTURE consistently outperformed baseline foundation models across all cohorts and achieved similar performance across each independent cohort compared to the development dataset (Supplementary Table 2), despite glioblastoma frozen section samples being 3.87 ± 1.91 times more abundant than PCNSL samples. By contrast, the standard weakly supervised Swin Transformer and most self-supervised foundation models experienced a substantial performance decline on frozen section samples. Visualization of model prediction revealed that PICTURE focused on dense glioma cells, necrosis, and edema to diagnose glioblastoma using frozen section samples (Fig. 3c). In addition, PICTURE employed clusters of compact lymphoid cells to identify PCNSL (Fig. 3d). Our results highlighted the robustness of PICTURE in handling domain shifts across study populations and sample preparation methods. To further benchmark performance, we evaluated PICTURE and these off-the-shelf foundation models on differentiating PCNSL and glioblastoma by pooling all available samples. Using fivefold validation, PICTURE (AUROC = 0.999), Virchow2 (AUROC = 1.000), UNI (AUROC = 0.999), Lunit (AUROC = 0.995), Phikon (AUROC = 0.994), and GPFM (AUROC = 0.987) attained near-perfect performance. In contrast, CTransPath (AUROC = 0.950), CONCH (AUROC = 0.959), CHIEF (AUROC = 0.962), and mSTAR (AUROC = 0.973) showed significantly lower performance (DeLong’s test P-value < 0.05; Supplementary Fig. 5).

Inter-observer variability in FFPE and intraoperative diagnoses

To evaluate the clinical challenge of differentiating between PCNSL and glioblastoma, we developed a web-based user interface and conducted a multi-institutional study to quantify inter-observer variability (Supplementary Fig. 8A). Nine board-certified neuropathologists were recruited from the United States, Europe, East Asia, and Southeast Asia, representing a range of clinical experience: three junior (< 5 years), four intermediate (5–15 years), and two senior (> 15 years) neuropathologists. We curated a representative set of 20 frozen section slides and 20 FFPE slides, each manifesting diverse morphological features of PCNSL and glioblastoma. Each neuropathologist independently evaluated all slides. All cases had been correctly diagnosed by PICTURE. To reflect the time constraints commonly encountered in clinical settings, participants first assessed each case under a 90-s time limit40. They were then allowed to re-examine the slides at their own pace and revise their initial diagnoses if desired. No immunohistochemical stains or genomic testing results were provided.

For each evaluator, the diagnosis made without a time constraint was generally consistent with the one made within the first 90 s. Only one frozen section case of PCNSL and one FFPE case of glioblastoma were corrected in the untimed session, with an intermediate and a senior neuropathologist each changing their initial diagnosis to the correct one. Because these corrections were rare, we focused our analyses on diagnoses made in the initial timed session. Agreement with ground truth varied across individuals, with Jaccard similarity scores ranging from 0.56 to 0.87 for FFPE slides and 0.49 to 0.81 for frozen sections (Supplementary Fig. 8B, C). Inter-observer agreement was slight to moderate, with Fleiss’ kappa values of 0.271 for FFPE and 0.248 for frozen sections. Senior and intermediate pathologists performed significantly better than junior pathologists (Mann–Whitney U test: P-value = 0.0128 for FFPE and P-value = 0.0318 for frozen section samples). Notably, PCNSL was misclassified as glioblastoma in 38% of all evaluations, reflecting a systematic challenge in distinguishing these histologically similar entities. Of the 113 misdiagnosed instances across evaluators, 81 (71.7%) were associated with evaluator-reported low to moderate diagnostic confidence (≤ 50%). Several participants noted in post-evaluation feedback that the task was “more challenging than expected.” These findings highlighted the intrinsic complexity of CNS tumor evaluation, both in FFPE-based final assessment and frozen section-based intraoperative diagnosis.

Uncertainty quantification enhanced the generalizability of machine learning models for computational pathology evaluation

To better understand PICTURE’s performance stability in previously unseen patient populations, we inspected the feature space and the quantified diagnostic uncertainty of our training and independent validation cohorts. Figure 4A illustrates a two-dimensional projection of the morphological features of the Mayo Clinic extracted using CTransPath. Results showed that glioblastoma and PCNSL pathology images with low diagnostic uncertainty occupied distinct spaces. In addition, the image prototypes collected from the medical literature (marked by the stars) capture the diverse representations of glioblastoma and PCNSL morphologies useful for efficient model development, which guides our uncertainty-aware model training process. Tiles from a specimen, located in the top-left corner, outline areas of collagenous tissue consistent with dura mater. This tissue exhibits an eosinophilic, wavy architecture and contains nonviable muscle fibers. Morphologies of low-grade small lymphocytic lymphoma with dispersed plasmacytoid cells were captured in PCNSL cases adjacent to a prototype. The visualization confirms the feature representations extracted from the foundation models have learned meaningful morphological patterns20,21,33.

We further visualized the effects of the uncertainty control step in the PICTURE framework. PICTURE employed a weakly supervised machine learning approach that only required slide-level diagnostic annotations, rather than detailed pixel-level annotations. Although this approach is highly efficient and can leverage existing pathology data from many medical centers, noise arising from image regions without definitive diagnostic signals is inevitable. To account for this issue, image tiles from our training cohort (i.e., Mayo Clinic) with higher than the median epistemic uncertainty score were purposely omitted to prevent overfitting the noisy samples and limiting generalizability. As a comparison, we developed an otherwise identical model but without the uncertainty control module, which yielded lower performance (Supplementary Data 1). These results revealed the benefits of incorporating uncertainty measurements and image prototypes in PICTURE’s model training process. We further investigated the regions with high prediction confidence by PICTURE, and results showed that high confidence regions in glioblastoma samples are more likely to contain regions with necrosis (Mann–Whitney U test P-value = 1.97 × 10−4). Additionally, glioblastoma exhibited greater heterogeneity in nuclei sizes compared with PCNSL (Mann–Whitney U test P-value = 1.51 × 10−4), characterized by a distribution with longer tails. PCNSL samples contain significantly denser cells on average (Mann–Whitney U test P-value = 1.57 × 10−23; Fig. 4B and Supplementary Fig. 4). These results highlighted the morphological differences PICTURE leverages to differentiate glioblastoma from its major pathology mimic.

Lastly, we found that frozen section slides have higher epistemic uncertainty scores than permanent sections in general (average uncertainty score = 0.070 and 0.103 for glioblastoma frozen section and PCNSL frozen section slides, respectively; average uncertainty score = 0.045 and 0.048 for glioblastoma FFPE and PCNSL FFPE slides, respectively; Supplementary Fig. 2 and Supplementary Table 5), which is consistent with the diagnostic performance of machine learning models. Overall, PCNSL samples have slightly higher uncertainty scores than glioblastomas (Supplementary Fig. 2). Furthermore, we found that PICTURE’s performance remained consistent across normalization methods, sample types, and the number of slides per patient. (Supplementary Tables 4, 6, and 9−11).

PICTURE detected CNS cancer types not encountered in the model training process

Although distinguishing glioblastoma from PCNSL is of critical clinical importance, they represent only two among numerous CNS cancer types that clinicians encounter in their practice. Based on the wide variety of pathology and molecular profiles observed in CNS cancers, the most recent version of WHO classification defined more than 100 different subtypes, and many of them have an incidence rate lower than 0.1 in 100,000 person-years. These rare subtypes make it challenging for clinicians to accurately diagnose all patients. Because standard machine learning methods are often trained to classify instances into pre-defined categories, the resulting models will pigeonhole each patient as one of the diagnostic groups defined in the training phase, even if the patient does not belong to any of these groups. To address this critical clinical challenge, we collected 874 WSI samples from 817 patients belonging to 67 subtypes of non-glioblastoma, non-PCNSL CNS cancers, in addition to 33 normal brain tissue samples from 33 patients (Fig. 5A and Supplementary Data 1). We evaluated PICTURE’s capability of flagging out-of-distribution (OOD) samples (i.e., neither glioblastoma nor PCNSL) not represented in the training set. Results showed that PICTURE’s epistemic uncertainty estimation module can identify samples that are neither GBM nor PCNSL and unseen in the training phase. Specifically, our OoDD module achieved an AUROC of 0.919 in distinguishing in-distribution (ID; i.e., glioblastoma and PCNSL) samples from OOD cases (Fig. 5B), with a 95% confidence interval (CI) of 0.914–0.923 (see Supplementary Table 6 for stratified accuracy by CNS cancer subtypes), significantly outperforming standard OOD detection methods including Monte Carlo dropout41 (AUROC = 0.666, 95% CI = 0.638–0.695) and deep ensemble42 (AUROC = 0.554, 95% CI = 0.525–0.584). The overall uncertainty scores of OOD samples are higher than those of either Glioblastoma (Mann–Whitney U test P-value < 0.001) or PCNSL (P-value = 0.008), which helps PICTURE identify pathological profiles not encountered in the training set (Supplementary Fig. 3).

A The 2021 WHO Classification of Tumors of the Central Nervous System defines 109 types of CNS cancers, and most of these cancer types have an incidence rate lower than 0.1 per 100,000 person-years. We employed the uncertainty quantification capability of PICTURE to identify normal tissues and CNS tumor types (non-glioblastoma and non-PCNSL; diagnostic categories not included in the training dataset). B A PICTURE model trained to recognize glioblastoma and PCNSL successfully identified non-glioblastoma and non-PCNSL samples with an AUROC of 0.919, significantly outperforming existing OOD detection methods such as Monte Carlo dropout (AUROC = 0.666, P-value < 0.001) and deep ensemble (AUROC = 0.554, P-value < 0.001). P-values were determined by one-sided bootstrap significance tests (N = 1000). The shaded areas show the 95% confidence intervals estimated by 1000 bootstrap samples, and the solid lines represent the average sensitivity and specificity. C UMAP visualization of the image feature space in the test set showed that in-distribution glioblastoma (red isolines), PCNSL (blue isolines), and out-of-distribution (orange isolines) samples occupied distinct feature spaces. The color of the dots shows the epistemic certainty measurement of a given sample quantified by PICTURE, and the colored isolines show the kernel density distribution of each tumor type. Lighter dots represent samples with high levels of certainty. Samples represented by darker colors have lower certainty scores according to the PICTURE model, which was trained with in-distribution cases only. Samples with certainty scores lower than 0.5 are predominantly (84.7%) non-glioblastoma and non-PCNSL cases, while the remaining 15.3% consist of misidentified GBM (75.3%) and PCNSL (24.7%) cases.

By visualizing the extracted latent image features from ID and OOD samples, we observed a visible separation between the feature distributions of OOD and ID samples (Fig. 5C). Specifically, the glioblastoma (red isolines) and PCNSL (blue isolines) samples formed two distinct clusters on the top of the projected feature embedding, while a large cluster predominantly composed of OOD samples (orange isolines) occupied the space at the bottom of the figure. We employed color saturation to visualize the level of uncertainty quantified by PICTURE, with lighter saturation representing samples with lower certainty in their diagnostic prediction. Notably, samples with low certainty scores (< 0.5) are predominantly (84.7%) non-glioblastoma and non-PCNSL cases, while the remaining 15.3% consist of misidentified GBM (75.3%) and PCNSL (24.7%) cases. We examined ID cases possessing image tiles with low certainty scores, and we found that these image tiles generally possessed low cellularity (Mann–Whitney U test P-value < 0.0001). These results highlighted the efficacy of PICTURE’s uncertainty quantification approach for identifying CNS tumor subtypes that are not represented in the training data, without pigeonholing them into one of the known categories.

Discussion

Here we presented PICTURE, a robust and uncertainty-aware deep learning system for identifying pathological differences and differentiating clinically important pathology mimics with the highest misdiagnosis rates across CNS tumor types8,9,10,11,12. To ensure generalizability across pathology samples collected from multiple study populations, we developed PICTURE using three complementary strategies: (1) incorporating pathology images from the literature as prototypes for uncertainty quantification, (2) enhancing model stability through ensemble-based uncertainty score aggregation, and (3) detecting out-of-distribution samples with Bayesian posterior and density estimation38 These innovations allowed PICTURE to outperform off-the-shelf foundation models, demonstrating greater generalizability and resilience to domain shifts caused by variability in sample preparation and digitization protocols30.

Given the clinical importance of differentiating glioblastoma from its mimics10, a few studies attempted to identify their histological differences using custom microscopy techniques in small study cohorts. For example, stimulated Raman histology and convolutional neural networks have been used to identify the pathology characteristics of CNS cancers, including glioblastoma and PCNSL43. However, only two PCNSL samples were included in that study. In contrast, our validation across independent patient cohorts showed that PICTURE attained reliable diagnostic performance across multiple medical centers and pathology slides digitized by different scanners. In particular, PICTURE identified microscopic tissue morphologies that differentiated glioblastoma and PCNSL in high-quality FFPE slides and generalized these quantitative imaging signals to frozen sections obtained intra-operatively. Once digital pathology images are obtained, our computational pipeline typically processes each whole-slide image in under one minute. This includes fewer than 5 s for tissue segmentation and patch coordinate identification, 30–50 s for parallel feature extraction using multiple foundation models, and 300–700 milliseconds for final diagnostic inference. Recent advances in AI-based preprocessing techniques may further reduce preprocessing time and enhance the overall efficiency of digital pathology workflows. Thus, PICTURE has the potential to provide real-time and affordable diagnostic support without requiring immunocytochemical or mass spectroscopy facilities.

Previous studies suggest that inadequate control of prediction uncertainty is a key factor leading to the poor generalizability of many machine learning systems26. Although recent methods for uncertainty quantification have improved the performance of histopathology diagnostic models44,45, domain shift remains a substantial challenge when applying models to samples obtained from different clinical settings46,47. PICTURE directly addressed this issue by combining self-supervised feature extractors with diverse training data and robust uncertainty modeling. This integrative design enabled PICTURE to maintain consistent performance across heterogeneous pathology samples, thereby enhancing model generalizability. Our study provides insights into the behavior of foundation models in a challenging and clinically important diagnostic task, where models are applied to cancer types and sample types (e.g., frozen section) that were underrepresented in their original training datasets. We found that models trained on diverse and extensive multi-institutional datasets tended to exhibit relatively stable performance across both FFPE and frozen section slides. In contrast, models primarily trained on public repositories showed greater variability in performance when applied to independent cohorts. This highlights the importance of increasing training data diversity to enhance model robustness. In addition, different foundation models occasionally produced conflicting predictions, underscoring the need for advanced ensemble strategies to ensure reliable deployment in clinical settings.

An additional key aspect of PICTURE is that it identifies CNS cancer types that traditional machine learning approaches fail to address by implementing an OoDD model. Because there are many rare subtypes of CNS cancers that affect intra-operative and post-operative treatment decision-making28, it is crucial for machine learning models to flag atypical histopathology patterns with low incidence rates. In the standard machine learning approach, if a sample encountered in the inference phase does not belong to any of the known categories, it will be incorrectly classified as one of the groups that the model is trained to predict38. This conventional approach in AI is particularly problematic for CNS cancer diagnosis because it may increase the likelihood of clinicians missing rare cancer subtypes. PICTURE addressed this issue by leveraging a Bayesian uncertainty quantification method. Similar to conformal prediction frameworks48, our Bayesian method offers a mathematically principled approach for estimating uncertainty in predictions, thereby enhancing the reliability of model output44. We demonstrated that PICTURE accurately captured CNS cancer types and benign cases not included in its training population. Our OoDD module can be integrated as a screening layer within standard classification frameworks, requiring minimal modifications to the clinical workflow. By flagging cases likely to be neither glioblastoma nor PCNSL, it enables clinicians to promptly identify rare or atypical presentations that warrant further evaluation, thereby enhancing the trustworthiness of computer-aided diagnostics. These results suggested that PICTURE could facilitate routine pathology diagnosis, which requires clinicians to accurately identify common cancers while staying vigilant for rare diseases and atypical pathology manifestations.

Acquiring a large and diverse cohort of PCNSL remains challenging due to its low incidence. The limited representation of PCNSL in publicly available datasets such as TCGA (n = 2) underscores the necessity for dedicated case collection to enable pathology AI research. To address this gap, we assembled 355 PCNSL and 1,822 glioblastoma cases from five medical centers spanning three continents. Our study evaluated the clinical utility of pathology AI for brain cancer diagnosis, providing a unique opportunity to assess model generalizability across diverse populations and clinical settings.

Our study has a few limitations. First, although PICTURE was trained and validated across multiple medical centers internationally, our patients are predominantly Caucasian. Additional research is required to evaluate the performance of the proposed model in diverse populations49,50. In addition, given the increasing role of multi-omics data in defining cancer subtypes, incorporating digital pathology with genomic, epigenomic, transcriptomic, and proteomic data may provide further insights into defining additional subtypes of CNS cancers. Furthermore, our proof-of-concept analyses focused on glioblastoma and its major pathology mimics. Future research can extend our approach to evaluate samples of other disease types. Lastly, the number of patients from individual sites is limited, particularly for PCNSL, due to its low incidence. Future work should incorporate larger, more diverse cohorts to enhance model validation and ensure robust performance, especially for underrepresented populations.

In summary, we demonstrated that PICTURE is a reliable pathology diagnosis system for differentiating prevalent CNS cancers with the highest likelihood of misdiagnosis9. PICTURE attains superior performance in diagnosing pathology samples collected from multiple centers worldwide, provides decision support to intra-operative frozen section evaluation, and recognizes rare cancer types not included in the training cohorts. Our approach provides reliable and efficient real-time diagnostic support for brain cancers and is generalizable to the pathological evaluation of other complex diseases.

Methods

Data sources

We collected 2667 formalin-fixed paraffin-embedded permanent slides and 1386 frozen section slides of 2447 brain cancer patients from BWH, UPenn, Mayo Clinic, the Medical University of Vienna, and TVGH. The slides from BWH, UPenn, and the Medical University of Vienna were scanned by Hamamatsu S210 scanners at 40× magnification, with pixel widths ranging from 0.221 to 0.253 microns per pixel. Slides from the Mayo Clinic are scanned at 40× with Aperio GT450 scanners. Slides from TVGH are scanned at 0.25 microns per pixel with Philips UFS scanners. For our supplemental results, we also included 862 permanent slides and 1169 frozen section slides (from 597 brain cancer patients) from the TCGA cohort from the National Cancer Institute Genomic Data Commons (https://portal.gdc.cancer.gov/). There was no patient overlap between any of these study cohorts. All patients provided written informed consent at the time of enrollment. Our multi-center study was approved by the Harvard Medical School Institutional Review Board (IRB20-1509). Our MI-CLAIM checklist is included in the appendix.

Image preprocessing of gigapixel whole-slide images

We tessellated each gigapixel digital pathology slide into image patches with a resolution of 1000 × 1000 pixels at 20× magnification, and we employed a step size of 250 pixels in both the X and Y directions27,51. We excluded uninformative tiles that only contained blank backgrounds or stain smudges using their color (≥ 95% of pixels with intensity < 10/255 or > 240/255, hue ≥ 70/360 or < 230/360, or saturation < 0.2) and cell density profiles (< 100 cell nuclei per tile; cell nuclei were detected using the blob detection algorithm in the scikit-image library, with a maximum blob radius of 10 pixels, and a threshold value of 0.2). On average, we excluded 67.1% of tiles from each gigapixel WSI and selected up to 4000 tiles with the highest foreground ratios and cell densities. All tiles were normalized using ImageNet-1K standards52. Standard Reinhard53 and Macenko54 normalization methods were also employed for comparison, which showed no significant differences, consistent with findings from prior benchmarking results55 (Supplementary Table 9).

Pathology image feature extraction via self-supervised machine learning

We employed nine histopathological foundation models (CTransPath, Lunit, Phikon, UNI, CHIEF, CONCH, GPFM, mSTAR, and Virchow2) to extract quantitative representations from digital pathology images. These models were selected based on their demonstrated performance20,30,31,32,33,34,35,36,37 and extensive benchmark studies56. CTransPath employed three initial convolutional layers to enable local feature extraction and boost training stability, followed by four Swin Transformers39, yielding a 765-dimensional latent vector per image. CHIEF enhanced the CTransPath architecture by integrating image features through attention-based feature fusion, instance-level feature identification, and WSI-level weakly supervised learning. Additionally, it aligned image and text representations using a pre-trained text encoder from the Contrastive Language–Image Pre-training model57. Lunit, UNI, and Virchow2 are based on the Dino Vision Transformer trained on proprietary whole-slide image datasets. CONCH used an image encoder pretrained with the iBOT framework. GPFM employed a teacher-student architecture during training and combined masked image modeling loss with self-distillation. mSTAR adopts a two-stage pretraining strategy, incorporating patch-level self-supervised training followed by slide-level contrastive learning. Virchow2 is an updated version of the original Virchow model, developed using an expanded dataset comprising up to 3.1 million WSIs. Unlike other models trained at a single magnification level, Virchow2 employs mixed-magnification learning, concurrent training the model across four magnification levels (5, 10, 20, and 40×). We extracted the latent features of all input image tiles and trained a multi-layer perceptron with one hidden layer to classify PCNSL and glioblastoma. We evaluated Ciga58 as a feature extractor, which failed to achieve good performance in the internal validation of the Mayo Clinic cohort (AUROC = 0.469; Supplementary Table 8). We therefore excluded it from our revised ensemble model.

We employed the Mayo Clinic cohort as the development training set because it contains diverse samples from consulting cases and a balanced class distribution. We evaluated the internal validity of our approaches using fivefold cross-validation before applying the trained model to independent patient populations. The PICTURE framework uses ensembles of models trained across fivefolds, leveraging the top-performing foundation models (Virchow2 and UNI) selected based on their performance in the Mayo development set (Supplementary Data 1 and Supplementary Table 10). We ensured that there was no patient overlap between the folds for model development and the evaluation fold in the Mayo Clinic cohort.

Uncertainty quantification for domain generalization

To capture a wide range of pathology manifestations of patients with CNS cancers, we acquired hematoxylin and eosin (H&E)-stained images of glioblastoma and PCNSL from medical literature in PubMed, Pathology Outlines, and Radiopaedia.org published between 2000 and 2023. Considering the significantly lower incidence rate of primary CNS lymphoma (PCNSL) in our clinical cohorts, we aimed to maximize the usage of all available pathology images of PCNSL from the medical literature to build our set of image prototypes. In addition, we adjusted the PCNSL to glioblastoma ratio according to the reciprocal of their respective prevalence rates to prevent our model from focusing on only one of these diagnostic groups. For PCNSL, we used the search terms “(primary central nervous system lymphoma) AND (histology)” and then iterated all accessed literature (free access to figures) and extracted the prototypical images. We input these images into our feature extractor to obtain low-dimensional embeddings of pathology images typical of these diagnoses (i.e., “prototypes”59,60). We computed the similarity matrix between all pairs of images (including all prototypes and all image tiles extracted from our study cohorts) by the inverse Gaussian of L2 distances. To increase computational efficiency, we set the similarity between any two images (image \({{{\bf{I}}}}\) and image \({{{\bf{J}}}}\)) to 0 if \({{{\bf{J}}}}\) is not among the \(k\) nearest neighbors of \({{{\bf{I}}}}\). Based on observations of the similarity score distribution, we set \(k\) to 500.

To compute the diagnostic uncertainty for each sample in the training set, we ran Monte Carlo permutation tests61 to generate the posterior distribution of the uncertainty measurement. Initially, except for the prototypes, all samples were unlabeled. Using the similarity score of the unlabeled images to the prototypes, we assigned pseudo-labels for all unlabeled samples. Images that are not among \(k\) nearest neighbors of any prototypes remained unlabeled after this procedure. We then selected an unlabeled sample \({{{\bf{S}}}}\). If the sum of the distances between \({{{\bf{S}}}}\) and all samples with PCNSL labels was smaller than the distance between a random unlabeled sample \({{{\bf{R}}}}\) and samples with PCNSL labels, we labeled sample \({{{\bf{S}}}}\) as PCNSL; otherwise, it is labeled as glioblastoma. We repeated this process until all samples were labeled. Using the Monte Carlo permutation test, we computed \({{{{\rm{P}}}}}_{{{{\rm{PCNSL}}}}}\) and \({{{{\rm{P}}}}}_{{{{\rm{GBM}}}}}\), the estimated probabilities of each data point being PCNSL and glioblastoma, respectively. We used Shannon entropy62 to represent the uncertainty for each data point, which is defined as \(H\left({{{\bf{S}}}}\right)=-{P}_{{{{\rm{PCNSL}}}}}\log {P}_{{{{\rm{PCNSL}}}}}-{P}_{{{{\rm{GBM}}}}}\log {P}_{{{{\rm{GBM}}}}}\). We employed feature vectors with entropies lower than the median entropy to train our diagnostic classification model.

We employed ensemble-based epistemic uncertainty quantification, an unsupervised domain adaptation strategy63, to build our final models. For each foundation model, the models derived from all fivefolds of the training data were aggregated into a model ensemble, and each input image yields an ensemble prediction. We approximate the Bayesian distribution of the prediction via bootstrap aggregation64. We employed the mean prediction score as the tile-level prediction and the standard deviation of the score as the uncertainty measurement. For each slide, we identified 50% of the tile-level predictions with the lowest prediction uncertainty, and we obtained the slide-level predictions using the mean predictions among this set of tiles. We evaluated our model using pathology images from independent patient cohorts.

Ablation experiments

We performed ablation studies to evaluate PICTURE’s performance against two baseline models: (1) otherwise identical classifiers trained with features from foundation models but without uncertainty control and (2) a standard end-to-end supervised machine learning model based on the Swin Transformer architecture. In addition, we conducted additional experiments comparing PICTURE to several of its variants: (1) a version incorporating stain normalization across all foundation models; (2) versions of PICTURE incorporating different model combinations consisting of two or three foundation models; (3) variants applying different thresholds in the uncertainty-based tile filtering step, including one that retained only the top 25% most confident tiles and two others using absolute uncertainty cutoffs of 0.25 and 0.1; and (4) variants employing alternative aggregation strategies, using the 10th, 30th, 50th, 70th, and 90th percentile of the tile-level prediction scores.

Uncertainty quantification for out-of-distribution sample detection

We employed the Out-of-Distribution Detector (OoDD) module38 to identify out-of-distribution samples not represented in the training set. Specifically, OoDD is built upon a PostNet framework38, and we trained a linear classifier with a fixed image encoder using glioblastoma and PCNSL samples. Each training sample was mapped to a low-dimensional latent space using the trained image encoder θ, and then sent to two masked autoregressive flow components to learn \(P({{{\bf{z}}}}{|c;}\theta )\), the probability of latent feature \({{{\bf{z}}}}\) given each possible category c. For an input image \({{{\bf{X}}}}\) and its corresponding label \(y\), the categorical distribution prediction \(P\left({y|}{{{\bf{X}}}};\theta \right)\) approximated \({{{\rm{Dir}}}}\)(α), a Dirichlet distribution of the diagnostic categories included in the training set. The concentration parameter \(\alpha\) in the Dirichlet distribution can be interpreted as the number of observed samples that follow the same distribution as the input38. Hence, \(\alpha\) served as the epistemic certainty measure for OoDD, where a high \(\alpha\) indicates likely in-domain samples (i.e., samples whose pathology diagnoses are included in the training set) and a low \(\alpha\) indicates likely out-of-distribution samples (i.e., samples whose diagnoses are not represented in the training set). After we estimated the epistemic uncertainty of all image tiles, we computed patient-level uncertainty scores for detecting out-of-distribution cancer types. We derive the patient-level uncertainty scores by averaging the tiles within the lowest 5% of epistemic uncertainty for each patient. To validate the OoDD framework, we conducted a tenfold cross-validation. We employed hyperparameter tuning (learning rate: 10−6–10−3; L2 regularization weight: 0.0001–0.1; latent dimension of 4, 8, or 16; entropy weight: 10−9–10−3) in model development and reported the average performance of the final model on the test set. The OoDD module was trained using GBM and PCNSL samples from the Mayo cohort and was tested on the previously unseen CNS subtypes from the Vienna cohort, with the sample sizes for each subtype shown in Supplementary Table 7. Note that these subtypes were not included in the training dataset, and thus their class imbalance will not affect the trained model.

We further compared the performance of the OoDD framework with two other standard OoDD methods, Mante Carlo dropout41 and deep ensemble42. Monte Carlo dropout involves training the model with the GBM vs. PCNSL classification task and then estimating the ensembled class probabilities of each tile through random dropout on the testing cohort. Subsequently, the slide-level uncertainty is defined by the averaged tile-level uncertainty across all tiles. The out-of-distribution through deep ensemble is achieved in a similar manner, except that the ensembled class probabilities are estimated through multiple models trained with random initialization. In our experiments, we used 5 ensembles for each method, and the dropout probability was set to p = 0.5 for Monte Carlo dropout.

Evaluation of model generalizability using independent patient populations

We evaluated the generalizability of our machine learning models using the AUROCs in the BWH, UPenn, the Medical University of Vienna, and TVGH cohorts. We further validated PICTURE in TCGA (Supplementary Fig. 1). We examined the differences in AUROCs of different machine learning methods using the one-sided bootstrap significance test65. For visualization, the lower and upper bounds of the confidence interval for the ROC curves were estimated from 1000 random bootstrap samples using the stats.bootstrap function from the scipy package (version 1.2.3).

Interpreting tissue attributes identified by PICTURE

To quantify the cellular and tissue attributes underpinning PICTURE’s predictions, we first segmented the tiles using HoverNet66 trained with the PanNuke dataset67 to identify cell nuclei. We then computed the roundness and size of each nucleus and the number of cells in each image tile. We determined the size of the nucleus by counting the pixels within its segmented contour. We computed the nuclei roundness using the circularity formula: \(C=4\pi ({{{\rm{Area}}}})/{{{{\rm{Perimeter}}}}}^{2}\), where a value of 1 indicates a perfect circle. In addition, we developed a necrosis identifier trained with 3384 instances of necrosis in brain cancer tissues annotated by a neuropathologist (M.P.N.). This classifier attained an AUROC of 0.92 in the held-out test set. We employed this classifier to compute a necrosis score, where 1 indicates regions showing typical necrotic changes and 0 indicates regions unlikely to contain necrosis. We applied these computational methods to quantify cellular and tissue characteristics of regions receiving high confidence scores from PICTURE.

Prediction result visualization

We visualized the prediction results of the PICTURE model using a red-blue colormap, where regions with high predicted probabilities were highlighted in vibrant red, while areas with lower predicted probabilities were shown in blue. To facilitate visual inspection, we optimized the contrast of the colormaps using histogram equalization. We implemented a Gaussian kernel to smooth color gradients, enhancing visualization and creating detailed, seamless heatmaps. The resulting heatmaps were evaluated and interpreted by neuropathologists with cumulative practice experience of over 40 years (T.R.P., A.W., M.P.N., and J.A.G.). A set of histologic features was scored qualitatively on a scale of 1 to 10 using 33 digital slides of glioblastoma and PCNSL with high prediction confidence based on the PICTURE model. Subsequently, we compared the scores of PCNSL and glioblastoma samples. The features assessed were as follows: density of tumor, microvascular proliferation, pseudopalisading necrosis, geographic necrosis, tumor infiltration, leptomeningeal involvement, thrombosed vessels, perivascular inflammation, perivascular tumor, surgical-related hemorrhage, vacuolization, and edema.

To interpret the feature space employed by PICTURE, we employed the Uniform Manifold Approximation and Projection (UMAP)68 with PCA initialization to visualize the learned image representations in a two-dimensional space while preserving both local and global data structures. We further plotted the uncertainty score of each sample and labeled the data points by diagnoses and sample collection sites. Our codes and trained models are available at https://github.com/hms-dbmi/picture.

Evaluation of inter-observer variability

To assess diagnostic variability using real-world sample sets, we conducted a multi-institutional study involving nine board-certified neuropathologists69. We recruited these participants from the United States, European Union, East Asia, and Southeast Asia, representing a spectrum of clinical experience: three junior (< 5 years of practice), four intermediate (5–15 years), and two senior (> 15 years) neuropathologists. We curated a representative set of 40 slides, comprising 20 FFPE slides and 20 frozen section slides. Each neuropathologist evaluated all slides independently. To simulate real-world diagnostic constraints, participants review each case during a time-limited session of 90 s40. Following this initial evaluation, neuropathologists had the option to re-evaluate the samples and modify their initial diagnoses as needed.

To evaluate diagnosis performance based solely on H&E slides, we did not provide immunohistochemistry, genomic data, or clinical history to the evaluators. For each case, neuropathologists recorded a binary diagnosis (PCNSL or glioblastoma) along with a confidence level on a five-point scale (0, 25, 50, 75, or 100%). We further provided an optional free-text field for comments.

To quantify the overall inter-observer agreement, we computed Fleiss’ kappa, a statistical measure of consistency across multiple raters. In addition, we assessed pairwise diagnostic similarity among neuropathologists, as well as between each neuropathologist and the ground truth, using the Jaccard index70. We stratified our analyses by FFPE and frozen section samples.

Statistics and reproducibility

All experiments have been independently repeated at least three times with consistent results. Experiments involving performance quantification were repeated five times.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The data from The Cancer Genome Atlas can be obtained from the National Cancer Institute Genomic Data Commons (https://gdc.cancer.gov/). The pathology and diagnostic information of the Medical University of Vienna cohort is deposited at https://doi.org/10.25493/WQ48-ZGX. Researchers may obtain de-identified data directly from the Mayo Clinic, Brigham & Women’s Hospital, Taipei Veterans General Hospital, the Medical University of Vienna (frozen sections), and the Hospital of the University of Pennsylvania upon request, subject to review, institutional ethical approvals, and data use agreements. Data access enquiries should be directed to K.-H.Y. We aim to forward all requests to the managers of these institutional datasets in 2 weeks, and these requests will be evaluated according to their institutional policies. Data are strictly only for non-commercial academic use. Publicly available case data were obtained from Radiopaedia, American Society of Hematology Image Bank, WebPathology, PathologyOutlines, Wikimedia Commons, and The Internet Pathology Laboratory databases. These resources can be accessed at the following URLs: https://radiopaedia.org/cases/primary-cns-lymphoma-histology; https://imagebank.hematology.org/reference-case/58/primary-cns-diffuse-large-bcell-lymphoma; https://www.webpathology.com/images/neuropath/non-glial-tumors/cns-lymphomas; https://www.pathologyoutlines.com/topic/lymphomaprimaryCNSlymphoma.html; https://commons.wikimedia.org/wiki/File:Glioblastoma_-_high_mag.jpg; https://www.tau.ac.il/medicine/tau-only/webpath/cnshtml/cnsidx.htm. This study relies on retrospective analysis of anonymized pathology slides. Source Data is provided with this paper. The remaining data are available within the Article, Supplementary Information, or Source Data file. Source data are provided with this paper.

Code availability

Our codes and trained models are available at https://github.com/hms-dbmi/picture.

References

Ostrom, Q. T., Cioffi, G., Waite, K., Kruchko, C. & Barnholtz-Sloan, J. S. CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2014-2018. Neuro Oncol. 23, iii1–iii105 (2021).

Kurdi, M. et al. Simple approach for the histomolecular diagnosis of central nervous system gliomas based on 2021 World Health Organization Classification. World J. Clin. Oncol. 13, 567–576 (2022).

Louis, D. N. et al. The 2021 WHO classification of tumors of the central nervous system: a summary. Neuro Oncol. 23, 1231–1251 (2021).

Bondy, M. L. et al. Brain tumor epidemiology: consensus from the brain tumor epidemiology consortium. Cancer 113, 1953–1968 (2008).

Giese, A. & Westphal, M. Treatment of malignant glioma: a problem beyond the margins of resection. J. Cancer Res. Clin. Oncol. 127, 217–225 (2001).

Low, J. T. et al. Primary brain and other central nervous system tumors in the United States (2014-2018): a summary of the CBTRUS statistical report for clinicians. Neurooncol Pr. 9, 165–182 (2022).

Stupp, R. et al. Radiotherapy plus concomitant and adjuvant temozolomide for glioblastoma. N. Engl. J. Med. 352, 987–996 (2005).

Gritsch, S., Batchelor, T. T. & Gonzalez Castro, L. N. Diagnostic, therapeutic, and prognostic implications of the 2021 World Health Organization classification of tumors of the central nervous system. Cancer 128, 47–58 (2022).

Bhatt, V. R., Shrestha, R., Shonka, N. & Bociek, R. G. Near misdiagnosis of glioblastoma as primary central nervous system lymphoma. J. Clin. Med. Res. 6, 299–301 (2014).

Omuro, A. M., Leite, C. C., Mokhtari, K. & Delattre, J. Y. Pitfalls in the diagnosis of brain tumours. Lancet Neurol. 5, 937–948 (2006).

Sugita, Y. et al. Intraoperative rapid diagnosis of primary central nervous system lymphomas: advantages and pitfalls. Neuropathology 34, 438–445 (2014).

Sugita, Y. et al. Primary central nervous system lymphomas and related diseases: pathological characteristics and discussion of the differential diagnosis. Neuropathology 36, 313–324 (2016).

Reinecke, D. et al. Fast intraoperative detection of primary CNS lymphoma and differentiation from common CNS tumors using stimulated Raman histology and deep learning. Neuro Oncol. 27, 1297–1310 (2024).

Önder, E. et al. Corticosteroid pre-treated primary CNS lymphoma: a detailed analysis of stereotactic biopsy findings and consideration of interobserver variability. Int. J. Clin. Exp. Pathol. 8, 7798–7808 (2015).

Kurdi, M. et al. Diagnostic discrepancies between intraoperative frozen section and permanent histopathological diagnosis of brain tumors. Turk. Patoloji Derg. 38, 34–39 (2022).

Cao, L., Zhang, M., Zhang, Y., Ji, B. & Wang, X. Progress of radiological‑pathological workflows in the differential diagnosis between primary central nervous system lymphoma and high‑grade glioma (Review). Oncol. Rep. 49, 20 (2023).

Alexander, B. M. & Cloughesy, T. F. Adult glioblastoma. J. Clin. Oncol. 35, 2402–2409 (2017).

Lauw, M. I. S., Lucas, C. G., Ohgami, R. S. & Wen, K. W. Primary central nervous system lymphomas: a diagnostic overview of key histomorphologic, immunophenotypic, and genetic features. Diagnostics (Basel) 10, 1076 (2020).

Wu, S. et al. The role of surgical resection in primary central nervous system lymphoma: a single-center retrospective analysis of 70 patients. BMC Neurol. 21, 190 (2021).

Wang, X. et al. A pathology foundation model for cancer diagnosis and prognosis prediction. Nature 634, 970–978 (2024).

El Nahhas, O. S. M. et al. From whole-slide image to biomarker prediction: end-to-end weakly supervised deep learning in computational pathology. Nat. Protoc. 20, 293–316 (2024).

Redlich, J.-P. et al. Applications of artificial intelligence in the analysis of histopathology images of gliomas: a review. npj Imaging 2, 16 (2024).

Tofte, K., Berger, C., Torp, S. H. & Solheim, O. The diagnostic properties of frozen sections in suspected intracranial tumors: a study of 578 consecutive cases. Surg. Neurol. Int. 5, 170 (2014).

Yu, K. H. & Kohane, I. S. Framing the challenges of artificial intelligence in medicine. BMJ Qual. Saf. 28, 238–241 (2019).

Hüllermeier, E. & Waegeman, W. Aleatoric and epistemic uncertainty in machine learning: an introduction to concepts and methods. Mach. Learn. 110, 457–506 (2021).

Chua, M. et al. Tackling prediction uncertainty in machine learning for healthcare. Nat. Biomed. Eng. 7, 711–718 (2022).

Ghaffari Laleh, N. et al. Benchmarking weakly-supervised deep learning pipelines for whole slide classification in computational pathology. Med. Image Anal. 79, 102474 (2022).

Roetzer-Pejrimovsky, T. et al. The digital brain tumour atlas, an open histopathology resource. Sci. Data 9, 55 (2022).

Wang, Z., Jensen, M. A. & Zenklusen, J. C. A practical guide to the Cancer Genome Atlas (TCGA). Methods Mol. Biol. 1418, 111–141 (2016).

Wang, X. et al. Transformer-based unsupervised contrastive learning for histopathological image classification. Med. Image Anal. 81, 102559 (2022).

Filiot, A. et al. Scaling self-supervised learning for histopathology with masked image modeling. Preprint at https://www.medrxiv.org/content/10.1101/2023.07.21.23292757v1 (2023).

Kang, M., Song, H., Park, S., Yoo, D. & Pereira, S. Benchmarking Self-Supervised Learning on Diverse Pathology Datasets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3344–3354 (2023).

Chen, R. J. et al. Towards a general-purpose foundation model for computational pathology. Nat. Med 30, 850–862 (2024).

Zimmermann, E. et al. Virchow2: scaling self-supervised mixed magnification models in pathology. Preprint at https://arxiv.org/abs/2408.00738 (2024).

Lu, M. Y. et al. A visual-language foundation model for computational pathology. Nat. Med. 30, 863–874 (2024).

Ma, J. et al. A generalizable pathology foundation model using a unified knowledge distillation pretraining framework. Nat. Biomed. Eng. https://doi.org/10.1038/s41551-025-01488-4 (2025).

Xu, Y. et al. A multimodal knowledge-enhanced whole-slide pathology foundation model. Preprint at https://arxiv.org/abs/2407.15362 (2024).

Charpentier, B., Zügner, D. & Günnemann, S. Posterior network: uncertainty estimation without ood samples via density-based pseudo-counts. Adv. Neural Inf. Process. Syst. 33, 1356–1367 (2020).

Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 10012–10022 (2021).

ALQahtani, D. A. et al. Factors underlying suboptimal diagnostic performance in physicians under time pressure. Med. Educ. 52, 1288–1298 (2018).

Gal, Y. A. G. Z. Dropout as a Bayesian approximation: representing model uncertainty in deep learning. Proc. 33rd Int. Conf. Int. Conf. Mach. Learn. 48, 1050–1059 (2016).

Mehrtash, A., Wells, W. M., Tempany, C. M., Abolmaesumi, P. & Kapur, T. Confidence calibration and predictive uncertainty estimation for deep medical image segmentation. IEEE Trans. Med Imaging 39, 3868–3878 (2020).

Hollon, T. C. et al. Near real-time intraoperative brain tumor diagnosis using stimulated Raman histology and deep neural networks. Nat. Med. 26, 52–58 (2020).

Dolezal, J. M. et al. Uncertainty-informed deep learning models enable high-confidence predictions for digital histopathology. Nat. Commun. 13, 6572 (2022).

Rączkowski, Ł., Możejko, M., Zambonelli, J. & Szczurek, E. ARA: accurate, reliable and active histopathological image classification framework with Bayesian deep learning. Sci. Rep. 9, 14347 (2019).

Yu, K. H. et al. Reproducible machine learning methods for lung cancer detection using computed tomography images: algorithm development and validation. J. Med Internet Res 22, e16709 (2020).

Thagaard, J. et al. Can you trust predictive uncertainty under real dataset shifts in digital pathology? In Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. MICCAI 2020. Lecture Notes in Computer Science, Vol. 12261, (Springer, Cham, 2020).

Linmans, J., Elfwing, S., van der Laak, J. & Litjens, G. Predictive uncertainty estimation for out-of-distribution detection in digital pathology. Med. Image Anal. 83, 102655 (2023).

Du, M., Yang, F., Zou, N. & Hu, X. Fairness in deep learning: a computational perspective. IEEE Intell. Syst. 36, 25–34 (2020).

Barocas, S., Hardt, M. & Narayanan, A. Fairness in machine learning. Nips Tutor. 1, 2017 (2017).

Nasrallah, M. P. et al. Machine learning for cryosection pathology predicts the 2021 WHO classification of glioma. Med 4, 526–540.e524 (2023).

Russakovsky, O. et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

Reinhard, E., Adhikhmin, M., Gooch, B. & Shirley, P. Color transfer between images. IEEE Comput. Graph. Appl. 21, 34–41 (2001).

Macenko, M. et al. A method for normalizing histology slides for quantitative analysis. In 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, pp. 1107–1110 (Boston, MA, USA, 2009).

Neidlinger, P. et al. Benchmarking foundation models as feature extractors for weakly-supervised computational pathology. Preprint at https://arxiv.org/abs/2408.15823 (2024).

Campanella, G. et al. A clinical benchmark of public self-supervised pathology foundation models. Nat. Commun. 16, 3640 (2025).

Radford, A. et al. Learning transferable visual models from natural language supervision. Proc. 38th Int. Conf. Mach. Learn. 139, 8748–8763 (2021).

Ciga, O., Xu, T. & Martel, A. L. Self supervised contrastive learning for digital histopathology. Mach. Learn. Appl. 7, 100198 (2022).

Li, O., Liu, H., Chen, C. & Rudin, C. Deep learning for case-based reasoning through prototypes: a neural network that explains its predictions. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence (AAAI'18/IAAI'18/EAAI'18), 432, 3530–3537 (AAAI Press, 2018).

Chen, C. et al. This looks like that: deep learning for interpretable image recognition. 33rd Conference on Neural Information Processing System, Vol. 7, 8930–8941 (NeurIPS, 2019).

Durmus, A., Moulines, E. & Pereyra, M. Efficient Bayesian computation by proximal Markov chain Monte Carlo: when Langevin meets Moreau. SIAM J. Imaging Sci. 11, 473–506 (2018).

Shannon, C. E. The mathematical theory of communication. 1963. MD Comput 14, 306–317 (1997).

Lakshminarayanan, B., Pritzel, A. & Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. Adv. Neural Inf. Process. Syst. 30, 6405–6416 (2017).

Clyde, M. & Lee, H. Bagging and the Bayesian bootstrap. In International Workshop on Artificial Intelligence and Statistics, pp. 57–62 (PMLR, 2001).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics 44, 837–845 (1988).

Graham, S. et al. Hover-Net: simultaneous segmentation and classification of nuclei in multi-tissue histology images. Med. Image Anal. 58, 101563 (2019).

Graham, S. et al. MILD-Net: minimal information loss dilated network for gland instance segmentation in colon histology images. Med. Image Anal. 52, 199–211 (2019).

Healy, J. & McInnes, L. Uniform manifold approximation and projection. Nat. Rev. Methods Prim. 4, 82 (2024).

Clayton, D. A. et al. Are pathologists self-aware of their diagnostic accuracy? Metacognition and the diagnostic process in pathology. Med. Decis. Mak. 43, 164–174 (2023).

Real, R. & Vargas, J. M. The probabilistic basis of Jaccard’s index of similarity. Syst. Biol. 45, 380–385 (1996).

Gaillard, F. Primary CNS lymphoma (histology). Radiopaedia.org. https://radiopaedia.org/cases/primary-cns-lymphoma-histology (2010).

Ferreri, A. J. M. et al. Primary central nervous system lymphoma. Nat. Rev. Dis. Prim. 9, 66 (2023).

Thirunavukkarasu, B. et al. Primary diffuse large B-cell lymphoma of the CNS, with a “Lymphomatosis cerebri” pattern. Autops Case Rep. 11, e2021250 (2021).

American Society of Hematology. Primary CNS diffuse large B-cell lymphoma. ASH Image Bank. https://imagebank.hematology.org/reference-case/58/primary-cns-diffuse-large-bcell-lymphoma (2025).

WebPathology. Primary CNS lymphoma. https://www.webpathology.com/images/neuropath/non-glial-tumors/cns-lymphomas (2025).

PathologyOutlines. Primary CNS lymphoma. https://www.pathologyoutlines.com/topic/lymphomaprimaryCNSlymphoma.html (2025).