Abstract

When developing deep learning models for accurate property prediction, it is sometimes overlooked that some material physical properties are insensitive to the local atomic environment. Here, we propose the elemental convolution neural networks (ECNet) to obtain more general and global element-wise representations to accurately model material properties. It shows better prediction in properties like band gaps, refractive index, and elastic moduli of crystals. To explore its application on high-entropy alloys (HEAs), we focus on the FeNiCoCrMn/Pd systems based on the data of DFT calculation. The knowledge from less-principal element alloys can enhance performance in HEAs by transfer learning technique. Besides, the element-wise features from the parent model as universal descriptors retain good accuracy at small data limits. Using this framework, we obtain the concentration-dependent formation energy, magnetic moment and local displacement in some sub-ternary and quinary systems. The results enriched the physics of those high-entropy alloys.


Introduction

In recent years, machine learning (ML) methods have been successfully employed for classification, regression, and clustering tasks in materials science1. To date, a number of notable advances have been made with ML approaches in this field. For example, many predictive models have been developed to model specific properties case by case, e.g., melting temperatures2, the superconducting critical temperature3, bandgap energies4, and mechanical properties5. Typically, hand-crafted high-dimensional descriptors are designed to suit the physics of the underlying property, with stoichiometric attributes, elemental and structural properties, and correlated physical properties generally serving as descriptors5,6,7. Combined with ML algorithms such as random forests and support vector machines, this type of method can achieve good accuracy on a specific problem. More recently, another prevalent approach has appeared, based on graph models and deep neural networks. SchNet8, CGCNN9, and MEGNet10 are examples of such graph models, which make it convenient to learn any material property directly from raw crystal input. The remarkable expressive power of deep learning graph models allows them to describe the material properties of various systems precisely and to achieve state-of-the-art performance.

One of the most important applications of ML in materials science is alloy modeling, including metallic glasses, high-entropy alloys (HEAs), magnets, and superalloys11. HEAs have garnered substantial interest due to their outstanding properties, such as high mechanical strength, good resistance to corrosion, and attractive magnetic and electronic properties12,13,14. The multiple principal (at least five) alloying elements in HEAs make calculations based on density functional theory (DFT) difficult, because the solid-solution character requires large supercells and a complex crystal structure space involving multiple prototypes. A large number of studies have demonstrated that ML methods can alleviate such problems. Based on developed ML potentials, many fundamental properties, such as screw dislocations, defects, and segregation in the MoNbTaW and MoNbTaVW alloy systems, can be studied systematically15,16. In addition, data-driven ML approaches to accelerate the discovery of HEAs, such as phase classification17,18 and the prediction of configurational energies19, have been explored and have made progress. Although data-driven ML models can learn properties of HEAs, HEA data sets are small compared with DFT databases such as the Materials Project20, the Open Quantum Materials Database21, and AFLOWLIB22, which limits the effectiveness and applicability of ML models.

Graph models transform a crystal into a graph described by atomic attributes, bond attributes, and even ground state attributes. However, when predicting the properties of materials, the target property does not always have a one-to-one correspondence with the encoded compositional and structural features. For example, purely composition-based ML models such as ElemNet23 and Roost24 do not rely on knowledge of crystal structures, but are capable of achieving high performance on the average formation enthalpy and bandgap. Furthermore, for properties like the bandgap or the superconducting critical temperature, the same target value may correspond to very different crystal structures. For this reason, we highlight the benefit of using more global or general representations for materials. Here, we propose the operation of elemental convolution in deep neural networks, which merges the atom-wise features into element-wise features. We demonstrate that this approach can achieve competitive performance in modeling properties like shear modulus, bandgap, and refractive index, compared with previous models. Furthermore, multi-task learning with multiple regression objectives can enhance performance through joint learning and makes property prediction very convenient.

In this work, we develop elemental convolution graph neural networks (ECNet) to predict material properties. This framework is capable of learning material representations from elemental embeddings that it trains on a data set by itself. Under the operation of the elemental convolution, the element-wise features are the intermediate and final descriptors, which extract knowledge of both atomic information and crystal structures and are updated by learning the target material properties. Our approach is to construct a more general and global attribute than the delicate representations in crystal graphs when predicting material-property relationships, and these global attributes are demonstrated to be superior to previous models in some intrinsic property predictions. Utilizing the developed ECNet model, we focus on applications in the realm of high-entropy alloys, especially the FeNiCoCrMn (Cantor alloy) and FeNiCoCrPd systems25,26. We model the solid solution using special quasirandom structures (SQSs) and calculate the formation energy, total energy, mixing energy, magnetic moment, and root mean-square displacement (RMSD) from DFT-based calculations. Since the available data points for HEAs are limited, we utilize transfer learning (TL) techniques to overcome the small data size problem. Because simple alloys are easier to explore and calculate, we demonstrate the feasibility of utilizing information from low and medium entropy alloys to obtain better performance in predicting HEAs. Specifically, the model is first trained on the less-principal element alloy systems, and its weights are then transferred to the model for the HEA data. Furthermore, we have also tried another TL approach, in which the pre-trained ECNet is used as an encoder to generate universal element-wise features, and we demonstrate that these features achieve good performance with simple multi-layer perceptron (MLP) models at small data limits.

Results

The ECNet model

The model proposed here is based on graph deep neural networks. Briefly, a crystal is represented as an undirected graph \({{{\mathcal{G}}}}=({{{\mathcal{E}}}},{{{\mathcal{V}}}})\), where the edges \({{{\mathcal{E}}}}\) represent bond connections and the nodes \({{{\mathcal{V}}}}\) represent atoms in the crystal. The initially embedded feature matrix is \({{{\bf{V}}}}=\left[{{{{\bf{v}}}}}_{1};\ldots ;{{{{\bf{v}}}}}_{{N}_{a}}\right]\in {{\mathbb{R}}}^{{N}_{a}\times {N}_{f}}\), where Na is the number of atoms, Nf is the size of the hidden features, and \({v}_{i}\in {{{\mathcal{V}}}}\) is the feature vector of the ith atom, initialized from the atom type Zi. It is an atom-wise feature generated via an atom embedding layer (\({\mathbb{Z}}\to {{\mathbb{R}}}^{{N}_{f}}\)), which is then used as the atom (node) attribute in the structural graph. In this work, we propose the operation of elemental convolution (EC) to obtain a more general and global attribute to represent the materials. The atom-wise features are averaged according to their atomic type, as shown in Fig. 1. In this way, the atom-wise features are merged into element-wise attributes \({{{{\bf{X}}}}}^{l}=\left({{{{\bf{x}}}}}_{1}^{l},\ldots ,{{{{\bf{x}}}}}_{T}^{l}\right)\) with \({{{{\bf{X}}}}}^{l}\in {{\mathbb{R}}}^{T\times {N}_{f}}\), where T is the number of elemental types. To be clear, the graph features are still updated using the connectivity of bonds, which is the basic characteristic of graph models.

The embedding layer is used to encode initial inputs from the atomic numbers. In the interaction block, a series of neural networks is used to transform the crystal structures into atomic attributes. The elemental convolution operation takes average values of atom-wise features according to the atomic element type.
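The averaging step of the elemental convolution can be illustrated with a minimal NumPy sketch. It only shows the pooling of atom-wise rows into element-wise rows; the function name `elemental_convolution`, the toy feature values, and the ordering of the output rows by atomic number are assumptions made for illustration and are not taken from the ECNet implementation.

```python
import numpy as np

def elemental_convolution(atom_features, atomic_numbers):
    """Average atom-wise feature rows that share the same atomic number.

    atom_features: array of shape (N_a, N_f), one row per atom.
    atomic_numbers: length-N_a sequence of integer atom types Z_i.
    Returns the sorted unique atomic numbers (length T) and a (T, N_f) array.
    """
    atom_features = np.asarray(atom_features, dtype=float)
    atomic_numbers = np.asarray(atomic_numbers)
    elements, inverse = np.unique(atomic_numbers, return_inverse=True)
    sums = np.zeros((elements.size, atom_features.shape[1]))
    np.add.at(sums, inverse, atom_features)  # accumulate rows per element
    counts = np.bincount(inverse, minlength=elements.size)
    return elements, sums / counts[:, None]

# Hypothetical toy cell: one Se atom (Z = 34) and two O atoms (Z = 8), N_f = 2.
V = np.array([[1.0, 2.0],
              [0.0, 4.0],
              [2.0, 2.0]])
Z = [34, 8, 8]
elements, X = elemental_convolution(V, Z)
print(elements)  # atomic numbers, one per row of X
print(X)         # element-wise features of shape (T, N_f)
```

The output has one row per element type regardless of how many atoms the cell contains, which is the sense in which the representation is more global than an atom-wise one.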

In the interaction blocks, structural information from the crystal is encoded into the atom representations. Through elemental convolution, the raw structure is transformed into element-wise representations for the given properties, and, in contrast with other graph representations, these features are greatly reduced after processing knowledge of physical and geometrical properties. It should be noted that we use multi-task learning in our framework in order to improve learning efficiency and prediction accuracy27. As illustrated in Fig. 1, EC-based multi-task learning (ECMTL) or single-task learning (ECSTL) can be selected according to the number of tasks. The ECMTL architecture shares a common set of layers (shared physical knowledge in an elemental dimension) across all tasks, followed by task-specific multi-layer perceptron networks (tower layers) designed for each individual task. This design makes it possible to learn multiple related tasks at the same time, to the mutual benefit of the individual tasks. Moreover, multi-task learning improves generalization performance by leveraging the domain-specific information contained in the training signals of related tasks28.

Model estimation

To investigate the predictive performance of ECMTL, we apply the model to the inorganic crystal data obtained from the Materials Project (MP)29. The data for formation energies and band gaps in the MP data sets comprise ~69,000 crystals, while the training set is restricted to 60,000 in order to be consistent with the settings in prior works30,31. For the bandgap, a restricted data set of 45,901 crystals that excludes metallic materials is also considered (the bandgap with nonzero value is labeled \({E}_{{{{\rm{g}}}}}^{{{{\rm{nz}}}}}\)). The elastic moduli, including the bulk modulus and shear modulus, have a smaller data set of 5830 samples. In our model, we select correlated properties as prediction targets. The first set consists of the formation energy Ef and bandgap Eg, and the second set consists of the bulk modulus KVRH and shear modulus GVRH. In addition, the refractive index, optical gap, and direct gap of 4040 compounds, computed by density functional theory and high-throughput methods32, are considered to estimate the performance. Except for the formation energy and band gaps, the data set of each property is randomly divided into a training set (90%) and a test set (10%). When validation is used to optimize the hyperparameters, the validation set is one-tenth of the training set. For comparison, we also take into account single-task learning, called ECSTL. Table 1 compares the mean absolute errors (MAEs) of the ECSTL, ECMTL, MEGNet, and MODNet models. It should be noted that the developed EC models and the MEGNet model are graph-based neural networks that utilize only the atomic number and spatial distance as inputs, in contrast to the MODNet model, which uses a set of extracted features. Generally, feature-based models should be preferred for small to medium data sets, while graph-based models are preferred for large data sets30.

The complete data set for the formation energy and the bandgap used in the development of the ECNet model is large, with 60,000 samples. We note that ECSTL/ECMTL (ECNet) has a higher MAE for the Ef prediction than prior models such as MODNet and MEGNet30,31. The reason is that the elemental convolution merges detailed structural information, while the formation energy is sensitive to structural changes. Specifically, the MEGNet model provides an elaborate description of atomic, bond, and global state attributes, leading to state-of-the-art performance in Ef. However, many other properties, such as bandgap, modulus, and refractive index, have complicated and unknown relations to structure, for which a one-to-one mapping between structural configurations and properties is not a good choice. The strategy of reducing the compositional and structural features may work better for those intrinsic target properties. Taking the bandgap as an example, the ECNet model systematically outperforms MODNet and MEGNet on both the large data set and the restricted data set that excludes the zero values. For the prediction of Eg and \({E}_{{{{\rm{g}}}}}^{{{{\rm{nz}}}}}\), the MAEs of ECSTL are 0.164 and 0.27 eV, while those of ECMTL are 0.227 and 0.27 eV, respectively. Both the single-task and multi-task learning models outperform MODNet and MEGNet in the bandgap predictions. In addition, the MAEs for the shear modulus and refractive index are lower than those of the MEGNet model. Among these models, SISSO33 is found to give higher prediction errors on both large and small data sets for different target properties. It should be noted that the ECMTL model learns the bulk and shear moduli at the same time, and we observe that its MAE for the shear modulus is 0.046 \({\log }_{10}({{{\rm{GPa}}}})\), slightly lower than that of ECSTL. This observation indicates that multi-task learning is more helpful when applied to related tasks, since the tasks benefit mutually during training. However, ECMTL shares a series of common NN layers with a large number of common parameters, which limits the overall performance, especially for unrelated tasks, leading to lower prediction accuracies than ECSTL.

To investigate and understand the graph elemental convolution models, we visualize the learned elemental feature vectors in Fig. 2. Taking SeO2 as an example, we visualize the 128-dimensional representations of each element. Figure 2a shows the outputs after each of the three interaction blocks (IBs) in the ECNet model, in which negative and positive values are represented with blue and red colors, respectively. These element-wise features (IB-1, IB-2, IB-3) are updated by iteratively passing messages from structural configurations within three successive IBs. Consequently, the domain knowledge tends to accumulate as layers are stacked. It is clear that different elements show unique characteristics. However, the neural networks inside the IBs have encoded elemental features from local atomic environments toward particular target properties. Thus, the special characteristics of different elements are the coupled effects of the physical elemental types and the information learned from a large set of data samples. In Fig. 2b, we illustrate the third IB outputs of another SeO2 compound, which is in an orthorhombic crystal system (point group: mm2), while the SeO2 in Fig. 2a is in a tetragonal lattice (point group: 4/mmm). Comparing the IB3 outputs of the two SeO2 compounds, we observe that the features show similar patterns owing to the identical chemical composition. To quantify the difference, we compare the Frobenius norms of the feature matrix and the structural matrix, with the differences denoted \({\left\Vert \Delta X\right\Vert }_{F}\) and \({\left\Vert \Delta S\right\Vert }_{F}\), respectively. We notice that the relative differences (\({\left\Vert \Delta X\right\Vert }_{F}/{\left\Vert X\right\Vert }_{F}\)) are similar for the two compounds, at 0.66 and 0.63 for the first and third interaction blocks. The relative difference in the structural matrix, \({\left\Vert \Delta S\right\Vert }_{F}/{\left\Vert S\right\Vert }_{F}\), is 0.91, slightly larger than the difference in the feature vectors. The relative shift of the structural matrix norm is thus broadly consistent with that of the feature matrix. This observation shows that these features contain preprocessed physical and geometrical knowledge under the graph-based framework, and that the ECNet model is capable of distinguishing different materials even when using a more global element-wise representation. For the readout feature vectors of the Co3Cr alloy, see Supplementary Figs. 1, 2.
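The relative Frobenius-norm difference used in this comparison can be sketched as follows. The text does not state which of the two compounds supplies the normalizing matrix or how the structural matrix S is constructed, so the choice of the first argument as the reference, and the toy matrices, are assumptions for illustration only.

```python
import numpy as np

def relative_frobenius_difference(reference, other):
    """Return ||reference - other||_F / ||reference||_F for equal-shape matrices.

    Assumption: the first argument is the normalizing reference matrix.
    """
    reference = np.asarray(reference, dtype=float)
    other = np.asarray(other, dtype=float)
    if reference.shape != other.shape:
        raise ValueError("matrices must have the same shape")
    delta = np.linalg.norm(reference - other, ord="fro")
    return delta / np.linalg.norm(reference, ord="fro")

# Hypothetical 2 x 2 element-wise feature matrices of two polymorphs.
X_a = np.array([[3.0, 0.0], [0.0, 4.0]])
X_b = np.array([[3.0, 0.0], [0.0, 1.0]])
ratio = relative_frobenius_difference(X_a, X_b)
print(ratio)  # ||dX||_F = 3 and ||X_a||_F = 5 for these toy values
```

A ratio near zero would mean the two polymorphs are nearly indistinguishable in feature space, so a value of comparable size to the structural ratio suggests that the structural difference is preserved after pooling.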

Application in HEAs

To investigate the applications in HEAs, we focus on the FeNiCoCrMn/Pd quinary high-entropy alloys and their binary, ternary, and quaternary subsystems. These systems are among the most interesting in the HEA field. The quinary FeNiCoCrMn HEA, formed from five 3d transition metal elements (the Cantor alloy), has remarkable strength and ductility at high temperatures34. By intentionally substituting Mn with the 4d element Pd, which has a markedly larger atomic radius and electronegativity than the 3d elements, the FCC quinary FeNiCoCrPd HEA was recently reported to have around 2.5 times higher strength than FeNiCoCrMn at similar grain size, which is also comparable to that of advanced high-strength steels26. We have conducted a DFT-based study on these two HEAs25 and found that the inhomogeneous feature of Pd increases the average atomic local displacement by a moderate amount, consequently enhancing the mechanical properties. In the course of that work, we calculated several hundred binary, ternary, and quaternary subsystems, covering all equiatomic compositions and several typical non-equiatomic compositions, for FCC and BCC single-phase solid solutions (SPSS) of these two systems. These DFT data form the basis for using the current ECNet model to further study the property relations in HEAs systematically.

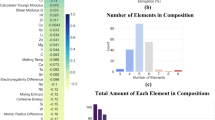

It should be noted that one critical factor in developing an effective and robust machine learning model is preparing diverse training data that encompass a good range of structural environments and compositional space. Therefore, we use the above DFT data for both FCC and BCC SPSS, with not only equiatomic but also various non-equiatomic compositions, as the training data, including the total energy (Etot), the formation energy (Eform), the mixing energy (Emix), the root mean-square displacement (RMSD), and the magnetic moment per atom (ms) and per cell (mb). The data set statistics are shown in Fig. 3. The mean magnetic moment is 0.89 μB/atom, and the values span a similar range for the FCC and BCC phases, as shown in Fig. 3a. However, for the RMSD the BCC phase spans a larger range than the FCC phase, since Pd introduces large inhomogeneity into the BCC phase and some structures are close to collapse. There are also some outliers lying beyond 1.5 times the interquartile range (IQR) in the FCC ternary alloys, as shown in Fig. 3b. Outlier data points also appear in the formation energies and mixing energies (Supplementary Fig. 3). These outliers are included in the training because we consider that each alloy calculation reflects critical underlying physics. In addition, the RMSD of Mn-based alloys is concentrated between 0 and 0.2 Å and spans a smaller range than that of Pd-based alloys. The statistics of the subsystems are summarized in Fig. 3d.

Statistical box plot over different types of subsystems in solid-solution FCC and BCC phases for a magnetic moments and b RMSD. The mean values and median values are represented with green squares and black lines in the interquartile range. The box region shows the 25–75% percentile range, and the whiskers extend to 1.5 times the interquartile range. c Histogram of the RMSD for different kinds of alloys (Mn-based alloys, Pd-based alloys, and others.) d The statistics of different subsystems.

To begin with, the ECNet model architectures are trained from scratch, with the model parameters initialized randomly from a uniform distribution. All the elemental features, model weights, and biases are learned from the input training data. Our initial ML models are constructed with ECMTL by learning the six properties together as a unit. In addition, we subdivide the data set into three categories: (1) all the known data, (2) binary and ternary compounds, and (3) quaternary and quinary compounds. The performance of the unit model on the test set is given in Table 2 (model Scratch-unit). This unit model shows good accuracy for energy-related properties across the different data sets. However, it performs poorly for properties such as the magnetic moment (MAE: 0.700 μB/cell on the binary and ternary data set) and RMSD (MAE: 0.138 Å on the quaternary and quinary data set). Within the unit framework, it is impractical to further optimize the model by adjusting hyperparameters so that all properties are fitted well at the same time. Since multi-task learning concerns the problem of optimizing a model with respect to many properties, it is crucial to understand which tasks are likely to help in an MTL process28. In the ECMTL deep networks, the different tasks share some common hidden layers, which is called hard parameter sharing in deep neural networks35, and this method learns a joint representation among the multiple tasks or performs model interpolation to regularize certain targets36. Each task is learned better if it is trained in a network that is learning other related tasks at the same time, while uncorrelated tasks act as noise for the other tasks, which can improve generalization performance35. Here, we first investigate the correlations between different properties to find related tasks. The Pearson correlation coefficients for the five properties are shown in Supplementary Fig. 4. The ms and mb have a correlation coefficient of 0.43, which is an expected result because the two magnetic moments are merely values on different scales. Generally, we observe that the RMSD is only weakly correlated with the magnetic properties and the energy-related properties, since the correlation coefficients are small, e.g., −0.1 between RMSD and Eform and −0.12 between RMSD and ms. We select the significantly correlated tasks ms and mb to develop one ECMTL model. Etot, Eform, and Emix are then learned together in a second multi-task model, since the energy-related properties are physically dependent in addition to being correlated. Finally, we train RMSD within the single-task framework.
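The task-grouping step described above can be sketched with a Pearson correlation matrix computed by `numpy.corrcoef`. The property values below are synthetic toy numbers, and the threshold of 0.4 and the helper names are hypothetical; none of them come from the HEA data set or the supplementary analysis.

```python
import numpy as np

def pearson_matrix(columns):
    """Return property names and their Pearson correlation matrix.

    columns: dict mapping a property name to a 1D sequence of values,
    all of the same length (one value per alloy structure).
    """
    names = list(columns)
    data = np.vstack([np.asarray(columns[n], dtype=float) for n in names])
    return names, np.corrcoef(data)

def correlated_pairs(names, corr, threshold):
    """List property pairs whose absolute correlation reaches the threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs

# Synthetic toy values (not DFT data): m_b is m_s rescaled, RMSD is unrelated.
toy = {
    "m_s": [0.1, 0.5, 0.9, 1.3, 1.7],
    "m_b": [0.4, 2.0, 3.6, 5.2, 6.8],
    "rmsd": [0.05, 0.01, 0.06, 0.01, 0.05],
}
names, corr = pearson_matrix(toy)
pairs = correlated_pairs(names, corr, threshold=0.4)  # hypothetical cutoff
print(pairs)
```

Pairs returned by such a screen are candidates for a shared multi-task model, while properties that pair with nothing, like the toy RMSD column here, would be trained on their own.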

As shown in Fig. 4, the parity plots compare the DFT-calculated and ECNet-predicted results for the six properties of FeNiCoCrMn/Pd alloys for both the training and test data sets. The corresponding MAE values are displayed in Table 2 (model Scratch-group), which shows that the performance of the ECNet model is significantly improved compared to the previous unit model results. Specifically, the test MAEs for Etot, Emix, Eform, ms, mb, and RMSD are 0.122, 0.022, 0.009 eV/atom, 0.058 μB/atom, 0.386 μB/cell, and 0.01 Å, respectively. Taking the RMSD as an example, the mean absolute error is reduced by 87.3% relative to the error of the unit model, and the error percentages are −79.6 and −90.6% on the binary and ternary (2 + 3) and quaternary and quinary (4 + 5) data sets, respectively. The similar, well-distributed shapes of the DFT-calculated and ECNet-predicted data also indicate good predictive performance in all domains. More critically, high performance is achieved across the entire range of those properties even when the distributions of the training and test data are not similar, as illustrated in Fig. 4.
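The error percentages quoted here are most naturally read as relative changes in MAE with respect to a reference model. The definition below is an assumption about the sign convention, not a formula given in the text:

\[
\delta = \frac{\mathrm{MAE}_{\mathrm{new}} - \mathrm{MAE}_{\mathrm{ref}}}{\mathrm{MAE}_{\mathrm{ref}}} \times 100\%
\]

A negative \(\delta\) then means that the new model has the lower error. Under this assumed definition, the unit-model RMSD error of 0.138 Å on the quaternary and quinary data set together with \(\delta = -90.6\%\) would correspond to roughly \(0.138 \times (1 - 0.906) \approx 0.013\) Å for the grouped model; Table 2 remains the authoritative source for the actual values.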

DFT-based calculations are much more computationally demanding for HEAs. To circumvent this bottleneck, one approach is to use the transfer learning (TL) technique37. We consider that knowledge related to chemical and physical similarities between different elements can be transferred from the less-principal element alloys to multiple-principal element systems. To validate the feasibility, we use the binary and ternary data as the source data set for transfer learning and the quaternary and quinary data as the target data. We introduce two types of transfer learning. The first transfers the feature representations including the tower layers (TL-I), while the second excludes the weights of the tower layers (TL-II). Both types of source models are learned from the binary and ternary data sets. As seen in Table 2, the prediction errors generally drop after transfer learning regardless of which model is employed (unit or group). For example, the error percentages of Etot, mb, and RMSD are around −52, −94, and −54% under the grouped models using TL-II compared with the scratch method. Since the current models are already close to optimal solutions, it is difficult to further optimize their performance even with transfer learning; in fact, some error percentages rise after transfer learning. Interestingly, TL-II performs better than TL-I. Since the difference is whether the task-specific layers are included, we conclude that the shared common layers provide more general feature representations, which helps transfer the domain knowledge from the source data. Overall, transfer learning is very effective in such cases, bringing the predictions closer to the calculated properties.
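The difference between TL-I and TL-II can be sketched as a choice of which weights are copied from the source model before fine-tuning on the target data. The dictionary layout, layer sizes, and function names below are hypothetical and stand in for the real ECNet parameters; the fine-tuning step itself is not shown.

```python
import numpy as np

def init_model(rng, n_in=4, n_hidden=3, tasks=("E_tot",)):
    """Create a toy parameter set: shared layers plus one tower per task."""
    return {
        "shared": {"W": rng.uniform(-0.1, 0.1, (n_in, n_hidden))},
        "towers": {t: {"W": rng.uniform(-0.1, 0.1, (n_hidden, 1))} for t in tasks},
    }

def transfer(source, target, include_towers):
    """Build initial weights for the target model from a trained source model.

    include_towers=True  mimics TL-I  (shared layers and tower layers copied).
    include_towers=False mimics TL-II (only shared layers copied; towers keep
    the target model's own fresh initialization).
    """
    new = {
        "shared": {k: v.copy() for k, v in source["shared"].items()},
        "towers": {},
    }
    for task, params in target["towers"].items():
        use_source = include_towers and task in source["towers"]
        donor = source["towers"][task] if use_source else params
        new["towers"][task] = {k: v.copy() for k, v in donor.items()}
    return new

rng = np.random.default_rng(0)
source = init_model(rng)  # stands in for a model trained on binary + ternary data
target = init_model(rng)  # freshly initialized model for quaternary + quinary data
tl1 = transfer(source, target, include_towers=True)
tl2 = transfer(source, target, include_towers=False)
```

In both variants the shared layers start from the source weights, so any difference in downstream accuracy between the two can be attributed to how the task-specific towers are initialized.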

The element-wise features, which encode compositional and local geometric information, pass naturally through the multi-layer neural networks of the ECNet framework. Thus, a pre-trained ECNet model can be considered an encoder of a material that generates universal elemental features. The readout vectors after the atom embedding layer, the three interaction block layers, and the final graph output are denoted V0, IB1, IB2, IB3, and f. These features are all extracted from the parent models and can be regarded as compositional (V0) or structural (IB1, IB2, IB3, and f) descriptors related to the output properties. As NN layers are stacked, each element gains more and more global information, since the internal message on each atom is propagated over longer distances. Since the information gained from previous model training is retained, using the readout element-wise vectors can be regarded as a TL scheme, similar to the TL approach used in AtomSets models38. In this TL framework, we take the readout element-wise vectors trained on magnetic moments as descriptors and use a multi-layer perceptron (MLP) model to predict properties.

Figure 5 shows the convergence study of the MLP models, where the data points are randomly sampled from the original data sets at 20, 40, 60, 80, and 100%. Such compositional models cannot distinguish two alloys with the same stoichiometry but in different phases. We therefore provide the test results for the FCC and BCC solid solution phases separately, for the magnetic property in Fig. 5a, b and for the formation energy in Fig. 5c, d. For comparison with the TL features, we encode elements as one-hot vectors of dimension 100 to yield a non-TL MLP model. As the data size increases, the predicted MAE in the magnetic property drops as expected. The convergence of the MLP-f models, in which the features f are generated from the final task-specific layers of the magnetic model, is not rapid. This result shows that the quality of the f descriptor is worse than that of the other transfer learning features. We believe that this is caused by the lower quality of the magnetic moment data, which most severely affects the TL features closest to the final outputs. However, MTL-IB1 and MTL-IB3 achieve relatively high performance at all data sizes and generally converge more rapidly than the non-TL models. In particular, the MTL-IB1 models reach errors of 0.052 and 0.114 μB/atom on the FCC and BCC data sets, respectively, when 100% of the data points are used. Furthermore, these descriptors are applied to the training of the formation energy, as illustrated in Fig. 5c, d. Although the descriptors are extracted from the magnetic moment model, the TL models achieve convergence results consistent with those for ms. In both cases, the simple transferred MLP models (MTL-IB1 and MTL-IB3) show much lower errors at very small data sizes.

Interpolations on the alloy systems

The physical properties of the FeNiCoCrMn/Pd alloy systems are well learned by the framework. By taking advantage of the efficient predictions of the trained ML models, we can characterize this alloy system and its chemical subsystems in detail. The original ECNet model requires the alloy structures as input; however, generating new SQSs is computationally demanding, which makes interpolation in certain systems time-consuming. We therefore use the MTL model with transferred features, which has shown equivalent performance, to perform the exploration. Figure 6 shows the interpolation results for the formation free energy and magnetic moment of two ternary systems, Fe-Co-Mn and Fe-Co-Pd, over the whole concentration range in a single FCC solid-solution phase under the MTL-IB3 model. The formation free energy here is defined as ΔHf = ΔEf − TSmix (ΔEf is the formation energy at 0 K, and Smix is the mixing entropy). It measures the stability in terms of the ground state formation energy ΔEf and the configurational entropy effect −TSmix at finite temperature. First, from the two panels for T = 0 K in Fig. 6a, c, we find that the energy spans a range from −0.03 to 0.12 eV/atom for Fe-Co-Mn and from −0.06 to 0.05 eV/atom for Fe-Co-Pd. In Fe-Co-Mn, three regions (I), (II), and (III) are easy to identify (see Fig. 6a), with the phase stability decreasing in that order. Only the narrow concentration region (I) along the Co-Fe edge shows negative formation energy, indicating that Fe-Co-Mn is less favorable for forming an FCC solid solution. The Fe-Co-Pd system is generally more stable than Fe-Co-Mn. In addition to the narrow region (I) along Co-Fe with a stable FCC solid solution, which is similar to the Fe-Co-Mn case, the Pd-rich corner (region (I\({}^{{\prime} }\)) in Fig. 6c) is also stable, which can be attributed to Pd, whose ground state structure is FCC. In addition, we report the stability variation of other ternary systems in Supplementary Fig. 5, which shows the formation energies of the Fe-Co-Ni and Fe-Co-Cr ternaries in the FCC and BCC phases predicted by our ML model. Next, the panels for T = 500 and 1000 K in Fig. 6a, c clearly show how the configurational entropy from atomic mixing at finite temperature stabilizes these two ternary systems. In Fe-Co-Mn, the stable SPSS concentration range (ΔHf < 0) at 500 K is slightly enlarged relative to 0 K. At 1000 K, the stable SPSS range spreads further, to near-equiatomic concentrations. In Fe-Co-Pd, the effect of configurational entropy is more obvious: even at 500 K, almost the whole concentration range, except part of the Pd-Co edge and the Fe corner, becomes stable. The above discussion shows how the configurational entropy effect stabilizes random structures, while a full investigation of thermodynamic stability should be based on the free energy, which includes contributions from various physical effects such as lattice vibrations, atomic configuration, thermal electronic excitations, and spin ordering.
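The formation free energy ΔHf = ΔEf − TSmix can be evaluated with a short sketch once a model for Smix is chosen. The text does not give the expression for Smix, so the ideal configurational mixing entropy per atom, \(S_{\mathrm{mix}} = -k_{\mathrm{B}}\sum_i c_i \ln c_i\), is assumed here; the ΔEf value in the example is a made-up number, not a predicted or calculated one.

```python
import numpy as np

K_B = 8.617333262e-5  # Boltzmann constant in eV/K

def ideal_mixing_entropy(concentrations):
    """Ideal configurational mixing entropy per atom in eV/K (assumed model)."""
    c = np.asarray(concentrations, dtype=float)
    c = c[c > 0]  # the limit of c * ln(c) as c -> 0 is 0, so drop empty species
    return float(-K_B * np.sum(c * np.log(c)))

def formation_free_energy(delta_e_f, concentrations, temperature):
    """Return dH_f = dE_f - T * S_mix in eV/atom, with temperature in K."""
    return delta_e_f - temperature * ideal_mixing_entropy(concentrations)

# Hypothetical equiatomic ternary with a made-up 0 K formation energy.
c_equi = [1 / 3, 1 / 3, 1 / 3]
delta_e_f = 0.05  # eV/atom, illustrative value only
for T in (0.0, 500.0, 1000.0):
    print(T, formation_free_energy(delta_e_f, c_equi, T))
```

Because the ideal mixing entropy is largest at the equiatomic composition, the −TSmix term lowers ΔHf most strongly in the middle of the ternary diagram, which is consistent with the stable region spreading toward near-equiatomic concentrations as temperature rises.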

In the magnetic diagram of Fe-Co-Mn (Fig. 6b), the strong-magnetic region (high %Fe and %Co, low %Mn) can be separated from the weak-magnetic one (high %Mn, low %Fe and %Co). In the BCC solid-solution phase (Supplementary Fig. 6a), we also observe that the total magnetic moment decreases from the Co-Fe edge towards the Mn corner, which is consistent with magnetization measurements in Fe-Mn alloys39 and Co-Mn alloys40. The reason is that the local magnetic moment of Mn couples antiferromagnetically to neighboring moments, leading to a cancellation of the total magnetic moment41. However, a full account of the overall trend and the intrinsic mechanism of the magnetic behavior should consider many factors, e.g., the local atomic environment and temperature42. From the magnetic diagrams (Fig. 6b, d), we find that the strong-magnetic region is larger in Fe-Co-Pd than in Fe-Co-Mn. This is interesting because pure Pd is not itself magnetic, yet substituting it for Mn clearly enhances the magnetic moment of Fe-Co-Pd. From a materials engineering point of view, knowing this information in advance could be useful for identifying target physical properties and for preparing and controlling synthesis conditions.

A further example of the predictive power of our ECMTL model is illustrated in Fig. 7. Based on DFT data at particular concentrations, the ML predictions span concentrations from 0 to 0.4 for each principal element in turn. Here the RMSD is calculated as a function of the constituent element’s concentration for the FeNiCoCrMn and FeNiCoCrPd quinary systems in the FCC SPSS. The corresponding formation energies are shown in Fig. 7a, b. According to the ML prediction, the RMSD of the FeNiCoCrPd HEAs is always larger than that of the Cantor alloys over the whole range of concentrations considered. More interestingly, in the Pd-based HEA, both the RMSD and the formation energy fluctuate more strongly with the constituent element concentration than in the Mn-based HEA (Cantor systems). The substitution of Mn atoms by Pd atoms in FeNiCoCrMn is not a simple replacement, but induces substantial changes for each of the constituent elements. In the FeNiCoCrPd alloy, Pd has the largest electronegativity43 and the largest atomic size, which introduces inhomogeneities into the alloy, leading to a larger RMSD and changes in the local environments of the atoms. RMSD is an important property for HEAs: ref. 44 proposed it as a scaling factor for predicting solid-solution strengthening, based on the empirical finding from experiments that the root-mean-square atomic displacement (RMSD) of an HEA is proportional to its modulus-normalized strength. Experimental measurements of the yield strengths of FeNiCoCrMn and FeNiCoCrPd26 and our previous theoretical calculations25 revealed that the promising mechanical properties of FeNiCoCrPd are directly related to the larger RMSD caused by the introduction of Pd. From this point of view, a larger RMSD is favorable for better mechanical properties; however, since the larger inhomogeneity also destabilizes the structure, the balance between inhomogeneity and stability is important.
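As a reminder of what the RMSD quantity measures, the following is a minimal sketch of a root-mean-square atomic displacement between ideal lattice sites and relaxed positions. It assumes Cartesian coordinates in Å, identical atom ordering in both arrays, and no periodic wrapping or removal of rigid translations; the function name `rmsd` and the toy coordinates are illustrative and do not reproduce the authors' workflow.

```python
import numpy as np


def rmsd(ideal_positions, relaxed_positions):
    """Root-mean-square atomic displacement (same units as the inputs).

    Assumes both arrays have shape (n_atoms, 3) with matching atom order.
    """
    ideal = np.asarray(ideal_positions, dtype=float)
    relaxed = np.asarray(relaxed_positions, dtype=float)
    if ideal.shape != relaxed.shape:
        raise ValueError("position arrays must have the same shape")
    squared_disp = np.sum((relaxed - ideal) ** 2, axis=1)  # |u_i|^2 per atom
    return float(np.sqrt(np.mean(squared_disp)))


# Toy example: two atoms, each displaced by 0.1 Angstrom from its ideal site.
ideal = np.zeros((2, 3))
relaxed = np.array([[0.1, 0.0, 0.0], [0.0, 0.0, 0.1]])
value = rmsd(ideal, relaxed)
```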
Besides the few concentrations in FeNiCoCrPd found in our previous DFT work25 to combine a large RMSD with a stable FCC solid solution, the systematic ML prediction identified three promising concentrations with rather large RMSD and low formation energies, as shown in Fig. 7b, d: x = 0.31 in Pdx(FeNiCoCr)1−x (x = 0.2 is the one predicted in our DFT calculation25); x = 0.25 in Cox(FeNiCrPd)1−x; and x = 0.11 in Crx(FeNiCoPd)1−x. At these concentrations, the formation energies are small positive values in the range 60–80 meV/atom. At moderate finite temperatures, entropy effects, in particular the negative mixing entropy contribution (−TΔSmix)45 to the formation free energy of HEAs, can cancel these small positive formation energies and stabilize the solid solution.
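A rough estimate of what "moderate temperatures" means can be worked out for Pdx(FeNiCoCr)1−x at x = 0.31. The estimate assumes the ideal mixing entropy, an equal split of the remaining fraction among Fe, Ni, Co, and Cr (0.1725 each), and that the 60–80 meV/atom window quoted above applies to this composition; none of these assumptions is taken from the DFT or ML data themselves.

```latex
\[
\frac{\Delta S_{\mathrm{mix}}}{k_{\mathrm{B}}}
  = -\left(0.31\ln 0.31 + 4 \times 0.1725\ln 0.1725\right)
  \approx 0.363 + 1.213 \approx 1.576,
\qquad
\Delta S_{\mathrm{mix}} \approx 1.576 \times 0.08617\ \mathrm{meV\,K^{-1}}
  \approx 0.136\ \mathrm{meV\,K^{-1}}
\]
\[
T^{*} = \frac{\Delta E_{f}}{\Delta S_{\mathrm{mix}}}
  \approx \frac{60\ \mathrm{meV}}{0.136\ \mathrm{meV\,K^{-1}}} \approx 442\ \mathrm{K}
\quad\text{to}\quad
\frac{80\ \mathrm{meV}}{0.136\ \mathrm{meV\,K^{-1}}} \approx 589\ \mathrm{K}
\]
```

Under these assumptions the crossover ΔEf − TΔSmix = 0 falls at roughly 440–590 K.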

Based on these results, some physical insights can be inferred. First, we can extend the conclusion of ref. 26 that the FeNiCoCrPd HEA has higher mechanical strength than the Cantor alloy, not only at the equiatomic composition but also at various non-equiatomic ones. Next, for the FeNiCoCrPd HEA, higher strength can be achieved by adding more Pd to the system, because the second maximum, at a Pd concentration of x = 0.31, has a higher peak than the first one (x = 0.2 in Fig. 7d). We believe that these observations will be useful for future investigations of the Cantor alloy family.

Discussion

In summary, we have developed a graph-based deep neural network built on the elemental convolution operation, which provides a powerful tool for understanding composition-structure-property relationships. The models provide a more general and global element-wise feature vector to represent materials, and they perform better on some intrinsic properties such as band gaps, elastic moduli, and refractive index. Furthermore, we use the multi-task learning technique and find that it improves the learning efficiency and prediction accuracy compared with training a separate model for each task. We then explore applications in high-entropy alloys, focusing on the FeNiCoCrMn/Pd systems, and develop models that accurately predict several physical properties. Transfer learning (TL) is used to enhance the performance in high-entropy alloys: since the pre-trained model has learned relevant physical and chemical similarities from structural and elemental information, it outperforms models trained from scratch. We demonstrate the feasibility of using low-principal element alloys as source data to enhance performance on multiple-principal element alloys through TL. Moreover, the element-wise feature vectors transferred from the parent ECNet model can be used as universal descriptors. We find that multi-layer perceptrons combined with these descriptors reach good accuracy even in the small-data limit. Finally, we take FeNiCoCrMn/Pd as an example and use this framework to interpolate the concentration-dependent formation energy, magnetic moment, and local displacement in some sub-ternary and quinary systems. The results enrich the understanding of the physics of these high-entropy alloys. By taking advantage of the trained ML models, we alleviate the difficulty posed by a near-infinite compositional space.

Methods

Model details

In the framework of ECNet, we update the feature of the ith vertex layer by layer by passing messages from neighboring vertices. The relation between two consecutive layers is \(\mathbf{v}_{i}^{l+1}=\sum_{j\in \mathcal{N}_{i}}\mathbf{v}_{j}^{l}\circ W^{l}\left(\mathbf{r}_{i}-\mathbf{r}_{j}\right)\), where “∘” denotes element-wise multiplication, and \(W^{l}=\mathrm{Dense}(\{e_{k}\left(\mathbf{r}_{j}-\mathbf{r}_{i}\right)\})\times f_{c}\) are networks that map the atomic positions to the filters (\(\mathbb{R}^{3}\to \mathbb{R}^{F}\)). Here, we define a cutoff function according to Coulomb’s law, where the interaction between two atoms is inversely proportional to the square of the distance between them. Meanwhile, the distances between atomic pairs are expanded in a basis of Gaussians.

where rc is the distance cutoff, and the centers {μk ∈ (0, rc)} are chosen to decorrelate the filter values. The scaling parameter γ and the number of Gaussians determine the resolution of the interatomic distances. The symmetric form of the interatomic distances fulfills a number of constraints, such as rotational and permutational invariance. In the cutoff function fc, we add an infinitesimal number ϵ to avoid infinite values.
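The update rule and filter construction described above can be sketched in NumPy. The explicit forms used here are assumptions, since the corresponding equations are not reproduced in this text: the Gaussian expansion is taken as exp(−γ(d − μk)²), the cutoff as 1/(d² + ϵ) inside rc and zero outside, and the "Dense" network is replaced by a single linear map `dense_w`. Periodic images are ignored, and all function names and default values are illustrative rather than those of the ECNet code.

```python
import numpy as np


def gaussian_expansion(dist, r_cut, n_gauss, gamma):
    """Expand distances on n_gauss Gaussians with centres strictly inside (0, r_cut)."""
    mu = np.linspace(0.0, r_cut, n_gauss + 2)[1:-1]
    return np.exp(-gamma * (dist[..., None] - mu) ** 2)


def inverse_square_cutoff(dist, r_cut, eps=1e-8):
    """Assumed cutoff: 1 / (d^2 + eps) within r_cut, zero beyond it."""
    return np.where(dist <= r_cut, 1.0 / (dist ** 2 + eps), 0.0)


def conv_step(v, pos, dense_w, r_cut=5.0, gamma=10.0):
    """One update v_i <- sum_j v_j * W(r_i - r_j), element-wise over features.

    v: (N, F) vertex features; pos: (N, 3) Cartesian positions;
    dense_w: (K, F) linear stand-in for the Dense filter network.
    """
    n_gauss = dense_w.shape[0]
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)  # (N, N)
    basis = gaussian_expansion(dist, r_cut, n_gauss, gamma)            # (N, N, K)
    filters = (basis @ dense_w) * inverse_square_cutoff(dist, r_cut)[..., None]
    not_self = ~np.eye(len(pos), dtype=bool)                           # drop j == i
    filters = filters * not_self[..., None]
    return np.einsum("jf,ijf->if", v, filters)


# Toy system: two close atoms and one atom far outside the cutoff.
pos = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 20.0]])
v = np.ones((3, 4))
dense_w = np.ones((8, 4))
out = conv_step(v, pos, dense_w)
```

Because the filters depend only on interatomic distances, rotating `pos` leaves `out` unchanged, which is the rotational invariance mentioned above.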

In this work, the mean absolute error (MAE) is adopted as the loss function, since the tasks are real-valued regression problems. The total loss function of the network is the weighted linear combination of the individual losses from each specific task, which is also a common setup for multi-task problems27.

where \(L_{i}=\left\Vert p_{i}-\hat{p}_{i}\right\Vert^{2}\) and wi are the individual loss and the weight for each task-specific layer. Equal weights are used for the different tasks since we have no preference for any specific task. The final output, the predicted property \(\hat{p}_{i}\), can be an arbitrary property such as the bandgap, the bulk/shear modulus, or the RMSD of alloys.
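The weighted combination of task losses can be sketched as follows. The text names MAE as the loss while writing Li as a squared norm, so this sketch exposes both choices through a `kind` argument; averaging over the samples of a batch, the function names, and the task names in the example are assumptions made for illustration.

```python
import numpy as np


def task_loss(pred, target, kind="l2"):
    """Per-task loss averaged over samples: squared error ("l2") or absolute error ("l1")."""
    err = np.asarray(pred, dtype=float) - np.asarray(target, dtype=float)
    if kind == "l2":
        return float(np.mean(err ** 2))
    if kind == "l1":
        return float(np.mean(np.abs(err)))
    raise ValueError("kind must be 'l2' or 'l1'")


def multitask_loss(preds, targets, weights=None, kind="l2"):
    """Total loss L = sum_i w_i * L_i over tasks; equal weights (1.0) by default."""
    if weights is None:
        weights = {name: 1.0 for name in preds}
    return sum(
        weights[name] * task_loss(preds[name], targets[name], kind)
        for name in preds
    )


# Hypothetical two-task batch.
preds = {"band_gap": [1.0, 2.0], "rmsd": [0.0]}
targets = {"band_gap": [1.0, 4.0], "rmsd": [1.0]}
total_l2 = multitask_loss(preds, targets)
total_l1 = multitask_loss(preds, targets, kind="l1")
```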

Model training

To assess the performance of the ML models, we follow the standard procedure in which the data set is divided into mutually exclusive training, validation, and test sets. In general, the training, validation, and test sets comprise 80, 10, and 10% of the total available data, respectively. For the model convergence test, each model training at a specific data size is performed five times by randomly shuffling the training data with seeds 1, 2, 3, 4, and 5. For the ECNet model, we initially tested many hyperparameters to tune the model performance, including the learning rate, the batch size, the loss function, the number of stacked interaction blocks, the cutoff radius, and the size of the hidden channel. After these tests, we chose the parameters that give the simplest architecture with similar performance. The final model has a 128-dimensional hidden channel, three interaction blocks, and two neural-network layers with 128 and 64 neurons in the final tower layer blocks.
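The 80/10/10 split with seeds 1 to 5 can be sketched as below. Whether the validation and test sets are held fixed across seeds is not stated in the text; this sketch simply reshuffles all indices for each seed, and the helper name `split_indices` and the data-set size of 1000 are hypothetical.

```python
import numpy as np


def split_indices(n_samples, seed, frac_train=0.8, frac_val=0.1):
    """Return disjoint train/validation/test index arrays from a seeded shuffle."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    n_train = int(round(frac_train * n_samples))
    n_val = int(round(frac_val * n_samples))
    train = perm[:n_train]
    val = perm[n_train:n_train + n_val]
    test = perm[n_train + n_val:]  # remainder, about 10% of the data
    return train, val, test


# One split per seed, as in the five repeated trainings described above.
splits = {seed: split_indices(1000, seed) for seed in (1, 2, 3, 4, 5)}
```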

DFT calculations

To model the FCC and BCC SPSS of the FeNiCoCrMn/Pd HEAs, we used special quasirandom structures (SQSs)46, which approximate the random state. The number of atoms in the SQS supercell was varied from 16 to 160, depending on the composition and the number of principal elements included (binary, ternary, quaternary, or quinary). The SQSs of the binary and ternary alloys were adopted from previous studies47,48,49,50, while those of the quaternary and quinary alloys were generated as part of this work with the mcsqs code in the ATAT package51.

All spin-polarized DFT calculations at 0 K were carried out using the projector augmented wave (PAW)52 method as implemented in the Vienna Ab initio Simulation Package (VASP)53,54. The generalized gradient approximation in the Perdew-Burke-Ernzerhof form (GGA-PBE) was used for the exchange and correlation energy55. The energy cutoff for the plane-wave basis was set to 400 eV. For the Brillouin-zone integrations, the Monkhorst-Pack mesh56 was varied with the SQS supercell size but always corresponded to more than 5000 k-points per unit cell. All SQS structures were fully relaxed until all force components were smaller than 2 meV/Å. The computational setup is consistent with our previous study25.

Data availability

The crystal data from the MP data sets can be found in the public repository https://figshare.com/articles/dataset/Graphs_of_materials_project/7451351 and the refractive index data sets are available from ref. 32. The FeNiCoCrMn/Pd data from the DFT calculations used to train the ECNet model are available at https://github.com/xinming365/ECNet.

Code availability

The ECNet models reported in this work for generating the results are available at https://github.com/xinming365/ECNet.

References

Schmidt, J., Marques, M. R. G., Botti, S. & Marques, M. A. L. Recent advances and applications of machine learning in solid-state materials science. npj Comput. Mater. 5, 83 (2019).

Seko, A., Maekawa, T., Tsuda, K. & Tanaka, I. Machine learning with systematic density-functional theory calculations: application to melting temperatures of single- and binary-component solids. Phys. Rev. B 89, 054303 (2014).

Stanev, V. et al. Machine learning modeling of superconducting critical temperature. npj Comput. Mater. 4, 29 (2018).

Zhuo, Y., Mansouri Tehrani, A. & Brgoch, J. Predicting the band gaps of inorganic solids by machine learning. J. Phys. Chem. Lett. 9, 1668–1673 (2018).

Isayev, O. et al. Universal fragment descriptors for predicting properties of inorganic crystals. Nat. Commun. 8, 15679 (2017).

Ward, L., Agrawal, A., Choudhary, A. & Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. npj Comput. Mater. 2, 16028 (2016).

Ghiringhelli, L. M., Vybiral, J., Levchenko, S. V., Draxl, C. & Scheffler, M. Big data of materials science: critical role of the descriptor. Phys. Rev. Lett. 114, 105503 (2015).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J., Tkatchenko, A. & Müller, K.-R. Schnet – a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

Chen, C., Ye, W., Zuo, Y., Zheng, C. & Ong, S. P. Graph networks as a universal machine learning framework for molecules and crystals. Chem. Mater. 31, 3564–3572 (2019).

Hart, G. L. W., Mueller, T., Toher, C. & Curtarolo, S. Machine learning for alloys. Nat. Rev. Mater. 6, 730–755 (2021).

Cantor, B., Chang, I., Knight, P. & Vincent, A. Microstructural development in equiatomic multicomponent alloys. Mater. Sci. Eng. A 375–377, 213–218 (2004).

Tsai, M.-H. & Yeh, J.-W. High-entropy alloys: a critical review. Mater. Res. Lett. 2, 107–123 (2014).

Senkov, O., Wilks, G., Scott, J. & Miracle, D. Mechanical properties of nb25mo25ta25w25 and v20nb20mo20ta20w20 refractory high entropy alloys. Intermetallics 19, 698–706 (2011).

Li, X.-G., Chen, C., Zheng, H., Zuo, Y. & Ong, S. P. Complex strengthening mechanisms in the nbmotaw multi-principal element alloy. npj Comput. Mater. 6, 70 (2020).

Byggmästar, J., Nordlund, K. & Djurabekova, F. Modeling refractory high-entropy alloys with efficient machine-learned interatomic potentials: defects and segregation. Phys. Rev. B 104, 104101 (2021).

Yan, Y., Lu, D. & Wang, K. Accelerated discovery of single-phase refractory high entropy alloys assisted by machine learning. Comput. Mater. Sci. 199, 110723 (2021).

Lee, K., Ayyasamy, M. V., Delsa, P., Hartnett, T. Q. & Balachandran, P. V. Phase classification of multi-principal element alloys via interpretable machine learning. npj Comput. Mater. 8, 1–12 (2022).

Zhang, J. et al. Robust data-driven approach for predicting the configurational energy of high entropy alloys. Mater. Des. 185, 108247 (2020).

Jain, A. et al. The materials project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Kirklin, S. et al. The open quantum materials database (oqmd): assessing the accuracy of dft formation energies. npj Comput. Mater. 1, 15010 (2015).

Curtarolo, S. et al. Aflowlib.org: a distributed materials properties repository from high-throughput ab initio calculations. Comput. Mater. Sci. 58, 227–235 (2012).

Jha, D. et al. Elemnet: deep learning the chemistry of materials from only elemental composition. Sci. Rep. 8, 17593 (2018).

Goodall, R. E. A. & Lee, A. A. Predicting materials properties without crystal structure: deep representation learning from stoichiometry. Nat. Commun. 11, 6280 (2020).

Tran, N.-D., Saengdeejing, A., Suzuki, K., Miura, H. & Chen, Y. Stability and thermodynamics properties of crfenicomn/pd high entropy alloys from first principles. J. Phase Equilib. Diffus. 42, 606–616 (2021).

Ding, Q. et al. Tuning element distribution, structure and properties by composition in high-entropy alloys. Nature 574, 223–227 (2019).

Cipolla, R., Gal, Y. & Kendall, A. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 7482-7491 (IEEE, 2018).

Ruder, S. An overview of multi-task learning in deep neural networks. Preprint at http://arxiv.org/abs/1706.05098 (2017).

Jain, A. et al. Commentary: the materials project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

De Breuck, P.-P., Hautier, G. & Rignanese, G.-M. Materials property prediction for limited datasets enabled by feature selection and joint learning with MODNet. npj Comput. Mater. 7, 83 (2021).

Chen, C., Ye, W., Zuo, Y., Zheng, C. & Ong, S. P. Graph networks as a universal machine learning framework for molecules and crystals. Chem. Mater. 31, 3564–3572 (2019).

Naccarato, F. et al. Searching for materials with high refractive index and wide band gap: a first-principles high-throughput study. Phys. Rev. Mater. 3, 044602 (2019).

Ouyang, R., Ahmetcik, E., Carbogno, C., Scheffler, M. & Ghiringhelli, L. M. Simultaneous learning of several materials properties from incomplete databases with multi-task sisso. J. Phys. Mater. 2, 024002 (2019).

Peng, H. et al. Large-scale and facile synthesis of a porous high-entropy alloy CrMnFeCoNi as an efficient catalyst. J. Mater. Chem. A 8, 18318–18326 (2020).

Caruana, R. Multitask learning: a knowledge-based source of inductive bias. In Proc. of the 10th International Conference on Machine Learning 41–48 (Morgan Kaufmann, 1993).

Bingel, J. & Søgaard, A. Identifying beneficial task relations for multi-task learning in deep neural networks. In Proc. of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, 164–169. (EACL, 2017) at https://arxiv.org/abs/1702.08303 (2017).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2010).

Chen, C. & Ong, S. P. Atomsets as a hierarchical transfer learning framework for small and large materials datasets. npj Comput. Mater. 7, 173 (2021).

Yamauchi, H., Watanabe, H., Suzuki, Y. & Saito, H. Magnetization of α-phase fe-mn alloys. J. Phys. Soc. Jpn. 36, 971–974 (1974).

Crangle, J. The magnetization of cobalt-manganese and cobalt-chromium alloys. Philos. Mag. 2, 659–668 (1957).

Sakurai, M. & Chelikowsky, J. R. Enhanced magnetic moments in mn-doped feco clusters owing to ferromagnetic surface mn atoms. Phys. Rev. Mater. 3, 044402 (2019).

King, D. J. M. et al. Density functional theory study of the magnetic moment of solute mn in bcc fe. Phys. Rev. B 98, 024418 (2018).

Watson, R. E. & Bennett, L. H. Transition metals: d-band hybridization, electronegativities and structural stability of intermetallic compounds. Phys. Rev. B 18, 6439–6449 (1978).

Okamoto, N. L., Yuge, K., Tanaka, K., Inui, H. & George, E. P. Atomic displacement in the CrMnFeCoNi high-entropy alloy – A scaling factor to predict solid solution strengthening. AIP Adv. 6, 125008 (2016).

George, E. P., Raabe, D. & Ritchie, R. O. High-entropy alloys. Nat. Rev. Mater. 4, 515–534 (2019).

Zunger, A., Wei, S.-H., Ferreira, L. G. & Bernard, J. E. Special quasirandom structures. Phys. Rev. Lett. 65, 353–356 (1990).

Wolverton, C. Crystal structure and stability of complex precipitate phases in al–cu–mg–(si) and al–zn–mg alloys. Acta Mater. 49, 3129–3142 (2001).

Jiang, C., Wolverton, C., Sofo, J., Chen, L.-Q. & Liu, Z.-K. First-principles study of binary bcc alloys using special quasirandom structures. Phys. Rev. B 69, 214202 (2004).

Shin, D., van de Walle, A., Wang, Y. & Liu, Z.-K. First-principles study of ternary fcc solution phases from special quasirandom structures. Phys. Rev. B 76, 144204 (2007).

Jiang, C. First-principles study of ternary bcc alloys using special quasi-random structures. Acta Mater. 57, 4716–4726 (2009).

van de Walle, A. et al. Efficient stochastic generation of special quasirandom structures. Calphad 42, 13–18 (2013).

Blöchl, P. E. Projector augmented-wave method. Phys. Rev. B 50, 17953–17979 (1994).

Kresse, G. & Hafner, J. Ab initio molecular-dynamics simulation of the liquid-metal-amorphous-semiconductor transition in germanium. Phys. Rev. B 49, 14251–14269 (1994).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Monkhorst, H. J. & Pack, J. D. Special points for brillouin-zone integrations. Phys. Rev. B 13, 5188–5192 (1976).

Acknowledgements

This research was supported by the Tohoku-Tsinghua Collaborative Research Funds, the National Natural Science Foundation of China under Grant No. 92270104 and the Tsinghua University Initiative Scientific Research Program, Grants-in-Aid for Scientific Research on Innovative Areas on High Entropy Alloys through the grant number P18H05454 of JSPS. N.-D.T. and Y.C. acknowledge the Center for Computational Materials Science of the Institute for Materials Research, Tohoku University, for the support of the supercomputing facilities.

Contributions

J.N. and X.W. designed the research. X.W. worked on the model. N.-D.T. and Y.C. calculated all HEA data. X.W. and J.N. wrote the text of the manuscript. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Tran, ND., Zeng, S. et al. Element-wise representations with ECNet for material property prediction and applications in high-entropy alloys. npj Comput Mater 8, 253 (2022). https://doi.org/10.1038/s41524-022-00945-x
