Abstract

A neural network model is developed to search the vast compositional space of high entropy alloys (HEAs). The model predicts the mechanical properties of HEAs better than several other models because its special structure helps it capture the characteristics of the constituent elements of HEAs. In addition, thermodynamic descriptors were used as input so that the model can account for the thermodynamic properties of HEAs. A conditional random search, which is good at finding local optimal values, was selected as the inverse predictor, and two HEAs were designed using the model. We experimentally verified that the designed HEAs have the best combination of strength and ductility, which supports the validity of the model and the alloy design method. The strengthening mechanism of the designed HEAs is further discussed based on the microstructure and the lattice distortion effect. The present alloy design approach, specialized in finding multiple local optima, could help researchers design many new alloys with interesting properties.

Introduction

A High Entropy Alloy (HEA) is an alloy formed by mixing five or more elements in similar proportions. Since the discovery of HEA by Yeh et al.1 and Cantor et al.2, it has received much attention from material researchers because of its high strength, hardness, wear resistance, corrosion resistance, and high-temperature stability3,4,5,6,7,8. The fundamental properties of conventional alloys are predominantly determined by the main element. This is because conventional alloys contain major amounts of the main element with small amounts of several alloying elements. On the other hand, the properties of an HEA are very different from any of the constituent elements, since an HEA does not have a dominant main element.

HEAs provide opportunities for material researchers to design new materials with desired properties, based on the wide compositional space where HEAs can be formed9. At the same time, investigating this large compositional space of alloy designs using the conventional trial-and-error method is highly inefficient. Thus, those who design HEAs need an approach that will allow them to quickly and efficiently search for an HEA with desired properties. Previous material researchers have used thermodynamic modeling and computational simulation approaches to predict the phase stability of HEAs or to analyze the strengthening mechanism of HEAs10,11,12,13,14,15,16. Each of these approaches has its own strengths and weaknesses. For example, thermodynamic modeling can be very useful for predicting various thermodynamic properties and equilibrium phases of alloys. However, other properties (such as mechanical properties) cannot be directly predicted using this approach. Computational simulation approaches such as ab initio or atomistic simulations can be helpful to investigate fundamental mechanisms for various properties of HEAs. Nevertheless, they are practically unsuited for predicting the properties of an alloy because the microstructure of the alloy cannot be completely reproduced due to spatial and time scale issues and limited computational resources.

Machine learning, a data-driven approach, has been employed to predict the properties of HEAs as well as several other alloys. Furthermore, material researchers have found it can overcome the limitations of the above-mentioned approaches17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34. Zhang et al.18, Wen et al.27,30, Zheng et al.29, Klimenko et al.32, Guo et al.23, and Li et al.26 developed machine learning models which predict the mechanical properties of the HEAs. Predicted mechanical properties are yield strength (YS), hardness, elastic modulus, or critical resolved shear stress (CRSS), and they have provided validation of their own models. Models have been reported for not only HEAs but also other alloys like steels, magnesium alloys, aluminum alloys, or copper alloys. Peng et al.17, Lee et al.28, Xie et al.31, Shen et al.33,34, Dey et al.19, Suh et al.20, Chen et al.21, Du et al.22, Desu et al.24, and Zhang et al.25 have developed machine learning models to predict YS, ultimate tensile strength (UTS), total elongation (T.EL.), or hardness. These models developed for alloys other than HEAs were also validated.

All of the aforementioned studies successfully predicted mechanical properties using machine learning. However, most of them did not design alloys using the trained machine learning model, and those that did generally did not verify the designed alloy experimentally. Even in the few studies that conducted experimental verification of the designed alloy, the accuracy of the model used to design the alloy was either low or not clearly stated. Moreover, the models developed for HEAs did not appropriately consider process conditions such as heat treatment and metalworking, which can have a decisive influence on the mechanical properties of HEAs.

A machine learning model that combines thermodynamic modeling, a deep neural network (DNN), and a convolutional neural network (CNN) was developed to overcome the limitations of these studies. In particular, the CNN used in the present work was customized to easily extract features of the constituent elements of HEAs. The developed model was trained to predict mechanical properties such as YS and UTS. The model shows better prediction performance than other machine learning models with various feature sets and model types. Two HEAs were designed by performing an inverse prediction with the model. It should be noted that inverse prediction in this study means obtaining input features that are likely to yield specific mechanical properties, instead of predicting the properties from input features. The designed HEAs had mechanical performance better than or similar to that of the existing best-performing HEAs. In addition, microstructure analysis and molecular dynamics (MD) simulation were performed to examine the strengthening mechanism of the designed HEAs. All MD simulations conducted in this work used a second nearest-neighbor modified embedded-atom method (2NN MEAM) interatomic potential35.

Results and discussion

Performance evaluation of the developed machine learning model

The superiority of our model is examined by comparing its accuracy with that of other possible machine learning models with various feature sets and model types. However, a direct comparison of performance with the models published in the literature referenced in the “Introduction” section was not attempted, because the purposes and inputs of those models were different from those of our model. Most of the models in those papers had different purposes from our model, which aimed to predict the strength of HEAs at room temperature. It may not be fair to compare the performance of models with different purposes because the performance can vary depending on the target properties. A few models with the same purpose as our model had different inputs. For instance, those modeling works considered input data for a single process condition, while our work considered data from various process conditions. Better \(R^2\) values can be expected from an input data set for a single process condition than from one for multiple process conditions. Therefore, a direct comparison between our model and the other models published in the literature cannot properly evaluate the superiority of the modeling work.

The comparison of accuracy presented here is a comparison of the models’ YS prediction. The comparison of UTS prediction accuracy is omitted here and presented in the supplementary information instead because the accuracy trend was the same for both cases.

The input features and a short description of the models are presented in Table 1. CD, TD, and CTD are simple DNN models. The only differences among them are the input features that each model used. CD + CNN, TD + CNN, CTD + CNN, and D + CNN are neural network models with more complicated structures. They consist of three main parts: the DNN part, the CNN part, and the regression part. The DNN part and the CNN part are for feature extraction. The regression part takes the features extracted by the DNN part and the CNN part and determines the output value for the given input. We will describe the details of those models in the Methods section. ‘w/o T’, ‘w/o CNN’, and ‘w/ T&C’ are ensemble models which determine the output by averaging the outputs of the above-mentioned neural network models. ‘w/o T’ used the models without thermodynamic descriptor input features, such as CD, CD + CNN, and D + CNN. ‘w/o CNN’ averaged the outputs of the models without the CNN part, such as CD, TD, and CTD. ‘w/ T&C’ is an ensemble model which used all of the models to get an averaged output.
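The averaging step of the ensembles can be sketched as follows. This is only an illustration: the membership lists assume that the models named in the text are the complete members of each ensemble, and the prediction values are placeholders rather than data from this work.

```python
from statistics import mean

# Assumed members of each ensemble, using the model names from Table 1.
ENSEMBLE_MEMBERS = {
    "w/o T": ["CD", "CD + CNN", "D + CNN"],
    "w/o CNN": ["CD", "TD", "CTD"],
    "w/ T&C": ["CD", "TD", "CTD", "CD + CNN", "TD + CNN", "CTD + CNN", "D + CNN"],
}


def ensemble_predict(member_predictions, ensemble_name):
    """Average the member predictions sample by sample."""
    members = ENSEMBLE_MEMBERS[ensemble_name]
    per_sample = zip(*(member_predictions[name] for name in members))
    return [mean(values) for values in per_sample]


# Placeholder YS predictions in MPa for two test alloys (not real data).
predictions = {
    "CD": [400.0, 900.0],
    "TD": [420.0, 880.0],
    "CTD": [410.0, 890.0],
    "CD + CNN": [430.0, 910.0],
    "TD + CNN": [415.0, 905.0],
    "CTD + CNN": [425.0, 895.0],
    "D + CNN": [435.0, 920.0],
}
for name in ENSEMBLE_MEMBERS:
    print(name, ensemble_predict(predictions, name))
```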

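The coefficient of determination (\(R^2\)) and root mean square error (RMSE) between the actual and the predicted values for the models were calculated. The same test set was used for calculating the coefficient of determination and RMSE to correctly compare the prediction performance of the models. The coefficient of determination is expressed as

\[R^2 = 1 - \frac{\sum_{i=1}^{n} \left( y_i - \widehat {y_i} \right)^2}{\sum_{i=1}^{n} \left( y_i - \bar y \right)^2}\]

and RMSE is expressed as

\[\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \left( y_i - \widehat {y_i} \right)^2}\]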

where \(y_i\) is the actual value, \(\bar y\) is the mean of the actual values, \(\widehat {y_i}\) is the predicted value, and n is the number of data points. The comparison of accuracy among the models is presented in Fig. 1a, b. Figure 1a, b indicate that every ensemble model predicted better than the individual models it averages. This is because an ensemble model can partly overcome three problems a single model often suffers from: the statistical problem, the computational problem, and the representation problem36. An ensemble model can increase prediction accuracy by reducing the high “bias” and high “variance” caused by these problems36. Moreover, the models without thermodynamic descriptors show better accuracy than the models with them, which might lead to the misconception that the descriptors are not helpful for the prediction. However, the fact that the prediction of ‘w/ T&C’ is more accurate than that of ‘w/o T’ indicates that the models with the descriptors helped the ensemble model predict better. The representation problem, which means that the hypothesis space might not contain any hypothesis that is a good approximation of the true function, is partly solved by adding the models with the descriptors. This is because the models with the descriptors have a completely different hypothesis space from the models without the descriptors.
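As a minimal sketch, the two metrics can be computed as below, assuming the standard definitions of the coefficient of determination and RMSE in terms of the symbols defined above. The function names are illustrative and the sample values are placeholders.

```python
import math


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    ss_tot = sum((y - mean_actual) ** 2 for y in actual)
    return 1.0 - ss_res / ss_tot


def rmse(actual, predicted):
    """Root mean square error over n data points."""
    n = len(actual)
    squared_errors = ((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    return math.sqrt(sum(squared_errors) / n)


# Placeholder yield strengths in MPa (not data from this work).
y_true = [300.0, 650.0, 1000.0]
y_pred = [320.0, 640.0, 950.0]
print(r_squared(y_true, y_pred), rmse(y_true, y_pred))
```

Both metrics must be evaluated on the same test set for every model, as stated in the text; otherwise the values are not comparable.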

Figure 1a, b also show that the models with the CNN part predicted better than the models without it. Before discussing the performance improvement mechanism of the model with the CNN part, Fig. 2 is presented for a better understanding of the CNN part. An \(H_{kernel} \times W_{kernel} \times N_{output\,channel}\) kernel (\(H_{kernel} \ne 1, W_{kernel} \ne 1\)) is usually used for image input, but we used a kernel with the shape \(H_{kernel} = 1, W_{kernel} = N, N_{output\,channel} = N\), where \(H_{kernel}\) is the height of the kernel, \(W_{kernel}\) is the width of the kernel, \(N_{output\,channel}\) is the number of output channels (that is, the number of filters of the kernel), N is the width of the input space and the number of elemental descriptors, and M is the height of the input space and the number of elements. \(W_{kernel}\) and \(N_{output\,channel}\) are set to N so that the output has the shape M × 1 × N, which is then reshaped into M × N × 1 so that the layers depicted in Fig. 2 can be used repeatedly.

The output space has the same shape as the input space thanks to the kernel (weight space). Due to the structure of the layers, it is possible to process a lot of information even when using a small number of weights, and repeated hidden layers allow the model to learn complex correlations between input features and outputs.
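The layer described above can be sketched in plain Python as follows. This is an illustrative reimplementation, not the code used in this work: the ReLU activation, the random initialization, and the number of stacked layers are assumptions.

```python
import random


def elementwise_conv_layer(x, filters, biases):
    """Apply N filters of shape (1 x N) to an M x N input.

    x:       M rows (one per element), each with N elemental descriptors
    filters: N filters, each holding N weights (one per descriptor)
    biases:  N biases, one per filter
    Returns an M x N list: entry [m][c] is filter c applied to row m,
    i.e. the M x 1 x N convolution output already reshaped to M x N x 1.
    """
    output = []
    for row in x:  # the kernel strides down the height (element) axis
        out_row = []
        for weights, bias in zip(filters, biases):
            z = sum(w * v for w, v in zip(weights, row)) + bias
            out_row.append(max(0.0, z))  # ReLU is an assumed activation
        output.append(out_row)
    return output


def random_layer(n, rng):
    """Create random filters and zero biases for an N-descriptor layer."""
    filters = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]
    return filters, [0.0] * n


rng = random.Random(0)
M, N = 10, 27  # number of elements, number of elemental descriptors
x = [[rng.random() for _ in range(N)] for _ in range(M)]

h = x
for _ in range(3):  # the output shape equals the input shape, so layers stack
    filters, biases = random_layer(N, rng)
    h = elementwise_conv_layer(h, filters, biases)

print(len(h), len(h[0]))  # M rows, N columns
```

Because the output keeps the M × N shape of the input, the same layer type can be applied any number of times, which is the property the reshaping step is meant to provide.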

The three major reasons why concatenating the CNN part improves accuracy are the reduced number of parameters, the easy extraction of features from the elements, and a kernel suited to understanding the compositional space.

A CNN, which uses a kernel with a specific height and width, does not require all of the input features to compute a single output value, unlike a DNN, in which all input features are fully connected. Hence, a CNN requires fewer parameters (weights) to be trained than a DNN. For example, if a DNN were used instead of the CNN in our model for interpreting elemental descriptors, the number of parameters required per DNN layer would be 270 × 256 + 256 = 69376 when the dimensionality of the output space \(N_{output\,channel}\) is 256. This is because the total number of inputs \(N_{input\,channel}\) is 10 (number of elements) × 27 (number of elemental descriptors) = 270. On the other hand, our model only needs 27 × (1 × 27 × 1 + 1) = 756 parameters per CNN layer since the input space is (10 × 27 × 1), the kernel space is (1 × 27 × 27), and the output space is (10 × 27 × 1). Thus, the CNN layer needs only 1.09% of the parameters of the DNN layer. The reduced number of parameters lessens the burden of training and makes it easier to find a good approximation of the true function.
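The parameter counts quoted above can be checked with a few lines of arithmetic. The helper names are illustrative; the counts assume one bias per output unit or filter, which is what reproduces the numbers in the text.

```python
def dense_params(n_inputs, n_outputs):
    """Weights plus one bias per output unit of a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus one bias per filter of a convolutional layer."""
    return out_channels * (kernel_h * kernel_w * in_channels + 1)


n_elements, n_descriptors = 10, 27

dnn = dense_params(n_elements * n_descriptors, 256)     # 270 inputs, 256 outputs
cnn = conv_params(1, n_descriptors, 1, n_descriptors)   # (1 x 27) kernel, 27 filters
ratio_pct = 100.0 * cnn / dnn

print(dnn, cnn, round(ratio_pct, 2))
```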

Furthermore, the (1 × 27) shaped kernel plays a key role in extracting the features of each element. In the input space, the type of element changes along the height direction, so setting the height of the kernel to 1 ensures that the information of two or more elements is never mixed during convolution. A kernel of this shape can only produce an output from the elemental descriptors of a single element. Thus, models using the kernel learn to capture the characteristics of each element and extract its features. As a result, the CNN learns an appropriate rule of mixtures by itself.

Last but not least, the (1 × 27) shaped kernel strides in the height direction, so the weight in the kernel is multiplied by the same elemental descriptor every time it strides. While training the model, each weight is changed to become suitable for the interpretation of the corresponding elemental descriptor. This allows the model to better understand the input space, despite using fewer parameters than DNN.
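The two properties discussed above, namely that a kernel of height 1 never mixes elements and that the same weights are reused for every element, can be illustrated with a toy example. The three-descriptor input and the kernel weights are placeholders chosen only to make the arithmetic easy to follow.

```python
def apply_kernel(x, kernel, bias=0.0):
    """Slide one (1 x N) kernel down an M x N input, one row per stride."""
    return [sum(w * v for w, v in zip(kernel, row)) + bias for row in x]


kernel = [0.5, -1.0, 2.0]  # one weight per descriptor column (placeholder)

x = [
    [1.0, 2.0, 3.0],  # element A
    [4.0, 5.0, 6.0],  # element B
    [1.0, 2.0, 3.0],  # element C, same descriptors as A
]
base = apply_kernel(x, kernel)

# Change only element B and convolve again.
x_changed = [row[:] for row in x]
x_changed[1] = [0.0, 0.0, 0.0]
changed = apply_kernel(x_changed, kernel)

print(base)     # rows A and C give the same value: shared weights
print(changed)  # only the entry for element B differs: no mixing
```

Each weight always multiplies the same descriptor column, so training adjusts it for that descriptor regardless of which element occupies the row.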

The coefficient of determination and RMSE were also calculated for machine learning models that were not neural network models. We present the comparison of accuracy between the present work (‘w/ T&C’) and these machine learning models in Fig. 1c, d. The compared machine learning algorithms were XGBoost, linear regression, support vector machine, decision tree, random forest, and K-nearest neighbors. Figure 1c, d show that the prediction performances of the ensemble models, namely XGBoost, random forest, and ‘w/ T&C’, were better than those of the other models. Notably, the present work predicted the mechanical properties better than all the other algorithms compared. Thus, we selected ‘w/ T&C’ as the machine learning model for the inverse prediction and alloy design. A comparison of the predicted and actual data of the present model is plotted in Fig. 1e, f.

Inverse prediction and alloy design

We performed an inverse prediction with the present model to design HEAs with high mechanical performance. Our goal was not to design the HEA with the highest YS or UTS, but to design HEAs with higher YS or UTS than the existing best-performing HEAs. This is because finding the global optimum of the input space is not feasible due to its high dimensionality. Hence, a conditional random search, which has the advantage of being able to find local optimal values, was selected as the inverse predictor among metaheuristic methods. In addition, we selected random search instead of grid search since random search is known to find a better solution faster than grid search37.

We set the conditions of the random search so that it could yield HEAs with not only high strength but also comparably good elongation. The reason for setting conditions instead of modeling elongation is that modeling elongation is much more difficult than modeling strength. This is because the mechanism for enhancing elongation is so complex that it is hardly known, while the strengthening mechanisms have been largely clarified. Well-known strengthening mechanisms include work hardening, solid solution strengthening, precipitation hardening, and grain boundary strengthening. By contrast, the only well-known rule concerning elongation in metals is the strength-ductility trade-off, and even so, strengthening does not always reduce the elongation of metals. As such, the mechanism of elongation has not been well clarified to date.

Therefore, instead of training the model for elongation, several conditions of the random search were set to avoid HEAs with too low elongation. For example, the range of the annealing temperature is severely limited when randomly generating an input set. This is because the random search will likely design an HEA with a very low annealing temperature if the annealing temperature is not limited. A low annealing temperature will likely produce an HEA with very high strength but also very low elongation. Thus, limiting the annealing temperature is necessary to make the strength and elongation of the HEA equally good. As another example, the input set is discarded if the thermodynamically calculated mole fraction of the matrix at the homogenization temperature is less than 100%, in order to prevent coarse precipitates, which harm the mechanical performance of an alloy. The details of the conditions are provided in the Methods section. Finally, we designed two HEAs, HEA1 and HEA2, using the inverse prediction and tabulated their chemical compositions, process conditions, and predicted mechanical properties in Table 2.
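The overall flow of such a conditional random search can be sketched as below. Everything specific here is hypothetical: the element set, the annealing temperature window, and the two toy functions merely stand in for the trained model and the thermodynamic calculation, whose actual settings are given in the Methods section.

```python
import random


def random_candidate(rng, elements, anneal_range_k):
    """Draw a random composition (fractions sum to 1) and annealing temperature."""
    raw = [rng.random() for _ in elements]
    total = sum(raw)
    composition = {el: value / total for el, value in zip(elements, raw)}
    return {"composition": composition,
            "anneal_temp_k": rng.uniform(*anneal_range_k)}


def conditional_random_search(predict, matrix_fraction, elements,
                              anneal_range_k, n_trials, seed=0):
    """Keep the best-scoring random candidate that satisfies the conditions."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(n_trials):
        candidate = random_candidate(rng, elements, anneal_range_k)
        # Condition: discard inputs whose matrix fraction is below 100%.
        if matrix_fraction(candidate) < 1.0:
            continue
        score = predict(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Hypothetical stand-ins for the trained model and thermodynamic calculation.
ELEMENTS = ["Co", "Cr", "Fe", "Ni", "Mo"]


def toy_predict(candidate):
    return 500.0 + 2000.0 * candidate["composition"]["Mo"]


def toy_matrix_fraction(candidate):
    return 1.0 if candidate["composition"]["Mo"] <= 0.2 else 0.9


best, best_ys = conditional_random_search(
    toy_predict, toy_matrix_fraction, ELEMENTS,
    anneal_range_k=(1123.0, 1223.0), n_trials=500)
print(best, best_ys)
```

Restricting the sampling range handles the annealing temperature condition at generation time, while the matrix fraction condition is applied as a rejection step after generation.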

Tensile properties

The mechanical properties of HEA1 and HEA2 were measured by performing a tensile test multiple times. Figure 3a, b show the engineering stress-strain curves of HEA1 and HEA2. The averaged mechanical properties, YS, UTS, T.EL., and uniform elongation (U.EL.), are tabulated in Table 3 along with their raw data and standard deviations (std). We confirmed that most of the second tensile tests successfully reproduced the yield strength, ultimate tensile strength, and even uniform elongation of the first tensile tests. However, for HEA1 and HEA2 annealed at 900 °C for 15 min, a relatively large difference in uniform elongation was observed between the first and second tensile tests, and in such cases one more tensile test was conducted. To determine the necessity of the third test, we paid attention to uniform elongation rather than strength or total elongation. This was because the relative difference in strength was smaller than that in uniform elongation, and because the total elongation, unlike uniform elongation, may vary considerably between measurements depending on the location of crack initiation or the condition of the samples. Both HEAs showed the best mechanical performance under the process conditions of 900 °C annealing temperature and 30 min annealing time. Under these process conditions, the averaged YS, UTS, and T.EL. of HEA1 are 1048 MPa, 1501 MPa, and 31%, respectively. Likewise, HEA2 has a YS of 1042 MPa, UTS of 1514 MPa, and T.EL. of 22%.

Fig. 3: The engineering stress-strain curves of (a) HEA1 and (b) HEA2 measured in multiple tensile tests for each sample. Ashby plots of (c) YS vs T.EL. and (d) UTS vs T.EL., for the HEAs from previous machine learning studies26,29, HEA1, HEA2, and the input data. Ashby plots of (e) YS vs T.EL. and (f) UTS vs T.EL., for HEA1, HEA2, and the input data with similar process conditions to HEA1 and HEA2. The references of the input data are given in the supplementary information.

Ashby plots of YS vs T.EL. and UTS vs T.EL. for machine learning designed HEAs from previous studies26,29, HEA1 and HEA2, and the input data used to train the present model are shown in Fig. 3c, d. The Ashby plots are presented to demonstrate the outstanding mechanical properties of the presently designed HEAs. Figure 3c indicates that the combinations of YS and T.EL. of the two present HEAs are as good as those of the existing best-performing HEAs. Figure 3d shows that the combinations of UTS and T.EL. of the present HEAs are better than those of the input data, as well as those of the HEAs from previous machine learning studies26,29.

Ashby plots of YS vs T.EL. and UTS vs T.EL. for the presently designed HEAs and the HEAs with similar process conditions to the present HEAs are shown in Fig. 3e, f, respectively, for a more comprehensive comparison. The criteria for similar process conditions are a homogenization temperature of 1373–1573 K, a reduction rate of 70–90%, an annealing temperature of 1123–1223 K, and an annealing time of 10 min to 1 h. There is only one HEA that has a higher YS than the present HEAs in Fig. 3e, but the T.EL. and UTS of the present HEAs are significantly improved compared to that HEA. In addition, the combinations of YS and T.EL. of the present HEAs are superior to that of the HEA with higher YS. Other HEAs with similar process conditions show elongation as good as or even better than that of the present HEAs. However, the strengths of the present HEAs are much higher than those of these alloys. Hence, Fig. 3e, f shows that the presently designed HEAs gained considerable strength while maintaining sufficient elongation.
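The selection of alloys with similar process conditions amounts to a range filter over four fields, which can be sketched as follows. The field names are hypothetical; the ranges are the criteria listed above, with the annealing time expressed in minutes, and the first record uses the CrCoNi process conditions reported in this section.

```python
# Criteria for "similar process conditions" (inclusive ranges).
SIMILAR_CONDITIONS = {
    "homogenization_temp_k": (1373, 1573),
    "reduction_pct": (70, 90),
    "anneal_temp_k": (1123, 1223),
    "anneal_time_min": (10, 60),
}


def has_similar_process(record):
    """Return True if every process field lies within its allowed range."""
    return all(low <= record[key] <= high
               for key, (low, high) in SIMILAR_CONDITIONS.items())


# CrCoNi conditions as reported in the text: 1473 K, 90%, 1173 K, 30 min.
crconi = {"homogenization_temp_k": 1473, "reduction_pct": 90,
          "anneal_temp_k": 1173, "anneal_time_min": 30}

# Hypothetical record annealed outside the window, for contrast.
too_hot = dict(crconi, anneal_temp_k=1273)

print(has_similar_process(crconi), has_similar_process(too_hot))
```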

To validate the reliability of our experimental procedures, we conducted additional experiments on a CrCoNi alloy, which is one of the previously reported alloys38 shown in Fig. 3e, f. These experiments were conducted to confirm whether it is meaningful to directly compare the tensile tests of the HEAs designed in this study with the data collected from the literature, as data collected from multiple groups may show scatter due to differences in experimental settings. The process conditions for the CrCoNi alloy were homogenization at 1473 K, rolling at a reduction rate of 90%, and annealing at 1173 K for 30 min. The mechanical properties of the alloy reported in the original literature38 were a YS of 389 MPa, a UTS of 859 MPa, and a T.EL. of 74%. We conducted two tests for the CrCoNi alloy under the same process conditions mentioned above.

The measured mechanical properties of the CrCoNi alloy were YS of 373 MPa and 385 MPa, UTS of 879 MPa and 891 MPa, and T.EL. of 77% and 79%. Supplementary Fig. 5 shows the stress-strain curves of the two tests for the CrCoNi alloy. The agreement between the literature values and our measurements indicates that it is meaningful to directly compare our experimental data with the collected data and also that our experimental procedures are valid and reliable. Besides, we would like to point out that more than half of the previously reported alloys shown in Fig. 3e, f were measured in our group, which adds consistency and credibility to our tensile test results.
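The level of agreement can be made explicit by comparing the mean of the two measurements with the literature value for each property. The sketch below uses only the numbers quoted above; the choice of relative deviation of the mean as the measure of agreement is an assumption made for illustration.

```python
# Values quoted in the text for the CrCoNi alloy (MPa, MPa, %).
literature = {"YS": 389.0, "UTS": 859.0, "T.EL.": 74.0}
measured = {"YS": [373.0, 385.0], "UTS": [879.0, 891.0], "T.EL.": [77.0, 79.0]}


def relative_deviation_pct(values, reference):
    """Deviation of the mean measured value from the reference, in percent."""
    mean_value = sum(values) / len(values)
    return 100.0 * (mean_value - reference) / reference


deviations = {name: relative_deviation_pct(measured[name], literature[name])
              for name in literature}
for name, value in deviations.items():
    print(f"{name}: {value:+.2f}%")
```

With these inputs the mean measured values deviate from the literature values by roughly -2.6% for YS, +3.0% for UTS, and +5.4% for T.EL.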

The predicted values underestimated the experimental values by about 200 MPa, as shown in Tables 2 and 3. The RMSE of the present model is 122 MPa when predicting YS and 113 MPa when predicting UTS, so the errors are about 80 MPa larger than the RMSE. An error of this magnitude can occur even at the level of accuracy of the present model. On top of that, Fig. 1e, f shows that the present model tends to underestimate alloys with high strength, predicting a lower strength than the actual strength. Given that fact, it is not surprising that the predicted values somewhat underestimate the experimental values. Furthermore, the conditional random search using the machine learning model attempts to find a local optimal value under the given conditions. Hence, it can be said that the random search found HEAs with relatively high mechanical properties within the learned latent space of the model. As shown in Fig. 3, the random search succeeded in finding local optimal values of the latent space, and the designed HEAs showed mechanical performance superior to the previously reported HEAs.

Microstructure analysis

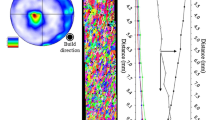

Microstructure analysis was conducted to investigate the strengthening mechanism of the designed HEAs. Figure 4 shows the backscattered electron (BSE) image (Fig. 4a), the mole fractions of equilibrium phases in the temperature range of 500–1000 °C (Fig. 4b), the XRD pattern (Fig. 4c), and the BSE-EDS (BSE-energy dispersive spectroscopy) mapping (Fig. 4d) of HEA1. The BSE image reveals that HEA1 has a partially recrystallized microstructure consisting of coarse non-recrystallized grains and fine recrystallized grains. In addition, fine precipitates with diameters of less than 200 nm are formed within the grains. The BSE-EDS mapping indicates that the white precipitates are a Mo-rich phase and the black precipitates are an Al-rich phase. The stability of the μ and B2 phases was underestimated in the present thermodynamic calculation. Nevertheless, we can estimate from further thermodynamic calculations and Fig. 4b that the Mo-rich phase is the μ phase and the Al-rich phase is the B2 phase. The XRD pattern confirmed that HEA1 had a μ phase and also showed that the matrix of HEA1 had an fcc structure. However, the XRD peak of the B2 phase was not detected because the fraction of the B2 phase in HEA1 was very small.

We present the BSE image, the mole fractions of the equilibrium phases in the temperature range of 600–1000 °C, the XRD pattern, and the BSE-EDS mapping of HEA2 in Fig. 5a–d, respectively. The BSE image shows that HEA2 was fully recrystallized with fine grains, unlike HEA1, but that fine precipitates had formed within the grains, as in HEA1. Similarly, the BSE-EDS mapping indicates that the white precipitates are a Mo-rich phase and the black precipitates are an Al-rich phase. Following the same approach as for HEA1, the Mo-rich phase and the Al-rich phase were estimated to be the σ phase and the B2 phase, respectively. The stability of the precipitate phases was also underestimated in our thermodynamic calculation, as with HEA1. The XRD pattern showed that the matrix of HEA2 had an fcc structure, but the XRD peaks of the σ phase and the B2 phase were not detected because the fractions of these phases were too small.

What HEA1 and HEA2 have in common is that they contain more elements than common HEAs, which usually contain 3–5 elements, and the formation of nanoscale precipitates was observed in their microstructure analysis. Solid-solution induced back-stress, caused by the multi-component chemical composition of the designed HEAs, and the nanoscale precipitates result in excellent strain-hardening abilities of the designed HEAs. Their excellent strain-hardening abilities allow both HEAs to have notable UTS. Particularly, precipitation strengthening is a key factor in both HEAs’ strengthening mechanisms. Fine precipitates densely formed within the recrystallized grains of both HEAs would help the HEAs to have higher strength without becoming more brittle.

The most notable difference between HEA1 and HEA2 was the degree of recrystallization. A previous study39 confirmed that a partially recrystallized microstructure considerably improves yield strength due to deformation twins. The fact that HEA1 had a higher yield strength than HEA2 is consistent with that study39.

The difference in the degree of recrystallization between the designed HEAs arises from the difference in their Mo content. HEA1 contains twice as much Mo as HEA2, and Mo has a much higher melting point than the other constituent elements. Thus, the calculated melting point of HEA1 was 35 °C higher than that of HEA2, and the recrystallization temperature is generally proportional to the melting point. This is why HEA1 had a partially recrystallized microstructure while HEA2 had a fully recrystallized one.

Calculation of lattice distortion

We calculated the lattice distortion effect using an MD simulation to account for the high yield strength of the designed HEAs. Okamoto et al. showed that the mean squared atomic displacement (MSAD) can be used to quantify local lattice distortion, and that the MSAD has a linear relationship with the 0 K yield strength40. We used MD simulation instead of ab initio calculation to obtain the MSAD of the HEAs for two major reasons. First, the designed HEAs are not equiatomic and some of the constituent elements have a very small fraction in the HEAs. Therefore, the chemical disorder would be very hard to represent in ab initio calculations with samples of hundreds of atoms, even using special quasi-random structures41 (SQS). On the other hand, MD simulations are relatively free from the sample size problem compared to ab initio calculations. Thus, samples with far more than hundreds of atoms can be used for simulations (e.g., the MSAD calculation in this work used samples with 108000 atoms). Second, because of their ability to simulate a large number of atoms, MD simulations can be used for further studies. For example, MD simulations can be utilized to calculate properties like CRSS or to elucidate the underlying causes of phenomena in HEAs, including sluggish diffusion and micro-twinning12. In the present work, the simulation samples were prepared by randomly distributing the 108000 atoms on an fcc lattice according to the alloy composition, and the samples were relaxed at 0 K for a clear analysis of lattice distortion.
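Once a relaxed configuration is available, the MSAD can be evaluated as in the sketch below. It assumes that the displacement of each atom is measured from its ideal fcc lattice site in an orthorhombic periodic box; the exact post-processing used in this work is not stated here, and the coordinates are placeholders.

```python
import math


def msad(ideal, relaxed, box):
    """Mean squared atomic displacement between ideal and relaxed positions.

    ideal, relaxed: lists of (x, y, z) positions in the same atom order
    box:            (Lx, Ly, Lz) lengths of an orthorhombic periodic box
    """
    total = 0.0
    for r0, r in zip(ideal, relaxed):
        for a, b, length in zip(r0, r, box):
            d = b - a
            d -= length * round(d / length)  # minimum-image convention
            total += d * d
    return total / len(ideal)


# Placeholder coordinates in angstroms (not simulation output).
box = (10.0, 10.0, 10.0)
ideal = [(0.0, 0.0, 0.0), (1.8, 1.8, 0.0), (0.05, 0.0, 0.0)]
relaxed = [(0.1, 0.0, 0.0), (1.8, 1.8, 0.2), (9.95, 0.0, 0.0)]

value = msad(ideal, relaxed, box)
print(value, math.sqrt(value))  # MSAD and its square root
```

The third atom crosses the periodic boundary, so without the minimum-image step its displacement would be counted as 9.9 instead of 0.1.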

The calculated results of the MSAD for nine equiatomic HEAs using the MD simulation are presented in Fig. 6a, and compared with DFT calculations42, which are a kind of ab initio calculation. Figure 6a shows that the MD calculation results do not perfectly reproduce the DFT results. However, considering that VCoNi, an HEA with large lattice distortion, ranks high among the MD results, it is conceivable that a relative comparison of lattice distortion will be possible. Supplementary Fig. 6 shows a comparison between MD and DFT calculations of MSAD for the VCoNi and other equiatomic HEAs. There also exist differences between MD and DFT in the ordering of the other equiatomic HEAs which show small mutual differences in the MSAD. Nevertheless, the large MSAD of the VCoNi alloy compared to the other equiatomic HEAs is clearly predicted by the MD calculation as well as the DFT calculation.

Fig. 6: (a) Comparison of MSAD calculations for MD and DFT42. (b) Calculation of MSAD for various alloys using MD simulation including the designed HEAs.

MSAD calculations using MD, including those for the designed HEAs, are shown in Fig. 6b. The designed HEAs are compared with equiatomic HEAs of very different compositions to emphasize that the calculated lattice distortion of the designed HEAs is very severe compared to that of well-known equiatomic HEAs. Since the degree of lattice distortion is determined by the composition of an HEA, no significant difference would be expected when comparing the lattice distortion of the designed HEAs with that of alloys with similar compositions.

Figure 6b indicates that the averaged \(\mathrm{MSAD}^{1/2}\) values of the designed HEAs are larger than those of the compared equiatomic HEAs, which suggests that very high lattice distortions exist in the designed alloys. The lattice friction stress caused by high lattice distortion is a potential contributor to the superior mechanical properties of the designed HEAs.

The compared HEAs in Fig. 6b are competing HEAs in the Ashby plot and have lower mechanical performance than the designed HEAs. Table 4 indicates that the designed HEAs with high MSAD have higher YS than the equiatomic HEAs43,44,45. In particular, the VCoNi alloy, which is known to have severe lattice distortion, also has a much higher yield strength than the other equiatomic HEAs. These results demonstrate that lattice distortion can be one of the main contributors to mechanical performance and that the MD calculation is reliable enough to distinguish whether an HEA has severe lattice distortion or not.

Comparison with existing studies

The HEAs designed by the present model both differ from existing HEAs in chemical composition and process conditions. None of the alloys in the data set used to train or test the model contains a small amount of aluminum, a medium amount of vanadium, and a small amount of chromium like the designed HEAs. The fact that both alloys consist of a new combination of elements indicates that both alloys have entirely different chemical compositions from the existing HEAs. The addition of new elements to an alloy significantly alters its mechanical properties, as the newly added elements affect the phase stability and microstructure of the alloy. For example, the absence of vanadium in HEA1 leads to lower stability of the μ phase and B2 phase, as shown in Supplementary Fig. 7 of the supplementary information. The μ phase and B2 phase would not form at the annealing temperature of HEA1, so a microstructure with fine μ precipitates would not exist because of the lowered stability. Thus, the UTS of HEA1 without vanadium would be lower than that of HEA1 with vanadium because the nanoscale precipitates, which serve as dislocation obstacles and sources, would not form in HEA1 without vanadium46. The process conditions were also tailored to the previously unknown chemical compositions to ensure that the designed HEAs have the best mechanical performance.

It should be also noted here that a recent study by Giles et al.47, which also used machine learning to design HEAs with improved properties, has some similarities with our study. Both studies utilized descriptors to train machine learning models, designed alloys using metaheuristic algorithms, and set HEAs as target alloys. However, there are significant differences between the two studies. Firstly, the target properties in the two studies are different. Giles et al. focused on compressive strength, while we focused on the more challenging prediction of tensile strength. Tensile strength is known to vary considerably due to a variety of factors, such as the initiation position of a crack tip and local stress, making its prediction more difficult than compressive strength. Secondly, the target property items are also different. While Giles et al. focused on compressive yield strength, we focused on both tensile yield strength and ultimate tensile strength. These differences in target properties may account for the differences in feature selection and algorithm choice. Lastly, our model incorporated not only process conditions but also phase information through thermodynamic calculation, which was not included in the model by Giles et al. This provides a more comprehensive understanding of the material’s properties and can lead to more accurate predictions when designing an alloy through extrapolation.

In summary, we developed a highly accurate machine learning model and verified that the model had the highest accuracy compared to other models with different algorithms, inputs, and structures. The reason for the good accuracy of the model was discussed in terms of reduced number of parameters, feature extraction, and the effect of the kernel. A conditional random search was adopted as the inverse prediction method for the model and was utilized for the alloy design approach, to find multiple alloy candidates. The model and the alloy design approach were experimentally validated by conducting tensile tests with the two designed HEAs. The results showed that both had superior mechanical properties. We also investigated the reasons for the superior mechanical properties using microstructure analysis and lattice distortion effect calculation. The microstructure analysis revealed that the fine precipitates strengthened both designed HEAs and confirmed that the difference in recrystallization behavior of the HEAs results in HEA1 having a higher yield strength than HEA2. The MSAD calculation showed that a severe lattice distortion exists in the designed HEAs, and the distortion possibly contributes to the good mechanical properties of the HEAs. The alloy design approach can be further leveraged for the design of HEAs with more potential elements, and advanced properties, if more data is added to the dataset used for model training.

Methods

Dataset preparation

We collected 501 data points from previous studies to be used for machine learning model training and validation. The references to the collected data are provided in the supplementary information. The data include the chemical composition, process conditions, and tensile mechanical properties of HEAs. The collected raw data are provided in the supplementary information of this manuscript. We used the TCFE2000 thermodynamic database and its upgraded version48,49,50,51,52 for the thermodynamic calculation using Thermo-Calc53 and OpenCalphad54 software. We designated the annealing temperature (for general cases), the homogenization temperature (for non-annealed cases), or a temperature just below the melting temperature (for as-cast cases) as the equilibrium temperature for the thermodynamic descriptors that needed a temperature for calculation. The elemental descriptors were the fundamental properties of the 10 elements considered in this work (C, Al, V, Cr, Mn, Fe, Co, Ni, Cu, and Mo) and the properties of the stable phase at room temperature for each element. We collected the elemental descriptors from previous literature55,56,57,58,59,60,61,62. All of the input features, which are chemical composition, process conditions, thermodynamic descriptors, and elemental descriptors, are provided in the supplementary information.

Machine learning models

We used Keras63, an open-source neural network library written in Python, to develop the neural network models. XGBoost library64, an open-source software library that provides a gradient boosting framework, was used to develop the XGBoost model. We developed the other models using Scikit-learn65, a free software machine learning library for Python. The smallest repeated layers of the simple DNN models consist of a dense layer with a LeakyReLU activation function and a dropout layer. The input data of the simple DNN models had a 1-dimensional shape so that all the input features could be fully connected to the dense layer. In addition, the input data was standardized using mean and standard deviation values. The models were validated using the holdout validation method, which randomly splits the dataset into a train set, validation set, and test set. The holdout validation was conducted 100 times and the accuracy of each holdout validation was averaged to get a reliable accuracy value.
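The repeated holdout procedure described above can be sketched as follows. This is a minimal, standard-library illustration and not the authors' code: the 80/10/10 split, the choice to compute the standardization statistics on the training set only, the one-feature least-squares line standing in for the neural network, and the synthetic data are all assumptions made for the example.

```python
import random
import statistics


def r2_score(y_true, y_pred):
    """Coefficient of determination."""
    mean_y = statistics.fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def fit_line(x, y):
    """Least-squares line; a stand-in for the real regressor."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


def repeated_holdout(x, y, n_repeats=100, train_frac=0.8, val_frac=0.1, seed=0):
    """Average test-set R^2 over many random train/validation/test splits."""
    rng = random.Random(seed)
    n = len(x)
    n_train, n_val = int(train_frac * n), int(val_frac * n)
    scores = []
    for _ in range(n_repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        train = idx[:n_train]
        val = idx[n_train:n_train + n_val]   # would drive early stopping for a neural network
        test = idx[n_train + n_val:]
        # Standardize with mean and standard deviation (training set only, by assumption).
        mu = statistics.fmean([x[i] for i in train])
        sigma = statistics.pstdev([x[i] for i in train])
        z = [(v - mu) / sigma for v in x]
        slope, intercept = fit_line([z[i] for i in train], [y[i] for i in train])
        preds = [slope * z[i] + intercept for i in test]
        scores.append(r2_score([y[i] for i in test], preds))
    return statistics.fmean(scores)


# Synthetic data (hypothetical): a noisy straight line.
x = [float(i) for i in range(50)]
y = [2.0 * v + 1.0 + 0.5 * ((i * 7) % 5 - 2) for i, v in enumerate(x)]
mean_r2 = repeated_holdout(x, y)
print(f"mean test R^2 over 100 holdouts: {mean_r2:.3f}")
```

Averaging over many random splits reduces the dependence of the reported accuracy on any single lucky or unlucky partition, which matters for a dataset of only a few hundred points.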

The concatenated DNN and CNN models have a more complicated structure than the simple DNN models. As we mentioned, the concatenated DNN and CNN models consist of the DNN part, the CNN part, and the regression part. The DNN part is exactly the same as the simple DNN models, except that the end layer of the DNN part is concatenated with the end layer of the CNN part and the concatenated one is connected to the regression part. The simplified smallest repeated layers of the CNN part are described in Fig. 2. In detail, the smallest repeated layers consist of a convolution layer with a LeakyReLU activation function and batch normalization and a reshape layer. The reshape layer changes the shape of the output space of the convolution layer into M × N × 1 so that the next repeated layers get the same input shape. The input data of the CNN part had a shape of M × N × 1 and the kernel of the convolution layer had a shape of 1 × N × N. Consequently, the kernel can stride to the direction in which the element changes and the output space has a shape of N × M × 1.
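To make the shape bookkeeping of one repeated CNN block concrete, the sketch below treats the input as M rows (assumed here to be one row per element) of N features, and applies N filters that each span a full row. Every row then yields N outputs, so after the reshape the result again has M × N entries and the block can be stacked. Bias terms and batch normalization are omitted, and the LeakyReLU slope `alpha` is an assumed value; none of this is the authors' implementation.

```python
def leaky_relu(x, alpha=0.3):
    """LeakyReLU activation; the slope alpha is an assumption of this sketch."""
    return x if x > 0 else alpha * x


def conv_block(rows, kernels, alpha=0.3):
    """Apply N full-row filters to each of M rows.

    rows:    M lists of N features (one row per element, by assumption).
    kernels: N filters, each a list of N weights spanning a whole row.
    Returns an M x N nested list: out[m][k] = LeakyReLU(dot(rows[m], kernels[k])).
    """
    return [
        [leaky_relu(sum(w * v for w, v in zip(kernel, row)), alpha) for kernel in kernels]
        for row in rows
    ]


# Toy example: M = 3 rows, N = 2 features, identity filters.
rows = [[1.0, -2.0], [0.5, 0.5], [-1.0, 4.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = conv_block(rows, identity)
out2 = conv_block(out, identity)  # same shape, so the block can be repeated
print(len(out), len(out[0]))
```

Because each filter only ever sees one row at a time, the same weights are shared across all elements, which is one way such a layer keeps the parameter count low.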

The atomic fractions in each alloy are entered in parallel with the elemental descriptors as shown in Fig. 2, instead of just scaling the elemental descriptors using the atomic fractions. However, the atomic fractions are standardized differently from elemental descriptors. The atomic fractions vary depending on input data, so the atomic fractions are standardized using mean and standard deviation calculated from input data. On the other hand, the elemental descriptors do not vary depending on input data but vary depending on an element. Hence, the elemental descriptors are standardized using mean and standard deviation calculated from element-dependent values. For example, valence electron concentrations (VEC) of C, Al, V, Cr, Mn, Fe, Co, Ni, Cu, and Mo are 4, 3, 5, 6, 7, 8, 9, 10, 11, and 6, respectively. Thus, the mean and standard deviation of VEC are 6.9 and 2.6, respectively. The standardized atomic fraction of each element is concatenated with the standardized elemental descriptors of the corresponding element and the concatenated data is inputted into the CNN to extract features.
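The VEC example above can be checked with a few lines of Python. The sketch uses the ten VEC values quoted in the text; the dictionary layout and variable names are illustrative. The quoted standard deviation of 2.6 is reproduced by the sample (n − 1) formula, whereas the population formula would give about 2.5, so the sample formula is assumed here.

```python
import statistics

# Valence electron concentrations quoted in the text.
vec = {"C": 4, "Al": 3, "V": 5, "Cr": 6, "Mn": 7,
       "Fe": 8, "Co": 9, "Ni": 10, "Cu": 11, "Mo": 6}

values = list(vec.values())
mean_vec = statistics.mean(values)    # mean over the 10 elements
std_vec = statistics.stdev(values)    # sample standard deviation (n - 1)

# Element-wise standardization of the descriptor.
standardized = {el: (v - mean_vec) / std_vec for el, v in vec.items()}

print(round(mean_vec, 1), round(std_vec, 1))
```

The key point is that these statistics are taken over the list of elements, not over the alloys in the dataset, so the standardized descriptor of a given element is identical for every alloy.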

The concatenated DNN and CNN model was validated in the same manner as the simple DNN. All of the neural network models developed in this study used an Adam66 optimizer and mean squared error for the optimization algorithm and loss, respectively. We developed the ensemble models by averaging all or some of the neural networks described above. The holdout validation was conducted for the ensemble models as well, but when training the neural network models, the random seed used for splitting the dataset was fixed to prevent some models from training on other models’ test set data. Moreover, the holdout validation method used for the ensemble models randomly splits the dataset into a train set, a validation set, a 2nd validation set, and a test set. The validation set was used to prevent overfitting while training the neural network models of the ensemble, and the 2nd validation set was used to filter out overfitted or underfitted models. Any model whose R² on the 2nd validation set did not reach 0.81 (for the YS prediction models) or 0.75 (for the UTS prediction models) was excluded from the ensemble. Most of the other models were trained using default hyperparameters. However, the hyperparameters of SVR were manually tuned since its accuracy changes greatly with the choice of kernel. The input data and validation methods were the same as those of the simple DNN models.
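The ensemble filtering step can be sketched as below: each trained model is scored on the 2nd validation set, models that do not reach the threshold are dropped, and the remaining predictions are averaged. The 0.81 threshold is the YS value given in the text; representing models as plain Python callables, the helper names, and the toy data are assumptions for illustration only.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def filter_models(models, x_val2, y_val2, threshold):
    """Keep only models whose R^2 on the 2nd validation set reaches the threshold."""
    return [m for m in models
            if r2_score(y_val2, [m(x) for x in x_val2]) >= threshold]


def ensemble_predict(models, x):
    """Average the predictions of the retained models."""
    return sum(m(x) for m in models) / len(models)


# Hypothetical stand-ins for trained networks.
candidates = [
    lambda x: 2.0 * x,         # well fitted
    lambda x: 2.0 * x + 0.1,   # well fitted, slight bias
    lambda x: 0.0,             # underfitted, should be rejected
]
x_val2 = [0.0, 1.0, 2.0, 3.0, 4.0]
y_val2 = [0.0, 2.0, 4.0, 6.0, 8.0]

kept = filter_models(candidates, x_val2, y_val2, threshold=0.81)
prediction = ensemble_predict(kept, 1.0)
print(len(kept), prediction)
```

Using a separate 2nd validation set for this filter keeps the test set untouched, so the reported ensemble accuracy is not biased by the model selection itself.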

Conditional random search

The conditional random search was performed by repeating the random input generation step, the prediction step, and the selection step. First, four input sets are randomly generated under certain conditions in the random input generation step. The randomly generated input sets consist of the chemical composition and process conditions. The range from which each input is randomly generated is limited to the range where data exist for that input in the dataset. Furthermore, all of the chemical composition inputs are normalized by their sum so that the sum is 100%. In particular, the range of the annealing temperature is more tightly limited (above 1173 K and below the highest temperature for which data exist) than the other inputs.
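A minimal sketch of the random input generation step is given below. Only the 1173 K lower limit on the annealing temperature and the normalization to 100% come from the text; the element bounds, the upper temperature limit, and the function and key names are hypothetical placeholders.

```python
import random


def generate_input(comp_bounds, temp_bounds, rng):
    """Draw one random input set within the given bounds.

    comp_bounds: {element: (low, high)} raw composition ranges (hypothetical values).
    temp_bounds: (low, high) annealing temperature range in K.
    """
    raw = {el: rng.uniform(lo, hi) for el, (lo, hi) in comp_bounds.items()}
    total = sum(raw.values())
    # Normalize so that the composition sums to 100%.
    composition = {el: 100.0 * v / total for el, v in raw.items()}
    return {"composition": composition, "anneal_K": rng.uniform(*temp_bounds)}


rng = random.Random(0)
comp_bounds = {"Co": (10, 40), "Cr": (0, 20), "Fe": (0, 40),
               "Ni": (10, 40), "Al": (0, 10), "Mo": (0, 10), "V": (0, 20)}
temp_bounds = (1173.0, 1373.0)  # lower limit from the text; upper limit assumed

# Four input sets per iteration, as described in the text.
input_sets = [generate_input(comp_bounds, temp_bounds, rng) for _ in range(4)]
for s in input_sets:
    print(round(sum(s["composition"].values()), 6), round(s["anneal_K"], 1))
```

Note that normalization can push an individual element slightly outside its raw bounds; this sketch does not re-check the bounds afterwards, and the text does not state how that case was handled.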

We needed to transform the generated input sets so that they became the inputs of the trained model. Thus, thermodynamic descriptors were calculated for the generated input sets and the generated input sets were combined with the calculated thermodynamic descriptors and the elemental descriptors to make a shape suitable for the trained model. The generated and transformed input sets were fed into the trained model to predict YS or UTS in the prediction step.

Finally, the half of the input sets with the highest predicted values was selected in the selection step. However, before selection, inappropriate input sets were discarded. An input set was discarded if the mole fraction of the matrix at the homogenization temperature, thermodynamically calculated using the input set, was less than 100%. Moreover, an input set whose chemical composition was too similar to that of another input set was also discarded before selection. The criterion for one input set being “too similar” to another input set is expressed as

where a_i and b_i are the standardized chemical composition and process conditions of the two input sets. After selection, we randomly generated as many new input sets as the number of discarded input sets. HEAs with good mechanical performance can be designed by repeating the above-mentioned process. The iteration of the process stops when the input sets selected in the selection step remain the same for 10,000 iterations.
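The overall loop can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the similarity test is replaced by a hypothetical Euclidean-distance threshold, the thermodynamic single-phase check is represented by a user-supplied `is_valid` callback, the population is simply refilled to its original size each iteration, and a toy two-variable objective stands in for the trained model. The patience is reduced from the 10,000 iterations used in the text so that the demo finishes quickly.

```python
import math
import random


def too_similar(a, b, threshold):
    """Hypothetical similarity test: Euclidean distance below a threshold."""
    return math.dist(a, b) < threshold


def conditional_random_search(predict, generate, is_valid, pop_size=4,
                              patience=50, threshold=0.05, seed=0,
                              max_iter=100_000):
    rng = random.Random(seed)
    population = [generate(rng) for _ in range(pop_size)]
    selected, unchanged = [], 0
    for _ in range(max_iter):
        # Prediction step: rank the valid candidates by predicted property.
        ranked = sorted((p for p in population if is_valid(p)),
                        key=predict, reverse=True)
        # Discard candidates that are too similar to a better-ranked one.
        survivors = []
        for cand in ranked:
            if all(not too_similar(cand, s, threshold) for s in survivors):
                survivors.append(cand)
        # Selection step: keep the top half.
        new_selected = survivors[: pop_size // 2]
        unchanged = unchanged + 1 if new_selected == selected else 0
        selected = new_selected
        if unchanged >= patience:
            break
        # Refill the population with fresh random candidates.
        population = selected + [generate(rng)
                                 for _ in range(pop_size - len(selected))]
    return selected


# Toy problem (hypothetical): maximize a smooth function on the unit square.
def toy_predict(p):
    return -((p[0] - 0.3) ** 2 + (p[1] - 0.7) ** 2)


def toy_generate(rng):
    return (rng.random(), rng.random())


best = conditional_random_search(toy_predict, toy_generate, lambda p: True)
print(best)
```

Because similar candidates are discarded before selection, the retained set tends to hold distinct solutions rather than several copies of one optimum, which is the property the text relies on to obtain multiple alloy candidates.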

Experimental procedure

We fabricated ingots of the designed alloys, HEA1 and HEA2, based on the machine learning model, using vacuum induction melting, with elements whose purity was above 99.9 wt%. The ingots were homogenized at 1200 °C for 6 h under an Ar atmosphere. The homogenized ingots were then cold-rolled to 80% thickness reduction. The cold-rolled plates of HEA1 were annealed for 15 min or 30 min at 900 °C; the HEA2 plates were annealed for 15 min or 30 min at 900 °C or for 5 min at 1000 °C.

The microstructure analysis of the annealed samples was carried out using XRD and high-resolution FE-SEM (JEOL FE-SEM 7100). BSE analysis and EDS elemental mapping were performed in the FE-SEM. The crystal structures of the annealed alloys were analyzed with synchrotron X-ray diffraction (XRD) at the 8D beamline of Pohang Accelerator Laboratory, with Cu-Kα radiation with a wavelength of 0.15404 Å. The XRD scans were performed with a step size of 0.02° and a dwell time of 0.5 s. We mechanically polished the samples for the XRD and SEM analysis with SiC paper up to 1200 grit and electropolished them using a solution of 92% CH3COOH + 8% HClO4 at 20 V so that the strained layer and the scratches caused by mechanical polishing were removed.

We tested the tensile properties of the designed alloys using an Instron 1361 at 298 K and a strain rate of 10⁻³ s⁻¹. Dog-bone-shaped specimens with a gauge length of 6.4 mm and a gauge width of 2.5 mm were cut along the rolling direction and prepared for tensile testing of the alloys. We utilized a digital image correlation method to accurately measure the strain (ARAMIS M12, GOM Optical Measuring Techniques).

The CoCrFeNiAlMoV septenary interatomic potential

The 2NN MEAM interatomic potential, which is essential for running the MD simulation, for the CoCrFeNiAlMoV septenary system needs potential parameter sets for unary, binary, and ternary systems of the constituent elements. The potentials for the unary elements (Co67, Cr68, Fe69, Ni67, Al70, Mo71, and V69) and the binary systems (Co-Cr68, Co-Fe68, Co-Ni72, Co-Al73, Co-Mo74, Co-V75, Cr-Fe76, Cr-Ni77, Cr-Mo74, Cr-V78, Fe-Ni77, Fe-Al79, Fe-Mo74, Fe-V78, Ni-Al72, Ni-Mo74, Ni-V80, Al-Mo74, Al-V80, and Mo-V81) have already been published except for the Al-Cr binary potential. Thus, to complete the septenary potential, an Al-Cr potential was developed during this work so that the potential satisfactorily reproduced the fundamental materials properties of the system.

The previously developed unary parameter sets for Al and Cr, the parameters of the developed binary potential, and the validation of the developed potential are presented in the supplementary information of this paper. Furthermore, it should be noted here that the Co-Fe68 and Fe-V78 potentials were modified and have different parameter values from the published ones. This is because third nearest-neighbor interactions are not completely screened in bcc structures, which causes stability problems for bcc structures when the Cmin parameters are smaller than 0.47. The details of the problems and the modification of the potentials will be published separately.

The potentials of the ternary systems (Co-Cr-Fe, Co-Cr-Ni, Co-Fe-Ni, and Cr-Fe-Ni) have already been published12,77, but the others have not been developed yet. Hence, we determined the parameters of the undeveloped potentials in the same manner as Choi et al.12. The potential of a ternary system needs six screening parameters: three Cmin(i-j-k) and three Cmax(i-j-k). The screening parameters indicate the degree of screening by an atom j of the interaction between two adjacent atoms i and k. The screening parameters were assigned values derived mechanically by a kind of averaging, and the septenary interatomic potential was thereby completed. The parameters of the septenary interatomic potential are provided in LAMMPS82 format in the supplementary information. We used a radial cutoff distance of 4.8 Å for all MD simulations conducted in this study.

Data availability

All data are available in the main text or the supplementary materials.

Code availability

The codes are available from the corresponding author upon reasonable request.

References

Yeh, J.-W. et al. Nanostructured high-entropy alloys with multiple principal elements: Novel alloy design concepts and outcomes. Adv. Eng. Mater. 6, 299–303 (2004).

Cantor, B., Chang, I. T. H., Knight, P. & Vincent, A. J. B. Microstructural development in equiatomic multicomponent alloys. Mater. Sci. Eng. A 375–377, 213–218 (2004).

Miracle, D. B. & Senkov, O. N. A critical review of high entropy alloys and related concepts. Acta Mater. 122, 448–511 (2017).

Chuang, M. H., Tsai, M. H., Wang, W. R., Lin, S. J. & Yeh, J. W. Microstructure and wear behavior of AlxCo1.5CrFeNi1.5Tiy high-entropy alloys. Acta Mater. 59, 6308–6317 (2011).

Ding, J. et al. High entropy effect on structure and properties of (Fe,Co,Ni,Cr)-B amorphous alloys. J. Alloy. Compd. 696, 345–352 (2017).

Nene, S. S. et al. Corrosion-resistant high entropy alloy with high strength and ductility. Scr. Mater. 166, 168–172 (2019).

Kwon, H. et al. Precipitation-driven metastability engineering of carbon-doped CoCrFeNiMo medium-entropy alloys at cryogenic temperature. Scr. Mater. 188, 140–145 (2020).

Karati, A., Guruvidyathri, K., Hariharan, V. S. & Murty, B. S. Thermal stability of AlCoFeMnNi high-entropy alloy. Scr. Mater. 162, 465–467 (2019).

Ye, Y. F., Wang, Q., Lu, J., Liu, C. T. & Yang, Y. High-entropy alloy: Challenges and prospects. Mater. Today 19, 349–362 (2016).

Saal, J. E., Berglund, I. S., Sebastian, J. T., Liaw, P. K. & Olson, G. B. Equilibrium high entropy alloy phase stability from experiments and thermodynamic modeling. Scr. Mater. 146, 5–8 (2018).

Ma, D. et al. Phase stability of non-equiatomic CoCrFeMnNi high entropy alloys. Acta Mater. 98, 288–296 (2015).

Choi, W.-M., Jo, Y. H., Sohn, S. S., Lee, S. & Lee, B.-J. Understanding the physical metallurgy of the CoCrFeMnNi high-entropy alloy: An atomistic simulation study. npj Comput. Mater. 4, 1–9 (2018).

Jarlöv, A. et al. Molecular dynamics study on the strengthening mechanisms of Cr–Fe–Co–Ni high-entropy alloys based on the generalized stacking fault energy. J. Alloy. Compd. 905, 164137 (2022).

Ma, D., Grabowski, B., Körmann, F., Neugebauer, J. & Raabe, D. Ab initio thermodynamics of the CoCrFeMnNi high entropy alloy: Importance of entropy contributions beyond the configurational one. Acta Mater. 100, 90–97 (2015).

Lederer, Y., Toher, C., Vecchio, K. S. & Curtarolo, S. The search for high entropy alloys: A high-throughput ab-initio approach. Acta Mater. 159, 364–383 (2018).

Jo, Y. H. et al. FCC to BCC transformation-induced plasticity based on thermodynamic phase stability in novel V10Cr10Fe45CoxNi35−x medium-entropy alloys. Sci. Rep. 9, 2948 (2019).

Peng, J., Yamamoto, Y., Hawk, J. A., Lara-Curzio, E. & Shin, D. Coupling physics in machine learning to predict properties of high-temperatures alloys. npj Comput. Mater. 6, 141 (2020).

Zhang, L., Qian, K., Huang, J., Liu, M. & Shibuta, Y. Molecular dynamics simulation and machine learning of mechanical response in non-equiatomic FeCrNiCoMn high-entropy alloy. J. Mater. Res. Technol. 13, 2043–2054 (2021).

Dey, S., Sultana, N., Kaiser, M. S., Dey, P. & Datta, S. Computational intelligence based design of age-hardenable aluminium alloys for different temperature regimes. Mater. Des. 92, 522–534 (2016).

Suh, J. S., Suh, B. C., Lee, S. E., Bae, J. H. & Moon, B. G. Quantitative analysis of mechanical properties associated with aging treatment and microstructure in Mg-Al-Zn alloys through machine learning. J. Mater. Sci. Technol. 107, 52–63 (2022).

Chen, Y. et al. Machine learning assisted multi-objective optimization for materials processing parameters: A case study in Mg alloy. J. Alloy. Compd. 844, 156159 (2020).

Du, J. L., Feng, Y. L. & Zhang, M. Construction of a machine-learning-based prediction model for mechanical properties of ultra-fine-grained Fe–C alloy. J. Mater. Res. Technol. 15, 4914–4930 (2021).

Guo, T. et al. Machine learning accelerated, high throughput, multi-objective optimization of multiprincipal element alloys. Small 17, 2102972 (2021).

Desu, R. K., Nitin Krishnamurthy, H., Balu, A., Gupta, A. K. & Singh, S. K. Mechanical properties of Austenitic Stainless Steel 304L and 316L at elevated temperatures. J. Mater. Res. Technol. 5, 13–20 (2016).

Zhang, H., Fu, H., Zhu, S., Yong, W. & Xie, J. Machine learning assisted composition effective design for precipitation strengthened copper alloys. Acta Mater. 215, 117118 (2021).

Li, J. et al. Performance-oriented multistage design for multi-principal element alloys with low cost yet high efficiency. Mater. Horiz. 9, 1518 (2022).

Wen, C. et al. Modeling solid solution strengthening in high entropy alloys using machine learning. Acta Mater. 212, 116917 (2021).

Lee, J.-W. et al. A machine-learning-based alloy design platform that enables both forward and inverse predictions for thermo-mechanically controlled processed (TMCP) steel alloys. Sci. Rep. 11, 11012–11143 (2021).

Zheng, T. et al. Tailoring nanoprecipitates for ultra-strong high-entropy alloys via machine learning and prestrain aging. J. Mater. Sci. Technol. 69, 156–167 (2021).

Wen, C. et al. Machine learning assisted design of high entropy alloys with desired property. Acta Mater. 170, 109–117 (2019).

Xie, Q. et al. Online prediction of mechanical properties of hot rolled steel plate using machine learning. Mater. Des. 197, 109201 (2021).

Klimenko, D., Stepanov, N., Li, J., Fang, Q. & Zherebtsov, S. Machine Learning-Based Strength Prediction for Refractory High-Entropy Alloys of the Al-Cr-Nb-Ti-V-Zr System. Mater. (Basel) 14, 7213 (2021).

Shen, C. et al. Physical metallurgy-guided machine learning and artificial intelligent design of ultrahigh-strength stainless steel. Acta Mater. 179, 201–214 (2019).

Shen, C. et al. Discovery of marageing steels: Machine learning vs. physical metallurgical modelling. J. Mater. Sci. Technol. 87, 258–268 (2021).

Lee, B.-J. & Baskes, M. I. Second nearest-neighbor modified embedded-atom-method potential. Phys. Rev. B 62, 8564–8567 (2000).

Dietterich, T. G. et al. Ensemble learning. Handb. Brain Theory Neural Netw. 2, 110–125 (2002).

Bergstra, J. & Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012).

Jodi, D. E., Choi, N., Park, J. & Park, N. Mechanical performance improvement by nitrogen addition in N-CoCrNi compositionally complex alloys. Metall. Mater. Trans. A Phys. Metall. Mater. Sci. 51, 3228–3237 (2020).

Jo, Y. H. et al. Cryogenic strength improvement by utilizing room-temperature deformation twinning in a partially recrystallized VCrMnFeCoNi high-entropy alloy. Nat. Commun. 8, 1–8 (2017).

Okamoto, N. L., Yuge, K., Tanaka, K., Inui, H. & George, E. P. Atomic displacement in the CrMnFeCoNi high-entropy alloy – A scaling factor to predict solid solution strengthening. AIP Adv. 6, 125008 (2016).

Zunger, A., Wei, S. H., Ferreira, L. G. & Bernard, J. E. Special quasirandom structures. Phys. Rev. Lett. 65, 353 (1990).

Sohn, S. S. et al. Ultrastrong medium-entropy single-phase alloys designed via severe lattice distortion. Adv. Mater. 31, 1807142 (2019).

Wu, Z., Bei, H., Pharr, G. M. & George, E. P. Temperature dependence of the mechanical properties of equiatomic solid solution alloys with face-centered cubic crystal structures. Acta Mater. 81, 428–441 (2014).

Li, Z. et al. Effect of annealing temperature on microstructure and mechanical properties of a severe cold-rolled FeCoCrNiMn high-entropy alloy. Metall. Mater. Trans. A Phys. Metall. Mater. Sci. 50, 3223–3237 (2019).

Tian, J. et al. Effects of Al alloying on microstructure and mechanical properties of VCoNi medium entropy alloy. Mater. Sci. Eng. A 811, 141054 (2021).

Peng, S., Wei, Y. & Gao, H. Nanoscale precipitates as sustainable dislocation sources for enhanced ductility and high strength. Proc. Natl Acad. Sci. USA. 117, 5204–5209 (2020).

Giles, S. A., Sengupta, D., Broderick, S. R. & Rajan, K. Machine-learning-based intelligent framework for discovering refractory high-entropy alloys with improved high-temperature yield strength. npj Comput. Mater. 8, 1–11 (2022).

Sundman, B. TCFE2000: The Thermo-Calc Steels Database, upgraded by B.-J. Lee. KTH, Stockholm (1999).

Choi, W.-M. et al. A thermodynamic modelling of the stability of sigma phase in the Cr-Fe-Ni-V High-Entropy Alloy System. J. Phase Equilibria Diffus. 39, 694–701 (2018).

Choi, W.-M. et al. A thermodynamic description of the Co-Cr-Fe-Ni-V system for high-entropy alloy design. Calphad 66, 101624 (2019).

Do, H.-S., Choi, W.-M. & Lee, B.-J. A thermodynamic description for the Co–Cr–Fe–Mn–Ni system. J. Mater. Sci. 57, 1373–1389 (2022).

Do, H.-S., Moon, J., Kim, H. S. & Lee, B.-J. A thermodynamic description of the Al–Cu–Fe–Mn system for an immiscible medium-entropy alloy design. Calphad 71, 101995 (2020).

Andersson, J. O., Helander, T., Höglund, L., Shi, P. & Sundman, B. Thermo-Calc & DICTRA, computational tools for materials science. Calphad 26, 273–312 (2002).

Sundman, B., Kattner, U. R., Palumbo, M. & Fries, S. G. OpenCalphad - a free thermodynamic software. Integr. Mater. Manuf. Innov. 4, 1–15 (2015).

Frantz, E. L. nonferrous alloys and special-purpose materials. ASM International (1990).

Hölzl, J. & Schulte, F. K. Work function of metals. Solid Surf. Phys. 85, 1–150 (2006).

Samsonov, G. V. Handbook of the Physicochemical Properties of the Elements. (Springer Science & Business Media, 1968).

Nayar, A. The metals databook. (McGraw-Hill Companies, 1997).

Cottrell, T. L. The strengths of chemical bonds. (Butterworths Scientific Publications, 1958).

Lide, D. R. CRC handbook of chemistry and physics. 85, (CRC press, 2004).

Ross, R. B. Metallic materials specification handbook. (Springer Science & Business Media, 1992).

Schwerdtfeger, P. & Nagle, J. K. Table of static dipole polarizabilities of the neutral elements in the periodic table. Mol. Phys. 117, 1200–1225 (2018).

Gulli, A. & Pal, S. Deep learning with Keras. (Packt Publishing Ltd, 2017).

Chen, T. & Guestrin, C. XGBoost: A Scalable Tree Boosting System. in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794. https://doi.org/10.1145/2939672. (ACM, 2016).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Kingma, D. P. & Ba, J. L. Adam: A Method for Stochastic Optimization. in 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings. https://doi.org/10.48550/arxiv.1412.6980. (International Conference on Learning Representations, ICLR, 2014).

Kim, J.-S., Seol, D. & Lee, B.-J. Critical assessment of Pt surface energy – An atomistic study. Surf. Sci. 670, 8–12 (2018).

Choi, W.-M., Kim, Y., Seol, D. & Lee, B.-J. Modified embedded-atom method interatomic potentials for the Co-Cr, Co-Fe, Co-Mn, Cr-Mn and Mn-Ni binary systems. Comput. Mater. Sci. 130, 121–129 (2017).

Lee, B.-J., Baskes, M. I., Kim, H. & Koo Cho, Y. Second nearest-neighbor modified embedded atom method potentials for bcc transition metals. Phys. Rev. B 64, 184102 (2001).

Lee, B.-J., Shim, J.-H. & Baskes, M. I. Semiempirical atomic potentials for the fcc metals Cu, Ag, Au, Ni, Pd, Pt, Al, and Pb based on first and second nearest-neighbor modified embedded atom method. Phys. Rev. B - Condens. Matter Mater. Phys. 68, 144112 (2003).

Kim, J.-S. et al. Second nearest-neighbor modified embedded-atom method interatomic potentials for the Pt-M (M = Al, Co, Cu, Mo, Ni, Ti, V) binary systems. Calphad Comput. Coupling Phase Diagr. Thermochem. 59, 131–141 (2017).

Kim, Y.-K., Jung, W.-S. & Lee, B.-J. Modified embedded-atom method interatomic potentials for the Ni–Co binary and the Ni–Al–Co ternary systems. Model. Simul. Mater. Sci. Eng. 23, 055004 (2015).

Dong, W.-P., Kim, H.-K., Ko, W.-S., Lee, B.-M. & Lee, B.-J. Atomistic modeling of pure Co and Co-Al system. Calphad Comput. Coupling Phase Diagr. Thermochem. 38, 7–16 (2012).

Oh, S.-H., Kim, J.-S., Park, C. S. & Lee, B.-J. Second nearest-neighbor modified embedded-atom method interatomic potentials for the Mo-M (M = Al, Co, Cr, Fe, Ni, Ti) binary alloy systems. Comput. Mater. Sci. 194, 110473 (2021).

Oh, S.-H., Seol, D. & Lee, B.-J. Second nearest-neighbor modified embedded-atom method interatomic potentials for the Co-M (M = Ti, V) binary systems. Calphad Comput. Coupling Phase Diagr. Thermochem. 70, 101791 (2020).

Lee, B.-J., Shim, J.-H. & Park, H. M. A semi-empirical atomic potential for the Fe-Cr binary system. Calphad 25, 527–534 (2001).

Wu, C., Lee, B.-J. & Su, X. Modified embedded-atom interatomic potential for Fe-Ni, Cr-Ni and Fe-Cr-Ni systems. Calphad 57, 98–106 (2017).

Choi, W. M. et al. Computational design of V-CoCrFeMnNi high-entropy alloys: An atomistic simulation study. Calphad 74, 102317 (2021).

Lee, E. & Lee, B.-J. Modified embedded-atom method interatomic potential for the Fe–Al system. J. Phys. Condens. Matter 22, 175702 (2010).

Shim, J.-H. et al. Prediction of hydrogen permeability in V-Al and V-Ni alloys. J. Memb. Sci. 430, 234–241 (2013).

Wang, J. & Lee, B.-J. Second-nearest-neighbor modified embedded-atom method interatomic potential for V-M (M = Cu, Mo, Ti) binary systems. Comput. Mater. Sci. 188, 110177 (2021).

Plimpton, S. Fast parallel algorithms for short-range molecular dynamics. J. Comput. Phys. 117, 1–19 (1995).

Acknowledgements

This work has been financially supported by the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT, Korea (2016M3D1A1023383 and NRF-2022R1A5A1030054). Also, special thanks to Dr. Hyun-Seok Do (Department of Materials Science and Engineering, POSTECH, Republic of Korea) for the upgrade of the 2NN MEAM interatomic potentials.

Author information

Contributions

J.W.: conceptualization, methodology, software, validation, formal analysis, investigation, data curation, writing – original draft, visualization. H.K.: writing – review & editing, validation, formal analysis, investigation, resources. H.S.K.: supervision, project administration, funding acquisition. B.-J.L.: conceptualization, methodology, writing – review & editing, supervision, project administration, funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, J., Kwon, H., Kim, H.S. et al. A neural network model for high entropy alloy design. npj Comput Mater 9, 60 (2023). https://doi.org/10.1038/s41524-023-01010-x

DOI: https://doi.org/10.1038/s41524-023-01010-x
