Abstract

First-principles approaches have revolutionized our ability to use computers to predict, explore, and design materials. A major advantage commonly associated with these approaches is that they are fully parameter-free. However, numerically solving the underlying equations requires choosing a set of convergence parameters. With the advent of high-throughput calculations, it becomes exceedingly important to achieve a truly parameter-free approach. Utilizing uncertainty quantification (UQ) and linear decomposition, we derive a numerically highly efficient representation of the statistical and systematic error in the multidimensional space of the convergence parameters for plane wave density functional theory (DFT) calculations. Based on this formalism, we implement a fully automated approach that requires as input the target precision rather than convergence parameters. The performance and robustness of the approach are shown by applying it to a large set of elements crystallizing in a cubic fcc lattice.

Introduction

Density functional theory (DFT) has evolved into the workhorse method to routinely compute the properties of essentially all known materials. While DFT is parameter-free in the sense that no materials-specific input parameters are needed, it is not free of numerical convergence parameters. Carefully selecting these parameters is critical: setting them too low may sacrifice predictive power, while setting them too high may waste valuable computational resources. Since DFT-based calculations consume huge amounts of supercomputer resources worldwide1, being able to reduce the cost of such calculations without sacrificing their precision would open large opportunities for saving computational resources.

Until recently, the selection of these parameters was based on a few guidelines and manual benchmarks. However, exciting new applications such as the fitting of highly precise machine learning potentials, which routinely achieve mean errors on the order of a few meV/atom, require DFT input data sets with a precision that is well below the xc-potential error.2 Also, reliably predicting finite-temperature materials properties requires a precision in DFT energies on the order of 1 meV/atom.3 The precision needed for such applications goes well beyond previous criteria. To guarantee this level of precision, a detailed uncertainty quantification as well as an automated approach to determine the optimum set of convergence parameters that guarantee a predefined target error are needed.

DFT input parameters can be roughly classified into two categories. The first contains parameters that cannot be systematically controlled and improved. Prominent examples are the exchange-correlation (xc) functional and, to some extent, the choice of the pseudopotential. The second category contains controllable parameters, which can be systematically improved. The most important parameters in this category are the number of basis functions and the k-point sampling. These reflect the need to approximate an infinite basis set, such as plane waves (PW), or a continuous set of k-points in the Brillouin zone by discretized finite sets that can be represented on a computer. The controllable parameters behind these approximations can often be described by a single scalar such as the energy cutoff ϵ or the number of symmetry-inequivalent k-points κtot.

The total energy surface \({E}_{tot}(\{\overrightarrow{{R}_{I}},{Z}_{I}\};{\kappa }_{{\rm{tot}}},\epsilon ,\ldots \,)\) is thus not only a function of atom coordinates \(\overrightarrow{{R}_{I}}\) and species ZI, but also of the convergence parameters κtot, ϵ, etc. Since the total energy surface is the key quantity to derive materials properties, any derived quantity f[Etot] thus depends on the choice of the convergence parameters as well.

Numerically accurate, i.e. converged, results would be obtained when the convergence parameters approach infinity. In practice, this strategy is not feasible since the required computational resources also scale with the convergence parameters. Therefore, from the beginning of DFT calculations, a judicious choice of the convergence parameters was mandatory4,5,6,7. To optimally use computational resources, the convergence parameters have to be chosen such that (i) the actual error Δf (ϵ, κ, … ) is smaller than the target error Δftarget of the quantity of interest and (ii) the required computational resources are minimized. Since the required computational resources scale monotonically with the convergence parameters, the latter condition translates into keeping the convergence parameters as small as possible without violating (i).
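Conditions (i) and (ii) amount to a constrained minimization over the grid of convergence parameters. The following Python sketch illustrates the idea under stated assumptions: the error surface is already known on a grid, and the error and cost models in the toy data are invented for illustration only. The function name `cheapest_converged` is hypothetical and not part of the original work.

```python
import numpy as np

def cheapest_converged(error, cost, target):
    """Return the grid index (i, j) with minimal cost among all points
    whose absolute error does not exceed the target, or None if no
    grid point satisfies the target."""
    error = np.asarray(error, dtype=float)
    cost = np.asarray(cost, dtype=float)
    feasible = np.abs(error) <= target           # condition (i)
    if not feasible.any():
        return None
    masked = np.where(feasible, cost, np.inf)    # condition (ii)
    flat = np.argmin(masked)
    return tuple(int(n) for n in np.unravel_index(flat, masked.shape))

# Toy data (assumed, not from the paper): the error decays with both
# parameters, the cost grows with both.
eps = np.array([200.0, 400.0, 600.0, 800.0])     # energy cutoffs (eV)
kap = np.array([3, 7, 11, 15])                   # k-points per direction
error = 100.0 / eps[:, None] + 3.0 / kap[None, :]
cost = eps[:, None] ** 1.5 * kap[None, :] ** 3
best = cheapest_converged(error, cost, target=0.5)
```

In this toy example the cheapest admissible point is neither the largest cutoff nor the densest k-point mesh, which is the situation the two conditions are meant to capture.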

Extensive convergence checks have been mainly reported for simple bulk systems by computing the total potential energy surface (PES) as a function of the volume per unit cell8,9,10,11. Knowing the energy-volume PES allows for a direct computation of important materials properties such as the equilibrium lattice structure, the cohesive energy, and the mechanical response, e.g. the bulk modulus12,13,14. For more complex quantities derived from the PES, such as phonon spectra, surface energies, or free energies, routine benchmarks that check by how much a target quantity changes when a specific convergence parameter is increased are common. However, systematic convergence checks are rarely reported; examples are refs. 3,15 for phonons and ref. 16 for finite-temperature free energies.

To address the challenge of identifying the computationally most efficient convergence parameters (ϵ, κ) to achieve a given convergence goal, we derive the asymptotic behavior of the systematic and statistical errors. For this purpose we consider the energy-volume dependence and derived quantities such as the equilibrium lattice constant and the bulk modulus of cubic materials. The proposed approach can be straightforwardly extended to additional convergence parameters as well as to other material properties, with the energy-volume dependence being a widely-used example to test the convergence of more complex materials properties or the performance of DFT codes (see e.g.17). We start by computing an extensive set of DFT data E(V, ϵ, κ) spanning the full range of physical (i.e. volume V) as well as convergence parameters (i.e., ϵ, κ).

By carefully analysing these we show in a first step that the three-dimensional array can be decomposed into four two-dimensional arrays (see Fig. 3) with full control on systematic and statistical errors. In the second step, we develop an efficient decomposition approach also for derived quantities such as the equilibrium bulk modulus Beq(ϵ, κ). The availability of these extensive and easy-to-compute (via linear decomposition into lower dimensional arrays or vectors) data sets reveals surprising and hitherto not reported correlations between these critical DFT parameters. The derived formalism also allows us to construct and implement a computationally efficient algorithm that in a fully automated fashion predicts optimum convergence parameters that minimize computational effort while simultaneously guaranteeing convergence below a user-given target error.

Results and Discussion

We developed an automated tool to compute error surfaces and optimized convergence parameters for a wide range of chemical elements and pseudopotentials, reducing the computational costs by more than an order of magnitude. Figure 1 shows the convergence behavior for 9 elements using the bulk modulus as target quantity. In addition to these fcc metals, we have also tested the approach for other crystallographic structures (bcc W) as well as for semiconductors (Si). The results for the latter two are given in Supplementary Figs. 1 and 2. For each calculation the PBE-GGA pseudopotential recommended by the VASP manual18 is chosen (VASP PAW 5.4). These are the same pseudopotentials as used in the Delta project17. To visualize the convergence, contour lines at target errors of 0.1 to 5 GPa are shown on the error surface. Some elements, such as Ca, allow a convergence down to 0.1 GPa even for rather modest convergence parameters. Others, such as Cu, barely achieve this lower limit at the maximum set of convergence parameters studied here. In general, systematic convergence trends that would allow one to extract simple rules for finding optimum convergence parameters are lacking. This emphasizes the importance of providing automated tools for this task and the challenge of reducing the computational cost for sampling the full convergence parameter space.

The red lines mark iso-contours of constant error (see legend in a). The blue squares mark the parameter set recommended by VASP. The orange and magenta filled squares mark values used in the Materials Project19,20,21 and the delta project17, respectively. Some elements like Ca (a), Al (b), Pb (d), Ir (g), Pt (h) and Au (i) achieve a precision of up to 0.1 GPa. Others like Cu (c), Pd (e) and Ag (f) are limited to 0.5 GPa in the considered parameter space. Above each contour plot the element and its determined (ϵmax, κmax) bulk modulus are given. The grey line denotes the boundary where the systematic and statistical error are equal.

For our minimum parameter set the statistical error, caused by changing the number of plane waves (basis set) when varying the cell volume7, dominates for all elements except for Al, Ca and Pb as indicated by the grey line in Fig. 1, which marks the boundary where the statistical error and systematic error are equal. Going to errors below 1 GPa the systematic error, caused by the finite basis set, becomes the dominating contribution except for elements with a very high bulk modulus such as Ir and Pt. In the region dominated by the systematic error the cutoff and k-point related error contribution are additive (Eq. (7)). As a consequence, the contour lines in Fig. 1 are either parallel to the κ-axis (i.e. the dominating error is due to the energy cutoff ϵ) or to the ϵ-axis (with κ causing the leading error). The difference in convergence of the bulk modulus for elements like Al and Au which have similar equilibrium volumes highlights the need to determine element-specific convergence parameters. Simple scaling relations, e.g., using the volume do not capture the complexity of the underlying electronic structure.

To ‘benchmark’ the choices made by our automated tool against the choices made by human experts, we include the parameters used in two large and well-established high-throughput studies: the Materials Project19,20,21 (orange squares) and the delta project17 (magenta squares). The delta project, which aims at high precision to allow a comparison between different DFT codes, systematically shows an error between 1 and 5 GPa, with a clear tendency towards the 1 GPa limit. For the Materials Project, where the focus is on computational efficiency rather than the highest precision, the error is close to the 5 GPa limit; for several elements (e.g. Ir, Pt, Au) the error becomes 10 GPa or larger.

By analyzing the dependence of the total energy not only as a function of a single convergence parameter, as commonly done, but as a function of a physical parameter (in the present study the volume) as well as multiple convergence parameters (energy cutoff and k-point sampling) simultaneously, we identified powerful relations to compute both the statistical and systematic error of the total energy and derived quantities. The identified relations summarized in Eqs. (7) and (8) provide an accurate and computationally efficient approach (Fig. 3), thus allowing one to describe error surfaces of multiple convergence parameters for important materials quantities such as the bulk modulus. The ability to construct such surfaces for both the systematic and statistical error with a modest number of simple DFT calculations opened the way to construct error phase diagrams that tell, for any given parameter set, which of the two error types dominates. They also provide direct insight into how multiple convergence parameters together affect the errors and allowed us to construct contour lines of constant error. Having this detailed insight is helpful for DFT practitioners to choose and validate accurate yet computationally efficient convergence parameters. It also allowed us to develop and implement a robust and computationally efficient algorithm. The resulting fully automated tool, implemented in pyiron22, predicts an optimum set of convergence parameters with minimum user input, i.e. choice of pseudopotential, desired target error, and quantity of interest (e.g. bulk modulus). We expect that this approach, where explicit convergence parameters are replaced by a user-selected target error, will be particularly important for applications where a systematically high accuracy is crucial for a large number of DFT calculations, e.g. for high-throughput studies and for constructing data sets to be used in machine learning23.

Analysis of the DFT convergence parameters

As a first step towards an automated uncertainty quantification of the total energy surface and derived quantities, we start with an analysis of DFT convergence. As a model system we consider the energy-volume curve of bulk fcc-Al constructed by changing the lattice constant alat of the cubic cell at T = 0 K. Due to crystal symmetry all atomic forces exactly vanish, so that the only degree of freedom is the lattice constant or equivalently the volume of the primitive cell (\(V={a}_{lat}^{3}/4\)). The actual calculations are performed using VASP24,25,26. The results and conclusions are not limited to this specific code but can be directly transferred to any plane wave pseudopotential DFT code.

In this study, we focus on the two most relevant convergence parameters of a plane wave (PW) DFT code: the PW energy cutoff ϵ and the k-point sampling κ. While these two convergence parameters are the most prominent ones, an actual plane wave pseudopotential DFT code has many more, describing e.g. the size of the various numerical meshes used to perform the Fast Fourier Transformations (FFT), to represent the electronic density, to compute exchange and correlation, etc. All these parameters scale in a DFT code with the energy cutoff, i.e., converging the energy cutoff will automatically also converge them. We will therefore not consider these parameters explicitly in this study, but rather fix the Fourier mesh for the plane waves at 2 × Gcut and the mesh for the charges at double the number of grid points in each direction compared to the Fourier mesh. We note, however, that optimizing them separately would open additional savings in computational resources. Furthermore, we set the electronic convergence criterion to \(10^{-9}\) eV to guarantee that the resulting fluctuations are always lower than the convergence error.

A key step in any DFT code with periodic boundary conditions such as a plane wave approach is the integration over the occupied states in the Brillouin zone. Here, the k-point sampling defines the size of the integration mesh. The convergence of this integration is largely affected by the presence of a steep jump in occupations at the Fermi surface. To improve convergence, two main schemes have been developed: Smearing methods that replace the sharp step at the Fermi surface by a smooth function and tetrahedron methods. The first type requires a convergence parameter - commonly called smearing parameter. Large values for this parameter improve the k-point convergence rate but can show substantial deviations from the converged result at zero smearing, i.e., for the sharp Fermi surface. In contrast, the tetrahedron method requires no additional convergence parameter. Figure 2 illustrates the convergence behavior of the two schemes. To avoid additional complexity due to an additional convergence parameter we use here the tetrahedron method with Blöchl corrections27. For the generation of the k-point mesh various approaches have been developed. A widely used approach is the Monkhorst-Pack mesh, which requires as input the grid-dimensions (κ × κ × κ) with κ an integer value and no offset from the original Brillouin zone. Since this scheme is available in practically all DFT codes we will use it in the present study. We note however, that alternative k-point generation schemes28 could be tested and employed to enhance k-point convergence.
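The construction of an unshifted Monkhorst-Pack mesh can be sketched in a few lines of Python. This is an illustrative sketch, not the mesh generator of any particular DFT code: it returns all κ³ points in fractional coordinates without symmetry reduction, and the helper names are hypothetical.

```python
import numpy as np

def monkhorst_pack(kappa):
    """Fractional coordinates of an unshifted kappa x kappa x kappa
    Monkhorst-Pack mesh, u_r = (2r - kappa - 1) / (2 kappa), r = 1..kappa."""
    r = np.arange(1, kappa + 1)
    u = (2 * r - kappa - 1) / (2 * kappa)
    grid = np.stack(np.meshgrid(u, u, u, indexing="ij"), axis=-1)
    return grid.reshape(-1, 3)

def contains_gamma(kpoints, tol=1e-12):
    """True if the mesh contains the Gamma point (0, 0, 0)."""
    return bool(np.any(np.all(np.abs(kpoints) < tol, axis=1)))

odd_mesh = monkhorst_pack(3)    # includes the Gamma point
even_mesh = monkhorst_pack(4)   # does not include the Gamma point
```

For odd κ the unshifted mesh contains the Gamma point, whereas for even κ it does not, so restricting a scan to odd values keeps all meshes in the same family.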

The Methfessel-Paxton method colored based on the smearing parameter sigma σ and the tetrahedron method with Blöchl corrections27 as dashed line in black (TB).

To discuss and derive our approach we first compute and analyze the total energy surface E(V, ϵ, κ) of a primitive cubic cell as a function of volume V, energy cutoff ϵ, and k-point sampling. The k-point sampling is given by κ, the number of k-points in one dimension. If needed, the number of symmetry-inequivalent k-points κtot can be straightforwardly translated into a k-point density, which is the more convenient description when studying a wide variety of differently sized and shaped supercells. We map the energy surface on an equidistant set of nV volumes Vi ranging over an interval of ±10% around the equilibrium volume Veq (see Section “Analysis and fit of the energy-volume curve”), nϵ energy cutoffs ϵ and nκ k-point samplings κ (see Fig. 3). The generated shifted k-point mesh starts at a minimal k-point sampling of 3 × 3 × 3 (κmin = 3) and goes up to a maximum of 91 × 91 × 91 (κmax = 91), with an energy cutoff from ϵmin = 200 eV up to ϵmax = 1200 eV. The k-point sampling is increased in equidistant steps of Δκ = 2 to always include the Gamma point. The energy cutoff is increased in equidistant steps of Δϵ = 20 eV. For the following analysis we focus on fcc bulk aluminum. Extensive tests for other elements and pseudopotentials show qualitatively the same behavior, as discussed in the beginning of Section “Results and Discussion for fcc elements” and in Supplementary Information 2 for non-fcc elements. In total nV × nϵ × nκ = 21 × 51 × 45 = 48195 DFT calculations have been performed for a single pseudopotential. We note already here that such a large number of DFT calculations is not required for the final algorithm. The complete mapping of cutoff and k-point sampling is used here only to derive and benchmark the asymptotic behavior of E(V, ϵ, κ) and \({B}_{x}({\epsilon }_{i},{\kappa }_{j}){| }_{{V}_{eq}}\) when going towards large (i.e. extremely well converged) parameters.

To analyze the energy surface E(V, ϵ, κ) we define the convergence error with respect to the maximum energy cutoff ϵmax and k-point sampling κmax, respectively:
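Written out, the two definitions referred to later as Eqs. (1) and (2) can be expressed as follows. This is a reconstruction from the surrounding text, assuming that each error is the difference to the best-converged reference at otherwise identical parameters; the placement of ϵ and κ as superscripts is likewise an assumption:

```latex
\begin{align}
\Delta E^{\epsilon}(V,\epsilon,\kappa) &= E(V,\epsilon,\kappa) - E(V,\epsilon_{\max},\kappa), \tag{1}\\
\Delta E^{\kappa}(V,\epsilon,\kappa) &= E(V,\epsilon,\kappa) - E(V,\epsilon,\kappa_{\max}). \tag{2}
\end{align}
```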

This formal decomposition is motivated by the fact that the two parameters affect the convergence of two very different physical phenomena. Increasing the energy cutoff primarily improves the description near the nuclei, whereas increased k-point sampling improves the description of bonding, Fermi surface etc. In Section “Physical origin” we analyse in more detail the individual contributions to the total energy to explain the physical origin. Before that, we first analyze the volume dependence of these quantities, since this dependence directly impacts the convergence of equilibrium quantities such as bulk modulus, lattice constant etc. The energy-volume dependence of the energy cutoff convergence ΔEϵ is shown in Fig. 4b for a fixed κ. As can be seen, using a non-converged energy cutoff ϵmin = 260 eV gives rise to a convergence error in the energy that strongly depends on the volume. In the shown example the error is largest for small volumes and monotonically decreases with increasing volume. As a consequence, the equilibrium volume will be shifted to a smaller value compared to the fully converged one.

a Energy-volume curves for a set of minimum and maximum convergence parameters ϵmin = 260 eV to ϵmax = 1200 eV and κmin = 7 to κmax = 91. b, c show the ϵ and κ convergence ΔEϵ(V, ϵ, κ) and ΔEκ(V, ϵ, κ) as defined in Eqs. (1) and (2) for a fixed k-point sampling κ and cutoff ϵ, respectively. d Residual (statistical) error according to Eq. (4).

Figure 4b also reveals a remarkable and highly useful behavior of the energy cutoff convergence ΔEϵ: It is in first order independent of the k-point sampling. Taking the difference

between any two k-point samplings κ1 and κ2 for a fixed energy cutoff ϵ results in a volume dependence that resembles random noise. This is shown as an example in Fig. 4d, where ΔΔE is computed with κ1 and κ2 set to the minimum and maximum value, respectively: The average \({\langle \Delta \Delta E(V) \rangle }_{V}\) is approximately zero. We validated that the distribution is Gaussian-like and that any smooth volume dependence is absent. Due to these characteristics we can therefore regard the variance of this contribution

as a statistical error. It originates from discretization errors arising from discontinuous (discrete) jumps whenever a continuous change in κ or ϵ results in a discontinuous change in the integer number of k-vectors or plane waves (see e.g. ref. 7). The physical origin of this statistical error is explained in Section “Physical origin”.
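The double difference and its spread over the volume can be evaluated directly from a full array E(V, ϵ, κ). The Python sketch below is illustrative only: the function name is hypothetical, the array layout (volume, cutoff, k-points, with the last index along each convergence axis as the best-converged reference) is an assumption, and the standard deviation over volume is used as one plausible reading of the statistical error; the exact normalization used in the original Eq. (4) is not reproduced here.

```python
import numpy as np

def statistical_error(E, i_eps, k1, k2):
    """Mean and standard deviation over volume of the double difference
    DeltaDeltaE(V) = DeltaE_eps(V, eps, kappa_1) - DeltaE_eps(V, eps, kappa_2).

    E has shape (n_V, n_eps, n_kappa); index -1 along the cutoff axis
    is taken as the best-converged reference eps_max.
    """
    dE_eps = E - E[:, -1:, :]                    # cutoff convergence error
    ddE = dE_eps[:, i_eps, k1] - dE_eps[:, i_eps, k2]
    return float(ddE.mean()), float(ddE.std())

# Synthetic check (made-up model): smooth, additive convergence errors
# cancel in the double difference; only the noise survives.
rng = np.random.default_rng(0)
V = np.linspace(14.8, 18.2, 21)[:, None, None]
eps = np.linspace(200.0, 1200.0, 6)[None, :, None]
kap = np.arange(3, 13, 2)[None, None, :]
smooth = 0.02 * (V - 16.5) ** 2 + V / eps + 0.1 * V / kap**2
noise = 1e-3 * rng.standard_normal(smooth.shape)
mean_clean, sigma_clean = statistical_error(smooth, 0, 0, -1)
mean_noisy, sigma_noisy = statistical_error(smooth + noise, 0, 0, -1)
```

For the noise-free synthetic surface the double difference vanishes up to rounding, which mirrors the observation that the cutoff convergence is, to first order, independent of the k-point sampling.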

Figure 4c shows an analogous behavior for the k-point convergence ΔEκ(V, ϵ, κ): To first order it is independent of ϵ. The difference ΔΔE is shown in Fig. 4d and is, by construction (see Supplementary Information 1), identical to the one obtained from the cutoff convergence ΔEϵ(V, ϵ, κ). We can therefore conclude that the energy cutoff and k-point convergence can be considered separately, i.e.,

and

The above equations can be summarized in the following expression of the total energy surface:

The above equation decomposes the original three-dimensional array, which would require performing nV × nϵ × nκ DFT calculations, into four two-dimensional arrays. The first two require only nV × (nϵ + nκ) DFT calculations. The last contribution, ΔΔE(ϵ, κ), requires for each pair of ϵ, κ also the computation at all volumes, i.e., nV × nϵ × nκ DFT calculations. In the following section we will analyze the statistical error and derive a computationally efficient approach to compute this error.
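The systematic part of this decomposition can be illustrated with a short Python sketch. It assumes an additive ansatz in which the cutoff scan is taken at κmax and the k-point scan at ϵmax, and it omits the statistical term ΔΔE; the exact form of Eq. (7) is not reproduced here, and the function name is hypothetical.

```python
import numpy as np

def reconstruct_additive(E_eps, E_kap):
    """Approximate E(V, eps, kappa) from two 2D scans.

    E_eps[v, i] = E(V_v, eps_i, kappa_max)   (cutoff scan)
    E_kap[v, k] = E(V_v, eps_max, kappa_k)   (k-point scan)
    Both scans share E(V, eps_max, kappa_max) as their last column.
    """
    E_ref = E_eps[:, -1]                       # E(V, eps_max, kappa_max)
    dE_eps = E_eps - E_ref[:, None]            # DeltaE_eps(V, eps)
    dE_kap = E_kap - E_ref[:, None]            # DeltaE_kappa(V, kappa)
    return E_ref[:, None, None] + dE_eps[:, :, None] + dE_kap[:, None, :]

n_V, n_eps, n_kap = 21, 51, 45
full_scan = n_V * n_eps * n_kap                # 48195 calculations
two_scans = n_V * (n_eps + n_kap)              # 2016 calculations

# Synthetic, exactly additive surface (made-up data) as a consistency check.
rng = np.random.default_rng(1)
a = rng.normal(size=(n_V, n_eps)); a[:, -1] = 0.0
b = rng.normal(size=(n_V, n_kap)); b[:, -1] = 0.0
E_full = rng.normal(size=(n_V, 1, 1)) + a[:, :, None] + b[:, None, :]
E_approx = reconstruct_additive(E_full[:, :, -1], E_full[:, -1, :])
```

For a surface that is exactly additive in the two convergence errors, the two scans reproduce the full array; for real DFT data the residual is the statistical contribution discussed next.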

Analysis of the statistical error

Having the full dataset of DFT energies as a function of V, ϵ and κ allows us to directly compute the statistical error as defined in Eq. (4). The results are summarized in Fig. 5a. In the double-logarithmic plot the k-point convergence shows an almost linear dependence, with a vertical shift when changing the energy cutoff (color coded). The fact that the slope remains unchanged when changing the energy cutoff indicates that the k-point convergence of the statistical error is independent of the energy cutoff except for a proportionality factor. To verify this independence we plot the normalized statistical error σE(ϵ, κ)/σE(ϵ, κmin) in Fig. 5b. With this insight we next consider the normalized statistical error along the energy cutoff, σE(ϵ, κ)/σE(ϵmin, κ). The fact that all curves coincide clearly shows that, except for a proportionality factor, the cutoff dependence is identical.

a Convergence over k-point mesh with the different colors denoting the different energy cutoffs. b Normalized k-point convergence (σE(ϵ, κ)/σE(ϵ, κmin)). The red solid line illustrates the mean. The inset in b relates the statistical error in energy σE to an error in the bulk modulus \({\sigma }^{{B}_{0}}\) using bootstrapping (see Section “Uncertainty quantification of derived physical quantities”).

Using the above identified empirical relations we can approximate the statistical error by:

The computation of σE(ϵ, κmin) and σE(ϵmin, κ) requires nV × (nϵ + nκ) additional DFT calculations. To obtain a sufficiently large magnitude of the statistical error these calculations are performed at the minimum of the convergence parameter set where the statistical noise is largest. As a consequence these extra calculations are computationally inexpensive. To compute the statistical error a second reference next to ϵmin or κmin is needed (see Eq. (3)). We find that ϵmax and κmax provide an accurate estimate. These values do not require any additional DFT calculations since they are identical to the ones used to construct the systematic convergence errors ΔEϵ and ΔEκ in Eq. (7). The above formulation allows a highly efficient computation of the statistical error by reducing the computational effort from (nV × nϵ × nκ) DFT calculations to 2nV(nϵ + nκ).
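If the cutoff and k-point dependence of the statistical error each hold up to a proportionality factor, the full error surface is a rank-one product of the two one-dimensional scans. The Python sketch below shows this separable estimate; the product form is inferred from the normalization argument in the text and is an assumption about the precise form of Eq. (8), and the function name is hypothetical.

```python
import numpy as np

def separable_sigma(sigma_eps, sigma_kap):
    """Rank-one estimate of sigma_E(eps, kappa).

    sigma_eps[i] = sigma_E(eps_i, kappa_min)
    sigma_kap[k] = sigma_E(eps_min, kappa_k)
    Both scans must agree at the shared corner (eps_min, kappa_min).
    """
    sigma_eps = np.asarray(sigma_eps, dtype=float)
    sigma_kap = np.asarray(sigma_kap, dtype=float)
    corner = sigma_eps[0]
    if not np.isclose(corner, sigma_kap[0]):
        raise ValueError("scans disagree at (eps_min, kappa_min)")
    return np.outer(sigma_eps, sigma_kap) / corner

# Synthetic, exactly separable surface (made-up numbers).
f = np.array([4.0, 2.0, 1.0])        # cutoff dependence
g = np.array([3.0, 1.0, 0.5])        # k-point dependence
sigma_full = np.outer(f, g)
sigma_est = separable_sigma(sigma_full[:, 0], sigma_full[0, :])

n_V, n_eps, n_kap = 21, 51, 45
reduced_cost = 2 * n_V * (n_eps + n_kap)     # versus n_V * n_eps * n_kap
```

With the grid sizes quoted earlier, the reduced scheme needs 4032 instead of 48195 calculations.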

Analysis and fit of the energy-volume curve

In contrast to the conventional approach of validating the level of convergence for a given point E(V, ϵ, κ) of the total energy surface by varying ϵ and κ independently (see e.g. refs. 3,9,18), Eq. (7) together with Eq. (8) provides a powerful and computationally highly efficient approach to interpolate energy-volume curves E(V, ϵ, κ) for any set of convergence parameters ϵmin < ϵ < ϵmax and κmin < κ < κmax. They also form the basis for the automated approach, derived in the following, to determine optimum convergence parameters that achieve a given level of precision at minimal computational cost. The underlying relations and assumptions have been carefully validated by computing and analysing an extensive set of pseudopotentials and chemical elements, as presented below.

Figure 6 shows the computed energy-volume curves E(V, ϵ, κ) for two sets of convergence parameters: One with parameters as recommended by the VASP-manual18 (i.e. ϵmin = 240 eV and κmin = 11), the other one for an extremely well converged parameter set (i.e. ϵmax = 1000 eV and κmax = 101). Looking at the results over a large volume range (± 5%; Fig. 6a) the two curves appear to be smooth and well behaved. This may give the impression that the main impact of the convergence parameters is on the absolute energy scale resulting only in a vertical shift. However, going to a 5 times smaller volume range (Fig. 6b) the surface with the recommended convergence parameters shows discontinuities that divide the curve. While the segments between two neighboring discontinuities are smooth and analytically well-behaved their boundaries to the neighboring segments are discontinuous in absolute values and derivatives. As a consequence, even a well-defined energy minimum with zero first derivative, which is the definition of the T = 0 K ground state, does not exist.

The first one (marked by the blue and orange dots) has been computed using the recommended18 set of convergence parameters for energy cutoff ϵ = 240 eV and k-point sampling κ = 11. The second one (marked by the red and green dots) has been obtained using an extremely high (i.e. well converged) set of parameters (ϵ = 1000 eV and κ = 101). Three different volume ranges are shown: a vrange = ± 5% to compare the absolute energies, followed by b vrange = ± 1% to compare the energy changes in reference to the minimum energy and finally c vrange = ± 0.05% again the energy changes. The graphs are coloured based on the number of plane waves, with the color changing whenever the total number of plane waves changes.

The discontinuous behaviour is a well-known artifact of PW-pseudopotential total energy calculations7. The origin is that when changing the volume the number of basis functions (plane waves) changes. Since the number of plane waves is an integer, changing the volume continuously results in discontinuous changes in the number of PWs. Improving the convergence parameters reduces the magnitude of the discontinuity (see line marked by red and green dots in middle figure) but does not remove it (see Fig. 6c where the volume range has been reduced to ± 0.05%). Note also that in this interval the lower converged curve (straight line marked by blue dots) has no resemblance at all to the expected close to parabolic energy minimum.
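The integer jumps in the basis set size can be made explicit by counting reciprocal lattice vectors inside the cutoff sphere. The Python sketch below is a simplified illustration: it counts plane waves at the Gamma point only, whereas a production code counts |k + G| for every k-point, and the lattice constant of 4.04 Å for fcc Al is a rough value assumed for illustration.

```python
import numpy as np

HBAR2_OVER_2M = 3.80998  # hbar^2 / (2 m_e) in eV * Angstrom^2

def count_plane_waves_fcc(volume, e_cut):
    """Number of reciprocal lattice vectors G of an fcc primitive cell
    (volume in Angstrom^3) with hbar^2 |G|^2 / (2 m_e) <= e_cut (eV)."""
    a = (4.0 * volume) ** (1.0 / 3.0)                  # V = a^3 / 4
    cell = 0.5 * a * np.array([[0, 1, 1], [1, 0, 1], [1, 1, 0]], dtype=float)
    recip = 2.0 * np.pi * np.linalg.inv(cell).T        # rows are b_1, b_2, b_3
    g_max = np.sqrt(e_cut / HBAR2_OVER_2M)
    # |n_i| = |G . a_i| / (2 pi) <= g_max |a_i| / (2 pi) bounds the search.
    longest = np.linalg.norm(cell, axis=1).max()
    n_max = int(np.ceil(g_max * longest / (2.0 * np.pi)))
    n = np.arange(-n_max, n_max + 1)
    idx = np.stack(np.meshgrid(n, n, n, indexing="ij"), axis=-1).reshape(-1, 3)
    g = idx @ recip
    return int(np.count_nonzero(np.einsum("ij,ij->i", g, g) <= g_max**2))

v_eq = 4.04**3 / 4.0                                   # assumed rough value
volumes = np.linspace(0.9 * v_eq, 1.1 * v_eq, 201)
counts = [count_plane_waves_fcc(v, 240.0) for v in volumes]
```

The resulting count is a step function of the volume: it stays constant over finite volume intervals and jumps whenever a shell of reciprocal lattice vectors crosses the cutoff sphere.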

A common strategy to overcome the discontinuous behaviour is to fit the energy-volume points obtained from the DFT calculations to a smooth fitting function6. Alternatively, Francis and Payne7 described an analytical correction scheme to remove these discontinuities. We first describe and analyze fitting approaches. In Section “Physical origin” we analyze and compare the performance of the fitting approaches with the Francis and Payne correction7.

Two main categories for fitting the energy-volume curve exist. The first uses a physics-based fit function, i.e. an equation of state that describes the relation between the volume of a body and the pressure applied to it12,13,14. From this set we chose the Birch-Murnaghan equation of state, since it is the most popular choice for fitting such energy-volume curves. The second approach is to use polynomial fits.
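For reference, the commonly used third-order Birch-Murnaghan energy expression can be written as a short Python function. This is the standard textbook form with four parameters, given here as a sketch; whether the original work uses exactly this parametrization is an assumption, and the numerical values below are illustrative only.

```python
import numpy as np

def birch_murnaghan(V, E0, V0, B0, B0p):
    """Third-order Birch-Murnaghan energy E(V) with four parameters:
    E0 (minimum energy), V0 (equilibrium volume), B0 (bulk modulus in
    energy/volume units) and B0p (pressure derivative of B0)."""
    V = np.asarray(V, dtype=float)
    x = (V0 / V) ** (2.0 / 3.0)
    return E0 + 9.0 * V0 * B0 / 16.0 * (
        (x - 1.0) ** 3 * B0p + (x - 1.0) ** 2 * (6.0 - 4.0 * x)
    )

# Illustrative parameters (eV, Angstrom^3, eV/Angstrom^3), not fitted data.
E0, V0, B0, B0p = -3.7, 16.5, 0.48, 4.5
h = 1e-2
# Central finite difference for the curvature at V0: B = V0 * d2E/dV2.
curvature = (birch_murnaghan(V0 + h, E0, V0, B0, B0p)
             - 2.0 * birch_murnaghan(V0, E0, V0, B0, B0p)
             + birch_murnaghan(V0 - h, E0, V0, B0, B0p)) / h**2
B_numeric = V0 * curvature
```

The finite-difference check confirms that the parameter B0 is the bulk modulus at the minimum, which is why this functional form yields the equilibrium quantities directly from the fit parameters.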

In contrast to the discontinuous energy surface, having a smooth fit to the energy-volume data points allows one to obtain the minimum as well as higher order derivatives around it. Particularly important in this respect are the energy minimum (related to the cohesive energy), the volume at which the energy becomes minimum (equilibrium volume Veq at T = 0K), as well as the second and third derivative (related to the bulk modulus Beq and its derivative \({B}_{eq}^{{\prime} }\)). These quantities can be measured experimentally and thus allow a direct comparison with the theoretical predictions.

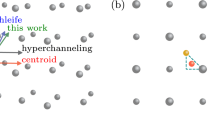

Fitting the data points using either analytical or polynomial functions introduces next to DFT related convergence parameters (e.g. ϵ and κ) additional parameters that need to be carefully chosen. For the energy-volume curve these are (i) the number of energy-volume pairs and (ii) the volume range. For a polynomial fit, in addition, also (iii) the maximum polynomial degree is a parameter that needs to be tested. Since these parameters are related to the fit and not to the DFT calculation we call them in the following hyper-parameters.

Similarly to the DFT convergence parameters, the fit-related hyper-parameters have to be chosen such that (i) the error related to them is smaller than the target error in the physical quantity of interest and (ii) the computational effort (number and computational expense of the necessary DFT calculations) is minimized. To construct a suitable set of hyper-parameters we consider the bulk modulus:
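The equilibrium bulk modulus follows from the curvature of the energy-volume curve at its minimum. In its standard thermodynamic form, stated here as an assumption about the expression the text refers to:

```latex
B_{eq} = V_{eq}\,\left.\frac{\partial^{2} E(V)}{\partial V^{2}}\right|_{V=V_{eq}}
```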

The reason for choosing this parameter is that quantities related to higher derivatives in the total energy surface are more sensitive to fitting/convergence errors. Thus, identifying a set of hyper-parameters for this quantity will guarantee that it works also for less sensitive quantities. Indeed, we checked and validated this hypothesis for quantities related to lower orders in the total energy surface such as equilibrium volume Veq or minimum energy Eeq.

We first study the dependence of the bulk modulus with respect to the volume range. As input we use the DFT data set E(V, ϵ, κ) constructed in Section “Analysis of the DFT convergence parameters”, i.e., 21 energy-volume data points equidistantly distributed over the considered volume range. For polynomial fits we also tested the impact of the polynomial degree. The accuracy of the fit increases until a maximum degree of d = 11, i.e., a degree which roughly corresponds to 1/2 of the number of data points. Going to higher degrees does not reduce the fitting error.
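A polynomial fit of this kind can be sketched with NumPy as follows. The function name, the selection of the minimum, and the synthetic test data are illustrative assumptions and not the implementation used in the original work; the default degree of 11 follows the value quoted above.

```python
import numpy as np
from numpy.polynomial import Polynomial

EV_PER_A3_TO_GPA = 160.21766  # 1 eV/Angstrom^3 expressed in GPa

def fit_bulk_modulus(volumes, energies, degree=11):
    """Fit E(V) with a polynomial and return (V_eq, E_eq, B_eq in GPa)."""
    volumes = np.asarray(volumes, dtype=float)
    poly = Polynomial.fit(volumes, energies, degree)
    d1, d2 = poly.deriv(1), poly.deriv(2)
    roots = d1.roots()
    roots = roots[np.abs(roots.imag) < 1e-9].real      # real stationary points
    inside = (roots >= volumes.min()) & (roots <= volumes.max())
    minima = [v for v in roots[inside] if d2(v) > 0.0]
    if not minima:
        raise ValueError("no energy minimum inside the fitted volume range")
    v_eq = min(minima, key=poly)                       # lowest-energy minimum
    return float(v_eq), float(poly(v_eq)), float(v_eq * d2(v_eq) * EV_PER_A3_TO_GPA)

# Synthetic cubic E(V) with a known bulk modulus of 77 GPa (made-up data).
V0, B0_gpa = 16.5, 77.0
k = (B0_gpa / EV_PER_A3_TO_GPA) / V0                   # curvature in eV/Angstrom^6
V = np.linspace(0.9 * V0, 1.1 * V0, 21)                # 21 points, +/- 10 %
E = -3.7 + 0.5 * k * (V - V0) ** 2 - 0.001 * (V - V0) ** 3
v_eq, e_eq, b_eq = fit_bulk_modulus(V, E, degree=3)
```

`Polynomial.fit` rescales the volumes to a unit window internally, which keeps high-degree fits numerically better conditioned than a fit in raw volume coordinates.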

The results are summarized in Fig. 7. Next to a polynomial fit we also show the results using a physics based analytical expression (Birch-Murnaghan equation of state13). As minimum limit for the target error we chose 0.1 GPa. We will later show that this target is much smaller than the DFT error related to the exchange-correlation functional, which is on the order of 10 GPa and the one related to typical DFT convergence parameters (≈ 1…5 GPa).

Comparison of the volume range dependence of the bulk modulus for a primitive aluminium supercell with the convergence parameters low (ϵ = 240 eV, κ = 11)/high (ϵ = 1040 eV and κ = 91) for the Birch Murnaghan (BM.) equation in blue/green in comparison to a polynomial fit (Poly.) in orange/red, each with N = 21 energy-volume pairs.

Figure 7 shows that the performance of the two fitting approaches depends on whether low or high convergence parameters are used. For low convergence parameters the analytical fit based on Birch-Murnaghan (blue) is rather insensitive to the exact choice of the volume interval: it remains almost unchanged for intervals between 2 and 10%. Only when going above 10% does the underlying analytical model with its four free parameters become too inflexible, giving rise to an increasing model error. For the commonly recommended interval of ±10% (ref. 18) the polynomial fit (orange) shows a similar performance but deteriorates both for smaller and larger volume ranges.

For very high convergence parameters, however, the polynomial approach (red) clearly outperforms the analytical one (green). The polynomial fit is highly robust and largely independent of the chosen volume interval over the full range between 0.1% and 30%. Also, the number of 21 energy-volume points is sufficient to achieve the targeted error of 0.1 GPa. In this high-convergence regime the analytical Birch-Murnaghan fit provides precise predictions only for small volume ranges up to 2%. The reason is that the analytical expression, which contains only four free fitting parameters, is no longer able to adjust to the actual shape of the DFT energy surface.

Based on this discussion we will use in the following a volume interval of ±10% and 21 sample points. With this set of hyper-parameters we verified that the errors arising from the polynomial fit are below the target of 0.1 GPa for both the systematic and the statistical error.

Physical origin

As mentioned in Section “Analysis of the DFT convergence parameters”, DFT practitioners commonly assess the convergence of an individual set of convergence parameters (ϵ, κ) by testing their convergence separately. This involves keeping one parameter constant (e.g., ϵ) and then increasing the other parameter (e.g., κ), or vice versa. The rationale behind this approach lies in the distinct impact of these parameters on different properties: increasing the energy cutoff ϵ primarily improves the description near the nuclei, while augmenting k-point sampling κ enhances the description of bonding, Fermi surface, and related characteristics. In the later part of this section, a concise qualitative analysis is presented to explain why the various total energy contributions exhibit markedly different convergence behaviors.

The anticipated decoupling of the convergence of the two parameters is reflected by the systematic error contribution in Eq. (7) (middle term). This contribution is expressed as a linear superposition of the errors obtained when converging a single parameter (ϵ or κ) while keeping the other one fixed. The explicit analytical formulation derived here not only allows the specification of absolute error bars but also enables the correction of systematic errors.

While the expression for the systematic error is in line with the strategies employed by DFT practitioners, the expression identified for the statistical error has no direct relation to these strategies. Having a product rather than a sum of the individual error contributions (see last term in Eq. (7)) implies a strong coupling between them, in obvious contrast to the systematic error contributions. The statistical error originates from discretization errors that arise when replacing integrals over the DFT supercell or Brillouin zone by a summation over a finite mesh or when truncating the infinite PW basis to a finite set of G vectors. This error gives rise to discontinuous jumps in DFT quantities such as total energies or forces and occurs when changing the size and shape of the supercell. These discontinuities pose a challenge whenever energy differences are needed, e.g. to compute derivatives such as the bulk modulus as discussed here or when computing formation energies. Such energies are routinely computed to describe e.g. the thermodynamic stability of bulk, surface or defect structures, which have widely varying supercells.

A prominent example where the determination of derivatives is hampered by these discontinuities is the energy-volume curve studied here, with the determination of its minimum (equilibrium) or of its second derivative (related to the bulk modulus - Eq. (9)). These challenges and their origin were recognized early on and corrections have been proposed. For the energy-volume curve Francis and Payne suggested more than 30 years ago an elegant correction that replaces the discontinuous change in the number of plane waves and k-points by a smooth scaling factor7.

While this approach can be straightforwardly extended to multiple continuous variables (not only the cubic lattice constant), an approach to handle differences between systems that are not connected by a continuous transformation is missing. This is the case when computing energy differences between vastly different systems, as needed for formation energies. Since the convergence checks performed on the energy-volume curve are in most practical cases meant to serve as a surrogate model for estimating the convergence error of more complex calculations, it is not advisable to apply a correction to the surrogate model that is not available for the actual calculation, e.g., for computing formation energies. We will therefore refrain from applying this correction when discussing and applying our approach.

There is also a second reason why we will not apply this correction in the following: applying the Francis and Payne correction to our computed energy-volume curves, we find that its performance varies strongly between elements. Two extreme examples are shown in Fig. 8. As shown there, the correction does an excellent job for bulk Al but shows no improvement for bulk Cu. The reasons for this very different performance are discussed below.

Fig. 8: The plots show the change of energy with lattice constant for the convergence parameters recommended by the VASP manual (ref. 18) (ϵAl = 240 eV, ϵCu = 400 eV and κAl = κCu = 5). For aluminium the Francis and Payne correction largely reduces the noise in the energy. Only the small noise from the plane wave double counting remains. In contrast, for copper the noise of the plane wave double counting is dominant from the beginning, so the Francis and Payne correction has no effect.

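In order to understand this rather puzzling behavior we analyze in the following the nature of the statistical error in the various energy contributions. The DFT total energy can be expressed as the sum of a bandstructure term and a double counting term:

$$E_{\mathrm{tot}} = E_{\mathrm{band}} + E_{\mathrm{dc}}$$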

The first term in the above expression is the bandstructure energy

$$E_{\mathrm{band}} = \sum_{i,\mathbf{k}} f_{i\mathbf{k}} \, \varepsilon_{i\mathbf{k}}$$

with the indices i and k running over the electronic bands and k-points. εik are the Kohn-Sham single-particle energies and fik the occupation numbers. The second term accounts for the double counting corrections and contains the Hartree potential and the exchange-correlation energy. To understand the convergence of the various contributions we use that the wavefunctions in a PW approach obey Bloch’s theorem, i.e.,

$$\psi_{i\mathbf{k}}(\mathbf{r}) = e^{i\mathbf{k}\cdot\mathbf{r}} \, u_{i\mathbf{k}}(\mathbf{r})$$

with the Bloch factor e^{ik·r} and the envelope function u(r). The latter has the periodicity of the supercell and depends only weakly on k (refs. 29,30). The k-dependence is almost exclusively in the Bloch factor.

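A key quantity in DFT is the charge density

$$\rho(\mathbf{r}) = \sum_{i,\mathbf{k}} f_{i\mathbf{k}} \left| \psi_{i\mathbf{k}}(\mathbf{r}) \right|^2 = \sum_{i,\mathbf{k}} f_{i\mathbf{k}} \left| u_{i\mathbf{k}}(\mathbf{r}) \right|^2$$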

Since the strongly k-dependent Bloch factor drops out of the above expression, the charge density becomes only weakly k-dependent (see e.g. refs. 29,30). Since the Hartree and exchange-correlation energies depend only on the charge density, these contributions show only a weak dependence on k.

For the following discussion we therefore focus on contributions which are non-local in real space and where the Bloch factor thus does not cancel. One such contribution is the kinetic energy, with the kinetic energy operator in Fourier space reading (G + k)², where G is the plane wave reciprocal vector. The kinetic energy enters the one-particle energies and thus the bandstructure energy but not the double counting contributions. In contrast to the charge density, the kinetic energy shows a strong k-dependence. The second non-local contribution is given by the PAW pseudopotential projectors, which contain matrix elements of the form ⟨G + k∣r⟩ and enter the double counting contributions.

In both contributions G and k do not occur separately but exclusively as the sum G + k. This is in contrast to contributions such as the charge density. The specific form G + k provides an intuitive explanation of why the total statistical error Eq. (8) is the product rather than the sum of the individual variances. To see this, we note that the resulting discretization error can be asymptotically reduced to zero by increasing the k-point sampling: with an increasing number of k-points the weight per k-point decreases, giving rise to smaller and smaller discontinuous jumps in the energy. The statistical error can also be eliminated by keeping the k-point sampling fixed and increasing the plane wave basis set, i.e., the number of G vectors. The reason here is that the plane wave coefficients c(G + k) converge to zero for ∣G∣ → ∞. This convergence behavior is inconsistent with a sum of the two statistical errors but is naturally explained by the product expression (Eq. (8)).

Based on the above discussion we can also explain why the Francis and Payne correction works well for the noise in the kinetic energy but fails for the one in the double counting contribution. The kinetic energy operator (G + k)² is computed solely in reciprocal space. In contrast, the non-local PAW operator ⟨G + k∣r⟩ has in addition a spatial component described by the real space meshes. Since the Francis and Payne approach corrects only the PW discretization error but not the one in the real space meshes, it cannot correct the noise in the double counting contribution.

The above analysis provides a consistent and intuitive explanation of the origin of the numerical noise and explains why the Francis and Payne correction is unable to remove the noise in the plane wave double counting (PAW). For the following analysis, we therefore do not apply the Francis and Payne correction.

Uncertainty quantification of derived physical quantities

The compact representation of the energy surface E(V, ϵ, κ) as a function of both physical and materials parameters (i.e. volume V) and DFT convergence parameters (ϵ, κ) by Eq. (7), together with the set of converged hyper-parameters derived in Section “Analysis and fit of the energy-volume curve”, allows us to interpolate the convergence of important materials parameters such as equilibrium bulk modulus, lattice constant, cohesive energy etc. Applying this approach provides an efficient route to identify a set of convergence parameters (ϵ, κ) that guarantee a given level of convergence with a very modest number of DFT calculations and thus at minimal computational cost. To test the accuracy and predictive power of the approximate energy surface Eq. (7) we first compute the physical quantity from the full set (i.e. Vi, ϵj, κk) of DFT data. As in the previous section we focus on the bulk modulus, which is highly sensitive to even small errors.

The deviation between the bulk modulus and its converged value B0(ϵmax, κmax) as a function of the convergence parameters is shown in Fig. 9a. The color code shows the magnitude of the convergence error on a logarithmic scale. As expected, the error shows a general decrease when going towards higher convergence parameters. The actual dependence, however, is surprisingly complex, showing non-monotonic behavior and several local minima. Performing the same fitting approach on the approximate energy surface Eq. (7) (see Fig. 9c) gives a convergence behavior that shows the same complexity and is virtually indistinguishable from the one shown in Fig. 9a. Thus, Eq. (7) provides a highly accurate and computationally efficient approach for uncertainty quantification of DFT convergence parameters.

Fig. 9: a Calculated (raw) error, b the error after applying the convex hull construction described in the text and c the reconstruction using Eq. (7). The middle and bottom rows show the convex hull of the systematic error, the statistical error and the total error for Al (d–f) and Cu (g–i). The solid red line in i is the (phase) boundary separating regions (lower-left part) where the statistical error dominates from regions (upper-right) where the systematic one is large.

It may be tempting to identify the local minima in the error surface as optimum convergence parameters that combine low error with low computational effort. Unfortunately, these local minima are a spurious product of an oscillatory convergence behavior, where at the nodal points the value becomes close to the converged result. Since DFT convergence parameters should be robust against perturbations caused e.g. by changing the shape of the cell, by atomic displacements etc., the local minima are likely to shift, merge or disappear. Thus, selecting parameters based on such local minima would make these parameters suitable only for the exact structure for which the uncertainty quantification has been performed. We therefore construct in the following an envelope function that connects the local maxima. The envelope represents the amplitude of the oscillatory convergence behavior and is roughly independent of the exact position of the nodes (phase shifts).

Figure 9b shows the resulting envelope function. It is much smoother than the original error surface (Fig. 9a and c), decreases monotonically when increasing any of the convergence parameters and is free of any spurious local minima. For the further discussion and interpretation of convergence behavior and errors we will exclusively use the envelope function.
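One simple way to obtain an envelope with these properties (an upper bound on the raw error that decreases monotonically along every convergence parameter) is a running maximum taken from the high-parameter end of each axis. The sketch below is a stand-in for illustration only; it is not necessarily the convex hull construction used for Fig. 9b, and the error surface is a made-up oscillatory toy function.

```python
import numpy as np


def monotone_envelope(error):
    """Upper envelope that is non-increasing along every axis.

    Each entry becomes the maximum of |error| over all grid points whose
    indices are greater than or equal to its own along every axis.
    """
    env = np.abs(np.asarray(error, dtype=float))
    for axis in range(env.ndim):
        # Running maximum taken from the high-parameter end of the axis.
        reversed_max = np.maximum.accumulate(np.flip(env, axis=axis), axis=axis)
        env = np.flip(reversed_max, axis=axis)
    return env


# Toy error surface (made-up numbers): decaying amplitude times an oscillation.
i, j = np.meshgrid(np.arange(12), np.arange(10), indexing="ij")
raw_error = np.exp(-0.3 * i - 0.2 * j) * np.abs(np.cos(1.3 * i + 0.7 * j))
envelope = monotone_envelope(raw_error)
```

By construction the spurious local minima at the nodes of the oscillation are filled in, so a parameter set is never rated better than any more expensive set in the same direction.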

The energy expression given by Eq. (7) consists of two systematic contributions (Δϵ and Δκ) that change smoothly with the convergence parameters and provide an absolute value. It also includes a statistical contribution ΔΔE, which quantifies the magnitude of the fluctuations around this value. This decomposition into systematic and statistical contributions can be directly transferred to the physical quantities derived from the energy surface. To get the systematic contribution, only the systematic part of the energy (i.e. the first three terms in Eq. (7)) is used as input for the fit. The statistical contribution is obtained using a Monte Carlo bootstrapping approach: the last term in Eq. (7) is replaced by a normal distribution N(μ, σ² = ΔΔE). The fitting is performed over a large number of samples drawn from this distribution. In practice, we found sets of 100 random samples for the energy-volume curve sufficient. As a reference for the normal distribution the best converged surface E(V, ϵmax, κmax) + N(μ = 0, σ² = ΔΔE(ϵ, κ)) has been used. The inset in Fig. 5b shows the computed propagation of the statistical error in the total energy E to the statistical error in the bulk modulus. One can observe a linear relation between the error in the bulk modulus and the magnitude of the noise in the energy-volume curve. This is because the bulk modulus is obtained as the second derivative of the polynomial fitted to the DFT-computed energy-volume curve. Because both fitting and taking the second derivative are linear operations, the resulting bulk modulus is a linear functional of the energy values. Hence, the noise in the bulk modulus scales linearly with the simulated noise in the energy-volume curve.
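The bootstrapping step and the linear noise propagation can be illustrated with a short sketch. It rests on several assumptions that are not taken from the paper: the reference E(V) is a made-up quadratic curve instead of the best converged DFT surface, `sigma` is used as the standard deviation of the energy noise, the second derivative is evaluated at a fixed reference volume so that the bulk modulus is an exactly linear functional of the energies, and the same random draws are reused for every noise level.

```python
import numpy as np
from numpy.polynomial import Polynomial


def bulk_modulus_at(volumes, energies, v_ref, degree=11):
    """V_ref * E''(V_ref) from a polynomial fit (linear in the energies)."""
    poly = Polynomial.fit(volumes, energies, degree)
    return v_ref * poly.deriv(2)(v_ref)


def bootstrap_std(volumes, energies, sigma, v_ref, n_samples=100, seed=0):
    """Standard deviation of the bulk modulus under Gaussian energy noise."""
    rng = np.random.default_rng(seed)
    draws = rng.standard_normal((n_samples, len(volumes)))
    samples = [
        bulk_modulus_at(volumes, energies + sigma * z, v_ref) for z in draws
    ]
    return float(np.std(samples))


# Smooth stand-in for the best-converged E(V) (made-up, roughly Al-like).
v0, b0 = 16.5, 0.48  # Å^3, eV/Å^3
volumes = np.linspace(0.9 * v0, 1.1 * v0, 21)
energies = 0.5 * b0 / v0 * (volumes - v0) ** 2

sigmas = np.array([1e-5, 1e-4, 1e-3])  # eV, assumed noise levels
stds = np.array([bootstrap_std(volumes, energies, s, v0) for s in sigmas])
print(stds / sigmas)  # nearly constant ratio: the noise propagates linearly
```

If the bulk modulus is instead evaluated at the refitted equilibrium volume of each sample, the relation is only approximately linear, since the position of the minimum then depends on the noise as well.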

Figure 9 shows the error surfaces for the systematic error (Fig. 9d and g), the statistical error (Fig. 9e and h) as well as the total convergence error (Fig. 9f and i) for Al and Cu. The two elements have been chosen since they represent the two most different cases that we observe among all investigated pseudopotentials.

Before using the computed error surfaces to derive optimum convergence parameters we briefly recap existing strategies and recommendations for choosing them. There is a broad consensus in the DFT community that energy cutoff and k-point convergence are largely decoupled (for a recent study see e.g.31). This assumption is widely used in routine convergence checks: rather than checking k-point and cutoff convergence simultaneously, DFT practitioners commonly perform convergence checks separately for the two parameters. This strategy is recommended in many DFT forums and has been implemented e.g. in automated tools to find suitable convergence parameters31,32.

Having an explicit expression for the systematic and the statistical error allows us to solve the inverse challenge of identifying the minimal convergence parameters to achieve a given level of convergence at minimal computational cost. We note that the systematic error formulated in the second term in Eq. (7) is the mathematical equivalent of the decoupling assumption: The total systematic error decomposes into the sum of the individual systematic errors. Our explicit error formulation, however, provides important additional insight. Since we explicitly know the systematic error, we can use it to not only estimate the magnitude of the error but also its sign, i.e., by how much a given set of convergence parameters over or underestimates the predicted quantity.

It also allows one to find the domain of convergence parameters where the decoupling works and where it breaks down. To this end, we note that the statistical error, which is the product of the individual statistical errors (Eq. (8)), does not decouple cutoff and k-point convergence. To utilize this insight it is important to identify the regions in the error surface where the statistical error dominates over the systematic error and vice versa. In the following we therefore discuss the behavior of the two error contributions.

Generally, the statistical error becomes dominant for low convergence parameters (in the bottom-left region) while the systematic error dominates for medium and high convergence parameters. The red solid line in Fig. 9i shows the boundary where the magnitude of both errors becomes equal. For Al (Fig. 9f) this line is absent since the systematic error dominates over the entire region, i.e., even for the lowest considered convergence parameters. Since we have chosen the minimum convergence parameters at or only slightly below the VASP-recommended settings, the region covers convergence parameters that are commonly chosen. For Cu, in contrast, the statistical error dominates even when using convergence parameters that are well above the recommended ones, i.e., at lower k-point sampling even for an energy cutoff of more than 600 eV.

The boundary (red line in Fig. 9i) that separates the regions where either the statistical or the systematic error dominates can be interpreted like a boundary in a phase diagram: it directly provides information about the dominating error (phase) for any given set of convergence parameters (state variables). Such diagrams can thus be regarded as error phase diagrams. The knowledge of the error type has direct practical consequences. If the systematic error dominates, the convergence error becomes a simple linear superposition of the individual convergence errors (see Eq. (7)). (Strictly speaking, Eq. (7) applies only to the total energy. For derived quantities such as the bulk modulus we observe sizeable deviations. The reason is that, in addition to the explicit dependence of e.g. the bulk modulus on the convergence parameters, an implicit one via the equilibrium volume occurs, i.e., B0(ϵ, κ, V0(ϵ, κ)).) It thus allows us to determine, for any set of (κ, ϵ) values, the deviation from the converged result including its sign. Due to its additive nature the total systematic error will always be dominated by the least converged parameter.

In contrast, the total statistical error can be reduced to any target by just converging a single convergence parameter, which is a direct consequence of its multiplicative rather than additive nature (see Eq. (8)). It also is the reason why the statistical error decays faster than the systematic error when increasing both convergence parameters simultaneously.

Knowledge of the error phase diagram introduced above can be directly used to find convergence parameters that minimize computational resources for a given target accuracy. In the region where the statistical error dominates, its multiplicative nature implies that the targeted accuracy can be achieved by converging only a single parameter, which in practice will be the computationally less expensive one. In typical cases, this will be the k-point sampling, since the necessary computational time scales linearly with the number of k-points and the number of k-points decreases with increasing system size. In contrast, if highly converged calculations are desired one will be in the region of the error phase diagram where the systematic error dominates. Since in this region the errors of the individual convergence parameters are additive, converging one parameter better than the other would be a waste of computational time. Thus, the availability of such error phase diagrams provides a highly systematic and intuitive way of identifying optimum sets of DFT parameters. In a way, error phase diagrams may become what thermodynamic phase diagrams are for materials engineers today: roadmaps for identifying optimum paths (convergence parameters) in materials design (DFT calculations).
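The contrast between the additive systematic error and the multiplicative statistical error can be made concrete with a toy error phase diagram. All numbers below are made up: the single-parameter errors are hypothetical exponentially decaying model functions on an index grid, not fitted DFT data, and the systematic contributions are taken as positive magnitudes although in the text they carry a sign.

```python
import numpy as np

# Hypothetical single-parameter error models on an index grid (made-up numbers).
cutoff_idx = np.arange(10)   # increasing energy cutoff
kpoint_idx = np.arange(10)   # increasing k-point sampling
sys_cutoff = 1.0 * np.exp(-cutoff_idx / 4.0)
sys_kpoint = 1.0 * np.exp(-kpoint_idx / 4.0)
stat_cutoff = 3.0 * np.exp(-cutoff_idx / 3.0)
stat_kpoint = 3.0 * np.exp(-kpoint_idx / 3.0)

# Systematic error: sum of the individual contributions (additive).
systematic = sys_cutoff[:, None] + sys_kpoint[None, :]
# Statistical error: product of the individual contributions (multiplicative).
statistical = stat_cutoff[:, None] * stat_kpoint[None, :]

# "Phase" of each grid point: True where the statistical error dominates.
statistical_dominates = statistical > systematic

# Text rendering of the diagram: S = statistical, . = systematic dominates.
for row in statistical_dominates:
    print("".join("S" if flag else "." for flag in row))
```

In this toy model, raising only the cutoff while keeping the lowest k-point sampling leaves the systematic error pinned at the k-point contribution, whereas the statistical error keeps shrinking. This mirrors the statement that the systematic error is dominated by the least converged parameter while the statistical error can be reduced by converging a single parameter.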

Methods

Automated Approach

Using the concepts outlined in the previous sections allows us to construct an easy-to-implement automated approach. This approach computes for a given chemical element and its pseudopotential a set of optimum convergence parameters. These optimized parameters guarantee that the error for a user-selected quantity (e.g. bulk modulus, lattice constant etc.) is below a user-defined target error. In the present implementation, the developed tool accepts only cubic structures where the unit cell can be fully described by the lattice constant as single variable.

The key steps of the automated approach are as follows:

- Determine the approximate lattice constant at ϵmax and κmax using the experimental lattice constant, 21 volume points, a volume interval of ±10%, and a polynomial fit of degree d = 11.

- Perform DFT calculations around the computed equilibrium lattice constant using again 21 volume points and a volume interval of ±10%. Compute on each of the volume points the energies along (ϵmin, κi), (ϵmax, κi), (ϵi, κmin) and (ϵi, κmax).

- Use Eq. (7) to compute the total energy E on the full 3d mesh (V, ϵ, κ). No extra DFT calculations are needed.

- Compute the systematic and the statistical error of the target quantity A (e.g. bulk modulus) following the discussion given in Section “Analysis and fit of the energy-volume curve”. Construct the envelope function of the combined error.

- Determine on the error surface ΔA(ϵ, κ) the line ΔA(ϵopt, κopt) = ΔAtarget, i.e., sets of convergence parameters where the predicted error equals the target error.

- Take the set where the curvature of the line is maximum (i.e., close to a 90° angle). At this point the required computational resources for both convergence parameters are minimal.
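Two of the steps above can be sketched in a few lines. Since Eq. (7) is not reproduced in this part of the text, the reconstruction below assumes a purely additive form for the systematic part, E(V, ϵ, κ) ≈ E(V, ϵ, κmax) + E(V, ϵmax, κ) − E(V, ϵmax, κmax), and omits the statistical term. The selection step swaps the maximum-curvature criterion for a simpler rule: it picks the cheapest grid point whose error is below the target, using a crude cost proxy ϵ^1.5 · κ³ that is an assumption of this sketch. All function names and numbers are hypothetical.

```python
import numpy as np


def additive_energy_surface(e_cutoff_line, e_kpoint_line):
    """Systematic part of E(V, cutoff, kpoints) from two 1D scans.

    e_cutoff_line: shape (n_V, n_cutoff), energies at the highest k-point mesh.
    e_kpoint_line: shape (n_V, n_kpoint), energies at the highest cutoff.
    The last column of both arrays is the shared best-converged calculation.
    """
    reference = e_cutoff_line[:, -1]
    return (
        e_cutoff_line[:, :, None]
        + e_kpoint_line[:, None, :]
        - reference[:, None, None]
    )


def cheapest_parameters(error, cutoffs, kpoints, target):
    """Cheapest (cutoff, kpoints) grid point whose error is below target.

    The cost proxy cutoff**1.5 * kpoints**3 is a crude assumption.
    """
    cutoffs = np.asarray(cutoffs, dtype=float)
    kpoints = np.asarray(kpoints, dtype=float)
    cost = np.outer(cutoffs ** 1.5, kpoints ** 3)
    allowed = np.asarray(error) <= target
    if not allowed.any():
        return None  # target not reachable on this grid
    masked_cost = np.where(allowed, cost, np.inf)
    i, j = np.unravel_index(np.argmin(masked_cost), cost.shape)
    return cutoffs[i], kpoints[j]


# Toy usage with made-up error contributions (not DFT results).
cutoffs, kpoints = [200, 400, 600], [3, 7, 11]
error = np.add.outer([1.0, 0.3, 0.05], [1.0, 0.2, 0.04])
print(cheapest_parameters(error, cutoffs, kpoints, target=0.3))
```

In practice the error array would be the envelope of the combined systematic and statistical error of the target quantity, and the cost model would be replaced by measured timings.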

The above algorithm has been implemented in the Pyiron framework22. The Pyiron-based module requires as input only the pseudopotential and the selection of the target quantity and error. The setup of the DFT jobs, submission on a compute cluster, analysis etc. is done fully automatically without any user intervention.

Data availability

In addition to the Jupyter Notebooks also the corresponding data will be provided on our freely accessible pyiron repository (https://github.com/pyiron/pyiron-dft-uncertainty).

Code availability

The Jupyter Notebooks developed to run the calculations and to analyze the data will be provided on our freely accessible Pyiron repository (https://github.com/pyiron/pyiron-dft-uncertainty). The fully interactive Jupyter Notebooks together with our Pyiron framework contain the entire code and make it easy to reproduce all calculations and the analysis.

References

1. VASP Performance on HPE Cray EX Based on NVIDIA A100 GPUs and AMD Milan CPUs, Cray User Group Proceedings.

2. Zuo, Y. et al. Performance and cost assessment of machine learning interatomic potentials. J. Phys. Chem. A 124 (2020). https://doi.org/10.1021/acs.jpca.9b08723.

3. Grabowski, B., Hickel, T. & Neugebauer, J. Ab initio study of the thermodynamic properties of nonmagnetic elementary fcc metals: Exchange-correlation-related error bars and chemical trends. Phys. Rev. B 76, 024309 (2007).

4. Chadi, D. J. & Cohen, M. L. Special points in the Brillouin zone. Phys. Rev. B 8, 5747–5753 (1973).

5. Monkhorst, H. J. & Pack, J. D. Special points for Brillouin-zone integrations. Phys. Rev. B 13, 5188–5192 (1976).

6. Dacosta, P. G., Nielsen, O. H. & Kunc, K. Stress theorem in the determination of static equilibrium by the density functional method. J. Phys. C: Solid State Phys. 19, 3163–3172 (1986).

7. Francis, G. P. & Payne, M. C. Finite basis set corrections to total energy pseudopotential calculations. J. Phys.: Condens. Matter 2, 4395–4404 (1990).

8. Soler, J. M. et al. The SIESTA method for ab initio order-N materials simulation. J. Phys.: Condens. Matter 14, 2745–2779 (2002).

9. Beeler, B. et al. First principles calculations for defects in U. J. Phys.: Condens. Matter 22, 505703 (2010).

10. Kratzer, P. & Neugebauer, J. The basics of electronic structure theory for periodic systems. Front. Chem. 7, 106 (2019).

11. Choudhary, K. & Tavazza, F. Convergence and machine learning predictions of Monkhorst-Pack k-points and plane-wave cut-off in high-throughput DFT calculations. Comput. Mater. Sci. 161, 300–308 (2019).

12. Murnaghan, F. D. The compressibility of media under extreme pressures. Proc. Natl Acad. Sci. 30, 244–247 (1944).

13. Birch, F. Finite elastic strain of cubic crystals. Phys. Rev. 71, 809–824 (1947).

14. Vinet, P., Smith, J. R., Ferrante, J. & Rose, J. H. Temperature effects on the universal equation of state of solids. Phys. Rev. B 35, 1945–1953 (1987).

15. Duong, D. L., Burghard, M. & Schön, J. C. Ab initio computation of the transition temperature of the charge density wave transition in TiSe2. Phys. Rev. B 92, 245131 (2015).

16. Grabowski, B., Söderlind, P., Hickel, T. & Neugebauer, J. Temperature-driven phase transitions from first principles including all relevant excitations: The FCC-to-BCC transition in Ca. Phys. Rev. B 84, 214107 (2011).

17. Lejaeghere, K. et al. Reproducibility in density functional theory calculations of solids. Science 351 (6280) (2016). https://doi.org/10.1126/science.aad3000, https://science.sciencemag.org/content/351/6280/aad3000.full.pdf.

18. VASP manual. https://www.vasp.at/wiki/index.php/The_VASP_Manual.

19. Jain, A. et al. A high-throughput infrastructure for density functional theory calculations. Comput. Mater. Sci. 50, 2295–2310 (2011).

20. Jain, A. et al. Commentary: The materials project: A materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

21. Anubhav, J. et al. Fireworks: a dynamic workflow system designed for high-throughput applications. Concurr. Comput.: Pract. Exp. 27, 5037–5059 (2015).

22. Janssen, J. et al. Pyiron: An integrated development environment for computational materials science. Comput. Mater. Sci. 163, 24–36 (2019).

23. Carbogno, C. et al. Numerical quality control for DFT-based materials databases. npj Comput. Mater. 8, 69 (2022).

24. Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558–561 (1993).

25. Kresse, G. & Furthmüller, J. Efficiency of ab-initio total energy calculations for metals and semiconductors using a plane-wave basis set. Comput. Mater. Sci. 6, 15–50 (1996).

26. Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

27. Blöchl, P. E., Jepsen, O. & Andersen, O. K. Improved tetrahedron method for Brillouin-zone integrations. Phys. Rev. B 49, 16223–16233 (1994).

28. Morgan, W. S., Jorgensen, J. J., Hess, B. C. & Hart, G. L. Efficiency of generalized regular k-point grids. Comput. Mater. Sci. 153, 424–430 (2018).

29. Marzari, N., Mostofi, A. A., Yates, J. R., Souza, I. & Vanderbilt, D. Maximally localized Wannier functions: Theory and applications. Rev. Mod. Phys. 84, 1419–1475 (2012).

30. Blount, E. In Formalisms of band theory (eds Seitz, F. & Turnbull, D.), Vol. 13 of Solid State Physics 305–373 (Academic Press, 1962). https://www.sciencedirect.com/science/article/pii/S0081194708604592.

31. Youn, Y. et al. Amp2: A fully automated program for ab initio calculations of crystalline materials. Comput. Phys. Commun. 256, 107450 (2020).

32. Yates, J. R., Wang, X., Vanderbilt, D. & Souza, I. Spectral and Fermi surface properties from Wannier interpolation. Phys. Rev. B 75, 195121 (2007).

Acknowledgements

JJ and JN thank Kurt Lejaeghere and Christoph Freysoldt for stimulating discussions and the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Projektnummer 405621217 for financial support. EM and AVS acknowledge the financial support from the Russian Science Foundation (grant number 18-13-00479).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

J.J. and J.N. developed the concepts of automated uncertainty quantification. J.J. implemented the method in the Pyiron framework. J.J. and E.M. compared the predictions with existing results. J.J., E.M., T.H., A.S., and J.N. contributed to the generalization of the method and the writing of the manuscript.

Ethics declarations

Competing interests

Authors JJ, EM, TH, AS declare no financial or non-financial competing interests. Author JN serves as Associate Editor of this journal and had no role in the peer-review or decision to publish this manuscript. Author JN declares no financial competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Janssen, J., Makarov, E., Hickel, T. et al. Automated optimization and uncertainty quantification of convergence parameters in plane wave density functional theory calculations. npj Comput Mater 10, 263 (2024). https://doi.org/10.1038/s41524-024-01388-2

DOI: https://doi.org/10.1038/s41524-024-01388-2
