Abstract

High Entropy Alloys (HEAs) have drawn great interest due to their exceptional properties compared with conventional materials. The configuration of an HEA system is considered key to its superior properties, but exhaustively enumerating all possible atomic coordinates and species to find the ground state is extremely challenging. In this work, we propose a quantum annealing-assisted lattice optimization (QALO) algorithm. QALO is an active learning framework that integrates three components: the Field-aware Factorization Machine (FFM) as the surrogate model for lattice energy prediction, Quantum Annealing (QA) as the optimizer, and a Machine Learning Potential (MLP) for ground-truth energy calculation. By applying our algorithm to the NbMoTaW alloy, we reproduced the Nb depletion and W enrichment observed in the bulk HEA. We found that our optimized HEAs have superior mechanical properties compared with randomly generated alloy configurations. Our algorithm highlights the potential of quantum computing in materials design and discovery and lays a foundation for further exploring and optimizing structure-property relationships.


Introduction

High Entropy Alloys (HEAs), which are alloys comprising four or more elements1,2,3, have received great interest due to their exceptional mechanical properties4,5,6,7,8,9,10 and thermal properties11,12,13,14 under extreme conditions (high temperature and pressure). Researchers have made intense efforts to reveal the mechanisms underlying these unique properties, such as lattice distortion15,16, phase transitions17 and solid solution strengthening18. More importantly, the dominant effect of atomic configurations (i.e., the cocktail effect) has become a widespread consensus14,19,20. HEAs usually possess a simple phase constitution, with the different constituent atoms randomly distributed on the crystallographic lattice sites21. Their chemical complexity and compositional tunability make it possible for HEAs to achieve optimal properties. Traditional alloy development is mostly confined to the corners of the phase diagram14, even for alloys containing more than two elements. Studying HEAs, by contrast, requires exploring the large area or volume near the center of the phase diagram, which corresponds to higher-entropy systems (Fig. 1a).

Fig. 1: a Schematic phase diagrams of ternary and quaternary alloy systems. Traditional alloy development is mainly confined to the corner regions (yellow), while HEAs extend toward the center of the phase diagram (high-entropy regions, blue); b Binary embedding for lattice configurations. A 2D M × N binary matrix x is used to represent a cell with M types of elements and N atomic sites. For example, xij = 1 means that the i-th element occupies the j-th site.

Computational simulations, such as density functional theory (DFT)22,23,24 and molecular dynamics (MD) simulations25,26, are widely used to simulate and predict the properties of HEAs. However, because of the high computational cost, using DFT to explore large numbers of HEA atomic configurations is challenging22. MD simulations can benefit from machine learning potentials (MLPs), which greatly improve efficiency while maintaining DFT accuracy27,28,29,30. They still require sampling methods (e.g., Monte Carlo, MC) to explore the phase space of HEAs31,32, however, and such sampling does not guarantee finding the ground state. Existing simulation methods therefore struggle to perform the high-dimensional search and global optimization required for HEA configurations.
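The following minimal Python sketch shows the scale of this search space. It counts the distinct arrangements of a fixed composition on N lattice sites, which is the multinomial coefficient N!/(n1!⋯nM!). The sketch ignores symmetry-equivalent configurations. Converting supercell sizes into site counts assumes two atoms per conventional bcc cell. Both are our simplifications, not part of the original method.

```python
from math import factorial, log10


def n_configurations(counts):
    """Number of distinct ways to place atoms with the given per-element counts
    on sum(counts) lattice sites (multinomial coefficient, symmetry ignored)."""
    total = factorial(sum(counts))
    for c in counts:
        total //= factorial(c)  # exact integer division at every step
    return total


if __name__ == "__main__":
    # Equiatomic quaternary alloys; site counts assume 2 atoms per bcc cubic cell.
    for label, n_sites in [("2x2x2", 16), ("4x4x4", 128), ("8x8x8", 1024)]:
        count = n_configurations([n_sites // 4] * 4)
        print(f"{label}: about 10^{log10(count):.0f} configurations")
```

For the 16-site cell the count is already 63,063,000, and it grows roughly like 4^N. This is why exhaustive enumeration is out of reach for the larger supercells.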

Quantum algorithms, especially quantum annealing (QA), have become an emerging strategy for solving practical optimization problems owing to their strong performance33,34,35,36,37,38. QA is a quantum analog of classical simulated annealing. It exploits quantum tunneling to help explore low-energy solutions and ultimately returns the ground state of the corresponding quantum system39,40. Compared with algorithms that run on universal gate-based quantum hardware in the noisy intermediate-scale quantum (NISQ) era41, QA has become a viable tool for practically solving discrete optimization problems in materials science42,43. Kitai et al.44, Guo et al.45 and Kim et al.46,47 combined QA with factorization machines (FM) to design planar multilayers (PML) and diodes for optical applications. Their algorithms delivered better optimization speed and precision than classical algorithms and hardware. Hatakeyama-Sato et al.48 introduced a quantum physics-inspired annealing machine to tackle the large search space in materials discovery, achieving speeds at least 10^4 times faster than conventional approaches. For HEA optimization, QA may likewise be leveraged for its quantum advantage to address the extremely large search space of HEA configurations and find the global minimum.

In this work, we propose a QA-assisted lattice optimization (QALO) algorithm and demonstrate it on HEA configuration optimization. The algorithm is built on an active learning framework. In this framework, a field-aware factorization machine (FFM), trained on DFT data (and on MLP-labeled data in later iterations), converts the lattice configuration optimization problem into a quadratic unconstrained binary optimization (QUBO) problem, which is then solved by a quantum annealer. We apply the algorithm to optimize the bulk lattice configuration of the refractory body-centered cubic (bcc) NbMoTaW alloy system. QALO quickly obtains optimized structures of the quaternary HEA. The results reproduce the thermodynamically driven Nb depletion and W enrichment in the bulk phase that are usually observed in experiments and MC/MD simulations. We then calculate the mechanical properties of the optimized configurations and compare them with those of randomly generated alloys. The proposed algorithm may provide a powerful tool for designing and discovering new alloy materials with high stability and exceptional properties.

Results

QUBO representation for lattice optimization problem

Any problem solved by current QA hardware must be formulated as an Ising model or, equivalently, a QUBO model (details of QA and QUBO problems are given in the Methods section). Mapping the target optimization problem to a QUBO problem as accurately as possible is therefore a prerequisite for using QA effectively. For lattice optimization problems, obtaining a reliable QUBO mapping generally involves two steps. The first is to establish a binary representation of the lattice configuration. The second is to establish a QUBO-like “feature-label” relationship that accurately represents the properties of interest as functions of the configuration.
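The equivalence between the two forms is a change of variables, x = (1 + s)/2 with s ∈ {−1, +1}. The sketch below converts an upper-triangular QUBO, stored as a dictionary {(i, j): Q_ij}, into Ising fields h, couplings J and a constant offset. It is our illustration of the standard mapping. D-Wave's dimod package provides an equivalent `qubo_to_ising` helper.

```python
from itertools import product


def qubo_to_ising(Q):
    """Convert an upper-triangular QUBO {(i, j): Q_ij} into Ising (h, J, offset)
    via x = (1 + s) / 2, so that E_qubo(x) == E_ising(s) for every assignment."""
    h, J, offset = {}, {}, 0.0
    for (i, j), q in Q.items():
        if i == j:  # linear term Q_ii * x_i = Q_ii / 2 * (1 + s_i)
            h[i] = h.get(i, 0.0) + q / 2
            offset += q / 2
        else:       # Q_ij * x_i * x_j = Q_ij / 4 * (1 + s_i + s_j + s_i * s_j)
            J[(i, j)] = J.get((i, j), 0.0) + q / 4
            h[i] = h.get(i, 0.0) + q / 4
            h[j] = h.get(j, 0.0) + q / 4
            offset += q / 4
    return h, J, offset


def qubo_energy(Q, x):
    return sum(q * x[i] * x[j] for (i, j), q in Q.items())


def ising_energy(h, J, offset, s):
    return (offset + sum(v * s[i] for i, v in h.items())
            + sum(v * s[i] * s[j] for (i, j), v in J.items()))


if __name__ == "__main__":
    Q = {(0, 0): -1.0, (1, 1): 2.0, (0, 1): -3.0, (1, 2): 1.5, (2, 2): 0.5}
    h, J, offset = qubo_to_ising(Q)
    for x in product((0, 1), repeat=3):
        s = [2 * xi - 1 for xi in x]  # inverse mapping s = 2x - 1
        print(x, qubo_energy(Q, x), ising_energy(h, J, offset, s))
```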

For an HEA system of a given composition, M types of atoms are randomly distributed over N sites of the 3D lattice. The number and coordinates of the sites are predefined by the space group of the HEA, so the configuration of an HEA lattice can be modeled by a discrete approximation. In this approximation, the lattice sites are assigned N indices, and a binary variable x with M × N components represents the occupation status of all sites. For example, xij = 1 means that an atom of type i occupies site j (Fig. 1b). Because this discretized representation ignores lattice distortion, its solution might not be the true global optimum. It can, however, serve as the initial structure for further lattice relaxation49. For selected systems, we performed additional relaxations before calculating their mechanical properties (see the Methods section for details).
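Below is a minimal Python/NumPy sketch of this binary embedding, assuming the element order Nb, Mo, Ta, W (the order is arbitrary and our choice). Each column of x is one-hot. The row sums give the composition, and decoding recovers the species list.

```python
import numpy as np

ELEMENTS = ["Nb", "Mo", "Ta", "W"]  # the M element types (order chosen for illustration)


def encode(species):
    """Map a per-site species list of length N to the M x N binary matrix x,
    with x[i, j] = 1 iff element i occupies site j."""
    x = np.zeros((len(ELEMENTS), len(species)), dtype=int)
    for j, element in enumerate(species):
        x[ELEMENTS.index(element), j] = 1
    return x


def decode(x):
    """Inverse of encode(): read off the occupying element of each site (column)."""
    return [ELEMENTS[i] for i in np.argmax(x, axis=0)]


if __name__ == "__main__":
    x = encode(["Nb", "W", "Ta", "Mo", "W"])
    print(x)
    print("atoms per element:", x.sum(axis=1))  # composition = row sums
```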

The thermodynamic stability of an alloy at a given temperature and pressure is governed by its Gibbs free energy of mixing14,50,51. The target of HEA lattice optimization is therefore to minimize the Gibbs free energy of the lattice, which includes enthalpy and entropy components, ΔGmix = ΔHmix – T∙ΔSconf. Within the binary regular solution model52, the mixing enthalpy ΔHmix of an HEA lattice can be defined as a linear combination of the pair interactions among the constituent elements:

in which ci and cj represent the fractions of elements i and j, and Ωij is the pair interaction between i and j. The binary interaction is obtained from the mixing enthalpy of the binary system as

where ΔHij is the mixing enthalpy of the binary system of elements i and j, and Eij is the ensemble-averaged lattice energy of that binary system, represented by special quasirandom structures (SQS)51. Ei is the lattice energy of the reference unary system of element i in the same lattice structure. Because we do not consider lattice distortion, the energies of the reference unary systems are constants in Eq. (2). The mixing enthalpy in Eq. (1) therefore depends only on ci, cj and Eij, and minimizing ΔHmix can be achieved by minimizing the lattice energy. We then build a lattice energy model that can be mapped into a QUBO problem for QA optimization, using the widely used cluster expansion (CE) method53,54,55. For versatility and simplicity in applying CE to HEA systems, we use the effective pair interaction (EPI) model56. The EPI model can be converted into an Ising model and is thus compatible with QA. The effective energy at lattice site j can be expressed as57:
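For reference, the regular-solution relations cited here as Eqs. (1) and (2) are commonly written as shown below. The factor of 4 follows the widely used convention of defining Ω_ij from the mixing enthalpy of the equiatomic binary. This factor is our assumption, because the normalization used in this work cannot be recovered from the text.

```latex
\Delta H_{\mathrm{mix}} = \sum_{i=1}^{M} \sum_{j>i}^{M} \Omega_{ij}\, c_i c_j,
\qquad
\Omega_{ij} = 4\,\Delta H_{ij},
\qquad
\Delta H_{ij} = E_{ij} - \tfrac{1}{2}\left(E_i + E_j\right)
```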

where JX(j)Y(l) is the pairwise interatomic potential between elements X and Y at sites j and l, respectively, cl is the occupation parameter of site l, and J0 is the concentration-dependent part. Summing the energy over all N atomic sites yields the total energy of the lattice:

where σXY is the percentage of XY pairs in the system. Combining the previously defined binary representation of lattice configurations with an explicit expression for the pair interaction energy Uijkl, the energy of the lattice can be written as:

where the quadratic term Uijkl xij xkl represents the contribution to the total energy when the atom of type i and atom of type k occupy sites j and l, respectively.
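The double sum in this energy is a quadratic form in the binary occupation variables. The NumPy sketch below evaluates it with `einsum`. It then reshapes U (indexed [i, j, k, l]) into an (M·N) × (M·N) matrix, with variable xij at flat index i·N + j, and x_flatᵀ Q x_flat gives the same energy, i.e., Q is a QUBO matrix. We assume the sum runs over all ordered pairs, including the diagonal; the diagonal acts as a linear term because x² = x.

```python
import numpy as np


def lattice_energy(U, x):
    """E = sum_{i,j,k,l} U[i, j, k, l] * x[i, j] * x[k, l] for a binary x of shape (M, N)."""
    return float(np.einsum("ijkl,ij,kl->", U, x, x))


def to_qubo_matrix(U):
    """Flatten U of shape (M, N, M, N) into an (M*N, M*N) QUBO matrix.
    Row/column index i * N + j corresponds to the binary variable x[i, j]."""
    M, N = U.shape[:2]
    return U.reshape(M * N, M * N)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    U = rng.normal(size=(2, 3, 2, 3))  # illustrative random couplings, not fitted values
    x = np.array([[1, 0, 1],
                  [0, 1, 0]])           # element 0 on sites 0 and 2, element 1 on site 1
    x_flat = x.reshape(-1)
    print(lattice_energy(U, x), x_flat @ to_qubo_matrix(U) @ x_flat)  # the two values agree
```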

The configurational entropy ΔSconf is described by Boltzmann’s entropy formula58,59,60:

where Xi represents the mole fraction of element i and R is the ideal gas constant. For HEA systems, configurational entropy is a compositional property. It assumes that atoms are randomly distributed over the lattice points, and it is maximized when the alloy composition is equiatomic61 (i.e., all elements have equal proportions). Configurational entropy can therefore be treated as an additional constraint on the alloy composition that keeps the optimized structure in the high-entropy region near the center of the phase diagram (Fig. 1a). Applying the proposed binary representation to an N-site lattice of M elements, Eq. (6) can be rewritten as

For an HEA system with M elements, the maximum entropy is obtained at the equiatomic distribution (Xi = 1/M), and the polynomial approximation of Eq. (7) around Xi = 1/M is

which is also second order in x and can therefore be combined with the energy in Eq. (5) to build a QUBO-like formula. The objective function for HEA optimization can thus be constructed from the energy term in Eq. (5) and the entropy term in Eq. (8).
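One way to obtain such a second-order form is sketched below as our own derivation; the exact coefficients of Eq. (8) may differ. The approach is a Taylor expansion of f(X) = −X ln X about X = 1/M, using f(1/M) = (ln M)/M, f′(1/M) = ln M − 1 and f″(1/M) = −M. When every site holds exactly one atom, Σ_i X_i = 1, so the linear term cancels:

```latex
X_i = \frac{1}{N}\sum_{j=1}^{N} x_{ij},
\qquad
\Delta S_{\mathrm{conf}} = -R\sum_{i=1}^{M} X_i \ln X_i
\approx R\left[\ln M - \frac{M}{2}\sum_{i=1}^{M}\left(X_i - \frac{1}{M}\right)^{2}\right]
= R\left[\ln M - \frac{M}{2N^{2}}\sum_{i=1}^{M}\left(\sum_{j=1}^{N} x_{ij} - \frac{N}{M}\right)^{2}\right]
```

The last expression is quadratic in the xij. The term −T·ΔSconf therefore enters the objective as a penalty on deviations from the equiatomic site counts N/M.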

In addition, physical constraints must be applied to the objective function. The constraint in Eq. (9) is the assignment constraint, which ensures that each type of atom occupies exactly the number of sites determined by the composition. The constraint in Eq. (10) ensures that at most one atom occupies each lattice site. The constraint in Eq. (11) applies extra physical constraints, such as periodic boundary conditions, where f(i,j) is the constraint function for xij on the boundary.

Combining the energy and entropy in Eqs. (5) and (8) with all physical constraints from Eqs. (9)–(11), the QUBO formulation of the Hamiltonian (hQUBO) to be minimized for the lattice optimization problem can be written as:

where λi are the weighting factors for the i-th constraint, which are usually much larger than Uijkl to enforce the constraints.
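The penalty terms can be folded into the QUBO matrix by expanding each squared constraint: (Σ_a x_a − k)² = Σ_a (1 − 2k) x_a + 2 Σ_{a<b} x_a x_b + k², where x_a² = x_a. The NumPy sketch below does this for the assignment constraint (element i on exactly n_i sites). It also handles the site-occupancy constraint, which it enforces as exactly one atom per site because all sites are filled. The entropy term and the boundary constraint of Eq. (11) are omitted, and the function names are hypothetical. A brute-force search on a toy problem with 2 elements and 3 sites checks that the minimum satisfies both constraints.

```python
import itertools

import numpy as np


def add_squared_penalty(Q, idx, k, lam):
    """Add lam * (sum_{a in idx} x_a - k)**2 to the symmetric QUBO matrix Q in place.
    The diagonal carries the linear part (x_a**2 = x_a); the constant lam * k**2 is returned."""
    for a in idx:
        Q[a, a] += lam * (1 - 2 * k)
        for b in idx:
            if b != a:
                Q[a, b] += lam  # Q[a, b] and Q[b, a] together give 2 * lam * x_a * x_b
    return lam * k * k


def build_hqubo(U_flat, M, N, counts, lam_comp, lam_site):
    """Energy matrix plus penalties; binary variable x[i, j] has flat index i * N + j."""
    Q = np.array(U_flat, dtype=float)  # copy, so the input matrix is not modified
    const = 0.0
    for i in range(M):  # assignment constraint: element i occupies counts[i] sites
        const += add_squared_penalty(Q, [i * N + j for j in range(N)], counts[i], lam_comp)
    for j in range(N):  # occupancy constraint: exactly one atom on site j
        const += add_squared_penalty(Q, [i * N + j for i in range(M)], 1, lam_site)
    return Q, const


def brute_force_min(Q, const):
    """Exhaustive minimum of x^T Q x + const; only feasible for a handful of variables."""
    best = min(itertools.product((0, 1), repeat=Q.shape[0]),
               key=lambda bits: np.array(bits) @ Q @ np.array(bits))
    x = np.array(best)
    return x, float(x @ Q @ x + const)


if __name__ == "__main__":
    M, N, counts = 2, 3, [2, 1]
    rng = np.random.default_rng(0)
    U_flat = 0.01 * rng.normal(size=(M * N, M * N))  # small illustrative couplings
    Q, const = build_hqubo(U_flat, M, N, counts, lam_comp=10.0, lam_site=10.0)
    x, energy = brute_force_min(Q, const)
    print(x.reshape(M, N), energy)
```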

Constructing the hQUBO in Eq. (12) requires knowledge of the interaction coefficients Uijkl in the EPI model. The factorization machine (FM), introduced by Rendle et al.62, is a regression model designed to learn interactions between sparse features. Because its mathematical form matches that of a QUBO, the second-order FM is often employed as the surrogate model for mapping original problems to the QUBO formulation63. We train a second-order FM regression model on DFT-calculated energies and retrieve its linear and interaction coefficients. These give the coefficients Uijkl, from which we construct the EPI energy model in Eq. (5). The entropy in Eq. (8) and the constraints are then added to build the QUBO model in Eq. (12). Moreover, physical intuition suggests that atomic pairs at different lattice sites show distinct interaction behaviors. We therefore select FFM, an extension of FM, to capture these different pair interactions by assigning each of the N lattice sites to an individual field (see the Methods section for a detailed discussion of FM and FFM).
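A second-order FM evaluated on binary inputs is exactly a quadratic form, so its trained parameters translate directly into QUBO coefficients. Specifically, Q_ii = w_i and Q_ij = ⟨v_i, v_j⟩ for i < j, with the bias w_0 as a constant offset. The NumPy sketch below checks this identity on random parameters. It uses a plain FM with illustrative values rather than the xLearn-trained FFM of this work.

```python
import itertools

import numpy as np


def fm_predict(w0, w, V, x):
    """Second-order FM: w0 + sum_i w_i x_i + sum_{i<j} <v_i, v_j> x_i x_j,
    using Rendle's O(n k) rewriting of the pairwise sum."""
    xv = x @ V
    pair = 0.5 * (np.sum(xv ** 2) - np.sum((x ** 2) @ (V ** 2)))
    return w0 + w @ x + pair


def fm_to_qubo(w, V):
    """Upper-triangular QUBO matrix with Q_ii = w_i and Q_ij = <v_i, v_j> for i < j."""
    Q = np.triu(V @ V.T, k=1)
    Q[np.diag_indices_from(Q)] = w
    return Q


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, k = 6, 3  # 6 binary features, latent dimension 3 (illustrative)
    w0, w, V = rng.normal(), rng.normal(size=n), rng.normal(size=(n, k))
    Q = fm_to_qubo(w, V)
    gap = max(abs(fm_predict(w0, w, V, x) - (w0 + x @ Q @ x))
              for x in map(np.array, itertools.product((0.0, 1.0), repeat=n)))
    print("largest FM-vs-QUBO difference:", gap)
```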

QALO algorithm

The QALO algorithm is built on an active learning framework that integrates FFM, QA and MLP in an iterative loop (Fig. 2). These three components map the target optimization problem to a QUBO, solve the QUBO, and produce new data, respectively. The initial data pool consists of randomly generated alloy configurations whose energies are calculated with DFT (see the Methods section for details of the DFT calculations). An ensemble random sampling strategy57 is used to generate the initial configurations: several supercell sizes are considered simultaneously to provide diverse degrees of atomic disorder in the dataset. Large supercells better capture long-range order, while small supercells help capture short-range order at low computational cost. Both types of order are important in HEAs and affect the mechanical properties of alloys. Including different supercell sizes in the dataset therefore helps the surrogate model establish a more comprehensive Hamiltonian14,64. As the iterations proceed, new data points from each QALO loop are added to the dataset. Without intervention, new data of a single supercell size would come to dominate the pool. To keep the data pool representative of different degrees of disorder, randomly generated configurations of sizes other than the design target are also added, with their energies calculated by the MLP.
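The loop structure described here can be summarized in a few lines. The Python sketch below is a schematic, not the QALO implementation. Its callables `fit_surrogate`, `solve_qubo` and `label` are hypothetical placeholders for FFM training, the hybrid QA solve and SNAP-MLP labeling. For brevity, the sketch omits replenishing the pool with other supercell sizes.

```python
import random


def active_learning_loop(pool, fit_surrogate, solve_qubo, label, n_iter,
                         sample_frac=0.7, seed=0):
    """Surrogate -> optimizer -> labeler loop over a growing data pool.

    pool: list of (configuration, energy) pairs; it is extended in place.
    Returns the lowest-energy (configuration, energy) pair seen."""
    rng = random.Random(seed)
    best = min(pool, key=lambda item: item[1])
    for _ in range(n_iter):
        subset = rng.sample(pool, max(1, round(sample_frac * len(pool))))
        model = fit_surrogate(subset)     # e.g. FFM fit -> QUBO coefficients
        candidate = solve_qubo(model)     # e.g. hybrid QA on the hQUBO
        energy = label(candidate)         # e.g. SNAP-MLP energy, no DFT call
        pool.append((candidate, energy))  # the new point enlarges the data pool
        if energy < best[1]:
            best = (candidate, energy)
    return best
```

For example, consider toy callables that always propose the configuration (0, 0, 0) and score a configuration by its number of 1s. Starting from a two-point pool, five iterations return ((0, 0, 0), 0.0) and leave seven points in the pool.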

The FFM model, which serves as the surrogate model for mapping the original optimization problem to the QUBO form, is trained on a sub-dataset randomly sampled from the whole data pool. The FFM models in this work are implemented with the open-source package “xLearn”65. For each training, 70% of the data points are randomly sampled from the data pool and split into training, validation, and test sets in an 8:1:1 ratio. After the FFM model is trained, the QUBO model is constructed as described in the previous section. QA is then used to solve the hQUBO and find its ground state. Compared with classical algorithms, QA can greatly accelerate optimization for high-dimensional problems. In this work, we use hybrid QA (HQA, powered by the hybrid partitioning algorithm66) to address the challenge of high-dimensional optimization (see the Methods section for details). The MLP then calculates the energy of the QA-predicted configuration, avoiding computationally expensive DFT calculations. The MLP used in this work is the spectral neighbor analysis potential (SNAP)67, trained on the entire data pool (see the Methods section for details of the SNAP model and its training). Because the MLP usually has high accuracy and strong interpolation ability, it does not need to be retrained in every iteration. Retraining is performed only when the root mean squared error (RMSE) of the energy on a small test set (randomly sampled from the data pool) exceeds a preset threshold (1.0 eV in this work).
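The data handling in this step can be sketched as follows, assuming the data pool is a plain Python list; the helper names are hypothetical. `split_pool` draws 70% of the pool and splits it 8:1:1. `needs_retraining` applies the 1.0 eV RMSE criterion for refitting the MLP.

```python
import math
import random


def split_pool(pool, frac=0.7, ratios=(8, 1, 1), seed=0):
    """Randomly draw `frac` of the pool, then split it into train/validation/test by `ratios`."""
    rng = random.Random(seed)
    subset = rng.sample(pool, round(frac * len(pool)))  # random order, no repeats
    n = len(subset)
    n_train = n * ratios[0] // sum(ratios)
    n_val = n * ratios[1] // sum(ratios)
    return subset[:n_train], subset[n_train:n_train + n_val], subset[n_train + n_val:]


def needs_retraining(predicted, reference, threshold_ev=1.0):
    """True if the RMSE (eV) of MLP predictions on a held-out set exceeds the threshold."""
    rmse = math.sqrt(sum((p - r) ** 2 for p, r in zip(predicted, reference)) / len(reference))
    return rmse > threshold_ev


if __name__ == "__main__":
    train, val, held_out = split_pool(list(range(1000)))
    print(len(train), len(val), len(held_out))  # 560 70 70
```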

The optimization of an HEA lattice should not be limited to finding the most stable atomic arrangement; it should also find the optimal composition of the alloy system. The assignment constraint in Eq. (9) forces the atoms of each type to occupy exactly the number of sites determined by the corresponding composition ci. The solution of an hQUBO built with this constraint is therefore the optimal atomic arrangement for a given elemental composition. If the assignment constraint is relaxed during construction of the hQUBO, however, the solutions returned by the QA module can break the constraint and move to a different elemental composition. This can drive the optimization toward a better composition. Figure 3 shows the design of the QA module in the QALO algorithm: composition is optimized under a relatively loose constraint, and atomic arrangement under a tight one. Within the QA module, the hybrid quantum annealer is called multiple times in series and returns the solution sets of a series of hQUBOs. These sets are denoted Si in Fig. 3, and each contains all solutions from multiple QA shots for the same hQUBO. The assignment constraints of these hQUBOs go from loose to tight as the weighting factor λ1 increases exponentially, as shown in Eq. (13). In Eq. (13), r is the rate at which the constraint weighting factor increases, and λmax is the maximum weighting factor in the loose-to-tight QA deployment. λi is the weighting factor in the i-th HQA run, and n is the total number of HQA calls in one QA module. The optimal composition from each solution is applied to the assignment constraint of the following hQUBO, so both atomic arrangement and composition are optimized within a single QALO iteration.
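Equation (13) is not reproduced here. A natural reading of the definitions, which we assume below, is λ_i = λ_max · r^(i−n) for i = 1, …, n: the weight grows by a factor r per call and reaches λ_max on the last one. The sketch also shows the serial hand-off of composition between calls. Its `solve` is a hypothetical callback standing in for one HQA run on the hQUBO built with weight λ_i.

```python
def lambda_schedule(lam_max, r, n):
    """Loose-to-tight weights lam_i = lam_max * r**(i - n), i = 1..n (assumed form of Eq. (13))."""
    return [lam_max * r ** (i - n) for i in range(1, n + 1)]


def loose_to_tight(solve, composition, lam_max, r, n):
    """Run n solves with an increasing assignment-constraint weight.

    `solve(composition, lam)` is a hypothetical callback that builds an hQUBO with
    target `composition` and weight `lam`, solves it, and returns
    (best_configuration, composition_of_that_configuration)."""
    configuration = None
    for lam in lambda_schedule(lam_max, r, n):
        configuration, composition = solve(composition, lam)  # hand composition forward
    return configuration, composition


if __name__ == "__main__":
    print(lambda_schedule(100.0, 2.0, 4))  # [12.5, 25.0, 50.0, 100.0]
```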

For each call of the QA module, the EPI energy model obtained from the FFM coefficients and the initial composition of the current iteration are passed to the module. A series of hQUBOs ℋi is then constructed from the EPI energy model, with assignment constraints ranging from loose to tight. The D-Wave quantum annealer returns the optimal configurations Si for the current hQUBO and passes the composition of the optimal solution to the construction of the next hQUBO. Finally, the QA module returns both the optimal elemental composition and the atomic configuration.

Optimization of NbMoTaW HEA lattice

The established QALO algorithm is then used to optimize the bulk lattice configuration of the refractory NbMoTaW alloy system. Refractory bcc NbMoTaW exhibits outstanding high-temperature mechanical strength6,10. One of its major limitations, however, is brittleness: it shows limited room-temperature compressive strain32,68,69. Studying the relationship between the configuration of NbMoTaW and its mechanical properties can therefore help develop alloys with better performance. This is especially so because the microscopic atomic arrangement is directly related to short-range order (SRO), which also determines the stability of the lattice structure19.

As mentioned in the previous section, an ensemble random sampling strategy is used to build the initial data pool: configurations of different supercell sizes are randomly generated, and their energies are calculated with DFT. Table 1 shows the sampling scheme used in this work. Four supercell sizes (2 × 2 × 2, 4 × 2 × 2, 4 × 4 × 2 and 4 × 4 × 4) are used, with 200 random configurations drawn for each size. The target lattice for optimization is an 8 × 8 × 8 supercell, chosen to minimize artifacts due to finite-size effects70. During the active learning iterations, new data for the 8 × 8 × 8 supercell are continuously added to the data pool. Random configurations of the other sizes are therefore generated and added after each iteration to keep them represented in the data pool.
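The sizes of the sampled cells, and of the resulting QUBO problems, follow from simple counting. The sketch below assumes that the supercells are replications of the two-atom conventional bcc cell; the text does not state the unit cell. It also assumes that the random configurations are equiatomic. `random_equiatomic` is a hypothetical helper.

```python
import random

SUPERCELLS = [(2, 2, 2), (4, 2, 2), (4, 4, 2), (4, 4, 4), (8, 8, 8)]
ELEMENTS = ("Nb", "Mo", "Ta", "W")
ATOMS_PER_CELL = 2  # conventional bcc cell (assumption)


def n_sites(dims):
    a, b, c = dims
    return ATOMS_PER_CELL * a * b * c


def random_equiatomic(n, rng):
    """Shuffle an equiatomic (when n is divisible by 4) species list over n sites."""
    species = [ELEMENTS[k % len(ELEMENTS)] for k in range(n)]
    rng.shuffle(species)
    return species


if __name__ == "__main__":
    rng = random.Random(0)
    for dims in SUPERCELLS:
        n = n_sites(dims)
        print(dims, n, "sites,", len(ELEMENTS) * n, "binary variables")
    initial_pool = {dims: [random_equiatomic(n_sites(dims), rng) for _ in range(200)]
                    for dims in SUPERCELLS[:4]}  # 200 configurations per DFT size
    print(sum(len(v) for v in initial_pool.values()), "initial configurations")  # 800
```

Under these assumptions, the 8 × 8 × 8 target has 1024 sites and hence 4096 binary variables.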

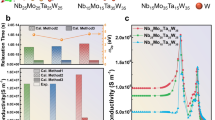

Before starting the active learning iterations, we trained the FFM and SNAP-MLP on a sub-dataset (400 data points randomly sampled from the initial data pool) to benchmark the two surrogate models. Figure 4 shows the parity plots of the FFM and SNAP-MLP models on the training set and on a test set (40 data points from the initial data pool not seen in training). For comparison, the energies are normalized by supercell size. FFM performs well on both the training and test sets, showing that it describes the energy landscape well and interpolates reliably. The MLP closely reproduces the DFT ground truth and is more accurate than FFM on both sets. Using active learning to continuously enlarge the database and improve its representativeness is therefore necessary to raise the accuracy of FFM. This accuracy is essential for a high-quality mapping of the original problem to the QUBO form. In contrast, the MLP does not need frequent retraining because of its high accuracy, which reduces the computational cost of the algorithm.

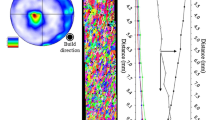

QALO is then used to optimize the configuration of the NbMoTaW lattice. The initial composition is equiatomic, i.e., 25% each of Nb, Mo, W, and Ta. The hyperparameters and setups for each module are listed in Table 2. Figure 5a shows the evolution of the Hamiltonian over 700 QALO iterations, within which the energy of the system converges. At the beginning of the optimization, active learning continuously produces configurations with better atomic arrangements at the equiatomic composition. This results in a slight but gradual decrease in the total lattice energy. After a few cycles, however, QALO begins to explore the phase space under the guidance of the HQA and the loose constraint in the hQUBO, which updates the composition ratios of the four elements. Concurrently, the energy of the solution drops sharply after each composition update. These two modes of energy evolution alternate throughout the subsequent optimization. Finally, the composition of the four elements converges, and the optimization focuses on the atomic arrangement. Figure 5b shows the composition evolution during the iterations. Clear Nb depletion and W enrichment are observed, and the composition converges to Nb 18.0%, Mo 23.6%, Ta 26.5% and W 31.9%. This is similar to the bulk grain composition reported by Li et al.32 (Nb 15.5%, Mo 24.6%, Ta 28.0% and W 31.9%), who simulated the thermodynamically driven Nb depletion and W enrichment by MC/MD. In real alloy synthesis, heat treatments that stabilize the microstructure, such as annealing or homogenization, are also thermodynamically driven, but they are not considered in the present work71.
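To put the converged composition in context, the ideal configurational entropy of Eq. (6) can be evaluated for the three compositions quoted above. This calculation is our arithmetic and assumes ideal random mixing. It shows that the composition shift lowers ΔSconf only modestly: from R ln 4 ≈ 1.386R to about 1.366R for the QALO result, and to about 1.355R for the bulk composition of Li et al.

```python
import math


def config_entropy_over_R(fractions):
    """Ideal configurational entropy per mole in units of R: -sum_i X_i ln X_i."""
    return -sum(x * math.log(x) for x in fractions if x > 0)


compositions = {  # Nb, Mo, Ta, W mole fractions
    "equiatomic": [0.25, 0.25, 0.25, 0.25],
    "QALO (converged)": [0.180, 0.236, 0.265, 0.319],
    "Li et al. bulk grain": [0.155, 0.246, 0.280, 0.319],
}

for name, xs in compositions.items():
    print(f"{name}: {config_entropy_over_R(xs):.3f} R")
# equiatomic: 1.386 R; QALO (converged): 1.366 R; Li et al. bulk grain: 1.355 R
```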

The configuration of HEA systems can be characterized by partial radial pair correlation functions (RDF)32,72. Figure 6 shows the partial RDFs of the optimized structure. They reveal that pair correlations between elements from different groups, such as Mo-Ta, Ta-W, and Nb-Mo, are significantly stronger than those between elements within the same group, such as Nb-Ta and Mo-W73. In addition, we calculate the SRO parameters of the lattice to further quantify the local atomic arrangement. The Warren-Cowley SRO parameter is defined as74

in which \({P}_{k}^{i,j}\) is the probability of finding element j in the k-th neighbor shell of element i, and cj is the concentration of element j. A negative \({\alpha }_{k}^{i,j}\) indicates a tendency of element j to cluster around element i, while a positive \({\alpha }_{k}^{i,j}\) indicates the opposite. If \({\alpha }_{k}^{i,j}=0\) for every shell k, the distribution can be regarded as completely random. Figure 6 also shows the SRO parameters \({\alpha }_{1}^{i,j}\) for each pair of elements, which are consistent with the partial RDF analysis.
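Below is a minimal NumPy sketch of the first-shell parameter α_1^{i,j} on a periodic bcc supercell, using α_1^{i,j} = 1 − P_1^{i,j}/c_j. It is our illustration, not the analysis code used for Fig. 6. Sites are stored as integer coordinates in units of a/2, so the eight first-nearest neighbors sit at offsets (±1, ±1, ±1). As a check, a perfectly ordered B2 (CsCl-type) arrangement of two species gives α_1 = −1 for unlike pairs and +1 for like pairs.

```python
import numpy as np

OFFSETS = [(dx, dy, dz) for dx in (-1, 1) for dy in (-1, 1) for dz in (-1, 1)]


def bcc_sites(L):
    """Integer site coordinates (units of a/2) of a periodic L x L x L bcc supercell (2 L^3 sites)."""
    return [(x, y, z) for x in range(2 * L) for y in range(2 * L) for z in range(2 * L)
            if x % 2 == y % 2 == z % 2]


def first_shell(sites, L):
    """(n_sites, 8) array of first-nearest-neighbour indices with periodic wrapping (needs L >= 2)."""
    index = {s: n for n, s in enumerate(sites)}
    period = 2 * L
    return np.array([[index[((x + dx) % period, (y + dy) % period, (z + dz) % period)]
                      for dx, dy, dz in OFFSETS] for x, y, z in sites])


def warren_cowley_alpha1(types, neighbors, M):
    """alpha_1^{i,j} = 1 - P_1^{i,j} / c_j; every one of the M species must be present."""
    types = np.asarray(types)
    c = np.bincount(types, minlength=M) / len(types)
    alpha = np.zeros((M, M))
    for i in range(M):
        neigh = types[neighbors[types == i]].ravel()  # species of all neighbours of i-sites
        P = np.bincount(neigh, minlength=M) / len(neigh)
        alpha[i] = 1.0 - P / c
    return alpha


if __name__ == "__main__":
    L = 2
    sites = bcc_sites(L)
    b2 = [x % 2 for x, _, _ in sites]  # corner sites -> species 0, body centres -> species 1
    print(warren_cowley_alpha1(b2, first_shell(sites, L), 2))
```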

One of the major advantages of HEAs is their superior mechanical properties, which depend on composition and atomic configuration. We perform MD simulations at 300 K with the MLP to study the uniaxial stress-strain responses of three NbMoTaW configurations (Fig. 7). The first is a randomly generated equiatomic configuration. The second is a suboptimal configuration with the converged composition but a random atomic arrangement. The third is the optimized configuration from the QALO algorithm, taken as the solution from the last QALO iteration. Compressive and tensile deformations are applied along the x-axis (see the Methods section for details of the stress-strain simulations). The optimized configuration exhibits much higher moduli under both compression and tension than the randomly generated and suboptimal structures. The QALO results indicate that optimizing the bulk grain lattice leads to distinctive SRO. It also creates regions with varying compositions and local environments formed by elements with strong chemical affinities, which can contribute to the solid solution effect known to enhance the mechanical properties of HEAs18. This result suggests that the proposed algorithm can optimize the HEA structure in terms of both composition and atomic arrangement, yielding structures with excellent mechanical properties and thermodynamic stability. The comparison among the three alloy systems also shows that both composition and configuration optimization matter for HEA properties, highlighting the advantages of our algorithm for large search spaces.
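The moduli compared here can be extracted from simulated stress-strain curves with a linear fit over the small-strain region. The sketch below assumes a 1% strain window; this is our choice, because the fitting range is not stated. It works for tension or compression as long as strain and stress share the same sign convention.

```python
import numpy as np


def elastic_modulus(strain, stress, max_strain=0.01):
    """Slope of a least-squares line through the points with |strain| <= max_strain."""
    strain = np.asarray(strain, dtype=float)
    stress = np.asarray(stress, dtype=float)
    mask = np.abs(strain) <= max_strain
    slope, _intercept = np.polyfit(strain[mask], stress[mask], 1)
    return float(slope)


if __name__ == "__main__":
    # Synthetic curve for illustration only: linear up to 1% strain, then flat.
    strain = np.linspace(0.0, 0.05, 51)
    stress = np.where(strain <= 0.01, 300.0 * strain, 3.0)  # GPa
    print(elastic_modulus(strain, stress))  # close to 300 GPa
```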

Computational efficiency analysis

For comparison with QA, the same lattice optimization problem is also solved with the mixed-integer linear program (MILP) solver Gurobi75 and a simulated annealer76. Both are classical optimizers that are generally more efficient at escaping local minima than traditional MC methods. They run on a classical computer with dual 12-core Intel® Xeon® 2.50 GHz CPUs and 256 GB of RAM. The loose-to-tight deployment strategy (depicted in Fig. 2) is also used to construct the hQUBOs for these two optimizers, so that they optimize both composition and configuration. Table 3 lists the computational time and the minimum configurational energy for the three solvers. The running time is the average wall time of all loose-to-tight optimization modules over the entire QALO optimization. The minimum configurational energy is the MLP-calculated energy of the optimized configuration after convergence. For small problems (2 × 2 × 2 supercell), Gurobi converges faster than simulated annealing and QA. In this regime, the non-negligible charge time on the quantum annealer hardware (including communication and problem embedding time) limits computational efficiency. As the problem grows (4 × 4 × 4 supercell), Gurobi becomes much less efficient, and its results are also worse than those of the two annealing algorithms. At the same time, the advantages of QA in both computational efficiency and global search accuracy begin to emerge. For even larger problems (8 × 8 × 8 supercell), Gurobi and the simulated annealer cannot converge to the optimal solution within 6 hours because of the physical memory limits of the classical computer.
We expect the advantage of QA to become more significant as the optimization problem grows. Hardware limitations, such as the number and connectivity of qubits, still largely restrict problem size. For the HEA problem in this study, however, a 4 × 4 × 4 cell is found to be sufficient to yield a converged per-atom energy for the optimized lattice.
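The sketch below can reproduce the classical baseline on small instances. It is a textbook single-spin-flip Metropolis annealer for E(x) = xᵀQx with a geometric temperature schedule. It is not the simulated annealer benchmarked in Table 3. For clarity rather than speed, it recomputes the full energy after each flip, and its default parameters are arbitrary.

```python
import math
import random
from itertools import product


def qubo_energy(Q, x):
    """E(x) = sum_{i,j} Q[i][j] * x[i] * x[j] for a binary vector x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def simulated_annealing(Q, n_sweeps=500, t_start=10.0, t_end=0.01, seed=0):
    """Metropolis single-bit-flip annealing with a geometric schedule from t_start to t_end.
    Returns the lowest-energy state visited and its energy."""
    rng = random.Random(seed)
    n = len(Q)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = qubo_energy(Q, x)
    best_x, best_e = x[:], e
    for sweep in range(n_sweeps):
        t = t_start * (t_end / t_start) ** (sweep / max(1, n_sweeps - 1))
        for i in range(n):
            x[i] ^= 1  # propose flipping bit i
            e_new = qubo_energy(Q, x)
            if e_new <= e or rng.random() < math.exp((e - e_new) / t):
                e = e_new  # accept the move
                if e < best_e:
                    best_x, best_e = x[:], e
            else:
                x[i] ^= 1  # reject: undo the flip
    return best_x, best_e


if __name__ == "__main__":
    Q = [[-1.0, 2.0, 0.0],
         [0.0, -1.0, 2.0],
         [0.0, 0.0, -1.0]]
    exact = min(product((0, 1), repeat=3), key=lambda bits: qubo_energy(Q, bits))
    print(simulated_annealing(Q), exact)
```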

Discussion

In this work, we explore the use of quantum algorithms for lattice materials design and optimization. The proposed QALO algorithm combines QA with machine learning to search the large materials design space globally through an active learning scheme. It optimizes composition and atomic arrangement simultaneously by deploying QA in a two-tiered optimization strategy. Applied to the refractory NbMoTaW alloy, the algorithm reproduces the W enrichment and Nb depletion in the bulk lattice, and the optimized alloy configuration shows higher mechanical strength. As quantum devices develop, the current optimization framework is expected to improve further. More qubits and more sophisticated quantum computer architectures will allow larger and more complex optimization problems to be solved, greatly expanding the potential applications of QALO.

Methods

DFT calculations

We performed DFT calculations of the total energies of randomly generated HEA configurations using the Perdew–Burke–Ernzerhof (PBE)77 exchange-correlation functional and projector augmented-wave (PAW)78 potentials, as implemented in the Vienna Ab initio Simulation Package (VASP)79. The plane-wave cutoff energy is 520 eV, and a 3 × 3 × 3 k-point mesh is used for the 4 × 4 × 4 supercells. For other supercell sizes, the k-point meshes are scaled inversely with the supercell dimensions, in proportion to the size of their reciprocal lattices. The energy threshold for self-consistency and the force threshold for structure relaxation are 10^−8 eV and 0.02 eV/Å, respectively. The Python Materials Genomics (pymatgen)80 library is used for all structure manipulation and analysis of the DFT calculations. All VASP energies are first normalized by supercell size and then used to construct the datasets.
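The k-point scaling and the stated convergence settings can be written down explicitly. The sketch below is our reading of the text. It scales each mesh dimension inversely with the supercell dimension, anchored at 3 × 3 × 3 for 4 × 4 × 4. It emits only the INCAR tags whose values are given: ENCUT, EDIFF and a negative EDIFFG, which VASP interprets as a force criterion. Other settings (smearing, relaxation algorithm, mesh centering) are not specified and are left out. pymatgen's `pymatgen.io.vasp` module offers `Incar` and `Kpoints` classes for writing such files.

```python
def kpoint_mesh(supercell, ref_supercell=(4, 4, 4), ref_mesh=(3, 3, 3)):
    """Scale the k-mesh inversely with supercell size, anchored at 3x3x3 for a 4x4x4 cell."""
    return tuple(max(1, round(k * n_ref / n))
                 for k, n_ref, n in zip(ref_mesh, ref_supercell, supercell))


INCAR_TAGS = {
    "ENCUT": 520,     # plane-wave cutoff, eV
    "EDIFF": 1e-8,    # electronic self-consistency threshold, eV
    "EDIFFG": -0.02,  # negative value: stop ionic relaxation when forces < 0.02 eV/Angstrom
}


def incar_text(tags=INCAR_TAGS):
    return "\n".join(f"{key} = {value}" for key, value in tags.items())


if __name__ == "__main__":
    for cell in [(2, 2, 2), (4, 2, 2), (4, 4, 2), (4, 4, 4)]:
        print(cell, kpoint_mesh(cell))  # (6, 6, 6), (3, 6, 6), (3, 3, 6), (3, 3, 3)
    print(incar_text())
```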

SNAP potential and LAMMPS calculations

The SNAP model67 expresses the energies and forces of a collection of atoms as a function of the coefficients of the bispectrum of the atomic neighbor density function. For the linear SNAP model, the energy of atom i is expressed as81:

where \({B}_{k}^{i}\) is the k-th bispectrum component of atom i, and \({\beta }_{k}^{{\mu }_{i}}\) is the corresponding linear coefficient that depends on \({\mu }_{i}\), which is the SNAP element of atom i. Similarly, the force on each atom j can be written as a weighted sum over the derivatives of the bispectrum components of each atom i:

The bispectrum coefficients B are given by32,67,81:

where \({C}_{{j}_{1}{m}_{1}{j}_{2}{m}_{2}}^{{jm}}\) and \({C}_{{j}_{1}{m}_{1}^{{\prime} }{j}_{2}{m}_{2}^{{\prime} }}^{j{m}^{{\prime} }}\) are Clebsch-Gordan coupling coefficients82, and \({u}_{m,{m}^{{\prime} }}^{j}\) are the coefficients of four-dimensional hyperspherical harmonics extracted from the Fourier expansion of the atomic density functions (see Thompson et al.67 for more details of the SNAP descriptor). SNAP is employed because it can be trained much more efficiently than other MLPs without losing accuracy.
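For reference, the linear SNAP energy, force and bispectrum expressions described above are usually written as follows in the SNAP literature (Thompson et al.67 and the LAMMPS `compute sna/atom` documentation). We reproduce that standard form; the notation of this work may differ slightly. Here K is the number of bispectrum components, and β_0^{μ_i} is a per-element reference energy. Each B_k^i corresponds to one index triple (j_1, j_2, j) up to j_max.

```latex
E_i = \beta_0^{\mu_i} + \sum_{k=1}^{K} \beta_k^{\mu_i} B_k^i,
\qquad
\mathbf{F}_j = -\sum_{i=1}^{N} \sum_{k=1}^{K} \beta_k^{\mu_i} \frac{\partial B_k^i}{\partial \mathbf{r}_j},
\\[6pt]
B_{j_1 j_2 j} = \sum_{m_1, m_1' = -j_1}^{j_1} \sum_{m_2, m_2' = -j_2}^{j_2} \sum_{m, m' = -j}^{j}
\left(u^{j}_{m, m'}\right)^{*}
C^{jm}_{j_1 m_1 j_2 m_2}\, C^{jm'}_{j_1 m_1' j_2 m_2'}\,
u^{j_1}_{m_1, m_1'}\, u^{j_2}_{m_2, m_2'}
```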

In this work, we used the LAMMPS package83 and the FitSNAP package84 to calculate the bispectrum coefficients for all the training structures67. The key hyperparameters for training a SNAP model are the cutoff radius Rcut for the bispectrum computation and the maximum order jmax of the bispectrum coefficients; their values are listed in Table 2.
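Because the linear SNAP energy is a sum of per-atom terms that are linear in \({\beta }_{k}^{{\mu }_{i}}\), fitting the coefficients to DFT energies reduces to linear least squares. The sketch below illustrates this with NumPy on synthetic data: random numbers stand in for bispectrum components, only energies (not forces) are fitted, and no weighting or regularization is applied. It is not how FitSNAP is invoked; the helper `design_row` and all numerical values are hypothetical.

```python
import numpy as np


def design_row(species, descriptors, elements):
    """One energy row of a linear-SNAP design matrix.

    species: length-N sequence of element symbols in one structure.
    descriptors: (N, K) array of per-atom bispectrum components B_k^i.
    For each element the row holds [number of atoms, sum of B_k over those atoms],
    matching E = sum_i (beta_0^{mu_i} + sum_k beta_k^{mu_i} B_k^i).
    """
    species = np.asarray(species)
    blocks = []
    for el in elements:
        mask = species == el
        blocks.append(np.concatenate(([mask.sum()], descriptors[mask].sum(axis=0))))
    return np.concatenate(blocks)


rng = np.random.default_rng(0)
elements = ["Nb", "Mo", "Ta", "W"]
K, n_atoms, n_structures = 5, 16, 60

# Hypothetical "true" coefficients; random numbers stand in for real bispectra.
beta_true = rng.normal(size=len(elements) * (K + 1))
rows, energies = [], []
for _ in range(n_structures):
    species = rng.choice(elements, size=n_atoms)
    B = rng.normal(size=(n_atoms, K))
    row = design_row(species, B, elements)
    rows.append(row)
    energies.append(row @ beta_true)

A = np.vstack(rows)
beta_fit, *_ = np.linalg.lstsq(A, np.array(energies), rcond=None)
print("max |beta_fit - beta_true| =", np.abs(beta_fit - beta_true).max())
```

With noise-free synthetic energies, the least-squares solution recovers the generating coefficients. A real fit would also include force rows built from the derivatives of the bispectrum components and would weight the different data groups.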

All MD simulations in this work were also performed with LAMMPS. The undistorted structures are relaxed in the NPT ensemble at 300 K and zero pressure. For the stress–strain simulations, the undistorted structures are first enlarged to a simulation box of about 40 × 40 × 40 Å³ and then relaxed for 2 ns. Uniaxial tensile and compressive deformations are applied to the relaxed structures along the x-direction at a strain rate of 5 × 10⁸ s⁻¹ in the NPT ensemble at 1 atm and 300 K. The timestep is 1 fs for these simulations.
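The deformation protocol fixes the number of MD steps through simple arithmetic: at 5 × 10⁸ s⁻¹ and a 1 fs timestep, each step adds an engineering strain of 5 × 10⁻⁷. The sketch below computes step counts and the number of periodic replicas needed to reach a box edge of about 40 Å. The final strain of 20% and the example supercell edge are hypothetical values chosen for illustration.

```python
# Protocol values quoted in the text.
TIMESTEP_S = 1e-15     # 1 fs
STRAIN_RATE = 5e8      # s^-1, engineering strain rate
RELAX_TIME_S = 2e-9    # 2 ns relaxation
TARGET_BOX_A = 40.0    # approximate box edge after enlargement, in angstrom


def replicas_per_direction(supercell_edge_a: float) -> int:
    """Periodic copies per direction that bring a cubic supercell closest to ~40 A."""
    return max(1, round(TARGET_BOX_A / supercell_edge_a))


def steps_to_strain(final_strain: float) -> int:
    """MD steps needed to reach a given engineering strain at constant strain rate."""
    strain_per_step = STRAIN_RATE * TIMESTEP_S   # 5e-7 per 1 fs step
    return round(final_strain / strain_per_step)


relax_steps = round(RELAX_TIME_S / TIMESTEP_S)
print("relaxation steps:", relax_steps)
print("steps to 20% strain (hypothetical end point):", steps_to_strain(0.20))
print("replicas for a hypothetical 12.8 A supercell:", replicas_per_direction(12.8))
```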

FM and QUBO problem

FM is a machine learning model that extends traditional linear regression models by incorporating interactions between different input features62. The 2nd order FM can be written as:
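
\[\hat{y}(\mathbf{x})=\omega_{0}+\sum_{i=1}^{n}\omega_{i}x_{i}+\sum_{i=1}^{n}\sum_{j=i+1}^{n}\langle v_{i},v_{j}\rangle x_{i}x_{j}\tag{18}\]
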
where \({x}_{i}\) is the i-th feature of the input vector, \({\omega }_{0}\) and \({\omega }_{i}\) are the bias and linear coefficients, and \(\langle {v}_{i},{v}_{j}\rangle\) is the inner product of the latent vectors associated with features i and j.
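Evaluated literally, the pairwise term of the FM costs O(kn²) for n features and latent dimension k. Rendle's reformulation of that term as \(\frac{1}{2}\sum_{f=1}^{k}[(\sum_{i}v_{i,f}x_{i})^{2}-\sum_{i}v_{i,f}^{2}x_{i}^{2}]\) reduces the cost to O(kn). The NumPy sketch below implements both forms on a random binary input so they can be compared; parameter values are arbitrary, and this is not the training code used in the study.

```python
import numpy as np


def fm_predict_naive(x, w0, w, V):
    """Second-order FM evaluated literally: O(k n^2)."""
    n = len(x)
    pair = sum(float(V[i] @ V[j]) * x[i] * x[j]
               for i in range(n) for j in range(i + 1, n))
    return w0 + float(w @ x) + pair


def fm_predict(x, w0, w, V):
    """Same model via the identity
    sum_{i<j} <v_i, v_j> x_i x_j = 1/2 * sum_f [(sum_i v_if x_i)^2 - sum_i v_if^2 x_i^2],
    which costs O(k n).
    """
    s = V.T @ x                 # (k,) sum_i v_{i,f} x_i
    s2 = (V ** 2).T @ (x ** 2)  # (k,) sum_i v_{i,f}^2 x_i^2
    return w0 + float(w @ x) + 0.5 * float(np.sum(s ** 2 - s2))


rng = np.random.default_rng(1)
n, k = 12, 4                                    # number of features, latent dimension
w0, w, V = 0.3, rng.normal(size=n), rng.normal(size=(n, k))
x = rng.integers(0, 2, size=n).astype(float)    # binary input, as in lattice encodings
print(fm_predict_naive(x, w0, w, V), fm_predict(x, w0, w, V))
```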

However, because FM assigns a single latent vector to each feature, that one vector must describe the feature's interactions with all other features. This simple form can fail to capture more complex, context-dependent interactions85, such as atomic pair interactions in a lattice, which depend on the sites involved. FFM extends FM by giving each feature multiple latent vectors, one for each field with which it interacts. The 2nd order FFM can be written as86:
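
\[\hat{y}(\mathbf{x})=\omega_{0}+\sum_{i=1}^{n}\omega_{i}x_{i}+\sum_{i=1}^{n}\sum_{j=i+1}^{n}\langle v_{i,f_{j}},v_{j,f_{i}}\rangle x_{i}x_{j}\tag{19}\]
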
where \({f}_{i}\) and \({f}_{j}\) denote the fields of features i and j, respectively, and \({v}_{i,{f}_{j}}\) represents the latent vector of feature i when it interacts with a feature from field \({f}_{j}\). For a lattice with N sites and M element types, each of the N × M features has N latent vectors, one for each of the N fields, so that the second-order coefficients of the FFM can describe spatially dependent atomic pair interactions. This flexibility comes at a cost: with latent dimension k, the number of latent parameters grows from N·M·k in FM to N²·M·k in FFM.
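The sketch below shows one way to evaluate a second-order FFM on a lattice configuration. It assumes a one-hot encoding in which feature s·M + m is 1 when site s holds element m, and it assumes that the field of each feature is its lattice site, which gives N fields; both choices, and the helper names, are illustrative rather than taken from the authors' code. Because only N features are nonzero in a one-hot encoding, the pairwise sum runs over active features only.

```python
import numpy as np


def one_hot_lattice(config, n_elements):
    """Encode an assignment site -> element index as an (N*M,) binary vector.

    Feature s*M + m is 1 when site s holds element m.
    """
    config = np.asarray(config)
    x = np.zeros(len(config) * n_elements)
    x[np.arange(len(config)) * n_elements + config] = 1.0
    return x


def ffm_predict(x, w0, w, V, field_of):
    """Second-order FFM.

    V has shape (n_features, n_fields, k); V[i, f] is the latent vector of feature i
    when it interacts with a feature from field f. Only nonzero features contribute
    to the pairwise sum, so the loop runs over active features only.
    """
    active = np.flatnonzero(x)
    pair = 0.0
    for a, i in enumerate(active):
        for j in active[a + 1:]:
            pair += float(V[i, field_of[j]] @ V[j, field_of[i]]) * x[i] * x[j]
    return w0 + float(w @ x) + pair


N, M, k = 8, 4, 3                          # sites, elements, latent dimension
field_of = np.repeat(np.arange(N), M)      # assumption: field of feature (s, m) is site s
rng = np.random.default_rng(2)
w0, w = 0.0, rng.normal(size=N * M)
V = rng.normal(size=(N * M, N, k))         # N latent vectors per feature
x = one_hot_lattice(rng.integers(0, M, size=N), M)
y = ffm_predict(x, w0, w, V, field_of)
print(f"FFM latent parameters: {V.size} (an FM with the same k would have {N * M * k})")
```

The printed counts make the parameter growth explicit: each feature stores N latent vectors instead of one.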

QUBO problems are a class of optimization problems that involve finding the binary variable assignment that minimizes a quadratic objective function87. Mathematically, the QUBO problem can be written as:

\[\min_{\mathbf{x}\in\{0,1\}^{N}}\;\mathbf{x}^{\mathsf{T}}Q\,\mathbf{x}\tag{20}\]

where x is a binary vector with N components and Q is the N × N matrix of QUBO coefficients. QUBO and factorization machines (FM and FFM) are compatible because both are quadratic functions of binary variables: since \({x}_{i}^{2}={x}_{i}\) for \({x}_{i}\in \{0,1\}\), the linear FM/FFM coefficients map onto the diagonal of Q, the pairwise coefficients map onto its off-diagonal entries, and the constant bias does not affect the minimizer (compare Eqs. (18)–(20)). FM and FFM are therefore commonly used to map a general combinatorial optimization problem onto QUBO form44,46.
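The FM-to-QUBO correspondence can be checked directly. In the sketch below, the linear FM coefficients are placed on the diagonal of an upper-triangular Q (valid because \({x}_{i}^{2}={x}_{i}\) for binary variables) and the latent inner products fill the upper off-diagonal entries; the constant bias is dropped because it does not change the minimizer. Brute-force enumeration of a 6-variable instance stands in for the annealer. For an FFM, the same construction would use \(\langle {v}_{i,{f}_{j}},{v}_{j,{f}_{i}}\rangle\) as the off-diagonal entries. All parameters are random and hypothetical.

```python
import itertools
import numpy as np


def fm_to_qubo(w, V):
    """Upper-triangular QUBO matrix Q with x^T Q x = FM(x) - w0 for binary x.

    Off-diagonal: Q_ij = <v_i, v_j> (i < j). Diagonal: Q_ii = w_i, because
    x_i^2 = x_i for binary variables, so linear terms fold onto the diagonal.
    """
    Q = np.triu(V @ V.T, k=1)
    Q[np.diag_indices(len(w))] = w
    return Q


def fm_predict(x, w0, w, V):
    """Second-order FM evaluated literally."""
    n = len(x)
    pair = sum(float(V[i] @ V[j]) * x[i] * x[j] for i in range(n) for j in range(i + 1, n))
    return w0 + float(w @ x) + pair


rng = np.random.default_rng(3)
n, k, w0 = 6, 3, 0.5
w, V = rng.normal(size=n), rng.normal(size=(n, k))
Q = fm_to_qubo(w, V)

# Brute force: the QUBO minimizer is the FM surrogate's minimizer.
candidates = [np.array(b, dtype=float) for b in itertools.product([0, 1], repeat=n)]
x_best = min(candidates, key=lambda x: x @ Q @ x)
print("surrogate minimum:", fm_predict(x_best, w0, w, V), "at", x_best.astype(int))
```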

Quantum annealing

Quantum annealing is a quantum analog of classical simulated annealing that exploits quantum tunneling to explore energy landscapes efficiently and locate the global minimum (as shown in Fig. 8a)39. Whereas simulated annealing relies on thermal fluctuations to climb over energy barriers, quantum annealing can tunnel through tall, narrow barriers, and on such landscapes it can converge to the ground state (the global minimum of the energy landscape) more readily than simulated annealing. In quantum annealing, the system is initialized in the lowest-energy eigenstate of an initial Hamiltonian whose ground state is easy to prepare. The quantum state then evolves adiabatically as the Hamiltonian is gradually transformed into the target problem Hamiltonian. If this evolution is slow compared with the inverse square of the minimum energy gap between the ground and first excited states, the adiabatic theorem88,89,90 ensures that the system remains in the instantaneous ground state throughout the transformation and therefore ends in the ground state of the problem Hamiltonian91.
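The adiabatic picture above can be illustrated with a small closed-system simulation in NumPy. The sketch assumes the common transverse-field driver \(-\sum_{i}{\sigma }_{i}^{x}\), a linear schedule H(s) = (1 − s)H_driver + sH_problem, and a hypothetical 3-variable QUBO; it ignores noise, temperature and hardware details, so it shows only the principle, not the behaviour of a D-Wave QPU. The state starts in the ground state of the driver and, when the total annealing time is long compared with the inverse square of the minimum gap, ends concentrated on the QUBO minimizer.

```python
import itertools
import numpy as np


def adiabatic_evolution(Q, total_time=200.0, steps=5000):
    """Closed-system simulation of H(s) = (1 - s) H_driver + s H_problem, s: 0 -> 1."""
    n = Q.shape[0]
    dim = 2 ** n
    bitstrings = list(itertools.product([0, 1], repeat=n))
    # Problem Hamiltonian is diagonal: one QUBO energy per computational basis state.
    h_problem = np.diag([np.array(b, dtype=float) @ Q @ np.array(b, dtype=float)
                         for b in bitstrings])
    # Transverse-field driver -sum_i X_i; its ground state is the uniform superposition.
    X, I = np.array([[0.0, 1.0], [1.0, 0.0]]), np.eye(2)
    h_driver = np.zeros((dim, dim))
    for q in range(n):
        op = np.array([[1.0]])
        for j in range(n):
            op = np.kron(op, X if j == q else I)
        h_driver -= op
    psi = np.full(dim, 1.0 / np.sqrt(dim), dtype=complex)  # ground state of H(0)
    dt = total_time / steps
    for step in range(steps):
        s = (step + 0.5) / steps  # midpoint of each time slice
        evals, evecs = np.linalg.eigh((1 - s) * h_driver + s * h_problem)
        psi = evecs @ (np.exp(-1j * evals * dt) * (evecs.conj().T @ psi))
    return bitstrings, np.abs(psi) ** 2


# Toy 3-variable QUBO (hypothetical) whose unique minimum is x = (1, 0, 1).
Q = np.array([[-2.0, 1.5, 0.0],
              [0.0, -2.0, 1.5],
              [0.0, 0.0, -2.0]])
bitstrings, probs = adiabatic_evolution(Q)
best = bitstrings[int(np.argmax(probs))]
print("most likely readout:", best, "probability:", round(float(probs.max()), 3))
```

Shortening `total_time` in this sketch tends to lower the probability of reading out the minimizer, which is the diabatic failure mode that the adiabatic condition guards against.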

D-Wave Systems pioneered the development of commercial quantum annealers. To be solved on a D-Wave system, a problem must be formulated as an Ising model or, equivalently, a QUBO model. Such a model can be represented by a graph whose nodes are the variables and whose edges are the pairwise couplings, while the quantum processing unit (QPU) is a lattice of qubits connected in a fixed hardware graph, such as the Chimera graph92,93 (as shown in Fig. 8b) used by earlier D-Wave quantum annealers; the Advantage systems use the more densely connected Pegasus graph. The nodes and edges of the objective-function graph are mapped onto the qubits and couplers of the hardware graph by a minor-embedding process94,95,96,97.
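The equivalence between the QUBO and Ising forms follows from the substitution \({x}_{i}=(1+{s}_{i})/2\) with \({s}_{i}\in \{-1,+1\}\). The sketch below applies it to an upper-triangular Q and verifies by enumeration that both forms give the same energy for every assignment. It is a hand-written illustration; D-Wave's Ocean `dimod` package offers a comparable `qubo_to_ising` utility for dictionary-based models.

```python
import itertools
import numpy as np


def qubo_to_ising(Q):
    """Map an upper-triangular QUBO (x in {0,1}) to Ising form (s in {-1,+1}).

    Substituting x_i = (1 + s_i) / 2 gives
    E(s) = sum_i h_i s_i + sum_{i<j} J_ij s_i s_j + offset.
    """
    n = Q.shape[0]
    h, J, offset = np.zeros(n), np.zeros((n, n)), 0.0
    for i in range(n):
        h[i] += Q[i, i] / 2          # Q_ii x_i = Q_ii (1 + s_i) / 2
        offset += Q[i, i] / 2
        for j in range(i + 1, n):    # Q_ij x_i x_j = Q_ij (1 + s_i + s_j + s_i s_j) / 4
            J[i, j] += Q[i, j] / 4
            h[i] += Q[i, j] / 4
            h[j] += Q[i, j] / 4
            offset += Q[i, j] / 4
    return h, J, offset


def ising_energy(s, h, J, offset):
    """Ising energy; J is strictly upper-triangular, so s @ J @ s sums over i < j."""
    return float(h @ s + s @ J @ s + offset)


rng = np.random.default_rng(4)
Q = np.triu(rng.normal(size=(5, 5)))
h, J, offset = qubo_to_ising(Q)
```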

However, because of the limited QPU size, large QUBO problems (such as the 8 × 8 × 8 optimization problem in this work) cannot be embedded directly on the QPU lattice. Hybrid QA algorithms have been proposed as a bridge to larger applications66: the full QUBO problem is partitioned into sub-QUBOs that fit the QPU lattice98, each iteration comprises multiple calls to the quantum annealer to solve the sub-QUBOs, and a solution to the original QUBO problem is assembled from the sub-solutions. In this work, all QA optimizations were run with Leap’s hybrid binary quadratic model solver on the D-Wave quantum annealer (Advantage system 4.1). This solver uses the 5000-qubit Advantage QPU and accepts problems with up to 20,000 fully connected variables.
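The partitioning idea behind hybrid QA can be sketched as block-coordinate descent: choose a block of variables, clamp all others at their current values, fold the couplings to the clamped variables into the block's linear terms, solve the resulting small sub-QUBO, and repeat. In the sketch below, exhaustive search stands in for the QPU call and blocks are chosen at random. This is a simplified, qbsolv-style decomposition written for illustration; it is not the algorithm inside D-Wave's Leap hybrid solver, and the helper names are hypothetical.

```python
import itertools
import numpy as np


def qubo_energy(x, Q):
    return float(x @ Q @ x)


def solve_small_qubo(Q):
    """Exhaustive search; a classical stand-in for one QPU call on a small sub-QUBO."""
    best_x, best_e = None, np.inf
    for bits in itertools.product([0.0, 1.0], repeat=Q.shape[0]):
        x = np.array(bits)
        e = x @ Q @ x
        if e < best_e:
            best_x, best_e = x, e
    return best_x


def sub_qubo(Q, x, idx):
    """QUBO over the variables in idx with all other variables clamped to x."""
    S = (Q + Q.T) / 2                                   # symmetric form, same energy
    rest = np.setdiff1d(np.arange(len(x)), idx)
    sub = S[np.ix_(idx, idx)].copy()
    # Couplings to clamped variables become linear terms; linear terms sit on the
    # diagonal because x_i^2 = x_i. The constant from clamped-clamped terms is dropped.
    sub[np.diag_indices(len(idx))] += 2 * S[np.ix_(idx, rest)] @ x[rest]
    return sub


def decompose_and_solve(Q, block_size=4, sweeps=5, seed=0):
    rng = np.random.default_rng(seed)
    n = Q.shape[0]
    x = rng.integers(0, 2, size=n).astype(float)
    history = [qubo_energy(x, Q)]
    for _ in range(sweeps):
        order = rng.permutation(n)
        for start in range(0, n, block_size):
            idx = np.sort(order[start:start + block_size])
            x[idx] = solve_small_qubo(sub_qubo(Q, x, idx))
            history.append(qubo_energy(x, Q))
    return x, history


rng = np.random.default_rng(5)
Q = np.triu(rng.normal(size=(16, 16)))
x, history = decompose_and_solve(Q)
print("energy per update:", [round(e, 3) for e in history])
```

Because each sub-solve can only lower or keep the total energy, the energy history is non-increasing, but the procedure can stall in a local minimum; production hybrid solvers typically combine such decompositions with additional classical heuristics.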

Data availability

Requests for data and materials should be sent to the corresponding authors or Z.X. (zxu8@nd.edu).

Code availability

The codes for this study are available at https://github.com/ZhihaoXu0313/qalo-kit.git.

References

George, E. P., Raabe, D. & Ritchie, R. O. High-entropy alloys. Nature Reviews Materials 4, 515–534 (2019).

Yeh, J. W. et al. Nanostructured high‐entropy alloys with multiple principal elements: novel alloy design concepts and outcomes. Advanced Engineering Materials 6, 299–303 (2004).

Cantor, B., Chang, I., Knight, P. & Vincent, A. Microstructural development in equiatomic multicomponent alloys. Materials Science and Engineering: A 375, 213–218 (2004).

Gludovatz, B. et al. A fracture-resistant high-entropy alloy for cryogenic applications. Science 345, 1153–1158 (2014).

Youssef, K. M., Zaddach, A. J., Niu, C., Irving, D. L. & Koch, C. C. A novel low-density, high-hardness, high-entropy alloy with close-packed single-phase nanocrystalline structures. Materials Research Letters 3, 95–99 (2015).

Senkov, O. N., Wilks, G. B., Scott, J. M. & Miracle, D. B. Mechanical properties of Nb25Mo25Ta25W25 and V20Nb20Mo20Ta20W20 refractory high entropy alloys. Intermetallics 19, 698–706 (2011).

George, E. P., Curtin, W. A. & Tasan, C. C. High entropy alloys: A focused review of mechanical properties and deformation mechanisms. Acta Materialia 188, 435–474 (2020).

Gao, L. et al. High‐entropy alloy (HEA)‐coated nanolattice structures and their mechanical properties. Advanced Engineering Materials 20, 1700625 (2018).

Li, Z., Zhao, S., Ritchie, R. O. & Meyers, M. A. Mechanical properties of high-entropy alloys with emphasis on face-centered cubic alloys. Progress in Materials Science 102, 296–345 (2019).

Feng, X. et al. Stable nanocrystalline NbMoTaW high entropy alloy thin films with excellent mechanical and electrical properties. Materials Letters 210, 84–87 (2018).

Tsai, M.-H. Physical properties of high entropy alloys. Entropy 15, 5338–5345 (2013).

Karati, A., Guruvidyathri, K., Hariharan, V. & Murty, B. Thermal stability of AlCoFeMnNi high-entropy alloy. Scripta Materialia 162, 465–467 (2019).

Schuh, B. et al. Mechanical properties, microstructure and thermal stability of a nanocrystalline CoCrFeMnNi high-entropy alloy after severe plastic deformation. Acta Materialia 96, 258–268 (2015).

Zhang, Y. et al. Microstructures and properties of high-entropy alloys. Progress in Materials Science 61, 1–93 (2014).

Song, H. et al. Local lattice distortion in high-entropy alloys. Physical Review Materials 1, 023404 (2017).

Lee, C. et al. Lattice distortion in a strong and ductile refractory high-entropy alloy. Acta Materialia 160, 158–172 (2018).

Strumza, E. & Hayun, S. Comprehensive study of phase transitions in equiatomic AlCoCrFeNi high-entropy alloy. Journal of Alloys and Compounds 856, 158220 (2021).

LaRosa, C. R., Shih, M., Varvenne, C. & Ghazisaeidi, M. Solid solution strengthening theories of high-entropy alloys. Materials Characterization 151, 310–317 (2019).

Xiao, L.-Y., Wang, Z. & Guan, J. Optimization strategies of high-entropy alloys for electrocatalytic applications. Chemical Science (2023).

Li, W., Liu, P. & Liaw, P. K. Microstructures and properties of high-entropy alloy films and coatings: a review. Materials Research Letters 6, 199–229 (2018).

Zhou, Y. et al. The understanding, rational design, and application of high-entropy alloys as excellent electrocatalysts: A review. Science China Materials 66, 2527–2544 (2023).

Zhang, Y., Zhuang, Y., Hu, A., Kai, J.-J. & Liu, C. T. The origin of negative stacking fault energies and nano-twin formation in face-centered cubic high entropy alloys. Scripta Materialia 130, 96–99 (2017).

Niu, C., LaRosa, C. R., Miao, J., Mills, M. J. & Ghazisaeidi, M. Magnetically-driven phase transformation strengthening in high entropy alloys. Nature Communications 9, 1363 (2018).

Wang, Y. et al. Computation of entropies and phase equilibria in refractory V-Nb-Mo-Ta-W high-entropy alloys. Acta Materialia 143, 88–101 (2018).

Zhang, L., Qian, K., Huang, J., Liu, M. & Shibuta, Y. Molecular dynamics simulation and machine learning of mechanical response in non-equiatomic FeCrNiCoMn high-entropy alloy. Journal of Materials Research and Technology 13, 2043–2054 (2021).

Jiang, J., Sun, W. & Luo, N. Molecular dynamics study of microscopic deformation mechanism and tensile properties in AlxCoCrFeNi amorphous high-entropy alloys. Materials Today Communications 31, 103861 (2022).

Wang, H., Zhang, L., Han, J. & Weinan, E. DeePMD-kit: A deep learning package for many-body potential energy representation and molecular dynamics. Computer Physics Communications 228, 178–184 (2018).

Behler, J. Perspective: Machine learning potentials for atomistic simulations. The Journal of Chemical Physics 145 (2016).

Li, R. et al. Enhanced thermal boundary conductance across GaN/SiC interfaces with AlN transition layers. ACS Applied Materials & Interfaces (2024).

Chen, C. et al. Accurate force field for molybdenum by machine learning large materials data. Physical Review Materials 1, 043603 (2017).

Widom, M., Huhn, W. P., Maiti, S. & Steurer, W. Hybrid Monte Carlo/molecular dynamics simulation of a refractory metal high entropy alloy. Metallurgical and Materials Transactions A 45, 196–200 (2014).

Li, X.-G., Chen, C., Zheng, H., Zuo, Y. & Ong, S. P. Complex strengthening mechanisms in the NbMoTaW multi-principal element alloy. npj Computational Materials 6, 70 (2020).

Wang, Y., Li, Y., Yin, Z.-q. & Zeng, B. 16-qubit IBM universal quantum computer can be fully entangled. npj Quantum Information 4, 46 (2018).

Gibney, E. D-Wave upgrade: How scientists are using the world’s most controversial quantum computer. Nature 541 (2017).

Aspuru-Guzik, A., Dutoi, A. D., Love, P. J. & Head-Gordon, M. Simulated quantum computation of molecular energies. Science 309, 1704–1707 (2005).

Booth, G. H., Grüneis, A., Kresse, G. & Alavi, A. Towards an exact description of electronic wavefunctions in real solids. Nature 493, 365–370 (2013).

Endo, K., Matsuda, Y., Tanaka, S. & Muramatsu, M. A phase-field model by an Ising machine and its application to the phase-separation structure of a diblock polymer. Scientific Reports 12, 10794 (2022).

Kim, S. et al. A review on machine learning-guided design of energy materials. Progress in Energy (2024).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Physical Review E 58, 5355 (1998).

Sandt, R. & Spatschek, R. Efficient low temperature Monte Carlo sampling using quantum annealing. Scientific Reports 13, 6754 (2023).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Ajagekar, A., Humble, T. & You, F. Quantum computing based hybrid solution strategies for large-scale discrete-continuous optimization problems. Computers & Chemical Engineering 132, 106630 (2020).

P. dos Santos, L. C. et al. Elastic energy driven multivariant selection in martensites via quantum annealing. Physical Review Research 6, 023076 (2024).

Kitai, K. et al. Designing metamaterials with quantum annealing and factorization machines. Physical Review Research 2, 013319 (2020).

Guo, J., Kitai, K., Jippo, H. & Shiomi, J. Boosting the quality factor of Tamm structures to millions by quantum inspired classical annealer with factorization machine. arXiv preprint arXiv:2408.05799 (2024).

Kim, S. et al. High-performance transparent radiative cooler designed by quantum computing. ACS Energy Letters 7, 4134–4141 (2022).

Kim, S. et al. Quantum annealing-aided design of an ultrathin-metamaterial optical diode. Nano Convergence 11, 1–11 (2024).

Hatakeyama-Sato, K., Kashikawa, T., Kimura, K. & Oyaizu, K. Tackling the challenge of a huge materials science search space with quantum-inspired annealing. Advanced Intelligent Systems 3, 2000209 (2021).

Phillips, A. T. & Rosen, J. B. A quadratic assignment formulation of the molecular conformation problem. Journal of Global Optimization 4, 229–241 (1994).

Tamm, A., Aabloo, A., Klintenberg, M., Stocks, M. & Caro, A. Atomic-scale properties of Ni-based FCC ternary, and quaternary alloys. Acta Materialia 99, 307–312 (2015).

Chen, W. et al. A map of single-phase high-entropy alloys. Nature Communications 14, 2856 (2023).

Zunger, A., Wei, S.-H., Ferreira, L. & Bernard, J. E. Special quasirandom structures. Physical Review Letters 65, 353 (1990).

Kikuchi, R. A theory of cooperative phenomena. Physical Review 81, 988 (1951).

Sanchez, J. M., Ducastelle, F. & Gratias, D. Generalized cluster description of multicomponent systems. Physica A: Statistical Mechanics and its Applications 128, 334–350 (1984).

van de Walle, A. & Ceder, G. Automating first-principles phase diagram calculations. Journal of Phase Equilibria 23, 348 (2002).

Liu, X., Zhang, J., Eisenbach, M. & Wang, Y. Machine learning modeling of high entropy alloy: the role of short-range order. arXiv preprint arXiv:1906.02889 (2019).

Zhang, J. et al. Robust data-driven approach for predicting the configurational energy of high entropy alloys. Materials & Design 185, 108247 (2020).

Jaynes, E. T. Gibbs vs Boltzmann entropies. American Journal of Physics 33, 391–398 (1965).

Tilley, D. (IOP Publishing, 1980).

Gao, M. C., Yeh, J.-W., Liaw, P. K. & Zhang, Y. High-entropy alloys: fundamentals and applications. (Springer, 2016).

Takeuchi, A. Mixing entropy of exact equiatomic high-entropy alloys formed into a single phase. Materials Transactions 61, 1717–1726 (2020).

Rendle, S. in 2010 IEEE International conference on data mining. 995-1000 (IEEE).

Wilson, B. A. et al. Machine learning framework for quantum sampling of highly constrained, continuous optimization problems. Applied Physics Reviews 8 (2021).

Guo, W. et al. Local atomic structure of a high-entropy alloy: an X-ray and neutron scattering study. Metallurgical and Materials Transactions A 44, 1994–1997 (2013).

Ma, C. xLearn, https://github.com/aksnzhy/xlearn (2019).

Raymond, J. et al. Hybrid quantum annealing for larger-than-QPU lattice-structured problems. ACM Transactions on Quantum Computing 4, 1–30 (2023).

Thompson, A. P., Swiler, L. P., Trott, C. R., Foiles, S. M. & Tucker, G. J. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. Journal of Computational Physics 285, 316–330 (2015).

Sun, B. et al. Promoted high-temperature strength and room-temperature plasticity synergy by tuning dendrite segregation in NbMoTaW refractory high-entropy alloy. International Journal of Refractory Metals and Hard Materials 118, 106469 (2024).

Pozuelo, M. & Marian, J. In-situ observation of ‘chemical’ strengthening induced by compositional fluctuations in Nb-Mo-Ta-W. Scripta Materialia 238, 115750 (2024).

Kresse, G. & Furthmüller, J. Efficiency of ab-initio total energy calculations for metals and semiconductors using a plane-wave basis set. Computational Materials Science 6, 15–50 (1996).

Huaizhi, Q. et al. Effect of heat treatment time on the microstructure and properties of FeCoNiCuTi high-entropy alloy. Journal of Materials Research and Technology 24, 4510–4516 (2023).

He, Q. et al. Understanding chemical short-range ordering/demixing coupled with lattice distortion in solid solution high entropy alloys. Acta Materialia 216, 117140 (2021).

Miracle, D. B. & Senkov, O. N. A critical review of high entropy alloys and related concepts. Acta Materialia 122, 448–511 (2017).

de Fontaine, D. The number of independent pair-correlation functions in multicomponent systems. Journal of Applied Crystallography 4, 15–19 (1971).

Gurobi Optimization LLC. Gurobi optimizer reference manual. (2020).

Bertsimas, D. & Tsitsiklis, J. Simulated annealing. Statistical Science 8, 10–15 (1993).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Physical Review Letters 77, 3865 (1996).

Blöchl, P. E. Projector augmented-wave method. Physical Review B 50, 17953 (1994).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Physical Review B 54, 11169 (1996).

Ong, S. P. et al. Python Materials Genomics (pymatgen): A robust, open-source python library for materials analysis. Computational Materials Science 68, 314–319 (2013).

Wood, M. A. & Thompson, A. P. Extending the accuracy of the SNAP interatomic potential form. The Journal of Chemical Physics 148 (2018).

Alex, A., Kalus, M., Huckleberry, A. & von Delft, J. A numerical algorithm for the explicit calculation of SU(N) and SL(N, C) Clebsch–Gordan coefficients. Journal of Mathematical Physics 52 (2011).

Plimpton, S. Fast parallel algorithms for short-range molecular dynamics. Journal of Computational Physics 117, 1–19 (1995).

Rohskopf, A. et al. FitSNAP: Atomistic machine learning with LAMMPS. Journal of Open Source Software 8, 5118 (2023).

Juan, Y., Lefortier, D. & Chapelle, O. in Proceedings of the 26th International Conference on World Wide Web Companion. 680-688.

Juan, Y., Zhuang, Y., Chin, W.-S. & Lin, C.-J. in Proceedings of the 10th ACM conference on recommender systems. 43-50.

Punnen, A. P. The quadratic unconstrained binary optimization problem. (Springer International Publishing, 2022).

Jansen, S., Ruskai, M.-B. & Seiler, R. Bounds for the adiabatic approximation with applications to quantum computation. Journal of Mathematical Physics 48 (2007).

Lidar, D. A., Rezakhani, A. T. & Hamma, A. Adiabatic approximation with exponential accuracy for many-body systems and quantum computation. Journal of Mathematical Physics 50 (2009).

Cheung, D., Høyer, P. & Wiebe, N. Improved error bounds for the adiabatic approximation. Journal of Physics A: Mathematical and Theoretical 44, 415302 (2011).

Hauke, P., Katzgraber, H. G., Lechner, W., Nishimori, H. & Oliver, W. D. Perspectives of quantum annealing: Methods and implementations. Reports on Progress in Physics 83, 054401 (2020).

Vert, D., Sirdey, R. & Louise, S. in Proceedings of the 16th ACM international conference on computing frontiers. 226-229.

Dash, S. A note on QUBO instances defined on Chimera graphs. arXiv preprint arXiv:1306.1202 (2013).

Klymko, C., Sullivan, B. D. & Humble, T. S. Adiabatic quantum programming: minor embedding with hard faults. Quantum Information Processing 13, 709–729 (2014).

Hamilton, K. E. & Humble, T. S. Identifying the minor set cover of dense connected bipartite graphs via random matching edge sets. Quantum Information Processing 16, 94 (2017).

Goodrich, T. D., Sullivan, B. D. & Humble, T. S. Optimizing adiabatic quantum program compilation using a graph-theoretic framework. Quantum Information Processing 17, 1–26 (2018).

Okada, S., Ohzeki, M., Terabe, M. & Taguchi, S. Improving solutions by embedding larger subproblems in a D-Wave quantum annealer. Scientific Reports 9, 2098 (2019).

Booth, M., Reinhardt, S. P. & Roy, A. Partitioning optimization problems for hybrid classical/quantum execution. Technical Report, 01-09 (2017).

Acknowledgements

This research was supported by the Quantum Computing Based on Quantum Advantage Challenge Research (grant RS-2023-00255442) through the National Research Foundation of Korea (NRF) funded by the Korean government (Ministry of Science and ICT). This research also used resources of the Oak Ridge Leadership Computing Facility at the Oak Ridge National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC05-00OR22725. The authors would also like to thank the Notre Dame Center for Research Computing for supporting all the simulations in this work. Notice: This manuscript has in part been authored by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the U.S. Government retains a non-exclusive, paid up, irrevocable, world-wide license to publish or reproduce the published form of the manuscript, or allow others to do so, for U.S. Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-publicaccess-plan).

Author information

Contributions

Z.X., E.L. and T.L. conceived the idea; Z.X. designed and programmed the QALO algorithm for HEA systems; W.S. and S.K. helped with the implementation of the FM and QA modules; Z.X. wrote most parts of the manuscript; all authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xu, Z., Shang, W., Kim, S. et al. Quantum annealing-assisted lattice optimization. npj Comput Mater 11, 4 (2025). https://doi.org/10.1038/s41524-024-01505-1

DOI: https://doi.org/10.1038/s41524-024-01505-1
