Abstract

First-principles Monte Carlo (MC) simulations at finite temperatures are computationally prohibitive for large systems due to the high cost of quantum calculations and poor parallelizability of sequential Markov chains in MC algorithms. We introduce scalable Monte Carlo at eXtreme (SMC-X), a generalized checkerboard algorithm designed to accelerate MC simulation with arbitrary short-range interactions, including machine learning potentials, on modern accelerator hardware. The GPU implementation, SMC-GPU, harnesses massive parallelism to enable billion-atom simulations when combined with machine-learning surrogates of density functional theory (DFT). We apply SMC-GPU to explore nanostructure evolution in two high-entropy alloys, FeCoNiAlTi and MoNbTaW, revealing diverse morphologies including nanoparticles, 3D-connected NPs, and disorder-stabilized phases. We quantify their size, composition, and morphology, and simulate an atom-probe tomography (APT) specimen for direct comparison with experiments. Our results highlight the potential of large-scale, data-driven MC simulations in exploring nanostructure evolution in complex materials, opening new avenues for computationally guided alloy design.

Similar content being viewed by others

Introduction

A central goal of computational materials science is to predict the physical properties of materials using only fundamental inputs such as atomic species and physical constants. A widely adopted strategy towards this goal is the direct solution of the quantum equations governing electron dynamics: the Schrödinger equation for non-relativistic cases and the Dirac equation for relativistic cases. However, the complexity of quantum many-body interactions necessitates approximations. For such a purpose, density functional theory (DFT)1 has emerged as a particularly successful approach, which approximates intractable many-body effects through an exchange-correlation function within a single-body framework. This approximation significantly enhances computational efficiency, enabling the routine prediction of ground-state physical properties for systems with several hundred atoms. However, for systems requiring more than thousands of atoms to simulate, such as nanodefects, non-stoichiometric compounds, and complex solid-solution alloys, conventional DFT methods become prohibitively expensive due to their intrinsic O(N3) scaling behavior with system size. A further complication arises when considering finite-temperature effects2. Even for relatively small systems, rigorously accounting for temperature via ensemble averaging in statistical mechanics necessitates the evaluation of an enormous number of configurations, making DFT-based finite-temperature simulations prohibitively expensive. For example, a direct thermodynamic simulation of the CuZn alloy using a 250-atom supercell requires the calculation of 600,000 DFT energies3, a task demanding some of the world’s largest supercomputers.

One common strategy to accelerate atomistic simulations is to replace computationally expensive electronic structure calculations with efficient atomistic models. From the perspective of renormalization group theory4,5,6, the atomistic models can be interpreted as the effective model obtained by integrating out the electronic degrees of freedom, which substantially reduces the computational cost. Indeed, traditional empirical models, such as the embedded atom model (EAM) and cluster expansion methods, have been widely employed for large-scale thermodynamic simulations. However, traditional empirical models are limited in terms of functional form, which restricts their generalization capability and predictive accuracy. On the other hand, the advent of machine learning (ML) for atomistic systems7,8,9,10 has introduced a new paradigm to the construction of effective atomistic models11,12,13,14,15. Instead of being predefined, the parameters in ML atomistic models are automatically determined by training from high-quality first-principles datasets, therefore rendering it possible for the model to automatically capture complex interatomic interactions16,17,18,19. Well-trained ML models are typically orders of magnitude faster than DFT methods, while can still retain their high accuracy10,20,21. This renders it possible to carry out high-accuracy atomistic simulations using supercells containing millions of atoms22,23,24,25, far exceeding the thousands of atoms limits of conventional DFT approaches.

However, most machine learning potentials have been developed primarily to accelerate molecular dynamics (MD) simulations8,9,22,23,26,27, with relatively limited applications in Monte Carlo (MC) simulations17,28,29,30. This is notable given that MC represents one of the two cornerstone methods in atomistic simulations, alongside MD31. A key challenge lies in the intrinsic sequential updating nature of widely used MC algorithms, such as the Metropolis algorithm. The MC trials are generally attempted site by site, which hinders large-scale parallelization. This is in stark contrast to MD, where all the atoms are updated simultaneously in a single step. The sequential updating nature of MC quickly becomes the bottleneck as the system size grows, which undermines the efficiency advantage of ML atomistic models when integrating the two. On the other hand, for many interesting phenomena32,33,34, such as order-disorder transitions in chemically complex materials, MC simulations remain the only viable approach due to their sampling efficiency. Consequently, developing highly scalable MC algorithms that overcome the limitations of sequential updating is of paramount importance for realizing the potential of ML atomistic models.

A widely adopted technique to address the parallelization challenge in Monte Carlo (MC) simulations is the checkerboard algorithm, originally developed for the Ising model with nearest-neighbor interactions. The combination of the Ising model and checkerboard algorithm presents great parallel opportunities at the core level, and has been successfully applied across various accelerators, including GPUs35,36, GPU tensor cores37, TPUs38, and ASICs39. Extending the checkerboard algorithm to non-Ising models poses greater challenges for achieving efficient parallelism. One of the earliest such efforts was by ref. 40 for the Lennard–Jones potential. Later, J. Anderson and collaborators proposed an extension for off-lattice hard-disk systems41,42. However, a key limitation of these efforts is their restriction to pairwise interactions, making them unsuitable for machine-learned potentials (MLPs), which often involve many-body interactions. For general short-range interactions, B. Sadigh and co-authors introduced the Scalable Parallel Monte Carlo (SPMC) algorithm43, which supports higher-order interactions such as those in cluster expansion models. Their implementation was later integrated into the hybrid MC-MD framework in the LAMMPS package44 and has been widely adopted for use with MLPs, including models like SNAP45 and MTP46. Nevertheless, the original SPMC implementation was designed for CPU architectures, not GPUs. Moreover, it relies on a static domain decomposition scheme, which limits the parallel scalability. Finally, the practice of confining the local trial moves to non-interacting domains produces weak spatial correlations that can slow down the approach to equilibrium43.

To overcome the above challenges, we introduce the Scalable Monte Carlo at eXtreme (SMC-X) method. Compared to previous methods summarized in Table 1, SMC-X is unique in that it is designed for two targets: (1) efficient implementation on accelerators, (2) support for arbitrary short-range interactions, such as those found in machine-learned potentials (MLPs). The key innovation in SMC-X is the introduction of the local-interaction zone (LIZ) within the link-cell (LC) strategy (a checkerboard variant), which enables efficient execution on accelerators. Although SMC-X shares conceptual similarities with the SPMC method43, it avoids the key limitations of that approach mentioned above. In SMC-X, the LIZ + LC region is dynamically centered on the atoms updated in each mini-sweep, which brings two major advantages: (1) The parallelism scales linearly with the number of atoms, far exceeding the core-limited scalability of SPMC, and making it well-suited for high-throughput accelerators such as GPUs; (2) All sites are treated uniformly, maintaining equivalence with serial Monte Carlo updates (aside from update order, which does not affect detailed balance) and avoiding the spatial correlation issues inherent in SPMC.

To demonstrate the capability of SMC-X in tackling challenging materials science problems that were previously intractable due to limitations in spatial and temporal scales, we employ SMC-X to study the nanoparticles in high-entropy alloys (HEAs)47,48. HEA is a class of chemically complex materials that have received significant attention due to their exceptional mechanical properties. These properties include overcoming the traditional strength-ductility trade-off49,50,51, attributed to phenomena such as chemical short-range order34,52,53, nanoprecipitates50, and nanophases54. Understanding these features necessitates large-scale MC simulations. Beyond mechanical properties, the size and morphology of nanostructures in HEAs also offer promising opportunities for catalysis, sparking widespread interest in recent years55,56,57. As a general algorithm, SMC-X can be applied to different accelerators. In this work, we tailored the parallelization strategies in SMC-X for efficient execution on GPUs, and refer to this implementation as SMC-GPU. Specifically, we apply SMC-GPU to investigate the nanostructure evolution in the Fe29Co29Ni28Al7Ti7 and MoNbTaW HEAs, employing two simple machine learning energy models using the local short-range order parameters33,58,59 as input features. We demonstrate the excellent efficiency and scalability of the SMC-X method, which enables the simulation of atomistic systems exceeding one billion atoms using a single GPU (graphic processing unit). Our results reveal a rich diversity of interesting nanoscale phenomena, including nanoparticle (NP), 3D-connected NPs, and disorder-protected nanophases. We quantitatively analyze the size, composition, and morphology of the nanostructures, which align well with available experimental results obtained with atom-probe tomography (APT) and electron microscopy. Finally, our results reveal that the intricate nanoscale interplay of order and disorder in high-entropy alloys (HEAs) stems from the combined effects of chemical complexity and temperature, offering valuable guidance for alloy design.

Results

Performance of the SMC-X algorithm

The SMC-X algorithm is a generalization of the checkerboard algorithm, as explained in Method “Implementation of SMC-GPU”. The key insight lies in rendering sequential Monte Carlo (MC) trials independent by partitioning the system into sufficiently large link-cells, thereby isolating the influence of individual MC trial moves. To simplify the explanation, a schematic of the link-cell for the 2D square lattice is shown in Fig. 1a. Each site is labeled by two indices (iC, iA), where iC represents different link-cells and iA represents the different atoms within the link-cell. For the sake of discussion, let us assume that the atoms have only nearest-neighbor interactions. In such a case, the MC updates of the atoms with the same iA index but different iC indexes are independent of each other. This obviously presents a parallelism opportunity. For instance, consider atom (1, 13). During MC update, atom (1, 13) can swap with any of its nearest neighbors (1, 12), (1, 14), (1, 8), and (1, 18), which are colored as yellow in Fig. 1a. Due to the short interaction range (nearest-neighbor), these moves would have no impact on calculating the energy changes for the swap moves of atom (iC, 13) (other red sites in Fig. 1a), and the degree of parallelism is the number of cells nC. We denote the yellow and green sites surrounding each red site in Fig. 1 as a local-interaction zone (LIZ), which is a name inspired by the locally self-consistent multiple scattering (LSMS) method60,61. In other words, for any two sites, as long as the MC trial of one site does not affect the energies of the LIZ of the other site, then these two sites are independent. It is easy to see that the above discussion based on the 2D square lattice can be extended to the case of a 3D crystal. Furthermore, in the SMC-X method, a domain decomposition scheme is introduced to distribute the lattice among multiple GPUs, which further enhances the achievable system size, as illustrated in Fig. 1b. Generally, to determine the energy change resulting from the MC swap trial at site i, the local energies of each site within the local-interaction zone of site i must be evaluated. Additionally, chemical environment information extending beyond the LIZ is required for the energy change calculation, as illustrated by the LIZ+ region in Fig. 1c. Note that for a pair-interaction model, the above discussion can be simplified since the total energy changes can be calculated from the local energies of the two sites involved in the swap trial. As a result, the speed of the effective pair-interaction (EPI) model is faster than the generalized nonlinear model by approximately a factor of 50, as shown in Fig. 1d.

a Illustrate the SMC-X method with a 2D square lattice, in which each site is denoted by two indices (iC, iA), where iC represents different link-cells, and iA represents the different atoms within the nA atom cell. b The whole chemical configuration is distributed on multiple GPUs, and the atoms near the boundary need to be communicated between GPUs nA times in every MC sweep. c A 2D view of the fcc or bcc lattice, site 1 (red) can swap with each of its nearest neighboring sites (yellow), and the green sites represent the ones that the local energies can be affected. The yellow and green sites, together with the centering atom, form the LIZ. The chemical environment with LIZ+ are needed to calculate the energy change due to a swap trial. d A log-log plot of computation time vs the system size to illustrate the speedup ratio of the SMC-X method as compared to DFT (MuST-KKR), linear-DFT (LSMS), and GNN (Allegro). The measured values for SMC-X are signified as stars and ideal scaling is assumed for all lines.

To illustrate the strength of our method, we compare the theoretical speed of the SMC-X method with other schemes to integrate MC with high-accuracy energy prediction methods, such as DFT, linear-DFT, and GNN (graph neural networks), as shown in Fig. 1d. Note that the lines are drawn by extrapolating from a data point using ideal scaling behavior. The actual computational speeds of the various methods are inherently influenced by many factors, such as hardware, software implementations, material systems, and computational parameters. Therefore, the discussion presented here is intended solely to provide a theoretical estimation of their relative performance in terms of orders of magnitude. The stars in the figure represent the measured values of the SMC-X method, and the lines show the ideal scaling behaviors, which are explained in Method “Theoretical speedup of SMC-X vs traditional methods”. From Fig. 1d, we see that for a one million atom system, we have the speedup S = 1025, as highlighted in Fig. 1d. We can also see that for a nonlinear model, such as the qSRO model introduced in the following section, the speedup will be reduced by a factor of 50, due to the aforementioned necessity of evaluating the local energies of each site within the LIZ. If we increase the system size to one billion atoms, then the speedup of SMC-X with respect to DFT will be further increased by a factor of 109 to reach 1034, as highlighted in Fig. 1d. This analysis clearly demonstrates the exceptional performance of the SMC-X method for large-scale MC simulations.

In addition to theoretical estimations, we compare our work with selected previous studies, as summarized in Table 2. It can be seen that our work represents the largest system size achieved in atomistic MC simulations at ab initio accuracy. Such capability enables us to directly study large nanostructures comprising millions of atoms, in contrast to previous works that are limited to short-range order (SRO), long-range order (LRO), and small nanoprecipitates. To contextualize the simulation scale of our work, we extend our search beyond HEAs and MC to identify the largest atomistic simulation system reported in the literature. A recent study achieved this with a system of 29 billion atoms, which employs 35 million CPU cores on one of the world’s largest supercomputers (comprising 90,000 computing nodes, ~84% of the entire system). In contrast, constrained by computational resources, our work utilized only two NVIDIA H800 GPUs, yet already achieved a simulation scale of one billion atoms. Note that this already surpasses the 100-million-atom system size of the 2020 Gordon-Bell Prize winner22. Furthermore, the comparison presented above demonstrates that, through careful design of the Monte Carlo algorithm to fully exploit its inherent parallelization potential, MC simulations can rival molecular dynamics (MD) methods in terms of scalability, which are traditionally viewed as advantageous over MC methods in simulation scale. Finally, we highlight that the excellent computational efficiency of the SMC-X method not only presents a success in pushing the simulation length scale forward, but also provides a particularly important tool for understanding the vital role of nanostructures51,62 in the exceptional mechanical properties in HEAs50,57,63, such as overcoming the trade-off in strength and ductility50,62.

Energy model

In this work, we focus on two types of energy models: the effective pair-interaction (EPI) model58,64, and a nonlinear model that uses the local-SRO parameter as the input feature. The EPI model is a generalized Ising model constructed via machine learning from the DFT data. By automatically selecting the interaction range via Bayesian information criterion (BIC)58, the EPI model has demonstrated that it can predict the DFT-calculated configuration energies of a series of HEAs with very high accuracy33. The local-SRO model, proposed in this work, generalizes the EPI model by adding a quadratic term. As will be shown in the following discussion, this simple nonlinear term has a profound impact on the computing pattern, and serves as a prototypical representation for machine learning models. A detailed description of the two energy models are present in Method “The EPI and qSRO model”.

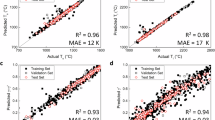

Using a train-validation splitting of 70 and 30% for the DFT dataset described in Method “DFT dataset”, we evaluated the accuracy of the second-nearest-neighbor (2nd-NN) EPI model and the loca_SRO model, and the results are shown in Fig. 2a–d. Both models are trained with the Adam optimizer and back-propagation algorithm. It can be seen that both models demonstrate very high accuracy. For the EPI model, the validation root mean square error (RMSE) is 0.1819 mRy/atom, or ~2.5 meV/atom, which is well within quantum chemical accuracy (~3.16 mRy/atom), as well as smaller than the typical errors of 5–12 meV/atom in the DFT methods65. The qSRO model demonstrates slightly higher accuracy than the EPI model, with a validation RMSE of 0.1643 mRy/atom, which can be attributed to its inclusion of high-order interactions. The high accuracy of the models is also demonstrated in the R2 scores, which are all higher than 0.995. The errors from the train and validation datasets are also very close to each other, indicating the absence of overfitting in the models. For comparison, we notice that this accuracy is higher than that in ref. 29, which is reported as 14.7 meV/atom by applying a similar pair-interaction model in the FeCoNiAlTiB system. We think one reason for the enhanced accuracy is our approach to obtaining the DFT dataset, which combines both random configurations, as well as configurations from Monte Carlo simulations, as proposed in ref. 33 and described in Method “DFT dataset”.

a The training error of the EPI model for FeCoNiAlTi. b The validation error of the EPI model for FeCoNiAlTi (0.7/0.3 train-valid splitting). c The training error of the qSRO (Local_SRO) model for FeCoNiAlTi. d The validation error of the qSRO model for FeCoNiAlTi. e The specific heats CV of FeCoNiAlTi were calculated with different supercell sizes. f The specific heats of MoNbTaW were calculated with a one million-atom supercell.

Using the trained models, we calculated the specific heats CV in the FeCoNiAlTi HEA in temperatures ranging from 50 to 2000 K. The calculation details are given in Method “MC simulation”, and the results are shown in Fig. 2e. It can be seen that there is a sharp order-disorder transition at a low temperature of about 300 K. The sharp peak evolves to a singularity with the increase of the system size, which signifies the occurrence of an order-disorder transition. We also calculated the CV curve for the MoNbTaW refractory HEA. The results in Fig. 2f agree well with the results in ref. 33, which are calculated using a small system of 1000 atoms. Note that the order-disorder transition at lower temperatures (between 250 and 500 K) in the CV curve generally cannot be directly compared with experiments due to kinetic barrier effects. On the other hand, the results at elevated temperatures are more suitable for comparison if experimental data are available, as demonstrated in ref. 34. A comparison of Fig. 2e, f in the temperature range between 500 and 2000 K shows an interesting difference between the two HEAs: The CV curve in Fe29Co29Ni28Al7Ti7 is generally flat but demonstrates clear fluctuation as the temperature changes, indicating the existence of complex second phases or short-range order. By comparison, the CV curve of MoNbTaW in the same temperature region is smooth, but with an obvious order-disorder transition around 1000 K. Note that the exact location of the order-disorder transition temperature depends on the energy model and different values, as having been extensively reported in various theoretical works28,33,66,67.

Nanostructures in Fe29Co29Ni28Al7Ti7

In this section, we report the nanostructure evolution of the Fe29Co29Ni28Al7Ti7 fcc HEA. This alloy has demonstrated exceptional combined strength and ductility in experiments, and such an attractive property has been attributed to the formation of nanoparticles in the FeCoNi matrix50, which hinder the motion of dislocations. In the experiment, the alloy sample is homogenized at 1150 °C (1423 K) for 2 h and aged at 780 °C (1053 K) for 4 h50. Using the qSRO model, we first simulated the chemical phase changes of the Fe29Co29Ni28Al7Ti7 fcc alloy using a supercell of approximately one million atoms. The lattice dimension is chosen as 72 × 60 × 60; therefore, the total number of atoms is 72 × 60 × 60 × 4 = 1,036,800. We initialize the configurations randomly at 2000 K, then decrease the simulation temperature by 50 K each time until 1000 K, which is approximately the temperature at which the samples are aged. At each temperature, we skip the 4 × 105– 1.5 × 106 MC sweeps before recording the following 20,000 sweeps to calculate the specific heat CV in Fig. 2e. The configurations at 2000 and 1000 K are demonstrated in Fig. 3a–e. From Fig. 3d, e it can be seen that the system is chemically disordered at 2000 K. As the temperature decreases to 1000 K, a nanoparticle (NP) forms in the system. The distribution of elements in the NP, as shown in Fig. 3a, b, reveals the formation of the L12 structure. The two different layers of the L12 nanoparticle can be seen in Fig. 3b, c. This agrees well with the experimental results in ref. 50, as reproduced in Fig. 3f, g, in which the nanoparticles are referred to as multi-component intermetallic nanoparticles (MCINP). Similar results are also reported in ref. 68 for the Fe25Co25Ni25Al15Ti10 HEA, where the fcc phase consisted of a γ Fe-(Co,Ni)-based solid-solution matrix (A1), and coherent primary \({\gamma }^{{\prime} }\) (Ni,Co)3-(Ti,Al)-based intermetallic L12 precipitates. Other than chemical structure, size is another important feature of the nanoparticles. The diameter of the cluster shown in Fig. 3a is about 17.8 nm, which is in agreement with the experimental observation shown in Fig. 3f, g. Moreover, we extract the compositions from the simulation data and show it in Fig. 4g, along with the experiment results in ref. 50, as shown in Fig. 4h. It can be seen that the compositions from the MC simulation generally agree well with experiment, although there are also some differences, such as slightly higher concentration of Ni in MCINP.

a A snapshot of the (001) face at 1000 K. b A perspective 3D snapshot of the configuration at 1000 K, from the signified direction. c Slices of the atomic system to show the nanoparticle, using a step of 10 fcc layers. d A snapshot view of the (001) face at 2000 K. e A 3D view of the configuration at 2000 K. f High-resolution atom maps showing the atomistic distribution within the L12 nanoparticles of the Al7Ti7 alloy. Reproduced from Fig. 2 in ref. 50. g TEM image of the Al7Ti7 alloy showing the nanostructured morphology. Reproduced from Fig. 1 in ref. 50 with reprint permission from Science.

a A histogram for all clusters of sizes larger than 100 atoms. Five clusters of different sizes are selected and shown as vertical dashed lines. b The chemical concentrations of the matrix (cluster_0) and cluster_1, as well as the rest clusters. c–f Snapshots of the [001] face of NiCoFeAlTi at T = 1000 K, with c as the enlarged upper-right corner of (d), and e as the enlarged upper-right corner of (f). c, d Show the different elements and e, f show the atomic local energies. g Compositions of the NP (MCINP) and matrix (MCM) from the 1M atom simulation via sampling along the x direction using a radius of 6.4 nm. h Compositions of the NP (MCINP) and matrix (MCM) from experiment, as reproduced from ref. 50 with permission from Science.

From Fig. 3b, c, we see that the one-million-atom supercell can only accommodate one NP in the FeCoNiAlTi HEA, and larger systems are necessary for a direct comparison of experimental data that contains more than one NP, such as the TEM image in Fig. 3g. To investigate the microstructures of the FeCoNiAlTi HEA at a larger length scale, we employ a 630 × 630 × 630 fcc supercell, in which the total number of atoms is 1,000,188,000. Due to the huge supercell size, we use the faster EPI model rather than the local-SRO model, and the simulation details are specified in Method “MC simulation”. The simulation results for this one-billion-atom system are presented in Figs. 4, 5. To study the size, composition, and morphologies of the nanoparticles, we employ the union-find algorithm to identify the nanoparticles, as detailed in Method “MC simulation”. We refer to all sets of atoms identified by the union-find algorithm as clusters, which can be of sizes ranging from a single isolated atom to the whole matrix phase. We make a histogram of all clusters of sizes larger than 100 atoms in Fig. 4a. The largest one is cluster_0, which is the matrix phase. Cluster_0 has a total of 0.6 billion atoms, and contains only Fe, Co, and Ni, as demonstrated in Fig. 4b. Note that the absence of Al and Ti atoms in cluster_0 is a result of the union-find algorithm, which automatically identifies isolated Al and Ti atoms in cluster_0 as individual clusters of size one. From Fig. 4c, we see that the FeCoNi matrix phase is a disordered A1 structure. The cause of the disorder can be seen from Fig. 4e, f, which shows the local energies of each atom with the color bar. It can be seen that in the matrix phase, the local energies of different atoms are generally close to each other, which means that the Fe, Co, and Ni atoms have no significant site preferences. On the other hand, the nanoparticles, which form L12 structure as shown in Fig. 4c, contain sites with much lower atomic local energies, as shown in Fig. 4e. In fact, the local energy difference is exactly the criteria we make use of to distinguish the matrix and nanoparticles, as described in Method “MC simulation”. The FeCoNi matrix phase is the entropy-stabilized disordered phase, while the L12 phase is the enthalpy-favorable ordered phase. In other words, the occurrence of both disordered A1 structure and ordered L12 structure is a result of the competition between the entropy and enthalpy contributions in the free energy.

a–c 3D snapshot of cluster_4, 3, and 2, respectively. d APT results from fcc grains of FNAT sample (47-h aging at 973 K) showing the distribution and composition of L12 precipitates in fcc matrix. e Same as (d), but for a sample obtained via 4-h aging. Both (d, e) are reproduced from ref. 51. f The APT results of the Fe29Co29Ni28Al7Ti7 HEA, as reproduced from ref. 50. g The simulation result obtained using SMC-X. The blue region shows the NP identified, and the red region shows the matrix phase. h The simulation result obtained with SMC-X. The chemical species are explicitly shown.

To study the size, compositions, and morphologies of the nanoparticles in more detail, we choose four representative nanoparticles, as illustrated in Fig. 4a as cluster_1, 2, 3, and 4, which have 264,792,042, 1,007,916, 102,389, and 20,937 atoms. Their chemical concentrations are also listed in Table 3, which aligns well with the experimental results29. From Fig. 4a, we note that the peak of the histogram is at about 105 atoms, which corresponds to a cluster of 29 atoms, or 10.3 nm in diameter. For cluster_1, a similar calculation gives a value of 22.0 nm in diameter, if we assume that the particles are spherical. However, inspecting the 3D morphology of the clusters reveals that they are actually not simply spheres or ellipsoids, as shown in Fig. 5a–c: While ellipsoid is a good approximation for the smaller cluster_4, the larger cluster_3 and cluster_2 are both comprising of multiple nanoparticles, which indicates that the smaller nanoparticles can merge together to form larger ones, as shown by the dendritic structures at the surface of the nanoparticles. In fact, from Fig. 4a, b, we see that the largest L12 cluster: cluster_1, contains a total of more than 264 million atoms, which accounts for 66% of the atoms in the NP phases. In other words, most of the nanoparticles shown in Fig. 4c–f are actually connected at three-dimension and are parts of cluster_1. Compared to simple NP, we propose that this 3D-connected-NPs (3DCNP) can further hinder the motion of dislocations, which can serve as a strengthening mechanism in these types of HEAs. Verifying the existence of the 3DCNP demands high-precision experimental techniques such as atom-probe tomography (APT). The APT image of Fe29Co29Ni28Al7Ti7 is available in ref. 50, as reproduced in Fig. 5f. However, the image only shows the surface of the sample instead of the 3D chemical structure. Although the theoretical observation of the 3DCNP still requires experimental validation, we contend that its existence is plausible, given the high density of NPs observed in experiment50. Moreover, the APT result of the FNAT alloy51, a medium entropy alloy made up of the Fe, Ni, Al, and Ti elements, seems to support the existence of L12 3DCNP, as shown in Fig. 5d, e. For a direct comparison with the experimental results, we also show the simulation results of the APT sample needle in Fig. 5g, h. It is easy to see that the general shapes of the NPs from simulation resemble the experimental results of FNAT, despite their different chemical compositions. The sizes of the NPs from the simulation is generally smaller than experimental results, which could be due to the relatively limited 105 MC simulation steps. Again, the importance of the SMC-X method is highlighted by the capability to directly simulate an APT needle comprising 10 million atoms. Other than the mechanical properties, the NPs can also have important application in catalysis69, which would be an interesting topic for future research56,70.

Nanostructures in MoNbTaW

In addition to FeCoNiAlTi, we also employ our method to study the nanostructure evolution of a well-studied bcc HEA: MoNbTaW. The size of the supercell is 795 × 795 × 795 × 2 = 1,004,919,750 atoms. The size of the link-cell is 3 × 3 × 3, and the energy model is 2nd-NN EPI. The simulation temperatures decrease from 2000 to 250 K, with a temperature interval of 50 K. For each temperature, we run the simulation for 105 sweeps. The simulation results are shown in Fig. 6. For T = 250 K, we note that there are two types of nanostructures: The smaller ones are mainly made up of Nb and W, and of a feature size of about 10 nm. It can be seen that the distributions of Nb and W elements are relatively random. The shapes of these small nanoparticles are also more diverse than that of FeCoNiAlTi. Other than the small nanostructure, there are also larger nanophases of feature sizes of about 100 nm, which are at the same length scale as the supercell. The two nanophases are shown as yellow and purple in the figure. It is easy to see that they are actually the MoTa B2 phases, which is in agreement with previous results28,33,71. The difference between the yellow and the purple nanophases is that one of them has Ta at the center position of the perfect bcc lattice (denote as B2- in Fig. 6), while the other has Mo at the center site (denote as B2+ in Fig. 6). These two nonophases can be understood as the spontaneous symmetry breaking of an Ising-like model along either the plus or the minus directions. At T = 250 K, a chemical grain boundary made up of Nb and Ta can be clearly seen between the two nanophases, and the thickness of the chemical grain boundary is about 3 nm. As the temperature increases to 750 K, it can be seen that the larger nanophases still exist, which means that those features are more stable against thermal fluctuations. On the other hand, the smaller nanoparticles vanish, along with the chemical grain boundary between the yellow and purple nanophases. The stability of the nanophases presents an interesting phenomenon, which we propose to explain as follows: at low temperatures, the disordered A2 boundary acts as a protective barrier, preventing the formation of energetically unfavorable AA or BB nearest-neighbor pairs when the two nanophases come into contact. However, as the temperature increases, the disordered chemical grain boundary will dissolve into B2 phases, which introduces additional chemical disorder. This disorder reduces the energy cost at the boundary, allowing the nanostructures to persist even in the absence of the protective chemical grain boundary. It would be intriguing if this “disorder-protected nanophase” revealed in the simulations could be directly verified by future experiments.

Discussion

The method innovations are summarized as follows: We introduce a scalable Monte Carlo (SMC-X) method that overcomes the parallelization bottlenecks inherent in conventional Monte Carlo (MC) simulations. By generalizing the checkerboard algorithm through the introduction of dynamical link cells, our approach reduces the computational complexity of a conventional MC sweep from O(N2) to O(N). (Note that algorithms such as Swendsen-Wang72 and Wang-Landau73 do not necessarily require sequential site-by-site updates, but are not general-purpose replacements for simpler MC algorithms like Metropolis.) Our method is not only applicable to pairwise interactions but also extends to nonlinear local interactions through the introduction of a local-interaction zone, making it highly compatible with machine learning (ML)-enhanced atomistic models, and overcomes the drawbacks in prior methods, as discussed in the introduction section and illustrated in Table 1. The GPU-accelerated implementation of the SMC-X method enables the simulation of atomistic systems exceeding a billion atoms while maintaining the accuracy of density functional theory (DFT). This unprecedented capability makes it possible for us to directly observe the nanostructures in HEAs, which can be of more than millions of atoms. Understanding the nanoscale evolution of NPs is vital for understanding the origin of the superb mechanical properties in HEAs.

The applications of SMC-X are summarized below: Using the SMC-GPU code, we investigated the size, composition, and morphologies of the NPs in the fcc Fe29Co29Ni28Al7Ti7 HEA using a one-billion-atom supercell. This large supercell not only makes it possible to directly observe the nanostructure evolution in HEAs, but also enables the direct comparison with experimental results from TEM and APT. The size and composition of the nanoparticles generally align well with available experimental findings50. Moreover, we find that seemingly separate NPs may, in fact, be connected, highlighting the intricate nature of high-entropy alloys (HEAs) and prompting a reconsideration of traditional grain size measurement methods. We further investigated the bcc MoNbTaW high-entropy alloy (HEA) using a one-billion-atom supercell. The results reveal the formation of hierarchical nanostructures: The smaller NPs consist of a disordered A2 structure comprising Nb and W, with a feature size of approximately 10 nm; The larger nanophases exhibit a B2 structure composed of Ta and Mo, with a feature size of about 100 nm. Interestingly, we find that the Ta-Mo B2 structures decompose into two distinct nanophases, B2+ and B2−, which are separated by a grain boundary enriched with Nb and W. The simulation results suggest that the disordered Nb-W grain boundary acts as a protective barrier for the two nanophases to reduce the enthalpy of the system.

To facilitate better interpretation of the simulation results, we also compare the results with previous studies. Note that the interaction range used in this work is limited to the first and second-nearest neighbors. In contrast, ref. 74 suggests that effective pair interactions (EPIs) can be long-ranged, with contributions from up to 99 coordination shells (\({p}_{\max }\)) required for accurate total energy estimation. However, those EPIs were derived using the EMTO+CPA electronic structure method and are not necessarily equivalent to the EPIs used in this work, which were obtained by regression from direct DFT calculations. In a previous study, Liu et al. investigated the influence of interaction range on model performance. Their findings indicate that the optimal cutoff depends on the training dataset, governed by the well-known bias–variance trade-off. In general, the first two coordination shells capture the most significant contributions to the energy, while extending the range can improve accuracy if supported by a larger DFT dataset. Comparing this work, which uses a cutoff of \({p}_{\max }=2\), to ref. 74, which used \({p}_{\max }=6\), the resulting order-disorder transition temperatures are 1050 and 873 K, respectively. This relatively modest difference supports the adequacy of a short-range model for the current study, given the size of the available DFT dataset.

A further complication is lattice distortion. Note that in this work, lattice distortions are not included in the DFT calculations. This can potentially change the order-disorder transition temperature significantly, as noticed for MoNbTaW28. The lack of lattice relaxation in our DFT data stems from the use of the LSMS method, which, while computationally efficient, does not currently support lattice relaxation. Although the lattice distortions in the HEAs studied here are generally small, their precise impact should be scrutinized in future work. Other than lattice distortion, several other factors can influence phase transitions. A rigorous treatment of these effects requires evaluating all relevant contributions to the Gibbs free energy, including electronic, vibrational (phonon), and configurational terms. When the crystal structure remains unchanged, it is generally reasonable to approximate phase stability using only the configurational term, as chemical disorder predominantly introduces smearing of the Fermi surface and phonon dispersion. Moreover, volume thermal expansion is typically less than 1% between room temperature and 1000 K75, and such a minor change alone is unlikely to significantly affect the phase transition behavior. Nonetheless, a comprehensive evaluation of all relevant contributions is required for a definitive conclusion, which is beyond the scope of this study.

Methods

Theoretical speedup of SMC-X vs traditional methods

The theoretical speedup of the SMC-X method as compared to other methods are estimated as follows: For DFT and linear-DFT, we assume that a 100-atoms SCF calculation takes 1 h, based on our experience with the MuST-KKR code76. The speed of the GNN method (Allegro) is estimated from the Li3PO4 structure of 421,824 atoms in ref. 24. Note that an MC sweep is defined as making an MC trial move over each lattice site. Combining it with the intrinsic O(N3) scaling in the DFT method, the total computational cost of DFT then scales as O(N4), as signified in Fig. 1d. Building on the principles of nearsightedness77, the LSMS method employs an approximation that confines electron scattering to a local-interaction zone. This approximation reduces the computational cost of energy evaluation to linear scaling, therefore the total computational cost for an MC sweep scales approximately as O(N2). For a one-million-atom system, this reduces the computational cost by a factor of 108, as shown in the orange line in Fig. 1d. Despite the enhanced scaling behavior, linear-DFT still requires solving the Schrödinger equations explicitly, which contributes a large prefactor to the total computational cost. By replacing the computationally expensive DFT method with machine learning models such as the GNN model, the computational cost can be further reduced by a factor of 108, as shown in the green line in Fig. 1d. The speedup from the SMC-GPU implementation can be divided into multiple parts: First, the SMC-X parallelization strategy replaces the energy evaluation of the whole system with calculating the energy changes of the LIZ, which is independent of the system size. This strategy reduces the computational complexity from O(N2) to O(N), as illustrated in Fig. 1d. Second, the SMC-X method can simultaneously harness the thousands or tens of thousands of cores in a modern high-performance GPU (e.g., 14,592 FP32 CUDA cores in an NVIDIA H800 GPU). Third, the energy models used in this work are relatively simple compared to the GNN models. Based on the preceding discussion, the total acceleration ratio of the SMC-GPU method relative to a CPU-based DFT method, for a system of N atoms, can be decomposed into contributions from nearsightedness SNS, ML acceleration SML, and SMC-X implementation SSMC−GPU, and written as:

as illustrated in Fig. 1d.

Implementation of SMC-GPU

While there are many parallel opportunities in MC simulation, such as in temperature, and independent runs (see Supplementary Note 3 for detailed discussion), the trial moves are generally challenging to parallelize due to the sequential nature of commonly used updating algorithms such as Metropolis, as pointed out in previous works39,43,78. For a simple nearest-neighbor 2D Ising model, a widely used parallelization scheme is the checkerboard algorithm. In the checkerboard algorithm, the 2D square lattice is divided into two sublattices, one colored black, and the other white. Note that the lattice sites of the same color will not interact with each other; therefore, the sites of one color can be updated simultaneously by fixing the sites of the other color. The checkerboard algorithm is very efficient and has been applied to study the 2D and 3D Ising models on different accelerators, including GPU35,36,79, TPU80, and FPGA81,82.

The checkerboard algorithm cannot be directly applied when the Hamiltonian contains interactions beyond the nearest neighbors. Therefore, it is rarely used for studying real materials, where the interaction range is typically beyond nearest neighbors. For such a purpose, a generalization of the checkerboard algorithm can be employed to harness the move-parallelism opportunity. This method is referred to as a parallelized link-cell algorithm83. In the link-cell algorithm, the lattice is decomposed into nC cells of fixed size acell, which should be larger than the interaction range. With the link-cell algorithm, the serial MC code can be accelerated up to nC times. For a multi-GPU system, the nC cells are evenly distributed on all the GPUs, and ghost boundaries are added to take into account the interactions between atoms from different GPUs, as shown in Fig. 1b.

-

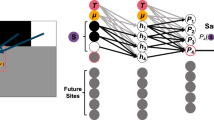

Parallelization implementation: The actual parallelization implementation on GPUs depends on the energy model. For pairwise models such as EPI, the iteration over the iC index is executed in parallel as CUDA threads on the GPU. For more general energy models such as the qSRO model, the iC index is parallelized in the CUDA blocks dimension, and the calculation of the local energies for each site within LIZ is parallelized with the CUDA threads, as demonstrated in the flowchart in Fig. 7.

Fig. 7: A schematic flowchart for the link-cell parallelism algorithm for a machine learning energy model. -

Link-cell size: Using the link-cell algorithm, the one MC sweep (MC trials over every site) is decomposed into nA sequential steps, with each one of nC parallel moves. For instance, for a 300 × 300 × 300 bcc supercell (a total of 3003 × 2 = 54,000,000 atoms), assuming next-to-nearest-neighbor EPI interaction, then the link-cell length can be chosen as 3 × a, where a is the lattice constant. The link-cell size nA is 3 × 3 × 3 × 2 = 54, and the number of link-cell is nC = 1,000,000.

-

Random number generator: An efficient random number generator is important for MC simulation because random numbers are needed for every MC trial, as shown in the fourth and fifth steps in Fig. 7. Our implementation makes use of the NVIDIA CUDA random number generation library (cuRAND), which delivers high-performance GPU-accelerated pseudorandom numbers.

-

Search neighbors: Another important consideration is how to efficiently find the neighboring sites, for which their chemical species need to be read from the memory, as shown in the third step in Fig. 7. In practice, we make use of the lattice structure and directly calculate the indices of the neighbors, as well as the features of the local chemical environment, on-the-fly using a list of the relative positions of the neighboring atoms. This method has a constant time complexity, which is advantageous over the more common practice of using a k-D tree to store the atomic positions and search the neighbors, which has a time complexity of \(O(\log (N))\).

The EPI and qSRO model

In the EPI model, the effective Hamiltonian of the system is made up of chemical pair interactions of the centering atom with neighboring atoms within some cutoff radius. The local chemical environment is specified by \(\overrightarrow{\sigma }=({\sigma }^{0},{\sigma }^{1},\cdots \,,{\sigma }^{{N}_{n}-1})\), which denotes the chemical species of the Nn-th neighboring atoms. The local energy Ei is given by

where ϵ is the uncertainty of the EPI model, V0 is the bias term, same for all sites, \({V}_{i}^{p}\) is a single-site term depending only on the chemical component p of atom i, Vf are the EPI parameters, and πf are the number of pair interactions of type f. The feature index f is actually made up of three parts \((p,{p}^{{\prime} },m)\), representing the element of the local atom, the element of the neighboring atoms, and the coordination shell, respectively. For a system of fixed chemical concentrations, summing up the local energies over all sites, the total energy is then given by

where N is the total number of atoms and \({\Pi }_{m}^{p{p}^{{\prime} }}\) is the proportion of \(p{p}^{{\prime} }\) interaction in the m-th neighboring shell. Note that due to the fixed chemical concentration in the canonical system, the single-site term \({V}_{i}^{p}\) has been absorbed into the constant, and the number of independent EPI parameters is M(M − 1)/2 for an fixed-concentration M-component system, which is the reason for the \({p}^{{\prime} } < p\) requirement. In practice, the EPI parameters are determined via Bayesian regression33 or stochastic gradient descent.

As mentioned, since the EPI model contains only pairwise interactions, the calculation of the energy changes after an MC swap trial is simple: we simply multiply the local energy changes of the two swapping sites by a factor of 2 to take into account the local energy changes of other sites. For more general energy models that contain higher-order/nonlinear interactions, the total energy changes need to be explicitly calculated by adding up the local energies of all sites within the LIZ. As a demonstration of our method for more general machine learning models, here we modify the EPI model by adding a simple quadratic term, and the local energy for each site Ei is:

in which

is the percentage of \(p{p}^{{\prime} }\) interactions for the m’s coordination shell, and \({n}_{m}^{p{p}^{{\prime} }}\) is the number of pairs with index \((m,p,{p}^{{\prime} })\). Note that we limit the quadratic interaction to the same-element pairs to reduce the number of higher-order terms. The third term on the right of Eq. (6) is a l2 regularization of the weights of the quadratic term, reflecting our prior that the higher-order interactions should be a small correction to the EPI model. We name it the qSRO model since \({\Pi }_{m}^{p{p}^{{\prime} }}\) describes the short-range order within the local chemical environment. It should be noted that, unlike EPI, this qSRO model is no longer a linear or even quadratic function of the pairwise interactions. Instead, it can be expressed as a power series (infinite degree polynomial) of the pairwise interactions due to the \({({\pi }_{m}^{p{p}^{{\prime} }})}^{2}\) term. It is easy to see that the nonlinear interactions can also be modeled with neural networks such as the multilayer perceptrons:

However, due to a large number of principal elements, the number of parameters in such an MLP model can easily go beyond the number of data points used in this work (e.g., 220 for FeCoNiAlTi), which leads to a high risk of overfitting. Therefore, in this work we will use the local-SRO model as a representation of general ML energy models, and leave more complex models for future study when significantly larger DFT datasets are available.

DFT dataset

We use the LSMS method60 to calculate the total energy of the system. LSMS is an all-electron electronic structure calculation method, in which the computational cost scales linearly with respect to the number of atoms. For FeCoNiAlTi, we use a 100-atom supercell with a lattice constant of 6.72 Bohr (0.356 nm). We employ a spin-polarized scheme to account for the magnetic interactions in the system. The angular momentum cutoff lmax for the electron wavefunctions is chosen as 3, and the LIZ is chosen as 86 atoms. We used PBE as the exchange-correlation functional. To enhance the representativeness of the DFT data, the total dataset is made up of 120 random generated configurations and 100 configurations from Monte Carlo simulation, as proposed in ref. 33. Therefore, the total number of configurations is 220, with each one comprising 100 atoms.

For MoNbTaW, the DFT dataset has been reported in ref. 33, which is also made up of the random samples and the MC samples. The 704 random samples are calculated with supercells of 64, 128, 256, and 512 atoms. The 72 MC samples are obtained from MC simulations at different temperatures, on a 1000-atom supercell. When calculating the energies of the MoNbTaW system, the lattice constant is set at 6.2 Bohr, and the angular momentum cutoff is chosen as 3. The Barth–Hedin local-density approximation are used as the exchange-correlation functional. The local-interaction zone is maintained at 59 atoms. To adequately capture the effects of heavier elements in the system, the scalar-relativistic equation is used instead of the conventional Schrödinger equation.

MC simulation

The results shown in this work are based on five simulation results using different models and systems, as shown in Table 4. The MC simulation is a simulated annealing process in the canonical ensemble (NVT). The temperature is initialized as 2000 K and then decreases with a step of 50 K. The initial Nsweeps sweeps are discarded before making a measurement or taking a snapshot of the configuration. The number of Nsweeps generally increases as the temperature decreases to account for the lower acceptance ratio of MC moves. For instance, for FeCoNiAlTi_1M_EPI, the number of sweeps is 4 × 105 at 2000 K, then increase to 1.5 × 106 at T = 1000 K. For FeCoNiAlTi_1B_EPI, we evenly decrease the simulation temperature from 2000 to 1000 K using a step of 50 K. For the 1B atom system, we no longer record the MC configurations and run 105 MC sweeps before recording the configuration at T = 1000 K. Note that, generally speaking, the 105 number of MC sweeps is not large enough for a supercell as large as one billion atoms to reach thermal equilibrium, so the simulation is closer to simulated annealing. Nevertheless, this nonequilibrium does not necessarily present as a problem since the actual synthesis of alloys is typically a nonequilibrium process involving a rapid heating-cooling process, in which the phases at high temperatures can be trapped by the kinetic barrier and be maintained at low temperatures.

In order to extract the nanostructures from the simulation results, we employ the union-find algorithm, which uses a tree structure to efficiently manage and manipulate partitions of a set into disjoint subsets. In order to apply the union-find algorithm, we first calculate the local energy of each site and set a threshold of the energy to determine whether two sites belong to the same set (cluster). MC simulations are performed on a workstation with two 80 GB NVIDIA H800 SXM5 GPUs that are connected via 400 GB/s bidirectional bandwidth NVLink. In practice, we find that one GPU is large enough to fit the one-billion-atom system, and the two GPUs are simultaneously used via either temperature parallelization or lattice decomposition, as described in section “Performance of the SMC-X algorithm”. For visualization, we used VESTA84 to generate the 3D crystals and nanoparticles figures.

Data availability

The data supporting the findings of this study, including the simulated configurations in .xyz format, Python scripts for data analysis, and C++ code for nanoparticle extraction, are available at: https://github.com/xianglil/SMCX-Supplementary.

Code availability

Executable binaries or a containerized version of the SMC-GPU code are available from the authors upon reasonable request.

References

Burke, K. Perspective on density functional theory. J. Chem. Phys. https://doi.org/10.1063/1.4704546 (2012).

Ma, D., Grabowski, B., Körmann, F., Neugebauer, J. & Raabe, D. Ab initio thermodynamics of the CoCrFeMnNi high entropy alloy: Importance of entropy contributions beyond the configurational one. Acta Mater. 100, 90–97 (2015).

Khan, S. N. & Eisenbach, M. Density-functional Monte-Carlo simulation of CuZn order-disorder transition. Phys. Rev. B 93, 024203 (2016).

Wilson, K. G. The renormalization group: critical phenomena and the kondo problem. Rev. Mod. Phys. 47, 773–840 (1975).

Sanchez, J. M. Renormalized interactions in truncated cluster expansions. Phys. Rev. B 99, 134206 (2019).

Pawley, G. S., Swendsen, R. H., Wallace, D. J. & Wilson, K. G. Monte Carlo renormalization-group calculations of critical behavior in the simple-cubic ising model. Phys. Rev. B 29, 4030–4040 (1984).

Park, C. W. et al. Accurate and scalable graph neural network force field and molecular dynamics with direct force architecture. npj Comput. Mater. 7, 73 (2021).

Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

Song, K. et al. General-purpose machine-learned potential for 16 elemental metals and their alloys. Nat. Commun. 15, 10208 (2024).

Zeni, C. et al. A generative model for inorganic materials design. Nature https://doi.org/10.1038/s41586-025-08628-5 (2025).

Ko, T. W., Finkler, J. A., Goedecker, S. & Behler, J. Accurate fourth-generation machine learning potentials by electrostatic embedding. J. Chem. Theory Comput. 19, 3567–3579 (2023).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Schütt, K. T. et al. Schnetpack: a deep learning toolbox for atomistic systems. J. Chem. Theory Comput. 15, 448–455 (2019).

Gasteiger, J., Becker, F. & Günnemann, S. Gemnet: Universal directional graph neural networks for molecules. In Proc. 35th International Conference on Neural Information Processing Systems (NeurIPS) 6790–6802 (Curran Associates Inc., 2021).

Ying, C. et al. Do transformers really perform badly for graph representation? In Thirty-Fifth Conference on Neural Information Processing Systems 28877–28888 (Curran Associates Inc., 2021).

Hart, G. L. W., Mueller, T., Toher, C. & Curtarolo, S. Machine learning for alloys. Nat. Rev. Mater. 6, 730–755 (2021).

Liu, X., Zhang, J. & Pei, Z. Machine learning for high-entropy alloys: progress, challenges and opportunities. Prog. Mater. Sci. 131, 101018 (2023).

Zhang, Y.-W. et al. Roadmap for the development of machine learning-based interatomic potentials. Model. Simul. Mater. Sci. Eng. 33, 023301 (2025).

Clausen, C. M., Rossmeisl, J. & Ulissi, Z. W. Adapting OC20-trained equiformerv2 models for high-entropy materials. J. Phys. Chem. C. 128, 11190–11195 (2024).

Chen, C. & Ong, S. P. A universal graph deep learning interatomic potential for the periodic table. Nat. Comput.Sci. 2, 718–728 (2022).

Huang, B., von Rudorff, G. F. & von Lilienfeld, O. A. The central role of density functional theory in the AI age. Science 381, 170–175 (2023).

Jia, W. et al. Pushing the Limit of Molecular Dynamics with Ab Initio Accuracy to 100 Million Atoms with Machine Learning (IEEE Press, 2020).

Deringer, V. L. et al. Origins of structural and electronic transitions in disordered silicon. Nature 589, 59–64 (2021).

Musaelian, A. et al. Learning local equivariant representations for large-scale atomistic dynamics. Nat. Commun. 14, 579 (2023).

Guo, Z. et al. Extending the limit of molecular dynamics with ab initio accuracy to 10 billion atoms. In Proc. 27th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP ’22, 205–218 (Association for Computing Machinery, 2022).

Doerr, S. et al. Torchmd: a deep learning framework for molecular simulations. J. Chem. Theory Comput. 17, 2355–2363 (2021).

Gao, X., Ramezanghorbani, F., Isayev, O., Smith, J. S. & Roitberg, A. E. Torchani: A free and open source pytorch-based deep learning implementation of the ani neural network potentials. J. Chem. Inf. Model. 60, 3408–3415 (2020).

Kostiuchenko, T., Körmann, F., Neugebauer, J. & Shapeev, A. Impact of lattice relaxations on phase transitions in a high-entropy alloy studied by machine-learning potentials. npj Comput. Mater. 5, 55 (2019).

Wang, Z. & Yang, T. Chemical order/disorder phase transitions in nicofealtib multi-principal element alloys: a Monte Carlo analysis. Acta Mater. 285, 120635 (2025).

Xie, J.-Z., Zhou, X.-Y., Jin, B. & Jiang, H. Machine learning force field-aided cluster expansion approach to phase diagram of alloyed materials. J. Chem. Theory Comput. 20, 6207–6217 (2024).

Jiang, D., Xie, L. & Wang, L. Current application status of multi-scale simulation and machine learning in research on high-entropy alloys. J. Mater. Res. Technol. 26, 1341–1374 (2023).

Körmann, F. & Sluiter, M. H. Interplay between lattice distortions, vibrations and phase stability in NbMoTaW high entropy alloys. Entropy http://www.mdpi.com/1099-4300/18/8/403 (2016).

Liu, X. et al. Monte carlo simulation of order-disorder transition in refractory high entropy alloys: a data-driven approach. Comput. Mater. Sci. 187, 110135 (2021).

Yin, J., Pei, Z. & Gao, M. C. Neural network-based order parameter for phase transitions and its applications in high-entropy alloys. Nat. Comput. Sci. 1, 686–693 (2021).

Preis, T., Virnau, P., Paul, W. & Schneider, J. J. Gpu accelerated Monte Carlo simulation of the 2d and 3d ising model. J. Comput. Phys. 228, 4468–4477 (2009).

Block, B., Virnau, P. & Preis, T. Multi-gpu accelerated multi-spin monte carlo simulations of the 2d ising model. Comput. Phys. Commun. 181, 1549–1556 (2010).

Romero, J., Bisson, M., Fatica, M. & Bernaschi, M. High performance implementations of the 2d ising model on gpus. Comput Phys. Commun. 256, 107473 (2020).

Liu, L. et al. High performance monte carlo simulation of ising model on tpu clusters. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis 1–20 (ACM, 2019).

Ortega-Zamorano, F., Montemurro, M. A., Cannas, S. A., Jerez, J. M. & Franco, L. Monte Carlo simulation of the ising model on FPGA. J. Comput. Phys. 237, 224–234 (2013).

Johnson, M. A. Concurrent Computation and Its Application to the Study of Melting in Two Dimensions. Thesis, California Institute of Technology (1986).

Anderson, J. A., Jankowski, E., Grubb, T. L., Engel, M. & Glotzer, S. C. Massively parallel Monte Carlo for many-particle simulations on gpus. J. Comput. Phys. 254, 27–38 (2013).

Anderson, J. A. & Glotzer, S. C. Scalable metropolis Monte Carlo for simulation of hard shapes. Comput. Phys. Commun. 204, 21–30 (2016).

Sadigh, B. et al. Scalable parallel Monte Carlo algorithm for atomistic simulations of precipitation in alloys. Phys. Rev. B 85, 184203 (2012).

Thompson, A. P. et al. Lammps - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022).

Li, X.-G., Chen, C., Zheng, H., Zuo, Y. & Ong, S. P. Complex strengthening mechanisms in the NbMoTaW multi-principal element alloy. npj Comput. Mater. 6, 70 (2020).

Yin, S. et al. Atomistic simulations of dislocation mobility in refractory high-entropy alloys and the effect of chemical short-range order. Nat. Commun. 12, 4873 (2021).

Yeh, J.-W. et al. Nanostructured high-entropy alloys with multiple principal elements: novel alloy design concepts and outcomes. Adv. Eng. Mater. 6, 299–303 (2004).

Cantor, B., Chang, I., Knight, P. & Vincent, A. Microstructural development in equiatomic multicomponent alloys. Mater. Sci. Eng. A 375-377, 213–218 (2004).

Li, Z., Pradeep, K. G., Deng, Y., Raabe, D. & Tasan, C. C. Metastable high-entropy dual-phase alloys overcome the strength-ductility trade-off. Nature 534, 227 (2016).

Yang, T. et al. Multicomponent intermetallic nanoparticles and superb mechanical behaviors of complex alloys. Science 362, 933–937 (2018).

Yang, Y. et al. Bifunctional nanoprecipitates strengthen and ductilize a medium-entropy alloy. Nature 595, 245–249 (2021).

Ferrari, A., Körmann, F., Asta, M. & Neugebauer, J. Simulating short-range order in compositionally complex materials. Nat. Comput. Sci. 3, 221–229 (2023).

He, M., Davids, W. J., Breen, A. J. & Ringer, S. P. Quantifying short-range order using atom probe tomography. Nat. Mater. 23, 1200–1207 (2024).

Xiao, B. et al. Achieving thermally stable nanoparticles in chemically complex alloys via controllable sluggish lattice diffusion. Nat. Commun. 13, 4870 (2022).

Han, X. et al. Nanoscale high-entropy alloy for electrocatalysis. Matter 6, 1717–1751 (2023).

Li, M. et al. High-entropy alloy electrocatalysts go to (sub-)nanoscale. Sci. Adv. 10, eadn2877 (2024).

Yao, Y. et al. High-entropy nanoparticles: synthesis-structure-property relationships and data-driven discovery. Science 376, eabn3103 (2022).

Zhang, J. et al. Robust data-driven approach for predicting the configurational energy of high entropy alloys. Mater. Des. 185, 108247 (2020).

Liu, J. et al. Vase: a high-entropy alloy short-range order structural descriptor for machine learning. J. Chem. Theory Comput. 20, 11082–11092 (2024).

Wang, Y. et al. Order-N multiple scattering approach to electronic structure calculations. Phys. Rev. Lett. 75, 2867–2870 (1995).

Eisenbach, M. et al. LSMS https://www.osti.gov/biblio/1420087 (2017).

Li, Z., Pradeep, K. G., Deng, Y., Raabe, D. & Tasan, C. C. Metastable high-entropy dual-phase alloys overcome the strength–ductility trade-off. Nature 534, 227–230 (2016).

Fu, Z. et al. A high-entropy alloy with hierarchical nanoprecipitates and ultrahigh strength. Sci. Adv. 4, eaat8712 (2018).

Liu, X., Zhang, J., Eisenbach, M. & Wang, Y. Machine learning modeling of high entropy alloy: the role of short-range order. Preprint at arXiv:1906.02889 (2019).

Kirklin, S. et al. The open quantum materials database (oqmd): assessing the accuracy of DFT formation energies. npj Comput. Mater. 1, 15010 (2015).

Huhn, W. P. & Widom, M. Prediction of A2 to B2 phase transition in the high-entropy alloy Mo-Nb-Ta-W. JOM 65, 1772–1779 (2013).

Zhang, E. et al. On phase stability of Mo-Nb-Ta-W refractory high entropy alloys. Int. J. Refract. Met. Hard Mater. 103, 105780 (2022).

Fu, Z. et al. Atom probe tomography study of an Fe25Ni25Co25Ti15Al10 high-entropy alloy fabricated by powder metallurgy. Acta Mater. 179, 372–382 (2019).

Liao, Q. et al. Three-dimensionally connected platinum–cobalt nanoparticles as support-free electrocatalysts for oxygen reduction. ACS Appl. Nano Mater. 8, 3323–3332 (2025).

Zhu, W. et al. Nanostructured high entropy alloys as structural and functional materials. ACS Nano 18, 12672–12706 (2024).

Yao, Y. et al. Nanostructure and dislocation interactions in refractory complex concentrated alloy: From chemical short-range order to nanoscale b2 precipitates. Acta Mater. 281, 120457 (2024).

Swendsen, R. H. & Wang, J.-S. Nonuniversal critical dynamics in monte carlo simulations. Phys. Rev. Lett. 58, 86–88 (1987).

Wang, F. & Landau, D. P. Efficient, multiple-range random walk algorithm to calculate the density of states. Phys. Rev. Lett. 86, 2050–2053 (2001).

Körmann, F., Ruban, A. V. & M. H. S. Long-ranged interactions in bcc NbMoTaW high-entropy alloys. Mater. Res. Lett. 5, 35–40 (2017).

Laplanche, G. et al. Elastic moduli and thermal expansion coefficients of medium-entropy subsystems of the crmnfeconi high-entropy alloy. J. Alloy. Compd. 746, 244–255 (2018).

Liu, X., Wang, Y., Eisenbach, M. & Stocks, G. M. Fully-relativistic full-potential multiple scattering theory: a pathology-free scheme. Comput. Phys. Commun. 224, 265–272 (2018).

Prodan, E. & Kohn, W. Nearsightedness of electronic matter. Proc. Natl Acad. Sci. USA 102, 11635–11638 (2005).

Liang, Y., Xing, X. & Li, Y. A gpu-based large-scale monte carlo simulation method for systems with long-range interactions. J. Comput. Phys. 338, 252–268 (2017).

Lulli, M., Bernaschi, M. & Parisi, G. Highly optimized simulations on single- and multi-gpu systems of the 3d ising spin glass model. Comput. Phys. Commun. 196, 290–303 (2015).

Yang, K., Chen, Y.-F., Roumpos, G., Colby, C. & Anderson, J. High performance Monte Carlo simulation of ising model on tpu clusters. In Proc. International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’19 (Association for Computing Machinery, 2019).

Lin, Y., Wang, F., Zheng, X., Gao, H. & Zhang, L. Monte Carlo simulation of the ising model on fpga. J. Comput. Phys. 237, 224–234 (2013).

Ortega-Zamorano, F., Montemurro, M. A., Cannas, S. A., Jerez, J. M. & Franco, L. FPGA hardware acceleration of monte carlo simulations for the ising model. IEEE Trans. Parallel Distrib. Syst. 27, 2618–2627 (2016).

Yamakov, V. Parallel grand canonical monte carlo (paragrandmc) simulation code. (2016).

Momma, K. & Izumi, F. Vesta 3 for three-dimensional visualization of crystal, volumetric and morphology data. J. Appl. Crystallogr. 44, 1272–1276 (2011).

Heffelfinger, G. S. & Lewitt, M. E. A comparison between two massively parallel algorithms for monte carlo computer simulation: an investigation in the grand canonical ensemble. J. Comput. Chem. 17, 250–265 (1996).

Mick, J. et al. GPU-accelerated gibbs ensemble monte carlo simulations of Lennard-Jonesium. Comput. Phys. Commun. 184, 2662–2669 (2013).

Lepadatu, S. Accelerating micromagnetic and atomistic simulations using multiple GPUs. J. Appl. Phys. 134, 163903 (2023).

Santodonato, L. J., Liaw, P. K., Unocic, R. R., Bei, H. & Morris, J. R. Predictive multiphase evolution in Al-containing high-entropy alloys. Nat. Commun. 9, 4520 (2018).

Wang, X. et al. 29-billion atoms molecular dynamics simulation with ab initio accuracy on 35 million cores of new sunway supercomputer. IEEE Trans. Comput. 74, 1–14 (2025).

Acknowledgements

The work of X. Liu and F. Zhou was supported by the National Natural Science Foundation of China under Grant 12404283. The work of Y. Tian was supported by the National Natural Science Foundation of China under grants 62425101 and 62088102. This work was also supported by the Pengcheng Laboratory Key Project (PCL2021A13) to utilize the computing resources of Pengcheng Cloud Brain. X. Liu gratefully acknowledges Professor Robert Swendsen for his guidance and foundational training in the fascinating field of Monte Carlo simulation during his graduate studies at Carnegie Mellon University.

Author information

Authors and Affiliations

Contributions

X.L. proposed the SMC method, designed the workflow, calculated the DFT data, trained the energy model, interpreted the data, acquired the funding, supervised the work, and wrote the main manuscript text. K.Y. wrote the SMC-GPU code based on X.L.'s prototype, and ran the MC simulations. F.Z. contributed to Figs. 1d, 4a, b and Table 1. Z.P. co-analyzed the data. P.X. co-analyzed the data, acquired the computing resources, and co-supervised the work. Y.T. acquired the funding. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, X., Yang, K., Liu, Y. et al. Revealing nanostructures in high-entropy alloys via machine-learning accelerated scalable Monte Carlo simulation. npj Comput Mater 11, 267 (2025). https://doi.org/10.1038/s41524-025-01762-8

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41524-025-01762-8