Abstract

Wearable technologies enable real-time, continuous, noninvasive data collection, where long-term compliance is essential. The Personalized Parkinson Project (PPP) and the Parkinson’s Progression Markers Initiative (PPMI) utilized the Verily Study Watch. Participants, including people diagnosed with Parkinson’s disease (PD), people with prodromal PD, and healthy controls, were instructed to wear the watch for up to 23 h daily, without data being displayed or reported back to them. Compliance measures and user experiences were evaluated. A centralized support model identified barriers to data collection and enabled proactive outreach. Median daily wear time was 21.9 h for PPP and 21.1–22.2 h per day for PPMI over 2 years. Participants were highly motivated to contribute to PD research. These results highlight strategies for achieving strong engagement without providing individual data. This approach offers valuable insights for study designs where returning data to participants could introduce bias or affect data integrity.

Introduction

Wearable technologies continue to grow in popularity and expand in applicability across multiple fields. The innovation of multi-sensor wearable devices is the ability to collect real-time, continuous measurements in a passive, noninvasive manner1. In clinical studies, data from wearable sensors can potentially act as an objective measure of daily function and varying symptomology. Such information is expected to improve clinical decision making and support self-management and chronic disease management through personalized healthcare approaches2,3. Furthermore, because of their independence from raters, outcome measures derived from wearable sensor devices are expected to have lower measurement error (and thus higher reliability) in comparison to clinical evaluations. It is to be expected that minimizing unwanted variability in outcome measures will allow for more efficient clinical trials4,5,6. To reach these aims, compliance with wearing the device and associated activities is key, especially as participants may be asked to use these devices for weeks, months, or even years.

Surfacing raw device data or processed information directly to participants can be a powerful motivator to maintain compliance: participants receive something in exchange for their efforts7. However, it is very difficult to manage, store, and process raw data in real time or near-real time8, and study protocols may choose to limit this type of data return. Providing feedback on performance is itself an intervention and may confound the assessment of longitudinal changes or of the effectiveness of the intervention being studied. Moreover, when the protocol aims to validate digital endpoints from wearable sensor data, returning unvalidated information to participants could be problematic. Finally, there are concerns that offering feedback on digital data to participants increases anxiety, or perhaps even prompts obsessive-compulsive behaviors in some9,10.

In this paper, we explored strategies to enhance compliance without returning data to participants. We present experiences from two large-scale Parkinson’s disease (PD) studies, the Personalized Parkinson Project (PPP, NCT03364894)11 and the Parkinson’s Progression Markers Initiative (PPMI, NCT01141023) study12. The specific objectives for utilizing wearable devices in these projects were to further understand PD progression, characterize digital measures compared to in-person assessments, and assess sensitivity to signals in response to on/off medication13,14. Both studies included diverse participant populations, including older adults and individuals with motor impairments or cognitive decline. Although these studies focused on PD, the authors believe that the observations outlined here are applicable to other diseases.

Results

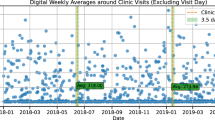

Study participant characteristics are outlined in Table 1. For PPP, Study Watch wear time remained consistent and stable over up to 3 years of device use, with a median wear time of 21.9 h per day (Fig. 1a). Across the PPMI cohorts, median wear time ranged from 21.1 to 22.2 h per day over the course of 2 years (Fig. 1b), without a clear difference between the cohorts. Across all cohorts, the drop-out rate was 4%. For those who completed the 2-year follow-up, median wear time was 22.0 h per day in the PPP cohort (n = 480, 93%) and 22.0 h per day across all PPMI cohorts (n = 48, 18%) (see Supplementary materials for the figures). The number of PPMI participants completing the 2-year follow-up was relatively low, due to a change in the protocol. In June 2020, PPMI transitioned into a new recruitment phase and discontinued the Verily sub-study. All devices were returned regardless of the duration of enrollment in the Verily sub-study.

The graph displays aggregated wear times of all individual participants during follow-up: a Parkinson’s disease cohort of PPP (baseline n = 517; 3-yr median of 21.9 h/day; 3-yr mean of 20.5 h/day); b Parkinson’s disease (baseline n = 111; 2-yr median = 21.7 h/day; 2-yr mean = 18.5 h/day), prodromal (baseline n = 138; 2-yr median = 21.1 h/day; 2-yr mean = 17.9 h/day), and healthy control (baseline n = 22; 2-yr median = 22.2 h/day; 2-yr mean = 19.6 h/day) cohorts of PPMI.

User experiences (PPP perspective)

Participants’ motivation to contribute to the study was high, in particular regarding the ability to contribute to research on PD in general and regarding the watch’s role in advancing research on PD (Supplementary material, Table S1). Almost all PPP participants (98%) found it very important (74%) or important (24%) to contribute to research. Similarly, 97% of all participants found it very important (73%) or important (24%) that the watch collects data valuable for PD research. Moreover, 83% expressed that contributing to the development of Study Watches was very important (34%) or important (49%).

The Study Watch received positive evaluations concerning both its physical attributes and overall comfort (Supplementary material, Table S2). Specifically, 93% of participants reported satisfaction with the size of the watch. In terms of display, 96% of participants rated the size of the text and images on the screen as good or acceptable. The visual appeal of the watch was favorably rated by 95% of participants, with nearly half (48%) describing it as “beautiful”. Additionally, 95% of participants found the watch band comfortable. Notably, 83% of participants indicated that the ability to display the time on the watch was either very important (38%) or important (45%), underscoring that traditional watch functions remain significant to users, even in research-oriented devices. In terms of operational ease, the installation process of the Study Watch’s companion hub was evaluated positively by participants. A majority (75%) reported no issues with the signal while installing the hub in their homes, and 72% of participants found the procedure for connecting to the hub to be “very easy”, reflecting a well-designed setup process that minimizes technical challenges for users. For cases of technical problems, the participants highlighted the importance of the helpdesk. Of all respondents, 71% contacted the helpdesk at least once. For 75% of study participants the problem was always solved; in only 3% of the cases could the problem not be solved.

Discussion

The observations from PPP and PPMI highlight the effectiveness of a centralized support model in sustaining or improving wear time compliance. Continuous monitoring of compliance data allowed both study teams to provide timely feedback to participants. This monitoring also helped quickly uncover barriers impacting data collection that could be proactively addressed. Providing access to a centralized team or contact helped to reduce both site and participant burden and maximized data collection for analysis. Box 1 summarizes the key factors for success.

Compared to other studies with passive monitoring devices15,16, the wear time numbers in both PPP and PPMI are exceptionally high, especially when considering the prolonged device use ranging from two to three years. Acceptability of wearable devices is a critical factor in understanding compliance. Acceptability is, first of all, influenced by their technical aspects. Ease of use is a critical factor, as users prefer devices that are simple and intuitive to operate17,18. The need for charging plays a significant role19, with shorter battery durations reducing acceptability18,20,21. Self-monitoring tasks that typically need to be performed repeatedly are often perceived as inconvenient, contributing to task fatigue and reduced compliance19,22,23. Furthermore, technical issues, such as problems with device synchronization or malfunctioning, are common reasons for users discontinuing use19,24,25.

Second, the acceptability of wearable devices is influenced by esthetic aspects. The visibility of a device is often mentioned as a barrier for wearing26,27. A user-friendly design is important: design issues are frequently mentioned as a big barrier for wearing the sensors24,28,29. In one study, participants expressed dissatisfaction when wearables clashed with their personal style or required replacing existing jewelry18.

Wearing comfort is a third factor that affects the acceptability of wearable devices. Perceiving devices as uncomfortable or inconvenient is a main reason for not wearing a device17,25,29. Devices that do not interfere with activities of daily living are better tolerated17,22,27. The location at which the wearable is attached may not determine the level of comfort: wristbands were sometimes perceived as uncomfortable25, while an IMU attached to the lower back was rated as “comfortable” and “unobtrusive”24.

The Study Watch in our studies had excellent technical aspects: participants appreciated the simple watch design, including the long battery life, and rated wearing comfort as high. In addition, whenever disruptions occurred, both PPP and PPMI teams were able to maintain high wear time averages by actively monitoring wear time and quickly reaching out to participants with unexpected wear times. Active monitoring of wear time and contacting study participants proactively and reactively is a common strategy to maximize wear time18,19,20,30,31.

Various participants asked for insight into their own data collected with the Study Watch. We could not accommodate this request at the time, as the procedures to extract meaningful measures had not been validated yet, and raw sensor data cannot be interpreted. Yet, validated wearable sensor systems open a window of opportunities for feeding back the collected data. Feedback of data from wearables is often used as a strategy to support behavioral change or self-management32. A sensor for continuous glucose monitoring, attached to the skin, is a well-known example of how a sensor can provide feedback to support self-management for diabetes mellitus patients33. Pedometers and physical activity trackers are often used to increase physical activity34. The isolated effects of feedback in these examples cannot be determined, as the feedback is typically combined with other strategies, such as patient education, goal setting or coaching. Feedback from wearable sensors in isolation is often studied as part of feasibility studies, by collecting qualitative experiences from users after they have worn a wearable system for a few days or multiple weeks. These empirical studies show that feedback from wearables can enhance individuals’ self-awareness and understanding of physical health metrics, fostering insight into personal health dynamics20,35,36,37,38. Feedback from wearables can also motivate users to adopt healthier behaviors, such as increasing physical activity and improving sleep patterns35,37,38,39,40,41,42. Additionally, feedback can facilitate timely responses to rapid symptom changes or provide reassurance, contributing to an increased sense of security26,35,43,44. Enhanced patient-clinician communication is another benefit mentioned by users26,45,46. Furthermore, feedback can deepen patients’ understanding of their specific disease manifestation, support self-care, and promote self-reflection, leading to a more engaged and informed approach to self-management26,45,46.

Data fed back to individuals with chronic conditions are not purely objective; instead, they can evoke emotional responses, such as feelings of doubt, anxiety, and failure35,47. Such data can also confront individuals with the reality and progressive nature of the disease and worsen the burden of the disease, as reported by those living with PD1,46 and MS23,38. Many patients perceive the process of tracking personal health data as burdensome, requiring work46,47. The use of feedback is furthermore challenged by difficulties with data interpretation19,38,45. This also holds when participants question the relevance and accuracy of data provided by the wearable compared with lived experiences18,22,25,26,31,36.

The relationship between feedback and compliance with a wearable has barely been studied. In one study, where participants had to wear three different wearable devices 24/7 for seven days, participants mentioned feedback on physical activity and sleep behavior as being the biggest motivator for wearing the sensors29. In contrast, when device data has no perceived utility or value, this is pointed out as a major barrier for engagement19. In populations with various chronic conditions, participants found it reasonable to wear a wearable device without feedback in the context of a research study, even though the device was not considered to be useful and beneficial for themselves17,23. The latter observation is in line with our own experiences: study participants’ biggest motivation to participate stems from altruism. It can furthermore be questioned whether feedback from a wearable device is what interests study participants most. When healthy participants in a large cohort study were asked about their preferences for the return of individual research results, the largest share of participants preferred results from genetics (29.9%). Results from the wearable device were preferred by 15.8%48.

Advances in wearable technology offer enhanced, passive data collection that captures meaningful individual health information. This can offer a more comprehensive picture of patient functioning, particularly for those with chronic conditions, while also reducing participants’ burden. Enhanced data capture enables artificial intelligence (AI) models to ingest large datasets for better, real-time analytics. This opens up research and clinical possibilities including (1) early detection of disease through continuous, sensitive monitoring, (2) personalized treatment in real time, allowing for dynamic adjustments to interventions to optimize efficacy and minimize side effects, (3) development of just-in-time adaptive interventions to provide treatment when patients need it most, (4) improved patient engagement by empowering patients to take an active role in their health management via real-time feedback and personalized insights grounded in behavior change theory to motivate behavior change and improve adherence to treatment plans, (5) enhanced clinical decision making by providing clinicians with a more comprehensive and dynamic understanding of patient health to inform diagnoses, treatment plans, and patient management, and (6) reduced healthcare costs due to early detection, which may prevent hospitalizations, emergency room visits, and long-term care. Overall, integrating wearable sensor data with AI/machine learning (ML) algorithms may allow for a wealth of personalized, granular, and real-world data that is contextualized within a patient’s lived experience, providing a much richer and more ecologically valid picture of patients’ lives compared to traditional, episodic assessments. Future research should demonstrate whether these promises also hold in clinical care.

By identifying key strategies, our studies reveal that high compliance is achievable without returning individual device data. A limitation of our research is that we did not investigate the impact of feedback on compliance and that our observations are primarily based on people with PD or at risk of PD rather than on a broad range of diseases. However, the PPP and PPMI studies were conducted in multiple countries, with PD participants in various stages of their disease, with both prodromal participants and controls, and in single- and multi-center longitudinal studies. Each study must balance the needs and preferences of participants with study design requirements. In conclusion, we assert that it is possible to maintain high compliance with any type of wearable device over an extended period of time, in studies that aim to capture naturalistic, real-life behavior, where the return of information to participants is carefully controlled and acceptability aspects of the wearables are considered.

Methods

Verily Study Watch & Hub

The Verily Study Watch was developed as a clinical-grade device to unobtrusively collect high-resolution physiological data while also remaining easy to wear across broad demographic groups. The accompanying Study Hub both charged the Study Watch and allowed for encrypted cellular transmission of data to the cloud service (Fig. 2). These investigational devices were deployed across multiple clinical research studies, including the referenced PPP and PPMI projects11,12. Figures and statistical analyses for this study were generated using the Python programming language, making use of the Matplotlib49 and Seaborn50 libraries.

Single site example

The PPP study is a prospective, longitudinal, single-center study, supported by Verily Life Sciences and the Radboud University Medical Center (the Netherlands)11. The Institutional Review Board METC Oost-Nederland has approved the PPP study protocol (NL59694.091.17). Between 2017 and 2021, individuals diagnosed with PD were targeted for recruitment. During the recruitment phase, participants submitted contact information through the study website (www.parkinsonopmaat.nl) and were evaluated through a phone assessment by the Radboudumc study team. Eligible participants provided informed consent covering all study activities. They completed three annual in-person assessments, including biospecimen collection, and were required to wear the Study Watch continuously. The local study team managed regulatory approval for device use, tracked inventory, and coordinated with the Verily team for device returns. Each participant was assigned a single assessor, who conducted all assessments and deployed the Study Watch and Study Hub to enrolled participants during the baseline visit.

Multisite example

The PPMI study is an ongoing longitudinal observational study launched in 2010 by the Michael J. Fox Foundation for Parkinson’s Research12. PPMI has engaged clinical sites around the world to recruit thousands of participants (individuals diagnosed with PD, individuals with prodromal PD, and healthy controls) and standardize the collection of data and biospecimens. Between 2018 and 2020, PPMI ran a companion study to integrate wearable technology into the existing PPMI protocol for enrolled US-based participants. The University of Rochester Research Subjects Review Board approved the protocol of this companion study. Given the PPMI infrastructure, a centralized model was proposed to standardize participant materials, streamline device distribution, reduce site burden, and enhance participant support. After an individual PPMI clinical site received regulatory approval and completed the required training, it was activated to receive devices from a clinical trials service core and could approach participants during any upcoming study visit to enroll in the companion study. Participation in this companion protocol was optional, and participants could decline without impacting their PPMI clinical enrollment. Those who agreed completed informed consent and were provided with supporting educational materials. Their contact details and assigned device IDs were securely sent to the central help desk team at Indiana University (IU), allowing IU to reconcile compliance data with the appropriate participant.

Both PPP and PPMI instructed participants to wear the Study Watch for 23 h per day for up to 2 years, with the PPP participants provided the option to extend for up to 1 more year. For the PPP participants, a weekly task-based assessment, i.e., Virtual Motor Exam (VME), was added approximately two years after the first participant entered the study, as an optional element13.

Device distribution to participants and study support

Prior to launch, PPP and PPMI study teams received support training from the Verily team, including an overview of device icons and guidance on how to troubleshoot common issues. At in-person visits, trained PPP and PPMI personnel demonstrated the use of the Study Watch and Study Hub and explained the mechanism for data syncing and transfer. Participants were able to select a Study Watch band color of choice. The teams also outlined wear time goals and explained watch limitations (Box 2). In the era of commercially available smartwatches and direct access to smartwatch data, both the PPP and PPMI emphasized that Study Watch results would not be returned to enrolled participants. It was reinforced that the Study Watch was used solely for investigational purposes and that their data would be used in future analyses, which satisfied most participants.

In addition to the personal instruction, both PPP and PPMI teams supplied participants with educational materials (via printed handouts or website links) and help desk access to support their device use. A dedicated and staffed help desk available by phone and email during business hours can be of decisive value for a device-related study15. Introductory calls in the first week of device deployment, made by the personal assessor in PPP or the IU central help desk coordinator in PPMI, provided further support and a better opportunity to catch potential initial errors. These calls reinforced wear time goals, reminding participants that reductions in wear time would trigger study team outreach. They also encouraged participants to contact the help desk with any questions. Investing in staff support and personalization may also help to reduce wearables “fatigue” and discontinuation of device wear after shorter timeframes30.

Data monitoring, troubleshooting, and retention strategies

The Study Watch (hardware version 2) collected accelerometer, gyroscope, photoplethysmography, and skin impedance sensor data. The Study Hub transmitted these data via cellular connection to a secure cloud environment (Google BigQuery database system). To determine individual wear time for PPP and PPMI participants, an embedded on-wrist detection algorithm classified, for every second, whether the participant was wearing the Study Watch. All on-wrist classifications were aggregated into a per-participant daily wear time. A compliance data file was generated daily that contained wear minutes, watch sync statuses, and hub check-ins. This compliance file was distributed to the study teams for monitoring purposes and used to trigger outreach if wear time targets were not met. In some instances, where the daily target was not feasible or generated additional participant burden, personalized thresholds were allowed and accounted for in tracking by the study teams.
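
The aggregation step described above can be illustrated with a minimal sketch. It is not the embedded algorithm or the study pipeline: the input layout (one `(participant_id, unix_second, on_wrist)` tuple per second), the use of UTC day boundaries, and the function names are assumptions made for illustration. Only the 23 h default target and the idea of personalized thresholds come from the text.

```python
from collections import defaultdict
from datetime import datetime, timezone


def daily_wear_hours(samples):
    """Sum per-second on-wrist flags into hours worn per participant and day.

    `samples` yields (participant_id, unix_second, on_wrist) tuples, a
    hypothetical layout. Days are cut at UTC midnight (an assumption).
    Days without any on-wrist second do not appear in the result.
    """
    seconds_worn = defaultdict(int)
    for participant_id, unix_second, on_wrist in samples:
        if on_wrist:
            day = datetime.fromtimestamp(unix_second, tz=timezone.utc).date()
            seconds_worn[(participant_id, day)] += 1
    return {key: seconds / 3600 for key, seconds in seconds_worn.items()}


def below_target(wear_hours, default_target=23.0, personal_targets=None):
    """List (participant_id, day) pairs whose wear time is under the target.

    `personal_targets` optionally maps a participant to an individual
    threshold in hours, mirroring the personalized thresholds in the text.
    """
    personal_targets = personal_targets or {}
    flagged = []
    for (participant_id, day), hours in sorted(wear_hours.items()):
        target = personal_targets.get(participant_id, default_target)
        if hours < target:
            flagged.append((participant_id, day))
    return flagged
```

In this sketch, a participant with an agreed lower threshold would be passed in through `personal_targets` and would then only be flagged when falling below that individual value.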

In PPP, a compliance review was performed weekly in a spreadsheet. Individual compliance patterns were reviewed by one dedicated person, looking for patterns deviating from what was normal for a participant (e.g., not receiving data for consecutive days or streaming data for fewer hours per day). These observations triggered phone calls by the personal assessor to better understand the disruptions.
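
The PPP review was performed manually in a spreadsheet; the sketch below only illustrates the two example patterns named above (consecutive days without data, and fewer hours per day than is normal for that participant). The 7-day review window, the 2-day gap rule, the 3 h drop, and the function name are hypothetical values chosen for illustration, not thresholds used in the study.

```python
from statistics import median


def review_participant(daily_hours, recent_days=7, max_missing_run=2, drop_hours=3.0):
    """Return review flags for one participant.

    `daily_hours` is a list ordered oldest to newest; each entry is the wear
    time in hours, or None when no data was received for that day.
    All thresholds are hypothetical illustration values.
    """
    recent = daily_hours[-recent_days:]
    history = [h for h in daily_hours[:-recent_days] if h is not None]
    flags = []

    # Pattern 1: a run of consecutive days without any data in the review window.
    run = longest_run = 0
    for hours in recent:
        run = run + 1 if hours is None else 0
        longest_run = max(longest_run, run)
    if longest_run >= max_missing_run:
        flags.append("consecutive days without data")

    # Pattern 2: recent wear time clearly below this participant's own norm.
    recent_observed = [h for h in recent if h is not None]
    if history and recent_observed:
        if median(history) - median(recent_observed) >= drop_hours:
            flags.append("fewer hours than usual")

    return flags
```

Comparing against each participant's own median, rather than a fixed cut-off, reflects the idea of looking for deviations from what was normal for that individual.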

In PPMI, a cumulative compliance file was stored in a secure Amazon cloud storage folder to which only the IU team had access. This data file was accessed and translated into a daily report that categorized participants by their daily wear time and their 10-day and overall wear time averages. Each month, participants with high compliance for the preceding month were sent an automated email congratulating them on their excellent adherence to wearing and syncing their Study Watch. If low compliance was observed for more than 10 days, the IU team contacted the participant to inquire about challenges with maintaining wear time goals and to encourage increased wear time.
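
A minimal sketch of this reporting logic is given below. The source does not state the cut-offs for "high" and "low" compliance, so `LOW_HOURS`, `HIGH_HOURS`, the 30-day stand-in for "the preceding month", and all function names are hypothetical. Only the 10-day and overall averages and the "more than 10 days" outreach rule come from the text.

```python
from statistics import mean

LOW_HOURS = 12.0   # hypothetical cut-off for a low-compliance day
HIGH_HOURS = 21.0  # hypothetical cut-off for a high-compliance month


def summarize(daily_hours):
    """Report the latest day plus the 10-day and overall wear time averages."""
    return {
        "latest": daily_hours[-1],
        "avg_10_day": mean(daily_hours[-10:]),
        "avg_overall": mean(daily_hours),
    }


def low_streak(daily_hours, low=LOW_HOURS):
    """Count the consecutive most recent days with wear time below `low`."""
    streak = 0
    for hours in reversed(daily_hours):
        if hours >= low:
            break
        streak += 1
    return streak


def action_for(daily_hours):
    """Pick a follow-up: outreach after more than 10 low days, praise after a high month."""
    if low_streak(daily_hours) > 10:
        return "contact participant"
    if len(daily_hours) >= 30 and mean(daily_hours[-30:]) >= HIGH_HOURS:
        return "send congratulation email"
    return "no action"
```

Here `daily_hours` is one participant's wear time in hours per day, oldest first; the outreach rule is checked before the congratulation rule so that a recent sustained drop takes priority.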

Outgoing staff outreach or incoming participant calls could identify a technical challenge that required troubleshooting. In PPP, the majority (>50%) of the issues were related to watch screen deficits (e.g., a cracked screen), while in a minority of cases (<5%), participants reported issues related to watch face condensation. In PPMI, the largest share (>30%) of the issues were related to watch bezel separation, followed by watch face condensation and Study Hub connectivity issues (<15%), while in a minority of cases (<5%), participants reported issues related to watch screen deficits. While these issues were infrequent in the studies, they drove revisions and improvements of the product. Sporadically, user errors, such as wearing the Study Watch while swimming and causing water damage, also prompted helpdesk outreach. Some observed issues could be resolved through standard troubleshooting procedures that restored functionality. Most often, corrective actions began with attempts to reboot the devices to restore data syncing. If these strategies proved unsuccessful, study teams pivoted to a technical escalation pathway with Verily support services, which provided high-quality and engaging technical support. With guidance from the Verily support team, study teams were able to determine whether device replacement was required. If a replacement was needed, a new device was delivered to the participant the following day or within a couple of days to limit the impact on data quality.

User experiences

PPP participants who completed their 2-year follow-up between December 2021 and December 2023 received two surveys (Supplementary material, Table S1 and Table S2). In total, 214 participants (87.7% of those who were invited) completed the exit survey, covering user experiences with the Study Watch and Hub. In addition, 288 PPP participants (76.8% of those who were invited) completed a user experience survey covering general aspects related to study participation. Survey results are presented using descriptive statistics. PPMI participants did not complete an exit survey to collect experiences with the Study Watch.

Data availability

PPP data used in the present study were retrieved from the PEP database (https://pep.cs.ru.nl/index.html). The PPP data are available upon request. More details on the procedure can be found on the website www.personalizedparkinsonproject.com/home. PPMI data used in the preparation of this article were obtained May 2, 2023 from the Parkinson’s Progression Markers Initiative (PPMI) database (www.ppmi-info.org/access-data-specimens/download-data), RRID:SCR_006431. PPMI data are publicly available from the Parkinson’s Progression Markers Initiative (PPMI) database (www.ppmi-info.org/access-data-specimens/download-data). For up-to-date information on the study, visit www.ppmi-info.org.

References

Bloem, B. R., Post, E. & Hall, D. A. An apple a day to keep the Parkinson’s disease doctor away?. Ann. Neurol. 93, 681–685 (2023).

Maetzler, W., Klucken, J. & Horne, M. A clinical view on the development of technology-based tools in managing Parkinson’s disease. Mov. Disord. 31, 1263–1271 (2016).

Powell, D. & Godfrey, A. Considerations for integrating wearables into the everyday healthcare practice. NPJ Digit. Med. 6, 70 (2023).

Izmailova, E. S., Wagner, J. A. & Perakslis, E. D. Wearable devices in clinical trials: hype and hypothesis. Clin. Pharm. Ther. 104, 42–52 (2018).

Khan, I., Sarker, S. J. & Hackshaw, A. Smaller sample sizes for phase II trials based on exact tests with actual error rates by trading-off their nominal levels of significance and power. Br. J. Cancer 107, 1801–1809 (2012).

Servais, L. et al. First regulatory qualification of a novel digital endpoint in Duchenne muscular dystrophy: a multi-stakeholder perspective on the impact for patients and for drug development in neuromuscular diseases. Digit Biomark. 5, 183–190 (2021).

Siffels, L. E., Sharon, T. & Hoffman, A. S. The participatory turn in health and medicine: The rise of the civic and the need to ‘give back’ in data-intensive medical research. Hum. Soc. Sci. Commun. 8, 306 (2021).

Lee, J., Kim, D., Ryoo, H.-Y. & Shin, B.-S. Sustainable wearables: wearable technology for enhancing the quality of human life. Sustainability 8, 466 (2016).

Boogers, A., Fasano, A. & Lang, A. An apple a day will not keep the (Parkinson disease) doctor at bay!. Ann. Neurol. 95, 1012–1013 (2024).

Post, E., Hall, D. A. & Bloem, B. R. Reply to “An Apple a Day Will Not Keep the (Parkinson’s Disease) Doctor at Bay!”. Ann. Neurol. 95, 1013–1014 (2024).

Bloem, B. R. et al. The Personalized Parkinson Project: examining disease progression through broad biomarkers in early Parkinson’s disease. BMC Neurol. 19, 160 (2019).

Parkinson Progression Marker Initiative. The Parkinson Progression Marker Initiative (PPMI). Prog. Neurobiol. 95, 629–635 (2011).

Burq, M. et al. Virtual exam for Parkinson’s disease enables frequent and reliable remote measurements of motor function. NPJ Digit. Med. 5, 65 (2022).

Oyama, G. et al. Analytical and clinical validity of wearable, multi-sensor technology for assessment of motor function in patients with Parkinson’s disease in Japan. Sci. Rep. 13, 3600 (2023).

Silva de Lima, A. L. et al. Feasibility of large-scale deployment of multiple wearable sensors in Parkinson’s disease. PLoS One 12, e0189161 (2017).

Zhang, Y. et al. Long-term participant retention and engagement patterns in an app and wearable-based multinational remote digital depression study. NPJ Digit. Med. 6, 25 (2023).

Keogh, A. et al. Acceptability of wearable devices for measuring mobility remotely: Observations from the Mobilise-D technical validation study. Digit. Health 9, 20552076221150745 (2023).

Whelan, M. E. et al. Examining the use of glucose and physical activity self-monitoring technologies in individuals at moderate to high risk of developing Type 2 diabetes: randomized trial. JMIR Mhealth Uhealth 7, e14195 (2019).

Goodday, S. M. et al. Value of engagement in digital health technology research: evidence across 6 unique cohort studies. J. Med. Internet Res. 26, e57827 (2024).

Maas, B. R. et al. Patient experience and feasibility of a remote monitoring system in Parkinson’s disease. Mov. Disord. Clin. Pr. 11, 1223–1231 (2024).

Stuart, T., Hanna, J. & Gutruf, P. Wearable devices for continuous monitoring of biosignals: Challenges and opportunities. Apl. Bioeng. 6, 021502 (2022).

Perlmutter, A., Benchoufi, M., Ravaud, P. & Tran, V. T. Identification of patient perceptions that can affect the uptake of interventions using biometric monitoring devices: systematic review of randomized controlled trials. J. Med. Internet Res. 22, e18986 (2020).

Wendrich, K. & Krabbenborg, L. Negotiating with digital self-monitoring: A qualitative study on how patients with multiple sclerosis use and experience digital self-monitoring within a scientific study. Health 28, 333–351 (2024).

Debelle, H. et al. Feasibility and usability of a digital health technology system to monitor mobility and assess medication adherence in mild-to-moderate Parkinson’s disease. Front. Neurol. 14, 1111260 (2023).

Grym, K. et al. Feasibility of smart wristbands for continuous monitoring during pregnancy and one month after birth. BMC Pregnancy Childbirth 19, 34 (2019).

Kang, H. S., Park, H. R., Kim, C. J. & Singh-Carlson, S. Experiences of using wearable continuous glucose monitors in adults with diabetes: a qualitative descriptive study. Sci. Diabetes Self Manag. Care 48, 362–371 (2022).

Laar, A., Silva de Lima, A. L., Maas, B. R., Bloem, B. R. & de Vries, N. M. Successful implementation of technology in the management of Parkinson’s disease: Barriers and facilitators. Clin. Park. Relat. Disord. 8, 100188 (2023).

Ferreira, J. J. et al. Quantitative home-based assessment of Parkinson’s symptoms: the SENSE-PARK feasibility and usability study. BMC Neurol. 15, 89 (2015).

Huberty, J., Ehlers, D. K., Kurka, J., Ainsworth, B. & Buman, M. Feasibility of three wearable sensors for 24 h monitoring in middle-aged women. BMC Women’s Health 15, 55 (2015).

Smuck, M., Odonkor, C. A., Wilt, J. K., Schmidt, N. & Swiernik, M. A. The emerging clinical role of wearables: factors for successful implementation in healthcare. NPJ Digit. Med. 4, 45 (2021).

Cohen, S. et al. Characterizing patient compliance over six months in remote digital trials of Parkinson’s and Huntington disease. BMC Med. Inf. Decis. Mak. 18, 138 (2018).

Michie, S. et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann. Behav. Med. 46, 81–95 (2013).

Cappon, G., Vettoretti, M., Sparacino, G. & Facchinetti, A. Continuous glucose monitoring sensors for diabetes management: a review of technologies and applications. Diabetes Metab. J. 43, 383–397 (2019).

Ferguson, T. et al. Effectiveness of wearable activity trackers to increase physical activity and improve health: a systematic review of systematic reviews and meta-analyses. Lancet Digit. Health 4, e615–e626 (2022).

Andersen, T. O., Langstrup, H. & Lomborg, S. Experiences with wearable activity data during self-care by chronic heart patients: qualitative study. J. Med. Internet Res. 22, e15873 (2020).

LeBlanc, R. G., Czarnecki, P., Howard, J., Jacelon, C. S. & Marquard, J. Usability experience of a personal sleep monitoring device to self-manage sleep among persons 65 years or older with self-reported sleep disturbances. Comput. Inform. Nurs. 40, 598–605 (2022).

Rossi, A. et al. Acceptability and feasibility of a Fitbit physical activity monitor for endometrial cancer survivors. Gynecol. Oncol. 149, 470–475 (2018).

Wendrich, K. et al. Toward digital self-monitoring of multiple sclerosis: investigating first experiences, needs, and wishes of people with MS. Int. J. MS Care 21, 282–291 (2019).

Ashur, C. et al. Do wearable activity trackers increase physical activity among cardiac rehabilitation participants? A systematic review and meta-analysis. J. Cardiopulm. Rehabil. Prev. 41, 249–256 (2021).

Brickwood, K. J., Watson, G., O’Brien, J. & Williams, A. D. Consumer-based wearable activity trackers increase physical activity participation: systematic review and meta-analysis. JMIR Mhealth Uhealth 7, e11819 (2019).

Freak-Poli, R., Cumpston, M., Albarqouni, L., Clemes, S. A. & Peeters, A. Workplace pedometer interventions for increasing physical activity. Cochrane Database Syst. Rev. 7, CD009209 (2020).

Singh, B., Zopf, E. M. & Howden, E. J. Effect and feasibility of wearable physical activity trackers and pedometers for increasing physical activity and improving health outcomes in cancer survivors: A systematic review and meta-analysis. J. Sport Health Sci. 11, 184–193 (2022).

Connelly, M. A. & Boorigie, M. E. Feasibility of using “SMARTER” methodology for monitoring precipitating conditions of pediatric migraine episodes. Headache 61, 500–510 (2021).

Volčanšek, Š, Lunder, M. & Janež, A. Acceptability of continuous glucose monitoring in elderly diabetes patients using multiple daily insulin injections. Diabetes Technol. Ther. 21, 566–574 (2019).

Murnane, E. L. et al. Self-monitoring practices, attitudes, and needs of individuals with bipolar disorder: implications for the design of technologies to manage mental health. J. Am. Med. Inf. Assoc. 23, 477–484 (2016).

Riggare, S., Scott Duncan, T., Hvitfeldt, H. & Hagglund, M. “You have to know why you’re doing this”: a mixed methods study of the benefits and burdens of self-tracking in Parkinson’s disease. BMC Med. Inf. Decis. Mak. 19, 175 (2019).

Ancker, J. S. et al. “You Get Reminded You’re a Sick Person”: Personal data tracking and patients with multiple chronic conditions. J. Med. Internet Res. 17, e202 (2015).

Sayeed, S. et al. Return of individual research results: What do participants prefer and expect? PLoS One 16, e0254153 (2021).

Hunter, J. D. Matplotlib: A 2D Graphics Environment. Comput. Sci. Eng. 9, 90–95 (2007).

Waskom, M. Seaborn: statistical data visualization. J. Open Source Softw. 6, 3021 (2021).

Acknowledgements

PPP is financially supported by Verily Life Sciences LLC, Radboud University Medical Center, Radboud University, the city of Nijmegen, and the Province of Gelderland. PPP is co-funded by the PPP Allowance made available by Health~Holland, Top Sector Life Sciences & Health, to stimulate public-private partnerships. PPMI, a public-private partnership, is funded by the Michael J. Fox Foundation for Parkinson’s Research and funding partners, including 4D Pharma, Abbvie, AcureX, Allergan, Amathus Therapeutics, Aligning Science Across Parkinson’s, AskBio, Avid Radiopharmaceuticals, BIAL, BioArctic, Biogen, Biohaven, BioLegend, BlueRock Therapeutics, Bristol-Myers Squibb, Calico Labs, Capsida Biotherapeutics, Celgene, Cerevel Therapeutics, Coave Therapeutics, DaCapo Brainscience, Denali, Edmond J. Safra Foundation, Eli Lilly, Gain Therapeutics, GE HealthCare, Genentech, GSK, Golub Capital, Handl Therapeutics, Insitro, Jazz Pharmaceuticals, Johnson & Johnson Innovative Medicine, Lundbeck, Merck, Meso Scale Discovery, Mission Therapeutics, Neurocrine Biosciences, Neuron23, Neuropore, Pfizer, Piramal, Prevail Therapeutics, Roche, Sanofi, Servier, Sun Pharma Advanced Research Company, Takeda, Teva, UCB, Vanqua Bio, Verily, Voyager Therapeutics, the Weston Family Foundation and Yumanity Therapeutics. The Radboudumc Center of Expertise for Parkinson’s and Movement Disorders was supported by a center of excellence grant from the Parkinson’s Foundation. We are grateful to the participants in the Personalized Parkinson Project and the Parkinson’s Progression Markers Initiative who volunteered for these studies, wore the Study Watch for many months, and contributed their experiences for research.

Author information

Contributions

1. Research project: A. Conception, B. Organization, C. Execution; 2. Statistical Analysis: A. Design, B. Execution, C. Review and Critique; 3. Manuscript Preparation: A. Writing of the first draft, B. Review and Critique. M.J.M.: 1A, 1B, 1C, 2C, 3A, 3B. L.H.: 1A, 1B, 1C, 2C, 3A, 3B. K.C.H.: 2A, 2B, 3B. L.R.: 1A, 3B. Ch.L.: 1A, 3B. B.R.B.: 1A, 2C, 3B. W.J.M. Jr: 1A, 2C, 3B. R.K.: 1A, 1B, 1C, 2C, 3B.

Ethics declarations

Competing interests

The funders, Verily LLC and the Parkinson’s Progression Markers Initiative, were involved in the analysis and interpretation of data, and the writing of this manuscript. Author MJM declares no financial or non-financial competing interests. The Michael J Fox Foundation provides research funding to Indiana University that supports the salary of author LH but declares no non-financial competing interests. Authors KCH and CL are currently employed by, and currently hold shares in, Verily Life Sciences, but declare no non-financial competing interests. Author LR is currently employed by, and currently holds shares in, Verily Life Sciences, but declares no non-financial competing interests. Author WJM was previously employed by, and currently serves as a paid consultant for, Verily Life Sciences but declares no non-financial competing interests. Author RK was previously employed by, and currently holds shares in, Verily Life Sciences, but declares no non-financial competing interests. Author BRB serves as the co-Editor in Chief for the Journal of Parkinson’s Disease, serves on the editorial board of Practical Neurology and Digital Biomarkers, has received fees from serving on the scientific advisory board for the Critical Path Institute, Gyenno Science, MedRhythms, UCB, Kyowa Kirin and Zambon (paid to the Institute), has received fees for speaking at conferences from AbbVie, Bial, Biogen, GE Healthcare, Oruen, Roche, UCB and Zambon (paid to the Institute), and has received research support from Biogen, Cure Parkinson’s, Davis Phinney Foundation, Edmond J. Safra Foundation, Fred Foundation, Gatsby Foundation, Hersenstichting Nederland, Horizon 2020, IRLAB Therapeutics, Maag Lever Darm Stichting, Michael J Fox Foundation, Ministry of Agriculture, Ministry of Economic Affairs & Climate Policy, Ministry of Health, Welfare and Sport, Netherlands Organization for Scientific Research (ZonMw), Not Impossible, Parkinson Vereniging, Parkinson’s Foundation, Parkinson’s UK, Stichting Alkemade-Keuls, Stichting Parkinson NL, Stichting Woelse Waard, Health Holland / Topsector Life Sciences and Health, UCB, Verily Life Sciences, Roche and Zambon. Author BRB does not hold any stocks or stock options with any companies that are connected to Parkinson’s disease or to any of his clinical or research activities.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Meinders, M.J., Heathers, L., Ho, K.C. et al. Optimizing wrist-worn wearable compliance with insights from two Parkinson’s disease cohort studies. npj Parkinsons Dis. 11, 152 (2025). https://doi.org/10.1038/s41531-025-01016-w
