Abstract

Hybrid quantum-classical approaches offer potential solutions to quantum chemistry problems, yet they often manifest as constrained optimization problems. Here, we explore the interconnection between constrained optimization and generalized eigenvalue problems through the Unitary Coupled Cluster (UCC) excitation generators. Inspired by the generator coordinate method, we employ these UCC excitation generators to construct non-orthogonal, overcomplete many-body bases, projecting the system Hamiltonian into an effective Hamiltonian, which bypasses issues such as barren plateaus that heuristic numerical minimizers often encounter in the standard variational quantum eigensolver (VQE). Diverging from conventional quantum subspace expansion methods, we introduce an adaptive scheme that robustly constructs the many-body basis sets from a pool of the UCC excitation generators. This scheme supports the development of a hierarchical ADAPT quantum-classical strategy, enabling a balanced interplay between subspace expansion and ansatz optimization to address complex, strongly correlated quantum chemical systems cost-effectively, setting the stage for more advanced quantum simulations in chemistry.

Introduction

Accurately obtaining ground and excited state energies, along with the corresponding many-body wave functions, is pivotal in comprehending diverse physical phenomena in molecules and materials. This ranges from high-temperature superconductivity in materials like cuprates1 and bond-breaking chemical reactions to complex electronic processes in biological and synthetic catalysts with transition metals2 or f-block atoms3. The associated spin, electronic properties, and dynamics are crucial for deciphering the structure-property-function correlation in various fields, including catalysis, sensors, and quantum materials. However, this task becomes exceptionally challenging in the presence of non-trivial quantum effects, such as strong electron correlation, which influence the evolution of nuclei, electrons, and spins under external stimuli. Conventional wave function methodologies, like configuration interaction, coupled cluster, and many-body perturbation theory, are tailored for diverse electron correlation scenarios4. Still, they often fall short in handling complex cases or exhibit prohibitive scaling with increasing system size. Consequently, this has become a vigorous area of computational research, encompassing both classical and burgeoning quantum computing studies. The primary objective is to strike an optimal balance between accuracy and computational scalability.

In the realm of quantum computing, significant strides have been made towards promising near-term hybrid quantum-classical strategies, which include variational quantum algorithms5,6,7,8,9,10,11,12,13,14,15,16,17,18,19, the quantum approximate optimization algorithm20,21, quantum annealing22,23, Gaussian boson sampling24, analog quantum simulation25,26, the iterative quantum assisted eigensolver17,27,28,29, and many others. These approaches typically delegate certain computational tasks to classical computers, thereby conserving quantum resources in contrast to exclusively quantum methods. Within this framework, the variational quantum eigensolver (VQE) and its adaptive derivatives are seen as the frontrunners in leveraging near-future quantum advantages5,14. However, these anticipations are also potentially impeded by the heuristic nature inherent in the critical optimization processes. Issues such as the rigor of ansatz exactness, the challenges in navigating potential energy surfaces replete with numerous local minima, and the numerical nuances in minimization techniques remain unresolved. These questions are further complicated when considering how these methods scale with the number of operators or the depth of the quantum circuits involved.

As an alternative to VQE, which employs a highly nonlinear parametrization of the wave function, other near-term strategies aim to construct and traverse a subspace within the Hilbert space to closely approximate the desired state and energy. Typical examples include the quantum subspace expansion11,30,31,32,33, the hybrid and quantum Lanczos approaches27,34,35, quantum computed moment approaches29,36,37,38,39, and quantum equation-of-motion approach40. These strategies often draw inspiration from truncated configuration interaction approaches or explore the Krylov subspace (see, e.g., ref. 41 for a recent review on subspace methods for electronic structure simulations on quantum computers). However, the comparison and interrelation of these methods, especially when contrasting subspace expansion with nonlinear optimization, frequently remain unclear. This uncertainty is exacerbated by the choice of ansätze, which rarely guarantees exactness.

Recently, motivated by the generator coordinate approaches42,43,44,45,46,47,48,49, we developed a generator coordinate inspired nonorthogonal quantum eigensolver as an alternative near-term approach50, potentially addressing the limitations identified in the VQE/ADAPT-VQE. Similar to its contemporaries, the Generator Coordinate Inspired Method (GCIM) employs low-depth quantum circuits and efficiently utilizes existing ansätze to explore a subspace, targeting specific states and energy levels. Nonetheless, the original GCIM approach demands a priori knowledge of the system to meticulously select both the ansätze and generators, thereby circumventing heuristic approaches but potentially affecting its scalability and efficiency.

In this study, we present novel quantum-classical hybrid approaches, inspired by the GCIM approach, aimed at establishing a theoretically exact yet automated subspace expansion procedure. This approach addresses the optimization challenges inherent in conventional VQE methods while maintaining an adaptive framework. Recent efforts have either focused on practical solutions to mitigate some of these optimization limitations (such as leveraging domain-specific knowledge from classical quantum chemistry to construct high-quality wave functions51) or have moved toward automated circuit-subspace VQE and its adaptive algorithms, though they still require optimization52,53. In our approach, we use the conventional Unitary Coupled Cluster (UCC) excitation generators as the basis for subspace expansion, establishing a lower bound on the constrained optimization problem typically encountered in VQE. More importantly, we introduce an optimization-free, gradient-based automated basis selection method from the UCC operator pool. This allows us to develop a hierarchical ADAPT quantum-classical strategy that enables controllable interplay between subspace expansion and ansatz optimization. Our preliminary results suggest that these new approaches not only excel in addressing strongly correlated molecular systems but also significantly reduce simulation time, facilitating deployment on real quantum computers.

Results

Comparison between VQE and GCIM approaches: generalized eigenvalue problem vs. constrained optimization

We provided a brief review of generator coordinate methods (GCM) in Supplementary Information (Section I). A primary advantage of GCM is that variation occurs in the generating function, rather than directly on the scalar generator coordinate. This distinction enables the variational problem to be addressed through a straightforward eigenvalue process, contrasting with solving a constrained optimization problem, the advantages of which can even be demonstrated with a simple toy model.

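Building on this, as depicted in Fig. 1, we explore the ground state of a toy model comprising two electrons and four spin orbitals using a VQE approach. The VQE approach is mathematically represented as:

\({E}_{VQE}=\mathop{\min }\limits_{\vec{\theta }}\langle {\psi }_{VQE}(\vec{\theta })\vert H\vert {\psi }_{VQE}(\vec{\theta })\rangle ,\qquad (1)\)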

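where \(\vert {\psi }_{VQE}(\vec{\theta })\rangle\) employs Trotterized UCC single-type ansätze

\(\vert {\psi }_{VQE}(\vec{\theta })\rangle ={G}_{2,4}({\theta }_{2}){G}_{1,3}({\theta }_{1})\left\vert {\phi }_{0}\right\rangle ,\qquad (2)\)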

with \(\left\vert {\phi }_{0}\right\rangle\) being a reference state, \({G}_{{p}_{i},{q}_{i}}({\theta }_{i})=\exp [{\theta }_{i}({A}_{{p}_{i},{q}_{i}}-{A}_{{p}_{i},{q}_{i}}^{\dagger })]\) being a single-excitation Givens rotation, and \({A}_{p,q}={a}_{p}^{\dagger }{a}_{q}\). The VQE approach here explores a two-dimensional parameter subspace. The consecutive actions of \({G}_{2,4}\) and \({G}_{1,3}\) generate four distinct configurations, including the reference. However, because the ansatz has fewer free parameters than there are distinct configurations, the VQE approach is constrained: it cannot fully explore the configuration space and therefore cannot guarantee the optimal solution, irrespective of the numerical minimizer used. In contrast, the GCIM solver employs the same Givens rotations, separately or in the product form as discussed by Fukutome54, to create four generating functions. These functions correspond to a subspace consisting of four non-orthogonal superpositioned states, providing a scope sufficiently expansive to encapsulate the target state. Here, each Givens rotation is essentially a UCC single circuit, simplifying the quantum implementation compared to its double or higher excitation analogs.
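The contrast between the two-parameter VQE ansatz and the four generating functions can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes a hypothetical random real-symmetric Hamiltonian on the four configurations, represents each Givens rotation as a 2×2 real rotation acting on one spin channel, and fixes the GCIM rotation angle at π/4 (any angle with a nonzero sine would do). The helper names are illustrative only.

```python
import numpy as np
from scipy.linalg import eigh
from scipy.optimize import minimize

E0 = np.array([1.0, 0.0])  # occupied orbital of one spin channel in the reference


def givens(theta):
    """Real 2x2 rotation standing in for exp[theta (A - A^dagger)] in one spin channel."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def toy_hamiltonian(seed=0):
    """Hypothetical random real-symmetric Hamiltonian on the four configurations."""
    rng = np.random.default_rng(seed)
    a = rng.normal(size=(4, 4))
    return (a + a.T) / 2


def vqe_energy(thetas, h):
    """Energy of the product ansatz G_{2,4}(theta_2) G_{1,3}(theta_1)|phi_0>."""
    psi = np.kron(givens(thetas[0]) @ E0, givens(thetas[1]) @ E0)
    return float(psi @ h @ psi)


def best_vqe_energy(h, n_starts=20, seed=1):
    """Multi-start local minimization over the two rotation angles."""
    rng = np.random.default_rng(seed)
    starts = rng.uniform(-np.pi, np.pi, size=(n_starts, 2))
    return min(minimize(vqe_energy, x0, args=(h,)).fun for x0 in starts)


def gcim_energy(h, theta=np.pi / 4):
    """Lowest generalized eigenvalue in the span of four generating functions."""
    r = givens(theta) @ E0
    basis = np.column_stack([
        np.kron(E0, E0),  # |phi_0>
        np.kron(r, E0),   # G_{1,3}|phi_0>
        np.kron(E0, r),   # G_{2,4}|phi_0>
        np.kron(r, r),    # G_{2,4} G_{1,3}|phi_0>
    ])
    h_eff = basis.T @ h @ basis  # Hamiltonian projected onto the generating functions
    s = basis.T @ basis          # overlap matrix of the non-orthogonal basis
    return float(eigh(h_eff, s, eigvals_only=True)[0])


h = toy_hamiltonian()
e_exact = float(np.linalg.eigvalsh(h)[0])
e_vqe = best_vqe_energy(h)
e_gcim = gcim_energy(h)
print(f"exact={e_exact:.8f}  GCIM={e_gcim:.8f}  VQE={e_vqe:.8f}")
```

In this sketch the four generating functions are linearly independent, so the generalized eigenvalue problem recovers the exact ground-state energy, whereas the product ansatz can only reach unentangled states and is bounded from below by the GCIM value.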

a A toy model consisting of two electrons (one alpha electron and one beta electron) in four spin-orbitals (two alpha spin-orbitals and two beta spin-orbitals), where the spin-flip transition is assumed forbidden. b The projection of the exact wave function on each configuration (yellow shadow). A constrained optimization of the free parameters will put limits on the projection (dashed line). c Comparative demonstration between the GCIM method and VQE. The GCIM method generates a set of non-orthogonal bases, called generating functions. Then, the GCIM explores the projection of the system on these generating functions and solves a corresponding generalized eigenvalue problem for the target state and its energy. The conventional VQE essentially explores the parameter subspace for a given wave function ansatz through numerical optimization, which is usually constrained by many factors ranging from ansatz inexactness to barren plateaus and others. For given Givens rotations that generate excited state configurations, the lowest eigenvalue obtained from the GCIM method guarantees a lower bound of the most optimal solution from the VQE. It is worth mentioning that: (i) for a standard (single-circuit) VQE, having one two-qubit Givens rotation acting on a standard quantum-chemistry reference (restricted Hartree-Fock) would not improve the energy estimate; (ii) “fermionic swap” gates71 would be required if the excitations included in the ansätze involve spin-orbitals that are not mapped onto adjacent qubits for a given quantum architecture.

From the performance difference, we observe that:

- Although the disentangled UCC ansätze can be crafted to be exact55, the ansätze employed in GCIM approach or in the generalized eigenvalue problem need not be exact. The only prerequisite for the ansätze used in this process is their ability to generate sufficient superpositioned states for a better approximation to the target state to be found in the corresponding subspace.

- The N-qubit Givens rotations (N ≥ 2) used in both VQE and GCIM solver may originate from the same set, ensuring a comparable level of complexity in the quantum circuits involved. To achieve the same level of exactness, VQE would require a deeper circuit by incorporating additional Givens rotations, while the GCIM algorithm necessitates more measurements (than a single VQE iteration). A direct comparison of the total quantum resources utilized between the two methods would hinge on both the number of generating functions required in the GCIM and the number of iterations needed in VQE.

- In the context of non-orthogonal quantum eigensolvers51,53,56,57, entangled basis sets are usually employed by applying entanglers on a single determinant reference. Some of these approaches rely on the modified Hadamard test of ref. 12 for evaluating the off-diagonal elements at a polynomial cost. Others53,57 often require domain-specific knowledge available in classical quantum chemistry to construct high-quality wave functions. Nevertheless, automating the sophisticated ansatz construction process to facilitate easier deployment of the non-orthogonal quantum solver, especially for non-experts, remains a challenge.

These observations then lead us to the pivotal question in advancing a more efficient GCIM algorithm for both classical and quantum computation: “How can we efficiently select the generating functions to construct a subspace in the Hilbert space that encompasses the target state?”

Scaling of the number of generating functions: from exponential to linear

In constructing a non-trivial UCC ansatz for a molecular system comprising \({n}_{e}\) electrons in N spin orbitals, one might consider applying a sequence of \(K\le {n}_{e}\) Givens rotations to a reference state \(\left\vert {\phi }_{0}\right\rangle\) to generate all the possible configurations. For example, if each Givens rotation corresponds to a single excitation, the disentangled UCC Singles ansatz can be expressed as:

\(\vert \psi (\overrightarrow{\theta })\rangle =\mathop{\prod }\nolimits_{i = 1}^{K}{G}_{{p}_{i},{q}_{i}}({\theta }_{i})\left\vert {\phi }_{0}\right\rangle ,\qquad (3)\)

where each \({G}_{{p}_{i},{q}_{i}}\) generates a superposition of no more than two states. Therefore, as \(\overrightarrow{\theta }=\{{\theta }_{i}| i=1,\cdots \,,K\}\) varies, the total number of configurations, \({n}_{c}\), within the superpositioned ansätze (3) cannot exceed \({2}^{K}\). This number is the maximum number of generating functions needed to achieve the optimal solution within the corresponding subspace, but it scales exponentially with the number of Givens rotations applied. A strategy to potentially generate the full set of \({2}^{K}\) generating functions involves applying products of 1, 2, ⋯, K two-qubit Givens rotations separately to the reference, as shown below and in Fig. 2:

- \(\vert {\phi }_{\!0}\rangle ,\)

- \({G}_{{p}_{i},{q}_{i}}({\theta }_{\!i})\vert {\phi }_{0}\rangle ,\,\,1\le i\le K,\)

- \({G}_{{p}_{i},{q}_{i}}({\theta }_{i}){G}_{{p}_{j},{q}_{j}}({\theta }_{j})\left\vert {\phi }_{0}\right\rangle ,\,\,1\le i < j\le K,\)

- \({G}_{{p}_{i},{q}_{i}}({\theta }_{i}){G}_{{p}_{j},{q}_{j}}({\theta }_{j}){G}_{{p}_{k},{q}_{k}}({\theta }_{k})\left\vert {\phi }_{0}\right\rangle ,\,\,1\le i < j < k\le K,\)

- ⋮

- \(\mathop{\prod }\nolimits_{i = 1}^{K}{G}_{{p}_{i},{q}_{i}}({\theta }_{i})\left\vert {\phi }_{0}\right\rangle\)

Here, the number of generating functions employing k Givens rotations is equivalent to the number of k-combinations from the set of K Givens rotations, aligning with the combinatorial identity \(\mathop{\sum }\nolimits_{k = 0}^{K}\left(\begin{array}{c}K\\ k\end{array}\right)={2}^{K}\). Importantly, when \(K={n}_{e}\), each sequence of Givens rotations in the above list can be tailored to include a unique excited configuration within the associated subspace, thereby mapping the entire configuration space to ensure exactness in this limit. This characteristic bears notable similarity to full configuration interaction treatments, with the distinctive aspect that this approach constructs a non-orthogonal many-body basis set. In practice, to capture strong correlation with fewer generating functions, generalized N-qubit Givens rotations are commonly applied in quantum chemical simulations. A typical example includes the particle-hole one- and two-body unitary operators frequently employed in VQE and ADAPT-VQE simulations. Notably, it has been demonstrated that products of only these particle-hole one- and two-body unitary operators can approximate any state with arbitrary precision55.
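The counting argument can be illustrated by enumerating which subsets of the K rotations are applied to the reference. This is a small sketch with hypothetical names; the `max_level` argument anticipates a truncation to products of at most k rotations and is an illustrative addition.

```python
from itertools import combinations
from math import comb


def generating_function_labels(K, max_level=None):
    """Index tuples (i < j < ...) of the Givens rotations applied to the reference."""
    top = K if max_level is None else min(max_level, K)
    return [subset
            for k in range(top + 1)
            for subset in combinations(range(1, K + 1), k)]


K = 4
full = generating_function_labels(K)                 # all products of 0..K rotations
level2 = generating_function_labels(K, max_level=2)  # products of at most 2 rotations
print(len(full), len(level2))  # 16 11
```

The empty tuple stands for the bare reference, and the full list has \(2^K\) entries, while a truncated list grows only polynomially in K for fixed level.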

It’s important to recognize that the generating functions, including the ansätze, are not generally orthogonal. This non-orthogonality necessitates extra resources for evaluating the associated off-diagonal matrix elements and the entire overlap matrix for solving a generalized eigenvalue problem. Additionally, if higher-order Givens rotations are involved, the configuration subspace expansion will be influenced by the ordering of the Givens rotations in the ansätze. Nevertheless, the ordering limitation can be partially circumvented by allowing the possible permutations of the Givens rotations within the generating functions.

The strategy outlined above can also be employed to establish a hierarchy of approximations to the GCIM wave function, targeting a configuration subspace where the ansatz (3) is fully projected out. For example, the approximation at level k (0 ≤ k < K) can be defined by a working subspace that incorporates both the ansatz (3) and some generating functions produced by applying a product of at most k Givens rotations to the reference. Notably, when k = K, regardless of the rotations in the generating functions, the configuration subspace has expanded sufficiently for the ansatz (3) to be fully projected out, completely removing the constraints in the optimization. For other approximations where 0 ≤ k < K, a variational procedure can be undertaken before the configurational subspace expansion, aiming to deduce a lower bound for the optimal expectation value of H with respect to the ansatz (3). In this fashion, the GCIM solver can be implemented as a subsequent step either after each VQE iteration or following a complete VQE calculation to relieve some of the constraints due to the local minima and the inexactness of the ansatz.

Integration of GCIM with ADAPT approach

To facilitate a more robust selection of generating functions, we consider the fluctuation of the GCIM energy when a new generating function is included. In the context of VQE, similar considerations have led to the development of its ADAPT (Adaptive Derivative-Assembled Pseudo-Trotter) version. In the GCIM framework, several routines can be proposed for a gradient-based ADAPT-GCIM approach. A straightforward method is to directly compute the GCIM energy gradient with respect to the scalar rotation, as detailed in the Supplementary Information (Section III). The energy gradient computed in this way depends on the eigenvector solved from the GCIM generalized eigenvalue problem after the inclusion of new generating functions. However, when solving a generalized eigenvalue problem, the eigenvalue (i.e., the energy) is more sensitive to the working subspace than to the scalar rotation in the employed generating functions. This sensitivity is exemplified in Lemma 1 of Theorem 1 in the Supplementary Information (Section II), where, for a 2 × 2 generalized eigenvalue problem, the choice of the scalar rotations in the employed generating functions is relatively flexible.
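One way to see why the scalar rotation matters less than the subspace is a two-configuration example: a configuration \(\vert a\rangle\) together with any rotated state \(\cos \theta \vert a\rangle +\sin \theta \vert b\rangle\) (with \(\sin \theta \ne 0\)) spans the same two-dimensional space, so the lowest generalized eigenvalue does not depend on θ. The sketch below uses a hypothetical 2 × 2 Hamiltonian and is only an illustration of this point, not a restatement of the lemma in the Supplementary Information.

```python
import numpy as np
from scipy.linalg import eigh


def lowest_energy_2x2(h2, theta):
    """Lowest generalized eigenvalue in span{|a>, cos(theta)|a> + sin(theta)|b>}."""
    a = np.array([1.0, 0.0])
    surrogate = np.array([np.cos(theta), np.sin(theta)])
    basis = np.column_stack([a, surrogate])
    h_eff = basis.T @ h2 @ basis   # projected Hamiltonian
    s = basis.T @ basis            # overlap matrix
    return float(eigh(h_eff, s, eigvals_only=True)[0])


h2 = np.array([[-1.0, 0.3], [0.3, -0.4]])  # hypothetical two-configuration Hamiltonian
reference = float(np.linalg.eigvalsh(h2)[0])
energies = [lowest_energy_2x2(h2, t) for t in (0.1, 0.5, np.pi / 4, 1.3)]
print(reference, energies)
```

All four angles return the same energy up to rounding; in exact arithmetic only the conditioning of the overlap matrix, not the eigenvalue, depends on θ.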

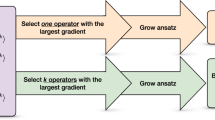

This consideration leads us to propose a more flexible, gradient-based ADAPT-GCIM algorithm (illustrated in Figs. 2 and 3a). In this approach, we use a surrogate product state \({\vert \psi \rangle }_{s}\) that, irrespective of its specific non-singular scalar rotations, primarily serves as a metric to (i) abstract the change in the GCIM working subspace and (ii) approximate the GCIM energy gradient calculation. Utilizing this metric, we can compute the gradient in a manner similar to that in the conventional ADAPT-VQE. However, a significant difference from ADAPT-VQE is that in ADAPT-GCIM we skip the optimization of phases, which, as we will see later, significantly reduces the simulation time. Also, the energy in ADAPT-GCIM is not directly obtained from the measurement of the expectation value with respect to the surrogate state. Instead, it is obtained from solving a generalized eigenvalue problem within the working subspace.
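The selection loop described above can be sketched with dense matrices. The following is a hedged illustration rather than the authors' implementation. It assumes a hypothetical random real-symmetric Hamiltonian, a pool of real antisymmetric generators \(A_k\), a fixed unoptimized angle of π/4, an ADAPT-VQE-style gradient \(\langle {\psi }_{s}\vert [H,{A}_{k}]\vert {\psi }_{s}\rangle\), and that each iteration adds both the newly rotated reference and the updated surrogate product state to the basis. Selected operators are removed from the pool, and near-null directions of the overlap matrix are discarded before diagonalization.

```python
import numpy as np
from scipy.linalg import expm


def lowest_generalized_eigenvalue(h, basis, s_cut=1e-10):
    """Solve H f = E S f after discarding near-null directions of S."""
    vecs = np.column_stack(basis)
    s = vecs.T @ vecs
    h_eff = vecs.T @ h @ vecs
    w, v = np.linalg.eigh(s)
    keep = w > s_cut
    x = v[:, keep] / np.sqrt(w[keep])  # canonical orthogonalization
    return float(np.linalg.eigvalsh(x.T @ h_eff @ x)[0])


def adapt_gcim(h, pool, reference, theta=np.pi / 4, max_iter=10):
    """Gradient-based basis selection without any parameter optimization."""
    surrogate = reference.copy()
    basis = [reference]
    remaining = list(range(len(pool)))
    energies = [lowest_generalized_eigenvalue(h, basis)]
    for _ in range(min(max_iter, len(pool))):
        # <psi_s|[H, A_k]|psi_s> = 2 psi_s^T H A_k psi_s for real psi_s, H, A_k
        grads = [2.0 * surrogate @ h @ (pool[k] @ surrogate) for k in remaining]
        k = remaining.pop(int(np.argmax(np.abs(grads))))
        g = expm(theta * pool[k])        # fixed, unoptimized rotation
        surrogate = g @ surrogate        # surrogate product state
        basis += [g @ reference, surrogate]
        energies.append(lowest_generalized_eigenvalue(h, basis))
    return energies


dim = 6
rng = np.random.default_rng(7)
a = rng.normal(size=(dim, dim))
h = (a + a.T) / 2                        # hypothetical dense Hamiltonian
pool = []
for p in range(dim):
    for q in range(p + 1, dim):
        gen = np.zeros((dim, dim))
        gen[p, q], gen[q, p] = 1.0, -1.0  # real antisymmetric generator
        pool.append(gen)
reference = np.eye(dim)[0]
energies = adapt_gcim(h, pool, reference)
print(energies[0], energies[-1], float(np.linalg.eigvalsh(h)[0]))
```

Because each iteration only enlarges the working subspace, the sequence of lowest eigenvalues is non-increasing and stays above the exact ground-state energy; no angle is ever optimized.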

a Schematic depiction of the ADAPT-GCIM algorithm in (n + 1)-th iteration. The working subspace and its corresponding configuration subspace are characterized by a surrogate state \({\vert \psi \rangle }_{s}\). b In a two-configuration subspace, a Givens rotation can couple two configurations, effectively generating a surrogate state, a superposition of two configurations, that can be employed as a metric to characterize this subspace. The quality of the working subspace, comprising one configuration and the surrogate state, is not sensitive to the specific choice of scalar rotation.

Numerical and real hardware experiments

We have performed numerical experiments on four molecular systems across seven geometries to test the performance of our proposed ADAPT-GCIM approach in searching the ground states corresponding to different electronic configurations. All experiments use the STO-3G basis. For instance, the more pronounced quasidegeneracy in the almost-square H4 compared to linear H4 (ref. 50), and in stretched H6 as opposed to compact H6, presents strong static correlations that pose a challenge for traditional single-reference methods58. Additionally, we propose two GCIM-modified ADAPT-VQE approaches, ADAPT-VQE-GCIM and ADAPT-VQE-GCIM(1), for performance analysis. In the ADAPT-VQE-GCIM approach, a GCIM step is performed after each ADAPT-VQE iteration, while in the ADAPT-VQE-GCIM(1) approach, a ‘one-shot’ GCIM calculation is performed at the conclusion of an ADAPT-VQE calculation (see Supplementary Information (Section IV) for more details). The performance results, as shown in Fig. 4, include comparisons with ADAPT-VQE. It is evident that the GCIMs offer several strategies for locating a lower bound to the ADAPT-VQE energy. In all the test cases, the ADAPT-VQE-GCIM and ADAPT-GCIM approaches exhibit steady, monotonic convergence curves, almost always reaching close to machine precision. To verify the accuracy, we reconstruct the ADAPT-GCIM approximation to the ground-state vector of H by

\(\vert {\psi }_{GCIM}\rangle =\frac{1}{{\mathcal{N}}}\sum _{j}{f}_{j}\vert {\psi }_{j}\rangle ,\qquad (4)\)

where f is the eigenvector solved from the generalized eigenvalue problem in ADAPT-GCIM at the final iteration and \(\{\vert {\psi }_{j}\rangle \}\) is the selected basis set. \({\mathcal{N}}\) denotes the normalization factor. Table 1 provides the overlaps between the exact ground-state vector, \(\vert {\psi }_{exact}\rangle\), and corresponding GCIM approximation for all molecules in this work, numerically proving the accuracy of the ADAPT-GCIM method. Furthermore, compared to ADAPT-VQE, GCIM approaches perform better in strongly correlated cases. For example, in the case of H6, as the H − H bond length increases, the number of ADAPT-GCIM or ADAPT-VQE-GCIM iterations decreases, while the number of ADAPT-VQE iterations increases.
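For reference, the normalization factor and the reported overlap follow directly from the overlap matrix of the selected basis. The short derivation below assumes that \({\mathcal{N}}\) is chosen so that the reconstructed state has unit norm and writes \(S_{ij}\) for the overlap matrix elements; the symbol \(\vert {\psi }_{GCIM}\rangle\) for the reconstructed state is used here for illustration.

```latex
\begin{align}
\mathcal{N}^{2}
  &= \Big\langle \sum_{i} f_{i}\,\psi_{i} \Big| \sum_{j} f_{j}\,\psi_{j} \Big\rangle
   = \sum_{i,j} f_{i}^{*}\, S_{ij}\, f_{j}
   = f^{\dagger} S f,
  \qquad S_{ij} = \langle \psi_{i} \vert \psi_{j} \rangle, \\
\langle \psi_{exact} \vert \psi_{GCIM} \rangle
  &= \frac{1}{\mathcal{N}} \sum_{j} f_{j}\, \langle \psi_{exact} \vert \psi_{j} \rangle .
\end{align}
```

If the generalized eigenvalue solver already returns eigenvectors normalized with respect to S, that is \(f^{\dagger }Sf=1\), then \({\mathcal{N}}=1\) and no further rescaling is needed.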

For comparison, results from ADAPT-VQE, ADAPT-VQE-GCIM, and ADAPT-VQE-GCIM(1) are also included. The shaded region marks where the error is smaller than chemical accuracy. ADAPT-VQE and ADAPT-VQE-GCIM are considered converged when the sum of the magnitudes of all gradients falls below \(1{0}^{-4}\). ADAPT-GCIM is terminated if the changes in the lowest eigenvalue stay under \(1{0}^{-6}\) a.u. for at most 10 (for H4) or 25 (the other molecules) consecutive iterations, where this threshold depends on the size of the operator pool and the number of unchosen ansätze.

Using the same set of circuits, the GCIM formalism provides, in addition to ground-state energies, estimates of the energies of low-lying excited states. In Table 2, we compare excitation energies obtained with EOMCCSD (equation-of-motion CC approaches with singles and doubles)59, EOMCCSDT (equation-of-motion CC approaches with singles, doubles, and triples)60, and the ADAPT-GCIM approaches. The excited states collated in Table 2 comprise states of mixed configurational character dominated by single and double excitations from the ground-state Hartree-Fock determinant (SD) and challenging states dominated by double excitations (D). For the challenging low-lying doubly excited state of the H6 system at \({R}_{{\rm{H-H}}}\) = 1.8521 Å, the ADAPT-GCIM approach yields an excitation energy in good agreement with the EOMCCSDT one and more accurate than the EOMCCSD estimate.

In assessing the quantum resources needed for the ADAPT approaches, it is apparent that ADAPT-VQE-GCIM and ADAPT-VQE-GCIM(1) require additional resources compared to ADAPT-VQE approach to improve energy results. On the other hand, the direct comparison between ADAPT-VQE and ADAPT-GCIM is not straightforward. For example, the number of CNOT gates in ADAPT-VQE and ADAPT-GCIM simulations depends on the ansatz preparation and the number of measurements to reach a desired accuracy. Fig. 5 demonstrates the total number of CNOTs for preparing the following ansätze:

- \(\mathop{\prod }\nolimits_{i = 1}^{k}{G}_{i}({\theta }_{i})\vert {\phi }_{{\rm{HF}}}\rangle\) at the k-th ADAPT-VQE iteration;

- \({G}_{k}({\theta }_{k})\vert {\phi }_{{\rm{HF}}}\rangle\) and \(\mathop{\prod }\nolimits_{i = 1}^{k}{G}_{i}({\theta }_{i})\vert {\phi }_{{\rm{HF}}}\rangle\) at the k-th ADAPT-GCIM iteration,

at each energy error level. Here, \({G}_{i}\) is either a UCC single or double excitation operator, chosen from the same operator pool. As can be seen, ADAPT-GCIM appears to require fewer CNOT gates than ADAPT-VQE for more strongly correlated cases.

For ADAPT-VQE, the ansatz is the product of operators that prepares the VQE state \(\vert {\psi }_{VQE}\rangle\). For ADAPT-GCIM, the newly formed ansätze are the newly selected Givens rotation operator and the product of Givens rotation operators. All circuits are generated by Qiskit from the first-order Trotterized fermionic operators. The reduced counts are obtained using qiskit.transpile with optimization level 3, which includes canceling back-to-back CNOT gates, commutative cancellation, and unitary synthesis. Our estimates for ADAPT-VQE match the corresponding calculations in ref. 61. A further discussion on the calculation of the number of CNOT gates is in the Supplementary Information (Section V).

Regarding measurements in fault-tolerant quantum computation, ADAPT-VQE requires measurements for VQE and gradient calculations at each iteration. The number of VQE measurements depends on the average number of optimizations per iteration (\({\tilde{N}}_{{\rm{opt}}}\)) and the total number of iterations (\({N}_{{\rm{iter}}}\)). The number of gradient measurements is roughly equal to the size of the pool multiplied by the number of unitary terms in the Hamiltonian (\({N}_{{\rm{term}}}\)), which can be reduced with grouping techniques. Thus, the total number of measurements for ADAPT-VQE scales as \({\mathcal{O}}({\tilde{N}}_{{\rm{opt}}}\times {N}_{{\rm{iter}}}+{N}_{{\rm{term}}}\times {N}_{{\rm{iter}}})\). In ADAPT-GCIM, measurements are needed for gradients and for constructing the H and S matrices. The latter scales as the squared number of generating functions, \({\mathcal{O}}({N}_{{\rm{GF}}}^{2})\), but per iteration it only grows as \({\mathcal{O}}({N}_{{\rm{GF}}})\), assuming a linear increase of generating functions with iterations. Since \({N}_{{\rm{GF}}} \sim {\mathcal{O}}({N}_{{\rm{iter}}})\), the measurement comparison between the two ADAPT approaches reduces to \({\mathcal{O}}({\tilde{N}}_{{\rm{opt}}}\times {N}_{{\rm{iter(VQE)}}})\) vs. \({\mathcal{O}}({N}_{{\rm{iter(GCIM)}}}^{2})\).
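The per-iteration and cumulative scalings of the H and S matrix elements can be made explicit with a short count. The sketch assumes that the basis starts from the reference alone, that every iteration adds a fixed number c of generating functions (c is an illustrative symbol, not taken from the text), and that only the upper triangle of each Hermitian matrix has to be evaluated.

```latex
\begin{align}
N_{\rm GF}(n) &= 1 + c\,n, \\
\Delta(n) &= \underbrace{c\,N_{\rm GF}(n-1)}_{\text{new-old pairs}}
           + \underbrace{\tfrac{1}{2}\,c\,(c+1)}_{\text{new-new pairs}}
           = \mathcal{O}(N_{\rm GF}), \\
\sum_{n=1}^{N_{\rm iter}} \Delta(n)
          &= \tfrac{1}{2}\,N_{\rm GF}\,(N_{\rm GF}+1) - 1
           = \mathcal{O}(N_{\rm GF}^{2}) = \mathcal{O}(N_{\rm iter}^{2}).
\end{align}
```

Here \(\Delta (n)\) is the number of new matrix elements per matrix at iteration n, and \(N_{\rm GF}\) in the last line denotes the final basis size \(N_{\rm GF}(N_{\rm iter})\).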

It is worth mentioning that, for weakly or moderately correlated cases, ADAPT-GCIM usually requires more iterations than ADAPT-VQE, as shown in Fig. 4. The reason could be partially attributed to the ordering of the operators, which results from skipping the parameter optimization, as well as the non-orthogonal nature of the bases. To enforce orthogonality of the GCIM bases, a secondary subspace projection can be performed on the effective Hamiltonian as illustrated in Section VI of the Supplementary Information, where the numerical simulations indeed exhibit slightly better convergence performance for some molecules (see LiH and H6 performance curves in Fig. S1). To improve the convergence affected by the ordering of the operators, in Section VIII of the Supplementary Information we consider a middle ground between ADAPT-VQE-GCIM and ADAPT-GCIM by allowing intermittent and truncated optimization, where for every m ADAPT iterations (“intermittent”), at most n rounds (“truncated”) of classical optimization can be conducted on ansatz parameters over the corresponding objective function. The proposed ADAPT-GCIM(m, n) approach indeed provides a significant improvement in convergence speed: ADAPT-GCIM(5,2) reaches the \(1{0}^{-6}\) error level faster than ADAPT-GCIM and ADAPT-VQE, as evidenced in Fig. S3 for two H6 test cases. Also, as shown in Table 3, the extra optimization rounds and simulation time brought by introducing the intermittent and truncated optimization to ADAPT-GCIM can be minimal.

To account for the impact of optimization on the performance difference, we list the total rounds of classical optimization and simulation times for the energy calculations of seven geometries using the ADAPT approaches on the same computing platform in Table 3. Remarkably, even though the circuit for obtaining the H and S matrices in ADAPT-GCIM is different from the Hadamard circuit used in ADAPT-VQE, in that the off-diagonal matrix elements require more CNOT gates for simultaneous base preparation, the ADAPT-GCIM approaches still significantly outperform ADAPT-VQE in simulation time, in particular for more strongly correlated cases. For example, for the strongly correlated H6 molecule at a stretched H−H bond length of 5.0000 Å, the GCIM approaches completed in 12-13 s, while ADAPT-VQE required approximately four hours to achieve a similar level of accuracy. It is important to note that stretched molecules often exhibit multi-reference characteristics, posing significant challenges for single-reference methods. However, quantum approaches, including VQEs and GCIM, with appropriately designed circuits/operators that account for this multi-reference nature should, in principle, offer superior simulation performance.

In practice, real quantum measurements often encounter significant noise, especially in solving the generalized eigenvalue problem where finite shot uncertainty is a concern50. Stronger correlations typically require substantially more measurements than weaker ones. As detailed in the Supplementary Information (Section VII), errors in the S matrix have a greater impact than those in the H matrix. However, with importance sampling, accuracy can still be improved by orders of magnitude in most cases despite these challenges (see Fig. S2). We deployed the ADAPT-GCIM computation of the strongly correlated linear H4 molecule on an IBM superconducting quantum computer, ibm_osaka, to conduct a rudimentary test of the applicability of the GCIM approaches on emerging hardware. As shown in the Supplementary Information (Section IX), with problem-specific error mitigation methods, the error in ground-state energy estimation was reduced from 0.046 a.u. to \(3.9\times 1{0}^{-9}\) a.u.

Discussion

The GCIM approach stands out among other subspace expansion and generalized eigenvalue problem-solving methods due to its size-extensiveness, attributed to its use of UCC ansätze as bases. The approach’s scalability for larger systems will be enhanced by its direct application to qubit space, using the exponential of anti-hermitian Pauli strings as generating functions. This strategy aligns with developments like qubit-ADAPT-VQE, which offers scalability for the ADAPT-VQE method61.

The straightforwardness of the GCIM ansätze makes it a promising solver also for excited state computation and Hamiltonian downfolding, as demonstrated in the ADAPT-GCIM procedure (Fig. 3a). This lays the foundation for advanced quantum simulations in chemistry. To further characterize the quality of the ADAPT-GCIM wave function, we find the UCC-type ansatz that maximizes the overlap with the ADAPT-GCIM optimized state, denoted \(\vert {\psi }_{GCIM}\rangle\), and find the corresponding UCC energy. That is, we need to find \(\vert {\psi }_{UCC}^{* }\rangle := \vert {\psi }_{UCC}({\vec{\theta }}^{* })\rangle\) where

\({\vec{\theta }}^{* }=\mathop{{\rm{arg}}\,{\rm{max}}}\limits_{\vec{\theta }}\vert \langle {\psi }_{UCC}(\vec{\theta })\vert {\psi }_{GCIM}\rangle \vert .\qquad (5)\)

The most straightforward scheme is to use the UCCSD ansatz. We illustrate the quality of the UCCSD ansatz defined in such a way through eight examples in Table 4, where \({E}_{exact}\) is the exact ground-state energy and \({E}_{UCCSD}\) is its approximation from \(\left\vert {\psi }_{UCCSD}^{* }\right\rangle\). The optimizer for the UCCSD ansatz is the limited-memory BFGS (L-BFGS) algorithm.

Last but not least, while the gate counts in Fig. 5 match the estimates for fermionic operators in ref. 61, the CNOT counts could be greatly reduced in the future by adopting the implementations proposed in other works57,62,63,64. Another important direction is to avoid the Hadamard test when evaluating off-diagonal matrix entries in ADAPT-GCIM, which would effectively reduce the hardware requirements of the circuit implementation12,41,65.

Methods

The operator pool employed in all the ADAPT simulations in the present study consists of generalized singly and doubly spin-adapted excitation operators, as explicitly shown in the Supplementary Information (Section V). In all the ADAPT-VQE simulations, convergence is declared when the norm of the gradient vector is less than \({10}^{-4}\). Here, we use a stricter criterion than the one in ref. 66 to show the full picture of the performance of ADAPT-VQE. Other criteria, such as the magnitude of the change in the lowest eigenvalue, can be used for earlier convergence with the assistance of ADAPT-VQE-GCIM.

For ADAPT-GCIM, the algorithm terminates when the change in the lowest eigenvalue is smaller than \({10}^{-6}\) a.u. after \(T=\min \{{T}_{auto},{T}_{usr}\}\) consecutive ADAPT iterations, where \({T}_{auto}\) is set to 20% of the number of unselected operators in the pool and \({T}_{usr}\) is a user-defined constant. In this work, \({T}_{usr}\) is set to 10 for H4 and 25 for the other molecules, reflecting the different sizes of the operator pools. The termination condition is heuristic, rather than gradient-based as in ADAPT-VQE, because ADAPT-GCIM uses the VQE objective function as an approximation, together with unoptimized parameter values, during the ansatz selection. Hence, at later iterations, while ADAPT-GCIM may already provide highly accurate energy estimates, the norm of the gradient vector at the operator selection can still remain relatively large.
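The termination rule above can be sketched in a few lines. The function names `gcim_patience` and `should_terminate` are hypothetical, and two details are assumptions rather than statements from the text: the 20% is rounded down (with a floor of one iteration), and the criterion is read as requiring every one of the last T changes in the lowest eigenvalue to fall below the tolerance.

```python
def gcim_patience(n_unselected, t_usr, percent=20):
    """T = min(T_auto, T_usr), with T_auto = 20% of the unselected operators.

    Rounding down and the floor of 1 are assumptions of this sketch.
    """
    t_auto = max(1, (percent * n_unselected) // 100)
    return min(t_auto, t_usr)


def should_terminate(energies, patience, tol=1e-6):
    """True if each of the last `patience` changes in the lowest eigenvalue is below tol."""
    if len(energies) < patience + 1:
        return False  # not enough history yet
    recent = energies[-(patience + 1):]
    return all(abs(b - a) < tol for a, b in zip(recent, recent[1:]))


# Made-up energy history (a.u.), one lowest eigenvalue per ADAPT iteration
history = [-1.0, -1.5, -1.8, -1.8, -1.8, -1.8]
stop = should_terminate(history, patience=3)
```

In a full loop, `gcim_patience` would presumably be re-evaluated as the pool of unselected operators shrinks, so T can decrease over the run.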

One issue in the GCIM simulations is the numerical instability of solving the generalized eigenvalue problem. The overlap matrix S can become numerically indefinite at certain iterations. In classical computing, this issue can be avoided by orthogonalizing the generating function basis set. In quantum computing, however, a quantum version of the orthogonalization algorithm, such as the quantum Gram-Schmidt process, usually requires extra quantum resources, such as QRAM67. In the present study, when a singular overlap matrix is encountered, we project both H and S using the eigenvectors of S corresponding to large positive eigenvalues33. Without the disturbance due to the finite number of shots, the threshold for a sufficiently “large” eigenvalue can be as relaxed as \({10}^{-13}\). Otherwise, under finite-sampling noise (see Section VII of the Supplementary Information), this threshold is usually set to \({10}^{-5}\) or \({10}^{-6}\). A comparison test in the Supplementary Information (Section VI) shows that this mitigation is very effective in achieving the accuracy levels reachable by GCIM methods.
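A minimal NumPy sketch of this projection step follows, assuming dense Hermitian H and S are already available as arrays. The function name `solve_projected_gep` and the toy matrices are hypothetical, and the rescaling by the retained eigenvalues of S (canonical orthogonalization) is one common way to solve the projected problem, not necessarily the one used in the paper's code.

```python
import numpy as np


def solve_projected_gep(H, S, threshold=1e-13):
    """Solve H c = E S c after discarding directions of S with eigenvalue <= threshold."""
    s_vals, s_vecs = np.linalg.eigh(S)
    V = s_vecs[:, s_vals > threshold]          # eigenvectors with large positive eigenvalues
    H_p = V.conj().T @ H @ V                   # projected Hamiltonian
    S_p = V.conj().T @ S @ V                   # projected overlap (diagonal up to round-off)
    # Rescale so the projected overlap becomes the identity
    X = np.diag(1.0 / np.sqrt(np.real(np.diag(S_p))))
    H_orth = X @ H_p @ X
    H_orth = 0.5 * (H_orth + H_orth.conj().T)  # remove round-off asymmetry
    energies, U = np.linalg.eigh(H_orth)
    return energies, V @ X @ U                 # coefficients in the original basis


# Toy data: 5 non-orthogonal vectors in a 4-dimensional space, one linearly dependent
rng = np.random.default_rng(0)
A = rng.normal(size=(4, 4))
H0 = 0.5 * (A + A.T)                           # toy symmetric "Hamiltonian"
B = rng.normal(size=(4, 4))
B = np.column_stack([B, B[:, 0] + B[:, 1]])    # fifth vector depends on the first two
B /= np.linalg.norm(B, axis=0)                 # normalize each basis vector
S = B.T @ B                                    # singular overlap matrix
H = B.T @ H0 @ B

energies, coeffs = solve_projected_gep(H, S)
```

Because the five toy vectors span the whole four-dimensional space, the lowest projected eigenvalue should agree with the lowest eigenvalue of `H0`; under sampling noise the threshold would be raised as described above.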

In the proposed ADAPT-VQE-GCIM(1), the number of bases in the working subspace equals the number of Givens rotations in the ansatz generated at the last ADAPT-VQE iteration, incremented by one. This increment accounts for the product of these Givens rotations, which corresponds to the ansatz itself. Conversely, in the ADAPT-GCIM and ADAPT-VQE-GCIM approaches, the number of bases is twice the number of iterations. To illustrate this, consider the k-th iteration (k > 1): when the Givens rotation \({G}_{k}\) with the greatest gradient is selected, two associated basis vectors,

- \({G}_{k}({\theta }_{k})\left\vert {\phi }_{{\rm{HF}}}\right\rangle\) and
- \({G}_{k}({\theta }_{k})\mathop{\prod }\nolimits_{i = 1}^{k-1}{G}_{i}({\theta }_{i})\left\vert {\phi }_{{\rm{HF}}}\right\rangle\)

are added to the working subspace. When k = 1, the basis set is \(\{\vert {\phi }_{{\rm{HF}}}\rangle ,{G}_{1}({\theta }_{1})\vert {\phi }_{{\rm{HF}}}\rangle \}\). Hence, the number of bases increases by two in every iteration. The key difference is that in ADAPT-GCIM, the phase is set to a constant scalar (e.g., π/4) for any Givens rotation in the ansatz, while in ADAPT-VQE-GCIM, the phases \({\theta }_{1}\) to \({\theta }_{k}\) are optimized during the k-th iteration. In all the experiments of ADAPT-GCIM, \({\theta }_{k}\) is set to π/4 for all iterations.
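The bookkeeping of the working subspace can be illustrated with a toy model in which each Givens rotation is a plane rotation acting on a small real vector space and the reference vector plays the role of the Hartree-Fock state. This is a sketch under those assumptions only; `givens`, `build_basis`, and the list of selected index pairs are hypothetical, and the operator selection by gradient is not modeled.

```python
import numpy as np


def givens(dim, p, q, theta):
    """Plane rotation by theta in the (p, q) coordinates of a dim-dimensional space."""
    G = np.eye(dim)
    c, s = np.cos(theta), np.sin(theta)
    G[p, p], G[q, q] = c, c
    G[p, q], G[q, p] = -s, s
    return G


def build_basis(ref, selected, theta=np.pi / 4):
    """Collect the ADAPT-GCIM-style basis vectors for rotations chosen in the given order."""
    basis = []
    product_state = ref.copy()                      # prod_{i<k} G_i |ref>
    for k, (p, q) in enumerate(selected, start=1):
        G_k = givens(ref.size, p, q, theta)
        if k == 1:
            basis += [ref.copy(), G_k @ ref]        # {|ref>, G_1 |ref>}
        else:
            basis += [G_k @ ref, G_k @ product_state]
        product_state = G_k @ product_state         # now prod_{i<=k} G_i |ref>
    return np.column_stack(basis)


ref = np.zeros(6)
ref[0] = 1.0                                        # toy reference vector
selected = [(0, 1), (0, 2), (1, 3)]                 # hypothetical selection order
B = build_basis(ref, selected)                      # columns are the basis vectors
```

After three iterations the toy basis has six columns, two per iteration, with the fixed phase π/4 used for every rotation as in the ADAPT-GCIM setting.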

The implementation of ADAPT-VQE uses the original code in ref. 66 from the corresponding repository68, and its computation is based on the SciPy sparse linear algebra package69. To provide the same foundation for comparison, the calculations in Fig. 4 for the three GCIM algorithms were performed in the same manner. The quantum resource estimation, shown in Fig. 5, utilizes Qiskit70 for trotterization and circuit generation for excitation operators. The data in Table 4 were computed on the NERSC Perlmutter supercomputer. The remaining numerical experiments were carried out on a laptop with an Apple M3 Max chip.

Data availability

The datasets generated and/or analyzed during the current study are available in the GitHub repository (https://github.com/pnnl/QuGCM).

Code availability

The underlying code for this study is available in the “QuGCM” GitHub repository and can be accessed via this link: https://github.com/pnnl/QuGCM.

References

Imada, M., Fujimori, A. & Tokura, Y. Metal-insulator transitions. Rev. Mod. Phys. 70, 1039–1263 (1998).

Witzke, R. J., Hait, D., Chakarawet, K., Head-Gordon, M. & Tilley, T. D. Bimetallic mechanism for alkyne cyclotrimerization with a two-coordinate fe precatalyst. ACS Catal. 10, 7800–7807 (2020).

Gould, C. A. et al. Ultrahard magnetism from mixed-valence dilanthanide complexes with metal-metal bonding. Science 375, 198–202 (2022).

Shavitt, I. & Bartlett, R. Many-Body Methods in Chemistry and Physics: MBPT and Coupled-Cluster Theory. Cambridge Molecular Science (Cambridge University Press, 2009). https://books.google.com/books?id=SWw6ac1NHZYC.

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014).

McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. N. J. Phys. 18, 023023 (2016).

Romero, J. et al. Strategies for quantum computing molecular energies using the unitary coupled cluster ansatz. Quantum Sci. Technol. 4, 014008 (2018).

Shen, Y. et al. Quantum implementation of the unitary coupled cluster for simulating molecular electronic structure. Phys. Rev. A 95, 020501 (2017).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242–246 (2017).

Kandala, A. et al. Error mitigation extends the computational reach of a noisy quantum processor. Nature 567, 491–495 (2019).

Colless, J. I. et al. Computation of molecular spectra on a quantum processor with an error-resilient algorithm. Phys. Rev. X 8, 011021 (2018).

Huggins, W. J., Lee, J., Baek, U., O’Gorman, B. & Whaley, K. B. A non-orthogonal variational quantum eigensolver. N. J. Phys. 22, 073009 (2020).

Cao, Y. et al. Quantum chemistry in the age of quantum computing. Chem. Rev. 119, 10856–10915 (2019).

Grimsley, H. R., Economou, S. E., Barnes, E. & Mayhall, N. J. An adaptive variational algorithm for exact molecular simulations on a quantum computer. Nat. Commun. 10, 1–9 (2019).

Grimsley, H. R., Claudino, D., Economou, S. E., Barnes, E. & Mayhall, N. J. Is the trotterized uccsd ansatz chemically well-defined? J. Chem. Theory Comput. 16, 1–6 (2019).

Verteletskyi, V., Yen, T.-C. & Izmaylov, A. F. Measurement optimization in the variational quantum eigensolver using a minimum clique cover. J. Chem. Phys. 152, 124114 (2020).

McArdle, S. et al. Variational ansatz-based quantum simulation of imaginary time evolution. npj Quantum Inf. 5, 1–6 (2019).

McArdle, S., Endo, S., Aspuru-Guzik, A., Benjamin, S. C. & Yuan, X. Quantum computational chemistry. Rev. Mod. Phys. 92, 015003 (2020).

Tilly, J. et al. The variational quantum eigensolver: a review of methods and best practices. Phys. Rep. 986, 1682 (2022).

Farhi, E., Goldstone, J. & Gutmann, S. A quantum approximate optimization algorithm. arXiv preprint arXiv:1411.4028 (2014).

Stein, S. et al. Eqc: ensembled quantum computing for variational quantum algorithms. In Proceedings of the 49th Annual International Symposium on Computer Architecture, 59–71 (2022).

Bharti, K. et al. Noisy intermediate-scale quantum (nisq) algorithms. Rev. Mod. Phys. 94, 015004 (2022).

Albash, T. & Lidar, D. A. Adiabatic quantum computation. Rev. Mod. Phys. 90, 015002 (2018).

Aaronson, S. & Arkhipov, A. The computational complexity of linear optics. In Proceedings of the Forty-Third Annual ACM Symposium on Theory of Computing, STOC ’11, 333–342 (Association for Computing Machinery, New York, NY, USA, 2011). https://doi.org/10.1145/1993636.1993682.

Trabesinger, A. Quantum simulation. Nat. Phys. 8, 263 (2012).

Georgescu, I. M., Ashhab, S. & Nori, F. Quantum simulation. Rev. Mod. Phys. 86, 153–185 (2014).

Motta, M. et al. Determining eigenstates and thermal states on a quantum computer using quantum imaginary time evolution. Nat. Phys. 16, 205–210 (2020).

Parrish, R. M. & McMahon, P. L. Quantum filter diagonalization: Quantum eigendecomposition without full quantum phase estimation. arXiv preprint arXiv:1909.08925 (2019).

Kyriienko, O. Quantum inverse iteration algorithm for programmable quantum simulators. npj Quantum Inf. 6, 1–8 (2020).

McClean, J. R., Kimchi-Schwartz, M. E., Carter, J. & de Jong, W. A. Hybrid quantum-classical hierarchy for mitigation of decoherence and determination of excited states. Phys. Rev. A 95, 042308 (2017).

Takeshita, T. et al. Increasing the representation accuracy of quantum simulations of chemistry without extra quantum resources. Phys. Rev. X 10, 011004 (2020).

McClean, J., Jiang, Z., Rubin, N., Babbush, R. & Neven, H. Decoding quantum errors with subspace expansions. Nat. Commun. 11, 636 (2020).

Urbanek, M., Camps, D., Van Beeumen, R. & de Jong, W. A. Chemistry on quantum computers with virtual quantum subspace expansion. J. Chem. Theory Comput. 16, 5425–5431 (2020).

Suchsland, P. et al. Algorithmic error mitigation scheme for current quantum processors. Quantum 5, 492 (2021).

Tkachenko, N. V. et al. Quantum davidson algorithm for excited states. arXiv preprint arXiv:2204.10741 (2023).

Seki, K. & Yunoki, S. Quantum power method by a superposition of time-evolved states. PRX Quantum 2, 010333 (2021).

Kowalski, K. & Peng, B. Quantum simulations employing connected moments expansions. J. Chem. Phys. 153, 201102 (2020).

Vallury, H. J., Jones, M. A., Hill, C. D. & Hollenberg, L. C. L. Quantum computed moments correction to variational estimates. Quantum 4, 373 (2020).

Aulicino, J. C., Keen, T. & Peng, B. State preparation and evolution in quantum computing: A perspective from hamiltonian moments. Int. J. Quantum Chem. 122, e26853 (2022).

Ollitrault, P. J. et al. Quantum equation of motion for computing molecular excitation energies on a noisy quantum processor. Phys. Rev. Res. 2, 043140 (2020).

Motta, M. et al. Subspace methods for electronic structure simulations on quantum computers. Electron. Struct. 6, 013001 (2024).

Hill, D. L. & Wheeler, J. A. Nuclear constitution and the interpretation of fission phenomena. Phys. Rev. 89, 1102 (1953).

Griffin, J. J. & Wheeler, J. A. Collective motions in nuclei by the method of generator coordinates. Phys. Rev. 108, 311–327 (1957).

Rodríguez-Guzmán, R., Egido, J. & Robledo, L. Correlations beyond the mean field in magnesium isotopes: angular momentum projection and configuration mixing. Nucl. Phys. A 709, 201–235 (2002).

Bender, M., Heenen, P.-H. & Reinhard, P.-G. Self-consistent mean-field models for nuclear structure. Rev. Mod. Phys. 75, 121 (2003).

Ring, P. & Schuck, P. The nuclear many-body problem (Springer Science & Business Media, 2004).

Yao, J., Meng, J., Ring, P. & Vretenar, D. Configuration mixing of angular-momentum-projected triaxial relativistic mean-field wave functions. Phys. Rev. C. 81, 044311 (2010).

Egido, J. L. State-of-the-art of beyond mean field theories with nuclear density functionals. Phys. Scr. 91, 073003 (2016).

Hizawa, N., Hagino, K. & Yoshida, K. Generator coordinate method with a conjugate momentum: Application to particle number projection. Phys. Rev. C. 103, 034313 (2021).

Zheng, M. et al. Quantum algorithms for generator coordinate methods. Phys. Rev. Res. 5, 023200 (2023).

Baek, U. et al. Say no to optimization: A nonorthogonal quantum eigensolver. PRX Quantum 4, 030307 (2023).

Hirsbrunner, M. R. et al. Diagnosing local minima and accelerating convergence of variational quantum eigensolvers with quantum subspace techniques https://arxiv.org/abs/2404.06534 (2024).

Marti-Dafcik, D., Burton, H. G. A. & Tew, D. P. Spin coupling is all you need: Encoding strong electron correlation on quantum computers https://arxiv.org/abs/2404.18878 (2024).

Fukutome, H. The group theoretical structure of fermion many-body systems arising from the canonical anticommutation relation. i: Lie algebras of fermion operators and exact generator coordinate representations of state vectors. Prog. Theor. Phys. 65, 809–827 (1981).

Evangelista, F. A., Chan, G. K.-L. & Scuseria, G. E. Exact parameterization of fermionic wave functions via unitary coupled cluster theory. J. Chem. Phys. 151, 244112 (2019).

Lee, J., Huggins, W. J., Head-Gordon, M. & Whaley, K. B. Generalized unitary coupled cluster wave functions for quantum computation. J. Chem. Theory Comput. 15, 311–324 (2019).

Anselmetti, G.-L. R., Wierichs, D., Gogolin, C. & Parrish, R. M. Local, expressive, quantum-number-preserving vqe ansätze for fermionic systems. N. J. Phys. 23, 113010 (2021).

Jankowski, K. & Paldus, J. Applicability of coupled-pair theories to quasidegenerate electronic states: a model study. Int. J. Quantum Chem. 18, 1243–1269 (1980).

Comeau, D. C. & Bartlett, R. J. The equation-of-motion coupled-cluster method. applications to open-and closed-shell reference states. Chem. Phys. Lett. 207, 414–423 (1993).

Kowalski, K. & Piecuch, P. The active-space equation-of-motion coupled-cluster methods for excited electronic states: the eomccsdt approach. J. Chem. Phys. 113, 8490–8502 (2000).

Tang, H. L. et al. qubit-adapt-vqe: An adaptive algorithm for constructing hardware-efficient ansätze on a quantum processor. PRX Quantum 2, 020310 (2021).

Berry, D. W., Ahokas, G., Cleve, R. & Sanders, B. C. Efficient quantum algorithms for simulating sparse hamiltonians. Comm. Math. Phys. 270, 359–371 (2007).

Arrazola, J. M. et al. Universal quantum circuits for quantum chemistry. Quantum 6, 742 (2022).

Kottmann, J. S. Molecular quantum circuit design: A graph-based approach. Quantum 7, 1073 (2023).

Cortes, C. L. & Gray, S. K. Quantum krylov subspace algorithms for ground-and excited-state energy estimation. Phys. Rev. A 105, 022417 (2022).

Grimsley, H. R., Economou, S. E., Barnes, E. & Mayhall, N. J. An adaptive variational algorithm for exact molecular simulations on a quantum computer. Nat. Commun. 10, https://doi.org/10.1038/s41467-019-10988-2 (2019).

Zhang, K., Hsieh, M.-H., Liu, L. & Tao, D. Quantum gram-schmidt processes and their application to efficient state readout for quantum algorithms. Phys. Rev. Res. 3, https://doi.org/10.1103/physrevresearch.3.043095 (2021).

Grimsley, H. R., Economou, S. E., Barnes, E. & Mayhall, N. J. adapt-vqe. https://github.com/mayhallgroup/adapt-vqe (2022).

Virtanen, P. et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 17, 261–272 (2020). Scipy version 1.11.3.

Qiskit contributors. Qiskit: An open-source framework for quantum computing (2023). Qiskit version 0.45.0.

Kivlichan, I. D. et al. Quantum simulation of electronic structure with linear depth and connectivity. Phys. Rev. Lett. 120, 110501 (2018).

Acknowledgements

M.Z., B.P., and K.K. acknowledge the support by the “Embedding QC into Many-body Frameworks for Strongly Correlated Molecular and Materials Systems” project, which is funded by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences (BES), the Division of Chemical Sciences, Geosciences, and Biosciences under FWP 72689. B.P. also acknowledges the support from the Early Career Research Program by the U.S. Department of Energy, Office of Science, under FWP 83466. A.L. and K.K. also acknowledge the support from the Quantum Science Center (QSC), a National Quantum Information Science Research Center of the U.S. Department of Energy (under FWP 76213). X.Y. was supported by National Science Foundation CAREER DMS-2143915. M.Z. and X.Y. were also supported by the Defense Advanced Research Projects Agency as part of the project W911NF2010022: The Quantum Computing Revolution and Optimization: Challenges and Opportunities. This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725. This research used resources of the National Energy Research Scientific Computing Center (NERSC), a U.S. Department of Energy Office of Science User Facility located at Lawrence Berkeley National Laboratory, operated under Contract No. DE-AC02-05CH11231. The Pacific Northwest National Laboratory is operated by Battelle for the U.S. Department of Energy under Contract DE-AC05-76RL01830.

Author information

Contributions

B.P. conceptualized the idea and designed the research. M.Z. and B.P. implemented the approaches and performed the simulations. All authors analyzed the data and contributed to paper writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zheng, M., Peng, B., Li, A. et al. Unleashed from constrained optimization: quantum computing for quantum chemistry employing generator coordinate inspired method. npj Quantum Inf 10, 127 (2024). https://doi.org/10.1038/s41534-024-00916-8

DOI: https://doi.org/10.1038/s41534-024-00916-8