Abstract

Matrix geometric means between two positive definite matrices can be defined from distinct perspectives—as solutions to certain nonlinear systems of equations, as points along geodesics in Riemannian geometry, and as solutions to certain optimisation problems. We devise quantum subroutines for the matrix geometric means, and construct solutions to the algebraic Riccati equation—an important class of nonlinear systems of equations appearing in machine learning, optimal control, estimation, and filtering. Using these subroutines, we present a new class of quantum learning algorithms, for both classical and quantum data, called quantum geometric mean metric learning, for weakly supervised learning and anomaly detection. The subroutines are also useful for estimating geometric Rényi relative entropies and the Uhlmann fidelity, in particular achieving optimal dependence on precision for the Uhlmann and Matsumoto fidelities. Finally, we provide a BQP-complete problem based on matrix geometric means that can be solved by our subroutines.


Introduction

Quantum computation is considered a rapidly emerging technology that has important implications for the development of algorithms. Many quantum algorithms that have theoretically demonstrated potential quantum advantage, however, have been chiefly directed towards linear problems—in part because quantum mechanics is itself linear. These include simulating solutions of linear systems of equations1, known as quantum linear algebra, and linear ordinary and partial differential equations2,3,4,5.

However, many problems of scientific interest are nonlinear. While most nonlinear systems of equations of interest for applications only appear after discretising nonlinear ordinary and partial differential equations, there is an important class of nonlinear systems of equations that is not only relevant to partial differential equations but is also of independent interest. This class consists of the algebraic Riccati equations, which are nonlinear matrix equations with quadratic nonlinearity6. These are also the stationary states of the Riccati matrix differential equations, which are essential in many applications across applied mathematics, science, and engineering. These nonlinear matrix equations are particularly relevant for optimal control, stability theory, filtering (e.g., the Kalman filter7), network theory, differential games, and estimation problems8.

It turns out that solutions to the algebraic Riccati equations are closely connected with the concept of a matrix geometric mean. For example, the unique solution to the simplest algebraic Riccati equation can be precisely expressed as the standard matrix geometric mean, as we will recall later. The matrix geometric means are matrix generalisations of the scalar geometric mean and have a long history in mathematics9,10; there are diverse approaches to this same concept. For example, the standard matrix geometric mean can be defined as the solution of an optimisation problem. The matrix geometric mean of two positive definite matrices also has an elegant geometric interpretation as the midpoint of the geodesic joining them on a Riemannian manifold6. The Monge map between two Gaussian distributions, appearing in optimal transport, can also be expressed in terms of the matrix geometric mean11. The standard and weighted matrix geometric means appear in quantum information in the form of quantum entropic12,13 and fidelity14,15,16 measures.

However, computing the matrix geometric mean involves matrix multiplications as well as nonlinear operations such as taking inverses and square roots of matrices. Classical numerical schemes for these tasks can be inefficient, with costs that are polynomial in the size of the matrix17. Under certain conditions, a sequence of matrix multiplications can be carried out more efficiently on a quantum computer. Our aim here is to construct quantum subroutines that embed the standard and weighted matrix geometric means into unitary operators, and to determine the conditions under which these embeddings can indeed be implemented efficiently. There are many possible such unitary operators, and we choose a formalism called block-encoding18,19,20.

The block-encoding of a non-unitary matrix Y is a unitary matrix UY whose upper left-hand block is proportional to Y. Such a unitary can be realised by a quantum circuit, which describes unitary evolution. The action of Y can subsequently be accessed by measuring the ancilla qubits and post-selecting on the all-zero outcome, which selects the top-left block. This provides a convenient building block for constructing sums and (integer and non-integer) powers of matrices by composing their block-encodings via unitary circuits. This formalism allows us to form block-encodings of the standard and weighted matrix geometric means, which are products of matrices and their roots. From these block-encodings, one can also recover their expectation values with respect to certain states. These expectation values are then relevant for various applications, such as machine learning and quantum fidelity estimation.

Under certain assumptions, we show that these block-encodings can be implemented efficiently on quantum devices. This efficiency arises because matrix multiplications can be carried out more efficiently with quantum algorithms. This observation has an important consequence: a quantum device can efficiently prepare solutions of the (nonlinear) algebraic Riccati equations. The expectation values of these solutions can also be shown to be efficiently recoverable for different applications. Our approach differs from many past works in three key respects: (a) to the best of our knowledge, ours is the first quantum subroutine to prepare solutions of nonlinear matrix equations without using iterative methods, and the solutions themselves are matrices rather than vectors, which differs from other quantum algorithms for nonlinear systems of equations, for example21,22,23; (b) the solutions are not embedded in a pure quantum state but rather in an observable, which is a novel embedding of the solution. This is important when the solutions are themselves in matrix form (for matrix equations), in contrast to the quantum embeddings of solutions of discretised nonlinear ordinary and partial differential equations, which are not in matrix form21,22,23; (c) we show the efficient recovery of outputs for nonlinear systems of equations directly relevant for applications.

One class of applications is in the area of machine learning. Machine learning algorithms often require an assignment of a metric, or distance measure, in order to compute distances between data points. The values of these distances then become central to the outcome, for instance, in making a prediction for classification. The choice of metric is therefore important, but the best metric can depend on the actual data. Learning the metric from given data, called metric learning, can itself be formulated as a learning problem. While most metric learning algorithms require iterative techniques like gradient descent to minimise the proposed loss function, a class of metric learning algorithms called geometric mean metric learning24 admits closed-form solutions. It has also been shown to attain higher classification accuracy with greater speed than previous methods. Here we devise efficient quantum algorithms for geometric mean metric learning, for both classical and quantum data, using our quantum subroutine for the matrix geometric mean. For quantum data, we propose new algorithms that can be used for the anomaly detection of quantum states, which differ from previous algorithms25. The applicability also extends to asymmetric cases in which there is a higher cost to be paid for false negatives or false positives. This is, in fact, related to the weighted matrix geometric mean.

There is also an important connection between the solution of the geometric mean metric learning problem and the Fuchs–Caves observable14, which appears in quantum fidelity estimation. This allows for a re-derivation of quantum fidelity from the point of view of machine learning. We show that our quantum subroutines for the matrix geometric mean can also be used in the efficient estimation of geometric Rényi relative entropies and the quantum fidelity by means of the Fuchs–Caves observable. This new way of estimating quantum fidelity has polynomially better performance in precision than previously known fidelity estimation algorithms. It is also shown to be optimal with respect to precision.

We can also extend our method to a more general class of nonlinear systems of equations of pth degree. These are pth-degree polynomial generalisations of the simplest algebraic Riccati equations. We show that the unique solutions of these equations are weighted matrix geometric means, and we similarly devise quantum subroutines to prepare their block-encodings. For two full-rank quantum states, the weighted matrix geometric mean has an elegant geometric interpretation as the positive definite operator lying a fraction 1/p of the way along the geodesic connecting the two states in Riemannian space. We also show that these means are relevant to the weighted version of our new quantum learning algorithm. Furthermore, preparing block-encodings of the weighted matrix geometric means allows us to construct, to the best of our knowledge, the first quantum algorithm for estimating the geometric Rényi relative entropies.

Results

Summary of our results

For convenience, we provide a brief summary of our results here. Our first contribution consists of basic quantum subroutines in the section “Quantum subroutines for matrix geometric means, algebraic Riccati equations, and higher-order nonlinear equations” for matrix geometric means (see Definition 1) and their weighted generalisation (see Definition 2).

Solving algebraic Riccati equations

We then consider the problem of solving the algebraic matrix Riccati equation

\[ YAY - B^{\dagger}Y - YB - C = 0, \tag{1} \]

where A, B, and C are d × d complex-valued matrices. We present quantum algorithms with time complexity \(O\left({\rm{poly}}\log d\right)\) for solving Eq. (1) for well-conditioned matrices in the sections “Quantum subroutine for matrix geometric means” and “B ≠ 0 algebraic Riccati equation”. Here, we say a matrix A is well-conditioned if \(A\ge I/({\rm{poly}}\log d)\). The higher-order case \(Y{\left(AY\right)}^{p-1}=C\) is studied in the section “Higher-order polynomial equations”. In section “BQP-hardness”, we show that it is \({\mathsf{BQP}}\)-complete to solve the equation YAY = C, the special case B = 0 of Eq. (1), whose solution is Y = A−1#C (see Definition 1 for the meaning of this notation).

Geometric mean metric learning

We introduce quantum algorithms for learning the metric in machine learning by phrasing this as an optimisation problem from a geometric perspective. Unlike most other metric learning algorithms, this optimisation problem has a closed-form solution; this follows the geometric mean metric learning method24. The solution turns out to be expressible in terms of the matrix geometric mean Y = A−1#C. We design quantum algorithms for the learning task for classical data (section “Learning Euclidean metric from data”) as well as for quantum data (section “1-class quantum learning”), and we present the conditions under which the quantum algorithm is more efficient than the corresponding classical algorithm. For example, our quantum algorithm for the learning task on classical data with well-conditioned matrices A and C has time complexity \(O({\rm{poly}}(\log d,\log (1/\epsilon )))\). We also show that the quantum learning task with well-conditioned quantum states ρ and σ has time complexity \(O({\rm{poly}}(\log d,\log (1/\epsilon )))\). The latter learning task for quantum data is uniquely quantum in nature and has no classical counterpart.

(Uhlmann) fidelity estimation

Based on the Fuchs–Caves observable14, we design a new quantum algorithm for fidelity estimation in section “Fidelity” via the fidelity formula \(F\left(\rho ,\sigma \right)={\rm{Tr}}\left(\left({\sigma }^{-1}\#\rho \right)\sigma \right)\), which involves the matrix geometric mean. We show that our quantum algorithm has query complexity \(\tilde{O}\left({\kappa }^{4}/\epsilon \right)\) provided that ρ, σ ≥ I/κ for some known κ > 0, and that the ϵ-dependence is optimal up to polylogarithmic factors.
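The fidelity formula above can be checked classically on small examples. The NumPy sketch below is our own illustration, not the quantum algorithm: it assumes full-rank states, uses dense eigendecompositions, and the helper names (`mpow`, `random_density`, `fuchs_caves_fidelity`, `root_fidelity`) are hypothetical. It forms the Fuchs–Caves observable \(\sigma^{-1}\#\rho\) from Definition 1, evaluates \({\rm{Tr}}((\sigma^{-1}\#\rho)\sigma)\), and compares it with \(\Vert\sqrt{\rho}\sqrt{\sigma}\Vert_{1}\). By cyclicity of the trace, the formula equals \({\rm{Tr}}[(\sigma^{1/2}\rho\sigma^{1/2})^{1/2}]\), i.e., the fidelity in its un-squared (root) convention.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def random_density(d, seed):
    """Random full-rank d x d density matrix (illustrative test input)."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    rho = G @ G.conj().T
    return rho / np.trace(rho).real


def fuchs_caves_fidelity(rho, sigma):
    """Tr((sigma^{-1} # rho) sigma), with
    sigma^{-1} # rho = sigma^{-1/2} (sigma^{1/2} rho sigma^{1/2})^{1/2} sigma^{-1/2}."""
    s_half, s_mhalf = mpow(sigma, 0.5), mpow(sigma, -0.5)
    observable = s_mhalf @ mpow(s_half @ rho @ s_half, 0.5) @ s_mhalf  # Fuchs-Caves observable
    return np.trace(observable @ sigma).real


def root_fidelity(rho, sigma):
    """Uhlmann root fidelity ||sqrt(rho) sqrt(sigma)||_1 (sum of singular values)."""
    return np.linalg.svd(mpow(rho, 0.5) @ mpow(sigma, 0.5), compute_uv=False).sum()


rho, sigma = random_density(3, seed=0), random_density(3, seed=1)
print(fuchs_caves_fidelity(rho, sigma), root_fidelity(rho, sigma))
```

The two printed numbers should agree up to round-off; the quantum algorithm instead estimates the same trace from block-encodings, which this sketch does not model.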

Geometric Rényi relative entropy

In the section “Geometric fidelity and geometric Rényi relative entropy”, we present the first quantum algorithm for computing the geometric Rényi relative entropy, to the best of our knowledge. In particular, we design a quantum algorithm for computing the geometric fidelity \({\widehat{F}}_{1/2}\left(\rho ,\sigma \right):={\rm{Tr}}\left(\rho \#\sigma \right)\) (also known as the Matsumoto fidelity15,16) with query complexity \(\tilde{O}\left({\kappa }^{3.5}/\epsilon \right)\) provided that ρ, σ ≥ I/κ for some known κ > 0, and we prove that the ϵ-dependence is optimal up to polylogarithmic factors.
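Similarly, the geometric (Matsumoto) fidelity \({\rm{Tr}}(\rho\#\sigma)\) can be evaluated directly for small full-rank states. This hypothetical NumPy sketch (helper names are ours) is a classical reference computation, not the quantum algorithm. It checks the symmetry \(\rho\#\sigma=\sigma\#\rho\), agreement with the Uhlmann root fidelity for commuting states, and the known inequality \({\rm{Tr}}(\rho\#\sigma)\le\Vert\sqrt{\rho}\sqrt{\sigma}\Vert_{1}\).

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def geometric_mean(X, Y):
    """X # Y = X^{1/2} (X^{-1/2} Y X^{-1/2})^{1/2} X^{1/2} (Definition 1)."""
    X_half, X_mhalf = mpow(X, 0.5), mpow(X, -0.5)
    return X_half @ mpow(X_mhalf @ Y @ X_mhalf, 0.5) @ X_half


def matsumoto_fidelity(rho, sigma):
    """Geometric fidelity Tr(rho # sigma)."""
    return np.trace(geometric_mean(rho, sigma)).real


def root_fidelity(rho, sigma):
    """Uhlmann root fidelity ||sqrt(rho) sqrt(sigma)||_1, for comparison."""
    return np.linalg.svd(mpow(rho, 0.5) @ mpow(sigma, 0.5), compute_uv=False).sum()


# Two full-rank qubit states (illustrative values).
rho = np.array([[0.7, 0.2 - 0.1j], [0.2 + 0.1j, 0.3]])
sigma = np.array([[0.4, -0.15], [-0.15, 0.6]])
print(matsumoto_fidelity(rho, sigma), root_fidelity(rho, sigma))
```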

Organisation of this paper

In the section “Background”, we begin with a review of the standard matrix geometric mean, the weighted matrix geometric mean, the algebraic Riccati equation, and block-encoding. In section “Quantum subroutines for matrix geometric means, algebraic Riccati equations, and higher-order nonlinear equations” we compute the costs required to prepare block-encodings of the solutions of algebraic Riccati equations and their pth-order generalisations. Applications are presented in section “Applications”. In section “BQP-hardness” we show how our new quantum subroutines for the matrix geometric mean can solve a \({\mathsf{BQP}}\)-complete problem. We conclude with a discussion in section “Discussion”.

Background

In this section, we give a brief overview of the standard and weighted matrix geometric means and their role in solving algebraic Riccati equations (see ref. 26, Chapters 4 & 6 and refs. 9,10 for more details). We then provide a definition of block-encoding. Throughout the paper, unless otherwise stated, we deal with Hermitian matrices.

Matrix geometric means

Definition 1

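(Matrix geometric mean). Fix \(D\in {\mathbb{N}}\). Given two D × D positive definite matrices A and C, the matrix geometric mean of A and C is defined as

\[ A\#C := A^{1/2}\left(A^{-1/2}CA^{-1/2}\right)^{1/2}A^{1/2}. \]

Note that the matrix geometric mean between A−1 and C is thus given by

\[ A^{-1}\#C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/2}A^{-1/2}. \tag{3} \]

Alternatively, the matrix geometric mean A#C can equivalently be written as

\[ A\#C = \max\left\{X = X^{\dagger} : \begin{pmatrix} A & X \\ X & C \end{pmatrix} \ge 0\right\}, \]

where the ordering of Hermitian matrices is given by the Löwner partial order.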
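For intuition, Definition 1 can be evaluated directly for small matrices. The following NumPy sketch is our own; `mpow` and `geometric_mean` are hypothetical helper names, and computing fractional powers by eigendecomposition assumes Hermitian positive definite inputs. It checks the symmetry A#C = C#A and the block-matrix characterisation: with X = A#C the block matrix is positive semi-definite, while shifting X upward by a small multiple of the identity breaks positivity, as maximality predicts.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def geometric_mean(A, C):
    """A # C = A^{1/2} (A^{-1/2} C A^{-1/2})^{1/2} A^{1/2} (Definition 1)."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_half @ mpow(A_mhalf @ C @ A_mhalf, 0.5) @ A_half


A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
X = geometric_mean(A, C)

# Symmetry of the mean.
print(np.allclose(X, geometric_mean(C, A)))
# [[A, X], [X, C]] is PSD (its smallest eigenvalue is zero up to round-off) ...
print(np.linalg.eigvalsh(np.block([[A, X], [X, C]])).min())
# ... but a slightly larger X, in the Loewner order, is no longer feasible.
Xp = X + 1e-2 * np.eye(2)
print(np.linalg.eigvalsh(np.block([[A, Xp], [Xp, C]])).min())
```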

The matrix geometric mean appears throughout quantum information, for example, in the Fuchs–Caves observable14, in entropic operators such as the Tsallis relative operator entropy27, and in quantum fidelity measures between states12,15,16 and channels28. The concept can also be generalised to the weighted matrix geometric mean.

Definition 2

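(Weighted matrix geometric mean). Fix p > 0. The weighted matrix geometric mean with weight 1/p is defined as

\[ A\#_{1/p}C := A^{1/2}\left(A^{-1/2}CA^{-1/2}\right)^{1/p}A^{1/2}. \]

The weighted matrix geometric mean between A−1 and C is then equal to

\[ A^{-1}\#_{1/p}C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/p}A^{-1/2}. \tag{6} \]

The standard matrix geometric mean corresponds to the weighted geometric mean with weight 1/p = 1/2.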
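Definition 2 can be sketched in the same way. In this hypothetical NumPy example (helper names are ours), `weighted_geometric_mean(A, C, p)` returns \(A\#_{1/p}C\). For commuting inputs it reduces entrywise to the scalar weighted mean \(a^{1-1/p}c^{1/p}\); for non-commuting inputs we check that weight 1/2 is symmetric and that \(A\#_{1/p}C=C\#_{1-1/p}A\).

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def weighted_geometric_mean(A, C, p):
    """A #_{1/p} C = A^{1/2} (A^{-1/2} C A^{-1/2})^{1/p} A^{1/2} (Definition 2)."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_half @ mpow(A_mhalf @ C @ A_mhalf, 1.0 / p) @ A_half


# Commuting (diagonal) inputs: the mean reduces entrywise to a^(1 - 1/p) * c^(1/p).
a, c, p = np.array([1.0, 8.0]), np.array([8.0, 1.0]), 3
print(np.diag(weighted_geometric_mean(np.diag(a), np.diag(c), p)))
print(a ** (1 - 1 / p) * c ** (1 / p))

# Non-commuting example: weight 1/2 is symmetric, and A #_{1/p} C = C #_{1 - 1/p} A.
A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
print(np.allclose(weighted_geometric_mean(A, C, 2), weighted_geometric_mean(C, A, 2)))
print(np.allclose(weighted_geometric_mean(A, C, 3), weighted_geometric_mean(C, A, 1.5)))
```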

We will use the definitions in Eqs. (3) and (6) here and throughout because, as we will see later on, they are relevant to solutions of classes of nonlinear matrix equations like the algebraic Riccati equations.

For positive definite matrices (which include full-rank density matrices), the standard and weighted matrix geometric means have elegant geometric interpretations. It is known that the inner product on the real vector space of Hermitian matrices gives rise to a Riemannian metric (ref. 26, Chapter 6), defined on the manifold MH of positive definite matrices. Following ref. 26, Eqs. (6.2) & (6.4), a trajectory γ: [a, b] → MH on this manifold is a piecewise differentiable path on MH whose length is defined by \(L(\gamma ):=\mathop{\int}\nolimits_{a}^{b}{\left\Vert {\gamma }^{-1/2}(t){\gamma }^{{\prime} }(t){\gamma }^{-1/2}(t)\right\Vert }_{2}\,dt\). The distance \(\delta ({A}^{-1},C)=\mathop{\inf }\nolimits_{\gamma }L(\gamma )\) between any two positive definite matrices A−1 and C on this manifold is then defined as the infimum of the lengths of all such paths joining these two points. We then have the following result.

Lemma 3

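(Ref. 26, Theorem 6.1.6). If A−1 and C are two positive definite matrices, then there exists a unique geodesic joining A−1 and C. This geodesic has the following parameterisation with t ∈ [0, 1]:

\[ \gamma_{\rm{geod}}(t) = A^{-1}\#_{t}C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{t}A^{-1/2}. \]

This geodesic has a length given by

\[ \delta\left(A^{-1},C\right) = \left\Vert \log\left(A^{1/2}CA^{1/2}\right)\right\Vert_{2}. \]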

In the above, \(\parallel\!\! X{\parallel }_{2}:=\sqrt{{\rm{Tr}}[{X}^{\dagger }X]}\) denotes the Schatten 2-norm, whereas ∥ ⋅ ∥ refers to the operator norm throughout our paper.

From this viewpoint, the matrix geometric mean A−1#C = γgeod(t = 1/2) can be interpreted as the midpoint of the geodesic joining A−1 and C. Similarly, the weighted geometric mean A−1#1/pC with weight 1/p is the point γgeod(1/p) on this geodesic, a fraction 1/p of the way from A−1 to C.
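The geodesic picture can be checked numerically. In this hypothetical NumPy sketch (helper names are ours), `geodesic(A, C, t)` implements the parameterisation of Lemma 3 and `distance(X, Y)` computes \(\Vert\log(X^{-1/2}YX^{-1/2})\Vert_{2}\), the Riemannian distance of ref. 26, which for X = A−1 and Y = C reduces to the length formula above. Because the geodesic has constant speed, the distance from A−1 to γgeod(t) is t times the total length, so the midpoint A−1#C sits at half the length and γgeod(1/p) at a fraction 1/p of it.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def mlog(M):
    """Matrix logarithm of a Hermitian positive definite matrix."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * np.log(w)) @ V.conj().T


def geodesic(A, C, t):
    """gamma(t) = A^{-1/2} (A^{1/2} C A^{1/2})^t A^{-1/2}: A^{-1} at t = 0, C at t = 1."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_mhalf @ mpow(A_half @ C @ A_half, t) @ A_mhalf


def distance(X, Y):
    """Riemannian distance ||log(X^{-1/2} Y X^{-1/2})||_2 (Schatten 2-norm)."""
    X_mhalf = mpow(X, -0.5)
    return np.linalg.norm(mlog(X_mhalf @ Y @ X_mhalf), "fro")


A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
A_inv = np.linalg.inv(A)
total = distance(A_inv, C)  # equals ||log(A^{1/2} C A^{1/2})||_2

for t in (0.5, 1 / 3):
    # Fraction of the total length covered at parameter t (should equal t).
    print(t, distance(A_inv, geodesic(A, C, t)) / total)
```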

Algebraic Riccati equations

Let us begin with a general form of the algebraic Riccati equation for the unknown D × D matrix Y:

\[ YAY - B^{\dagger}Y - YB - C = 0, \tag{9} \]

where A, B, and C are D × D matrices with complex-valued entries. This can be understood as a matrix version of the familiar scalar quadratic equation \(ay^{2}-2by-c=0\). Solutions of equations like (9) are not always guaranteed to exist, and certain conditions are required to prove the existence of, for instance, Hermitian solutions29. See ref. 30 for conditions on solvability. Even when solutions exist, they need not be unique, and there may even be uncountably many31,32,33. However, there are unique solutions under certain conditions. For instance, if all the matrix entries are real-valued, then for symmetric positive semi-definite A, C and symmetric positive Y, there is a unique positive definite solution if and only if an associated matrix \(H=\scriptstyle\left(\begin{array}{cc}-B&A\\ C&{B}^{T}\end{array}\right)\) has no imaginary eigenvalues34.

In this paper, we confine our attention to simpler cases, for example in Lemmas 4 and 5, when there are unique solutions.

Lemma 4

(Solution of simple algebraic Riccati equation). Consider the following algebraic Riccati equation when A and C are positive definite matrices and Y is Hermitian:

\[ YAY = C. \tag{10} \]

This equation has a unique positive definite solution given by the standard matrix geometric mean:

\[ Y = A^{-1}\#C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/2}A^{-1/2}. \]

Proof

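This lemma is well known from refs. 35,36, but we provide a brief proof for completeness. Starting from the Riccati equation in (10) and using the fact that A is positive definite with a unique positive definite square root, consider that

\[ YAY = C \iff A^{1/2}YAYA^{1/2} = A^{1/2}CA^{1/2} \iff \left(A^{1/2}YA^{1/2}\right)^{2} = A^{1/2}CA^{1/2}. \]

If Y is positive definite, then so is \(A^{1/2}YA^{1/2}\), which is therefore a positive definite square root of the positive definite matrix \(A^{1/2}CA^{1/2}\). Since a positive definite matrix has a unique positive definite square root, this implies that

\[ A^{1/2}YA^{1/2} = \left(A^{1/2}CA^{1/2}\right)^{1/2} \iff Y = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/2}A^{-1/2}, \]

thus justifying that Y = A−1#C is the unique positive definite solution as claimed. □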
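Lemma 4 is easy to confirm numerically. In the hypothetical NumPy sketch below (helper names are ours), `solve_yay` evaluates A−1#C along the route of the proof and checks the residual of Eq. (10). The last line illustrates why positivity matters for uniqueness: −Y is also a Hermitian solution of YAY = C, but it is negative definite.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def solve_yay(A, C):
    """Positive definite solution of YAY = C from Lemma 4: Y = A^{-1} # C."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_mhalf @ mpow(A_half @ C @ A_half, 0.5) @ A_mhalf


A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
Y = solve_yay(A, C)
print(np.linalg.norm(Y @ A @ Y - C))  # residual of Eq. (10)
print(np.linalg.eigvalsh(Y))           # both eigenvalues positive

# -Y also satisfies YAY = C, but it is not positive definite.
print(np.linalg.norm((-Y) @ A @ (-Y) - C))
```

For 1 × 1 inputs this reduces to the scalar formula \(y=\sqrt{c/a}\).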

See ref. 37 for a discussion of (10) in the infinite-dimensional case.

If A and C are both positive definite with unit trace \({\rm{Tr}}(A)=1={\rm{Tr}}(C)\), then A and C can also be interpreted as density matrices. Then the operator A−1#C is also known as the Fuchs–Caves observable38, which is of relevance in the study of quantum fidelity. We will return to this point later. See also ref. 39, Section V for an interpretation of (10) when A and C are density matrices.

We can also extend Lemma 4 to the B ≠ 0 case, and the following holds.

Lemma 5

If A and C are positive definite, B is an arbitrary matrix, and \(({A}^{-1}B)={({A}^{-1}B)}^{\dagger }\), then a Hermitian solution to Eq. (9) can be expressed as

\[ Y = A^{-1}B + A^{-1}\#\left(C + B^{\dagger}A^{-1}B\right). \]

Proof

See Appendix IV A. □
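The following sketch checks Lemma 5 numerically under two assumptions that we state explicitly: that Eq. (9) has the form \(YAY-B^{\dagger}Y-YB-C=0\) (the matrix analogue of \(ay^{2}-2by-c=0\)), and that the Hermitian solution is \(Y=A^{-1}B+A^{-1}\#(C+B^{\dagger}A^{-1}B)\). Writing Y = W + Z with the Hermitian matrix W = A−1B reduces that equation to ZAZ = C + WAW, which is Eq. (10) for Z since WAW = B†A−1B. To satisfy the hypothesis of the lemma we pick a Hermitian W and set B = AW. Only NumPy is assumed, and all helper names are hypothetical.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def inv_geometric_mean(A, M):
    """A^{-1} # M = A^{-1/2} (A^{1/2} M A^{1/2})^{1/2} A^{-1/2}."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_mhalf @ mpow(A_half @ M @ A_half, 0.5) @ A_mhalf


def solve_riccati(A, B, C):
    """Y = A^{-1}B + A^{-1} # (C + B^dagger A^{-1} B), assuming A^{-1}B is Hermitian."""
    W = np.linalg.solve(A, B)  # A^{-1} B
    return W + inv_geometric_mean(A, C + B.conj().T @ W)


def residual(A, B, C, Y):
    """Left-hand side of the assumed form YAY - B^dagger Y - YB - C of Eq. (9)."""
    return Y @ A @ Y - B.conj().T @ Y - Y @ B - C


A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
W = np.array([[0.4, 0.1], [0.1, -0.2]])  # any Hermitian W
B = A @ W                                 # guarantees that A^{-1}B = W is Hermitian
Y = solve_riccati(A, B, C)
print(np.linalg.norm(residual(A, B, C, Y)))
```

Setting B = 0 recovers the solution A−1#C of Lemma 4.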

Classical algorithms for solving algebraic Riccati equations are typically inefficient17 with respect to the size of the problem, i.e., polynomial in D. We will be looking at conditions for which a quantum algorithm for solving algebraic Riccati equations can be executed with less complexity.

Block-encoding

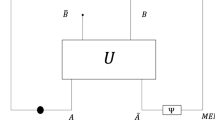

Classical information can be embedded in quantum systems in the form of quantum states, either pure or mixed, or in the form of quantum processes. A closed quantum system evolves under a unitary transformation, represented by a unitary matrix. In this paper, we will be focusing on how a matrix solution to a matrix equation can be embedded in a unitary matrix. Unlike other quantum subroutines that prepare solutions of a linear system of equations embedded in the amplitudes of a pure quantum state, here we first embed the solution Y into a unitary matrix.

There are different ways of embedding an arbitrary matrix into a unitary matrix. For instance, the Sz.-Nagy dilation theorem (see, e.g., ref. 40, Theorem 1.1) guarantees that such a unitary dilation always exists for a contraction, and any matrix becomes a contraction after suitable rescaling. We choose a flexible dilation known as block-encoding18,19,20. A unitary matrix UY is called a block-encoding of a matrix Y if it satisfies the following definition.

Definition 6

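(Block-encoding). Fix \(n,a\in {\mathbb{N}}\) and ϵ, α ≥ 0. Let Y be an n-qubit operator. An (n + a)-qubit unitary UY is an (α, a, ϵ)-block-encoding of an operator Y if

\[ \left\Vert Y - \alpha\left(\langle 0|_{a}\otimes I\right)U_{Y}\left(|0\rangle_{a}\otimes I\right)\right\Vert \le \epsilon. \]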

Here \(|0\rangle_{a}\) denotes the all-zero computational basis state of the a ancilla qubits. The block-encoding formalism allows one to construct, for example, block-encodings of products and linear combinations of block-encoded matrices, as well as polynomial approximations of negative and positive power functions of matrices18. We list several associated lemmas in Appendix IV B for convenience.
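To make Definition 6 concrete, here is a minimal NumPy sketch of an exact (α, 1, 0)-block-encoding. It uses the Halmos unitary dilation of the contraction X = Y/α, namely \(U=\begin{pmatrix}X&(I-XX^{\dagger})^{1/2}\\(I-X^{\dagger}X)^{1/2}&-X^{\dagger}\end{pmatrix}\), which is one textbook dilation and not the construction used for the subroutines in this paper; it also ignores how U would be compiled into gates. The helper names are hypothetical.

```python
import numpy as np


def psd_sqrt(M):
    """Square root of a Hermitian positive semidefinite matrix (round-off negatives clipped)."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.conj().T


def halmos_block_encoding(Y, alpha):
    """Unitary U with alpha * (top-left block of U) = Y, i.e. an (alpha, 1, 0)-block-encoding.

    Uses the Halmos dilation of the contraction X = Y / alpha, so it needs ||Y|| <= alpha.
    """
    X = Y / alpha
    I = np.eye(Y.shape[0])
    return np.block([
        [X, psd_sqrt(I - X @ X.conj().T)],
        [psd_sqrt(I - X.conj().T @ X), -X.conj().T],
    ])


# A non-Hermitian single-qubit operator (n = 1), block-encoded with one ancilla (a = 1).
Y = np.array([[0.5, 1.0], [0.0, -0.3]])
alpha = 2.0
U = halmos_block_encoding(Y, alpha)

# (<0|_a (x) I) U (|0>_a (x) I), with the ancilla as the first tensor factor as in Definition 6.
P = np.kron(np.array([[1.0, 0.0]]), np.eye(2))
print(np.allclose(U.conj().T @ U, np.eye(4)))
print(np.linalg.norm(Y - alpha * P @ U @ P.T, 2))  # operator-norm error, i.e. epsilon
```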

Quantum subroutines for matrix geometric means, algebraic Riccati equations, and higher-order nonlinear equations

Let us focus on cases where the solutions to the algebraic Riccati equations are captured by the matrix geometric mean, as in Lemmas 4 and 5. Computing the matrix geometric mean involves computing square roots of matrices and several matrix multiplications. For D × D matrices, the cost of these operations typically scales polynomially with D for a classical algorithm. By contrast, products of block-encoded matrices can, under suitable conditions, be realised on a quantum computer at a cost much lower than the number of classical numerical steps, and the block-encoding formalism allows such products to be chained together.

Let us begin with the algebraic Riccati equation in Eq. (9):

\[ YAY - B^{\dagger}Y - YB - C = 0. \]

Our first goal is to construct a block-encoding of the solution Y, denoted UY, under the conditions of Lemmas 4 and 5. We regard this as a subroutine that can then be employed in various applications.

Below we assume that we also have access to the block-encodings of A, B, C (denoted UA, UB, UC, respectively), as well as their inverses \({U}_{A}^{\dagger }\), \({U}_{B}^{\dagger }\), \({U}_{C}^{\dagger }\), and controlled versions. For example, if A, B, and C are positive semi-definite matrices with unit trace, they can be regarded as density matrices. Then, by Lemma 30, we can prepare block-encodings UA, UB and UC by accessing the unitaries that prepare purifications of A, B and C, using only a single query to each purification and \(O(\log d)\) gates. In more general scenarios, the preparation of these block-encodings can be deferred to a later stage and depends on the particular application. Below, κA and κC denote the condition numbers of A and C, respectively. It is important to clarify that, as in ref. 1, all of our quantum algorithms for matrix geometric means assume that

\[ \frac{I}{\kappa_{A}} \le A \le I, \qquad \frac{I}{\kappa_{C}} \le C \le I, \qquad \left\Vert B\right\Vert \le 1. \]

This means that κA and κC can be taken to be the inverses of the minimum eigenvalues of A and C, respectively, and that \(\left\Vert A\right\Vert ,\left\Vert B\right\Vert ,\left\Vert C\right\Vert \le 1\). The upper bounds above are automatically satisfied whenever A and C are density matrices.

Quantum subroutine for matrix geometric means

As a warm-up, we present a quantum subroutine for implementing block-encodings of the weighted matrix geometric means.

Lemma 7

(Block-encoding of weighted matrix geometric mean). Suppose that UA, UC are (1, a, 0)-block-encodings of matrices A, C, respectively, where A ≥ I/κA, C ≥ I/κC, and I is the identity matrix. For ϵ ∈ (0, 1/2), one can implement a \((2{\kappa }_{A}^{1/p}{\gamma }_{p},5a+12,\epsilon )\)-block-encoding of Y for every fixed real p ≠ 0, where

and

using

-

\(\tilde{O}\left({\kappa }_{A}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) queries to UC, \(\tilde{O}\left({\kappa }_{A}^{2}{\kappa }_{C}{\log }^{4}\left(1/\epsilon \right)\right)\) queries to UA;

-

\(\tilde{O}\left(a{\kappa }_{A}^{2}{\kappa }_{C}{\log }^{4}\left(1/\epsilon \right)\right)\) gates; and

-

\({\rm{poly}}\left({\kappa }_{A},{\kappa }_{C},\log \left(1/\epsilon \right)\right)\) classical time.

Remark 1

In the above and in what follows, “queries to U” refers to access not only to U, but also to its inverse U†, controlled-U, and controlled-U†. Here and in the following, \(\tilde{O}(\cdot )\) suppresses logarithmic factors of functions appearing in (⋅). The same convention applies to \(\widetilde{\Omega }(\cdot )\) and \(\tilde{\Theta }(\cdot )\).

Proof sketch of Lemma 7. See Appendix IV C for a detailed proof. As an illustration for the construction of our quantum subroutines, we outline the basic idea. Other quantum subroutines later presented in this section are obtained using similar ideas. Our approach consists of three main steps:

-

1.

Implement a block-encoding of A−1/2, using roughly \(\tilde{O}\left({\kappa }_{A}\right)\) queries to a block-encoding of A (for simplicity, we ignore the ϵ-dependence in our brief explanation here). This is done by applying quantum singular value transformation (QSVT)18 with polynomial approximations of negative power functions (see Lemma 27).

-

2.

Implement a block-encoding of \({\left({A}^{-1/2}C{A}^{-1/2}\right)}^{1/p}\), using roughly \(\tilde{O}\left({\kappa }_{A}{\kappa }_{C}\right)\) queries to a block-encoding of A−1/2CA−1/2. This is done by applying QSVT with polynomial approximations of positive power functions (see Lemma 28). Note that a block-encoding of A−1/2CA−1/2 can be implemented using \(O\left(1\right)\) queries to block-encodings of A−1/2 and C by the method for realising the product of block-encoded matrices (see Lemma 24).

-

3.

Similar to Step 2, implement a block-encoding of \({A}^{1/2}{\left({A}^{-1/2}C{A}^{-1/2}\right)}^{1/p}{A}^{1/2}\), using \(O\left(1\right)\) queries to block-encodings of A1/2 and \({\left({A}^{-1/2}C{A}^{-1/2}\right)}^{1/p}\), where a block-encoding of A1/2 can be implemented using \(\tilde{O}\left({\kappa }_{A}\right)\) queries to a block-encoding of A.

To conclude, the overall query complexity is roughly \(\tilde{O}\left({\kappa }_{A}\right)\!\cdot\! \tilde{O}\left({\kappa }_{A}{\kappa }_{C}\right)+\tilde{O}\left({\kappa }_{A}\right)=\tilde{O}\left({\kappa }_{A}^{2}{\kappa }_{C}\right)\). Note that the construction is mainly based on QSVT and is thus also time efficient: the overall time complexity matches the query complexity up to polylogarithmic factors.
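The three steps above can be emulated classically to see why polynomial approximations on the relevant spectral intervals suffice. The NumPy sketch below is our own illustration and swaps in Chebyshev interpolants (`numpy.polynomial.Chebyshev.interpolate`) applied directly to eigenvalues in place of QSVT. It does not enforce the boundedness of the polynomial on [−1, 1] that QSVT requires, it does not count queries, and the degree 80 is an arbitrary choice rather than the \(\tilde{O}(\kappa)\) scaling of the lemma. Step 2 divides \(A^{-1/2}CA^{-1/2}\), whose spectrum lies in \([1/\kappa_C,\kappa_A]\), by κA to mimic a block-encoding normalisation, and multiplies back by \(\kappa_A^{1/p}\) afterwards. All helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def rotated(eigs, theta):
    """Real symmetric 2 x 2 matrix with the given eigenvalues (illustrative inputs)."""
    R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    return R @ np.diag(eigs) @ R.T


def apply_poly(M, poly):
    """Apply a scalar polynomial to a Hermitian matrix through its eigenvalues."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * poly(w)) @ V.conj().T


def power_poly(s, lo, deg):
    """Chebyshev interpolant of x**s on [lo, 1], standing in for a QSVT polynomial."""
    return Chebyshev.interpolate(lambda x: x**s, deg, domain=[lo, 1.0])


def weighted_mean_via_polys(A, C, p, kappa_A, kappa_C, deg=80):
    # Step 1: A^{-1/2} and A^{1/2}, approximated on the spectral interval [1/kappa_A, 1].
    A_mhalf = apply_poly(A, power_poly(-0.5, 1 / kappa_A, deg))
    A_half = apply_poly(A, power_poly(0.5, 1 / kappa_A, deg))
    # Step 2: M = A^{-1/2} C A^{-1/2} / kappa_A has spectrum in [1/(kappa_A kappa_C), 1].
    M = A_mhalf @ C @ A_mhalf / kappa_A
    M_pow = kappa_A ** (1 / p) * apply_poly(M, power_poly(1 / p, 1 / (kappa_A * kappa_C), deg))
    # Step 3: sandwich between two copies of A^{1/2}.
    return A_half @ M_pow @ A_half


def weighted_mean_exact(A, C, p):
    """Reference value A #_{1/p} C from Definition 2, using exact eigendecompositions."""
    def mpow(M, s):
        w, V = np.linalg.eigh((M + M.conj().T) / 2)
        return (V * w**s) @ V.conj().T
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_half @ mpow(A_mhalf @ C @ A_mhalf, 1 / p) @ A_half


kappa_A = kappa_C = 5.0
A = rotated([0.25, 0.9], 0.3)  # spectrum inside [1/kappa_A, 1]
C = rotated([0.3, 0.8], 1.1)   # spectrum inside [1/kappa_C, 1]
approx = weighted_mean_via_polys(A, C, p=3, kappa_A=kappa_A, kappa_C=kappa_C)
print(np.abs(approx - weighted_mean_exact(A, C, 3)).max())
```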

B = 0 algebraic Riccati equation

Let us begin with the unique positive definite solution to the algebraic Riccati equation with B = 0, i.e., Eq. (10), which, according to Lemma 4, can be expressed as the matrix geometric mean Y = A−1#C, where A and C are positive definite matrices. The following lemma characterises a block-encoding of this solution in a quantum circuit.

Lemma 8

Suppose that UA, UC are (1, a, 0)-block-encodings of matrices A, C, respectively, with A ≥ I/κA and C ≥ I/κC. For ϵ ∈ (0, 1/2), one can implement a (2κA, 5a + 11, ϵ)-block-encoding of Y, where

\[ Y = A^{-1}\#C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/2}A^{-1/2}, \]

using

-

\(\tilde{O}\left({\kappa }_{A}{\kappa }_{C}{\log }^{2}\left(1/\epsilon \right)\right)\) queries to UC and

\(\tilde{O}\left({\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) queries to UA;

-

\(\tilde{O}\left(a{\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) gates; and

-

\({\rm{poly}}\left({\kappa }_{A},{\kappa }_{C},\log \left(1/\epsilon \right)\right)\) classical time.

Proof

See Appendix IV D. □

B ≠ 0 algebraic Riccati equation

Here we want to construct a block-encoding of a Hermitian solution to the algebraic Riccati equation via the standard matrix geometric mean, according to Lemma 5. We then have the following lemma.

Lemma 9

Suppose that UA, UB, UC are (1, a, 0)-block-encodings of matrices A, B, C, respectively, with A ≥ I/κA, C ≥ I/κC and \({A}^{-1}B={\left({A}^{-1}B\right)}^{\dagger }\). For ϵ ∈ (0, 1/2), one can implement a \((2{\kappa }_{A}^{3/2},b,\epsilon )\)-block-encoding of Y, where \(b=O\left(a+\log \left({\kappa }_{A}{\kappa }_{C}/\epsilon \right)\right)\) and

\[ Y = A^{-1}B + A^{-1}\#\left(C + B^{\dagger}A^{-1}B\right), \]

using

-

\(\tilde{O}\left({\kappa }_{A}{\kappa }_{C}{\log }^{2}\left(1/\epsilon \right)\right)\) queries to UB and UC, and \(\tilde{O}\left({\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) queries to UA;

-

\(\tilde{O}\left(a{\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) gates; and

-

\({\rm{poly}}\left({\kappa }_{A},{\kappa }_{C},\log \left(1/\epsilon \right)\right)\) classical time.

Proof

See Appendix IV E. □

Higher-order polynomial equations

Algebraic Riccati equations are second-order nonlinear equations whose solutions are given by the standard matrix geometric mean, i.e., the weighted mean with p = 2. We can also generalise our formalism to particular pth-order nonlinear matrix equations, whose solutions are weighted matrix geometric means with weight 1/p for p ∈ {3, 4, …}. For example, consider the following pth-order nonlinear matrix equations, which we call the pth-order YAY algebraic equations:

\[ Y\left(AY\right)^{p-1} = C, \tag{27} \]

where p is the degree of the polynomial in Y. It is straightforward to check that the solutions can be written in terms of the weighted geometric mean from Definition 2:

\[ Y = A^{-1}\#_{1/p}C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/p}A^{-1/2}. \]

See ref. 41 for a discussion of this kind of equation in the infinite-dimensional case.

Lemma 10

(Solution of simple pth-order algebraic YAY equation). Fix p ∈ {2, 3, 4, …}. Consider the pth-order algebraic YAY equation when A and C are positive definite matrices:

\[ Y\left(AY\right)^{p-1} = C. \tag{29} \]

This equation has a unique positive definite solution given by the following weighted geometric mean:

\[ Y = A^{-1}\#_{1/p}C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/p}A^{-1/2}. \]

Proof

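The proof is a generalisation of that for Lemma 4, and we provide it for completeness. Starting from the equation in (29) and using the fact that A is positive definite with a unique positive definite square root, consider that

\[ \begin{aligned} Y\left(AY\right)^{p-1} = C &\iff A^{1/2}Y\left(AY\right)^{p-1}A^{1/2} = A^{1/2}CA^{1/2} \\ &\iff \left(A^{1/2}YA^{1/2}\right)^{p} = A^{1/2}CA^{1/2}, \end{aligned} \]

where the first equivalence is obtained by left and right multiplying by \(A^{1/2}\), and the second follows from \(A^{1/2}Y(AY)^{p-1}A^{1/2}=(A^{1/2}YA^{1/2})^{p}\). If Y is positive definite, then so is \(A^{1/2}YA^{1/2}\), which is therefore a positive definite pth root of the positive definite matrix \(A^{1/2}CA^{1/2}\). Since such a root is unique, this implies that

\[ A^{1/2}YA^{1/2} = \left(A^{1/2}CA^{1/2}\right)^{1/p} \iff Y = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/p}A^{-1/2}, \]

thus justifying that Y = A−1#1/pC is the unique positive definite solution as claimed. □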
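Lemma 10 can be spot-checked in the same way. The hypothetical NumPy sketch below (helper names are ours) computes \(Y=A^{-1}\#_{1/p}C\) and evaluates the residual \(Y(AY)^{p-1}-C\) for a few values of p, together with the positive definiteness of Y.

```python
import numpy as np


def mpow(M, s):
    """M**s for a Hermitian positive definite matrix M, via its eigendecomposition."""
    w, V = np.linalg.eigh((M + M.conj().T) / 2)
    return (V * w**s) @ V.conj().T


def solve_pth_order(A, C, p):
    """Y = A^{-1} #_{1/p} C = A^{-1/2} (A^{1/2} C A^{1/2})^{1/p} A^{-1/2} (Lemma 10)."""
    A_half, A_mhalf = mpow(A, 0.5), mpow(A, -0.5)
    return A_mhalf @ mpow(A_half @ C @ A_half, 1.0 / p) @ A_mhalf


def pth_order_residual(A, C, Y, p):
    """Y (AY)^{p-1} - C, the residual of the pth-order YAY equation."""
    return Y @ np.linalg.matrix_power(A @ Y, p - 1) - C


A = np.array([[2.0, 0.3], [0.3, 0.5]])
C = np.array([[1.0, -0.4], [-0.4, 2.0]])
for p in (2, 3, 4):
    Y = solve_pth_order(A, C, p)
    print(p, np.linalg.norm(pth_order_residual(A, C, Y, p)), np.linalg.eigvalsh(Y).min() > 0)
```

In the scalar case the solution is \(y=(c/a^{p-1})^{1/p}\); for example, a = 2, c = 32, p = 3 gives y = 2.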

To construct a block-encoding for the weighted geometric mean (and thus the solution of (27)), we have proven the following lemma, which holds for every non-zero real number p.

Lemma 11

Suppose that UA, UC are (1, a, 0)-block-encodings of matrices A, C, respectively, with A ≥ I/κA and C ≥ I/κC and let p ≠ 0 be any fixed non-zero real number. For ϵ ∈ (0, 1/2), one can implement a (2κAγp, 5a + 11, ϵ)-block-encoding of Y, where

\[ Y = A^{-1}\#_{1/p}C = A^{-1/2}\left(A^{1/2}CA^{1/2}\right)^{1/p}A^{-1/2}, \]

and

using

-

\(\tilde{O}\left({\kappa }_{A}{\kappa }_{C}{\log }^{2}\left(1/\epsilon \right)\right)\) queries to UC and

\(\tilde{O}\left({\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) queries to UA;

-

\(\tilde{O}\left(a{\kappa }_{A}^{2}{\kappa }_{C}{\log }^{3}\left(1/\epsilon \right)\right)\) gates; and

-

\({\rm{poly}}\left({\kappa }_{A},{\kappa }_{C},\log \left(1/\epsilon \right)\right)\) classical time.

Proof

See Appendix IV F. □

Applications

Here we explore two classes of applications for preparing block-encodings of the matrix geometric mean. The first class of applications is to learning problems, in particular for metric learning from data, both quantum and classical. Next, we demonstrate how having access to the matrix geometric mean also allows us to compute some fundamental quantities in quantum information, like the quantum fidelity between two mixed states via the Fuchs–Caves observable, as well as geometric Rényi relative entropies.

Quantum geometric mean metric learning

In learning problems, there is typically a loss function L that we want to optimise. Suppose we have D × D positive definite matrices Y, A, and C. We note that here the uniqueness result in Lemma 4 continues to hold. Consider the following optimisation problem:

It turns out that, for given A and C, the unique Y minimising L(Y) is \(Y = A^{-1}\#C\). In ref. 24, this result was proven for real positive definite matrices A and C; here we extend it to positive definite Hermitian matrices. In ref. 42, the same optimisation problem was considered in the context of quantum fidelity, where it was shown that the optimal value of (36) is determined by \({\rm{Tr}}[{({A}^{1/2}C{A}^{1/2})}^{1/2}]\); for L(Y) as written in Lemma 12, the minimum equals twice this quantity.

Lemma 12

Fix A and C to be positive definite matrices. The unique solution to \(\mathop{\min }\limits_{Y > 0}L(Y)\), where \(L(Y)={\rm{Tr}}(YA)+{\rm{Tr}}({Y}^{-1}C)\), is the matrix geometric mean \(Y = A^{-1}\#C\).

Proof

Since L(Y) is both strictly convex and strictly geodesically convex (see Appendix IV G for the proof), any solution of ∇ L(Y) = 0 is its unique global minimiser. Setting \(\nabla L(Y) = A - Y^{-1}CY^{-1} = 0\) yields the algebraic Riccati equation YAY = C, whose unique positive definite solution, for positive definite A and C, is \(Y = A^{-1}\#C\). □
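The following NumPy sketch checks Lemma 12 numerically for random real positive definite A and C. It forms \(Y = A^{-1}\#C\), verifies the Riccati equation \(YAY = C\), compares L(Y) with the closed form \(2\,{\rm Tr}[(A^{1/2}CA^{1/2})^{1/2}]\) obtained by substituting Y into L, and checks that small symmetric perturbations never lower the loss. It is an illustration only; the helper names are hypothetical.

```python
import numpy as np

def mpow(X, t):
    """X**t for a real symmetric positive definite X."""
    X = (X + X.T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.T

def gmean(A, B):
    """Matrix geometric mean A # B = A^{1/2} (A^{-1/2} B A^{-1/2})^{1/2} A^{1/2}."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

def loss(Y, A, C):
    """L(Y) = Tr(YA) + Tr(Y^{-1}C) from Lemma 12."""
    return np.trace(Y @ A) + np.trace(np.linalg.solve(Y, C))

rng = np.random.default_rng(1)
d = 5
G1, G2 = rng.standard_normal((d, d)), rng.standard_normal((d, d))
A = G1 @ G1.T + np.eye(d)
C = G2 @ G2.T + np.eye(d)

Y = gmean(np.linalg.inv(A), C)
riccati_residual = np.linalg.norm(Y @ A @ Y - C) / np.linalg.norm(C)
Ah = mpow(A, 0.5)
closed_form = 2 * np.trace(mpow(Ah @ C @ Ah, 0.5))
gap = abs(loss(Y, A, C) - closed_form)

# Perturbations of spectral norm <= 1e-2 are small compared with the smallest
# eigenvalue of Y for these well-conditioned A, C, so Y + eps*H stays positive definite.
never_lower = True
for _ in range(50):
    H = rng.standard_normal((d, d))
    H = (H + H.T) / np.linalg.norm(H + H.T)
    if loss(Y + 1e-2 * H, A, C) < loss(Y, A, C) - 1e-10:
        never_lower = False

print(riccati_residual, gap, never_lower)
```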

We will use this property and map two learning problems—one for classical data and another for quantum data—onto this optimisation problem. Using the block-encoding for the matrix geometric mean in Lemma 8, we then devise quantum algorithms for learning a Euclidean metric from data, as well as a 1-class classification algorithm for quantum states. We also extend to the case of weighted learning, where there are unequal contributions to the loss function in Eq. (36) from \({\rm{Tr}}(YA)\) and \({\rm{Tr}}({Y}^{-1}C)\).

Learning Euclidean metric from data

Machine learning algorithms rely on distance measures to quantify how similar one set of data is to another. Naturally, different distance measures can give rise to different results, and so choosing the right metric is crucial for the success of an algorithm. The distance measure itself can, in fact, be learned, for example, in a weakly supervised scenario, and this is called metric learning43. Here we are provided with the following two sets \({\mathcal{S}}\) (similar) and \({\mathcal{D}}\) (dissimilar) of pairs (training data)

and \({(({{\boldsymbol{x}}}^{(k)},{{\boldsymbol{x}}}^{{\prime} (k)}))}_{k}\) are the data points, where k labels all the pairs that belong to either \({\mathcal{S}}\) or \({\mathcal{D}}\). An important and common instance of metric learning is learning a Euclidean metric from data, which can be reformulated as a simple optimisation problem with a closed-form solution. Concretely, we learn a Mahalanobis distance \(d_Y({\boldsymbol{x}},{{\boldsymbol{x}}}^{\prime}) = ({\boldsymbol{x}}-{{\boldsymbol{x}}}^{\prime})^{T}Y({\boldsymbol{x}}-{{\boldsymbol{x}}}^{\prime})\)

with Y a real D × D symmetric positive definite matrix. To identify a suitable Y, one requires a suitable cost function.

In geometric mean metric learning24, we want dY to be minimal between data in the same class, i.e., \({\mathcal{S}}\). At the same time, when the data are in different classes, i.e., \({\mathcal{D}}\), we want \({d}_{{Y}^{-1}}\) to be minimal instead. Thus we want to minimise the sum \({\sum }_{{\mathcal{S}}}{d}_{Y}+{\sum }_{{\mathcal{D}}}{d}_{{Y}^{-1}}\). This leads to an optimisation problem of the form in Eq. (36)

where we assume A and C are positive definite. From Lemma 12, the optimal solution to Eq. (40) is the matrix geometric mean \(Y = A^{-1}\#C\), which is also the solution of the B = 0 algebraic Riccati equation YAY = C. In Lemma 8, we saw that, given access to the block-encodings \(U_A\) and \(U_C\) of A and C, their inverses, and controlled versions, we can construct a block-encoding of Y, denoted \(U_Y\). Lemma 8 also shows that the query and gate complexities are efficient in the dimension d, i.e. \(O(\,\text{poly}\,\log d)\), when the condition numbers of A and C are also polynomial in \(\log d\).
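For comparison with the quantum approach, a classical sketch of geometric mean metric learning is shown below. It assumes the scatter-matrix form of A and C used in Theorem 14 (sums of \((x-x')(x-x')^T\) over \(\mathcal{S}\) and \(\mathcal{D}\)) and the squared Mahalanobis distance \(d_Y(x,x') = (x-x')^TY(x-x')\); the synthetic data, the test pairs and the function names are hypothetical. Its dense eigendecompositions cost time polynomial in the dimension, which is the classical baseline discussed in the text.

```python
import numpy as np

def mpow(X, t):
    """X**t for a real symmetric positive definite X."""
    X = (X + X.T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.T

def gmean(A, B):
    """Matrix geometric mean A # B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

def scatter(pairs):
    """Sum of (x - x')(x - x')^T over a list of pairs."""
    return sum(np.outer(x - xp, x - xp) for x, xp in pairs)

def gmml(similar, dissimilar):
    """Closed-form geometric mean metric learning: Y = A^{-1} # C."""
    A, C = scatter(similar), scatter(dissimilar)
    return gmean(np.linalg.inv(A), C), A, C

def d_Y(Y, y, yp):
    """Squared Mahalanobis distance (y - y')^T Y (y - y')."""
    v = y - yp
    return float(v @ Y @ v)

rng = np.random.default_rng(2)
D, n = 3, 20
similar = [(x, x + 0.1 * rng.standard_normal(D)) for x in rng.standard_normal((n, D))]
dissimilar = [(x, x + 2.0 * rng.standard_normal(D)) for x in rng.standard_normal((n, D))]
Y, A, C = gmml(similar, dissimilar)

y = rng.standard_normal(D)
close_pair, far_pair = (y, y + 0.05), (y, y + 1.0)   # hypothetical test pairs
print(d_Y(Y, *close_pair), d_Y(Y, *far_pair))
```

A small learned distance suggests classifying a test pair into \(\mathcal{S}\), a large one into \(\mathcal{D}\).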

Although \(U_Y\) can be constructed efficiently, this alone is not sufficient for a direct application to machine learning. Learning Y is part of the learning stage, but reading off the classical components of Y directly from \(U_Y\) is inefficient. However, in the testing stage we only need the actual distance dY for a new data pair \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\), known as testing data, whose classification into \({\mathcal{S}}\) or \({\mathcal{D}}\) is not known a priori. The task is therefore to show that access to \(U_Y\) suffices to compute dY without reading out the elements of Y. For example, a large value of \({d}_{Y}({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\) means that we should classify \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\in {\mathcal{D}}\), whereas a small value of \({d}_{Y}({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\) means that we should classify \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\in {\mathcal{S}}\). We discuss the preparation of the block-encodings \(U_A\) and \(U_C\) later.

Before proceeding, we first discuss the preparation of a quantum state that we later require. Given a new data pair (testing data) \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\) for which we want to compute dY, where we use the optimal Y, we can define a corresponding pure quantum state with \(m=O(\log d)\) qubits \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}=(1/{{\mathcal{N}}}_{\psi })\mathop{\sum }\nolimits_{i = 1}^{d}{({\boldsymbol{y}}-{{\boldsymbol{y}}}^{{\prime} })}_{i}| i\left.\right\rangle\), with normalisation constant \({{\mathcal{N}}}_{\psi }^{2}=\mathop{\sum }\nolimits_{i = 1}^{d}{({\boldsymbol{y}}-{{\boldsymbol{y}}}^{{\prime} })}_{i}^{2}\). Its amplitudes are proportional to \({\boldsymbol{y}}-{{\boldsymbol{y}}}^{{\prime} }\) for any pair \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\). We say that the state \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\) has sparsity σ if its amplitude vector has σ non-zero entries. We can use optimal state preparation schemes44,45,46 to prepare \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\).

Lemma 13

(Quantum state preparation,44,45,46). A quantum circuit producing an m-qubit state \(| z\left.\right\rangle =\mathop{\sum }\nolimits_{i = 1}^{{2}^{m}}{z}_{i}| i\left.\right\rangle\) from \(| 0\left.\right\rangle\) with given classical entries \({\{{z}_{i}\}}_{i = 1}^{{2}^{m}}\) can be implemented by using O(mσ) CNOT gates, \(O(\sigma (\log \sigma +m))\) one-qubit gates, and O(1) ancilla qubits, where the circuit description can be classically computed with time complexity \(O(m{\sigma }^{2}\log \sigma )\). Further, the gate depth complexity can be reduced to \(\Theta (\log (m\sigma ))\), if \(O(m\sigma \log \sigma )\) ancilla qubits are used.

Since here \(m=O(\log d)\), as long as σ is small, e.g., \(\sigma =O({\rm{poly}}\log d)\), the total initial state preparation cost, in both gate complexity and number of ancillas, is \(O({\rm{poly}}\log d)\). Next we compute dY.

Theorem 14

Suppose we are given UA, UC, which are \((1,\log d,0)\)-block-encodings of \(A={\sum }_{({\boldsymbol{x}},{{\boldsymbol{x}}}^{{\prime} })\in {\mathcal{S}}}({\boldsymbol{x}}-{{\boldsymbol{x}}}^{{\prime} }){({\boldsymbol{x}}-{{\boldsymbol{x}}}^{{\prime} })}^{T}\) and \(C={\sum }_{({\boldsymbol{x}},{{\boldsymbol{x}}}^{{\prime} })\in {\mathcal{D}}}({\boldsymbol{x}}-{{\boldsymbol{x}}}^{{\prime} }){({\boldsymbol{x}}-{{\boldsymbol{x}}}^{{\prime} })}^{T}\), respectively. We also assume access to their inverses and controlled versions. We assume that the data obeys \({\kappa }_{A},{\kappa }_{C}=O({\rm{poly}}\log d)\). Given a testing data pair \(({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\), we assume that the corresponding state \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\) has sparsity \(O({\rm{poly}}\log d)\) and \({{\mathcal{N}}}_{\psi }=O({\rm{poly}}\log d)\). Then computing \({d}_{Y}({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })\) to precision ϵ has a query and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\).

Proof

We first make the observation that, for optimal Y,

where \(Y = A^{-1}\#C\) and \(U_Y\) is a \((2\kappa_A, 5a+11, \epsilon)\)-block-encoding of Y. The proportionality constant \(2\kappa_A\) comes from Lemma 8, which shows that \(|| Y-2{\kappa }_{A}{\left\langle 0\right\vert }_{5a+11}{U}_{Y}{| 0\left.\right\rangle }_{5a+11}||\, \le\, \epsilon\), where \(a=\log d\). For simplicity, we omit the subscript on the \(| 0\left.\right\rangle\) states. This trace can be interpreted as the expectation value of the observable Y in the state \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\), and follows from the definitions of dY and \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\).

To compute this trace given UY, we observe that \({\rm{Tr}}((S\otimes T)X)={\rm{Tr}}({X}_{T}S)\), where if \(T={\sum }_{n}{\lambda }_{n}| {u}_{n}\left.\right\rangle \,\langle {v}_{n}\vert\), then \({X}_{T}={\sum }_{n}{\lambda }_{n}\langle {v}_{n}\vert X\vert {u}_{n}\rangle\). So we can rewrite

where \({| \Psi \left.\right\rangle }_{y,{y}^{{\prime} }}={| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\otimes | 0\left.\right\rangle\). The last expectation value can be realised with a conventional swap test47,48 between the states \({| \Psi \left.\right\rangle }_{y,{y}^{{\prime} }}\) and \({U}_{Y}{| \Psi \left.\right\rangle }_{y,{y}^{{\prime} }}\); the swap test returns the squared magnitude of their overlap, which suffices here because Y is positive definite, so the overlap is positive up to the block-encoding error. One can also use the destructive SWAP test (i.e., Bell measurements and classical post-processing)49. Alternatively, \({\rm{Tr}}(({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}{\langle \psi \vert }_{y,{y}^{{\prime} }}\otimes | 0\rangle\langle 0\vert ){U}_{Y})\) can be computed through a Hadamard test (Lemma 31), given controlled-\(U_Y\) and the state \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\otimes | 0\left.\right\rangle\). For example, applying the unitary \(U_Y\) to \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\otimes | 0\left.\right\rangle\) and using the swap test with \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\otimes | 0\left.\right\rangle\), we recover \({\langle \psi \vert }_{y,{y}^{{\prime} }}Y{| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\) to precision ϵ with query and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\), when \({\kappa }_{A}=O({\rm{poly}}\log d)\). Now, \({d}_{Y}({\boldsymbol{y}},{{\boldsymbol{y}}}^{{\prime} })={{\mathcal{N}}}_{\psi }^{2}{\langle \psi\vert }_{y,{y}^{{\prime} }}Y{| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\). Since \({\boldsymbol{y}}-{{\boldsymbol{y}}}^{{\prime} }\) has only \(\sigma =O(\,\text{poly}\,\log d)\) non-zero entries, the classical cost of computing the normalisation constant is also \(O({\rm{poly}}\log d)\). Assuming that \({{\mathcal{N}}}_{\psi }^{2}=O({\rm{poly}}\log d)\), we therefore recover dY efficiently when given access to \(U_Y\) and \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\).
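The trace identity \({\rm Tr}((S\otimes T)X) = {\rm Tr}(X_TS)\) used above can be checked on small random matrices. In this NumPy sketch (illustrative only) the decomposition \(T = \sum_n \lambda_n |u_n\rangle\langle v_n|\) is taken to be the singular value decomposition, which is one valid choice, and \(X_T\) is formed as \(\sum_n \lambda_n (I\otimes\langle v_n|)X(I\otimes|u_n\rangle)\).

```python
import numpy as np

rng = np.random.default_rng(10)
d1, d2 = 3, 2

def crand(*shape):
    """Random complex matrix of the given shape."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

S, T, X = crand(d1, d1), crand(d2, d2), crand(d1 * d2, d1 * d2)
lhs = np.trace(np.kron(S, T) @ X)          # Tr((S ⊗ T) X)

# SVD: T = U diag(lam) Vh, so |u_n> = U[:, n] and <v_n| = Vh[n, :].
U, lam, Vh = np.linalg.svd(T)
I1 = np.eye(d1)
X_T = sum(lam[n] * np.kron(I1, Vh[n:n + 1, :]) @ X @ np.kron(I1, U[:, n:n + 1])
          for n in range(d2))                # operator on the first factor only
rhs = np.trace(X_T @ S)                      # Tr(X_T S)

print(lhs, rhs)
```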

We saw that preparing the state \({| \psi \left.\right\rangle }_{y,{y}^{{\prime} }}\) according to Lemma 13 incurs a cost \(O({\rm{poly}}\log d)\). From Lemma 8 we can construct a \((2{\kappa }_{A},O(\log d),\epsilon )\)-block-encoding of Y with gate and query complexity \(O({\rm{poly}}({\kappa }_{A},{\kappa }_{C},\log (1/\epsilon )))\). Since \({\kappa }_{A},{\kappa }_{C}=O(\,{\text{poly}}\,\log d)\), then the theorem is proved. □
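The estimation step in this proof can be simulated classically at toy scale. The sketch does not implement Lemma 8; it assumes a simple stand-in block-encoding, the Hermitian unitary dilation \(U_Y = \begin{pmatrix} B & \sqrt{I-B^2} \\ \sqrt{I-B^2} & -B \end{pmatrix}\) of \(B = Y/\alpha\), with a hypothetical normalisation \(\alpha = 2\|Y\|\) in place of \(2\kappa_A\). It then checks that \(\mathcal{N}_\psi^2\,\alpha\,\langle\Psi|U_Y|\Psi\rangle\) reproduces \(d_Y(y,y')\) for a sparse test pair. The ancilla is placed first here, whereas the text writes \(|\psi\rangle\otimes|0\rangle\); the ordering is only a convention.

```python
import numpy as np

def mpow(X, t):
    """X**t for a real symmetric positive definite X."""
    X = (X + X.T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.T

rng = np.random.default_rng(4)
d = 8
G = rng.standard_normal((d, d))
Y = G @ G.T + np.eye(d)                  # stand-in for the learned metric

# Toy block-encoding: unitary dilation of B = Y / alpha (ancilla first).
alpha = 2 * np.linalg.norm(Y, 2)         # hypothetical; Lemma 8 uses 2*kappa_A
B = Y / alpha
S = mpow(np.eye(d) - B @ B, 0.5)
U_Y = np.block([[B, S], [S, -B]])        # top-left block <0|U_Y|0> equals B

# Sparse test pair and the normalised difference state |psi>.
y = np.zeros(d); y[[1, 5]] = [0.7, -1.2]
yp = np.zeros(d); yp[1] = 0.2
v = y - yp
sparsity = np.count_nonzero(v)
N = np.linalg.norm(v)                    # normalisation N_psi
psi = v / N
Psi = np.concatenate([psi, np.zeros(d)]) # |0>_anc ⊗ |psi> in this ordering

expval = Psi @ U_Y @ Psi                 # equals <psi|Y|psi> / alpha
d_block = N**2 * alpha * expval          # d_Y recovered from the block-encoding
d_direct = v @ Y @ v                     # d_Y computed directly
print(sparsity, d_block, d_direct)
```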

Thus, if our assumptions are obeyed, the quantum cost of computing dY can be \(O(\,\text{poly}\,\log d)\), whereas classical numerical algorithms for computing the matrix geometric mean alone have cost \(O({\rm poly}\, d)\) for d × d matrices50,51.

In Theorem 14, we also assumed access to \(U_A\) and \(U_C\). We now show how to prepare block-encodings of density matrices proportional to A and C, and how these can be used to compute dY efficiently. First consider Lemma 30, which shows how to create a block-encoding of a density matrix. We define the density matrices \(\rho_A = A/{\rm{Tr}}(A)\) and \(\rho_C = C/{\rm{Tr}}(C)\), which we rewrite as

where \(| {\psi }_{k}\left.\right\rangle =(1/{{\mathcal{N}}}_{{\psi }_{k}})\mathop{\sum }\nolimits_{i = 1}^{d}{({{\boldsymbol{x}}}^{(k)}-{{\boldsymbol{x}}}^{{\prime} (k)})}_{i}| i\left.\right\rangle\) and \({{\mathcal{N}}}_{{\psi }_{k}}^{2}=\mathop{\sum }\nolimits_{i = 1}^{d}{({{\boldsymbol{x}}}^{(k)}-{{\boldsymbol{x}}}^{{\prime} (k)})}_{i}^{2}\) is the corresponding normalisation. Then from Lemma 30, if we are given unitaries VA and VC that prepare purifications of ρA and ρC, respectively, it is possible to create \({U}_{{\rho }_{A}}\) and \({U}_{{\rho }_{C}}\) using one query to VA and VC respectively and \(O(\log d)\) gates. One such class of states purifying ρA and ρC are

where

To prepare \(| {\Sigma }_{A}\left.\right\rangle\) and \(| {\Sigma }_{C}\left.\right\rangle\), we require the controlled unitaries \({V}_{A}={\sum }_{k\in {\mathcal{S}}}| k\rangle\langle k| \otimes {W}_{k}^{(A)}\) and \({V}_{C}={\sum }_{k\in {\mathcal{D}}}| k\rangle\langle k| \otimes {W}_{k}^{(C)}\), acting on the states \({\sum }_{k\in {\mathcal{S}}}\sqrt{{p}_{k}^{(A)}}| k\left.\right\rangle | 0\left.\right\rangle\) and \({\sum }_{k\in {\mathcal{D}}}\sqrt{{p}_{k}^{(C)}}| k\left.\right\rangle | 0\left.\right\rangle\), respectively. Here \({W}_{k}^{(A)}\) and \({W}_{k}^{(C)}\) are the state preparation circuits from Lemma 13 that create \(| {\psi }_{k}\left.\right\rangle\) for \(k\in {\mathcal{S}}\) and \(k\in {\mathcal{D}}\), respectively. Since \({W}_{k}^{(A)}\) and \({W}_{k}^{(C)}\) are known circuits, and assuming \(\sigma =O({\rm{poly}}\log d)\), VA and VC can likewise be realised straightforwardly and efficiently. Then, from Lemma 30, it is possible to create \((1,O(\log d),0)\)-block-encodings of ρA and ρC, denoted \({U}_{{\rho }_{A}}\) and \({U}_{{\rho }_{C}}\), respectively, with gate and query complexity \(O({\rm{poly}}\log d)\). If \({\rm{Tr}}(A)=1={\rm{Tr}}(C)\), this directly gives the unitaries UA and UC required in Theorem 14.
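The purification can be checked classically. The sketch assumes the weights \(p_k^{(A)} = \mathcal{N}_{\psi_k}^2/{\rm Tr}(A)\), the choice consistent with \(A = {\rm Tr}(A)\rho_A\), and the state \(|\Sigma_A\rangle = \sum_k \sqrt{p_k^{(A)}}|k\rangle|\psi_k\rangle\) that \(V_A\) produces from \(\sum_k \sqrt{p_k^{(A)}}|k\rangle|0\rangle\); tracing out the index register should then return \(\rho_A = A/{\rm Tr}(A)\). The random pairs are hypothetical data.

```python
import numpy as np

rng = np.random.default_rng(6)
d, K = 4, 5
pairs = [(rng.standard_normal(d), rng.standard_normal(d)) for _ in range(K)]
diffs = [x - xp for x, xp in pairs]

A = sum(np.outer(v, v) for v in diffs)
norms2 = np.array([v @ v for v in diffs])   # N_{psi_k}^2
p = norms2 / norms2.sum()                    # assumed weights p_k = N_{psi_k}^2 / Tr(A)
psis = [v / np.sqrt(n2) for v, n2 in zip(diffs, norms2)]

# |Sigma_A> = sum_k sqrt(p_k) |k> |psi_k>, with the index register first.
Sigma = sum(np.sqrt(p[k]) * np.kron(np.eye(K)[k], psis[k]) for k in range(K))

# Trace out the index register: with M[k, i] the amplitudes, rho = M^T conj(M).
M = Sigma.reshape(K, d)
rho_A = M.T @ M.conj()
print(np.round(rho_A, 4))
```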

For general classical data, \({\rm{Tr}}(A)=1={\rm{Tr}}(C)\) does not hold. However, since \(A={\rm{Tr}}(A){\rho }_{A}\) and \(C={\rm{Tr}}(C){\rho }_{C}\), the proof of Theorem 14 goes through in the same way if we begin with \({U}_{{\rho }_{A}}\) and \(U_{{\rho }_{C}}\), from which we can create \({U}_{{Y}^{{\prime} }}\), where \({Y}^{{\prime} }\equiv {\rho }_{A}^{-1}\#{\rho }_{C}={({\rm{Tr}}(A)/{\rm{Tr}}(C))}^{1/2}Y\) by the joint homogeneity of the geometric mean, \((sX)\#(tZ) = \sqrt{st}\,(X\#Z)\) for s, t > 0. This implies

Following the same proof idea as in Theorem 14 allows us to extract \(d_{Y^{\prime}}\). To recover dY, we use \({d}_{Y} = {({\rm{Tr}}(C)/{\rm{Tr}}(A))}^{1/2}d_{Y^{\prime}}\), so any estimation error in \(d_{Y^{\prime}}\) is multiplied by the same factor. The normalisations \({\rm{Tr}}(A)={\sum }_{k\in {\mathcal{S}}}{{\mathcal{N}}}_{{\psi }_{k}}^{2}\) and \({\rm{Tr}}(C)={\sum }_{k\in {\mathcal{D}}}{{\mathcal{N}}}_{{\psi }_{k}}^{2}\) can be computed efficiently if the states \(| {\psi }_{k}\left.\right\rangle\) have low sparsity \({\sigma }_{k}=O({\rm{poly}}\log d)\) for each k. So long as \({({\rm{Tr}}(C)/{\rm{Tr}}(A))}^{1/2}\) is \(O({\rm{poly}}\log d)\), dY is efficiently estimable.
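The normalisation factor follows from joint homogeneity: with \(\rho_A^{-1} = {\rm Tr}(A)\,A^{-1}\) and \(\rho_C = C/{\rm Tr}(C)\), one gets \(\rho_A^{-1}\#\rho_C = ({\rm Tr}(A)/{\rm Tr}(C))^{1/2}\,A^{-1}\#C\), and hence \(d_Y = ({\rm Tr}(C)/{\rm Tr}(A))^{1/2}d_{Y'}\). The NumPy check below confirms this on random matrices (hypothetical data; helper names are our own).

```python
import numpy as np

def mpow(X, t):
    """X**t for a real symmetric positive definite X."""
    X = (X + X.T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.T

def gmean(A, B):
    """Matrix geometric mean A # B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

rng = np.random.default_rng(7)
d = 4
G1, G2 = rng.standard_normal((d, d)), rng.standard_normal((d, d))
A = G1 @ G1.T + np.eye(d)
C = 3.0 * (G2 @ G2.T + np.eye(d))
trA, trC = np.trace(A), np.trace(C)

Y = gmean(np.linalg.inv(A), C)                  # Y  = A^{-1} # C
Yp = gmean(np.linalg.inv(A / trA), C / trC)     # Y' = rho_A^{-1} # rho_C

v = rng.standard_normal(d)                      # a test difference y - y'
d_Y, d_Yp = v @ Y @ v, v @ Yp @ v
d_Y_recovered = np.sqrt(trC / trA) * d_Yp       # rescale d_{Y'} back to d_Y
print(d_Y, d_Y_recovered)
```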

1-class quantum learning

Here we propose a new quantum classification problem that is a 1-class problem. This means that given a quantum state, we only want to know whether this state belongs to a class \({\mathcal{A}}\) or not. This problem occurs in many areas in machine learning, in particular in anomaly detection, where \({\mathcal{A}}\) is the class of states that are considered anomalous. Here we can be provided with the following training data:

where \({\{{\rho }_{i}\}}_{i}\) and \({\{{\sigma }_{i}\}}_{i}\) are sets of D-dimensional states. In anomaly detection scenarios, there are usually far fewer examples of anomalous states than ‘normal’ states, so that N ≪ M. However, we will not focus on the subtleties associated with imbalanced training data here.

Suppose that we have an incoming quantum state ξ and we want to flag this as belonging to the class \({\mathcal{A}}\) or not. Then it is useful to learn an ‘observable’ or a ‘witness’ Y such that its expectation value \({\rm{Tr}}(Y\xi )\) is large when ξ is flagged as anomalous, belonging to \({\mathcal{A}}\), but this value is small when ξ is ‘normal’. Thus we can set up an optimisation problem of the form

Minimising \({\rm{Tr}}({Y}^{-1}\rho )\) in L(Y) above is sensible because a small value of \({\rm{Tr}}({Y}^{-1}\rho )\) implies a large value of \({\rm{Tr}}(Y\rho )\). Indeed, since ρ is a density matrix, \({\rm{Tr}}({Y}^{-1}\rho )\ge {\rm{Tr}}{(Y\rho )}^{-1}\), which is a consequence of the operator Jensen inequality (see [52, Eqs. (29–35)]). Thus \({\rm{Tr}}({Y}^{-1}\rho )\le \lambda\) implies \({\rm{Tr}}(Y\rho )\ge 1/\lambda\).
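A numerical check of this inequality follows. A short argument also gives it directly: by the Cauchy–Schwarz inequality for the Hilbert–Schmidt inner product, \(1 = {\rm Tr}(\rho^{1/2}Y^{-1/2}Y^{1/2}\rho^{1/2}) \le {\rm Tr}(Y^{-1}\rho)^{1/2}\,{\rm Tr}(Y\rho)^{1/2}\). The sketch samples random positive definite Y and density matrices ρ and records the smallest value of \({\rm Tr}(Y^{-1}\rho)\,{\rm Tr}(Y\rho)\), which should never drop below 1.

```python
import numpy as np

rng = np.random.default_rng(5)
d = 4

def crand(shape):
    """Random complex matrix."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

min_product = np.inf
for _ in range(200):
    G, R = crand((d, d)), crand((d, d))
    Y = G @ G.conj().T + 0.01 * np.eye(d)              # random positive definite Y
    rho = R @ R.conj().T
    rho /= np.trace(rho).real                           # random density matrix
    inv_term = np.trace(np.linalg.solve(Y, rho)).real   # Tr(Y^{-1} rho)
    direct = np.trace(Y @ rho).real                     # Tr(Y rho)
    min_product = min(min_product, inv_term * direct)   # should stay >= 1

print(min_product)
```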

When ρ and σ are (positive definite) density matrices, the unique solution to Eq. (55) is given by the matrix geometric mean \(Y = \sigma^{-1}\#\rho\). We can therefore proceed as before to compute \({\rm{Tr}}(Y\xi )\), except that we no longer need to be concerned with the state preparation of ρ and σ, since we can assume that we are given copies of them. Thus, given access to UY, we can estimate the following expectation:

where the κσ constant follows from Lemma 8 and the error in the above estimate is upper bounded by ϵ. We then have the following result.

Theorem 15

Suppose that we are given the block-encodings Uρ and Uσ, where \(\rho \in {\mathcal{A}}\), \(\sigma\, \notin\, {\mathcal{A}}\) and that we are also given access to multiple copies of ξ. Suppose further that \({\kappa }_{\rho },{\kappa }_{\sigma }=O({\rm{poly}}\log d)\). Then computing \({\rm{Tr}}(Y\xi )\) for the optimal Y in Eq. (55) to precision ϵ > 0 has a query and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\).

Proof

From Lemma 8 we can construct a \((2{\kappa }_{\sigma },O(\log d),\epsilon )\)-block-encoding of Y with gate and query complexity \(O({\rm{poly}}({\kappa }_{\rho },{\kappa }_{\sigma },\log (1/\epsilon )))\). Considering that \({\kappa }_{\rho },{\kappa }_{\sigma }=O({\rm{poly}}\log d)\), applying the unitary UY to \(\xi \otimes | 0\left.\right\rangle \,\left\langle 0\right\vert\), and using the Hadamard test (Lemma 31) with \(\xi \otimes | 0\left.\right\rangle \,\left\langle 0\right\vert\), we recover \({\rm{Tr}}(Y\xi )\) to precision ϵ with query and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\). □
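The Hadamard-test step can be simulated with density matrices at toy scale. The block-encoding here is an assumption: we use the Hermitian unitary dilation of \(Y/\alpha\) with a hypothetical \(\alpha = 2\|Y\|\) instead of the Lemma 8 construction, place the ancilla before the system, and compute the exact outcome probability \(p_0 = (1 + {\rm Re}\,{\rm Tr}(U_Y(|0\rangle\langle 0|\otimes\xi)))/2\) rather than sampling it. In the actual algorithm \(p_0\) would be estimated from repeated runs or by amplitude estimation.

```python
import numpy as np

def mpow(X, t):
    """X**t for Hermitian positive definite X."""
    X = (X + X.conj().T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.conj().T

def gmean(A, B):
    """Matrix geometric mean A # B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

def rand_density(d, rng):
    """Random full-rank density matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    R = G @ G.conj().T + 0.1 * np.eye(d)
    return R / np.trace(R).real

rng = np.random.default_rng(3)
d = 4
rho, sigma, xi = (rand_density(d, rng) for _ in range(3))
Y = gmean(np.linalg.inv(sigma), rho)           # optimal witness sigma^{-1} # rho

# Toy block-encoding: Hermitian unitary dilation of Y / alpha (ancilla first).
alpha = 2 * np.linalg.norm(Y, 2)               # hypothetical normalisation
B = Y / alpha
Sq = mpow(np.eye(d) - B @ B, 0.5)
U = np.block([[B, Sq], [Sq, -B]])

# Hadamard test on control ⊗ ancilla ⊗ system, simulated with density matrices.
n = 2 * d
P0 = np.diag([1.0, 0.0])                       # |0><0|
Hc = np.kron(np.array([[1, 1], [1, -1]]) / np.sqrt(2), np.eye(n))
CU = np.block([[np.eye(n), np.zeros((n, n))], [np.zeros((n, n)), U]])
state = np.kron(P0, np.kron(P0, xi))           # |0><0|_c ⊗ |0><0|_anc ⊗ xi
for gate in (Hc, CU, Hc):
    state = gate @ state @ gate.conj().T
p0 = np.trace(np.kron(P0, np.eye(n)) @ state).real   # Pr[control reads 0]

estimate = alpha * (2 * p0 - 1)                # p0 = (1 + Re Tr(B xi)) / 2
exact = np.trace(Y @ xi).real
print(estimate, exact)
```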

We emphasise that this problem is entirely quantum in nature, since we are given only quantum data.

Remark 2

The assumption of Uρ and Uσ as block-encodings of ρ and σ, respectively, is without loss of generality in practice. There are two quantum input models for quantum states that are commonly employed in quantum algorithms:

-

Quantum query access model. In this model, quantum unitary oracles \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) are given such that they prepare purifications of ρ and σ, respectively. By the technique of purified density matrix in ref. 20 (see Lemma 30), we can implement Uρ and Uσ from \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) with query and gate complexity \(\tilde{O}\left(1\right)\). Therefore, Theorem 15 can be adapted to the quantum query access model with query and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\).

-

Quantum sample access model. In this model, independent and identical copies of ρ and σ are given. By the technique of density matrix exponentiation53,54, we can implement unitary operators that are block-encodings of ρ and σ using their copies (which was first noted in ref. 55 and later investigated in refs. 56,57,58). In this way, Theorem 15 can be adapted to the quantum sample access model with sample and gate complexity \(O({\rm{poly}}(\log d,1/\epsilon ))\).

Notably, the matrix geometric mean solution Y to Eq. (55) is precisely the Fuchs–Caves observable14, which is important for distinguishing two states ρ and σ. This observation motivates the Fuchs–Caves observable as a kind of ‘optimal witness’ that distinguishes ρ and σ, and the value of this ‘witness’ is precisely the quantum fidelity, as shown in the next section. This provides an alternative motivation, from a metric learning viewpoint, for the form of the quantum fidelity between two mixed states. In fact, a protocol involving measurement of the Fuchs–Caves observable achieves, up to constant factors, the optimal sample complexity for the quantum hypothesis testing problem of distinguishing ρ and σ [ref. 59, Appendix F]; that is, it minimises the number of copies of each state needed to distinguish the states to a given tolerated precision. We note that the loss function also appears in Eq. (6) of ref. 42, where it is motivated from a different perspective.

Extension to weighted geometric mean metric learning

The two terms in the loss function in Eq. (53), involving σ and ρ respectively, have equal weights. This means the learning algorithm deems closeness to ρ and farness to σ of equal ‘importance’. However, there are scenarios, especially in anomaly detection, where asymmetry is preferable. For example, this occurs when there is a higher cost of getting false negatives.

Modifying Eq. (53) by simply multiplying the two terms by different constants α, β leads to \(L(Y)=\alpha {\rm{Tr}}(Y\sigma )+\beta {\rm{Tr}}({Y}^{-1}\rho )\). However, this only rescales the optimal solution, \(Y \to (\beta/\alpha)^{1/2}Y\), as observed in ref. 24. A new loss function is therefore necessary for the asymmetric case.
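The rescaling can be verified directly: setting the gradient \(\alpha\sigma - \beta Y^{-1}\rho Y^{-1}\) to zero gives \(Y(\alpha\sigma)Y = \beta\rho\), whose positive definite solution is \((\alpha\sigma)^{-1}\#(\beta\rho) = (\beta/\alpha)^{1/2}\,\sigma^{-1}\#\rho\). The NumPy sketch below checks this on random real positive definite matrices; `a` and `b` play the roles of α and β, and the helper names are hypothetical.

```python
import numpy as np

def mpow(X, t):
    """X**t for a real symmetric positive definite X."""
    X = (X + X.T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.T

def gmean(A, B):
    """Matrix geometric mean A # B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

rng = np.random.default_rng(8)
d = 4
G1, G2 = rng.standard_normal((d, d)), rng.standard_normal((d, d))
sigma = G1 @ G1.T + np.eye(d)
rho = G2 @ G2.T + np.eye(d)
a, b = 2.0, 5.0                                  # weights alpha, beta

Y = gmean(np.linalg.inv(sigma), rho)             # unweighted optimum
Y_ab = gmean(np.linalg.inv(a * sigma), b * rho)  # solves Y (a sigma) Y = b rho
Yi = np.linalg.inv(Y_ab)
grad = a * sigma - b * Yi @ rho @ Yi             # gradient of the weighted loss at Y_ab
print(np.linalg.norm(grad))
```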

Following ref. 24, one can first observe that the solution \(Y = \sigma^{-1}\#\rho\) is also a solution to the following optimisation problem when t = 1/2:

where δ is the geodesic distance defined in Eq. (8). Although the rigorous proof is more involved, this fact is easy to understand geometrically: \(Y = \sigma^{-1}\#\rho\) is the midpoint of the unique geodesic joining \(\sigma^{-1}\) and ρ in the Riemannian manifold of positive definite matrices. When t = 1/2, the distances from \(\tilde{Y}\) to \(\sigma^{-1}\) and to ρ enter the objective with equal weight, so the optimal \(\tilde{Y}\) lies on this geodesic and, by symmetry, must be its midpoint. Similar geometric reasoning for t ≠ 1/2 shows that the solution to Eq. (57) is the weighted matrix geometric mean \(\tilde{Y}={\sigma }^{-1}{\#}_{t}\rho\), the point a fraction t of the way along the geodesic from \(\sigma^{-1}\) to ρ. That this is the unique solution to Eq. (57) is the n = 2 special case of a result in ref. 60; proofs can also be found in ref. 26, Chapter 6. See also ref. 24 for a discussion in the context of geometric mean metric learning.
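The geodesic picture can be illustrated numerically. The sketch assumes that the distance in Eq. (8) is the affine-invariant Riemannian distance \(\delta(X,Z) = \|\log(X^{-1/2}ZX^{-1/2})\|_F\) (our assumption, since the equation is not reproduced here) and checks that \(\sigma^{-1}\#_t\rho\) lies a fraction t of the way along the geodesic: \(\delta(\sigma^{-1},\sigma^{-1}\#_t\rho) = t\,\delta(\sigma^{-1},\rho)\) and \(\delta(\sigma^{-1}\#_t\rho,\rho) = (1-t)\,\delta(\sigma^{-1},\rho)\).

```python
import numpy as np

def eigfun(X, f):
    """Apply a scalar function f to a Hermitian positive definite X."""
    X = (X + X.conj().T) / 2
    w, V = np.linalg.eigh(X)
    return (V * f(w)) @ V.conj().T

def wgmean(A, B, t):
    """Weighted geometric mean A #_t B."""
    Ah, Aih = eigfun(A, np.sqrt), eigfun(A, lambda w: w**-0.5)
    return Ah @ eigfun(Aih @ B @ Aih, lambda w: w**t) @ Ah

def delta(X, Z):
    """Assumed affine-invariant distance ||log(X^{-1/2} Z X^{-1/2})||_F."""
    Xih = eigfun(X, lambda w: w**-0.5)
    return np.linalg.norm(eigfun(Xih @ Z @ Xih, np.log), 'fro')

def rand_density(d, rng):
    """Random full-rank density matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    R = G @ G.conj().T + 0.5 * np.eye(d)
    return R / np.trace(R).real

rng = np.random.default_rng(11)
d = 3
rho, sigma = rand_density(d, rng), rand_density(d, rng)
S_inv = np.linalg.inv(sigma)
full = delta(S_inv, rho)

errors = []
for t in (0.25, 0.5, 0.8):
    Yt = wgmean(S_inv, rho, t)                    # sigma^{-1} #_t rho
    errors.append((delta(S_inv, Yt) - t * full,
                   delta(Yt, rho) - (1 - t) * full))
print(full, errors)
```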

We can proceed as in the equally weighted 1-class quantum learning algorithm described in the previous section. The goal is again to output \({\rm{Tr}}(\tilde{Y}\xi )\) for an input test state ξ. Here we instead require block-encodings of the weighted matrix geometric mean, as given in Lemma 11. For all t > 0 (p > 0 in Lemma 11), the cost of constructing this block-encoding has the same scaling as in the unweighted case. Thus, up to constant and logarithmic factors, the cost of the quantum weighted geometric mean metric learning algorithm is identical to that of the unweighted version in Theorem 15.

Estimation of quantum fidelity and geometric Rényi relative entropies

Here, we describe our quantum algorithms for estimating quantum fidelity and geometric Rényi relative entropies using our quantum subroutines for preparing block-encodings of the standard and weighted matrix geometric means.

Fidelity

The fidelity between two mixed quantum states is defined by61

which is a widely used measure of the closeness (or similarity) of two quantum states. Estimating the value of the fidelity is a fundamental task in quantum information theory. When the matrix descriptions of ρ and σ are given, it can be calculated directly using the formula above or as the solution to a semi-definite optimisation problem42. Recently, several time-efficient quantum algorithms for fidelity estimation have been developed for the setting in which one has access to state-preparation circuits for ρ and σ 56,62,63.

Here, we introduce a new approach for fidelity estimation that is based on the Fuchs–Caves observable14. For two quantum states ρ and σ, this observable is given by \(M = \sigma^{-1}\#\rho\). Then the fidelity between ρ and σ can be represented as the expectation of M with respect to σ (cf. [ref. 38, Eq. (9.159)]):

Theorem 16

(Fidelity estimation via Fuchs–Caves observable). Suppose that \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) prepare purifications of mixed quantum states ρ and σ, respectively. Then, we can estimate \(F\left(\rho ,\sigma \right)\) within additive error ϵ using \(\tilde{O}(\min \{{\kappa }_{\rho }^{{2}},{\kappa }_{\sigma }^{2}\}\cdot {\kappa }_{\rho }{\kappa }_{\sigma }/\epsilon )\) queries to \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\), where κρ, κσ > 0 are such that ρ ≥ I/κρ and σ ≥ I/κσ.

Proof

Suppose that ρ and σ are n-qubit mixed quantum states and \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) are \(\left(n+a\right)\)-qubit unitary operators. By Lemma 30, we can implement two unitary operators Uρ and Uσ that are \(\left(1,n+a,0\right)\)-block-encodings of ρ and σ using \(O\left(1\right)\) queries to \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\), respectively. Then, by applying Lemma 8, we can implement a \(\left(2{\kappa }_{\sigma },b,\delta \right)\)-block-encoding UM of M = σ−1#ρ, using \(\tilde{O}({\kappa }_{\sigma }{\kappa }_{\rho }{\log }^{2}(1/\delta ))\) queries to Uρ and \(\tilde{O}({\kappa }_{\sigma }^{2}{\kappa }_{\rho }{\log }^{3}(1/\delta ))\) queries to Uσ, where \(b=O\left(n+a\right)\), and κρ and κσ satisfy ρ ≥ I/κρ and σ ≥ I/κσ.

By the Hadamard test (given in Lemma 31), there is a quantum circuit C that outputs 0 with probability \(\frac{1}{2}\left(1+{\rm{Re}}\{ {\rm{Tr}}\left({\left\langle \right.0| }_{b}{U}_{M}{| 0\left.\right\rangle }_{b}\sigma \right)\}\right)\), using one query to UM and one sample of σ. By noting that

we conclude that an \(O\left(\epsilon /{\kappa }_{\sigma }\right)\)-estimate of \({\rm{Re}} {\rm{Tr}}\left({\left\langle \right.0| }_{b}{U}_{M}{| 0\left.\right\rangle }_{b}\sigma \right)\) with \(\delta =\Theta \left(\epsilon /{\kappa }_{\sigma }\right)\) suffices to obtain an ϵ-estimate of \({\rm{Tr}}\left(M\sigma \right)\) (which is the fidelity according to Eq. (59)). By quantum amplitude estimation (given in Lemma 33), this can be done using \(O\left({\kappa }_{\sigma }/\epsilon \right)\) queries to C.

In summary, an ϵ-estimate of \(F\left(\rho ,\sigma \right)\) can be obtained by using \(\tilde{O}({\kappa }_{\sigma }^{3}{\kappa }_{\rho }/\epsilon )\) queries to \({{\mathcal{O}}}_{\sigma }\) and \(\tilde{O}({\kappa }_{\sigma }^{2}{\kappa }_{\rho }/\epsilon )\) queries to \({{\mathcal{O}}}_{\rho }\). The proof is completed by taking the minimum over symmetric cases (i.e., simply flipping the role of ρ and σ since the fidelity formula is symmetric under this exchange). □
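The identity \(F(\rho,\sigma) = {\rm Tr}(M\sigma)\) with \(M = \sigma^{-1}\#\rho\), on which Theorem 16 rests, can be checked classically. The sketch below assumes the (non-squared) Uhlmann fidelity \(F(\rho,\sigma) = \|\sqrt{\rho}\sqrt{\sigma}\|_1 = {\rm Tr}[(\sigma^{1/2}\rho\sigma^{1/2})^{1/2}]\). It also confirms \({\rm Tr}(M^{-1}\rho) = F(\rho,\sigma)\), reflecting that both terms of the 1-class loss \({\rm Tr}(Y\sigma)+{\rm Tr}(Y^{-1}\rho)\) equal the fidelity at the optimum.

```python
import numpy as np

def mpow(X, t):
    """X**t for Hermitian positive definite X."""
    X = (X + X.conj().T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.conj().T

def gmean(A, B):
    """Matrix geometric mean A # B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, 0.5) @ Ah

def rand_density(d, rng):
    """Random full-rank density matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    R = G @ G.conj().T + 0.1 * np.eye(d)
    return R / np.trace(R).real

rng = np.random.default_rng(9)
d = 4
rho, sigma = rand_density(d, rng), rand_density(d, rng)

M = gmean(np.linalg.inv(sigma), rho)                 # Fuchs–Caves observable
F_fc = np.trace(M @ sigma).real                      # Tr(M sigma)
F_dual = np.trace(np.linalg.solve(M, rho)).real      # Tr(M^{-1} rho)
F_uhl = np.linalg.svd(mpow(rho, 0.5) @ mpow(sigma, 0.5), compute_uv=False).sum()
sh = mpow(sigma, 0.5)
F_alt = np.trace(mpow(sh @ rho @ sh, 0.5)).real      # Tr[(sigma^{1/2} rho sigma^{1/2})^{1/2}]
print(F_fc, F_dual, F_uhl, F_alt)
```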

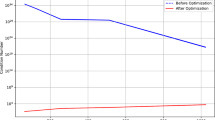

The current best quantum query complexity of fidelity estimation is \(\tilde{O}\left({r}^{2.5}/{\epsilon }^{5}\right)\), due to56, where r is the lower rank of the two input mixed quantum states. In comparison, our quantum algorithm for fidelity estimation based on the Fuchs–Caves observable, as given in Theorem 16, has a better dependence on the additive error ϵ, if κρ and κσ are known in advance.

Moreover, we note that the ϵ-dependence of the quantum algorithm given in Theorem 16 is optimal (up to polylogarithmic factors), as stated in Lemma 17 below.

Lemma 17

(Optimal ϵ-dependence of fidelity estimation). Suppose that \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) prepare purifications of mixed quantum states ρ and σ, respectively, satisfying ρ ≥ I/κρ and σ ≥ I/κσ for κρ, κσ > 0. Then, every quantum algorithm that estimates \(F\left(\rho ,\sigma \right)\) within additive error ϵ requires query complexity \(\Omega \left(1/\epsilon \right)\) even if \({\kappa }_{\rho }={\kappa }_{\sigma }=\Theta \left(1\right)\).

Proof

See Appendix IV I. □

Complementing the optimal \(\epsilon\)-dependence in Lemma 17, a quantum query algorithm that estimates the fidelity \(F(|\psi\rangle, |\varphi\rangle)\) between pure states with query complexity \(\Theta(1/\epsilon)\) was given in ref. 64.

Remark 3

(Sample complexity for fidelity estimation). Using the method in Theorem 16, we can also estimate the fidelity by using only samples of quantum states, which is achieved by density matrix exponentiation53,54,56,58,65. As analysed in Appendix IV J, the sample complexity for fidelity estimation is shown to be \(\tilde{O}(\min \{{\kappa }_{\rho }^{5},{\kappa }_{\sigma }^{5}\}\cdot {\kappa }_{\rho }^{2}{\kappa }_{\sigma }^{2}/{\epsilon }^{3})={\rm{poly}}({\kappa }_{\rho },{\kappa }_{\sigma })\cdot \tilde{O}(1/{\epsilon }^{3})\). The previously known sample complexity for fidelity estimation is \(\tilde{O}\left({r}^{5.5}/{\epsilon }^{12}\right)\) due to56, where r is the lower rank of the two input mixed quantum states.

We also show a sample lower bound \(\Omega \left(1/{\epsilon }^{2}\right)\) for fidelity estimation even if \({\kappa }_{\rho }={\kappa }_{\sigma }=\Theta \left(1\right)\) using the method in the proof of Lemma 17; this can be seen as an analogue of the sample lower bound \(\Omega \left(1/{\epsilon }^{2}\right)\) for pure-state fidelity estimation in ref. 66. In addition, a sample lower bound \(\Omega \left(r/\epsilon \right)\) for (low-rank) fidelity estimation is implied in refs. 67,68.

Currently, quantum algorithms for fidelity estimation with optimal sample complexity are known only for pure states. For estimating the squared fidelity \(F^2(|\psi\rangle, |\varphi\rangle)\), the sample complexity \(\Theta(1/\epsilon^2)\) can be achieved by the SWAP test48. Ref. 66 showed that \(\Theta(\max\{1/\epsilon^2, \sqrt{d}/\epsilon\})\) samples are necessary and sufficient to estimate \(F^2(|\psi\rangle, |\varphi\rangle)\) when only single-copy measurements are allowed. Recently, ref. 69 showed that the sample complexity of estimating the fidelity \(F(|\psi\rangle, |\varphi\rangle)\) is \(\Theta(1/\epsilon^2)\).

Geometric fidelity and geometric Rényi relative entropy

Here we present, to the best of our knowledge, the first quantum algorithm for computing the geometric α-Rényi relative entropy, as introduced in ref. 12. For \(\alpha \in (0,1)\cup \left(1,2\right]\), the geometric α-Rényi relative entropy is defined as (see, e.g., [ref. 13, Eq. (9)] and [ref. 70, Eq. (7.6.1)])

where

is known as the geometric α-Rényi relative quasi-entropy. When α ∈ (0, 1), we also refer to \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\) as the geometric α-fidelity. In particular, for α = 1/2, the quantity \({\widehat{F}}_{1/2}\left(\rho ,\sigma \right)\) is the geometric fidelity (also known as the Matsumoto fidelity)15,16. The geometric α-Rényi relative entropy has several uses in quantum information theory, especially in analysing protocols involving feedback13,28,71.
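As a classical reference point, the sketch below evaluates the geometric quasi-entropy under the assumption that it takes the standard form \(\widehat{F}_\alpha(\rho,\sigma) = {\rm Tr}[\sigma^{1/2}(\sigma^{-1/2}\rho\sigma^{-1/2})^{\alpha}\sigma^{1/2}] = {\rm Tr}(\sigma\#_\alpha\rho)\), with relative entropy \(\frac{1}{\alpha-1}\log\widehat{F}_\alpha\); the defining equations are not reproduced above, so this form is our assumption. It checks three properties: the commuting case reduces to \(\sum_i p_i^\alpha q_i^{1-\alpha}\), the Matsumoto fidelity (α = 1/2) does not exceed the Uhlmann fidelity, and α = 2 gives \({\rm Tr}(\rho\sigma^{-1}\rho)\).

```python
import numpy as np

def mpow(X, t):
    """X**t for Hermitian positive definite X."""
    X = (X + X.conj().T) / 2
    w, V = np.linalg.eigh(X)
    return (V * w**t) @ V.conj().T

def wgmean(A, B, t):
    """Weighted geometric mean A #_t B."""
    Ah, Aih = mpow(A, 0.5), mpow(A, -0.5)
    return Ah @ mpow(Aih @ B @ Aih, t) @ Ah

def geometric_quasi(rho, sigma, alpha):
    """Assumed form of the geometric quasi-entropy: Tr(sigma #_alpha rho)."""
    return np.trace(wgmean(sigma, rho, alpha)).real

def geometric_renyi(rho, sigma, alpha):
    """(1 / (alpha - 1)) log Tr(sigma #_alpha rho), for alpha in (0,1) U (1,2]."""
    return np.log(geometric_quasi(rho, sigma, alpha)) / (alpha - 1)

def rand_density(d, rng):
    """Random full-rank density matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    R = G @ G.conj().T + 0.1 * np.eye(d)
    return R / np.trace(R).real

rng = np.random.default_rng(12)
rho, sigma = rand_density(3, rng), rand_density(3, rng)

# Commuting (diagonal) states reduce to the classical sum p_i^alpha q_i^(1 - alpha).
pv, qv = np.array([0.5, 0.3, 0.2]), np.array([0.2, 0.2, 0.6])
classical_gap = abs(geometric_quasi(np.diag(pv), np.diag(qv), 0.7)
                    - np.sum(pv**0.7 * qv**0.3))

# alpha = 1/2: Matsumoto fidelity versus Uhlmann fidelity ||sqrt(rho) sqrt(sigma)||_1.
F_matsumoto = geometric_quasi(rho, sigma, 0.5)
F_uhlmann = np.linalg.svd(mpow(rho, 0.5) @ mpow(sigma, 0.5), compute_uv=False).sum()

# alpha = 2 gives Tr(rho sigma^{-1} rho).
alpha2_gap = abs(geometric_quasi(rho, sigma, 2.0)
                 - np.trace(rho @ np.linalg.inv(sigma) @ rho).real)
D_half = geometric_renyi(rho, sigma, 0.5)
print(classical_gap, F_matsumoto, F_uhlmann, alpha2_gap, D_half)
```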

Here we present quantum algorithms in Theorems 18 and 19 for computing the geometric Rényi relative (quasi-)entropy.

Theorem 18

Suppose that \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) prepare purifications of mixed quantum states ρ and σ, respectively. Then, for \(\alpha \in \left(0,1\right)\cup \left(1,2\right]\), we can estimate \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\) to within additive error ϵ using

- \(\tilde{O}(\min {\{{\kappa }_{\rho },{\kappa }_{\sigma }\}}^{\min \left\{1+\alpha ,2-\alpha \right\}}\cdot {\kappa }_{\rho }{\kappa }_{\sigma }/\epsilon )\) queries for α ∈ (0, 1), and
- \(\tilde{O}(\min \{{\kappa }_{\rho }{\kappa }_{\sigma }^{\alpha -1},{\kappa }_{\rho }^{\alpha -1}{\kappa }_{\sigma },{\kappa }_{\rho }^{1+\alpha },{\kappa }_{\sigma }^{1+\alpha }\}\cdot {\kappa }_{\rho }{\kappa }_{\sigma }/\epsilon )\) queries for \(\alpha \in \left(1,2\right]\)

to \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\), where κρ, κσ > 0 satisfy ρ ≥ I/κρ and σ ≥ I/κσ.

In particular, for α = 1/2, the geometric (Matsumoto) fidelity \({\widehat{F}}_{1/2}\left(\rho ,\sigma \right)\) can be estimated using \(\tilde{O}(\min {\{{\kappa }_{\rho },{\kappa }_{\sigma }\}}^{3/2}\cdot {\kappa }_{\rho }{\kappa }_{\sigma }/\epsilon )\) queries to \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\).

Proof

See Appendix IV H. □
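To make the exponents in Theorem 18 concrete, the helper below evaluates the leading term of the stated bound. It drops the polylogarithmic factors hidden by \(\tilde{O}\) and all constants, so it is only useful for comparing scalings (for example, how the cost moves with \(\kappa_\rho\), \(\kappa_\sigma\), or α), not for predicting actual query counts; the function name is hypothetical.

```python
import math


def theorem18_leading_term(kappa_rho: float, kappa_sigma: float, alpha: float, eps: float) -> float:
    """Leading term of the Theorem 18 query bound for estimating F_hat_alpha.
    Polylogarithmic factors (hidden by O-tilde) and constants are dropped."""
    kr, ks = kappa_rho, kappa_sigma
    if 0.0 < alpha < 1.0:
        prefactor = min(kr, ks) ** min(1.0 + alpha, 2.0 - alpha)
    elif 1.0 < alpha <= 2.0:
        prefactor = min(kr * ks ** (alpha - 1.0), kr ** (alpha - 1.0) * ks,
                        kr ** (1.0 + alpha), ks ** (1.0 + alpha))
    else:
        raise ValueError("Theorem 18 covers alpha in (0, 1) or (1, 2]")
    return prefactor * kr * ks / eps


# alpha = 1/2 (geometric fidelity): min(kr, ks)^{3/2} * kr * ks / eps, about 2880 here.
print(theorem18_leading_term(4.0, 9.0, 0.5, 0.1))
```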

Theorem 19

Suppose that \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) prepare purifications of mixed quantum states ρ and σ, respectively. Then, for \(\alpha \in \left(0,1\right)\cup \left(1,2\right]\), we can estimate \({\widehat{D}}_{\alpha }\left(\rho \| \sigma \right)\) to within additive error ϵ using

- \(\tilde{O}(\min {\{{\kappa }_{\rho },{\kappa }_{\sigma }\}}^{\min \left\{1+\alpha ,2-\alpha \right\}}\cdot {\kappa }_{\rho }{\kappa }_{\sigma }^{2-\alpha }/\epsilon)\) queries for α ∈ (0, 1), and
- \(\tilde{O}(\min \{{\kappa }_{\rho }{\kappa }_{\sigma }^{\alpha -1},{\kappa }_{\rho }^{\alpha -1}{\kappa }_{\sigma },{\kappa }_{\rho }^{1+\alpha },{\kappa }_{\sigma }^{1+\alpha }\}\cdot {\kappa }_{\rho }^{\alpha }{\kappa }_{\sigma }/\epsilon )\) queries for \(\alpha \in \left(1,2\right]\)

to \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\), where κρ, κσ > 0 satisfy ρ ≥ I/κρ and σ ≥ I/κσ.

Proof

See Appendix IV H. □
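The bounds in Theorem 19 carry an extra factor relative to Theorem 18 (\(\kappa_\sigma^{1-\alpha}\) for α ∈ (0, 1) and \(\kappa_\rho^{\alpha-1}\) for α ∈ (1, 2]). A generic first-order argument, offered as intuition and not as the proof in Appendix IV H, shows why estimating the relative entropy is harder than estimating the quasi-entropy \(\widehat{F}_\alpha\): the former equals \(\log\widehat{F}_\alpha/(\alpha-1)\), so an additive error δ in \(\widehat{F}_\alpha\) becomes roughly \(\delta/(|\alpha-1|\,\widehat{F}_\alpha)\). The numbers below are arbitrary illustrative values.

```python
import math


def renyi_from_quasi(f_hat: float, alpha: float) -> float:
    """Relative entropy log(F_hat_alpha) / (alpha - 1), natural log."""
    return math.log(f_hat) / (alpha - 1.0)


def first_order_renyi_error(f_hat: float, alpha: float, delta: float) -> float:
    """Error in the relative entropy induced by an additive error delta in F_hat_alpha,
    to first order: |dD/dF| * delta = delta / (|alpha - 1| * F_hat)."""
    return delta / (abs(alpha - 1.0) * f_hat)


f_hat, alpha, delta = 0.2, 0.5, 1e-4
actual = abs(renyi_from_quasi(f_hat + delta, alpha) - renyi_from_quasi(f_hat, alpha))
predicted = first_order_renyi_error(f_hat, alpha, delta)
# To reach error eps in the relative entropy, F_hat_alpha must be estimated to within
# roughly eps * |alpha - 1| * F_hat_alpha, which is tighter when F_hat_alpha is small.
print(actual, predicted)
```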

Notably, our quantum algorithm for estimating the geometric fidelity \({\widehat{F}}_{1/2}\left(\rho ,\sigma \right)\) achieves the optimal ϵ-dependence, and the same optimality holds for \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\) with any α ∈ (0, 1), as we show next.

Lemma 20

(Optimal ϵ-dependence of geometric α-fidelity estimation). Suppose that \({{\mathcal{O}}}_{\rho }\) and \({{\mathcal{O}}}_{\sigma }\) prepare purifications of mixed quantum states ρ and σ, respectively, with ρ ≥ I/κρ and σ ≥ I/κσ, where κρ, κσ > 0. Then, for every constant α ∈ (0, 1), every quantum algorithm that estimates \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\) within additive error ϵ requires query complexity \(\Omega \left(1/\epsilon \right)\) even if \({\kappa }_{\rho }={\kappa }_{\sigma }=\Theta \left(1\right)\), where \(\Omega \left(\cdot \right)\) hides a constant factor that depends only on α.

Proof

See Appendix IV I. □

It remains an open problem to determine whether optimality also holds for \(\alpha \in \left(1,2\right]\). Note, however, that for \(\alpha \in \left(1,2\right]\) the inequality \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\ge 1\) holds, so \({\widehat{F}}_{\alpha }\left(\rho ,\sigma \right)\) cannot be interpreted as a fidelity for these values of α, and different techniques would be needed to establish optimality.

Remark 4

As in Remark 3, Theorems 18 and 19 extend to quantum algorithms that estimate the corresponding quantities with sample complexity \({\rm{poly}}({\kappa }_{\rho },{\kappa }_{\sigma })\cdot \tilde{O}\left(1/{\epsilon }^{3}\right)\), and Lemma 20 extends to a sample-complexity lower bound of \(\Omega \left(1/{\epsilon }^{2}\right)\).

BQP-hardness

In this section, we consider the hardness of computing the matrix geometric mean. Specifically, we show that our quantum algorithm for matrix geometric means (given in Lemma 8) can be used to solve a \({\mathsf{BQP}}\)-complete problem (defined in Problem 1). Roughly speaking, this problem asks to test a certain property of the matrix geometric mean of two well-conditioned sparse matrices.

Problem 1 (Matrix geometric mean)

For functions \({\kappa }_{A}:{\mathbb{N}}\to {\mathbb{N}}\) and \({\kappa }_{C}:{\mathbb{N}}\to {\mathbb{N}}\), let \({\rm{MGM}}\left({\kappa }_{A},{\kappa }_{C}\right)\) be a decision problem defined as follows. For a size-n instance of \({\rm{MGM}}\left({\kappa }_{A},{\kappa }_{C}\right)\), let \(N={2}^{n}\) and let \(A,C\in {{\mathbb{C}}}^{N\times N}\) be \(O\left(1\right)\)-sparse positive definite matrices satisfying \(I/{\kappa }_{A}\left(n\right)\le A\le I\) and \(I/{\kappa }_{C}\left(n\right)\le C\le I\), given by a \({\rm{poly}}\left(n\right)\)-size uniform classical circuit \({{\mathcal{C}}}_{n}\) such that, for every 1 ≤ j ≤ N, the circuit \({{\mathcal{C}}}_{n}\left(j\right)\) computes the positions and values of the non-zero entries in the j-th row of A and C. Let \(Y\in {{\mathbb{C}}}^{N\times N}\) be the unique positive definite solution of YAY = C, that is, the matrix geometric mean \(Y={A}^{-1}\#C\). The task is to decide which of the following is the case, promised that one of the two holds:

- Yes: \(\langle \psi | M| \psi \rangle \ge 2/3\);
- No: \(\langle \psi | M| \psi \rangle \le 1/3\),

where \(| \psi \rangle :={Y}^{2}| 0\rangle /\Vert {Y}^{2}| 0\rangle \Vert\) and \(M=| 0\rangle \langle 0| \otimes {I}_{N/2}\) projects the first qubit onto \(| 0\rangle\).
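For intuition about what Problem 1 asks, the brute-force classical reference below (exponential in n, so only for tiny instances) computes Y from the closed form \(Y = A^{-1/2}(A^{1/2}CA^{1/2})^{1/2}A^{-1/2}\) and evaluates \(\langle\psi|M|\psi\rangle\). It assumes \(|0\rangle\) is the first computational basis vector and the standard Kronecker ordering, so the first qubit is 0 exactly on the first N/2 indices; the example matrices are hypothetical.

```python
import numpy as np


def herm_power(X: np.ndarray, p: float) -> np.ndarray:
    """X**p for a Hermitian positive definite X, via its eigendecomposition."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T


def riccati_solution(A: np.ndarray, C: np.ndarray) -> np.ndarray:
    """Unique positive definite Y with Y A Y = C, namely
    Y = A^{-1} # C = A^{-1/2} (A^{1/2} C A^{1/2})^{1/2} A^{-1/2}."""
    A_half, A_inv_half = herm_power(A, 0.5), herm_power(A, -0.5)
    inner = A_half @ C @ A_half
    inner = 0.5 * (inner + inner.conj().T)  # re-symmetrise against round-off
    return A_inv_half @ herm_power(inner, 0.5) @ A_inv_half


def mgm_acceptance(A: np.ndarray, C: np.ndarray) -> float:
    """<psi|M|psi> with |psi> = Y^2|0> / ||Y^2|0>|| and M = |0><0| (x) I_{N/2}."""
    N = A.shape[0]
    Y = riccati_solution(A, C)
    e0 = np.zeros(N)
    e0[0] = 1.0  # |0> = |0...0>, the first computational basis state
    v = Y @ (Y @ e0)
    psi = v / np.linalg.norm(v)
    # In the Kronecker ordering of |0><0| (x) I_{N/2}, the first qubit is 0
    # exactly on the first N/2 basis indices.
    return float(np.sum(np.abs(psi[: N // 2]) ** 2))


# Hypothetical n = 3 instance: tridiagonal (hence O(1)-sparse) A and diagonal C,
# both with spectra inside (0, 1].
N = 8
A = 0.6 * np.eye(N) + 0.15 * (np.eye(N, k=1) + np.eye(N, k=-1))
C = np.diag(np.linspace(0.2, 1.0, N))
Y = riccati_solution(A, C)
print(np.allclose(Y @ A @ Y, C), mgm_acceptance(A, C))
```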

Theorem 21

\({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is \({\mathsf{BQP}}\)-complete.

Proof

The proof consists of two parts: Lemma 22 and Lemma 23.

1. In Lemma 22, we show that \({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is \({\mathsf{BQP}}\)-hard; the proof uses a reduction from the quantum linear systems problem (QLSP).
2. In Lemma 23, we show that \({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is in \({\mathsf{BQP}}\); the proof uses the quantum algorithm for the matrix geometric mean given in Lemma 8.

□

Lemma 22

\({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is \({\mathsf{BQP}}\)-hard.

Proof

We reduce the quantum linear systems problem (QLSP), defined as follows, to MGM.

Problem 2 (QLSP)

For a function \(\kappa :{\mathbb{N}}\to {\mathbb{N}}\), let \({\rm{QLSP}}\left(\kappa \right)\) be a decision problem defined as follows. For a size-n instance of \({\rm{QLSP}}\left(\kappa \right)\), let \(N={2}^{n}\) and let \(A\in {{\mathbb{C}}}^{N\times N}\) be an \(O\left(1\right)\)-sparse Hermitian matrix such that \(I/\kappa \left(n\right)\le A\le I\), given by a \({\rm{poly}}\left(n\right)\)-size uniform classical circuit \({{\mathcal{C}}}_{n}\) such that, for every 1 ≤ j ≤ N, \({{\mathcal{C}}}_{n}\left(j\right)\) computes the positions and values of the non-zero entries in the j-th row of A. The task is to decide which of the following is the case, promised that one of the two holds:

- Yes: \(\langle \psi | M| \psi \rangle \ge 2/3\);
- No: \(\langle \psi | M| \psi \rangle \le 1/3\),

where \(| \psi \rangle :={A}^{-1}| 0\rangle /\Vert {A}^{-1}| 0\rangle \Vert\) and \(M=| 0\rangle \langle 0| \otimes {I}_{N/2}\) projects the first qubit onto \(| 0\rangle\).

It was shown in ref. 1 that \({\rm{QLSP}}\left({\rm{poly}}\left(n\right)\right)\) is \({\mathsf{BQP}}\)-complete. We now reduce \({\rm{QLSP}}\left({\rm{poly}}\left(n\right)\right)\) to \({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\), which establishes the \({\mathsf{BQP}}\)-hardness of the latter. Consider any instance \(A\in {{\mathbb{C}}}^{N\times N}\) of \({\rm{QLSP}}\left(\kappa \right)\), where \(N={2}^{n}\) and \(\kappa ={\rm{poly}}\left(n\right)\). Since \(I/\kappa \le A\le I\), the matrix A is positive definite and can serve as the first matrix of an MGM instance with \({\kappa }_{A}=\kappa\). For the second matrix we choose the identity \(C=I\in {{\mathbb{C}}}^{N\times N}\), which is 1-sparse and whose rows are trivially computable. The solution of YAY = C is then the matrix geometric mean \(Y={A}^{-1}\#I={A}^{-1/2}\), so \({Y}^{2}={A}^{-1}\) and thus \(| {\psi }_{Y}\rangle ={Y}^{2}| 0\rangle /\Vert {Y}^{2}| 0\rangle \Vert ={A}^{-1}| 0\rangle /\Vert {A}^{-1}| 0\rangle \Vert =| {\psi }_{A}\rangle\). Consequently, any quantum algorithm that decides whether \(\langle {\psi }_{Y}| M| {\psi }_{Y}\rangle \ge 2/3\) or \(\langle {\psi }_{Y}| M| {\psi }_{Y}\rangle \le 1/3\) with success probability at least 2/3 also decides whether \(\langle {\psi }_{A}| M| {\psi }_{A}\rangle \ge 2/3\) or \(\langle {\psi }_{A}| M| {\psi }_{A}\rangle \le 1/3\) with the same success probability. In summary, \({\rm{QLSP}}\left(\kappa \right)\) reduces to \({\rm{MGM}}\left(\kappa ,1\right)\) through this encoding, and therefore \({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is \({\mathsf{BQP}}\)-hard. □
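The reduction can be sanity-checked numerically on a small, hypothetical instance: with C = I, the Riccati solution collapses to \(Y = A^{-1/2}\), so the MGM state \(Y^2|0\rangle\) coincides with the QLSP state \(A^{-1}|0\rangle\) after normalisation. The tridiagonal A below is an arbitrary choice whose spectrum lies in (0, 1]; this is a classical check of the algebra, not an implementation of either quantum algorithm.

```python
import numpy as np


def herm_power(X: np.ndarray, p: float) -> np.ndarray:
    """X**p for a Hermitian positive definite X, via its eigendecomposition."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T


def normalized(v: np.ndarray) -> np.ndarray:
    return v / np.linalg.norm(v)


def first_qubit_zero(psi: np.ndarray) -> float:
    """<psi|M|psi> for M = |0><0| (x) I_{N/2}: weight on the first N/2 basis indices."""
    return float(np.sum(np.abs(psi[: len(psi) // 2]) ** 2))


# Hypothetical QLSP instance with I/kappa <= A <= I (tridiagonal, so O(1)-sparse).
N = 8
A = 0.6 * np.eye(N) + 0.15 * (np.eye(N, k=1) + np.eye(N, k=-1))
C = np.eye(N)  # the reduction's choice C = I

# Solution of Y A Y = C: Y = A^{-1/2} (A^{1/2} C A^{1/2})^{1/2} A^{-1/2}, i.e. A^{-1/2} for C = I.
A_half, A_inv_half = herm_power(A, 0.5), herm_power(A, -0.5)
Y = A_inv_half @ herm_power(A_half @ C @ A_half, 0.5) @ A_inv_half

e0 = np.zeros(N)
e0[0] = 1.0
psi_Y = normalized(Y @ Y @ e0)              # MGM state  Y^2|0> / ||Y^2|0>||
psi_A = normalized(np.linalg.solve(A, e0))  # QLSP state A^{-1}|0> / ||A^{-1}|0>||
print(np.allclose(psi_Y, psi_A), first_qubit_zero(psi_Y), first_qubit_zero(psi_A))
```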

Lemma 23

\({\rm{MGM}}\left({\rm{poly}}\left(n\right),{\rm{poly}}\left(n\right)\right)\) is in \({\mathsf{BQP}}\).

Proof

See Appendix IV K. □

Discussion

We constructed efficient block-encodings of the matrix geometric mean and the weighted matrix geometric mean. These are the unique solutions of the simplest algebraic Riccati equations, which are quadratically nonlinear systems of matrix equations. Unlike the outputs of most quantum algorithms for linear systems of equations, these solutions are not encoded in pure quantum states but in observables, from which expectation values can be extracted.
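The simplest algebraic Riccati equation, YAY = C, already shows why its solution is a matrix geometric mean. The short derivation below is the standard argument, included for illustration rather than taken from the paper's appendices; it assumes A and C are positive definite and seeks a positive definite Y, with \(X\#Z := X^{1/2}(X^{-1/2}ZX^{-1/2})^{1/2}X^{1/2}\).

```latex
\begin{aligned}
YAY = C
&\iff \bigl(A^{1/2} Y A^{1/2}\bigr)^{2} = A^{1/2} C A^{1/2}
  && \text{(multiply by } A^{1/2} \text{ on both sides)}\\
&\iff A^{1/2} Y A^{1/2} = \bigl(A^{1/2} C A^{1/2}\bigr)^{1/2}
  && \text{(unique positive definite square root)}\\
&\iff Y = A^{-1/2}\bigl(A^{1/2} C A^{1/2}\bigr)^{1/2} A^{-1/2} = A^{-1} \# C .
\end{aligned}
```

Each step is reversible because \(A^{1/2}\) is invertible and \(A^{1/2}YA^{1/2}\) is positive definite whenever Y is, which is where uniqueness comes from.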

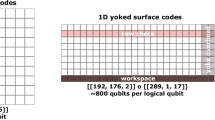

This allows us to introduce a new class of quantum learning algorithms, called quantum geometric mean metric learning. For example, it can be applied in a purely quantum setting to pick out anomalous quantum states, and it can be adapted to settings with flexible weights on the cost of flagging an anomaly. The new quantum subroutines also yield what is, to the best of our knowledge, the first quantum algorithm for computing the geometric Rényi relative entropies, as well as new quantum algorithms for computing the quantum fidelity by means of the Fuchs–Caves observable. In the latter case, our algorithms achieve a precision dependence that is optimal, matching an Ω(1/ϵ) lower bound.
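The Fuchs–Caves observable ties the fidelity to a matrix geometric mean: \(M=\sigma^{-1}\#\rho=\sigma^{-1/2}(\sigma^{1/2}\rho\sigma^{1/2})^{1/2}\sigma^{-1/2}\) solves \(M\sigma M=\rho\), and \({\rm{tr}}[\sigma M]={\rm{tr}}[(\sigma^{1/2}\rho\sigma^{1/2})^{1/2}]\) is the (root) Uhlmann fidelity. The NumPy sketch below checks these identities classically for random full-rank states; it illustrates the underlying linear algebra only and is not the paper's block-encoding-based algorithm.

```python
import numpy as np


def herm_power(X: np.ndarray, p: float) -> np.ndarray:
    """X**p for a Hermitian positive definite X, via its eigendecomposition."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T


def fuchs_caves_observable(rho: np.ndarray, sigma: np.ndarray) -> np.ndarray:
    """M = sigma^{-1/2} (sigma^{1/2} rho sigma^{1/2})^{1/2} sigma^{-1/2}, i.e. M = sigma^{-1} # rho."""
    s_half, s_inv_half = herm_power(sigma, 0.5), herm_power(sigma, -0.5)
    inner = s_half @ rho @ s_half
    inner = 0.5 * (inner + inner.conj().T)  # re-symmetrise against round-off
    return s_inv_half @ herm_power(inner, 0.5) @ s_inv_half


def uhlmann_fidelity(rho: np.ndarray, sigma: np.ndarray) -> float:
    """F(rho, sigma) = || rho^{1/2} sigma^{1/2} ||_1, the sum of its singular values."""
    prod = herm_power(rho, 0.5) @ herm_power(sigma, 0.5)
    return float(np.sum(np.linalg.svd(prod, compute_uv=False)))


rng = np.random.default_rng(3)
G1 = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
G2 = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
rho = G1 @ G1.conj().T + 0.05 * np.eye(3)   # full-rank random states
rho /= np.trace(rho).real
sigma = G2 @ G2.conj().T + 0.05 * np.eye(3)
sigma /= np.trace(sigma).real
M = fuchs_caves_observable(rho, sigma)
print(np.real(np.trace(sigma @ M)), uhlmann_fidelity(rho, sigma))
```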

While most of the applications introduced above concern quantum problems with no direct classical equivalent (although the quantum learning algorithm can also be applied to learning Euclidean distances for classical data), the new quantum subroutines may also offer advantages over purely classical methods that could be exploited in future applications. For example, classical numerical algorithms for computing the matrix geometric mean of D × D matrices have cost O(poly D)50,51. The same holds for solving the differential and algebraic matrix Riccati equations17, both through iterative methods and through methods based on the eigendecomposition of a larger matrix72. For quantum processing, on the other hand, we showed conditions under which block-encodings of some of these solutions can be obtained with cost \(O({\rm{poly}}\log D)\).

Many classical problems require computing the matrix geometric mean of two matrices; examples arise in imaging73,74 and in the analysis of multiport electrical networks75. The algebraic Riccati equation of the form in Eq. (9) also appears in optimal control and Kalman filters. When the assumptions of Lemma 5 hold and its solution is unique, it may be possible to construct a block-encoding of that solution using Lemma 9. Although these assumptions are not satisfied in general, this still indicates the reach of the matrix geometric mean. Our algorithms could also be extended to geometric means of more than two matrices, which already find applications in areas such as elasticity and radar76,77. It is also intriguing to consider purely quantum extensions of these problems. The main difficulty in constructing block-encodings of multivariate geometric means is that, unlike in the bivariate case, they are not known to have a closed form; rather, they are defined as solutions of nonlinear equations generalising the simple algebraic Riccati equation10. We also note that there are many other quantum algorithms for learning problems with different loss functions whose solutions do not in general have closed forms, for example semi-definite programming78,79,80,81, linear programming82,83,84, and general matrix games85. It would be interesting to see whether the techniques developed in this paper apply to these problems.

In addition to their usefulness in applications, the standard and weighted matrix geometric means have an elegant interpretation in terms of geodesics on the Riemannian manifold of positive definite matrices. Despite the importance and beauty of Riemannian geometry in mathematics, physics, sensing, and machine learning, it has so far appeared only rarely in quantum computation, with notable exceptions such as ref. 86. This geometric perspective helps in understanding the weighted quantum learning algorithm, and we showed how it provides an alternative motivation for the form of the quantum fidelity via the Fuchs–Caves observable. There is further potential for the matrix geometric mean to bring ideas from geometry closer to quantum information and computation.
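The geodesic interpretation can be checked numerically. Under the standard affine-invariant metric on positive definite matrices, with distance \(\delta(A,B)=\Vert\log(A^{-1/2}BA^{-1/2})\Vert_F\), the weighted mean \(A\#_tB=A^{1/2}(A^{-1/2}BA^{-1/2})^{t}A^{1/2}\) traces the geodesic from A to B at constant speed, so \(\delta(A, A\#_tB)=t\,\delta(A,B)\). The sketch assumes this textbook metric (the paper's own geodesic discussion is not part of this section) and uses two arbitrary 2 × 2 matrices.

```python
import numpy as np


def herm_fn(X: np.ndarray, f) -> np.ndarray:
    """Apply a scalar function f to a Hermitian matrix X through its eigendecomposition."""
    w, V = np.linalg.eigh(X)
    return (V * f(w)) @ V.conj().T


def weighted_geometric_mean(A: np.ndarray, B: np.ndarray, t: float) -> np.ndarray:
    """A #_t B = A^{1/2} (A^{-1/2} B A^{-1/2})^t A^{1/2}: geodesic from A (t = 0) to B (t = 1)."""
    A_half = herm_fn(A, np.sqrt)
    A_inv_half = herm_fn(A, lambda w: w ** -0.5)
    inner = A_inv_half @ B @ A_inv_half
    inner = 0.5 * (inner + inner.conj().T)  # re-symmetrise against round-off
    return A_half @ herm_fn(inner, lambda w: w ** t) @ A_half


def riemannian_distance(A: np.ndarray, B: np.ndarray) -> float:
    """Affine-invariant distance || log(A^{-1/2} B A^{-1/2}) ||_F."""
    A_inv_half = herm_fn(A, lambda w: w ** -0.5)
    inner = A_inv_half @ B @ A_inv_half
    eigs = np.linalg.eigvalsh(0.5 * (inner + inner.conj().T))
    return float(np.sqrt(np.sum(np.log(eigs) ** 2)))


A = np.array([[2.0, 0.5], [0.5, 1.0]])
B = np.array([[1.0, -0.3], [-0.3, 3.0]])
d = riemannian_distance(A, B)
for t in (0.25, 0.5, 0.75):
    G = weighted_geometric_mean(A, B, t)
    # Constant-speed geodesic: distances to the endpoints are t*d and (1 - t)*d.
    print(t, riemannian_distance(A, G) / d, riemannian_distance(G, B) / d)
```

At t = 1/2 this is the ordinary geometric mean A # B, the geodesic midpoint.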

Methods

Proof of Lemma 5

This follows by observing that (9) is a matrix version of the quadratic equation and by applying an argument analogous to the familiar technique of completing the square. Consider that

Then

and so (9) is equivalent to

where