Abstract

Quantum annealing (QA) has the potential to significantly improve solution quality and reduce time complexity in solving combinatorial optimization problems compared to classical optimization methods. However, due to the limited number of qubits and their connectivity, the QA hardware did not show such an advantage over classical methods in past benchmarking studies. Recent advancements in QA with more than 5000 qubits, enhanced qubit connectivity, and the hybrid architecture promise to realize the quantum advantage. Here, we use a quantum annealer with state-of-the-art techniques and benchmark its performance against classical solvers. To compare their performance, we solve over 50 optimization problem instances represented by large and dense Hamiltonian matrices using quantum and classical solvers. The results demonstrate that a state-of-the-art quantum solver has higher accuracy (~0.013%) and a significantly faster problem-solving time (~6561×) than the best classical solver. Our results highlight the advantages of leveraging QA over classical counterparts, particularly in hybrid configurations, for achieving high accuracy and substantially reduced problem solving time in large-scale real-world optimization problems.


Introduction

Quantum computers mark a paradigm shift to tackle challenging tasks that classical computers cannot solve in a practical timescale1,2. The quantum annealer is a special quantum computer designed to solve combinatorial optimization problems with problem-size-independent time complexity3,4,5. This unique quantum annealing (QA) capability is based on the so-called adiabatic process6,7. During this process, entangled qubits naturally evolve into the ground state of a given Hamiltonian to find the optimal vector of binary decisions for the corresponding quadratic unconstrained binary optimization (QUBO) problem8,9,10. The adiabatic theorem of quantum mechanics ensures that QA identifies the optimal solution regardless of the size and landscape of the combinatorial parametric space, highlighting QA as a powerful and practical solver11,12,13,14. The ability to efficiently explore high-dimensional combinatorial spaces makes QA capable of handling a wide range of optimization tasks4,5,10,15,16.

The potential merit of QA motivates a systematic comparison with classical counterparts (e.g., simulated annealing, integer programming, steepest descent method, tabu search, and parallel tempering with isoenergetic cluster moves), focusing on the solution quality and the time complexity. While previous benchmarking studies showed some advantages of QA, most used low-dimensional or sparse QUBO matrices due to the lack of available qubits in the QA hardware and the poor topology connecting the qubits17,18,19. For example, O’Malley et al.17 compared the performance of QA with classical methods (mathematical programming), but they limited the number of binary variables to 35 due to the QA hardware limitation. Similarly, Tasseff et al.18 highlighted the potential advantages of QA compared to classical methods (such as simulated annealing, integer programming, and Markov chain Monte Carlo) for sparse optimization problems containing up to 5000 decision variables and 40,000 quadratic terms. Haba et al.19 demonstrated that a classical solver (integer programming) could be faster than QA for small problems, e.g., ~100 decision variables. Consequently, these benchmarking studies show that QA methods and their classical counterparts can exhibit similar solution quality and time complexity. However, the low-dimensional or sparse QUBOs considered in the previous benchmarking studies are challenging to map to a wide range of practical problems, which usually require high-dimensional and dense QUBO matrices4,5,10,20. For example, in our previous QA optimization of one-dimensional and two-dimensional optical metamaterials, the QUBO matrices exhibit these properties (Fig. S1)4,5,16,20.

The state-of-the-art QA hardware (D-Wave Advantage System) features more than 5000 qubits, advanced topology to connect qubits, and efficient hybrid algorithms (e.g., Leap Hybrid sampler). For example, the recent development (e.g., Pegasus topology) has increased qubit connectivity from 6 to 15 (refs. 21,22,23). Improved qubit connectivity reduces the need for complex embedding processes, which map problem variables to physical qubits on the hardware. With better connectivity, such as in D-Wave’s Pegasus topology, the embedding process becomes more efficient and can better preserve the structure of dense optimization problems. This enhancement increases the potential of the quantum annealer to find high-quality solutions24,25. In addition, a QUBO decomposition algorithm (i.e., QBSolv) splits a large QUBO matrix into small sub-QUBO matrices, allowing us to handle a QUBO matrix with dimensions higher than the maximum number of qubits in the QA hardware26,27. Given these advancements, it is imperative to study the performance of the state-of-the-art QA system for high-dimensional and dense QUBO matrices, and to systematically compare its solution quality and time complexity with those of the classical counterparts.

In this work, we benchmark the performance of quantum solvers against classical algorithms in solving QUBO problems with large and dense configurations to represent real-world optimization problems. We analyze the solution quality and the required time to solve these benchmark problems using several quantum and classical solvers. This benchmarking study provides important insights into employing QA in practical problem-solving scenarios.

Results

We present a benchmarking study on combinatorial optimization problems representing real-world scenarios, e.g., materials design, characterized by dense and large QUBO matrices (Fig. S1). These problems are non-convex and exhibit a highly complex energy landscape, making it challenging and time-consuming to identify accurate solutions. Classical solvers, such as integer programming (IP), simulated annealing (SA), steepest descent (SD), tabu search (TS), and parallel tempering with isoenergetic cluster moves (PT-ICM), perform well for small-scale problems. However, they are often relatively inaccurate for larger problems (problem size ≥ 1000; Fig. 1a). In particular, SD and TS show low relative accuracy compared to other solvers. The combination of PT and ICM leverages the strengths of both techniques: PT facilitates crossing energy barriers, while ICM ensures exploration of the solution space, effectively covering broad and diverse regions. This makes PT-ICM particularly effective for exploring complex optimization spaces and enhancing convergence toward the global optimum28,29. However, the performance of PT-ICM can be problem-dependent30. While it can work well for sparse problems, its effectiveness decreases for denser problems28. Consequently, although SA and PT-ICM perform better than SD and TS, they also fail to find high-quality solutions for large-scale problems.

To address these limitations, QUBO decomposition strategies can be employed to improve the relative accuracy. For example, integrating QUBO decomposition with classical solvers (e.g., SA–QBSolv and PT–ICM–QBSolv) improves their performance. Nonetheless, these approaches often remain insufficient for handling massive problems effectively, particularly considering problem-solving time (Fig. 1b), which will be further discussed in the following. On the other hand, quantum solvers provide excellent performance for solving these dense and large-scale problems representing real-world optimization scenarios. Although QA can perform excellently for small problems, it has difficulty solving large and dense QUBOs due to the limited number of qubits (5000+) and connectivity (15). Several prior studies reported that QA may not be efficient since it cannot effectively handle dense and large QUBOs due to hardware limitations23,31,32. However, when it runs with the QUBO decomposition strategy (i.e., QA–QBSolv), large-scale problems (n ≥ 100) can be effectively handled. Furthermore, hybrid QA (HQA), which integrates quantum and classical approaches, can also solve large-scale problems efficiently. As a result, the quantum solvers consistently identify high-quality solutions across all problem sizes (Fig. 1a).

Computational time is also a critical metric for evaluating solver performance. Classical solvers exhibit rapidly increasing solving times as problem sizes grow, making them impractical for large-scale combinatorial optimization problems (Fig. 1b). While SD and TS are faster than other classical solvers, their relative accuracies are low, as can be seen in Fig. 1a. It is worth noting that the SA and PT-ICM solvers struggle to handle problems with more than 3000 variables due to excessively long solving time or computational constraints (e.g., memory limits). Although the IP solver is faster than SA and PT-ICM, its solving time increases greatly with problem size. The QUBO decomposition strategy significantly reduces computational time, yet quantum solvers remain faster than their classical counterparts across all problem sizes. For instance, for a problem size of 5000, the solving time for HQA is 0.0854 s and for QA–QBSolv is 74.59 s, compared to 167.4 s and 195.1 s for SA–QBSolv and PT–ICM–QBSolv, respectively, highlighting the superior efficiency of the quantum solvers.

To further evaluate scalability, we conduct a systematic benchmarking study on QUBO problems (size: up to 10,000 variables), designed to mimic real-world scenarios through randomly generated elements. PT-ICM is excluded from this analysis due to excessive solving times compared to other solvers (Fig. 1b). As shown in Fig. 2, classical solvers (IP, SA, SD, and TS) are accurate for smaller problems but become inaccurate as the problem size increases. Consistent with the results in Fig. 1, the SD and TS solvers exhibit low relative accuracy even for a relatively small problem (e.g., 2000). IP and SA are more accurate than SD and TS but fail to identify the optimal state for large problems. It is known that IP can provide global optimality guarantees33, but our study highlights that proving a solution is globally optimal is challenging for large and dense problems. For example, in one case (n = 7000), the optimality gap remains as large as ~17.73%, where the best bound is −19,660 while the solution obtained from the IP solver is −16,700, with the optimality gap not narrowing even after 2 h of runtime. The relative accuracy can be improved by employing the QUBO decomposition strategy (e.g., SA–QBSolv), yet it still fails to identify high-quality solutions for problem sizes exceeding 4000. In contrast, quantum solvers demonstrate superior accuracy for large-scale problems. Notably, the HQA solver consistently outperforms all other methods, reliably identifying the best solution regardless of problem size (Fig. 2).

Figure 3a shows that the solving time rapidly increases as the problem size increases for the classical solvers, indicating that solving combinatorial optimization problems with classical solvers can become intractable for large-size problems (Fig. 3b). The solving time trends with increasing problem size agree well with the theoretical time complexities of the classical solvers (Figs. 3b and S3; see the Computational time section in Methods). While the IP solver can be faster than other classical solvers, it also requires significant time for large problems (e.g., n > 5000). The use of the QUBO decomposition strategy dramatically reduces the solving time, but the quantum solvers consistently outpace classical counterparts (Fig. 3a). For example, the solving time (n = 10,000) is 0.0855 s for HQA, 101 s for QA–QBSolv, and 561 s for SA–QBSolv.

Decomposing a large QUBO into smaller pieces leads to a higher relative accuracy, as a solver can find a better solution for each decomposed QUBO, mitigating the current hardware limitations. Note that the accuracy of QA for QUBOs with problem sizes of 30 and 100 is, respectively, 1.0 and 0.9956 (without leveraging the QUBO decomposition method). Hence, the accuracy of QA–QBSolv with a sub-QUBO size of 30 is higher than that with a sub-QUBO size of 100, as decomposed QUBOs with a smaller size fit the QA hardware better (Fig. 4a). However, a smaller sub-QUBO size results in a greater number of sub-QUBOs after decomposition, leading to increased time required to solve all decomposed problems (Fig. 4b). It is noted that the QA–QBSolv solver does not guarantee finding the best solution for large problems (size > 4000), resulting in lower accuracies regardless of sub-QUBO sizes, as can be seen in Fig. 2 and Fig. 4a.

Our results show that HQA, which incorporates QA with classical algorithms to overcome the current quantum hardware limitations, is currently the most efficient solver for complex real-world problems that require the formulation of dense and large QUBOs. This quantum-enhanced solver (i.e., HQA) achieves high accuracy and significantly faster problem-solving time compared to the classical solvers for large-scale optimization problems. Our findings suggest that leveraging quantum resources, particularly in hybrid configurations, can provide a computational advantage over classical approaches. Besides, as the current state of HQA demonstrates, we expect QA will have much higher accuracy and require much shorter time to solve QUBO problems with the development of the quantum hardware with more qubits and better qubit connectivity.

Discussion

This work comprehensively compares state-of-the-art QA hardware and software against several classical optimization solvers for large and dense QUBO problems (up to 10,000 variables, fully connected interactions). The classical solvers struggled to solve large-scale problems, but their performance can be improved when combined with the QUBO decomposition method (i.e., QBSolv). Nevertheless, they become inaccurate and inefficient with increasing problem size, indicating that classical methods can face challenges for complex real-world problems represented by large and dense QUBO matrices. In contrast, HQA performs significantly better than its classical counterparts, exhibiting the highest accuracy (~0.013% improvement) and shortest time to obtain solutions (~6561× acceleration) for 10,000-dimensional QUBO problems. Pure QA and QA with the QUBO decomposition method still exhibit limitations in solving large problems due to the current QA hardware limitations (e.g., number of qubits and qubit connectivity). However, we anticipate that QA will eventually reach the efficiency of HQA with the ongoing development of the quantum hardware. Thus, we expect QA to demonstrate true “Quantum Advantage” in the future.

Methods

Definition of a QUBO

QA hardware is designed to efficiently solve combinatorial optimization problems that are formulated with a QUBO matrix, which can be given by refs. 34,35:

\[{\bf{Q}}=\left[\begin{array}{ccc}{Q}_{1,1} & \cdots & {Q}_{1,n}\\ \vdots & \ddots & \vdots \\ {Q}_{n,1} & \cdots & {Q}_{n,n}\end{array}\right] \tag{1}\]

where \({Q}_{i,j}\) is the real-valued element in the i-th row and j-th column of the QUBO matrix \({\bf{Q}}\), an \(n\times n\) Hermitian matrix with \({\bf{Q}}\in {{\mathbb{R}}}^{n\times n}\), and \({x}_{i}\) is the i-th element of a binary vector \({\boldsymbol{x}}\) of length \(n\), i.e., \({\boldsymbol{x}}\in {\{0,1\}}^{n}\). \({Q}_{i,j}\) is often referred to as a linear coefficient for i = j and a quadratic interaction coefficient for i ≠ j. The objective of QA is to identify the optimal binary vector of a given QUBO, which minimizes the scalar output y as35:

\[y={{\boldsymbol{x}}}^{T}{\bf{Q}}{\boldsymbol{x}}=\mathop{\sum }\limits_{i=1}^{n}\mathop{\sum }\limits_{j=1}^{n}{Q}_{i,j}{x}_{i}{x}_{j} \tag{2}\]

In optimization problems, the linear coefficients correspond to cost or benefit terms associated with individual variables, while the quadratic coefficients represent interaction terms or dependencies between pairs of variables. These coefficients can be learned using machine learning models, such as the factorization machine (FM), trained on datasets containing input structures and their corresponding performance metrics. By mapping these learned coefficients into a QUBO formulation, we effectively represent an energy function of a material system or other real-world optimization problem. This QUBO then describes the optimization space, enabling the identification of the optimal state with the best performance36,37.
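As a minimal illustration of this objective, the sketch below evaluates \(y={{\boldsymbol{x}}}^{T}{\bf{Q}}{\boldsymbol{x}}\) for a binary vector and finds the minimizer by exhaustive enumeration. The function names and the 3-variable matrix are hypothetical and chosen only for illustration; they are not taken from the benchmark instances, and enumeration is only feasible for very small \(n\).

```python
from itertools import product


def qubo_energy(Q, x):
    """Return y = x^T Q x for a square matrix Q (list of lists) and a 0/1 vector x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force(Q):
    """Enumerate all 2^n binary vectors and return the one with the lowest energy."""
    best_x, best_y = None, float("inf")
    for bits in product((0, 1), repeat=len(Q)):
        y = qubo_energy(Q, bits)
        if y < best_y:
            best_x, best_y = list(bits), y
    return best_x, best_y


# Hypothetical upper-triangular QUBO: diagonal = linear terms, off-diagonal = interactions.
Q = [[-1.0, 2.0, 0.0],
     [0.0, -1.0, 2.0],
     [0.0, 0.0, -1.0]]

x_opt, y_opt = brute_force(Q)
print(x_opt, y_opt)  # [1, 0, 1] -2.0
```

In this toy instance the positive interaction terms penalize selecting neighboring variables together, so the minimizer selects the first and third variables only.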

Methods to solve a QUBO

Various methods have been proposed to solve QUBO problems. For our benchmarking study, we consider seven representative methods: QA, hybrid QA (HQA), integer programming (IP), simulated annealing (SA), steepest descent (SD), tabu search (TS), and parallel tempering with isoenergetic cluster moves (PT-ICM). Below, we provide a brief introduction to each of the solvers used in solving combinatorial optimization problems:

Quantum annealing and hybrid quantum annealing

QA starts with a superposition state for all qubits, which is the lowest energy state of the initial Hamiltonian (\({H}_{0}\)). In the annealing process, the system evolves toward the lowest energy state of the final Hamiltonian (also called a problem Hamiltonian, \({H}_{p}\)) by minimizing the influence of the initial Hamiltonian. The measured state at the end of the annealing is supposed to be the ground state of \({H}_{p}\), which can be expressed as the following equation (refs. 38,39):

\[H\left(t\right)=A\left(t/{t}_{a}\right){H}_{0}+B\left(t/{t}_{a}\right){H}_{p} \tag{3}\]

Here, \(t\) is the elapsed annealing time, and \({t}_{a}\) is the total annealing time. Equation (3) evolves from \(A\left(t/{t}_{a}\right)=1\), \(B\left(t/{t}_{a}\right)\approx 0\) at the beginning of the annealing \((t/{t}_{a}=0)\) to \(A\left(t/{t}_{a}\right)\approx 0\), \(B\left(t/{t}_{a}\right)=1\) at the end of the annealing \((t/{t}_{a}=1)\). Sufficiently slow evolution from \({H}_{0}\) to \({H}_{p}\) enables the quantum system to stay at the ground state, which leads to the identification of the optimal solution of a given combinatorial optimization problem3,40. We use D-Wave Systems’ quantum annealer (Advantage 4.1) to solve the problems using QA, and we set the number of reads for QA to 1000 with a total annealing time of 20 μs. We select the best solution corresponding to the lowest energy state found among 1000 reads.

The D-Wave Ocean software development kit (SDK, ver. 3.3.0) provides many useful libraries, which include quantum and classical samplers such as QA, HQA, SA, SD, and TS. They allow us to solve QUBO problems22,41,42. We employ these samplers, which are implemented in the D-Wave Ocean SDK, for the benchmarking study. Classical or QA solvers often benefit from decomposition algorithms to identify a high-quality solution (i.e., an optimal solution or a good solution close to the global optimum) for large QUBO problems. Hence, the decomposition of a QUBO matrix into sub-QUBOs is very useful when the size of the QUBO matrix is larger than the physical volume of a sampler (i.e., QUBO size > physical number of qubits in QA or memory capacity of a classical computer). We employ the QBSolv package implemented in the D-Wave Ocean SDK for QUBO decomposition. QBSolv splits a QUBO matrix into smaller QUBO matrices, and each of them is sequentially solved by classical or QA solvers. This algorithm enables us to handle a wide range of complex real-world problems21,22,43. The size of the decomposed QUBOs is set to 30 unless otherwise specified. HQA (Leap Hybrid solver), developed by D-Wave Systems, also decomposes a large QUBO into smaller subproblems well-suited for QA’s QPU, and then aggregates the results27,44. The detailed algorithm of HQA, however, is not publicly released. We utilize a D-Wave sampler (dwave-system 1.4.0) for SA, SD, and TS with a specified number of reads (1000) and default settings for other parameters. Furthermore, we employ the D-Wave hybrid framework for PT-ICM.
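The idea behind QUBO decomposition can be sketched as follows. This is a simplified illustration, not the QBSolv algorithm itself: it assumes consecutive blocks of variables, clamps all variables outside the current block to their present values, and solves each small sub-QUBO exactly by enumeration (where QBSolv would select subproblems differently and dispatch them to a classical or QA sampler). All function names and the 4-variable matrix are hypothetical.

```python
from itertools import product


def qubo_energy(Q, x):
    """Return y = x^T Q x for a square matrix Q and a 0/1 vector x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def sub_qubo(Q, x, subset):
    """Build the QUBO over `subset`, with every other variable clamped to its value in x."""
    inside = set(subset)
    outside = [k for k in range(len(x)) if k not in inside]
    sub = [[Q[a][b] for b in subset] for a in subset]
    for pos, a in enumerate(subset):
        # Couplings to clamped variables become extra linear (diagonal) terms.
        sub[pos][pos] += sum((Q[a][k] + Q[k][a]) * x[k] for k in outside)
    return sub


def solve_exact(Q):
    """Stand-in for a sub-solver: exhaustive search, feasible only for small sub-QUBOs."""
    return list(min(product((0, 1), repeat=len(Q)), key=lambda b: qubo_energy(Q, b)))


def decompose_and_solve(Q, x, sub_size):
    """One pass over consecutive blocks; each block is re-optimized with the rest fixed."""
    x = list(x)
    for start in range(0, len(x), sub_size):
        subset = list(range(start, min(start + sub_size, len(x))))
        solution = solve_exact(sub_qubo(Q, x, subset))
        for pos, a in enumerate(subset):
            x[a] = solution[pos]
    return x


# Hypothetical 4-variable instance, decomposed into sub-QUBOs of size 2.
Q = [[-1.0, 2.0, -3.0, 0.0],
     [0.0, 1.0, 0.0, -2.0],
     [0.0, 0.0, -1.0, 2.0],
     [0.0, 0.0, 0.0, 1.0]]
x_start = [0, 0, 0, 0]
x_new = decompose_and_solve(Q, x_start, sub_size=2)
```

Because each block is minimized exactly with the remaining variables held fixed, the total energy cannot increase from one block update to the next; it is, however, not guaranteed to reach the global optimum in a single pass.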

Integer programming

IP uses branch-and-bound, cutting planes, and other methods to search the solution space for optimal integer decisions and prove global optimality within a tolerance (gap). We use Gurobi (version 10.0.2)45 for benchmarking with the default settings (0.1% global optimality gap) plus a two-hour time limit and 240 GB software memory limit per optimization problem. The benchmark QUBO problem is implemented in the Pyomo modeling environment (version 6.6.2)33. We also experimented with a large gap and observed the first identified integer solution often had a poor objective function value. These results are not further reported for brevity.

Simulated annealing

SA, which is inspired by the annealing process in metallurgy, is a probabilistic optimization algorithm designed to approximate a global optimum of a given objective function. It is considered a metaheuristic method, which can be applied to a wide range of optimization problems46,47. In SA, temperature and cooling schedule are major factors that determine how extensively the algorithm explores the solution space48. This algorithm often identifies near-optimal solutions but cannot guarantee that local or global optimality conditions are satisfied. For SA, the hyperparameters are configured as follows: 1000 reads, 1000 sweeps, a “random” initial state generation, and a “geometric” temperature schedule.
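A minimal sketch of SA for a QUBO is given below, assuming single-bit-flip moves, Metropolis acceptance, a random initial state, and a geometric temperature schedule. The start and end temperatures (`t_hot`, `t_cold`), the reduced numbers of reads and sweeps, and all function names are assumptions made for illustration and do not reproduce the defaults of the D-Wave sampler used in this study.

```python
import math
import random


def qubo_energy(Q, x):
    """Return y = x^T Q x for a square matrix Q and a 0/1 vector x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def flip_delta(Q, x, i):
    """Energy change caused by flipping bit i, computed in O(n)."""
    coupling = sum((Q[i][j] + Q[j][i]) * x[j] for j in range(len(x)) if j != i)
    return (1 - 2 * x[i]) * (Q[i][i] + coupling)


def simulated_annealing(Q, num_reads=10, num_sweeps=100, t_hot=10.0, t_cold=0.01, seed=0):
    rng = random.Random(seed)
    n = len(Q)
    # Geometric schedule: the temperature is multiplied by a fixed ratio after each sweep.
    ratio = (t_cold / t_hot) ** (1.0 / max(num_sweeps - 1, 1))
    best_x, best_y = None, float("inf")
    for _ in range(num_reads):
        x = [rng.randint(0, 1) for _ in range(n)]  # random initial state
        y = qubo_energy(Q, x)
        if y < best_y:
            best_x, best_y = list(x), y
        t = t_hot
        for _ in range(num_sweeps):
            for i in range(n):  # one sweep = one flip attempt per variable
                d = flip_delta(Q, x, i)
                if d <= 0 or rng.random() < math.exp(-d / t):
                    x[i] = 1 - x[i]
                    y += d
                    if y < best_y:
                        best_x, best_y = list(x), y
            t *= ratio
    return best_x, best_y


# Hypothetical 3-variable QUBO used only to exercise the sketch.
Q = [[-1.0, 2.0, 0.0],
     [0.0, -1.0, 2.0],
     [0.0, 0.0, -1.0]]
x_sa, y_sa = simulated_annealing(Q)
```

The lowest-energy state seen over all reads is returned, mirroring the selection of the best solution among reads; as noted above, nothing in the procedure certifies that this state is optimal.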

Steepest descent

SD operates by employing variable flips to reduce the energy of a given QUBO through local minimization computations rather than relying on a calculated gradient in a traditional gradient descent algorithm49. This algorithm is computationally inexpensive and beneficial for local refinement; thus, it can be used to search for local optima. In our benchmarking study, SD utilizes hyperparameters set to 1000 reads and a “random” strategy for initial state generation.
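The flip-based descent described above can be sketched as follows, assuming that at each step the single bit flip with the largest energy decrease is applied and that the search stops when no flip lowers the energy. The function names and the example matrix are hypothetical.

```python
def flip_delta(Q, x, i):
    """Energy change caused by flipping bit i of x in the QUBO y = x^T Q x."""
    coupling = sum((Q[i][j] + Q[j][i]) * x[j] for j in range(len(x)) if j != i)
    return (1 - 2 * x[i]) * (Q[i][i] + coupling)


def steepest_descent(Q, x):
    """Repeatedly apply the most energy-reducing flip until a local minimum is reached."""
    x = list(x)
    n = len(x)
    while True:
        deltas = [flip_delta(Q, x, i) for i in range(n)]
        i_best = min(range(n), key=deltas.__getitem__)
        if deltas[i_best] >= 0:
            return x  # no single flip lowers the energy
        x[i_best] = 1 - x[i_best]


# Hypothetical 3-variable QUBO and starting point.
Q = [[-1.0, 2.0, 0.0],
     [0.0, -1.0, 2.0],
     [0.0, 0.0, -1.0]]
x_local = steepest_descent(Q, [1, 1, 1])
print(x_local)  # [1, 0, 1]
```

The loop terminates because every accepted flip strictly lowers the energy and the number of binary states is finite. The returned state is only guaranteed to be a local minimum with respect to single flips, which is consistent with the low relative accuracy of SD on large problems.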

Tabu search

TS is designed to solve combinatorial and discrete optimization problems by using memory to guide the search for better solutions, as introduced by Glover50. This algorithm can escape already visited local minima by recording recent moves in a so-called “Tabu List” and forbidding them for a number of iterations, aiming to identify high-quality solutions in a large solution space. This algorithm works well for combinatorial optimization problems with small search spaces. However, evaluating neighboring solutions and maintaining and updating the Tabu List become increasingly costly as the problem size grows. The hyperparameter settings for TS are as follows: 1000 reads, a timeout of 100 ms, and “random” initial state generation.
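A minimal sketch of the memory mechanism is shown below. It assumes single-bit-flip moves, a fixed tabu tenure, and a simple aspiration rule (a tabu move is allowed if it yields a new best energy); these choices, the iteration count, and the function names are illustrative assumptions and do not describe the D-Wave TS implementation.

```python
def qubo_energy(Q, x):
    """Return y = x^T Q x for a square matrix Q and a 0/1 vector x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def flip_delta(Q, x, i):
    """Energy change caused by flipping bit i."""
    coupling = sum((Q[i][j] + Q[j][i]) * x[j] for j in range(len(x)) if j != i)
    return (1 - 2 * x[i]) * (Q[i][i] + coupling)


def tabu_search(Q, x, num_iters=50, tenure=2):
    x = list(x)
    n = len(x)
    y = qubo_energy(Q, x)
    best_x, best_y = list(x), y
    tabu_until = [0] * n  # first iteration at which bit i may be flipped again
    for it in range(num_iters):
        candidates = []
        for i in range(n):
            d = flip_delta(Q, x, i)
            # Aspiration: a tabu move is still allowed if it gives a new best energy.
            if tabu_until[i] <= it or y + d < best_y:
                candidates.append((d, i))
        if not candidates:
            continue
        d, i = min(candidates)  # best admissible move, even if it goes uphill
        x[i] = 1 - x[i]
        y += d
        tabu_until[i] = it + 1 + tenure
        if y < best_y:
            best_x, best_y = list(x), y
    return best_x, best_y


# Hypothetical 3-variable QUBO and starting point.
Q = [[-1.0, 2.0, 0.0],
     [0.0, -1.0, 2.0],
     [0.0, 0.0, -1.0]]
x_ts, y_ts = tabu_search(Q, [1, 1, 1])
```

Unlike steepest descent, the search keeps moving after reaching a local minimum, because the best admissible move is taken even when it raises the energy, and the tabu list prevents it from being undone immediately.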

Parallel tempering with isoenergetic cluster moves (PT-ICM)

PT-ICM is an advanced Monte Carlo method designed to navigate optimization space, such as QUBO problems28,29,30. PT operates by maintaining multiple replicas of the system at different temperatures and allowing exchanges between replicas based on a Metropolis criterion. This approach helps lower-temperature replicas escape local minima with the aid of higher-temperature replicas. ICM identifies clusters of variables that can flip without changing the system’s energy28. In this study, the hyperparameters for PT-ICM are set as follows: the number of sweeps is 1000, the number of replicas is 10, and the number of iterations is 10.
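The replica-exchange step of PT can be illustrated with the standard Metropolis swap criterion, \(p=\min \{1,\exp [(1/{T}_{1}-1/{T}_{2})({E}_{1}-{E}_{2})]\}\). The sketch below covers only this acceptance rule, with temperatures in units where the Boltzmann constant is 1; the isoenergetic cluster moves are not implemented, and the function name is hypothetical.

```python
import math


def swap_probability(e_low, e_high, t_low, t_high):
    """Metropolis probability of exchanging the configurations of two replicas.

    e_low / e_high are the current energies of the replicas held at the lower
    and higher temperature, respectively.
    """
    exponent = (1.0 / t_low - 1.0 / t_high) * (e_low - e_high)
    return 1.0 if exponent >= 0 else math.exp(exponent)


# The cold replica is stuck at a higher energy than the hot one: always swap.
p_always = swap_probability(e_low=-1.0, e_high=-3.0, t_low=0.5, t_high=2.0)

# The cold replica already holds the lower energy: swap only occasionally.
p_rare = swap_probability(e_low=-3.0, e_high=-1.0, t_low=0.5, t_high=2.0)
```

A swap is always accepted when the hotter replica has found a lower-energy state, which is how low-temperature replicas are helped out of local minima.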

Benchmarking problems

Real-world problems

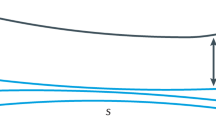

Material optimization is selected to represent real-world problems, with the design of planar multilayers (PMLs) optical film as a testbed for benchmarking. PMLs can be seen in many applications. For example, they have been explored for transparent radiative cooling windows to address global warming by emitting thermal radiation through the atmospheric window (8 μm < λ < 13 μm)4, while transmitting visible photons. PMLs consist of layers with one of four dielectric materials: silicon dioxide, silicon nitride, aluminum oxide, and titanium dioxide. The configuration of these layers can be expressed as a binary vector, where each layer is assigned a two-digit binary label. Optical characteristics and corresponding figure-of-merit (FOM) of the PML can be calculated by solving Maxwell’s equations using the transfer matrix method (TMM). To formulate QUBOs, layer configurations (input binary vectors) and their FOMs (outputs) are used to train the FM model. FM learns the linear and quadratic coefficients, effectively modeling the optimization landscape of the material system. QUBO matrices are then generated using these coefficients36,37. PML configurations are randomly generated for training datasets, and their FOMs are calculated using TMM. The resulting QUBO matrices represent real-world materials optimization problems, characterized by highly dense (fully connected) configurations (Fig. S1), which are used for the benchmarking study in Fig. 1.

Benchmarking problems

We formulate QUBO matrices with random elements to further systematically explore scalability (Figs. 2 and 3), following the characteristics of QUBOs from real-world problems, for the benchmarking study as the following:

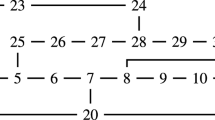

• Problem size: The problem size, corresponding to the length of a binary vector (\(n\)), varies from 120 to 10,000 (120, 200, 500, 1000, 1500, 2000, 2500, 3000, 4000, 5000, 6000, 7000, 8000, 9000 and 10,000).

• Distribution of elements: For each problem size, four QUBO matrices with different distributions of elements are studied. These elements are random numbers with a mean value of 0 and standard deviations of 0.001, 0.01, 0.1, or 1. These distributions reflect the variability observed in QUBO coefficients derived from real-world problems (Table S1). A QUBO configured with elements having a large deviation yields a significant variation in the energy landscape, potentially resulting in high energy barriers that must be overcome to find the ground state.

• Density of matrices: The density of QUBO matrices reflects the proportion of pairwise interactions among variables relative to the maximum possible interactions. Fully connected QUBOs, such as those derived from real-world problems, represent cases where all variables interact with each other. For example, in layered photonic structures, each layer interacts with every other layer, influencing optical responses, which leads to a fully connected QUBO. In contrast, Max-Cut problems typically result in sparse QUBOs, where only a subset of variables (nodes) interact through edges. The maximum number of interaction coefficients (i.e., the number of edges in Max-Cut problems) is \({}_{n}{C}_{2}=n(n-1)/2\), where \(n\) denotes the problem size. The density of a QUBO can be calculated as:

\[{\rm{density}}=\frac{{\rm{number}}\,{\rm{of}}\,{\rm{interaction}}\,{\rm{coefficients}}}{{}_{n}{C}_{2}} \tag{4}\]

For example, a benchmark problem instance (G10) with 800 nodes and 19,176 edges has a density of 6%, calculated as: density = 19,176/319,600 = 0.06. The density of Max-Cut problems can be adjusted by changing the number of edges, with typical instances having densities ranging from 0.02% to 6% (Fig. S1 and Table S2). In contrast, real-world problems feature fully connected configurations, corresponding to a density of 100%. QUBOs for this benchmarking study have dense matrices fully filled with real-number elements in the upper triangular part (i.e., fully connected graph nodes, Fig. S2). This configuration aims to approximate real-world optimization problems, which usually require a dense QUBO matrix4,34.
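The construction of a fully connected random QUBO and the density calculation can be sketched as follows. The sketch assumes normally distributed elements (the text specifies only the mean and standard deviation) stored in the upper triangular part; the function names, the seed, and the small size used here are hypothetical. The density function requires \(n\ge 2\).

```python
import random


def random_dense_qubo(n, sigma, seed=0):
    """Upper-triangular n x n QUBO with zero-mean random elements of standard deviation sigma."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, sigma) if j >= i else 0.0 for j in range(n)]
            for i in range(n)]


def qubo_density(Q):
    """Fraction of variable pairs (i < j) that carry a nonzero interaction coefficient."""
    n = len(Q)
    max_pairs = n * (n - 1) // 2  # nC2
    nonzero = sum(1 for i in range(n) for j in range(i + 1, n)
                  if Q[i][j] != 0.0 or Q[j][i] != 0.0)
    return nonzero / max_pairs


Q = random_dense_qubo(n=6, sigma=0.1)
dense_density = qubo_density(Q)  # fully connected, so the density is 1.0

# Max-Cut instance G10 quoted in the text: 800 nodes and 19,176 edges.
g10_density = 19176 / (800 * 799 // 2)
print(round(g10_density, 2))  # 0.06
```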

Performance metrics: relative accuracy and computational time

Relative accuracy

For small-scale problems, brute-force search guarantees the identification of the global optimum by evaluating all possible solutions. However, this approach becomes infeasible for large-scale problems due to the exponential growth of the search space. An IP solver, such as Gurobi, utilizes the branch-and-bound method to efficiently explore the solution space and prove global optimality within an optimality gap. However, due to computational limitations or time constraints, IP may struggle to find the global optimum for large-scale problems. To address this challenge in our benchmarking study, we employ a “Relative Accuracy” metric to compare the relative performance of different solvers. Relative accuracy is defined as the ratio of a solver’s objective value to the best objective found across all solvers:

\[{\rm{Relative}}\,{\rm{accuracy}}=\frac{{y}_{{\rm{solver}}}}{{y}_{{\rm{best}}}} \tag{5}\]

This metric provides a way to evaluate the solution quality when the global optimum cannot be definitively found or proven for large-scale problem instances. Note that the best solution is the lowest value among the solutions obtained from all solvers since the solvers are designed to find the lowest energy state (generally negative values for the QUBOs used in this study). The relative accuracies of the solvers are plotted as a function of problem sizes. In Fig. 1, the relative accuracy represents the average value calculated from three different QUBOs that represent material optimization, and in Fig. 2, it represents the average from four different QUBOs with varying standard deviations for each problem size (ranging from 120 to 10,000). Error bars on the plot represent the standard deviation of accuracies calculated from the four different QUBOs for each problem size, relative to the average values. By definition, the relative accuracy is 1.0 when the solver finds a solution with the best-known objective function value (Eq. 5).
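The metric can be computed as in the following sketch, which assumes that the best objective value is negative (as is generally the case for the QUBOs in this study) so that the ratio lies at or below 1. The solver labels reuse the abbreviations from the text, but the objective values are invented purely for illustration and are not results from this work.

```python
def relative_accuracy(objectives):
    """Map each solver name to (its objective) / (lowest objective among all solvers)."""
    best = min(objectives.values())  # lowest energy = best solution
    return {name: value / best for name, value in objectives.items()}


# Hypothetical objective values for one problem instance (not measured data).
objectives = {"HQA": -200.0, "SA": -190.0, "SD": -150.0}
accuracy = relative_accuracy(objectives)
print(accuracy["HQA"])  # 1.0
```

A solver that attains the best-known objective receives a relative accuracy of exactly 1.0, and solvers with higher (less negative) energies receive proportionally smaller values.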

Computational time

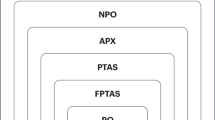

Computational time is another important factor in determining the solvers’ performance. Combinatorial optimization problems, including QUBO problems, are NP-hard51, so increasing problem sizes can lead to an explosion of the search space, posing challenges in optimization processes. We measure the computational time dedicated solely to solving given problems, excluding problem reading time, queue time, and communication time between the local computer and the quantum annealer. This is consistent with other benchmarking studies17,18. For problems solved on D-Wave Systems’ QPU for QA, the execution time includes programming and sampling times (anneal, readout, and delay time). QPU access time is calculated for all of them after programmed anneal-read cycles, corresponding to the time charged to users in their allocations, which is used as the computational time for QA and HQA. Classical solvers (SA, SD, TS, and PT-ICM) run on a workstation (AMD Ryzen Threadripper PRO 3975WX @ 3.5 GHz processor with 32 cores and 32 GB of RAM), and IP (Gurobi) runs on a cluster node (an Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50 GHz processor with 24 cores and 256 GB of RAM). Problem reading time can be significant when the problem size is large, but, as for the quantum solvers, it is excluded from the computational time of the classical solvers. In Figs. 1b and 3, the solution time for classical and quantum solvers is presented as a function of problem size. Evaluating the energy of a given solution has a computational cost of \(O({n}^{2})\), where \(n\) (= problem size) is the number of variables. The number of reads or sweeps does not scale with \(n\), but the cost for each sweep scales as \(O(n)\) for SA. Consequently, the theoretical time complexities of the classical solvers are known as \(O\left({n}^{3}\right)\) for SA52, \(O({n}^{2})\) for SD53, and \(O({n}^{2})\) for TS54. On the other hand, the theoretical time complexity of the quantum solvers can be considered constant.
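One way to compare measured solving times with a power-law complexity \(O({n}^{k})\) is to fit the slope of \(\log t\) versus \(\log n\). The sketch below is an illustrative procedure, not necessarily the one used for Figs. 3b and S3; the function name is hypothetical and the timing values are synthetic, generated from an exact cubic law rather than measured.

```python
import math


def fit_scaling_exponent(sizes, times):
    """Least-squares slope of log(time) versus log(size), i.e., k in time ~ n^k."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in times]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    den = sum((a - mean_x) ** 2 for a in xs)
    return num / den


sizes = [1000, 2000, 4000, 8000]
synthetic_times = [2e-9 * n ** 3 for n in sizes]  # made-up data following t = c * n^3
exponent = fit_scaling_exponent(sizes, synthetic_times)
```

For the synthetic cubic data the fitted exponent is 3 up to floating-point error; a fitted exponent near zero would correspond to the size-independent behavior described for the quantum solvers.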

Data availability

All data generated and analyzed during the study are available from the corresponding author upon reasonable request.

Code availability

The codes used for generating and analyzing data are available from the corresponding author upon reasonable request.

References

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Daley, A. J. et al. Practical quantum advantage in quantum simulation. Nature 607, 667–676 (2022).

Johnson, M. W. et al. Quantum annealing with manufactured spins. Nature 473, 194–198 (2011).

Kim, S. et al. High-performance transparent radiative cooler designed by quantum computing. ACS Energy Lett. 7, 4134–4141 (2022).

Kim, S., Jung, S., Bobbitt, A., Lee, E. & Luo, T. Wide-angle spectral filter for energy-saving windows designed by quantum annealing-enhanced active learning. Cell Rep. Phys. Sci. 5, 101847 (2024).

Li, R. Y., Di Felice, R., Rohs, R. & Lidar, D. A. Quantum annealing versus classical machine learning applied to a simplified computational biology problem. NPJ Quantum Inf. 4, 14 (2018).

Vinci, W., Albash, T. & Lidar, D. A. Nested quantum annealing correction. NPJ Quantum Inf. 2, 16017 (2016).

Santoro, G. E. & Tosatti, E. Optimization using quantum mechanics: quantum annealing through adiabatic evolution. J. Phys. A Math. Gen. 39, R393–R431 (2006).

Mandrà, S., Zhu, Z. & Katzgraber, H. G. Exponentially biased ground-state sampling of quantum annealing machines with transverse-field driving Hamiltonians. Phys. Rev. Lett. 118, 070502 (2017).

Kitai, K. et al. Designing metamaterials with quantum annealing and factorization machines. Phys. Rev. Res. 2, 013319 (2020).

Santoro, G. E., Martonák, R., Tosatti, E. & Car, R. Theory of quantum annealing of an Ising spin glass. Science 295, 2427–2430 (2002).

Hen, I. & Spedalieri, F. M. Quantum annealing for constrained optimization. Phys. Rev. Appl. 5, 034007 (2016).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363 (1998).

Morita, S. & Nishimori, H. Mathematical foundation of quantum annealing. J. Math. Phys. 49, 125210 (2008).

Hobbs, J., Khachatryan, V., Anandan, B. S., Hovhannisyan, H. & Wilson, D. Machine learning framework for quantum sampling of highly constrained, continuous optimization problems. Appl. Phys. Rev. 8, 627009 (2021).

Kim, S., Wu, S., Jian, R., Xiong, G. & Luo, T. Design of a high-performance titanium nitride metastructure-based solar absorber using quantum computing-assisted optimization. ACS Appl. Mater. Interfaces 15, 40606–40613 (2023).

O’Malley, D., Vesselinov, V. V., Alexandrov, B. S. & Alexandrov, L. B. Nonnegative/Binary matrix factorization with a D-Wave quantum annealer. PLoS ONE 13, e0206653 (2018).

Tasseff, B. et al. On the emerging potential of quantum annealing hardware for combinatorial optimization. J. Heuristics 30, 325–358 (2024).

Haba, R., Ohzeki, M. & Tanaka, K. Travel time optimization on multi-AGV routing by reverse annealing. Sci. Rep. 12, 17753 (2022).

Kim, S. et al. Quantum annealing-aided design of an ultrathin-metamaterial optical diode. Nano Converg. 11, 16 (2024).

Pelofske, E., Hahn, G. & Djidjev, H. N. Noise dynamics of quantum annealers: estimating the effective noise using idle qubits. Quantum Sci. Technol. 8, 035005 (2023).

Yoneda, Y., Shimada, M., Yoshida, A. & Shirakashi, J.-i. Searching for optimal experimental parameters with D-Wave quantum annealer for fabrication of Au atomic junctions. Appl. Phys. Exp. 16, 057001 (2023).

Willsch, D. et al. Benchmarking Advantage and D-Wave 2000Q quantum annealers with exact cover problems. Quantum Inf. Process. 21, 141 (2022).

Yarkoni, S., Raponi, E., Back, T. & Schmitt, S. Quantum annealing for industry applications: introduction and review. Rep. Prog. Phys. 85, 104001 (2022).

Kasi, S., Warburton, P., Kaewell, J. & Jamieson, K. A cost and power feasibility analysis of quantum annealing for NextG cellular wireless networks. IEEE Trans. Quantum Eng. 4, 1–17 (2023).

Teplukhin, A., Kendrick, B. K. & Babikov, D. Solving complex eigenvalue problems on a quantum annealer with applications to quantum scattering resonances. Phys. Chem. Chem. Phys. 22, 26136–26144 (2020).

Atobe, Y., Tawada, M. & Togawa, N. Hybrid annealing method based on subQUBO model extraction with multiple solution instances. IEEE Trans. Comput. 71, 2606–2619 (2022).

Aramon, M. et al. Physics-inspired optimization for quadratic unconstrained problems using a digital annealer. Front. Phys. 7, 444894 (2019).

Zhu, Z., Ochoa, A. J. & Katzgraber, H. G. Fair sampling of ground-state configurations of binary optimization problems. Phys. Rev. E 99, 063314 (2019).

Mandrà, S. & Katzgraber, H. G. A deceptive step towards quantum speedup detection. Quantum Sci. Technol. 3, 04LT01 (2018).

Delgado, A. & Thaler, J. Quantum annealing for jet clustering with thrust. Phys. Rev. D 106, 094016 (2022).

Mao, Z., Matsuda, Y., Tamura, R. & Tsuda, K. Chemical design with GPU-based Ising machines. Digit. Discov. 2, 1098–1103 (2023).

Bynum, M. L. et al. Pyomo—optimization modeling in python, 3rd edn. (Springer International Publishing, 2021).

Zaman, M., Tanahashi, K. & Tanaka, S. PyQUBO: python library for mapping combinatorial optimization problems to QUBO form. IEEE Trans. Comput. 71, 838–850 (2022).

Tao, M. et al. Towards Stability in the Chapel Language. In Proc. IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW) 557–566 (IEEE, 2020).

Kim, S. et al. A review on machine learning-guided design of energy materials. Prog. Energy 6, 042005 (2024).

Kim, S., Luo, T., Lee, E. & Suh, I.-S. Distributed quantum approximate optimization algorithm on integrated high-performance computing and quantum computing systems for large-scale optimization. arXiv:2407.20212 (2024).

Gemeinhardt, F., Garmendia, A., Wimmer, M., Weder, B. & Leymann, F. Quantum combinatorial optimization in the NISQ era: a systematic mapping study. ACM Comput. Surv. 56, 1–36 (2023).

Willsch, M., Willsch, D., Jin, F., De Raedt, H. & Michielsen, K. Benchmarking the quantum approximate optimization algorithm. Quantum Inf. Process. 19, 197 (2020).

Hauke, P., Katzgraber, H. G., Lechner, W., Nishimori, H. & Oliver, W. D. Perspectives of quantum annealing: methods and implementations. Rep. Prog. Phys. 83, 054401 (2020).

Carugno, C., Ferrari Dacrema, M. & Cremonesi, P. Evaluating the job shop scheduling problem on a D-wave quantum annealer. Sci. Rep. 12, 6539 (2022).

Irie, H., Liang, H., Doi, T., Gongyo, S. & Hatsuda, T. Hybrid quantum annealing via molecular dynamics. Sci. Rep. 11, 8426 (2021).

Raymond, J. et al. Hybrid quantum annealing for larger-than-QPU lattice-structured problems. ACM Trans. Quantum Comput. 4, 1–30 (2023).

Ceselli, A. & Premoli, M. On good encodings for quantum annealer and digital optimization solvers. Sci. Rep. 13, 5628 (2023).

Song, J., Lanka, R., Yue, Y. & Dilkina, B. A general large neighborhood search framework for solving integer linear programs. In Proc. 34th Conference on Neural Information Processing Systems (NeurIPS, 2020).

Alnowibet, K. A., Mahdi, S., El-Alem, M., Abdelawwad, M. & Mohamed, A. W. Guided hybrid modified simulated annealing algorithm for solving constrained global optimization problems. Mathematics 10, 1312 (2022).

Rere, L. M. R., Fanany, M. I. & Arymurthy, A. M. Simulated annealing algorithm for deep learning. Procedia Comput. Sci. 72, 137–144 (2015).

Gonzales, G. V. et al. A comparison of simulated annealing schedules for constructal design of complex cavities intruded into conductive walls with internal heat generation. Energy 93, 372–382 (2015).

Wadayama, T. et al. Gradient descent bit flipping algorithms for decoding LDPC codes. IEEE Trans. Commun. 58, 1610–1614 (2010).

Glover, F., Laguna, M. & Martí, R. Principles of tabu search. Comput. Oper. Res. 13, 533 (1986).

Yasuoka, H. Computational complexity of quadratic unconstrained binary optimization. arXiv:2109.10048 (2022).

Hansen, P. B. Simulated annealing. Electrical Eng. Comput. Sci. Tech. Rep. 170, 1–12 (1992).

Dupin, N., Nielsen, F. & Talbi, E. Dynamic Programming heuristic for k-means Clustering among a 2-dimensional Pareto Frontier. In Proc. 7th International Conference on Metaheuristics and Nature Inspired Computing, Springer (2018).

Sakabe, M. & Yagiura, M. An efficient tabu search algorithm for the linear ordering problem. J. Adv. Mech. Des. Syst. Manuf. 16, JAMDSM0041 (2022).

Acknowledgements

This research used resources of the Oak Ridge Leadership Computing Facility at the Oak Ridge National Laboratory, which is supported by the Office of Science of the US Department of Energy under Contract No. DE-AC05-00OR22725. This research was supported by the Quantum Computing Based on Quantum Advantage Challenge Research (RS-2023-00255442) through the National Research Foundation of Korea (NRF) funded by the Korean Government (Ministry of Science and ICT(MSIT)). Notice: This manuscript has in part been authored by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the US Government retains a non-exclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of the manuscript, or allow others to do so, for US Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-publicaccess-plan).

Author information

Contributions

S.K., A.D., E.L., and T.L. conceived the idea. S.K. and S.A. performed benchmarking studies to generate data. A.D. and S.K. implemented the IP benchmark. S.K. analyzed the data with advice from I.S., A.D., E.L., and T.L. All authors discussed the results and contributed to the writing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kim, S., Ahn, SW., Suh, IS. et al. Quantum annealing for combinatorial optimization: a benchmarking study. npj Quantum Inf 11, 77 (2025). https://doi.org/10.1038/s41534-025-01020-1

DOI: https://doi.org/10.1038/s41534-025-01020-1