Abstract

Black tea is among the most widely consumed teas, and fermentation is the processing step that largely determines its flavor. Currently, many producers rely on personal experience to gauge fermentation, which is subjective and inconsistent. In addition, large models are impractical to deploy in production. This paper therefore introduces a lightweight convolutional neural network that uses transfer learning to assess the fermentation level of black tea. First, we applied a model-based transfer learning strategy and ran experiments with pre-trained weights to compare candidate networks and select the student and teacher models. Next, we replaced the student model’s loss function with PolyLoss and its optimizer with AdamW. Finally, we performed a knowledge distillation experiment on the student model. The accuracy, precision, recall, and F1 of the improved model increased by 0.0415, 0.0215, 0.0902, and 0.0645, respectively. This research offers technical support for the digital production of black tea.

Introduction

Black tea is a fully fermented tea and the second largest tea category in China1. According to customs statistics, the export value of Chinese tea in 2024 was 1.419 billion dollars, with an export volume of 374,100 t, of which black tea accounted for 24,800 t (6.62%). In the same year, the import value of tea into China was 157 million dollars, with an import volume of 54,000 t, of which black tea accounted for 41,900 t (77.63%). Black tea is named after the red color of the tea liquor and of the infused leaves after the dry tea is brewed. It is made from the buds and leaves of the tea tree and refined through typical processes such as withering, rolling, fermentation, and drying. Fermentation is an important step in forming the flavor of black tea, so recognizing the degree of fermentation is crucial2. At present, the identification of fermentation levels during black tea processing relies entirely on the tea master’s own experience, which is arbitrary and subjective and is not conducive to the mass production of high-quality black tea3. Consequently, precisely quantifying the fermentation stages of black tea remains a significant obstacle to digitalized tea processing.

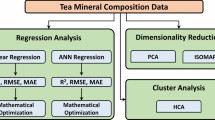

In recent years, many studies have addressed the discrimination of fermentation degree. Wei et al. used PLS regression to analyze differences in the content of volatile organic compounds in pomelo wine at different stages of fermentation. The PLS model showed that the ratio of α-phellandrene/geraniol alcohol could be a potential indicator of the degree of fermentation of pomelo wine4. Jiang et al. qualitatively identified the degree of solid-state fermentation using FT-NIR spectroscopy and PLS-DA, with CARS and SCARS used to screen important wavelengths. The SCARS-PLS-DA model gave the better result during validation, with a discrimination rate of 91.43%5. Riza et al. developed the YOLO-CoLa model within the YOLOv8 framework to detect the degree of fermentation of cocoa beans. The proposed model achieved a mAP@0.5 of 70.4%, an improvement of 9.3% over the original model6.

The fermentation of cocoa beans and pomelo wine in the studies above differs from tea fermentation, and the criteria for discriminating the degree of fermentation differ accordingly. In recent years, several studies have also addressed the identification of the degree of tea fermentation. Chen et al. conducted spectral analysis of total catechins and theanine in 161 tea samples. The best models for these compounds demonstrated strong predictive capabilities, and the results indicated that NIR could be an effective method for detecting the degree of tea fermentation quickly and accurately7. Fraser et al. studied the biochemical components of oolong tea during fermentation using non-targeted methods. Correlation of the spectra revealed two volatile compounds whose concentrations increased during the fermentation phase of the process. This study highlighted the potential of DART-MS for rapid monitoring of complex production processes such as tea fermentation8. Cao et al. developed a sensing system based on carbon quantum dots doped with cobalt ions to assess the fermentation level of black tea. The least squares support vector machine model they developed was 100% accurate in distinguishing the degree of fermentation, which makes it an accurate and effective method for measuring black tea fermentation9. A specific comparison is shown in Table 1.

All of the above methods for discriminating the tea fermentation level rely on traditional techniques. However, the high cost of spectrometers, the susceptibility of spectral reflectance to interference, and the high cost of producing high-quality, high-purity carbon quantum dots hinder large-scale application in actual production. In recent years, deep learning has been widely used in agriculture10. Chawla et al. proposed a method for identifying okra infected with yellow vein mosaic virus using deep learning models; the MobileNet model achieved an accuracy exceeding 99.27% when combined with all three RNNs11. Chen et al. proposed MTD-YOLOv7, an automated detection model for fruit and fruit bunch maturity, which reached a total score of 86.6% in multi-task learning with high accuracy and fast detection12. Tian et al. proposed a YOLOv3-based apple detection model for different growth stages in complex orchards. Its average detection time was 0.304 s/frame, which allowed real-time detection of apples in the orchard13. By contrast, the application of deep learning to discriminating the degree of tea fermentation has rarely been reported. Moreover, large models are difficult to deploy because of hardware limitations in actual production environments14, and they cannot meet the high real-time requirements of production. Lightweight models offer advantages here, including high computational efficiency, low memory usage, and ease of deployment to edge devices15. Zhang et al. put forward a lightweight framework based on a knowledge distillation strategy that greatly reduced the complexity of a multimodal solar irradiance prediction model while maintaining acceptable accuracy and facilitating deployment16. Sun et al. proposed a lightweight, high-accuracy model for detecting passion fruit in complex environments. Knowledge distillation was used to transfer knowledge from a stronger teacher model to a weaker student model, which significantly enhanced detection accuracy17. In terms of average accuracy and detection capability, the proposed model was superior to the most advanced models. These studies provide a reference for the lightweight design in this paper, but they cannot be used directly to distinguish the fermentation level of black tea. On this basis, a lightweight convolutional neural network based on transfer learning is proposed to determine the level of black tea fermentation. The main contributions of this paper are as follows. (1) Using a transfer learning strategy, 14 convolutional neural networks are compared experimentally, and the student and teacher models are selected. (2) The loss function is replaced, which improves the student model’s discriminative performance. (3) The optimizer is changed, which further improves discriminative performance. (4) The resulting model is subjected to knowledge distillation experiments at different Distillation Loss ratios, and it shows the best discriminative performance when the ratio is 2.0. The research process of this paper is illustrated in Fig. 1.

Results

Experimental environment and parameter settings

The training framework and parameter settings used in this research experiment are listed in detail in Table 2.

Model evaluation indicators

This study addresses an image classification task, so FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS are used to evaluate the performance of the discrimination model.

(1) FLOPs: the number of floating-point operations required for one inference pass, reflecting the computational complexity of the model.

(2) Params: the number of parameters of the model, reflecting the size of the model.

(3) Accuracy: the proportion of correctly classified samples among all samples.

(4) Precision: the proportion of true positives (TP) among all samples predicted as positive (TP + FP).

(5) Recall: the proportion of true positives (TP) among all actual positive samples (TP + FN).

(6) F1: the harmonic mean of Precision and Recall. Precision and Recall alone sometimes cannot fully evaluate a model, so F1 is used for a more comprehensive evaluation.

(7) FPS: the number of images the model processes per second (frames per second).

The calculation formula is shown in Eq. (1).
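The standard definitions behind these indicators can be sketched in a few lines of Python. The sketch assumes macro averaging over the fermentation classes (the text does not state how per-class values were combined), and the confusion matrix in the example is invented purely for illustration.

```python
def classification_metrics(confusion):
    """Accuracy and macro-averaged precision, recall and F1.

    confusion[i][j] is the number of samples of true class i
    that were predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))

    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                       # true k, predicted otherwise
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


def frames_per_second(num_images, elapsed_seconds):
    """Throughput: images processed per second of inference time."""
    return num_images / elapsed_seconds


if __name__ == "__main__":
    # Hypothetical 3-class matrix (mild, moderate, excessive); not data from the study.
    example = [[90, 8, 2], [10, 35, 5], [1, 4, 45]]
    print(classification_metrics(example))
```

Because the mild class covers four of the six sampling times, macro averaging weights the smaller moderate and excessive classes equally with it, which is one reason Recall and F1 can sit well below Accuracy.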

Loss changes during training

Figure 2 plots the loss during training of the improved model. The loss decreased gradually as the number of training epochs increased and then stabilized.

Comparative results of basic network experiments

Under the same experimental conditions, pre-trained weights were loaded through transfer learning and the selected convolutional neural network models were compared (Table 3). Table 3 shows that all the models were able to discriminate the black tea fermentation level. Efficientnet_v2_m gave the best discriminative result and was used as the teacher model; its FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS were 5.445 G, 52.862 M, 0.9706, 0.9740, 0.9379, 0.9550, and 13.78, respectively. Because large models are difficult to deploy in practice, FLOPs and Params should be minimized while discriminative accuracy is maintained. ResNet18 was therefore selected as the student model; its FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS were 1.824 G, 11.178 M, 0.9037, 0.9065, 0.8153, 0.8519, and 75.24, respectively.
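The trade-off behind this choice can be made explicit with a little arithmetic on the figures reported above. The snippet is only an illustrative calculation; the dictionary keys are names chosen here, not identifiers from the study’s code.

```python
# Figures for the two selected models as reported in the text (Table 3).
teacher = {"flops_g": 5.445, "params_m": 52.862, "accuracy": 0.9706, "fps": 13.78}  # Efficientnet_v2_m
student = {"flops_g": 1.824, "params_m": 11.178, "accuracy": 0.9037, "fps": 75.24}  # ResNet18

flops_ratio = teacher["flops_g"] / student["flops_g"]      # how many times more compute the teacher needs
params_ratio = teacher["params_m"] / student["params_m"]   # how many times more parameters
speedup = student["fps"] / teacher["fps"]                  # how many times faster the student runs
accuracy_gap = teacher["accuracy"] - student["accuracy"]   # what the student gives up before distillation

print(f"FLOPs ratio:  {flops_ratio:.2f}x")
print(f"Params ratio: {params_ratio:.2f}x")
print(f"FPS speed-up: {speedup:.2f}x")
print(f"Accuracy gap: {accuracy_gap:.4f}")
```

In other words, the student needs roughly a third of the teacher’s FLOPs and under a quarter of its parameters, and runs more than five times faster, at an initial accuracy cost of about 0.067 that the later steps aim to recover.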

Optimizer comparison experiment

Under the same experimental conditions, Table 4 shows the results of three optimizers applied to the model after the loss function was replaced. None of the three optimizers changed the FLOPs or Params of the model. With AdamW, the model had the highest Accuracy, Precision, Recall, F1, and FPS, which were 0.9425, 0.9272, 0.8881, 0.9064, and 74.60, respectively; RMSProp came second and SGD performed worst. A likely reason is that AdamW adaptively adjusts the learning rate based on first-order and second-order moment estimates of the gradient. In addition, it applies weight decay separately from the gradient-based update, which regularizes the model more faithfully and can enhance its generalization ability.

Knowledge distillation experiment results

The AT method was applied to the student model after the optimizer was replaced, and knowledge distillation experiments were carried out with Distillation Loss ratios from 0.1 to 2.0 (Table 5). Table 5 shows that at ratios of 0.1, 0.5, 0.8, 1.4, 1.8, and 2.0 the discriminative performance of the model improved, while model complexity did not increase and speed remained similar.

In this comparison, Precision and Recall alone cannot rank the models, so F1 was also considered. On this basis, a Distillation Loss ratio of 2.0 gave the best result: the model’s Accuracy, Precision, Recall, F1, and FPS were 0.9452, 0.9280, 0.9055, 0.9164, and 74.22, respectively.

Results of ablation experiments

To verify the effectiveness of each improvement step, ablation experiments were performed under identical experimental conditions. The results are presented in Table 6, and the metrics at each stage of improvement are visualized in Fig. 3. Table 6 and Fig. 3 show that, for the selected student model ResNet18, replacing the loss function with PolyLoss gave FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS of 1.824 G, 11.178 M, 0.9265, 0.9164, 0.8630, 0.8836, and 73.75, respectively. This suggests that PolyLoss can guide model learning in a richer information space, enabling the model to capture data features more comprehensively and improve discrimination accuracy. After the optimizer was replaced with AdamW, the FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS of the model were 1.824 G, 11.178 M, 0.9425, 0.9272, 0.8881, 0.9064, and 74.60, respectively. AdamW combines the advantages of optimization algorithms such as RMSProp and adaptively adjusts the parameter update step size during training, which allows more accurate parameter updates and faster convergence. After knowledge distillation with the AT method, the FLOPs, Params, Accuracy, Precision, Recall, F1, and FPS of the model were 1.824 G, 11.178 M, 0.9452, 0.9280, 0.9055, 0.9164, and 74.22, respectively. This indicates that with a Distillation Loss ratio of 2.0, the student model can effectively absorb knowledge from the teacher model without a loss of speed, thereby improving its performance.
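The contribution of each step can be read off by differencing consecutive rows. The short script below does this using only the Accuracy, Precision, Recall, and F1 values quoted in the text; the stage labels are shorthand introduced here.

```python
METRICS = ("accuracy", "precision", "recall", "f1")

# (stage label, metric values) in the order the improvements were applied.
stages = [
    ("ResNet18 baseline", (0.9037, 0.9065, 0.8153, 0.8519)),
    ("+ PolyLoss",        (0.9265, 0.9164, 0.8630, 0.8836)),
    ("+ AdamW",           (0.9425, 0.9272, 0.8881, 0.9064)),
    ("+ AT distillation", (0.9452, 0.9280, 0.9055, 0.9164)),
]

# Gain contributed by each step relative to the previous stage.
step_gains = {}
for (_, before), (label, after) in zip(stages, stages[1:]):
    step_gains[label] = tuple(round(a - b, 4) for a, b in zip(after, before))

# Overall gain from the baseline to the final model.
total_gain = tuple(round(a - b, 4) for a, b in zip(stages[-1][1], stages[0][1]))

for label, gains in step_gains.items():
    print(label, dict(zip(METRICS, gains)))
print("total", dict(zip(METRICS, total_gain)))
```

The totals reproduce the overall improvements of 0.0415, 0.0215, 0.0902, and 0.0645 stated in the abstract, and the per-step rows show that distillation contributes mainly to Recall and F1 rather than to Accuracy.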

Confusion matrix comparison

Confusion matrices of the model before and after improvement are shown in Fig. 4. The improved model distinguished each fermentation level of black tea more accurately, but compared with the original model it more often classified mild and excessive fermentation as moderate fermentation. A possible reason is that moderate fermentation lies between mild and excessive fermentation; it is a transitional stage that overlaps with both.

Comparison of model detection effects

Two photos were randomly selected from the test set for testing and heat-map visualization (Fig. 5). In the first group, the original model misjudged moderate fermentation as excessive fermentation, whereas the improved model avoided this error. In the second group, the improved model identified the fermentation level with higher confidence. The heat maps of the second group show that the original model focused on a smaller region, whereas the improved model attended to a wider area of the fermented black tea, so its judgment was more comprehensive.

Discussion

In this study, a lightweight convolutional neural network based on transfer learning was proposed to identify the fermentation level of black tea. First, a transfer learning strategy was used to compare 14 convolutional neural networks experimentally. The student model ResNet18 and the teacher model Efficientnet_v2_m were selected on the basis of model complexity and the experimental results. Second, the student model’s loss function was replaced with PolyLoss, and the original optimizer RMSProp was then replaced with AdamW. Finally, the AT method was used to distill knowledge into the model obtained after the optimizer was replaced. Experiments on a custom dataset indicated that the Accuracy, Precision, Recall, F1, and FPS of the improved model were 0.9452, 0.9280, 0.9055, 0.9164, and 74.22, respectively. The model improved Accuracy, Precision, Recall, and F1 by 0.0415, 0.0215, 0.0902, and 0.0645, respectively, without increasing complexity and with comparable speed. The improved model distinguished the various levels of black tea fermentation more accurately than the original model, but it more often classified mild and excessive fermentation as moderate fermentation. A possible reason is that moderate fermentation lies between mild and excessive fermentation; it is a transitional stage that overlaps with both. The model should be optimized further to reduce this misjudgment rate.

Although the improved model performs better and remains lightweight, it still has limitations. The image dataset is limited for a deep learning model, so the model may not fully learn the complex features and subtle differences between images taken during black tea fermentation. Its generalization ability may be insufficient, especially for rare or unusual fermentation states. The lighting conditions and backgrounds during image collection were also relatively simple and do not cover all situations that may arise in an actual production environment. Furthermore, deploying the model in actual production will be a technical challenge. Hardware compatibility is one of the primary challenges: existing production environments are often equipped with diverse hardware of different models, specifications, and performance, and the model must be adapted to these components in order to integrate smoothly. Real-time processing is another: production scenarios place strict requirements on response speed, and the model must analyze input data and output results in a very short time to keep the production process continuous and efficient.

Next, we aim to further refine the discriminative model and design effective deployment strategies so that the model can be deployed successfully in actual production. We plan to gather additional images of black tea fermentation from diverse varieties and complex settings to broaden the dataset and enhance the model’s generalization capabilities. In addition, to improve robustness under different lighting conditions, we will introduce lighting normalization to adaptively adjust the pixel values of the image, simulate imaging under different lighting environments, and enable the model to learn more robust feature representations. We will also employ deep learning techniques to integrate the image features of fermented black tea with its internal chemical components, allowing a more thorough assessment of the fermentation level.

Methods

Dataset production

The images used in this study were collected at the Tea Research Institute of the Chinese Academy of Agricultural Sciences, located at 120.03°E longitude and 30.18°N latitude. The raw material was one bud with one leaf of Tie Guanyin. Fermentation experiments were conducted in an artificial climate box (LHS-150), with the fermentation temperature and relative humidity set to 30 °C and 90%, respectively. Black tea was fermented for 5 h, and a total of 187 black tea fermentation images were collected at 0 h, 1 h, 2 h, 3 h, 4 h, and 5 h using a Canon camera (EOS80D, Canon). The image samples covered the entire black tea fermentation process. During image collection, the camera was positioned 400 mm above the fermented tea samples.

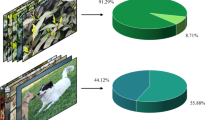

Because the number of collected image samples was small, rotation, mirroring, noise addition, and cropping were applied to the 187 images to enhance the robustness of the model. Rotation simulates changes in shooting angle that may occur in practice, allowing the model to recognize image features from different angles. Mirroring flips images in the horizontal or vertical direction, increasing the diversity of the data given that some fermentation features may be symmetric. Noise addition simulates potential interference during image acquisition, such as device noise, making the model more tolerant of noise. Cropping removes unnecessary background or redundant parts of an image and highlights key information, allowing the model to focus on the key features of black tea fermentation and thereby improving its generalization ability. The amount of data was expanded from 187 to 3740 images18. The samples from the six fermentation time points were categorized into three groups based on fermentation degree: 0–3 h for mild fermentation, 4 h for moderate fermentation, and 5 h for excessive fermentation. The dataset was divided into 60% for training, 20% for validation, and 20% for testing. Figure 6 shows examples of the fermentation degrees of black tea and the production of the dataset.
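As a rough illustration of the bookkeeping involved, the sketch below maps sampling times to the three classes and splits 3740 image indices 60/20/20. It assumes a simple seeded random split at the image level and an expansion factor of 20 inferred from 3740/187; the text does not describe how the split was actually drawn, and the function names are hypothetical.

```python
import random

ORIGINAL_IMAGES = 187
AUGMENTED_IMAGES = 3740  # 187 x 20, an expansion factor inferred from the two totals


def label_for_hour(hour):
    """Map a sampling time (hours of fermentation) to the class used in the study."""
    if 0 <= hour <= 3:
        return "mild"
    if hour == 4:
        return "moderate"
    if hour == 5:
        return "excessive"
    raise ValueError(f"no class defined for hour {hour!r}")


def split_indices(n, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle indices 0..n-1 and cut them into train/validation/test parts."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    n_train = round(n * ratios[0])
    n_val = round(n * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]      # remainder, so no index is lost to rounding
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(AUGMENTED_IMAGES)
    print(len(train), len(val), len(test))
    print([label_for_hour(h) for h in range(6)])
```

With these ratios the 3740 images divide into 2244 for training and 748 each for validation and testing.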

Convolutional neural network

The convolutional neural network is one of the representative algorithms of deep learning. It consists of a number of convolutional and pooling layers and performs particularly well in image processing19. Many convolutional neural network models exist for image classification across different tasks and scenes. The models chosen in this study are listed in Table 7.

Transfer learning

Transfer learning plays a vital role in deep learning. The core idea is that models trained on large datasets can be “migrated” to new tasks, thus avoiding training from scratch20. Using pre-trained models is one such transfer learning strategy. In this study, pre-trained convolutional neural network weights are loaded at the start of training to shorten training time and improve model performance.

ResNet18

The ResNet model was proposed in 2015 by He et al.21 and has been widely applied in computer vision tasks, with its performance and stability thoroughly validated. It has a simple structure, trains efficiently, and generalizes well, so good results can be achieved in a short training time. Considering model maturity, discriminative performance, and resource and time efficiency, this paper selects ResNet18 as the student model. The network is constructed by stacking multiple residual blocks. Each residual block contains two 3 × 3 convolutional layers, and the input is added directly to the output through a skip connection, which addresses the vanishing gradient and degradation problems in deep networks and allows deeper networks to be trained effectively. The specific network framework is shown in Fig. 7.

Efficientnet_v2_m

EfficientNetV2 is the second generation of the EfficientNet family, presented by Google at the ICML 2021 conference22. It inherits the core concept of the first generation, the compound scaling method, but makes several improvements to achieve smaller model size, faster training, and better parameter efficiency. EfficientNetV2 has several variants, including s, m, and l, each with different complexity and performance. It adopts the Fused MBConv structure, an improvement on the traditional MBConv structure that merges the expansion convolution and the depthwise convolution into a single standard 3 × 3 convolutional layer, simplifying the network structure, reducing computational cost, and accelerating training. Using Fused MBConv in the early part of the network significantly improves training speed. As network depth increases, the architecture gradually returns to traditional MBConv modules to balance performance and efficiency. In addition, Efficientnet_v2_m further optimizes the scaling strategy to suit the Fused MBConv structure, enabling the model to balance efficiency and performance at different scales. The network structure of Efficientnet_v2_m is presented in Fig. 8.

PolyLoss

Cross-Entropy Loss measures the difference between the actual output of a neural network and the correct label, and network parameters are updated by backpropagating this loss23. It effectively prevents class imbalance during the training process and is robust in class ranking. Written as a polynomial series, it takes the form shown in Eq. (2), where \({\alpha }_{j}\in {\mathbb{R}}^{+}\) is a polynomial coefficient and \({P}_{t}\) is the predicted probability of the target class label.

PolyLoss is an optimized version of Cross-Entropy Loss that uses the Taylor expansion of the loss function as a simple framework24. The loss function is designed as a linear combination of polynomial functions, as given in Eq. (3), where N is the number of leading coefficients to be adjusted and \({\varepsilon }_{j}\in [-\frac{1}{j},\infty )\) is the perturbation term. In this study, the student model’s original Cross-Entropy loss is replaced with PolyLoss.
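A minimal per-sample sketch of this idea follows. It assumes the Poly-N form from the PolyLoss paper, in which the first N polynomial terms \({(1-{P}_{t})}^{j}\) of the cross-entropy expansion are perturbed by \({\varepsilon }_{j}\) and the result is added to the ordinary cross-entropy. The perturbation values used in the study are not given in the text, so the default `epsilons=(1.0,)` below is only an illustrative choice.

```python
import math


def cross_entropy(probs, target):
    """Cross-entropy for one sample: -log of the probability given to the true class."""
    return -math.log(probs[target])


def poly_loss(probs, target, epsilons=(1.0,)):
    """Poly-N loss for one sample: cross-entropy plus perturbed leading polynomial terms.

    probs    -- predicted class probabilities (should sum to 1)
    target   -- index of the true class
    epsilons -- perturbations (eps_1, ..., eps_N); each eps_j must be >= -1/j
    """
    p_t = probs[target]
    loss = -math.log(p_t)
    for j, eps in enumerate(epsilons, start=1):
        if eps < -1.0 / j:
            raise ValueError(f"epsilon_{j} must be >= {-1.0 / j}")
        loss += eps * (1.0 - p_t) ** j
    return loss


if __name__ == "__main__":
    probs = [0.7, 0.2, 0.1]  # hypothetical softmax output for (mild, moderate, excessive)
    print(cross_entropy(probs, 0), poly_loss(probs, 0))
```

With an empty `epsilons` the function reduces to plain cross-entropy, and a positive \({\varepsilon }_{1}\) adds a penalty proportional to \(1-{P}_{t}\), which weights poorly classified samples more heavily.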

AdamW

The AdamW optimizer is a variant of the Adam optimizer that decouples weight decay from the gradient-based update instead of implementing it as L2 regularization added to the loss25. Treating weight decay separately from the gradient update helps to address the incompatibility of L2 regularization with adaptive learning rate algorithms. In this study, after the loss function is replaced, the model’s optimizer is changed from RMSProp to AdamW.
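The decoupling can be seen in a single update step written out by hand. The sketch below follows the usual AdamW formulation on plain Python lists; the hyperparameter values are common defaults chosen for illustration and are not the settings used in the study.

```python
import math


def adamw_step(params, grads, m, v, t, lr=1e-3, betas=(0.9, 0.999),
               eps=1e-8, weight_decay=1e-2):
    """Apply one AdamW update and return (new_params, new_m, new_v).

    params, grads, m, v -- lists of floats of equal length
    t                   -- 1-based step counter, used for bias correction
    """
    beta1, beta2 = betas
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Decoupled weight decay: shrink the weight directly, not via the gradient.
        p = p * (1.0 - lr * weight_decay)
        # Exponential moving averages of the gradient and its square.
        m_i = beta1 * m_i + (1.0 - beta1) * g
        v_i = beta2 * v_i + (1.0 - beta2) * g * g
        # Bias-corrected moment estimates.
        m_hat = m_i / (1.0 - beta1 ** t)
        v_hat = v_i / (1.0 - beta2 ** t)
        # Adaptive step: the decay term never passes through sqrt(v_hat).
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        new_params.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


if __name__ == "__main__":
    params, m, v = [1.0, -2.0], [0.0, 0.0], [0.0, 0.0]
    params, m, v = adamw_step(params, [0.5, -0.1], m, v, t=1)
    print(params)
```

In Adam with L2 regularization the decay term would be added to the gradient and then rescaled by the adaptive denominator; here every weight shrinks by the same factor `1 - lr * weight_decay` regardless of its gradient history.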

Knowledge distillation

In recent years, computing power has been continuously improving, and deep learning network models are becoming larger and larger. However, limited by resource capacity, deep neural models are difficult to deploy on devices. As an effective method of model optimization, knowledge distillation can reduce model complexity and computational overhead while retaining the key knowledge of high-performance models26.

Attention Transfer (AT), proposed at the ICLR 2017 conference, is a knowledge distillation method27. It extracts attention maps from the teacher network and distills them into the student network as a form of knowledge, so that the student network learns to generate attention maps similar to those of the teacher and thereby improves its performance. This study uses the Efficientnet_v2_m model as the teacher model and employs the AT method to conduct knowledge distillation experiments on the student model ResNet18 at different distillation loss ratios. The specific schematic diagram is shown in Fig. 9.
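The core computation can be sketched on small nested lists. This is a simplified, activation-based version of AT: each feature map of shape C × H × W is collapsed into a spatial attention map by summing squared activations over channels, the map is L2-normalized, and the loss is the distance between the student and teacher maps. How the study combines this term with the classification loss is not spelled out, so `total_loss` below simply assumes a weighted sum in which `ratio` plays the role of the Distillation Loss ratio.

```python
import math


def attention_map(features, p=2):
    """Collapse a C x H x W feature map into a flattened, L2-normalized attention vector."""
    channels = len(features)
    height = len(features[0])
    width = len(features[0][0])
    flat = [
        sum(abs(features[c][h][w]) ** p for c in range(channels))
        for h in range(height)
        for w in range(width)
    ]
    norm = math.sqrt(sum(x * x for x in flat)) or 1.0  # guard against an all-zero map
    return [x / norm for x in flat]


def attention_transfer_loss(student_features, teacher_features, p=2):
    """L2 distance between normalized student and teacher attention maps.

    Channel counts may differ, but the spatial size (H x W) must match.
    """
    q_s = attention_map(student_features, p)
    q_t = attention_map(teacher_features, p)
    if len(q_s) != len(q_t):
        raise ValueError("student and teacher maps must have the same spatial size")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(q_s, q_t)))


def total_loss(classification_loss, at_losses, ratio=2.0):
    """Assumed combination: classification loss plus ratio times the summed AT losses."""
    return classification_loss + ratio * sum(at_losses)


if __name__ == "__main__":
    student = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [0.5, 0.5]]]                          # 2 x 2 x 2
    teacher = [[[2.0, 0.0], [0.0, 0.0]], [[0.0, 1.0], [0.0, 0.0]], [[0.0, 0.0], [1.0, 1.0]]]  # 3 x 2 x 2
    at = attention_transfer_loss(student, teacher)
    print(at, total_loss(0.4, [at]))
```

Because the maps are normalized, the loss depends on where each network concentrates its activations rather than on their overall magnitude, which is what allows a 52.862 M parameter teacher to guide an 11.178 M parameter student with different channel counts.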

Data availability

Data presented in this study are available on request from the corresponding author.

Code availability

The code used to support the results of this study can be obtained from the corresponding author.

References

Dong, C. et al. Rapid detection of catechins during black tea fermentation based on electrical properties and chemometrics. Food Biosci. 40, 100855 (2021).

An, T. et al. Hyperspectral imaging technology coupled with human sensory information to evaluate the fermentation degree of black tea. Sens. Actuators B Chem. 366, 131994 (2022).

Jia, J. et al. Establishment of a rapid detection model for the sensory quality and components of Yuezhou Longjing tea using near-infrared spectroscopy. LWT 164, 113625 (2022).

Wei, Q. et al. Identification of characteristic volatile compounds and prediction of fermentation degree of pomelo wine using partial least squares regression. LWT 154, 112830 (2022).

Jiang, H. et al. Identification of solid state fermentation degree with FT-NIR spectroscopy: comparison of wavelength variable selection methods of CARS and SCARS. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 149, 1–7 (2015).

Riza, D. F. A., Tulsi, A. A. & Momin, A. Assessing cacao beans fermentation degree with improved YOLOv8 instance segmentation. Comput. Electron. Agric. 227, 109507 (2024).

Chen, S. et al. Fermentation quality evaluation of tea by estimating total catechins and theanine using near-infrared spectroscopy. Vib. Spectrosc. 115, 103278 (2021).

Fraser, K. et al. Monitoring tea fermentation/manufacturing by direct analysis in real time (DART) mass spectrometry. Food Chem. 141, 2060–2065 (2013).

Cao, S. et al. Rapid fluorescence detection of black tea fermentation degree based on cobalt ion mediated carbon quantum dots. Food Control 165, 110610 (2024).

Ding, Z. et al. Quality detection and grading of rose tea based on a lightweight model. Foods 13, 1179 (2024).

Chawla, T., Mittal, S. & Azad, H. K. MobileNet-GRU fusion for optimizing diagnosis of yellow vein mosaic virus. Ecol. Inform. 81, 102548 (2024).

Chen, W. et al. MTD-YOLO: multi-task deep convolutional neural network for cherry tomato fruit bunch maturity detection. Comput. Electron. Agric. 216, 108533 (2024).

Tian, Y. et al. MD-YOLO: multi-scale Dense YOLO for small target pest detection. Comput. Electron. Agric. 213, 108233 (2023).

Gui, Z. et al. A lightweight tea bud detection model based on Yolov5. Comput. Electron. Agric. 205, 107636 (2023).

Ding, Z. et al. Impurity detection of premium green tea based on improved lightweight deep learning model. Food Res. Int. 200, 115516 (2025).

Zhang, Y. et al. A new lightweight framework based on knowledge distillation for reducing the complexity of multi-modal solar irradiance prediction model. J. Clean. Prod. 475, 143663 (2024).

Sun, Q. et al. A lightweight and high-precision passion fruit YOLO detection model for deployment in embedded devices. Sensors 24, 4942 (2024).

Paul, A. et al. Smart solutions for capsicum Harvesting: unleashing the power of YOLO for detection, segmentation, growth stage classification, counting, and real-time mobile identification. Comput. Electron. Agric. 219, 108832 (2024).

Ding, Z. et al. Lightweight CNN combined with knowledge distillation for the accurate determination of black tea fermentation degree. Food Res. Int. 194, 114929 (2024).

Yang, L., Finnerty, P. & Ohta, C. Applications of cluster-based transfer learning in image and localization tasks. Mach. Learn. Appl. 18, 100601 (2024).

He, K. et al. Deep residual learning for image recognition. CVPR. https://doi.org/10.48550/arXiv.1512.03385 (2015).

Tan, M. & Le, Q. V. EfficientNetV2: smaller models and faster training. ICML. https://doi.org/10.48550/arXiv.2104.00298 (2021).

Mao, A., Mohri, M. & Zhong, Y. Cross-entropy loss functions: theoretical analysis and applications. ICML. https://doi.org/10.48550/arXiv.2304.07288 (2023).

Leng, Z. et al. PolyLoss: a polynomial expansion perspective of classification loss functions. CVPR. https://doi.org/10.48550/arXiv.2204.12511 (2022).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. CVPR. https://doi.org/10.48550/arXiv.1711.05101 (2017).

Kim, H. et al. AI-KD: adversarial learning and implicit regularization for self-knowledge distillation. Knowl. Based Syst. 293, 111692 (2024).

Zagoruyko, S. & Komodakis, N. Paying more attention to attention: improving the performance of convolutional neural networks via attention transfer. ICLR. https://doi.org/10.48550/arXiv.1612.03928 (2017).

Acknowledgements

We extend our gratitude to the Key R&D Projects in Shandong Province (2023CXGC010702, 2023LZGCQY015), the Innovation Project of SAAS(CXGC2024A08, CXGC2025A02), the Key R&D Projects in Zhejiang Province (2023C02043), the Agricultural Science and Technology Research Project of Jinan City (GG202415) and the Technology System of Modern Agricultural Industry in Shandong Province (SDAIT19) for their support and services throughout this project.

Author information

Contributions

Z.Z.D., Y.L.C. and C.W.D.: Conceptualization. X.S.Z., Z.Z.D. and S.J.L.: Methodology. S.J.L.: Investigation. X.S.Z., Z.Z.D. and M.W.: Software. Z.Z.D.: Formal analysis. X.S.Z. and M.W.: Validation. X.S.Z. and M.W.: Data curation. S.J.L. and Y.L.C.: Visualization. Y.L.C. and C.W.D.: Supervision. C.W.D.: Project administration. C.W.D.: Funding acquisition. X.S.Z. and Z.Z.D.: Writing—original draft. Y.L.C. and C.W.D.: Writing—review and editing. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhu, X., Ding, Z., Wang, M. et al. Convolutional neural network based on transfer learning for discriminating the fermentation degree of black tea. npj Sci Food 9, 170 (2025). https://doi.org/10.1038/s41538-025-00516-6

DOI: https://doi.org/10.1038/s41538-025-00516-6