Abstract

Microbial contamination threatens food safety, and traditional microbial risk assessment methods struggle with complex supply chains and microbial diversity. This paper reviews the applications of emerging detection technologies in food safety, highlighting their role in advancing risk assessment and outlining future research directions.

Introduction

In the field of food safety, microbial contamination constitutes a significant and unavoidable challenge. With the advancement of globalization and the increasing complexity of food supply chains, the risks associated with microbial contamination continue to rise. Such contamination may occur at various stages, including the collection of raw materials, processing, storage, transportation, and, ultimately, sales and consumption. Each stage carries the potential risk of microbial contamination, posing threats to consumers’ health. Therefore, conducting microbial risk assessments (MRAs) is particularly important1 (Fig. 1). MRA is a scientific methodology designed to systematically identify, analyze, and evaluate the potential hazards related to microbial contamination. Through such assessments, we can better understand the sources of microbial contamination, their transmission pathways, and their potential impacts on human health. This enables timely preventive measures and provides a scientific basis for policymaking2,3.

In risk assessment, begin by identifying the hazard and establishing its minimum level of concern; next, use dose-response relationships to estimate health impacts and integrate exposure assessment to calculate the probability of contamination. Finally, convert the hazard into a numerical risk value, then proceed to risk management and communication, prioritizing appropriate mitigation strategies.
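The chain from exposure to a numerical risk value can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the method of any study cited here: it assumes an exponential dose-response model, P(ill) = 1 - exp(-r x dose), a lognormally distributed concentration in contaminated servings, and hypothetical parameter values (`r`, serving size, prevalence, concentration distribution) chosen only for demonstration.

```python
import math
import random


def exponential_dose_response(dose_cfu: float, r: float) -> float:
    """Probability of illness for an ingested dose under the exponential model."""
    # -expm1(-x) equals 1 - exp(-x) but stays accurate for very small x.
    return -math.expm1(-r * dose_cfu)


def mean_risk_per_serving(
    n_iter: int,
    mean_log10_conc: float,  # hypothetical mean log10 CFU/g in contaminated servings
    sd_log10_conc: float,    # hypothetical between-serving variability (log10 CFU/g)
    serving_g: float,        # hypothetical serving size in grams
    prevalence: float,       # hypothetical fraction of servings that are contaminated
    r: float,                # hypothetical dose-response parameter
    seed: int = 0,
) -> float:
    """Monte Carlo estimate of the mean probability of illness per serving."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_iter):
        # Exposure assessment: draw a concentration and convert it to a dose.
        log10_conc = rng.gauss(mean_log10_conc, sd_log10_conc)
        dose = (10.0 ** log10_conc) * serving_g
        # Hazard characterization: map the dose to a probability of illness.
        total += exponential_dose_response(dose, r)
    # Risk characterization: weight by the probability of contamination.
    return prevalence * total / n_iter


if __name__ == "__main__":
    risk = mean_risk_per_serving(10_000, 1.0, 0.5, 50.0, 0.1, 1e-6)
    print(f"Illustrative mean risk per serving: {risk:.2e}")
```

Every number passed in the usage example is a placeholder; in a real assessment these inputs would come from detection data and a fitted dose-response model.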

Despite years of development, MRA still faces several critical bottlenecks: (1) the complexity arising from the diversity and widespread distribution of microorganisms4; (2) uncertainties caused by dynamic changes stemming from microbial interactions and environmental factors; and (3) limitations caused by data scarcity and the assessment methods5. The first two challenges are inherent to microorganisms and are objectively difficult to overcome, whereas the third—limitations in data and methodologies—can be addressed through advancements in microbial detection technologies. These technologies form an essential component of MRA frameworks, providing critical data support. Consequently, the performance and applicability of detection technologies significantly influence the accuracy and reliability of risk assessments6.

Building on this understanding, we take the development of microbial detection technologies and risk assessment as examples to analyze in depth how iterative advances in detection technologies influence the developmental trajectory of MRA. Our aim is to provide insights into optimizing risk assessment strategies and enhancing the accuracy and reliability of evaluations, and to offer a perspective on the future development trends of both fields.

Traditional detection technologies of MRA

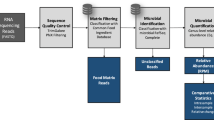

The culture method, as a representative of traditional detection technologies, is considered the gold standard for detecting the presence of microorganisms in food7. It allows researchers to simulate the growth and reproduction of microorganisms under laboratory conditions, thereby assessing their potential risks. This makes it a critical tool in MRA. The primary advantage of the culture method lies in its ability to provide direct and specific evidence, confirming the presence of microbial contamination in samples such as food8. However, it also has several limitations, including being labor-intensive, time-consuming, and constrained by sensitivity and specificity. Moreover, the delayed results of traditional detection technologies make it difficult to perform real-time detection and assessment simultaneously9 (Fig. 2).

Microbial detection technologies range from traditional culture-based methods to advanced molecular and nanotechnology-based solutions. Core techniques include gene chips, PCR, DNA sequencing, mass spectrometry, turbidimetry, ELISA/ELFA, and biosensors, alongside emerging platforms such as nanotechnology, microfluidics, and multi-omics analyses.

Emerging detection technologies and their breakthroughs in risk assessment

Microbial detection technologies represent a critical advancement in the field of microbial monitoring, bringing revolutionary breakthroughs to MRA10. Emerging microbial detection technologies refer to a series of innovative methods and tools developed in recent years that, compared with traditional approaches, offer significant improvements in detection efficiency, sensitivity, specificity, and cost-effectiveness (Table 1). Polymerase chain reaction (PCR) technology, with its high specificity and sensitivity, enables the rapid amplification and detection of specific microbial DNA fragments, reducing false-negative risks through quantitative analysis of target gene copy numbers (e.g., qPCR sensitivity reaches 10 CFU/g for Listeria detection in dairy products, outperforming traditional culture methods). Mass spectrometry allows the rapid identification of microbial species, delivering more precise data to support risk evaluations. For instance, MALDI-TOF achieves >95% classification accuracy by matching protein fingerprints, distinguishing pathogenic E. coli O157:H7 from non-pathogenic strains11. Sequencing technologies, particularly next-generation sequencing, allow for large-scale sequencing of DNA molecules, offering a powerful tool for identifying unknown pathogens and conducting traceability analyses12. However, challenges such as sequencing platform variability (e.g., Illumina error rate 0.1% vs. Nanopore 5%) and high computational demands (>100 GB storage per sample, >24 h analysis time) require standardization and high-performance computing (HPC) infrastructure. Additionally, nanotechnology and electrochemical sensor technologies, known for their portability, speed, and accuracy, enable on-site rapid detection, providing real-time and efficient solutions for MRA13. Integration with blockchain and IoT enables real-time monitoring (e.g., WGS data combined with blockchain reduces contamination response time to 48 h in poultry supply chains).
The integration of these technologies has significantly enhanced the precision and timeliness of MRA, offering robust support for safeguarding public health. For example, AI-driven models integrating multi-omics data reduce prediction uncertainty (e.g., error decreases from ±1.5 log CFU to ±0.8 log CFU). Most importantly, emerging technologies continue to evolve, presenting both new opportunities and challenges for MRA14 (Fig. 2).

Current bottlenecks in MRA for food safety

MRA consists of four key components: hazard identification, hazard characterization, exposure assessment, and risk characterization. MRA can be classified as either qualitative or quantitative microbial risk assessment (QMRA)15. QMRA plays a critical role in evaluating the risks associated with food consumption and in justifying the implementation of control measures16. Despite nearly two decades of development, MRA still faces several pressing challenges, which are outlined below (Fig. 3).

The diagram maps the bidirectional relationship between microbial risk assessment (MRA) and detection technologies. Advanced tools such as mass spectrometry, multiomics, microfluidics/biosensors, PCR, DNA sequencing and nanotechnology generate high-quality, comprehensive data that improve hazard identification, dose–response modelling and exposure assessment, thereby strengthening MRA. Conversely, the demands of MRA—better sensitivity, recognition of unknown microbes, rapid detection and handling of data overload—drive innovation in these technologies, while traditional culture and immunological methods remain limited by cultivation bias and cost–benefit uncertainties.

Insufficient completeness and reliability of the data

MRA relies heavily on high-quality data. However, the heterogeneity and insufficiency of the data remain significant obstacles. For example, although DNA sequencing methods have introduced potential advancements for QMRA, challenges in the heterogeneity, availability, and integration of the data have hindered their widespread application in risk assessments. PCR technology, because of its strong operability, has been widely applied in food safety. However, the data generated often lack detailed characterization, making the results insufficient to support comprehensive risk assessments. PCR has been increasingly replaced by emerging multi-omics approaches. Over the past two decades, whole-genome sequencing (WGS) has achieved remarkable progress, facilitating rapid data accumulation17,18. This progress presents unprecedented opportunities to incorporate genomic data into QMRA19. Currently, the bottleneck in applying molecular tools for MRA has shifted from data collection (in terms of cost and time) to the challenges of data analysis and method development.

Deficiencies in data specificity and correlation

Typically, there is a lack of a direct correlation between genotypic variation and the phenotypic characteristics of microbial populations, making it challenging and complex to utilize genomic data for food safety risk assessments. For instance, the variability or uncertainty in conventional data such as concentrations and prevalence rates can often be described using probabilistic distributions. However, when the data consist of genomic sequences, it remains unclear how to apply similar probabilistic methods to describe such variability. Consequently, one of the greatest challenges in genomics is to predict the phenotypic traits of specific pathogens in the food chain on the basis of genotypic data. Mapping gene sequences to a quantitative description of the risk poses significant difficulties, as the number of genes far exceeds the number of strain samples by several orders of magnitude. Statistical analysis of WGS data is not straightforward, and before genetic data can be applied for making food safety decisions, it is essential to establish reproducible and meaningful associations between genetic variations and phenotypic traits5. Recent studies have demonstrated the feasibility of new methods that re-quantify disease and outbreak risks based on differential gene expression. By combining feature selection algorithms with new models (e.g., AI-based learning models20), it is possible to better quantify the risks of diseases caused by pathogens such as Listeria monocytogenes, Escherichia coli, and Salmonella, as well as to predict the severity or endpoints of diseases caused by these pathogens21. An important contribution of this new perspective to bacterial predictive modeling is the integration of feature selection algorithms, which streamline the transformation of WGS data into a format that is more suitable for predictive models. 
This helps address the issues of model overfitting and biases that arise when the number of predictive variables significantly exceeds the sample size.

Bacterial genome-wide association studies (BGWAS) have emerged as a core technology for dissecting complex genotype-phenotype relationships, particularly when integrated with machine learning approaches. BGWAS leverages pan-genomic features (e.g., SNPs, k-mers, unitigs, and gene presence/absence matrices) and statistical models to correct for population structure and identify key genetic markers22. For example, Cuénod et al. demonstrated that Scoary, by applying Fisher’s exact test to pan-genomic data, confirmed the direct association between the papGII gene and uropathogenicity in E. coli23. Lees et al. developed Pyseer, which uses elastic net models to integrate unitigs and phylogenetic information, achieving high-precision prediction of cefixime resistance in Neisseria gonorrhoeae24. Additionally, treeWAS quantifies “terminal scores” via phylogenetic trees to map vitamin B5 biosynthesis genes to host specificity in Campylobacter spp.25, while SEER enables reference-free phenotype prediction using k-mer analysis, making it applicable to uncultured microbes26.
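To make the gene presence/absence test concrete, the following is a minimal sketch of a two-sided Fisher's exact test on a 2x2 table of gene status against phenotype. It is not Scoary's code and covers only the contingency-table step; it performs no correction for population structure, and the isolate counts in the usage example are hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    a: gene present, phenotype positive   b: gene present, phenotype negative
    c: gene absent,  phenotype positive   d: gene absent,  phenotype negative
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2
    denom = comb(n, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x gene-present isolates among the positives.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Sum every table that is at most as probable as the observed one.
    p_value = sum(p for p in probs if p <= p_obs * (1 + 1e-7))
    return min(1.0, p_value)


if __name__ == "__main__":
    # Hypothetical counts: gene found in 18 of 20 pathogenic isolates
    # and in 3 of 20 non-pathogenic isolates.
    print(fisher_exact_two_sided(18, 3, 2, 17))
```

In a pan-genome scan this test would be repeated for every gene, so a multiple-testing correction would be needed before interpreting any single p-value.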

Building on these methodological advancements, the deep integration of machine learning and AI has further enhanced BGWAS performance and applicability. Elastic net regression, by imposing L1/L2 regularization, has reduced prediction errors for β-lactam resistance in Streptococcus pneumoniae by 47% across 3701 isolates27. Random forest (RF) models have identified the H481Y mutation in the rpoB gene as a key determinant of vancomycin intermediate resistance in Staphylococcus aureus28. These developments exemplify how data-driven modeling is transforming BGWAS from hypothesis-generating research to a precision prediction tool in MRA.
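As an illustration of how combined L1/L2 regularization behaves when predictors are plentiful, the sketch below fits an elastic net by cyclic coordinate descent on the commonly used objective (1/2n)·||y - Xb||² + lam·(alpha·||b||₁ + (1 - alpha)/2·||b||²). It is a teaching sketch, not the implementation used by Pyseer or the cited studies; it assumes centered data with no intercept, and the toy matrix is hypothetical.

```python
from typing import List


def soft_threshold(value: float, threshold: float) -> float:
    """Shrink value toward zero by threshold (the L1 proximal step)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def elastic_net(X: List[List[float]], y: List[float],
                lam: float, alpha: float, n_sweeps: int = 200) -> List[float]:
    """Cyclic coordinate descent for the elastic net (no intercept)."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # equals y - X @ beta while beta is all zeros
    for _ in range(n_sweeps):
        for j in range(p):
            col = [X[i][j] for i in range(n)]
            z = sum(v * v for v in col) / n
            if z == 0.0:
                continue  # constant-zero feature carries no information
            # Correlation of feature j with the partial residual.
            rho = sum(col[i] * (residual[i] + col[i] * beta[j]) for i in range(n)) / n
            new_beta = soft_threshold(rho, lam * alpha) / (z + lam * (1.0 - alpha))
            delta = new_beta - beta[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= col[i] * delta
                beta[j] = new_beta
    return beta


if __name__ == "__main__":
    # Hypothetical toy data: feature 0 is informative, feature 1 is mostly noise.
    X = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
    y = [2.0, -2.0, 0.1, -0.1]
    print(elastic_net(X, y, lam=0.2, alpha=0.5))
```

The L1 part sets weak coefficients exactly to zero, which is what makes the approach attractive when genomic features greatly outnumber isolates; the L2 part stabilizes estimates for correlated features.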

Uncertainty and complexity of the models

MRA relies on mathematical models to predict risks, yet every model involves uncertainties and assumptions29. Because of the complexity of the systems involved, constructing a fully accurate model is challenging, and such uncertainties may lead to either overestimation or underestimation of the risks30,31. Traditional MRA methods often rely on simplified assumptions, such as the uniform distribution of virulence traits within a population of a specific species. This assumption can result in inaccurate risk estimations for particular subpopulations. Additionally, given the rapid evolution and adaptive capacity of microorganisms in the face of environmental changes, microbial risks in food represent a dynamic concept. However, traditional MRA methods struggle to incorporate these dynamic and stochastic events into the models, thereby highlighting the limitations of these methods in practical applications.
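A small numerical sketch shows why assuming uniform virulence can mislead. It compares the risk computed from a single pooled dose-response parameter with the risk computed per subtype and then averaged. The exponential dose-response form, the subtype fractions, and the `r` values are all hypothetical assumptions made for illustration.

```python
import math
from typing import List, Tuple


def illness_probability(dose_cfu: float, r: float) -> float:
    """Exponential dose-response model: 1 - exp(-r * dose)."""
    return -math.expm1(-r * dose_cfu)


def pooled_vs_stratified(dose_cfu: float,
                         subtypes: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (risk using one averaged r, risk averaged over subtypes).

    subtypes: list of (population fraction, r) pairs; fractions should sum to 1.
    """
    mean_r = sum(fraction * r for fraction, r in subtypes)
    pooled = illness_probability(dose_cfu, mean_r)
    stratified = sum(fraction * illness_probability(dose_cfu, r)
                     for fraction, r in subtypes)
    return pooled, stratified


if __name__ == "__main__":
    # Hypothetical population: 90% low-virulence and 10% high-virulence strains.
    subtypes = [(0.9, 1e-6), (0.1, 1e-2)]
    pooled, stratified = pooled_vs_stratified(1000.0, subtypes)
    print(f"pooled r: {pooled:.3f}  stratified: {stratified:.3f}")
```

With these placeholder values the pooled parameter overestimates the population-level risk, while at the same time it underestimates the risk from exposure to the high-virulence subtype alone, which is the kind of subpopulation error described above.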

In recent years, studies have applied Bayesian methods and dynamic modeling to MRA, significantly improving the accuracy and reliability of risk predictions by integrating multi-source data, quantifying uncertainties, and simulating complex scenarios. For example, Sun et al. developed a Bayesian network (BN) model for risk assessment of Listeria in bulk cooked meats in China, which incorporated production, retail, and consumption stages. By using Markov chain Monte Carlo (MCMC) simulation to integrate literature data, expert opinions, and monitoring data, the model quantified risks at each stage and identified retail cross-contamination as a key risk factor through scenario analysis32. Garre et al. used BN combined with bootstrap and Monte Carlo (MC) simulations to assess risks associated with microbial contamination of leafy greens irrigated with reclaimed water in southeastern Spain. The study found that microbial concentrations at wastewater treatment plant outlets had a relatively minor impact on the probability of E. coli concentrations on plants exceeding 2 log CFU/g (a common threshold). Conversely, when organic amendments were used as fertilizers, soil-to-plant contamination via splashing dominated the likelihood of exceeding the threshold33. Additionally, hierarchical Bayesian models provide a reliable approach to quantify different sources of variation within datasets and are increasingly being used to quantitatively estimate contributions of different factors to uncertainty34. For example, Karamcheti et al.35 used hierarchical Bayesian linear mixed models to estimate the variability of Listeria thermal inactivation parameters. The parameters estimated for different Listeria species may be applicable to processing aqueous foods (e.g., milk) and liquid products (e.g., sauces and gravies) within a temperature range of 55–70 °C and a pH range of 3–8.
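The idea of combining expert opinion with monitoring data can be shown with the simplest Bayesian building block, a conjugate Beta-Binomial update of contamination prevalence. This is far simpler than the Bayesian networks and MCMC schemes in the studies above and is offered only as a sketch; the prior parameters and sample counts are hypothetical.

```python
import random
from typing import Tuple


def update_prevalence(prior_a: float, prior_b: float,
                      positives: int, n_samples: int) -> Tuple[float, float]:
    """Posterior Beta(a, b) after observing positives out of n_samples."""
    return prior_a + positives, prior_b + (n_samples - positives)


def posterior_summary(a: float, b: float, n_draws: int = 20_000,
                      seed: int = 1) -> Tuple[float, float, float]:
    """Exact posterior mean plus a Monte Carlo 95% credible interval."""
    rng = random.Random(seed)
    draws = sorted(rng.betavariate(a, b) for _ in range(n_draws))
    mean = a / (a + b)
    lower = draws[int(0.025 * n_draws)]
    upper = draws[int(0.975 * n_draws) - 1]
    return mean, lower, upper


if __name__ == "__main__":
    # Hypothetical prior from expert opinion: about 5% prevalence, weakly held.
    prior_a, prior_b = 2.0, 38.0
    # Hypothetical monitoring data: 6 positives in 100 retail samples.
    a, b = update_prevalence(prior_a, prior_b, positives=6, n_samples=100)
    mean, lower, upper = posterior_summary(a, b)
    print(f"posterior mean {mean:.3f}, 95% interval ({lower:.3f}, {upper:.3f})")
```

The width of the credible interval is a direct, quantitative statement of the remaining uncertainty, and it narrows as more monitoring data are added.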

In predictive microbial modeling, dynamic models integrate primary and secondary models to predict bacterial growth under changing environmental conditions (such as temperature fluctuations), as opposed to the two-step approach using traditional primary and secondary models. Although mathematically and computationally more complex, they are more efficient and effective for developing accurate predictive models, significantly reducing the uncertainty of prediction models36. For example, Park et al. constructed dynamic predictive models for the growth of Salmonella and Staphylococcus aureus in fresh egg yolks, demonstrating good applicability to omelets and S. aureus food isolates37. Jia et al. developed a dynamic model using a one-step fitting method to predict the growth of L. monocytogenes in pasteurized milk under temperature fluctuations during storage and temperature abuse. The study found that the estimated minimum growth temperature and maximum cell concentration were 0.6 ± 0.2 °C and 7.8 ± 0.1 log cfu/mL, respectively. The model and related kinetic parameters were validated using data collected under dynamic and isothermal conditions (not used in model development), with validation results showing that the predictive model was highly accurate, with a relatively small root-mean-square error (RMSE). The developed model can be used to predict the growth of L. monocytogenes in contaminated milk during storage38.
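The one-step idea of coupling a primary and a secondary model can be sketched as a small simulation. The example assumes a lag-free logistic primary model and a Ratkowsky square-root secondary model, which may differ from the model forms used in the cited studies. The minimum growth temperature (0.6 °C) and maximum concentration (7.8 log CFU/mL) are the values quoted above; the coefficient `B_COEFF`, the initial load, and the temperature profile are hypothetical.

```python
import math
from typing import Callable, List, Tuple

T_MIN_C = 0.6       # minimum growth temperature quoted in the text (°C)
Y_MAX_LOG10 = 7.8   # maximum concentration quoted in the text (log CFU/mL)
B_COEFF = 0.02      # hypothetical Ratkowsky coefficient, sqrt(1/h) per °C


def mu_max(temp_c: float) -> float:
    """Secondary model: specific growth rate (1/h, natural-log scale)."""
    if temp_c <= T_MIN_C:
        return 0.0
    return (B_COEFF * (temp_c - T_MIN_C)) ** 2


def simulate_growth(y0_log10: float, temp_profile: Callable[[float], float],
                    total_h: float, dt_h: float = 0.05) -> List[Tuple[float, float]]:
    """Euler integration of logistic growth under a time-varying temperature."""
    y = y0_log10
    trajectory = [(0.0, y)]
    n_steps = int(round(total_h / dt_h))
    for step in range(n_steps):
        t = step * dt_h
        mu = mu_max(temp_profile(t))
        # Primary model on the log10 scale with a logistic brake near Y_MAX_LOG10.
        dy = mu / math.log(10.0) * (1.0 - 10.0 ** (y - Y_MAX_LOG10)) * dt_h
        y = min(y + dy, Y_MAX_LOG10)
        trajectory.append(((step + 1) * dt_h, y))
    return trajectory


def temperature_abuse(t_h: float) -> float:
    """Hypothetical profile: 24 h refrigerated at 4 °C, then 24 h at 25 °C."""
    return 4.0 if t_h < 24.0 else 25.0


if __name__ == "__main__":
    final_t, final_y = simulate_growth(2.0, temperature_abuse, 48.0)[-1]
    print(f"after {final_t:.0f} h: {final_y:.2f} log CFU/mL")
```

Because the growth rate is re-evaluated at every time step, the same code handles isothermal storage and fluctuating profiles without separate fitting stages.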

Applying dynamic modeling and Bayesian methods to predictive modeling, exposure assessment, and other areas can effectively reduce uncertainties and variability in the MRA process, further enhancing the accuracy of predictive outcomes.

Enhancing the accuracy of dose–response evaluations

With advancements in omics technologies, we can now delve deeper into the mechanisms underlying host–pathogen interactions4. For example, by analyzing the adhesion and invasion capabilities of foodborne pathogens on the host’s esophageal epithelial cells, alongside data on the host’s immune responses, it becomes possible to map dose–response relationships with greater accuracy. Accounting for variability in the subjects’ sensitivity is crucial in dose–response assessments. For instance, Mageiros et al.39 used genome-wide association studies to investigate the risk factors associated with E. coli. This study identified potential complex nonlinear relationships between disease-associated risk factors and phenotypes and ranked the isolates based on their ability to predict sources, such as invasive disease versus carriers. However, the practical application of omics data to dose–response assessments requires large-scale clinical trials. Additionally, studies have highlighted that current omics technologies still face challenges of insufficient standardization and reproducibility. For instance, experimental designs in omics-based research, such as those involving over-parameterization and ill-defined sampling populations, may introduce biases where false positives outnumber true positives or increase error probabilities. Genomic, transcriptomic, and metabolomic data lack unified metadata standards (e.g., quantification units for gene expression levels, metabolite annotation rules), frequently leading to the “curse of dimensionality” (high-dimensional data causing model overfitting) during cross-platform data integration. Omics studies often suffer from low statistical power due to small sample sizes and limited replicates, making it difficult to distinguish genuine biological signals from random noise40.
For regulatory agencies, applying omics technologies to characterize host-pathogen relationships for improved understanding of virulence and risk remains problematic, exemplified by reductionist tendencies in translating microbial information comprising thousands of genetic loci into risk probability scales within practical policy frameworks41.

Current efforts are focused on leveraging transcriptomics and metabolomics data, combined with functional invasion assays, to gain a more comprehensive understanding of the host’s unique responses to pathogens.

Impact of climate change on microbial distribution

Climate change may lead to alterations in the distribution and activity patterns of certain pathogenic microorganisms, requiring updates and adjustments to the existing risk assessment models to maintain their relevance and accuracy42. Environmental factors play a critical role in modulating the pathogenicity of foodborne pathogens, as unfavorable conditions for pathogen growth can induce diverse survival mechanisms in bacteria. These mechanisms significantly influence the overall prevalence, disease risk, and burden caused by pathogens. Several studies have explored the effects of environmental factors, particularly temperature and precipitation, on the incidence of Salmonella-related foodborne outbreaks. Research has shown that the risk of Salmonella contamination and subsequent infections increases under higher environmental temperatures, especially at or above 30 °C. Similarly, increased precipitation has been associated with a heightened risk of salmonellosis. This is attributed to surface runoff, which elevates the pathogen load in water sources, enabling wider distribution of these pathogens and creating favorable conditions for bacterial growth, such as high water activity43. A recent study found that an increase in temperature was positively correlated with Campylobacter contamination, thereby increasing exposure risk through all transmission routes44. Model predictions indicate that by the year 2100, the average annual temperature in Nordic countries may rise by an average of 4.2 °C, leading to an overall increase in the incidence of Campylobacter infections by nearly 200% by the end of this century45. Froelich et al. noted that warming seawater and projected ongoing climate change have led to sustained increases in Vibrio infections in the United States and globally. In general, infections are seasonal, with most cases traditionally occurring between May and October, although warm temperatures may now persist into autumn46. 
Additionally, because tropical conditions are already warm, projected increases in environmental temperatures from climate change are expected to exacerbate the risk of listeriosis in Africa47.

Thus, when assessing the overall risk posed by foodborne pathogens to human health, it is essential to account for the influence of these environmental changes. Laboratory evolution experiments involving microorganisms may offer valuable insights into adaptive evolutionary trends and dynamics. Exposing microorganisms to different stress conditions, such as high salt concentrations, the presence of heavy metals, preservatives, and temperature fluctuations, and analyzing the changes in the transcriptomes and genomes of resistant strains is a viable approach for understanding these adaptation mechanisms.

Uncertainty in the risks of emerging pathogens

Emerging pathogens may pose unforeseen risks, presenting significant challenges for identifying and evaluating their hazards. Conducting in-depth analyses of representative strains requires a comprehensive understanding of their genomic diversity and population structures, which are crucial for effective hazard identification48. By comparing large-scale genomic data, it is possible to link genome sequences with specific phenotypic traits, thereby identifying the molecular basis of these phenotypes. Characteristics identified through genomic sequences can serve as markers for specific phenotypes, such as virulence, stress tolerance, host specificity, or environmental distribution, once they have been validated by other omics approaches, including transcriptomics and proteomics49. Studies have indicated that the application of WGS to MRA is feasible50. In hazard identification, the primary advantage of WGS lies in enabling more targeted risk assessments by shifting the focus from species/genus levels to strain/subtype levels. These strains/subtypes are characterized by genetic markers or combinations thereof that encode traits increasing the likelihood of persistence throughout the food chain and/or causing severe adverse health effects, leading to high risks of infection or disease51. For hazard characterization, integrating WGS into multi-genic genetic analysis and combining it with phenotypic data holds promise for revising current dose-response models to evaluate more targeted pathogen-human interactions52. In exposure assessment, different subtypes within specific bacterial species often exhibit unique behaviors, such as varying abilities to grow or survive under common conditions in food processing and distribution. In this regard, WGS can be used to predict microbial growth or survival capabilities within hosts or foods, as well as during food processing, storage, and distribution53. 
Risk characterization is also expected to benefit from WGS due to its implementation in prior risk assessment steps.

In the face of unknown bacterial strains or novel species, WGS offers predictive insights, enabling more proactive approaches to hazard assessment54. In the future, these marker sequences will play a pivotal role in refining methods for hazard identification, offering enhanced precision and reliability.

Challenges posed by complex supply chains in a globalized world

The rapid advancement of globalization has led to increasingly complex supply chains for food, water, and other products. This complexity not only heightens the risk of microbial contamination but also complicates MRA. Antibiotic resistance remains a shared challenge for the global food industry, and WGS has demonstrated significant potential in predicting and understanding resistance mechanisms55,56.

Although preliminary insights into the mechanisms of cross-resistance driven by adaptive evolution have been gained, translating genomic data into predictable phenotypic variations and using these insights to forecast pathogens’ virulence behavior remains a critical unresolved issue. Pornsukarom et al.57 utilized single-nucleotide polymorphism and feature frequency profiling analyses to successfully predict the serotypes of Salmonella enterica in 200 samples and revealed the distribution of virulence genes among strains from different environmental sources. The study, leveraging computer-assisted analytical resources, identified sul1, tetR, and tetA as the most prevalent resistance genes within the samples.

Additionally, of the 175 virulence genes identified through WGS, 39 were found to be universally present across all samples. The study further demonstrated a significant correlation between phenotypic resistance and the determinants of resistance predicted by WGS, with sensitivity and specificity reaching 87.61% and 97.13%, respectively. These advancements highlight how the application of advanced genomic technologies is gradually enhancing our ability to predict and understand microbial resistance risks in the face of challenges posed by the complexity of the globalized supply chain. This progress holds critical implications for global food safety and public health.
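For readers less familiar with these metrics, the sketch below shows how sensitivity and specificity are computed when WGS-predicted resistance is compared against phenotypic susceptibility testing. The counts in the usage example are hypothetical and are not the data behind the percentages reported above.

```python
from typing import Tuple


def sensitivity_specificity(tp: int, fp: int, tn: int, fn: int) -> Tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts.

    tp: resistant by phenotype and predicted resistant by WGS
    fn: resistant by phenotype but predicted susceptible
    tn: susceptible by phenotype and predicted susceptible
    fp: susceptible by phenotype but predicted resistant
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one resistant and one susceptible result")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


if __name__ == "__main__":
    # Hypothetical isolate-by-antibiotic comparisons.
    sens, spec = sensitivity_specificity(tp=90, fp=5, tn=95, fn=10)
    print(f"sensitivity {sens:.2%}, specificity {spec:.2%}")
```

A lower sensitivity than specificity, as in the study above, means that missed resistance (false negatives) is the more common type of disagreement, which matters when genomic predictions feed into a risk assessment.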

Impact of advances in detection technology on food-related MRA

With technological advancements, detection methods have undergone multiple iterations, evolving from traditional culture-based techniques to modern molecular biology approaches. Each technological innovation has profoundly influenced food-related MRA. The impact of developments in detection technology on MRA is dual-faceted, encompassing both benefits and challenges. On the positive side, technological updates have significantly enhanced the sensitivity and specificity of detection while reducing the detection time, thereby enabling more rapid and accurate risk assessments50. However, the introduction of new technologies has also brought challenges, including increased demands for data processing, higher costs, and the need for specialized expertise—issues that must be addressed to advance food-related MRA.

Enhancing the reliability and completeness of risk assessments

The rapid advancement of microbial detection technologies has significantly improved the reliability and completeness of food-related MRA. Advanced techniques, such as gene probes and PCR, provide high sensitivity and specificity, ensuring the accuracy of evaluations. Moreover, rapid detection enables timely responses to food safety issues, effectively reducing the associated risks58. Standardization and traceability further enhance the reliability of assessments. The widespread application of these technologies allows risk assessments to encompass a broader range of microbial species and toxins, achieving quantitative evaluations and real-time monitoring. These advancements not only enhance the precision of risk assessments but also provide robust support for developing scientifically sound preventive measures, thereby safeguarding food safety.

Accelerating the speed of risk assessments

The continuous emergence of novel testing equipment, combined with the application of technologies such as AI and machine learning59, has made the detection process increasingly automated and intelligent60. This significantly enhances the speed and efficiency of testing, reducing the time gap between the production and consumption of food. Faster risk assessments enable the timely identification and resolution of food safety issues, allowing for more rapid implementation of the necessary control measures to minimize the impact of contaminated food on consumers’ health. With the advancement of Internet of Things (IoT) technology, future food microbiological detection systems are expected to achieve real-time online monitoring. This means that every stage of the food production process can be continuously monitored. If microbial contamination is detected, the system will immediately issue an alert, enabling prompt action to prevent food safety incidents.

Enhancing the comprehensiveness of risk assessments

With the increasing diversity of food sources, every stage of the food supply chain—including production, processing, and transportation—has the potential to become a source of microbial contamination. Advances in detection technologies have enabled food safety testing systems to target a wider range of contamination sources, thus providing a more comprehensive evaluation of the microbial risks in food. This holistic approach to risk assessment aids in maintaining the overall quality and safety of food products. Modern detection technologies generate vast amounts of high-precision, multidimensional data from various sources, including molecular assays, mass spectrometry, spectroscopy, immunological tests, and optical phenotyping61. Concurrently, numerous databases have accumulated extensive information that is relevant to food safety and risk assessments. The integration of such multimodal data is emerging as a pivotal direction in evaluating microbial risks in food. For example, risk assessors can leverage databases such as EnteroBase, NCBI (National Center for Biotechnology Information), and NMDC (National Microbiology Data Center) to construct traceability networks and dynamic early-warning systems, thereby enhancing the scientific validity and timeliness of risk assessments.

By applying traditional statistical methods and machine learning models to datasets derived from diverse detection technologies (e.g., PCR, mass spectrometry, spectroscopy, and biosensors), hidden patterns can be uncovered, and risk trends can be assessed62. This integration further enhances the accuracy of risk assessments.

However, integrating datasets generated by different technologies remains highly challenging. First, data integration requires multidisciplinary research spanning microbiology, statistics, and computer science, but the shortage of interdisciplinary professionals restricts data integration and analysis53. Second, data types produced by different technologies (e.g., sequencing, mass spectrometry, sensors) vary significantly (e.g., nucleotide sequences, mass-to-charge ratio spectra, electrical signals), and the lack of data standardization leads to difficulties in cross-platform data integration63. Third, different institutions focus on distinct stages: farms/raw material suppliers prioritize production sources (e.g., microbial monitoring of soil, water, and livestock breeding), food manufacturers/processors concentrate on processing stages (e.g., cleanliness of production equipment, microbial counts in processing environments, effectiveness testing of sterilization processes), and market regulatory agencies/testing institutions emphasize end markets (e.g., sampling of microbial overages in retail products, risk warnings at the consumption stage). The lack of communication between these institutions creates data silos, which not only limits data integration but also makes it difficult to trace the transmission pathways of microbial contamination risks across multiple stages, preventing risk assessments from covering the entire “farm-to-table” chain64.

In response, global initiatives like the Global Alliance for Genomics and Health (GA4GH) have proposed standardized metadata schemas and cross-platform interface protocols to facilitate efficient and interoperable microbial data sharing worldwide65. In terms of methodology, the standardization of sequencing workflows, including unified quality control criteria and data preprocessing steps for Illumina platforms, has been widely adopted by regulatory agencies, including the U.S. FDA’s Whole Genome Sequencing Network. These standardized practices have significantly reduced uncertainty in data analysis and improved the consistency and reliability of risk assessment models.

Enhancing early warning systems and dynamic prediction methods for food safety risks

As food safety standards improve and regulatory oversight strengthens, the advancement of predictive microbiology enables us to forecast microbial growth and inactivation without direct microbial testing. This has significant implications for MRA in food safety, healthcare, and other related fields. The development and application of predictive modeling software provide convenient platforms for quickly evaluating the impacts of environmental and food composition variables on microbial growth. These models can be adjusted on the basis of environmental factors such as temperature, humidity, and pH to rapidly assess the microbial risks under varying conditions. Machine learning algorithms, including decision trees, random forests, support vector machines, and deep learning models, can uncover hidden patterns and associations from large-scale datasets. By building machine learning-based predictive models, it becomes possible to evaluate risk trends under different conditions, thereby strengthening early warning systems for food safety risks66. For rapidly changing potential risks, traditional statistical methods and basic machine learning algorithms may fall short in capturing dynamic characteristics, especially when the risks exhibit strong time-dependence and nonlinear variations. Tools such as Bayesian networks and dynamic causal graphs can be introduced to utilize continuously updated real-time data, predict the temporal evolution of risks, capture dynamic features, and assess potential future risks67,68.
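To illustrate how such a model responds to environmental inputs, the following minimal sketch uses a square-root (Ratkowsky-type) secondary model, a common form in predictive microbiology, to convert a temperature history into a predicted change in log10 counts. It is an illustration only and is not taken from any software cited here; the function names and the parameter values (b, t_min, the maximum density) are hypothetical placeholders rather than fitted values for any organism or food.

```python
import math


def sqrt_model_mu_max(temp_c, b=0.023, t_min=-1.2):
    """Square-root secondary model: sqrt(mu_max) = b * (T - T_min).

    b and t_min are illustrative placeholders, not fitted parameters.
    Returns the specific growth rate in 1/h (zero at or below t_min).
    """
    if temp_c <= t_min:
        return 0.0
    return (b * (temp_c - t_min)) ** 2


def predict_log10_count(log10_n0, temps_c, dt_h=1.0, log10_n_max=9.0):
    """Step through a temperature profile (one reading per dt_h hours),
    assuming exponential growth with no lag phase, capped at log10_n_max."""
    log10_n = log10_n0
    for temp in temps_c:
        mu = sqrt_model_mu_max(temp)
        log10_n = min(log10_n_max, log10_n + mu * dt_h / math.log(10))
    return log10_n


# Hypothetical scenarios: an intact cold chain versus 12 h of temperature abuse.
cold_chain = [4.0] * 24
abuse = [4.0] * 12 + [12.0] * 12
print(predict_log10_count(2.0, cold_chain))
print(predict_log10_count(2.0, abuse))
```

Comparing the two printed values shows how a temperature excursion shifts the predicted count without any additional microbial testing, which is the kind of scenario evaluation that predictive modeling platforms are intended to support.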

Several industries and regulatory agencies have begun integrating dynamic modeling and real-time data analytics into microbial risk management systems to enhance early warning capabilities. For example, the U.S. FDA has developed the GenomeTrakr genomic database, which is used not only for pathogen source tracking but also, in conjunction with temporal and spatial data, for predicting the dynamics of outbreak risks69. Recent efforts have also explored the use of Dynamic Bayesian Networks (DBNs) linked with real-time environmental monitoring data to estimate the probability of outbreaks involving key foodborne pathogens under high-risk conditions70. Beyond regulatory-led initiatives, some food companies have deployed sensor- and IoT-based systems to capture real-time data on temperature, humidity, and product history. These data feed into machine learning models that provide predictive shelf-life and risk alerts for high-risk products such as fresh meat and seafood71. These applications demonstrate that dynamic prediction models can improve the timeliness of risk detection and support proactive interventions and tiered risk management.
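The idea of updating a risk estimate as monitoring data arrive can be sketched with the simplest dynamic Bayesian network, a two-state hidden Markov model in which the hidden state is "high risk" or "low risk" and the observation is whether an environmental sensor raised an alarm. This is a toy example and not the model of the cited studies; all transition and alarm probabilities are assumed values chosen for illustration.

```python
def forward_filter(prior_high, observations,
                   p_stay_high=0.8, p_become_high=0.1,
                   p_alarm_given_high=0.7, p_alarm_given_low=0.1):
    """Recursive Bayesian filtering for a two-state hidden Markov model.

    observations: iterable of booleans (True = sensor alarm in that period).
    Returns the probability of the high-risk state after each observation.
    All default probabilities are hypothetical.
    """
    belief = prior_high
    history = []
    for alarm in observations:
        # Prediction step: propagate the belief through the transition model.
        predicted = belief * p_stay_high + (1.0 - belief) * p_become_high
        # Update step: weight each state by the likelihood of the observation.
        like_high = p_alarm_given_high if alarm else 1.0 - p_alarm_given_high
        like_low = p_alarm_given_low if alarm else 1.0 - p_alarm_given_low
        numerator = like_high * predicted
        belief = numerator / (numerator + like_low * (1.0 - predicted))
        history.append(belief)
    return history


# Hypothetical sequence: two quiet periods followed by three alarms.
print(forward_filter(0.1, [False, False, True, True, True]))
```

Each new reading revises the estimated probability of the high-risk state, so an alert threshold can be applied to the running belief rather than to a single measurement.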

Advancing the internationalization of risk assessment

Although there is currently a lack of unified international standards and regulations for food safety detection technologies, continuous advancements in technology and the strengthening of international exchanges are fostering closer collaboration among nations in food safety testing. By learning from and adopting the advanced technologies and experiences of other countries, China can enhance its own MRA capabilities and gradually promote the internationalization of food safety detection technologies. With the acceleration of globalization, international cooperation and exchange are becoming increasingly critical in MRA. In this process, professional organizations such as the World Health Organization (WHO), Food and Agriculture Organization of the United Nations (FAO), and Codex Alimentarius Commission (CAC) play critical roles. For example, WHO’s Global Food Safety Strategy 2022–2030, launched in 2023, aims to coordinate national efforts in microbiological risk monitoring and support low-income countries in enhancing their testing technical capabilities72. The FAO primarily focuses on microbial risk prevention and control along agricultural industry chains, integrating microbial data from farm to table through the Global Environment Monitoring System for Food (GEMS/Food) to facilitate cross-regional contamination tracing and risk early-warning collaboration73. Additionally, the series of monographs on MRA published by the Joint Expert Meeting on Microbiological Risk Assessment (JEMRA) provides scientific risk assessments for selected pathogens and develops guidelines and expert recommendations for MRA74. As an international standard-setting body established jointly by FAO and WHO, the CAC has primarily developed frameworks for MRA and promoted standard mutual recognition and trade coordination, dedicating itself to establishing globally unified food quality and safety guidelines75.

By sharing experiences and exchanging technologies with international counterparts, countries can collectively tackle the challenges of microbial contamination in food and improve global food safety standards.

Developing personalized risk assessments

As the scope of risk assessment broadens and deepens, technological advancements now enable MRAs tailored to individual-specific conditions, such as genetic predispositions and lifestyle habits, yielding more precise risk predictions. As consumers’ demands diversify, personalized risk assessments are emerging as a new trend in microbial risk evaluation. Leveraging novel technologies, MRA plans can be customized to individual consumers according to their health status, dietary habits, and other personal factors, so that food safety recommendations meet the needs of each consumer.

For example, while the U.S. National Institutes of Health (NIH) Nutrition for Precision Health does not directly target food safety, its approach to developing disease prediction models by integrating individual genomic, microbiome, and environmental exposure data (e.g., diet, living environment) can be adapted to MRA. NIH’s “All of Us” Program, for instance, has collected over 500,000 samples, and its data-sharing platform supports cross-disciplinary researchers in building personalized food risk models76. Additionally, the EU’s Food4Me project aims to leverage current understanding of food, genes, and physical characteristics to tailor healthier diets for each individual77.

Challenges brought by the development of detection technologies for food-related MRA

The iteration of new technologies may disrupt traditional food microbial detection methods, leading to the gradual phasing out of some conventional techniques. This shift could result in the loss of expertise and technical know-how, as well as the increased cost of training personnel. As detection technologies continue to evolve, it is necessary to regularly update food safety standards and detection methodologies. This constant change may lead to confusion and uncertainty, especially when new technologies are not yet widely adopted or validated. The research and application of new technologies require significant investment in terms of both time and funds, potentially increasing the costs for food production enterprises and testing institutions. Moreover, new technologies demand continuous maintenance and updates to ensure their sustained effectiveness and accuracy.

Emerging technologies such as artificial intelligence (AI) and big data analytics face several practical barriers to widespread adoption, including concerns over data privacy, limited algorithm transparency, and regulatory acceptance78. For instance, the European Union’s General Data Protection Regulation (GDPR) imposes strict limitations on the sharing and use of genomic data, which hampers cross-regional data integration and the development of predictive risk models65. In the United States, the FDA maintains stringent regulatory frameworks for whole genome sequencing (WGS), requiring that laboratories follow specific protocols for data submission and analysis, thereby raising the threshold and cost of implementation79. To address these challenges, future efforts should emphasize interdisciplinary collaboration, establish clear policy guidance on data usage boundaries, and improve the interpretability of algorithms. Additionally, the development of standardized procedures and closer coordination between regulatory bodies and industry stakeholders can help reduce uncertainty and barriers in technology deployment, ultimately enhancing the role of innovative tools in food safety risk assessment.

Notably, advancements in automation, AI, and cloud-based tools offer solutions to this challenge80. For example, the centrifugal LabTube platform proposed by Hoehl et al. enables integrated automated DNA purification, amplification, and detection without operator training, reducing processing time from approximately 50 min to 3 min. This platform not only exhibits high sensitivity and specificity but also significantly reduces labor and time costs81. AI has gradually become a key technology in automated quality control processes for food inspection, ensuring food safety and quality while lowering costs. For instance, Nithya et al. developed a computer vision system for mango defect detection based on deep convolutional neural networks, which automatically detects and grades appearance defects (e.g., spots, discoloration, or surface damage) in mangoes with an accuracy rate of 98%, drastically reducing production costs82. In recent years, cloud computing technologies have rapidly evolved, offering advantages such as reduced operational costs, server consolidation, flexible system configuration, and elastic resource provisioning. Yeng et al. proposed a cloud-based digital farm management system (FMIS) that employs a cloud framework alongside quick response (QR) codes and radio-frequency identification (RFID) technology to efficiently collect field planting data and enable precise tracking and traceability of production information, effectively lowering labor costs83.

These cases demonstrate that implementing automation, AI, and cloud-based tools can effectively improve food detection technologies and reduce associated costs. Additionally, government agencies can support enterprises through policy initiatives to lower the implementation costs of automation, AI, and cloud technologies, further promoting the adoption of new technologies and reducing production and detection costs for food companies and testing firms.

Future prospects

Transition from passive and on-demand assessments to proactive and real-time risk evaluation

With the continuous advancement of detection technologies, MRA is shifting from traditional passive and on-demand models to more proactive and real-time evaluation approaches. This transformation enhances the effectiveness of preventing and controlling microbial hazards in food. Future research should focus on advancing the miniaturization and intelligent integration of real-time monitoring tools, such as the development of nano-biosensors that can be embedded in food processing equipment to enable online quantitative detection of pathogenic microorganisms. The continuous improvement of evaluation models and methodologies has promoted the application of modular modeling frameworks and Bayesian networks (BN), making quantitative assessments more accurate and systematic. By integrating predictive microbiology approaches, the estimation of both the quantity and occurrence probability of microbial hazards can be further refined. Therefore, future efforts should concentrate on the multi-scale coupling of BN and predictive microbiology. This shift from reactive responses to proactive prevention significantly improves the efficiency of food safety management and reduces the occurrence of food safety incidents caused by microbial contamination.

Zhiteneva et al. applied BN for QMRA of a non-membrane indirect potable water reuse system. The study constructed a BN in Netica, integrating data such as pathogen removal rates, concentrations, and dose-response models across treatment steps for probabilistic simulation. Through forward and backward inference, sensitivity analysis, and scenario analysis, critical control points (CCPs) were identified. Results showed that the disease burden for all pathogens met standards at the 95th percentile, with Cryptosporidium posing the highest risk. SMARTplus and UV treatments were identified as CCPs, and additionally, the BN provided intuitive results for decision-making84. The modernization of MRA, supported by advanced detection technologies and scientific methodologies, not only enhances the level of food safety management but also provides a robust foundation for the sustainable development of the food industry.
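The quantities combined in such an assessment (source concentration, removal across treatment steps, and a dose-response model) can be illustrated with a plain Monte Carlo propagation. The sketch below is not a Bayesian network and does not reproduce the Netica model of the cited study; it uses the standard exponential dose-response form, and every distribution and parameter value is an assumption made for illustration.

```python
import math
import random


def simulate_infection_risk(n_iter=10000, seed=1, extra_lrv=0.0):
    """Monte Carlo sketch of per-exposure infection risk after two barriers.

    All distributions and the dose-response parameter r are hypothetical.
    extra_lrv adds a fixed log10 removal to test an additional treatment step.
    """
    rng = random.Random(seed)
    r = 0.05          # exponential dose-response parameter (assumed)
    volume_l = 1.0    # volume consumed per exposure, in litres (assumed)
    risks = []
    for _ in range(n_iter):
        log10_conc = rng.gauss(2.0, 0.5)   # log10 organisms per litre at source
        lrv_step1 = rng.uniform(2.0, 3.0)  # log10 removal, first barrier
        lrv_step2 = rng.uniform(3.0, 4.0)  # log10 removal, second barrier
        log10_out = log10_conc - lrv_step1 - lrv_step2 - extra_lrv
        dose = (10.0 ** log10_out) * volume_l
        # Exponential dose-response: P(infection) = 1 - exp(-r * dose).
        risks.append(-math.expm1(-r * dose))
    risks.sort()
    p95_index = min(n_iter - 1, int(0.95 * n_iter))
    return {"mean": sum(risks) / n_iter, "p95": risks[p95_index]}


baseline = simulate_infection_risk()
with_extra_step = simulate_infection_risk(extra_lrv=1.0)
print(baseline, with_extra_step)
```

Comparing the baseline with the run that adds one log10 of removal is a crude analogue of the scenario analysis used to identify which treatment steps act as critical control points.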

The increasing role of systems biology and multi-omics in risk assessments

The integration of systems biology and multi-omics technologies, such as genomics, transcriptomics, and proteomics, will provide more comprehensive data support for MRA. These advancements enable researchers to better understand the interactions and adaptability of microorganisms in complex environments. For instance, genomics can reveal genetic information and evolutionary history, transcriptomics can uncover gene expression patterns under varying environmental conditions, and proteomics can detail protein expression levels in these environments. This integration not only enhances the accuracy of MRAs but also provides new insights and methodologies for the treatment and prevention of microbial diseases.

Njage et al. conducted a QMRA of L. monocytogenes in drinking fermented milk based on WGS data, developing a novel risk assessment method grounded in strain genetic heterogeneity. First, a finite mixture model was employed to characterize the phenotypic heterogeneity of L. monocytogenes under acid stress, quantifying the proportions of subpopulations and their growth parameter profiles. Next, using a pan-genomic similarity matrix constructed from 7343 genes, six machine learning algorithms, including RF and support vector machine with recursive feature elimination (SVMR), were applied to build subpopulation classification models after removing near-zero-variance genes and addressing class imbalance via upsampling. The results showed that RF achieved prediction accuracies of 91–97% for cold/salt/dry stress, while SVMR reached 89% accuracy for acid stress. When applied to predict 201 strains, the model revealed that 57% of acid stress subpopulations were tolerant, and 99% were cold stress tolerant. Using fermented milk as a case study for integrating an exposure assessment model, the expected number of cases among transplant recipients was 790 cases per million people when the ratio of acid-tolerant to sensitive subpopulations was 1:1, whereas the traditional homogeneous growth parameter model overestimated the risk at 803 cases. Scenario analysis confirmed a significant positive correlation between bacterial concentration growth during storage and the number of cases as the proportion of tolerant bacteria increased from 0% to 75%. This study demonstrates that ignoring intra-species phenotypic heterogeneity can lead to risk overestimation, and the established “WGS-phenotypic subpopulation-exposure dose-risk quantification” framework provides a new paradigm for precision risk assessment of foodborne pathogens85.
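The effect of subpopulation heterogeneity on a risk estimate can be shown with a deliberately simplified calculation in which tolerant and sensitive cells grow by different amounts during storage and the resulting dose feeds an exponential dose-response model. This sketch is not the model of the cited study; the homogeneous baseline simply assumes every cell behaves like the tolerant subpopulation, and all parameter values are hypothetical.

```python
import math


def expected_dose(frac_tolerant, log10_n0=1.0, growth_tolerant=3.0,
                  growth_sensitive=1.0, serving_g=100.0):
    """Expected dose (CFU per serving) for a mix of two subpopulations.

    growth_* are log10 increases during storage for each subpopulation.
    All values are illustrative assumptions.
    """
    n0_per_g = 10.0 ** log10_n0
    per_gram = n0_per_g * (frac_tolerant * 10.0 ** growth_tolerant
                           + (1.0 - frac_tolerant) * 10.0 ** growth_sensitive)
    return per_gram * serving_g


def risk_per_serving(dose, r=1e-10):
    """Exponential dose-response with a hypothetical parameter r."""
    return -math.expm1(-r * dose)


# Heterogeneous population (1:1 mix) versus a homogeneous all-tolerant assumption.
mixed = risk_per_serving(expected_dose(0.5))
homogeneous = risk_per_serving(expected_dose(1.0))
print(mixed, homogeneous)

# Scenario analysis: risk as the tolerant fraction rises from 0% to 75%.
for frac in (0.0, 0.25, 0.5, 0.75):
    print(frac, risk_per_serving(expected_dose(frac)))
```

Under these assumptions the homogeneous model yields the higher estimate, and risk rises with the tolerant fraction, which mirrors the qualitative pattern described above.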

Accordingly, it is necessary to establish core omics databases for highly pathogenic microorganisms, enabling systematic integration of key virulence factors, resistance mechanisms, and environmental adaptation traits. These databases will provide essential data support and a decision-making foundation for the accurate identification and dynamic risk assessment of high-risk microbial hazards.

Novel detection technologies integrated with AI to transform risk assessments

In the field of food-related MRA, the integration of AI with emerging detection technologies has seen significant progress and innovation. AI, particularly machine learning and deep learning, is becoming a critical factor driving the microbial detection industry toward faster and more intelligent solutions. These advancements improve sensitivity, accuracy, and efficiency while addressing the growing demand for detection in the food safety sector. AI applications, such as image analysis, interpretation of mass spectrometry data, and comprehensive genomic sequence analysis, show immense potential for real-time monitoring and identification of microbial hazards at every stage of food production, transportation, and consumption. Microbial data come from diverse sources (such as genome sequencing, environmental monitoring, and clinical records), arrive in inconsistent formats (structured and unstructured), and suffer from noise and missing values. To address these problems, we recommend a combined workflow of AI, machine learning, and manual confirmation. The first two are mainly used for data format standardization and evidence grading, while manual confirmation is mainly used to judge data authenticity and to perform higher-order data grading, compensating for the limitations of machine learning. Beyond detection, AI can permeate the entire risk assessment process, integrating with other technologies such as blockchain and the IoT to further enhance precision and efficiency.

Yi et al.86 developed an AI-driven detection system integrating phage biosensing and deep learning, enabling rapid and highly sensitive identification of pathogens in liquid foods and agricultural water. The core of the system lies in leveraging the specific infectivity of T7 bacteriophages to target pathogens (e.g., E. coli), inducing their lysis and release of DNA. After lysed cells are labeled with SYBR Green fluorescent dye, high-resolution fluorescence microscopy imaging is performed at excitation/emission wavelengths of 480 ± 30 nm/535 ± 40 nm to capture characteristic lysis signals. Sample pretreatment using selective media (e.g., EC broth) effectively suppresses the growth of non-target bacteria to reduce interference. The acquired microscopy image data are input into a deep learning model based on the Faster R-CNN architecture for analysis. This model was trained on a constructed laboratory dataset that includes not only single-bacterium lysis images of E. coli at different concentrations but also images of multiple common non-target bacteria (e.g., L. innocua, Bacillus subtilis, Pseudomonas fluorescens), with model generalization enhanced via data augmentation techniques (random flipping, rotation, brightness/contrast adjustment). By learning to distinguish specific fluorescent signal patterns generated by phage lysis from various background noises, the model achieves automatic identification and enumeration of target bacteria. Experimental results show that the system completes detection in just 5.5 h for real-world samples including coconut water, spinach washing water, and irrigation water, demonstrating excellent detection capability for E. coli at concentrations as low as 10 CFU/mL. In liquid food samples, the model achieved prediction accuracies of 95–100%; even in highly noisy irrigation water samples, combined with selective enrichment and multi-species training strategies, the accuracy reached 80%. Its sensitivity significantly outperformed traditional plate counting and real-time quantitative PCR, providing an automated, high-efficiency, and high-precision solution for food and agricultural water safety monitoring.

Therefore, future research in the field of “AI + MRA” should aim to develop highly interpretable model structures to ensure traceability within risk assessment systems. At the same time, efforts should be made to promote cross-platform generalizability evaluation and standardization of AI models, thereby clarifying the application boundaries and performance criteria of different models across various nodes of the food supply chain.

Enhancing the role of MRA in global food safety management

Emerging advanced microbial detection technologies are enhancing our capacity for data acquisition and analysis, facilitating the establishment of global data-sharing platforms and the harmonization of cross-national standards. These global data-sharing platforms play a pivotal role in MRA and global food safety management. The International Food Safety Authorities Network (INFOSAN), a global emergency response network established jointly by the WHO and FAO, serves as a core entity for cross-regional microbial risk data sharing, facilitating activities such as transnational pathogen tracing and harmonization of risk assessment model parameters across borders87. The Guidelines for Microbiological Risk Assessment in Foods, jointly published by FAO and WHO, provide critical guidance for unifying international assessment standards and optimizing risk management. Additionally, the guidelines advocate the application of molecular biology techniques (e.g., gene sequencing), AI (risk prediction models), and rapid detection methods to enhance the efficiency and accuracy of assessments88. EFSA has developed multiple MRA models for various foodborne pathogens, including L. monocytogenes and Salmonella89. In 2019, the EFSA Panel on Biological Hazards (EFSA BIOHAZ Panel) comprehensively explored the applications of WGS and metagenomics in foodborne pathogen outbreak investigations, source attribution, and risk assessment. These efforts are fundamental for cross-border outbreak investigations and the development of internationally standardized risk assessments for foodborne microbes90.

These advancements bolster international cooperation and knowledge sharing, improving the scientific rigor and consistency of risk assessments. Additionally, these technological advancements influence the transformation of global trade regulations, promoting adjustments in multilateral trade systems and digital trade rules to accommodate new trade formats while ensuring fair competition. Amid challenges such as geopolitical tensions and supply chain restructuring, these technologies provide essential support for optimizing supply chains, ensuring food safety, and stabilizing trade. In the future, it is also necessary to build internationally shared microbial risk databases and standardized metadata interface systems, in order to enhance the mutual recognition and coordination of food safety assessment results among countries. The integration and innovation of microbial detection technologies, advancement of standardization, deepening of international collaboration, and refinement of policies and regulations will collectively promote the development of global food safety governance toward a more scientific, coordinated, and sustainable direction.

Advocating for proactive research strategies to address the negative impacts of developments in detection technology on risk assessment

Detection technologies are the front end for data acquisition and a prerequisite for advancing risk assessment. Addressing the adverse effects of iterative microbial detection technologies is crucial for driving the development of risk assessment methods. Proactive research strategies are important from multiple perspectives. First, from the standpoint of advancing risk assessment, early research ensures that risk assessment methodologies are updated in tandem with the latest detection technologies, enhancing predictive capabilities and fostering interdisciplinary integration to drive methodological progress. Second, from a food safety perspective, proactive strategies improve the accuracy and reliability of detection, reduce food safety incidents, and ensure the uniformity of regulations and standards to protect consumers’ health and stabilize food supply chains. Lastly, from a resource-saving perspective, developing strategies in advance optimizes resource allocation, reduces redundancy, and lowers operational costs while enhancing risk assessments’ efficiency to minimize the long-term economic and social costs.

Meanwhile, efforts to drive risk assessment through advances in detection technology face multiple challenges, including: (1) Regulatory delays: Novel detection technologies (e.g., nanomaterials-based sensors, CRISPR-Cas detection platforms) require complex standardization and validation processes from research and development to regulatory approval. Additionally, detection technologies remain inconsistent across countries. For example, developed countries have advanced the application of WGS in microbial testing, while many developing countries still rely on traditional culture methods, which severely hinders transnational data sharing and collaborative risk assessment. (2) Data complexity: Data generated by novel detection technologies (e.g., sequencing, mass spectrometry, sensors) exhibit structural heterogeneity, and unified standards for cross-platform integration remain lacking. (3) Economic burdens: Emerging technologies (e.g., next-generation sequencing, AI-driven monitoring systems) demand substantial investment in equipment and maintenance, posing significant financial barriers for small and medium-sized enterprises and some developing countries. Furthermore, technological iteration in detection may lead to considerable resource waste. To address these challenges, strategies such as establishing multi-regional collaborative frameworks, developing cross-platform data integration tools, and promoting shared access to detection equipment may be effective.

Nevertheless, by improving predictive and response capabilities, promoting interdisciplinary integration, enhancing the accuracy of detection, optimizing resource allocation, ensuring regulatory updates, and strengthening management systems’ resilience, these strategies will bolster the scientific rigor and reliability of risk assessments, providing a solid foundation for ensuring food safety and fostering its sustainable development.

Data availability

No datasets were generated or analysed during the current study.

References

Abebe, E., Gugsa, G. & Ahmed, M. Review on major food-borne zoonotic bacterial pathogens. J. Trop. Med. 2020, 4674235 (2020).

Van der Fels-Klerx, H. J. et al. Framework for evaluation of food safety in the circular food system. NPJ Sci. Food 8, 36 (2024).

Stathas, L., Aspridou, Z. & Koutsoumanis, K. Quantitative microbial risk assessment of Salmonella in fresh chicken patties. Food Res. Int. 178, 113960 (2024).

Faust, K. & Raes, J. Microbial interactions: from networks to models. Nat. Rev. Microbiol. 10, 538–550 (2012).

Knight, R. et al. Best practices for analysing microbiomes. Nat. Rev. Microbiol. 16, 410–422 (2018).

Jasson, V. et al. Alternative microbial methods: an overview and selection criteria. Food Microbiol. 27, 710–730 (2010).

Swaminathan, B. & Feng, P. Rapid detection of food-borne pathogenic bacteria. Annu. Rev. Microbiol. 48, 401–427 (1994).

Mandal, P. K. et al. Methods for rapid detection of foodborne pathogens: an overview. Am. J. Food Technol. 6, 87–102 (2011).

Awang, M. S. et al. Advancement in Salmonella detection methods: from conventional to electrochemical-based sensing detection. Biosensors 11, 346 (2021).

Bosch, A. et al. Foodborne viruses: detection, risk assessment, and control options in food processing. Int. J. Food Microbiol. 285, 110–128 (2018).

Sauer, S. & Kliem, M. Mass spectrometry tools for the classification and identification of bacteria. Nat. Rev. Microbiol. 8, 74–82 (2010).

Metzker, M. L. Sequencing technologies—the next generation. Nat. Rev. Genet. 11, 31–46 (2010).

Justino, C. I. L. et al. Recent developments in recognition elements for chemical sensors and biosensors. Trends Anal. Chem. 68, 2–17 (2015).

Aladhadh, M. A review of modern methods for the detection of foodborne pathogens. Microorganisms 11, 1111 (2023).

Membré, J.-M. & Guillou, S. Latest developments in foodborne pathogen risk assessment. Curr. Opin. Food Sci. 8, 120–126 (2016).

Koutsoumanis, K. et al. Application of quantitative microbiological risk assessment (QMRA) to food spoilage: principles and methodology. Trends Food Sci. Technol. 114, 189–197 (2021).

Jackson, B. R. et al. Implementation of nationwide real-time whole-genome sequencing to enhance listeriosis outbreak detection and investigation. Clin. Infect. Dis. 63, 380–386 (2016).

Timme, R. E. et al. Gen-FS coordinated proficiency test data for genomic foodborne pathogen surveillance, 2017 and 2018 exercises. Sci. Data 7, 402 (2020).

Mather, A. E. et al. Foodborne bacterial pathogens: genome-based approaches for enduring and emerging threats in a complex and changing world. Nat. Rev. Microbiol. 22, 543–555 (2024).

Tsoumtsa Meda, L. et al. Using GWAS and machine learning to identify and predict genetic variants associated with foodborne bacteria phenotypic traits. Methods Mol. Biol. 2852, 223–253 (2025).

Karanth, S. et al. Machine learning to predict foodborne salmonellosis outbreaks based on genome characteristics and meteorological trends. Curr. Res. Food Sci. 6, 100525 (2023).

Yang, Q. et al. Bacterial genome-wide association studies: exploring the genetic variation underlying bacterial phenotypes. Appl. Environ. Microbiol. 0, e02512–02524 (2025).

Cuénod, A. et al. Bacterial genome-wide association study substantiates papGII of Escherichia coli as a major risk factor for urosepsis. Genome Med. 15, 89 (2023).

Lees, J. et al. Improved prediction of bacterial genotype-phenotype associations using interpretable pangenome-spanning regressions. mBio 11, 4 (2020).

Sheppard, S. K. et al. Genome-wide association study identifies vitamin B5 biosynthesis as a host specificity factor in Campylobacter. Proc. Natl. Acad. Sci. USA 110, 11923–11927 (2013).

Lees, J. A. et al. Sequence element enrichment analysis to determine the genetic basis of bacterial phenotypes. Nat. Commun. 7, 12797 (2016).

Chewapreecha, C. et al. Comprehensive identification of single nucleotide polymorphisms associated with beta-lactam resistance within pneumococcal mosaic genes. PLoS Genet. 10, e1004547 (2014).

Petit, R. A. 3rd et al. Dissecting vancomycin-intermediate resistance in Staphylococcus aureus using genome-wide association. Genome Biol. Evol. 6, 1174–1185 (2014).

Allende, A., Bover-Cid, S. & Fernández, P. S. Challenges and opportunities related to the use of innovative modelling approaches and tools for microbiological food safety management. Curr. Opin. Food Sci. 45, 100839 (2022).

Nauta, M. J. Modelling bacterial growth in quantitative microbiological risk assessment: is it possible?. Int. J. Food Microbiol. 73, 297–304 (2002).

Franz, E. & van Bruggen, A. H. Ecology of E. coli O157:H7 and Salmonella enterica in the primary vegetable production chain. Crit. Rev. Microbiol. 34, 143–161 (2008).

Sun, W. et al. Quantitative risk assessment of Listeria monocytogenes in bulk cooked meat from production to consumption in China: a Bayesian approach. J. Sci. Food Agric. 99, 2931–2938 (2019).

Garre, A. et al. The use of Bayesian networks and bootstrap to evaluate risks linked to the microbial contamination of leafy greens irrigated with reclaimed water in Southeast Spain. Microb. Risk Anal. 22, 100234 (2022).

Garre, A., Zwietering, M. H. & den Besten, H. M. W. Multilevel modelling as a tool to include variability and uncertainty in quantitative microbiology and risk assessment. Thermal inactivation of Listeria monocytogenes as proof of concept. Food Res. Int. 137, 109374 (2020).

Karamcheti, S. T. et al. Hierarchical Bayesian linear mixed model to estimate variability in the thermal inactivation parameters for Listeria species. Food Microbiol. 128, 104731 (2025).

Li, M., Huang, L. & Yuan, Q. Growth and survival of Salmonella Paratyphi A in roasted marinated chicken during refrigerated storage: Effect of temperature abuse and computer simulation for cold chain management. Food Control 74, 17–24 (2017).

Park, J. H. et al. A dynamic predictive model for the growth of Salmonella spp. and Staphylococcus aureus in fresh egg yolk and scenario-based risk estimation. Food Control 118, 107421 (2020).

Jia, Z. et al. Dynamic kinetic analysis of growth of Listeria monocytogenes in pasteurized cow milk. J. Dairy Sci. 104, 2654–2667 (2021).

Mageiros, L. et al. Genome evolution and the emergence of pathogenicity in avian Escherichia coli. Nat. Commun. 12, 765 (2021).

Haddad, N. et al. Next generation microbiological risk assessment—potential of omics data for hazard characterisation. Int. J. Food Microbiol. 287, 28–39 (2018).

Pielaat, A. et al. First step in using molecular data for microbial food safety risk assessment; hazard identification of Escherichia coli O157:H7 by coupling genomic data with in vitro adherence to human epithelial cells. Int. J. Food Microbiol. 213, 130–138 (2015).

Awad, D. A., Masoud, H. A. & Hamad, A. Climate changes and food-borne pathogens: the impact on human health and mitigation strategy. Clim. Change 177, 92 (2024).

Jiang, C. et al. Climate change, extreme events and increased risk of salmonellosis in Maryland, USA: evidence for coastal vulnerability. Environ. Int. 83, 58–62 (2015).

Austhof, E. et al. Exploring the association of weather variability on Campylobacter—a systematic review. Environ. Res. 252, 118796 (2024).

Kuhn, K. G. et al. Campylobacter infections expected to increase due to climate change in Northern Europe. Sci. Rep. 10, 13874 (2020).

Froelich, B. A. & Daines, D. A. In hot water: effects of climate change on Vibrio–human interactions. Environ. Microbiol. 22, 4101–4111 (2020).

Sibanda, T. et al. Listeria monocytogenes at the food–human interface: a review of risk factors influencing transmission and consumer exposure in Africa. Int. J. Food Sci. Technol. 58, 4114–4126 (2023).

Goodwin, S., McPherson, J. D. & McCombie, W. R. Coming of age: ten years of next-generation sequencing technologies. Nat. Rev. Genet. 17, 333–351 (2016).

Bao, H. et al. Genome-wide whole blood microRNAome and transcriptome analyses reveal miRNA-mRNA regulated host response to foodborne pathogen Salmonella infection in swine. Sci. Rep. 5, 12620 (2015).

Rantsiou, K. et al. Next generation microbiological risk assessment: opportunities of whole genome sequencing (WGS) for foodborne pathogen surveillance, source tracking and risk assessment. Int. J. Food Microbiol. 287, 3–9 (2018).

Wright, A., Ginn, A. & Luo, Z. Molecular tools for monitoring and source-tracking Salmonella in wildlife and the environment. in Food Safety Risks from Wildlife: Challenges in Agriculture, Conservation, and Public Health (eds Jay-Russell, M. & Doyle, M. P.) 131–150 (Springer International Publishing, 2016).

Pielaat, A. et al. Phenotypic behavior of 35 Salmonella enterica serovars compared to epidemiological and genomic data. Procedia Food Sci. 7, 53–58 (2016).

Fritsch, L. et al. Insights from genome-wide approaches to identify variants associated to phenotypes at pan-genome scale: application to L. monocytogenes’ ability to grow in cold conditions. Int. J. Food Microbiol. 291, 181–188 (2019).

Imanian, B. et al. The power, potential, benefits, and challenges of implementing high-throughput sequencing in food safety systems. NPJ Sci. Food 6, 35 (2022).

Donaghy, J. A. et al. Big data impacting dynamic food safety risk management in the food chain. Front. Microbiol. 12, 668196 (2021).

Punina, N. V. et al. Whole-genome sequencing targets drug-resistant bacterial infections. Hum. Genomics 9, 19 (2015).

Pornsukarom, S., van Vliet, A. H. M. & Thakur, S. Whole genome sequencing analysis of multiple Salmonella serovars provides insights into phylogenetic relatedness, antimicrobial resistance, and virulence markers across humans, food animals and agriculture environmental sources. BMC Genomics 19, 801 (2018).

Agrimonti, C. et al. Application of real-time PCR (qPCR) for characterization of microbial populations and type of milk in dairy food products. Crit. Rev. Food Sci. Nutr. 59, 423–442 (2019).

Eze, J. et al. Machine learning-based optimal temperature management model for safety and quality control of perishable food supply chain. Sci. Rep. 14, 27228 (2024).

Chhetri, K. B. Applications of artificial intelligence and machine learning in food quality control and safety assessment. Food Eng. Rev. 16, 1–21 (2024).

Stahlschmidt, S. R., Ulfenborg, B. & Synnergren, J. Multimodal deep learning for biomedical data fusion: a review. Brief. Bioinforma. 23, 569 (2022).

Umesha, S. & Manukumar, H. M. Advanced molecular diagnostic techniques for detection of food-borne pathogens: current applications and future challenges. Crit. Rev. Food Sci. Nutr. 58, 84–104 (2018).

Hamilton, K. A. et al. Research gaps and priorities for quantitative microbial risk assessment (QMRA). Risk Anal. 44, 2521–2536 (2024).

Gilsenan, M. B. et al. Open risk assessment: data. EFSA J. 14, e00509 (2016).

GA4GH. GDPR Brief: Can Genomic Data Be Anonymised? (Global Alliance for Genomics and Health, 2020).

Zhou, Z. et al. Machine learning assisted biosensing technology: an emerging powerful tool for improving the intelligence of food safety detection. Curr. Res. Food Sci. 8, 100679 (2024).

Ginsberg, J. et al. Detecting influenza epidemics using search engine query data. Nature 457, 1012–1014 (2009).

Imbens, G. W. & Rubin, D. B. Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction (Cambridge University Press, 2015).

Timme, R. E. et al. GenomeTrakr proficiency testing for foodborne pathogen surveillance: an exercise from 2015. Microb. Genomics 4, 185 (2018).

Beaudequin, D. et al. Beyond QMRA: modelling microbial health risk as a complex system using Bayesian networks. Environ. Int. 80, 8–18 (2015).

Tutul, M. J. I., Alam, M. & Wadud, M. A. H. Smart food monitoring system based on IoT and machine learning. IEEE 6, 1–6 (2023).

WHO. WHO Global Strategy For Food Safety 2022-2030: Towards Stronger Food Safety Systems And Global Cooperation (World Health Organization, 2022).

Héraud, F., Barraj, L. M. & Moy, G. G. GEMS/food consumption cluster diets. in Total Diet Studies (eds Moy, G. G. & Vannoort, R. W.) 427–434 (Springer, New York, 2013).

LeJeune, J. T. et al. FAO/WHO joint expert meeting on microbiological risk assessment (JEMRA): twenty years of international microbiological risk assessment. Foods 10, 1873 (2021).

Randall, A., Miyagishima, K. & Maskeliunas, J. Codex Alimentarius Commission: protecting food today and in the future. Food Nutr. Agric. 18–23 (1998).

Qi, L. Nutrition for precision health: the time is now. Obesity 30, 1335–1344 (2022).

Celis-Morales, C. et al. Effect of personalized nutrition on health-related behaviour change: evidence from the Food4Me European randomized controlled trial. Int. J. Epidemiol. 46, 578–588 (2017).

Bonomi, L., Huang, Y. & Ohno-Machado, L. Privacy challenges and research opportunities for genomic data sharing. Nat. Genet. 52, 646–654 (2020).

Amézquita, A. et al. The benefits and barriers of whole-genome sequencing for pathogen source tracking: a food industry perspective. Food Safety Magazine (2020).

Hassoun, A. et al. Food quality 4.0: From traditional approaches to digitalized automated analysis. J. Food Eng. 337, 111216 (2023).

Hoehl, M. M. et al. Centrifugal LabTube platform for fully automated DNA purification and LAMP amplification based on an integrated, low-cost heating system. Biomed. Microdevices 16, 375–385 (2014).

Nithya, R. et al. Computer vision system for mango fruit defect detection using deep convolutional neural network. Foods 11, 3483 (2022).

Yang, F. et al. A cloud-based digital farm management system for vegetable production process management and quality traceability. Sustainability 10, 4007 (2018).

Zhiteneva, V. et al. Quantitative microbial risk assessment of a non-membrane based indirect potable water reuse system using Bayesian networks. Sci. Total Environ. 780, 146462 (2021).

Njage, P. M. K. et al. Quantitative microbial risk assessment based on whole genome sequencing data: case of Listeria monocytogenes. Microorganisms 8, 1772 (2020).

Yi, J. et al. AI-enabled biosensing for rapid pathogen detection: from liquid food to agricultural water. Water Res. 242, 120258 (2023).

Savelli, C. J. et al. The FAO/WHO international food safety authorities network in review, 2004–2018: learning from the past and looking to the future. Foodborne Pathog. Dis. 16, 480–488 (2019).

WHO. Microbiological Risk Assessment–Guidance for Food (World Health Organization, 2021).

Allende, A. et al. Listeria monocytogenes contamination of ready-to-eat foods and the risk for human health in the EU. EFSA J. 16, e05134 (2018).

Allende, A. et al. Whole genome sequencing and metagenomics for outbreak investigation, source attribution and risk assessment of food-borne microorganisms. EFSA J. 17, e05898 (2019).

Acknowledgements

This study was supported by grants from the Intergovernmental International Science and Technology Innovation Cooperation Key Project of China’s National Key R&D Program (Grant No. 2024YFE0102600) and the Shanghai Municipal Health System Key Discipline Construction Project (Grant No. 2024ZDXK0063). We thank the South Hospital of the Sixth People’s Hospital affiliated with Shanghai Jiaotong University for providing laboratory and policy support for this study.

Author information

Contributions

L.L.X. and Q.L.D. conceived the study. Z.L. and X.D. coordinated the study. L.L.X., Z.L., and X.D. analyzed the data and wrote the manuscript. Y.T.L., Z.Z.L., Y.L.L., and Q.L.D. revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xiao, L., Li, Z., Dou, X. et al. Advancing microbial risk assessment: perspectives from the evolution of detection technologies. npj Sci Food 9, 157 (2025). https://doi.org/10.1038/s41538-025-00527-3
