Abstract

Accurate, non-destructive classification of winter jujube maturity is critical for quality control and intelligent harvesting. This study proposes a dual-stream attention-fused residual network (DSAF-ResNet) combining hyperspectral and GLCM-based texture features at the feature level. The multimodal fusion significantly improved classification performance, with ResNet34 achieving 92.27% test accuracy under fused inputs. The DSAF-ResNet, integrating RepVGGBlock, SimAM attention, and a dual-stream architecture, achieved 98.61% training accuracy and 97.24% test accuracy, with 97.31% precision and 97.24% recall. Ablation experiments confirmed the contribution of each module. DSAF-ResNet demonstrated excellent generalization, stability, and robustness in distinguishing subtle maturity differences, even under class imbalance. This work provides an effective, scalable framework for non-destructive fruit maturity classification, advancing intelligent agricultural practices and supporting precision agriculture applications.

Similar content being viewed by others

Introduction

Winter jujube is a high-value fruit crop known for its distinctive flavor, rich nutritional composition, and considerable economic importance1,2. Accurate assessment of its maturity stage is critical in both agricultural production and postharvest processing, as it directly influences fruit quality, shelf life, and market value3,4. Traditionally, maturity evaluation relies heavily on the subjective visual inspection by experienced workers5. However, this manual approach is time-consuming, labor-intensive, and inherently inconsistent, often yielding inaccurate and non-objective results—especially when distinguishing between subtle differences in maturity stages. Although chemical analytical methods, such as measuring sugar content or acidity, can provide more precise results, they are inherently destructive and require sample extraction and laboratory procedures, making them unsuitable for real-time quality control during field operations or postharvest sorting6,7,8.

In recent years, with the rapid development of computer vision and machine learning technologies, non-destructive methods for fruit maturity classification have gained increasing attention in agricultural research9. Specifically for winter jujube, a number of studies have explored image-based maturity classification approaches. For example, Xu Zheng10 developed a multi-layer feature fusion ResNet18 model (MLFF-ResNet18) based on 986 winter jujube images, achieving an accuracy of 91.1%, which surpassed VGG16 and standard ResNet18 by 5.8 and 2.6%, respectively. Similarly, ref. 11 proposed a ShuffleNetV2-5×5-SRM-[3,6,3] model using manually segmented Lingwu jujube images, reaching an accuracy of 90.56%. These results underscore the potential of deep learning methods in capturing surface-level maturity cues of jujubes. Apart from RGB image-based methods, hyperspectral imaging has emerged as a powerful tool for fruit maturity assessment due to its capacity to capture both external morphology and internal physicochemical properties12,13,14. Although many existing studies focus on other fruit species, such as kiwifruit15, oil palm16, and plums17, their findings offer valuable references. In the case of jujube, Cao et al. 1 and Liu et al. 6 employed hyperspectral techniques to assess soluble solids content (SSC) and maturity levels, suggesting the applicability of spectral data in jujube quality evaluation. Furthermore, recent studies have started incorporating image texture features, such as those extracted by the gray-level co-occurrence matrix (GLCM), to reflect surface characteristics associated with maturity. Sunandar et al. 18 applied GLCM combined with K-NN to classify banana ripeness with 90% accuracy, while ref. 19 demonstrated that combining GLCM with color and shape descriptors improves multi-fruit classification accuracy using SVM. Despite these advancements, most existing winter jujube maturity classification models are either limited to single-modality data—relying solely on RGB images or hyperspectral spectra—or lack effective strategies to fuse and leverage multimodal information. As noted by ref. 16, unimodal approaches often suffer from reduced generalizability and robustness under real-world conditions, particularly when dealing with subtle maturity stage transitions. This highlights a clear research gap: the need for a robust multimodal fusion framework that integrates both spectral and texture features to achieve more accurate and generalizable maturity classification for winter jujube.

To address these challenges, this study proposes a novel deep learning-based framework for winter jujube maturity classification. The proposed DSAF-ResNet integrates hyperspectral data and image texture features at the feature level to fully leverage their respective advantages. By combining both modalities, the model provides a more comprehensive representation of maturity-related attributes. Additionally, the architecture incorporates several innovative components—including RepVGGBlocks20, the SimAM attention mechanism21, and a dual-stream structure—to enhance feature extraction and classification performance. This study aims not only to improve classification accuracy but also to strengthen generalization capability, training stability, and robustness against class imbalance and subtle inter-class differences. The proposed method contributes to the development of intelligent and precise agricultural systems, offering reliable technical support for agricultural modernization.

Results

Comparison of model performance under different input modalities

In order to systematically evaluate the impact of different input modalities on the jujube maturity classification task, this study employs texture features (GLCM), spectral features, and a fusion of both feature types as inputs to six representative classification models. These models include traditional machine learning algorithms (SVM and MLP) and typical convolutional neural networks (AlexNet, EfficientNet, VGG16, and ResNet34). A comprehensive comparative analysis is conducted from multiple perspectives, including training and testing accuracy, precision, recall, and other evaluation metrics.

When only GLCM texture features are used as input, the overall classification performance of all models is relatively limited, indicating that the single modality of texture information has certain shortcomings in distinguishing jujube maturity. Among these, ResNet34 performs the best under this modality, achieving a training accuracy of 88.87%, a testing accuracy of 81.22%, and both precision and recall at 81.22%, demonstrating good convergence and generalization capabilities. In contrast, EfficientNet achieved high precision (96.31%) and recall (96.24%) on the training set, but its testing accuracy was only 79.01%, suggesting that the model may have overfitted the GLCM features during training and has limited adaptability to unseen samples. AlexNet and VGG16 also did not demonstrate strong competitiveness under this input condition, with testing accuracies both falling below 80%. SVM and MLP showed the most average performance, especially SVM, which achieved only 76.24% accuracy on the test set, indicating a certain bottleneck in handling high-dimensional texture statistical features. The experimental results of the models are presented in Table 1.

After using spectral features as the sole input, the classification performance of all models showed significant improvement. Compared to GLCM texture features, spectral information can more intuitively and comprehensively reflect the physical structure and chemical composition of the jujube peel and its internal tissues. As a result, it exhibits stronger feature expression capability when distinguishing between different maturity levels. This advantage is especially evident in deep neural networks. ResNet34 achieved the best performance with an accuracy of 86.74% on the test set, while precision and recall reached 89.07% and 86.74%, respectively, demonstrating excellent generalization ability and robustness. VGG16 followed closely with a test accuracy of 85.64%, precision of 87.14%, and recall of 85.64%. This marks a significant improvement compared to its performance with texture features, indicating the superiority of deep networks in processing continuous spectral information. AlexNet also achieved an accuracy of 85.08%, with both precision and recall exceeding 85%, showing similar substantial progress. Traditional models, such as SVM and MLP, also benefited from the high information density of spectral features, with test accuracy increasing to 84.53 and 82.87%, respectively. Notably, SVM achieved precision and recall rates over 84%, further confirming the empowering effect of spectral input on traditional shallow models. Overall, the single spectral feature input is capable of achieving high-level classification performance, further validating its core value in winter jujube maturity classification. The experimental results of each model with spectral feature input are shown in Table 2.

To further explore the complementarity between spectral and texture features, this study combines both features at the feature level as model input, resulting in a significant improvement in classification performance. Among all models, ResNet34 performs the best under the fused input, with training accuracy rising to 94.44% and test accuracy reaching 92.27%. Additionally, precision and recall also show high levels, indicating that the model not only excels in feature representation but also effectively integrates heterogeneous information to build more discriminative decision boundaries. EfficientNet also performs strongly under this input modality, achieving a test accuracy of 90.61%, which is superior to the single spectral input condition. Traditional models, such as SVM and MLP, also reached a test accuracy of 86.74% with feature fusion input, demonstrating the effective enhancement of the fusion strategy for non-deep models. Both AlexNet and VGG16 benefit from the fusion strategy, with their test accuracies increasing to 87.29 and 88.40%, respectively. Overall, the feature fusion strategy significantly enhances the model’s ability to discriminate between samples at different maturity levels, making it a key factor in improving classification accuracy in this study. The experimental results of each model with feature fusion input are shown in Table 3.

Comparative analysis across the three input modalities demonstrates that deep neural networks possess distinct advantages in processing multimodal information. Notably, when GLCM texture features and spectral data are integrated at the feature level as input, the classification performance of all evaluated models improves significantly. This highlights the effectiveness of multimodal fusion in capturing complementary information relevant to fruit maturity. Consequently, this study adopts the fused spectral-texture features as the final input strategy for model development. The confusion matrix of ResNet34 under this fused input condition is presented in Fig. 1, while Fig. 2 illustrates the accuracy and loss convergence trends during training and testing, further validating the performance benefits of feature-level fusion.

a Training and test accuracy of ResNet34 using fused spectral-texture features. b Training and test loss of ResNet34 using fused spectral-texture features. The blue line represents the accuracy and loss of the training set, respectively, and the red line represents the accuracy and loss of the test set, respectively.

To further examine the impact of network depth on classification performance, we conducted additional experiments using deeper ResNet architectures--ResNet50 and ResNet101--across the three input modalities.The results are summarized in Table 4.

While ResNet50 and ResNet101 are theoretically capable of learning more complex and hierarchical feature representations due to their increased depth, their performance in this study did not surpass that of ResNet34 across any input condition. Specifically, under fused spectral-texture features--the most informative modality--ResNet34 achieved the highest test accuracy (92.27%), outperforming both ResNet50 (91.71%) and ResNet101 (89.50%). Notably, although ResNet50 showed stronger fitting ability on the training set, it underperformed on the test set when compared to ResNet34, suggesting potential overfitting. This observation can be attributed to two primary factors. First, the size of the dataset(900 samples) may not be sufficient to fully exploit the representational capacity of deeper architectures like ResNet101, leading to suboptimal generalization. Second, the highly structured nature of spectral and GLCM-derived texture features might not necessitate extremely deep networks for effective pattern extraction. In contrast, ResNet34 provides a better balance between capacity and generalization, making it more suitable for the multimodal maturity classification task in this study.

Comparison of the performance between DSAF-ResNet and standard ResNet34

To further enhance the model’s capability in representing fused features and improving classification accuracy, this study proposes an improved architecture based on ResNet34, termed DSAF-ResNet. The proposed model integrates a dual-stream attention fusion (DSAF) mechanism designed to strengthen the extraction and integration of multimodal spectral and texture features. This section compares the performance of the baseline ResNet34 and the proposed DSAF-ResNet under identical feature-level fusion input conditions. Evaluation metrics include training and test accuracy, precision, and recall, to validate the effectiveness of the architectural improvements. The comparative results are summarized in Table 5.

When using GLCM texture features only, DSAF-ResNet achieved a training accuracy of 88.73% and a test accuracy of 83.43%, outperforming ResNet34, which yielded a lower test accuracy of 81.22%. This indicates that the attention-guided dual-stream structure enhances the model’s capacity to extract more informative patterns from relatively low-level texture inputs. Under the spectral input modality, DSAF-ResNet again demonstrated superior performance, reaching a training accuracy of 93.74% and a test accuracy of 91.16%, with precision and recall both exceeding 91%. In contrast, ResNet34 attained a test accuracy of 86.74%, highlighting the enhanced spectral feature learning capability conferred by the RepVGGBlock and SimAM modules. The most remarkable gains were observed under fused spectral-texture input, where DSAF-ResNet attained a training accuracy of 98.61% and perfect training precision and recall (100.00%). On the test set, the model achieved an accuracy of 97.24%, precision of 97.31%, and recall of 97.24%, significantly surpassing ResNet34, which reached 92.27% accuracy under the same input. These results confirm that DSAF-ResNet not only fits the training data well but also generalizes effectively to unseen samples, owing to its ability to exploit complementary multimodal information through dynamic fusion mechanisms. Overall, across all input modalities, DSAF-ResNet consistently outperformed the standard ResNet34, demonstrating higher accuracy, better precision-recall balance, and stronger robustness. This confirms that the integration of RepVGGBlocks, SimAM attention, and a dual-stream architecture significantly enhances the model’s representation learning ability.

Figure 3 illustrates the convergence behavior of DSAF-ResNet in terms of accuracy and loss during training. Compared to ResNet34, DSAF-ResNet not only converged more rapidly but also maintained a lower loss, indicating improved training stability and reduced overfitting risk. Figure 4 presents the confusion matrices of both models using fused input, Fig. 5 shows the comparison of accuracy between ResNet34 and DSAF-ResNet on the training set and test set, visually supporting the numerical comparison and demonstrating the superior fine-grained classification ability of the proposed model.

a Training and test accuracy of DSAF-ResNet using fused spectral-texture features. b Training and test loss of DSAF-ResNet using fused spectral-texture features. The blue line represents the accuracy and loss of the training set, respectively, and the red line represents the accuracy and loss of the test set, respectively.

The blue solid line represents the accuracy of ResNet34 on the training set under three different inputs, the blue dashed line represents the accuracy of ResNet34 on the test set under three different inputs, the red solid line represents the accuracy of DSAF-ResNet on the training set under three different inputs, and the red dashed line represents the accuracy of DSAF-ResNet on the test set under three different inputs.

To address the practical applicability of the proposed and comparative models in real-world deployment scenarios, especially in the context of portable field devices or edge-based fruit sorting systems, this study further evaluates each model’s computational efficiency. As shown in Table 6, we report the parameter count and inference time per sample alongside the classification accuracy.

Compared with several baseline deep learning models, the proposed DSAF-ResNet achieves the highest test accuracy (97.24%), while maintaining a relatively compact architecture with only 2.06 M parameters and an inference time of 0.18 s per sample. This efficiency is comparable to standard ResNet34 (2.13 M, 0.15 s), but with significantly improved accuracy. Notably, EfficientNet, while lightweight (0.40 M), underperforms with an accuracy of 90.61%, illustrating a trade-off between compactness and accuracy. AlexNet and VGG16, despite higher parameter sizes (5.70 M and 13.43 M, respectively), fail to achieve competitive accuracy or inference speed. Traditional models like SVM and MLP exhibit minimal computational cost, but their accuracy stagnates at 86.74%, indicating limited scalability in real scenarios.

Moreover, previously proposed networks specifically designed for Lingwu jujube classification, such as ShuffleNetV2-5×5-SRM-[3,6,3], rely solely on RGB image inputs, which limits their ability to capture internal fruit characteristics and results in relatively slow processing speeds. Specifically, the model requires an inference time of 93.03 s per sample, making it unsuitable for real-time or embedded applications. In contrast, the proposed DSAF-ResNet achieves superior classification accuracy while maintaining a favorable balance between model complexity and computational efficiency, with an inference time of only 0.18 s per sample. This advantage significantly enhances its practicality for deployment in embedded systems or mobile platforms for fruit maturity detection. These findings highlight the critical need to design architectures that not only ensure high predictive performance but also meet the real-time processing demands of resource-constrained agricultural environments.

Ablation study on the DSAF-ResNet model

To rigorously assess the contribution of each module integrated into the proposed DSAF-ResNet architecture, a comprehensive ablation study was conducted under the condition of fused input features. As shown in Table 7, the baseline ResNet34 model already demonstrated solid performance, achieving a training accuracy of 94.44% and a testing accuracy of 92.27%, with corresponding precision and recall values of 92.80 and 92.27%, respectively.

Introducing the RepVGGBlock into the ResNet34 backbone led to notable performance improvements, increasing the testing accuracy to 93.37%. This result confirms the effectiveness of the RepVGGBlock in enhancing feature expressiveness and improving the model’s generalization through structural reparameterization during inference. In parallel, replacing standard convolutional operations with SimAM, a lightweight attention mechanism, also yielded consistent gains, achieving identical test accuracy (93.37%) and slightly improved precision and recall. These results validate SimAM’s capability in highlighting salient features without introducing additional trainable parameters.

Further integrating the dual-stream structure with either RepVGGBlock or SimAM significantly enhanced model performance. The combination of ResNet34 + RepVGGBlock + dual-stream achieved a test accuracy of 94.48%, along with precision and recall values approaching 95%, demonstrating that the dual-branch pathway effectively facilitates multi-granularity feature fusion and learning. Notably, while the configuration of ResNet34 + SimAM + dual-stream achieved comparable performance (test accuracy of 93.92%), the overall gains were less pronounced than those achieved with RepVGGBlock, indicating the complementary role of reparameterized convolution in dual-branch contexts.

To further interpret the dynamic behavior of each ablation configuration, Fig. 6a, b presents the test accuracy and loss curves. From Fig. 6a, it is evident that the DSAF-ResNet not only achieves the highest test accuracy but also demonstrates exceptional stability, with minimal fluctuations during the middle and later training stages. The accuracy rapidly increases in early epochs and maintains a consistent performance above 95% from epoch 30 onwards. This indicates superior convergence and learning capability resulting from the synergistic effect of RepVGGBlock, SimAM attention, and the dual-stream structure.

a Test accuracy comparison across ablation models. b Test loss comparison across ablation models. The blue, green, red, cyan, purple, and yellow solid lines, respectively, represent the accuracy and loss rate of DSAF-ResNet, ResNet34 + RepVGGBlock + dual-stream, ResNet34 + RepVGGBlock, ResNet34 + SimAM + dual-stream, ResNet34 + SimAM, and ResNet34 under fused spectral-texture features input test set.

In contrast, the baseline ResNet34 and its variants exhibit relatively slower convergence and lower peak accuracy. For example, the ResNet34 + SimAM configuration shows early instability and lower final accuracy, suggesting that attention mechanisms alone may not suffice without complementary architectural components. The ResNet34 + RepVGGBlock model, while more stable, plateaus at a lower accuracy, highlighting the limited impact of structural reparameterization when used in isolation.

Figure 6b further supports this observation through the test loss curves. The DSAF-ResNet achieves the lowest and smoothest loss descent trajectory, converging to near-zero loss after around 35 epochs. This behavior reflects not only strong optimization but also reduced overfitting and better feature discrimination. Other models, particularly ResNet34 + SimAM, show high volatility in loss, with sharp spikes indicating training instability and poor generalization. The loss curve of ResNet34 + RepVGGBlock is relatively smooth but converges at a higher final loss value than DSAF-ResNet.

Finally, the full DSAF-ResNet model, which integrates RepVGGBlock, SimAM attention, and a dual-stream architecture, exhibited superior classification performance. It reached a training accuracy of 98.61% and a test accuracy of 97.24%, with perfect precision and recall on the training set and 97.31 and 97.24% on the test set, respectively. This significant performance leap not only confirms the necessity of incorporating both structural reparameterization and spatial attention but also highlights the synergistic effect of combining them in a dual-stream framework. The results clearly demonstrate that DSAF-ResNet can more effectively capture fine-grained differences among jujube maturity levels while maintaining strong generalization, thereby validating the robustness and necessity of the proposed architectural innovations.

Discussion

This study proposes a novel framework for winter jujube maturity classification, termed the DSAF-ResNet, aiming to address the challenge of non-destructive, high-precision recognition of subtle maturity variations in fruits. Based on feature-level fusion of hyperspectral and texture information, the proposed model incorporates multiple deep learning architectural innovations that significantly enhance its discriminative power and generalization ability. Through large-scale experiments on 900 samples across four maturity stages and comprehensive model comparisons, the proposed approach achieves state-of-the-art performance in both classification accuracy and training stability.

The experimental results strongly underscore the value of multimodal information integration. When using only GLCM-derived texture features, the classification performance of all tested models was relatively limited, indicating that surface morphology alone is insufficient for accurately distinguishing jujube maturity stages. In contrast, spectral features—owing to their ability to capture internal physicochemical changes such as pigment concentration and moisture content—demonstrated stronger discriminative power. Notably, feature-level fusion of spectral and texture information led to significant performance improvements across all models, validating the effectiveness of multimodal fusion in enhancing the separability of maturity stages and enriching the semantic representation of input features. For example, ResNet34 achieved a test accuracy of 92.27% under fused input, compared to 86.74% with spectral input alone and 81.22% with texture input alone. These findings confirm the synergistic potential of combining complementary data modalities for fruit maturity classification.

In addition to exploring input modalities, this study introduces DSAF-ResNet, a novel deep learning architecture designed specifically to exploit the advantages of multimodal input. The model integrates three key components: (1) the RepVGGBlock, which enhances feature expressiveness via structural reparameterization, enabling more effective learning of complex spatial patterns; (2) the SimAM attention mechanism, which enhances salient feature extraction without introducing additional trainable parameters, thereby maintaining computational efficiency; and (3) a dual-stream architecture that enables parallel extraction and deep fusion of spectral and texture features. Ablation experiments confirmed that each of these modules contributes significantly to performance gains. The complete DSAF-ResNet model achieved a training accuracy of 98.61% and a test accuracy of 97.24%, with corresponding precision and recall values of 97.31 and 97.24%, respectively—representing a 4.97% improvement in accuracy over baseline ResNet34.

Furthermore, DSAF-ResNet demonstrated outstanding training stability and convergence behavior. The model achieved rapid reduction and stabilization of cross-entropy loss during training, with both training and test accuracy curves showing smooth and consistent convergence. These results indicate enhanced learning efficiency and a reduced risk of gradient vanishing. The model also exhibited strong generalization capacity in the presence of class imbalance and subtle inter-class differences. Confusion matrix analysis revealed that DSAF-ResNet substantially outperformed baseline models in differentiating closely related maturity stages, addressing a common challenge in fine-grained fruit classification tasks.

From a practical standpoint, the proposed model demonstrates strong potential for real-world deployment in automated grading systems, industrial sorting lines, and portable detection devices. Its ability to perform non-destructive, high-precision maturity classification supports broader applications in intelligent agriculture and postharvest processing.

Beyond its high classification performance, the DSAF-ResNet framework embodies a scalable and transferable architecture for quality monitoring across various crops. By leveraging the synergy of structural reparameterization, lightweight attention, and multimodal fusion, this framework sets a foundation for expanding to other precision agriculture tasks such as disease detection and quality grading in citrus, grapes, and tomatoes. Future research may further explore the incorporation of dynamic feature selection mechanisms (e.g., attention-guided weighting and channel pruning) to reduce model complexity and inference latency without compromising performance. Additionally, cross-regional, multi-seasonal, and multi-varietal validation experiments are warranted to enhance the robustness and generalizability of the model under diverse environmental and agricultural conditions.

In summary, this study contributes both a novel technical solution and a methodological paradigm for multimodal deep learning in agriculture. By addressing the limitations of unimodal approaches and enhancing the interpretability and scalability of model architectures, DSAF-ResNet provides a valuable reference for developing intelligent visual perception systems, advancing the digital transformation of agricultural production and quality assessment.

Methods

Acquisition of sample images and spectral data

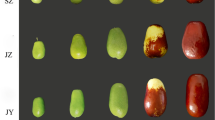

The jujube samples utilized in this study were sourced from Xiawa Town, Zhanhua District, Binzhou City, Shandong Province, China (117.9°E, 37.7°N). This region experiences a warm temperate monsoon climate, characterized by a long-term average annual temperature of ~12 °C and an average annual precipitation of 575.5 mm. The fertile soils, adequate water supply, and optimal soil pH collectively provide favorable agroecological conditions for winter jujube cultivation. Sample collection occurred between September and October 2024. A total of 900 fruits were harvested, classified into four distinct maturity stages (S1–S4) based on the visual maturity indices defined in the Chinese national standard GB/T 32714-2016 (Classification of Winter Jujube), with supplementary confirmation by experienced local jujube growers. Representative samples illustrating each maturity stage are presented in Fig. 7. Postharvest, the jujubes were thoroughly washed, gently blotted dry, and individually stored in zip-lock polyethylene bags under refrigerated conditions (0–5 °C) to maintain freshness and biochemical properties prior to analysis.

The image acquisition of winter jujube samples was conducted under uniform indoor lighting conditions to ensure consistency and high image quality. A Sony ILCE-6400L mirrorless camera, equipped with a Sony E PZ 18–105 mm F4 G OSS lens, was employed for static image capture. The background of the shooting platform was composed of a high-reflectivity matte white PVC board, which enhanced image contrast and facilitated subsequent image segmentation. Each jujube sample was placed on a fixed PVC surface and photographed from a top-down perspective with the camera mounted vertically at a fixed distance of 40 cm. The camera was set to manual mode, with a shutter speed of 1/250 s, aperture set to F/5.6, ISO fixed at 100, and focal length adjusted to 50 mm to balance image sharpness and depth of field. White balance and exposure settings were kept constant throughout the acquisition process to minimize variability across samples. Each winter jujube was imaged from both the front and back sides, resulting in a total of 1800 high-resolution images. All images were saved in lossless PNG format to preserve original visual information. In the preprocessing stage, all images were uniformly cropped and resized to 224 × 224 pixels, followed by pixel intensity normalization to meet the input requirements of deep neural network models.

The spectral data of the jujube samples were acquired using the FIGSPEC FS-13 hyperspectral imaging system, manufactured by Caimatech Co., Ltd. This system covers a spectral range of 390–1000 nm, with a spectral resolution of 2.5 nm and a sampling interval of 2 nm, resulting in a total of 300 spectral bands. During data acquisition, each jujube sample was placed on a stage and imaged in a scanning mode. The hyperspectral camera was positioned vertically at a fixed height of 20 cm above the sample. The system’s enclosed imaging chamber was equipped with eight halogen lamps—four on each side—positioned at a 45° angle to the sample, serving as the sole illumination source to minimize the impact of ambient light on the experimental results. To ensure measurement accuracy, the instrument was preheated for 5 min and calibrated using a white reference panel prior to each imaging session. For spectral acquisition, five sampling points were selected around the “equatorial” region of each jujube. The hyperspectral data for each point were recorded using the FigSpec software provided with the FS-13 system. The spectral readings from the five points were then averaged arithmetically to reduce random error, resulting in a single representative spectral curve for each sample. To improve the accuracy of spectral measurements and enhance the signal-to-noise ratio (SNR), spectral bands with high noise levels at both ends of the spectrum (390–400 nm and 980–1000 nm) were excluded22. As a result, the effective wavelength range used in this study was 400.46–979.62 nm. The spectral curves of all 900 samples are illustrated in Fig. 8.

To ensure a balanced and fair evaluation of model performance, the 900 winter jujube samples were stratified according to the four defined maturity stages (S1–S4). The results of the dataset partition are shown in Table 8. The class distribution was based on the labeling criteria outlined in GB/T 32714-2016, and the final number of samples in each class was: 212 (S1), 201 (S2), 323 (S3), and 164 (S4). Each class was then split into training and test sets using a fixed ratio. Specifically, 169/43 samples were assigned for S1, 161/40 for S2, 258/65 for S3, and 131/33 for S4 in the training/test sets, respectively, resulting in a total of 719 training samples and 181 testing samples. This partitioning was carefully implemented using stratified sampling to maintain the original class distribution.

Extraction of image texture features

To enhance the recognition of surface characteristics associated with jujube maturity, image texture features were extracted using the gray-level co-occurrence matrix (GLCM), a classical and widely used method in statistical texture analysis. GLCM captures the spatial relationship between pixel pairs in an image by analyzing how frequently different combinations of pixel gray levels occur at a specific spatial offset. This method enables the characterization of subtle surface patterns that are often imperceptible through RGB values alone.

In this study, each RGB jujube image was first converted to a grayscale format to facilitate texture analysis. Gray-level co-occurrence matrices (GLCMs) were computed in four principal directions (0°, 45°, 90°, and 135°) with a pixel offset of 1. From each directional GLCM, six statistical texture descriptors were extracted to characterize the surface structure of the winter jujubes: Contrast, which quantifies local intensity variations; Energy (angular second moment), representing texture uniformity; Entropy, indicating the degree of randomness in pixel pair distribution; Homogeneity, measuring the closeness of pixel distributions to the GLCM diagonal; Correlation, which reflects the linear dependency of gray levels in the matrix; and Inverse difference moment (IDM), which evaluates the local homogeneity by penalizing large intensity differences. These features collectively describe both the fine and coarse texture patterns on the jujube surface, which are closely associated with different stages of fruit maturity. The sample texture feature data obtained are shown in Table 9.

For each image, the descriptors computed from the four directions were averaged to obtain a set of direction-invariant texture features. The resulting texture feature vector was then normalized and concatenated with the original RGB image features through a channel-level fusion strategy. Specifically, the one-dimensional texture vector was reshaped and expanded to match the spatial dimensions of the image input and was concatenated with the RGB channels before being fed into the texture stream of the DSAF-ResNet model. This fusion ensured that both global spectral information and fine-grained textural patterns were effectively utilized during training.

DSAF-ResNet (Dual-stream attention-fused residual network) for maturity classification

To fully leverage the spectral and textural information present in winter jujube images and enhance the model’s ability to discriminate fine-grained differences in maturity levels, this study proposes an improved deep learning architecture based on the ResNet34 backbone, termed DSAF-ResNet (dual-stream attention-fused residual network). The model integrates RepVGGBlocks, the SimAM attention mechanism, a dual-branch structure, and a dynamic feature fusion module, collectively enhancing its feature extraction capacity and classification performance. To prevent overfitting, DropBlock regularization is applied, and an early stopping strategy is introduced to halt training once the model’s performance on the validation set ceases to improve. The network structure is shown in Fig. 9. The proposed DSAF-ResNet is an enhanced version of the standard ResNet34 architecture, specifically designed to improve multimodal feature representation and classification performance for winter jujube maturity detection. Compared with the original ResNet34, DSAF-ResNet introduces three key modifications: (1) RepVGGBlocks are adopted to replace standard convolutional layers, enabling structural reparameterization for improved feature expressiveness and faster inference during deployment; (2) a dual-stream structure is constructed to allow separate feature extraction from spectral and texture inputs; (3) a SimAM attention mechanism is embedded into each branch to highlight informative spatial features without adding learnable parameters;

The red-highlighted dashed box components represent the architectural improvements over standard ResNet34, including RepVGGBlocks, SimAM attention, dual-stream feature extraction, and dynamic fusion modules. The blue dotted line box is the input part of the model, and the blue bold dotted line box is the structure of the ResNet34 network model.

To further elaborate on the architecture, DSAF-ResNet consists of two independent feature extraction branches designed for hyperspectral and GLCM-based texture inputs, respectively. Each branch employs RepVGGBlocks to enhance nonlinear feature learning and reduce inference complexity via structural reparameterization. This design addresses the limitations of conventional convolution in representing structured spectral and textural patterns. Following the RepVGG-based layers, SimAM attention modules are incorporated into both branches to reinforce salient spatial features without introducing trainable parameters. SimAM provides lightweight attention that helps highlight maturity-relevant local patterns, such as surface roughness or color transitions, thereby improving discriminative representation. The high-level features from both streams are adaptively fused through a dynamic feature fusion module, which learns sample-specific modality weights. This mechanism allows the model to emphasize more informative cues depending on the context, mitigating issues caused by static or equal-weight fusion strategies. Finally, the fused representation is passed to a residual classifier with DropBlock regularization and early stopping, improving generalization and reducing overfitting. Collectively, the modular design of DSAF-ResNet enables fine-grained maturity discrimination and stable training across multimodal inputs.

Performance evaluation metrics of the model

To comprehensively evaluate the classification performance of the models on the winter jujube maturity task, this study adopts several widely accepted quantitative evaluation metrics, including Accuracy (%), Precision(%), Recall(%), Loss, and the Confusion Matrix23. These metrics provide both global and class-level insights into the model’s discriminative ability, generalization performance, and training stability.

Accuracy serves as the most intuitive indicator of a model’s overall performance. It is defined as the ratio of correctly classified samples (true positives and true negatives) to the total number of samples, calculated as:

where TP (true positives), TN (true negatives), FP (false positives), and FN (false negatives) represent the fundamental classification outcomes. While accuracy provides a broad overview of model effectiveness, it can be insufficient in imbalanced datasets. Hence, Precision and Recall are introduced as complementary metrics.

Precision measures the proportion of correctly predicted positive instances among all instances predicted as positive, reflecting the model’s reliability in identifying a specific class. It is calculated as:

Recall, also known as sensitivity or the true positive rate, quantifies the ability of the model to identify all actual positive samples within a given class, formulated as:

Together, Precision and Recall allow for a more nuanced understanding of classification quality, especially when assessing performance on individual categories of maturity.

Loss is another fundamental metric that quantifies the discrepancy between the predicted class probabilities and the ground truth labels during both training and testing phases. It plays a central role in guiding the optimization process and reflects the model’s learning dynamics and convergence behavior. In this study, cross-entropy loss24 is primarily employed for multi-class classification tasks. It serves as the principal objective function during model training and is defined as:

where n denotes the total number of classes, yi represents the ground truth one-hot encoded label for class i, and \({\hat{y}}_{i}\) is the predicted probability for class i. Cross-entropy loss effectively penalizes the divergence between the predicted and actual class distributions, driving the model toward producing more accurate class probability estimates.

In addition, confusion matrix is constructed for each model under different input modalities. These matrices present a detailed view of true versus predicted label distributions across all maturity levels, helping to identify specific misclassification trends and structural weaknesses in the model’s decision-making.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Cao, X. et al. Hyperspectral technology combined with characteristic wavelength/spectral index for visual discrimination of winter jujube maturity. Spectrosc. Spectr. Anal. 38, 2175–2182 (2018).

Zhang, J., Wang, W. & Che, Q. Innovative research on intelligent recognition of winter jujube defects by applying convolutional neural networks. Electronics 13, 2941–2941 (2024).

Shao, Y. et al. Soluble solids content monitoring and shelf life analysis of winter jujube at different maturity stages by Vis-NIR hyperspectral imaging. Postharvest Biol. Technol. 210, 112773 (2024).

Quancheng, L. et al. Detection of dried jujube from fresh jujube with different variety and maturity after hot air drying based on hyperspectral imaging technology. J. Food Compos. Anal. 133, 106378 (2024).

Wang, T. et al. Different maturity levels of winter jujube recognition based on data-balanced deep learning. Trans. Chin. Soc. Agric. Machinery 51, 457–463 (2020).

Liu, A., Song, Y., Xu, Z., Meng, X. & Liu, Z. Non-destructive determination of the soluble solid content in winter jujubes using hyperspectral technology and the SCARS-PLSR prediction model. Int. J. Comput. Sci. 52, 555–565 (2025).

Sun, H., Zhang, S., Ren, R., Xue, J. & Zhao, H. Detection of soluble solids content in different cultivated fresh jujubes based on variable optimization and model update. Foods 11, 2522 (2022).

Wei, Y. P., Yuan, M., Hu, H., Xu, H. & Mao, X. Estimation for soluble solid content in Hetian jujube using hyperspectral imaging with fused spectral and textural Features. J. Food Compos. Anal. 128, 106079 (2024).

Li, Y., Ma, B. X., Li, C. & Yu, G. Accurate prediction of soluble solid content in dried Hami jujube using SWIR hyperspectral imaging with comparative analysis of models. Comput. Electron. Agric.193, 106655 (2022).

Xu, Z. Research on detection method of winter jujube maturity and soluble solidcontent. Hebei Agric. Univ. https://doi.org/10.27109/d.cnki.ghbnu.2022.000426 (2022).

Junrui, X. Research on Lingwu long jujube detection and maturity classification system based on deep learning. https://doi.org/10.27257/d.cnki.gnxhc.2022.000435 (2022).

Han, Y. et al. Predicting the ripening time of ‘Hass’ and ‘Shepard’ avocado fruit by hyperspectral imaging. Precis. Agric. 24, 1889–1905 (2023).

Chollette, C. et al. Convolutional neural network ensemble learning for hyperspectral imaging-based blackberry fruit ripeness detection in uncontrolled farm environment. Eng. Appl. Artif. Intell. 132, 107945 (2024).

Ben Jmaa, A. B., Chaieb, F. & Fabijańska, A. Fruit-HSNet: a machine learning approach for hyperspectral image-based fruit ripeness prediction. In Proc. 17th International Conference on Agents and Artificial Intelligence 102–111 (SciTePress, 2025).

Lee, J.-E. et al. Evaluating ripeness in post-harvest stored kiwifruit using VIS-NIR hyperspectral imaging. Postharvest Biol. Technol. 225, 113496 (2025).

Shiddiq, M., Candra, F., Anand, B. & Rabin, M. F. Neural network with k-fold cross validation for oil palm fruit ripeness prediction. TELKOMNIKA https://doi.org/10.12928/TELKOMNIKA.v22i1.24845 (2024).

Zhou, C. et al. A method for classifying plum maturity based on hyperspectral data. J. Forest. Eng. 1–12 (2025).

Sunandar, E., Hartomo, K. D., Nataliani, Y. & Sembiring, I. Enhanced banana ripeness detection using GLCM and K-NN methods. In 2024 3rd International Conference on Creative Communication and Innovative Technology(ICCIT) 1–7 (IEEE, 2024).

Nayak, A. M., Manjesh R. & Dhanusha. Fruit recognition using image processing. Int. J. Eng. Res. Technol. 7 (2019).

Ding, X. et al. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR) 13733–13742 (IEEE, 2021).

Yang, L., Zhang, R. -Y., Li, L. & Xie, X. SimAM: a simple, parameter-free attention module for convolutional neural networks. In Proc. 38th International Conference on Machine Learning 11863–11874 (MLR Press, 2021).

Zhao, L. et al. Defect detection and visualization of strawberries by hyperspectral imaging. Spectrosc. Spectr. Anal. 45, 1310–1318 (2025).

Zhao, P. et al. A study on mobileViT-CBAM fresh tobacco leaf maturity recognition model based on transfer learning. China Tob. Sci. 46, 93–100 (2025).

Mao, A., Mohri, M. & Zhong, Y. Cross-entropy loss functions: theoretical analysis and applications. In Proc. 40th International Conference on Machine Learning (ICML'23) 23803–23828 (2023).

Acknowledgements

This research was financially supported by the Central Government's Guidance Fund for Local Science and Technology Development (246Z0309G), the Higher Education Research Project of Hebei Province (BJ2025097), the Agricultural Science and Technology Achievement Transformation Fund of Hebei Province (202460104030028), and the Hebei Province Doctoral Student Innovation Capability Training Fund (CXZZBS2024073), China.

Author information

Authors and Affiliations

Contributions

Y.S.: Supervision, project administration, and funding acquisition. A.L.: Conceptualization, methodology, investigation, visualization, and writing original draft. X.M.: Review and editing. Z.L.: Resources, review, and editing. P.L.: Resources, review, and editing. X.Z.: Data collection.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Song, Y., Liu, A., Meng, X. et al. A maturity classification model for winter jujubes based on DSAF-ResNet. npj Sci Food 9, 187 (2025). https://doi.org/10.1038/s41538-025-00551-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41538-025-00551-3