Abstract

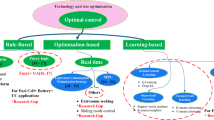

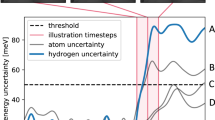

Optimizing nonlinear, time-dependent control in complex energy systems such as direct methanol fuel cells (DMFCs) is a crucial engineering challenge. The long-term power delivery of DMFCs deteriorates as the electrocatalytic surfaces become fouled. Dynamic voltage adjustment can clean the surface and recover catalyst activity; however, manually identifying optimal control strategies that account for multiple mechanisms is challenging. Here we demonstrate a nonlinear policy model (Alpha-Fuel-Cell), inspired by actor–critic reinforcement learning, that learns directly from real-world current–time trajectories to infer the state of the catalyst during operation and automatically generates a suitable action for the next timestep. Moreover, the model can provide protocols that achieve the required power while significantly slowing catalyst degradation. Using this model, the time-averaged power delivered by DMFCs over 12 hours reaches 153% of that obtained under constant-potential operation. Our framework may be generalized to other energy-device applications that require long-time-horizon decision-making in the real world.
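
To make the actor–critic idea above concrete, the following is a minimal Python sketch under loudly stated assumptions; it is not the αFC implementation. Unlike αFC, which learns from real-world current–time trajectories, it trains on a hypothetical simulated catalyst, and the fouling model, the two actions ("operate" and a cleaning "refresh" pulse), every constant, and all function names (`step`, `to_state`, `train`, `rollout`, `time_averaged_power`) are illustrative inventions. The agent uses the binned present current as its estimate of catalyst state, a tabular softmax actor picks the next action, and the critic's one-step temporal-difference error serves as the advantage for both updates. The figure of merit is the time-averaged power (1/T)∫V(t)I(t)dt expressed as a ratio to constant-potential operation, which is how a result such as 153% reads (a ratio of 1.53).

```python
import math
import random

# HYPOTHETICAL toy catalyst model (not the αFC environment or real DMFC physics):
# "operate" holds the cell at V_OP and fouls the catalyst a little each step;
# "refresh" is a cleaning pulse that delivers no power but restores activity.
V_OP, I_MAX = 0.5, 1.0            # assumed operating voltage (V) and clean-catalyst current (A)
FOUL_RATE, RECOVERY = 0.05, 0.5   # assumed per-step fouling and recovery fractions
OPERATE, REFRESH = 0, 1
N_BINS = 10                       # normalised current is discretized into N_BINS states


def step(activity, action):
    """Advance one time step; return (new_activity, current, voltage)."""
    if action == OPERATE:
        current = I_MAX * activity
        return activity * (1.0 - FOUL_RATE), current, V_OP
    # Refresh pulse: no current is delivered, activity relaxes toward 1.
    return activity + RECOVERY * (1.0 - activity), 0.0, 0.0


def to_state(activity):
    """Bin the normalised current, our stand-in for the inferred catalyst state."""
    return min(int(activity * N_BINS), N_BINS - 1)


def policy_probs(prefs):
    """Softmax over the actor's action preferences, shifted by the max for stability."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def sample(prefs, rng):
    """Draw an action from the softmax policy."""
    return OPERATE if rng.random() < policy_probs(prefs)[OPERATE] else REFRESH


def train(steps=20000, gamma=0.95, alpha_critic=0.1, alpha_actor=0.05, seed=0):
    """One-step tabular actor-critic on a single continuing trajectory."""
    rng = random.Random(seed)
    prefs = [[0.0, 0.0] for _ in range(N_BINS)]  # actor: preferences theta[s][a]
    values = [0.0] * N_BINS                       # critic: state values V[s]
    activity = 1.0
    for _ in range(steps):
        s = to_state(activity)
        probs = policy_probs(prefs[s])
        a = OPERATE if rng.random() < probs[OPERATE] else REFRESH
        activity, current, voltage = step(activity, a)
        reward = voltage * current                # power delivered this step
        s_next = to_state(activity)
        td_error = reward + gamma * values[s_next] - values[s]  # advantage estimate
        values[s] += alpha_critic * td_error
        for b in (OPERATE, REFRESH):              # gradient of log softmax policy
            indicator = 1.0 if b == a else 0.0
            prefs[s][b] += alpha_actor * td_error * (indicator - probs[b])
    return prefs


def rollout(choose, steps=200, seed=1):
    """Run a policy from a clean catalyst and record the V(t) and I(t) trajectories."""
    rng = random.Random(seed)
    activity, volts, amps = 1.0, [], []
    for _ in range(steps):
        activity, current, voltage = step(activity, choose(activity, rng))
        volts.append(voltage)
        amps.append(current)
    return volts, amps


def time_averaged_power(volts, amps, dt=1.0):
    """Discrete form of (1/T) * integral of V(t) I(t) dt, with T = len(volts) * dt."""
    return sum(v * i * dt for v, i in zip(volts, amps)) / (dt * len(volts))


prefs = train()


def learned_policy(activity, rng):
    return sample(prefs[to_state(activity)], rng)


baseline = time_averaged_power(*rollout(lambda activity, rng: OPERATE))
learned = time_averaged_power(*rollout(learned_policy))
ratio = learned / baseline
print(f"time-averaged power vs constant operation: {ratio:.0%}")
```

Because the toy catalyst only ever decays under constant operation, the printed ratio says nothing quantitative about real DMFCs. The tabular actor keeps the sketch dependency-free; a controller working from raw current–time traces would more likely use neural-network function approximators for both actor and critic.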

Data availability

The data that support the findings of this study are available within the Article and its Supplementary Information. Source data are provided with this paper.

Code availability

The αFC code associated with this manuscript is available via GitHub at https://github.com/parkyjmit/alphaFC.

Acknowledgements

We acknowledge support by the Defense Advanced Research Projects Agency (DARPA) under agreement number HR00112490369. This work made use of the Materials Research Laboratory Shared Experimental Facilities at Massachusetts Institute of Technology. This work was carried out in part through the use of MIT.nano’s facilities. This work was performed in part at the Center for Nanoscale Systems of Harvard University. H.X. acknowledges Y. Rao for discussion and support. Y.J.P. also acknowledges support by a grant from the National Research Foundation of Korea (NRF) funded by the Korean government, Ministry of Science and ICT (MSIT) (number RS-2024-00356670).

Author information

Contributions

J.L., H.X. and Y.J.P. conceived the original idea. H.X. performed the synthesis, electrochemical measurements, data collection and model construction. Y.J.P. developed and trained the αFC model. Z.R. developed the automated experimental measurements. H.X., D.J.Z. and D.M. performed the ECMS measurements. D.Z., H.J., C.D. and G.Z. participated in discussions. H.X. and Y.J.P. verified and analysed the data. H.X., Y.J.P., J.L., Y.S.-H. and Y.R.-L. drafted the manuscript. All authors contributed to the revision of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Energy thanks Zhongbao Wei, Lei Xing and the other, anonymous, reviewer for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Discussion and Figs. 1–28.

Supplementary Video 1

Demonstration video of Alpha-Fuel-Cell operation.

Source data

Source Data Fig. 2

Source data for Fig. 2.

Source Data Fig. 3

Source data for Fig. 3.

Source Data Fig. 4

Source data for Fig. 4.

Source Data Fig. 5

Source data for Fig. 5.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, H., Park, Y.J., Ren, Z. et al. An actor–critic algorithm to maximize the power delivered from direct methanol fuel cells. Nat Energy 10, 951–961 (2025). https://doi.org/10.1038/s41560-025-01804-x

DOI: https://doi.org/10.1038/s41560-025-01804-x