Abstract

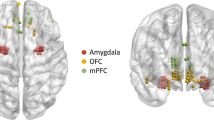

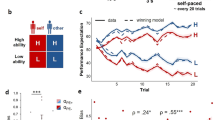

The neurological basis of affective behaviours in everyday life is not well understood. We obtained continuous intracranial electroencephalography recordings from the human mesolimbic network in 11 participants with epilepsy and hand-annotated spontaneous behaviours from 116 h of multiday video recordings. In individual participants, binary random forest models decoded affective behaviours from neutral behaviours with up to 93% accuracy. Both positive and negative affective behaviours were associated with increased high-frequency and decreased low-frequency activity across the mesolimbic network. The insula, amygdala, hippocampus and anterior cingulate cortex made stronger contributions to affective behaviours than the orbitofrontal cortex, but the insula and anterior cingulate cortex were most critical for differentiating behaviours with observable affect from those without. In a subset of participants (N = 3), multiclass decoders distinguished amongst the positive, negative and neutral behaviours. These results suggest that spectro-spatial features of brain activity in the mesolimbic network are associated with affective behaviours of everyday life.


Data availability

The neural and behavioural data are derived from clinical recordings obtained for seizure localization and clinical decision-making. Therefore, the minimum de-identified dataset used to generate the findings of this study will be available upon reasonable request to the corresponding author. Source data for the figures are available upon reasonable request. Contact M.B. via e-mail with enquiries.

Code availability

The code written to train the classifiers is available at: https://github.com/MBijanzadeh/DecodingAffect. The code to generate the figures will be available upon request. Contact M.B. via e-mail with any inquiries.


Acknowledgements

We thank Chang laboratory members B. Speidel, D. Chandramohan, K. Sellers, L. Kirkby and P. Hullet and raters N. Goldberg-Boltz, L. Bederson, M. Solberg, C. Eun, J. Gordon, D. Tager, V. Cheng, N. Mummaneni and N. Kunwar. This research was funded by the NIMH (R01MH122431) and the Defense Advanced Research Projects Agency (DARPA) under Cooperative Agreement Number W911NF-14-2-0043. The views, opinions and/or findings contained in this material are those of the authors and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. Government. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information


Contributions

M.B. performed all analyses. M.D., D.L.W. and E.F.C. designed the study. D.L.W. and M.D. assisted with participant recruitment and data collection and led the behavioural annotations. M.B. and A.N.K. conceptualized the analytical framework. M.B., M.D. and A.S. cleaned the neural data of epileptiform activity. M.B., A.N.K. and V.E.S. wrote the manuscript with input from the other authors. H.E.D. and E.F.C. supervised the experimental work.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Human Behaviour thanks Anna Weinberg, Liang Wang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Behavioural annotations.

a) Annotated behaviours for an example participant over three days of their hospital stay. Behaviours in black are marked by the onset and offset of the activity, whereas affective behaviours are marked as instances. Purple shading indicates neutral moments with no affective behaviour, during which the participant may have been engaged in other tasks (here, using the phone). Red shading indicates periods with no activity (called ‘rest’; see Supplementary Tables 1 and 2). b) Percentages of naturalistic affective behaviours displayed across the 11 participants in this study. c) Distribution of the time jitter between different rater pairs for positive and negative affective behaviours.
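The time jitter in panel c compares when two raters marked the same behaviour. One way such a jitter could be computed is sketched below, assuming each affective behaviour is an instantaneous time stamp in seconds and that every event from one rater is matched to the nearest event of the other rater within a tolerance window. The helper name `pairwise_jitter`, the nearest-neighbour matching rule and the 5 s tolerance are illustrative assumptions, not the study's procedure.

```python
import numpy as np


def pairwise_jitter(times_a, times_b, max_gap=5.0):
    """Signed time differences (rater B minus rater A) between matched annotations.

    Hypothetical helper: each instance from rater A is matched to the nearest
    instance from rater B; matches farther apart than max_gap seconds are dropped.
    """
    a = np.asarray(times_a, dtype=float)
    b = np.sort(np.asarray(times_b, dtype=float))
    if a.size == 0 or b.size == 0:
        return np.array([])
    # Position of the first B time >= each A time; its left neighbour is the other candidate.
    idx = np.searchsorted(b, a)
    left = np.clip(idx - 1, 0, b.size - 1)
    right = np.clip(idx, 0, b.size - 1)
    # Keep whichever neighbour is closer in time to the A annotation.
    nearest = np.where(np.abs(b[left] - a) <= np.abs(b[right] - a), b[left], b[right])
    diffs = nearest - a
    # Discard A events with no B counterpart inside the tolerance window.
    return diffs[np.abs(diffs) <= max_gap]
```

For two raters who marked events at 10.0, 42.5 and 100.0 s and at 11.2, 41.9 and 300.0 s, the sketch keeps two matched pairs (jitters of about +1.2 s and −0.6 s) and discards the 100.0 s event, which has no counterpart within 5 s.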

Extended Data Fig. 2 Preprocessing and decoding pipeline.
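The spectro-spatial features named in the abstract are, in essence, band-limited power values computed for each recording contact. A minimal sketch of that feature-extraction step follows. It assumes Welch power spectra, 1 s segments and the band edges listed in `BANDS`. The band names match those in Extended Data Fig. 5, but the edges, window lengths, log transform and the helper name `band_power_features` are assumptions, and the study's pipeline may differ.

```python
import numpy as np
from scipy.signal import welch

# Assumed band edges in Hz (illustrative only, not taken from the study).
BANDS = {
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "low_gamma": (30, 70),
    "high_gamma": (70, 150),
}


def band_power_features(x, fs, bands=BANDS, nperseg=None):
    """Log10 band power for every channel and band of one analysis window.

    x: array of shape (n_channels, n_samples).
    Returns a 1-D array of length n_channels * n_bands, ordered channel by channel.
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))
    if nperseg is None:
        nperseg = int(fs)  # 1 s segments give 1 Hz frequency resolution
    freqs, psd = welch(x, fs=fs, nperseg=nperseg, axis=-1)
    df = freqs[1] - freqs[0]
    feats = []
    for ch_psd in psd:
        for lo, hi in bands.values():
            mask = (freqs >= lo) & (freqs < hi)
            # Rectangle-rule integral of the power spectral density over the band
            feats.append(np.log10(ch_psd[mask].sum() * df))
    return np.array(feats)
```

Stacking these vectors across windows gives the sample-by-feature matrix that a classifier such as a random forest can be trained on.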

Extended Data Fig. 3 Comparison of decoder performance using rest vs. neutral moments.

Decoders were trained on rest instances vs. positive (blue) and negative (red) affective behaviours. All panels compare these decoders with the neutral vs. affective decoders shown in Fig. 2. Green and orange curves show the original model AUCs for the positive and negative decoders, respectively. The box plots show the sample distribution of the average AUC for positive behaviours vs. neutral (green, n = 10 participants) and positive behaviours vs. rest (blue, n = 9 participants) in the top row, and for negative behaviours vs. neutral (orange, n = 5 participants) and negative behaviours vs. rest (red, n = 5 participants) in the bottom row. There was no significant difference between the decoders for either positive (p = 0.6, two-sided non-parametric pairwise rank-sum test) or negative (p = 0.66, two-sided pairwise rank-sum test) behaviours. In the box plots, central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function).
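The AUC comparison in this figure reduces to two steps: averaging each participant's AUC over runs, then comparing the per-participant values between decoder types with a two-sided rank-sum test. A minimal sketch follows, assuming held-out labels and decoder scores are available for each run. The helper names are hypothetical. SciPy's `ranksums` uses a normal approximation, whereas MATLAB's `ranksum` may use an exact method for small samples, so p values can differ slightly.

```python
import numpy as np
from scipy.stats import ranksums
from sklearn.metrics import roc_auc_score


def mean_auc(label_runs, score_runs):
    """Average ROC AUC over repeated runs for one participant.

    label_runs / score_runs: lists of 1-D arrays holding the true binary labels
    and the decoder scores for the held-out samples of each run.
    """
    return float(np.mean([roc_auc_score(y, s) for y, s in zip(label_runs, score_runs)]))


def compare_decoders(aucs_a, aucs_b):
    """Two-sided Wilcoxon rank-sum test on per-participant mean AUCs."""
    stat, p = ranksums(aucs_a, aucs_b)
    return stat, p


def box_stats(values):
    """Median and 25th/75th percentiles: the central line and edges of a box plot."""
    q25, med, q75 = np.percentile(values, [25, 50, 75])
    return med, q25, q75
```

Because the groups here differ in size (for example, 10 vs. 9 participants), an unpaired rank-sum test fits this comparison better than a paired test.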

Extended Data Fig. 4 Decoding results for neutral vs. affective behaviours that included conversational moments.

a,b) Accuracy for all 10 and 5 participants on which the positive and negative decoders were trained, respectively. Permuted models (black) were trained in the same way using shuffled labels for all participants. The significance level was set at 0.0005 (that is, 0.05/100) to correct for n = 100 runs (refer to the Methods section ‘Statistical Analyses’). P values for panel a are, for each participant respectively: \(1.4 \times 10^{-33}\), \(5.9 \times 10^{-7}\), \(5.1 \times 10^{-29}\), \(3.3 \times 10^{-16}\), \(6.8 \times 10^{-26}\), \(1.6 \times 10^{-13}\), \(6.35 \times 10^{-5}\), \(2.3 \times 10^{-15}\), \(1 \times 10^{-32}\) and \(2.1 \times 10^{-14}\). P values for panel b are: \(9.25 \times 10^{-30}\), \(9.13 \times 10^{-27}\), 0.0031, \(1.8 \times 10^{-10}\) and \(2.7 \times 10^{-11}\). c) F1 scores for the three-class random forest (RF) models from the three participants. All F1 scores were significantly above chance level (33%, dashed lines) and different from the shuffled models (p values are given in the order neutral, positive and negative behaviour for each participant: Subj1: \(2.9 \times 10^{-32}\), \(7.1 \times 10^{-32}\), \(1.4 \times 10^{-18}\); Subj2: \(2.7 \times 10^{-15}\), \(6.9 \times 10^{-11}\), \(7.7 \times 10^{-22}\); Subj6: \(1.3 \times 10^{-7}\), \(2.1 \times 10^{-18}\), 0.025; two-sided pairwise rank-sum test). In the box plots (a–c), central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function). *** signifies p < 0.0001.
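Panels a and b compare each decoder with permuted models trained on shuffled labels over 100 runs. A minimal sketch of such a comparison follows. It assumes scikit-learn's `RandomForestClassifier` with 100 trees, a stratified 70/30 split in each run and shuffling of the training labels only. These settings and the helper names are illustrative and are not taken from the study. The sketch also shows where the 0.0005 threshold comes from: a family-wise 0.05 divided by the 100 runs.

```python
import numpy as np
from scipy.stats import ranksums
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def run_accuracies(X, y, n_runs=100, shuffle_labels=False, seed=0):
    """Held-out accuracy of a random forest over repeated random splits.

    With shuffle_labels=True the training labels are permuted in every run,
    which yields a chance-level (permuted) reference distribution.
    """
    rng = np.random.default_rng(seed)
    accs = []
    for run in range(n_runs):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.3, stratify=y, random_state=run)
        if shuffle_labels:
            y_tr = rng.permutation(y_tr)
        clf = RandomForestClassifier(n_estimators=100, random_state=run)
        clf.fit(X_tr, y_tr)
        accs.append(clf.score(X_te, y_te))  # fraction of test samples predicted correctly
    return np.array(accs)


def compare_to_permuted(X, y, n_runs=100, family_alpha=0.05):
    """Real vs. permuted accuracies, two-sided rank-sum p value and significance flag."""
    real = run_accuracies(X, y, n_runs)
    null = run_accuracies(X, y, n_runs, shuffle_labels=True, seed=1)
    _, p = ranksums(real, null)
    alpha = family_alpha / n_runs  # 0.05 / 100 = 0.0005 when n_runs = 100
    return real, null, p, p < alpha
```

Calling `compare_to_permuted(X, y, n_runs=100)` on one participant's feature matrix returns both accuracy distributions, the rank-sum p value and whether it falls below 0.05/100.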

Extended Data Fig. 5 Clustering analyses pooled across all participants for the binary classifiers.

a,b) Pie charts show the percentage of frequency bands selected across all participants for the positive and negative decoders, respectively. The histograms show the percentage count of each frequency band within each cluster: for both decoder types, the low-frequency cluster consisted mainly of the theta, alpha and beta bands, and the gamma cluster mainly of the high and low gamma bands. c) Left and right panels show the pooled normalized feature importance and the stability across all 10 participants for the positive decoders (n = 149 and n = 124 for the gamma and low-frequency clusters, respectively), with p values from two-sided pairwise rank-sum tests at the bottom of each panel. d) Same as c, for the negative decoders (n = 62 and n = 45 for the gamma and low-frequency clusters, respectively). e,f) The ratio is defined as (number of features in the gamma cluster − number of features in the low-frequency cluster)/total number of features contributing to both the gamma and low-frequency clusters (from Fig. 4b,d). A positive ratio means that the region has more selected features in the gamma cluster, and a negative ratio means that it has more selected features in the low-frequency cluster across subjects. INS = insula, VCin = ventral cingulate, DCin = dorsal cingulate, AMY = amygdala, OFC = orbitofrontal cortex, HPC = hippocampus. We generated permuted (that is, null) distributions by shuffling the region label of each feature 1,000,000 times and recomputing the ratio (gray box plots). Confidence intervals are based on the t-statistic because the permuted distributions are normally distributed. All real values of the ratio, shown as green (e) and orange (f) circles, lie outside the confidence intervals of the permuted distributions. Confidence intervals in panel e are: VCin = [0.0908, 0.0917], DCin = [0.0914, 0.092], HPC = [0.0913, 0.0918], AMY = [0.0913, 0.0919], INS & OFC = [0.0914, 0.0919]. Confidence intervals in panel f are: VCin = [0.1584, 0.1594], DCin = [0.1584, 0.1593], HPC = [0.1580, 0.1593], AMY = [0.1582, 0.1594], INS & OFC = [0.1586, 0.1592]. In the box plots (c–f), central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function).
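The ratio and its permutation null in panels e and f can be sketched as follows. This sketch assumes three things. First, the denominator counts only the region's own features in the two clusters. Second, each feature carries one region label and one cluster label ("gamma" or "low"). Third, the reported interval is a t-based confidence interval for the mean of the null distribution, which would explain why the intervals are so narrow. Read this way, shuffling region labels should centre each null distribution near the pooled ratio. For example, (149 − 124)/(149 + 124) ≈ 0.092 for the positive decoders and (62 − 45)/(62 + 45) ≈ 0.159 for the negative decoders, which is consistent with the intervals above. The helper names and the default of 10,000 permutations (instead of 1,000,000) are illustrative.

```python
import numpy as np
from scipy import stats


def cluster_ratio(n_gamma, n_low):
    """(N_gamma - N_low) / (N_gamma + N_low); positive values mean gamma-dominated."""
    total = n_gamma + n_low
    return (n_gamma - n_low) / total if total else np.nan


def region_ratio(regions, clusters, region):
    """Ratio computed over the features whose region label equals `region`."""
    regions, clusters = np.asarray(regions), np.asarray(clusters)
    in_region = regions == region
    n_gamma = np.sum(in_region & (clusters == "gamma"))
    n_low = np.sum(in_region & (clusters == "low"))
    return cluster_ratio(n_gamma, n_low)


def permuted_ratio_ci(regions, clusters, region, n_perm=10_000, conf=0.95, seed=0):
    """Null ratios from shuffling region labels, plus a t-based CI of their mean."""
    rng = np.random.default_rng(seed)
    regions = np.asarray(regions)
    null = np.array([region_ratio(rng.permutation(regions), clusters, region)
                     for _ in range(n_perm)])
    null = null[~np.isnan(null)]
    sem = null.std(ddof=1) / np.sqrt(null.size)  # standard error of the null mean
    t_crit = stats.t.ppf(0.5 + conf / 2, df=null.size - 1)
    m = null.mean()
    return null, (m - t_crit * sem, m + t_crit * sem)
```

A real ratio that falls outside this interval differs from what the region would show if its features were drawn at random from the pooled set.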

Extended Data Fig. 6 Decoding AUC for all participants using spectral features from contacts on the same lead for positive vs. neutral behaviours.

The green and black box plots are from the full and shuffled models across n = 100 runs, as in Fig. 2f. The other box plots show models trained across n = 100 datasets in which only the spectral features from each brain region were used. One-way Kruskal–Wallis tests with Bonferroni-corrected multiple comparisons were used to determine which regions reached the highest performance (see Supplementary Table 6). OFC = orbitofrontal cortex, INS = insula, DCin = dorsal cingulate, VCin = ventral cingulate, HPC = hippocampus, AMY = amygdala, POFC = posterior OFC, AOFC = anterior OFC. In the box plots, central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function). *** signifies p < 0.0001, ** signifies p < 0.01 and * signifies p < 0.05.
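The region-restricted models in this figure are trained on only the columns of the feature matrix that come from one region. A sketch of that restriction follows. It assumes a feature matrix `X` with one region label per column, a stratified 70/30 random split in each run and a 100-tree random forest. The helper name `region_aucs` and these settings are hypothetical, and the study's cross-validation scheme may differ.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split


def region_aucs(X, y, feature_regions, n_runs=10):
    """Held-out ROC AUC per region, training only on that region's features.

    feature_regions: one region label (e.g. "INS", "AMY") per column of X.
    Returns {region: array of AUCs over runs}.
    """
    feature_regions = np.asarray(feature_regions)
    out = {}
    for region in np.unique(feature_regions):
        cols = feature_regions == region  # boolean mask selecting this region's columns
        aucs = []
        for run in range(n_runs):
            X_tr, X_te, y_tr, y_te = train_test_split(
                X[:, cols], y, test_size=0.3, stratify=y, random_state=run)
            clf = RandomForestClassifier(n_estimators=100, random_state=run)
            clf.fit(X_tr, y_tr)
            # Probability of class 1 (the affective class) serves as the decision score
            aucs.append(roc_auc_score(y_te, clf.predict_proba(X_te)[:, 1]))
        out[str(region)] = np.array(aucs)
    return out
```

Each entry of the returned dictionary corresponds to one region's box plot. The full-model box plot uses all columns at once.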

Extended Data Fig. 7 Decoding AUC for all participants using spectral features from contacts on the same lead for negative vs. neutral behaviours.

The orange and black box plots are from the full and shuffled models across n = 100 runs, as in Fig. 2g. The other box plots show models trained across n = 100 datasets in which only the spectral features from each brain region were used. One-way Kruskal–Wallis tests with Bonferroni-corrected multiple comparisons were used to determine which regions reached the highest performance (see Supplementary Table 7). OFC = orbitofrontal cortex, INS = insula, DCin = dorsal cingulate, VCin = ventral cingulate, HPC = hippocampus, AMY = amygdala. In the box plots, central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function). *** signifies p < 0.0001, ** signifies p < 0.01 and * signifies p < 0.05.
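The across-region comparison can be approximated as follows. This sketch runs SciPy's `kruskal` as the omnibus test and then applies Bonferroni-corrected pairwise Wilcoxon rank-sum tests. The pairwise step is a swapped-in approximation: MATLAB's `multcompare` on Kruskal–Wallis output compares mean ranks, so these p values will not match the supplementary tables exactly. The function name and the dictionary-of-AUCs input format are hypothetical.

```python
from itertools import combinations

from scipy.stats import kruskal, ranksums


def kruskal_with_bonferroni(groups, alpha=0.05):
    """Omnibus Kruskal-Wallis test, then Bonferroni-corrected pairwise rank-sum tests.

    groups: dict mapping a label (e.g. a region) to a 1-D sequence of AUCs.
    Returns the omnibus p value and {(a, b): (corrected p, significant)}.
    """
    labels = list(groups)
    _, p_omnibus = kruskal(*(groups[k] for k in labels))
    pairs = list(combinations(labels, 2))
    results = {}
    for a, b in pairs:
        _, p = ranksums(groups[a], groups[b])
        p_corr = min(1.0, p * len(pairs))  # Bonferroni: scale by the number of comparisons
        results[(a, b)] = (p_corr, p_corr < alpha)
    return p_omnibus, results
```

With the six regions in this figure there are 15 pairwise comparisons, so each raw p value is multiplied by 15 before being compared with 0.05.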

Extended Data Fig. 8 Performance of multiclass RF decoders trained on features from each lead within a given region.

The models were trained as described for Extended Data Fig. 7. Accuracy = number of correctly predicted samples/total number of samples. \(\text{F-score} = 2 \times \frac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}\). In the box plot, central lines represent the median and the box edges represent the 25th and 75th percentiles; whiskers extend to the most extreme data points not considered outliers, and outliers are shown individually (see MATLAB boxplot function). *** signifies p < 0.0001, ** signifies p < 0.01 and * signifies p < 0.05.
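The two metrics defined above can be computed directly from true and predicted labels. The sketch below computes the F-score one class at a time (one-vs-rest). This is an assumption about how the per-class F-scores were obtained. The helper names are hypothetical. With three balanced classes, chance accuracy is 1/3, which corresponds to the 33% line in Extended Data Fig. 4c.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Number of correctly predicted samples divided by the total number of samples."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))


def f1_per_class(y_true, y_pred, classes):
    """One-vs-rest F-score = 2 * precision * recall / (precision + recall) per class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = {}
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))  # predicted c and truly c
        fp = np.sum((y_pred == c) & (y_true != c))  # predicted c but truly another class
        fn = np.sum((y_pred != c) & (y_true == c))  # truly c but predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores[c] = float(2 * precision * recall / denom) if denom else 0.0
    return scores
```

For true labels neutral, neutral, positive, positive, negative, negative and predictions neutral, positive, positive, positive, negative, neutral, the accuracy is 4/6, and the F-scores are 0.5 (neutral), 0.8 (positive) and 2/3 (negative).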

Supplementary information


Supplementary results, Figs. 1–15, Tables 1–10, references and extended data figure legends.


About this article

Cite this article

Bijanzadeh, M., Khambhati, A.N., Desai, M. et al. Decoding naturalistic affective behaviour from spectro-spatial features in multiday human iEEG. Nat Hum Behav 6, 823–836 (2022). https://doi.org/10.1038/s41562-022-01310-0


DOI: https://doi.org/10.1038/s41562-022-01310-0

This article is cited by

- Naturalistic acute pain states decoded from neural and facial dynamics. Nature Communications (2025)
- How mood-related physiological states bias economic decisions. Communications Psychology (2025)
- Challenges and opportunities of acquiring cortical recordings for chronic adaptive deep brain stimulation. Nature Biomedical Engineering (2024)
- EEG-based emotional valence and emotion regulation classification: a data-centric and explainable approach. Scientific Reports (2024)
- Neural evidence for attentional capture by salient distractors. Nature Human Behaviour (2024)