Abstract

Brain-phenotype predictive models seek to identify reproducible and generalizable brain-phenotype associations. External validation, or the evaluation of a model in external datasets, is the gold standard in evaluating the generalizability of models in neuroimaging. Unlike typical studies, external validation involves two sample sizes: the training and the external sample sizes. Thus, traditional power calculations may not be appropriate. Here we ran over 900 million resampling-based simulations in functional and structural connectivity data to investigate the relationship between training sample size, external sample size, phenotype effect size, theoretical power and simulated power. Our analysis included a wide range of datasets: the Healthy Brain Network, the Adolescent Brain Cognitive Development Study, the Human Connectome Project (Development and Young Adult), the Philadelphia Neurodevelopmental Cohort, the Queensland Twin Adolescent Brain Project, and the Chinese Human Connectome Project; and phenotypes: age, body mass index, matrix reasoning, working memory, attention problems, anxiety/depression symptoms and relational processing. High effect size predictions achieved adequate power with training and external sample sizes of a few hundred individuals, whereas low and medium effect size predictions required hundreds to thousands of training and external samples. In addition, most previous external validation studies used sample sizes prone to low power, and theoretical power curves should be adjusted for the training sample size. Furthermore, model performance in internal validation often informed subsequent external validation performance (Pearson’s r difference <0.2), particularly for well-harmonized datasets. These results could help decide how to power future external validation studies.
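
The abstract contrasts theoretical power with resampling-based (simulated) power. As a rough illustration only, the sketch below computes the textbook theoretical power of a one-sided test that an external-sample Pearson's r exceeds zero, using the Fisher z approximation, and checks it against a small Monte Carlo simulation. It assumes the trained model is held fixed, so it deliberately ignores the training sample size, which the study reports should be accounted for. The function names and defaults (one-sided alpha = 0.05, 80% target power) are hypothetical choices, not the authors' code, which is in the repository listed under Code availability.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def theoretical_power(rho: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a one-sided test of H0: rho = 0 against rho > 0.

    Fisher z approximation: atanh(r) is roughly normal with mean atanh(rho)
    and standard deviation 1 / sqrt(n - 3).
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    shift = math.atanh(rho) * math.sqrt(n - 3)
    return 1 - STD_NORMAL.cdf(z_crit - shift)


def min_sample_size(rho: float, target: float = 0.8, alpha: float = 0.05) -> int:
    """Smallest n whose theoretical power reaches the target (assumes rho > 0)."""
    n = 4  # the approximation needs n > 3
    while theoretical_power(rho, n, alpha) < target:
        n += 1
    return n


def pearson_r(x, y) -> float:
    """Sample Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulated_power(rho: float, n_external: int, alpha: float = 0.05,
                    n_resamples: int = 2000, seed: int = 0) -> float:
    """Share of simulated external samples whose r passes the same test.

    ASSUMPTION: the trained model is held fixed, so its predictions and the
    observed phenotype are bivariate normal with correlation rho in the
    external population. Training-sample variability is ignored.
    """
    rng = random.Random(seed)
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    noise_scale = math.sqrt(1 - rho ** 2)
    significant = 0
    for _ in range(n_resamples):
        pred = [rng.gauss(0.0, 1.0) for _ in range(n_external)]
        obs = [rho * p + noise_scale * rng.gauss(0.0, 1.0) for p in pred]
        r = pearson_r(pred, obs)
        if math.atanh(r) * math.sqrt(n_external - 3) > z_crit:
            significant += 1
    return significant / n_resamples
```

For example, theoretical_power(0.3, 100) comes out at roughly 0.92 and min_sample_size(0.3) returns 68; simulated_power(0.3, 100) should land within a few percentage points of the theoretical value because both use the same test. A fuller simulation would also retrain the model on each resampled training set, which is where the training sample size enters.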

Data availability

The following datasets are publicly available but require permission to access. Relevant instructions for data access are available at each individual link below.

The main datasets are available through the Healthy Brain Network Dataset (ref. 21; International Neuroimaging Data-sharing Initiative, https://fcon_1000.projects.nitrc.org/indi/cmi_healthy_brain_network/), the Adolescent Brain Cognitive Development Study (ref. 22; NIMH Data Archive, https://nda.nih.gov/abcd), the Human Connectome Project Development Dataset (refs. 23,24; NIMH Data Archive, https://www.humanconnectome.org/study/hcp-lifespan-development/data-releases) and the Philadelphia Neurodevelopmental Cohort Dataset (refs. 25,26; dbGaP Study Accession: phs000607.v3.p2, https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000607.v3.p2).

For the additional datasets, the Queensland Twin Adolescent Brain Project dataset (ref. 30) is available via OpenNeuro (https://openneuro.org/datasets/ds004146/versions/1.0.4), and the non-imaging phenotypes are available via Zenodo at https://zenodo.org/records/7765506 (ref. 92). Preprocessed structural connectivity data for the developmental datasets were downloaded from https://brain.labsolver.org/hbn.html (HBN), https://brain.labsolver.org/hcp_d.html (HCPD) and https://brain.labsolver.org/greesland_twin.html (QTAB). The Chinese Human Connectome Project dataset (ref. 36) is available via the Science Data Bank (https://www.scidb.cn/en/detail?dataSetId=f512d085f3d3452a9b14689e9997ca94). The Human Connectome Project Young Adult dataset (ref. 34) is available via the ConnectomeDB database (https://db.humanconnectome.org). Source data are provided with this paper.

Code availability

We used Python 3.11.3 to conduct the analyses. Code for the analyses is available on GitHub at https://github.com/mattrosenblatt7/external_validation_power (ref. 93) and on Zenodo at https://zenodo.org/records/10975870 (ref. 94). Preprocessing was carried out using BioImage Suite v.3.01, which is freely available (https://medicine.yale.edu/bioimaging/suite/). Additional preprocessing was performed with the Human Connectome Project minimal preprocessing pipeline v.3.4.0 (https://github.com/Washington-University/HCPpipelines/releases).

References

Horien, C. et al. A hitchhiker’s guide to working with large, open-source neuroimaging datasets. Nat. Hum. Behav. 5, 185–193 (2021).

Marek, S. et al. Reproducible brain-wide association studies require thousands of individuals. Nature 605, E11 (2022).

Yarkoni, T. Big correlations in little studies: inflated fMRI correlations reflect low statistical power—commentary on Vul et al. (2009). Perspect. Psychol. Sci. 4, 294–298 (2009).

Yarkoni, T. & Braver, T. S. in Handbook of Individual Differences in Cognition: Attention, Memory, and Executive Control (eds Gruszka, A. et al.) 87–107 (Springer, 2010).

Button, K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376 (2013).

Cremers, H. R., Wager, T. D. & Yarkoni, T. The relation between statistical power and inference in fMRI. PLoS ONE 12, e0184923 (2017).

Liu, S., Abdellaoui, A., Verweij, K. J. H. & van Wingen, G. A. Replicable brain–phenotype associations require large-scale neuroimaging data. Nat. Hum. Behav. 7, 1344–1356 (2023).

Klapwijk, E. T., van den Bos, W., Tamnes, C. K., Raschle, N. M. & Mills, K. L. Opportunities for increased reproducibility and replicability of developmental neuroimaging. Dev. Cogn. Neurosci. 47, 100902 (2021).

Rosenberg, M. D. & Finn, E. S. How to establish robust brain–behavior relationships without thousands of individuals. Nat. Neurosci. 25, 835–837 (2022).

Spisak, T., Bingel, U. & Wager, T. D. Multivariate BWAS can be replicable with moderate sample sizes. Nature 615, E4–E7 (2023).

Goltermann, J. et al. Cross-validation for the estimation of effect size generalizability in mass-univariate brain-wide association studies. Preprint at bioRxiv https://doi.org/10.1101/2023.03.29.534696 (2023).

Makowski, C. et al. Leveraging the adolescent brain cognitive development study to improve behavioral prediction from neuroimaging in smaller replication samples. Cereb. Cortex 34, bhae223 (2024).

Genon, S., Eickhoff, S. B. & Kharabian, S. Linking interindividual variability in brain structure to behaviour. Nat. Rev. Neurosci. 23, 307–318 (2022).

Yeung, A. W. K., More, S., Wu, J. & Eickhoff, S. B. Reporting details of neuroimaging studies on individual traits prediction: a literature survey. Neuroimage 256, 119275 (2022).

Rosenberg, M. D., Casey, B. J. & Holmes, A. J. Prediction complements explanation in understanding the developing brain. Nat. Commun. 9, 589 (2018).

Woo, C.-W., Chang, L. J., Lindquist, M. A. & Wager, T. D. Building better biomarkers: brain models in translational neuroimaging. Nat. Neurosci. 20, 365–377 (2017).

Wu, J. et al. Cross-cohort replicability and generalizability of connectivity-based psychometric prediction patterns. Neuroimage 262, 119569 (2022).

Dockès, J., Varoquaux, G. & Poline, J.-B. Preventing dataset shift from breaking machine-learning biomarkers. Gigascience 10, giab055 (2021).

Kopal, J., Uddin, L. Q. & Bzdok, D. The end game: respecting major sources of population diversity. Nat. Methods 20, 1122–1128 (2023).

Lachin, J. M. Introduction to sample size determination and power analysis for clinical trials. Control. Clin. Trials 2, 93–113 (1981).

Alexander, L. M. et al. An open resource for transdiagnostic research in pediatric mental health and learning disorders. Sci. Data 4, 170181 (2017).

Casey, B. J. et al. The Adolescent Brain Cognitive Development (ABCD) study: imaging acquisition across 21 sites. Dev. Cogn. Neurosci. 32, 43–54 (2018).

Somerville, L. H. et al. The lifespan human connectome project in development: a large-scale study of brain connectivity development in 5–21 year olds. Neuroimage 183, 456–468 (2018).

Harms, M. P. et al. Extending the Human Connectome Project across ages: imaging protocols for the Lifespan Development and Aging projects. Neuroimage 183, 972–984 (2018).

Satterthwaite, T. D. et al. Neuroimaging of the Philadelphia Neurodevelopmental Cohort. Neuroimage 86, 544–553 (2014).

Satterthwaite, T. D. et al. The Philadelphia Neurodevelopmental Cohort: a publicly available resource for the study of normal and abnormal brain development in youth. Neuroimage 124, 1115–1119 (2016).

Cohen, J. Statistical Power Analysis for the Behavioral Sciences (Routledge, 1988).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Ioannidis, J. P. A. Why most discovered true associations are inflated. Epidemiology 19, 640–648 (2008).

Strike, L. T. et al. The Queensland Twin Adolescent Brain Project, a longitudinal study of adolescent brain development. Sci. Data 10, 195 (2023).

Strike, L. T. et al. Queensland Twin Adolescent Brain (QTAB). OpenNeuro https://doi.org/10.18112/openneuro.ds004148.v1.0.1 (2022).

Barch, D. M. et al. Function in the human connectome: task-fMRI and individual differences in behavior. Neuroimage 80, 169–189 (2013).

Smith, R., Keramatian, K. & Christoff, K. Localizing the rostrolateral prefrontal cortex at the individual level. Neuroimage 36, 1387–1396 (2007).

Van Essen, D. C. et al. The WU-Minn Human Connectome Project: an overview. Neuroimage 80, 62–79 (2013).

Glasser, M. F. et al. The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage 80, 105–124 (2013).

Ge, J. et al. Increasing diversity in connectomics with the Chinese Human Connectome Project. Nat. Neurosci. 26, 163–172 (2023).

Ooi, L. Q. R. et al. MRI economics: balancing sample size and scan duration in brain wide association studies. Preprint at bioRxiv https://doi.org/10.1101/2024.02.16.580448 (2024).

Chandler, C., Foltz, P. W. & Elvevåg, B. Using machine learning in psychiatry: the need to establish a framework that nurtures trustworthiness. Schizophr. Bull. 46, 11–14 (2020).

Mitchell, M. et al. Model cards for model reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency 220–229 (Association for Computing Machinery, 2019).

Benkarim, O. et al. The cost of untracked diversity in brain-imaging prediction. Preprint at bioRxiv https://doi.org/10.1101/2021.06.16.448764 (2021).

Greene, A. S. et al. Brain–phenotype models fail for individuals who defy sample stereotypes. Nature 609, 109–118 (2022).

Li, J. et al. Cross-ethnicity/race generalization failure of behavioral prediction from resting-state functional connectivity. Sci. Adv. 8, eabj1812 (2022).

Rosenblatt, M. et al. Connectome-based machine learning models are vulnerable to subtle data manipulations. Patterns https://doi.org/10.1016/j.patter.2023.100756 (2023).

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K. & Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. 54, 1–35 (2021).

Tejavibulya, L. et al. Predicting the future of neuroimaging predictive models in mental health. Mol. Psychiatry 27, 3129–3137 (2022).

Finlayson, S. G. et al. Adversarial attacks on medical machine learning. Science 363, 1287–1289 (2019).

Uffelmann, E. et al. Genome-wide association studies. Nat. Rev. Methods Primers 1, 59 (2021).

Poldrack, R. A. et al. Scanning the horizon: towards transparent and reproducible neuroimaging research. Nat. Rev. Neurosci. 18, 115–126 (2017).

Open Science Collaboration. Estimating the reproducibility of psychological science. Science 349, aac4716 (2015).

Munafò, M. R., Stothart, G. & Flint, J. Bias in genetic association studies and impact factor. Mol. Psychiatry 14, 119–120 (2009).

Greenwald, A. G. Consequences of prejudice against the null hypothesis. Psychol. Bull. 82, 1–20 (1975).

Gratton, C., Nelson, S. M. & Gordon, E. M. Brain-behavior correlations: two paths toward reliability. Neuron 110, 1446–1449 (2022).

Searle, A. K. et al. Tracing the long-term legacy of childhood lead exposure: a review of three decades of the Port Pirie cohort study. Neurotoxicology 43, 46–56 (2014).

Cohen, J. The earth is round (p < .05). Am. Psychol. 49, 997–1003 (1994).

Gigerenzer, G. Mindless statistics. J. Socio Econ. 33, 587–606 (2004).

Subbaswamy, A. & Saria, S. From development to deployment: dataset shift, causality, and shift-stable models in health AI. Biostatistics 21, 345–352 (2020).

Finlayson, S. G. et al. The clinician and dataset shift in artificial intelligence. N. Engl. J. Med. 385, 283–286 (2021).

Larrazabal, A. J., Nieto, N., Peterson, V., Milone, D. H. & Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl Acad. Sci. USA 117, 12592–12594 (2020).

Barch, D. M. et al. Common measures for National Institute of Mental Health funded research. Biol. Psychiatry 79, e91–e96 (2016).

Rosenberg, M. D. et al. A neuromarker of sustained attention from whole-brain functional connectivity. Nat. Neurosci. 19, 165–171 (2016).

Adkinson, B. D. et al. Brain-phenotype predictions can survive across diverse real-world data. Preprint at bioRxiv https://doi.org/10.1101/2024.01.23.576916 (2024).

Lea, A. S. & Jones, D. S. Mind the gap — machine learning, dataset shift, and history in the age of clinical algorithms. N. Engl. J. Med. 390, 293–295 (2024).

Ricard, J. A. et al. Confronting racially exclusionary practices in the acquisition and analyses of neuroimaging data. Nat. Neurosci. 26, 4–11 (2023).

Kuczmarski, R. J. et al. 2000 CDC Growth Charts for the United States: methods and development. Vital Health Stat. 11, 1–190 (2002).

Cole, T. J., Bellizzi, M. C., Flegal, K. M. & Dietz, W. H. Establishing a standard definition for child overweight and obesity worldwide: international survey. BMJ 320, 1240–1243 (2000).

Freedman, D. S. et al. Validity of the WHO cutoffs for biologically implausible values of weight, height, and BMI in children and adolescents in NHANES from 1999 through 2012. Am. J. Clin. Nutr. 102, 1000–1006 (2015).

Wechsler, D. WISC-V: Technical and Interpretive Manual (Pearson, 2014).

Bilker, W. B. et al. Development of abbreviated nine-item forms of the Raven’s Standard Progressive Matrices test. Assessment 19, 354–369 (2012).

Moore, T. M., Reise, S. P., Gur, R. E., Hakonarson, H. & Gur, R. C. Psychometric properties of the Penn Computerized Neurocognitive Battery. Neuropsychology 29, 235–246 (2015).

Tulsky, D. S. et al. NIH Toolbox Cognition Battery (NIHTB-CB): list sorting test to measure working memory. J. Int. Neuropsychol. Soc. 20, 599–610 (2014).

Gur, R. C. et al. A cognitive neuroscience-based computerized battery for efficient measurement of individual differences: standardization and initial construct validation. J. Neurosci. Methods 187, 254–262 (2010).

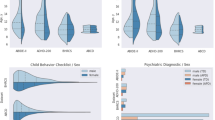

Achenbach, T. M. & Ruffle, T. M. The Child Behavior Checklist and related forms for assessing behavioral/emotional problems and competencies. Pediatr. Rev. 21, 265–271 (2000).

Miller, T. J. et al. Prodromal assessment with the structured interview for prodromal syndromes and the scale of prodromal symptoms: predictive validity, interrater reliability, and training to reliability. Schizophr. Bull. 29, 703–715 (2003).

Moore, T. M. et al. Development of a computerized adaptive screening tool for overall psychopathology (‘p’). J. Psychiatr. Res. 116, 26–33 (2019).

Papademetris, X. et al. BioImage Suite: an integrated medical image analysis suite: an update. Insight J. 2006, 209 (2006).

Rosenblatt, M., Tejavibulya, L., Jiang, R., Noble, S. & Scheinost, D. Data leakage inflates prediction performance in connectome-based machine learning models. Nat. Commun. 15, 1829 (2024).

Holmes, C. J. et al. Enhancement of MR images using registration for signal averaging. J. Comput. Assist. Tomogr. 22, 324–333 (1998).

Shen, X., Tokoglu, F., Papademetris, X. & Constable, R. T. Groupwise whole-brain parcellation from resting-state fMRI data for network node identification. Neuroimage 82, 403–415 (2013).

Snoek, L., Miletić, S. & Scholte, H. S. How to control for confounds in decoding analyses of neuroimaging data. Neuroimage 184, 741–760 (2019).

Chyzhyk, D., Varoquaux, G., Milham, M. & Thirion, B. How to remove or control confounds in predictive models, with applications to brain biomarkers. Gigascience 11, giac014 (2022).

Schulz, M.-A. et al. Different scaling of linear models and deep learning in UKBiobank brain images versus machine-learning datasets. Nat. Commun. 11, 4238 (2020).

Wu, J., Li, J., Eickhoff, S. B., Scheinost, D. & Genon, S. The challenges and prospects of brain-based prediction of behaviour. Nat. Hum. Behav. 7, 1255–1264 (2023).

Shen, X. et al. Using connectome-based predictive modeling to predict individual behavior from brain connectivity. Nat. Protoc. 12, 506–518 (2017).

Dwan, K. et al. Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS ONE 3, e3081 (2008).

Yeh, F.-C. et al. Population-averaged atlas of the macroscale human structural connectome and its network topology. Neuroimage 178, 57–68 (2018).

Yeh, F.-C., Wedeen, V. J. & Tseng, W.-Y. I. Generalized q-sampling imaging. IEEE Trans. Med. Imaging 29, 1626–1635 (2010).

Towns, J. et al. XSEDE: accelerating scientific discovery. Comput. Sci. Eng. 16, 62–74 (2014).

Gu, S. et al. The energy landscape of neurophysiological activity implicit in brain network structure. Sci. Rep. 8, 2507 (2018).

Sun, H. et al. Network controllability of structural connectomes in the neonatal brain. Nat. Commun. 14, 5820 (2023).

Yeh, F.-C., Verstynen, T. D., Wang, Y., Fernández-Miranda, J. C. & Tseng, W.-Y. I. Deterministic diffusion fiber tracking improved by quantitative anisotropy. PLoS ONE 8, e80713 (2013).

Spence, S. H., Barrett, P. M. & Turner, C. M. Psychometric properties of the Spence Children’s Anxiety Scale with young adolescents. J. Anxiety Disord. 17, 605–625 (2003).

Strike, L. T. et al. Queensland Twin Adolescent Brain (QTAB) non-imaging phenotypes. Zenodo https://doi.org/10.5281/zenodo.7765506 (2022).

Rosenblatt, M. External_validation_power. GitHub https://github.com/mattrosenblatt7/external_validation_power (2024).

Rosenblatt, M. External_validation_power: v1.0.0a. Zenodo https://doi.org/10.5281/zenodo.10975870 (2024).

Acknowledgements

This study was supported by the National Institute of Mental Health grant R01MH121095 (obtained by D.S.). M.R. was supported by the National Science Foundation Graduate Research Fellowship under grant DGE2139841. L.T. was supported by the Gruber Science Fellowship. C.C.C. was supported by the Gruber Science Fellowship and the National Science Foundation Graduate Research Fellowship under grant DGE2139841. B.D.A. was supported by NIH Medical Scientist Training Program Training Grant T32GM136651. S.N. was supported by the National Institute of Mental Health under grant R00MH130894. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript. Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect those of the funding agencies. The Healthy Brain Network (http://www.healthybrainnetwork.org) and its initiatives are supported by philanthropic contributions from the following individuals, foundations and organizations: Margaret Bilotti; Brooklyn Nets; Agapi and Bruce Burkard; James Chang; Phyllis Green and Randolph Cōwen; Grieve Family Fund; Susan Miller and Byron Grote; Sarah and Geoff Gund; George Hall; Jonathan M. Harris Family Foundation; Joseph P. Healey; The Hearst Foundations; Eve and Ross Jaffe; Howard & Irene Levine Family Foundation; Rachael and Marshall Levine; George and Nitzia Logothetis; Christine and Richard Mack; Julie Minskoff; Valerie Mnuchin; Morgan Stanley Foundation; Amy and John Phelan; Roberts Family Foundation; Jim and Linda Robinson Foundation, Inc.; The Schaps Family; Zibby Schwarzman; Abigail Pogrebin and David Shapiro; Stavros Niarchos Foundation; Preethi Krishna and Ram Sundaram; Amy and John Weinberg; Donors to the 2013 Child Advocacy Award Dinner Auction; Donors to the 2012 Brant Art Auction. 
Additional data used in the preparation of this article were obtained from the Adolescent Brain Cognitive Development (ABCD) Study (https://abcdstudy.org), held in the NIMH Data Archive (NDA). This is a multisite, longitudinal study designed to recruit more than 10,000 children aged 9–11 and follow them over 10 years into early adulthood. The ABCD Study® is supported by the National Institutes of Health and additional federal partners under award numbers U01DA041048, U01DA050989, U01DA051016, U01DA041022, U01DA051018, U01DA051037, U01DA050987, U01DA041174, U01DA041106, U01DA041117, U01DA041028, U01DA041134, U01DA050988, U01DA051039, U01DA041156, U01DA041025, U01DA041120, U01DA051038, U01DA041148, U01DA041093, U01DA041089, U24DA04112 and U24DA041147. A full list of supporters is available at https://abcdstudy.org/federal-partners.html. A listing of participating sites and a complete listing of the study investigators can be found at https://abcdstudy.org/consortium_members/. ABCD consortium investigators designed and implemented the study and/or provided data but did not necessarily participate in the analysis or writing of this report. This manuscript reflects the views of the authors and may not reflect the opinions or views of the NIH or ABCD consortium investigators. The Human Connectome Project Development data were supported by the National Institute of Mental Health of the National Institutes of Health under Award Number U01MH109589 and by funds provided by the McDonnell Center for Systems Neuroscience at Washington University in St Louis. The HCP-Development 2.0 Release data used in this report came from https://doi.org/10.15154/1520708. Additional data were provided by the PNC (principal investigators H. Hakonarson and R. Gur; phs000607.v1.p1). Support for the collection of these datasets was provided by grant RC2MH089983 awarded to R. Gur and RC2MH089924 awarded to H. Hakonarson.
This research has been conducted in part using the QTAB project resource, which was funded by the National Health and Medical Research Council (NHMRC), Australia (Project Grant ID: 1078756 to M.L.W.), the Queensland Brain Institute, University of Queensland, and with the assistance of resources from the Centre for Advanced Imaging and the Queensland Cyber Infrastructure Foundation, University of Queensland. Additional data were provided in part by the Chinese Human Connectome Project (CHCP, PI: J.-H. Gao) funded by the Beijing Municipal Science and Technology Commission, Chinese Institute for Brain Research (Beijing), National Natural Science Foundation of China, and the Ministry of Science and Technology of China. Data were provided in part by the HCP, WU-Minn Consortium (principal investigators D. Van Essen and K. Ugurbil; 1U54MH091657) funded by the 16 National Institutes of Health institutes and centres that support the National Institutes of Health Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

Author information

Contributions

M.R., S.N. and D.S. conceptualized the study. L.T., M.R., H.S., M.K., B.D.A. and D.S. curated the data. M.R. performed the formal analysis. M.R. and D.S. drafted the manuscript. L.T., H.S., C.C.C., M.K., B.D.A., R.J., M.L.W., S.N. and D.S. reviewed and edited the manuscript. M.R., R.J. and D.S. contributed to the visualizations. D.S. supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Human Behaviour thanks Camille Maumet and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–67, Tables 1–12 and brief descriptions/discussion where necessary.

Source data

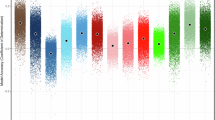

Source Data Fig. 1

Aggregated summary statistics (median, 2.5th percentile, 97.5th percentile of Pearson’s r) for internal validation performance grouped by training dataset, sample size and phenotype.
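
Source Data Figs. 1 and 2 report the median and the 2.5th and 97.5th percentiles of Pearson's r per group. A minimal standard-library sketch of that kind of grouped summary follows. It assumes each resample is stored as a dict with hypothetical keys such as "train_dataset", "n_train", "phenotype" and "r"; the percentile uses linear interpolation between sorted values (the same convention as NumPy's default), which may differ from the authors' choice.

```python
from collections import defaultdict
from statistics import median


def percentile(values, q):
    """q-th percentile (0-100) by linear interpolation between sorted values."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def summarize_r(records, keys=("train_dataset", "n_train", "phenotype")):
    """Median and 2.5th/97.5th percentiles of r for each group of records.

    Each record is a dict; the key names are hypothetical placeholders.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[tuple(rec[k] for k in keys)].append(rec["r"])
    return {
        group: {
            "median": median(rs),
            "p2.5": percentile(rs, 2.5),
            "p97.5": percentile(rs, 97.5),
        }
        for group, rs in groups.items()
    }
```

The percentile pair gives a 95% interval of performance across resamples for each group, so narrow intervals indicate that a given training sample size yields stable predictions.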

Source Data Fig. 2

Aggregated performance summary statistics (median, 2.5th percentile, 97.5th percentile of Pearson’s r) for external validation grouped by training dataset, test dataset, training sample size, test sample size and phenotype.

Source Data Fig. 3

Statistical power for external validation grouped by training dataset, test dataset, training sample size, test sample size and phenotype.
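
Simulated power, as summarized in Source Data Fig. 3, can be read as the fraction of resamples in a group whose external-validation test is significant. The sketch below assumes each record carries a p-value under the hypothetical key "p" alongside the grouping keys, and a significance threshold of 0.05; both are illustrative assumptions rather than details confirmed by this section.

```python
from collections import defaultdict


def power_by_group(records, keys=("train_dataset", "test_dataset",
                                  "n_train", "n_test", "phenotype"),
                   alpha=0.05):
    """Fraction of resamples per group with p < alpha (simulated power).

    Record layout and key names are hypothetical placeholders.
    """
    tallies = defaultdict(lambda: [0, 0])  # group -> [significant, total]
    for rec in records:
        tally = tallies[tuple(rec[k] for k in keys)]
        tally[0] += int(rec["p"] < alpha)
        tally[1] += 1
    return {group: sig / total for group, (sig, total) in tallies.items()}
```

A group reaching a value of 0.8 or more would conventionally be described as adequately powered.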

Source Data Fig. 4

Statistical power for external validation grouped by training sample size, test sample size and effect size group (high, medium, low).

Source Data Fig. 5

Effect size inflation for external validation performance grouped by training dataset, test dataset, training sample size, test sample size and phenotype.
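
Effect size inflation refers to significant results overstating the true effect, the winner's curse described by Ioannidis (2008) in the reference list. The exact definition behind Source Data Figs. 5 and 6 is not given in this section, so the function below uses one simple, hypothetical measure: the mean r across significant resamples minus the mean r across all resamples.

```python
from statistics import mean


def effect_size_inflation(rs, ps, alpha=0.05):
    """Mean r of significant resamples minus mean r of all resamples.

    HYPOTHETICAL definition for illustration; returns None when no
    resample is significant.
    """
    significant = [r for r, p in zip(rs, ps) if p < alpha]
    if not significant:
        return None
    return mean(significant) - mean(rs)
```

A positive value means that significant results overstate typical performance; with small external samples, only the luckier resamples clear the threshold, so this gap tends to grow.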

Source Data Fig. 6

Effect size inflation for external validation grouped by training sample size, test sample size and effect size group (high, medium, low).

Source Data Fig. 7

Difference between internal and external validation performance grouped by training dataset, test dataset, training sample size and phenotype.

Source Data Fig. 8

Statistical power and effect size inflation for external validation (structural connectivity datasets) grouped by training sample size, test sample size and effect size group (high, medium, low).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rosenblatt, M., Tejavibulya, L., Sun, H. et al. Power and reproducibility in the external validation of brain-phenotype predictions. Nat Hum Behav 8, 2018–2033 (2024). https://doi.org/10.1038/s41562-024-01931-7

DOI: https://doi.org/10.1038/s41562-024-01931-7