Abstract

There is a growing focus on making clinical trials more inclusive, but the design of trial eligibility criteria remains challenging (refs. 1–3). Here we systematically evaluate the effect of different eligibility criteria on cancer trial populations and outcomes with real-world data, using the computational framework Trial Pathfinder. We apply Trial Pathfinder to emulate completed trials of advanced non-small-cell lung cancer using data from a nationwide database of electronic health records comprising 61,094 patients with advanced non-small-cell lung cancer. Our analyses reveal that many common criteria, including exclusions based on several laboratory values, had a minimal effect on the trial hazard ratios. When we used a data-driven approach to broaden restrictive criteria, the pool of eligible patients more than doubled on average and the hazard ratio of overall survival decreased by an average of 0.05. This suggests that many patients who were not eligible under the original trial criteria could potentially benefit from the treatments. We further support our findings through analyses of other types of cancer and of patient-safety data from diverse clinical trials. Our data-driven methodology for evaluating eligibility criteria can facilitate the design of more-inclusive trials while maintaining safeguards for patient safety.
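Broadening criteria rests on a simple property: if a patient is eligible only when every criterion holds, dropping a criterion can only keep or enlarge the eligible pool. The Python sketch below illustrates this under stated assumptions: each criterion is modelled as a predicate over a patient record, and the field names, cut-offs and toy patients are hypothetical. It does not implement Trial Pathfinder's data-driven rule for choosing which criteria to relax.

```python
from typing import Callable, Dict, List

# A patient record maps hypothetical field names to values.
Patient = Dict[str, float]
Criterion = Callable[[Patient], bool]

# Hypothetical criteria for illustration; field names and cut-offs are assumptions.
CRITERIA: Dict[str, Criterion] = {
    "ecog_0_or_1": lambda p: p["ecog"] <= 1,
    "bilirubin_at_most_1.5x_uln": lambda p: p["bilirubin_x_uln"] <= 1.5,
    "no_brain_metastases": lambda p: p["brain_mets"] == 0,
}


def eligible(patients: List[Patient], criteria: Dict[str, Criterion]) -> List[Patient]:
    """Patients who satisfy every criterion (criteria are combined with AND)."""
    return [p for p in patients if all(rule(p) for rule in criteria.values())]


def relax(criteria: Dict[str, Criterion], drop: List[str]) -> Dict[str, Criterion]:
    """Copy of the criteria with the named criteria removed."""
    return {name: rule for name, rule in criteria.items() if name not in drop}


patients: List[Patient] = [
    {"ecog": 0, "bilirubin_x_uln": 1.0, "brain_mets": 0},
    {"ecog": 1, "bilirubin_x_uln": 2.0, "brain_mets": 0},
    {"ecog": 2, "bilirubin_x_uln": 1.0, "brain_mets": 0},
    {"ecog": 1, "bilirubin_x_uln": 1.2, "brain_mets": 1},
]

original = eligible(patients, CRITERIA)
broadened = eligible(patients, relax(CRITERIA, ["bilirubin_at_most_1.5x_uln", "no_brain_metastases"]))
print(len(original), len(broadened))  # 1 3
```

Because every patient in the original pool still passes the smaller set of criteria, the broadened pool is always a superset; which criteria are worth relaxing is the question the outcome analysis has to answer.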


Data availability

The Flatiron Health data and the Flatiron Health–Foundation Medicine clinico-genomic database (FH-FMI CGDB) data used in this study were licensed from Flatiron Health (https://flatiron.com/real-world-evidence/) and Foundation Medicine. These de-identified data may be made available upon request; interested researchers can contact DataAccess@flatiron.com and cgdb-fmi@flatiron.com. Information on the clinical studies can be found on ClinicalTrials.gov and EudraCT.

Code availability

The open source Python code for Trial Pathfinder is available on GitHub (https://github.com/RuishanLiu/TrialPathfinder).

References

Food and Drug Administration. Enhancing the Diversity of Clinical Trial Populations – Eligibility Criteria, Enrollment Practices, and Trial Designs Guidance for Industry. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/enhancing-diversity-clinical-trial-populations-eligibility-criteria-enrollment-practices-and-trial (2020).

Van Spall, H. G., Toren, A., Kiss, A. & Fowler, R. A. Eligibility criteria of randomized controlled trials published in high-impact general medical journals: a systematic sampling review. J. Am. Med. Assoc. 297, 1233–1240 (2007).

Fehrenbacher, L., Ackerson, L. & Somkin, C. Randomized clinical trial eligibility rates for chemotherapy (CT) and antiangiogenic therapy (AAT) in a population-based cohort of newly diagnosed non-small cell lung cancer (NSCLC) patients. J. Clin. Oncol. 27, 6538 (2009).

Huang, G. D. et al. Clinical trials recruitment planning: a proposed framework from the Clinical Trials Transformation Initiative. Contemp. Clin. Trials 66, 74–79 (2018).

National Cancer Institute. Report of the National Cancer Institute Clinical Trials Program Review Group. http://deainfo.nci.nih.gov/advisory/bsa/bsa_program/bsactprgmin.pdf (2017).

Mendelsohn, J. et al. A National Cancer Clinical Trials System for the 21st Century: reinvigorating the NCI Cooperative Group Program (National Academies Press, 2010).

George, S. L. Reducing patient eligibility criteria in cancer clinical trials. J. Clin. Oncol. 14, 1364–1370 (1996).

Fuks, A. et al. A study in contrasts: eligibility criteria in a twenty-year sample of NSABP and POG clinical trials. J. Clin. Epidemiol. 51, 69–79 (1998).

Kim, E. S. et al. Modernizing eligibility criteria for molecularly driven trials. J. Clin. Oncol. 33, 2815–2820 (2015).

Kim, E. S. et al. Broadening eligibility criteria to make clinical trials more representative: American Society of Clinical Oncology and Friends of Cancer Research Joint Research Statement. J. Clin. Oncol. 35, 3737–3744 (2017).

Labrecque, J. A. & Swanson, S. A. Target trial emulation: teaching epidemiology and beyond. Eur. J. Epidemiol. 32, 473–475 (2017).

Danaei, G., García Rodríguez, L. A., Cantero, O. F., Logan, R. W. & Hernán, M. A. Electronic medical records can be used to emulate target trials of sustained treatment strategies. J. Clin. Epidemiol. 96, 12–22 (2018).

Woo, M. An AI boost for clinical trials. Nature 573, S100–S102 (2019).

Kang, T. et al. EliIE: an open-source information extraction system for clinical trial eligibility criteria. J. Am. Med. Inform. Assoc. 24, 1062–1071 (2017).

Ni, Y. et al. Increasing the efficiency of trial–patient matching: automated clinical trial eligibility pre-screening for pediatric oncology patients. BMC Med. Inform. Decis. Mak. 15, 28 (2015).

Jonnalagadda, S. R., Adupa, A. K., Garg, R. P., Corona-Cox, J. & Shah, S. J. Text mining of the electronic health record: an information extraction approach for automated identification and subphenotyping of HFPEF patients for clinical trials. J. Cardiovasc. Transl. Res. 10, 313–321 (2017).

Ni, Y. et al. Will they participate? Predicting patients’ response to clinical trial invitations in a pediatric emergency department. J. Am. Med. Inform. Assoc. 23, 671–680 (2016).

Miotto, R. & Weng, C. Case-based reasoning using electronic health records efficiently identifies eligible patients for clinical trials. J. Am. Med. Inform. Assoc. 22, e141–e150 (2015).

Yuan, C. et al. Criteria2Query: a natural language interface to clinical databases for cohort definition. J. Am. Med. Inform. Assoc. 26, 294–305 (2019).

Zhang, K. & Demner-Fushman, D. Automated classification of eligibility criteria in clinical trials to facilitate patient–trial matching for specific patient populations. J. Am. Med. Inform. Assoc. 24, 781–787 (2017).

Shivade, C. et al. Textual inference for eligibility criteria resolution in clinical trials. J. Biomed. Inform. 58, S211–S218 (2015).

Sen, A. et al. Correlating eligibility criteria generalizability and adverse events using big data for patients and clinical trials. Ann. NY Acad. Sci. 1387, 34–43 (2017).

Li, Q. et al. Assessing the validity of a a priori patient–trial generalizability score using real-world data from a large clinical data research network: a colorectal cancer clinical trial case study. AMIA Annu. Symp. Proc. 2019, 1101–1110 (2019).

Kim, J. H. et al. Towards clinical data-driven eligibility criteria optimization for interventional COVID-19 clinical trials. J. Am. Med. Inform. Assoc. 28, 14–22 (2021).

Abernethy, A. P. et al. Real-world first-line treatment and overall survival in non-small cell lung cancer without known EGFR mutations or ALK rearrangements in US community oncology setting. PLoS ONE 12, e0178420 (2017).

Khozin, S. et al. Real-world progression, treatment, and survival outcomes during rapid adoption of immunotherapy for advanced non-small cell lung cancer. Cancer 125, 4019–4032 (2019).

Ma, X. et al. Comparison of population characteristics in real-world clinical oncology databases in the US: Flatiron Health, SEER, and NPCR. Preprint at https://doi.org/10.1101/2020.03.16.20037143 (2020).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems 4765–4774 (2017).

Soria, J.-C. et al. Osimertinib in untreated EGFR-mutated advanced non-small-cell lung cancer. N. Engl. J. Med. 378, 113–125 (2018).

Soria, J.-C. et al. Afatinib versus erlotinib as second-line treatment of patients with advanced squamous cell carcinoma of the lung (LUX-Lung 8): an open-label randomised controlled phase 3 trial. Lancet Oncol. 16, 897–907 (2015).

Brahmer, J. et al. Nivolumab versus docetaxel in advanced squamous-cell non-small-cell lung cancer. N. Engl. J. Med. 373, 123–135 (2015).

Borghaei, H. et al. Nivolumab versus docetaxel in advanced nonsquamous non-small-cell lung cancer. N. Engl. J. Med. 373, 1627–1639 (2015).

Wu, Y.-L. et al. Nivolumab versus docetaxel in a predominantly Chinese patient population with previously treated advanced NSCLC: CheckMate 078 randomized phase III clinical trial. J. Thorac. Oncol. 14, 867–875 (2019).

Herbst, R. S. et al. Pembrolizumab versus docetaxel for previously treated, PD-L1-positive, advanced non-small-cell lung cancer (KEYNOTE-010): a randomised controlled trial. Lancet 387, 1540–1550 (2016).

Gandhi, L. et al. Pembrolizumab plus chemotherapy in metastatic non-small-cell lung cancer. N. Engl. J. Med. 378, 2078–2092 (2018).

Paz-Ares, L. et al. Pembrolizumab plus chemotherapy for squamous non-small-cell lung cancer. N. Engl. J. Med. 379, 2040–2051 (2018).

Zhou, C. et al. BEYOND: a randomized, double-blind, placebo-controlled, multicenter, phase III study of first-line carboplatin/paclitaxel plus bevacizumab or placebo in Chinese patients with advanced or recurrent nonsquamous non-small-cell lung cancer. J. Clin. Oncol. 33, 2197–2204 (2015).

Rittmeyer, A. et al. Atezolizumab versus docetaxel in patients with previously treated non-small-cell lung cancer (OAK): a phase 3, open-label, multicentre randomised controlled trial. Lancet 389, 255–265 (2017).

Curtis, M. D. et al. Development and validation of a high-quality composite real-world mortality endpoint. Health Serv. Res. 53, 4460–4476 (2018).

Carrigan, G. et al. An evaluation of the impact of missing deaths on overall survival analyses of advanced non-small cell lung cancer patients conducted in an electronic health records database. Pharmacoepidemiol. Drug Saf. 28, 572–581 (2019).

Suissa, S. Immortal time bias in pharmaco-epidemiology. Am. J. Epidemiol. 167, 492–499 (2008).

Ghorbani, A. & Zou, J. Data Shapley: equitable valuation of data for machine learning. In International Conference on Machine Learning 2242–2251 (2019).

Singal, G. et al. Association of patient characteristics and tumor genomics with clinical outcomes among patients with non-small cell lung cancer using a clinicogenomic database. J. Am. Med. Assoc. 321, 1391–1399 (2019).

Frampton, G. M. et al. Development and validation of a clinical cancer genomic profiling test based on massively parallel DNA sequencing. Nat. Biotechnol. 31, 1023–1031 (2013).

Acknowledgements

We thank T. Ton, D. Hibar, A. Bier, D. Heinzmann, M. Beattie, A. Kelman, M. Heidelberg, J. Law and L. Tian for comments and discussions; M. D’Andrea, M. Lim and H. Rangi for help with the clinical trials data and M. Hwang for administrative support. S.R., S.W., N.P., A.L.P., M.L., B.A., W.C. and R.C. are supported by funding from Roche. J.Z. is supported by NSF CAREER and grants from the Chan-Zuckerberg Initiative. Y.L. is supported by 1UL1TR003142 and 4P30CA124435 from National Institutes of Health.

Author information


Contributions

R.L., A.L.P., S.R., S.W., R.C. and J.Z. designed the study. R.L., S.R., S.W. and N.P. carried out the analysis. R.L. and S.R. wrote the code. M.L., B.A., Y.L. and W.C. provided clinical interpretations. R.L., S.R., S.W., N.P., R.C. and J.Z. drafted the manuscript. R.C. and J.Z. supervised the study. All of the authors provided discussion points, and reviewed and approved the final manuscript.


Ethics declarations

Competing interests

A.L.P., S.R., M.L., B.A., N.P., S.W., R.C. and W.C. are employees of Genentech, a member of the Roche Group. R.L., Y.L. and J.Z. declare no competing interests.

Additional information

Peer review information Nature thanks Richard Hooper and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

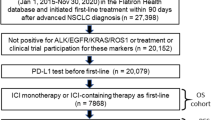

Extended Data Fig. 1 Selection of advanced non-small-cell lung cancer (aNSCLC) clinical trials.

Workflow implemented in a Python script to perform a systematic selection of trials using the six filters described in the Methods. Twenty clinical trials met the first five filters, but only six of them had a protocol that was publicly available, either on ClinicalTrials.gov or as supplementary material in the associated publications. Four additional trials, suggested by subject-matter experts at Roche, were also included in the analysis. Our systematic search had not originally identified these four trials owing to errors in their ClinicalTrials.gov entries (for example, one trial was listed as having eight arms despite having only two).
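The workflow applies filters in sequence and records how many trials survive each step, then adds expert-suggested trials that the registry-based search missed. A minimal Python sketch of that pattern follows, assuming each filter is a predicate over a trial record. The six actual filters are defined in the Methods and are not reproduced here; the filter names, record fields and toy trials below are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Trial = Dict[str, object]
Filter = Tuple[str, Callable[[Trial], bool]]


def apply_filters(trials: List[Trial], filters: List[Filter]) -> Tuple[List[Trial], List[Tuple[str, int]]]:
    """Apply filters in order, recording how many trials remain after each step."""
    remaining = list(trials)
    funnel = [("all trials", len(remaining))]
    for name, keep in filters:
        remaining = [t for t in remaining if keep(t)]
        funnel.append((name, len(remaining)))
    return remaining, funnel


# Hypothetical filters standing in for those described in the Methods.
FILTERS: List[Filter] = [
    ("condition is aNSCLC", lambda t: t["condition"] == "aNSCLC"),
    ("phase 3", lambda t: t["phase"] == 3),
    ("two arms", lambda t: t["n_arms"] == 2),
    ("protocol public", lambda t: bool(t["protocol_public"])),
]

trials: List[Trial] = [
    {"id": "T1", "condition": "aNSCLC", "phase": 3, "n_arms": 2, "protocol_public": True},
    {"id": "T2", "condition": "aNSCLC", "phase": 3, "n_arms": 2, "protocol_public": False},
    # Registry entry lists eight arms although the trial has two, so the automated search drops it.
    {"id": "T3", "condition": "aNSCLC", "phase": 3, "n_arms": 8, "protocol_public": True},
    {"id": "T4", "condition": "SCLC", "phase": 3, "n_arms": 2, "protocol_public": True},
]

selected, funnel = apply_filters(trials, FILTERS)
# Trials suggested by experts are appended after the automated search, without duplicates.
expert_added = [t for t in trials if t["id"] == "T3"]
final = selected + [t for t in expert_added if t not in selected]

for step, count in funnel:
    print(f"{step}: {count}")
print([t["id"] for t in final])
```

Logging the count after every filter makes it easy to see which step removes the most trials, which is the kind of funnel the figure summarizes.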

Extended Data Fig. 2 Differential use of eligibility criteria.

The trial and criteria grid shows which eligibility criteria are present in each aNSCLC trial (criteria coloured in yellow are included in the trial protocol). The trials are divided into first-line and second-line therapies, depending on their protocol design; the eligibility criteria are grouped into categories depending on the type of variable that is measured.

Extended Data Fig. 3 Balance assessment for treatment and control groups.

a–j, For each aNSCLC trial, we plot the standardized mean difference (SMD) of every patient covariate between the treatment and control cohorts generated from the Flatiron data. SMD values close to 0 indicate that the cohorts are balanced. The inverse probability of treatment weighting (IPTW) used in our analysis effectively balances the cohorts. ‘Raw’ corresponds to the unadjusted cohorts.
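To show how a covariate's SMD is computed with and without IPTW, here is a minimal pure-Python sketch. It assumes the propensity scores are already available (in practice they would be estimated, for example by a logistic regression of treatment on baseline covariates), uses average-treatment-effect weights of 1/e for treated and 1/(1 − e) for control patients, and uses a weighted population variance in the pooled standard deviation. These are common conventions, not necessarily the exact choices made in the paper's Methods, and the toy covariate and propensity values are hypothetical.

```python
import math
from typing import List, Optional, Tuple


def weighted_mean_var(x: List[float], w: List[float]) -> Tuple[float, float]:
    """Weighted mean and (population-style) weighted variance."""
    total = sum(w)
    m = sum(wi * xi for wi, xi in zip(w, x)) / total
    v = sum(wi * (xi - m) ** 2 for wi, xi in zip(w, x)) / total
    return m, v


def smd(x_t: List[float], x_c: List[float],
        w_t: Optional[List[float]] = None, w_c: Optional[List[float]] = None) -> float:
    """Standardized mean difference, treated minus control; unit weights if none are given."""
    if w_t is None:
        w_t = [1.0] * len(x_t)
    if w_c is None:
        w_c = [1.0] * len(x_c)
    m_t, v_t = weighted_mean_var(x_t, w_t)
    m_c, v_c = weighted_mean_var(x_c, w_c)
    pooled_sd = math.sqrt((v_t + v_c) / 2)
    if pooled_sd == 0:
        return 0.0 if m_t == m_c else math.inf
    return (m_t - m_c) / pooled_sd


def iptw_weights(propensity: List[float], treated: bool) -> List[float]:
    """ATE-style IPTW weights: 1/e for treated patients, 1/(1 - e) for controls."""
    return [1 / e if treated else 1 / (1 - e) for e in propensity]


# Toy cohorts with one binary covariate that is more common in the treated arm.
x_t = [1.0] * 8 + [0.0] * 2
x_c = [1.0] * 2 + [0.0] * 8
# Assumed (not estimated) propensity scores: P(treated | x) = 0.8 if x == 1 else 0.2.
e_t = [0.8 if x else 0.2 for x in x_t]
e_c = [0.8 if x else 0.2 for x in x_c]

raw = smd(x_t, x_c)
adjusted = smd(x_t, x_c, iptw_weights(e_t, True), iptw_weights(e_c, False))
print(f"raw SMD = {raw:.2f}, IPTW SMD = {abs(adjusted):.2f}")  # raw SMD = 1.50, IPTW SMD = 0.00
```

In this toy case the weights exactly undo the imbalance, so the weighted SMD is zero; with real data and estimated propensities the weighted SMDs are typically small rather than exactly zero.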

Extended Data Fig. 4 Convergence of the Shapley value for the bilirubin criterion.

The x axis indicates the number of randomly generated subsets of criteria used for Shapley value computation.
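The convergence plot tracks how a sampled Shapley estimate stabilizes as more random orderings of the criteria are evaluated. Below is a minimal sketch of a standard permutation-sampling Monte Carlo estimator, assuming `value(S)` returns a trial outcome (for instance, the emulated hazard ratio) when the set of criteria `S` is applied. The function names and the toy value function are hypothetical, and Trial Pathfinder's own sampling scheme may differ from this one.

```python
import random
from typing import Callable, Dict, FrozenSet, List


def shapley_monte_carlo(players: List[str],
                        value: Callable[[FrozenSet[str]], float],
                        n_permutations: int,
                        seed: int = 0) -> Dict[str, float]:
    """Estimate Shapley values by averaging marginal contributions over random orderings."""
    rng = random.Random(seed)
    totals = {p: 0.0 for p in players}
    for _ in range(n_permutations):
        order = list(players)
        rng.shuffle(order)
        coalition: FrozenSet[str] = frozenset()
        previous = value(coalition)
        for p in order:
            coalition = coalition | {p}
            current = value(coalition)
            totals[p] += current - previous  # marginal contribution of p in this ordering
            previous = current
    return {p: total / n_permutations for p, total in totals.items()}


# Hypothetical value function: the outcome changes only when criteria A and B are both applied;
# C never matters. The exact Shapley values are A = B = 0.5 and C = 0.
def toy_value(criteria: FrozenSet[str]) -> float:
    return 1.0 if {"A", "B"} <= criteria else 0.0


for n in (10, 100, 1000, 10000):
    est = shapley_monte_carlo(["A", "B", "C"], toy_value, n_permutations=n)
    print(n, round(est["A"], 3), round(est["B"], 3), est["C"])
```

Each ordering costs one value evaluation per criterion, which matters when every evaluation means re-running a trial emulation; the printed estimates for A and B approach 0.5 as the number of orderings grows.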

Extended Data Fig. 5 Example of the effect of relaxing the eligibility criteria.

a–c, Survival curves, hazard ratios and the number of patients in the KEYNOTE-189 trial under three eligibility-criteria scenarios: the original trial criteria (a), fully relaxed criteria (that is, all of the patients who received the relevant treatments) (b) and the data-driven criteria identified by Trial Pathfinder (c).
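Survival curves such as those in these panels are conventionally Kaplan–Meier estimates computed from each patient's follow-up time and death indicator. The sketch below is an unweighted Kaplan–Meier estimator in plain Python; it does not reproduce the paper's weighting or its hazard-ratio model, and the follow-up data in the usage example are hypothetical.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], events: List[int]) -> List[Tuple[float, float]]:
    """Unweighted Kaplan–Meier survival estimate at each distinct death time.

    events[i] is 1 if patient i died at times[i] and 0 if censored at that time.
    Patients censored at a death time are still counted as at risk at that time.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = 1.0
    curve: List[Tuple[float, float]] = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = censored = 0
        # Group all patients sharing this time point.
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                deaths += 1
            else:
                censored += 1
            i += 1
        if deaths:
            survival *= 1 - deaths / n_at_risk
            curve.append((t, survival))
        n_at_risk -= deaths + censored
    return curve


# Hypothetical follow-up times (months) and death indicators for one emulated arm.
times = [2.0, 3.5, 3.5, 5.0, 7.0, 8.5, 12.0]
events = [1, 1, 0, 1, 0, 1, 0]
for t, s in kaplan_meier(times, events):
    print(f"month {t}: S(t) = {s:.3f}")
```

Computing one curve per arm and per criteria scenario (original, fully relaxed, data-driven) gives the kind of side-by-side comparison the panels show.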

Extended Data Fig. 6 Comparison of patient baselines.

a–e, Violin plots of the patients’ laboratory values at the start of treatment. We partition the sampled patients with aNSCLC from the Flatiron database into two groups depending on whether or not they withdrew from first-line aNSCLC treatment owing to toxicity (82 patients with toxicity = true and 918 patients with toxicity = false). Each violin shows the distribution of one laboratory value. There is no significant difference in the baseline laboratory values between patients who later withdrew from treatment owing to toxicity and patients who did not (unadjusted two-sided Student’s t-test; P > 0.2 for all five laboratory tests).
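The comparison above uses an unadjusted two-sided Student’s t-test, which pools the variances of the two groups. The sketch below computes the t statistic and degrees of freedom with Python's standard library. Because the standard library has no t-distribution, the P value here is a normal approximation, which is close only for large degrees of freedom (such as the 998 implied by 82 and 918 patients); an exact value could instead come from a statistics package, for example `scipy.stats.ttest_ind` with its default `equal_var=True`. The function name and the simulated laboratory values are hypothetical.

```python
import math
import random
from statistics import mean, variance
from typing import List, Tuple


def students_t_test(a: List[float], b: List[float]) -> Tuple[float, int, float]:
    """Two-sided, pooled-variance Student's t-test.

    Returns (t, degrees of freedom, approximate P). The P value uses a normal
    approximation to the t distribution, reasonable only when df is large.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    p_approx = math.erfc(abs(t) / math.sqrt(2))  # 2 * (1 - Phi(|t|))
    return t, df, p_approx


# Simulated baseline values for groups sized like those in the caption (82 vs 918).
rng = random.Random(0)
toxicity = [rng.gauss(1.0, 0.3) for _ in range(82)]
no_toxicity = [rng.gauss(1.0, 0.3) for _ in range(918)]
t, df, p = students_t_test(toxicity, no_toxicity)
print(f"t = {t:.2f}, df = {df}, approximate two-sided P = {p:.2f}")
```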

Extended Data Fig. 7 Effects of varying laboratory cut-off values.

a–d, Changes in the Shapley value of the overall-survival hazard ratio for different laboratory-value thresholds. The x axis corresponds to different values of the inclusion threshold for bilirubin (serum bilirubin less than the threshold for inclusion) (a), platelets (platelet count larger than the threshold) (b), haemoglobin (whole-blood haemoglobin level less than the threshold) (c) and alkaline phosphatase (ALP; ALP concentration larger than the threshold) (d). Moving a threshold to the right on the x axis corresponds to more relaxed criteria that would include more patients. The thresholds used in the original trials are provided in the key, and their Shapley values are set as the baseline 0. For most of the trials, relaxing the laboratory-value thresholds would either not significantly change the hazard ratio or would decrease it (that is, the curve lies below 0). The range of values shown for each laboratory test corresponds to the range of thresholds used in actual trials (Supplementary Table 35). In all of the panels, the error bars correspond to the bootstrap standard deviation and the centres correspond to the bootstrap mean of five replications.
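The error bars summarize a statistic recomputed on bootstrap resamples of the patients (five replications per point). A minimal sketch of that summary step follows. To stay self-contained, it uses a stand-in statistic, the fraction of patients passing a hypothetical “bilirubin < threshold” rule, rather than the Shapley value of the hazard ratio, which would require re-running the full trial emulation on every resample. The function name, the simulated bilirubin values and the thresholds are assumptions.

```python
import random
from statistics import mean, stdev
from typing import Callable, List, Sequence, Tuple


def bootstrap_summary(data: Sequence[float],
                      statistic: Callable[[Sequence[float]], float],
                      n_reps: int = 5,
                      seed: int = 0) -> Tuple[float, float]:
    """Return (mean, standard deviation) of a statistic over bootstrap resamples."""
    rng = random.Random(seed)
    estimates: List[float] = []
    for _ in range(n_reps):
        resample = rng.choices(data, k=len(data))  # sample patients with replacement
        estimates.append(statistic(resample))
    return mean(estimates), stdev(estimates)


# Simulated bilirubin values (multiples of the upper limit of normal) for a toy cohort.
rng = random.Random(1)
bilirubin = [rng.lognormvariate(0, 0.4) for _ in range(500)]

for threshold in (1.0, 1.5, 2.0):
    centre, spread = bootstrap_summary(
        bilirubin, lambda xs: sum(x < threshold for x in xs) / len(xs))
    print(f"threshold {threshold}: eligible fraction {centre:.3f} ± {spread:.3f}")
```

Reusing the same seed for every threshold means each threshold is evaluated on the same resamples, so differences between points reflect the threshold rather than resampling noise.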

Supplementary information

Supplementary Information

This file contains Supplementary Methods, Supplementary Tables 1–39, and Supplementary Discussion.


Cite this article

Liu, R., Rizzo, S., Whipple, S. et al. Evaluating eligibility criteria of oncology trials using real-world data and AI. Nature 592, 629–633 (2021). https://doi.org/10.1038/s41586-021-03430-5

