Abstract

Multiplexed imaging offers a powerful approach to characterize the spatial topography of tissues in both health and disease. To analyze such data, the specific combination of markers that are present in each cell must be enumerated to enable accurate phenotyping, a process that often relies on unsupervised clustering. We constructed the Pan-Multiplex (Pan-M) dataset containing 197 million distinct annotations of marker expression across 15 different cell types. We used Pan-M to create Nimbus, a deep learning model to predict marker positivity from multiplexed image data. Nimbus is a pretrained model that uses the underlying images to classify marker expression of individual cells as positive or negative across distinct cell types, from different tissues, acquired using different microscope platforms, without requiring any retraining. We demonstrate that Nimbus predictions capture the underlying staining patterns of the full diversity of markers present in Pan-M, and that Nimbus matches or exceeds the accuracy of previous approaches that must be retrained on each dataset. We then show how Nimbus predictions can be integrated with downstream clustering algorithms to robustly identify cell subtypes in image data. We have open-sourced Nimbus and Pan-M to enable community use at https://github.com/angelolab/Nimbus-Inference.

Data availability

The Pan-M dataset can be downloaded from Hugging Face at https://huggingface.co/datasets/JLrumberger/Pan-Multiplex. Interactive Jupyter notebooks that make multiplexed imaging data easier to view are available via GitHub at https://github.com/angelolab/Nimbus-Inference/blob/main/templates/3_interactive_viewer.ipynb and via Google Colab at https://colab.research.google.com/drive/1LW0vHC3sKKA3TyvW_9FeIaHj3PonzhGS. Source data are provided with this paper.
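
As a minimal sketch of how the dataset could be fetched programmatically, the snippet below derives the repository identifier from the URL above and passes it to `snapshot_download` from the `huggingface_hub` package. The helper names `repo_id_from_url` and `download_pan_m` and the optional `allow_patterns` filter are illustrative assumptions, not part of the Nimbus tooling. The dataset is large, so restricting the download to a file pattern may be advisable.

```python
from urllib.parse import urlparse

PAN_M_URL = "https://huggingface.co/datasets/JLrumberger/Pan-Multiplex"


def repo_id_from_url(url: str) -> str:
    """Extract '<owner>/<name>' from a Hugging Face dataset URL."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 3 or parts[0] != "datasets":
        raise ValueError(f"not a Hugging Face dataset URL: {url}")
    return f"{parts[1]}/{parts[2]}"


def download_pan_m(local_dir: str, allow_patterns=None) -> str:
    """Download (part of) Pan-M and return the local path.

    Requires `pip install huggingface_hub`; it is imported lazily so that
    the URL helper above works without the package installed.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=repo_id_from_url(PAN_M_URL),
        repo_type="dataset",
        local_dir=local_dir,
        allow_patterns=allow_patterns,  # None fetches every file in the repo
    )
```

Calling `download_pan_m("pan_m")` would start the actual transfer; nothing is downloaded when the module is merely imported.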

Code availability

Lightweight, easy-to-use inference and fine-tuning code for Nimbus is available via GitHub at https://github.com/angelolab/Nimbus-Inference. Code for dataset preparation, model training and evaluation is available via GitHub at https://github.com/angelolab/Nimbus, and code for figure generation is available via GitHub at https://github.com/angelolab/publications/tree/main/2024-Rumberger_Greenwald_etal_Nimbus.

Acknowledgements

This work was supported by the IFI program of the German Academic Exchange Service (DAAD) (J.L.R.); grant no. NCI CA264307 (N.F.G.) and the Stanford Graduate Fellowship (N.F.G.); grant no. NIAID F31AI165180 (C.C.L.) and the Stanford Graduate Fellowship (C.C.L.); NIH grant nos. 5U54CA20997105 (M.A.), 1R01CA24063801A1 (M.A.), 5R01AG06827902 (M.A.), 5UH3CA24663303 (M.A.), 5R01CA22952904 (M.A.), 1U24CA22430901 (M.A.), 5R01AG05791504 (M.A.), 5R01AG05628705 (M.A.), 5U24CA22430903 (M.A.), 3U54HL165445-03S1 (M.A.) and 5R01AG05791505 (M.A.); and Department of Defense grant nos. W81XWH2110143 (M.A.) and 5U54CA261719-05 (M.A.).

Author information

Contributions

J.L.R., N.F.G., D.K. and M.A. formulated the project. J.L.R. created the deep learning pipeline and trained the models. J.L.R., N.F.G., A.K., S.R.V., C.S. and C.C.L. wrote the software. J.S.R., H.P. and I.A. ran the cell phenotyping workflows. J.L.R., N.F.G., P.B. and C.W. provided manual annotations for the gold-standard dataset. R.V., I.N., M.K. and T.J.H. helped to generate training data. J.L.R. performed the analyses and generated the figures. J.F. revised the figures. J.L.R., N.F.G. and M.A. wrote the paper. X.J.W. and D.V.V. provided guidance. N.F.G. and M.A. supervised the work. All authors reviewed the paper and provided feedback.

Ethics declarations

Competing interests

M.A. is an inventor on patents related to MIBI technology (patent nos. US20150287578A1, WO2016153819A1 and US20180024111A1). N.F.G. is an advisor for CellFormatica. The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Methods thanks Anniina Färkkilä and Yasmin Kassim for their contribution to the peer review of this work. Peer reviewer reports are available. Primary Handling Editor: Madhura Mukhopadhyay, in collaboration with the Nature Methods team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

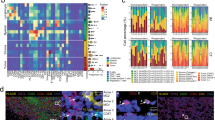

Extended Data Fig. 1 Pan-M conversion matrices and Nimbus accuracy comparison.

a-c, Conversion matrices used to transform the cell types in the MIBI-TOF Decidua, CODEX Colon and MIBI-TOF Breast datasets into marker positivity estimates. d, Performance metrics of naïve versus noise-robust training procedures. e, Performance metrics for models trained with additional nuclei and cell membrane channels (four channels) as input. f, Performance metrics for full-resolution (20x) versus quarter-resolution (10x) input data. g, Performance metrics for different backbone architectures. h, Performance metrics of the final model against gold-standard annotations, split by the datasets in Pan-M. i, F1 score comparison between specialized models trained only on specific subsets of Pan-M and the general Nimbus model trained on the full Pan-M dataset. j-k, F1 score comparison of FoV-specific and FoV-agnostic unsupervised benchmarking methods applied to the Codex Colon and MIBI Breast subsets of Pan-M. Bars show averages and error bars indicate standard deviations across three independent training runs in h-k.
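
The conversion-matrix idea in panels a-c can be sketched as a lookup: each cell type maps to a row of expected marker states, so a per-cell type assignment yields a per-cell, per-marker positivity estimate. The cell types, markers and matrix entries below are made-up placeholders, not values from Pan-M, and the function name is hypothetical.

```python
import numpy as np

# Hypothetical toy conversion matrix: rows = cell types, columns = markers.
# 1 = expected positive, 0 = expected negative. Values are illustrative only.
CELL_TYPES = ["T cell", "B cell", "Epithelial"]
MARKERS = ["CD3", "CD20", "PanCK"]
CONVERSION = np.array([
    [1, 0, 0],  # T cell
    [0, 1, 0],  # B cell
    [0, 0, 1],  # Epithelial
])


def cell_types_to_positivity(cell_type_labels):
    """Map a sequence of cell-type names to a (cells x markers) 0/1 array."""
    index = {name: i for i, name in enumerate(CELL_TYPES)}
    rows = [index[label] for label in cell_type_labels]
    return CONVERSION[rows]


labels = ["B cell", "T cell", "T cell", "Epithelial"]
positivity = cell_types_to_positivity(labels)  # one row per cell
```

A real matrix would typically need a third state for markers whose expression is not determined by the cell type; this toy version omits that case.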

Extended Data Fig. 2 Nimbus F1 score comparison for individual channels.

a-e, F1 scores for the individual channels of the data subsets of Pan-M. f, Receiver Operating Characteristic (ROC) curves for the individual datasets and combined Pan-M dataset. g, Inference time for Nimbus as a function of increasing image size. h, Comparison of inference time between Nimbus and integrated expression as a function of increasing image size. i, Comparison of inference time between Nimbus, MAPS, and STELLAR, with preprocessing (integrated expression calculation) included. Bars show averages and error bars indicate standard deviations across three independent training runs in a-e.
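
For reference, a per-channel F1 score of the kind reported in panels a-e can be computed from binary predictions and gold-standard labels as sketched below. The function name and the toy arrays are assumptions for illustration; this is not the evaluation code used for the figure.

```python
import numpy as np


def f1_per_channel(y_true, y_pred):
    """Return one F1 score per column of two (cells x channels) 0/1 arrays."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = (y_true & y_pred).sum(axis=0)   # true positives per channel
    fp = (~y_true & y_pred).sum(axis=0)  # false positives per channel
    fn = (y_true & ~y_pred).sum(axis=0)  # false negatives per channel
    denom = 2 * tp + fp + fn
    # F1 = 2TP / (2TP + FP + FN); channels without any positives score 0.
    return np.divide(2 * tp, denom,
                     out=np.zeros(denom.shape, dtype=float),
                     where=denom > 0)


gold = [[1, 0], [1, 1], [0, 1], [0, 0]]
pred = [[1, 0], [0, 1], [0, 1], [1, 0]]
scores = f1_per_channel(gold, pred)
```

In this toy case the first channel has one true positive, one false positive and one false negative, and the second channel is predicted perfectly.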

Extended Data Fig. 3 Nimbus positivity score correlation with integrated expression across datasets.

a-e, Correlation of Nimbus scores (x-axis) with integrated expression (y-axis) across the five datasets in Pan-M. Scores are colored based on their annotation as positive or negative from the gold-standard evaluations. f-g, Robustness analysis demonstrating the impact of modifying the radius for sparsity (f) and density (g) calculations.
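
For readers unfamiliar with the quantity on the y-axis, the sketch below computes a per-cell intensity summary from a segmentation mask. It assumes that integrated expression means the summed pixel intensity inside each segmented cell, here optionally divided by cell area; the exact normalization used for these panels is not restated in this section, so the function (whose name is hypothetical) should be read as illustrative only.

```python
import numpy as np


def integrated_expression(image, mask, normalize_by_area=True):
    """Summarize one channel per labeled cell (label 0 = background).

    image: 2D array of pixel intensities for a single marker channel.
    mask:  2D array of non-negative integer cell labels, same shape.
    """
    image = np.asarray(image, dtype=float)
    mask = np.asarray(mask)
    if image.shape != mask.shape:
        raise ValueError("image and mask must have the same shape")
    n_labels = int(mask.max()) + 1
    # Sum of intensities and pixel count for every label in one pass each.
    sums = np.bincount(mask.ravel(), weights=image.ravel(), minlength=n_labels)
    areas = np.bincount(mask.ravel(), minlength=n_labels)
    values = sums[1:]  # drop background
    if normalize_by_area:
        cell_areas = areas[1:]
        values = np.divide(values, cell_areas,
                           out=np.zeros_like(values), where=cell_areas > 0)
    return values  # entry i belongs to cell label i + 1


toy_image = [[1, 2], [3, 4]]
toy_mask = [[1, 1], [2, 0]]
per_cell = integrated_expression(toy_image, toy_mask)
```

Each returned value could then be paired with the corresponding per-cell model score to produce a scatter plot like those in panels a-e.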

Extended Data Fig. 4 Nimbus positivity score correlation with integrated expression in specific channels.

a-e, Correlation of Nimbus scores (x-axis) with integrated expression (y-axis) across the five datasets in Pan-M, with representative channels shown for each. Scores are colored based on their annotation as positive or negative from the gold-standard evaluations.

Extended Data Fig. 5 Example of individual channels of the Codex Colon subset of Pan-M and their predicted Nimbus scores.

a, Codex Colon markers that bind to the membrane. b, Codex Colon markers that bind to the nucleus. c, Codex Colon markers that bind to the cell membrane.

Extended Data Fig. 6 Example of individual channels of the Codex Colon and Vectra Colon subsets of Pan-M and their predicted Nimbus scores.

a, Codex Colon markers that bind to the membrane. b, Vectra Colon markers that bind to the nucleus. c, Vectra Colon markers that bind to the cell membrane.

Extended Data Fig. 7 Example of individual channels of the Vectra Pancreas and MIBI Breast subsets of Pan-M and their predicted Nimbus scores.

a, Vectra Pancreas markers that bind to the membrane. b, MIBI Breast markers that bind to the nucleus. c, MIBI Breast extracellular markers. d, MIBI Breast markers that bind to the cell membrane.

Extended Data Fig. 8 Example of individual channels of the MIBI Breast and MIBI Decidua subsets of Pan-M and their predicted Nimbus scores.

a, MIBI Breast markers that bind to the membrane. b, MIBI Decidua extracellular markers. c, MIBI Decidua markers that bind to the cell membrane.

Extended Data Fig. 9 Data Annotation.

a-c, Image samples with corresponding gold-standard labels (positive cells marked with a yellow star) for the imaging platforms Vectra, CODEX and MIBI-TOF, respectively. d, Overview of available, included and excluded markers for each subset of the Pan-M dataset.

Supplementary information

Supplementary Table 1

Overview of dataset subsets contributing to the Pan-M dataset. Summary of multiplexed imaging datasets included in the Pan-M dataset, spanning multiple imaging platforms (MIBI-TOF, CODEX and Vectra), tissue types (breast, decidua, colon and pancreas) and segmentation and clustering methodologies.

Supplementary Table 2

Accuracy metrics across a range of different model designs, backbones and training setups.

Source data

Source Data Fig. 2

Dataset metrics.

Source Data Fig. 4

Performance benchmark.

Source Data Fig. 5

Phenotyping experiments.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rumberger, J.L., Greenwald, N.F., Ranek, J.S. et al. Automated classification of cellular expression in multiplexed imaging data with Nimbus. Nat Methods 22, 2161–2170 (2025). https://doi.org/10.1038/s41592-025-02826-9

DOI: https://doi.org/10.1038/s41592-025-02826-9