Abstract

Large, population-based magnetic resonance imaging (MRI) studies of adolescents promise transformational insights into neurodevelopment and mental illness risk. However, youth MRI studies are especially susceptible to motion and other artifacts that introduce non-random noise. After visual quality control of 11,263 T1 MRI scans obtained at age 9–10 years through the Adolescent Brain Cognitive Development study, we uncovered bias in measurements of cortical thickness and surface area in 55.1% of the samples with suboptimal image quality. These biases impacted analyses relating structural MRI and clinical measures, resulting in both false-positive and false-negative associations. Surface hole number, an automated index of topological complexity, reproducibly identified lower-quality scans with good specificity, and its inclusion as a covariate partially mitigated quality-related bias. Closer examination of high-quality scans revealed additional topological errors introduced during image preprocessing. Correction with manual edits reproducibly altered thickness measurements and strengthened age–thickness associations. We demonstrate here that inadequate quality control undermines advantages of large sample size to detect meaningful associations. These biases can be mitigated through additional automated and manual interventions.


Data availability

Data from all ABCD-related analyses were downloaded from the NIMH Data Archive (NDA) version 4.0 (https://nda.nih.gov/study.html?id=1299). Derived variables, including MQC ratings and SHN, as well as ROI-level data for cortical thickness, surface area and volume processed in FreeSurfer 7.1, have been uploaded to the NDA (https://nda.nih.gov/study.html?id=1944). Data from MGH analyses contain sensitive patient information that was obtained following a waiver of informed consent, and, as such, have not been uploaded to a publicly available repository. Contact the corresponding author for additional information.

Code availability

R code is available at https://doi.org/10.5281/zenodo.14872906. Source files are available at the NIMH Data Archive (https://nda.nih.gov/study.html?id=1944).

Acknowledgements

Presented in part at the Society for Biological Psychiatry 2022 Annual Meeting, New Orleans, and the Society for Neuroscience 2022 Annual Meeting, San Diego. A pre-review version of this paper was published on bioRxiv at https://doi.org/10.1101/2023.02.28.530498 on 1 March 2023. This work was supported by the National Institutes of Health (NIH) (R01MH124694, R01MH120402 and T32MH112485 to J.L.R.; R01MH113550, R01MH120482, R01MH112847, R37MH125829 and R01EB022572 to T.D.S.; K23DA057486 to B.T.-C.); the Harvard Medical School Dupont Warren Fellowship (to J.A.C.); the Louis V. Gerstner Scholar Award (to J.A.C.); the MQ Foundation (to J.L.R.); and the Mass General Hospital Early Brain Development Initiative (to J.L.R.). The authors are grateful to R. L. Buckner and E. C. Dunn for helpful comments on the paper and to S. Perdomo and A. Blum for conducting additional statistical analysis and assisting with final paper preparation. We thank the investigators and staff at the Adolescent Brain Cognitive Development (ABCD) sites and coordinating centers as well as study participants and their families for their essential contributions to this work. Data used in the preparation of this article were obtained from the ABCD study (https://abcdstudy.org), held in the NIMH Data Archive. This is a multisite, longitudinal study designed to recruit more than 10,000 children age 9–10 years and follow them over 10 years into early adulthood. The ABCD study is supported by the NIH and additional federal partners under award numbers U01DA041048, U01DA050989, U01DA051016, U01DA041022, U01DA051018, U01DA051037, U01DA050987, U01DA041174, U01DA041106, U01DA041117, U01DA041028, U01DA041134, U01DA050988, U01DA051039, U01DA041156, U01DA041025, U01DA041120, U01DA051038, U01DA041148, U01DA041093, U01DA041089, U24DA041123 and U24DA041147. A full list of supporters is available at https://abcdstudy.org/federal-partners.html. 
A listing of participating sites and a complete listing of the study investigators can be found at https://abcdstudy.org/consortium_members/. ABCD consortium investigators designed and implemented the study and/or provided data but did not necessarily participate in the analysis or writing of this report. This article reflects the views of the authors and may not reflect the opinions or views of the NIH or ABCD consortium investigators.

Author information

Contributions

Conception and experimental design: K.K., J.A.C., A.E.D., H.L., B.T.-C., H.E., T.D.S. and J.L.R. Data acquisition: C.E.H., H.E., R.L.H., K.F.D. and J.L.R. Data analysis: S.E., H.E., K.K., J.A.C., E.L., K.A.K., D.E.H., O.B., R.F.S., H.L., K.F.D. and J.L.R. Data interpretation: S.E., K.K., J.A.C., E.L., K.A.K., D.E.H., O.B., A.E.D., H.L., B.T.-C., R.L.H., D.M.B., T.D.S., K.F.D. and J.L.R. Drafting and revision of the paper: all authors. All authors approved the submitted version of the paper and have agreed to be personally accountable for their own contributions.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Neuroscience thanks Eduard Klapwijk and Aristotle Voineskos for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Stability of manual quality control (MQC) ratings over time (n = 10,295).

Scans were assigned to deciles based on the sequence in which a single trained rater assigned their MQC ratings. (a) Box-and-whisker plots show the distribution of MQC ratings for each time period, after adjusting for age, gender, scanner manufacturer, and externalizing psychopathology. Adjacent marks show unadjusted mean ratings for the same period. (b) Box-and-whisker plots show the distribution of the log of surface hole number (SHN), stratified by decile and MQC rating. For both plots, boxes indicate the median and interquartile range (IQR), whiskers indicate 1.5× IQR, circles indicate mild outliers (1.5–3× IQR), and asterisks indicate extreme outliers (>3× IQR).

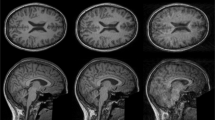
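The decile assignment and the outlier rules in this caption can be sketched as follows. This is a minimal illustration, assuming scans are indexed by their position in the rating sequence and that "beyond 1.5× IQR" is measured from the nearer quartile, as in conventional box plots; the function names are hypothetical, and quartiles are computed with Python's `"inclusive"` method, which may differ slightly from the plotting software actually used.

```python
import statistics

def rating_deciles(n_scans):
    """Decile (1-10) for each scan, indexed by its position in the rating sequence."""
    # Position i (0-based) out of n falls in decile floor(10 * i / n) + 1.
    return [10 * i // n_scans + 1 for i in range(n_scans)]

def classify_outliers(values):
    """Label each value 'inlier', 'mild' (1.5-3x IQR past a quartile) or 'extreme' (>3x IQR)."""
    # Quartiles via the 'inclusive' method; plotting packages may interpolate differently.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    labels = []
    for v in values:
        # Distance past the nearer quartile; 0 for values inside the box.
        beyond = max(q1 - v, v - q3, 0.0)
        if beyond > 3 * iqr:
            labels.append("extreme")
        elif beyond > 1.5 * iqr:
            labels.append("mild")
        else:
            labels.append("inlier")
    return labels
```

For the twelve values 1 through 10, 20, and 100, this sketch labels 20 as a mild outlier and 100 as an extreme outlier; the same rule would apply to log SHN values in panel (b).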

Extended Data Fig. 2 Signal dropout in sMRI processing (n = 228).

(a) Examples of dropout regions where FreeSurfer segmentation failed to include a substantial portion of cortex. (b) Distribution of the approximate dropout volume, estimated as an ellipsoid volume, and distributions of the (c) sagittal, (d) coronal, and (e) axial extents. (f) Distribution of dropout regions overlaid on an exemplar brain, thresholded at n = 10 subjects. The heat map represents the number of overlapping subjects.
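The caption does not spell out how the ellipsoid volume was derived from the three extents. A minimal sketch, assuming each measured extent is a full axis length (so each semi-axis is half the extent), is the standard ellipsoid formula; the function names are hypothetical.

```python
import math

def ellipsoid_volume(extent_sagittal_mm, extent_coronal_mm, extent_axial_mm):
    """Approximate volume (mm^3) of an ellipsoid whose full axis lengths equal the
    three measured extents (assumed interpretation; semi-axes are half of each)."""
    # V = 4/3 * pi * (a/2) * (b/2) * (c/2) = pi * a * b * c / 6
    return math.pi * extent_sagittal_mm * extent_coronal_mm * extent_axial_mm / 6.0

def mm3_to_ml(volume_mm3):
    """Convert mm^3 to mL (1 mL = 1 cm^3 = 1000 mm^3)."""
    return volume_mm3 / 1000.0
```

Under this assumption, extents of 40, 30, and 20 mm give about 12,566 mm³ (roughly 12.6 mL). Because the formula is a symmetric product, which anatomical axis is called "sagittal extent" does not affect the estimate.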

Extended Data Fig. 3 Comparison of manual quality control (MQC) and surface hole number (SHN) to other automated quality control metrics (QCMs) at Baseline (n = 10,294) and Year 2 (n = 999).

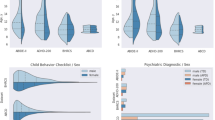

At Baseline, SHN values correlated more strongly with MQC ratings than did any other QCM (a), and SHN tiers closely approximated MQC ratings in detecting variance in other QCMs (b). The same patterns were apparent using Year 2 scans (c, d). In (b) and (d), box plots embedded within violin plots indicate the median and interquartile range (IQR), whiskers indicate 1.5× IQR, and boxed numbers indicate the number of outliers >6 standard deviations from the mean. FBER: foreground-background energy ratio; BG; EFC: entropy-focus criterion; QI2: Mortamet’s quality index 2.
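The caption does not state which correlation statistic was used. Because MQC is an ordinal rating with many ties, the sketch below assumes Spearman's rank correlation (tied values receive their average rank) and also counts values lying more than 6 standard deviations from the mean, as in the boxed numbers. Both the choice of Spearman and the use of the sample standard deviation are assumptions, and the function names are hypothetical.

```python
import math
import statistics

def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.fmean(rx), statistics.fmean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)

def count_beyond_sd(values, k=6.0):
    """Number of values more than k sample standard deviations from the mean."""
    m = statistics.fmean(values)
    sd = statistics.stdev(values)
    return sum(abs(v - m) > k * sd for v in values)
```

Average ranks keep the statistic well defined when hundreds of scans share the same MQC rating.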

Extended Data Fig. 4 Comparison of SHN tier effects on sMRI indices at (a) Baseline (n = 10,295) and (b) Year 2 (n = 6,941); compare with Fig. 2.

Maps at left show linear associations of SHN tier (A to D) with cortical thickness, surface area, and volume. Maps at right contrast thickness, surface area, and volume in the highest-quality images (SHN tier A) with those in images assigned to lower-quality tiers. Covariates included age, gender, and estimated intracranial volume (fixed effects) and site and scanner manufacturer (random effects).
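The two kinds of maps correspond to two ways of coding SHN tier as a predictor: a single ordinal score for the linear trend, and one indicator per lower tier for contrasts against tier A. The sketch below shows only this coding step under those assumptions; the column names are hypothetical, and fitting the mixed model itself (with site and scanner manufacturer as random effects) would require a mixed-model library and is not shown.

```python
# SHN tiers ordered from highest (A) to lowest (D) image quality, per the caption.
TIERS = ("A", "B", "C", "D")

def linear_code(tier):
    """Ordinal score for a linear trend across tiers: A -> 0, B -> 1, C -> 2, D -> 3."""
    return TIERS.index(tier)

def treatment_codes(tier, reference="A"):
    """0/1 indicator for each non-reference tier (treatment coding, A as reference)."""
    return {f"tier_{t}": int(tier == t) for t in TIERS if t != reference}

def fixed_effect_row(tier, age, gender_code, icv, linear=True):
    """One row of fixed-effect predictors (hypothetical column layout).
    Site and scanner manufacturer would enter separately as random effects."""
    row = {"age": age, "gender": gender_code, "icv": icv}
    if linear:
        row["shn_tier"] = linear_code(tier)
    else:
        row.update(treatment_codes(tier))
    return row
```

With treatment coding, each tier's coefficient estimates how that tier differs from tier A, which matches the right-hand contrast maps; the ordinal score instead assumes equal spacing between adjacent tiers.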

Extended Data Fig. 5 Unique contributions of SHN tiers versus MQC to variance in sMRI indices, n = 10,295.

(a) Linear association of MQC with cortical indices after controlling for SHN tiers. (b) Linear association of SHN tiers with cortical indices after controlling for MQC. Covariates included age, gender, and estimated intracranial volume (fixed effects) and site and scanner manufacturer (random effects).
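"Controlling for" one quality measure while estimating the other can be illustrated with the Frisch–Waugh–Lovell result: regressing the residuals of the outcome on the residuals of the predictor, after removing the control variable from both, reproduces the predictor's coefficient in the joint regression. This simplified sketch assumes ordinary least squares with a single control and treats MQC as numeric; it omits the other covariates and the random effects described in the caption, and the function names are hypothetical.

```python
import statistics

def residualize(y, x):
    """Residuals of y after ordinary least squares on x (with intercept)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    beta = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return [b - my - beta * (a - mx) for a, b in zip(x, y)]

def partial_slope(y, predictor, control):
    """Slope of y on predictor after removing the linear effect of control from both;
    equals the predictor's coefficient in y ~ predictor + control."""
    ry = residualize(y, control)
    rp = residualize(predictor, control)
    return sum(a * b for a, b in zip(rp, ry)) / sum(a * a for a in rp)
```

For example, if thickness were exactly 0.5 × MQC + 1.0 × tier, `partial_slope(thickness, mqc, tier)` would recover 0.5 and `partial_slope(thickness, tier, mqc)` would recover 1.0, isolating each measure's unique contribution.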

Extended Data Fig. 6 Included Year 2 follow-up scans.

Among 11,875 total participants at baseline, Year 2 T1 scans were available from 7,829; of these, 6,941 were eligible for processing with FreeSurfer, and 1,000 were semi-randomly selected for MQC ratings (see Methods for additional details).

Extended Data Fig. 7 Relationship of surface hole number (SHN) to manual quality control (MQC) in selected Year 2 follow-up scans (n = 999).

(a) Density plot of SHN values, stratified by MQC ratings. (b) Distribution of MQC ratings as related to SHN for each SHN tier.
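Panel (b) amounts to a cross-tabulation: within each SHN tier, the share of scans that received each MQC rating. A minimal sketch, assuming one tier label and one integer MQC rating per scan (function name hypothetical):

```python
from collections import Counter, defaultdict

def mqc_distribution_by_tier(tiers, ratings):
    """For each SHN tier, the proportion of scans receiving each MQC rating."""
    counts = defaultdict(Counter)
    for tier, rating in zip(tiers, ratings):
        counts[tier][rating] += 1
    # Normalize within each tier so the proportions in a tier sum to 1.
    return {
        tier: {r: n / sum(c.values()) for r, n in sorted(c.items())}
        for tier, c in sorted(counts.items())
    }
```

Normalizing within tiers, rather than over the whole sample, keeps tiers with few scans comparable to larger ones.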

Extended Data Fig. 8 Effects of manual edits on sMRI indices, stratified by MQC rating.

Edits were conducted on 150 scans with MQC = 1 and 30 scans with MQC = 2. Maps reflect effect sizes of pre-to-post-edit changes in (a) cortical thickness, (b) cortical surface area, and (c) cortical volume. Note the larger effects of edits in MQC = 2 scans relative to MQC = 1 scans. (d) Post-edit thickness reduction along the superior sagittal sinus, which is frequently misattributed to the pial surface during preprocessing.
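The caption does not name the effect-size statistic for pre-to-post-edit change. One common choice for paired measurements on the same scans is Cohen's d_z, the mean of the paired differences divided by their standard deviation; the sketch below assumes that statistic, applied separately to each ROI, and uses hypothetical function names.

```python
import statistics

def paired_effect_size(pre, post):
    """Cohen's d_z: mean of (post - pre) divided by the SD of those differences."""
    diffs = [b - a for a, b in zip(pre, post)]
    return statistics.fmean(diffs) / statistics.stdev(diffs)

def roi_effect_sizes(pre_by_roi, post_by_roi):
    """Apply paired_effect_size to each ROI (dicts mapping ROI name -> per-scan values)."""
    return {roi: paired_effect_size(pre_by_roi[roi], post_by_roi[roi]) for roi in pre_by_roi}
```

Negative values indicate lower post-edit measurements, such as the thickness reduction along the superior sagittal sinus in panel (d). Computing the maps separately for the MQC = 1 and MQC = 2 subsets would allow the comparison the caption describes, although d_z from only 30 scans will be noisier.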

Extended Data Fig. 9 Composite maps showing location and direction of sMRI measurement errors detected by manual quality control and cortical edits, among MQC = 1 and 2 scans only.

Highlighted regions show either significant differences in sMRI indices between MQC = 1 and MQC = 2 scans, significant effects of cortical edits, or both. Note that, when co-occurring within the same region, errors due to poor scan quality (assessed by MQC) do not necessarily occur in the same direction as errors requiring manual edits.
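The composite logic can be sketched as a per-ROI classification: which error source reached significance, and, when both did, whether their effects point the same way. This assumes each ROI has a signed effect and a significance flag for the MQC = 2 versus MQC = 1 comparison and for the edit effect. The sign conventions (for example, MQC = 2 minus MQC = 1, and post-edit minus pre-edit) are assumptions that determine what "same direction" means, and the function is hypothetical.

```python
def classify_roi(mqc_effect, mqc_sig, edit_effect, edit_sig):
    """Label an ROI by which error sources were significant and, when both were,
    whether their signed effects agree in direction."""
    if mqc_sig and edit_sig:
        same = (mqc_effect > 0) == (edit_effect > 0)
        return "both, same direction" if same else "both, opposite direction"
    if mqc_sig:
        return "MQC only"
    if edit_sig:
        return "edits only"
    return "neither"
```

The "both, opposite direction" category corresponds to the caption's observation that co-occurring errors need not share a direction.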

Extended Data Fig. 10 Effects of manual edits on cortical thickness and age–thickness relationships in the MGH sample, stratified by age group (n = 292).

(a) Violin plots show the effect size and related variance of manual edits on cortical thickness in the MGH sample, stratified by age group. The 18 included ROIs are those that also showed significant effects of edits on cortical thickness, in the same direction, in the ABCD cohort. Regions are ordered by effect size in the 8- to 10-year-old group. Means are represented by black circles. Note that effect sizes and variance diminished with age. (b) Effects of edits on the magnitude of age–thickness relationships within the MGH sample across 68 cortical ROIs, stratified by age group. Each marker shows the age–thickness effect size for a given ROI. Edits strengthened age–thickness effects (that is, effect sizes became more negative, as indicated by the lower intercept of the best-fit line relative to the dashed unity line) at ages 8–10, but not in other age groups.
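The comparison in panel (b) can be sketched by fitting a least-squares line through one point per ROI and comparing it with the unity line y = x. This assumes the pre-edit age–thickness effect size is on the x axis and the post-edit effect size on the y axis; the function names are hypothetical.

```python
import statistics

def best_fit_line(pre, post):
    """OLS intercept and slope of post-edit on pre-edit effect sizes (one point per ROI)."""
    mx, my = statistics.fmean(pre), statistics.fmean(post)
    sxy = sum((a - mx) * (b - my) for a, b in zip(pre, post))
    sxx = sum((a - mx) ** 2 for a in pre)
    slope = sxy / sxx
    return my - slope * mx, slope

def mean_offset_from_unity(pre, post):
    """Mean vertical distance of the points from y = x; negative values mean the
    post-edit effect sizes are, on average, more negative than pre-edit."""
    return statistics.fmean([b - a for a, b in zip(pre, post)])
```

Reading the intercept as a shift relative to the unity line is most direct when the fitted slope is close to 1; the mean offset from the unity line is a complementary summary that does not depend on the slope.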

Supplementary information

Supplementary Tables

Supplementary Tables 1–14.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Elyounssi, S., Kunitoki, K., Clauss, J.A. et al. Addressing artifactual bias in large, automated MRI analyses of brain development. Nat Neurosci 28, 1787–1796 (2025). https://doi.org/10.1038/s41593-025-01990-7

DOI: https://doi.org/10.1038/s41593-025-01990-7