Abstract

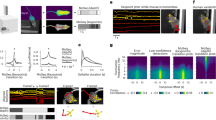

Spontaneous mouse behavior is composed of repeatedly used modules of movement (e.g., rearing, running or grooming) that are flexibly placed into sequences whose content evolves over time. By identifying behavioral modules and the order in which they are expressed, researchers can gain insight into the effects of drugs, genes, context, sensory stimuli and neural activity on natural behavior. Here we present a protocol for performing Motion Sequencing (MoSeq), an ethologically inspired method that uses three-dimensional machine vision and unsupervised machine learning to decompose spontaneous mouse behavior into a series of elemental modules called ‘syllables’. This protocol is based on a MoSeq pipeline that includes modules for depth video acquisition, data preprocessing and modeling, as well as a standardized set of visualization tools. Users are provided with instructions and code for building a MoSeq imaging rig and acquiring three-dimensional video of spontaneous mouse behavior for submission to the modeling framework. The outputs of this protocol include syllable labels for each frame of the video data, as well as summary plots describing how often each syllable was used and how syllables transitioned from one to another. In addition, we provide instructions for analyzing and visualizing the outputs of keypoint-MoSeq, a recently developed variant of MoSeq that can identify behavioral motifs from keypoints tracked in standard (rather than depth) video. This protocol and the accompanying pipeline substantially lower the barrier for users without extensive computational ethology experience to adopt this unsupervised, data-driven approach to characterizing mouse behavior.
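The two summary outputs mentioned above, syllable usage and syllable transitions, can both be derived from per-frame syllable labels. The following is a minimal NumPy sketch, not the moseq2-app or keypoint-moseq implementation. The helpers `syllable_sequence` and `usage_and_transitions` are hypothetical. The sketch assumes that labels are non-negative integers `0..n_syllables-1`, that each contiguous run of one label counts as a single syllable instance, and that transition counts are normalized row-wise. The released pipeline may use different conventions (for example, frame-based usage).

```python
import numpy as np


def syllable_sequence(labels):
    """Collapse per-frame labels into the ordered sequence of syllable instances.

    Consecutive frames with the same label are treated as one instance.
    Assumes a non-empty 1-D sequence of non-negative integer labels.
    """
    labels = np.asarray(labels, dtype=int)
    if labels.ndim != 1 or labels.size == 0:
        raise ValueError("labels must be a non-empty 1-D sequence")
    # Keep frame 0 plus every frame whose label differs from the previous one.
    is_onset = np.concatenate(([True], labels[1:] != labels[:-1]))
    return labels[is_onset]


def usage_and_transitions(labels, n_syllables):
    """Return (usage, trans) computed from syllable instances.

    usage[i]    : fraction of all instances that are syllable i
    trans[i, j] : empirical probability that an instance of syllable i is
                  followed by syllable j (rows with no outgoing transitions
                  are left as zeros)
    """
    seq = syllable_sequence(labels)
    counts = np.bincount(seq, minlength=n_syllables).astype(float)
    usage = counts / counts.sum()

    # Count bigrams of consecutive instances; because runs were collapsed,
    # the diagonal (self-transitions) is always zero.
    trans = np.zeros((n_syllables, n_syllables))
    np.add.at(trans, (seq[:-1], seq[1:]), 1)
    row_sums = trans.sum(axis=1, keepdims=True)
    trans = np.divide(trans, row_sums, out=np.zeros_like(trans), where=row_sums > 0)
    return usage, trans


labels = [0, 0, 1, 1, 1, 2, 0, 0, 1]
usage, trans = usage_and_transitions(labels, n_syllables=3)
print(usage)  # [0.4 0.4 0.2]
```

Counting instances rather than frames keeps long syllables from dominating the usage estimate. Swapping `seq` for the raw frame labels in `np.bincount` would give a frame-based alternative.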

Key points

- Motion Sequencing uses three-dimensional machine vision and unsupervised machine learning on depth videos to decompose spontaneous mouse behavior into a series of elemental modules called ‘syllables’, revealing how often syllables are used and how they transition over time.

- Motion Sequencing identifies temporal boundaries between syllables and assigns each syllable's identity based on the sequence context in which it occurs, rather than on the animal's pose alone, as in other approaches.
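After a model has assigned a syllable label to every frame, the boundaries it placed can be recovered as the frames where the label changes. The sketch below uses a hypothetical helper, `syllable_segments`, and an assumed frame rate of 30 fps. It lists each syllable instance with its onset, exclusive offset and duration. The sketch only post-processes labels that have already been inferred. It does not reproduce how MoSeq uses sequence context to place those boundaries.

```python
import numpy as np


def syllable_segments(labels, fps=30.0):
    """Split per-frame syllable labels into contiguous segments.

    Returns a list of (syllable, onset_frame, offset_frame, duration_s)
    tuples; offset_frame is exclusive. A boundary is placed wherever the
    label changes between consecutive frames. fps is an assumed frame rate.
    """
    labels = np.asarray(labels)
    if labels.ndim != 1 or labels.size == 0:
        raise ValueError("labels must be a non-empty 1-D sequence")
    # Frame 0 always starts a segment; later onsets are label changes.
    onsets = np.flatnonzero(np.concatenate(([True], labels[1:] != labels[:-1])))
    # Each segment ends where the next one starts (or at the last frame).
    offsets = np.append(onsets[1:], labels.size)
    return [
        (int(labels[on]), int(on), int(off), float((off - on) / fps))
        for on, off in zip(onsets, offsets)
    ]


for seg in syllable_segments([3, 3, 3, 1, 1, 4]):
    print(seg)
```

Collecting `duration_s` across all instances of one syllable gives its duration distribution. This is one way to check that inferred syllables occur at a sub-second timescale.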


Data availability

All data supporting the findings of this study are available at http://www.MoSeq4all.org.

Code availability

Code used in this study is available at http://www.MoSeq4all.org, in the Depth MoSeq repository (https://github.com/dattalab/moseq2-app/) and in the Keypoint-MoSeq repository (https://github.com/dattalab/keypoint-moseq).


Acknowledgements

S.R.D. is supported by SFARI, the Simons Collaboration on the Global Brain, the Simons Collaboration for Plasticity and the Aging Brain, and by National Institutes of Health grants U24NS109520, U19NS113201, R01NS114020 and RF1AG073625. W.F.G. is supported by National Institutes of Health grant F31NS113385, and C.W. is supported by a postdoctoral fellowship from the Jane Coffin Childs foundation.

Author information


Contributions

S.L., W.F.G., C.W., J.M. and A.Z. wrote the codebase and the protocol; S.C.J., E.M.R. and S.R.D. tested the protocol. S.L., W.F.G. and S.R.D. wrote and edited the paper.


Ethics declarations

Competing interests

S.R.D. sits on the scientific advisory boards of Neumora, Inc. and Gilgamesh Therapeutics, which have licensed the MoSeq technology.

Peer review

Peer review information

Nature Protocols thanks Max Tischfield and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Related links

Key references using this protocol

Wiltschko, A. B. et al. Neuron 88, 1121–1135 (2015): https://doi.org/10.1016/j.neuron.2015.11.031

Levy, D. R. et al. Curr. Biol. 33, 1358–1364.e4 (2023): https://doi.org/10.1016/j.cub.2023.02.035

Wiltschko, A. B. et al. Nat. Neurosci. 23, 1433–1443 (2020): https://doi.org/10.1038/s41593-020-00706-3

Supplementary information

Supplementary Information

Supplementary manual for recording rig construction and camera mounting.

Supplementary Video 1

A crowd movie of 20 superimposed examples of mice performing the syllable Dart.
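A crowd movie shows many instances of one syllable overlaid in a single clip. One simple way to composite such a clip is a pixel-wise maximum across examples. This rule assumes that each example is a depth clip of the same length, that each clip starts at a syllable onset, and that each clip keeps the animal at its original position in the arena. The helper `crowd_frames` is hypothetical and the max-compositing rule is an assumption. The MoSeq pipeline's own crowd-movie code may composite differently.

```python
import numpy as np


def crowd_frames(clips):
    """Superimpose equal-length depth clips into a single 'crowd' clip.

    clips: array-like of shape (n_examples, n_frames, height, width) holding
    height-above-floor depth frames, each clip aligned to a syllable onset.
    Returns an array of shape (n_frames, height, width) where each pixel is
    the tallest value across examples, so every animal stays visible.
    """
    stack = np.asarray(clips, dtype=float)
    if stack.ndim != 4:
        raise ValueError("expected (n_examples, n_frames, height, width)")
    # Pixel-wise maximum over the example axis.
    return stack.max(axis=0)


# Two one-frame, 2x2 toy clips with an "animal" in opposite corners.
a = np.zeros((1, 2, 2))
a[0, 0, 0] = 5.0
b = np.zeros((1, 2, 2))
b[0, 1, 1] = 7.0
print(crowd_frames([a, b])[0])
```

A maximum works well for depth images because the animal is always taller than the empty floor. Where two animals overlap, the taller one is shown.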

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lin, S., Gillis, W.F., Weinreb, C. et al. Characterizing the structure of mouse behavior using Motion Sequencing. Nat Protoc 19, 3242–3291 (2024). https://doi.org/10.1038/s41596-024-01015-w


DOI: https://doi.org/10.1038/s41596-024-01015-w

This article is cited by

- MoSeq based 3D behavioral profiling uncovers neuropathic behavior changes in diabetic mouse model. Scientific Reports (2025)

- Comprehensive behavioral characterization and impaired hippocampal synaptic transmission in R1117X Shank3 mutant mice. Translational Psychiatry (2025)

- Functional synaptic connectivity of engrafted spinal cord neurons with hindlimb motor circuitry in the injured spinal cord. Nature Communications (2025)

- Elevated synaptic PKA activity and abnormal striatal dopamine signaling in Akap11 mutant mice, a genetic model of schizophrenia and bipolar disorder. Nature Communications (2025)